Introduction to Data Center Computing Derek Murray October 2010

What we’ll cover
• Techniques for handling “big data”
  – Distributed storage
  – Distributed computation
• Focus on recent papers describing real systems

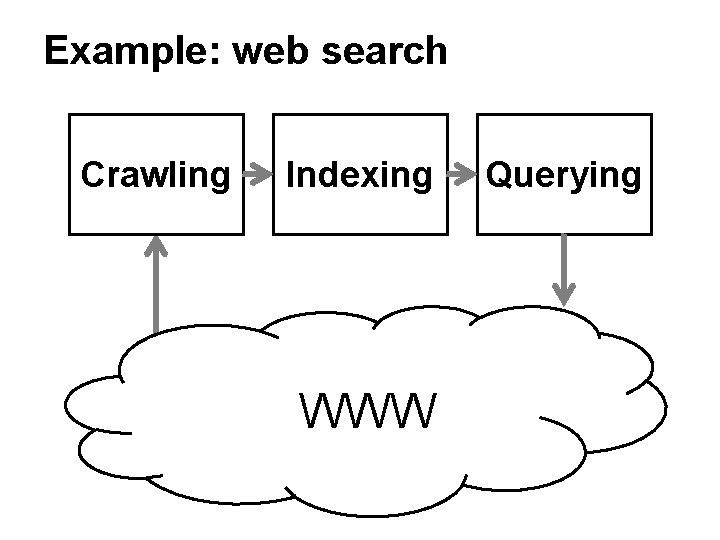

Example: web search
[Diagram labels: Crawling, Indexing, WWW, Querying]

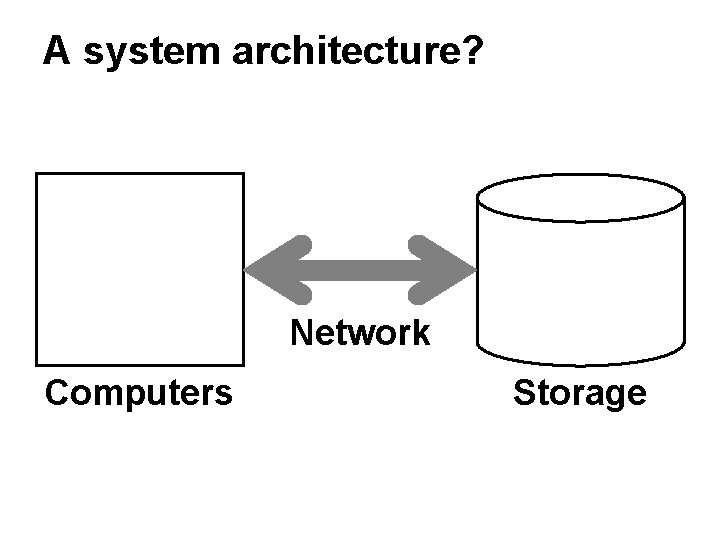

A system architecture?
[Diagram labels: Network, Computers, Storage]

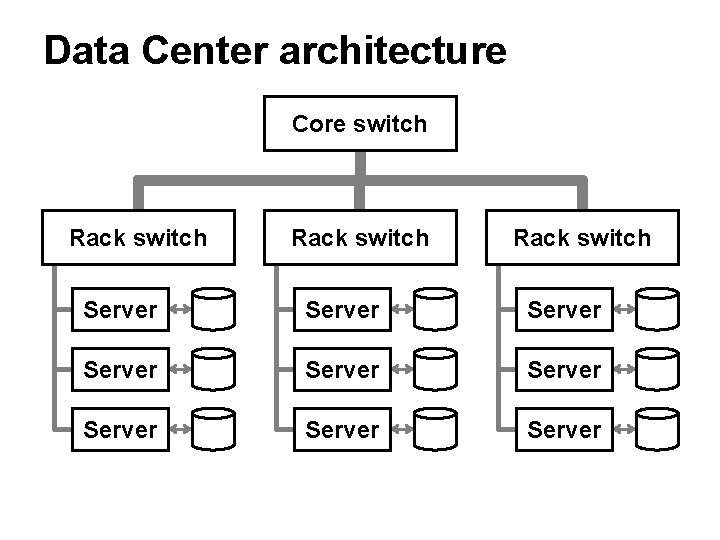

Data Center architecture
[Diagram labels: Core switch, Rack switch, Server, Server, Server]

Distributed storage
• High volume of data
• High volume of read/write requests
• Fault tolerance

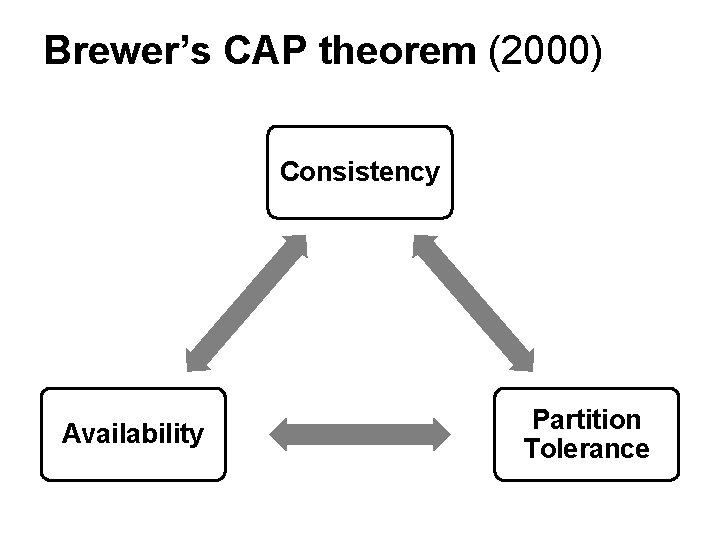

Brewer’s CAP theorem (2000)
• Consistency
• Availability
• Partition Tolerance

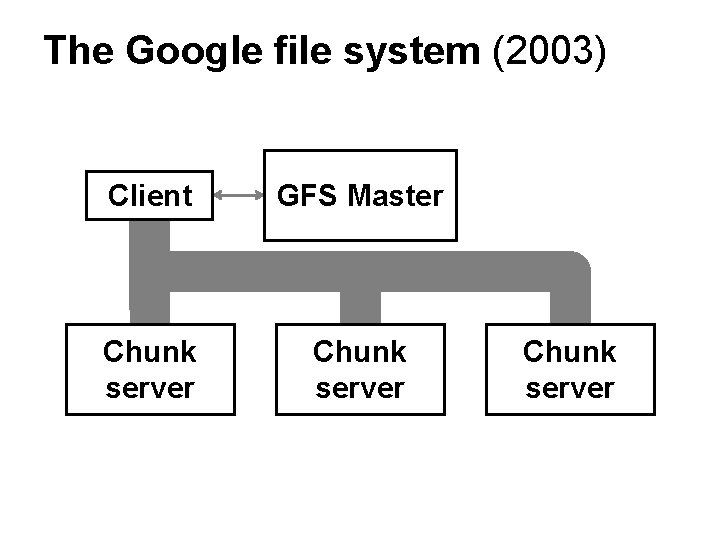

The Google File System (2003)
[Diagram labels: Client, GFS Master, Chunk server]

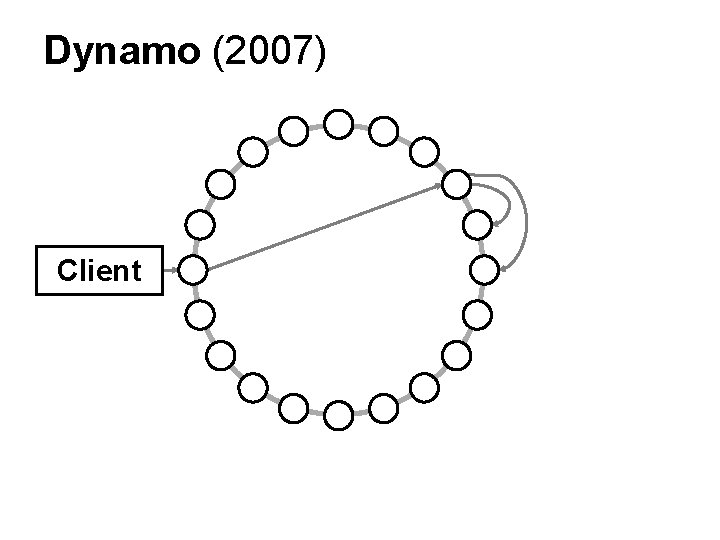

Dynamo (2007)
[Diagram label: Client]

Distributed computation
• Parallel distributed processing
• Single Program, Multiple Data (SPMD)
• Fault tolerance
• Applications

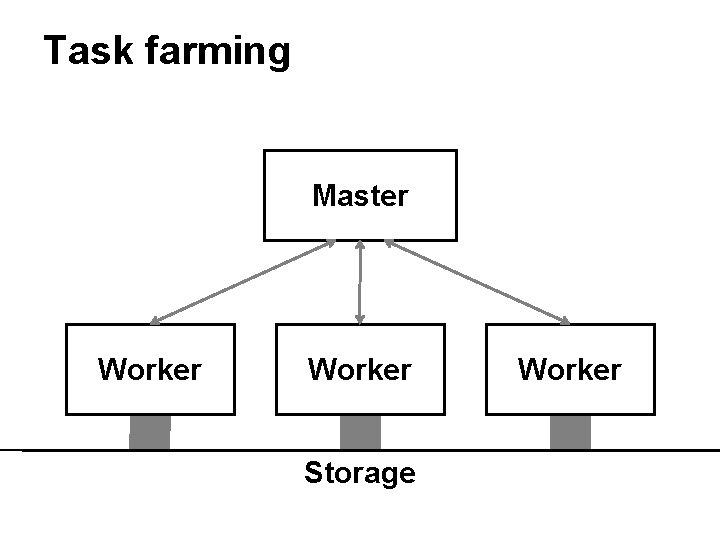

Task farming
[Diagram labels: Master, Worker, Worker, Storage]
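The master/worker arrangement on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: threads stand in for worker machines, and the names `task_farm` and `square_chunk` are hypothetical, not taken from any system in the lecture.

```python
from concurrent.futures import ThreadPoolExecutor


def square_chunk(chunk):
    """Hypothetical task: a worker processes one independent chunk of input."""
    return [x * x for x in chunk]


def task_farm(chunks, task, num_workers=4):
    """Master: hand each chunk to a free worker and collect results in input order."""
    # Assumption: a thread pool stands in for a pool of worker machines.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return list(pool.map(task, chunks))


chunks = [[1, 2], [3, 4], [5]]
results = task_farm(chunks, square_chunk)
print(results)
```

Because the tasks are independent, the master needs no coordination between workers; a real task farm would also re-issue a task when its worker fails.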

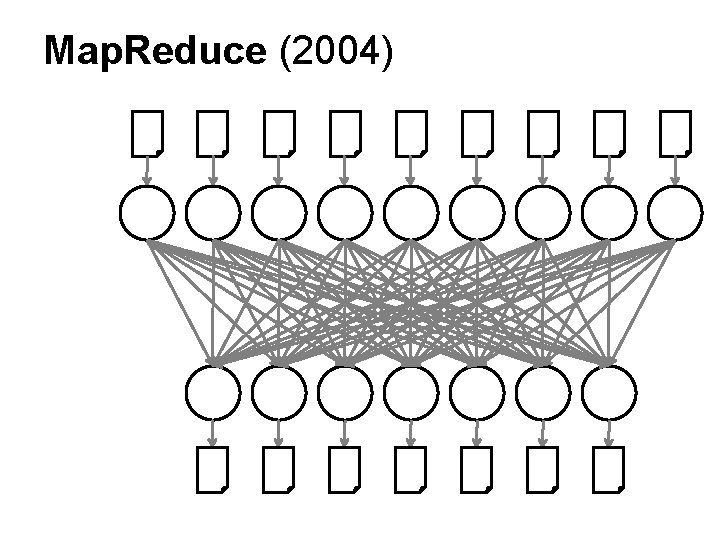

MapReduce (2004)
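As a rough illustration of the MapReduce programming model, the following single-process Python sketch counts words. The function names (`map_fn`, `reduce_fn`, `map_reduce`) and the sample documents are assumptions made for this example; a real deployment distributes the map, shuffle, and reduce phases across many machines.

```python
from collections import defaultdict


def map_fn(_doc_id, text):
    """Map: emit a (word, 1) pair for every word in the document."""
    for word in text.split():
        yield word.lower(), 1


def reduce_fn(word, counts):
    """Reduce: combine all values emitted for one key."""
    return word, sum(counts)


def map_reduce(inputs, map_fn, reduce_fn):
    """Run map, group intermediate pairs by key (the shuffle), then reduce."""
    groups = defaultdict(list)
    for key, value in inputs:
        for out_key, out_value in map_fn(key, value):
            groups[out_key].append(out_value)
    return dict(reduce_fn(k, vs) for k, vs in sorted(groups.items()))


docs = [("d1", "the quick fox"), ("d2", "the lazy dog")]
counts = map_reduce(docs, map_fn, reduce_fn)
print(counts)
```

The user writes only `map_fn` and `reduce_fn`; partitioning, grouping, and (in a real system) fault tolerance belong to the framework.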

Dryad (2007)
• Arbitrary directed acyclic graph (DAG)
• Vertices and channels
• Topological ordering
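To make the "topological ordering" bullet concrete, here is a minimal Python sketch that orders the vertices of a job graph so that every vertex runs after the vertices feeding its input channels. It uses Kahn's algorithm, a standard technique chosen for illustration rather than Dryad's actual scheduler; the vertex names and the `topological_order` function are hypothetical.

```python
from collections import deque


def topological_order(vertices, channels):
    """Return the vertices in an order that respects every (src, dst) channel."""
    successors = {v: [] for v in vertices}
    pending_inputs = {v: 0 for v in vertices}
    for src, dst in channels:
        successors[src].append(dst)
        pending_inputs[dst] += 1

    # Vertices with no unfinished inputs are ready to run.
    ready = deque(v for v in vertices if pending_inputs[v] == 0)
    order = []
    while ready:
        vertex = ready.popleft()
        order.append(vertex)
        for nxt in successors[vertex]:
            pending_inputs[nxt] -= 1
            if pending_inputs[nxt] == 0:
                ready.append(nxt)

    if len(order) != len(vertices):
        raise ValueError("graph contains a cycle, so it is not a DAG")
    return order


vertices = ["read_a", "read_b", "join", "aggregate"]
channels = [("read_a", "join"), ("read_b", "join"), ("join", "aggregate")]
order = topological_order(vertices, channels)
print(order)
```

Vertices that are ready at the same time (here `read_a` and `read_b`) have no dependency on each other, so a distributed engine is free to run them in parallel.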

DryadLINQ (2008)
• Language Integrated Query (LINQ)

    var table = PartitionedTable.Get<int>("…");
    var result = from x in table select x * x;
    int sumSquares = result.Sum();
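For readers unfamiliar with LINQ, the query on this slide can be mimicked in plain Python. This is an analogy only, not the DryadLINQ API: the partitions are ordinary in-memory lists with made-up contents, and `select_squares` is a hypothetical helper standing in for the per-partition `select` step.

```python
def select_squares(partition):
    """Per-partition step, analogous to `select x * x` over one partition."""
    return [x * x for x in partition]


# Assumption: three small in-memory lists stand in for a partitioned table.
partitions = [[1, 2, 3], [4, 5], [6]]

# Each partition can be transformed independently (in parallel on a cluster).
squared = [select_squares(p) for p in partitions]

# The final aggregate combines per-partition partial sums.
sum_squares = sum(sum(p) for p in squared)
print(sum_squares)
```

The point of the declarative query is that the system, not the programmer, decides how the per-partition work and the final sum are distributed.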

Scheduling issues
• Heterogeneous performance
• Sharing a cluster fairly
• Data locality

Percolator (2010)
• Built on Google BigTable
• Transactions via snapshot isolation
• Per-column notifications (triggers)

Skywriting and CIEL (2010)
• Universal distributed execution engine
• Script language for distributed programs
• Opportunities for student projects…

References
• Storage
  – Ghemawat et al., “The Google File System”, Proceedings of SOSP 2003
  – DeCandia et al., “Dynamo: Amazon’s Highly-Available Key-value Store”, Proceedings of SOSP 2007
• Computation
  – Dean and Ghemawat, “MapReduce: Simplified Data Processing on Large Clusters”, Proceedings of OSDI 2004
  – Isard et al., “Dryad: Distributed Data-Parallel Programs from Sequential Building Blocks”, Proceedings of EuroSys 2007
  – Yu et al., “DryadLINQ: A System for General-Purpose Distributed Data-Parallel Computing Using a High-Level Language”, Proceedings of OSDI 2008
  – Olston et al., “Pig Latin: A Not-So-Foreign Language for Data Processing”, Proceedings of SIGMOD 2008
  – Murray and Hand, “Scripting the Cloud with Skywriting”, Proceedings of HotCloud 2010
• Scheduling
  – Zaharia et al., “Improving MapReduce Performance in Heterogeneous Environments”, Proceedings of OSDI 2008
  – Isard et al., “Quincy: Fair Scheduling for Distributed Computing Clusters”, Proceedings of SOSP 2009
  – Zaharia et al., “Delay Scheduling: A Simple Technique for Achieving Locality and Fairness in Cluster Scheduling”, Proceedings of EuroSys 2010
• Transactions
  – Peng and Dabek, “Large-Scale Incremental Processing using Distributed Transactions and Notifications”, Proceedings of OSDI 2010

Conclusions
• Data centers achieve high performance with commodity parts
• Efficient storage requires application-specific trade-offs
• Data-parallelism simplifies distributed computation on the data

Questions
• Now or after the lecture
  – Email
    • Derek.Murray@cl.cam.ac.uk
  – Web
    • http://www.cl.cam.ac.uk/~dgm36/
