Introduction to Convolutional Neural Network and ILSVRC

- Slides: 48

Introduction to Convolutional Neural Network and ILSVRC

Advisors: S. J. Wang, F. T. Chien

Students: M. C. Sun, K. C. Lo

Date: 2014/11/19

2 Outline

- Introduction to convolutional neural network
  - Neural network
  - Convolutional neural network
- Introduction to ImageNet & ILSVRC
- Winners of ILSVRC
  - 2012 SuperVision
  - 2013 Clarifai
  - 2014 GoogLeNet
- ILSVRC results


4 Why do we need Neural Network?

- Inspired by the human nervous system
- Parallel processing
- Many interconnected neurons
- No distributional assumption about the model is needed

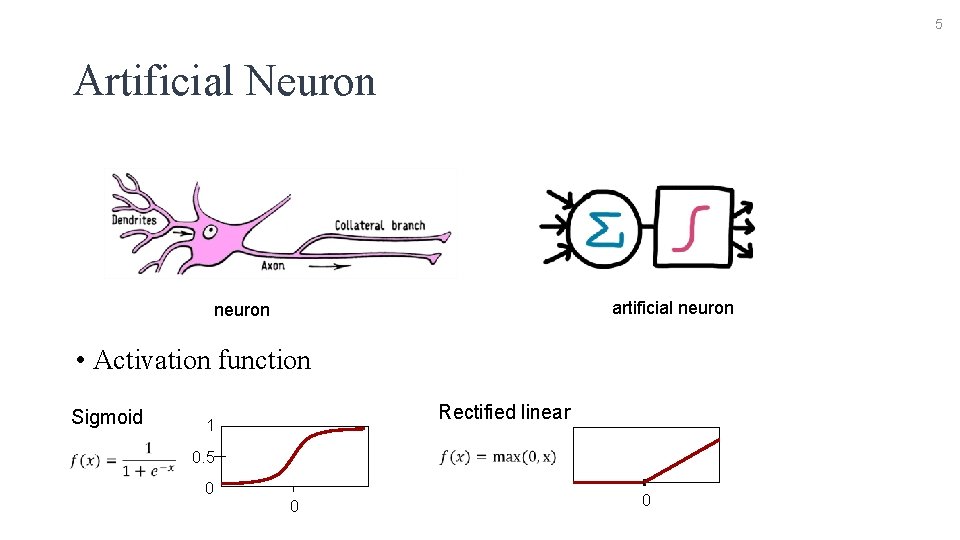

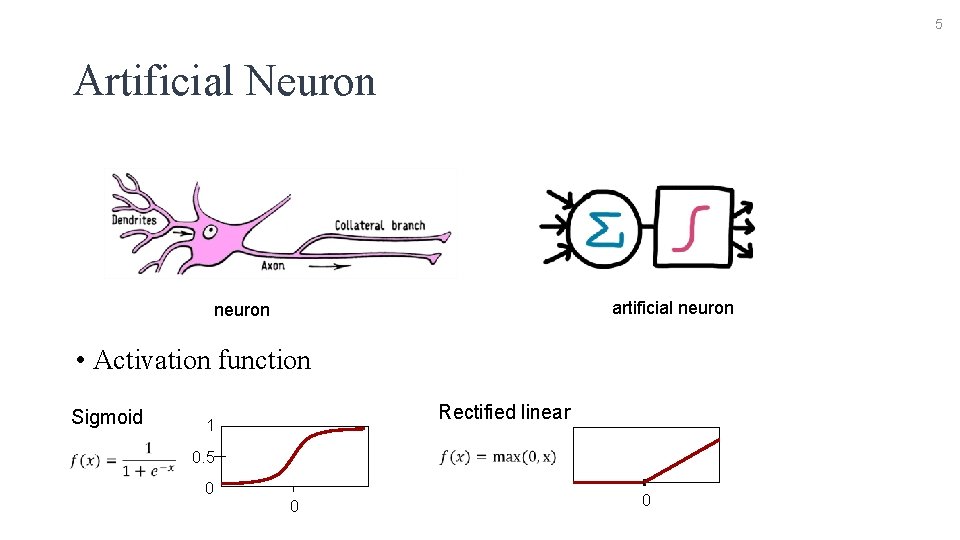

5 Artificial Neuron

- Activation function
  - Sigmoid
  - Rectified linear

[Figure: artificial neuron diagram with plots of the sigmoid and rectified linear activation functions]
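As a rough illustration of the neuron on this slide, the sketch below computes a weighted sum of the inputs and passes it through either activation function. The function names, weights, and inputs are made up for the example and do not come from the slides.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps any real input into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Rectified linear unit: passes positive inputs, clamps negatives to 0."""
    return max(0.0, z)


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, followed by an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


if __name__ == "__main__":
    # Hypothetical inputs and weights, chosen only for illustration.
    x = [1.0, 2.0]
    w = [0.5, -0.25]
    print(neuron(x, w, 0.0, sigmoid))  # weighted sum is 0.0, so sigmoid gives 0.5
    print(neuron(x, w, 0.0, relu))     # weighted sum is 0.0, so ReLU gives 0.0
```

The sigmoid saturates toward 0 and 1 for large inputs, while the rectified linear unit stays linear for positive inputs.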

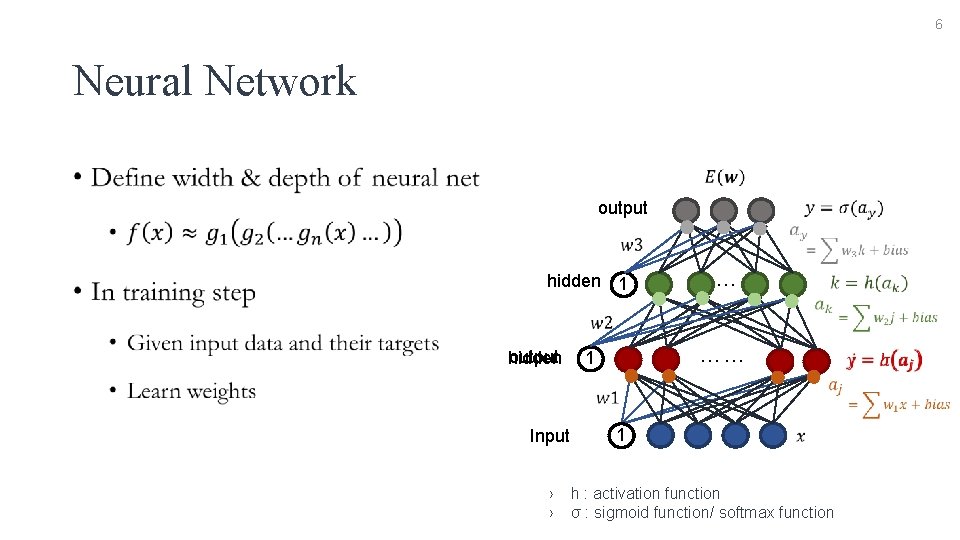

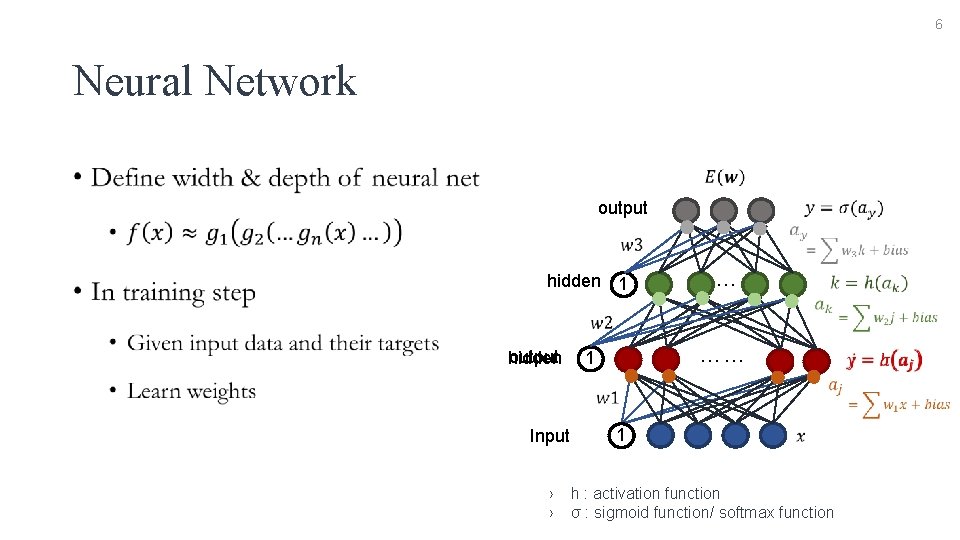

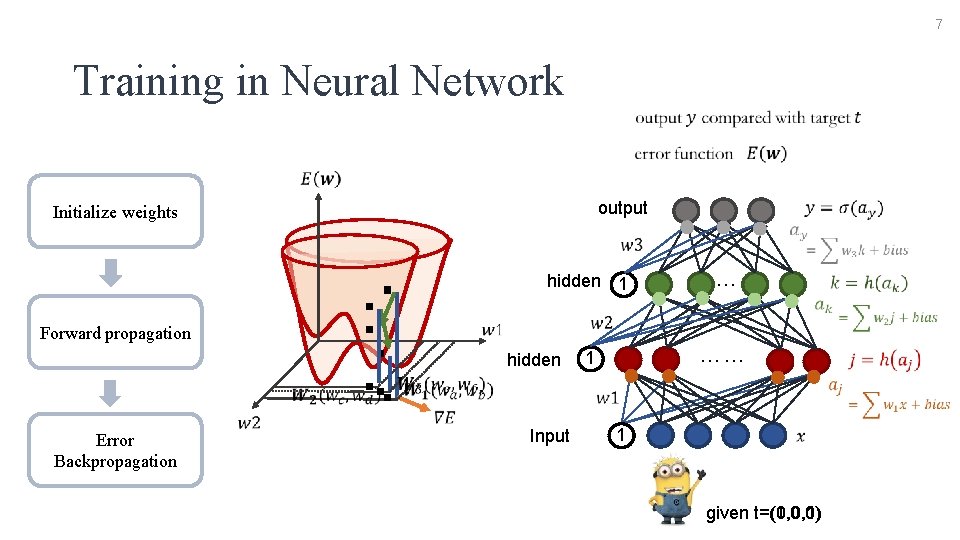

6 Neural Network

- Layers: input, hidden, output
- h: activation function
- σ: sigmoid function / softmax function

[Figure: network diagram with input, hidden, and output layers]
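A minimal sketch of a forward pass through such a network, assuming one hidden layer, a rectified linear h, and a softmax σ at the output. The layer sizes, helper names, and the choice of ReLU for h are assumptions for illustration; the slide does not fix them.

```python
import math


def relu(z):
    return max(0.0, z)


def softmax(zs):
    """Softmax with the usual max-subtraction for numerical stability."""
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """Affine layer: weights[j] is the weight row feeding output unit j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Input -> hidden (activation h) -> output (softmax)."""
    hidden = [relu(a) for a in dense(x, w_hidden, b_hidden)]
    return softmax(dense(hidden, w_out, b_out))


if __name__ == "__main__":
    # Hypothetical 2-input, 2-hidden, 3-output network.
    y = forward([1.0, 2.0],
                [[0.1, -0.2], [0.3, 0.4]], [0.0, 0.0],
                [[0.5, -0.5], [0.2, 0.1], [-0.3, 0.3]], [0.0, 0.0, 0.0])
    print(y, sum(y))  # three class probabilities that sum to 1
```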

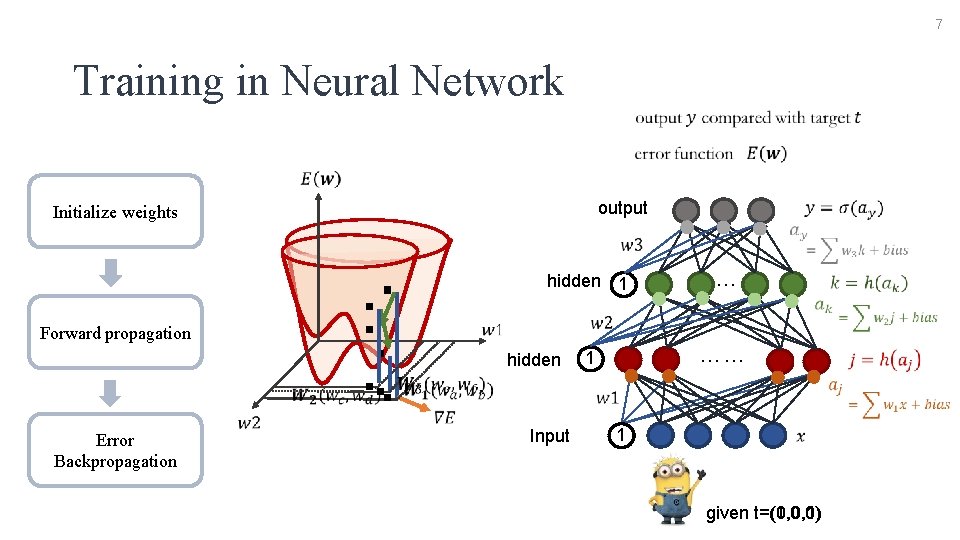

7 Training in Neural Network

- Initialize weights
- Forward propagation
- Error backpropagation

[Figure: network with input, hidden, and output layers; example target vectors t = (0, 0, 1) and, as given, t = (1, 0, 0)]
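The three training steps can be sketched on a toy problem. This is an illustrative example under stated assumptions, not the presenters' code: it assumes a sigmoid hidden layer, a softmax output with cross-entropy error, no bias terms, plain gradient descent, and two made-up training examples whose one-hot targets reuse the vectors t = (0, 0, 1) and t = (1, 0, 0) from the slide.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(zs):
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


def train_step(x, t, w1, w2, lr):
    """One forward/backward pass on one example; returns the loss before the update."""
    # Forward propagation.
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    y = softmax([sum(w * hj for w, hj in zip(row, h)) for row in w2])
    # Error: cross-entropy against a one-hot target t.
    loss = -sum(math.log(yk) for yk, tk in zip(y, t) if tk > 0)
    # Backpropagation: output deltas first, then hidden deltas via the chain rule.
    d_out = [yk - tk for yk, tk in zip(y, t)]
    d_hid = [hj * (1.0 - hj) * sum(d_out[k] * w2[k][j] for k in range(len(w2)))
             for j, hj in enumerate(h)]
    # Gradient-descent updates (weights are modified in place).
    for k, row in enumerate(w2):
        for j in range(len(row)):
            row[j] -= lr * d_out[k] * h[j]
    for j, row in enumerate(w1):
        for i in range(len(row)):
            row[i] -= lr * d_hid[j] * x[i]
    return loss


def train(epochs=200, lr=0.5, seed=0):
    """Returns the summed loss per epoch for a 2-4-3 network on two toy examples."""
    rng = random.Random(seed)
    # Initialize weights with small random values.
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(4)]
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(4)] for _ in range(3)]
    data = [([1.0, 0.0], [0, 0, 1]), ([0.0, 1.0], [1, 0, 0])]
    return [sum(train_step(x, t, w1, w2, lr) for x, t in data)
            for _ in range(epochs)]


if __name__ == "__main__":
    losses = train()
    print(losses[0], losses[-1])  # the loss should shrink as training proceeds
```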

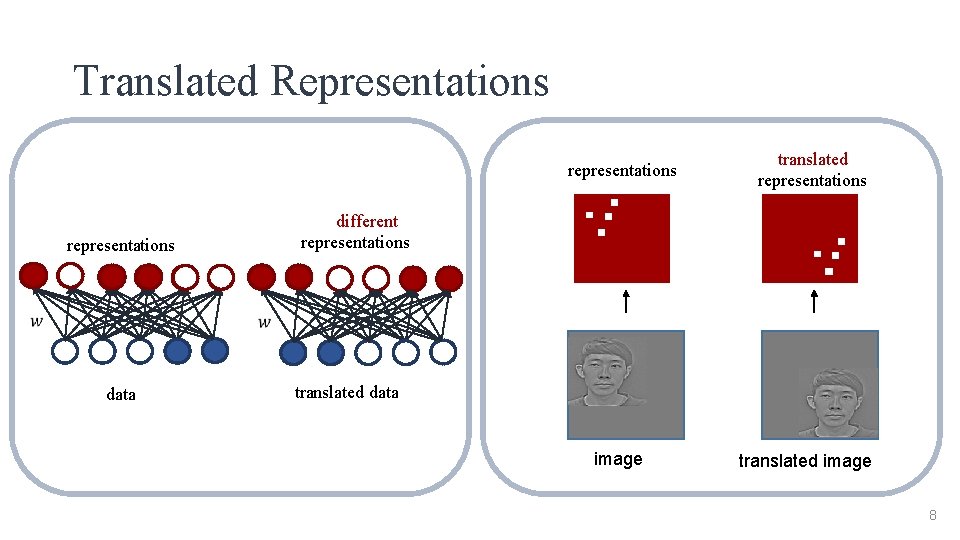

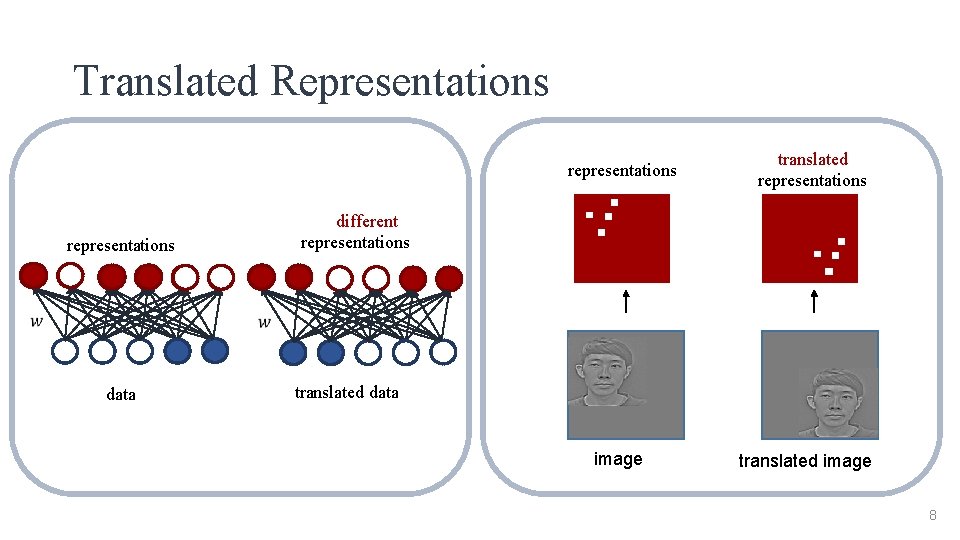

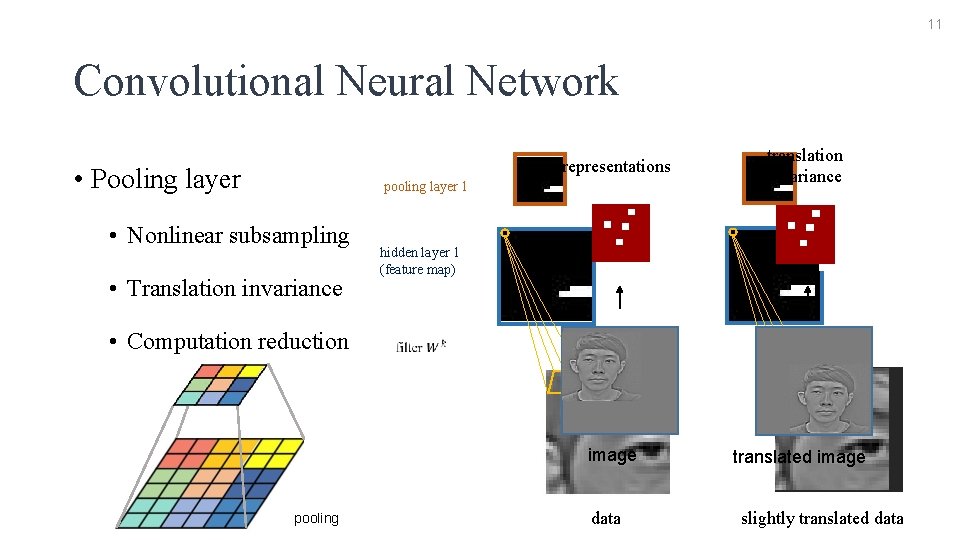

8 Translated Representations

[Figure labels: image, translated image; data, translated data; representations, different representations, translated representations]


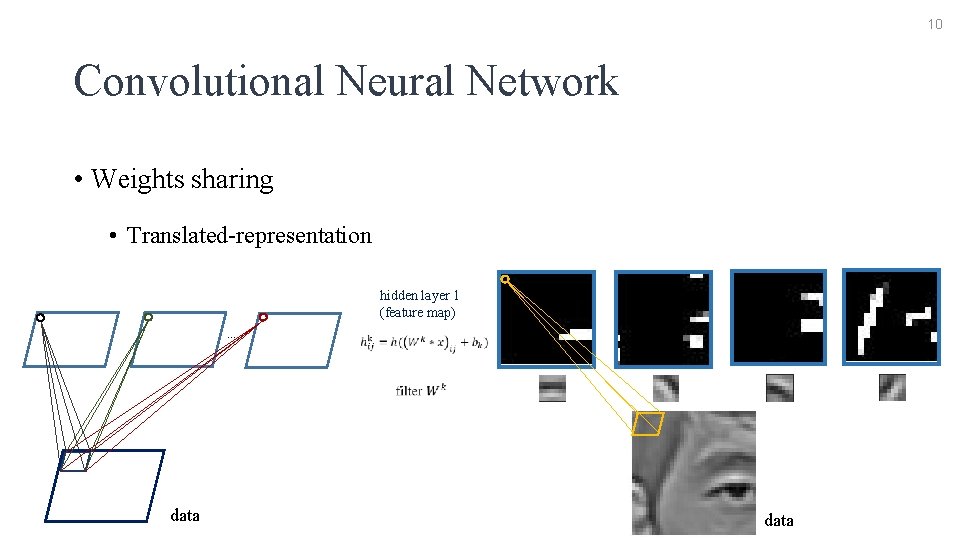

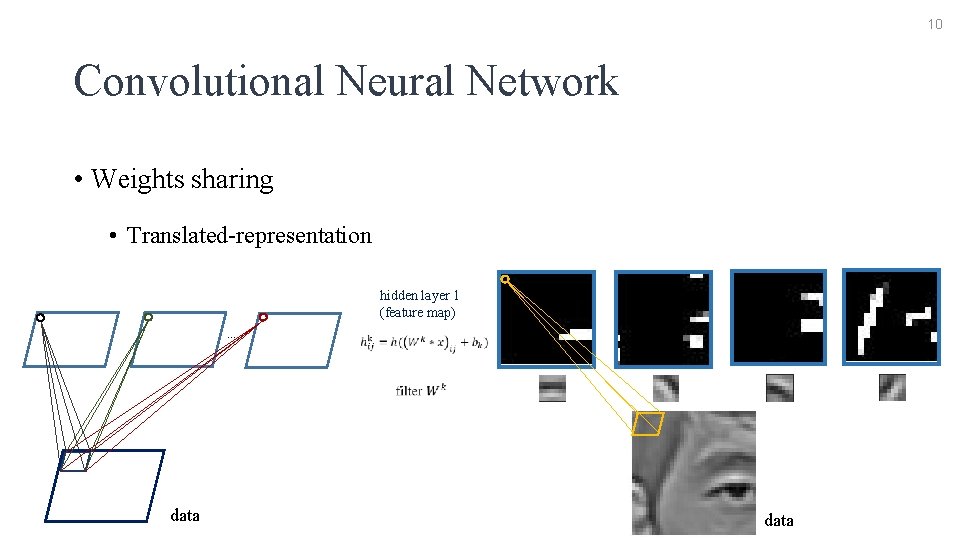

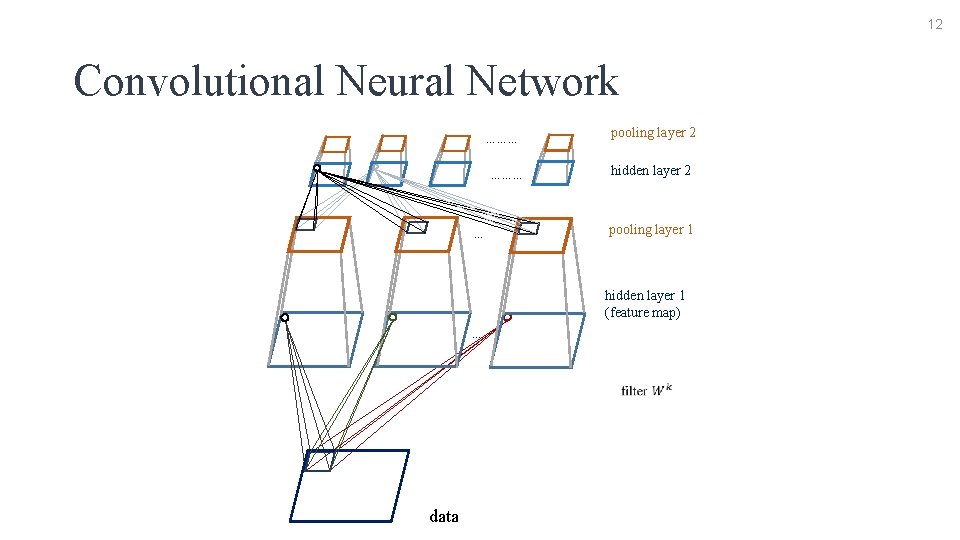

10 Convolutional Neural Network

- Weight sharing
- Translated representation

[Figure labels: data; hidden layer 1 (feature map)]
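Weight sharing can be illustrated with a small 2D convolution: the same kernel weights are applied at every position, so translating the input translates the feature map. The sketch below is a plain-Python illustration with a made-up image and kernel; it computes a "valid" cross-correlation, which is what most CNN libraries call convolution.

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def shift_right(grid, k):
    """Translate a 2D grid k columns to the right, filling with zeros."""
    return [[0] * k + row[:len(row) - k] for row in grid]


if __name__ == "__main__":
    # Hypothetical 4x6 image and 2x2 kernel (only 4 shared weights).
    image = [
        [0, 0, 0, 0, 0, 0],
        [0, 1, 2, 0, 0, 0],
        [0, 3, 4, 0, 0, 0],
        [0, 0, 0, 0, 0, 0],
    ]
    kernel = [[1, 0], [0, -1]]
    fmap = conv2d_valid(image, kernel)
    fmap_of_shifted = conv2d_valid(shift_right(image, 1), kernel)
    # The feature map of the translated image is the translated feature map.
    print(fmap_of_shifted == shift_right(fmap, 1))
```

A fully connected layer mapping this 24-pixel image to the same 15 outputs would need 24 × 15 weights, whereas the shared kernel uses 4.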

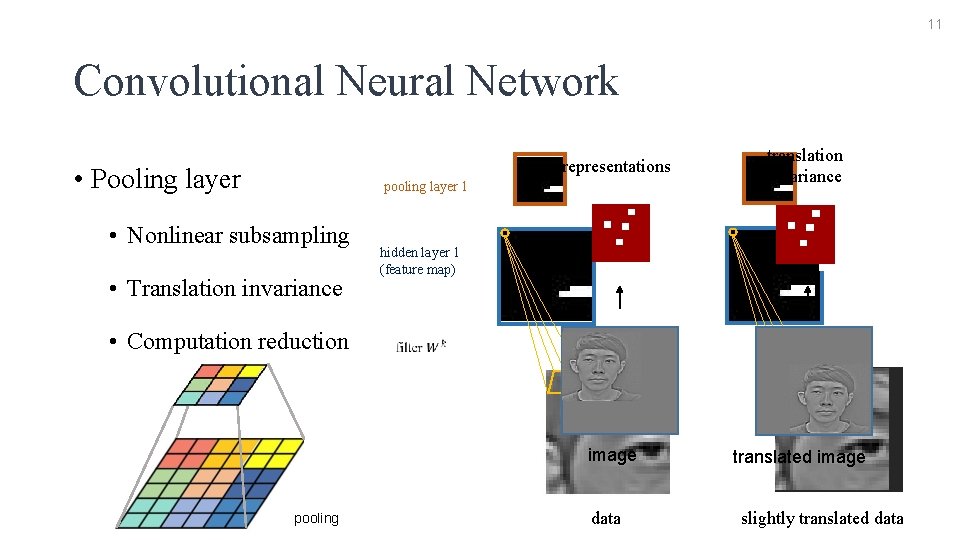

11 Convolutional Neural Network

- Pooling layer
  - Nonlinear subsampling
  - Translation invariance
  - Computation reduction

[Figure labels: image, translated image; data, slightly translated data; hidden layer 1 (feature map); pooling layer 1; representations, translation invariance]
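A small sketch of max pooling shows both properties named on the slide: the output is smaller than the input, and a slight translation of the feature map can leave the pooled output unchanged. The 2x2 window with stride 2 and the feature-map values are assumptions chosen for the example. The invariance only holds while each activation stays inside its pooling window.

```python
def max_pool2d(fmap, size=2, stride=2):
    """Nonlinear subsampling: keep the maximum of each size x size window."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [[max(fmap[i * stride + a][j * stride + b]
                 for a in range(size) for b in range(size))
             for j in range(out_w)]
            for i in range(out_h)]


if __name__ == "__main__":
    # Hypothetical 4x4 feature map with two strong activations.
    fmap = [
        [5, 0, 0, 0],
        [0, 0, 0, 0],
        [0, 0, 7, 0],
        [0, 0, 0, 0],
    ]
    # The same map translated one column to the right.
    shifted = [[0] + row[:-1] for row in fmap]
    print(max_pool2d(fmap))     # [[5, 0], [0, 7]]
    print(max_pool2d(shifted))  # [[5, 0], [0, 7]]
```

The 16 input values are reduced to 4, which is where the computation reduction for later layers comes from.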

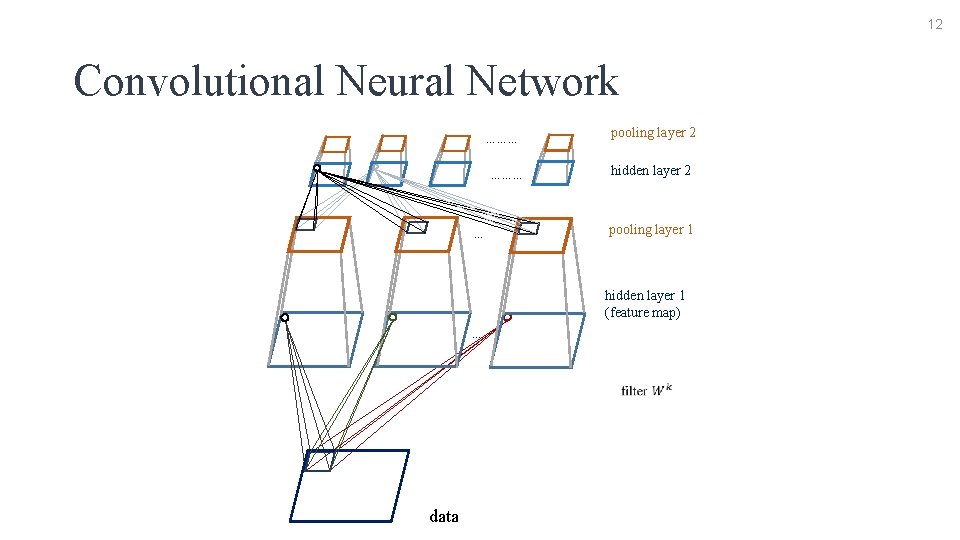

12 Convolutional Neural Network

[Figure: layer stack, bottom to top: data, hidden layer 1 (feature map), pooling layer 1, hidden layer 2, pooling layer 2, and further layers]


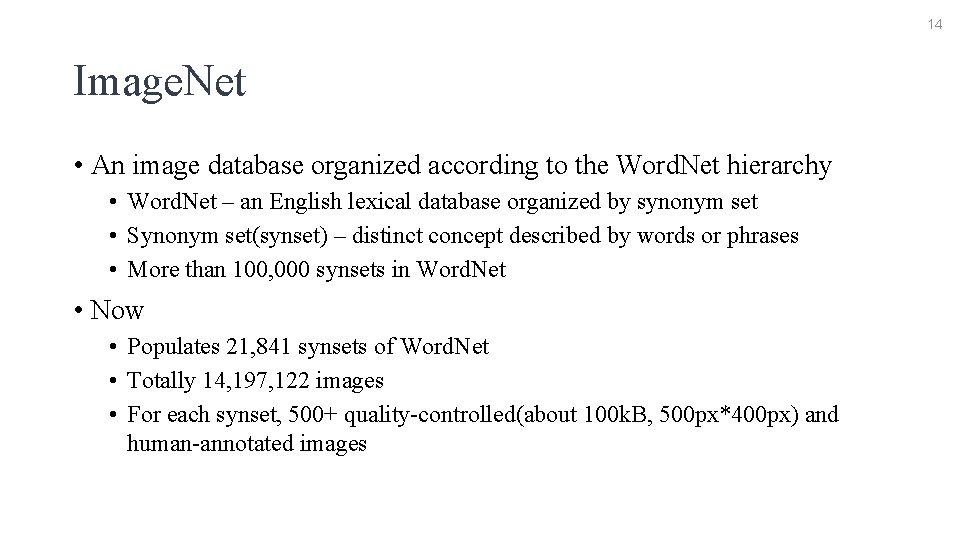

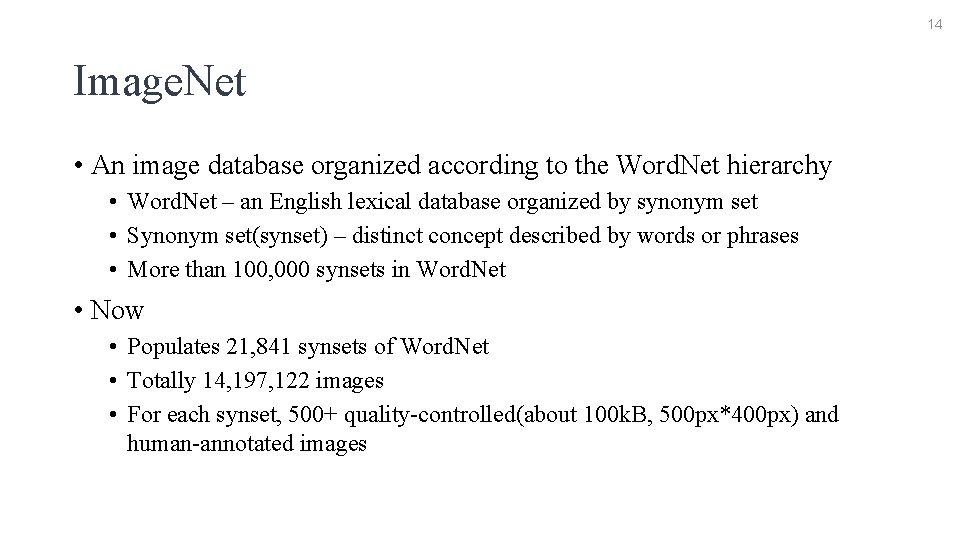

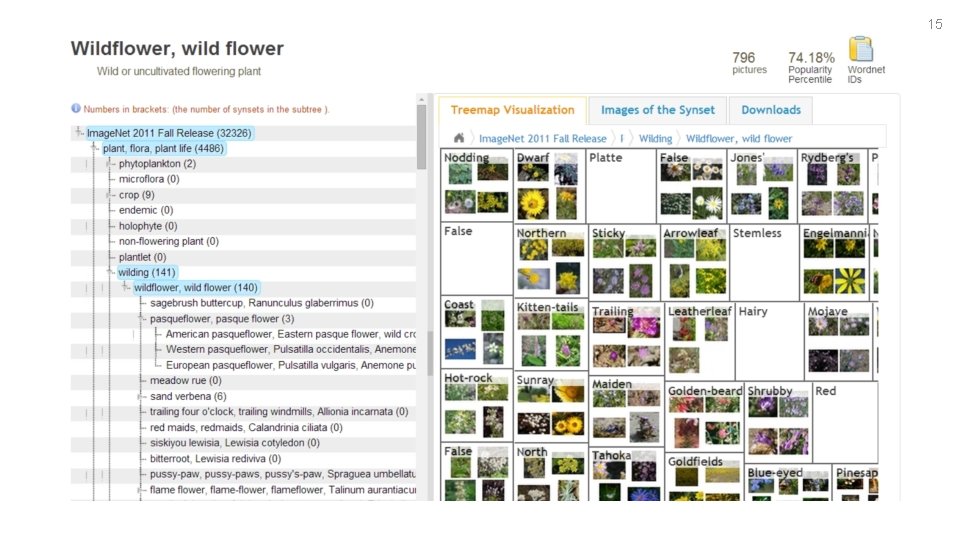

14 ImageNet

- An image database organized according to the WordNet hierarchy
  - WordNet: an English lexical database organized by synonym sets
  - Synonym set (synset): a distinct concept described by words or phrases
  - More than 100,000 synsets in WordNet
- Now
  - Populates 21,841 synsets of WordNet
  - 14,197,122 images in total
  - For each synset, 500+ quality-controlled (about 100 kB, 500 px × 400 px) and human-annotated images

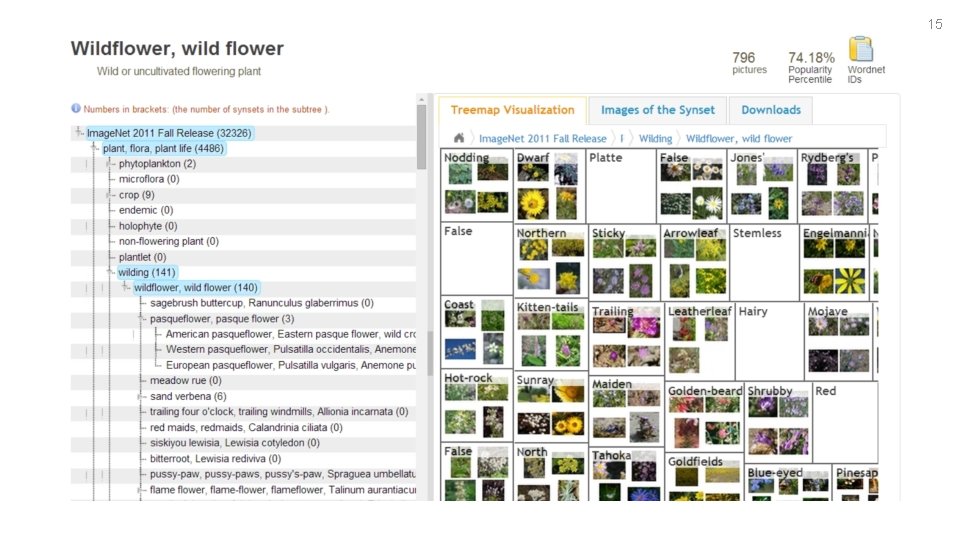

15 Synset Explore

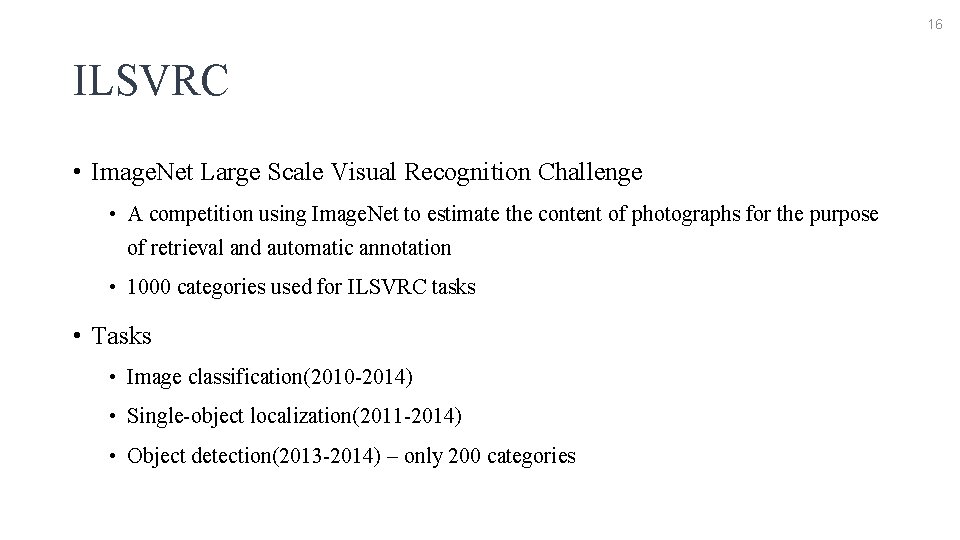

16 ILSVRC

- ImageNet Large Scale Visual Recognition Challenge
- A competition using ImageNet to estimate the content of photographs for the purpose of retrieval and automatic annotation
- 1000 categories used for ILSVRC tasks
- Tasks
  - Image classification (2010-2014)
  - Single-object localization (2011-2014)
  - Object detection (2013-2014), only 200 categories



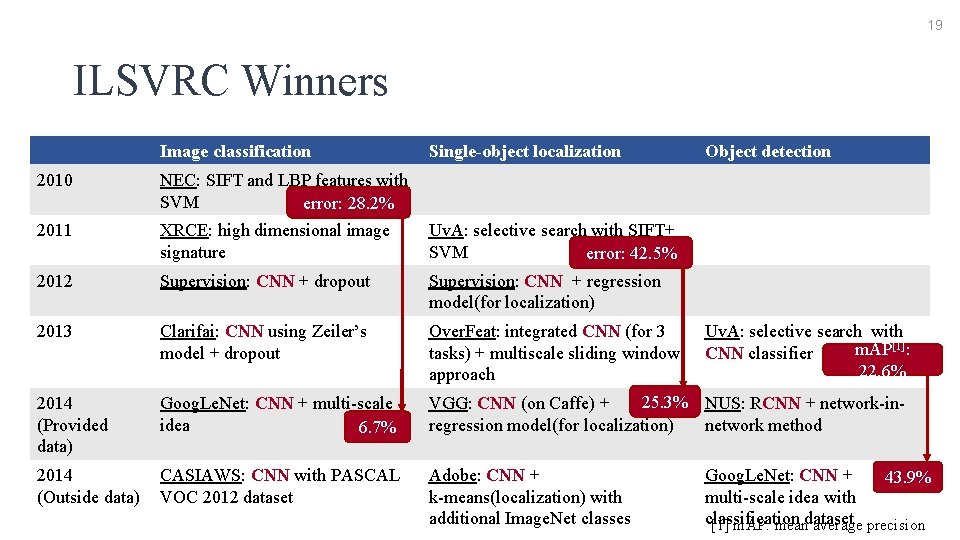

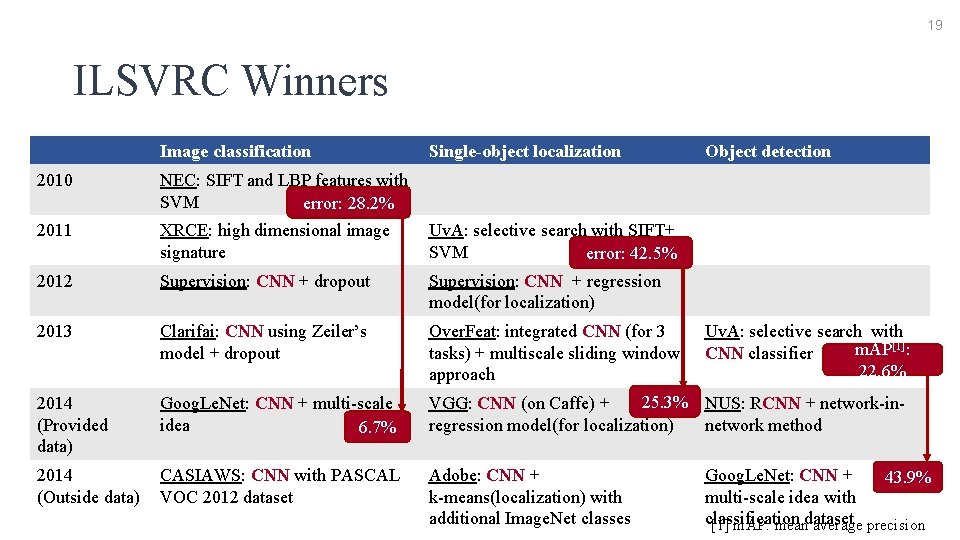

19 ILSVRC Winners

| Year | Image classification | Single-object localization | Object detection |
| --- | --- | --- | --- |
| 2010 | NEC: SIFT and LBP features with SVM (error: 28.2%) | | |
| 2011 | XRCE: high dimensional image signature | UvA: selective search with SIFT + SVM (error: 42.5%) | |
| 2012 | SuperVision: CNN + dropout | SuperVision: CNN + regression model (for localization) | |
| 2013 | Clarifai: CNN using Zeiler's model + dropout | OverFeat: integrated CNN (for 3 tasks) + multiscale sliding window approach | UvA: selective search with CNN classifier (mAP[1]: 22.6%) |
| 2014 (provided data) | GoogLeNet: CNN + multi-scale idea (error: 6.7%) | VGG: CNN (on Caffe) + regression model (for localization) (error: 25.3%) | NUS: RCNN + network-in-network method |
| 2014 (outside data) | CASIAWS: CNN with PASCAL VOC 2012 dataset | Adobe: CNN + k-means (localization) with additional ImageNet classes | GoogLeNet: CNN + multi-scale idea with classification dataset (mAP: 43.9%) |

[1] mAP: mean average precision

2012 SuperVision University of Toronto

21 2012 SuperVision – Introduction

- Deep convolutional neural network
  - 5 convolutional layers and 3 fully connected layers
  - Trained using backpropagation with an efficient GPU implementation
  - With the "dropout" trick
- In ILSVRC 2012, SuperVision was the winner of image classification and single-object localization.

Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. Imagenet classification with deep convolutional neural networks. In NIPS, 2012.

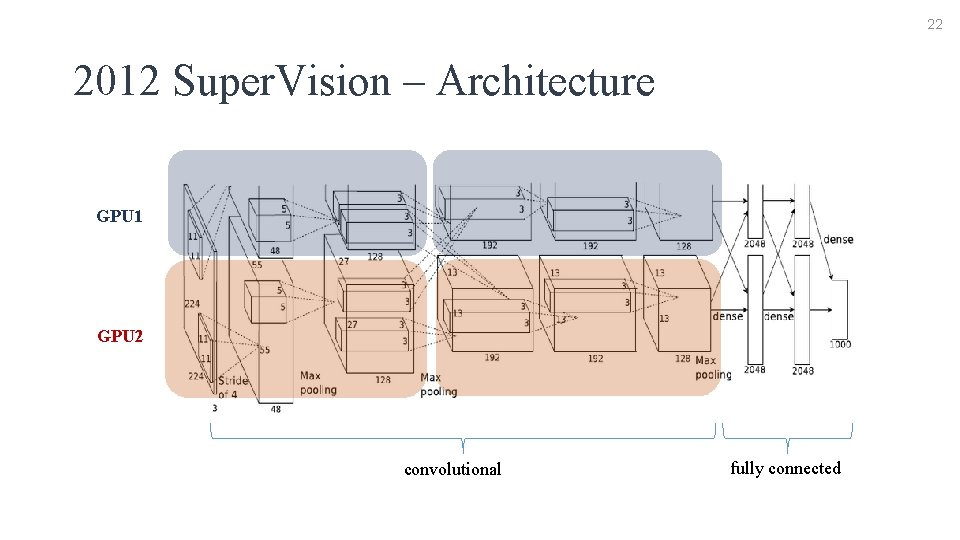

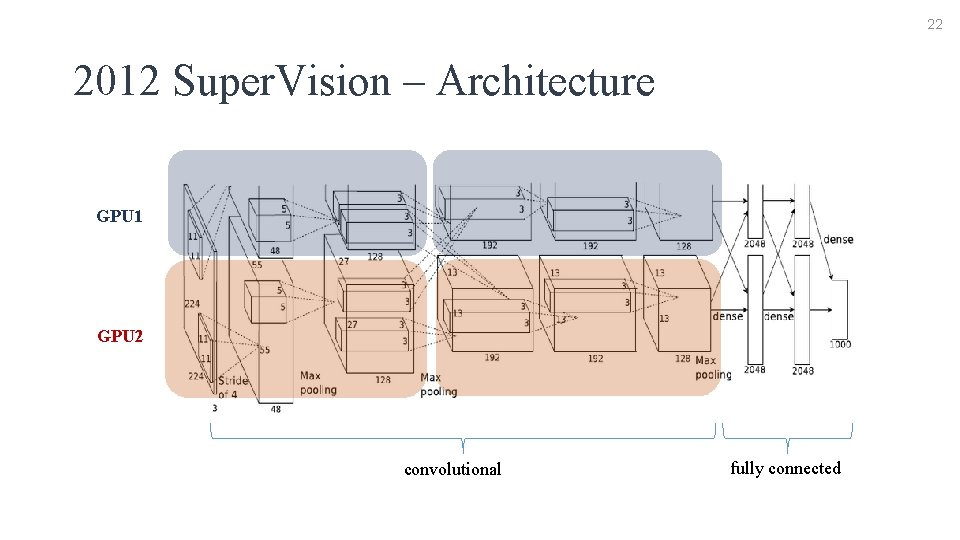

22 2012 SuperVision – Architecture

[Figure labels: GPU 1, GPU 2; convolutional layers, fully connected layers]

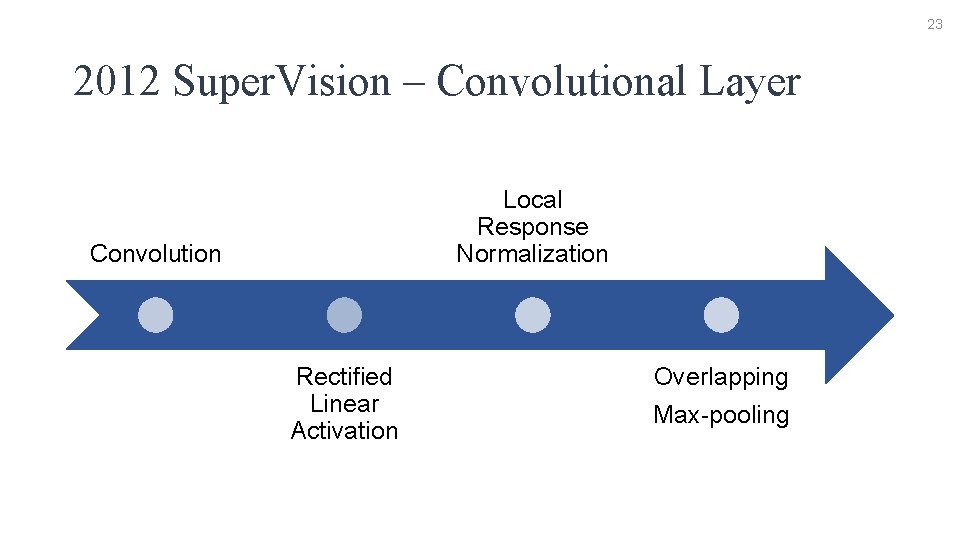

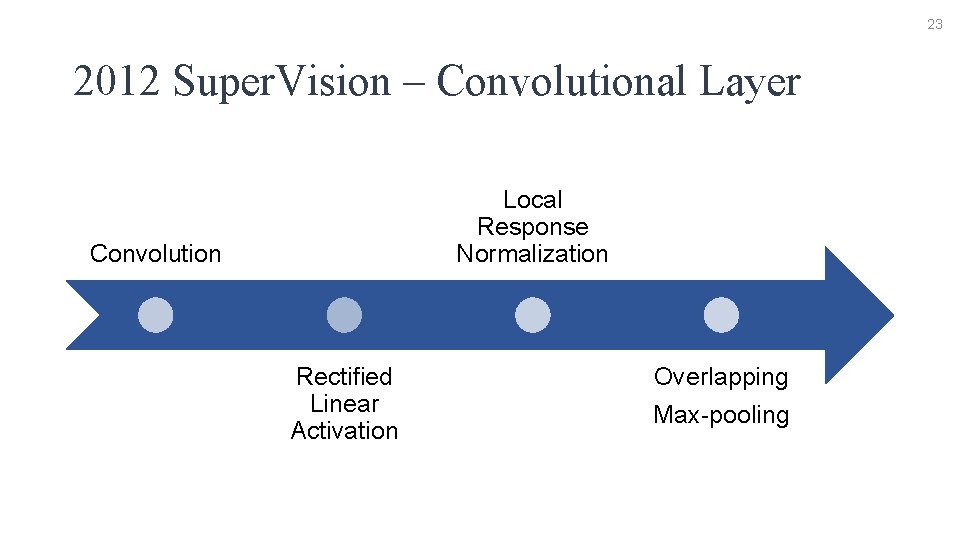

23 2012 SuperVision – Convolutional Layer

[Figure labels: convolution, rectified linear activation, local response normalization, overlapping max-pooling]
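"Overlapping" max-pooling means the pooling window is larger than the stride, so neighbouring windows share inputs. The sketch below assumes a window of 3 and a stride of 2, and the 55, 27, and 13 map sizes in the usage lines are assumed values recalled from the Krizhevsky et al. paper, not numbers stated on the slides. The 1D pooling function is a simplification for illustration.

```python
def pooled_size(n, window, stride):
    """Output length of pooling over n inputs without padding."""
    return (n - window) // stride + 1


def max_pool1d(values, window=3, stride=2):
    """Max-pooling along one dimension; windows overlap when window > stride."""
    count = pooled_size(len(values), window, stride)
    return [max(values[i * stride:i * stride + window]) for i in range(count)]


if __name__ == "__main__":
    # Windows [1, 3, 2] and [2, 5, 4] share the middle element 2.
    print(max_pool1d([1, 3, 2, 5, 4]))  # [3, 5]
    # Assumed feature-map sizes, each pooled with window 3 and stride 2.
    print(pooled_size(55, 3, 2), pooled_size(27, 3, 2), pooled_size(13, 3, 2))
```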

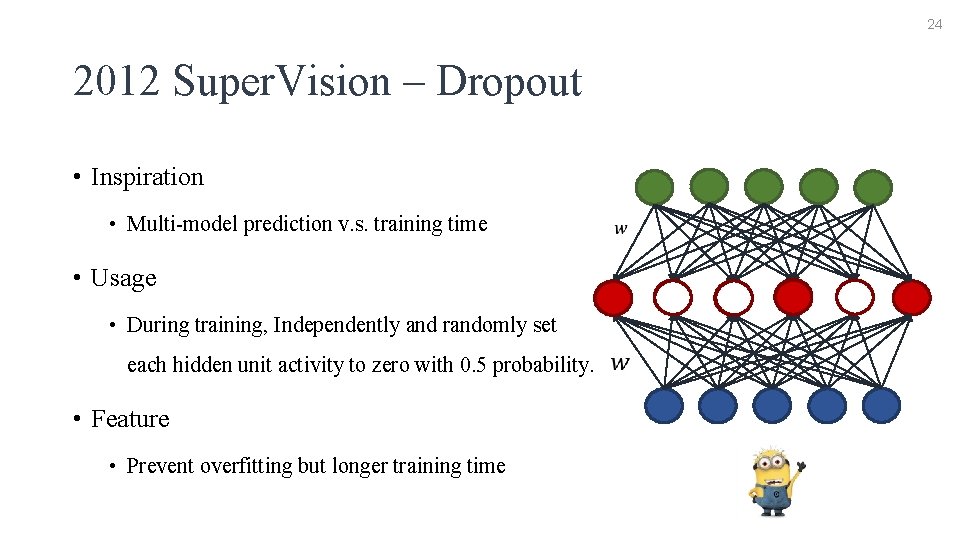

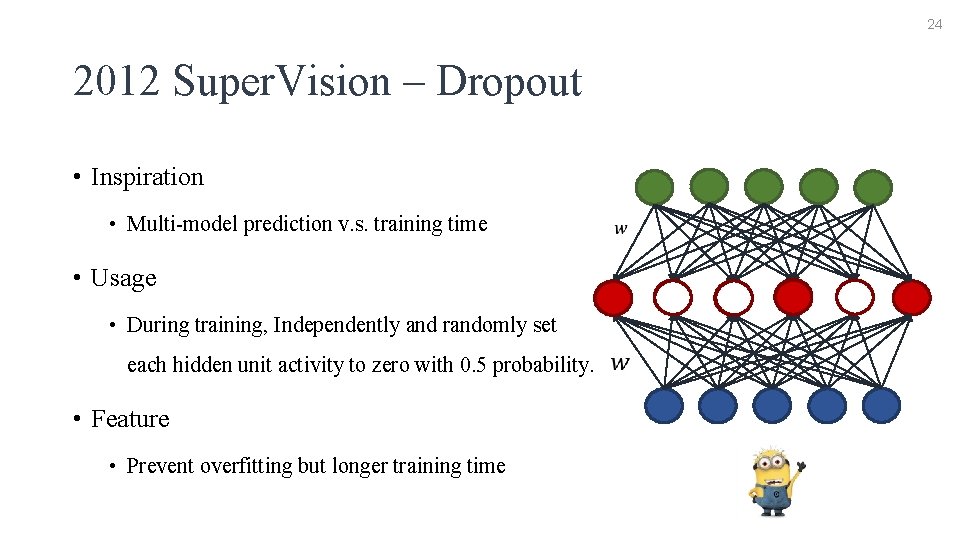

24 2012 SuperVision – Dropout

- Inspiration
  - Multi-model prediction vs. training time
- Usage
  - During training, independently and randomly set each hidden unit activity to zero with probability 0.5.
- Feature
  - Prevents overfitting, but training takes longer
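The usage rule can be sketched in a few lines. The training-time function follows the slide directly. The test-time scaling by the keep probability is an assumption added here for completeness (it is the usual companion to this form of dropout) and is not stated on the slide. Function names are hypothetical.

```python
import random


def dropout_train(activations, rng, p_drop=0.5):
    """Independently zero each hidden activity with probability p_drop."""
    return [0.0 if rng.random() < p_drop else a for a in activations]


def dropout_test(activations, p_drop=0.5):
    """Assumed test-time rule: keep every unit, scaled by the keep probability."""
    return [a * (1.0 - p_drop) for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    hidden = [1.0] * 10
    print(dropout_train(hidden, rng))  # roughly half the units are zeroed
    print(dropout_test(hidden))        # every unit scaled to 0.5
```

Each training pass therefore samples a different thinned network, which is the link to the multi-model prediction mentioned as the inspiration.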

2013 Clarifai New York University

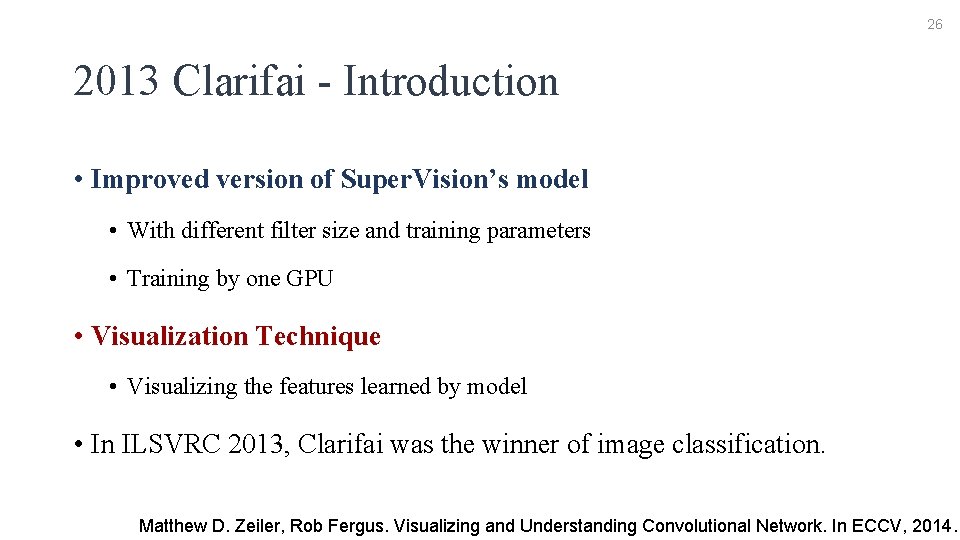

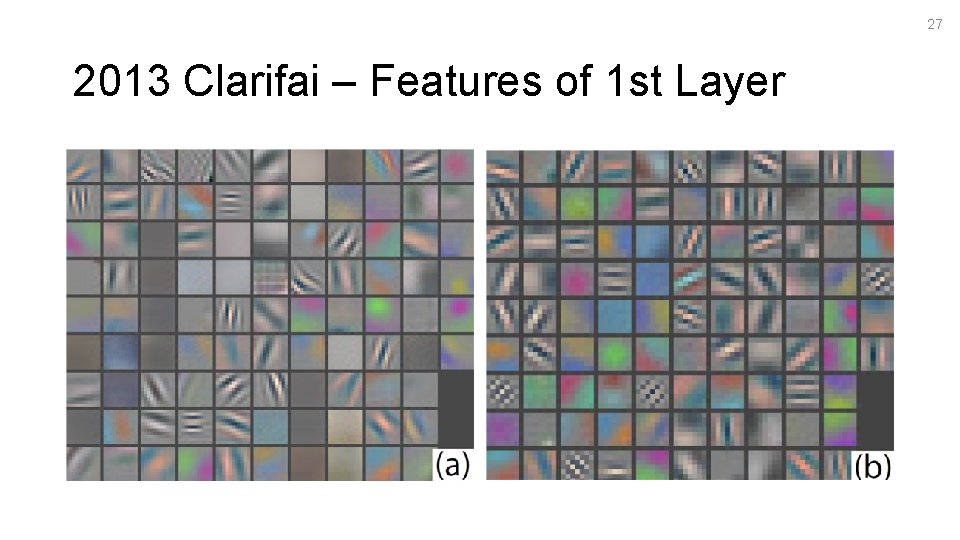

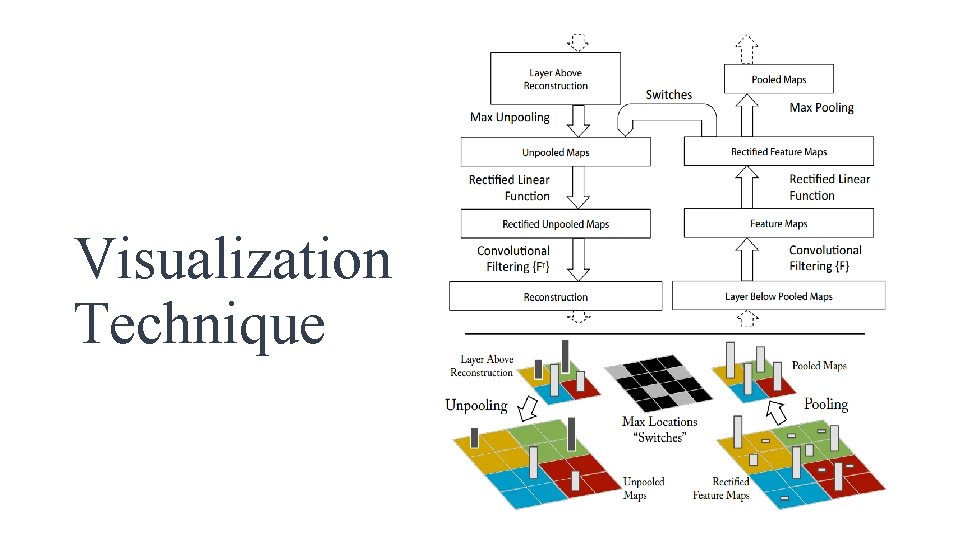

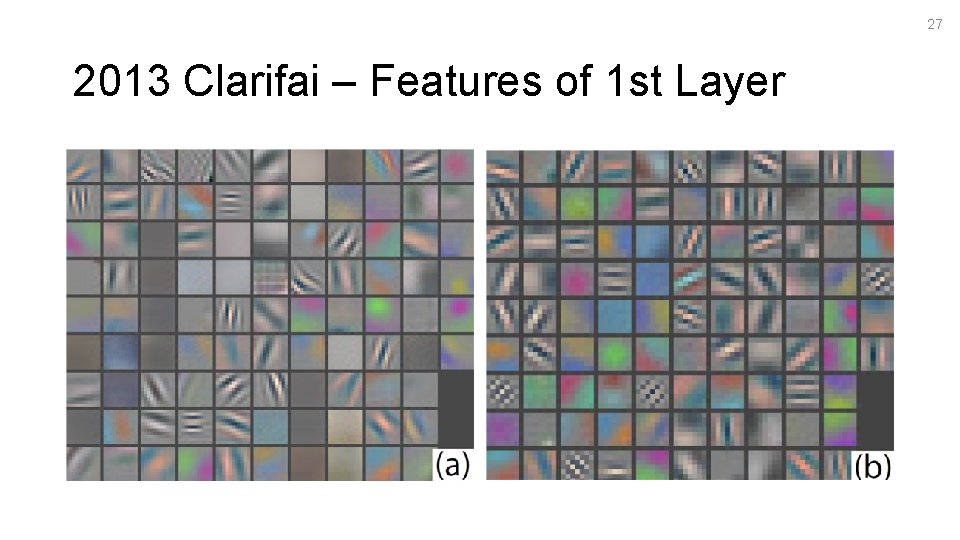

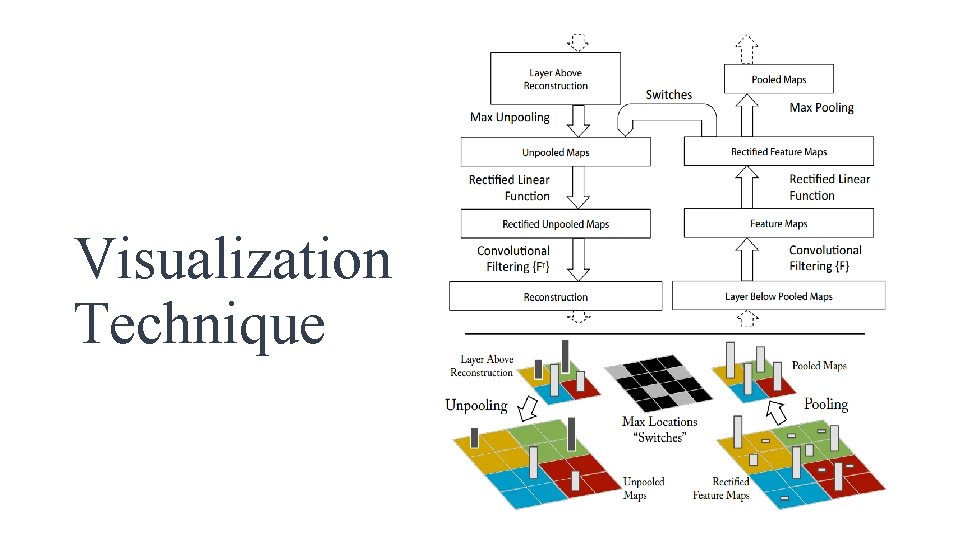

26 2013 Clarifai – Introduction

- Improved version of SuperVision's model
  - With different filter sizes and training parameters
  - Trained on one GPU
- Visualization technique
  - Visualizing the features learned by the model
- In ILSVRC 2013, Clarifai was the winner of image classification.

Matthew D. Zeiler, Rob Fergus. Visualizing and Understanding Convolutional Networks. In ECCV, 2014.

27 2013 Clarifai – Features of 1st Layer

Visualization Technique

29 2013 Clarifai – Layer #3 Visualization

2014 GoogLeNet Google Inc.

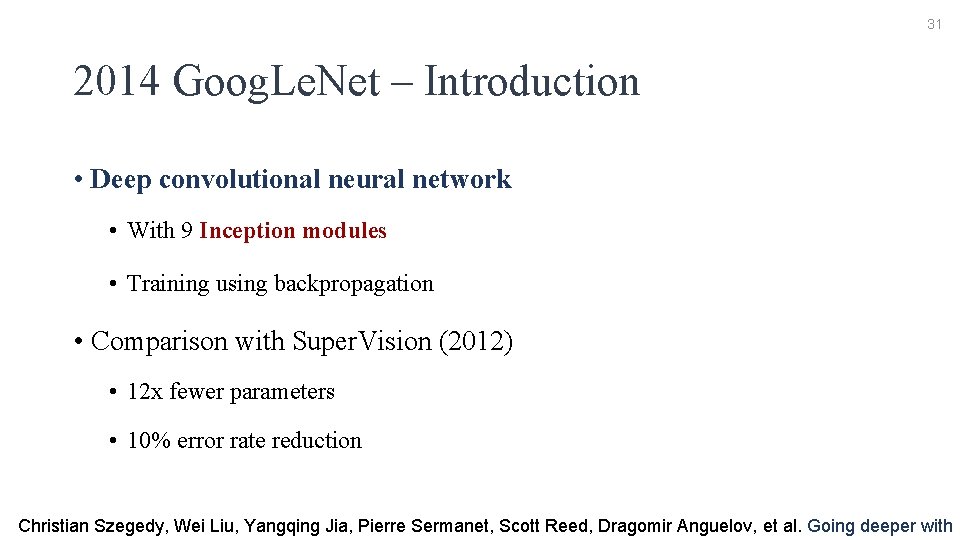

31 2014 GoogLeNet – Introduction

- Deep convolutional neural network
  - With 9 Inception modules
  - Trained using backpropagation
- Comparison with SuperVision (2012)
  - 12x fewer parameters
  - 10% error rate reduction

Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, et al. Going deeper with convolutions.

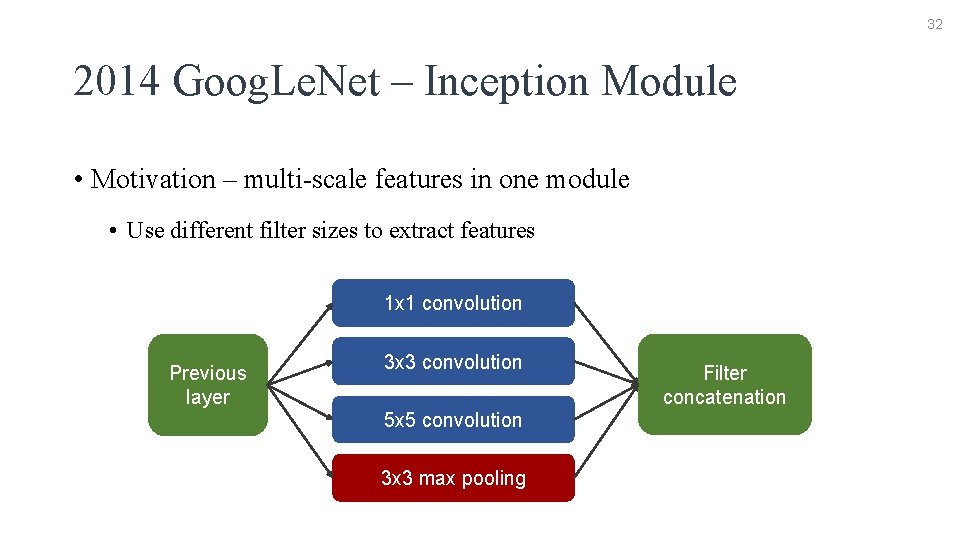

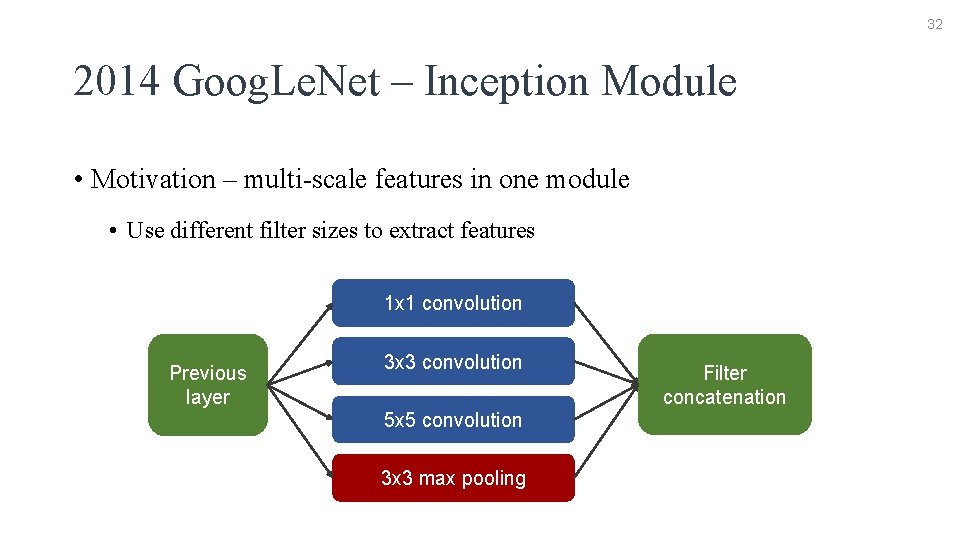

32 2014 GoogLeNet – Inception Module

- Motivation: multi-scale features in one module
- Use different filter sizes to extract features

[Figure: the previous layer feeds parallel 1x1 convolution, 3x3 convolution, 5x5 convolution, and 3x3 max pooling branches, joined by filter concatenation]

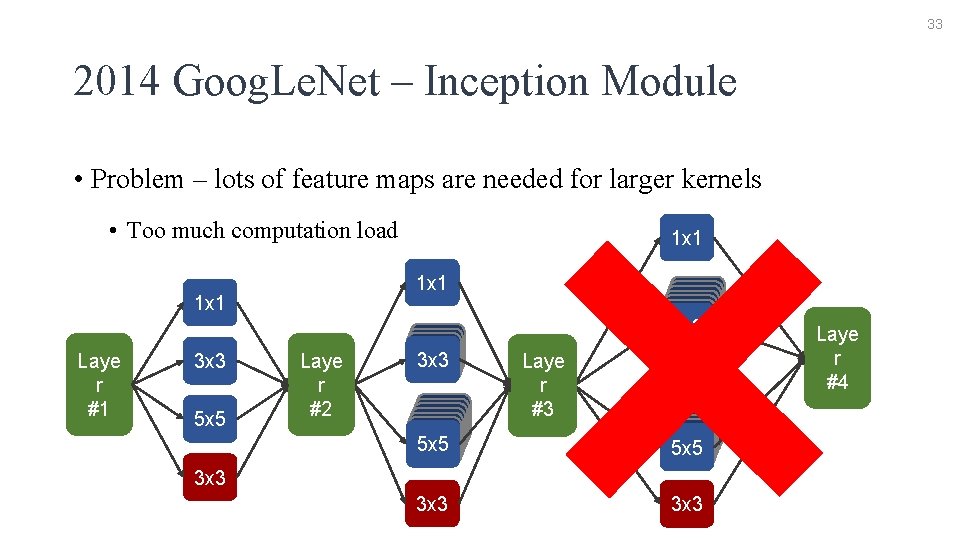

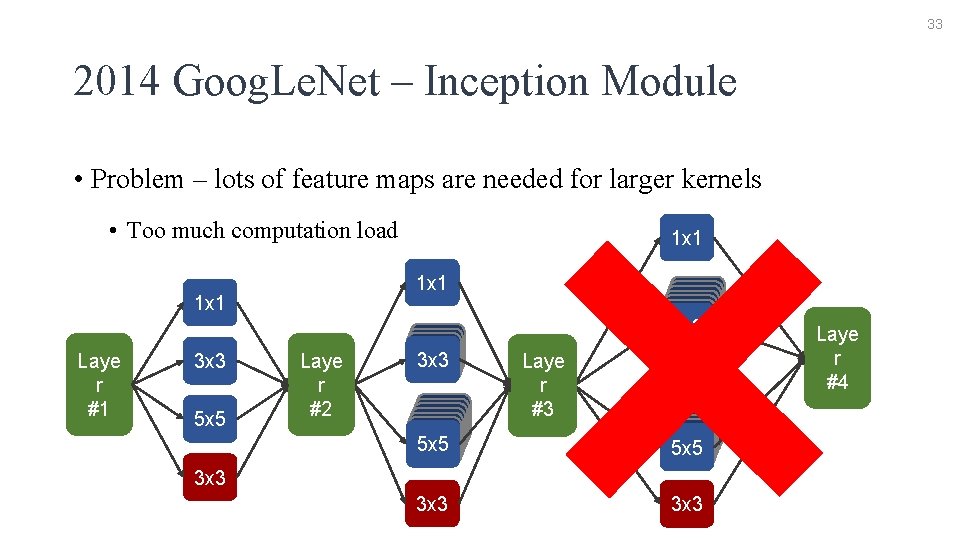

33 2014 GoogLeNet – Inception Module

- Problem: lots of feature maps are needed for larger kernels
- Too much computation load

[Figure: stacked layers #1 to #4, each with 1x1, 3x3, and 5x5 filters]

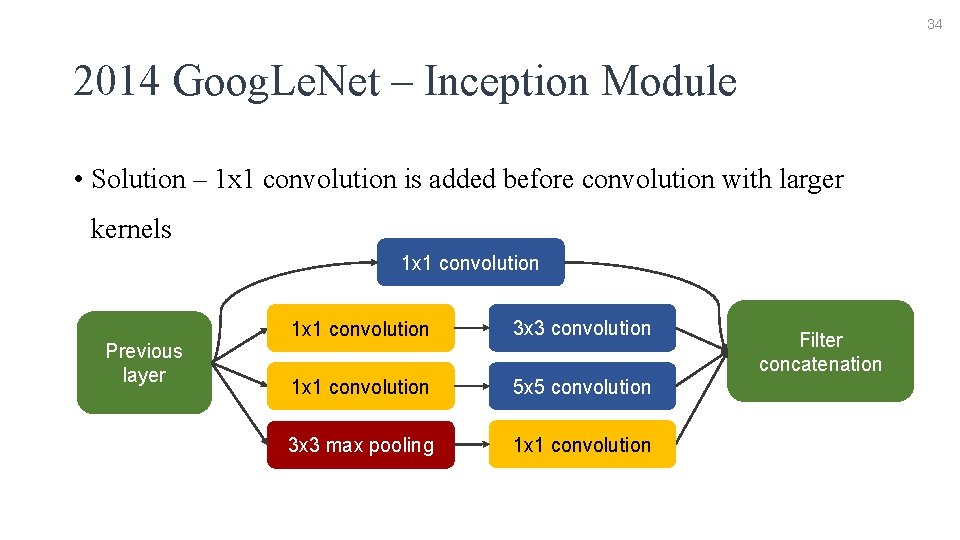

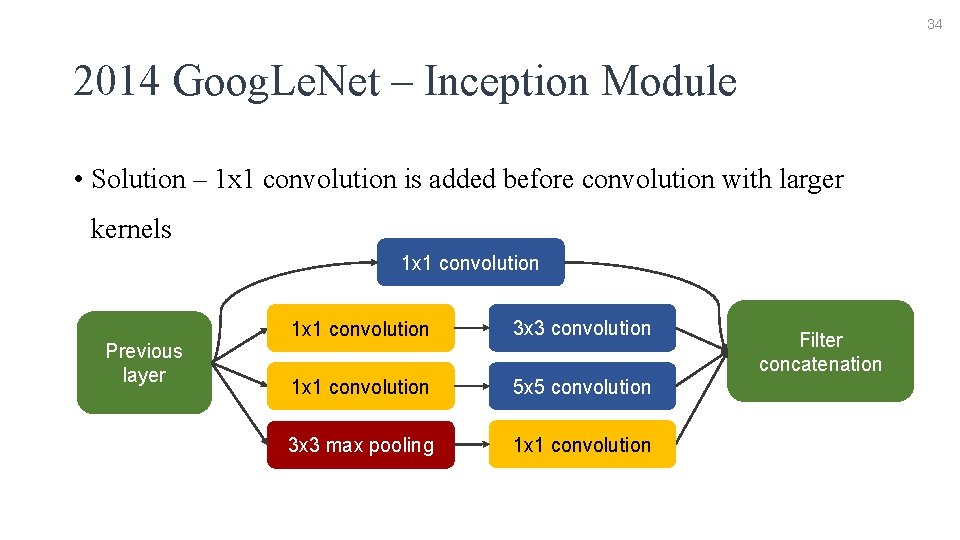

34 2014 GoogLeNet – Inception Module

- Solution: a 1x1 convolution is added before each convolution with a larger kernel

[Figure: the previous layer feeds four branches (1x1 convolution; 1x1 convolution then 3x3 convolution; 1x1 convolution then 5x5 convolution; 3x3 max pooling then 1x1 convolution), joined by filter concatenation]
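A back-of-the-envelope count shows why the 1x1 reduction helps. The channel counts below (192 input maps, 32 output maps, a reduction to 16 maps) are illustrative assumptions rather than figures from the slides, and bias terms are ignored.

```python
def conv_weights(in_channels, out_channels, kernel_size):
    """Number of weights in a square convolution layer, ignoring biases."""
    return kernel_size * kernel_size * in_channels * out_channels


def direct_5x5(in_channels, out_channels):
    """A 5x5 convolution applied straight to the previous layer."""
    return conv_weights(in_channels, out_channels, 5)


def reduced_5x5(in_channels, reduced_channels, out_channels):
    """A 1x1 convolution that shrinks the channel count, then the 5x5 convolution."""
    return (conv_weights(in_channels, reduced_channels, 1)
            + conv_weights(reduced_channels, out_channels, 5))


if __name__ == "__main__":
    print(direct_5x5(192, 32))       # 153600 weights
    print(reduced_5x5(192, 16, 32))  # 15872 weights
```

Under these assumed sizes the reduced branch needs roughly a tenth of the weights, and the multiply-accumulate count per output position shrinks by the same factor.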

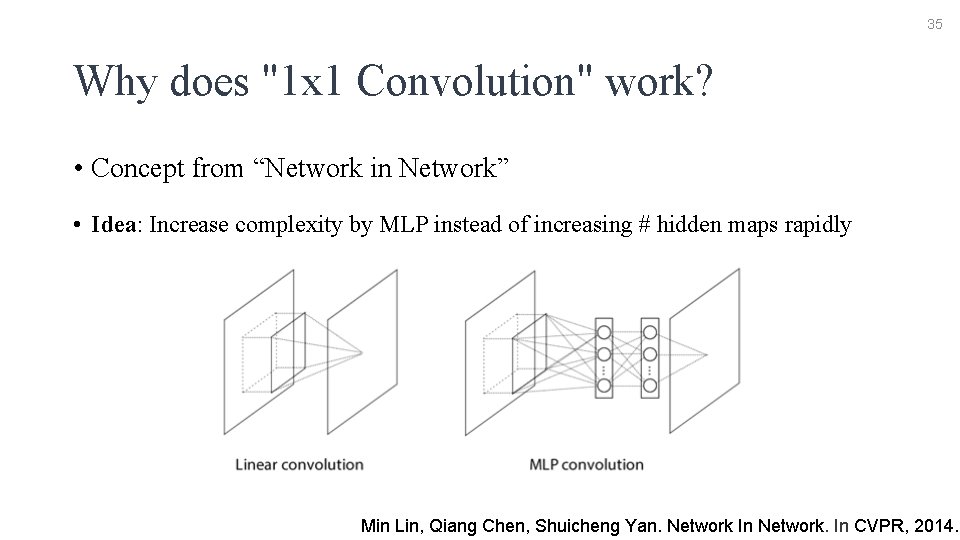

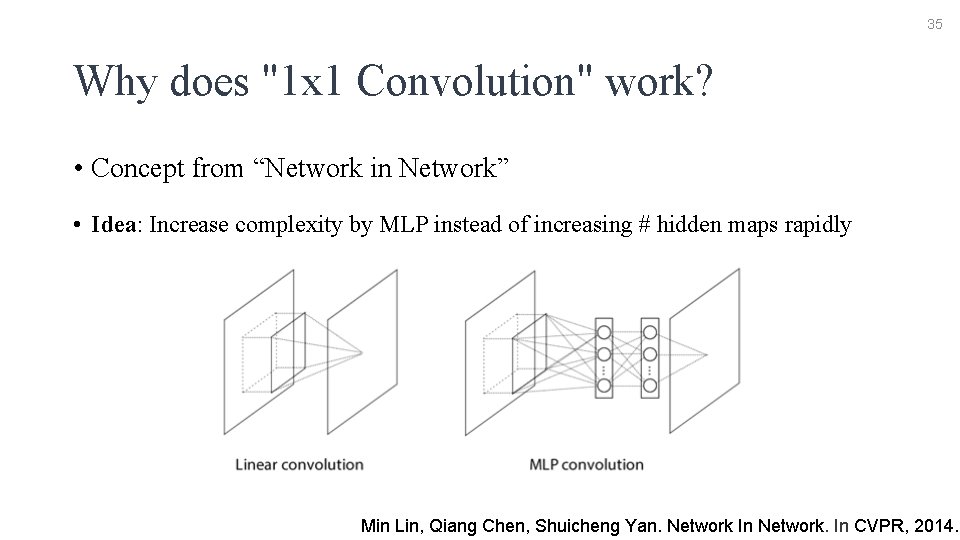

35 Why does "1x1 Convolution" work?

- Concept from "Network in Network"
- Idea: increase complexity with an MLP instead of rapidly increasing the number of hidden maps

Min Lin, Qiang Chen, Shuicheng Yan. Network In Network. In CVPR, 2014.

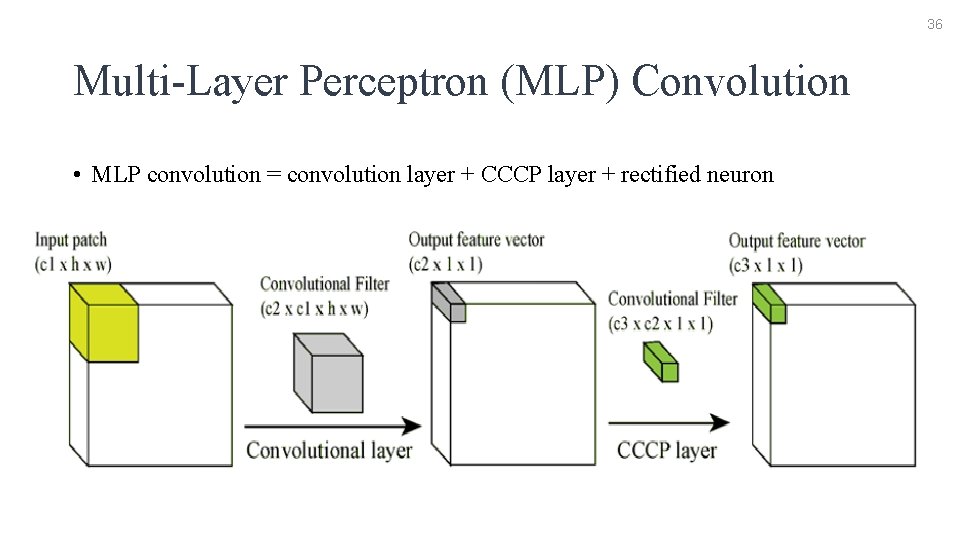

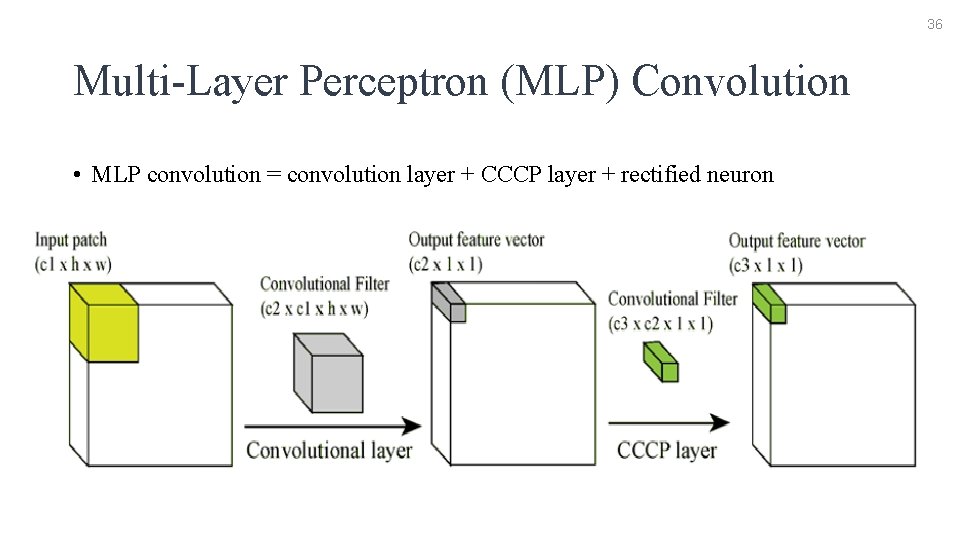

36 Multi-Layer Perceptron (MLP) Convolution

- MLP convolution = convolution layer + CCCP layer + rectified neuron

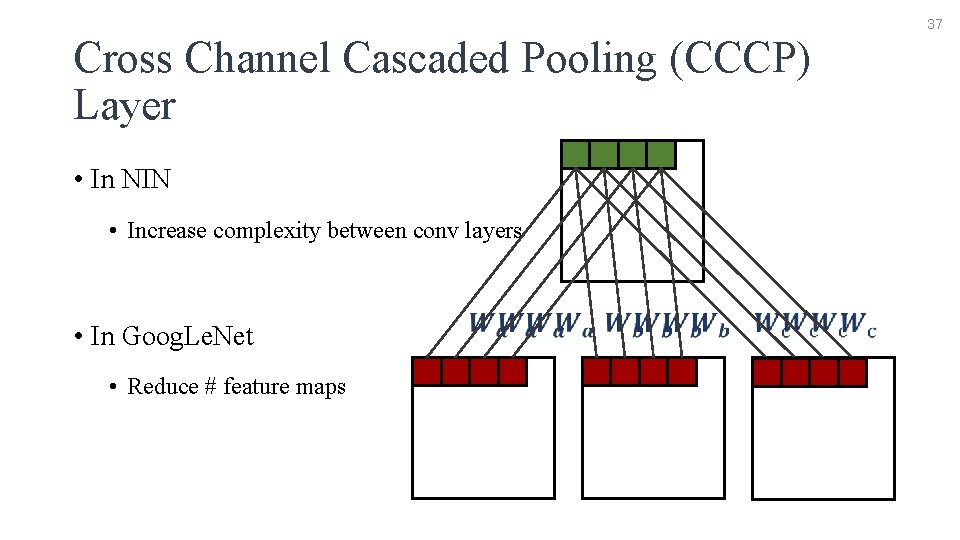

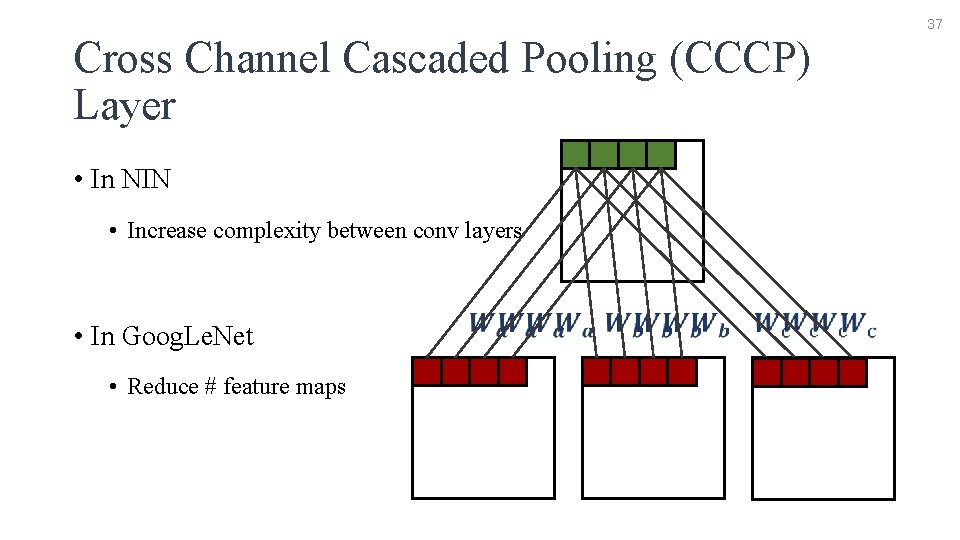

37 Cross Channel Cascaded Pooling (CCCP) Layer

- In NIN
  - Increase complexity between conv layers
- In GoogLeNet
  - Reduce the number of feature maps
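A CCCP layer can be read as a 1x1 convolution: at every pixel, each output map is a weighted combination of the input maps at that same pixel, followed here by a rectified neuron. The sketch below is an illustration under that reading; the maps and weights are made up, and using fewer output maps than input maps shows the feature-map reduction used in GoogLeNet.

```python
def conv1x1_relu(feature_maps, weights):
    """Mix channels per pixel: weights[o][c] links input map c to output map o."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return [[[max(0.0, sum(w * fm[i][j] for w, fm in zip(row, feature_maps)))
              for j in range(width)]
             for i in range(height)]
            for row in weights]


if __name__ == "__main__":
    # Three hypothetical 2x2 input maps reduced to two output maps.
    maps = [
        [[1, 2], [3, 4]],
        [[0, 1], [1, 0]],
        [[2, 2], [2, 2]],
    ]
    weights = [
        [1.0, 0.0, 0.0],   # output 0 copies input map 0
        [0.5, -1.0, 0.5],  # output 1 mixes all three input maps
    ]
    for out_map in conv1x1_relu(maps, weights):
        print(out_map)
```

No spatial neighbourhood is involved, so the layer adds cross-channel capacity (or reduces the number of maps) at low cost.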

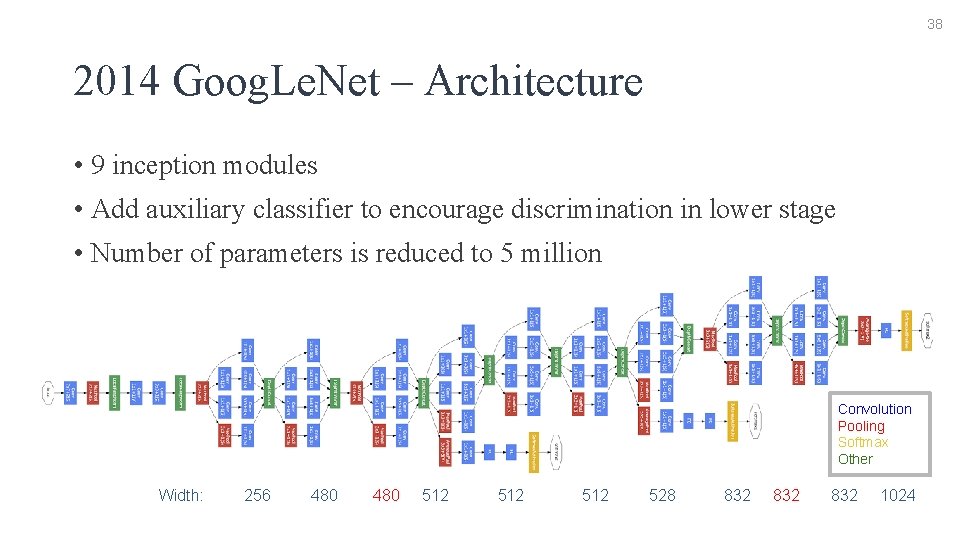

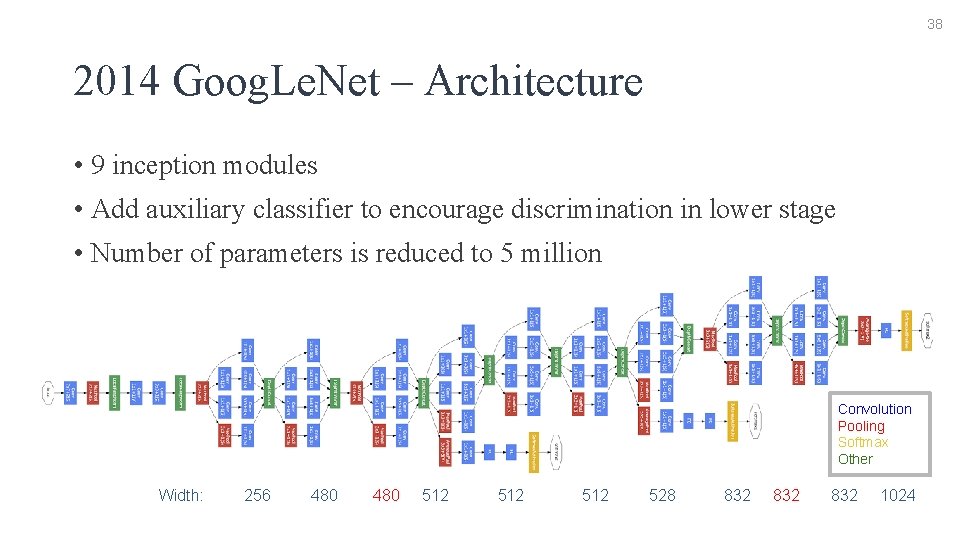

38 2014 GoogLeNet – Architecture

- 9 Inception modules
- Auxiliary classifier added to encourage discrimination in the lower stages
- Number of parameters reduced to 5 million

[Figure: architecture diagram; legend: Convolution, Pooling, Softmax, Other; width: 256, 480, 512, 512, 528, 832, 832, 1024]


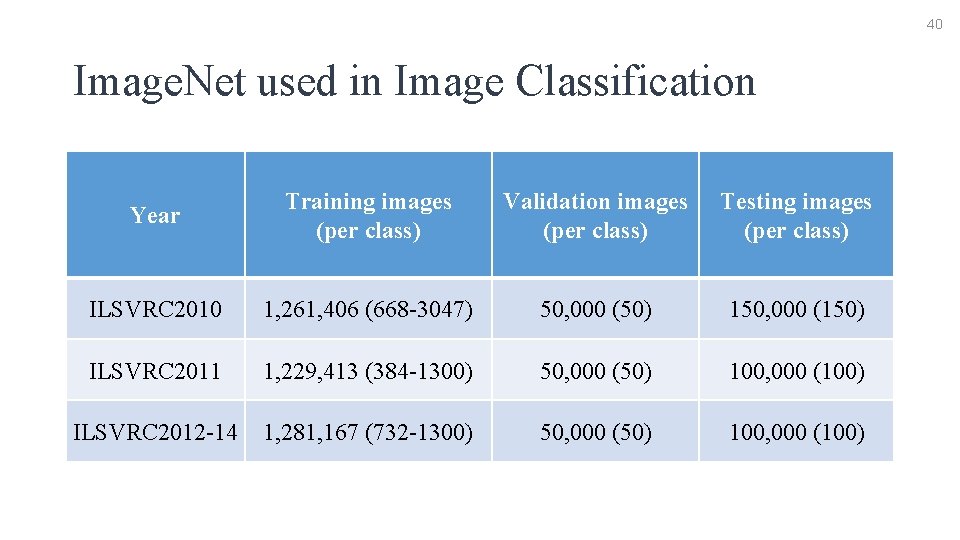

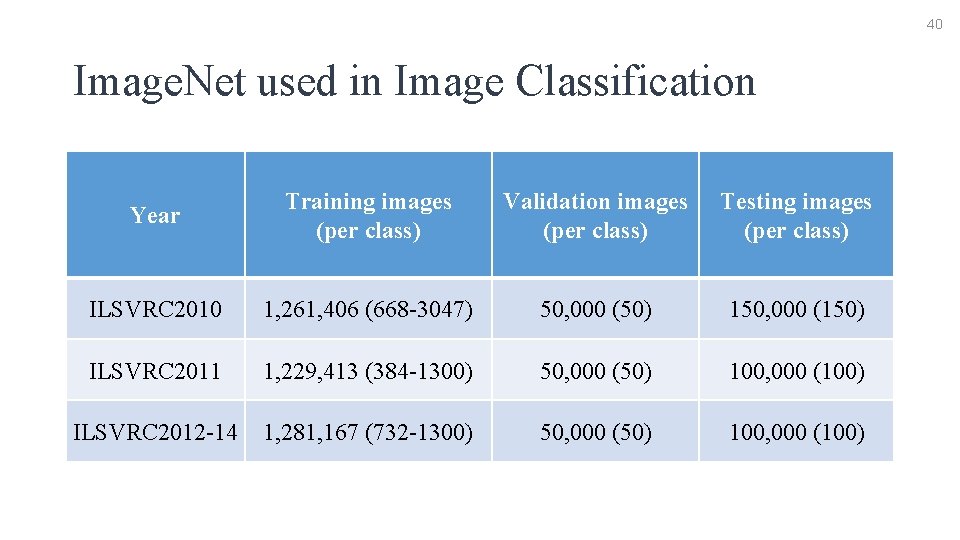

40 ImageNet used in Image Classification

| Year | Training images (per class) | Validation images (per class) | Testing images (per class) |
| --- | --- | --- | --- |
| ILSVRC 2010 | 1,261,406 (668-3047) | 50,000 (50) | 150,000 (150) |
| ILSVRC 2011 | 1,229,413 (384-1300) | 50,000 (50) | 100,000 (100) |
| ILSVRC 2012-14 | 1,281,167 (732-1300) | 50,000 (50) | 100,000 (100) |

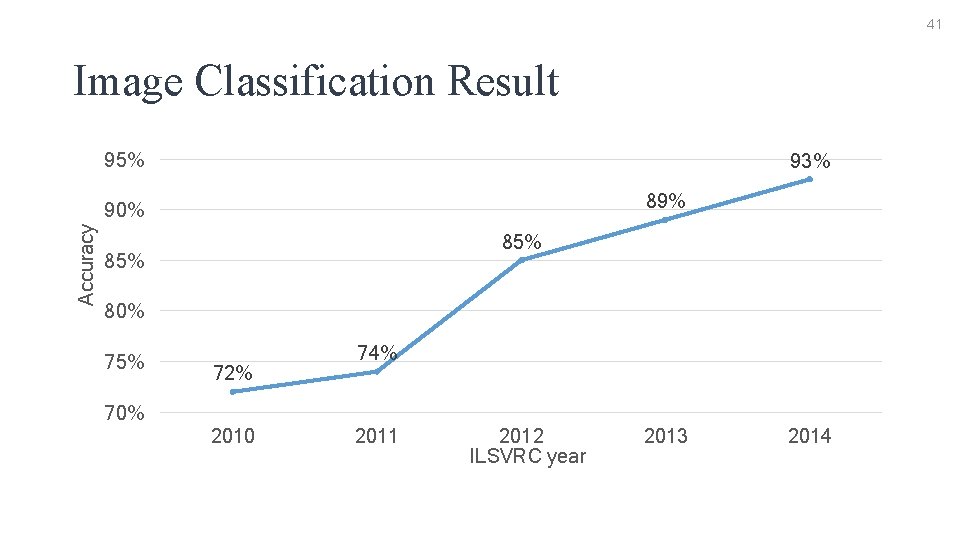

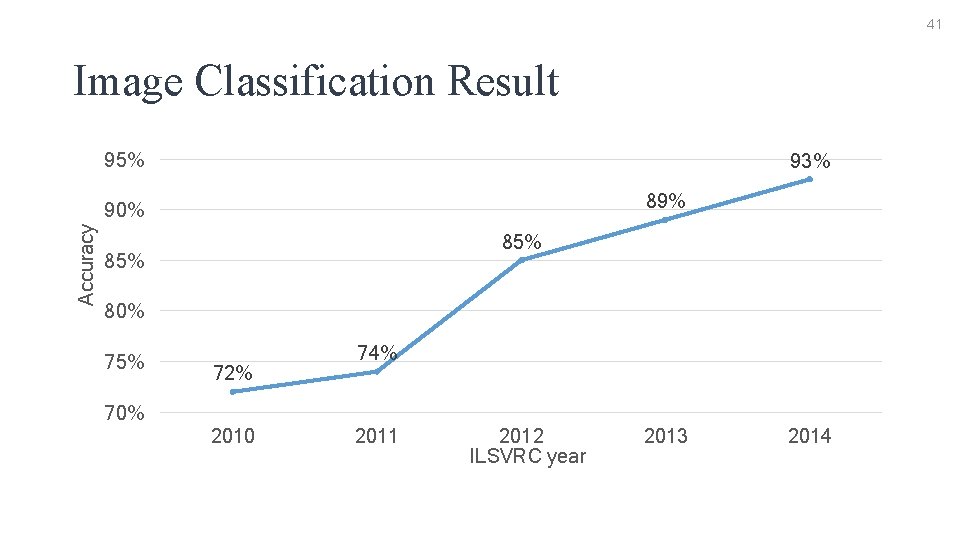

41 Image Classification Result

[Chart: accuracy by ILSVRC year, 2010 to 2014; labelled values include 72%, 74%, 89%, and 93%]

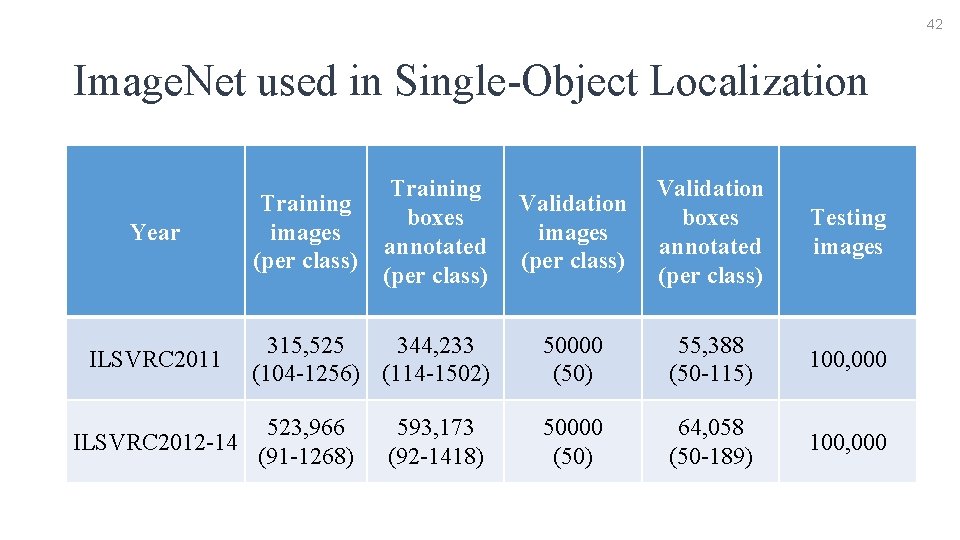

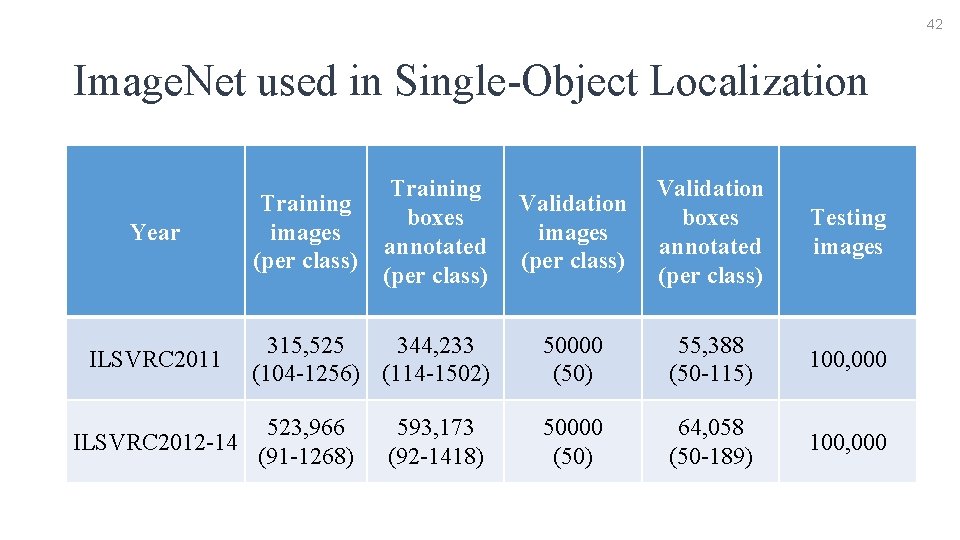

42 ImageNet used in Single-Object Localization

| Year | Training images (per class) | Training boxes annotated (per class) | Validation images (per class) | Validation boxes annotated (per class) | Testing images |
| --- | --- | --- | --- | --- | --- |
| ILSVRC 2011 | 315,525 (104-1256) | 344,233 (114-1502) | 50,000 (50) | 55,388 (50-115) | 100,000 |
| ILSVRC 2012-14 | 523,966 (91-1268) | 593,173 (92-1418) | 50,000 (50) | 64,058 (50-189) | 100,000 |

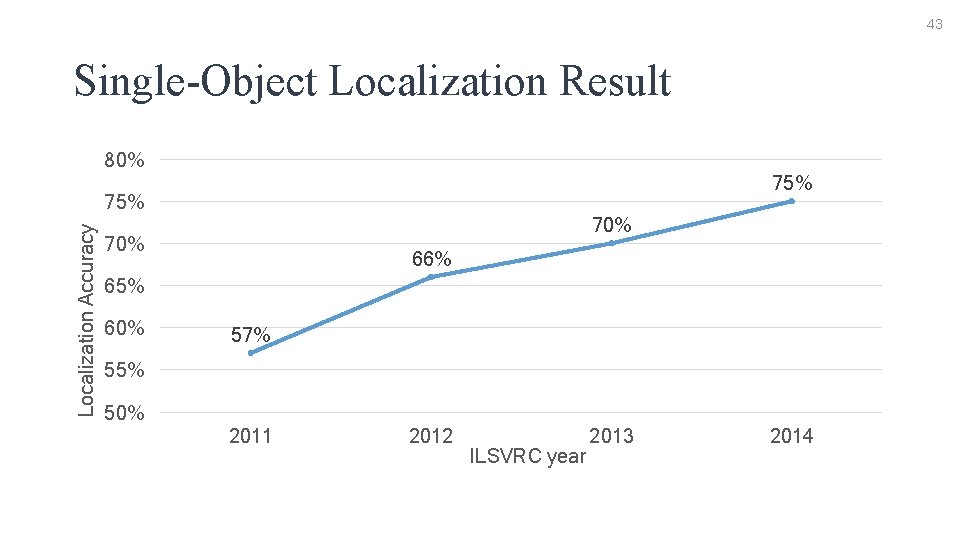

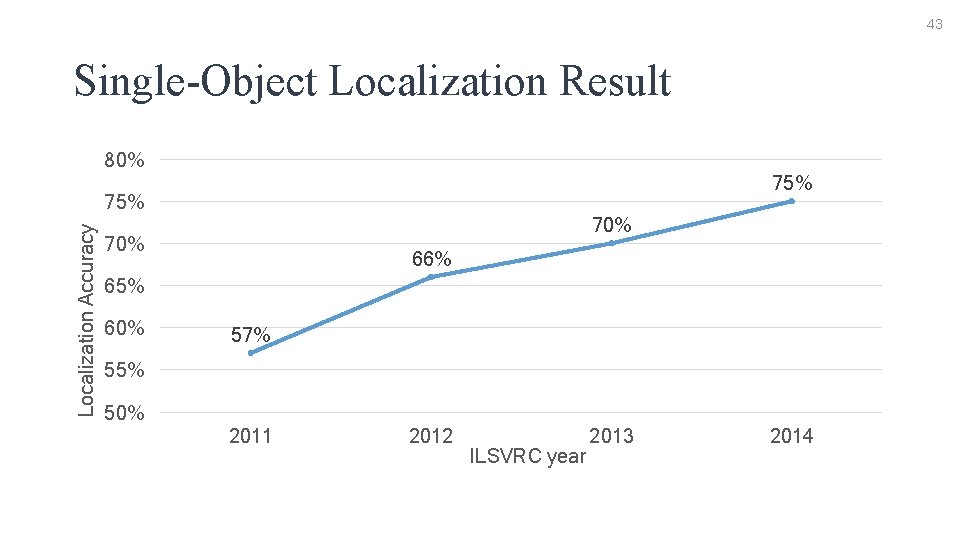

43 Single-Object Localization Result

[Chart: localization accuracy by ILSVRC year, 2011 to 2014; labelled values include 57%, 66%, and 75%]

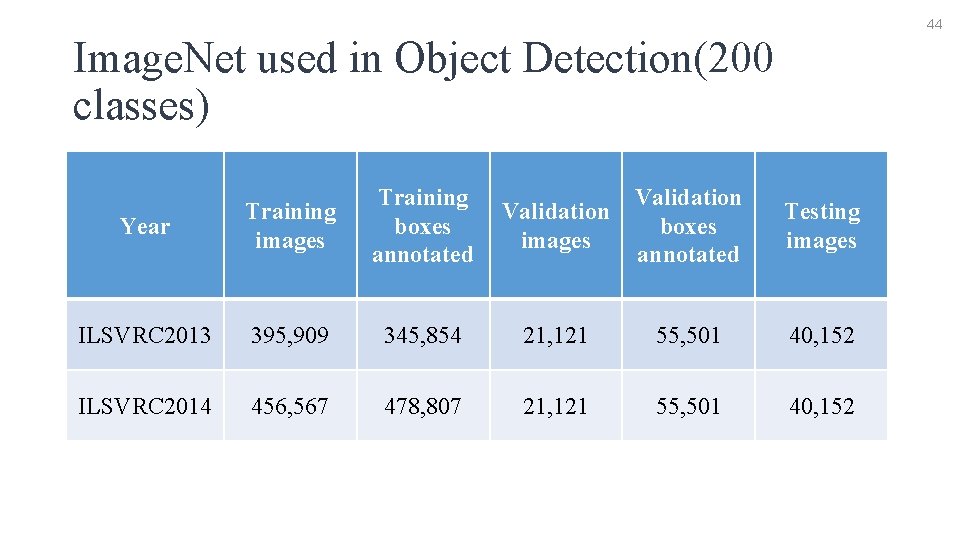

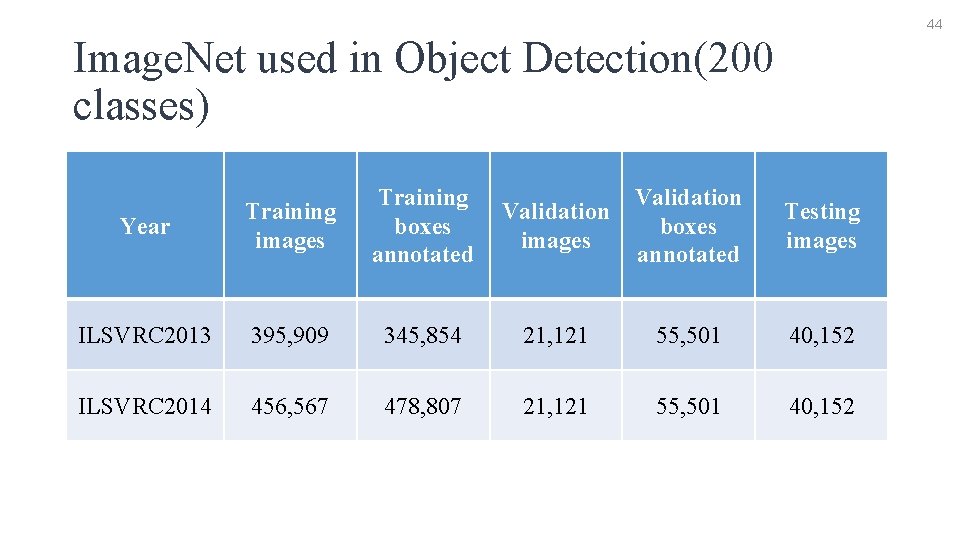

44 ImageNet used in Object Detection (200 classes)

| Year | Training images | Training boxes annotated | Validation images | Validation boxes annotated | Testing images |
| --- | --- | --- | --- | --- | --- |
| ILSVRC 2013 | 395,909 | 345,854 | 21,121 | 55,501 | 40,152 |
| ILSVRC 2014 | 456,567 | 478,807 | 21,121 | 55,501 | 40,152 |

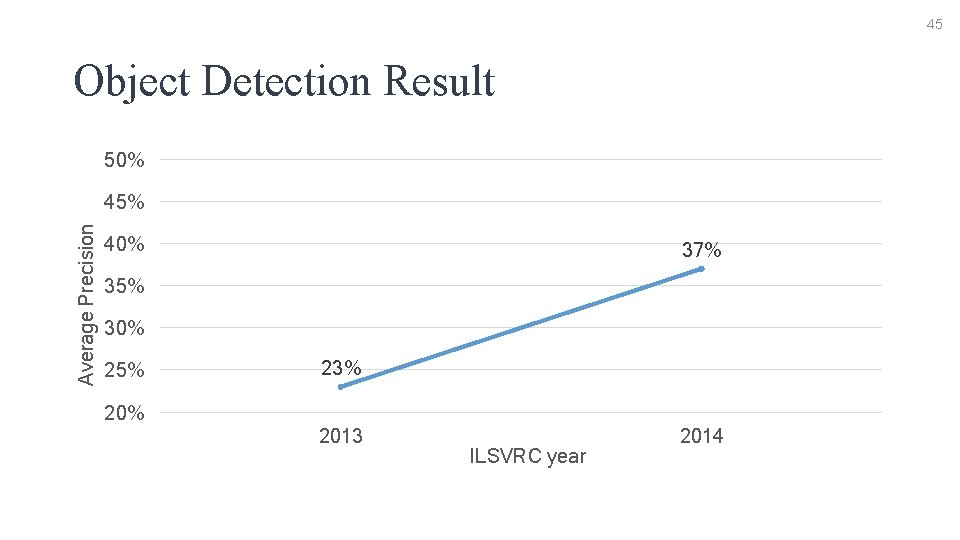

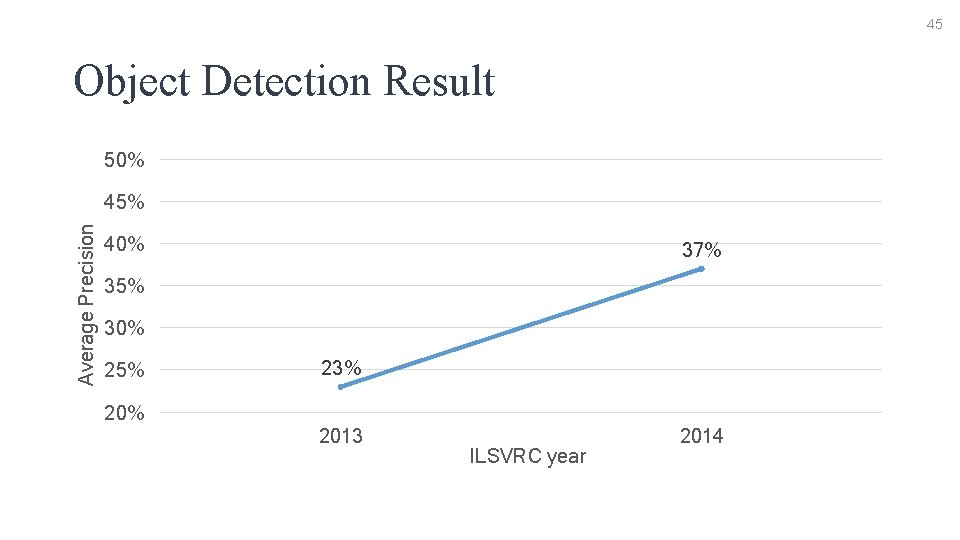

45 Object Detection Result

[Chart: average precision by ILSVRC year, 2013 and 2014; labelled values 23% and 37%]

46 Reference

- Coursera, Geoffrey E Hinton, "Neural Networks for Machine Learning"
- ImageNet Website: http://image-net.org/
- Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg and Li Fei-Fei. ImageNet Large Scale Visual Recognition Challenge.
- Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. Imagenet classification with deep convolutional neural networks. In NIPS, 2012.
- Matthew D. Zeiler, Rob Fergus. Visualizing and Understanding Convolutional Networks. In ECCV, 2014.
- Min Lin, Qiang Chen, Shuicheng Yan. Network In Network. In CVPR, 2014.
- Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions.

Q&A

Thank you