Introduction to Concurrency in Programming Languages Appendices A

Introduction to Concurrency in Programming Languages: Appendices

A brief overview of OpenMP, Erlang, and Cilk

Matthew J. Sottile, Timothy G. Mattson, Craig E Rasmussen

© 2009 Matthew J. Sottile, Timothy G. Mattson, and Craig E Rasmussen

Objectives

• To provide enough background information so you can start writing simple programs in OpenMP, Erlang, or Cilk.

Programmers learn by writing code. Our goal is to give students enough information to start writing parallel code.

Outline

• OpenMP
• Erlang
• Cilk

OpenMP* Overview

OpenMP: An API for Writing Multithreaded Applications

• A set of compiler directives and library routines for parallel application programmers.
• Makes writing multi-threaded applications in Fortran, C and C++ as easy as we can make it.
• Standardizes 20 years of SMP practice.

(Slide background: a collage of OpenMP directives and routines, for example C$OMP FLUSH, #pragma omp critical, C$OMP PARALLEL DO ORDERED PRIVATE (A, B, C), CALL OMP_SET_NUM_THREADS(10), omp_set_lock(lck), and setenv OMP_SCHEDULE “dynamic”.)

* The name “OpenMP” is the property of the OpenMP Architecture Review Board.

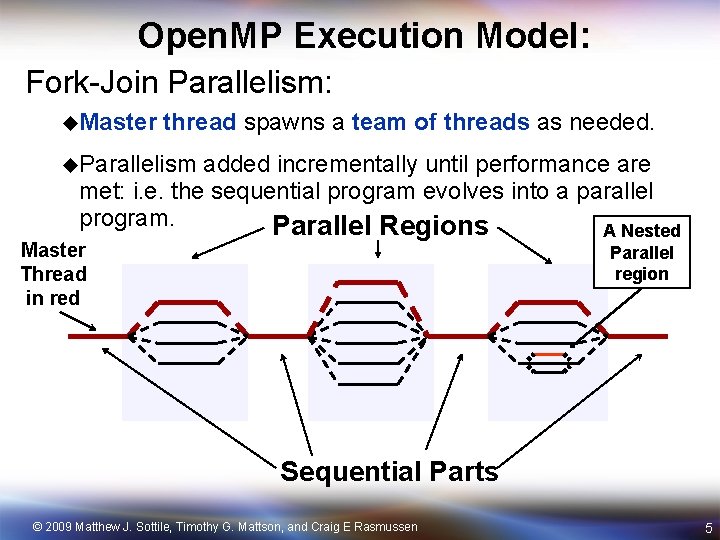

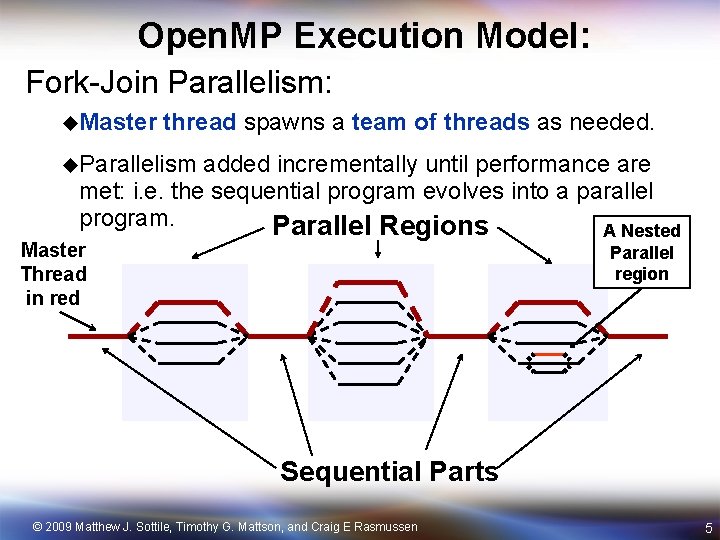

OpenMP Execution Model

Fork-Join Parallelism:

• The master thread spawns a team of threads as needed.
• Parallelism is added incrementally until performance goals are met, i.e. the sequential program evolves into a parallel program.

(Figure: the master thread, shown in red, runs the sequential parts and forks a team of threads at each parallel region, including one nested parallel region.)

The essence of OpenMP

• Create threads that execute in a shared address space:
  – The only way to create threads is with the “parallel construct”.
  – Once created, all threads execute the code inside the construct.
• Split up the work between threads by one of two means:
  – SPMD (Single Program Multiple Data): all threads execute the same code and you use the thread ID to assign work to a thread.
  – Workshare constructs split up loops and tasks between threads.
• Manage the data environment to avoid data access conflicts:
  – Synchronization so correct results are produced regardless of how threads are scheduled.
  – Carefully manage which data can be private (local to each thread) and which must be shared.
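
The SPMD idea above, using the thread ID to assign work, can be sketched as a helper that turns a thread ID into a contiguous block of loop iterations. This is an illustrative sketch rather than code from the slides: the names `block_range` and `spmd_fill` are hypothetical, and the stub functions are an assumption that lets the code build and run serially when OpenMP is not enabled.

```c
#include <assert.h>

#ifdef _OPENMP
#include <omp.h>
#else
/* Fallback stubs (an assumption of this sketch) so the code also builds
   and runs serially when the compiler is not in OpenMP mode. */
static int omp_get_thread_num(void)  { return 0; }
static int omp_get_num_threads(void) { return 1; }
#endif

/* Hypothetical helper: compute the half-open range [*lo, *hi) of the n
   iterations owned by thread `id` out of `nthreads`. The first
   n % nthreads threads get one extra iteration, so block sizes differ
   by at most one. */
void block_range(long n, int id, int nthreads, long *lo, long *hi)
{
    assert(n >= 0 && nthreads > 0 && id >= 0 && id < nthreads);
    long base  = n / nthreads;
    long extra = n % nthreads;
    *lo = id * base + (id < extra ? id : extra);
    *hi = *lo + base + (id < extra ? 1 : 0);
}

/* SPMD style: every thread runs the same code and uses its ID to pick
   the block of the array it is responsible for. */
void spmd_fill(double *a, long n)
{
    #pragma omp parallel
    {
        long lo, hi;
        block_range(n, omp_get_thread_num(), omp_get_num_threads(), &lo, &hi);
        for (long i = lo; i < hi; i++)
            a[i] = 2.0 * (double) i;   /* each element is written by exactly one thread */
    }
}
```

With 10 iterations and 4 threads, the helper hands out the ranges [0,3), [3,6), [6,8) and [8,10). The PI example later in the deck uses a cyclic distribution instead (thread ID as the loop start, thread count as the stride); both are ordinary SPMD choices.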

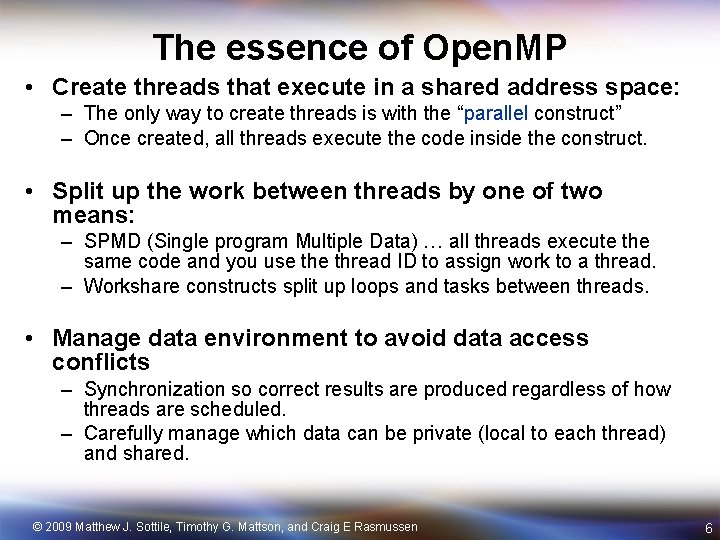

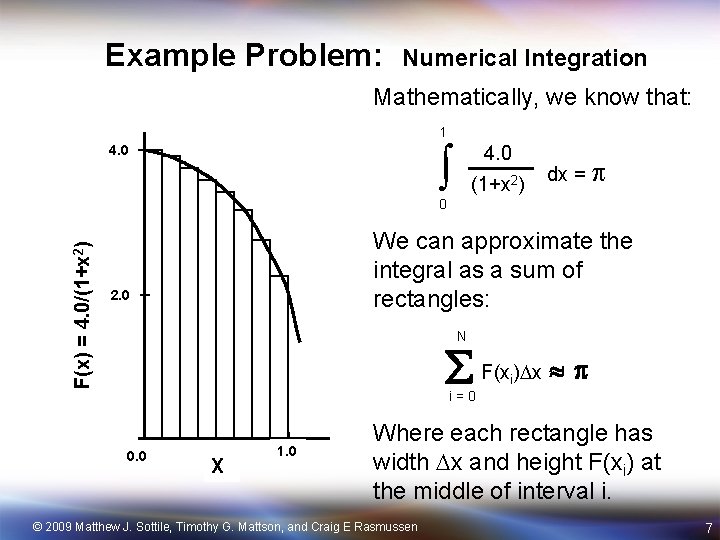

Example Problem: Numerical Integration

Mathematically, we know that:

$$\int_0^1 \frac{4.0}{1+x^2}\,dx = \pi$$

We can approximate the integral as a sum of $N$ rectangles:

$$\sum_{i=0}^{N-1} F(x_i)\,\Delta x \approx \pi, \qquad F(x) = \frac{4.0}{1+x^2}$$

where each rectangle has width $\Delta x$ and height $F(x_i)$ at the middle of interval $i$.

(Figure: plot of $F(x)$ for $x$ from 0.0 to 1.0, with the area under the curve covered by rectangles.)
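
As a quick sanity check of the rectangle sum (a worked example added here, not taken from the slides), use only $N = 2$ rectangles, so that $\Delta x = 0.5$ and the interval midpoints are $x_0 = 0.25$ and $x_1 = 0.75$:

$$
\sum_{i=0}^{1} F(x_i)\,\Delta x
= 0.5\left(\frac{4.0}{1+0.25^2} + \frac{4.0}{1+0.75^2}\right)
= 0.5\,(3.7647\ldots + 2.56)
\approx 3.1624
$$

Even two rectangles land about 0.02 away from $\pi \approx 3.1416$; the programs in this deck use 100000 steps.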

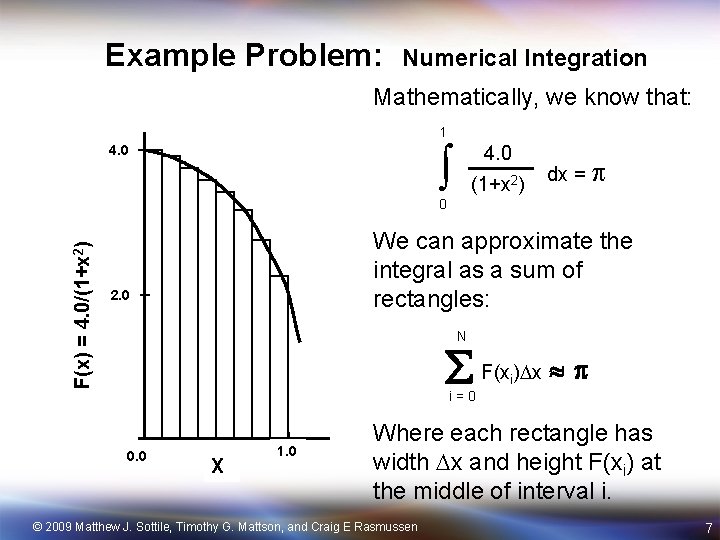

PI Program: an example

    static long num_steps = 100000;
    double step;

    int main(void)
    {
        int i;
        double x, pi, sum = 0.0;

        step = 1.0 / (double) num_steps;
        x = 0.5 * step;                      /* midpoint of the first interval */
        for (i = 0; i < num_steps; i++) {
            sum += 4.0 / (1.0 + x * x);      /* height of rectangle i */
            x += step;                       /* move to the next midpoint */
        }
        pi = step * sum;
        return 0;
    }

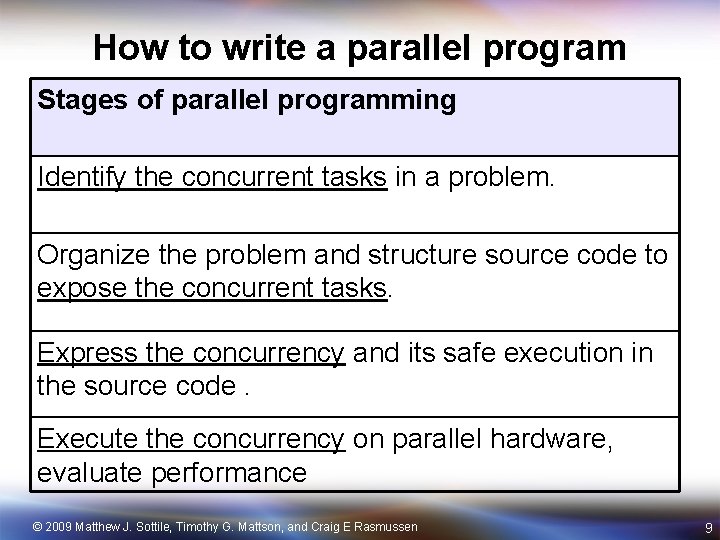

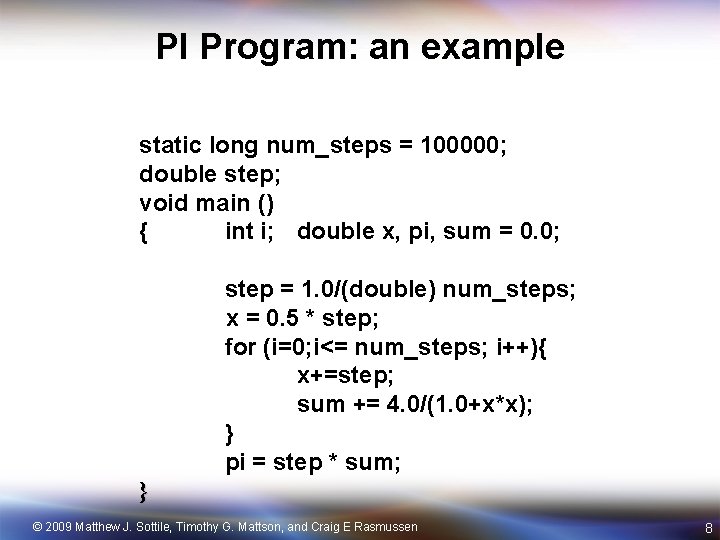

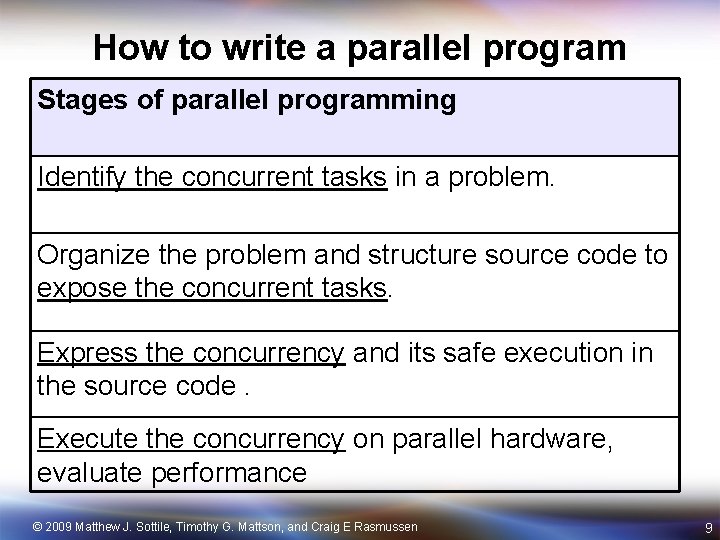

How to write a parallel program

Stages of parallel programming:

• Identify the concurrent tasks in a problem.
• Organize the problem and structure source code to expose the concurrent tasks.
• Express the concurrency and its safe execution in the source code.
• Execute the concurrency on parallel hardware and evaluate performance.

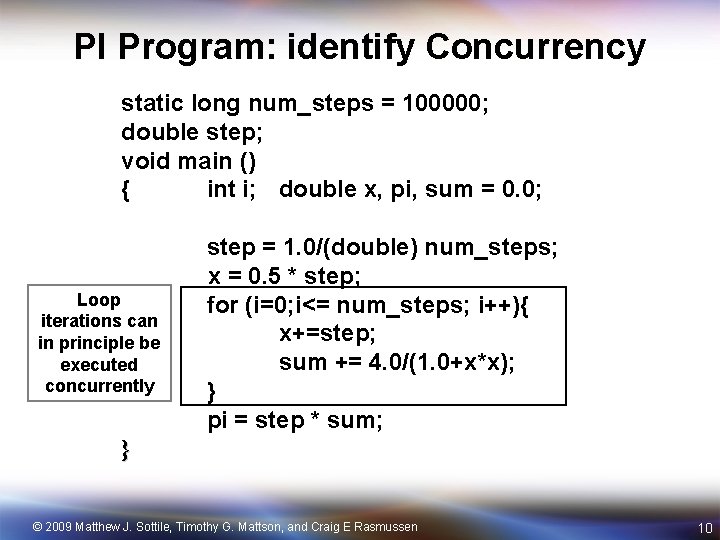

PI Program: Identify Concurrency

    static long num_steps = 100000;
    double step;

    int main(void)
    {
        int i;
        double x, pi, sum = 0.0;

        step = 1.0 / (double) num_steps;
        x = 0.5 * step;
        /* Loop iterations can in principle be executed concurrently. */
        for (i = 0; i < num_steps; i++) {
            sum += 4.0 / (1.0 + x * x);
            x += step;
        }
        pi = step * sum;
        return 0;
    }

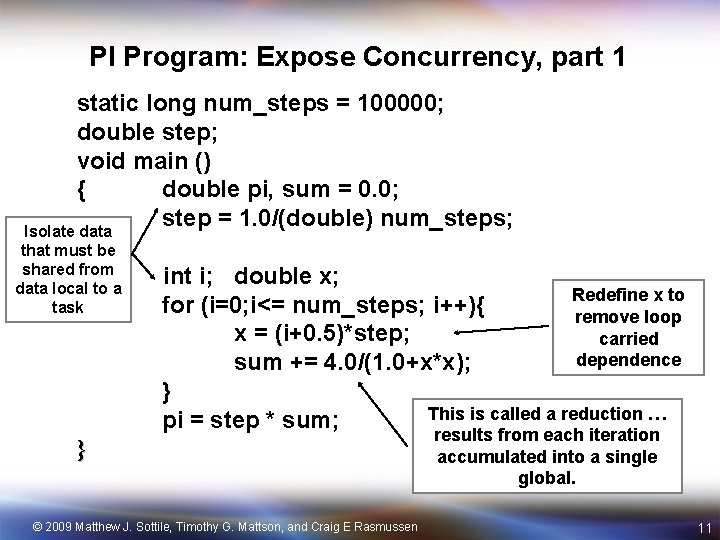

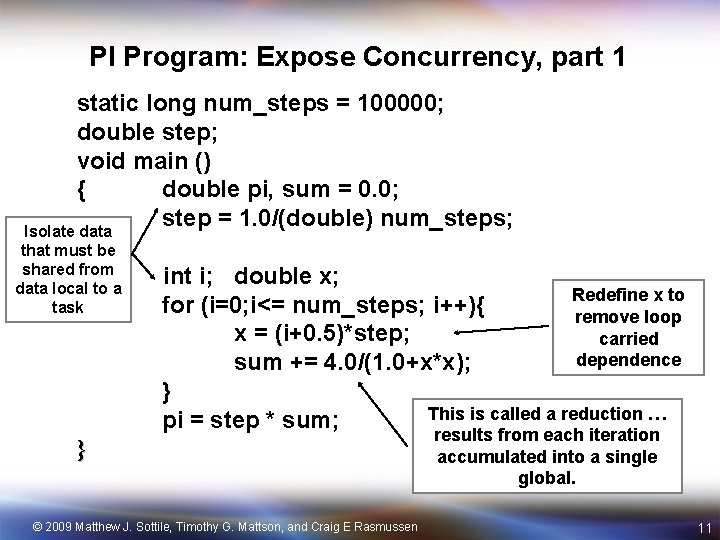

PI Program: Expose Concurrency, part 1

Isolate data that must be shared from data local to a task.

    static long num_steps = 100000;
    double step;

    int main(void)
    {
        /* Data that must be shared. */
        double pi, sum = 0.0;
        step = 1.0 / (double) num_steps;

        /* Data local to a task. */
        int i;
        double x;

        for (i = 0; i < num_steps; i++) {
            x = (i + 0.5) * step;           /* redefine x to remove the loop-carried dependence */
            sum += 4.0 / (1.0 + x * x);     /* this is called a reduction */
        }
        pi = step * sum;
        return 0;
    }

In a reduction, the results from each iteration are accumulated into a single global.

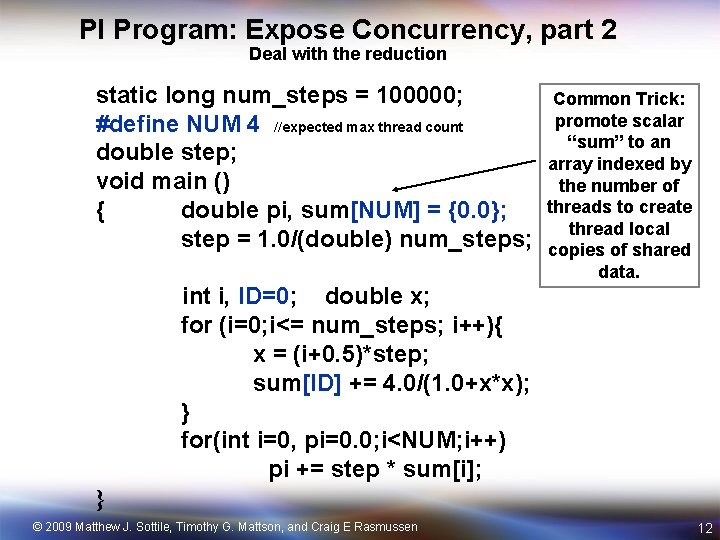

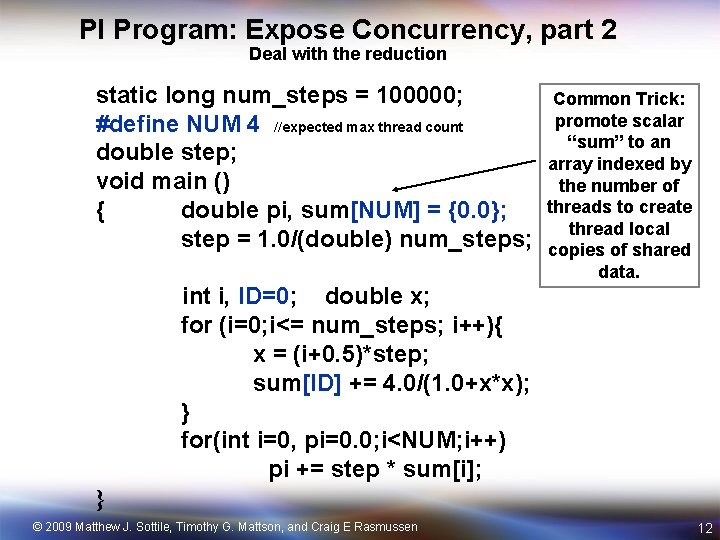

PI Program: Expose Concurrency, part 2

Deal with the reduction. Common trick: promote the scalar “sum” to an array with one element per thread, indexed by thread ID, to create thread-local copies of shared data.

    static long num_steps = 100000;
    #define NUM 4                        /* expected max thread count */
    double step;

    int main(void)
    {
        double pi = 0.0, sum[NUM] = {0.0};
        step = 1.0 / (double) num_steps;

        int i, ID = 0;
        double x;

        for (i = 0; i < num_steps; i++) {
            x = (i + 0.5) * step;
            sum[ID] += 4.0 / (1.0 + x * x);
        }
        for (i = 0; i < NUM; i++)
            pi += step * sum[i];
        return 0;
    }

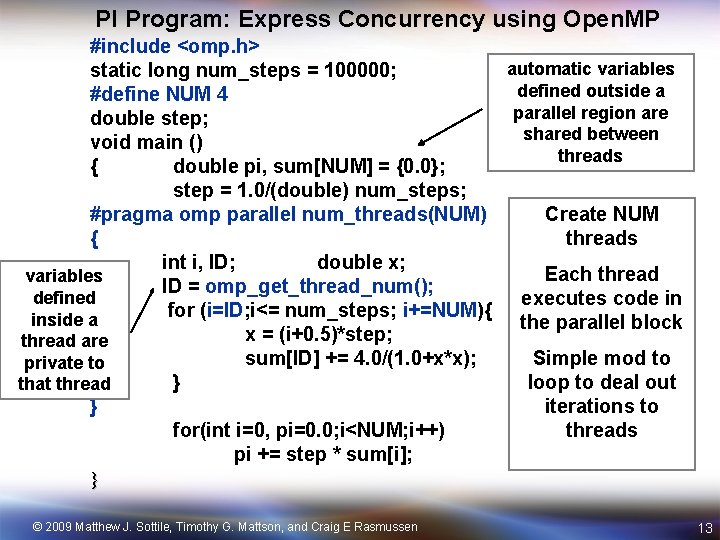

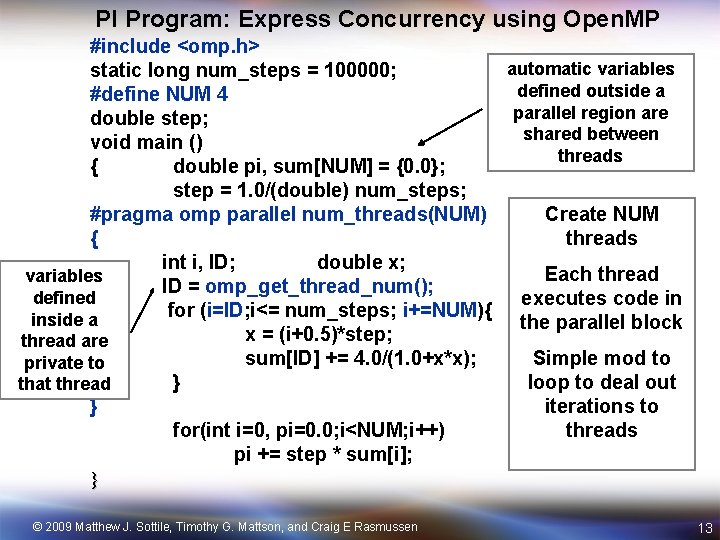

PI Program: Express Concurrency using OpenMP

    #include <omp.h>
    static long num_steps = 100000;
    #define NUM 4
    double step;

    int main(void)
    {
        /* Automatic variables defined outside a parallel region
           are shared between threads. */
        double pi = 0.0, sum[NUM] = {0.0};
        step = 1.0 / (double) num_steps;

        #pragma omp parallel num_threads(NUM)     /* create NUM threads */
        {
            /* Each thread executes the code in the parallel block.
               Variables defined inside the block are private to that thread. */
            int i, ID;
            double x;
            ID = omp_get_thread_num();
            /* Simple mod to the loop to deal out iterations to threads. */
            for (i = ID; i < num_steps; i += NUM) {
                x = (i + 0.5) * step;
                sum[ID] += 4.0 / (1.0 + x * x);
            }
        }
        for (int i = 0; i < NUM; i++)
            pi += step * sum[i];
        return 0;
    }

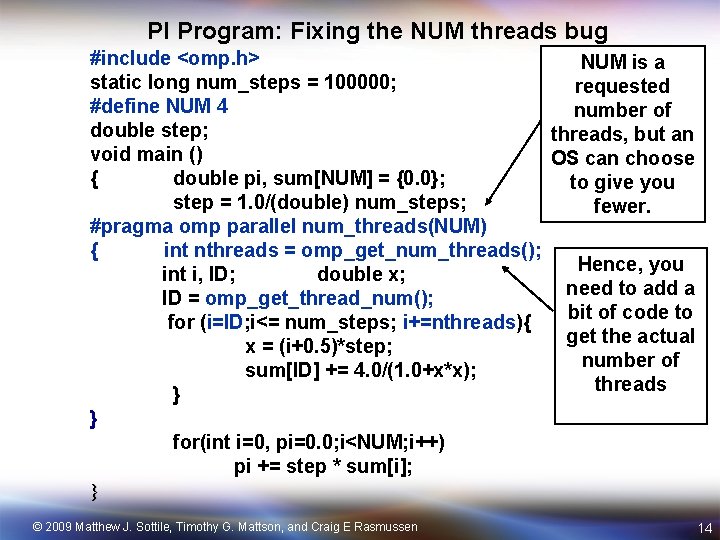

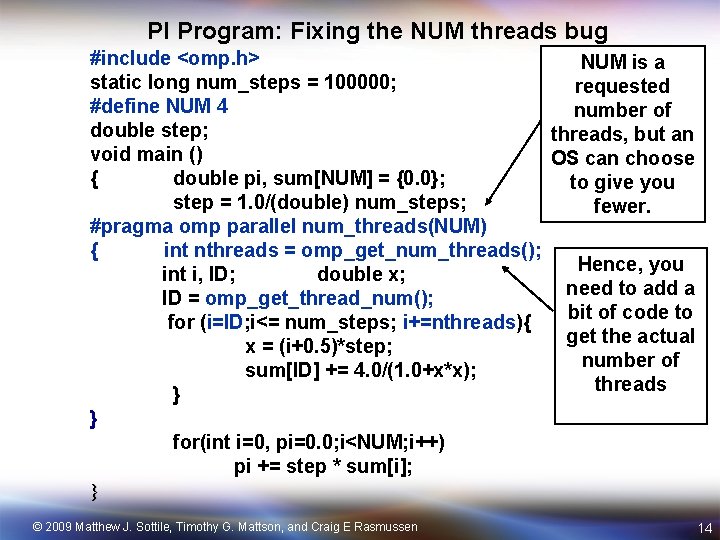

PI Program: Fixing the NUM threads bug

NUM is a requested number of threads, but an OS can choose to give you fewer. Hence, you need to add a bit of code to get the actual number of threads.

    #include <omp.h>
    static long num_steps = 100000;
    #define NUM 4
    double step;

    int main(void)
    {
        double pi = 0.0, sum[NUM] = {0.0};
        step = 1.0 / (double) num_steps;

        #pragma omp parallel num_threads(NUM)
        {
            int nthreads = omp_get_num_threads();   /* actual number of threads */
            int i, ID;
            double x;
            ID = omp_get_thread_num();
            for (i = ID; i < num_steps; i += nthreads) {
                x = (i + 0.5) * step;
                sum[ID] += 4.0 / (1.0 + x * x);
            }
        }
        for (int i = 0; i < NUM; i++)
            pi += step * sum[i];
        return 0;
    }

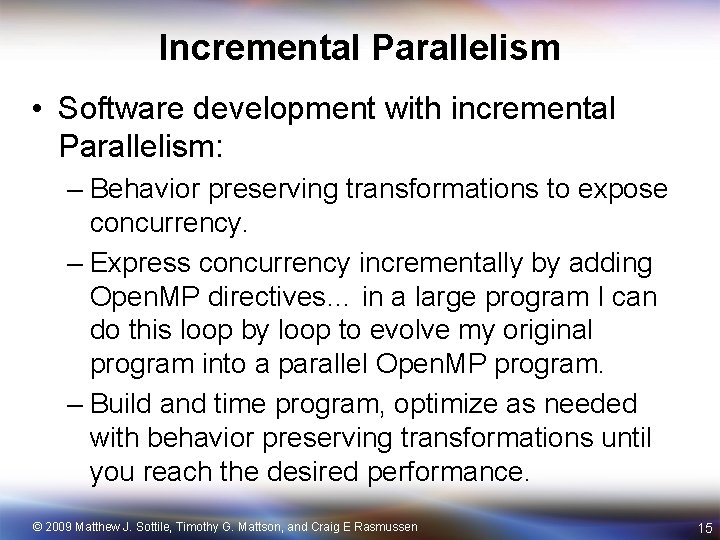

Incremental Parallelism

• Software development with incremental parallelism:
  – Behavior-preserving transformations to expose concurrency.
  – Express concurrency incrementally by adding OpenMP directives. In a large program I can do this loop by loop to evolve my original program into a parallel OpenMP program.
  – Build and time the program, and optimize as needed with behavior-preserving transformations until you reach the desired performance.

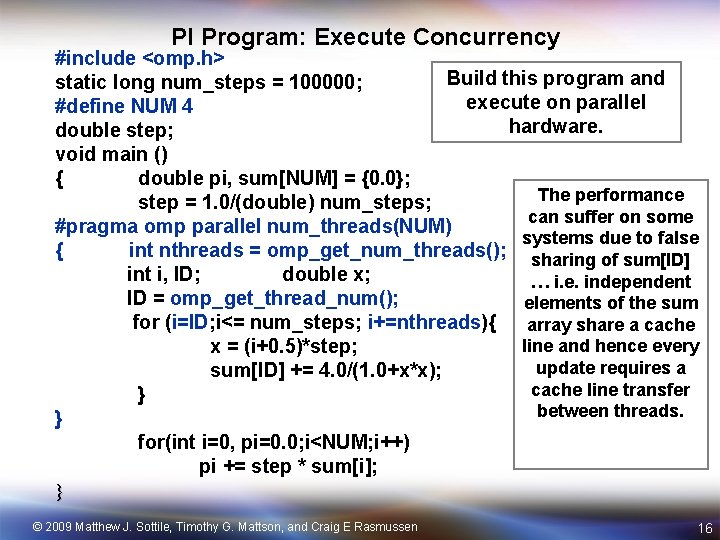

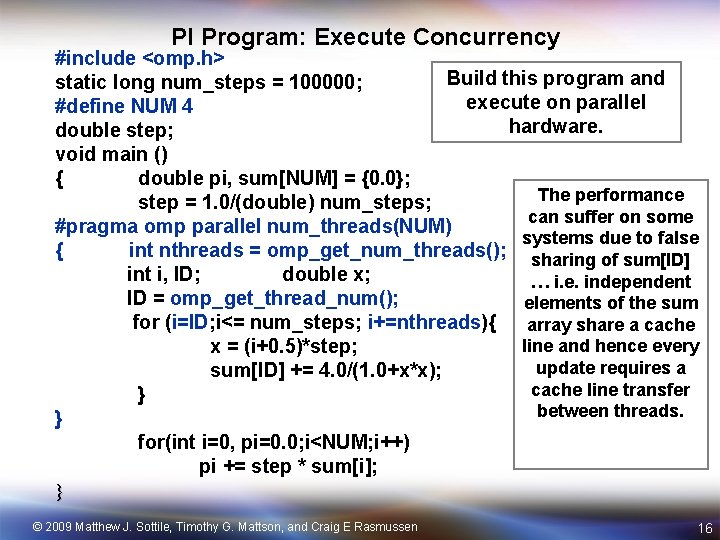

PI Program: Execute Concurrency

Build this program and execute it on parallel hardware.

    #include <omp.h>
    static long num_steps = 100000;
    #define NUM 4
    double step;

    int main(void)
    {
        double pi = 0.0, sum[NUM] = {0.0};
        step = 1.0 / (double) num_steps;

        #pragma omp parallel num_threads(NUM)
        {
            int nthreads = omp_get_num_threads();
            int i, ID;
            double x;
            ID = omp_get_thread_num();
            for (i = ID; i < num_steps; i += nthreads) {
                x = (i + 0.5) * step;
                sum[ID] += 4.0 / (1.0 + x * x);
            }
        }
        for (int i = 0; i < NUM; i++)
            pi += step * sum[i];
        return 0;
    }

The performance can suffer on some systems due to false sharing of sum[ID], i.e. independent elements of the sum array share a cache line, and hence every update requires a cache line transfer between threads.
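
One common workaround for the false sharing described above is to pad the array so that each thread's accumulator sits on its own cache line. The sketch below is not from the slides, which go on to use a private variable instead. The function name `pi_padded` is hypothetical, and `PAD` assumes a 64-byte cache line, which is typical but not guaranteed.

```c
#include <assert.h>

#ifdef _OPENMP
#include <omp.h>
#else
/* Fallback stubs (an assumption of this sketch) for builds without OpenMP. */
static int omp_get_thread_num(void)  { return 0; }
static int omp_get_num_threads(void) { return 1; }
#endif

#define NUM 4   /* requested thread count, as on the slide */
#define PAD 8   /* assumption: 8 doubles = 64 bytes, a common cache-line size */

/* Hypothetical name: midpoint-rule estimate of pi with padded partial sums. */
double pi_padded(long num_steps)
{
    assert(num_steps > 0);
    double sum[NUM][PAD] = {{0.0}};  /* only sum[ID][0] is used; the rest is padding */
    double step = 1.0 / (double) num_steps;
    double pi = 0.0;

    #pragma omp parallel num_threads(NUM)
    {
        int ID = omp_get_thread_num();
        int nthreads = omp_get_num_threads();
        for (long i = ID; i < num_steps; i += nthreads) {
            double x = (i + 0.5) * step;
            sum[ID][0] += 4.0 / (1.0 + x * x);
        }
    }
    for (int i = 0; i < NUM; i++)
        pi += step * sum[i][0];      /* unused rows stay 0.0 if fewer threads ran */
    return pi;
}
```

Padding trades memory for speed and ties the code to a guessed cache-line size, which is one reason a thread-private accumulator is usually the cleaner fix.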

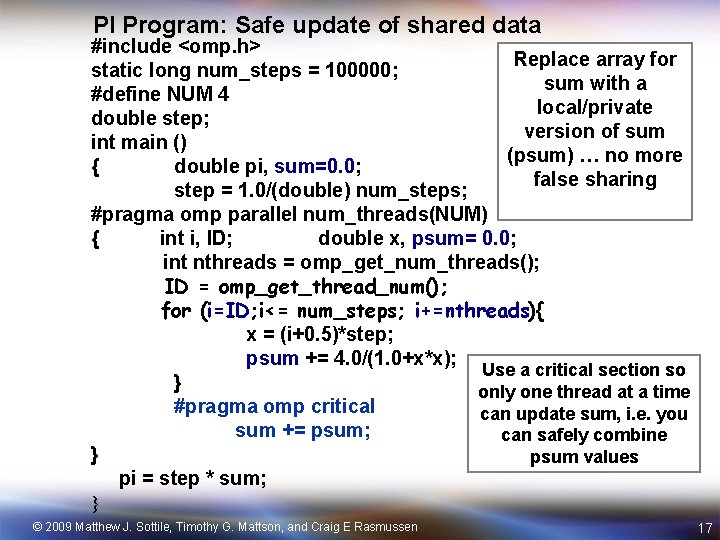

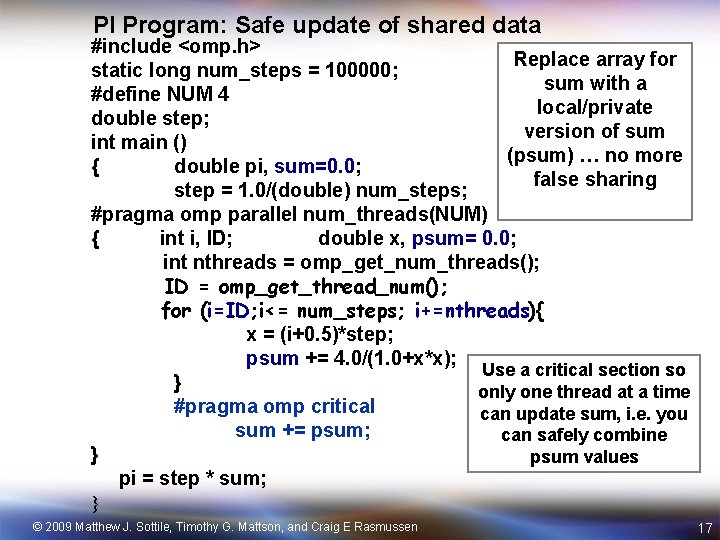

PI Program: Safe update of shared data

Replace the array for sum with a local/private version of sum (psum): no more false sharing.

    #include <omp.h>
    static long num_steps = 100000;
    #define NUM 4
    double step;

    int main(void)
    {
        double pi, sum = 0.0;
        step = 1.0 / (double) num_steps;

        #pragma omp parallel num_threads(NUM)
        {
            int i, ID;
            double x, psum = 0.0;
            int nthreads = omp_get_num_threads();
            ID = omp_get_thread_num();
            for (i = ID; i < num_steps; i += nthreads) {
                x = (i + 0.5) * step;
                psum += 4.0 / (1.0 + x * x);
            }
            #pragma omp critical
            sum += psum;
        }
        pi = step * sum;
        return 0;
    }

Use a critical section so only one thread at a time can update sum, i.e. you can safely combine the psum values.

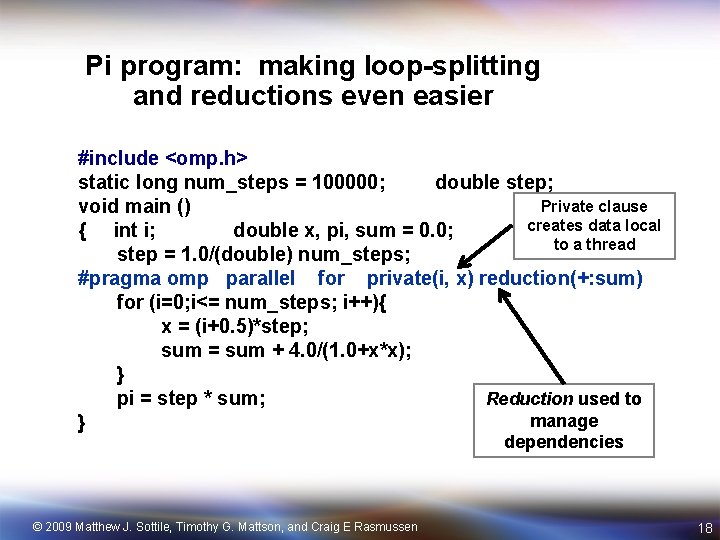

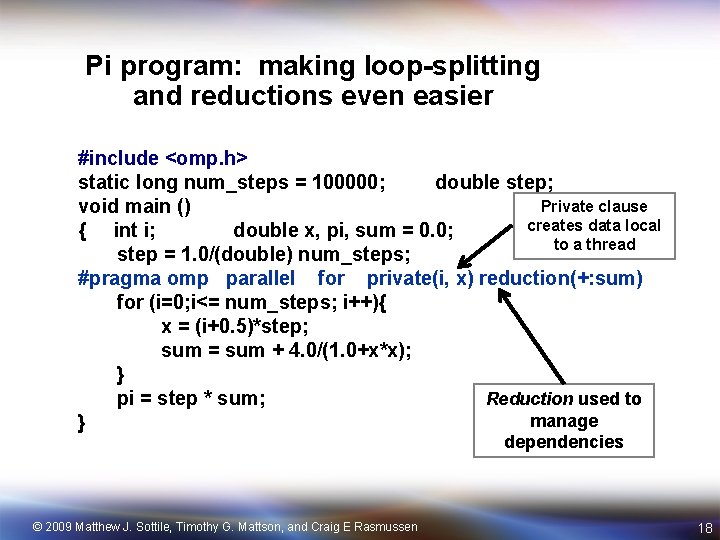

Pi program: making loop-splitting and reductions even easier

    #include <omp.h>
    static long num_steps = 100000;
    double step;

    int main(void)
    {
        int i;
        double x, pi, sum = 0.0;
        step = 1.0 / (double) num_steps;

        #pragma omp parallel for private(i, x) reduction(+:sum)
        for (i = 0; i < num_steps; i++) {
            x = (i + 0.5) * step;
            sum = sum + 4.0 / (1.0 + x * x);
        }
        pi = step * sum;
        return 0;
    }

• The private clause creates data local to a thread.
• The reduction clause is used to manage dependencies.

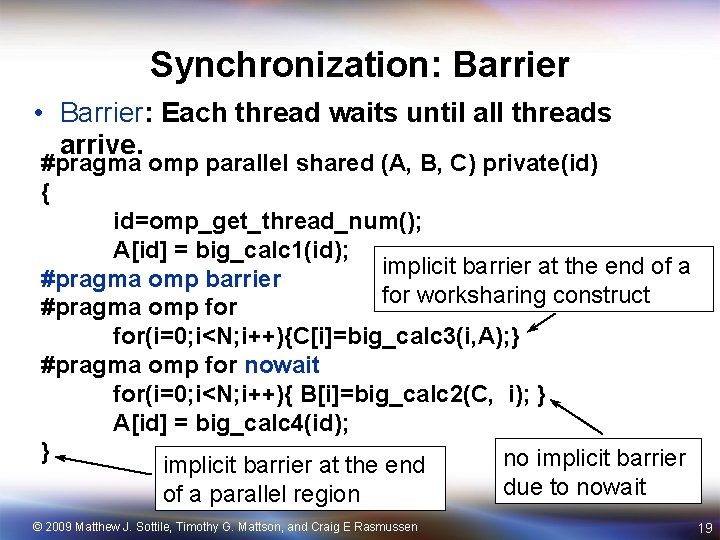

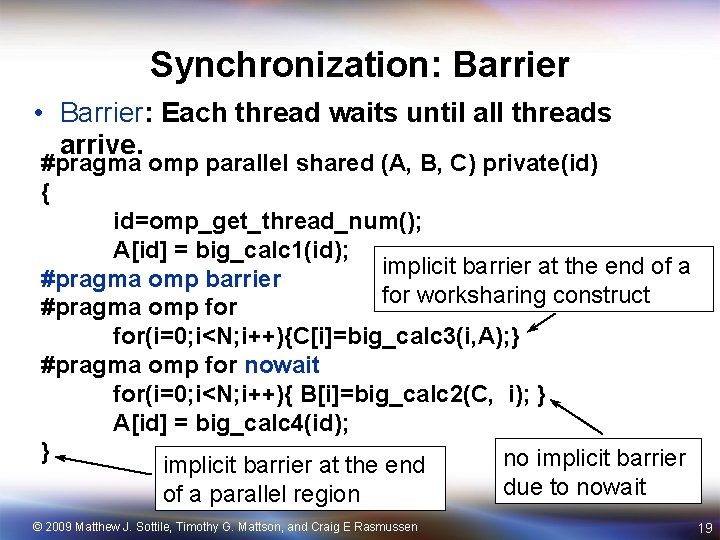

Synchronization: Barrier

• Barrier: Each thread waits until all threads arrive.

    #pragma omp parallel shared (A, B, C) private(id)
    {
        id = omp_get_thread_num();
        A[id] = big_calc1(id);
    #pragma omp barrier
    #pragma omp for
        for (i = 0; i < N; i++) { C[i] = big_calc3(i, A); }
        /* implicit barrier at the end of a for worksharing construct */
    #pragma omp for nowait
        for (i = 0; i < N; i++) { B[i] = big_calc2(C, i); }
        /* no implicit barrier due to nowait */
        A[id] = big_calc4(id);
    }
    /* implicit barrier at the end of a parallel region */
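
To see why a barrier matters, consider a two-phase computation in which the second phase reads values that other threads wrote in the first. The sketch below is illustrative only: `two_phase` is a hypothetical function, and the `nowait` is added deliberately so that the explicit barrier is the only thing separating the phases.

```c
#include <assert.h>

/* Phase 1 fills a[]; phase 2 reads a neighbour's element, so no thread
   may start phase 2 before every thread has finished phase 1. */
void two_phase(double *a, double *b, int n)
{
    assert(n > 0);
    #pragma omp parallel
    {
        #pragma omp for nowait      /* drop the implicit barrier on purpose ... */
        for (int i = 0; i < n; i++)
            a[i] = (double) i * i;

        #pragma omp barrier         /* ... and wait explicitly instead */

        #pragma omp for
        for (int i = 0; i < n; i++)
            b[i] = a[i] + a[(i + 1) % n];
    }
}
```

Removing the barrier while keeping the `nowait` would create a race: a thread could read `a[(i + 1) % n]` before the thread that owns that element has written it.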

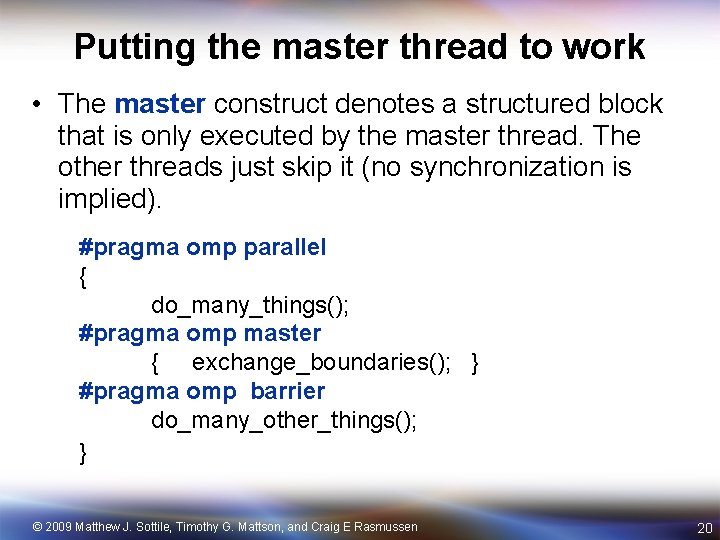

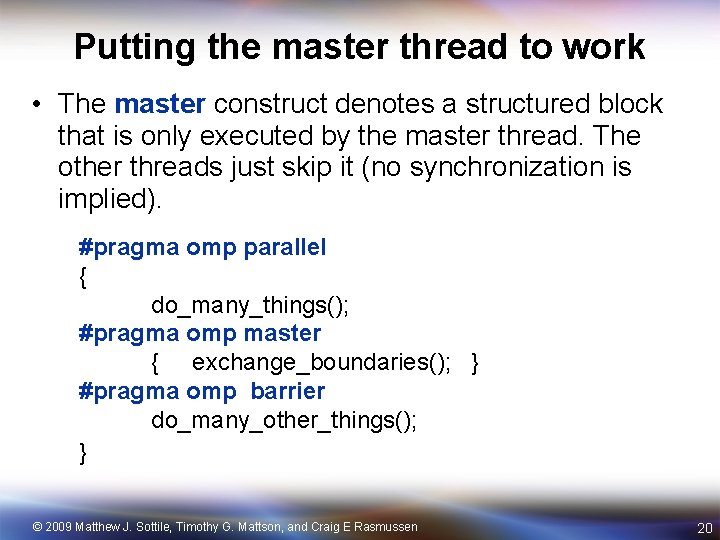

Putting the master thread to work

• The master construct denotes a structured block that is only executed by the master thread. The other threads just skip it (no synchronization is implied).

    #pragma omp parallel
    {
        do_many_things();
    #pragma omp master
        { exchange_boundaries(); }
    #pragma omp barrier
        do_many_other_things();
    }

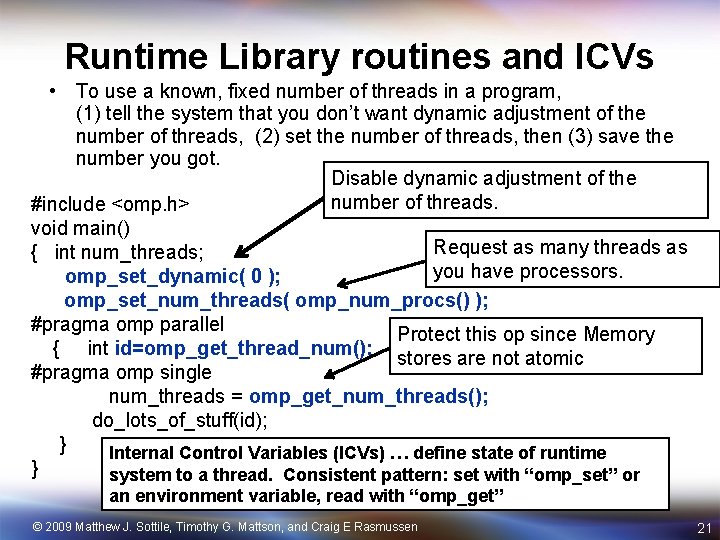

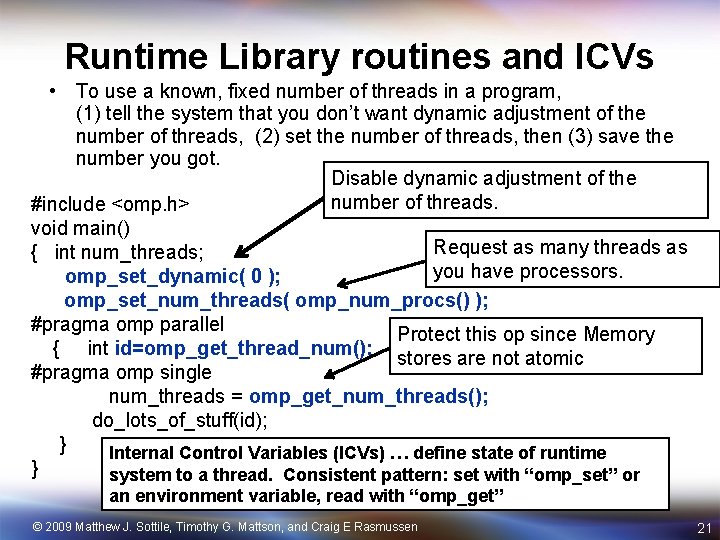

Runtime Library routines and ICVs

• To use a known, fixed number of threads in a program, (1) tell the system that you don’t want dynamic adjustment of the number of threads, (2) set the number of threads, then (3) save the number you got.

    #include <omp.h>

    int main(void)
    {
        int num_threads;
        omp_set_dynamic(0);                          /* disable dynamic adjustment of the number of threads */
        omp_set_num_threads(omp_get_num_procs());    /* request as many threads as you have processors */
    #pragma omp parallel
        {
            int id = omp_get_thread_num();
    #pragma omp single                               /* protect this op since memory stores are not atomic */
            num_threads = omp_get_num_threads();
            do_lots_of_stuff(id);
        }
        return 0;
    }

Internal Control Variables (ICVs) define the state of the runtime system to a thread. Consistent pattern: set with “omp_set” or an environment variable, read with “omp_get”.

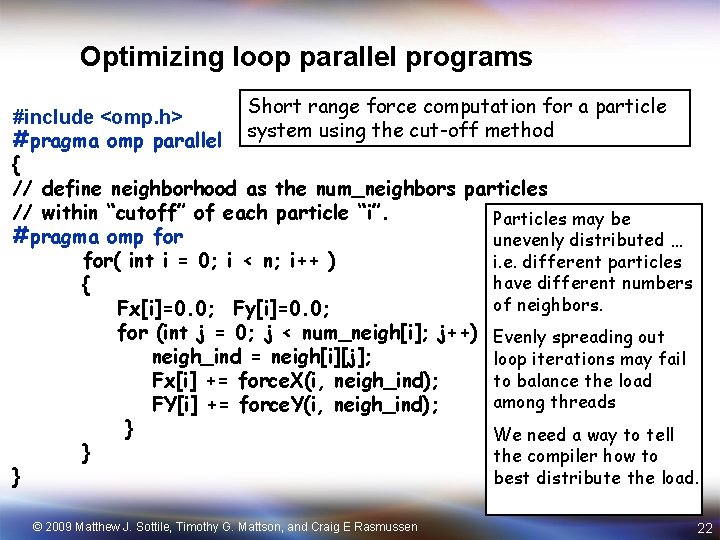

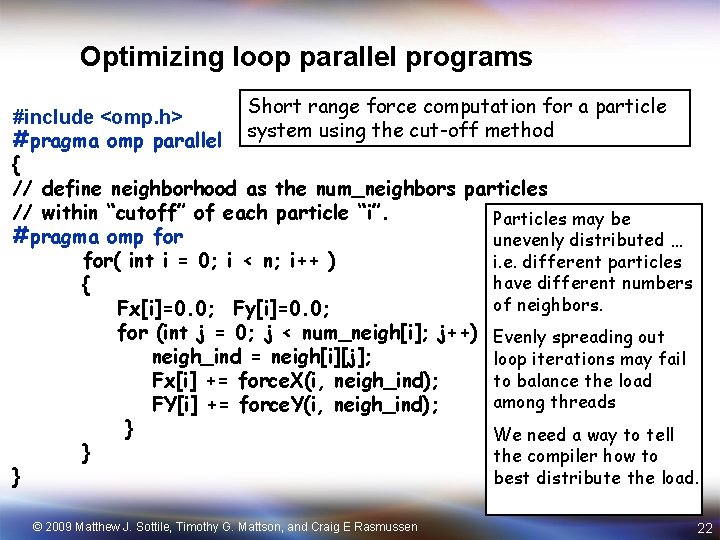

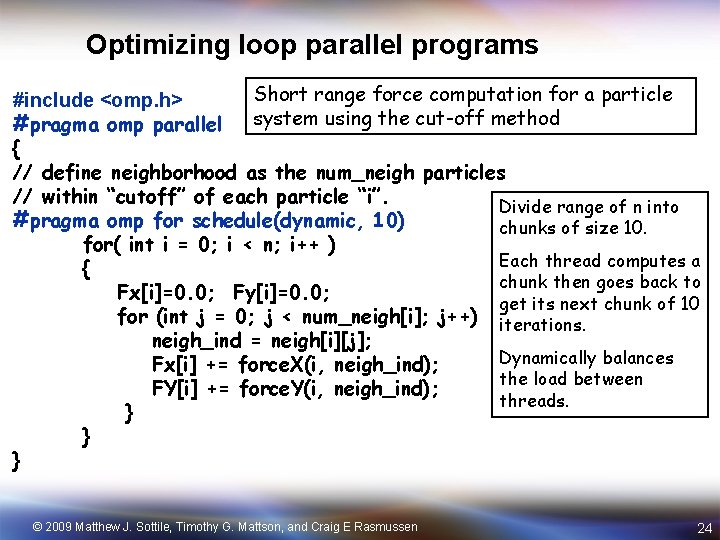

Optimizing loop parallel programs

Short range force computation for a particle system using the cut-off method:

    #include <omp.h>

    #pragma omp parallel
    {
        // define neighborhood as the num_neigh particles
        // within “cutoff” of each particle “i”.
    #pragma omp for
        for (int i = 0; i < n; i++)
        {
            Fx[i] = 0.0;
            Fy[i] = 0.0;
            for (int j = 0; j < num_neigh[i]; j++)
            {
                int neigh_ind = neigh[i][j];
                Fx[i] += forceX(i, neigh_ind);
                Fy[i] += forceY(i, neigh_ind);
            }
        }
    }

• Particles may be unevenly distributed, i.e. different particles have different numbers of neighbors.
• Evenly spreading out loop iterations may fail to balance the load among threads.
• We need a way to tell the compiler how to best distribute the load.

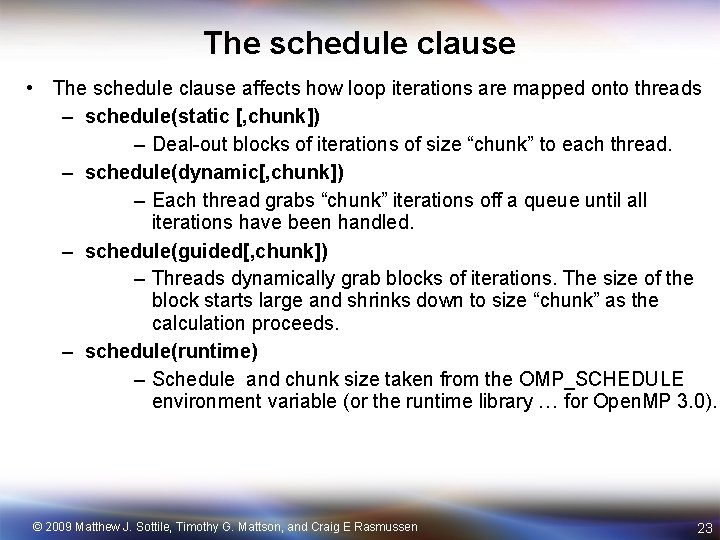

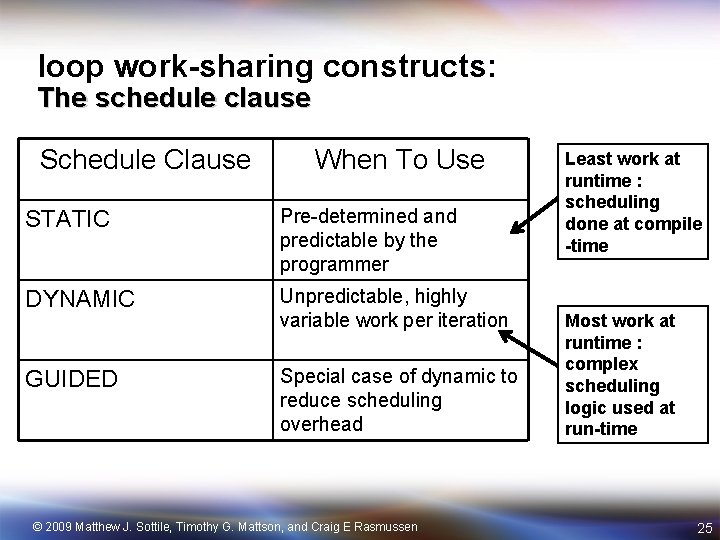

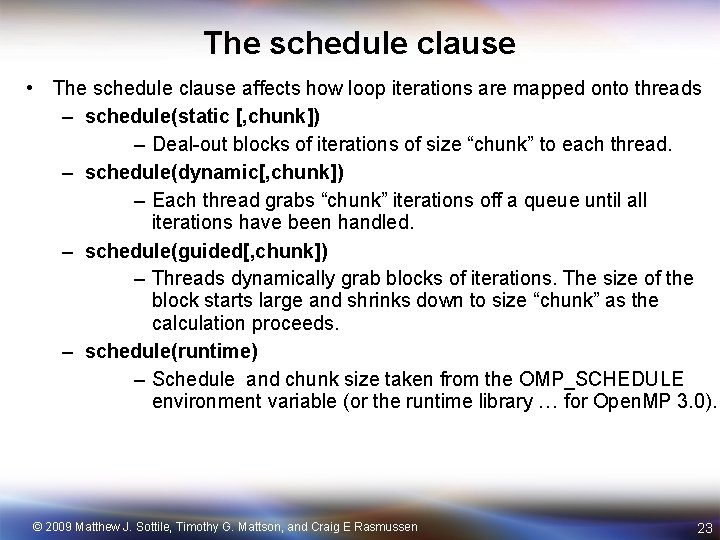

The schedule clause

• The schedule clause affects how loop iterations are mapped onto threads:
  – schedule(static [, chunk]): Deal out blocks of iterations of size “chunk” to each thread.
  – schedule(dynamic [, chunk]): Each thread grabs “chunk” iterations off a queue until all iterations have been handled.
  – schedule(guided [, chunk]): Threads dynamically grab blocks of iterations. The size of the block starts large and shrinks down to size “chunk” as the calculation proceeds.
  – schedule(runtime): Schedule and chunk size are taken from the OMP_SCHEDULE environment variable (or the runtime library, for OpenMP 3.0).
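
For schedule(static, chunk), the mapping from iteration to thread is fully determined: chunks are dealt out round-robin in thread-number order. The sketch below, which is not from the slides, writes that rule down as a formula and records the actual owners so the two can be compared. The names `static_chunk_owner` and `record_owners` are hypothetical, and the stubs are an assumption for builds without OpenMP.

```c
#include <assert.h>

#ifdef _OPENMP
#include <omp.h>
#else
/* Fallback stubs (an assumption of this sketch) for builds without OpenMP. */
static int omp_get_thread_num(void)  { return 0; }
static int omp_get_num_threads(void) { return 1; }
#endif

/* Hypothetical helper: which thread runs iteration i under
   schedule(static, chunk) with nthreads threads? Chunks of `chunk`
   consecutive iterations are assigned round-robin by thread number. */
int static_chunk_owner(long i, int chunk, int nthreads)
{
    assert(i >= 0 && chunk > 0 && nthreads > 0);
    return (int) ((i / chunk) % nthreads);
}

/* Record which thread actually executed each iteration and return the
   number of threads in the team. */
int record_owners(int *owner, int n, int chunk)
{
    int nthreads = 1;
    #pragma omp parallel
    {
        #pragma omp single
        nthreads = omp_get_num_threads();

        #pragma omp for schedule(static, chunk)
        for (int i = 0; i < n; i++)
            owner[i] = omp_get_thread_num();
    }
    return nthreads;
}
```

No such formula exists for dynamic or guided schedules, where the assignment depends on which thread becomes free first; that unpredictability is the price of their load balancing.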

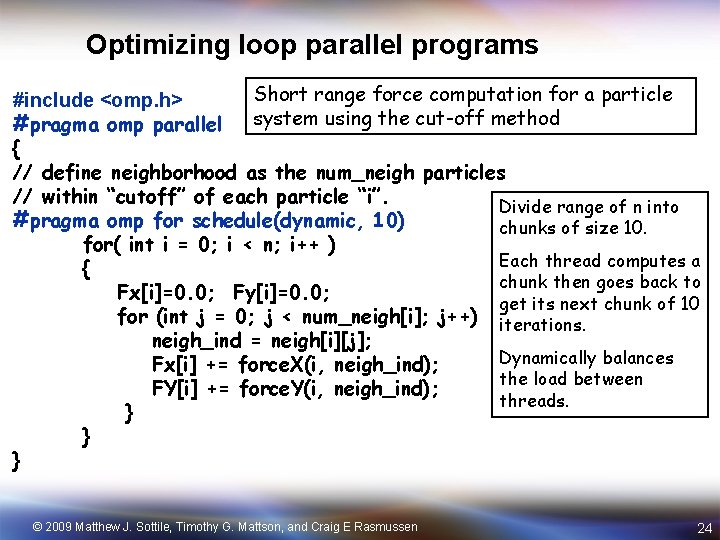

Optimizing loop parallel programs

Short range force computation for a particle system using the cut-off method:

    #include <omp.h>

    #pragma omp parallel
    {
        // define neighborhood as the num_neigh particles
        // within “cutoff” of each particle “i”.
    #pragma omp for schedule(dynamic, 10)
        for (int i = 0; i < n; i++)
        {
            Fx[i] = 0.0;
            Fy[i] = 0.0;
            for (int j = 0; j < num_neigh[i]; j++)
            {
                int neigh_ind = neigh[i][j];
                Fx[i] += forceX(i, neigh_ind);
                Fy[i] += forceY(i, neigh_ind);
            }
        }
    }

• Divide the range of n into chunks of size 10.
• Each thread computes a chunk, then goes back to get its next chunk of 10 iterations.
• This dynamically balances the load between threads.

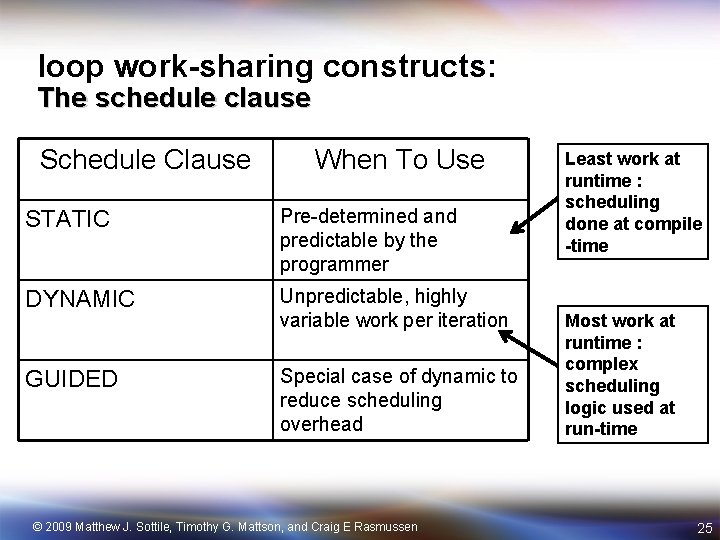

Loop work-sharing constructs: The schedule clause

| Schedule clause | When to use |
|---|---|
| STATIC | Pre-determined and predictable by the programmer |
| DYNAMIC | Unpredictable, highly variable work per iteration |
| GUIDED | Special case of dynamic to reduce scheduling overhead |

• STATIC: least work at runtime, since scheduling is done at compile time.
• DYNAMIC: most work at runtime, since complex scheduling logic is used at run time.

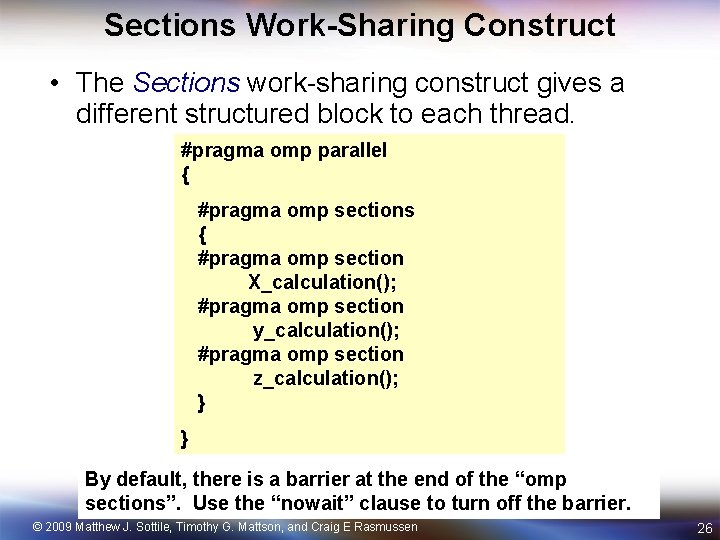

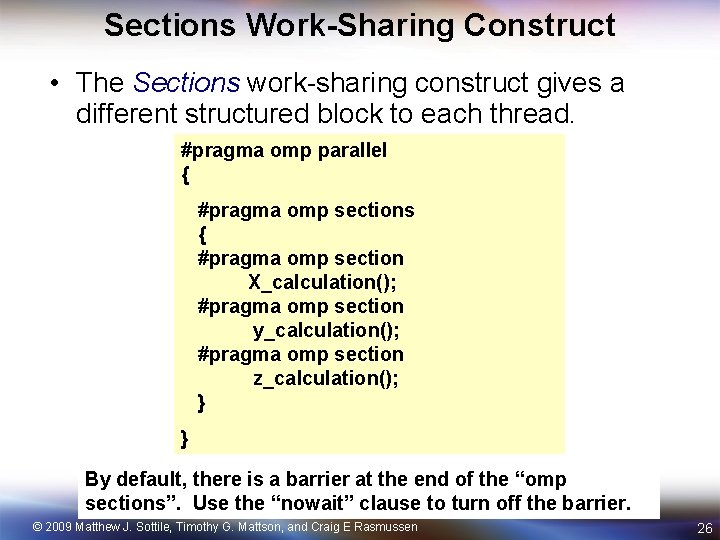

Sections Work-Sharing Construct

• The sections work-sharing construct gives a different structured block to each thread.

    #pragma omp parallel
    {
    #pragma omp sections
        {
    #pragma omp section
            x_calculation();
    #pragma omp section
            y_calculation();
    #pragma omp section
            z_calculation();
        }
    }

By default, there is a barrier at the end of the “omp sections”. Use the “nowait” clause to turn off the barrier.

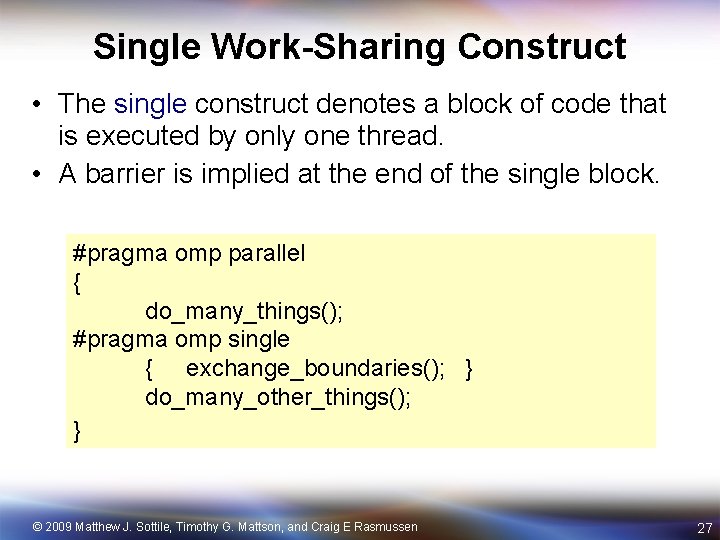

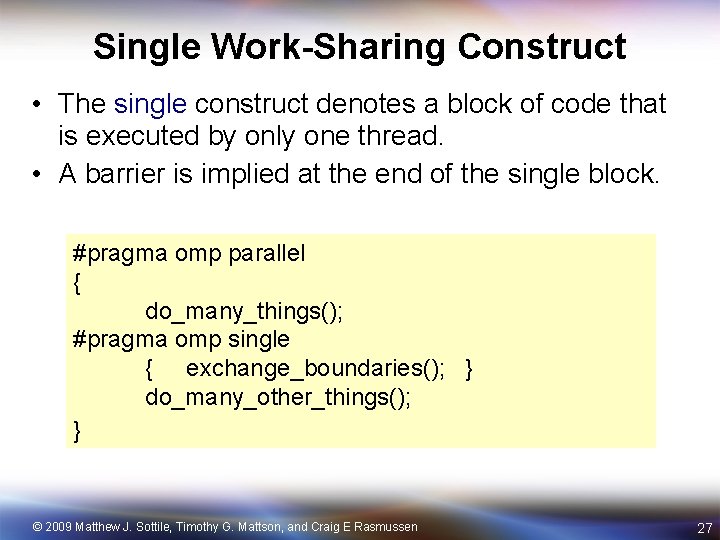

Single Work-Sharing Construct

• The single construct denotes a block of code that is executed by only one thread.
• A barrier is implied at the end of the single block.

    #pragma omp parallel
    {
        do_many_things();
    #pragma omp single
        { exchange_boundaries(); }
        do_many_other_things();
    }

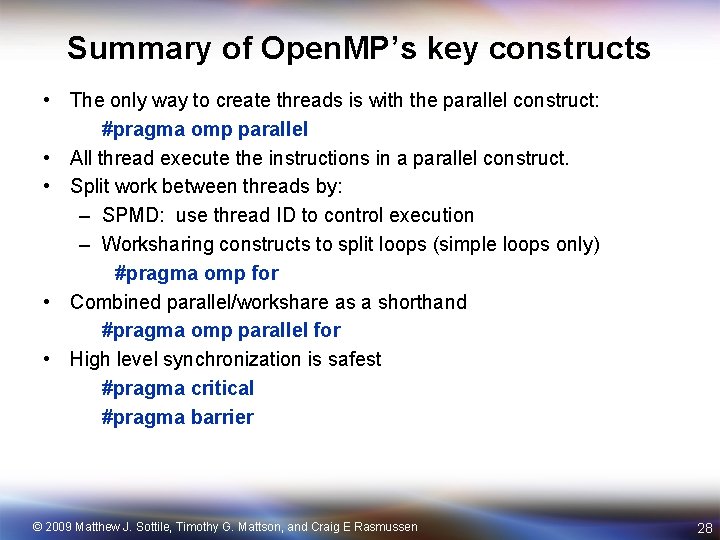

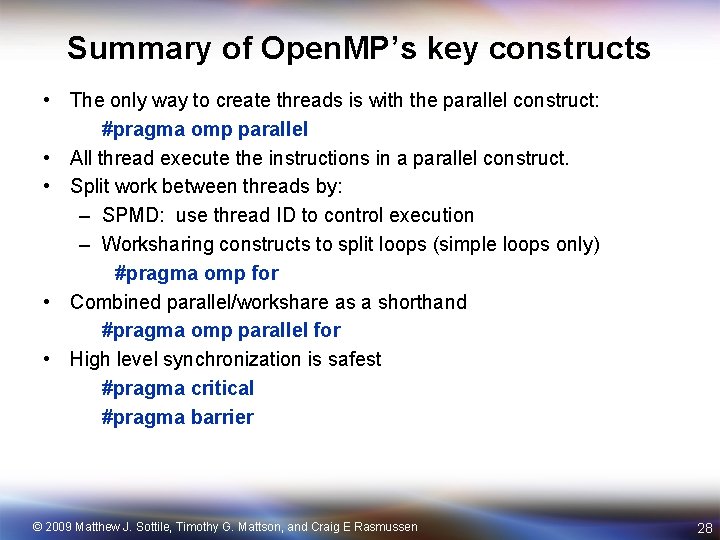

Summary of OpenMP’s key constructs

• The only way to create threads is with the parallel construct:

    #pragma omp parallel

• All threads execute the instructions in a parallel construct.
• Split work between threads by:
  – SPMD: use thread ID to control execution.
  – Worksharing constructs to split loops (simple loops only):

    #pragma omp for

• Combined parallel/workshare as a shorthand:

    #pragma omp parallel for

• High level synchronization is safest:

    #pragma omp critical
    #pragma omp barrier

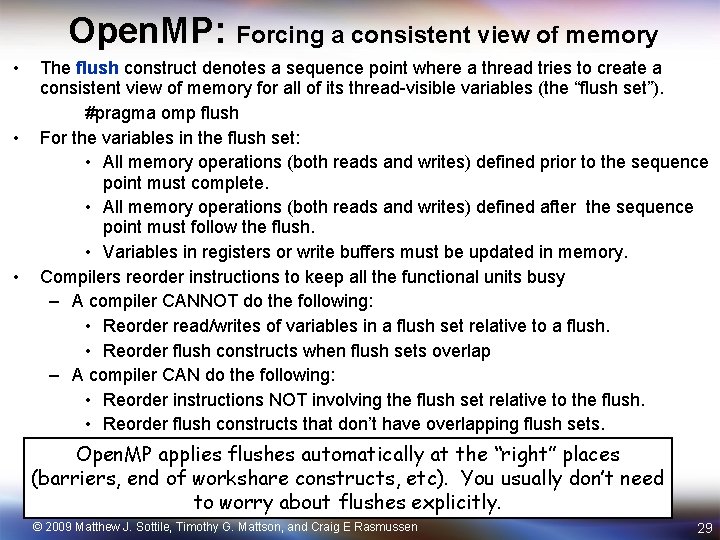

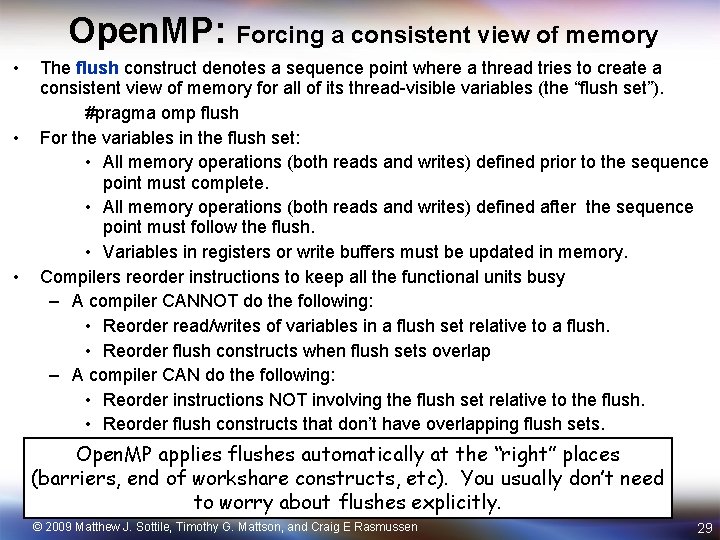

OpenMP: Forcing a consistent view of memory

• The flush construct denotes a sequence point where a thread tries to create a consistent view of memory for all of its thread-visible variables (the “flush set”).

    #pragma omp flush

• For the variables in the flush set:
  – All memory operations (both reads and writes) defined prior to the sequence point must complete.
  – All memory operations (both reads and writes) defined after the sequence point must follow the flush.
  – Variables in registers or write buffers must be updated in memory.
• Compilers reorder instructions to keep all the functional units busy.
  – A compiler CANNOT do the following:
    • Reorder reads/writes of variables in a flush set relative to a flush.
    • Reorder flush constructs when flush sets overlap.
  – A compiler CAN do the following:
    • Reorder instructions NOT involving the flush set relative to the flush.
    • Reorder flush constructs that don’t have overlapping flush sets.

OpenMP applies flushes automatically at the “right” places (barriers, end of workshare constructs, etc). You usually don’t need to worry about flushes explicitly.

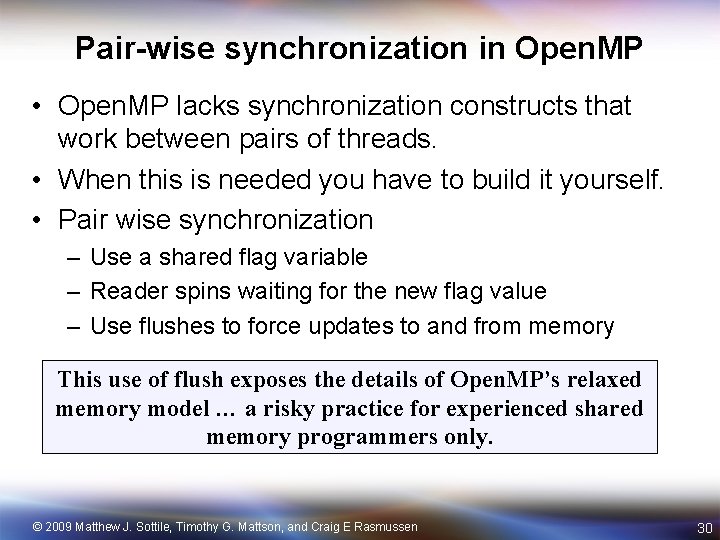

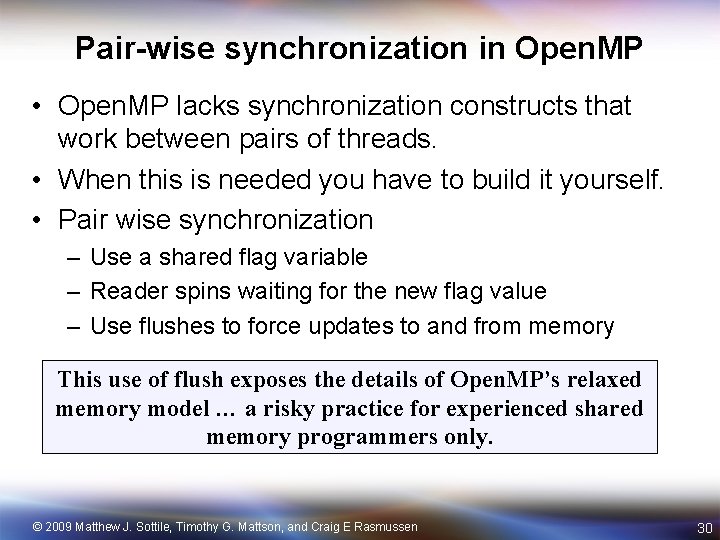

Pair-wise synchronization in OpenMP

• OpenMP lacks synchronization constructs that work between pairs of threads.
• When this is needed you have to build it yourself.
• Pair-wise synchronization:
  – Use a shared flag variable.
  – Reader spins waiting for the new flag value.
  – Use flushes to force updates to and from memory.

This use of flush exposes the details of OpenMP’s relaxed memory model: a risky practice, for experienced shared memory programmers only.

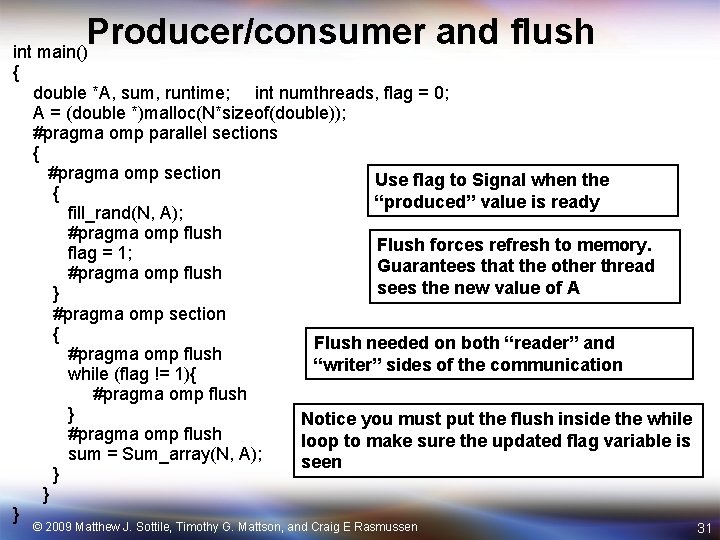

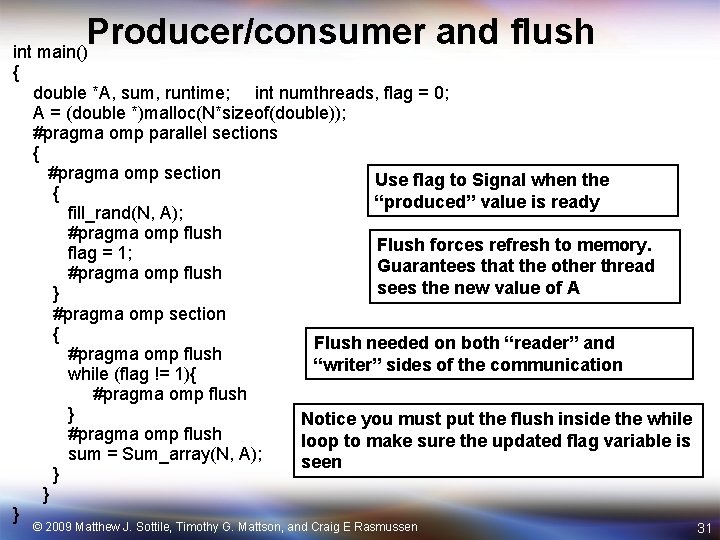

Producer/consumer and flush

    int main()
    {
        double *A, sum, runtime;
        int numthreads, flag = 0;
        A = (double *)malloc(N*sizeof(double));

    #pragma omp parallel sections
        {
    #pragma omp section
            {
                fill_rand(N, A);
    #pragma omp flush
                flag = 1;       /* use flag to signal when the “produced” value is ready */
    #pragma omp flush
            }
    #pragma omp section
            {
    #pragma omp flush
                while (flag != 1) {
    #pragma omp flush
                }
    #pragma omp flush
                sum = Sum_array(N, A);
            }
        }
    }

• Flush forces a refresh to memory. It guarantees that the other thread sees the new value of A.
• Flush is needed on both the “reader” and “writer” sides of the communication.
• Notice you must put the flush inside the while loop to make sure the updated flag variable is seen.

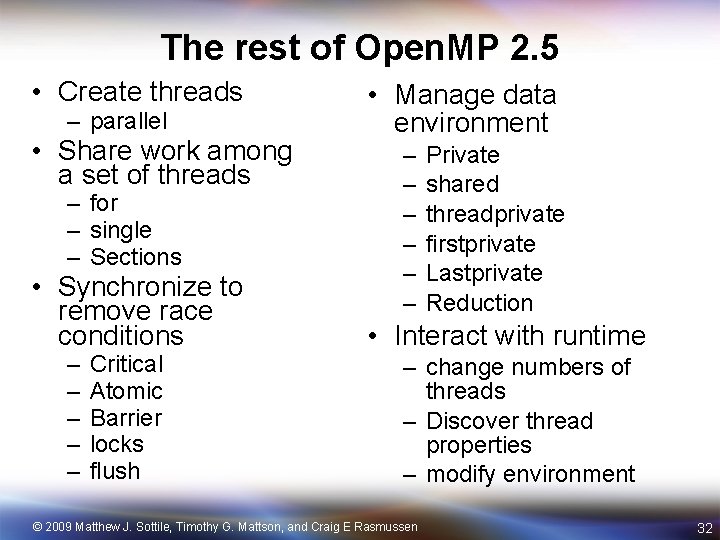

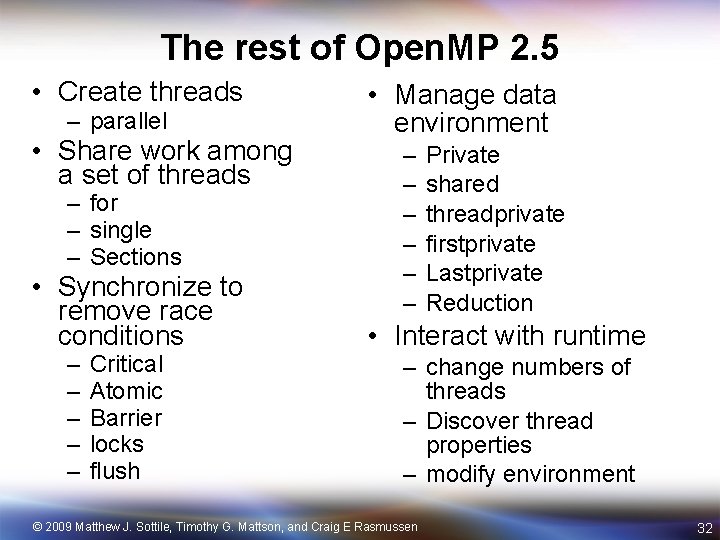

The rest of OpenMP 2.5

• Create threads
  – parallel
• Share work among a set of threads
  – for
  – single
  – sections
• Synchronize to remove race conditions
  – critical
  – atomic
  – barrier
  – locks
  – flush
• Manage data environment
  – private
  – shared
  – threadprivate
  – firstprivate
  – lastprivate
  – reduction
• Interact with runtime
  – Change numbers of threads
  – Discover thread properties
  – Modify environment

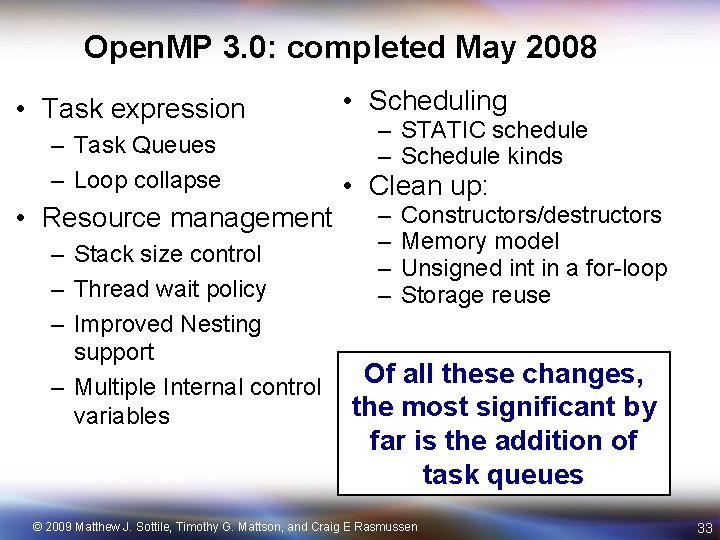

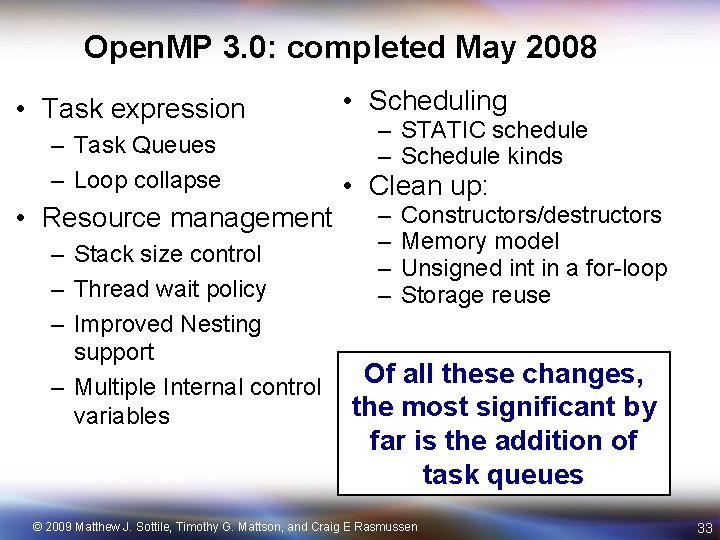

OpenMP 3.0: completed May 2008

• Task expression
  – Task queues
  – Loop collapse
• Resource management
  – Stack size control
  – Thread wait policy
  – Improved nesting support
  – Multiple internal control variables
• Scheduling
  – STATIC schedule
  – Schedule kinds
• Clean up:
  – Constructors/destructors
  – Memory model
  – Unsigned int in a for-loop
  – Storage reuse

Of all these changes, the most significant by far is the addition of task queues.

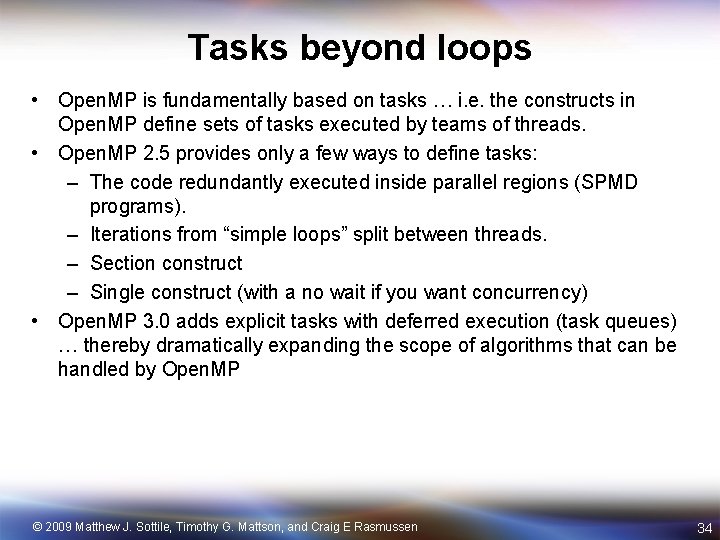

Tasks beyond loops

• OpenMP is fundamentally based on tasks, i.e. the constructs in OpenMP define sets of tasks executed by teams of threads.
• OpenMP 2.5 provides only a few ways to define tasks:
  – The code redundantly executed inside parallel regions (SPMD programs).
  – Iterations from “simple loops” split between threads.
  – Section construct.
  – Single construct (with a nowait if you want concurrency).
• OpenMP 3.0 adds explicit tasks with deferred execution (task queues), thereby dramatically expanding the scope of algorithms that can be handled by OpenMP.

Explicit Tasks in OpenMP 3.0

• OpenMP 2.5 cannot handle the very common case of a pointer-chasing loop:

    nodeptr list, p;
    for (p = list; p != NULL; p = p->next)
        process(p->data);

• OpenMP 3.0 covers this case with explicit tasks:

    nodeptr list, p;
    #pragma omp single
    {
        for (p = list; p != NULL; p = p->next)
    #pragma omp task firstprivate(p)
            process(p->data);
    }

• One thread goes through the loop and creates a set of tasks.
• firstprivate(p) captures the value of p for each task.
• Tasks go on a queue to be executed by an available thread.
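
The fragment above leaves out the surrounding parallel region and the list type. A minimal self-contained sketch of the same pattern follows. It is illustrative only: the `struct node` layout, the squaring done in `process`, and the name `process_list` are assumptions made for this example, not part of the slides.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical list node: an input value and a slot for the result. */
struct node {
    int data;
    int result;
    struct node *next;
};

/* Hypothetical per-node work: square the payload. */
static void process(struct node *p)
{
    assert(p != NULL);
    p->result = p->data * p->data;
}

/* One thread walks the list and creates a task per node; any thread in
   the team may execute those tasks. */
void process_list(struct node *list)
{
    #pragma omp parallel
    {
        #pragma omp single
        {
            for (struct node *p = list; p != NULL; p = p->next) {
                #pragma omp task firstprivate(p)
                process(p);
            }
        }   /* implicit barrier at the end of single: all tasks have completed */
    }
}
```

Each task writes only to its own node, so no further synchronization is needed. Compiled without OpenMP, the pragmas are ignored and the loop simply runs serially.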

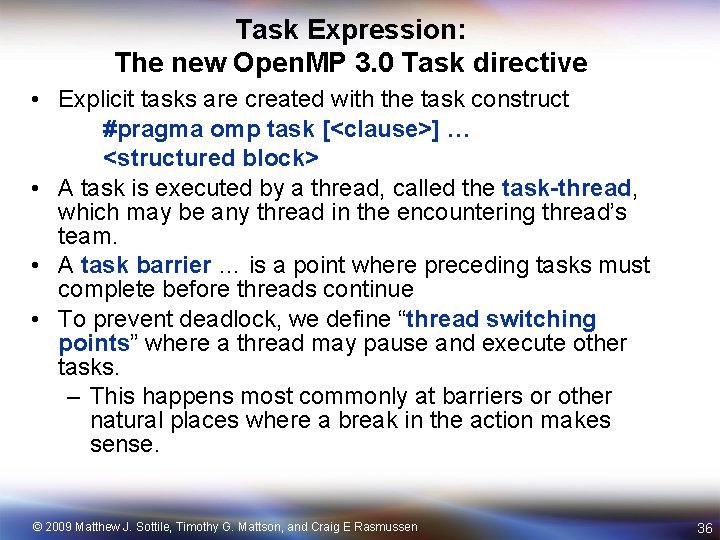

Task Expression: The new OpenMP 3.0 Task directive

• Explicit tasks are created with the task construct:

    #pragma omp task [<clause>] …
        <structured block>

• A task is executed by a thread, called the task-thread, which may be any thread in the encountering thread’s team.
• A task barrier is a point where preceding tasks must complete before threads continue.
• To prevent deadlock, we define “thread switching points” where a thread may pause and execute other tasks.
  – This happens most commonly at barriers or other natural places where a break in the action makes sense.

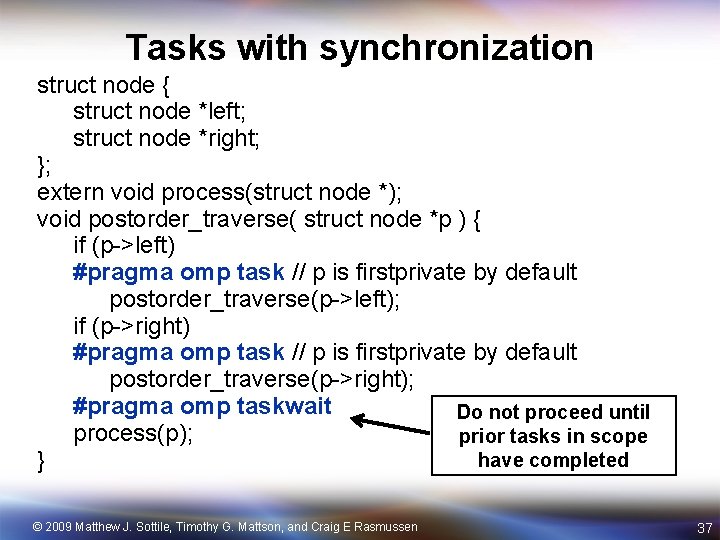

Tasks with synchronization

    struct node {
        struct node *left;
        struct node *right;
    };
    extern void process(struct node *);

    void postorder_traverse(struct node *p)
    {
        if (p->left)
    #pragma omp task        // p is firstprivate by default
            postorder_traverse(p->left);
        if (p->right)
    #pragma omp task        // p is firstprivate by default
            postorder_traverse(p->right);
    #pragma omp taskwait    // do not proceed until prior tasks in scope have completed
        process(p);
    }
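
The same task-plus-taskwait shape also works when the parent needs a value back from its children. The sketch below sums a binary tree. It is an illustration under stated assumptions rather than code from the slides: the `value` field and the names `tree_sum` and `parallel_tree_sum` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical tree node carrying a value to be summed. */
struct node {
    long value;
    struct node *left;
    struct node *right;
};

/* Post-order sum: each subtree is summed in a child task, then the
   parent combines the two results with its own value. */
long tree_sum(struct node *p)
{
    long l = 0, r = 0;
    if (p == NULL)
        return 0;
    #pragma omp task shared(l)   /* p is firstprivate by default; l must be shared */
    l = tree_sum(p->left);
    #pragma omp task shared(r)
    r = tree_sum(p->right);
    #pragma omp taskwait         /* l and r are only valid after this point */
    return l + r + p->value;
}

/* Start the recursion from a single thread inside a parallel region. */
long parallel_tree_sum(struct node *root)
{
    assert(root != NULL);        /* this sketch expects a non-empty tree */
    long total = 0;
    #pragma omp parallel
    {
        #pragma omp single
        total = tree_sum(root);
    }
    return total;
}
```

The `shared` clauses matter: without them each task would write to its own private copy of `l` or `r`, and the parent would always see zero. Creating a task per node is also expensive for small subtrees, so a practical version would probably stop spawning tasks below some depth.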

Outline

• OpenMP
• Erlang
• Cilk

Erlang: A real world concurrent language

• Erlang was invented at Ericsson approx. 20 years ago for the purpose of writing code for telecommunications systems:
  – Distributed systems: interacting telecom switches.
  – Robust to failures.
  – Tackling intrinsically parallel problems.

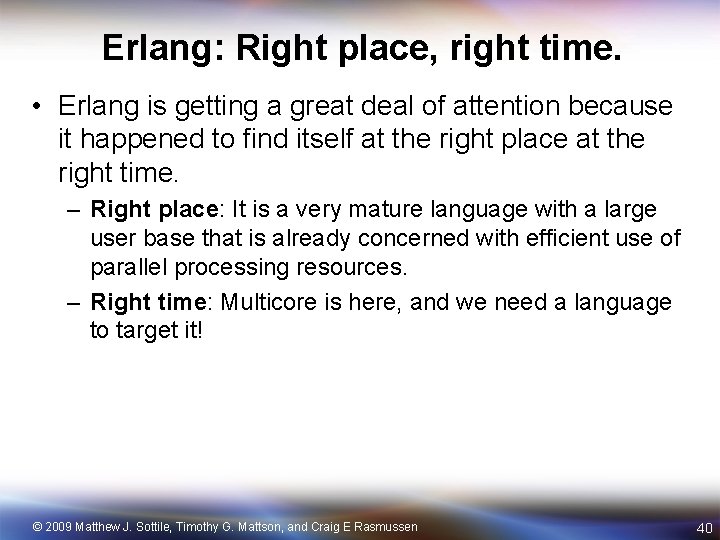

Erlang: Right place, right time.

• Erlang is getting a great deal of attention because it happened to find itself at the right place at the right time.
  – Right place: It is a very mature language with a large user base that is already concerned with efficient use of parallel processing resources.
  – Right time: Multicore is here, and we need a language to target it!

So, what is Erlang?

• Erlang is a single assignment functional language with strict (not lazy) evaluation and dynamic typing.
  – This is different from Haskell, which is lazy and not dynamically typed.
• Provides pattern matching facilities for defining functions.
• Syntactic means are provided to create threads and communicate between them.
• Built to withstand reliability problems with distributed systems, and to support hot-swapping of code.
• Concurrency is based on the “actor model”.

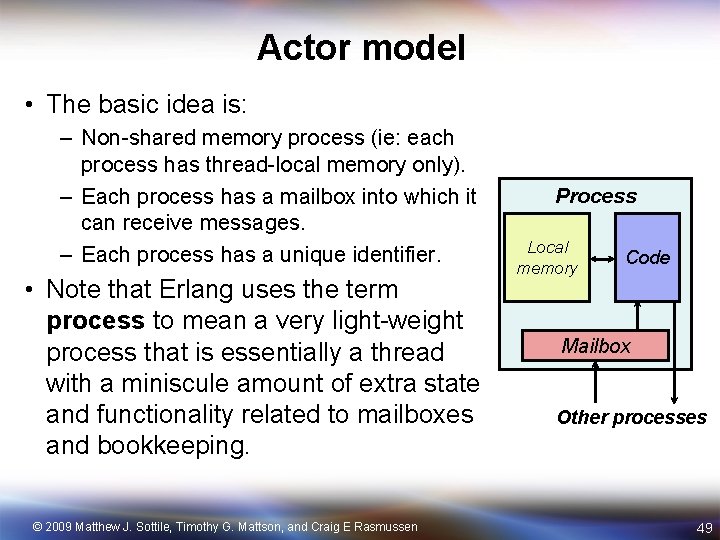

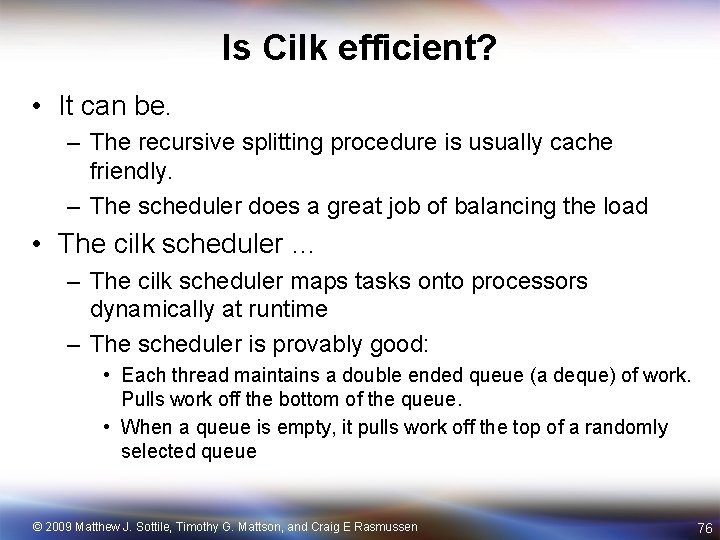

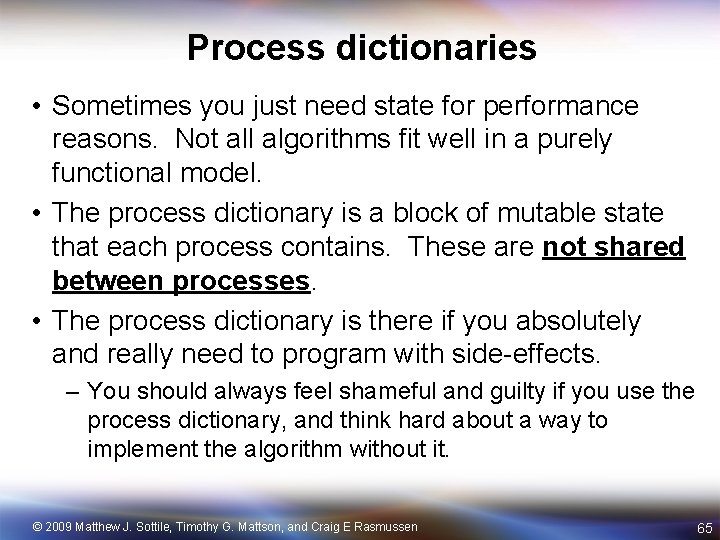

![Using Erlang Lets do something simple A routine that squares numbers modulesquarer exportsquare1 Using Erlang. • Let’s do something simple. A routine that squares numbers. -module(squarer). -export([square/1]).](https://slidetodoc.com/presentation_image_h2/aa03ed02895085c2151b0a5bc4c32488/image-42.jpg)

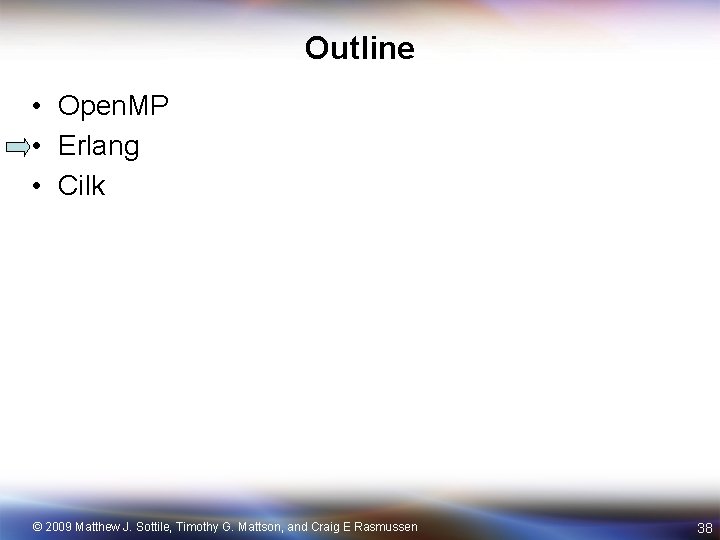

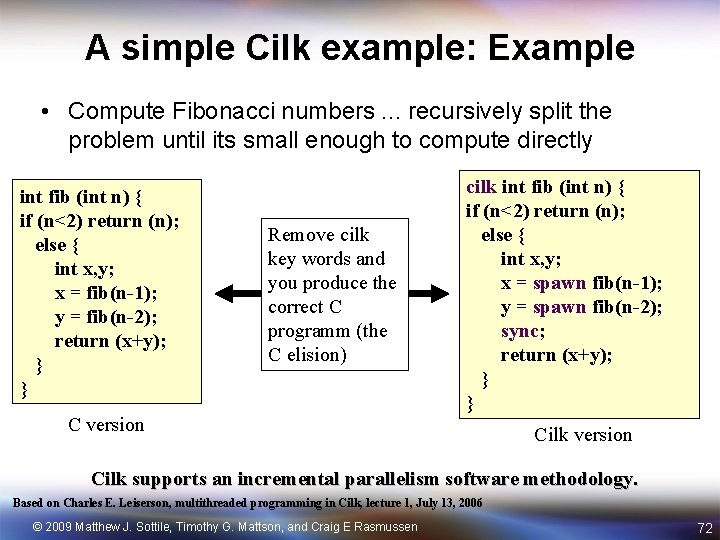

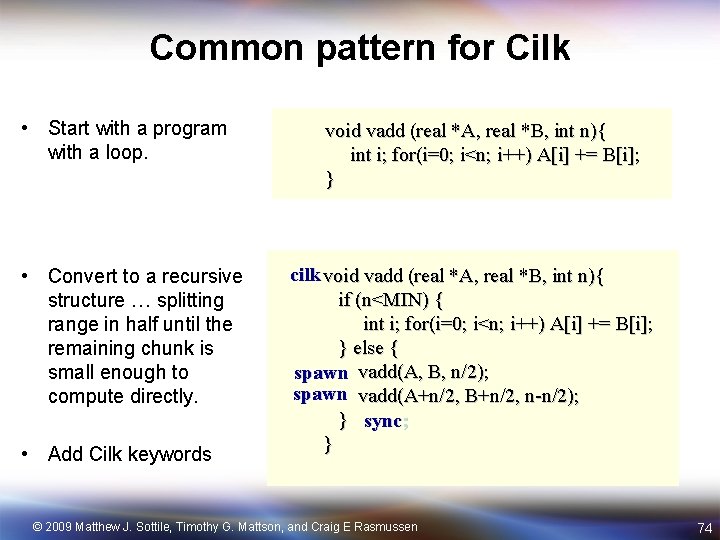

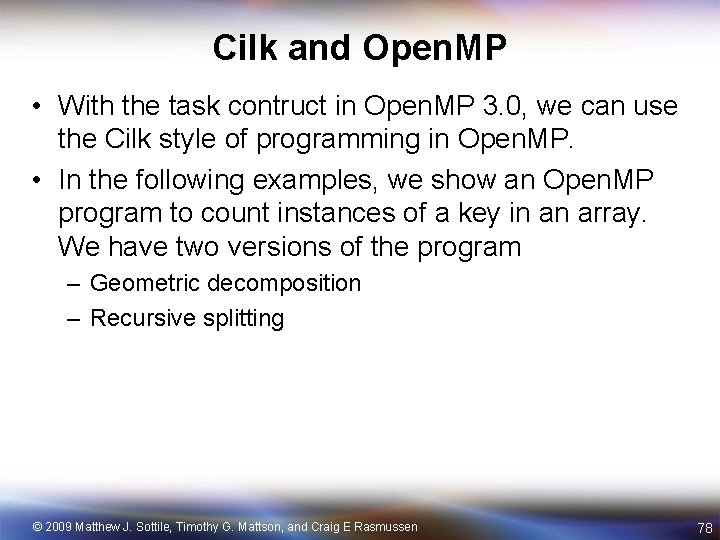

Using Erlang.

• Let’s do something simple: a routine that squares numbers.

    -module(squarer).        % Define a module to hold the code.
    -export([square/1]).     % Export the squaring function, which has arity 1.
    square(N) -> N*N.        % Define the function.

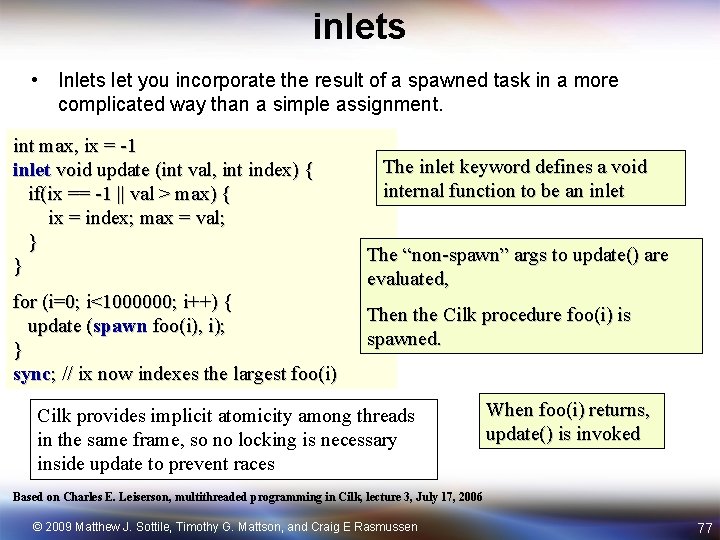

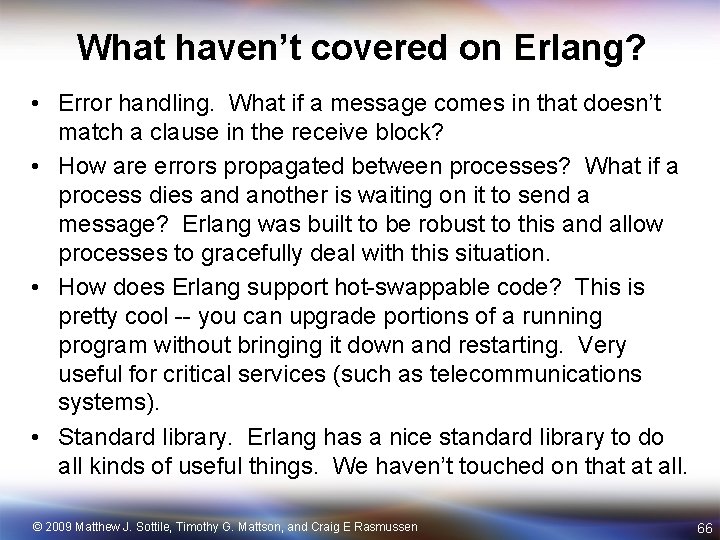

![Interactive session Start Erlang shell Compile squarer erl mattmagnolia code erl Erlang BEAM emulator Interactive session Start Erlang shell Compile squarer. erl matt@magnolia [code]$ erl Erlang (BEAM) emulator](https://slidetodoc.com/presentation_image_h2/aa03ed02895085c2151b0a5bc4c32488/image-43.jpg)

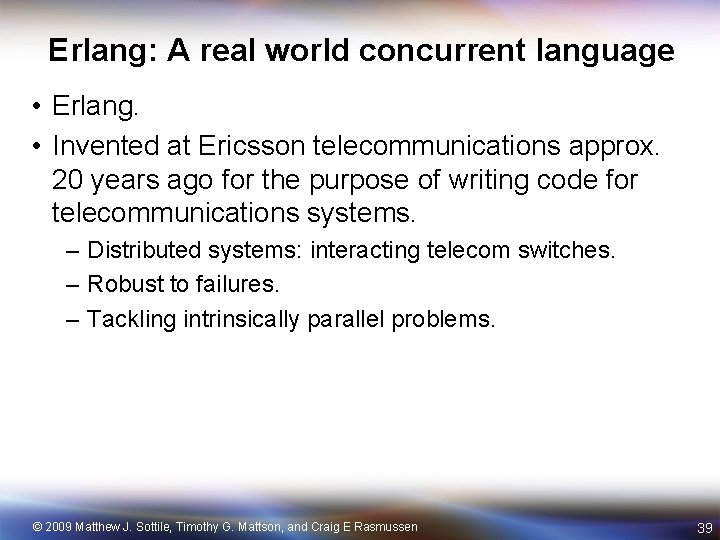

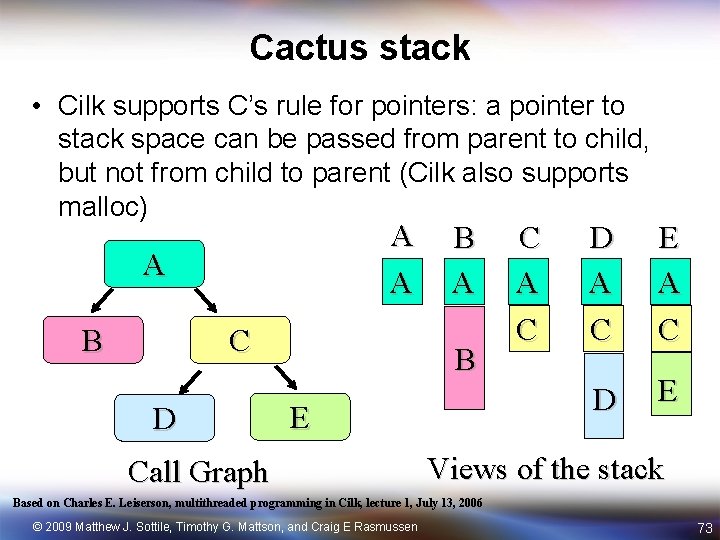

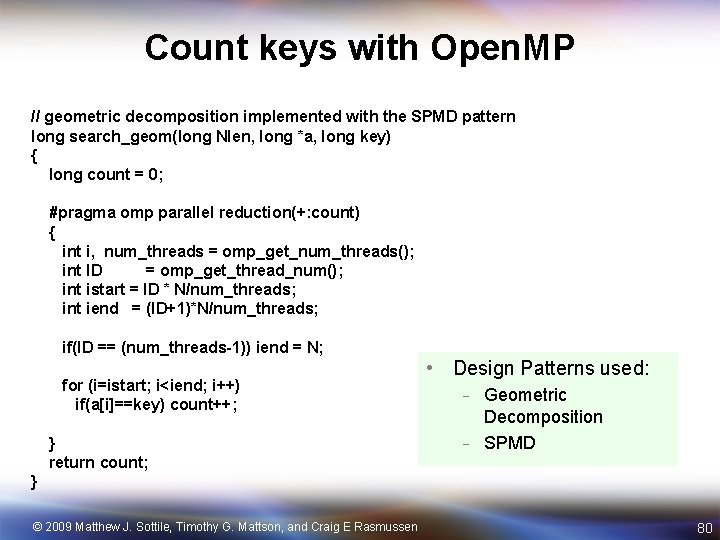

Interactive session

    matt@magnolia [code]$ erl
    Erlang (BEAM) emulator version 5.6.1 [source] [smp:2] [async-threads:0] [kernel-poll:false]

    Eshell V5.6.1  (abort with ^G)
    1> c(squarer).
    {ok,squarer}
    2> squarer:square(2).
    4
    3> squarer:square(7654).
    58583716
    4>

• The erl command starts the Erlang shell.
• c(squarer). compiles squarer.erl.
• Prompts 2 and 3 invoke the function; the line after each call is the result.

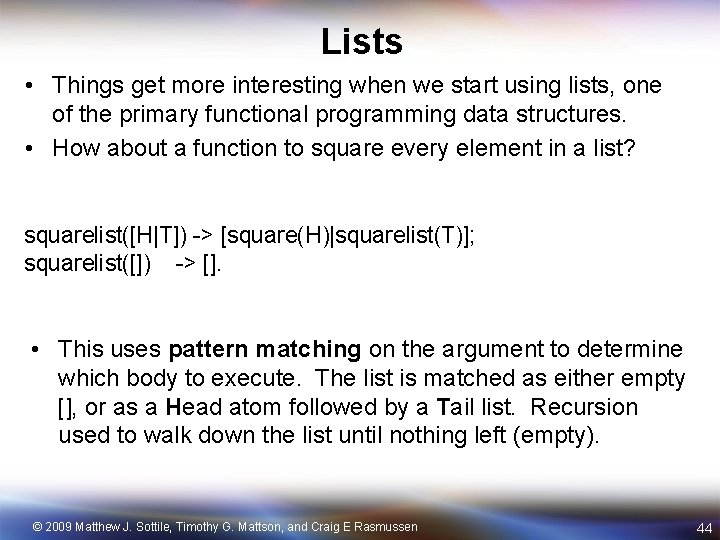

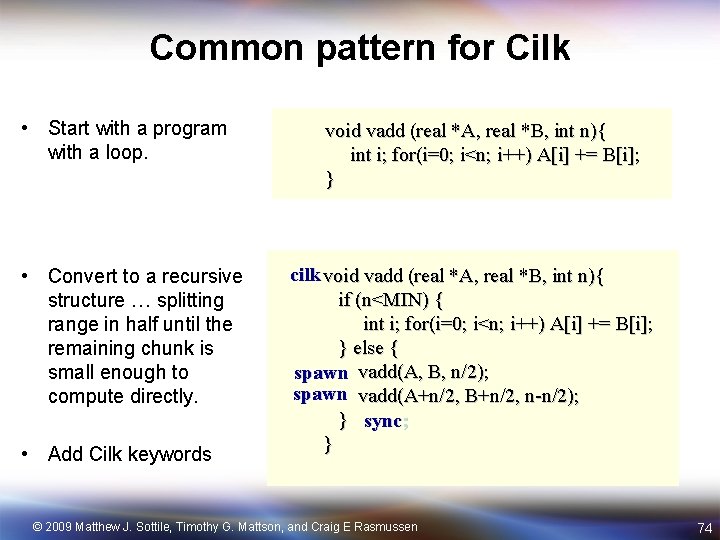

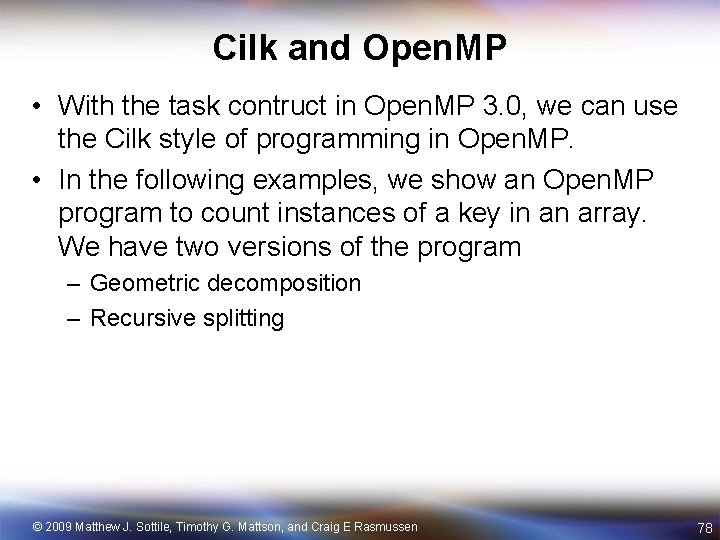

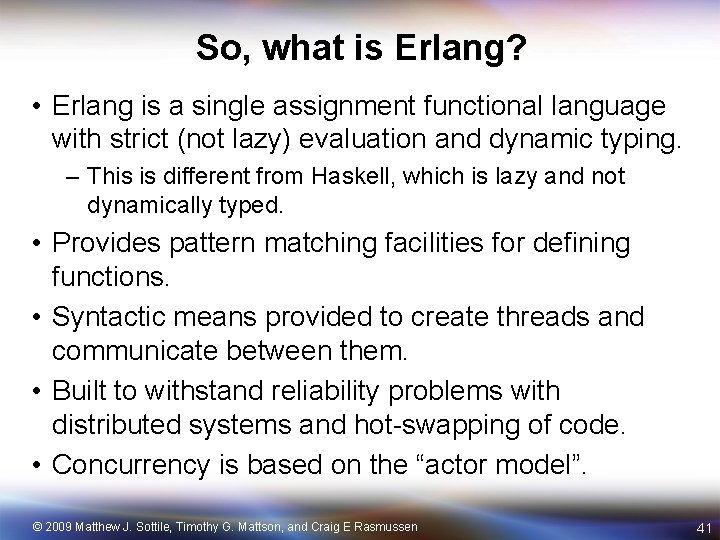

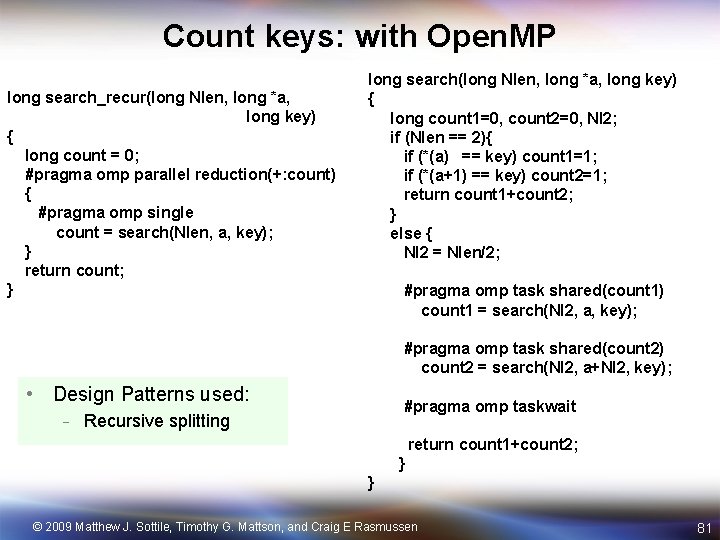

Lists

• Things get more interesting when we start using lists, one of the primary functional programming data structures.
• How about a function to square every element in a list?

    squarelist([H|T]) -> [square(H)|squarelist(T)];
    squarelist([]) -> [].

• This uses pattern matching on the argument to determine which body to execute. The list is matched either as empty, [], or as a Head element followed by a Tail list. Recursion is used to walk down the list until nothing is left (empty).

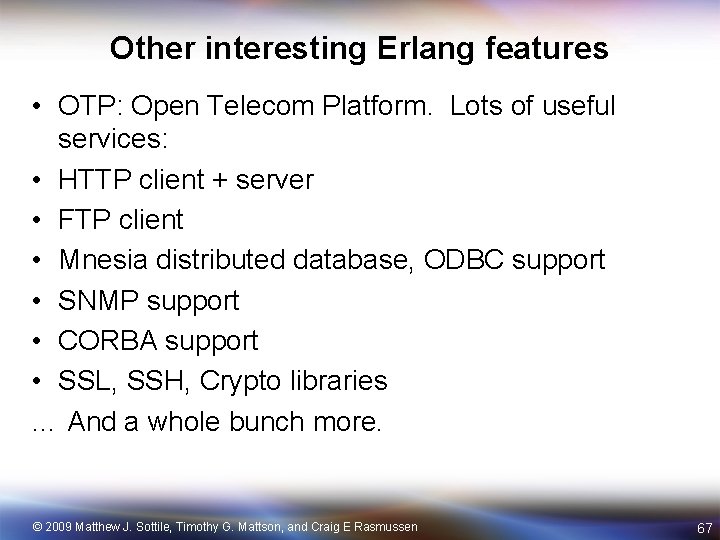

![Lists squarer erl modulesquarer exportsquare1 squarelist1 squareN NN squarelistHT squareHsquarelistT squarelist Lists squarer. erl -module(squarer). -export([square/1, squarelist/1]). square(N) -> N*N. squarelist([H|T]) -> [square(H)|squarelist(T)]; squarelist([]) ->](https://slidetodoc.com/presentation_image_h2/aa03ed02895085c2151b0a5bc4c32488/image-45.jpg)

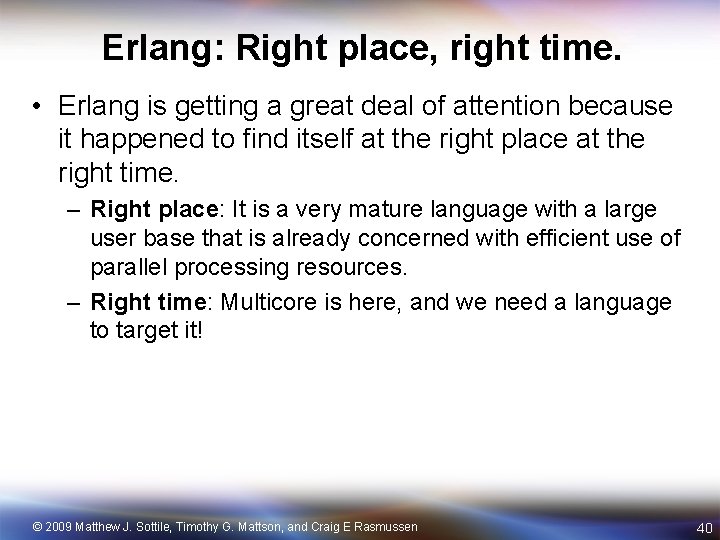

Lists

squarer.erl:

    -module(squarer).
    -export([square/1, squarelist/1]).

    square(N) -> N*N.

    squarelist([H|T]) -> [square(H)|squarelist(T)];
    squarelist([]) -> [].

Shell session:

    1> c(squarer).
    {ok,squarer}
    2> L = [1,2,3,4].
    [1,2,3,4]
    3> L2 = squarer:squarelist(L).
    [1,4,9,16]
    4> squarer:squarelist(L2).
    [1,16,81,256]

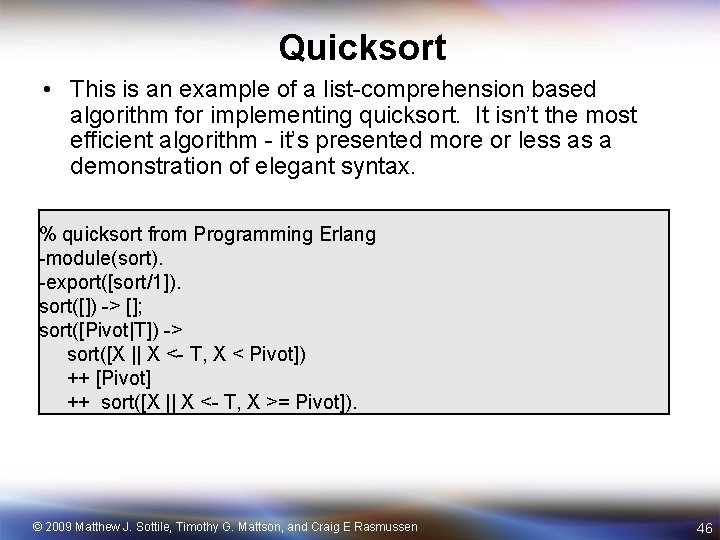

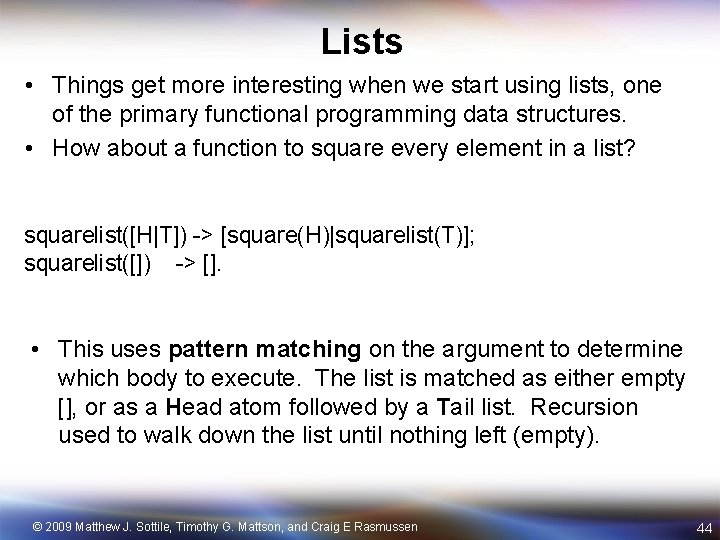

Quicksort

• This is an example of a list-comprehension based algorithm for implementing quicksort. It isn’t the most efficient algorithm; it’s presented more or less as a demonstration of elegant syntax.

    % quicksort from Programming Erlang
    -module(sort).
    -export([sort/1]).

    sort([]) -> [];
    sort([Pivot|T]) ->
        sort([X || X <- T, X < Pivot]) ++
        [Pivot] ++
        sort([X || X <- T, X >= Pivot]).

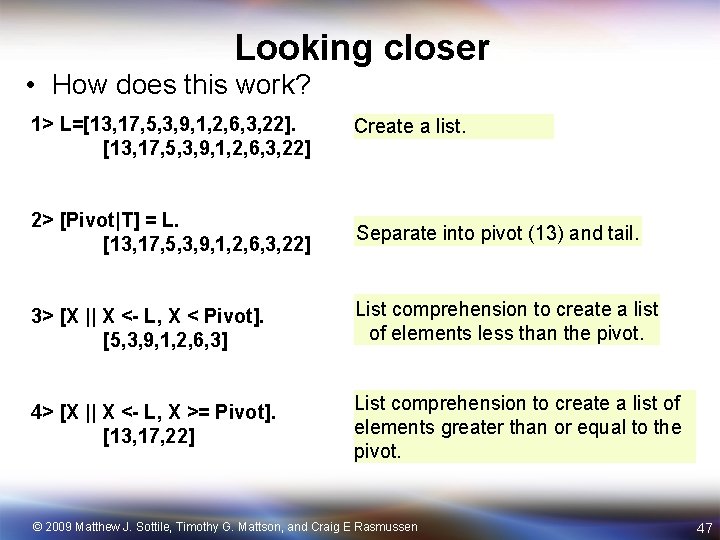

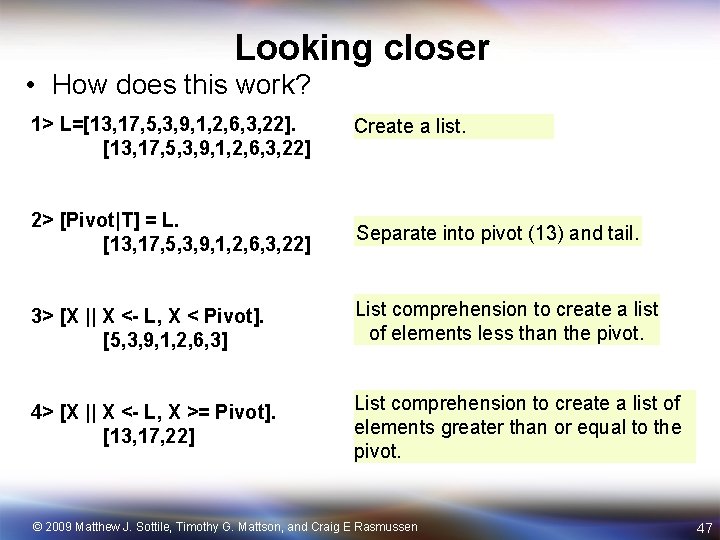

Looking closer

• How does this work?

    1> L = [13,17,5,3,9,1,2,6,3,22].
    [13,17,5,3,9,1,2,6,3,22]
    2> [Pivot|T] = L.
    [13,17,5,3,9,1,2,6,3,22]
    3> [X || X <- L, X < Pivot].
    [5,3,9,1,2,6,3]
    4> [X || X <- L, X >= Pivot].
    [13,17,22]

• Prompt 1: create a list.
• Prompt 2: separate it into pivot (13) and tail.
• Prompt 3: a list comprehension creates a list of elements less than the pivot.
• Prompt 4: a list comprehension creates a list of elements greater than or equal to the pivot. Because this session filters L rather than T, the pivot itself appears in the result.

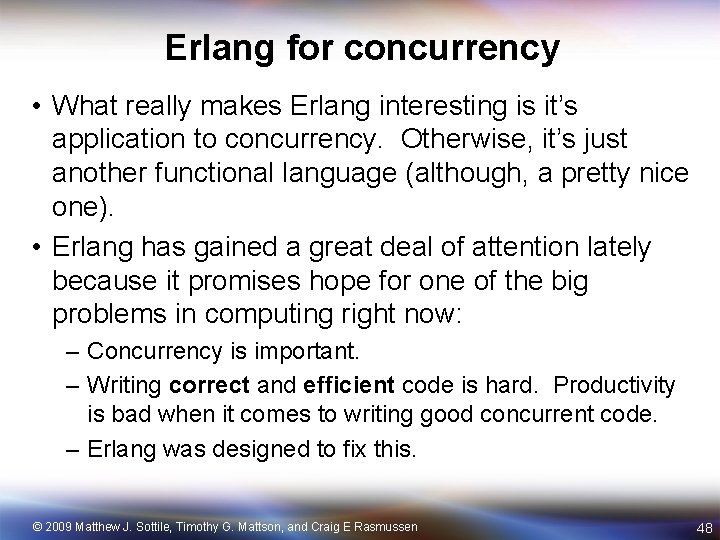

Erlang for concurrency

• What really makes Erlang interesting is its application to concurrency. Otherwise, it’s just another functional language (although a pretty nice one).
• Erlang has gained a great deal of attention lately because it promises hope for one of the big problems in computing right now:
  – Concurrency is important.
  – Writing correct and efficient code is hard. Productivity is bad when it comes to writing good concurrent code.
  – Erlang was designed to fix this.

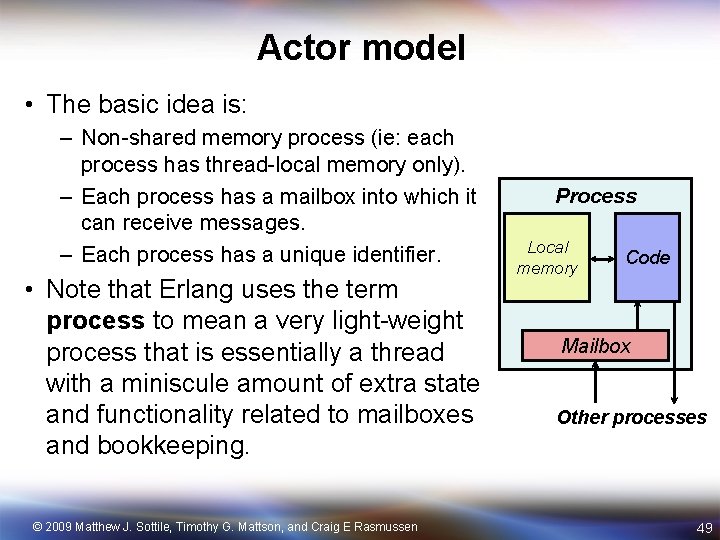

Actor model

• The basic idea is:
  – Processes do not share memory (i.e. each process has only its own local memory).
  – Each process has a mailbox into which it can receive messages.
  – Each process has a unique identifier.
• Note that Erlang uses the term process to mean a very light-weight process that is essentially a thread with a minuscule amount of extra state and functionality related to mailboxes and bookkeeping.

(Figure: a process containing local memory, code, and a mailbox, with messages arriving from other processes.)

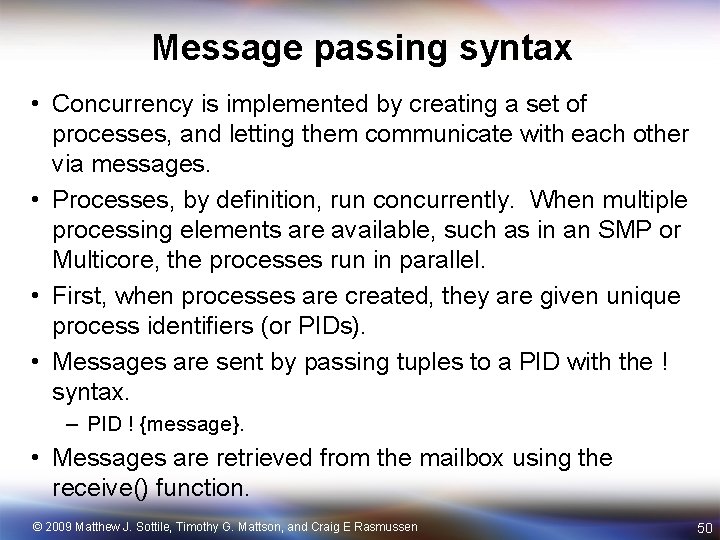

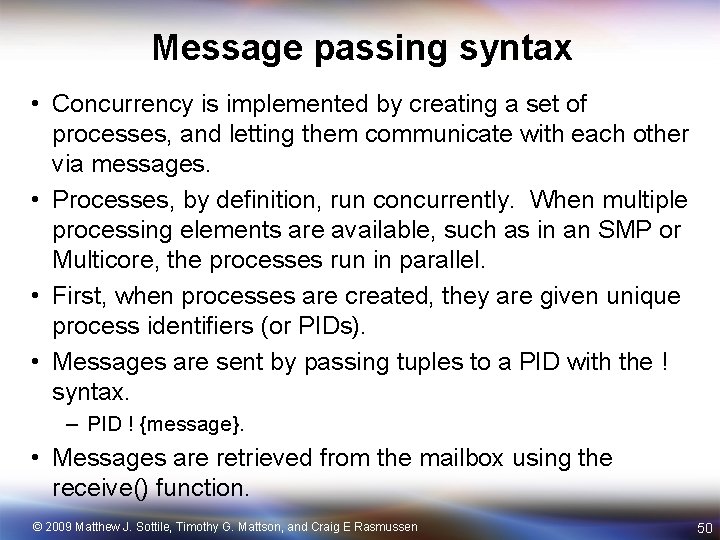

Message passing syntax

• Concurrency is implemented by creating a set of processes, and letting them communicate with each other via messages.
• Processes, by definition, run concurrently. When multiple processing elements are available, such as in an SMP or multicore system, the processes run in parallel.
• When processes are created, they are given unique process identifiers (or PIDs).
• Messages are sent by passing tuples to a PID with the ! syntax:
  – PID ! {message}.
• Messages are retrieved from the mailbox using a receive ... end expression.

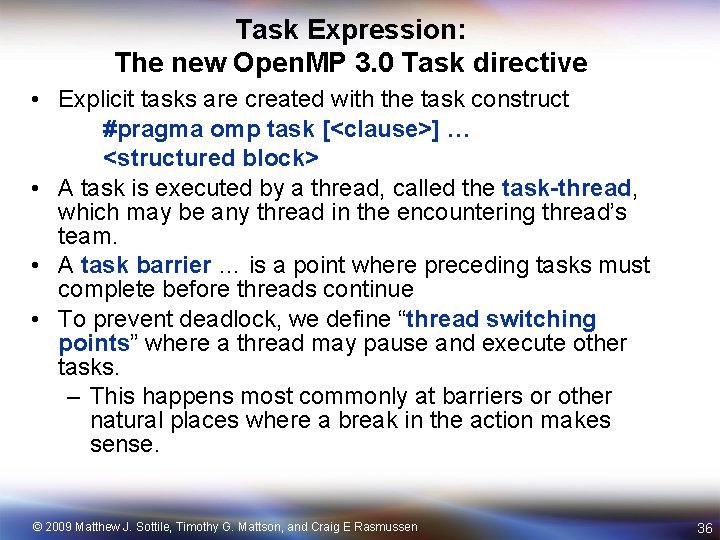

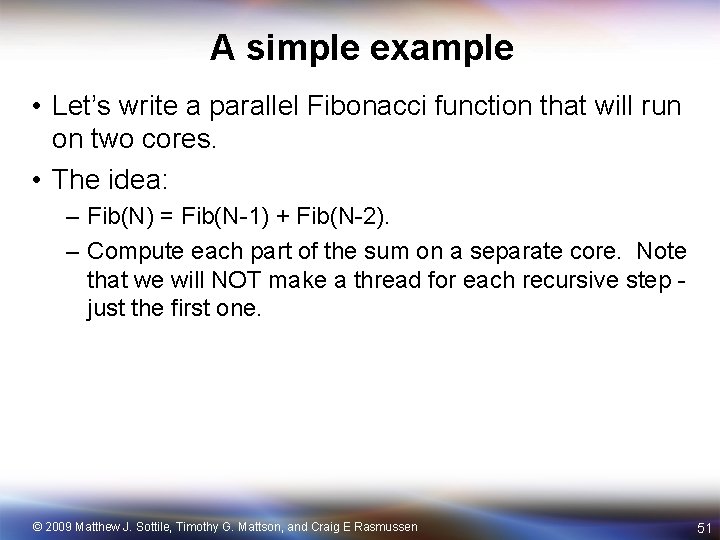

A simple example
• Let’s write a parallel Fibonacci function that will run on two cores.
• The idea:
– Fib(N) = Fib(N-1) + Fib(N-2).
– Compute each part of the sum on a separate core. Note that we will NOT make a thread for each recursive step, just the first one.

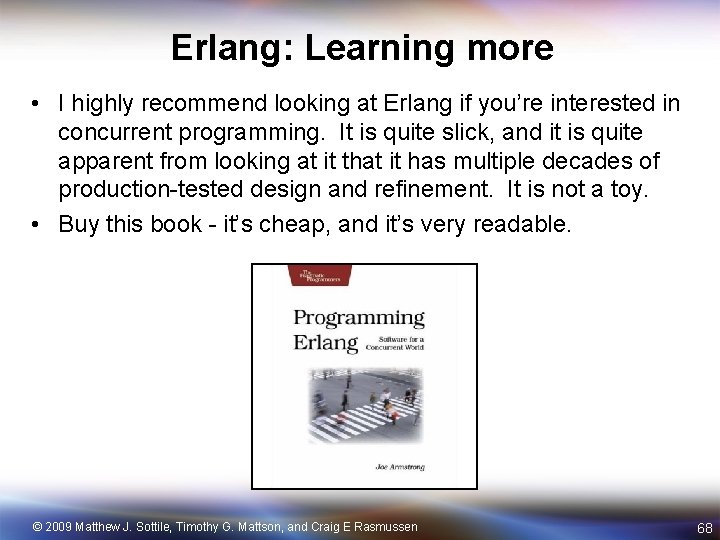

![Parallel Fibonacci numbers test to compute fibonacci numbers modulefib exportstart0 fib1 sfib1 sfib0 Parallel Fibonacci numbers % test to compute fibonacci numbers -module(fib). -export([start/0, fib/1, sfib/1]). sfib(0)](https://slidetodoc.com/presentation_image_h2/aa03ed02895085c2151b0a5bc4c32488/image-52.jpg)

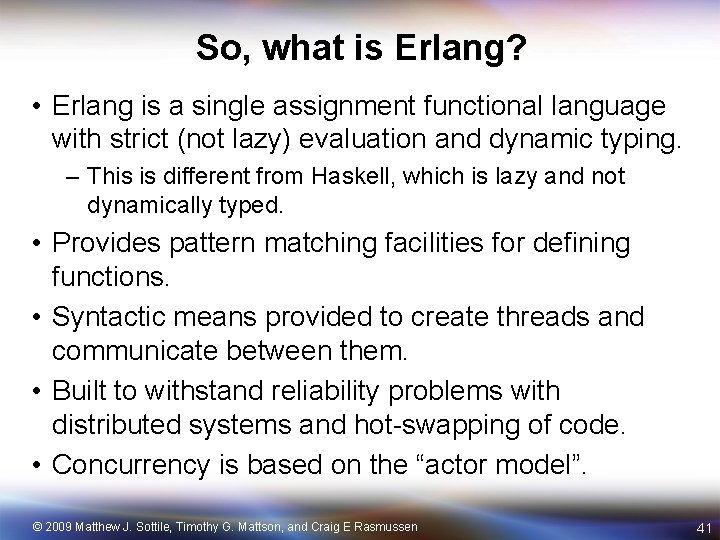

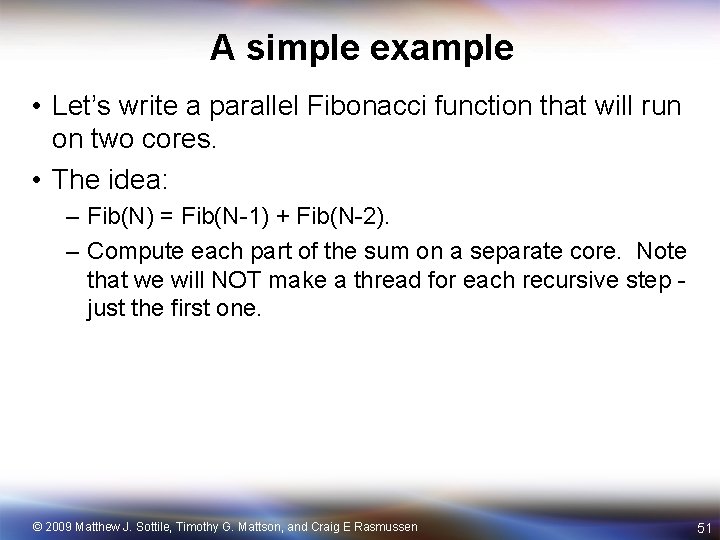

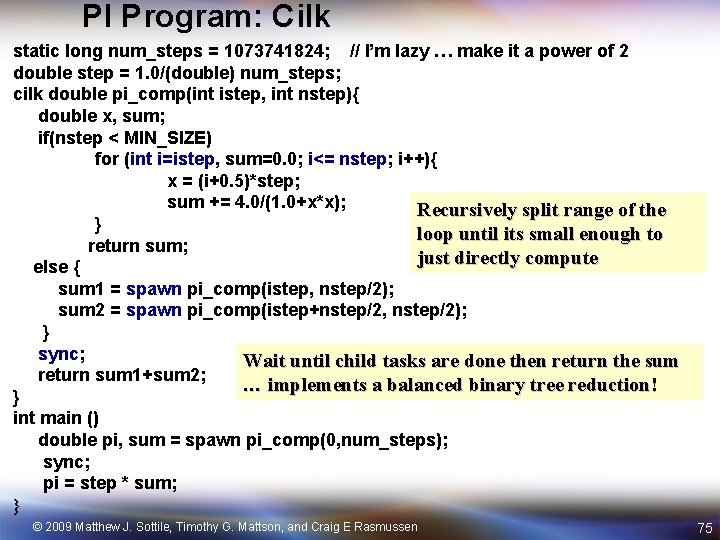

Parallel Fibonacci numbers

    % test to compute fibonacci numbers
    -module(fib).
    -export([start/0, fib/1, sfib/1]).

    % sequential Fibonacci
    sfib(0) -> 1;
    sfib(1) -> 1;
    sfib(N) -> sfib(N-1)+sfib(N-2).

    % spawn a worker process running loop/0
    start() -> spawn(fun loop/0).

    % worker: answer each {From, N} request with sfib(N)
    loop() ->
        receive
            {From, N} ->
                From ! {self(), sfib(N)},
                loop()
        end.

    % parallel Fibonacci: one worker per half of the sum
    fib(N) ->
        Pid = start(),
        Qid = start(),
        Pid ! {self(), N-1},
        Qid ! {self(), N-2},
        receive
            {_, ResponseP} ->
                receive
                    {_, ResponseQ} -> ResponseP+ResponseQ
                end
        end.

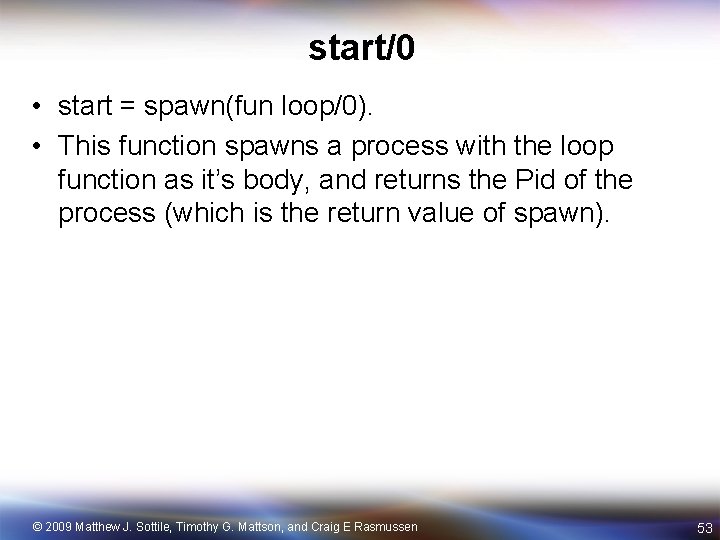

start/0
• start() -> spawn(fun loop/0).
• This function spawns a process with the loop function as its body, and returns the Pid of the process (which is the return value of spawn).

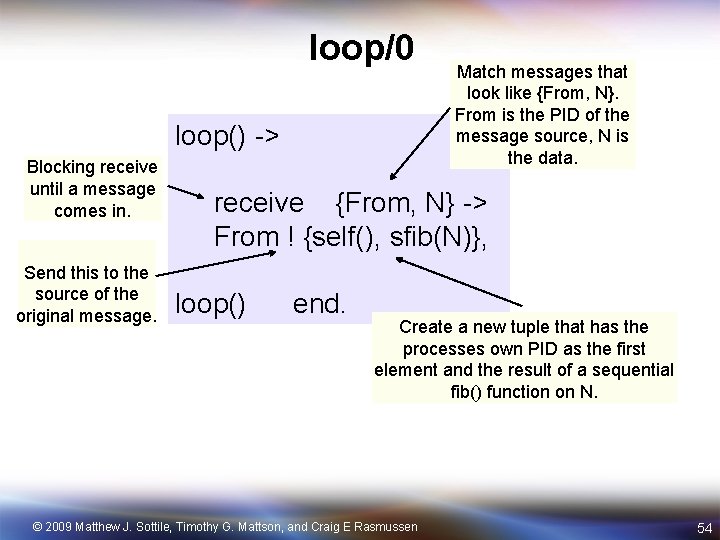

loop/0

    loop() ->
        receive                            % Blocking receive until a message comes in.
            {From, N} ->                   % Match messages that look like {From, N}.
                From ! {self(), sfib(N)},  % Send the reply to the source of the original message.
                loop()
        end.

• From is the PID of the message source, N is the data.
• The reply is a new tuple that has the process’s own PID as the first element and the result of the sequential sfib/1 function on N as the second.

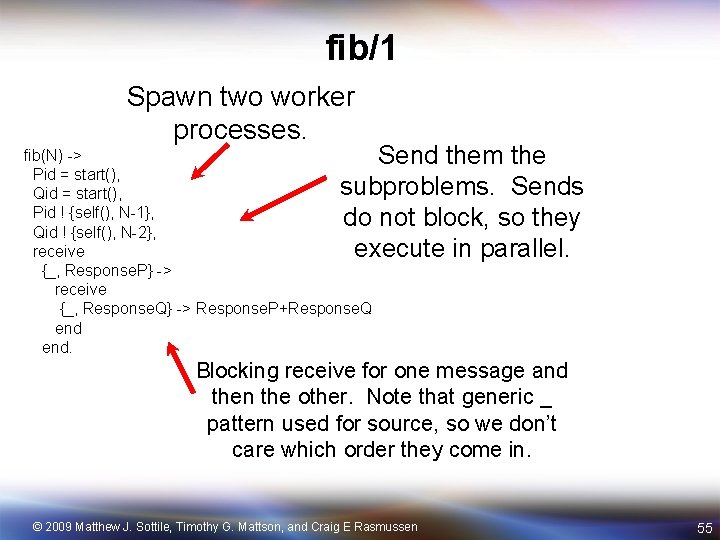

fib/1

    fib(N) ->
        Pid = start(),             % Spawn two worker processes.
        Qid = start(),
        Pid ! {self(), N-1},       % Send them the subproblems.
        Qid ! {self(), N-2},
        receive                    % Blocking receive for one message and then the other.
            {_, ResponseP} ->
                receive
                    {_, ResponseQ} -> ResponseP+ResponseQ
                end
        end.

• Sends do not block, so the two workers execute in parallel.
• Note that the generic _ pattern is used for the source, so we don’t care which order the responses come in.

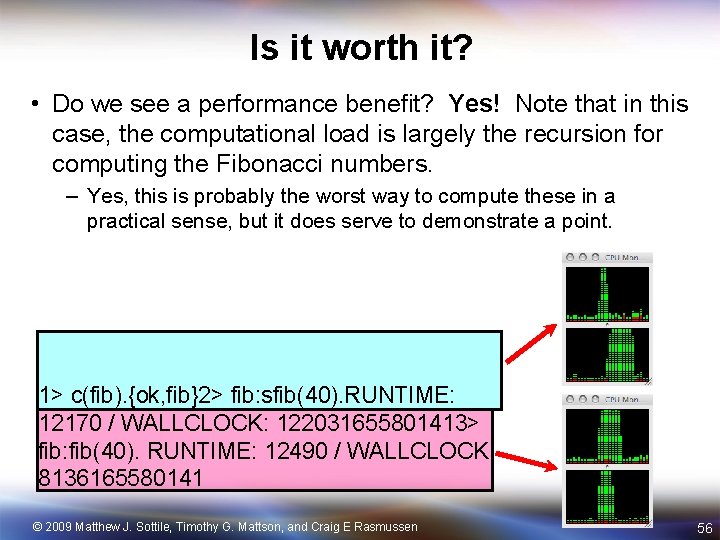

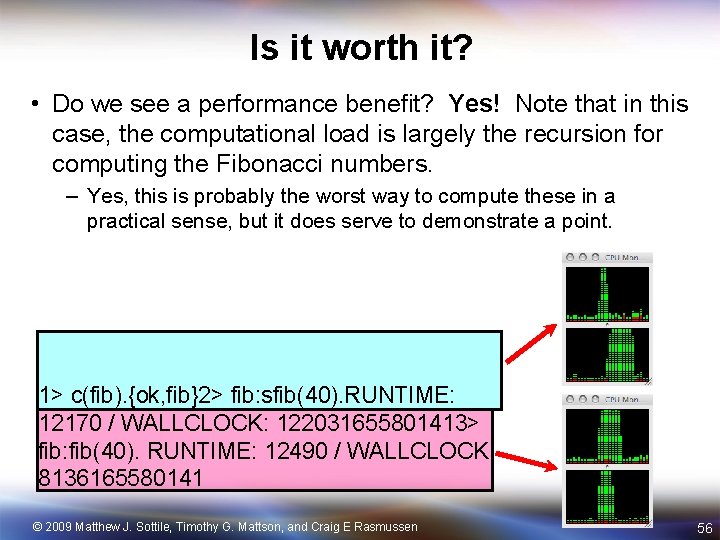

Is it worth it?
• Do we see a performance benefit? Yes! Wall-clock time drops from 12203 to 8136, roughly a 1.5x speedup on two cores, while the total runtime is essentially unchanged. Note that in this case, the computational load is largely the recursion for computing the Fibonacci numbers.
– Yes, this is probably the worst way to compute these in a practical sense, but it does serve to demonstrate a point.

    1> c(fib).
    {ok, fib}
    2> fib:sfib(40).
    RUNTIME: 12170 / WALLCLOCK: 12203
    165580141
    3> fib:fib(40).
    RUNTIME: 12490 / WALLCLOCK: 8136
    165580141

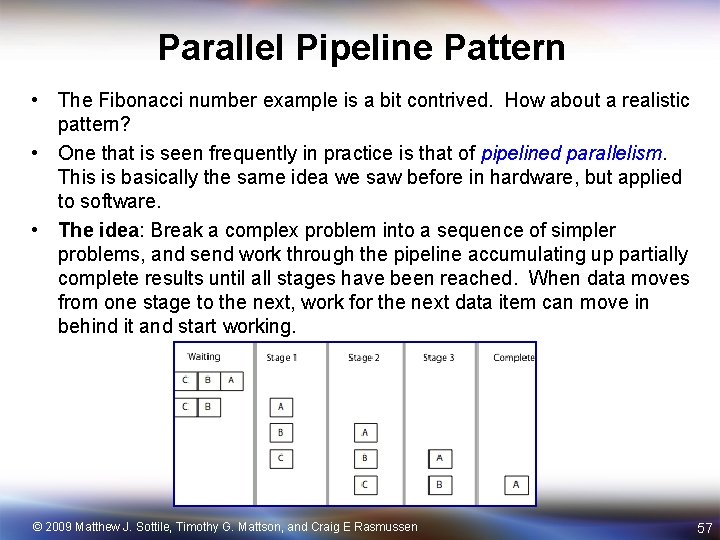

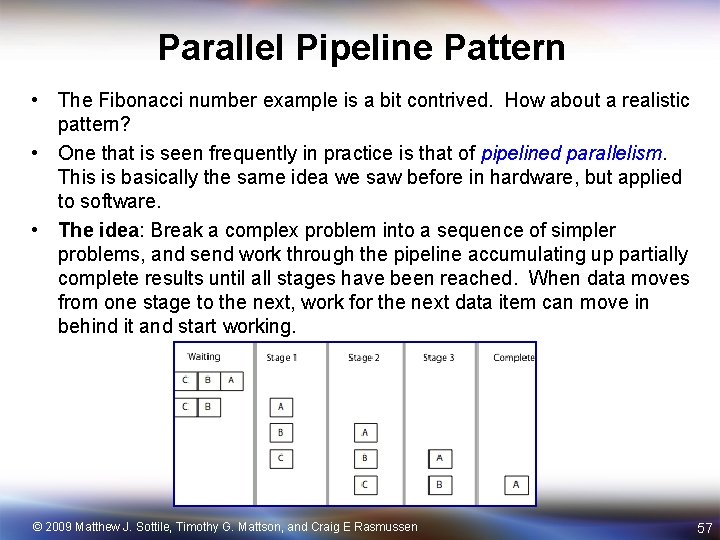

Parallel Pipeline Pattern
• The Fibonacci number example is a bit contrived. How about a realistic pattern?
• One that is seen frequently in practice is pipelined parallelism. This is basically the same idea we saw before in hardware, but applied to software.
• The idea: Break a complex problem into a sequence of simpler problems, and send work through the pipeline, accumulating partial results until all stages have been reached. When a data item moves from one stage to the next, the next data item can move in behind it, so several items are in flight at once.

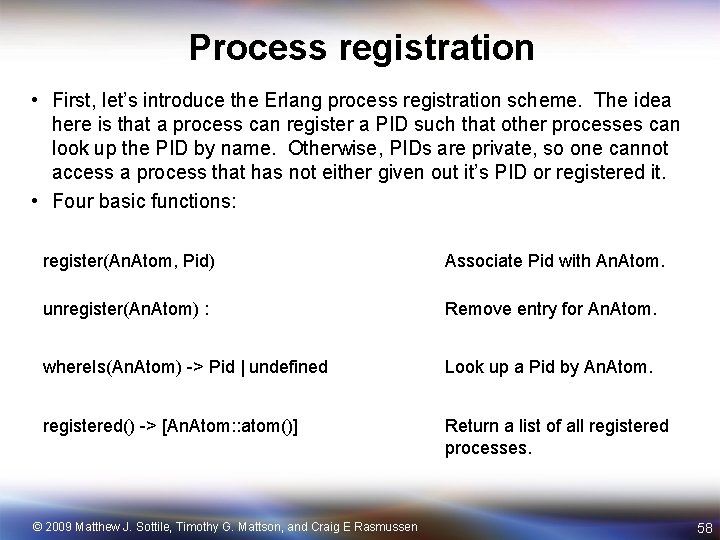

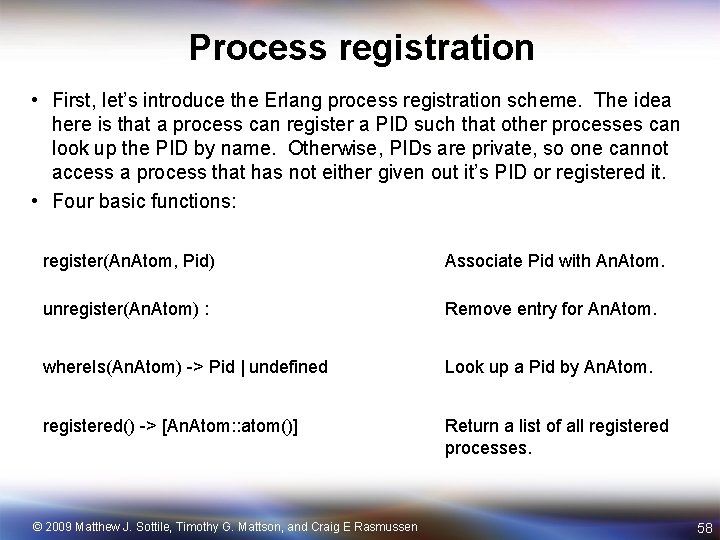

Process registration
• First, let’s introduce the Erlang process registration scheme. The idea here is that a process can register a PID under a name so that other processes can look up the PID by that name. Otherwise, PIDs are private, so one cannot access a process that has neither given out its PID nor registered it.
• Four basic functions:
– register(AnAtom, Pid): Associate Pid with AnAtom.
– unregister(AnAtom): Remove the entry for AnAtom.
– whereis(AnAtom) -> Pid | undefined: Look up a Pid by AnAtom.
– registered() -> [AnAtom::atom()]: Return a list of all registered names.

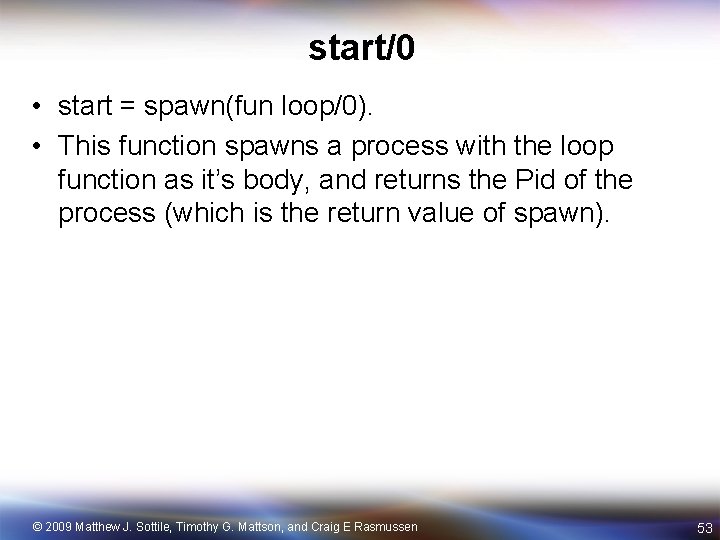

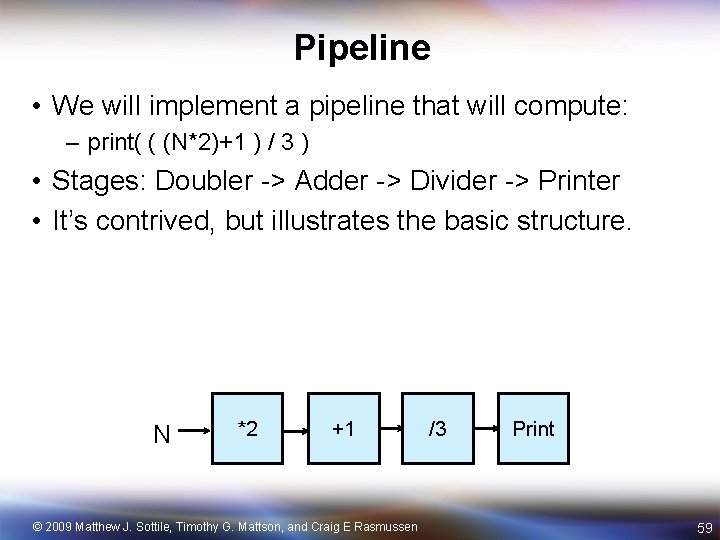

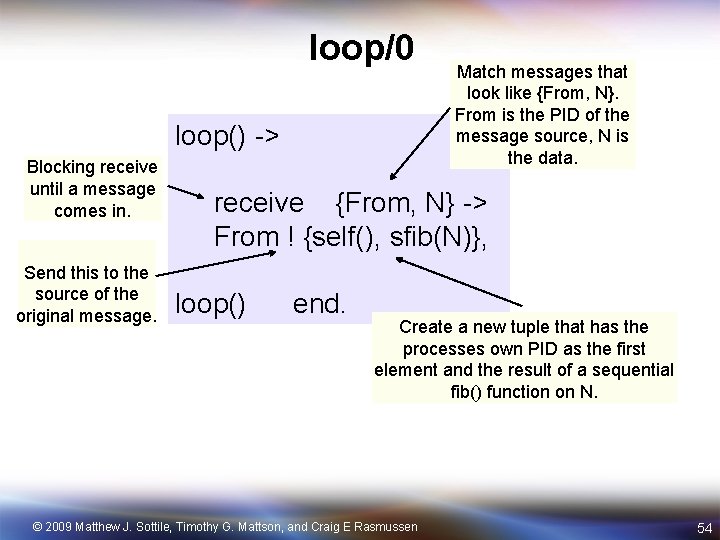

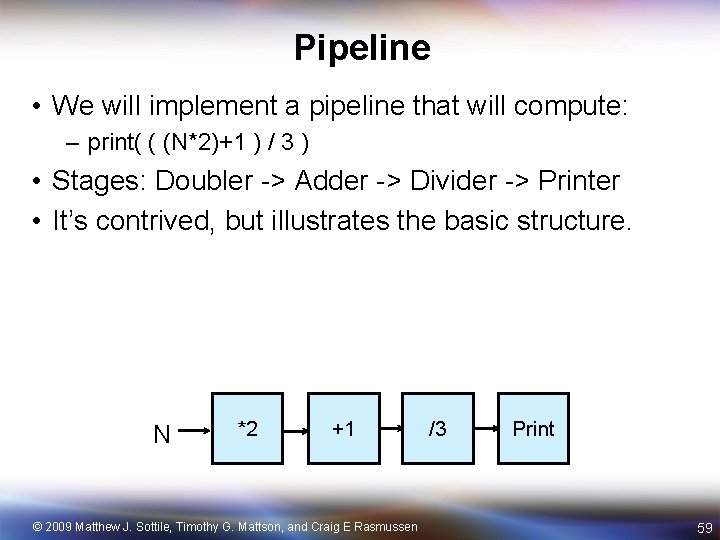

Pipeline
• We will implement a pipeline that will compute:
– print( ( (N*2)+1 ) / 3 )
• Stages: Doubler -> Adder -> Divider -> Printer
• It’s contrived, but illustrates the basic structure.
[Figure: N -> *2 -> +1 -> /3 -> Print]


Pipeline

    -module(pipeline).
    -export([start/0]).

    start() ->
        register(double, spawn(fun loop/0)),
        register(add, spawn(fun loop/0)),
        register(divide, spawn(fun loop/0)),
        register(complete, spawn(fun loop/0)).

    % stage 1 : double the input
    doubler(N) -> N*2.

    % stage 2 : add one to the input
    adder(N) -> N+1.

    % stage 3 : divide input by three
    divider(N) -> N/3.

    % stage 4 : consume the results
    completer(N) -> io:format("COMPLETE: ~p ~n", [N]).

    % receive loop that hands work to the appropriate stage
    loop() ->
        receive
            {double, N} -> add ! {add, doubler(N)}, loop();
            {add, N} -> divide ! {divide, adder(N)}, loop();
            {divide, N} -> complete ! {complete, divider(N)}, loop();
            {complete, N} -> completer(N), loop()
        end.

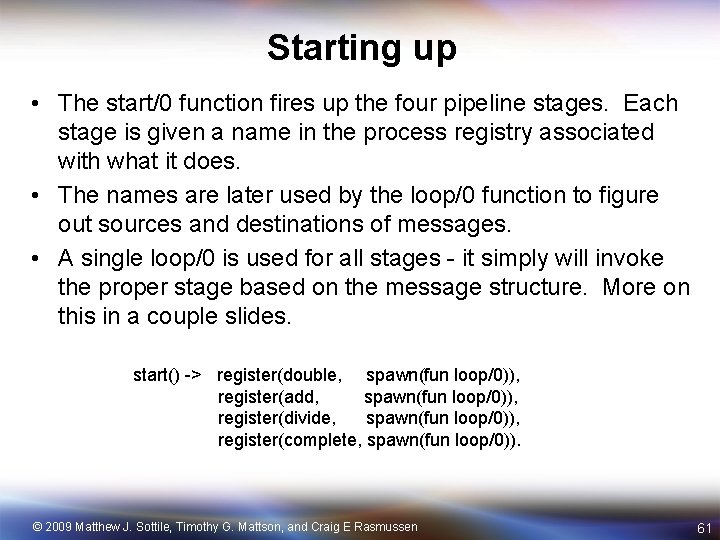

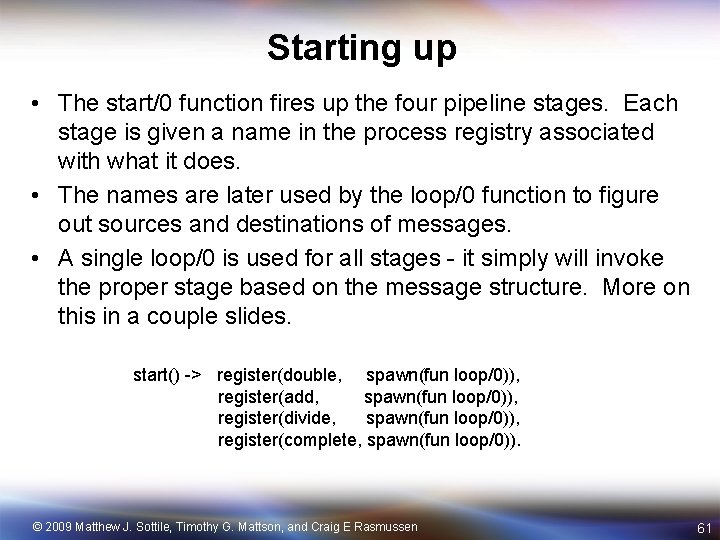

Starting up
• The start/0 function fires up the four pipeline stages. Each stage is given a name in the process registry associated with what it does.
• The names are later used by the loop/0 function to figure out sources and destinations of messages.
• A single loop/0 is used for all stages: it simply invokes the proper stage based on the message structure. More on this in a couple of slides.

    start() ->
        register(double, spawn(fun loop/0)),
        register(add, spawn(fun loop/0)),
        register(divide, spawn(fun loop/0)),
        register(complete, spawn(fun loop/0)).

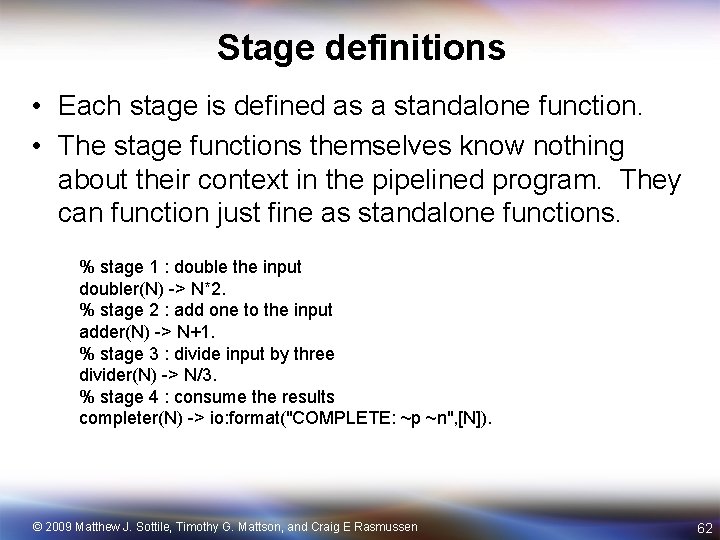

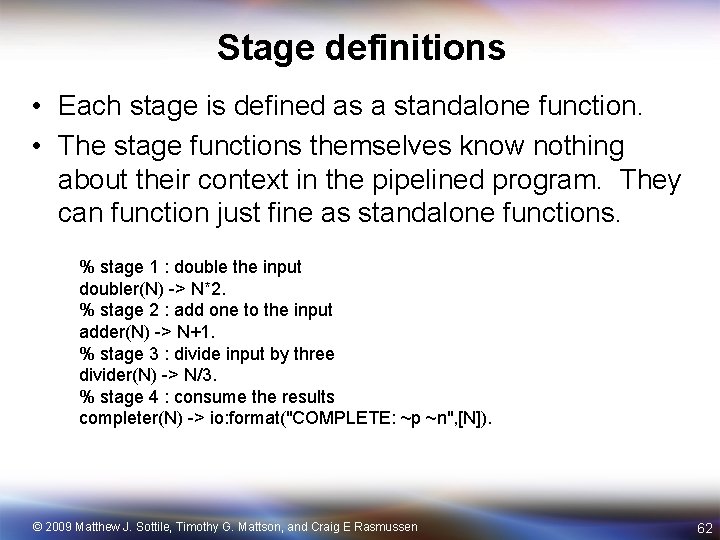

Stage definitions
• Each stage is defined as a standalone function.
• The stage functions themselves know nothing about their context in the pipelined program. They can function just fine as standalone functions.

    % stage 1 : double the input
    doubler(N) -> N*2.

    % stage 2 : add one to the input
    adder(N) -> N+1.

    % stage 3 : divide input by three
    divider(N) -> N/3.

    % stage 4 : consume the results
    completer(N) -> io:format("COMPLETE: ~p ~n", [N]).

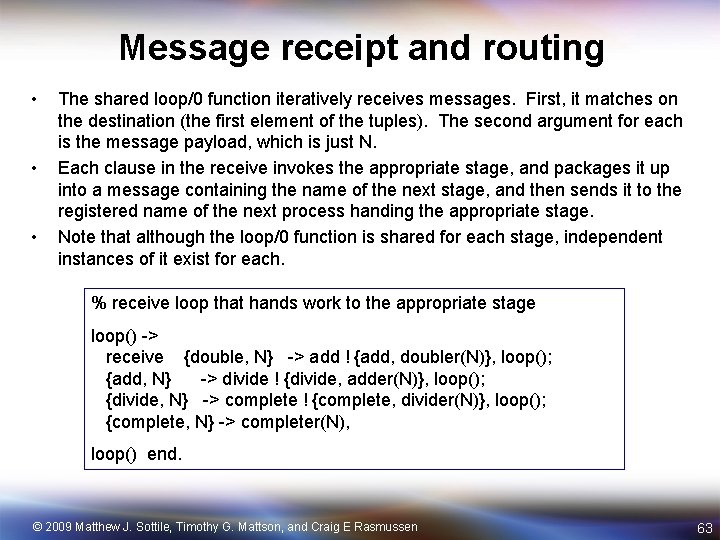

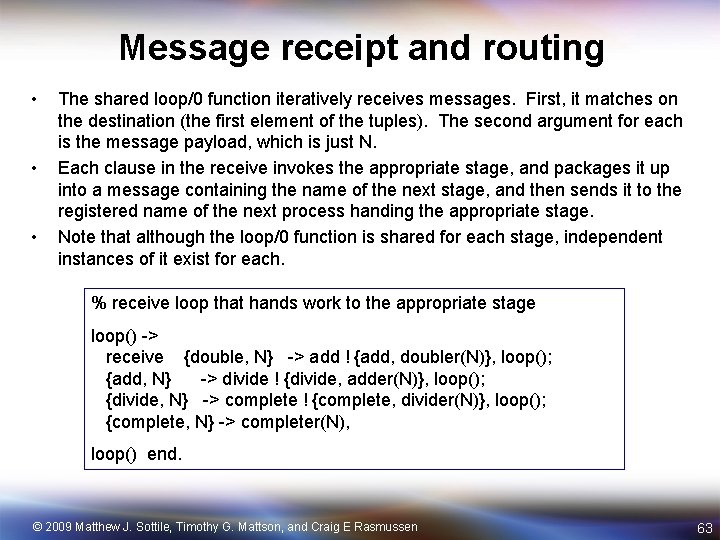

Message receipt and routing
• The shared loop/0 function iteratively receives messages. First, it matches on the destination (the first element of each tuple). The second element is the message payload, which is just N.
• Each clause in the receive invokes the appropriate stage, packages the result into a message containing the name of the next stage, and sends it to the registered name of the process handling that stage.
• Note that although the loop/0 function is shared by all stages, an independent instance of it runs in each stage’s process.

    % receive loop that hands work to the appropriate stage
    loop() ->
        receive
            {double, N} -> add ! {add, doubler(N)}, loop();
            {add, N} -> divide ! {divide, adder(N)}, loop();
            {divide, N} -> complete ! {complete, divider(N)}, loop();
            {complete, N} -> completer(N), loop()
        end.

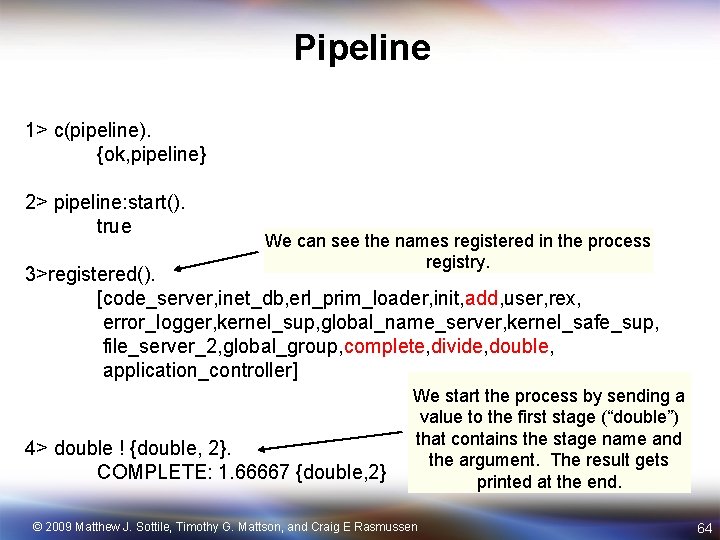

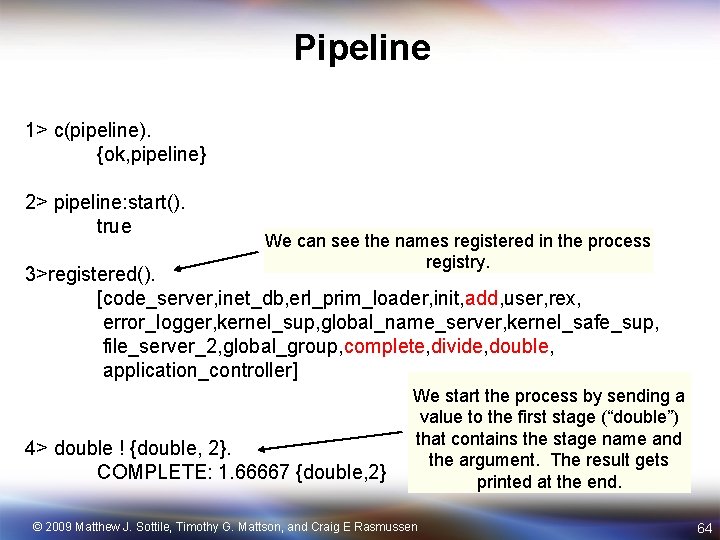

Pipeline

    1> c(pipeline).
    {ok, pipeline}
    2> pipeline:start().
    true
    3> registered().
    [code_server, inet_db, erl_prim_loader, init, add, user, rex,
     error_logger, kernel_sup, global_name_server, kernel_safe_sup,
     file_server_2, global_group, complete, divide, double,
     application_controller]
    4> double ! {double, 2}.
    COMPLETE: 1.66667
    {double, 2}

• After start(), we can see the stage names (add, complete, divide, double) registered in the process registry.
• We start the process by sending a message to the first stage (“double”) that contains the stage name and the argument. The result, ((2*2)+1)/3 = 1.66667, gets printed at the end.

Process dictionaries
• Sometimes you just need state for performance reasons. Not all algorithms fit well in a purely functional model.
• The process dictionary is a block of mutable state that each process contains. These are not shared between processes.
• The process dictionary is there if you absolutely and really need to program with side effects.
– You should always feel shameful and guilty if you use the process dictionary, and think hard about a way to implement the algorithm without it.

What haven’t we covered on Erlang?
• Error handling. What if a message comes in that doesn’t match a clause in the receive block?
• How are errors propagated between processes? What if a process dies and another is waiting on it to send a message? Erlang was built to be robust to this and to allow processes to deal with this situation gracefully.
• How does Erlang support hot-swappable code? This is pretty cool: you can upgrade portions of a running program without bringing it down and restarting. Very useful for critical services (such as telecommunications systems).
• Standard library. Erlang has a nice standard library to do all kinds of useful things. We haven’t touched on that at all.

Other interesting Erlang features
• OTP: Open Telecom Platform. Lots of useful services:
– HTTP client + server
– FTP client
– Mnesia distributed database, ODBC support
– SNMP support
– CORBA support
– SSL, SSH, Crypto libraries
… And a whole bunch more.

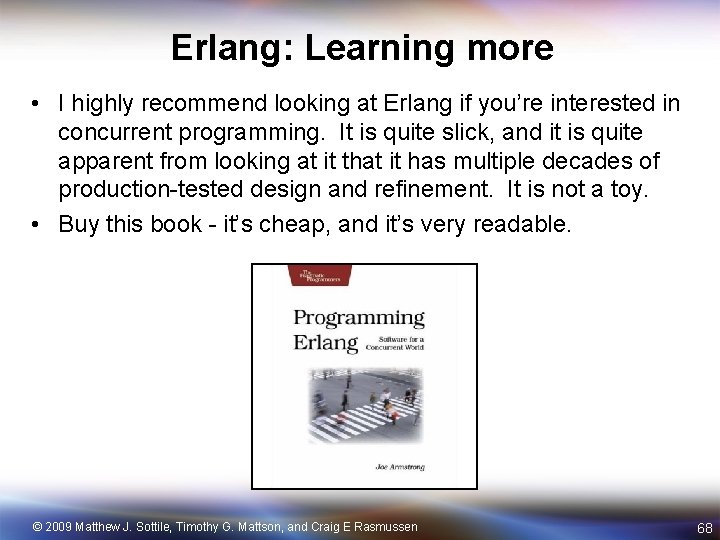

Erlang: Learning more
• I highly recommend looking at Erlang if you’re interested in concurrent programming. It is quite slick, and it is apparent from looking at it that it has multiple decades of production-tested design and refinement behind it. It is not a toy.
• Buy this book: it’s cheap, and it’s very readable.

Outline
• OpenMP
• Erlang
• Cilk

Cilk in one slide
• Extends C to create a parallel language but maintains serial semantics.
• A fork-join style, task-oriented programming model, perfect for recursive algorithms (e.g. branch-and-bound) … shared memory machines only!
• Solid theoretical foundation … can prove performance theorems.
• Key words:
– cilk: Marks a function as a “cilk” function that can be spawned.
– spawn: Spawns a cilk function … only 2 to 5 times the cost of a regular function call.
– sync: Wait until immediate children spawned functions return.
• “Advanced” key words:
– inlet: Define a function to handle return values from a cilk task.
– cilk_fence: A portable memory fence.
– abort: Terminate all currently existing spawned tasks.
• Includes locks and a few other odds and ends.

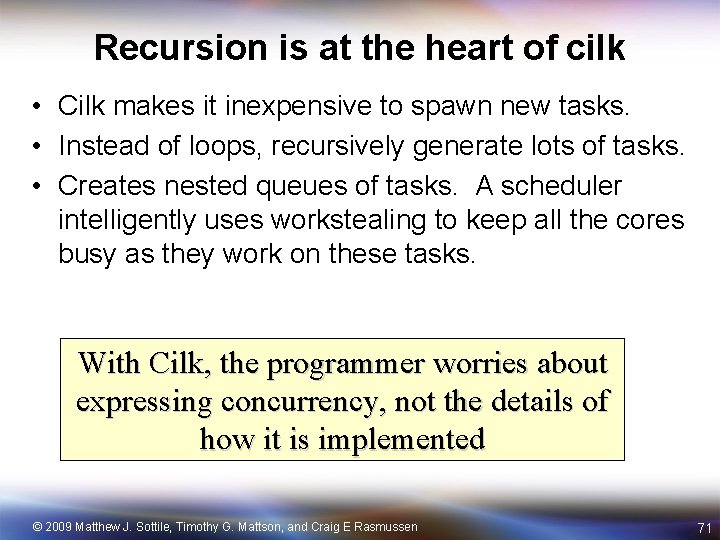

Recursion is at the heart of Cilk
• Cilk makes it inexpensive to spawn new tasks.
• Instead of loops, recursively generate lots of tasks.
• Creates nested queues of tasks. A scheduler intelligently uses work stealing to keep all the cores busy as they work on these tasks.
• With Cilk, the programmer worries about expressing concurrency, not the details of how it is implemented.

A simple Cilk example
• Compute Fibonacci numbers … recursively split the problem until it’s small enough to compute directly.

C version:

    int fib (int n) {
        if (n<2) return (n);
        else {
            int x, y;
            x = fib(n-1);
            y = fib(n-2);
            return (x+y);
        }
    }

Cilk version:

    cilk int fib (int n) {
        if (n<2) return (n);
        else {
            int x, y;
            x = spawn fib(n-1);
            y = spawn fib(n-2);
            sync;
            return (x+y);
        }
    }

• Remove the cilk key words and you produce a correct C program (the C elision).
• Cilk supports an incremental parallelism software methodology.
Based on Charles E. Leiserson, Multithreaded Programming in Cilk, lecture 1, July 13, 2006

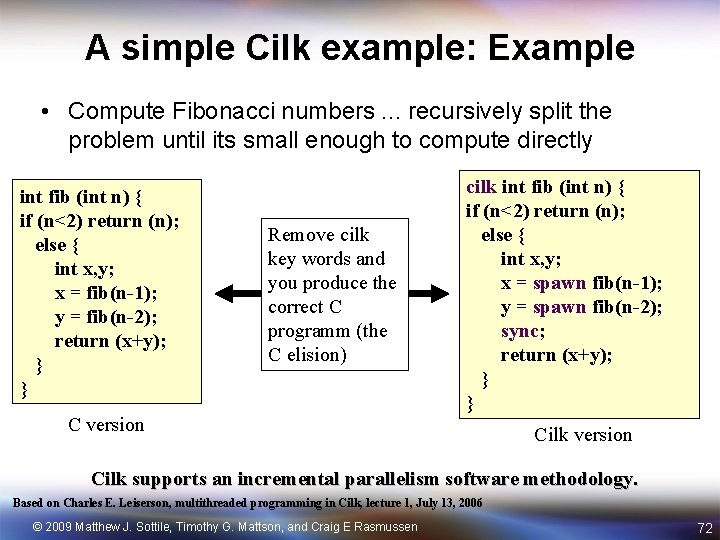

Cactus stack
• Cilk supports C’s rule for pointers: a pointer to stack space can be passed from parent to child, but not from child to parent. (Cilk also supports malloc.)
[Figure: a call graph over procedures A, B, C, D, and E, and each procedure’s view of the stack: A; A B; A C; A C D; A C E]
Based on Charles E. Leiserson, Multithreaded Programming in Cilk, lecture 1, July 13, 2006

Common pattern for Cilk
• Start with a program with a loop.
• Convert to a recursive structure … splitting the range in half until the remaining chunk is small enough to compute directly.
• Add Cilk keywords.

Loop version:

    void vadd (real *A, real *B, int n){
        int i;
        for (i=0; i<n; i++) A[i] += B[i];
    }

Cilk version:

    cilk void vadd (real *A, real *B, int n){
        if (n<MIN) {
            int i;
            for (i=0; i<n; i++) A[i] += B[i];
        } else {
            spawn vadd(A, B, n/2);
            spawn vadd(A+n/2, B+n/2, n-n/2);
        }
        sync;
    }
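The middle step of this pattern (recursive structure, no Cilk keywords yet) can be sketched in plain C. This is an illustrative sketch, not code from the slides: the value of `MIN` and the `typedef` for `real` are assumptions, and the comments mark where `spawn` and `sync` would be added in the final step.

```c
#include <assert.h>

#define MIN 4            /* assumed cutoff; the slide does not give a value */

typedef double real;     /* assumption: `real` is a floating-point type */

/* Loop rewritten as recursive halving (the C elision of the Cilk version). */
void vadd(real *A, const real *B, int n)
{
    assert(n >= 0);
    if (n < MIN) {
        /* chunk is small enough: compute directly */
        for (int i = 0; i < n; i++)
            A[i] += B[i];
    } else {
        vadd(A, B, n / 2);                      /* spawn would go here */
        vadd(A + n / 2, B + n / 2, n - n / 2);  /* spawn would go here */
    }
    /* sync would go here */
}
```

The second call receives `n - n/2` elements rather than `n/2`, so odd lengths lose no element.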

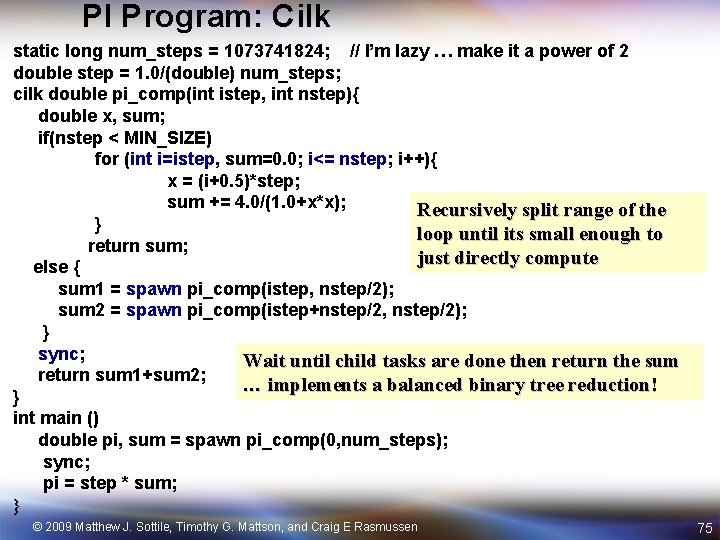

PI Program: Cilk

    static long num_steps = 1073741824;   // I’m lazy … make it a power of 2
    double step;                          // 1.0/num_steps, set in main
    // MIN_SIZE (the direct-computation cutoff) is not defined on the slide

    cilk double pi_comp(int istep, int nstep){
        double x, sum = 0.0, sum1, sum2;
        int i;
        if (nstep < MIN_SIZE) {
            // range is small enough: just compute it directly
            for (i=istep; i<istep+nstep; i++){
                x = (i+0.5)*step;
                sum += 4.0/(1.0+x*x);
            }
            return sum;
        } else {
            // recursively split the range of the loop
            sum1 = spawn pi_comp(istep, nstep/2);
            sum2 = spawn pi_comp(istep+nstep/2, nstep/2);
        }
        sync;               // wait until child tasks are done, then return the sum
        return sum1+sum2;   // … implements a balanced binary tree reduction!
    }

    cilk int main () {
        double pi, sum;
        step = 1.0/(double) num_steps;
        sum = spawn pi_comp(0, num_steps);
        sync;
        pi = step * sum;
        return 0;
    }
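The slide does not state the formula behind the loop. Reading it off the loop body, the program appears to be a midpoint-rule approximation of a standard integral for π, with N standing for `num_steps` and Δ for `step`:

$$\pi = \int_0^1 \frac{4}{1+x^2}\,dx \;\approx\; \Delta \sum_{i=0}^{N-1} \frac{4}{1+x_i^2}, \qquad x_i = (i+0.5)\,\Delta, \qquad \Delta = \frac{1}{N}$$

Each call to `pi_comp` returns the partial sum over its own index range, and the factor Δ is applied once at the end (`pi = step * sum`).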

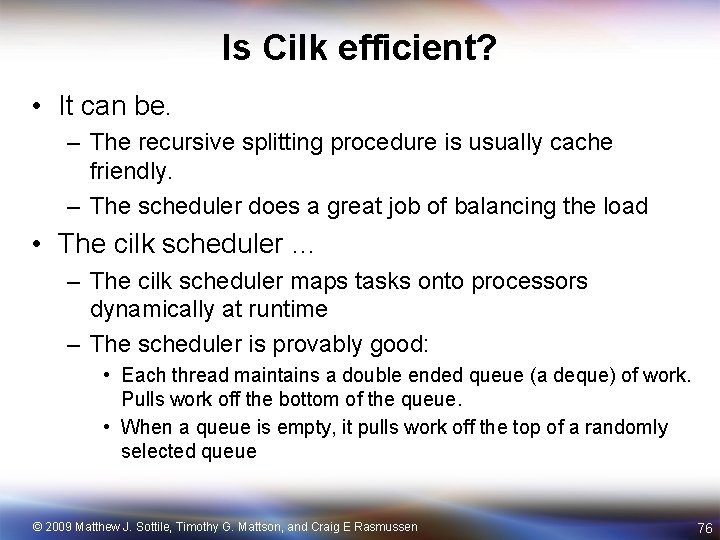

Is Cilk efficient?
• It can be.
– The recursive splitting procedure is usually cache friendly.
– The scheduler does a great job of balancing the load.
• The Cilk scheduler …
– maps tasks onto processors dynamically at runtime.
– is provably good:
  • Each thread maintains a double-ended queue (a deque) of work, and pulls work off the bottom of its own queue.
  • When its queue is empty, it pulls work off the top of a randomly selected queue.
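The two ends of the deque can be illustrated with a small, single-threaded sketch. This is not Cilk's implementation: the type and function names (`WorkDeque`, `push_bottom`, `pop_bottom`, `steal_top`) and the fixed capacity are hypothetical, and a real scheduler needs synchronization between the owner and thieves, which is omitted here.

```c
#include <assert.h>
#include <stdbool.h>

#define DEQUE_CAP 64     /* assumed fixed capacity for the sketch */

/* One worker's deque of task ids. Not thread-safe. */
typedef struct {
    int tasks[DEQUE_CAP];
    int top;             /* index of the oldest task (thieves take here) */
    int bottom;          /* one past the newest task (owner works here) */
} WorkDeque;

void deque_init(WorkDeque *d) { d->top = 0; d->bottom = 0; }

bool deque_empty(const WorkDeque *d) { return d->top == d->bottom; }

/* Owner adds newly spawned work at the bottom. */
void push_bottom(WorkDeque *d, int task)
{
    assert(d->bottom < DEQUE_CAP);
    d->tasks[d->bottom++] = task;
}

/* Owner takes the most recently pushed task from the bottom. */
bool pop_bottom(WorkDeque *d, int *task)
{
    if (deque_empty(d)) return false;
    *task = d->tasks[--d->bottom];
    return true;
}

/* A thief takes the oldest task from the top of a victim's deque. */
bool steal_top(WorkDeque *victim, int *task)
{
    if (deque_empty(victim)) return false;
    *task = victim->tasks[victim->top++];
    return true;
}
```

An idle worker would call `steal_top` on a randomly chosen victim. In a recursive program the oldest task usually sits nearest the root of the recursion, so a single steal tends to move a large piece of work.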

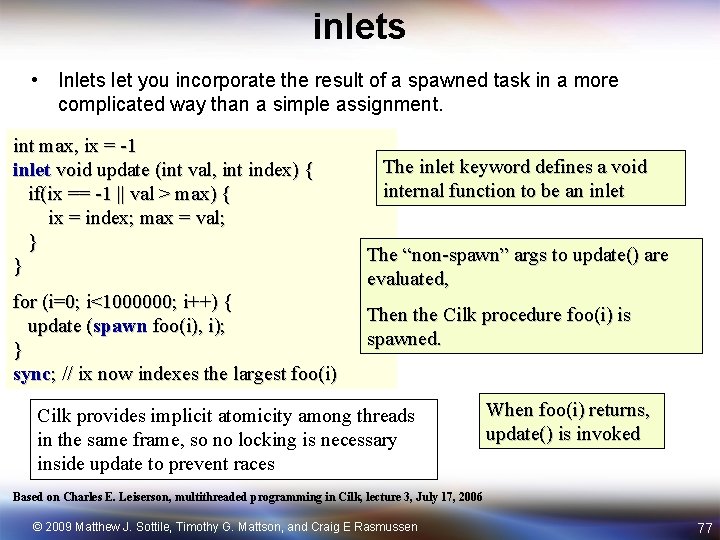

inlets
• Inlets let you incorporate the result of a spawned task in a more complicated way than a simple assignment.

    int max, ix = -1;

    inlet void update (int val, int index) {
        if (ix == -1 || val > max) {
            ix = index; max = val;
        }
    }

    for (i=0; i<1000000; i++) {
        update (spawn foo(i), i);
    }
    sync; // ix now indexes the largest foo(i)

• The inlet keyword defines a void internal function to be an inlet.
• The “non-spawn” args to update() are evaluated, then the Cilk procedure foo(i) is spawned.
• When foo(i) returns, update() is invoked.
• Cilk provides implicit atomicity among threads in the same frame, so no locking is necessary inside update to prevent races.
Based on Charles E. Leiserson, Multithreaded Programming in Cilk, lecture 3, July 17, 2006

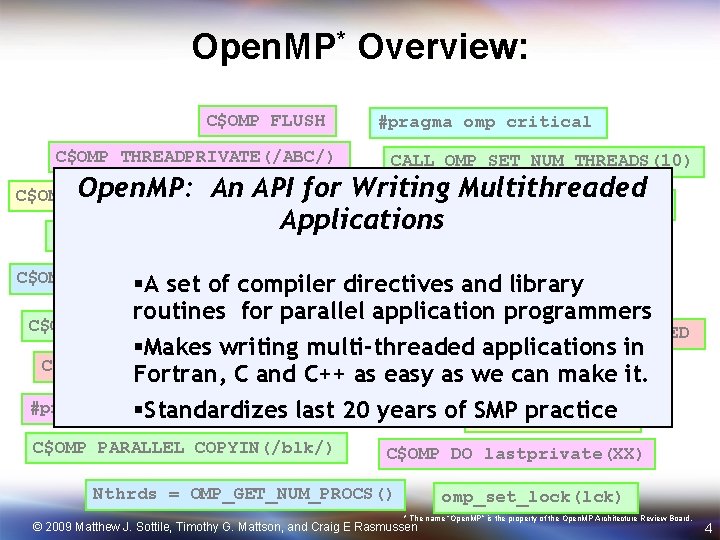

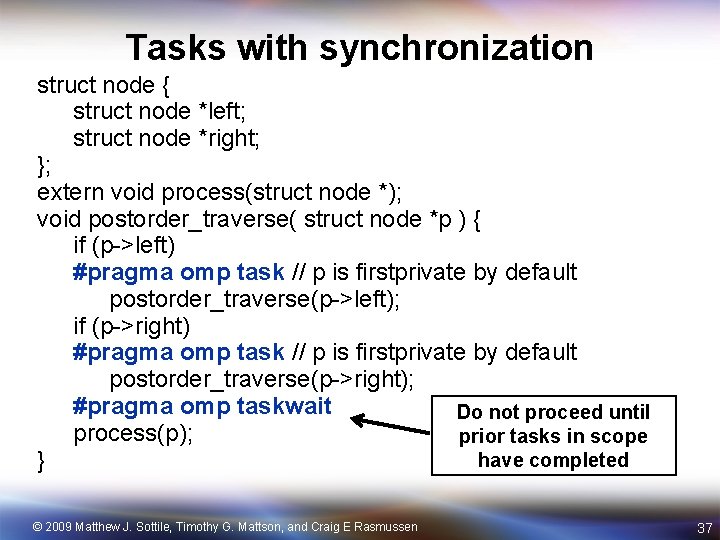

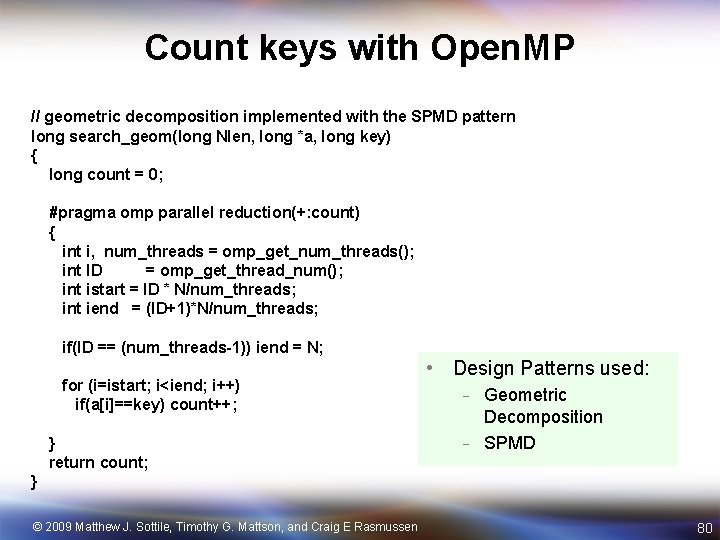

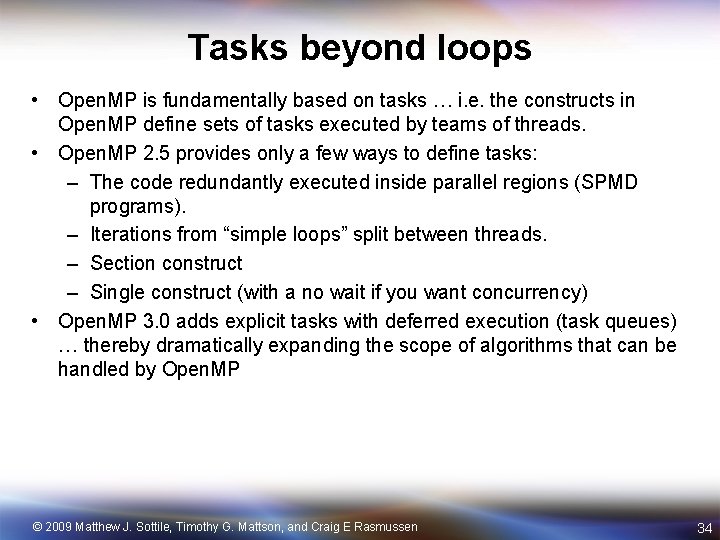

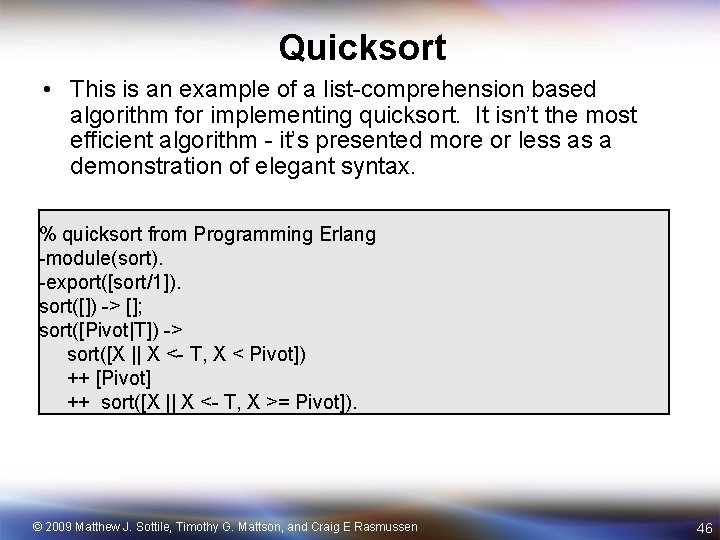

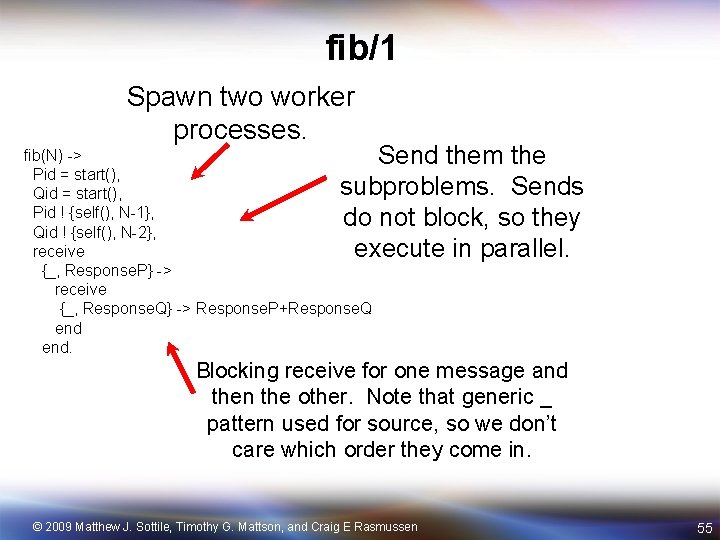

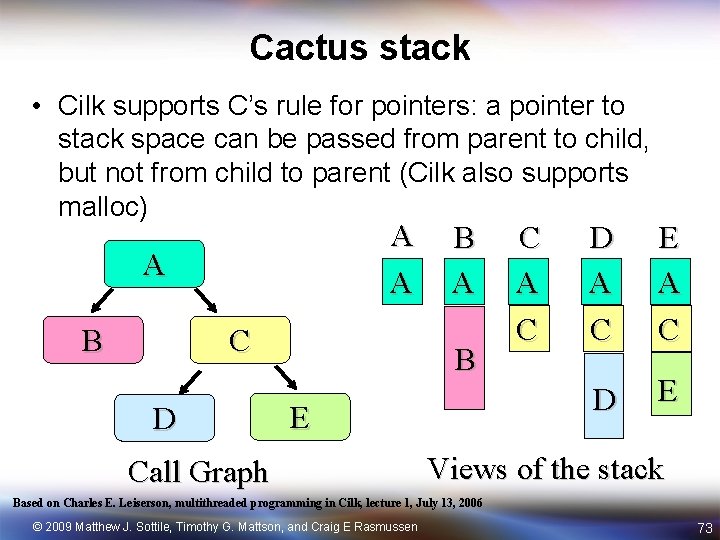

Cilk and OpenMP
• With the task construct in OpenMP 3.0, we can use the Cilk style of programming in OpenMP.
• In the following examples, we show an OpenMP program to count instances of a key in an array. We have two versions of the program:
– Geometric decomposition
– Recursive splitting
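As a minimal sketch of that correspondence, here is the earlier Cilk Fibonacci example rewritten with OpenMP tasks: `task` stands in for `spawn` and `taskwait` for `sync`. The wrapper name `fib_par` is hypothetical. With GCC this needs `-fopenmp`; without it the pragmas are ignored and the code runs as its serial elision.

```c
#include <assert.h>

/* Cilk-style recursive Fibonacci using OpenMP tasks. */
long fib(int n)
{
    long x, y;
    assert(n >= 0);
    if (n < 2)
        return n;
    #pragma omp task shared(x)   /* plays the role of: x = spawn fib(n-1) */
    x = fib(n - 1);
    #pragma omp task shared(y)   /* plays the role of: y = spawn fib(n-2) */
    y = fib(n - 2);
    #pragma omp taskwait         /* plays the role of: sync */
    return x + y;
}

/* One thread creates the root of the task tree; the whole team runs tasks. */
long fib_par(int n)
{
    long result = 0;
    #pragma omp parallel
    {
        #pragma omp single
        result = fib(n);
    }
    return result;
}
```

The `shared` clauses matter: without them each task would write to its own private copy of `x` or `y`, and the parent would never see the result. A practical version would also stop creating tasks below some cutoff, since a task per call is far more overhead than the addition it performs.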

![Count keys Main program define N 131072 int main long aN int i Count keys: Main program #define N 131072 int main() { long a[N]; int i;](https://slidetodoc.com/presentation_image_h2/aa03ed02895085c2151b0a5bc4c32488/image-79.jpg)

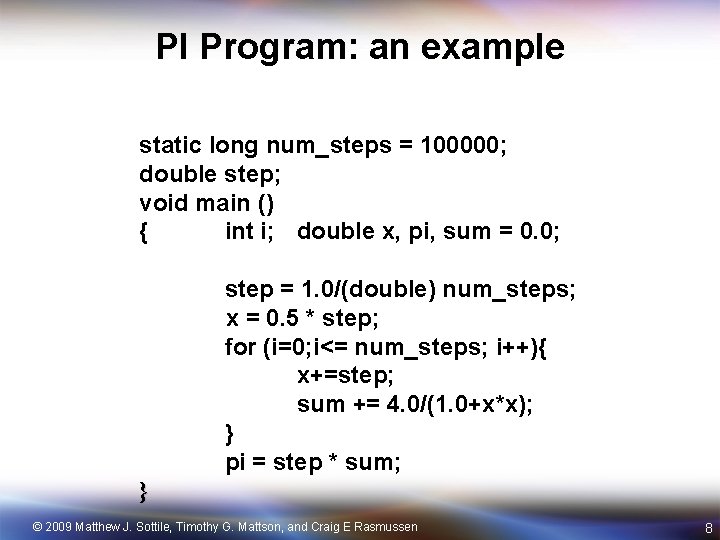

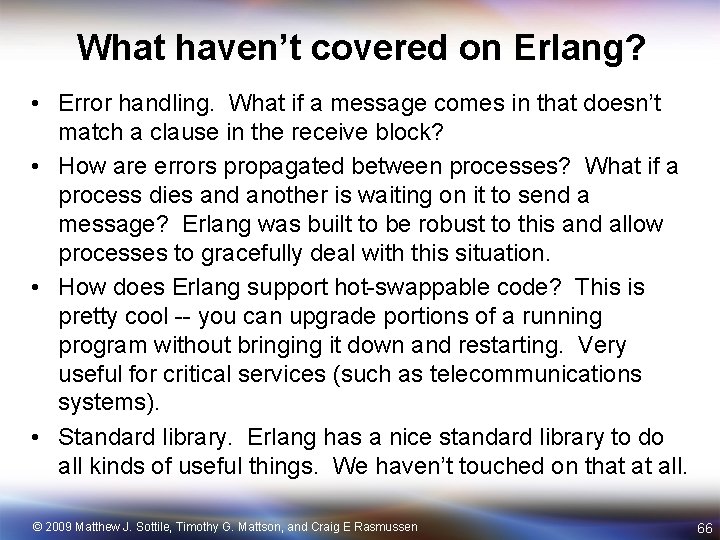

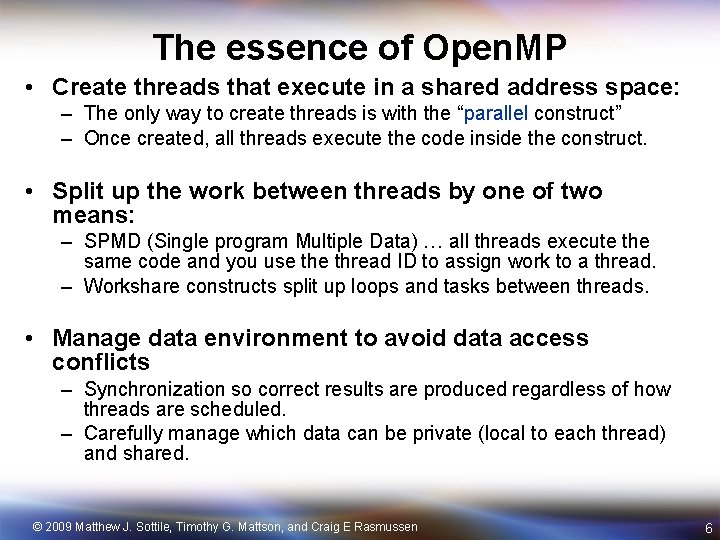

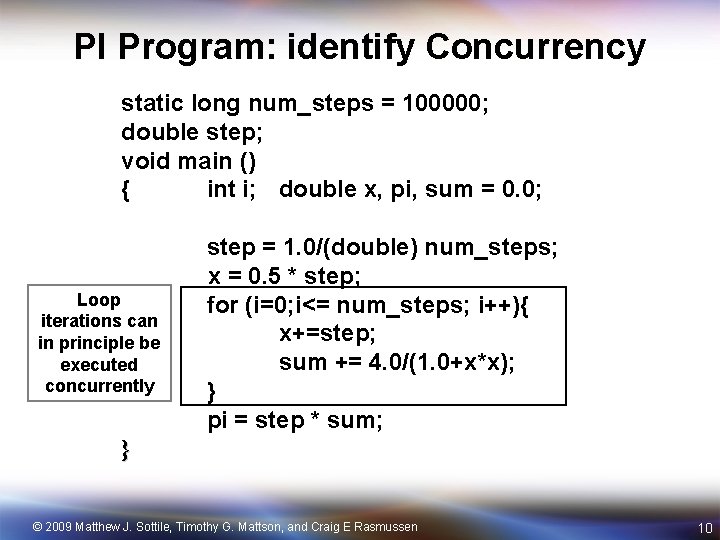

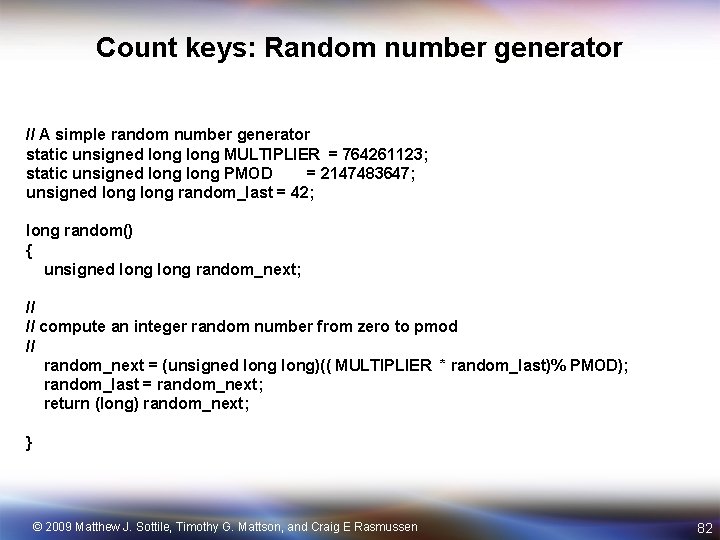

Count keys: Main program

    #define N 131072

    int main()
    {
        long a[N];
        int i;
        long key = 42, nkey=0;

        // fill the array and make sure it has a few instances of the key
        for (i=0; i<N; i++) a[i] = random()%N;
        a[N%43] = key; a[N%73] = key; a[N%3] = key;

        // count key in a with geometric decomposition
        nkey = search_geom(N, a, key);

        // count key in a with recursive splitting
        nkey = search_recur(N, a, key);
    }

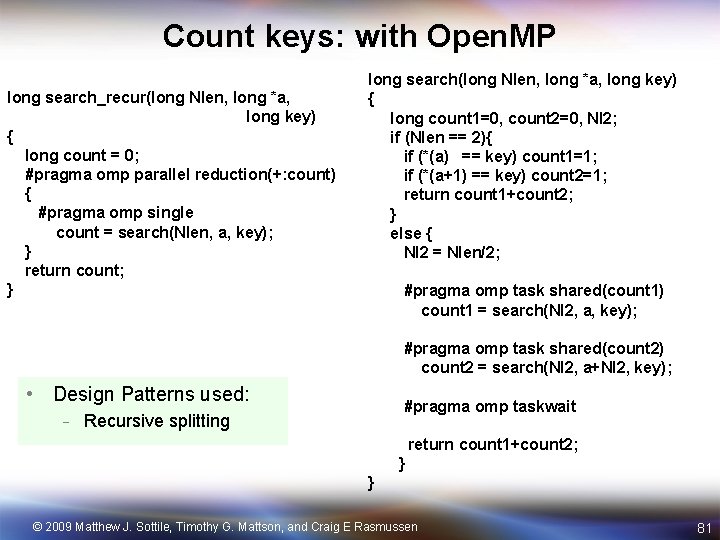

Count keys with OpenMP

    #include <omp.h>

    // geometric decomposition implemented with the SPMD pattern
    long search_geom(long Nlen, long *a, long key)
    {
        long count = 0;
        #pragma omp parallel reduction(+:count)
        {
            int i, num_threads = omp_get_num_threads();
            int ID = omp_get_thread_num();
            // each thread scans its own contiguous block [istart, iend)
            int istart = ID * Nlen/num_threads;
            int iend = (ID+1)*Nlen/num_threads;
            if (ID == (num_threads-1)) iend = Nlen;
            for (i=istart; i<iend; i++)
                if (a[i]==key) count++;
        }
        return count;
    }

• Design Patterns used:
– Geometric Decomposition
– SPMD
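The block-bounds arithmetic in `search_geom` can be checked on its own. The helper below is a hypothetical name introduced only for illustration: it computes the half-open range a given thread would own, using the same integer formula as the slide.

```c
#include <assert.h>

/* Half-open range [*start, *end) owned by thread `id` out of `nthreads`
   when `n` items are split into contiguous blocks. */
void chunk_bounds(long n, int id, int nthreads, long *start, long *end)
{
    assert(n >= 0 && nthreads > 0 && id >= 0 && id < nthreads);
    *start = (long)id * n / nthreads;
    *end   = (long)(id + 1) * n / nthreads;
}
```

For example, 10 items over 3 threads gives the blocks [0, 3), [3, 6), and [6, 10). Because the multiplication happens before the division, the last thread's upper bound already works out to `n`, so the explicit `iend` fix-up on the slide acts as a safeguard rather than a correction.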

Count keys: with OpenMP

    long search_recur(long Nlen, long *a, long key)
    {
        long count = 0;
        #pragma omp parallel reduction(+:count)
        {
            // one thread starts the recursion; the team executes the tasks
            #pragma omp single
            count = search(Nlen, a, key);
        }
        return count;
    }

    // the Nlen == 2 base case assumes Nlen is a power of two (N is 131072 in main)
    long search(long Nlen, long *a, long key)
    {
        long count1=0, count2=0, Nl2;
        if (Nlen == 2){
            if (*(a) == key) count1=1;
            if (*(a+1) == key) count2=1;
            return count1+count2;
        }
        else {
            Nl2 = Nlen/2;
            #pragma omp task shared(count1)
            count1 = search(Nl2, a, key);
            #pragma omp task shared(count2)
            count2 = search(Nl2, a+Nl2, key);
            #pragma omp taskwait
            return count1+count2;
        }
    }

• Design Patterns used:
– Recursive splitting

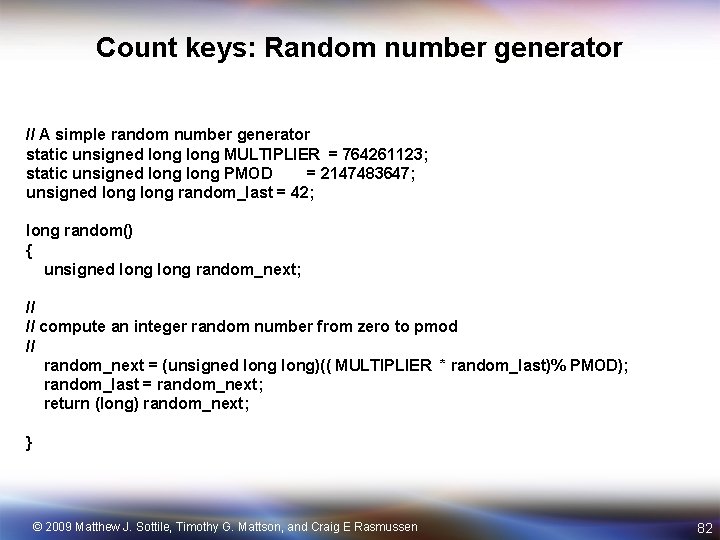

Count keys: Random number generator

    // A simple random number generator
    static unsigned long MULTIPLIER = 764261123;
    static unsigned long PMOD = 2147483647;
    unsigned long random_last = 42;

    long random()
    {
        unsigned long random_next;
        //
        // compute an integer random number from zero to pmod
        //
        random_next = (unsigned long)((MULTIPLIER * random_last) % PMOD);
        random_last = random_next;
        return (long) random_next;
    }