Introduction to Coding Theory Rong-Jaye Chen

![Outline](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-2.jpg)

Outline

- [1] Introduction
- [2] Basic assumptions
- [3] Correcting and detecting error patterns
- [4] Information rate
- [5] The effects of error correction and detection
- [6] Finding the most likely codeword transmitted
- [7] Some basic algebra
- [8] Weight and distance
- [9] Maximum likelihood decoding
- [10] Reliability of MLD
- [11] Error-detecting codes
- [12] Error-correcting codes

![Introduction](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-3.jpg)

[1] Introduction

- Coding theory
  - The study of methods for efficient and accurate transfer of information
  - Detecting and correcting transmission errors
- Information transmission system: Information Source → (k-digit) → Transmitter (Encoder) → (n-digit) → Communication Channel, where noise enters → (n-digit) → Receiver (Decoder) → (k-digit) → Information Sink

![Basic assumptions](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-4.jpg)

[2] Basic assumptions

Definitions

- Digit: 0 or 1 (binary digit)
- Word: a sequence of digits. Example: 0110101
- Binary code: a set of words. Examples: 1. {00, 01, 10, 11}, 2. {0, 01, 001}
- Block code: a code whose words all have the same length. Example: {00, 01, 10, 11}, which has length 2
- Codewords: words belonging to a given code
- |C|: size of a code C (the number of codewords in C)
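As a small illustration of these definitions, the sketch below stores a binary code as a Python set of strings and checks whether it is a block code. The helper names `is_block_code` and `code_size` are illustrative and do not come from the slides.

```python
def is_block_code(code):
    """Return True if every word in the code has the same length."""
    return len({len(word) for word in code}) == 1


def code_size(code):
    """|C|: the number of codewords in C."""
    return len(code)


c1 = {"00", "01", "10", "11"}   # example 1 from the definitions
c2 = {"0", "01", "001"}         # example 2 from the definitions

print(is_block_code(c1), code_size(c1))  # True 4
print(is_block_code(c2), code_size(c2))  # False 3
```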

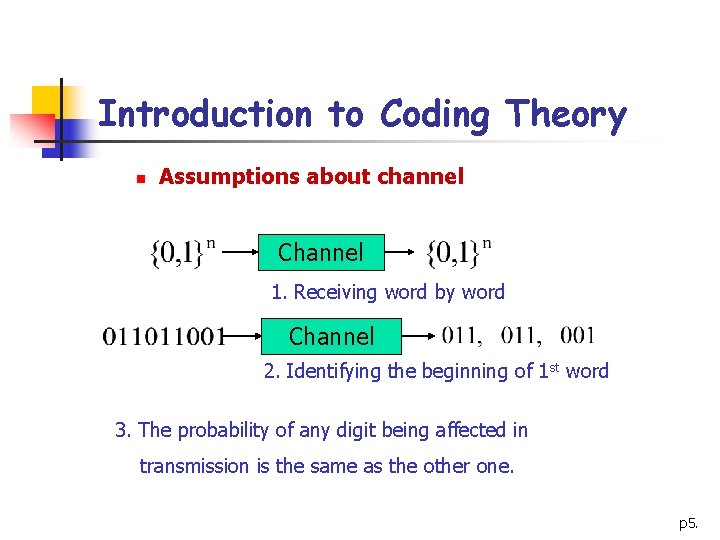

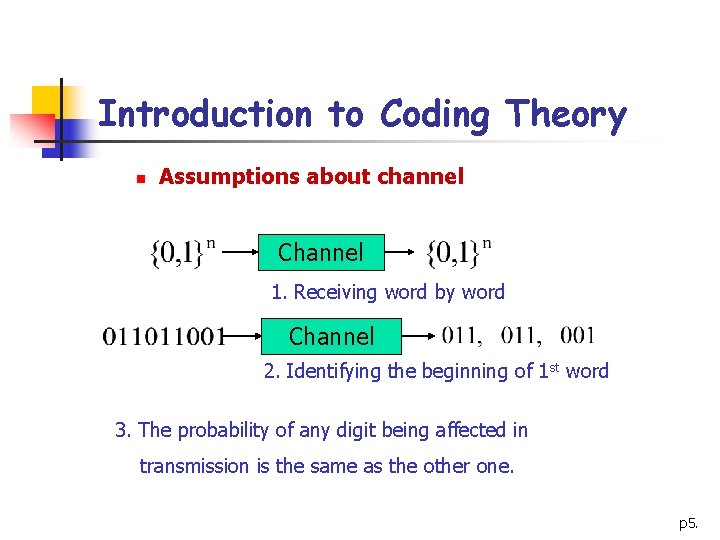

Assumptions about the channel

1. The channel receives words one at a time (word by word).
2. The beginning of the first word can be identified.
3. The probability that a digit is affected in transmission is the same for every digit.

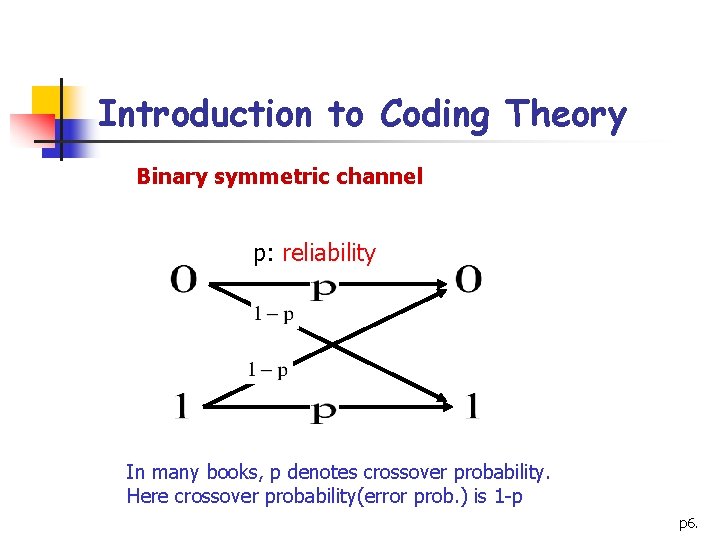

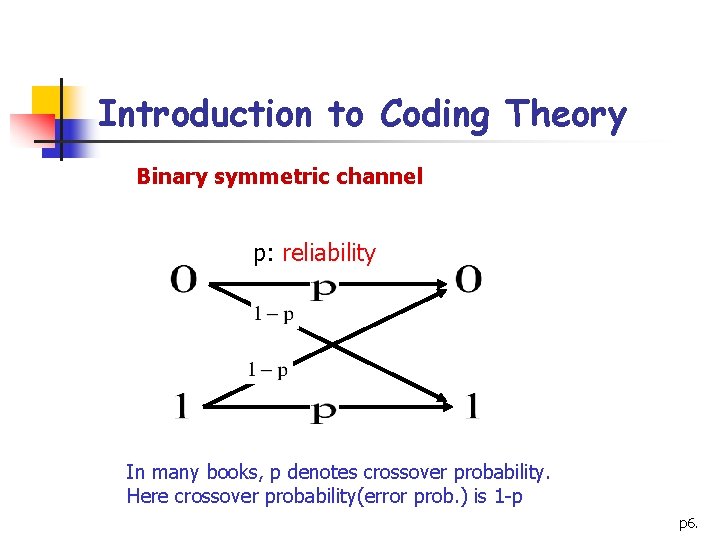

Binary symmetric channel (BSC)

- $p$: reliability, the probability that a digit is received as it was sent
- In many books, $p$ denotes the crossover probability. Here the crossover probability (error probability) is $1 - p$.
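To make the convention concrete, here is a minimal simulation sketch of a BSC in which `p` is the reliability, so each digit is flipped with probability `1 - p`. The function name `transmit` and the fixed seed are assumptions made for this illustration.

```python
import random


def transmit(word, p, rng):
    """Send a binary word through a BSC with reliability p.

    Each digit is received as sent with probability p and flipped
    with probability 1 - p, independently of the other digits.
    """
    received = []
    for digit in word:
        if rng.random() < p:
            received.append(digit)
        else:
            received.append("1" if digit == "0" else "0")
    return "".join(received)


rng = random.Random(0)  # fixed seed so the run is repeatable
print(transmit("0110101", 0.9, rng))
print(transmit("0110101", 1.0, rng))  # p = 1 never flips a digit
```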

![Correcting and detecting error patterns](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-7.jpg)

[3] Correcting and detecting error patterns

Any received word should be corrected to a codeword that requires as few changes as possible.

The slide shows two source → channel → correct diagrams: one for a code that cannot detect any errors, and one for a code with a parity-check digit.

![Information rate](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-8.jpg)

[4] Information rate

- Definition: information rate of code C (formula in the slide image)
- Examples (in the slide image)
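The formula on this slide survives only in the image. Assuming the standard definition, the information rate of a code $C$ of length $n$ is $\frac{1}{n}\log_2 |C|$. The sketch below evaluates it for codes that carry 11 information digits at lengths 11, 12 and 33, the three lengths used in this deck; the function name `information_rate` is illustrative.

```python
import math


def information_rate(size, n):
    """(1/n) * log2(|C|) for a code with `size` codewords of length n."""
    return math.log2(size) / n


print(information_rate(2 ** 11, 11))  # all words of length 11: rate 1
print(information_rate(2 ** 11, 12))  # one parity-check digit: rate 11/12
print(information_rate(2 ** 11, 33))  # 3-repetition: rate 1/3
```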

![The effects of error correction and detection](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-9.jpg)

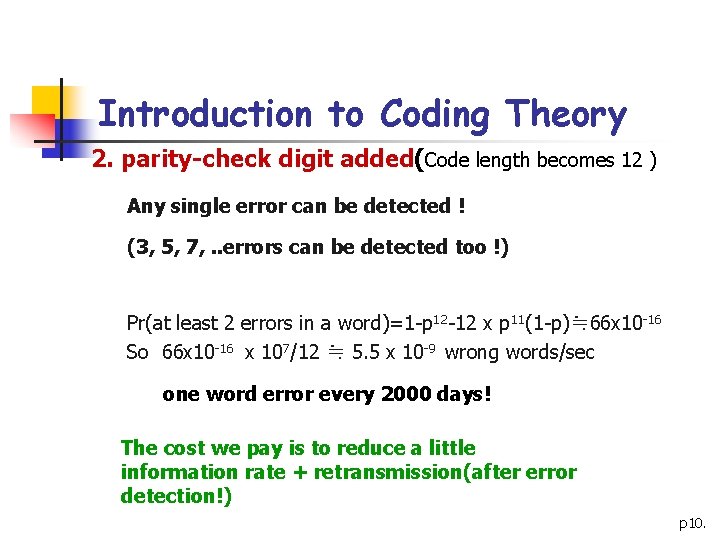

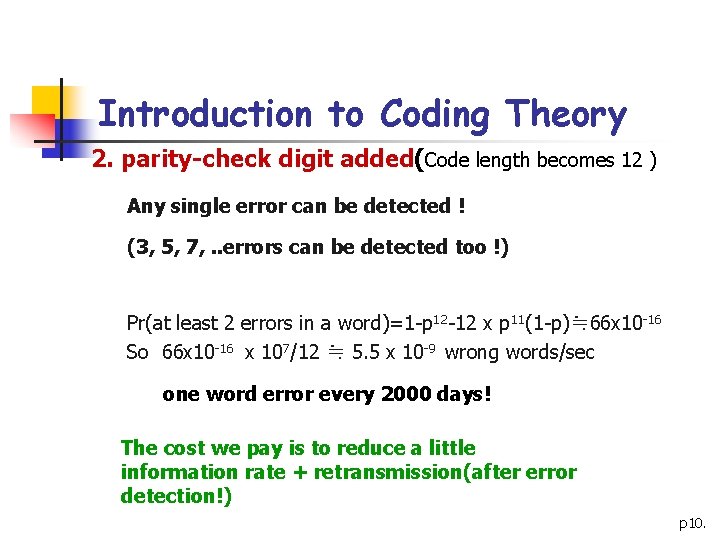

[5] The effects of error correction and detection

1. No error detection and correction

Let $C = \{0, 1\}^{11} = \{00000000000, \ldots, 11111111111\}$, with reliability $p = 1 - 10^{-8}$ and transmission rate $10^7$ digits/sec. Then

$$\Pr(\text{a word is transmitted incorrectly}) = 1 - p^{11} \approx 11 \times 10^{-8}$$

so the channel delivers $11 \times 10^{-8}$ (wrong words/word) $\times\ 10^7/11$ (words/sec) $= 0.1$ wrong words/sec, that is:

- 1 wrong word / 10 sec
- 6 wrong words / min
- 360 wrong words / hr
- 8640 wrong words / day
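The arithmetic on this slide can be checked directly. The sketch below uses exact fractions so that $1 - p^{11}$ does not suffer from floating-point cancellation; the variable names are illustrative.

```python
from fractions import Fraction

p = 1 - Fraction(1, 10 ** 8)    # reliability of the channel
rate = 10 ** 7                  # digits per second
n = 11                          # word length

word_error = 1 - p ** n         # Pr(a word is transmitted incorrectly)
wrong_per_sec = word_error * Fraction(rate, n)

print(float(word_error))             # close to 11e-8
print(float(wrong_per_sec))          # close to 0.1
print(float(wrong_per_sec) * 86400)  # close to 8640 wrong words per day
```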

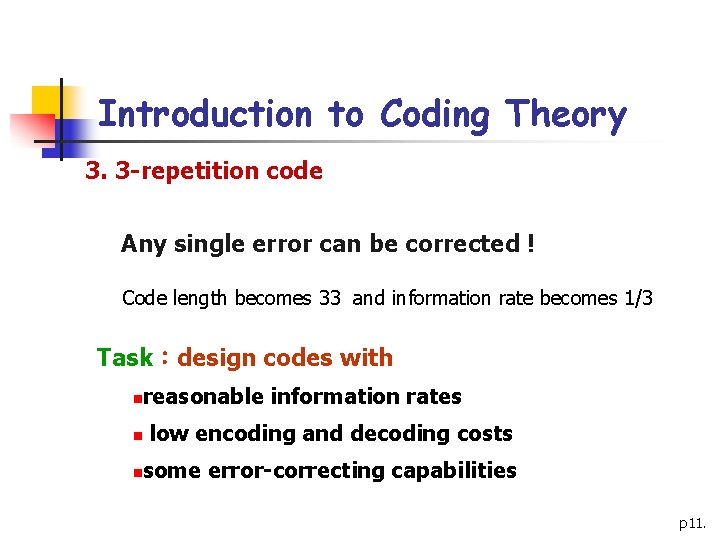

2. Parity-check digit added (code length becomes 12)

Any single error can be detected! (3, 5, 7, ... errors can be detected too!)

$$\Pr(\text{at least 2 errors in a word}) = 1 - p^{12} - 12\,p^{11}(1 - p) \approx 66 \times 10^{-16}$$

So $66 \times 10^{-16} \times 10^7/12 \approx 5.5 \times 10^{-9}$ wrong words/sec: one word error every 2000 days!

The cost we pay is a slightly lower information rate, plus retransmission (after error detection!).
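The same kind of check works for the parity-check case. This sketch assumes the values from the previous slide ($p = 1 - 10^{-8}$, $10^7$ digits/sec) and again uses exact fractions; the variable names are illustrative.

```python
from fractions import Fraction

p = 1 - Fraction(1, 10 ** 8)    # reliability, as on the previous slide
q = 1 - p                       # probability that a digit is flipped
n = 12                          # word length with the parity-check digit

# Pr(at least 2 errors) = 1 - Pr(no error) - Pr(exactly 1 error)
at_least_two = 1 - p ** n - n * p ** (n - 1) * q
wrong_per_sec = at_least_two * Fraction(10 ** 7, n)
days_between = 1 / (wrong_per_sec * 86400)

print(float(at_least_two))   # close to 66e-16
print(float(wrong_per_sec))  # close to 5.5e-9
print(float(days_between))   # roughly 2000 days
```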

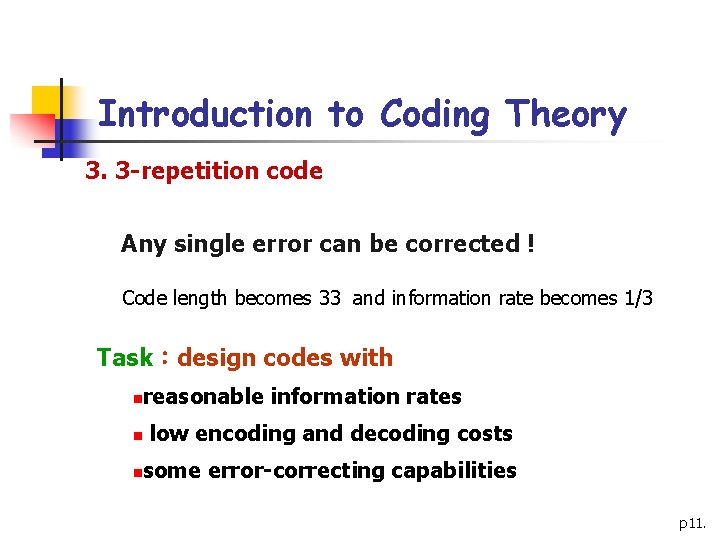

3. 3-repetition code

- Any single error can be corrected!
- Code length becomes 33 and the information rate becomes 1/3.

Task: design codes with

- reasonable information rates
- low encoding and decoding costs
- some error-correcting capabilities
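A minimal sketch of a 3-repetition scheme follows. It assumes the whole 11-digit word is sent three times in a row and decoded by majority vote in each position; the slide does not specify the layout, and the names `encode` and `decode` are illustrative.

```python
def encode(word):
    """Repeat the whole word three times (one possible layout)."""
    return word * 3


def decode(received):
    """Majority vote over the three copies of each digit."""
    n = len(received) // 3
    copies = [received[i * n:(i + 1) * n] for i in range(3)]
    return "".join(
        "1" if sum(c[j] == "1" for c in copies) >= 2 else "0"
        for j in range(n)
    )


sent = encode("01101001011")   # 11 digits become 33 digits
garbled = "1" + sent[1:]       # flip the first digit (it was 0)
print(len(sent))               # 33
print(decode(garbled))         # 01101001011
```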

![Finding the most likely codeword transmitted](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-12.jpg)

[6] Finding the most likely codeword transmitted

BSC channel

- $p$: reliability
- $d$: number of digits incorrectly transmitted
- $n$: code length

Example: code length = 5

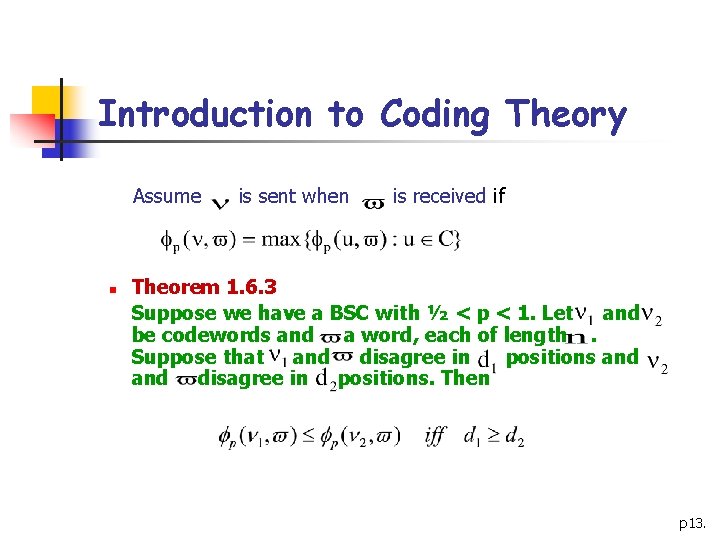

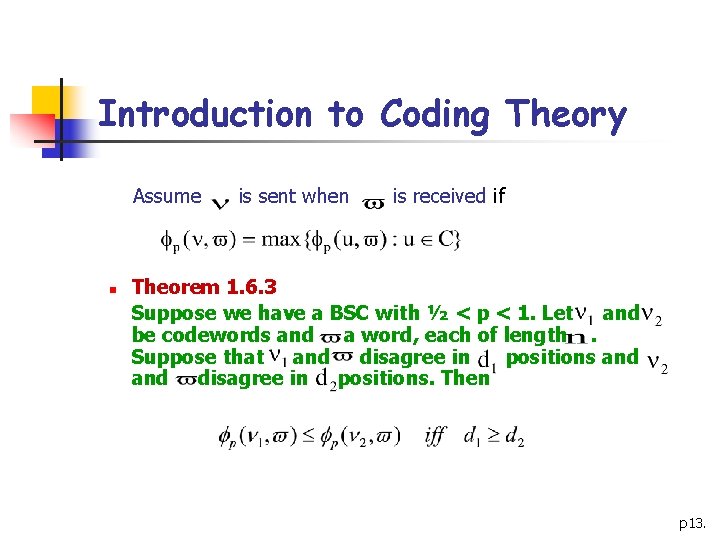

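Assume $v$ is sent when $w$ is received if

$$\phi_p(v, w) = \max\{\phi_p(u, w) : u \in C\}$$

where $\phi_p(v, w) = p^{n-d}(1 - p)^d$ is the probability that $w$ is received when $v$ is sent and the two words disagree in $d$ positions.

Theorem 1.6.3: Suppose we have a BSC with $\tfrac{1}{2} < p < 1$. Let $v_1$ and $v_2$ be codewords and $w$ a word, each of length $n$. Suppose that $v_1$ and $w$ disagree in $d_1$ positions and $v_2$ and $w$ disagree in $d_2$ positions. Then

$$\phi_p(v_1, w) \le \phi_p(v_2, w) \iff d_1 \ge d_2.$$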

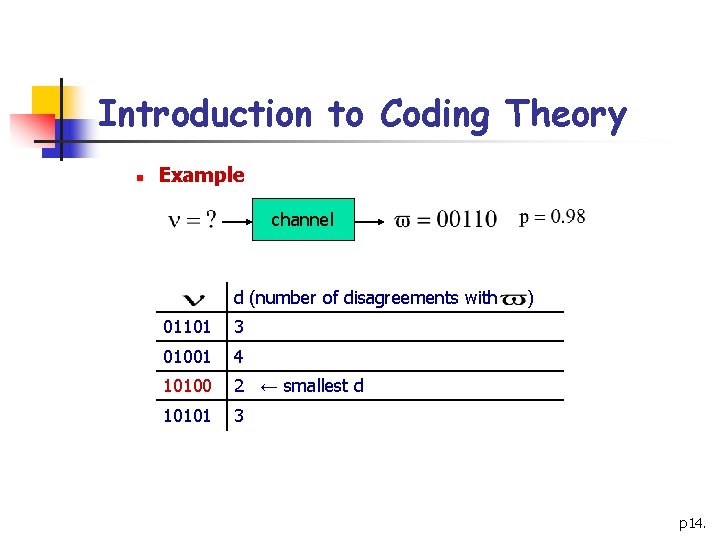

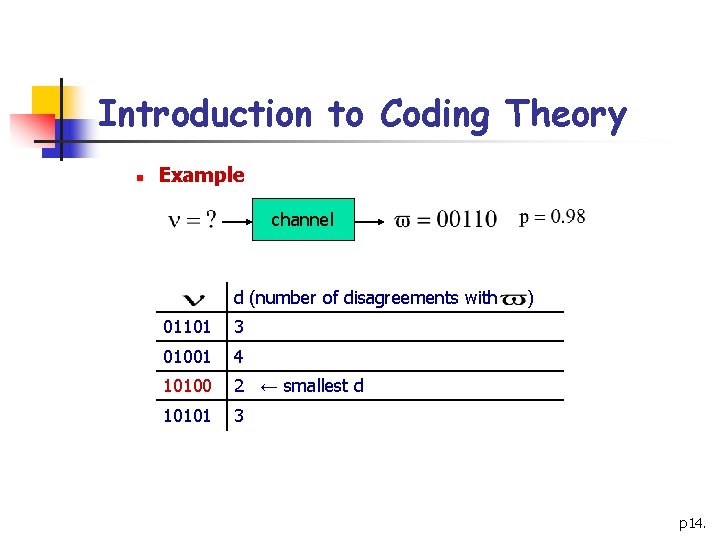

Example

| Codeword v | d (number of disagreements with the received word w) |
| --- | --- |
| 01101 | 3 |
| 01001 | 4 |
| 10100 | 2 ← smallest d |
| 10101 | 3 |
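The selection rule in this example can be sketched as follows. The received word is not recoverable from the text, so `w = "00110"` is an assumed value chosen because it reproduces the d column; `p = 0.98` is also an assumption, and any reliability with 1/2 < p < 1 gives the same winner. The likelihood used is the standard BSC expression $p^{n-d}(1-p)^d$.

```python
def disagreements(v, w):
    """Number of positions in which v and w differ."""
    return sum(a != b for a, b in zip(v, w))


def likelihood(v, w, p):
    """p^(n-d) * (1-p)^d: probability that w is received when v is sent."""
    d = disagreements(v, w)
    return p ** (len(v) - d) * (1 - p) ** d


code = ["01101", "01001", "10100", "10101"]
w = "00110"   # assumed received word; it reproduces the d column
p = 0.98      # assumed reliability

for v in code:
    print(v, disagreements(v, w), likelihood(v, w, p))

best = max(code, key=lambda v: likelihood(v, w, p))
print(best)   # 10100, the codeword with the smallest d
```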

![Some basic algebra](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-15.jpg)

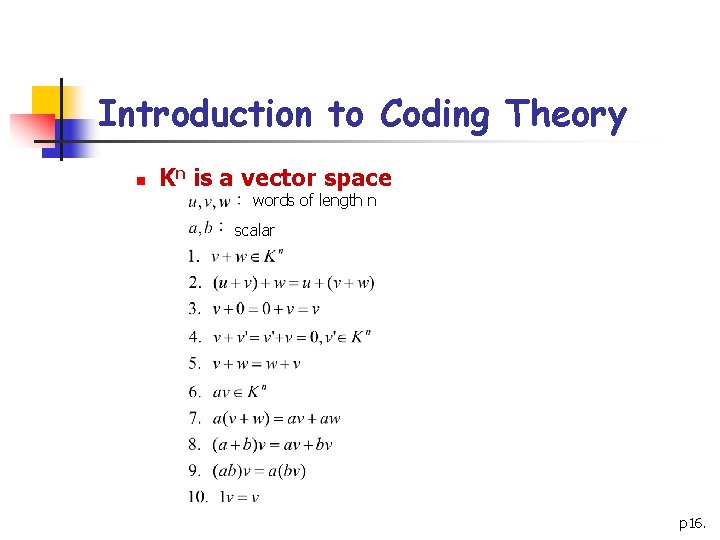

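[7] Some basic algebra

- $K = \{0, 1\}$, with addition and multiplication taken modulo 2 (the tables are in the slide image)
- $K^n$: the set of all binary words of length $n$
- Addition and scalar multiplication on $K^n$ are defined componentwise
- $\mathbf{0}$: the zero word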
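A short sketch of these operations, assuming words are stored as strings of 0s and 1s and that arithmetic on digits is modulo 2. The function names and the sample words are illustrative and are not taken from the slides.

```python
def add_words(v, w):
    """Componentwise addition modulo 2 of two words of equal length."""
    if len(v) != len(w):
        raise ValueError("words must have the same length")
    return "".join(str((int(a) + int(b)) % 2) for a, b in zip(v, w))


def scalar_mul(a, v):
    """Multiply every digit of v by the scalar a (0 or 1)."""
    return "".join(str(a * int(digit)) for digit in v)


v, w = "01101", "11001"   # illustrative words
print(add_words(v, w))    # 10100
print(add_words(v, v))    # 00000, every word is its own additive inverse
print(scalar_mul(0, v))   # 00000
print(scalar_mul(1, v))   # 01101
```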

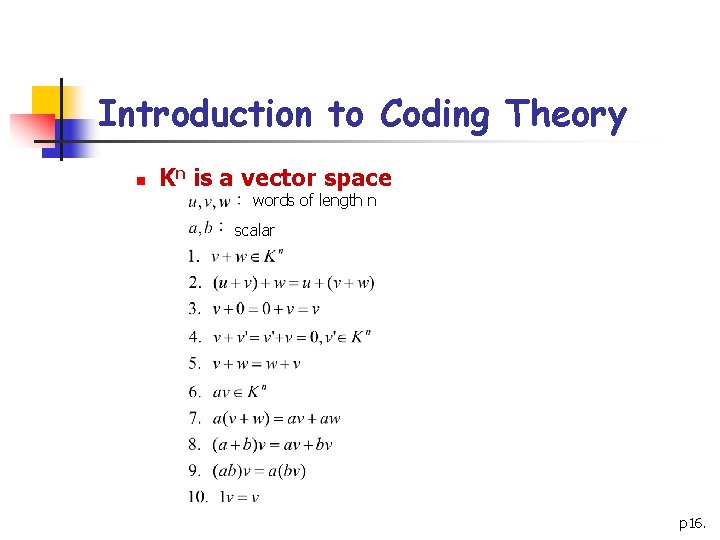

$K^n$ is a vector space: the words of length $n$ are the vectors and the digits 0 and 1 are the scalars.

![Weight and distance](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-17.jpg)

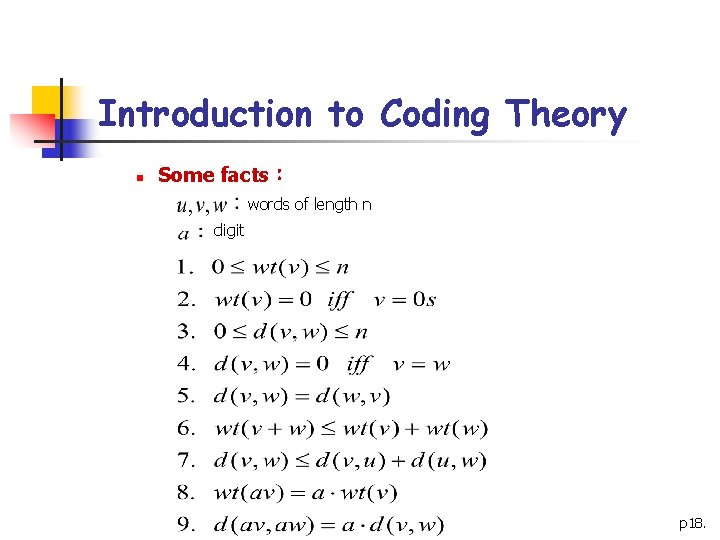

[8] Weight and distance

- Hamming weight $wt(v)$: the number of times the digit 1 occurs in $v$ (example in the slide image)
- Hamming distance $d(v, w)$: the number of positions in which $v$ and $w$ disagree (example in the slide image)
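Since the slide's own examples are only in the image, the sketch below uses two illustrative words. It also checks the standard identity $d(v, w) = wt(v + w)$, where addition is componentwise modulo 2; the function names are assumptions.

```python
def wt(v):
    """Hamming weight: the number of 1s in v."""
    return v.count("1")


def dist(v, w):
    """Hamming distance: the number of positions where v and w disagree."""
    return sum(a != b for a, b in zip(v, w))


def add_words(v, w):
    """Componentwise addition modulo 2."""
    return "".join("1" if a != b else "0" for a, b in zip(v, w))


v, w = "110101", "101100"   # illustrative words, not from the slides
print(wt(v))                # 4
print(dist(v, w))           # 3
print(wt(add_words(v, w)))  # 3, the same as dist(v, w)
```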

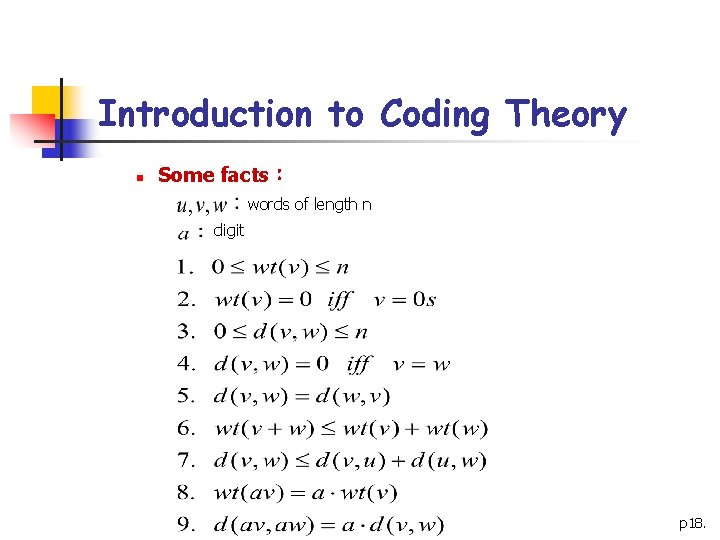

Some facts about weight and distance (listed in the slide image above), stated for words of length $n$ and a digit.

![Maximum likelihood decoding](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-19.jpg)

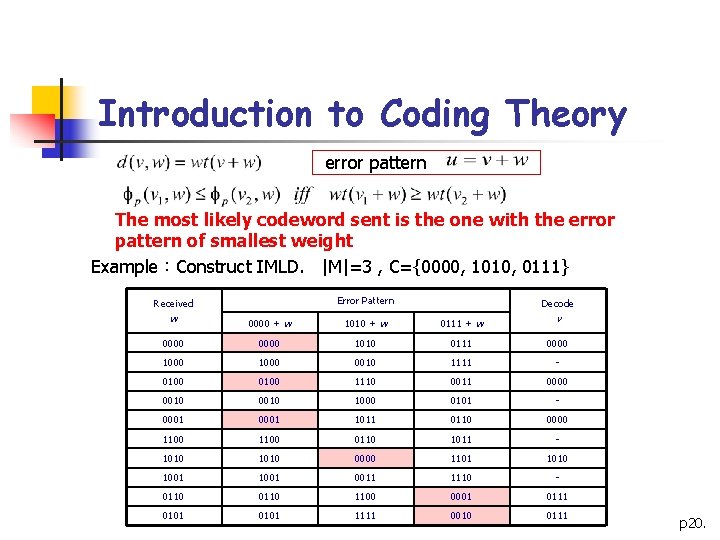

[9] Maximum likelihood decoding

Source string $x$ ($k$ digits) → codeword $v$ → channel → received word $w = v + u$ → decode, where $u$ is the error pattern.

- CMLD: Complete Maximum Likelihood Decoding
  - If exactly one word $v$ in $C$ is closest to $w$, decode $w$ to $v$.
  - If several words in $C$ are closest to $w$, arbitrarily select one of them.
- IMLD: Incomplete Maximum Likelihood Decoding
  - If exactly one word $v$ in $C$ is closest to $w$, decode $w$ to $v$.
  - If several words in $C$ are closest to $w$, ask for retransmission.

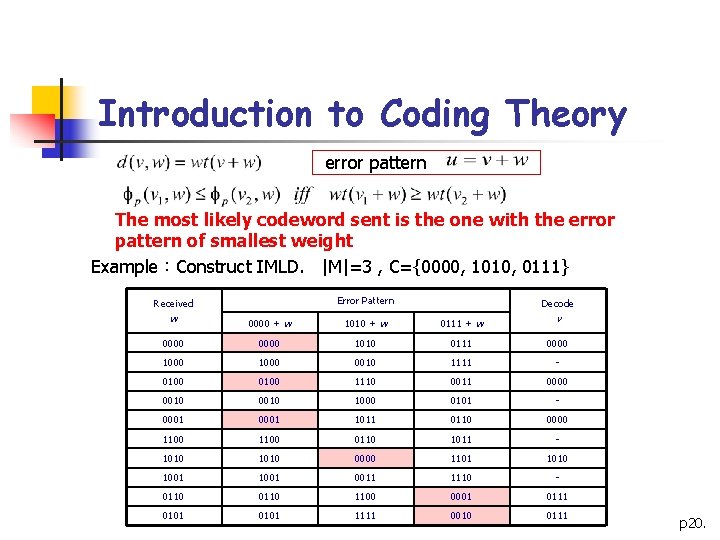

The most likely codeword sent is the one with the error pattern of smallest weight.

Example: Construct IMLD. $|M| = 3$, $C = \{0000, 1010, 0111\}$

| Received w | Error pattern 0000 + w | Error pattern 1010 + w | Error pattern 0111 + w | Decode v |
| --- | --- | --- | --- | --- |
| 0000 | 0000 | 1010 | 0111 | 0000 |
| 1000 | 1000 | 0010 | 1111 | - |
| 0100 | 0100 | 1110 | 0011 | 0000 |
| 0010 | 0010 | 1000 | 0101 | - |
| 0001 | 0001 | 1011 | 0110 | 0000 |
| 1100 | 1100 | 0110 | 1011 | - |
| 1010 | 1010 | 0000 | 1101 | 1010 |
| 1001 | 1001 | 0011 | 1110 | - |
| 0110 | 0110 | 1100 | 0001 | 0111 |
| 0101 | 0101 | 1111 | 0010 | 0111 |
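The table can be generated mechanically. The sketch below is one possible IMLD routine for this example: it forms the error pattern $v + w$ for each codeword and decodes to the codeword whose pattern has the smallest weight, returning `None` (shown as `-`) on a tie. The function names are illustrative.

```python
def add_words(v, w):
    """Componentwise addition modulo 2."""
    return "".join("1" if a != b else "0" for a, b in zip(v, w))


def imld(code, w):
    """Return the unique closest codeword, or None to request retransmission."""
    weights = {v: add_words(v, w).count("1") for v in code}
    smallest = min(weights.values())
    closest = [v for v in code if weights[v] == smallest]
    return closest[0] if len(closest) == 1 else None


code = ["0000", "1010", "0111"]
for w in ["0000", "1000", "0100", "1100", "0101"]:
    patterns = [add_words(v, w) for v in code]
    print(w, patterns, imld(code, w) or "-")
```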

![Reliability of MLD](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-21.jpg)

[10] Reliability of MLD

- The reliability is the probability that, if $v$ is sent over a BSC of reliability $p$, IMLD correctly concludes that $v$ was sent.
- The higher this probability, the more reliably the word is decoded.
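One way to compute this probability, assuming the usual BSC likelihood $p^{n-d}(1-p)^d$, is to sum it over every received word that IMLD decodes to $v$. The sketch below does this by brute force for the code used in the IMLD example; `p = 0.9` and the function names are assumptions for illustration.

```python
from itertools import product


def dist(v, w):
    """Hamming distance between two words of equal length."""
    return sum(a != b for a, b in zip(v, w))


def imld(code, w):
    """Unique closest codeword, or None when there is a tie."""
    smallest = min(dist(v, w) for v in code)
    closest = [v for v in code if dist(v, w) == smallest]
    return closest[0] if len(closest) == 1 else None


def reliability(code, v, p):
    """Probability that IMLD returns v when v is sent over a BSC."""
    n = len(v)
    total = 0.0
    for bits in product("01", repeat=n):
        w = "".join(bits)
        if imld(code, w) == v:
            d = dist(v, w)
            total += p ** (n - d) * (1 - p) ** d
    return total


code = ["0000", "1010", "0111"]
p = 0.9  # assumed reliability of the channel
for v in code:
    print(v, reliability(code, v, p))
```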

![Error-detecting codes](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-22.jpg)

[11] Error-detecting codes

For a codeword $v$ and an error pattern $u$: if $v + u$ is a codeword, the code can't detect $u$; if $v + u$ is not a codeword, it can.

Example: $C = \{000, 111\}$

| Error pattern u | v = 000 | v = 111 |
| --- | --- | --- |
| 000 | 000 | 111 |
| 100 | 100 | 011 |
| 010 | 010 | 101 |
| 001 | 001 | 110 |
| 110 | 110 | 001 |
| 101 | 101 | 010 |
| 011 | 011 | 100 |
| 111 | 111 | 000 |

C can detect the error patterns 100, 010, 001, 110, 101 and 011, but can't detect 111.
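The example can be checked with a short sketch. It assumes the usual criterion that an error pattern $u$ is detected when $v + u$ is not a codeword for any codeword $v$; the function names are illustrative. The zero pattern 000 is reported as undetectable simply because it introduces no error.

```python
def add_words(v, w):
    """Componentwise addition modulo 2."""
    return "".join("1" if a != b else "0" for a, b in zip(v, w))


def detects(code, u):
    """True if v + u is not a codeword for every codeword v."""
    codewords = set(code)
    return all(add_words(v, u) not in codewords for v in code)


code = ["000", "111"]
for u in ["000", "100", "010", "001", "110", "101", "011", "111"]:
    print(u, "can detect" if detects(code, u) else "can't detect")
```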

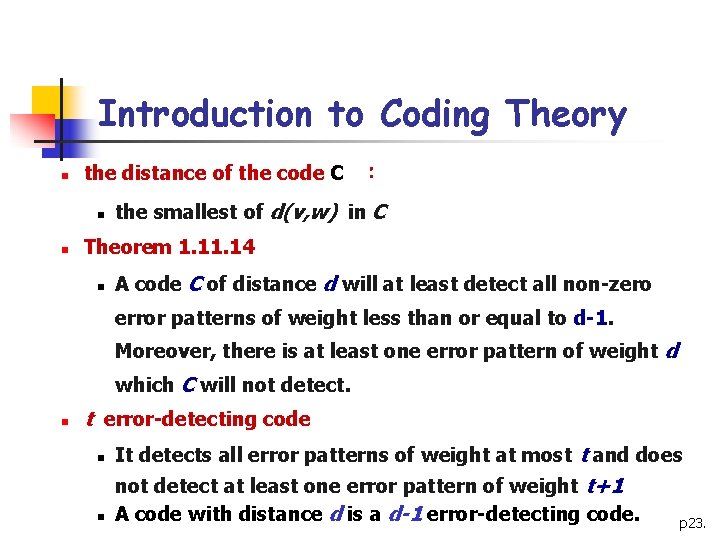

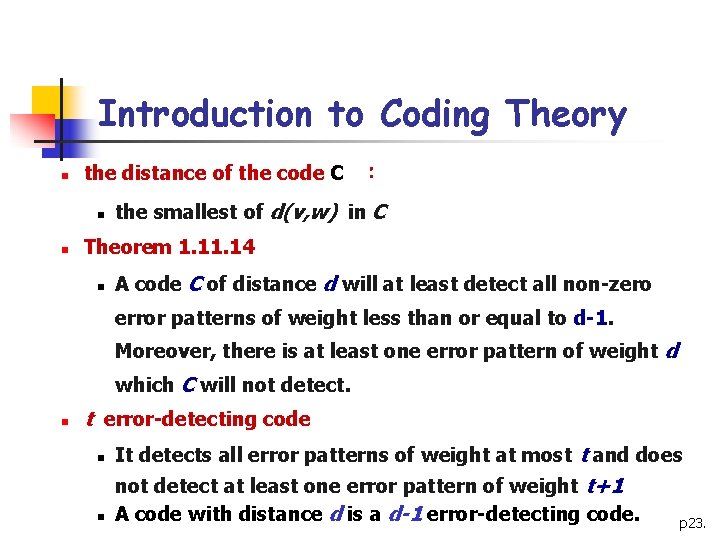

- The distance of the code $C$: the smallest of $d(v, w)$ over all pairs of distinct codewords $v$, $w$ in $C$.
- Theorem 1.14: A code $C$ of distance $d$ will at least detect all non-zero error patterns of weight less than or equal to $d - 1$. Moreover, there is at least one error pattern of weight $d$ which $C$ will not detect.
- $t$ error-detecting code: it detects all error patterns of weight at most $t$ and does not detect at least one error pattern of weight $t + 1$.
- A code with distance $d$ is a $(d - 1)$ error-detecting code.
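The theorem can be checked by brute force on a small code. The sketch below computes the distance of $C = \{000, 111\}$ as the minimum over pairs of distinct codewords, then tests every error pattern of length 3; the function names are illustrative.

```python
from itertools import combinations, product


def dist(v, w):
    """Hamming distance between two words of equal length."""
    return sum(a != b for a, b in zip(v, w))


def add_words(v, w):
    """Componentwise addition modulo 2."""
    return "".join("1" if a != b else "0" for a, b in zip(v, w))


def code_distance(code):
    """Smallest distance between two distinct codewords."""
    return min(dist(v, w) for v, w in combinations(code, 2))


def detects(code, u):
    """True if v + u is not a codeword for every codeword v."""
    codewords = set(code)
    return all(add_words(v, u) not in codewords for v in code)


code = ["000", "111"]
d = code_distance(code)
patterns = ["".join(bits) for bits in product("01", repeat=3)]
low_weight = [u for u in patterns if 1 <= u.count("1") <= d - 1]
missed = [u for u in patterns if u.count("1") == d and not detects(code, u)]

print(d)                                           # 3
print(all(detects(code, u) for u in low_weight))   # True
print(missed)                                      # ['111']
```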

![Error-correcting codes](https://slidetodoc.com/presentation_image_h/82160ca36d9169b423f975a6c9d01cb0/image-24.jpg)

[12] Error-correcting codes

- A code $C$ can correct the error pattern $u$ if, for all $v$ in $C$, $v + u$ is closer to $v$ than to any other word in $C$.
- Theorem 1.12.9: A code of distance $d$ will correct all error patterns of weight less than or equal to $\lfloor (d - 1)/2 \rfloor$. Moreover, there is at least one error pattern of weight $1 + \lfloor (d - 1)/2 \rfloor$ which $C$ will not correct.
- $t$ error-correcting code: it corrects all error patterns of weight at most $t$ and does not correct at least one error pattern of weight $t + 1$.
- A code of distance $d$ is a $\lfloor (d - 1)/2 \rfloor$ error-correcting code.

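| Received w | Error pattern 000 + w | Error pattern 111 + w | Decode v |
| --- | --- | --- | --- |
| 000 | 000* | 111 | 000 |
| 100 | 100* | 011 | 000 |
| 010 | 010* | 101 | 000 |
| 001 | 001* | 110 | 000 |
| 110 | 110 | 001* | 111 |
| 101 | 101 | 010* | 111 |
| 011 | 011 | 100* | 111 |
| 111 | 111 | 000* | 111 |

An asterisk marks the error pattern of smaller weight in each row. C corrects error patterns 000, 100, 010, 001.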
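The set of corrected error patterns can be recomputed with a brute-force sketch. It assumes the criterion that $u$ is corrected when, for every codeword $v$, the word $v + u$ is strictly closer to $v$ than to any other codeword; the function names are illustrative.

```python
from itertools import product


def dist(v, w):
    """Hamming distance between two words of equal length."""
    return sum(a != b for a, b in zip(v, w))


def add_words(v, w):
    """Componentwise addition modulo 2."""
    return "".join("1" if a != b else "0" for a, b in zip(v, w))


def corrects(code, u):
    """True if v + u is strictly closer to v than to any other codeword."""
    for v in code:
        w = add_words(v, u)
        if any(dist(w, c) <= dist(w, v) for c in code if c != v):
            return False
    return True


code = ["000", "111"]
patterns = ["".join(bits) for bits in product("01", repeat=3)]
corrected = [u for u in patterns if corrects(code, u)]
print(corrected)  # ['000', '001', '010', '100']
```

For this code the distance is 3, so the largest corrected weight, 1, agrees with $\lfloor (3 - 1)/2 \rfloor$.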