
Introducing Natural Language Program Analysis

Lori Pollock, K. Vijay-Shanker, David Shepherd, Emily Hill, Zachary P. Fry, Kishen Maloor

NLPA Research Team Leaders
- K. Vijay-Shanker, “The Umpire”
- Lori Pollock, “Team Captain”

Problem
- Modern software is large and complex
- Software development tools are needed

(Diagram: object-oriented class hierarchy)

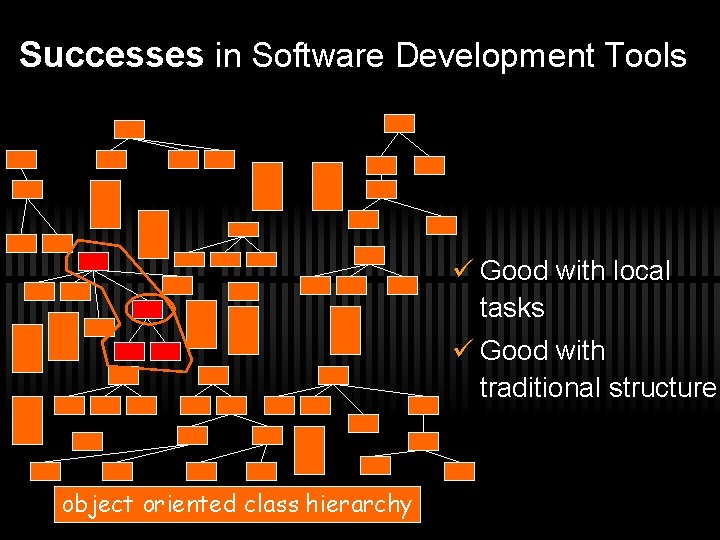

Successes in Software Development Tools
- Good with local tasks
- Good with traditional structure

(Diagram: object-oriented class hierarchy)

Issues in Software Development Tools
- Scattered tasks are difficult
- Programmers use more than traditional program structure

(Diagram: object-oriented class hierarchy)

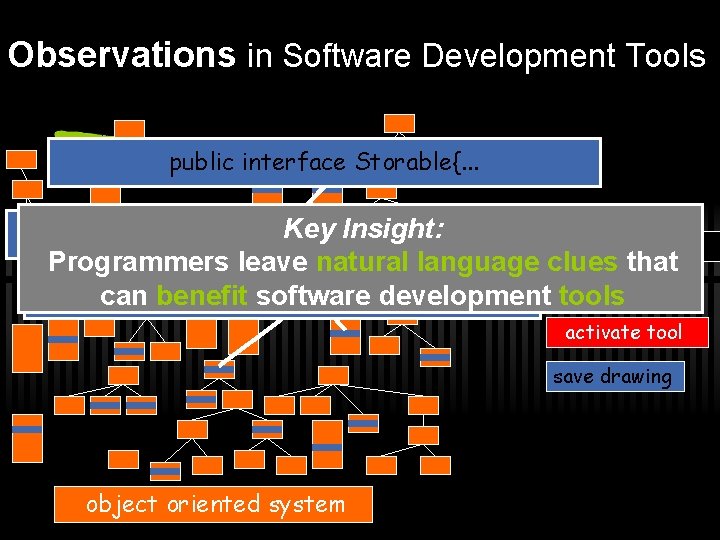

Observations in Software Development Tools
- Key Insight: Programmers leave natural language clues that can benefit software development tools
- Code fragments shown on the slide: `public interface Storable{...`, `//Store the fields in a file...`, `public void Circle.save()`

(Diagram: object-oriented system with the tasks "undo action", "update drawing", "activate tool", and "save drawing")

Studies on choosing identifiers
- Carla, the compiler writer: “I don’t care about names. So, I could use x, y, z.”
- Pete, the programmer: “But, no one will understand my code.”
- Impact of human cognition on names [Liblit et al. PPIG 06]
  - Metaphors, morphology, scope, part of speech hints
  - Hints for understanding code
- Analysis of function identifiers [Caprile and Tonella WCRE 99]
  - Lexical, syntactic, semantic
  - Use for software tools: metrics, traceability, program understanding


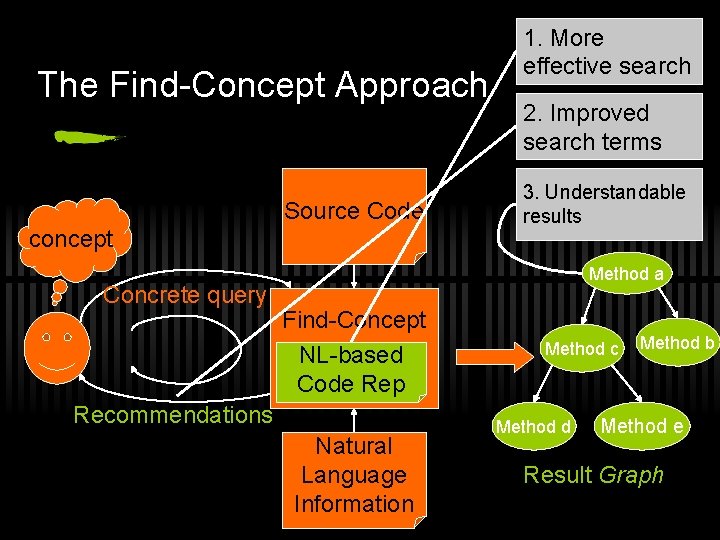

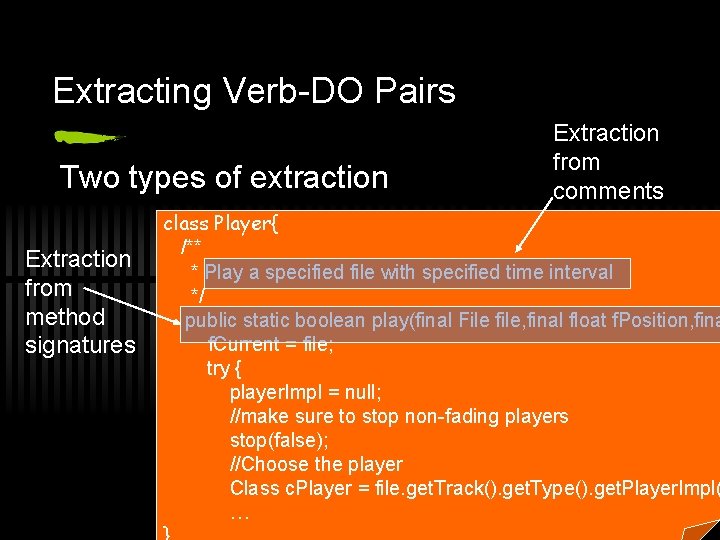

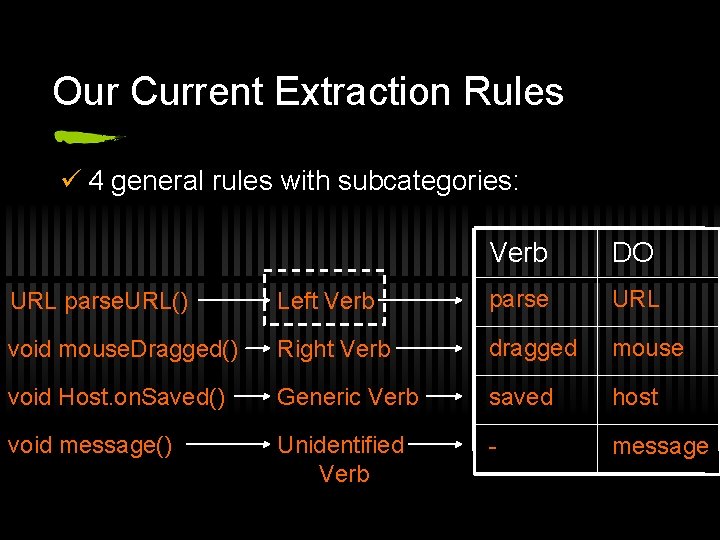

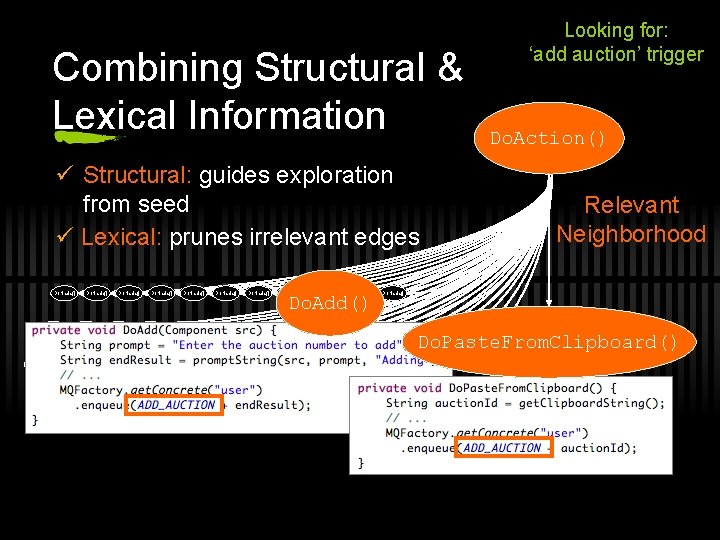

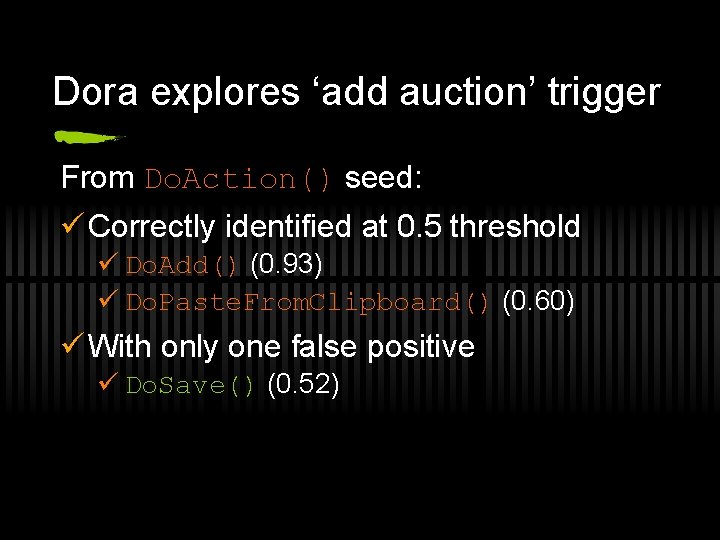

Our Research Path
- Motivated usefulness of exploiting natural language (NL) clues in tools [MACS 05, LATE 05]
- Developed extraction process and an NL-based program representation [AOSD 06]
- Created and evaluated a concern location tool and an aspect miner with NL-based analysis [ASE 05, AOSD 07, PASTE 07]

Name: David C. Shepherd
- Nickname: Leadoff Hitter
- Current Position: Ph.D., May 30, 2007
- Future Position: Postdoc, Gail Murphy

| Stats | 2002 | 2007 |
| --- | --- | --- |
| coffees/day | 0.1 | 2.2 |
| red marks/paper draft | 500 | 100 |

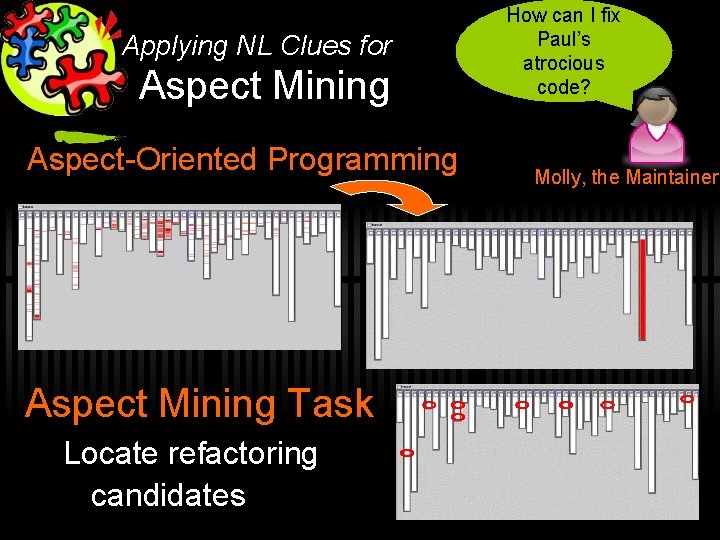

Applying NL Clues for Aspect Mining
- Aspect-Oriented Programming
- Aspect Mining Task: locate refactoring candidates
- Molly, the Maintainer: “How can I fix Paul’s atrocious code?”


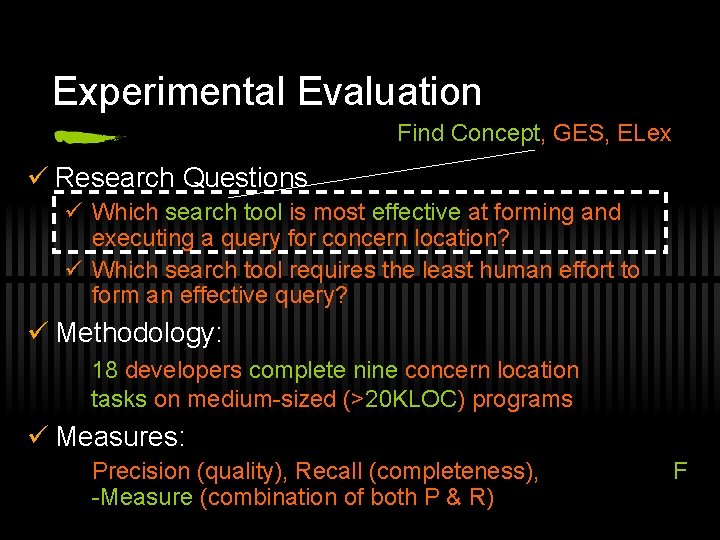

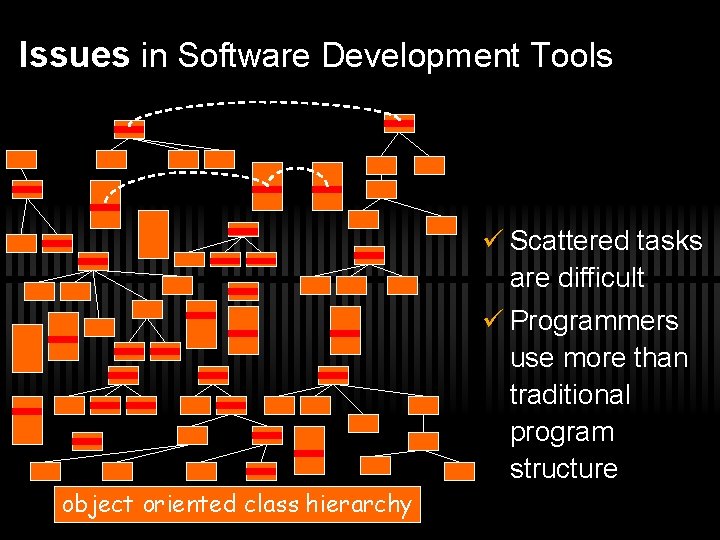

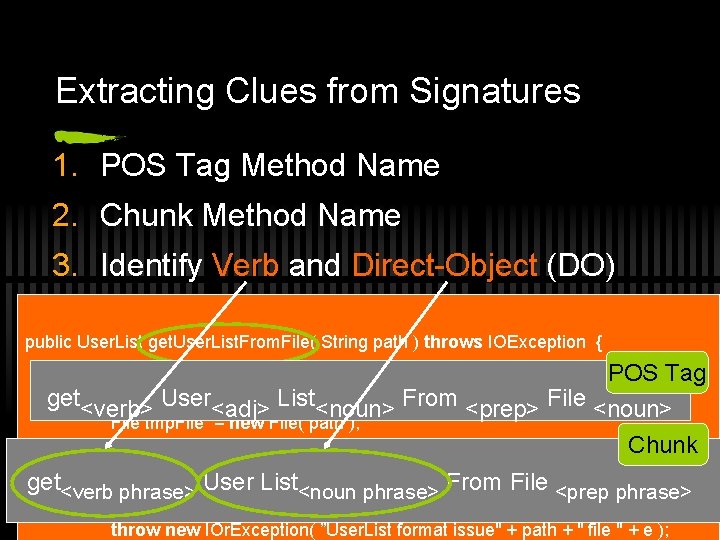

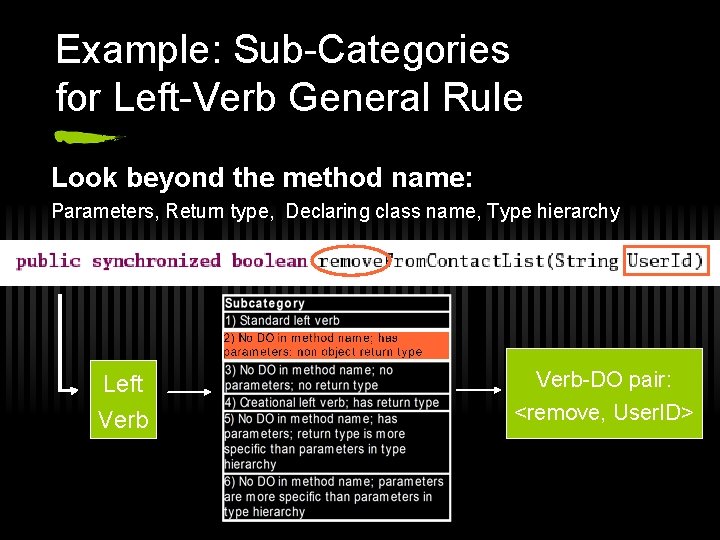

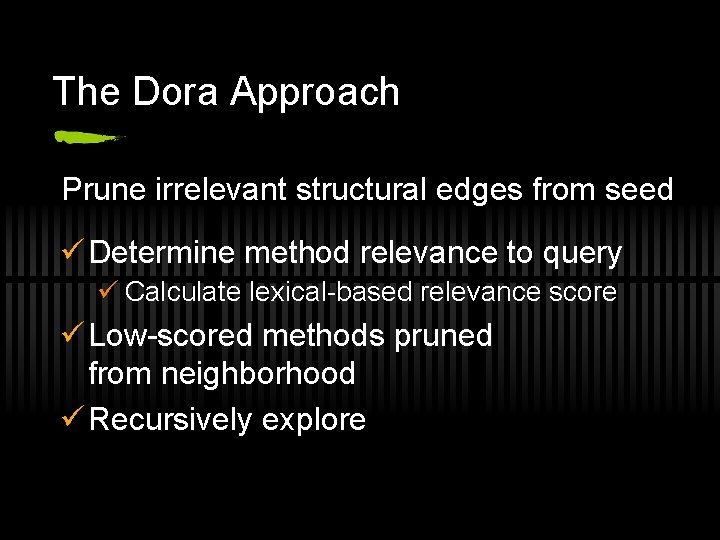

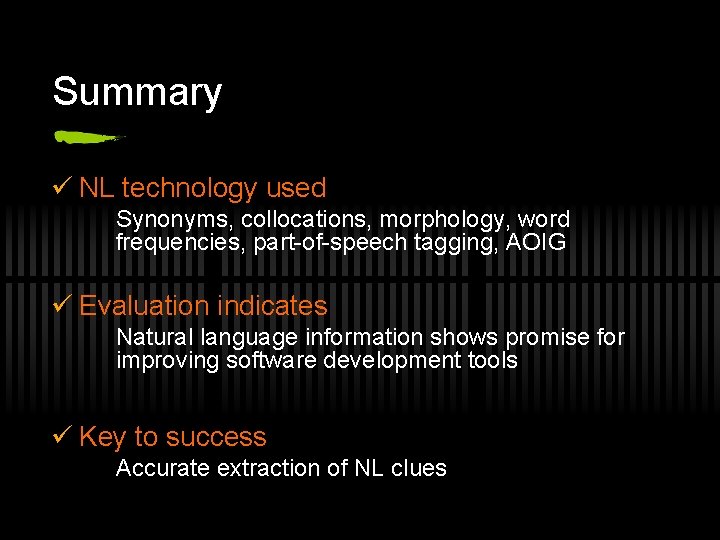

Timna: An Aspect Mining Framework [ASE 05]
- Uses program analysis clues for mining
- Combines clues using machine learning
- Evaluated vs. Fan-In on Precision (quality) and Recall (completeness)

| Tool | P | R |
| --- | --- | --- |
| Fan-In | 37 | 2 |
| Timna | 62 | 60 |

Integrating NL Clues into Timna
- iTimna (Timna with NL)
  - Integrates natural language clues
  - Example: opposite verbs (open and close)

| Tool | P | R |
| --- | --- | --- |
| Fan-In | 37 | 2 |
| Timna | 62 | 60 |
| iTimna | 81 | 73 |

Natural language information increases the effectiveness of Timna [Come back Thurs 10:05 am]

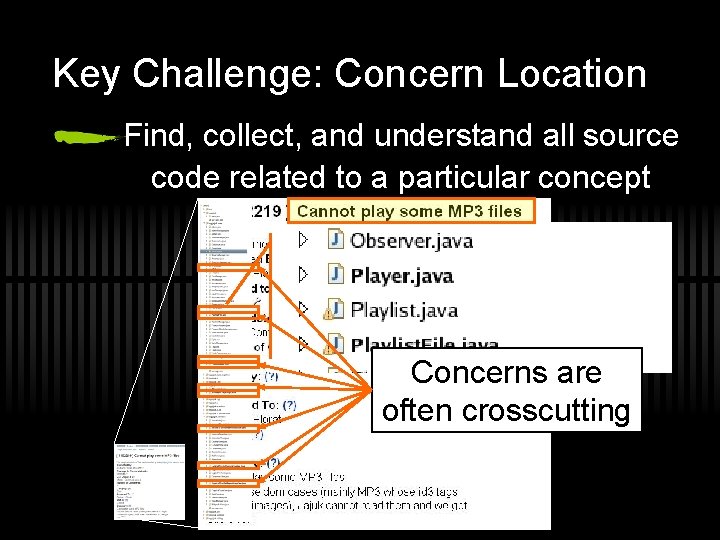

Applying NL Clues for Concern Location
- Motivation: 60-90% of software costs are spent on reading and navigating code for maintenance\* (fixing bugs, adding features, etc.)

\*[Erlikh] Leveraging Legacy System Dollars for E-Business

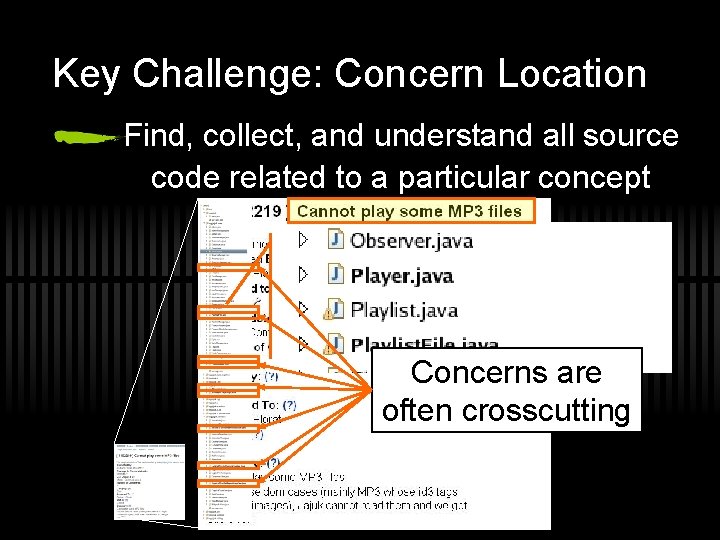

Key Challenge: Concern Location
- Find, collect, and understand all source code related to a particular concept
- Concerns are often crosscutting


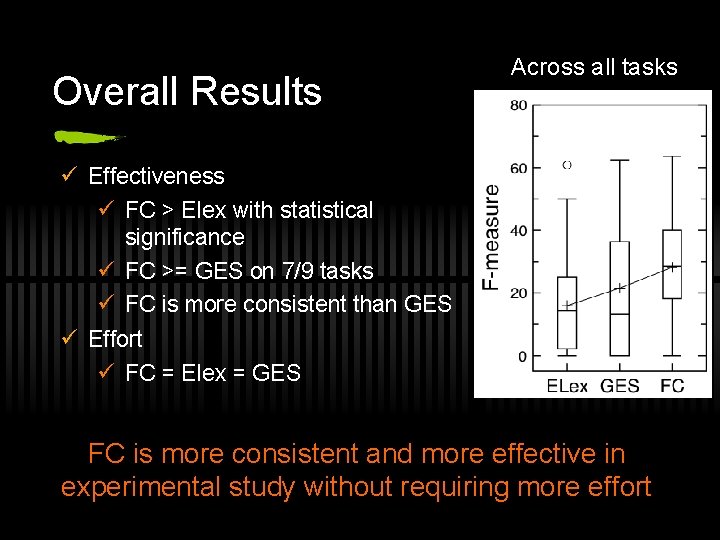

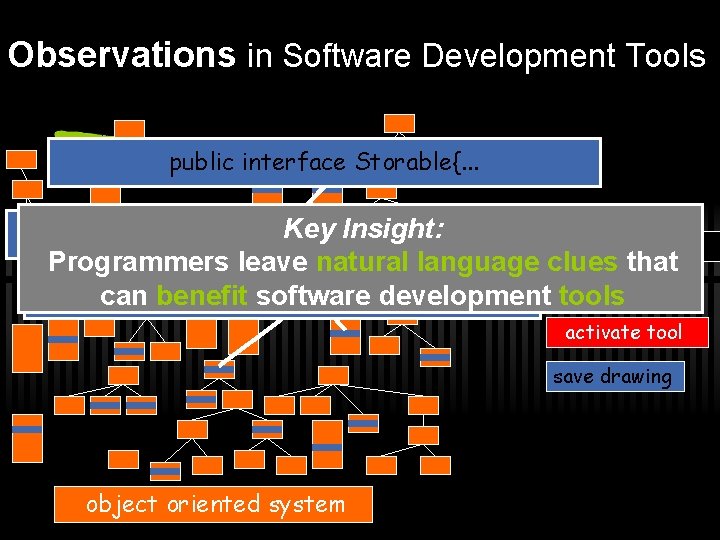

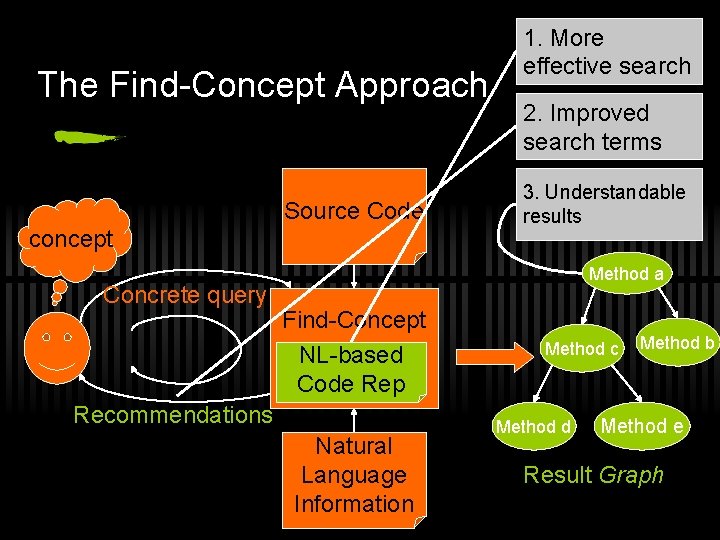

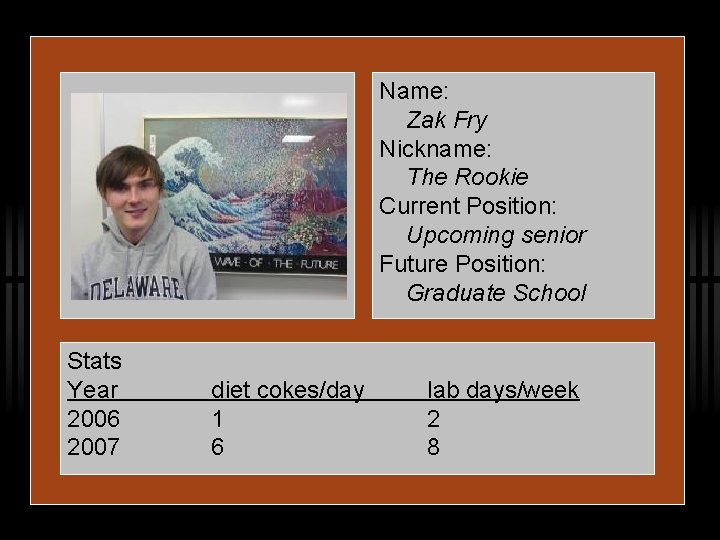

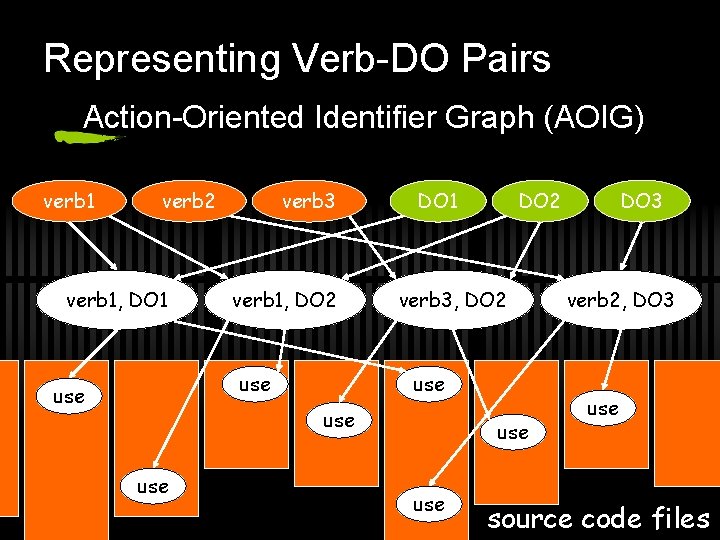

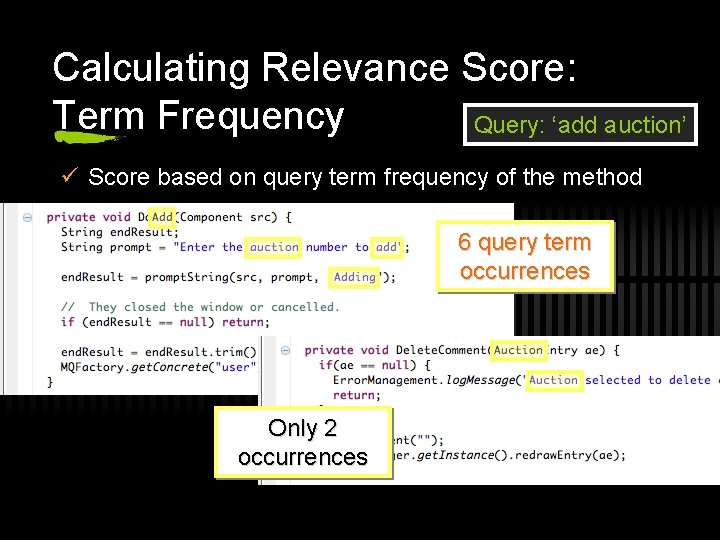

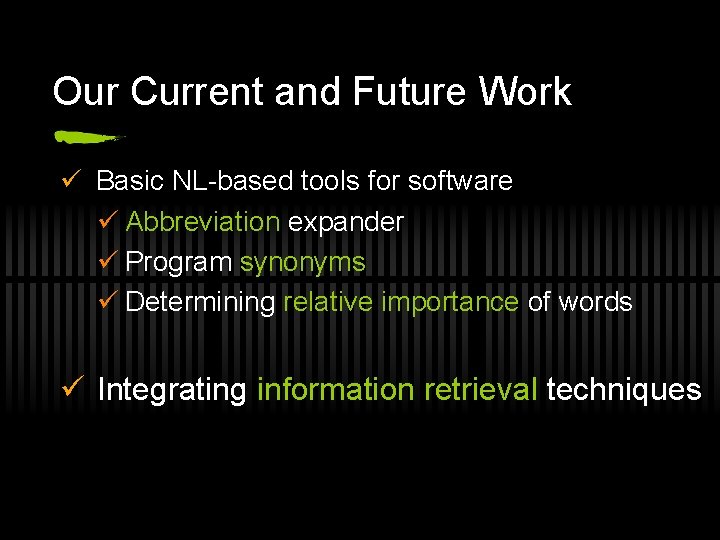

State of the Art for Concern Location
- Mining Dynamic Information [Wilde ICSM 00]
- Program Structure Navigation [Robillard FSE 05, FEAT, Schaefer ICSM 05]
- Search-Based Approaches
  - RegEx [grep, Aspect Mining Tool 00]
  - LSA-Based [Marcus 04]
  - Word-Frequency Based [GES 06]

(Annotations on the slide: reduced to similar problem; slow; fast; fragile; sensitive; no semantics)

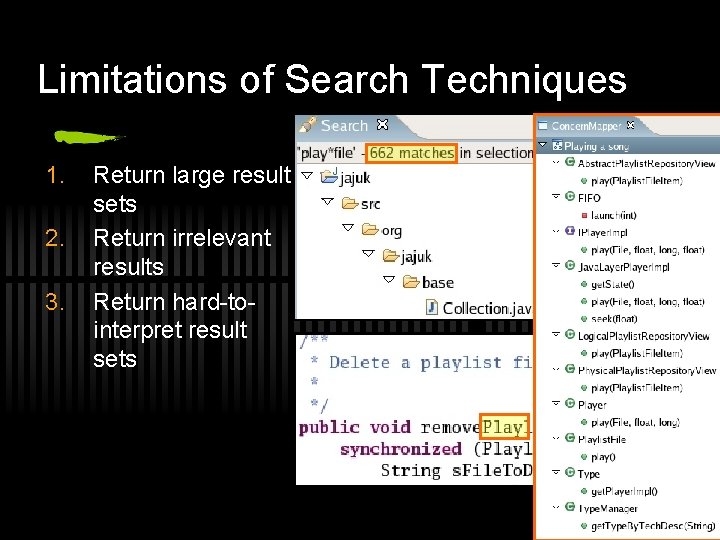

Limitations of Search Techniques
1. Return large result sets
2. Return irrelevant results
3. Return hard-to-interpret result sets

The Find-Concept Approach
1. More effective search
2. Improved search terms
3. Understandable results

(Diagram: a concept is turned into a concrete query; Find-Concept combines the source code, an NL-based code representation, and natural language information to produce recommendations and a result graph of methods a through e)


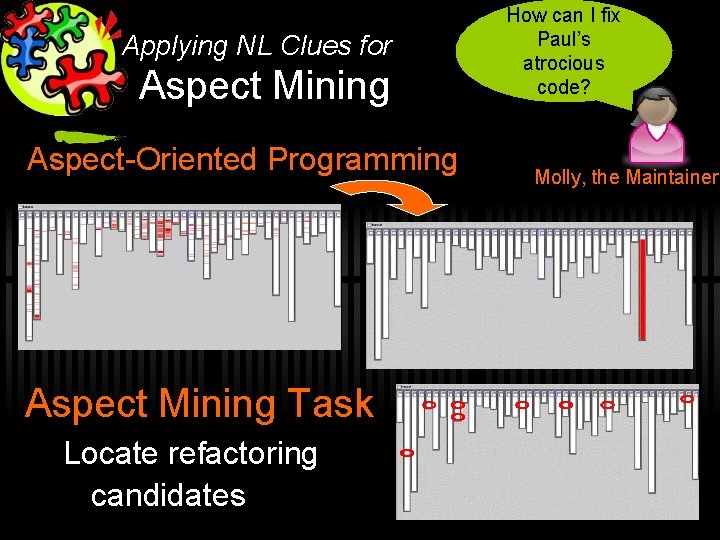

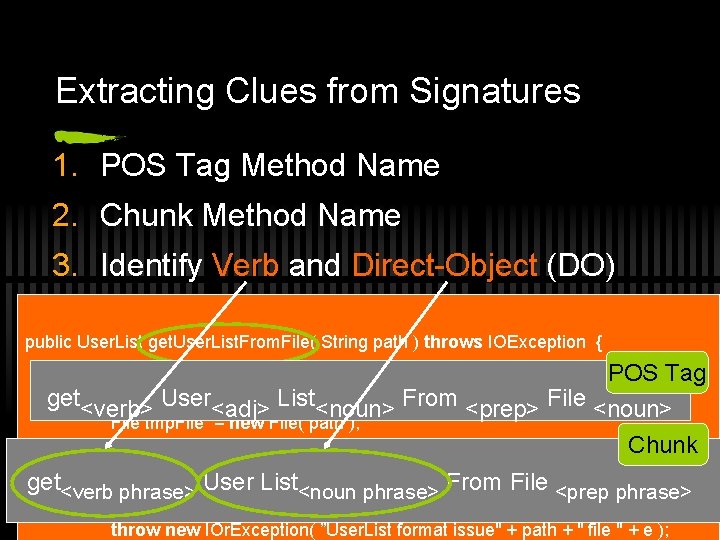

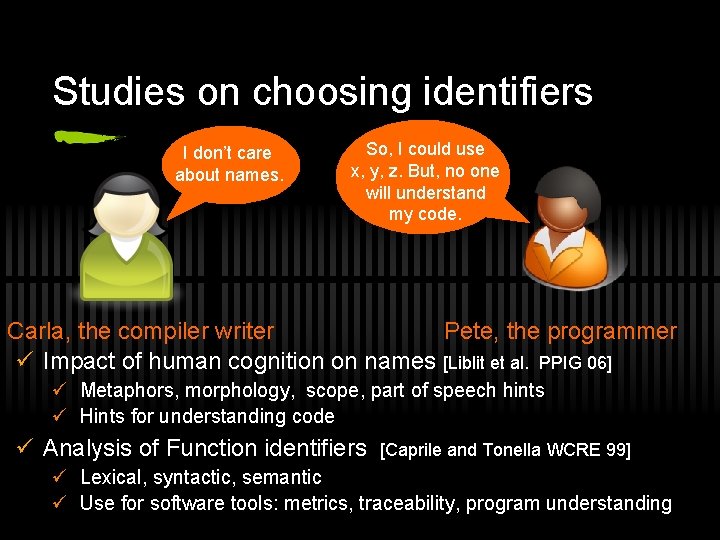

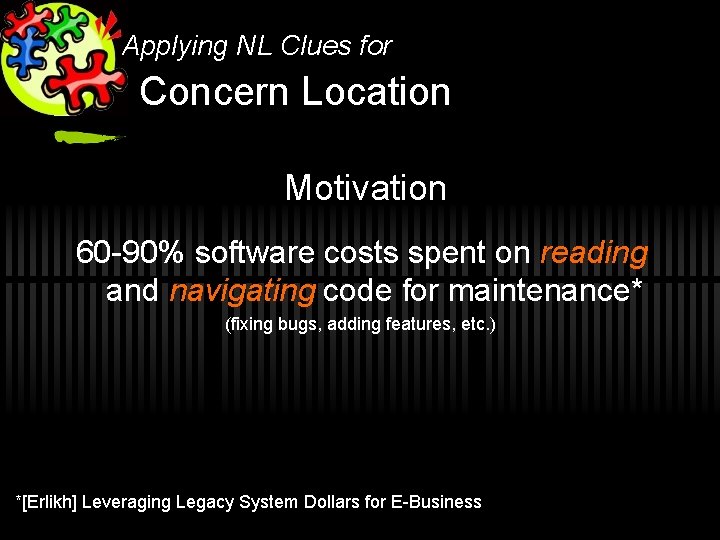

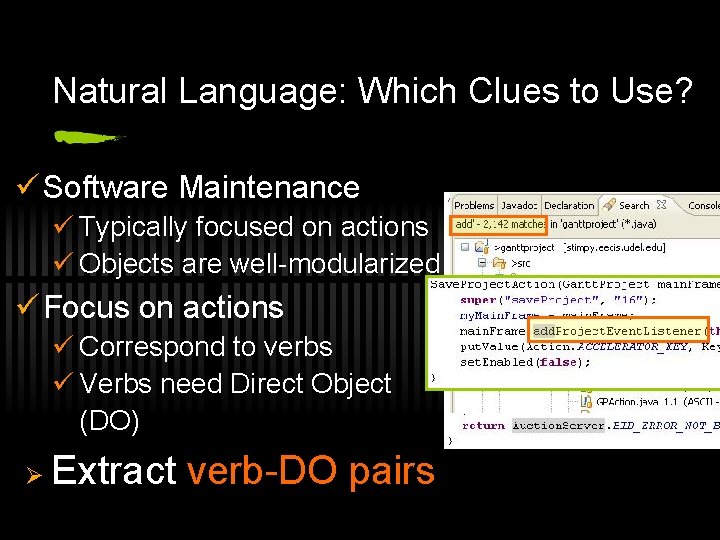

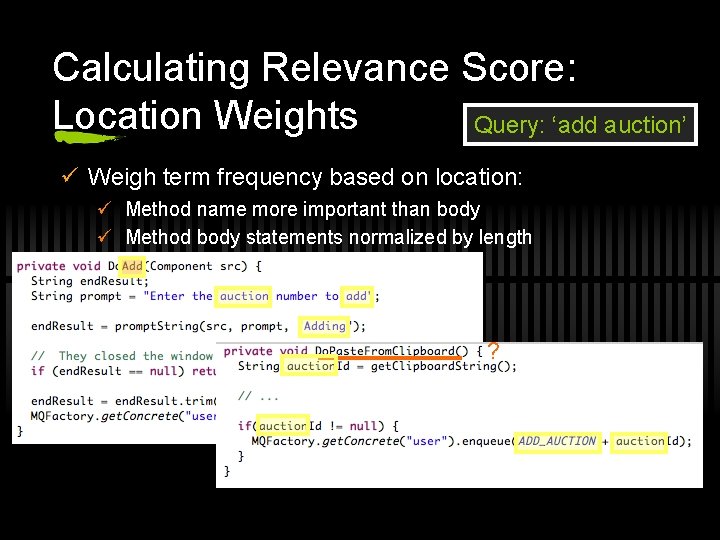

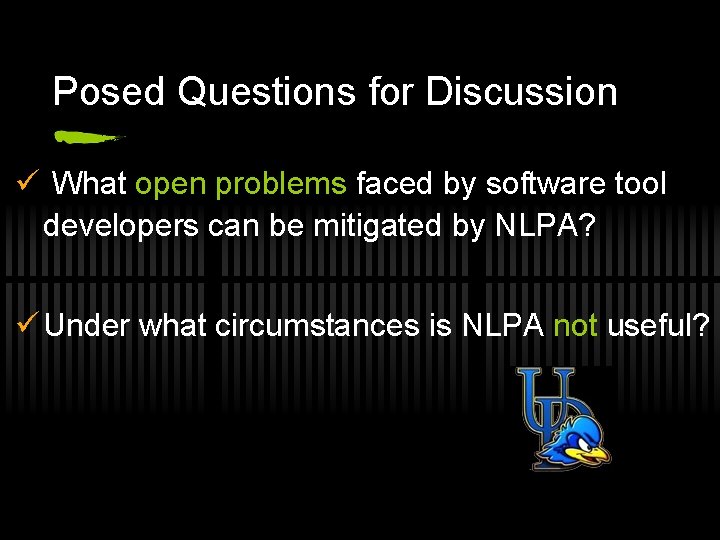

Underlying Program Analysis
- Action-Oriented Identifier Graph (AOIG) [AOSD 06]
  - Provides access to NL information
  - Provides interface between NL and traditional
- Word Recommendation Algorithm
  - NL-based
    - Stemmed/Rooted: complete, completing
    - Synonym: finish, complete
  - Combining NL and Traditional
    - Co-location: completeWord()
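The three recommendation sources named above (stemmed forms, synonyms, co-located words) can be sketched roughly as follows. This is only an illustration: the suffix-stripping stemmer, the `SYNONYMS` table, and the function names are assumptions made for the example, not the algorithm from the AOSD 06 work.

```python
import re

# Hypothetical synonym table; a real tool would draw on a thesaurus or corpus.
SYNONYMS = {"complete": {"finish"}, "finish": {"complete"}}


def split_identifier(name):
    """Split a camelCase identifier into lowercase words."""
    pattern = r"[A-Z]+(?=[A-Z][a-z])|[A-Z]?[a-z]+|[A-Z]+|\d+"
    return [w.lower() for w in re.findall(pattern, name)]


def crude_stem(word):
    """Very rough stemmer (an assumption): strip one common suffix."""
    for suffix in ("ing", "ed", "es", "e", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def recommend(query, identifiers):
    """Group candidate words by the kind of evidence that links them to the query."""
    stem = crude_stem(query)
    recs = {
        "stemmed": set(),
        "synonym": set(SYNONYMS.get(query, ())),
        "co-located": set(),
    }
    for identifier in identifiers:
        words = split_identifier(identifier)
        has_query_form = any(crude_stem(w) == stem for w in words)
        for word in words:
            if word == query:
                continue
            if crude_stem(word) == stem:
                recs["stemmed"].add(word)
            elif has_query_form:
                # The word shares an identifier with some form of the query word.
                recs["co-located"].add(word)
    return recs
```

For the query `complete` and the identifiers `completeWord`, `completingTask`, and `finishJob`, this sketch suggests `completing` as a stemmed form, `finish` as a synonym, and `word` and `task` as co-located words.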

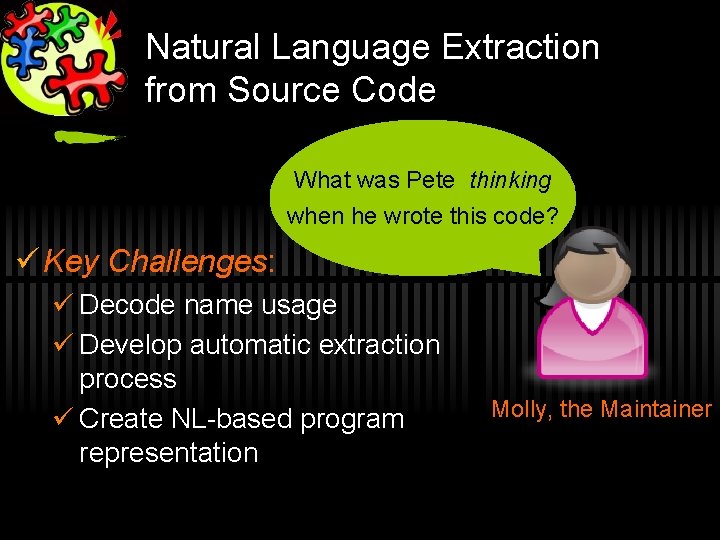

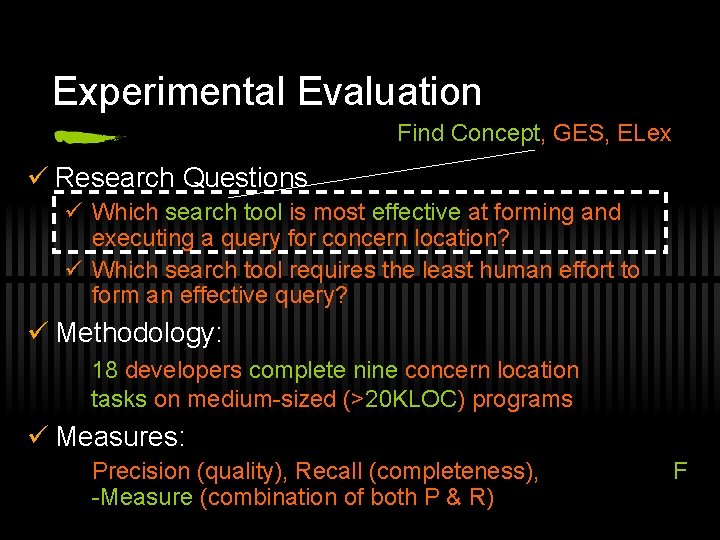

Experimental Evaluation: Find Concept, GES, ELex
- Research Questions
  - Which search tool is most effective at forming and executing a query for concern location?
  - Which search tool requires the least human effort to form an effective query?
- Methodology: 18 developers complete nine concern location tasks on medium-sized (>20 KLOC) programs
- Measures: Precision (quality), Recall (completeness), F-Measure (combination of both P & R)
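As a reminder of how the F-Measure combines the other two measures, here is a minimal sketch. The slides do not say which weighting was used, so the balanced form (beta = 1, the harmonic mean of P and R) is an assumption.

```python
def f_measure(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall (F1 when beta == 1)."""
    if precision == 0.0 and recall == 0.0:
        return 0.0  # avoid dividing by zero when nothing relevant was found
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)
```

Because it is a harmonic mean, the score stays low unless both precision and recall are reasonably high, which is why it is a common single-number summary for search tools.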

Overall Results (across all tasks)
- Effectiveness
  - FC > ELex with statistical significance
  - FC >= GES on 7/9 tasks
  - FC is more consistent than GES
- Effort
  - FC = ELex = GES

FC is more consistent and more effective in the experimental study, without requiring more effort.

Natural Language Extraction from Source Code
- Molly, the Maintainer: “What was Pete thinking when he wrote this code?”
- Key Challenges:
  - Decode name usage
  - Develop automatic extraction process
  - Create NL-based program representation

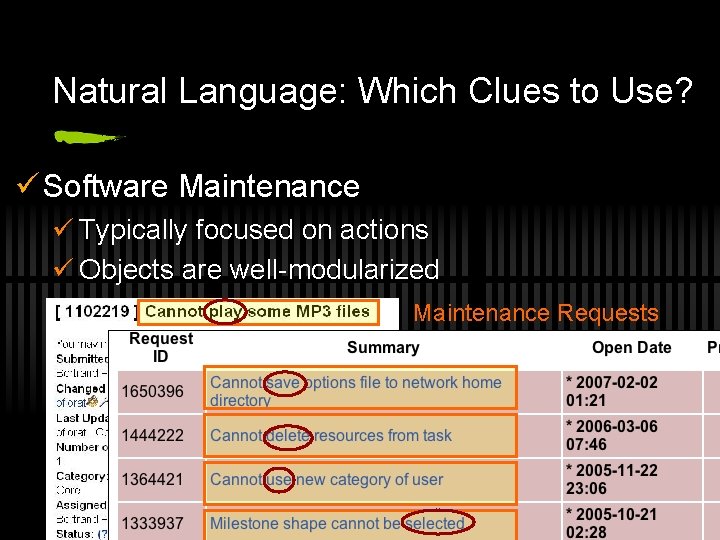

Natural Language: Which Clues to Use?
- Software Maintenance
  - Typically focused on actions
  - Objects are well-modularized

(Diagram: maintenance requests)

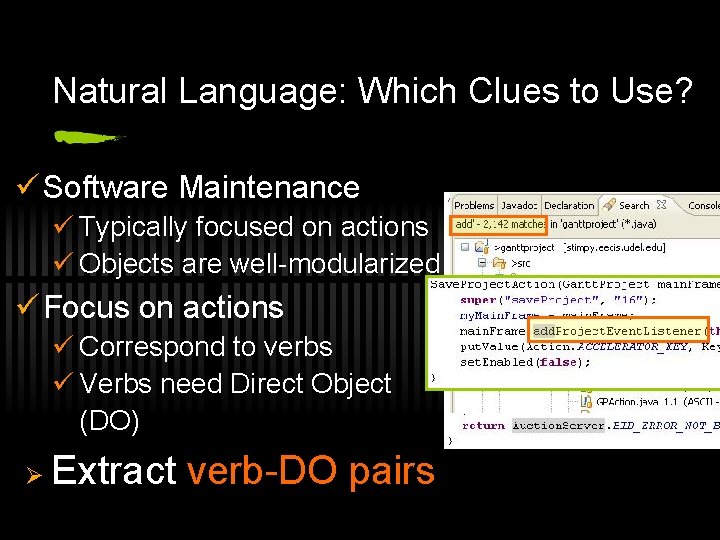

Natural Language: Which Clues to Use?
- Software Maintenance
  - Typically focused on actions
  - Objects are well-modularized
- Focus on actions
  - Correspond to verbs
  - Verbs need Direct Object (DO)
  - Therefore: extract verb-DO pairs

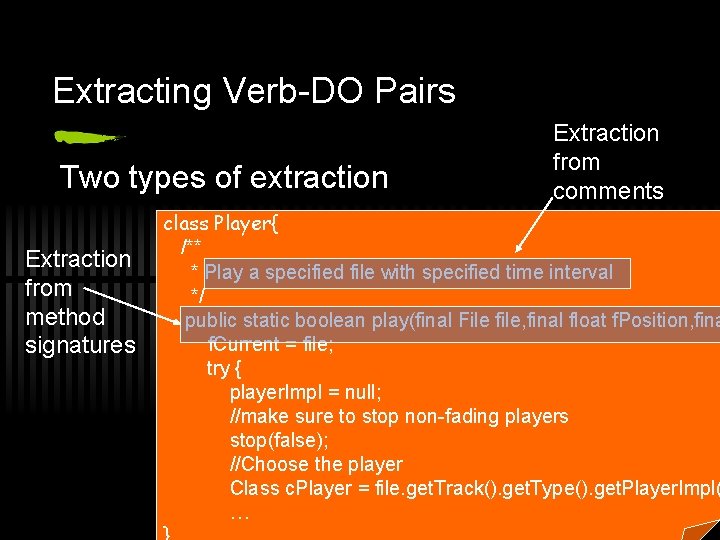

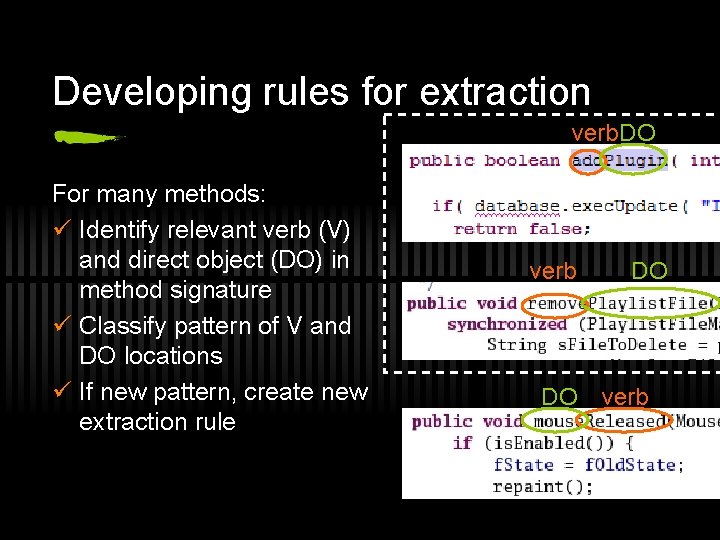

Extracting Verb-DO Pairs
- Two types of extraction:
  - Extraction from method signatures
  - Extraction from comments

Example code from the slide (truncated in the original):

    class Player {
        /**
         * Play a specified file with specified time interval
         */
        public static boolean play(final File file, final float fPosition, fina
            fCurrent = file;
            try {
                playerImpl = null;
                //make sure to stop non-fading players
                stop(false);
                //Choose the player
                Class cPlayer = file.getTrack().getType().getPlayerImpl( …

Extracting Clues from Signatures
1. POS Tag Method Name
2. Chunk Method Name
3. Identify Verb and Direct-Object (DO)

Example method from the slide (truncated in the original):

    public UserList getUserListFromFile( String path ) throws IOException {
        try {
            File tmpFile = new File( path );
            return parseFile(tmpFile);
        } catch( java.io.IOException e ) {
            throw new IOrException( "UserList format issue" + path + " file " + e );

Applied to the method name:
- POS Tag: `get<verb> User<adj> List<noun> From<prep> File<noun>`
- Chunk: `get<verb phrase> User List<noun phrase> From File<prep phrase>`
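The three steps can be approximated with a small sketch. It is illustrative only: the tiny `TOY_LEXICON` stands in for a real part-of-speech tagger, and the chunking rule (the direct object is everything between the verb and the first preposition) is a simplifying assumption rather than the extraction process evaluated in the talk.

```python
import re

# Hypothetical toy lexicon; tags follow the example on the slide.
TOY_LEXICON = {
    "get": "verb", "parse": "verb",
    "user": "adj", "list": "noun", "file": "noun",
    "from": "prep",
}


def split_identifier(name):
    """Split a camelCase method name into its words, keeping the original case."""
    return re.findall(r"[A-Z]+(?=[A-Z][a-z])|[A-Z]?[a-z]+|[A-Z]+|\d+", name)


def pos_tag(words):
    """Step 1: tag each word, falling back to 'unknown' outside the lexicon."""
    return [(w, TOY_LEXICON.get(w.lower(), "unknown")) for w in words]


def verb_and_direct_object(tagged):
    """Steps 2 and 3: take the first verb, then the words up to the first preposition."""
    verb = None
    direct_object = []
    for word, tag in tagged:
        if verb is None:
            if tag == "verb":
                verb = word.lower()
        elif tag == "prep":
            break  # the prepositional phrase is not part of the direct object
        else:
            direct_object.append(word)
    return verb, "".join(direct_object) or None
```

For `getUserListFromFile`, the sketch tags the words as in the slide and yields the pair `("get", "UserList")`, leaving `From File` as a separate prepositional phrase.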

Name: Zak Fry
- Nickname: The Rookie
- Current Position: Upcoming senior
- Future Position: Graduate School

| Stats | 2006 | 2007 |
| --- | --- | --- |
| diet cokes/day | 1 | 6 |
| lab days/week | 2 | 8 |

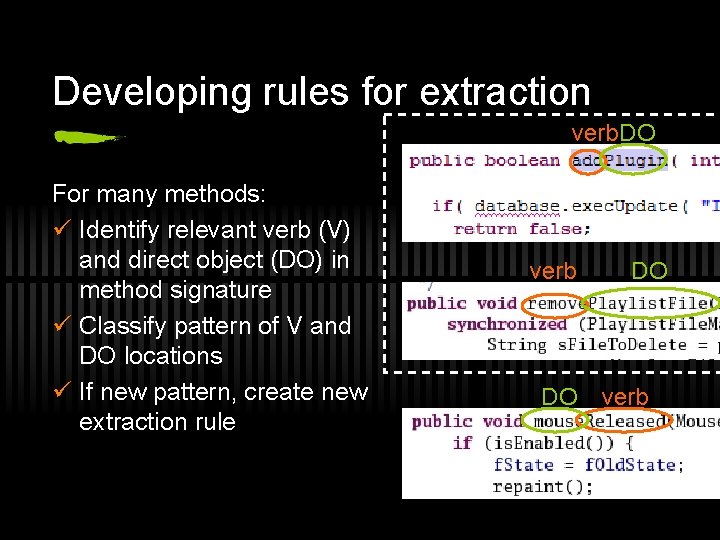

Developing rules for extraction

For many methods:
- Identify relevant verb (V) and direct object (DO) in method signature
- Classify pattern of V and DO locations
- If new pattern, create new extraction rule

(Diagram: method names with the verb before the DO and with the DO before the verb)

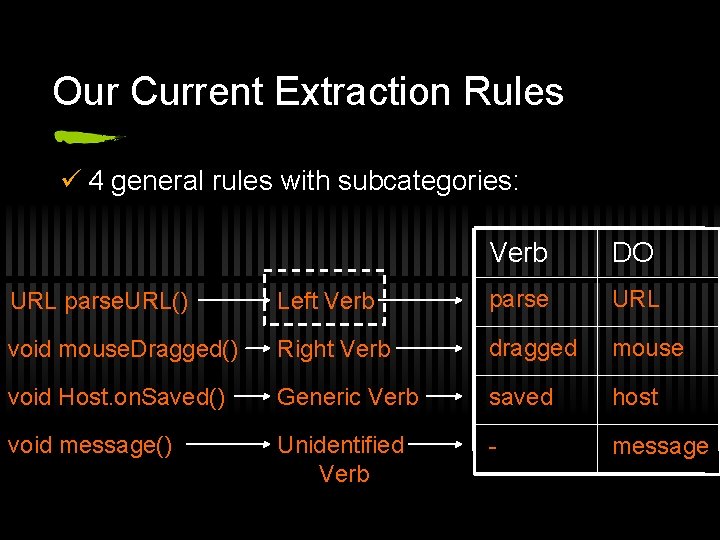

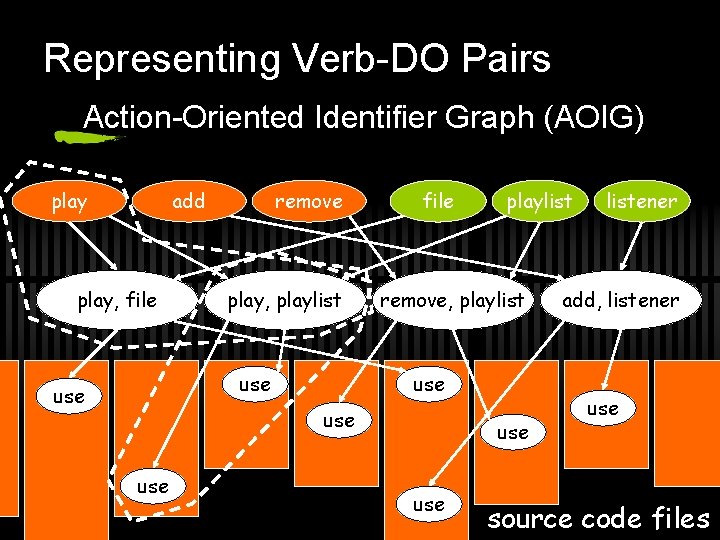

Our Current Extraction Rules
- 4 general rules with subcategories:

| Rule | Example | Verb | DO |
| --- | --- | --- | --- |
| Left Verb | `URL parseURL()` | parse | URL |
| Right Verb | `void mouseDragged()` | dragged | mouse |
| Generic Verb | `void Host.onSaved()` | saved | host |
| Unidentified Verb | `void message()` | - | message |
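A rough sketch of how the four general rules might be applied to a method name follows. The verb and preposition lists and the "ends in -ed" participle test are assumptions chosen so that the slide's four examples come out as shown; the real rules have subcategories that this sketch ignores.

```python
import re

# Hypothetical word lists; a real extractor would use a tagger or dictionary.
VERBS = {"parse", "remove", "add", "play", "get", "save"}
PREPOSITIONS = {"on", "from", "to", "with"}


def split_identifier(name):
    """Split a camelCase identifier into lowercase words."""
    pattern = r"[A-Z]+(?=[A-Z][a-z])|[A-Z]?[a-z]+|[A-Z]+|\d+"
    return [w.lower() for w in re.findall(pattern, name)]


def looks_like_participle(word):
    """Crude test (an assumption) for a verb in past-participle form."""
    return word.endswith("ed") and len(word) > 3


def extract_verb_do(method_name, class_name=None):
    """Return (rule, verb, direct_object) for a method name."""
    words = split_identifier(method_name)
    if not words:
        return ("Unidentified Verb", None, None)
    if words[0] in VERBS:
        # Left Verb: the verb leads and the direct object follows it.
        return ("Left Verb", words[0], "".join(words[1:]) or None)
    if looks_like_participle(words[-1]):
        head = words[:-1]
        if head and not all(w in PREPOSITIONS for w in head):
            # Right Verb: the direct object precedes the verb.
            return ("Right Verb", words[-1], "".join(head))
        if class_name:
            # Generic Verb: the declaring class supplies the direct object.
            return ("Generic Verb", words[-1], class_name.lower())
    return ("Unidentified Verb", None, "".join(words))
```

With these assumptions, `parseURL` falls under Left Verb, `mouseDragged` under Right Verb, `onSaved` declared in `Host` under Generic Verb, and `message` under Unidentified Verb.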

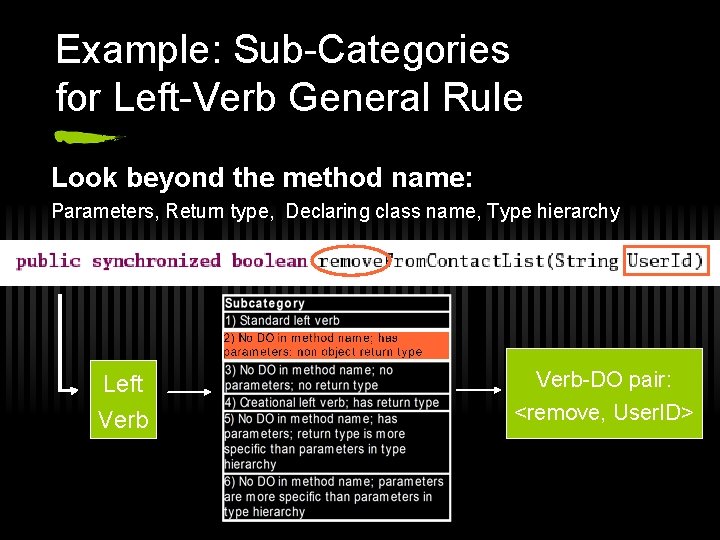

Example: Sub-Categories for Left-Verb General Rule
- Look beyond the method name: parameters, return type, declaring class name, type hierarchy
- Left Verb-DO pair: `<remove, UserID>`

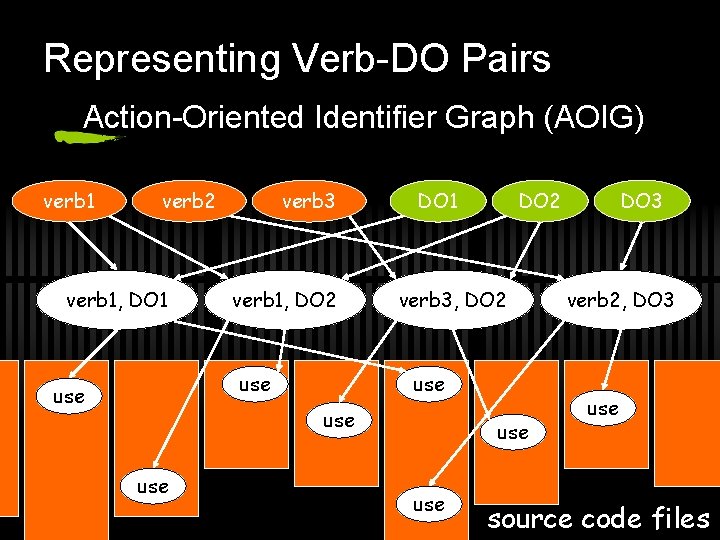

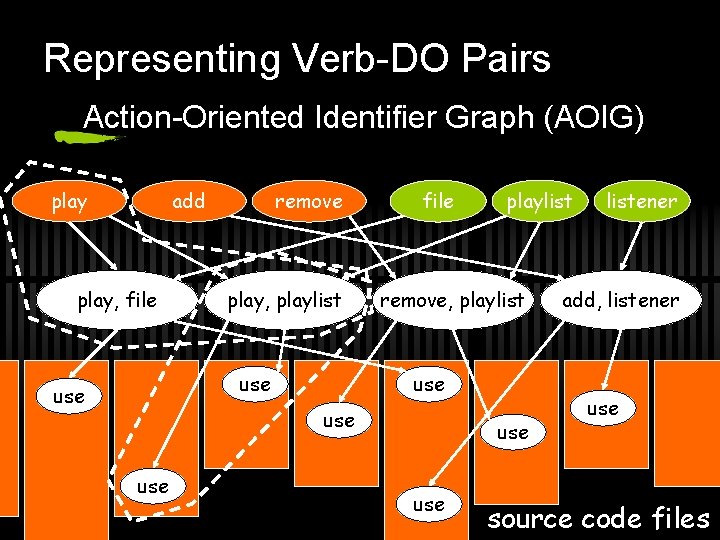

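Representing Verb-DO Pairs: Action-Oriented Identifier Graph (AOIG)

(Diagram: verb nodes verb1, verb2, verb3 and DO nodes DO1, DO2, DO3 are linked through the verb-DO pair nodes (verb1, DO1), (verb1, DO2), (verb3, DO2), and (verb2, DO3); each pair node has "use" edges into the source code files)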

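Representing Verb-DO Pairs: Action-Oriented Identifier Graph (AOIG)

(Diagram: verb nodes play, add, remove and DO nodes file, playlist, listener are linked through the verb-DO pair nodes (play, file), (play, playlist), (remove, playlist), and (add, listener); each pair node has "use" edges into the source code files)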
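One way to picture the AOIG as a data structure is sketched below. The class layout, method names, and the source locations in the usage note are assumptions for illustration; the slides only establish that verb nodes and DO nodes connect through verb-DO pair nodes, which in turn have "use" edges into the source code.

```python
from collections import defaultdict


class AOIG:
    """Minimal sketch of an action-oriented identifier graph."""

    def __init__(self):
        self.pairs_by_verb = defaultdict(set)  # verb node -> verb-DO pair nodes
        self.pairs_by_do = defaultdict(set)    # DO node -> verb-DO pair nodes
        self.uses = defaultdict(list)          # pair node -> "use" edges (locations)

    def add_use(self, verb, direct_object, location):
        """Record that the pair (verb, direct_object) is used at a source location."""
        pair = (verb, direct_object)
        self.pairs_by_verb[verb].add(pair)
        self.pairs_by_do[direct_object].add(pair)
        self.uses[pair].append(location)

    def locations_for_verb(self, verb):
        """All source locations whose pair uses the given verb."""
        pairs = self.pairs_by_verb.get(verb, ())
        return sorted(loc for pair in pairs for loc in self.uses[pair])

    def verbs_for_do(self, direct_object):
        """All verbs applied to the given direct object."""
        return sorted(v for v, _ in self.pairs_by_do.get(direct_object, ()))
```

For example, after `add_use("play", "playlist", "Player.java:40")` and `add_use("remove", "playlist", "Playlist.java:12")` (hypothetical locations), `verbs_for_do("playlist")` returns `["play", "remove"]`, which is the kind of action-centered lookup the graph is meant to support.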

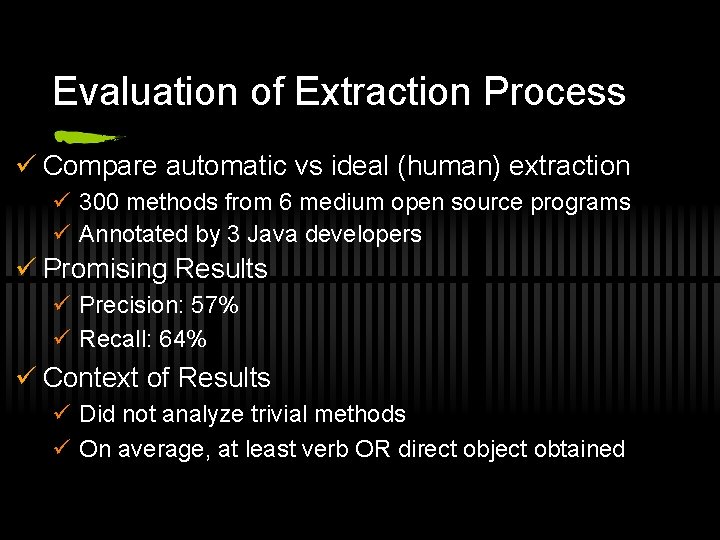

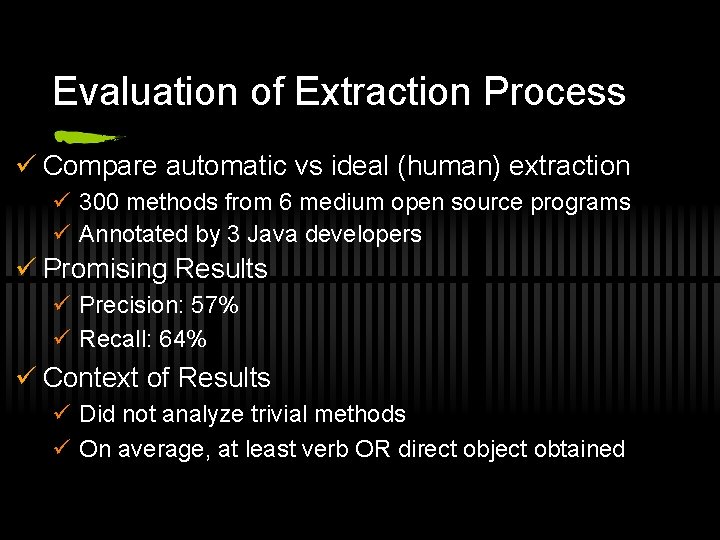

Evaluation of Extraction Process
- Compare automatic vs. ideal (human) extraction
  - 300 methods from 6 medium open source programs
  - Annotated by 3 Java developers
- Promising results
  - Precision: 57%
  - Recall: 64%
- Context of results
  - Did not analyze trivial methods
  - On average, at least verb OR direct object obtained

Name: Emily Gibson Hill
- Nickname: Batter on Deck
- Current Position: 2nd year Ph.D. Student
- Future Position: Ph.D. Candidate

| Stats | 2003 | 2007 |
| --- | --- | --- |
| cokes/day | 0.2 | 2 |
| meetings/week | 1 | 5 |

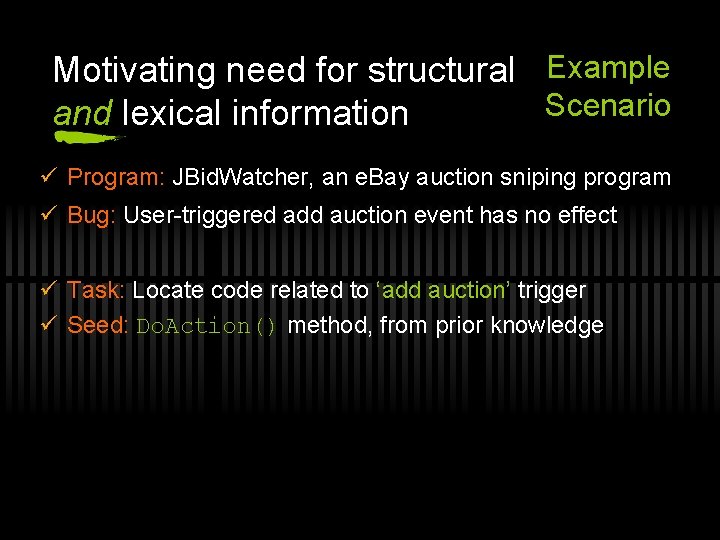

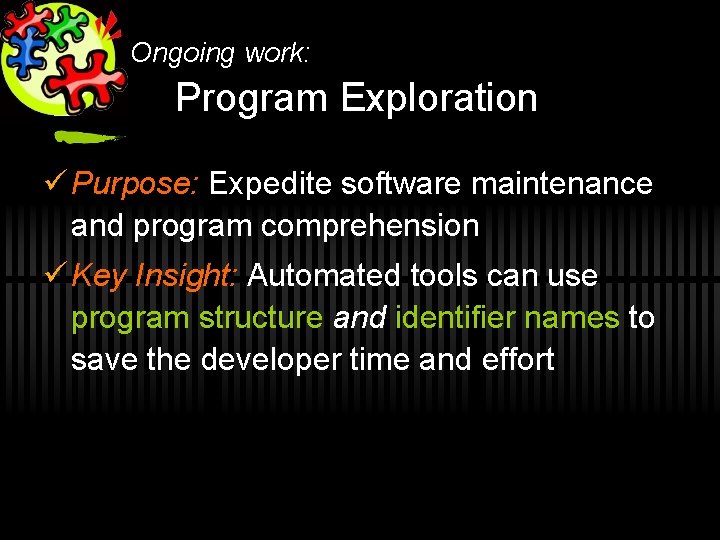

Ongoing work: Program Exploration
- Purpose: Expedite software maintenance and program comprehension
- Key Insight: Automated tools can use program structure and identifier names to save the developer time and effort

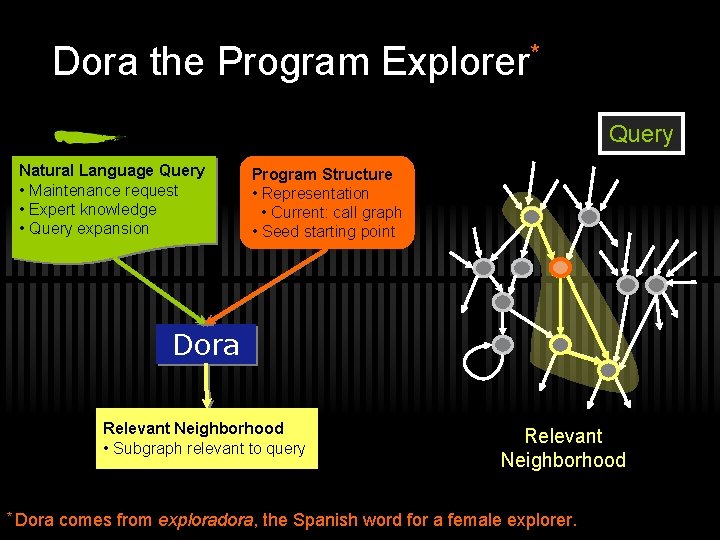

Dora the Program Explorer\*
- Input: natural language query
  - Maintenance request
  - Expert knowledge
  - Query expansion
- Input: program structure
  - Representation (current: call graph)
  - Seed starting point
- Output: relevant neighborhood
  - Subgraph relevant to query

\* Dora comes from exploradora, the Spanish word for a female explorer.

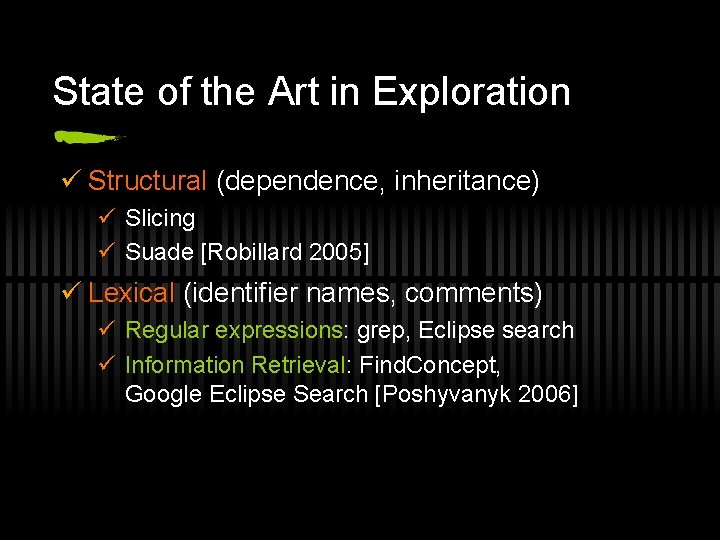

State of the Art in Exploration
- Structural (dependence, inheritance)
  - Slicing
  - Suade [Robillard 2005]
- Lexical (identifier names, comments)
  - Regular expressions: grep, Eclipse search
  - Information Retrieval: FindConcept, Google Eclipse Search [Poshyvanyk 2006]

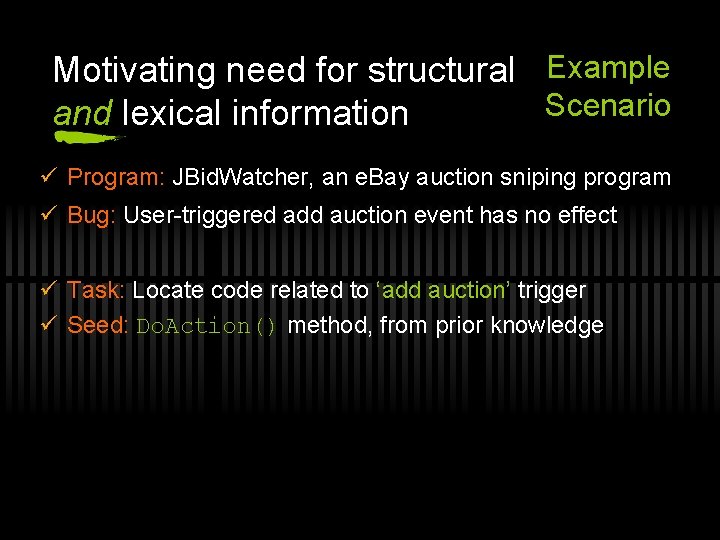

Motivating need for structural and lexical information

Example scenario:
- Program: JBidWatcher, an eBay auction sniping program
- Bug: User-triggered add auction event has no effect
- Task: Locate code related to ‘add auction’ trigger
- Seed: DoAction() method, from prior knowledge

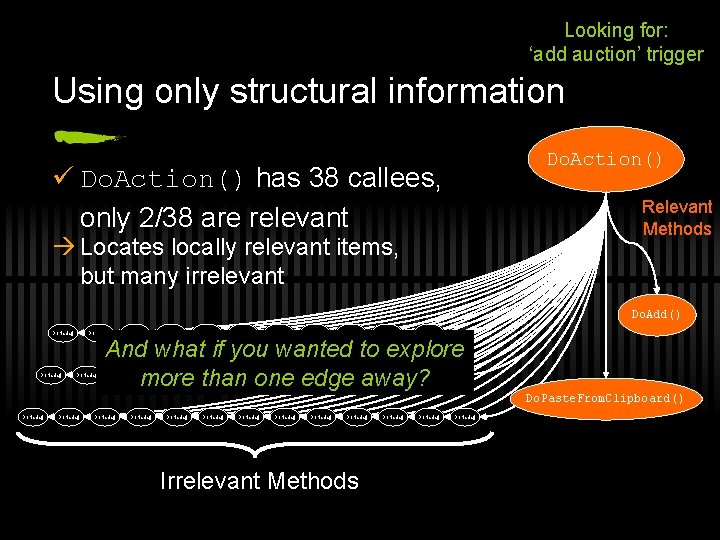

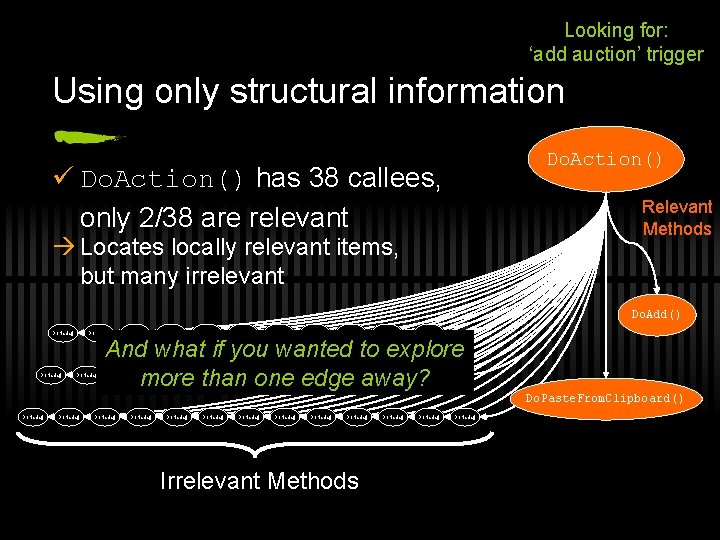

Looking for: ‘add auction’ trigger, using only structural information
- DoAction() has 38 callees; only 2/38 are relevant
  - Locates locally relevant items, but many irrelevant
- And what if you wanted to explore more than one edge away?

(Diagram: DoAction() calls the relevant methods DoAdd() and DoPasteFromClipboard() along with many irrelevant methods, each shown as DoNada())

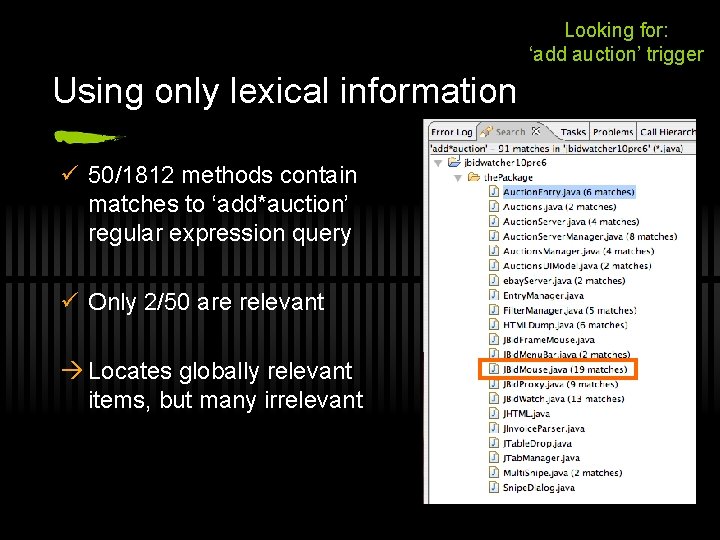

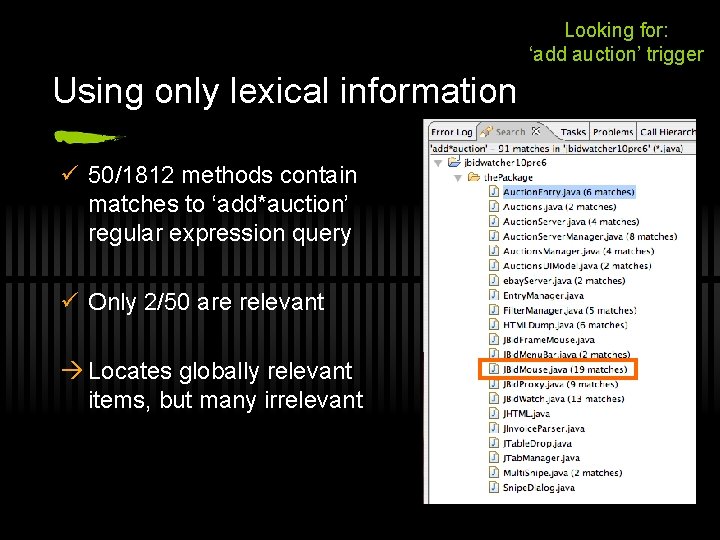

Looking for: ‘add auction’ trigger, using only lexical information
- 50/1812 methods contain matches to the ‘add*auction’ regular expression query
- Only 2/50 are relevant
  - Locates globally relevant items, but many irrelevant

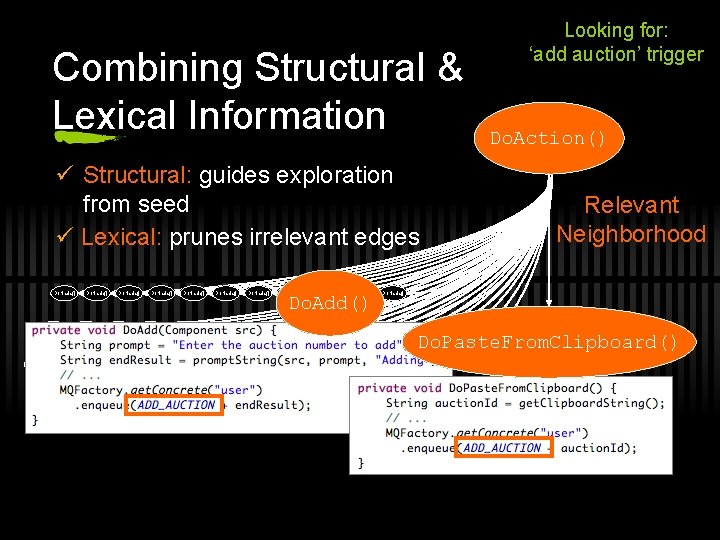

Combining Structural & Lexical Information

Looking for: ‘add auction’ trigger
- Structural: guides exploration from seed
- Lexical: prunes irrelevant edges

(Diagram: starting from DoAction(), the relevant neighborhood keeps DoAdd() and DoPasteFromClipboard() and prunes the DoNada() callees)

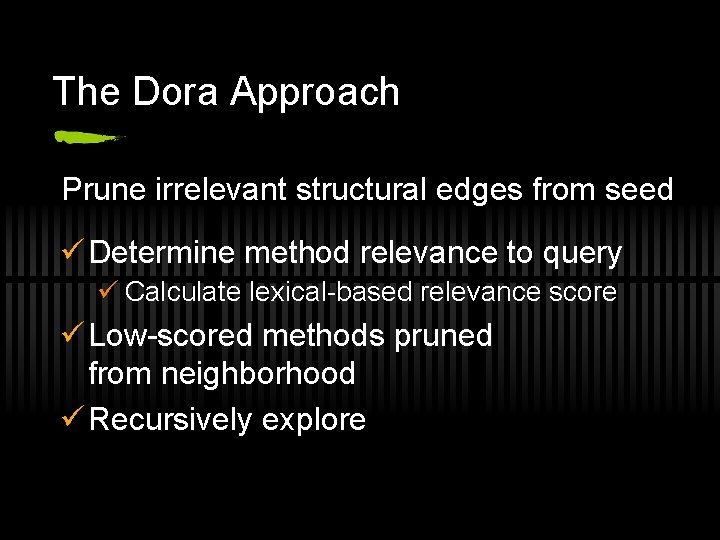

The Dora Approach

Prune irrelevant structural edges from seed:
- Determine method relevance to query
  - Calculate lexical-based relevance score
- Low-scored methods pruned from neighborhood
- Recursively explore
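The pruning loop described above might look like the following sketch. It uses a worklist in place of explicit recursion, takes the relevance score as a caller-supplied function, and assumes a call graph stored as a dictionary; the function name and the score for `DoNada` in the example are hypothetical, while the other scores and the 0.5 threshold are the ones reported on the slides.

```python
from collections import deque


def relevant_neighborhood(call_graph, seed, score, threshold=0.5):
    """Explore callees from the seed, keeping only methods scored at or above threshold."""
    kept = {seed}
    worklist = deque([seed])
    while worklist:
        caller = worklist.popleft()
        for callee in call_graph.get(caller, ()):
            if callee in kept:
                continue
            if score(callee) >= threshold:
                kept.add(callee)
                worklist.append(callee)  # keep exploring from relevant methods
            # Low-scored callees are pruned and never expanded.
    return kept


# Example data: edges are assumed; the DoNada score is made up for illustration.
EXAMPLE_GRAPH = {"DoAction": ["DoAdd", "DoPasteFromClipboard", "DoSave", "DoNada"]}
EXAMPLE_SCORES = {"DoAdd": 0.93, "DoPasteFromClipboard": 0.60, "DoSave": 0.52, "DoNada": 0.10}


def example_score(method):
    return EXAMPLE_SCORES.get(method, 0.0)
```

Calling `relevant_neighborhood(EXAMPLE_GRAPH, "DoAction", example_score)` keeps `DoAdd`, `DoPasteFromClipboard`, and `DoSave` alongside the seed and drops `DoNada`. Because pruned methods are never expanded, the exploration cost follows the size of the relevant neighborhood rather than the whole call graph.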

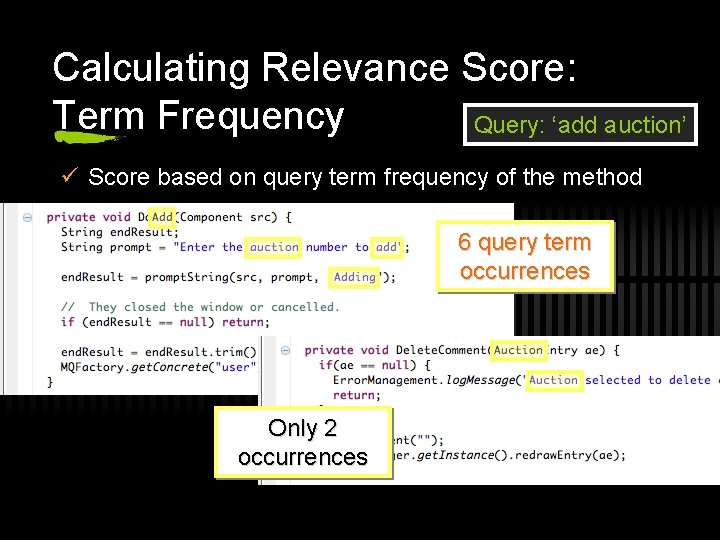

Calculating Relevance Score: Term Frequency

Query: ‘add auction’
- Score based on query term frequency of the method

(Figure: one example method with 6 query term occurrences and another with only 2 occurrences)

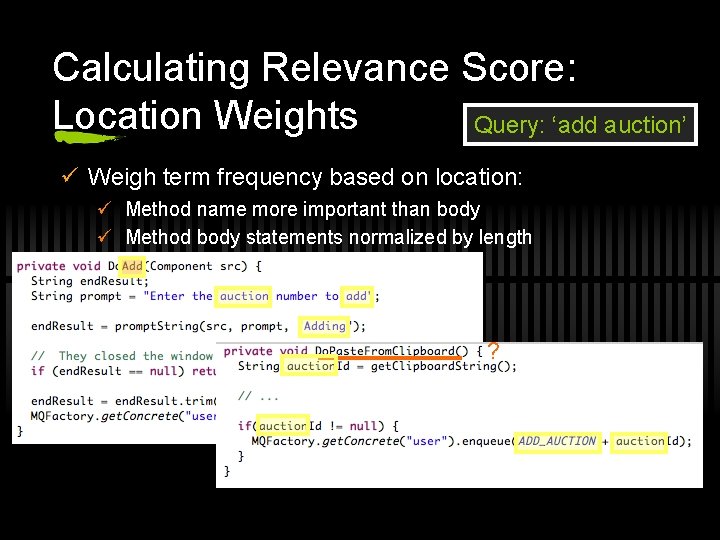

Calculating Relevance Score: Location Weights

Query: ‘add auction’
- Weigh term frequency based on location:
  - Method name more important than body
  - Method body statements normalized by length
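A minimal sketch of a location-weighted term-frequency score follows. The slides do not give the actual formula, so the weights (`name_weight`, `body_weight`), the normalization by number of body statements, and the unbounded output range are all assumptions; Dora's reported scores evidently lie on a different scale.

```python
import re


def words_in(text):
    """Lowercased words of a code fragment, splitting camelCase identifiers."""
    return [w.lower() for w in re.findall(r"[A-Z]?[a-z]+|[A-Z]+", text)]


def relevance(method_name, body_statements, query_terms,
              name_weight=2.0, body_weight=1.0):
    """Query-term frequency, with the name weighted above the body (weights assumed)."""
    terms = {t.lower() for t in query_terms}
    name_hits = sum(w in terms for w in words_in(method_name))
    body_hits = sum(w in terms for s in body_statements for w in words_in(s))
    # Normalize body hits by body length so long methods are not favored.
    body_score = body_hits / len(body_statements) if body_statements else 0.0
    return name_weight * name_hits + body_weight * body_score
```

Under these assumptions, a method named `DoAdd` whose two statements are `addAuction(entry);` and `refresh();` scores 3.0 for the query ‘add auction’ (one name hit weighted 2.0, plus two body hits over two statements), while a method with no query terms scores 0.0.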

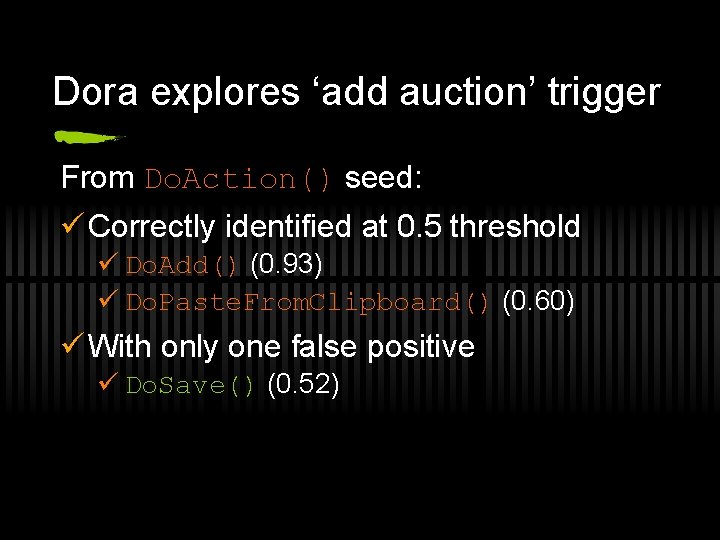

Dora explores ‘add auction’ trigger

From DoAction() seed:
- Correctly identified at 0.5 threshold
  - DoAdd() (0.93)
  - DoPasteFromClipboard() (0.60)
- With only one false positive
  - DoSave() (0.52)

Summary
- NL technology used: synonyms, collocations, morphology, word frequencies, part-of-speech tagging, AOIG
- Evaluation indicates: natural language information shows promise for improving software development tools
- Key to success: accurate extraction of NL clues

Our Current and Future Work
- Basic NL-based tools for software
  - Abbreviation expander
  - Program synonyms
  - Determining relative importance of words
- Integrating information retrieval techniques

Posed Questions for Discussion
- What open problems faced by software tool developers can be mitigated by NLPA?
- Under what circumstances is NLPA not useful?