Intro to Parallel and Distributed Processing

Slides from: Jimmy Lin, The iSchool, University of Maryland. Some material adapted from slides by Christophe Bisciglia, Aaron Kimball, & Sierra Michels-Slettvet, Google Distributed Computing Seminar, 2007 (licensed under Creative Commons Attribution 3.0 License)

Web-Scale Problems?
• How to approach problems in:
  – Biocomputing
  – Nanocomputing
  – Quantum computing
  – …
• It all boils down to…
  – Divide-and-conquer
  – Throwing more hardware at the problem
  – Clusters, Grids, Clouds
Simple to understand… a lifetime to master…

Cluster computing
• Compute nodes located locally, connected by a fast LAN
• Tightly coupled; from a few nodes to a large number
• Group of linked computers working closely together, in parallel
• Sometimes viewed as forming a single computer
• Improve performance and availability

Clusters
• Nodes have computational devices and local storage; networked disk storage allows for sharing
• Static system, centralized control
• Data centers
  – Multiple clusters at a data center
  – Google cluster: 1000s of servers
  – Racks of 40-80 servers

Grid Computing
• Loosely coupled, heterogeneous, geographically dispersed
• Although a grid can be dedicated to a single application, it is typically used for a variety of purposes
• Can be formed from computing resources belonging to multiple organizations
• Thousands of users worldwide
  – Compute grids, data grids
  – Access to resources not available locally

Grids
• Resources in a grid are geographically dispersed
  – Loosely coupled, heterogeneous
• Sharing of data and resources in dynamic, multi-institutional virtual organizations
  – Access to specialized resources
  – Academia
• Allows users to connect/disconnect, as with the electrical grid: plug in your device
• Middleware is needed to use a grid
• Can connect a data center (cluster) to a grid

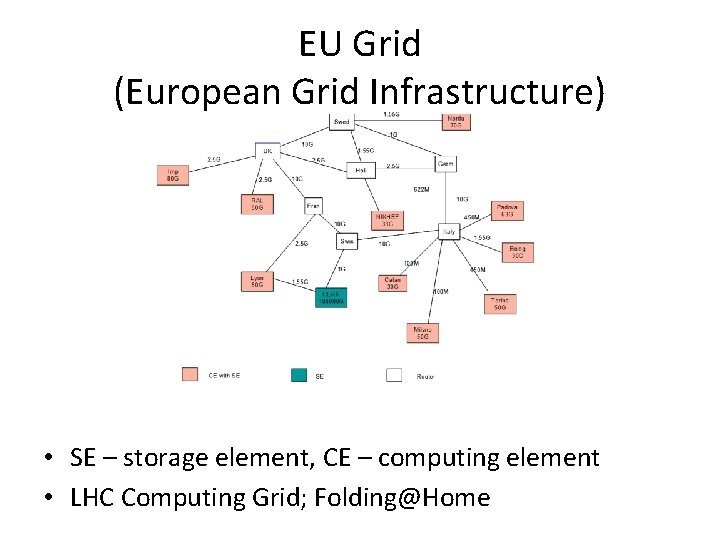

EU Grid (European Grid Infrastructure)
• SE: storage element; CE: computing element
• LHC Computing Grid; Folding@Home

Grid
• Precursor to cloud computing, but without virtualization
• “Virtualization distinguishes cloud from grid” (Zissis)

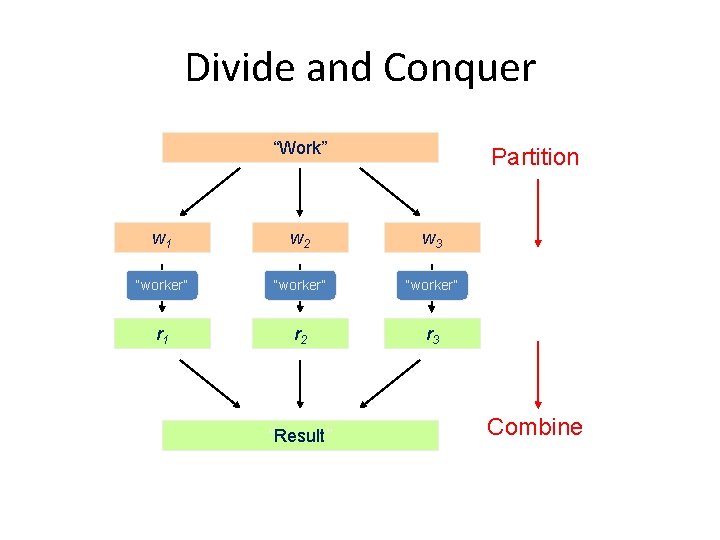

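Divide and Conquer
(Figure: the “Work” is partitioned into units w1, w2, w3; each “worker” produces a partial result r1, r2, r3; the partial results are combined into the final “Result”.)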
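The partition → worker → combine pipeline can be sketched in Python. This is a minimal illustration under assumptions: the workload (a sum of squares over a list) and the names `partition`, `worker`, `combine`, and `divide_and_conquer` are hypothetical. Threads are used for simplicity; in CPython, threads do not speed up CPU-bound pure-Python code because of the global interpreter lock, and `ProcessPoolExecutor` offers the same `map` interface using processes.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(work, n):
    """Split the work into n contiguous, roughly equal units (w1, w2, ...)."""
    size, extra = divmod(len(work), n)
    units, start = [], 0
    for i in range(n):
        end = start + size + (1 if i < extra else 0)
        units.append(work[start:end])
        start = end
    return units


def worker(unit):
    """Compute one partial result r_i (hypothetical workload: sum of squares)."""
    return sum(x * x for x in unit)


def combine(partials):
    """Merge the partial results into the final result."""
    return sum(partials)


def divide_and_conquer(work, n_workers=3):
    units = partition(work, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(worker, units))  # one unit per worker
    return combine(partials)


print(divide_and_conquer(list(range(10))))  # 285
```

Only `worker` and `combine` change from problem to problem; the partition/combine skeleton is the reusable part.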

Different Workers
• Different threads in the same core
• Different cores in the same CPU
• Different CPUs in a multi-processor system
• Different machines in a distributed system

Choices, Choices
• Commodity vs. “exotic” hardware
• Number of machines vs. processors vs. cores
• Bandwidth of memory vs. disk vs. network
• Different programming models

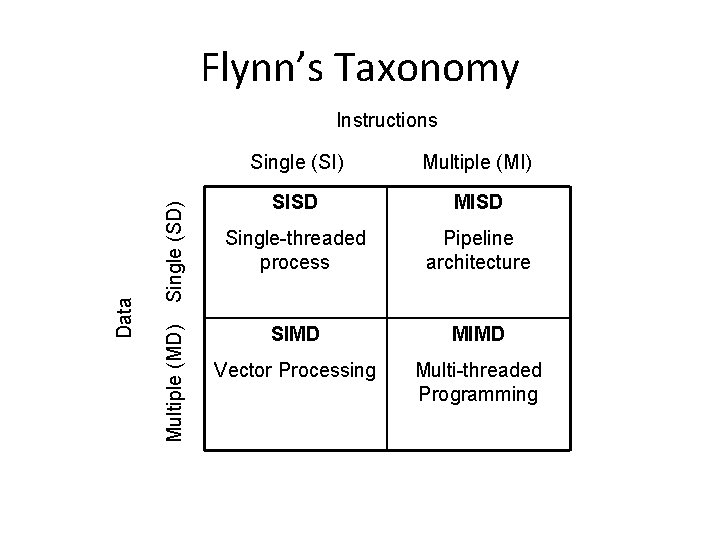

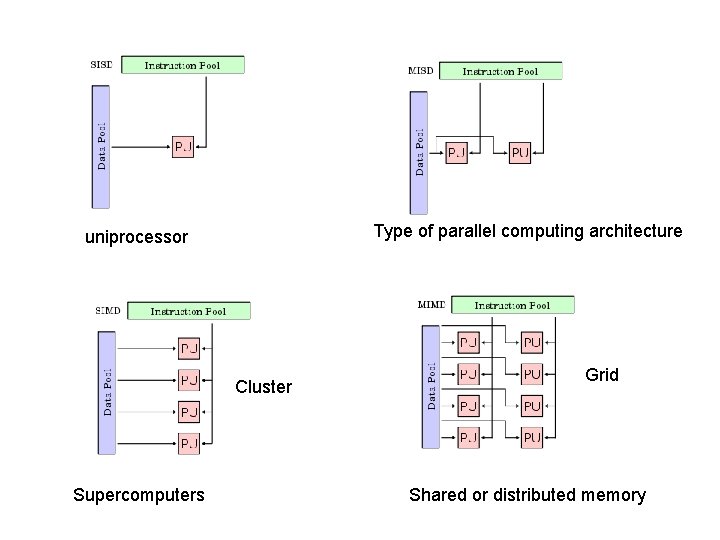

Flynn’s Taxonomy

| | Single Data (SD) | Multiple Data (MD) |
|---|---|---|
| Single Instruction (SI) | SISD: single-threaded process | SIMD: vector processing |
| Multiple Instruction (MI) | MISD: pipeline architecture | MIMD: multi-threaded programming |

Types of parallel computing architecture: uniprocessor, cluster, supercomputers, grid (shared or distributed memory)

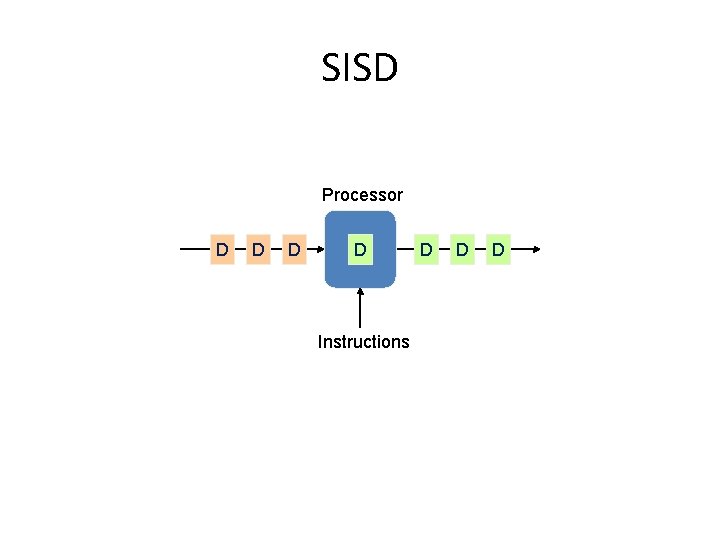

SISD
(Figure: a single processor executes one instruction stream over one stream of data items D.)

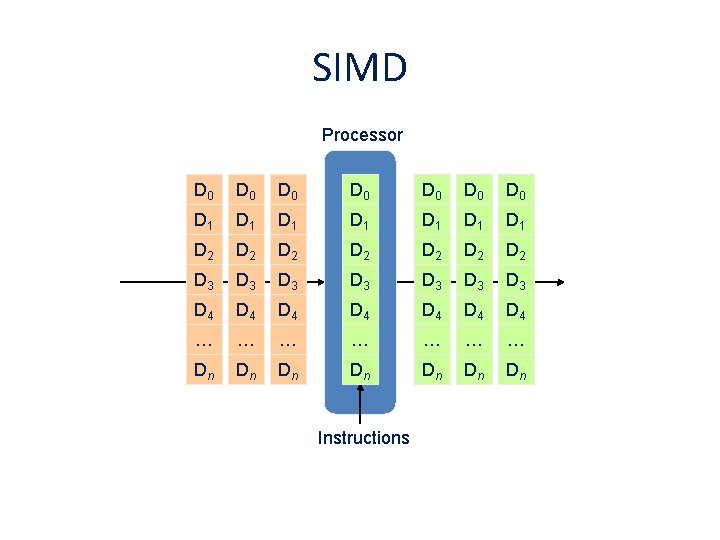

SIMD
(Figure: a single processor applies one instruction stream to many data items D0, D1, …, Dn.)

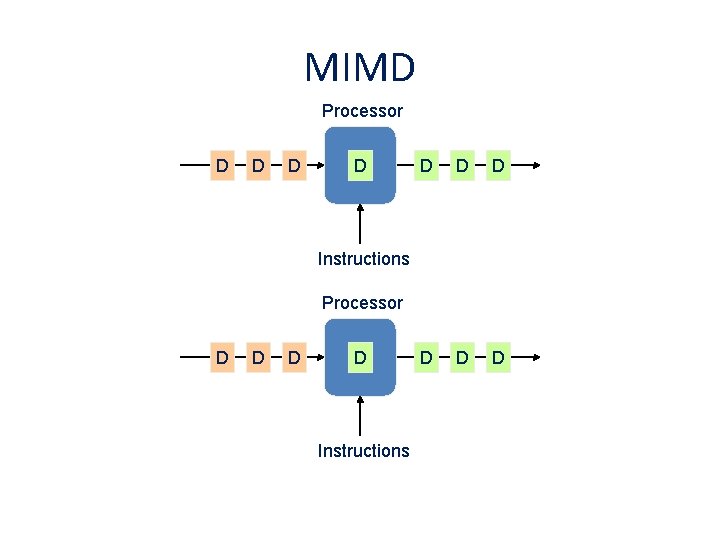

MIMD
(Figure: multiple processors, each executing its own instruction stream over its own data items D.)

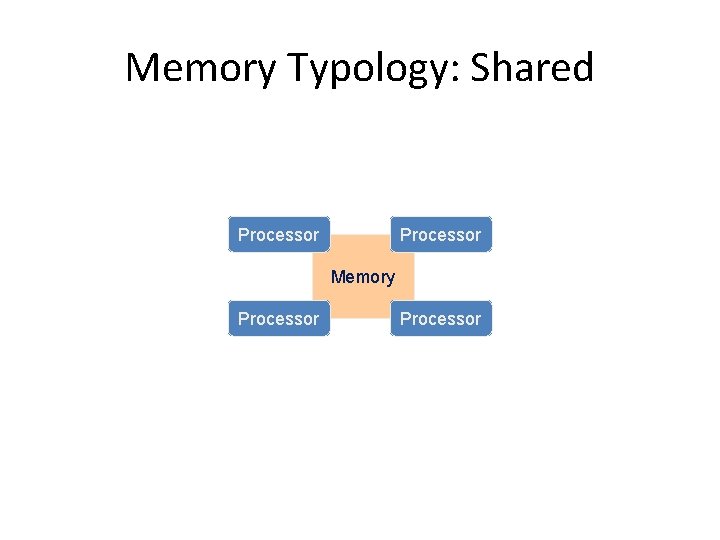

Memory Typology: Shared
(Figure: multiple processors connected to one common memory.)

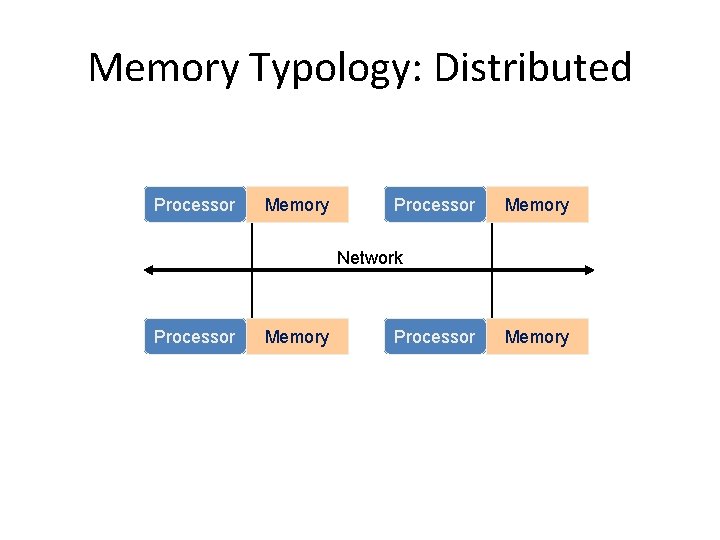

Memory Typology: Distributed
(Figure: each processor has its own memory; processors communicate over a network.)
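The difference between the shared and distributed memory typologies shows up in how workers cooperate. The sketch below is a hedged, single-process simulation with hypothetical function names: in the shared version every thread updates one counter and must lock it; in the distributed version each worker counts in private memory and only sends its total as a message. A `queue.Queue` stands in for the network here; on a real distributed-memory system the message would travel between machines (for example via MPI).

```python
import queue
import threading


def shared_memory_count(n_workers=4, increments=1000):
    """Shared memory: all workers update one counter, so access is locked."""
    counter = 0
    lock = threading.Lock()

    def work():
        nonlocal counter
        for _ in range(increments):
            with lock:  # guards the read-modify-write on the shared counter
                counter += 1

    threads = [threading.Thread(target=work) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


def message_passing_count(n_workers=4, increments=1000):
    """Distributed memory (simulated): private state, results sent as messages."""
    channel = queue.Queue()  # stands in for the network

    def work():
        local = 0  # private to this worker; nobody else can see it
        for _ in range(increments):
            local += 1
        channel.put(local)  # communicate only by sending a message

    threads = [threading.Thread(target=work) for _ in range(n_workers)]
    for t in threads:
        t.start()
    total = sum(channel.get() for _ in range(n_workers))
    for t in threads:
        t.join()
    return total


print(shared_memory_count(), message_passing_count())  # 4000 4000
```

Both return the same answer; what differs is where synchronization is needed (on the shared variable vs. on the messages).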

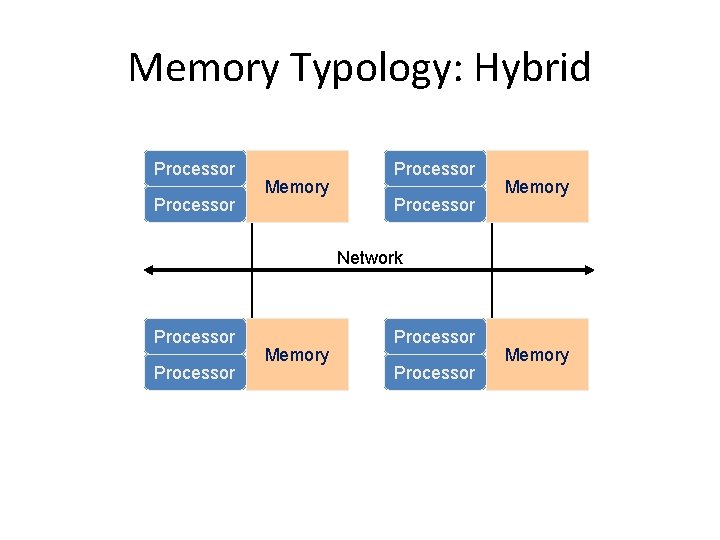

Memory Typology: Hybrid
(Figure: nodes, each with processors sharing a local memory, connected by a network.)

Parallelization Problems
• How do we assign work units to workers?
• What if we have more work units than workers?
• What if workers need to share partial results?
• How do we aggregate partial results?
• How do we know all the workers have finished?
• What if workers die?
What is the common theme of all of these problems?

General Theme?
• Parallelization problems arise from:
  – Communication between workers
  – Access to shared resources (e.g., data)
• Thus, we need a synchronization system!
• This is tricky:
  – Finding bugs is hard
  – Solving bugs is even harder

Managing Multiple Workers
• Difficult because
  – (Often) don’t know the order in which workers run
  – (Often) don’t know where the workers are running
  – (Often) don’t know when workers interrupt each other
• Thus, we need:
  – Semaphores (lock, unlock)
  – Condition variables (wait, notify, broadcast)
  – Barriers
• Still, lots of problems:
  – Deadlock, livelock, race conditions, …
• Moral of the story: be careful!
  – Even trickier if the workers are on different machines
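The three primitives listed above can be seen working together in a small two-phase computation. This is a sketch with a hypothetical function name and workload: Python's `threading.Lock` plays the role of the binary semaphore (lock/unlock), `threading.Condition` provides wait/notify/broadcast (`notify_all`), and `threading.Barrier` keeps any worker from entering phase 2 until every worker has written its phase-1 partial result.

```python
import threading


def run_two_phase(data, n_workers=3):
    """Phase 1: each worker sums its slice. Barrier. Phase 2: each worker
    reads all partials. The caller waits on a condition variable."""
    partials = [0] * n_workers
    totals = [0] * n_workers
    barrier = threading.Barrier(n_workers)
    mutex = threading.Lock()               # binary semaphore: lock / unlock
    cond = threading.Condition(mutex)      # condition variable on that lock
    finished = 0

    def worker(i):
        nonlocal finished
        partials[i] = sum(data[i::n_workers])  # phase 1: private slice
        barrier.wait()                         # nobody continues until all partials exist
        totals[i] = sum(partials)              # phase 2: safe to read every partial
        with cond:                             # acquires mutex
            finished += 1
            cond.notify_all()                  # "broadcast" to any waiter

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_workers)]
    for t in threads:
        t.start()
    with cond:
        cond.wait_for(lambda: finished == n_workers)  # how we know all have finished
    for t in threads:
        t.join()
    return totals
```

For example, `run_two_phase(list(range(10)))` gives every worker the same total of 45. The sketch also shows the "be careful" point: if one worker died before reaching `barrier.wait()`, the others would block forever; `Barrier.wait` accepts a `timeout`, after which it raises `BrokenBarrierError` instead of hanging.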

Patterns for Parallelism
• Parallel computing has been around for decades
• Here are some “design patterns” …

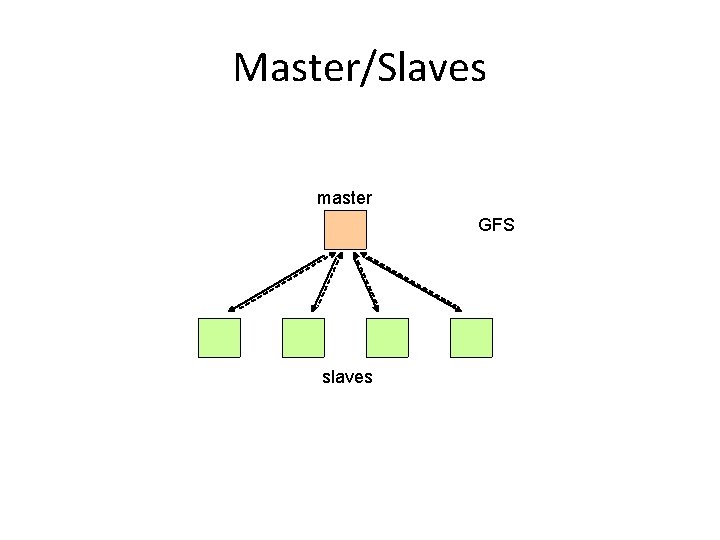

Master/Slaves
(Figure: one master coordinating several slaves; example: GFS.)
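A master/slaves arrangement answers several of the earlier parallelization questions directly: the master decides which worker gets each unit, hands out extra units when there are more units than workers, and knows the job is done once it has collected one result per unit. The sketch below is illustrative only; `master`, `slave`, and the default `task` are hypothetical names, and threads with per-slave inboxes stand in for separate machines.

```python
import queue
import threading


def master(work_units, n_slaves=3, task=lambda x: x * x):
    """The master assigns each unit to a specific slave and gathers results."""
    inboxes = [queue.Queue() for _ in range(n_slaves)]  # one private inbox per slave
    results = queue.Queue()                             # shared channel back to the master
    STOP = object()                                     # sentinel meaning "no more work"

    def slave(inbox):
        while True:
            item = inbox.get()
            if item is STOP:
                return
            idx, unit = item
            results.put((idx, task(unit)))

    slaves = [threading.Thread(target=slave, args=(box,)) for box in inboxes]
    for s in slaves:
        s.start()
    # More units than slaves: assign round-robin, so each slave gets several.
    for idx, unit in enumerate(work_units):
        inboxes[idx % n_slaves].put((idx, unit))
    for box in inboxes:
        box.put(STOP)
    # The master knows all work is finished once it holds one result per unit.
    collected = dict(results.get() for _ in range(len(work_units)))
    for s in slaves:
        s.join()
    return [collected[i] for i in range(len(work_units))]
```

Because the master fixes the assignment up front, a slow slave delays its own units; the work-queue pattern lets idle workers pull the next item instead.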

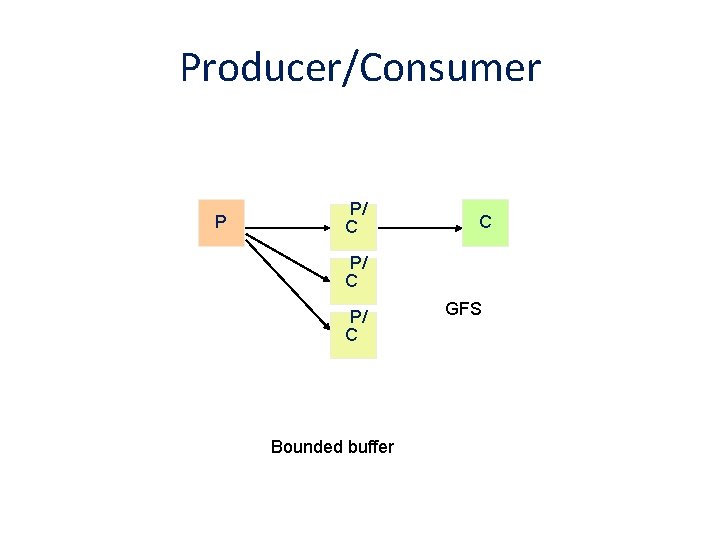

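Producer/Consumer
(Figure: a producer (P), intermediate stages that are both consumer and producer (P/C), and a final consumer (C), linked by bounded buffers; example: GFS.)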
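A bounded buffer can be built from one lock and two condition variables: producers wait while the buffer is full, consumers wait while it is empty. The sketch below is a minimal illustration with hypothetical names (`BoundedBuffer`, `run_pipeline`) and a `None` sentinel to mark end of stream; Python's standard `queue.Queue(maxsize=...)` already provides this blocking behavior, so the class is written out only to show the mechanism.

```python
import threading
from collections import deque


class BoundedBuffer:
    """Fixed-capacity FIFO: put blocks when full, get blocks when empty."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = deque()
        lock = threading.Lock()
        self.not_full = threading.Condition(lock)   # both conditions share one lock
        self.not_empty = threading.Condition(lock)

    def put(self, item):
        with self.not_full:
            while len(self.items) >= self.capacity:  # re-check after every wakeup
                self.not_full.wait()
            self.items.append(item)
            self.not_empty.notify()                  # wake one waiting consumer

    def get(self):
        with self.not_empty:
            while not self.items:
                self.not_empty.wait()
            item = self.items.popleft()
            self.not_full.notify()                   # wake one waiting producer
            return item


def run_pipeline(n_items=10, capacity=2):
    buf = BoundedBuffer(capacity)
    consumed = []

    def producer():
        for i in range(n_items):
            buf.put(i)
        buf.put(None)  # sentinel: end of stream

    def consumer():
        while True:
            item = buf.get()
            if item is None:
                return
            consumed.append(item)

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed
```

A P/C stage from the figure would be a thread that calls `get` on one buffer and `put` on the next, so stages run concurrently while each buffer caps how far a fast producer can run ahead.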

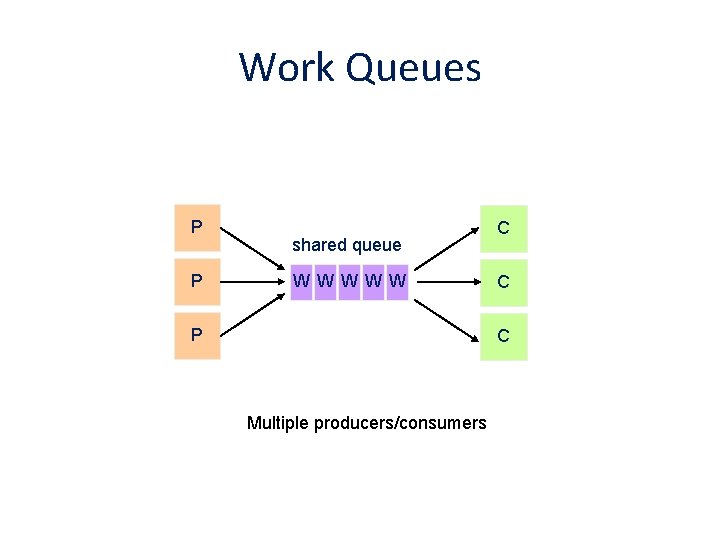

Work Queues
(Figure: multiple producers (P) add work items (W) to a shared queue, from which multiple consumers (C) take them.)
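With a shared work queue, producers and consumers never address each other directly: producers enqueue items, and whichever consumer is free takes the next one, which balances load automatically. This is a sketch under assumptions: `work_queue` is a hypothetical name, the "work" just encodes which producer made each item, and one `None` sentinel per consumer is used to shut the consumers down after all producers have finished.

```python
import queue
import threading


def work_queue(n_producers=3, n_consumers=4, items_per_producer=5):
    """Multiple producers and consumers share one thread-safe queue."""
    tasks = queue.Queue()            # does its own internal locking
    results = []
    results_lock = threading.Lock()

    def producer(pid):
        for i in range(items_per_producer):
            tasks.put((pid, i))

    def consumer():
        while True:
            task = tasks.get()
            if task is None:         # sentinel: no more work
                return
            pid, i = task
            with results_lock:
                results.append(pid * 100 + i)  # hypothetical "processing"

    consumers = [threading.Thread(target=consumer) for _ in range(n_consumers)]
    producers = [threading.Thread(target=producer, args=(p,)) for p in range(n_producers)]
    for t in consumers + producers:
        t.start()
    for t in producers:
        t.join()                     # every real task is now enqueued
    for _ in consumers:
        tasks.put(None)              # one sentinel per consumer, after all tasks
    for t in consumers:
        t.join()
    return sorted(results)
```

Enqueuing the sentinels only after every producer has joined guarantees that, in FIFO order, all real tasks are taken before any consumer sees a sentinel.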

Rubber Meets Road
• From patterns to implementation:
  – pthreads, OpenMP for multi-threaded programming
  – MPI (Message Passing Interface) for cluster computing
  – …
• The reality:
  – Lots of one-off solutions, custom code
  – Write your own dedicated library, then program with it
  – Burden on the programmer to explicitly manage everything
• MapReduce to the rescue!

Parallelization
• MapReduce: the “back-end” of cloud computing
  – Batch-oriented processing of large datasets
  – Highly parallel: solve the problem in parallel, then reduce (e.g., count words in a document)
• Hadoop MapReduce: a programming model and software framework
  – for writing applications that rapidly process vast amounts of data in parallel on large clusters of compute nodes
• Ajax: the “front-end” of cloud computing
  – Highly interactive Web-based applications
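The word-count example can be expressed in the map → shuffle → reduce shape of the MapReduce model. This is a sequential, single-process sketch with hypothetical function names, not Hadoop's actual Java API: in Hadoop, many mappers and reducers run on different nodes and the framework itself performs the shuffle that groups intermediate pairs by key.

```python
from collections import defaultdict
from itertools import chain


def map_phase(doc_id, text):
    """Mapper: emit (word, 1) for every word in one document."""
    for word in text.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group intermediate values by key (done by the framework in Hadoop)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(word, counts):
    """Reducer: sum all counts emitted for one word."""
    return word, sum(counts)


def word_count(documents):
    intermediate = chain.from_iterable(
        map_phase(doc_id, text) for doc_id, text in documents.items()
    )
    grouped = shuffle(intermediate)
    return dict(reduce_phase(w, c) for w, c in sorted(grouped.items()))


docs = {"d1": "the quick brown fox", "d2": "the lazy dog and the fox"}
print(word_count(docs))
# {'and': 1, 'brown': 1, 'dog': 1, 'fox': 2, 'lazy': 1, 'quick': 1, 'the': 3}
```

Each `map_phase` call touches only its own document and each `reduce_phase` call only its own key, which is what lets a framework run them in parallel without the explicit locking of the earlier patterns.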
