Intro to InterProcessor Communications IPC Multicore Training Agenda

- Slides: 45

Intro to: Inter-Processor Communications (IPC)

Agenda
- Motivation
- IPC library
- MsgCom
- Demos and examples

IPC Challenges
- Cooperation among multiple cores requires an efficient way to exchange data and messages.
- Scaling the problem up: from 2 to 12 cores in a device, plus the ability to connect multiple devices.
- Efficiency: the scheme should not cost a lot in terms of CPU cycles.
- Ease of use: clear, standard APIs.
- The usual trade-offs: performance (speed, flexibility) versus cost (complexity, more resources).

Architecture Support for IPC
- Shared memory: MSMC memory or DDR
- IPC register set that provides hardware interrupts to cores
- Multicore Navigator
- Various peripherals for communication between devices

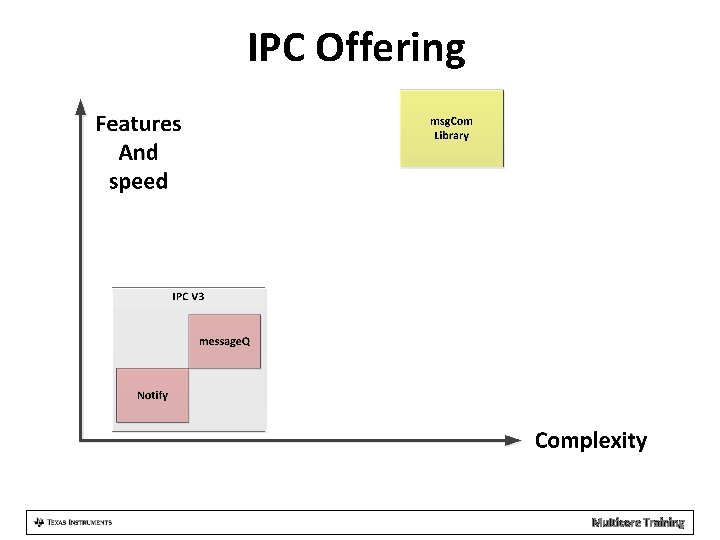

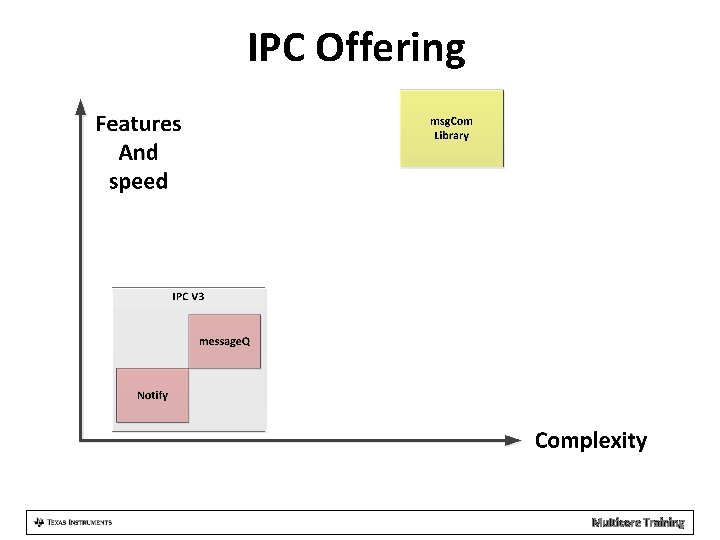

IPC Offering

KeyStone Technologies (1)
- IPCv3: library based on shared memory
  - DSP: must be built with SYS/BIOS
  - ARM: Linux, from user mode
  - Designed for moving messages and short data
  - Called “the control path” because MessageQ is the “slow” path for data and Notify is limited to 32-bit messages
  - Requires SYS/BIOS on the DSP side
  - Compatible with older devices (same API)

KeyStone Technologies (2)
- MsgCom: library based on the Multicore Navigator queues and logic
  - DSP: can work even without an operating system
  - ARM: Linux library, from user mode
  - Moves data fast between ARM and DSP, and from DSP to DSP, with minimal CPU intervention
  - Does not require BIOS
  - Supports many data-movement features

Agenda
- Basic Concepts
- IPC library
- MsgCom
- Demos and examples

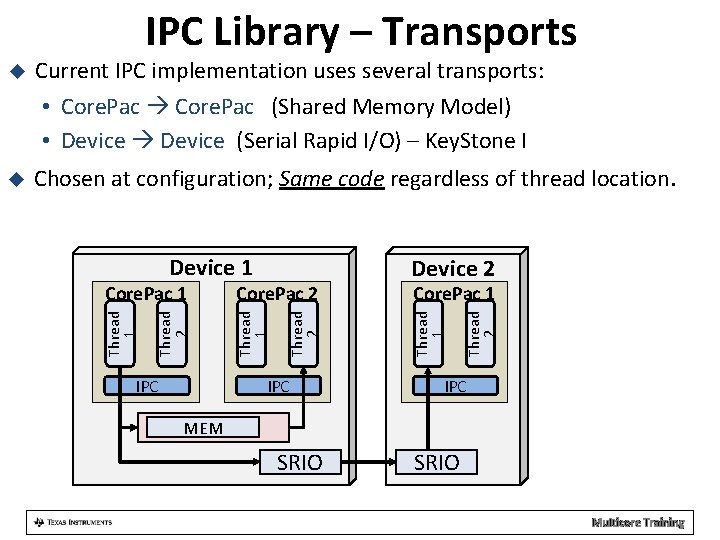

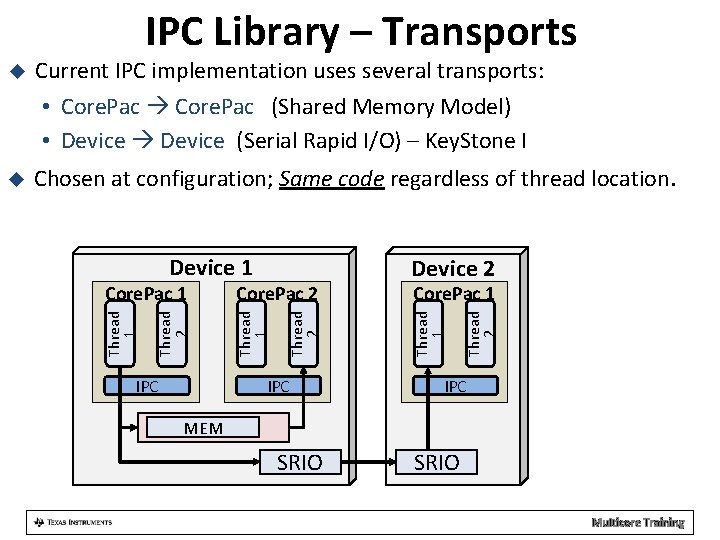

IPC Library: Transports
The transport is chosen at configuration time; the application code is the same regardless of where the threads are located. The current IPC implementation uses several transports:
- CorePac (shared memory model)
- Device (Serial RapidIO): KeyStone I only

(Figure: Thread 1 and Thread 2 on CorePac 1 and CorePac 2 of Device 1 and Device 2, connected through IPC over shared memory (MEM) and SRIO.)
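
The idea that the transport is fixed at configuration time while the application code stays the same can be pictured as a function-pointer table. The C below is a hypothetical sketch, not TI IPC code: Transport, configure_routes, app_send, the stub transports, and the rule that processor IDs below a given count are local CorePacs are all invented for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical transport interface; not the TI IPC API. */
typedef struct {
    const char *name;
    int (*put)(int dstProcId, const void *msg, size_t len);
} Transport;

static int shm_calls;   /* messages routed to the shared-memory stub */
static int srio_calls;  /* messages routed to the SRIO stub */

static int shm_put(int dstProcId, const void *msg, size_t len)
{
    (void)dstProcId; (void)msg; (void)len;
    shm_calls++;        /* a real transport would write to shared memory */
    return 0;
}

static int srio_put(int dstProcId, const void *msg, size_t len)
{
    (void)dstProcId; (void)msg; (void)len;
    srio_calls++;       /* a real transport would send a packet over SRIO */
    return 0;
}

static const Transport shmTransport  = { "shared-memory", shm_put };
static const Transport srioTransport = { "srio",          srio_put };

#define NUM_PROCS 16
static const Transport *routeTable[NUM_PROCS];

/* "Configuration": assumed rule that processor IDs below localProcCount are
 * CorePacs on this device and the rest are on a remote device. */
void configure_routes(int localProcCount)
{
    for (int p = 0; p < NUM_PROCS; p++)
        routeTable[p] = (p < localProcCount) ? &shmTransport : &srioTransport;
}

/* Application code: the same call whether the peer is local or remote. */
int app_send(int dstProcId, const void *msg, size_t len)
{
    assert(dstProcId >= 0 && dstProcId < NUM_PROCS && routeTable[dstProcId]);
    return routeTable[dstProcId]->put(dstProcId, msg, len);
}

const char *route_name(int dstProcId)
{
    return routeTable[dstProcId]->name;
}
```

Only configure_routes knows which transport serves which processor; app_send is identical for local and remote peers, which mirrors the point that only the configuration changes.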

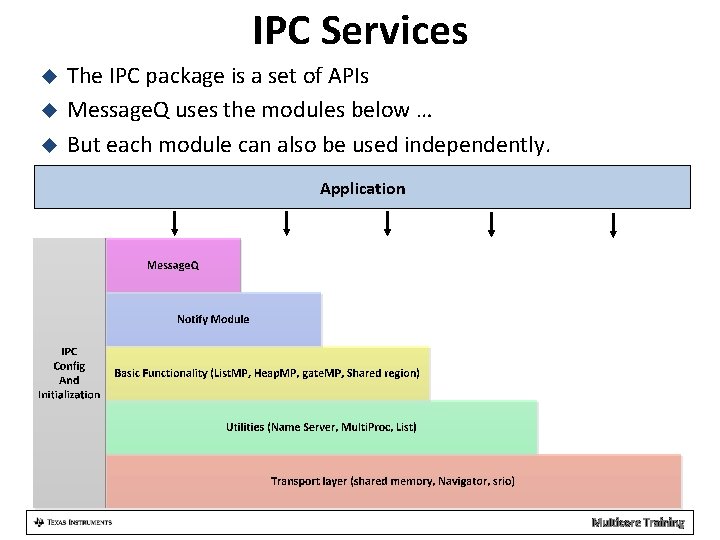

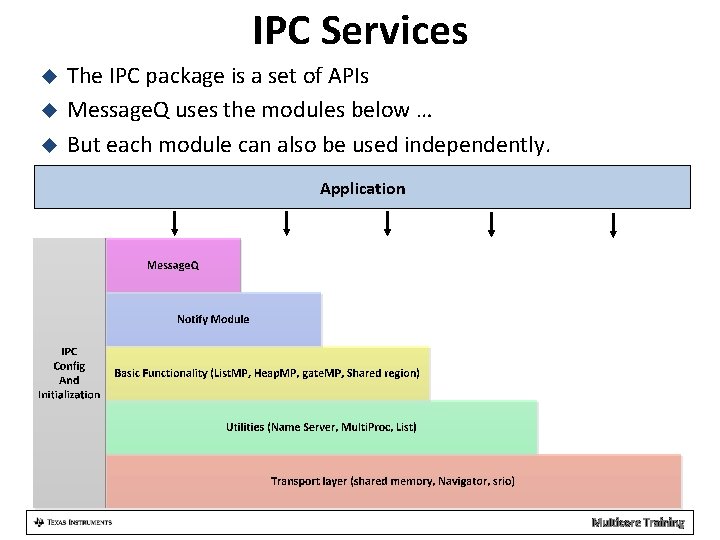

IPC Services
The IPC package is a set of APIs. MessageQ uses the modules below, but each module can also be used independently.

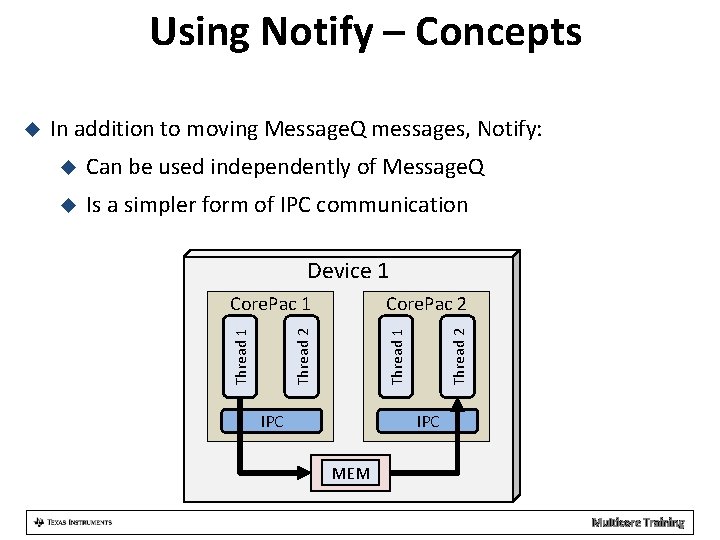

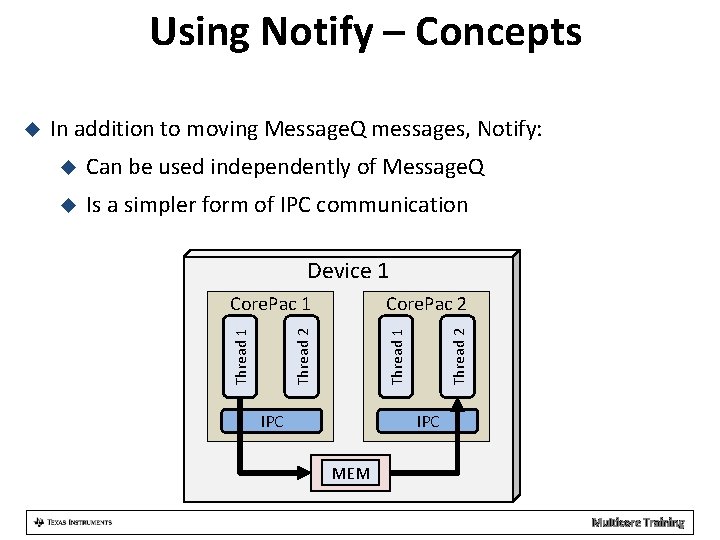

Using Notify: Concepts
In addition to moving MessageQ messages, Notify:
- Can be used independently of MessageQ
- Is a simpler form of IPC communication

(Figure: Thread 1 and Thread 2 on CorePac 1 and CorePac 2 of Device 1, communicating through IPC over shared memory (MEM).)

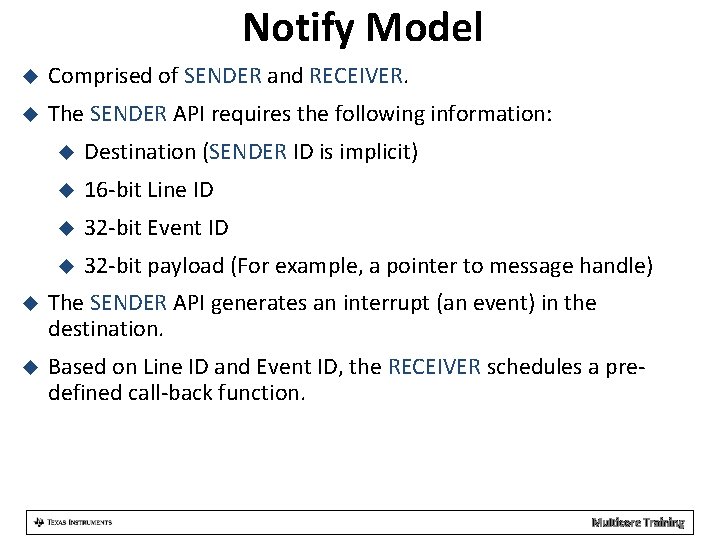

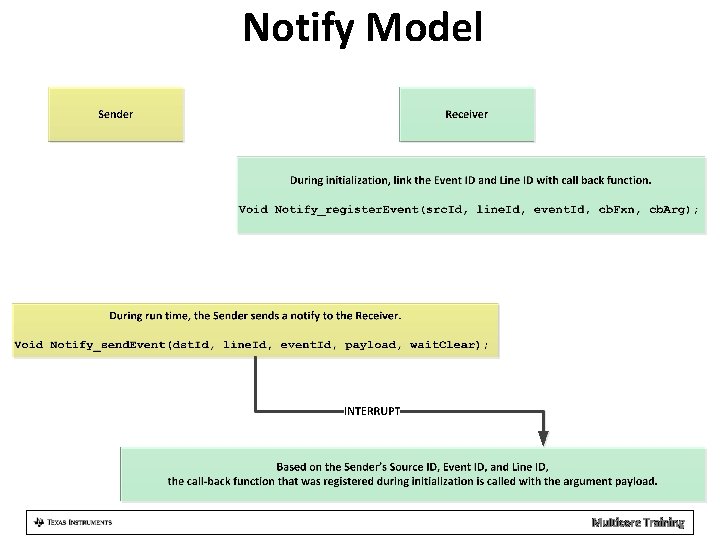

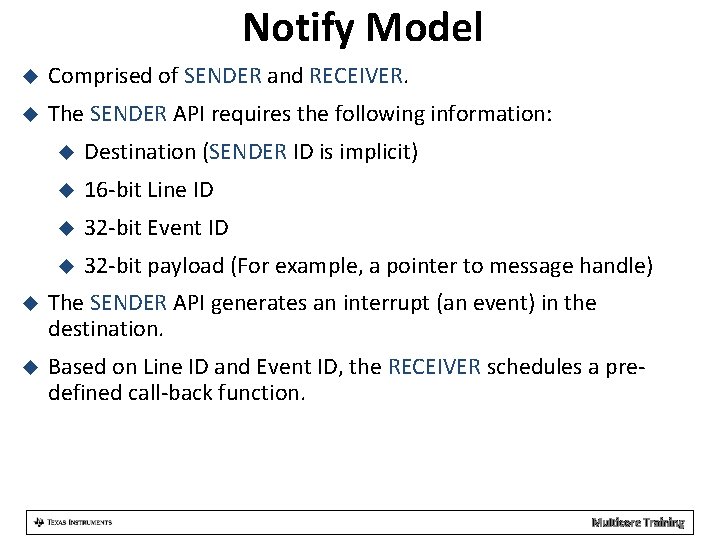

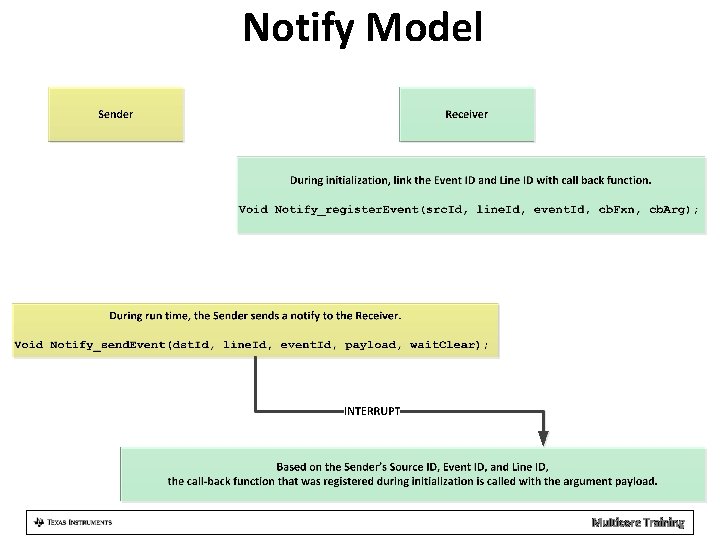

Notify Model
- Comprises a SENDER and a RECEIVER.
- The SENDER API requires the following information:
  - Destination (the SENDER ID is implicit)
  - 16-bit Line ID
  - 32-bit Event ID
  - 32-bit payload (for example, a pointer to a message handle)
- The SENDER API generates an interrupt (an event) in the destination.
- Based on the Line ID and Event ID, the RECEIVER schedules a predefined callback function.
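
The receiver side of this model (look up the callback by Line ID and Event ID, then call it with the sender's ID and the payload) can be sketched as a dispatch table. This is a hypothetical single-process C model, not the TI Notify module: notify_register, notify_dispatch, LastEvent, and the limits of 2 lines and 32 events per line are assumptions. In the real system the dispatch would run from the interrupt raised by the sender.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical callback type, shaped like the slide's parameters. */
typedef void (*NotifyCb)(uint16_t procId, uint16_t lineId, uint32_t eventId,
                         void *arg, uint32_t payload);

#define MAX_LINES  2    /* assumption */
#define MAX_EVENTS 32   /* assumption: event IDs 0..31 */

typedef struct {
    NotifyCb fxn;
    void    *arg;
} Registration;

static Registration table[MAX_LINES][MAX_EVENTS];   /* zero = unregistered */

int notify_register(uint16_t lineId, uint32_t eventId, NotifyCb fxn, void *arg)
{
    if (lineId >= MAX_LINES || eventId >= MAX_EVENTS || fxn == NULL)
        return -1;
    table[lineId][eventId].fxn = fxn;
    table[lineId][eventId].arg = arg;
    return 0;
}

/* Stands in for the interrupt handler on the destination core. */
int notify_dispatch(uint16_t srcProcId, uint16_t lineId, uint32_t eventId,
                    uint32_t payload)
{
    if (lineId >= MAX_LINES || eventId >= MAX_EVENTS)
        return -1;
    Registration *r = &table[lineId][eventId];
    if (r->fxn == NULL)
        return -1;                      /* nobody registered for this event */
    r->fxn(srcProcId, lineId, eventId, r->arg, payload);
    return 0;
}

/* Example callback: remember the last sender and payload. */
typedef struct { uint16_t procId; uint32_t payload; int count; } LastEvent;

void record_cb(uint16_t procId, uint16_t lineId, uint32_t eventId,
               void *arg, uint32_t payload)
{
    (void)lineId; (void)eventId;
    LastEvent *last = arg;
    last->procId = procId;
    last->payload = payload;
    last->count++;
}
```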

Notify Model

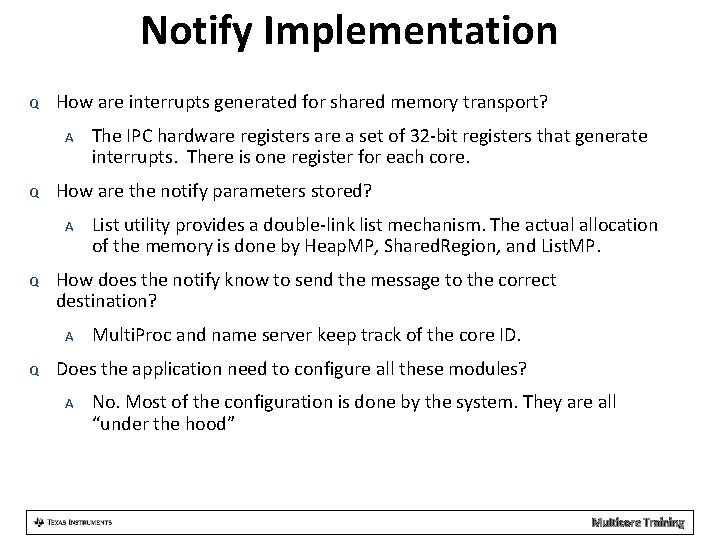

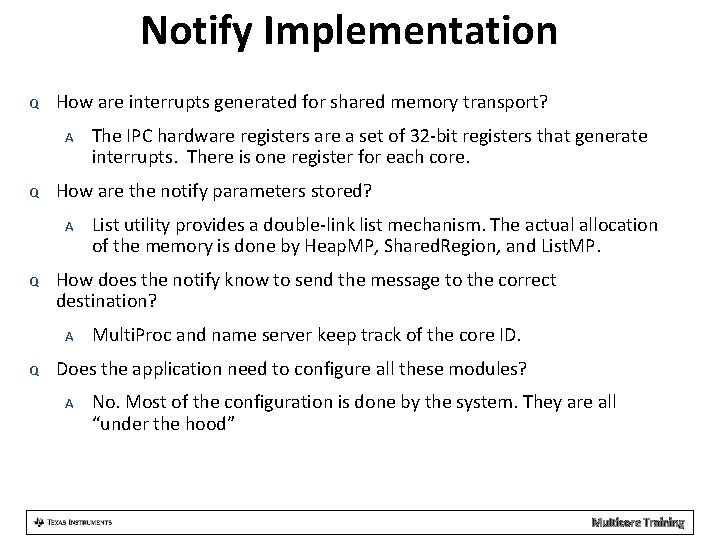

Notify Implementation
- Q: How are interrupts generated for the shared memory transport?
  - A: The IPC hardware registers are a set of 32-bit registers that generate interrupts. There is one register for each core.
- Q: How are the Notify parameters stored?
  - A: The List utility provides a doubly linked list mechanism. The actual allocation of the memory is done by HeapMP, SharedRegion, and ListMP.
- Q: How does Notify know to send the message to the correct destination?
  - A: MultiProc and NameServer keep track of the core IDs.
- Q: Does the application need to configure all these modules?
  - A: No. Most of the configuration is done by the system; these modules all work “under the hood.”

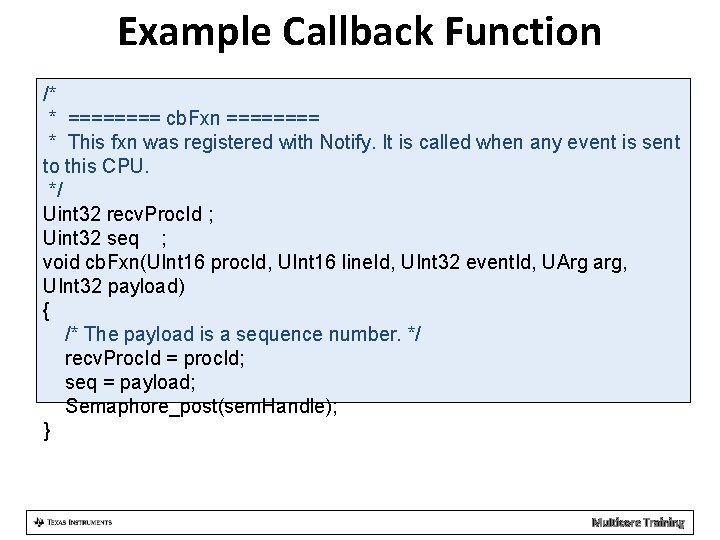

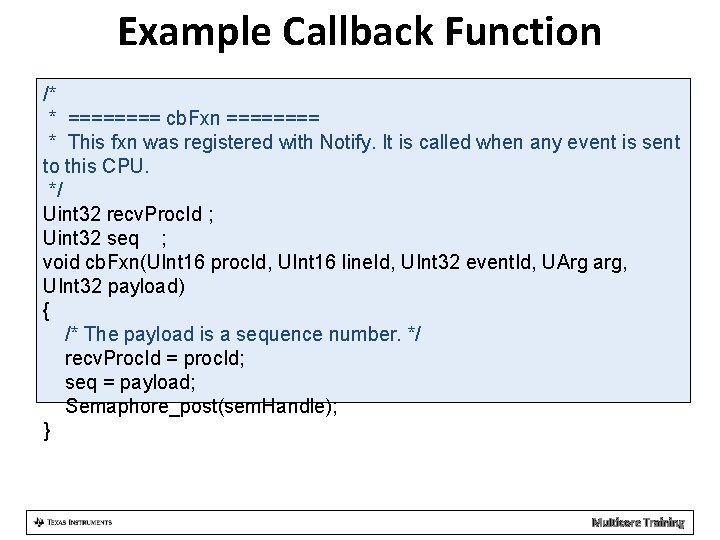

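Example Callback Function

```c
#include <xdc/std.h>
#include <ti/sysbios/knl/Semaphore.h>

/* Assumed to be created elsewhere by the application (not shown on the slide). */
extern Semaphore_Handle semHandle;

UInt32 recvProcId;   /* ID of the processor that sent the last event */
UInt32 seq;          /* last payload received */

/*
 *  ======== cbFxn ========
 *  This fxn was registered with Notify. It is called when any event is
 *  sent to this CPU.
 */
Void cbFxn(UInt16 procId, UInt16 lineId, UInt32 eventId, UArg arg,
           UInt32 payload)
{
    /* The payload is a sequence number. */
    recvProcId = procId;
    seq = payload;
    Semaphore_post(semHandle);   /* wake the task waiting for this event */
}
```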

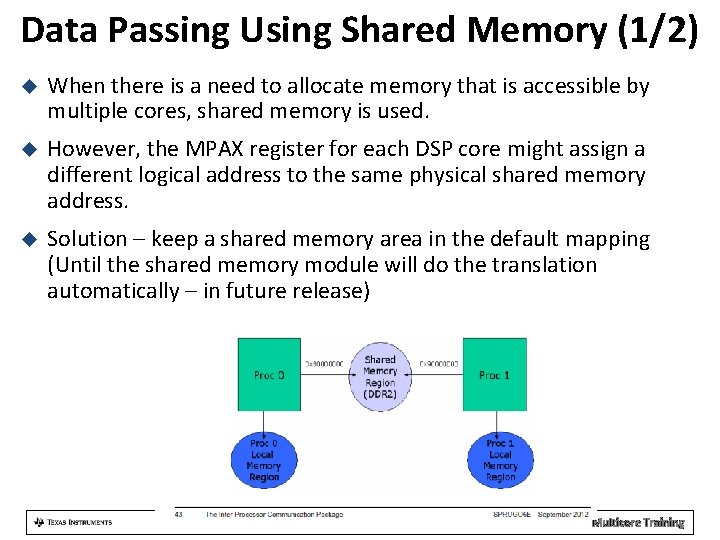

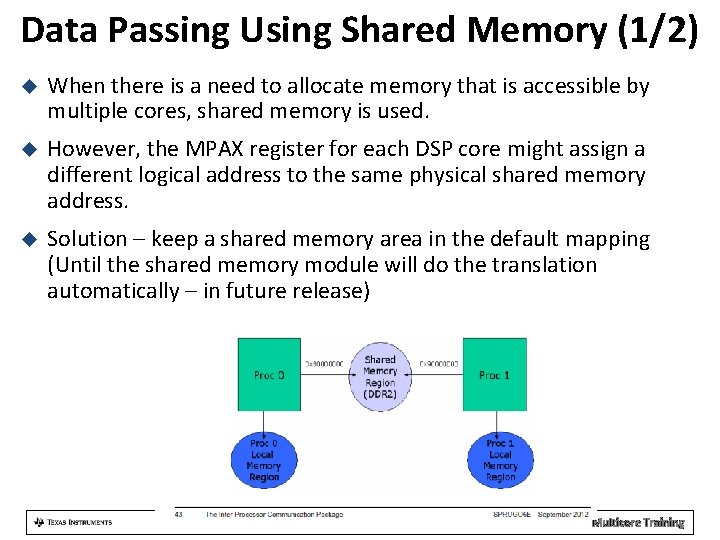

Data Passing Using Shared Memory (1/2)
When memory must be accessible by multiple cores, shared memory is used. However, the MPAX registers of each DSP core might map the same physical shared memory address to a different logical address. Solution: keep the shared memory area in the default mapping (until a future release in which the shared memory module performs the translation automatically).
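
One way to see why a raw pointer cannot simply be handed to another core, and what an automatic translation would do, is to pass a (region ID, offset) pair and let each core add its own base address. The sketch below is hypothetical: the names, the 24-bit offset field, and the example base addresses are assumptions. It is similar in spirit to the shared-region pointers of the IPC SharedRegion module, but it is not that module's API or encoding.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint32_t SrPtr;                 /* portable "shared pointer" */
#define SR_OFFSET_BITS 24               /* assumption: each region <= 16 MB */
#define SR_OFFSET_MASK ((1u << SR_OFFSET_BITS) - 1u)
#define SR_INVALID     0xFFFFFFFFu

typedef struct {
    uintptr_t localBase;                /* where THIS core sees the region */
    size_t    len;                      /* region length in bytes */
} RegionView;

/* Sender: local address -> (regionId, offset), using the sender's view.
 * regionId must index a valid entry of 'views'. */
SrPtr to_sr_ptr(const RegionView *views, unsigned regionId, uintptr_t local)
{
    const RegionView *v = &views[regionId];
    assert(v->len <= (size_t)SR_OFFSET_MASK + 1u);
    if (local < v->localBase || local - v->localBase >= v->len)
        return SR_INVALID;              /* address is not in this region */
    return ((SrPtr)regionId << SR_OFFSET_BITS) | (SrPtr)(local - v->localBase);
}

/* Receiver: (regionId, offset) -> local address, using the receiver's view. */
uintptr_t from_sr_ptr(const RegionView *views, SrPtr sp)
{
    if (sp == SR_INVALID)
        return 0;
    return views[sp >> SR_OFFSET_BITS].localBase + (sp & SR_OFFSET_MASK);
}
```

For example, if core 0 sees region 0 at 0x0C000000 and core 1 sees the same physical memory at 0x90000000, the address 0x0C000400 on core 0 travels as offset 0x400 and arrives as 0x90000400 on core 1.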

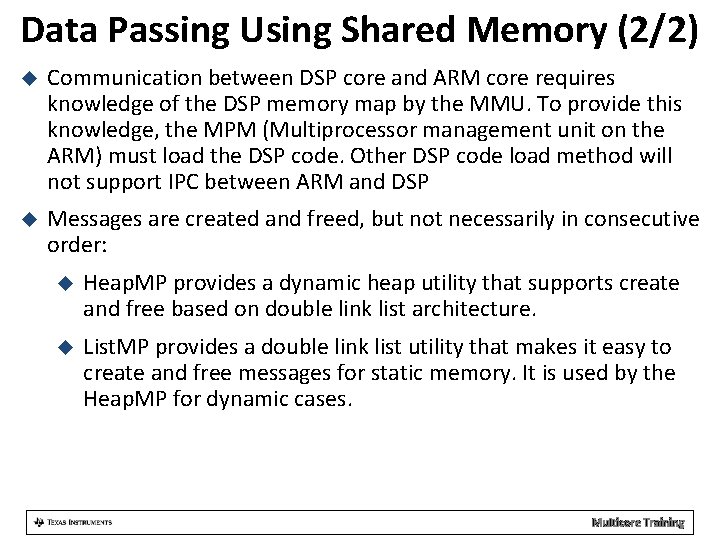

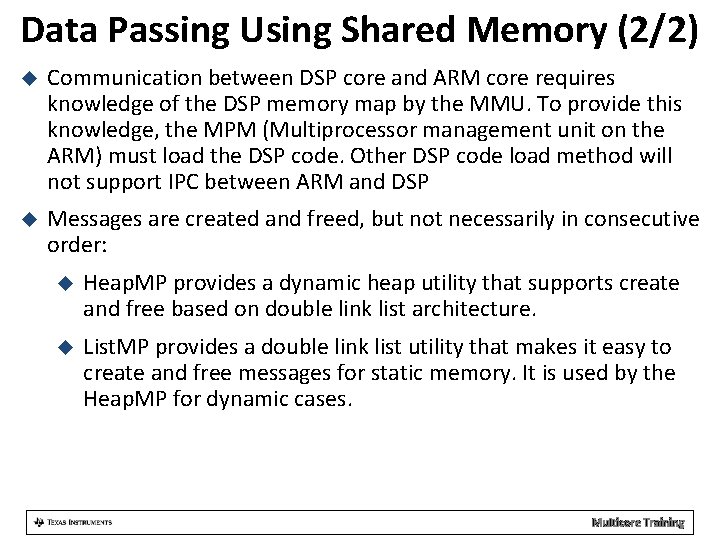

Data Passing Using Shared Memory (2/2)
Communication between a DSP core and the ARM core requires the MMU to know the DSP memory map. To provide this knowledge, the MPM (multiprocessor management unit on the ARM) must load the DSP code; other DSP code-loading methods do not support IPC between the ARM and the DSP.

Messages are created and freed, but not necessarily in consecutive order:
- HeapMP provides a dynamic heap utility that supports create and free, based on a doubly linked list architecture.
- ListMP provides a doubly linked list utility that makes it easy to create and free messages in static memory. HeapMP uses it for the dynamic case.
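
Freeing messages "not necessarily in consecutive order" is why a doubly linked list is convenient: any block can be unlinked in constant time, wherever it sits. Below is a hypothetical fixed-block pool in C that illustrates the idea. It is not HeapMP or ListMP; it runs in a single address space and omits the gate and cache maintenance a multicore version would need. Pool, pool_alloc, pool_free, and the block sizes are invented names and values.

```c
#include <assert.h>
#include <stddef.h>

typedef struct Node { struct Node *prev, *next; } Node;
typedef struct { Node head; } List;           /* circular, with a sentinel */

static void list_init(List *l) { l->head.prev = l->head.next = &l->head; }
static int  list_empty(const List *l) { return l->head.next == &l->head; }

static void list_push(List *l, Node *n)       /* insert at tail */
{
    n->prev = l->head.prev;
    n->next = &l->head;
    l->head.prev->next = n;
    l->head.prev = n;
}

static void list_unlink(Node *n)              /* O(1), from any position */
{
    n->prev->next = n->next;
    n->next->prev = n->prev;
    n->prev = n->next = n;
}

#define BLOCK_SIZE 64
#define NUM_BLOCKS 4

typedef struct {
    Node node;                                /* must be the first member */
    unsigned char data[BLOCK_SIZE];
} Block;

typedef struct {
    Block storage[NUM_BLOCKS];                /* would live in shared memory */
    List  freeList, usedList;
} Pool;

void pool_init(Pool *p)
{
    list_init(&p->freeList);
    list_init(&p->usedList);
    for (int i = 0; i < NUM_BLOCKS; i++)
        list_push(&p->freeList, &p->storage[i].node);
}

void *pool_alloc(Pool *p)
{
    if (list_empty(&p->freeList))
        return NULL;
    Node *n = p->freeList.head.next;          /* take the oldest free block */
    list_unlink(n);
    list_push(&p->usedList, n);
    return ((Block *)n)->data;
}

void pool_free(Pool *p, void *data)
{
    Block *b = (Block *)((unsigned char *)data - offsetof(Block, data));
    list_unlink(&b->node);                    /* no search, any order */
    list_push(&p->freeList, &b->node);
}
```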

MessageQ: Highest Layer API
- SINGLE reader, multiple WRITERS model (the READER owns the queue/mailbox)
- Supports structured sending/receiving of variable-length messages, which can include (pointers to) data
- Uses all of the IPC service layers along with IPC Configuration & Initialization
- The APIs do not change whether the message is between two threads:
  - On the same core
  - On two different cores
  - On two different devices
- The APIs do NOT change based on the transport (shared memory or SRIO); only the CFG (init) code changes
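
A "structured, variable-length message" can be pictured as a fixed header followed by a payload whose length is recorded in the header. The layout below is a hypothetical illustration: MsgHeader, its fields, msg_alloc, and msg_payload_len are assumptions, not the real MessageQ header format, and malloc stands in for the IPC heap.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

typedef struct {
    uint32_t msgSize;    /* header + payload, in bytes */
    uint16_t msgId;      /* application-defined message type */
    uint16_t dstQueue;   /* destination queue index */
} MsgHeader;

typedef struct {
    MsgHeader hdr;       /* first, so every message can be read as a header */
    uint8_t   payload[]; /* variable length (C99 flexible array member) */
} Msg;

Msg *msg_alloc(uint16_t msgId, uint16_t dstQueue, const void *data, uint32_t len)
{
    Msg *m = malloc(sizeof(Msg) + len);
    if (m == NULL)
        return NULL;
    m->hdr.msgSize  = (uint32_t)(sizeof(Msg) + len);
    m->hdr.msgId    = msgId;
    m->hdr.dstQueue = dstQueue;
    memcpy(m->payload, data, len);
    return m;
}

/* Reader side: the payload length is recovered from the header alone. */
uint32_t msg_payload_len(const Msg *m)
{
    return m->hdr.msgSize - (uint32_t)sizeof(Msg);
}
```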

MessageQ and Messages
- Q: How does the writer connect with the reader queue?
  - A: MultiProc and NameServer keep track of queue names and core IDs.
- Q: What do we mean when we refer to structured messages with variable size?
  - A: Each message has a standard header and data. The header specifies the size of the payload.
- Q: What facilitates moving a message to the receiver queue?
  - A: This is done by the Notify API using the transport layer.
- Q: If there are multiple writers, how does the system prevent race conditions (e.g., two writers attempting to allocate the same memory)?
  - A: GateMP provides a hardware semaphore API to prevent race conditions.
- Q: How and where are messages allocated?
  - A: The List utility provides a doubly linked list mechanism. The actual allocation of the memory is done by HeapMP, SharedRegion, and ListMP.
- Q: Does the application need to configure all these modules?
  - A: No. Most of the configuration is done by the system. More details later.

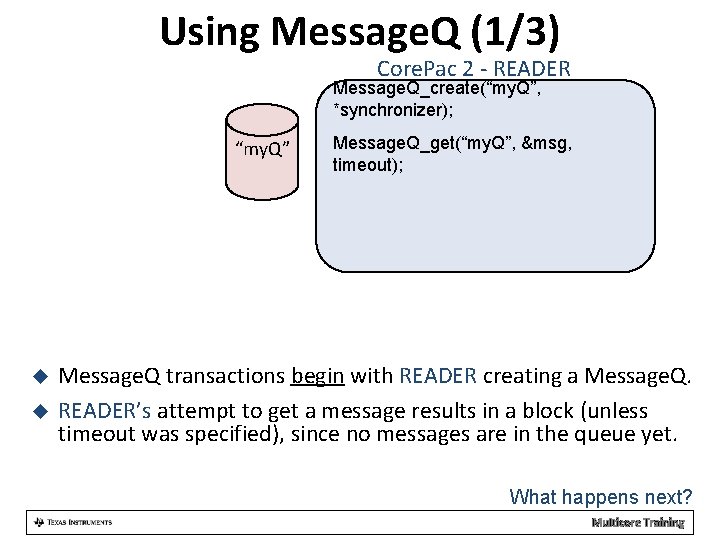

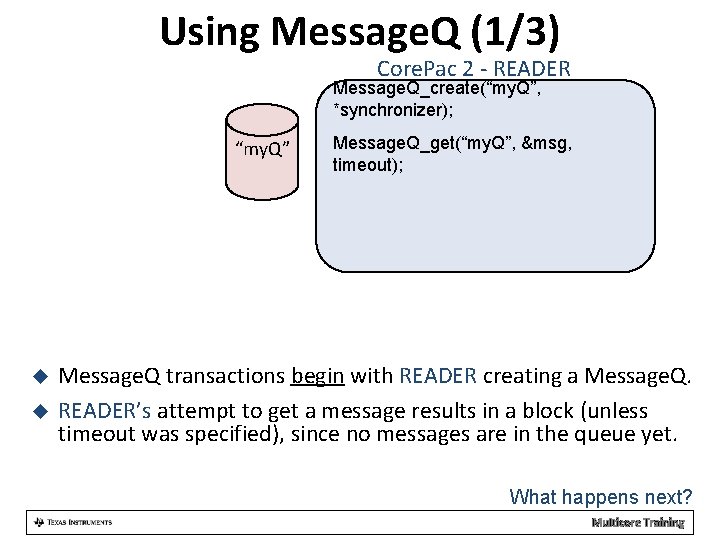

Using MessageQ (1/3)
CorePac 2 (READER):
- MessageQ_create("myQ", *synchronizer);
- MessageQ_get("myQ", &msg, timeout);

MessageQ transactions begin with the READER creating a MessageQ ("myQ"). The READER's attempt to get a message blocks (unless a timeout was specified), since there are no messages in the queue yet. What happens next?

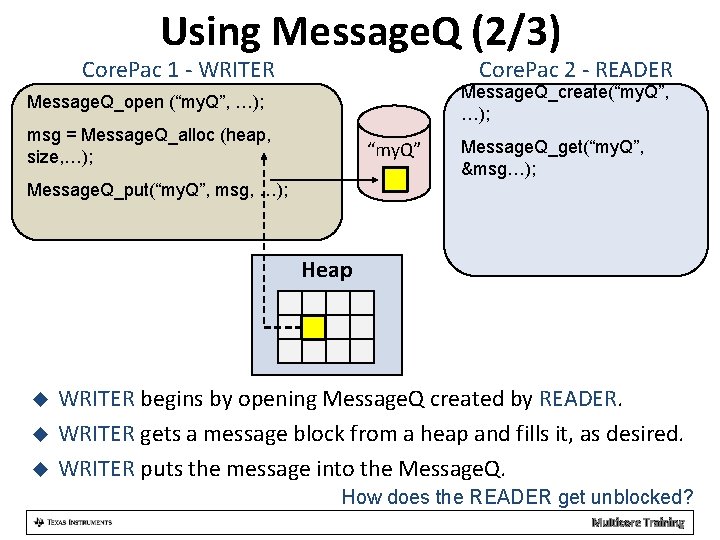

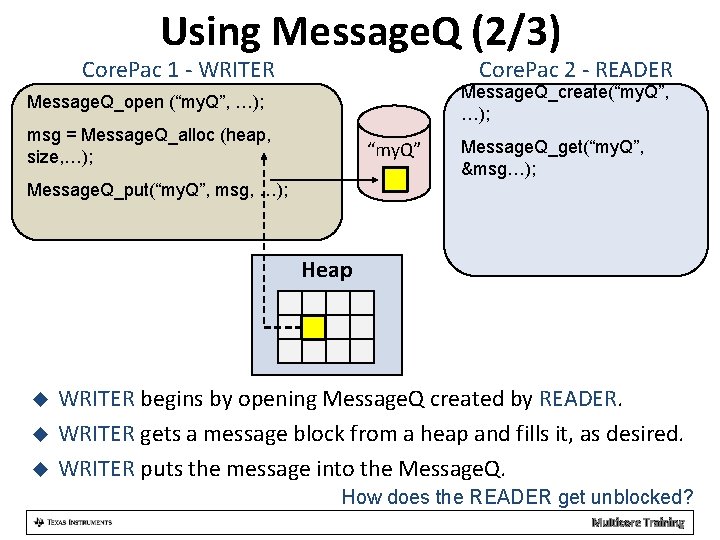

Using MessageQ (2/3)
CorePac 1 (WRITER):
- MessageQ_open("myQ", …);
- msg = MessageQ_alloc(heap, size, …);
- MessageQ_put("myQ", msg, …);

CorePac 2 (READER):
- MessageQ_create("myQ", …);
- MessageQ_get("myQ", &msg, …);

The WRITER begins by opening the MessageQ created by the READER. The WRITER gets a message block from a heap and fills it as desired, then puts the message into the MessageQ. How does the READER get unblocked?

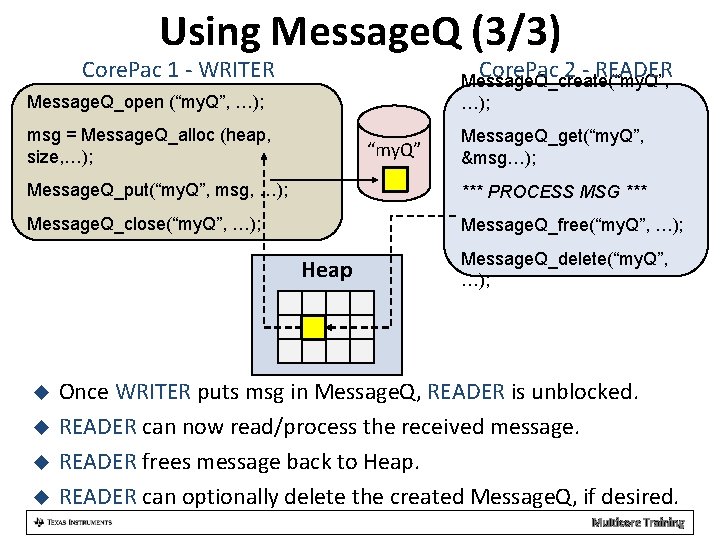

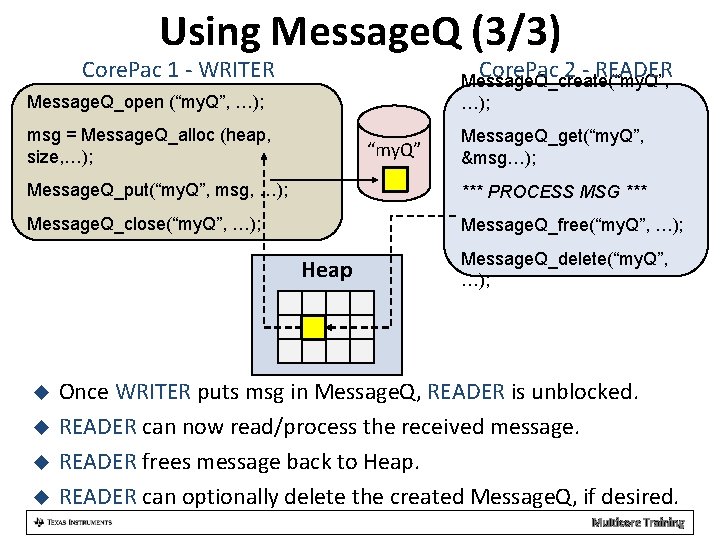

Using MessageQ (3/3)
CorePac 1 (WRITER):
- MessageQ_open("myQ", …);
- msg = MessageQ_alloc(heap, size, …);
- MessageQ_put("myQ", msg, …);
- MessageQ_close("myQ", …);

CorePac 2 (READER):
- MessageQ_create("myQ", …);
- MessageQ_get("myQ", &msg, …);
- (PROCESS MSG)
- MessageQ_free(msg);
- MessageQ_delete("myQ", …);

Once the WRITER puts msg in the MessageQ, the READER is unblocked. The READER can now read and process the received message, then frees the message back to the heap. The READER can optionally delete the MessageQ it created.
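
The create, open, put, get, and delete sequence on these three slides can be traced with a small single-process model. This C sketch is hypothetical (the simq_* names and fixed sizes are invented); it keeps only the naming and queueing behaviour, replaces the blocking MessageQ_get with a call that returns NULL when the queue is empty, and leaves heap allocation and freeing out.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_QUEUES 4
#define QUEUE_LEN  8
#define NAME_LEN   16

typedef struct {
    int   inUse;
    char  name[NAME_LEN];
    void *slots[QUEUE_LEN];     /* messages are passed by pointer */
    int   head, count;
} SimQueue;

static SimQueue queues[MAX_QUEUES];

/* READER: create a named queue; returns a queue id or -1. */
int simq_create(const char *name)
{
    for (int i = 0; i < MAX_QUEUES; i++) {
        if (!queues[i].inUse) {
            memset(&queues[i], 0, sizeof queues[i]);
            queues[i].inUse = 1;
            strncpy(queues[i].name, name, NAME_LEN - 1);
            return i;
        }
    }
    return -1;
}

/* WRITER: find the queue by name (the name-server role). */
int simq_open(const char *name)
{
    for (int i = 0; i < MAX_QUEUES; i++)
        if (queues[i].inUse && strcmp(queues[i].name, name) == 0)
            return i;
    return -1;
}

int simq_put(int qid, void *msg)
{
    assert(qid >= 0 && qid < MAX_QUEUES && queues[qid].inUse);
    SimQueue *q = &queues[qid];
    if (q->count == QUEUE_LEN)
        return -1;                              /* queue full */
    q->slots[(q->head + q->count) % QUEUE_LEN] = msg;
    q->count++;
    return 0;
}

/* READER: returns NULL when empty; a real MessageQ_get would block here
 * until a message arrives or the timeout expires. */
void *simq_get(int qid)
{
    assert(qid >= 0 && qid < MAX_QUEUES && queues[qid].inUse);
    SimQueue *q = &queues[qid];
    if (q->count == 0)
        return NULL;
    void *msg = q->slots[q->head];
    q->head = (q->head + 1) % QUEUE_LEN;
    q->count--;
    return msg;
}

void simq_delete(int qid) { queues[qid].inUse = 0; }
```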

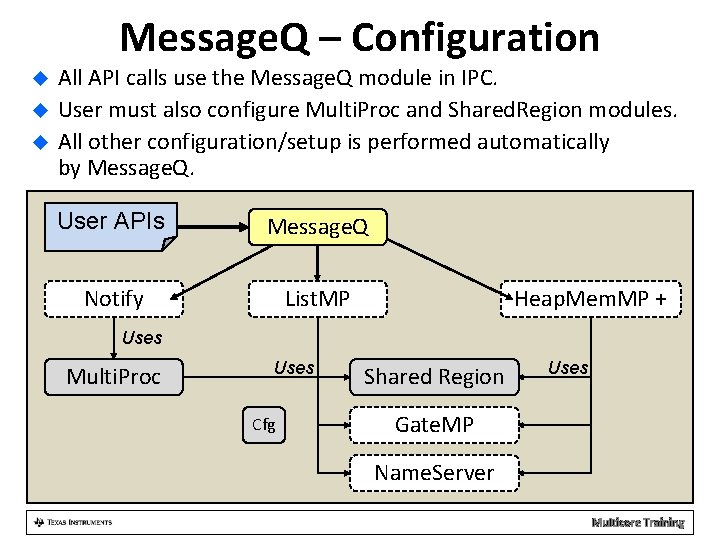

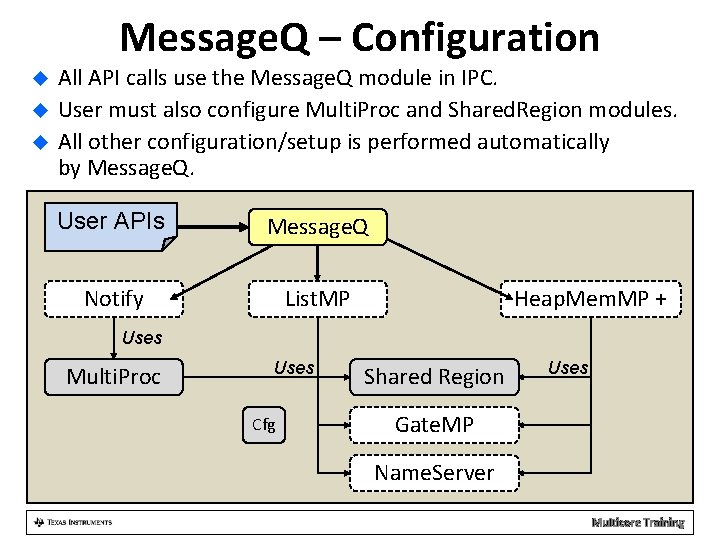

MessageQ: Configuration
All API calls use the MessageQ module in IPC. The user must also configure the MultiProc and SharedRegion modules. All other configuration/setup is performed automatically by MessageQ.

(Figure: module stack showing User APIs, MessageQ, Notify, ListMP, HeapMemMP, GateMP, and NameServer, with MultiProc and SharedRegion set up through Cfg.)

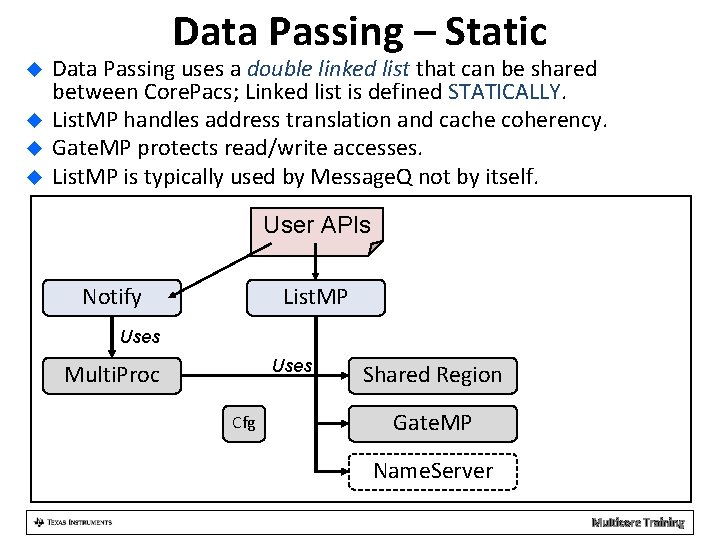

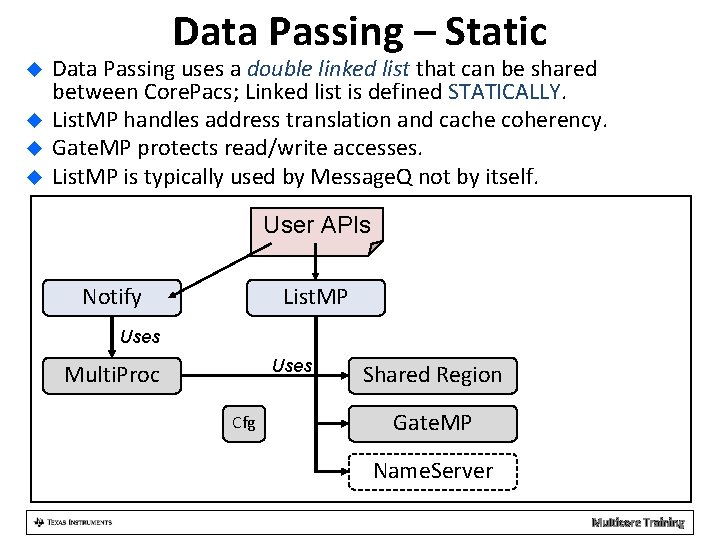

Data Passing: Static
Data passing uses a doubly linked list that can be shared between CorePacs; the linked list is defined STATICALLY. ListMP handles address translation and cache coherency. GateMP protects read/write accesses. ListMP is typically used by MessageQ, not by itself.

(Figure: module stack showing User APIs, Notify, ListMP, GateMP, and NameServer, with MultiProc and SharedRegion set up through Cfg.)
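
GateMP's role, serializing read/write accesses when several writers touch the same shared structure, can be imitated on a host with a mutex. In this hypothetical C sketch a pthread mutex stands in for GateMP and an array with a shared index stands in for the shared list; WRITERS, ITEMS_PER_WRITER, shared_put, and run_writers are invented names. Compile with -pthread on older toolchains.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define WRITERS          4
#define ITEMS_PER_WRITER 1000
#define CAPACITY         (WRITERS * ITEMS_PER_WRITER)

typedef struct {
    pthread_mutex_t gate;       /* stand-in for GateMP */
    int count;                  /* shared index: racy without the gate */
    int items[CAPACITY];
} SharedTable;

static SharedTable table = { PTHREAD_MUTEX_INITIALIZER, 0, {0} };

static int shared_put(int value)
{
    int ok = 0;
    pthread_mutex_lock(&table.gate);      /* "enter gate" */
    if (table.count < CAPACITY) {
        table.items[table.count++] = value;
        ok = 1;
    }
    pthread_mutex_unlock(&table.gate);    /* "leave gate" */
    return ok;
}

static void *writer(void *arg)
{
    int id = *(int *)arg;
    for (int i = 0; i < ITEMS_PER_WRITER; i++)
        shared_put(id * ITEMS_PER_WRITER + i);   /* unique value per item */
    return NULL;
}

/* Runs all writers to completion; returns how many items were stored. */
int run_writers(void)
{
    pthread_t threads[WRITERS];
    int ids[WRITERS];
    for (int w = 0; w < WRITERS; w++) {
        ids[w] = w;
        pthread_create(&threads[w], NULL, writer, &ids[w]);
    }
    for (int w = 0; w < WRITERS; w++)
        pthread_join(threads[w], NULL);
    return table.count;
}
```

Without the lock, two writers can read the same count and overwrite each other's slot; with it, every value lands exactly once.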

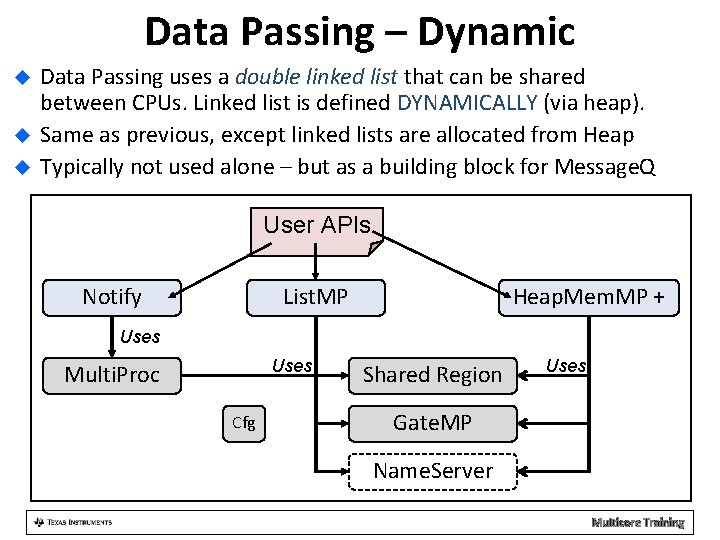

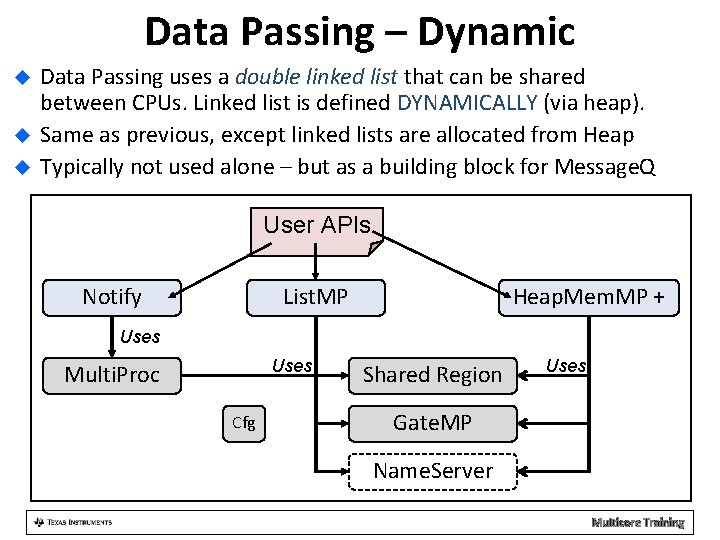

Data Passing: Dynamic
Data passing uses a doubly linked list that can be shared between CPUs; the linked list is defined DYNAMICALLY (via a heap). This is the same as the static case, except that the linked lists are allocated from a heap. It is typically not used alone, but as a building block for MessageQ.

(Figure: module stack showing User APIs, Notify, ListMP, HeapMemMP, GateMP, and NameServer, with MultiProc and SharedRegion set up through Cfg.)

More Information About MessageQ
- For the DSP, all structures and function descriptions are exposed to the user and can be found within the release: ipc_U_ZZ_YY_XX/docs/doxygen/html/_message_q_8h.html
- The IPC User Guide (for DSP and ARM) is in MCSDK_3_00_XX/ipc_3_XX_XX_XX/docs/IPC_Users_Guide.pdf

IPC Device to Device Using SRIO
Available only on KeyStone I (for now).

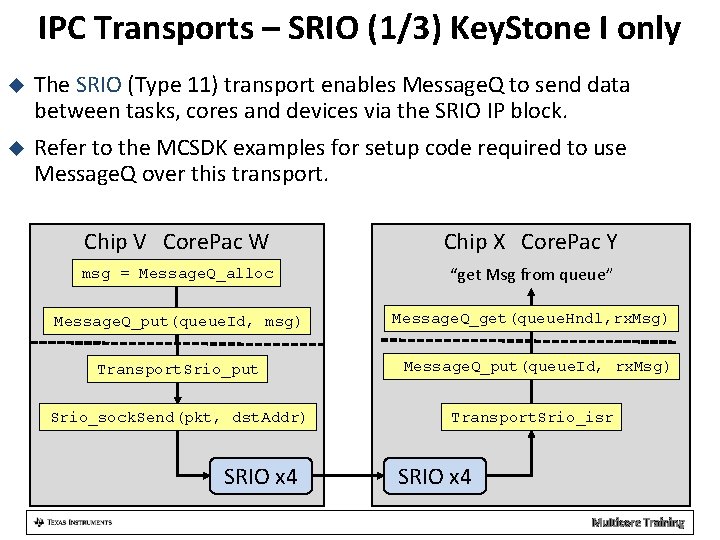

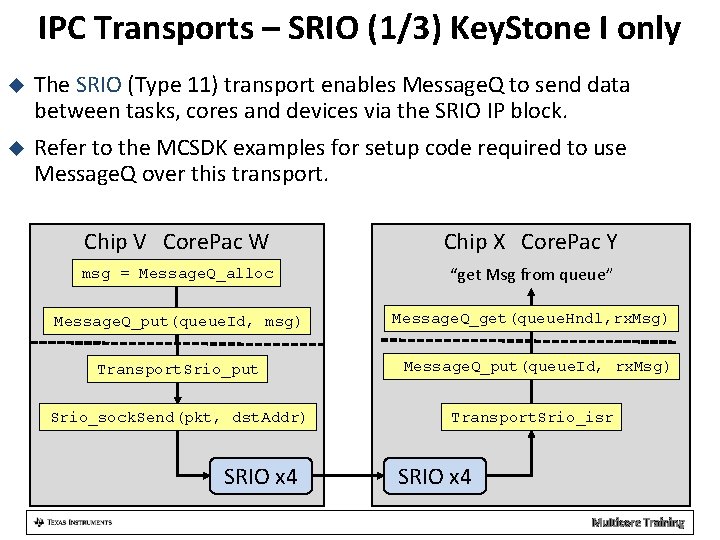

IPC Transports: SRIO (1/3), KeyStone I only
The SRIO (Type 11) transport enables MessageQ to send data between tasks, cores, and devices via the SRIO IP block. Refer to the MCSDK examples for the setup code required to use MessageQ over this transport.

(Figure: Chip V / CorePac W and Chip X / CorePac Y linked by SRIO x4. Send path: msg = MessageQ_alloc, MessageQ_put(queueId, msg), TransportSrio_put, Srio_sockSend(pkt, dstAddr). Receive path: TransportSrio_isr, MessageQ_put(queueId, rxMsg), then MessageQ_get(queueHndl, rxMsg) to “get Msg from queue”.)

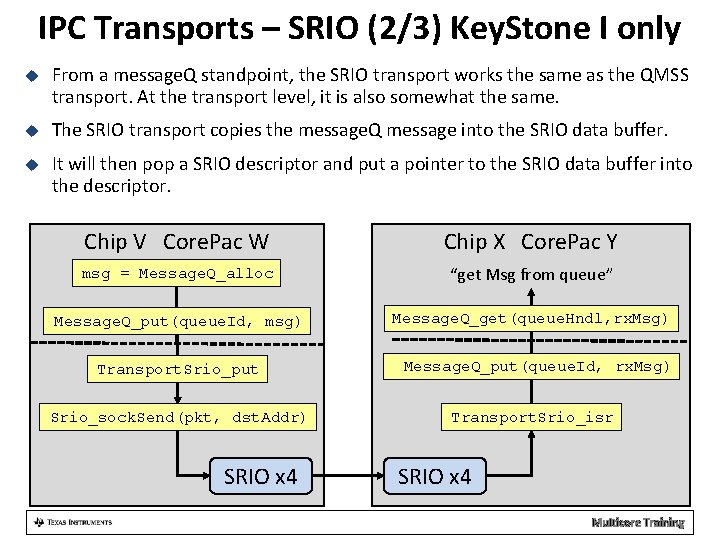

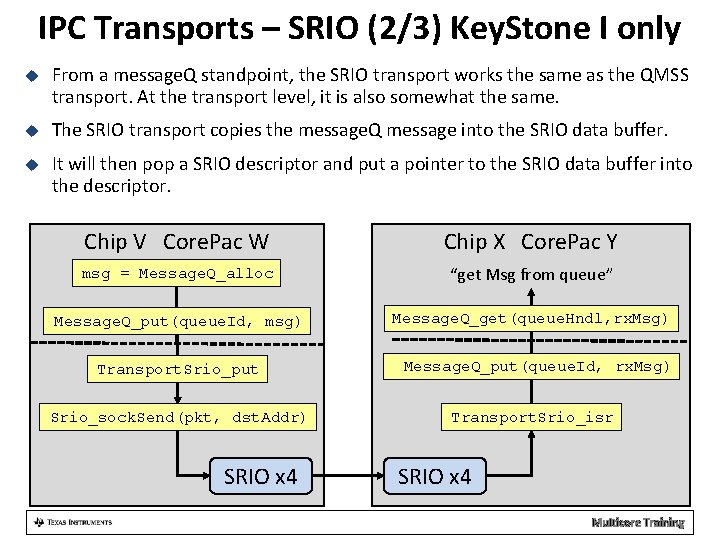

IPC Transports: SRIO (2/3), KeyStone I only
From a MessageQ standpoint, the SRIO transport works the same as the QMSS transport, and at the transport level it is also similar. The SRIO transport copies the MessageQ message into the SRIO data buffer. It then pops an SRIO descriptor and puts a pointer to the SRIO data buffer into the descriptor.

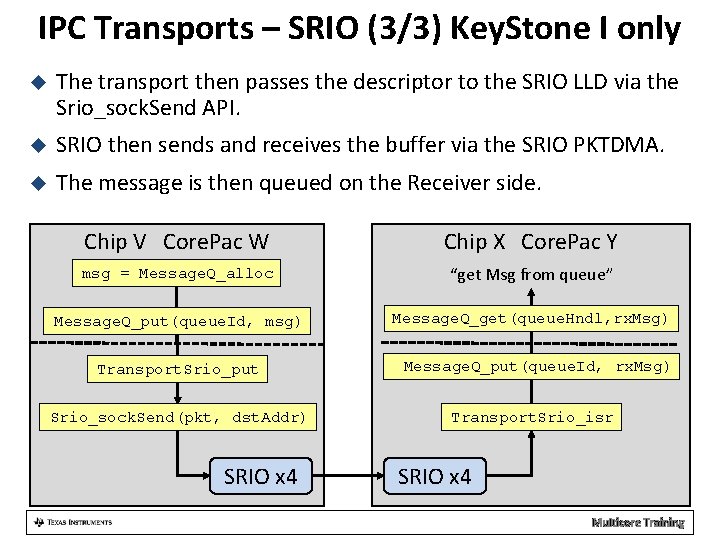

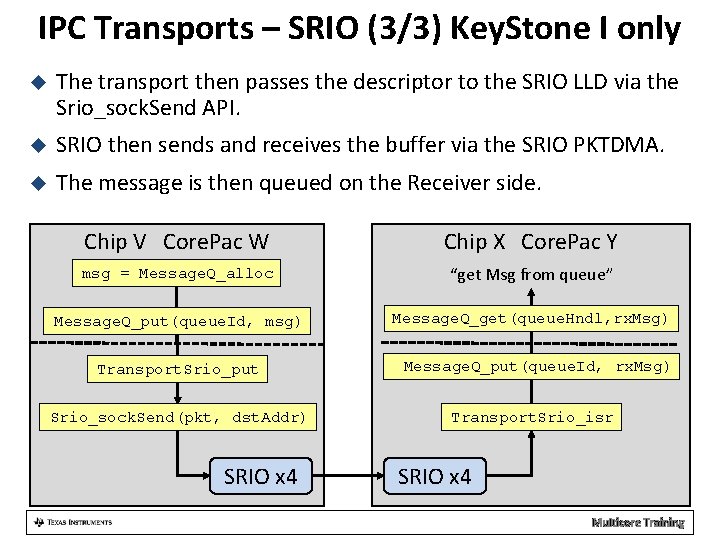

IPC Transports: SRIO (3/3), KeyStone I only
The transport then passes the descriptor to the SRIO LLD via the Srio_sockSend API. SRIO sends and receives the buffer via the SRIO PKTDMA. The message is then queued on the receiver side.
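
The transport steps described across these slides (copy the message into a data buffer, attach the buffer to a free descriptor, hand the descriptor to the link, queue the message on the receive side) can be modelled without hardware. In this hypothetical C sketch a loopback queue replaces Srio_sockSend and the SRIO PKTDMA; Descriptor, DescQueue, transport_put, and transport_receive are illustrative names, not SRIO LLD types.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define NUM_DESC 4
#define BUF_SIZE 256

typedef struct {
    unsigned char *buf;          /* pointer to the transport data buffer */
    size_t len;                  /* valid bytes in buf */
} Descriptor;

typedef struct {                 /* FIFO standing in for a hardware queue */
    Descriptor *items[NUM_DESC];
    int head, count;
} DescQueue;

static Descriptor    descs[NUM_DESC];
static unsigned char buffers[NUM_DESC][BUF_SIZE];
static DescQueue     freeQ, linkQ;

static void q_push(DescQueue *q, Descriptor *d)
{
    q->items[(q->head + q->count++) % NUM_DESC] = d;
}
static Descriptor *q_peek(DescQueue *q) { return q->count ? q->items[q->head] : NULL; }
static Descriptor *q_pop(DescQueue *q)
{
    Descriptor *d = q_peek(q);
    if (d) { q->head = (q->head + 1) % NUM_DESC; q->count--; }
    return d;
}

void transport_init(void)
{
    freeQ.head = freeQ.count = 0;
    linkQ.head = linkQ.count = 0;
    for (int i = 0; i < NUM_DESC; i++) {
        descs[i].buf = buffers[i];
        q_push(&freeQ, &descs[i]);
    }
}

/* Sender: copy the message into a data buffer and queue its descriptor. */
int transport_put(const void *msg, size_t len)
{
    if (len > BUF_SIZE)
        return -1;
    Descriptor *d = q_pop(&freeQ);
    if (d == NULL)
        return -1;               /* out of descriptors */
    memcpy(d->buf, msg, len);    /* the copy described on the slide */
    d->len = len;
    q_push(&linkQ, d);           /* loopback instead of a real send */
    return 0;
}

/* Receiver (an ISR in the real flow): copy out, recycle the descriptor. */
int transport_receive(void *out, size_t cap, size_t *len)
{
    Descriptor *d = q_peek(&linkQ);
    if (d == NULL || d->len > cap)
        return -1;
    q_pop(&linkQ);
    memcpy(out, d->buf, d->len);
    *len = d->len;
    q_push(&freeQ, d);
    return 0;
}
```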

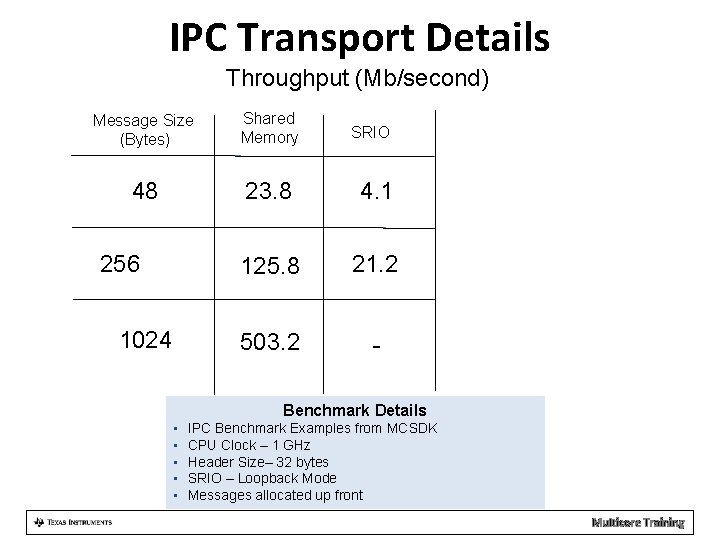

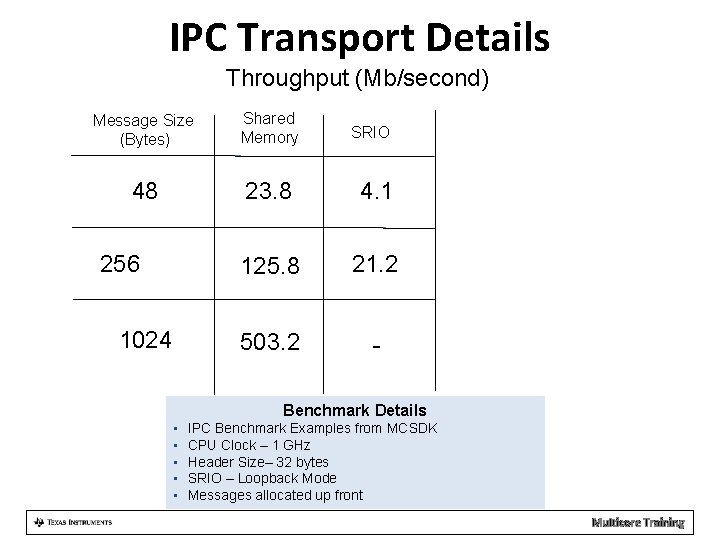

IPC Transport Details

| Message Size (Bytes) | Shared Memory Throughput (Mb/second) | SRIO Throughput (Mb/second) |
|---|---|---|
| 48 | 23.8 | 4.1 |
| 256 | 125.8 | 21.2 |
| 1024 | 503.2 | - |

Benchmark details:
- IPC benchmark examples from MCSDK
- CPU clock: 1 GHz
- Header size: 32 bytes
- SRIO: loopback mode
- Messages allocated up front
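
A quick ratio check on the benchmark figures (shared memory: 23.8, 125.8, and 503.2 Mb/second at 48, 256, and 1024 bytes; SRIO: 4.1 and 21.2 Mb/second at 48 and 256 bytes) shows how throughput T scales with message size S:

$$
\text{Shared memory: } \frac{23.8}{48} \approx 0.496,\qquad \frac{125.8}{256} \approx 0.491,\qquad \frac{503.2}{1024} \approx 0.491
$$

$$
\text{SRIO: } \frac{4.1}{48} \approx 0.085,\qquad \frac{21.2}{256} \approx 0.083
$$

Because the message rate is proportional to T/S (up to a fixed unit-conversion factor), a nearly constant T/S suggests that, in this benchmark, messages per second stay roughly the same across message sizes, so per-message overhead rather than message length dominates. This is an inference from the table, not a statement from the benchmark description.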

Agenda
- Basic Concepts
- IPC library
- MsgCom
- Demos and examples

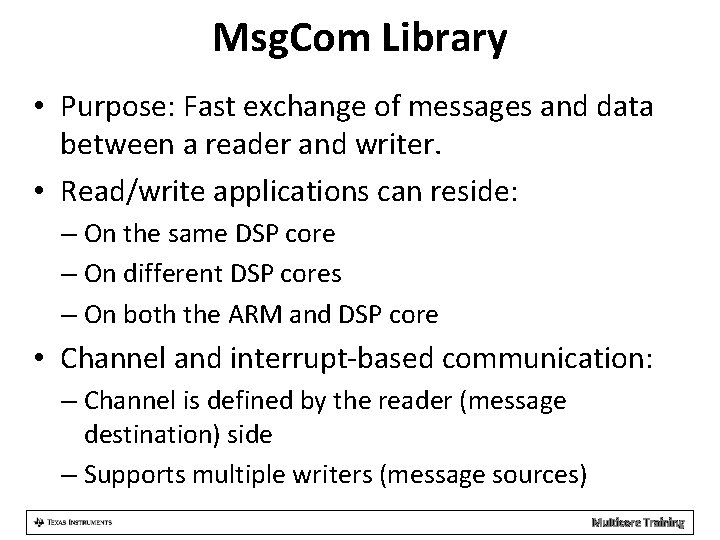

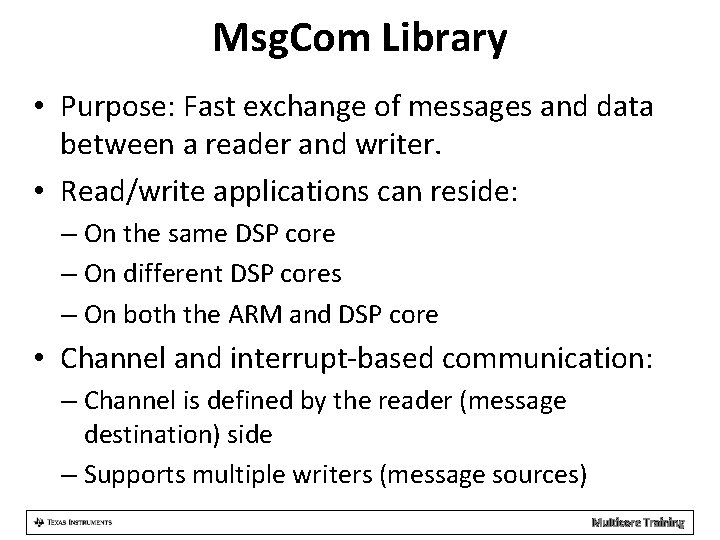

MsgCom Library
- Purpose: fast exchange of messages and data between a reader and a writer.
- Read/write applications can reside:
  - On the same DSP core
  - On different DSP cores
  - On both the ARM and DSP core
- Channel and interrupt-based communication:
  - The channel is defined by the reader (message destination) side
  - Supports multiple writers (message sources)

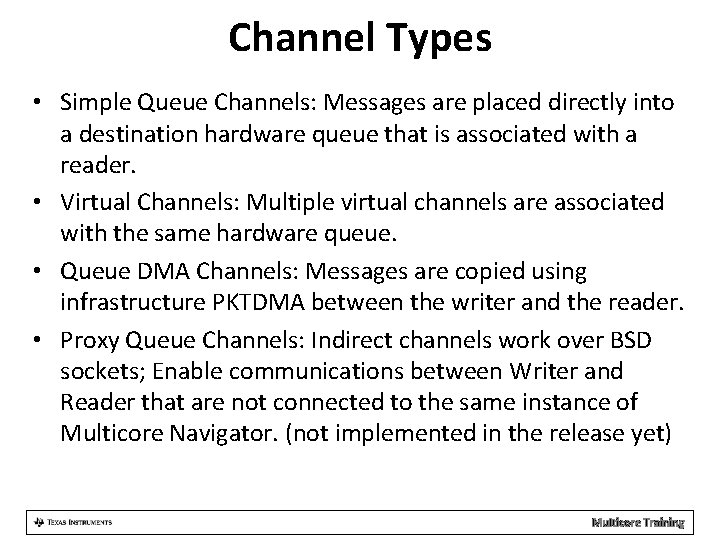

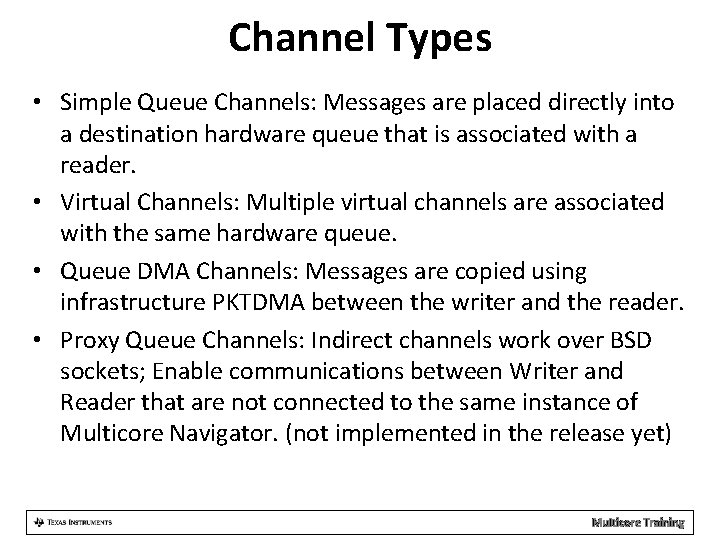

Channel Types
- Simple Queue Channels: messages are placed directly into a destination hardware queue that is associated with a reader.
- Virtual Channels: multiple virtual channels are associated with the same hardware queue.
- Queue DMA Channels: messages are copied between the writer and the reader using the infrastructure PKTDMA.
- Proxy Queue Channels: indirect channels that work over BSD sockets; they enable communication between a writer and a reader that are not connected to the same instance of Multicore Navigator (not yet implemented in the release).

Interrupt Types
- No interrupt: the reader polls until a message arrives.
- Direct interrupt:
  - Low-delay system
  - Special queues must be used.
- Accumulated interrupts:
  - Special queues are used.
  - The reader receives an interrupt when the number of messages crosses a defined threshold.

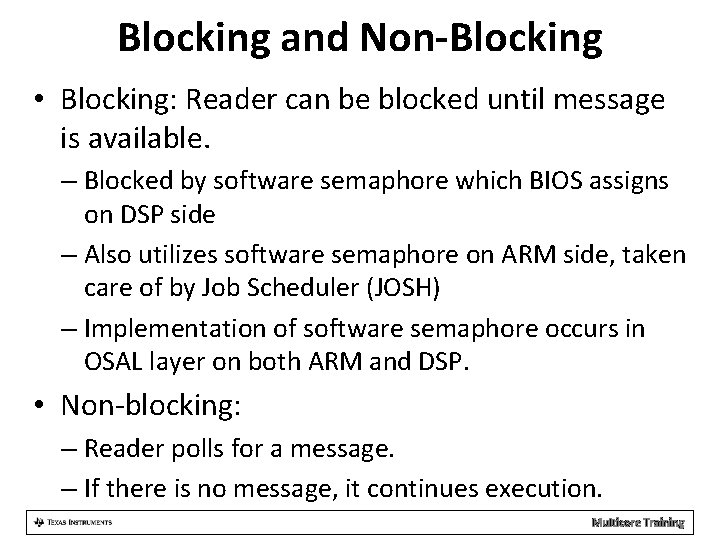

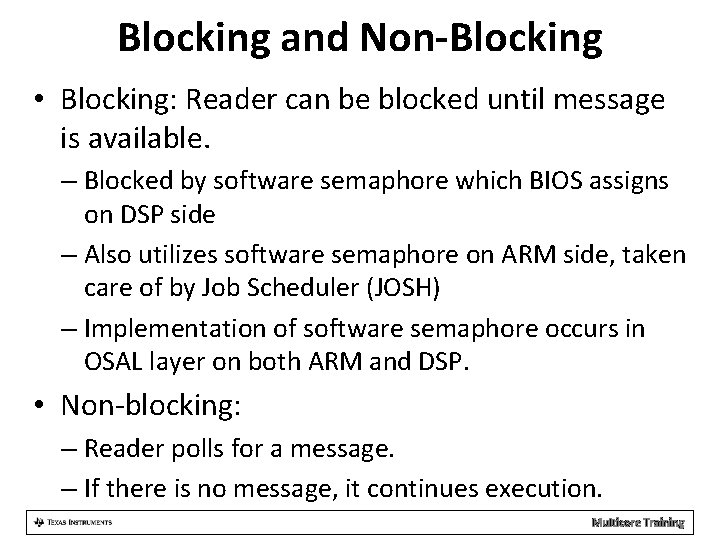

Blocking and Non-Blocking
- Blocking: the reader can be blocked until a message is available.
  - On the DSP side, it is blocked by a software semaphore that BIOS assigns.
  - On the ARM side, it also uses a software semaphore, managed by the Job Scheduler (JOSH).
  - The software semaphore is implemented in the OSAL layer on both ARM and DSP.
- Non-blocking:
  - The reader polls for a message.
  - If there is no message, it continues execution.
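
The two reader modes map naturally onto a counting semaphore: a blocking get waits on it, a non-blocking get only tries it. The hypothetical C sketch below uses POSIX semaphores on Linux (sem_wait, sem_trywait) as a stand-in for the OSAL software semaphore; Channel and the ch_* functions are invented names, and it assumes exactly one writer and one reader.

```c
#include <assert.h>
#include <errno.h>
#include <semaphore.h>
#include <stddef.h>

#define CH_LEN 8

typedef struct {
    sem_t avail;              /* counts messages ready for the reader */
    void *slots[CH_LEN];
    int   head, tail;
} Channel;

int ch_init(Channel *ch)
{
    ch->head = ch->tail = 0;
    return sem_init(&ch->avail, 0, 0);   /* not shared across processes */
}

/* Writer: the caller must keep at most CH_LEN messages outstanding. */
void ch_put(Channel *ch, void *msg)
{
    ch->slots[ch->tail] = msg;
    ch->tail = (ch->tail + 1) % CH_LEN;
    sem_post(&ch->avail);     /* wakes a blocked reader, if any */
}

static void *take(Channel *ch)
{
    void *msg = ch->slots[ch->head];
    ch->head = (ch->head + 1) % CH_LEN;
    return msg;
}

/* Blocking get: sleeps until a message is available. */
void *ch_get_blocking(Channel *ch)
{
    int rc;
    do {
        rc = sem_wait(&ch->avail);
    } while (rc == -1 && errno == EINTR);   /* retry if interrupted */
    return rc == 0 ? take(ch) : NULL;
}

/* Non-blocking get: returns NULL at once if the channel is empty. */
void *ch_get_nonblocking(Channel *ch)
{
    if (sem_trywait(&ch->avail) == -1)
        return NULL;          /* errno == EAGAIN: nothing queued */
    return take(ch);
}
```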

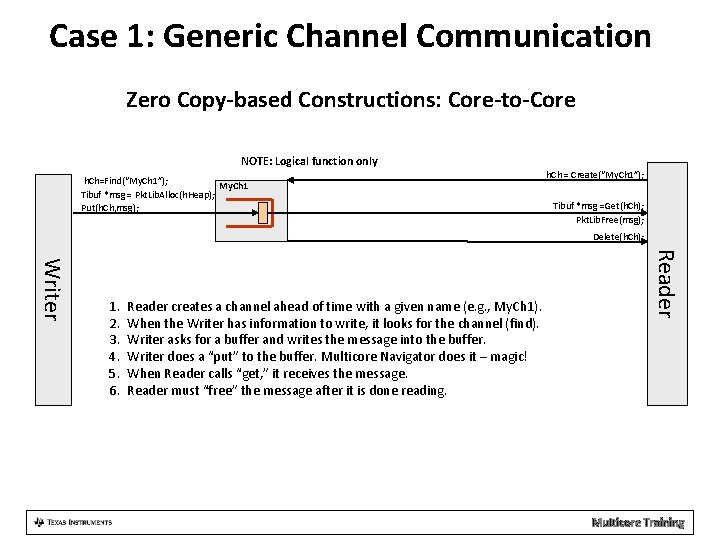

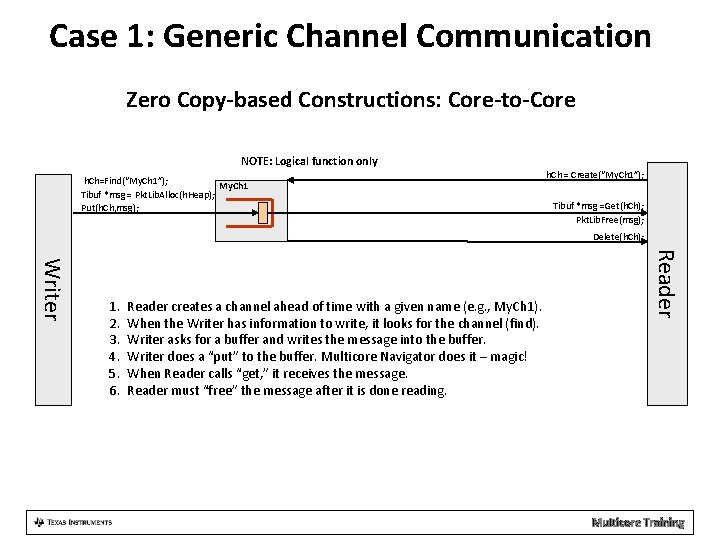

Case 1: Generic Channel Communication
Zero copy-based construction: core-to-core (NOTE: logical functions only)

Reader:
- hCh = Create("MyCh1");
- Tibuf *msg = Get(hCh);
- PktLibFree(msg);
- Delete(hCh);

Writer:
- hCh = Find("MyCh1");
- Tibuf *msg = PktLibAlloc(hHeap);
- Put(hCh, msg);

1. The Reader creates a channel ahead of time with a given name (e.g., MyCh1).
2. When the Writer has information to write, it looks for the channel (find).
3. The Writer asks for a buffer and writes the message into the buffer.
4. The Writer does a “put” to the buffer. Multicore Navigator does the rest (magic!).
5. When the Reader calls “get,” it receives the message.
6. The Reader must “free” the message after it is done reading.

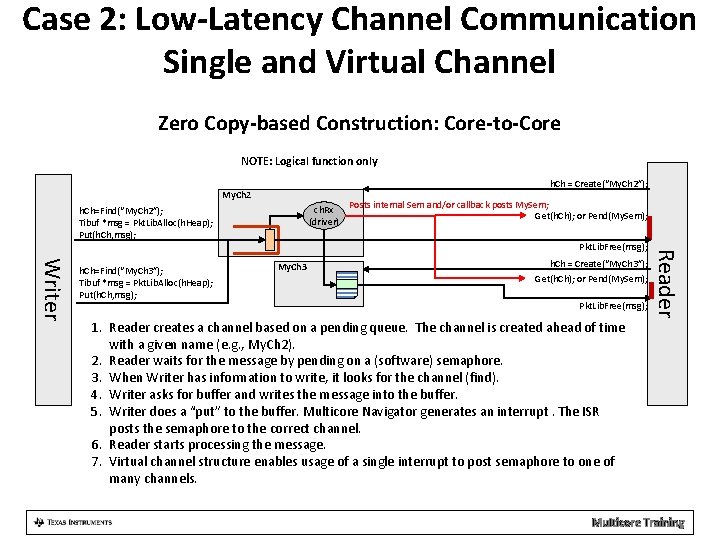

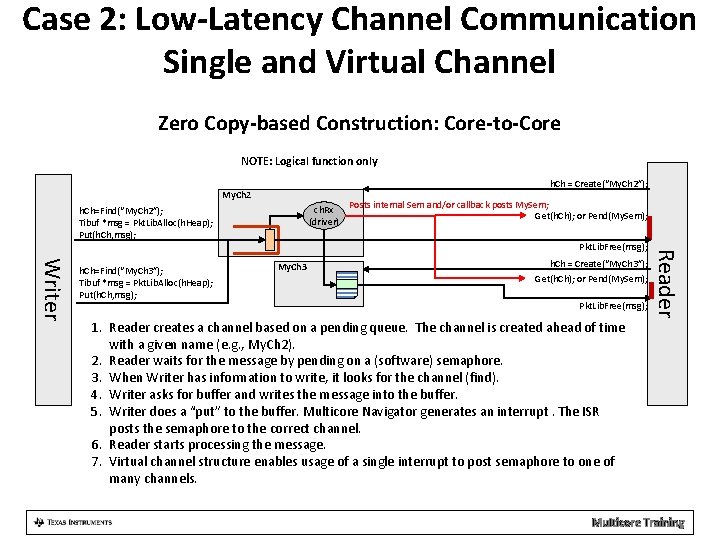

Case 2: Low-Latency Channel Communication
Single and virtual channel, zero copy-based construction: core-to-core (NOTE: logical functions only)

Reader:
- hCh = Create("MyCh2"); (served by the chRx driver)
- Get(hCh); or Pend(MySem);
- PktLibFree(msg);
- hCh = Create("MyCh3");
- Get(hCh); or Pend(MySem);
- PktLibFree(msg);

Writer:
- hCh = Find("MyCh2"); Tibuf *msg = PktLibAlloc(hHeap); Put(hCh, msg);
- hCh = Find("MyCh3"); Tibuf *msg = PktLibAlloc(hHeap); Put(hCh, msg); (posts the internal semaphore and/or a callback posts MySem)

1. The Reader creates a channel based on a pending queue. The channel is created ahead of time with a given name (e.g., MyCh2).
2. The Reader waits for the message by pending on a (software) semaphore.
3. When the Writer has information to write, it looks for the channel (find).
4. The Writer asks for a buffer and writes the message into the buffer.
5. The Writer does a “put” to the buffer. Multicore Navigator generates an interrupt. The ISR posts the semaphore to the correct channel.
6. The Reader starts processing the message.
7. The virtual channel structure enables a single interrupt to post a semaphore to one of many channels.
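
Point 7, one interrupt serving many virtual channels, can be modelled by tagging each message with a channel number and letting a single ISR route it. In this hypothetical C sketch, counters stand in for the per-channel semaphores, and Packet, hwq_push, and shared_queue_isr are invented names, not MsgCom or Multicore Navigator APIs.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_VCH 4
#define HWQ_LEN 16

typedef struct {
    int vch;          /* virtual channel tag set by the writer */
    int value;        /* application data */
} Packet;

typedef struct {      /* stands in for the shared hardware queue */
    Packet *items[HWQ_LEN];
    int head, count;
} HwQueue;

typedef struct {
    int semCount;     /* stand-in for posts to this channel's semaphore */
    int lastValue;    /* last value routed to this channel */
} VirtChannel;

static HwQueue     hwq;
static VirtChannel vchans[NUM_VCH];

int hwq_push(Packet *p)
{
    if (hwq.count == HWQ_LEN || p->vch < 0 || p->vch >= NUM_VCH)
        return -1;
    hwq.items[(hwq.head + hwq.count) % HWQ_LEN] = p;
    hwq.count++;
    return 0;
}

/* One interrupt for the shared queue: drain it and route each packet. */
void shared_queue_isr(void)
{
    while (hwq.count > 0) {
        Packet *p = hwq.items[hwq.head];
        hwq.head = (hwq.head + 1) % HWQ_LEN;
        hwq.count--;
        VirtChannel *c = &vchans[p->vch];
        c->lastValue = p->value;
        c->semCount++;            /* would be a semaphore post or callback */
    }
}
```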

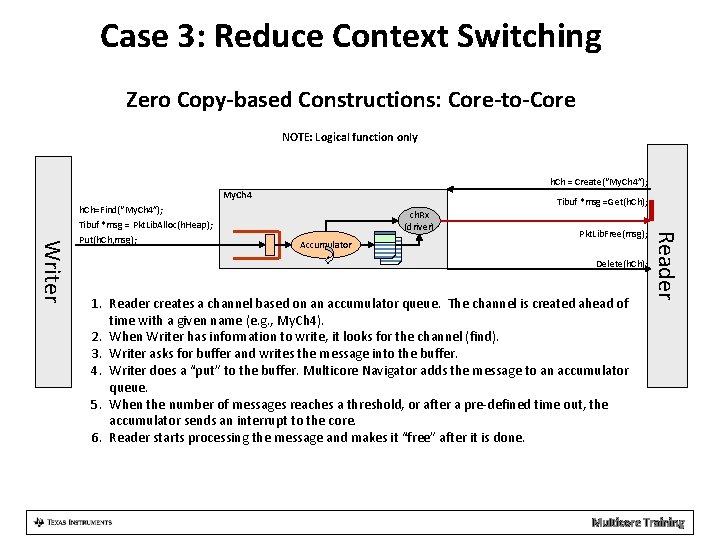

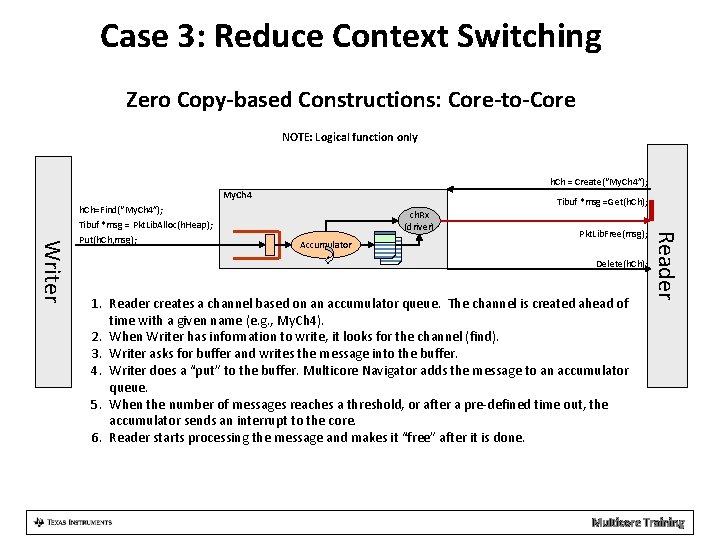

Case 3: Reduce Context Switching
Zero copy-based construction: core-to-core (NOTE: logical functions only)

Reader:
- hCh = Create("MyCh4");
- Tibuf *msg = Get(hCh);
- PktLibFree(msg);
- Delete(hCh);

Writer:
- hCh = Find("MyCh4");
- Tibuf *msg = PktLibAlloc(hHeap);
- Put(hCh, msg);

(The path from Writer to Reader goes through the Accumulator and the chRx driver.)

1. The Reader creates a channel based on an accumulator queue. The channel is created ahead of time with a given name (e.g., MyCh4).
2. When the Writer has information to write, it looks for the channel (find).
3. The Writer asks for a buffer and writes the message into the buffer.
4. The Writer does a “put” to the buffer. Multicore Navigator adds the message to an accumulator queue.
5. When the number of messages reaches a threshold, or after a predefined timeout, the accumulator sends an interrupt to the core.
6. The Reader starts processing the message and frees it when it is done.
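
The accumulator rule in step 5 (interrupt when the count reaches a threshold, or after a timeout if fewer messages are pending) can be sketched as a small state machine. This C model is hypothetical: the Accumulator fields, the tick-based clock, and the example threshold and timeout values are assumptions, not the accumulator firmware's interface.

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    unsigned threshold;      /* messages per interrupt */
    uint32_t timeoutTicks;   /* longest wait for a partial batch */
    unsigned pending;        /* messages accumulated since last interrupt */
    uint32_t firstArrival;   /* tick of the oldest pending message */
    unsigned interrupts;     /* interrupts raised so far */
    unsigned delivered;      /* messages handed to the reader */
} Accumulator;

static void raise_interrupt(Accumulator *a)
{
    a->interrupts++;
    a->delivered += a->pending;   /* the reader drains the whole batch */
    a->pending = 0;
}

/* Called as each message lands in the accumulator queue. */
void acc_on_message(Accumulator *a, uint32_t now)
{
    if (a->pending == 0)
        a->firstArrival = now;
    a->pending++;
    if (a->pending >= a->threshold)
        raise_interrupt(a);
}

/* Called periodically (e.g., from a timer) to flush a partial batch. */
void acc_on_tick(Accumulator *a, uint32_t now)
{
    if (a->pending > 0 && now - a->firstArrival >= a->timeoutTicks)
        raise_interrupt(a);
}
```

With a threshold of 4, ten messages produce three interrupts (two full batches and one timeout flush) instead of ten, which is the context-switch saving this case describes.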

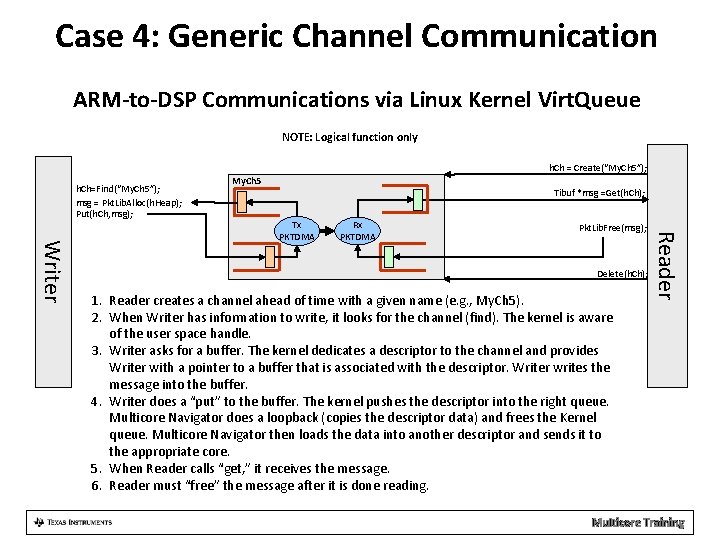

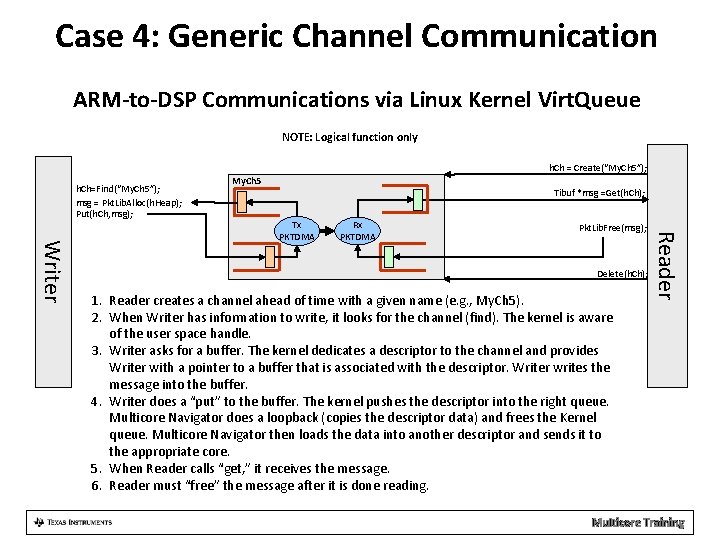

Case 4: Generic Channel Communication
ARM-to-DSP communications via Linux kernel VirtQueue (NOTE: logical functions only)

Reader:
- hCh = Create("MyCh5");
- Tibuf *msg = Get(hCh);
- PktLibFree(msg);
- Delete(hCh);

Writer:
- hCh = Find("MyCh5");
- msg = PktLibAlloc(hHeap);
- Put(hCh, msg);

(The path from Writer to Reader goes through the Tx PKTDMA and the Rx PKTDMA.)

1. The Reader creates a channel ahead of time with a given name (e.g., MyCh5).
2. When the Writer has information to write, it looks for the channel (find). The kernel is aware of the user-space handle.
3. The Writer asks for a buffer. The kernel dedicates a descriptor to the channel and provides the Writer with a pointer to a buffer that is associated with the descriptor. The Writer writes the message into the buffer.
4. The Writer does a “put” to the buffer. The kernel pushes the descriptor into the right queue. Multicore Navigator does a loopback (copies the descriptor data) and frees the kernel queue. Multicore Navigator then loads the data into another descriptor and sends it to the appropriate core.
5. When the Reader calls “get,” it receives the message.
6. The Reader must “free” the message after it is done reading.

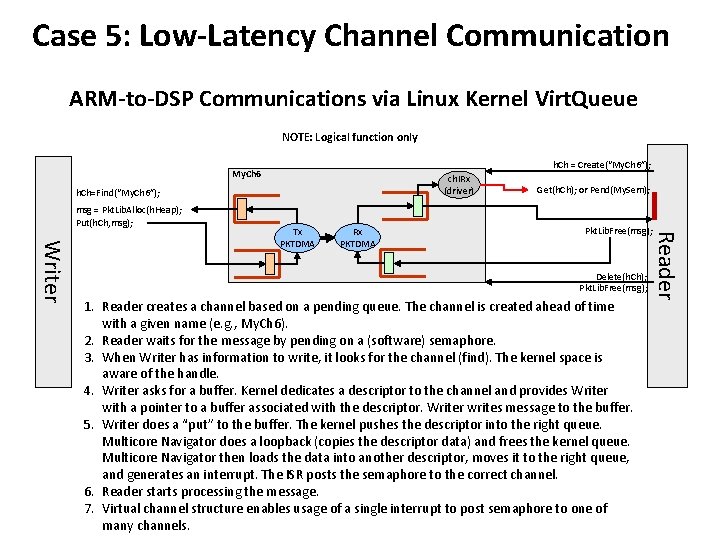

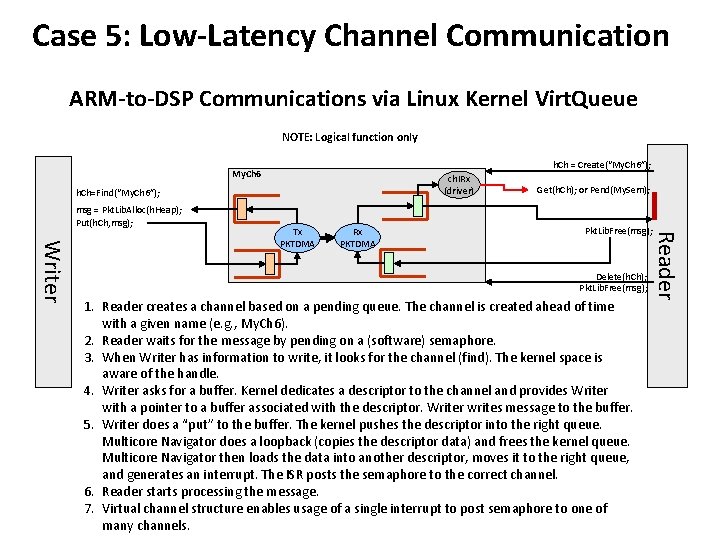

Case 5: Low-Latency Channel Communication
ARM-to-DSP communications via Linux kernel VirtQueue (NOTE: logical functions only)

Reader:
- hCh = Create("MyCh6"); (served by the chIRx driver)
- Get(hCh); or Pend(MySem);
- PktLibFree(msg);
- Delete(hCh);

Writer:
- hCh = Find("MyCh6");
- msg = PktLibAlloc(hHeap);
- Put(hCh, msg);

(The path from Writer to Reader goes through the Tx PKTDMA and the Rx PKTDMA.)

1. The Reader creates a channel based on a pending queue. The channel is created ahead of time with a given name (e.g., MyCh6).
2. The Reader waits for the message by pending on a (software) semaphore.
3. When the Writer has information to write, it looks for the channel (find). The kernel space is aware of the handle.
4. The Writer asks for a buffer. The kernel dedicates a descriptor to the channel and provides the Writer with a pointer to a buffer associated with the descriptor. The Writer writes the message to the buffer.
5. The Writer does a “put” to the buffer. The kernel pushes the descriptor into the right queue. Multicore Navigator does a loopback (copies the descriptor data) and frees the kernel queue. Multicore Navigator then loads the data into another descriptor, moves it to the right queue, and generates an interrupt. The ISR posts the semaphore to the correct channel.
6. The Reader starts processing the message.
7. The virtual channel structure enables a single interrupt to post a semaphore to one of many channels.

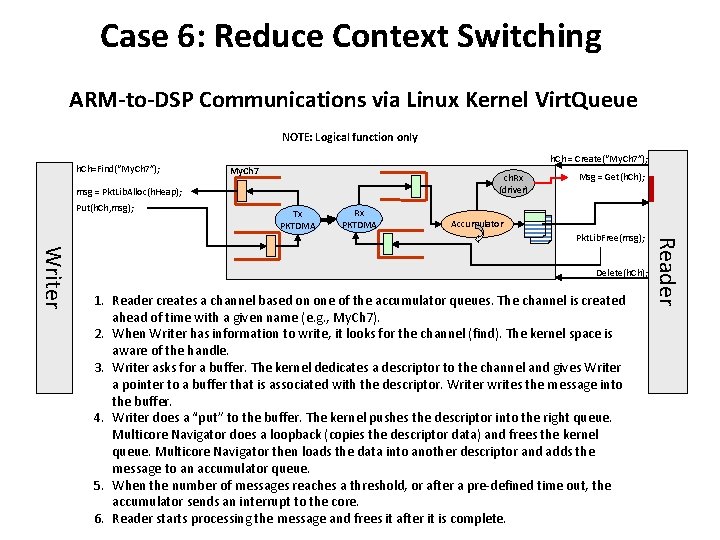

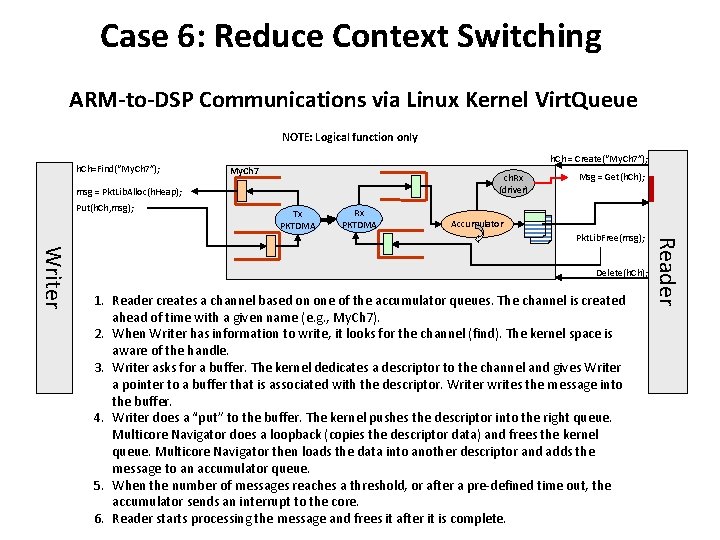

Case 6: Reduce Context Switching
ARM-to-DSP communications via Linux kernel VirtQueue (NOTE: logical functions only)

Reader:
- hCh = Create("MyCh7");
- msg = Get(hCh);
- PktLibFree(msg);
- Delete(hCh);

Writer:
- hCh = Find("MyCh7");
- msg = PktLibAlloc(hHeap);
- Put(hCh, msg);

(The path from Writer to Reader goes through the Tx PKTDMA, the Rx PKTDMA, the Accumulator, and the chRx driver.)

1. The Reader creates a channel based on one of the accumulator queues. The channel is created ahead of time with a given name (e.g., MyCh7).
2. When the Writer has information to write, it looks for the channel (find). The kernel space is aware of the handle.
3. The Writer asks for a buffer. The kernel dedicates a descriptor to the channel and gives the Writer a pointer to a buffer that is associated with the descriptor. The Writer writes the message into the buffer.
4. The Writer does a “put” to the buffer. The kernel pushes the descriptor into the right queue. Multicore Navigator does a loopback (copies the descriptor data) and frees the kernel queue. Multicore Navigator then loads the data into another descriptor and adds the message to an accumulator queue.
5. When the number of messages reaches a threshold, or after a predefined timeout, the accumulator sends an interrupt to the core.
6. The Reader starts processing the message and frees it when it is done.

Agenda
- Basic Concepts
- IPC library
- MsgCom
- Demos and examples

Examples and Demos
- There are multiple IPC library example projects for KeyStone I in the MCSDK 2 release at mcsdk_2_X_X_X/pdk_C6678_1_1_2_5/packages/ti/transport/ipc/examples
- The MsgCom project (on ARM and DSP) is part of the KeyStone II Lab Book
