Inter-Processor Parallel Architecture

- Slides: 62

Module developed Spring 2013 by Apan Qasem. This module was created with support from NSF under grant # DUE 1141022. Some slides adapted from Patterson and Hennessy, 4th Edition, with permission.

Outline: Parallel Architectures

- Symmetric multiprocessor architecture (SMP)
- Distributed-memory multiprocessor architecture
- Clusters
- The Grid
- The Cloud
- Multicore architecture
- Simultaneous multithreaded architecture
- Vector processors
- GPUs

TXST TUES Module: C2

Outline

- Types of parallelism
  - Data-level parallelism (DLP)
  - Task-level parallelism (TLP)
  - Pipelined parallelism
- Issues in parallelism
  - Amdahl's Law
  - Load balancing
  - Scalability

What's a Parallel Computer?

- Any system with more than one processing unit
- Many names: multiprocessor systems, clusters, supercomputers, distributed systems, SMPs, multicore
- Terms widely misused!
  - May refer to different types of architectures
- Not in the realm of parallel computers:
  - An OS running multiple processes on a single processor

The '50s and '60s

- Mainframes: time-share machines
- Examples: UNIVAC 1, IBM 360

image: Wikipedia

Mainframes Today

- IBM is still selling mainframes today
- New line of mainframes code-named “T-Rex”
- Banks appear to be the biggest clients
- Billion dollars in revenue

IBM zEnterprise (image: The Economist, 2012)

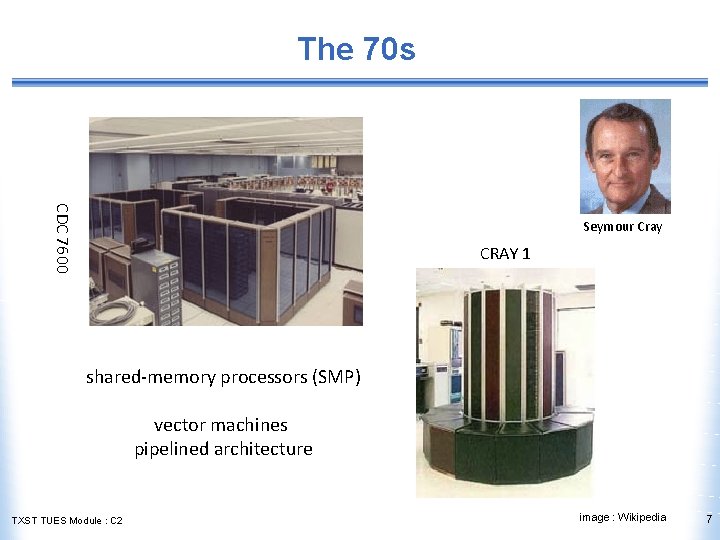

The '70s

- Seymour Cray: CDC 7600, CRAY-1
- Shared-memory multiprocessors (SMP)
- Vector machines
- Pipelined architecture

image: Wikipedia

The '80s and '90s

- Cluster computing
- Distributed computing
- Examples: VAXcluster, IBM POWER5-based cluster, Intel-based Beowulf cluster

image: Wikipedia

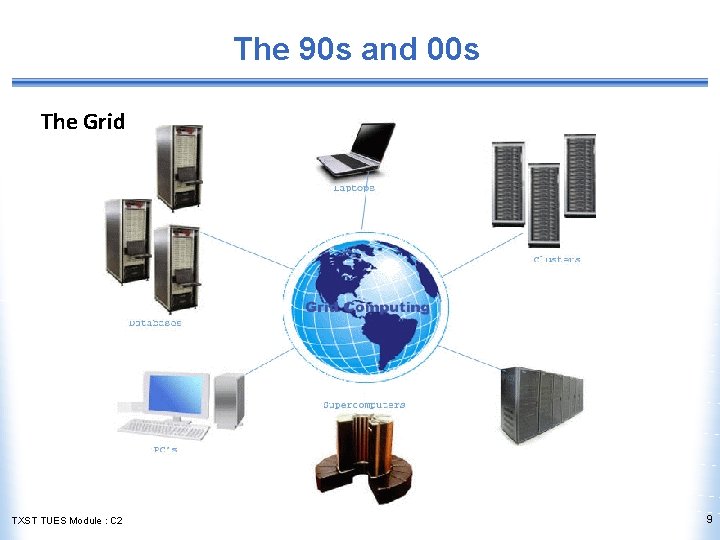

The '90s and '00s: The Grid

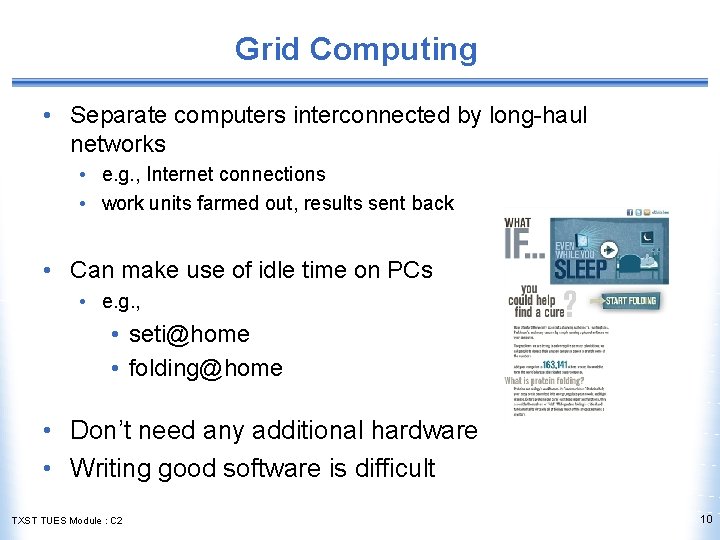

Grid Computing

- Separate computers interconnected by long-haul networks
  - e.g., Internet connections
  - Work units farmed out, results sent back
- Can make use of idle time on PCs
  - e.g., SETI@home, Folding@home
- Don't need any additional hardware
- Writing good software is difficult

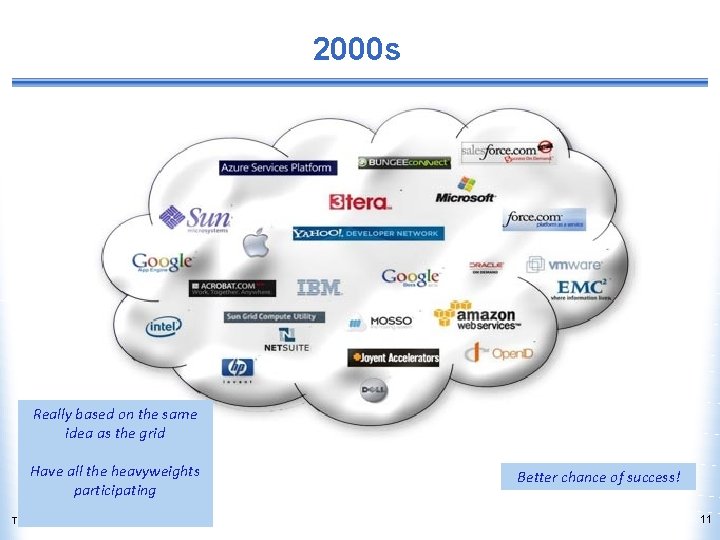

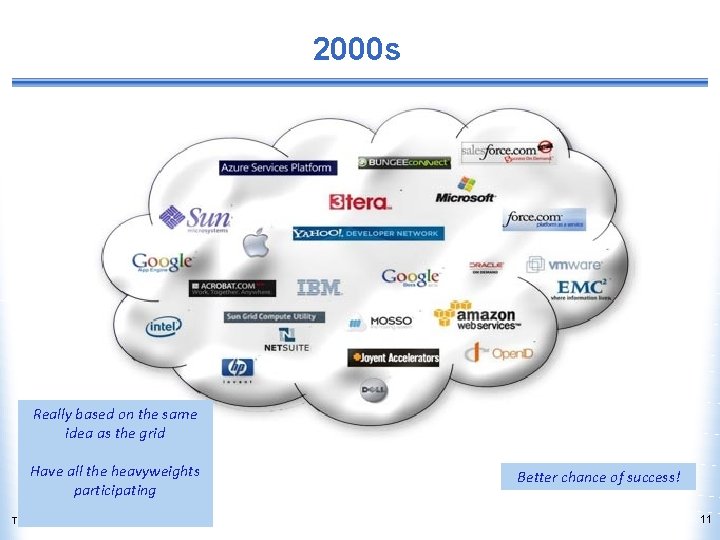

The 2000s: The Cloud

- Really based on the same idea as the grid
- Has all the heavyweights participating
- Better chance of success!

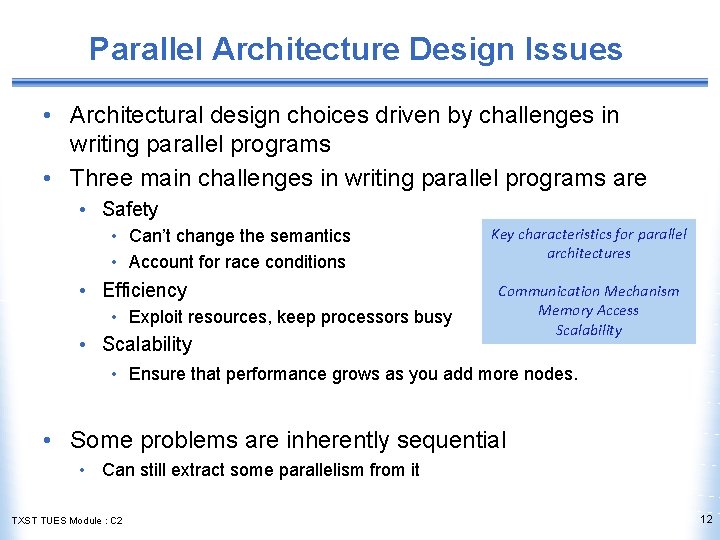

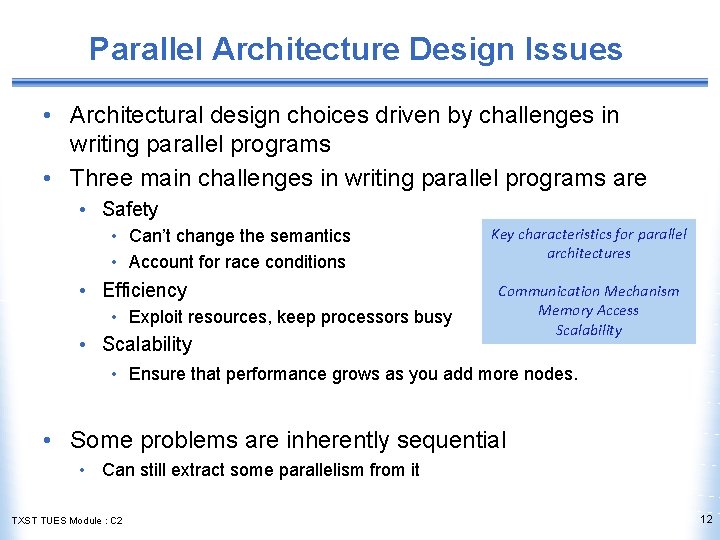

Parallel Architecture Design Issues

- Architectural design choices are driven by the challenges of writing parallel programs
- The three main challenges in writing parallel programs are
  - Safety
    - Can't change the semantics
    - Account for race conditions
  - Efficiency
    - Exploit resources, keep processors busy
  - Scalability
    - Ensure that performance grows as you add more nodes
    - Some problems are inherently sequential
    - Can still extract some parallelism from them
- Key characteristics for parallel architectures
  - Communication mechanism
  - Memory access
  - Scalability

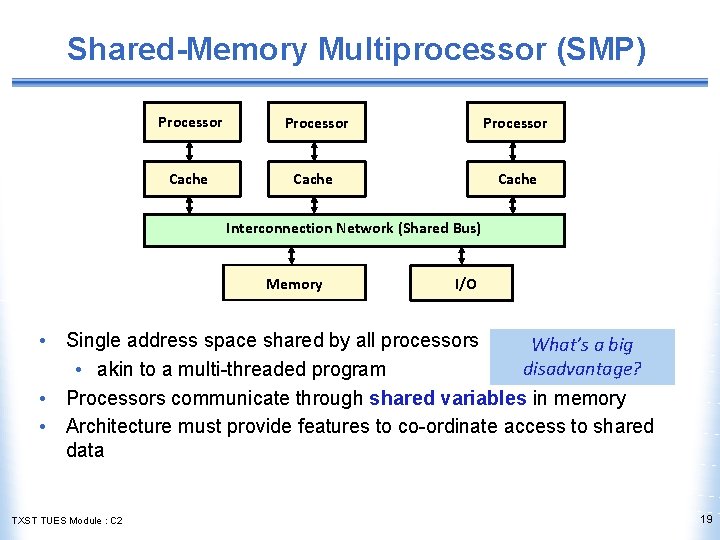

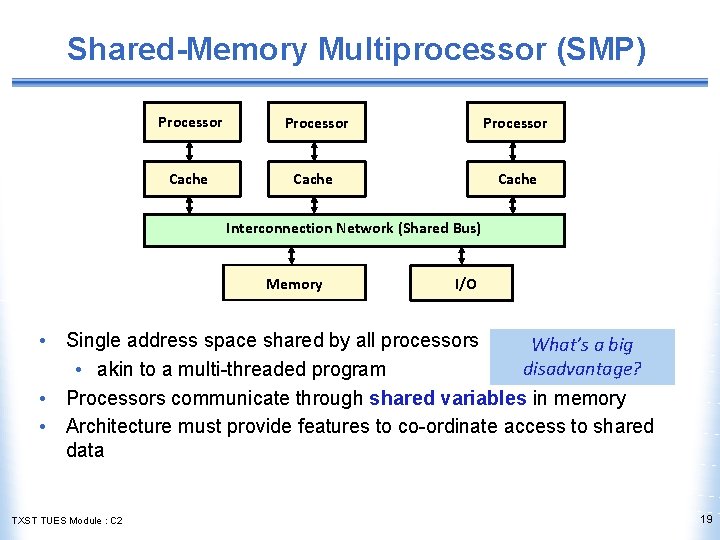

Shared-Memory Multiprocessor (SMP)

[Figure: processors, each with its own cache, connected through an interconnection network (shared bus) to memory and I/O]

- Single address space shared by all processors
  - Akin to a multithreaded program
- Processors communicate through shared variables in memory
- Architecture must provide features to coordinate access to shared data
  - Synchronization primitives
- Until ~2006, most servers were set up as SMPs

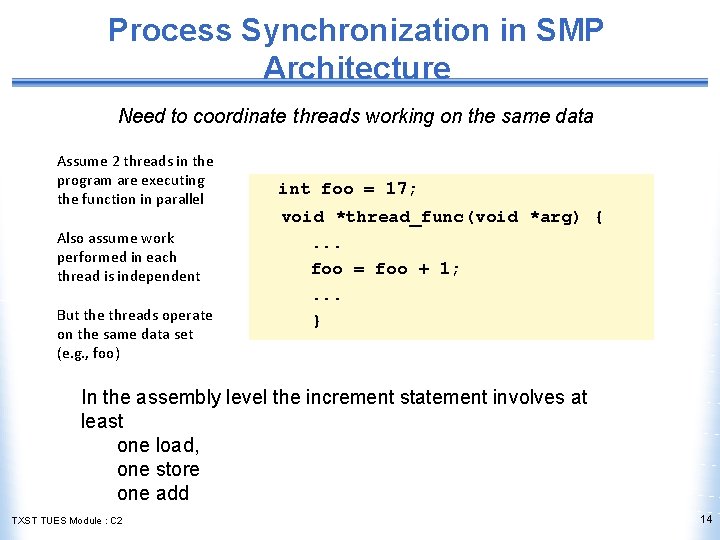

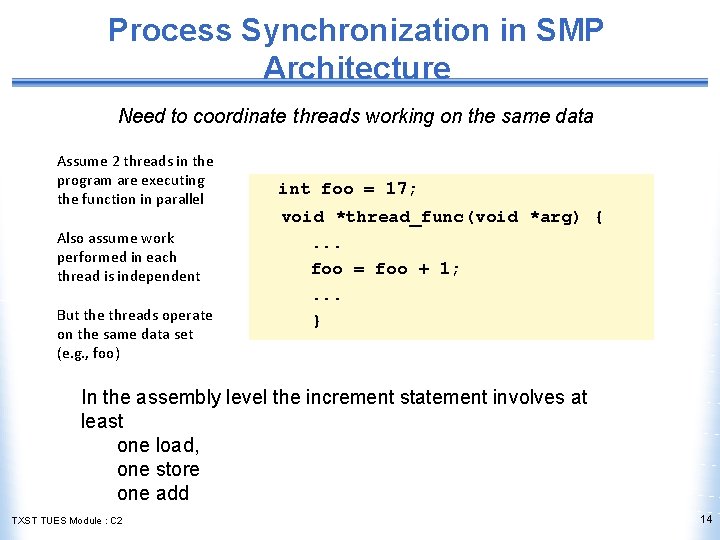

Process Synchronization in SMP Architecture

- Need to coordinate threads working on the same data
- Assume 2 threads in the program are executing the function in parallel
- Also assume the work performed in each thread is independent
- But the threads operate on the same data set (e.g., foo)

```c
#include <stddef.h>

int foo = 17;

void *thread_func(void *arg) {
    /* ... */
    foo = foo + 1;
    /* ... */
    return NULL;
}
```

- At the assembly level, the increment statement involves at least one load, one add, and one store

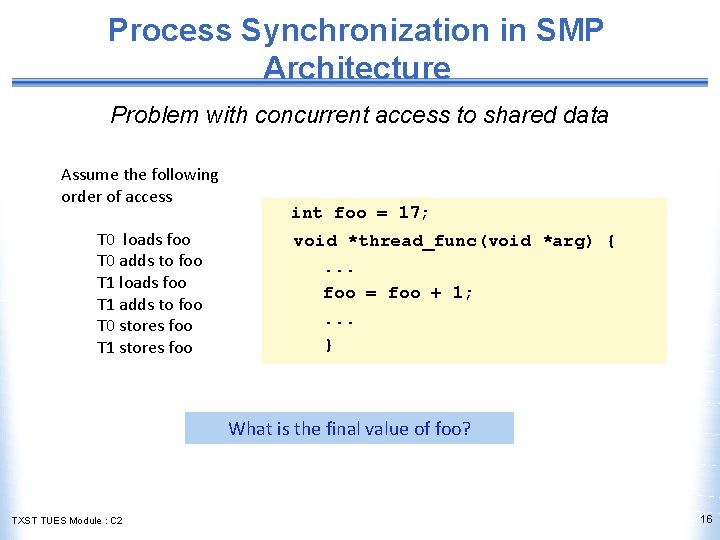

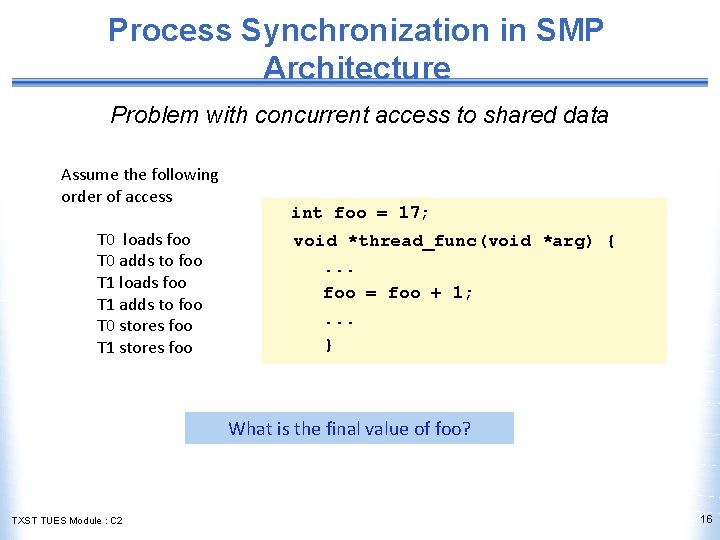

Process Synchronization in SMP Architecture

Problem with concurrent access to shared data. Both threads execute `foo = foo + 1;` on `int foo = 17;`. Assume the following order of access:

- T0 loads foo
- T1 loads foo
- T0 adds to foo
- T1 adds to foo
- T0 stores foo
- T1 stores foo

What is the final value of foo?

Process Synchronization in SMP Architecture

Problem with concurrent access to shared data. Both threads execute `foo = foo + 1;` on `int foo = 17;`. Assume the following order of access:

- T0 loads foo
- T0 adds to foo
- T1 loads foo
- T1 adds to foo
- T0 stores foo
- T1 stores foo

What is the final value of foo?
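To check an answer to the question above, the two orders can be replayed step by step. The sketch below is a hypothetical model, not real threads: `replay`, `step_t`, and the `order_*` arrays are illustrative names, and each thread gets a private "register" as it would at the machine level.

```c
#include <assert.h>
#include <stddef.h>

/* One machine-level step of "foo = foo + 1". */
typedef enum { OP_LOAD, OP_ADD, OP_STORE } op_t;

typedef struct {
    int thread;   /* which thread performs this step (0 or 1) */
    op_t op;      /* load, add, or store */
} step_t;

/* Replays an interleaving against the shared variable and returns the
 * final value. Each thread has its own private register. */
int replay(int foo, const step_t *steps, size_t n) {
    int reg[2] = {0, 0};
    for (size_t i = 0; i < n; i++) {
        int t = steps[i].thread;
        assert(t == 0 || t == 1);
        switch (steps[i].op) {
        case OP_LOAD:  reg[t] = foo; break;   /* register <- memory */
        case OP_ADD:   reg[t] += 1;  break;   /* register <- register + 1 */
        case OP_STORE: foo = reg[t]; break;   /* memory <- register */
        }
    }
    return foo;
}

/* The two orders from the slides, plus a serialized order for comparison. */
static const step_t order_a[] = {
    {0, OP_LOAD}, {1, OP_LOAD}, {0, OP_ADD}, {1, OP_ADD}, {0, OP_STORE}, {1, OP_STORE}};
static const step_t order_b[] = {
    {0, OP_LOAD}, {0, OP_ADD}, {1, OP_LOAD}, {1, OP_ADD}, {0, OP_STORE}, {1, OP_STORE}};
static const step_t serial[] = {
    {0, OP_LOAD}, {0, OP_ADD}, {0, OP_STORE}, {1, OP_LOAD}, {1, OP_ADD}, {1, OP_STORE}};
```

Starting from 17, the serialized order ends at 19, while both interleavings end at 18: whichever thread stores last overwrites the other thread's update.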

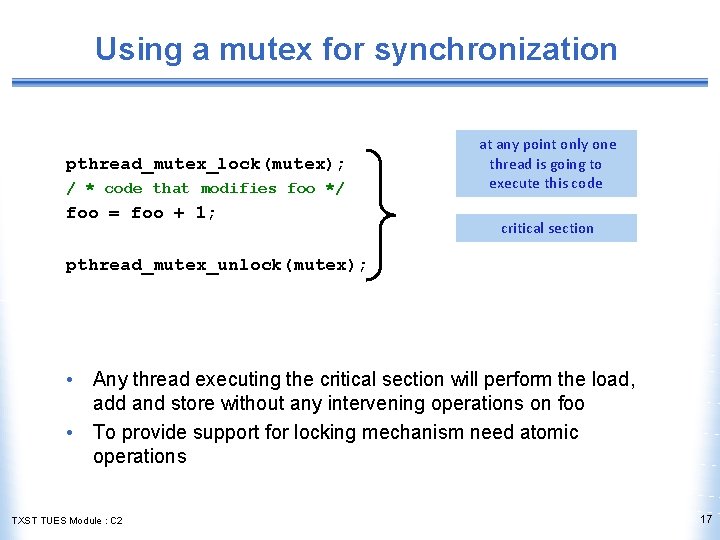

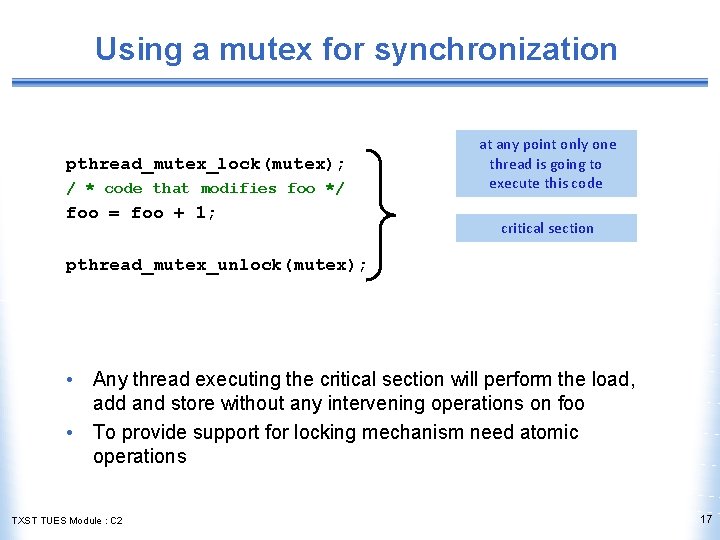

Using a Mutex for Synchronization

```c
#include <pthread.h>

pthread_mutex_t mutex = PTHREAD_MUTEX_INITIALIZER;   /* shared by all threads */

/* inside thread_func: */
pthread_mutex_lock(&mutex);
/* critical section: at any point only one thread executes this code */
foo = foo + 1;   /* code that modifies foo */
pthread_mutex_unlock(&mutex);
```

- Any thread executing the critical section will perform the load, add, and store without any intervening operations on foo
- To provide support for a locking mechanism, we need atomic operations
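A complete, minimal pthreads version of the mutex fragment, assuming two threads that each perform `iters` increments. `run_two_threads` and `iters` are hypothetical names used only for illustration. Because every load/add/store on `foo` happens inside the critical section, no update is lost.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

static int foo = 17;                                   /* shared data */
static pthread_mutex_t mutex = PTHREAD_MUTEX_INITIALIZER;

/* Each thread increments foo `iters` times, one critical section per increment. */
static void *thread_func(void *arg) {
    int iters = *(const int *)arg;
    for (int i = 0; i < iters; i++) {
        pthread_mutex_lock(&mutex);
        foo = foo + 1;            /* load, add, store with no intervening access */
        pthread_mutex_unlock(&mutex);
    }
    return NULL;
}

/* Runs two threads and returns the final value of foo. */
int run_two_threads(int iters) {
    pthread_t t0, t1;
    foo = 17;
    int rc0 = pthread_create(&t0, NULL, thread_func, &iters);
    int rc1 = pthread_create(&t1, NULL, thread_func, &iters);
    assert(rc0 == 0 && rc1 == 0);
    pthread_join(t0, NULL);
    pthread_join(t1, NULL);
    return foo;
}
```

For example, `run_two_threads(100000)` should return 17 + 200000 = 200017. Removing the lock and unlock calls typically makes the result smaller and nondeterministic.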

Process Synchronization in SMP Architecture

- Need to be able to coordinate processes working on the same data
- At the program level, can use semaphores or mutexes to synchronize processes and implement critical sections
- Need architectural support to lock shared variables
  - Atomic read-modify-write operations, e.g., load-linked/store-conditional (ll and sc) on MIPS and atomic swap (swap) on SPARC
- Need architectural support to determine which processor gets access to the lock variable
  - A single bus provides an arbitration mechanism, since the bus is the only path to memory
  - The processor that gets the bus wins
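A minimal sketch of how a lock can be built from an atomic swap, using C11 `<stdatomic.h>`. `atomic_exchange_explicit` writes 1 and returns the old value in one indivisible step; the compiler maps it onto whatever atomic primitive the ISA provides (on MIPS, typically an ll/sc retry loop). The `swap_lock_t` type and the `run_with_swap_lock` driver are hypothetical names; a production lock would also back off or yield rather than spin indefinitely.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

/* 0 = free, 1 = held. */
typedef struct { atomic_int held; } swap_lock_t;

static void swap_lock_acquire(swap_lock_t *l) {
    /* Atomically store 1 and fetch the old value. If the old value was 1,
     * another thread holds the lock, so try again. */
    while (atomic_exchange_explicit(&l->held, 1, memory_order_acquire) == 1) {
        /* spin */
    }
}

static void swap_lock_release(swap_lock_t *l) {
    atomic_store_explicit(&l->held, 0, memory_order_release);
}

static swap_lock_t g_lock;     /* static storage: zero-initialized, i.e., free */
static long counter = 0;       /* shared data protected by g_lock */

static void *worker(void *arg) {
    long iters = *(const long *)arg;
    for (long i = 0; i < iters; i++) {
        swap_lock_acquire(&g_lock);
        counter++;                         /* critical section */
        swap_lock_release(&g_lock);
    }
    return NULL;
}

/* Runs nthreads workers, each doing iters increments; returns the total. */
long run_with_swap_lock(int nthreads, long iters) {
    pthread_t tids[8];
    assert(nthreads > 0 && nthreads <= 8);
    counter = 0;
    for (int i = 0; i < nthreads; i++)
        pthread_create(&tids[i], NULL, worker, &iters);
    for (int i = 0; i < nthreads; i++)
        pthread_join(tids[i], NULL);
    return counter;
}
```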

Shared-Memory Multiprocessor (SMP)

[Figure: processors, each with its own cache, connected through an interconnection network (shared bus) to memory and I/O]

- Single address space shared by all processors
  - Akin to a multithreaded program
- Processors communicate through shared variables in memory
- Architecture must provide features to coordinate access to shared data

What's a big disadvantage?

Types of SMP

- SMPs come in two styles
  - Uniform memory access (UMA) multiprocessors
    - Any memory access takes the same amount of time
  - Non-uniform memory access (NUMA) multiprocessors
    - Memory is divided into banks
    - Memory latency depends on where the data is located
- Programming NUMAs is harder, but the design is easier
- NUMAs can scale to larger sizes and have lower latency to local memory, leading to improved overall performance
- Most SMPs in use today are NUMA

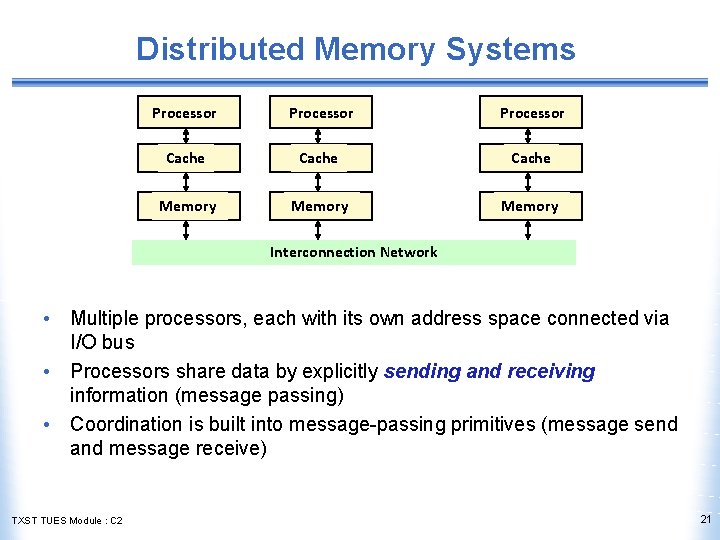

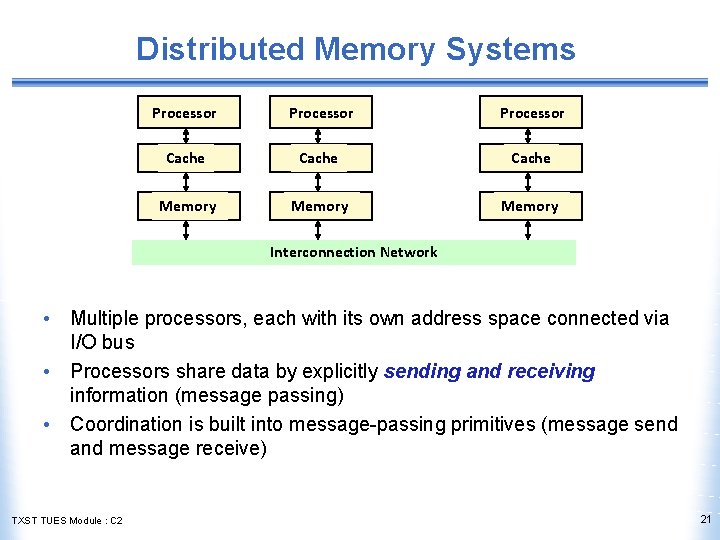

Distributed Memory Systems

[Figure: nodes, each with a processor, cache, and memory, connected by an interconnection network]

- Multiple processors, each with its own address space, connected via the I/O bus
- Processors share data by explicitly sending and receiving information (message passing)
- Coordination is built into the message-passing primitives (message send and message receive)
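On a single machine, separate processes give a rough stand-in for distributed-memory nodes: after `fork()` the child has its own copy of the address space, so it must send its result back explicitly. This sketch uses a POSIX pipe as the message channel (`write` as send, `read` as receive). It is an analogy, not MPI, and `sum_via_message` is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* The child process sums data[0..n) and "sends" the result through a pipe;
 * the parent "receives" it. Returns 0 on success and stores the sum in *out. */
int sum_via_message(const long *data, size_t n, long *out) {
    int fd[2];
    assert(out != NULL);
    if (pipe(fd) != 0) return -1;

    pid_t pid = fork();
    if (pid < 0) return -1;

    if (pid == 0) {                          /* child: compute, then send */
        close(fd[0]);
        long sum = 0;
        for (size_t i = 0; i < n; i++) sum += data[i];
        ssize_t w = write(fd[1], &sum, sizeof sum);
        close(fd[1]);
        _exit(w == (ssize_t)sizeof sum ? 0 : 1);
    }

    close(fd[1]);                            /* parent: receive */
    ssize_t r = read(fd[0], out, sizeof *out);
    close(fd[0]);
    waitpid(pid, NULL, 0);
    return r == (ssize_t)sizeof *out ? 0 : -1;
}
```

Any change the child makes to its own variables is invisible to the parent; only the message gets through.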

Specialized Interconnection Networks

- For distributed memory systems, the speed of communication between processors is critical
- The I/O bus or Ethernet, although viable solutions, don't provide the necessary performance
  - Latency is important
  - High throughput is important
- Most distributed systems today are implemented with specialized interconnection networks
  - InfiniBand
  - Myrinet
  - Quadrics

Clusters

- Clusters are a type of distributed memory system
- They are off-the-shelf, whole computers with multiple private address spaces, connected using the I/O bus and network switches
  - Lower bandwidth than multiprocessors that use the processor-memory (front-side) bus
  - Lower-speed network links
  - More conflicts with I/O traffic
- Each node has its own OS, limiting the memory available for applications
- Improved system availability and expandability
  - Easier to replace a machine without bringing down the whole system
  - Allows rapid, incremental expansion
- Economies-of-scale advantages with respect to cost

Interconnection Networks

- On distributed systems, processors can be arranged in a variety of ways
- Typically, the more connections you have, the better the performance and the higher the cost

[Figure: example topologies: bus, ring, 2D mesh, N-cube (N = 3), fully connected]
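To make the cost/performance trade-off concrete, this sketch computes the number of links (a cost proxy) and the diameter (worst-case hops between two nodes, a latency proxy) for the topologies in the figure. It assumes bidirectional links and a k × k mesh without wraparound, and it models the bus as one shared medium; the `topo_*` function names are hypothetical.

```c
#include <assert.h>

typedef struct {
    long nodes;     /* number of processors */
    long links;     /* point-to-point links (bus: one shared medium) */
    long diameter;  /* worst-case hops between any two nodes */
} topo_t;

topo_t topo_bus(long p)  { assert(p >= 2); return (topo_t){p, 1, 1}; }

topo_t topo_ring(long p) { assert(p >= 3); return (topo_t){p, p, p / 2}; }

topo_t topo_mesh2d(long k) {          /* k x k mesh, no wraparound */
    assert(k >= 2);
    return (topo_t){k * k, 2 * k * (k - 1), 2 * (k - 1)};
}

topo_t topo_hypercube(int n) {        /* n-cube: 2^n nodes, n links per node */
    assert(n >= 1 && n < 31);
    long p = 1L << n;
    return (topo_t){p, n * (p / 2), n};
}

topo_t topo_full(long p) {            /* every pair directly connected */
    assert(p >= 2);
    return (topo_t){p, p * (p - 1) / 2, 1};
}
```

For 16 nodes this gives: ring, 16 links and diameter 8; 4 × 4 mesh, 24 and 6; 4-cube, 32 and 4; fully connected, 120 and 1. The slide's 3-cube has 8 nodes, 12 links, and diameter 3.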

SMPs vs. Distributed Systems

| | SMP | Distributed |
|---|---|---|
| Communication | Happens through shared memory | Needs explicit communication |
| Design and programming | Harder to design and program | Easier to design and program |
| Scalability | Not scalable | Scalable |
| Operating system | Needs a special OS | Can use a regular OS |
| Programming API | OpenMP | MPI |
| Administration cost | Low | High |

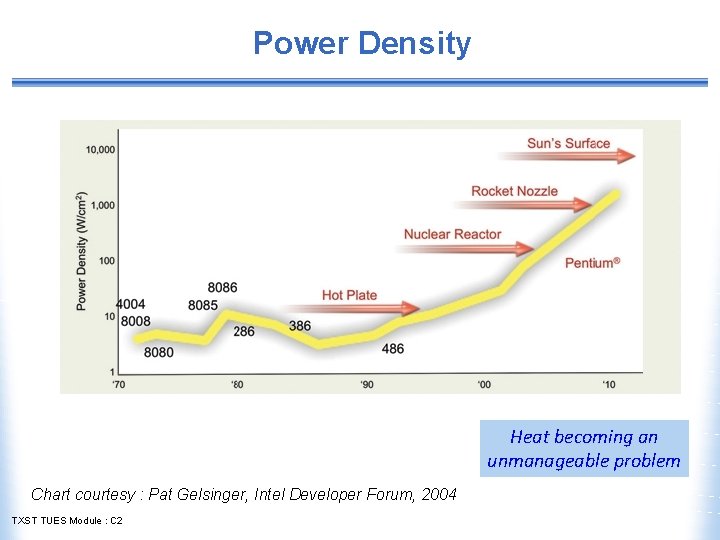

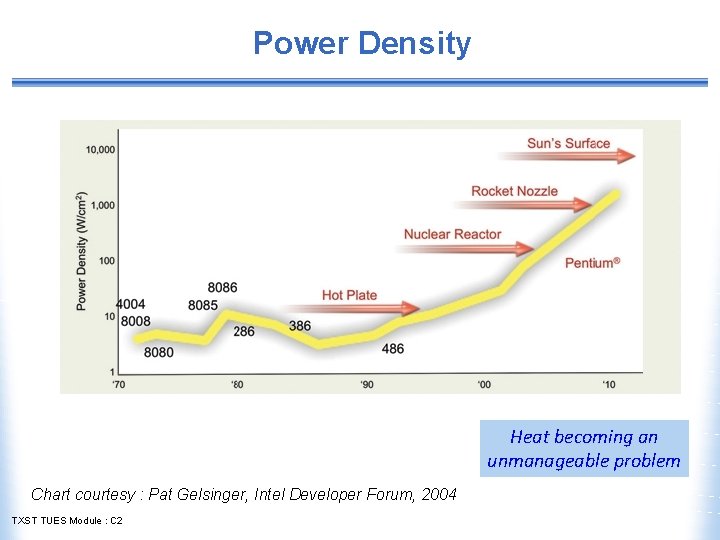

Power Density

- Heat is becoming an unmanageable problem

Chart courtesy: Pat Gelsinger, Intel Developer Forum, 2004

The Power Wall

- Moore's law still holds, but turning the extra transistors into ever-faster single cores does not seem economically feasible
  - Power dissipation (and associated costs) too high
- Solution
  - Put multiple simplified cores in the same chip area
  - Less power dissipation => less heat => lower cost

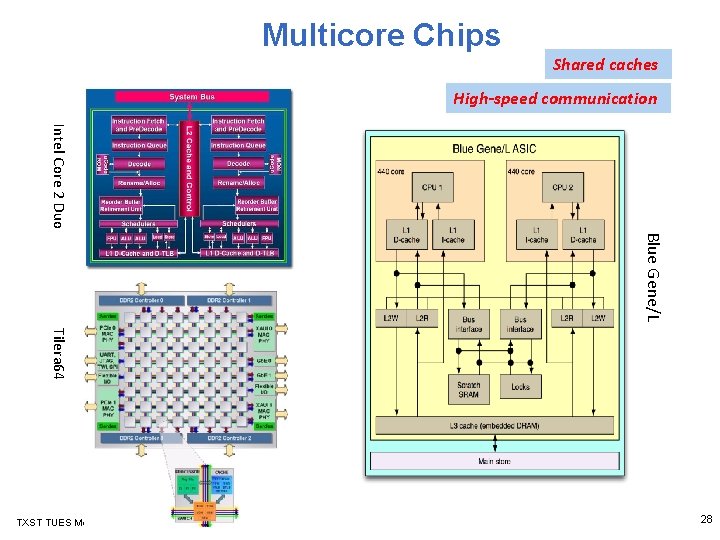

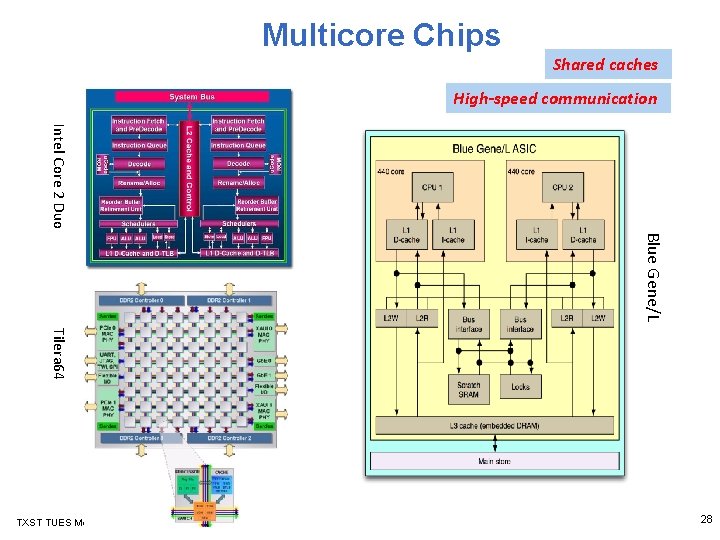

Multicore Chips

- Shared caches
- High-speed communication

Examples: Intel Core 2 Duo, Blue Gene/L, Tilera TILE64

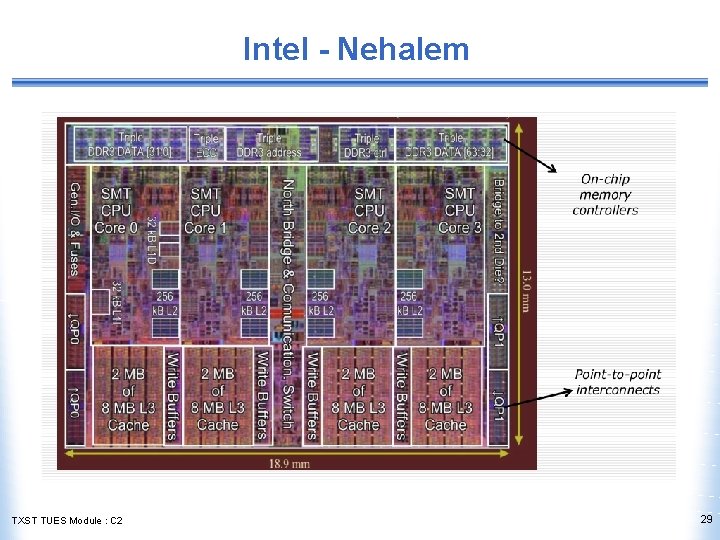

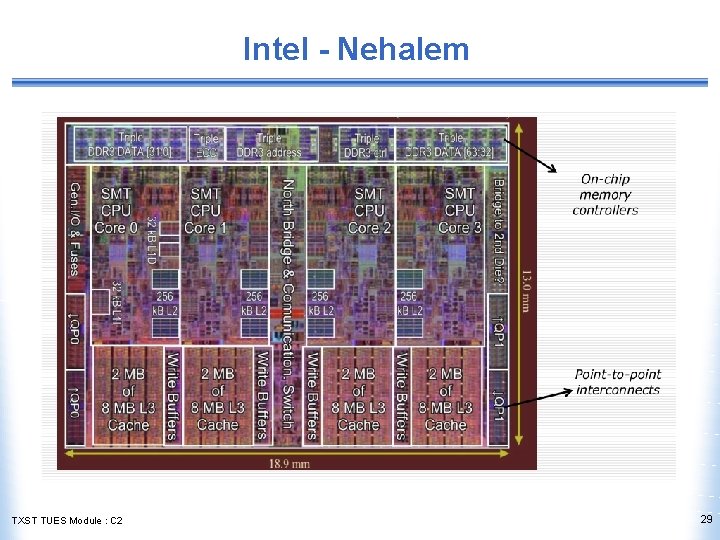

Intel Nehalem

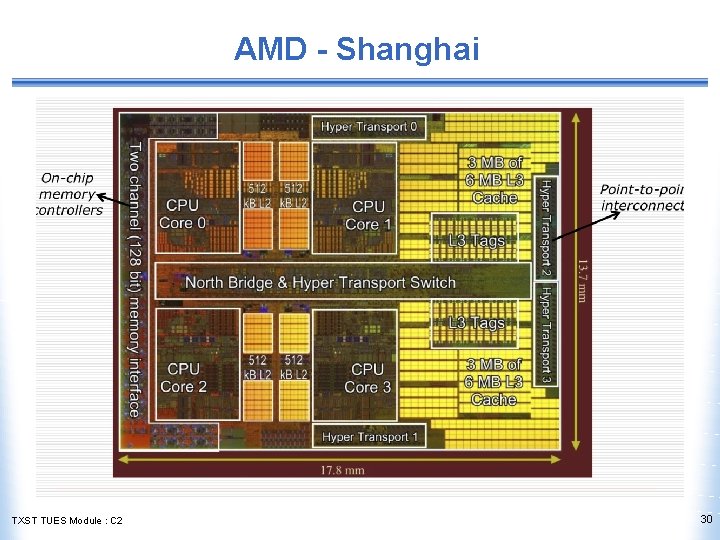

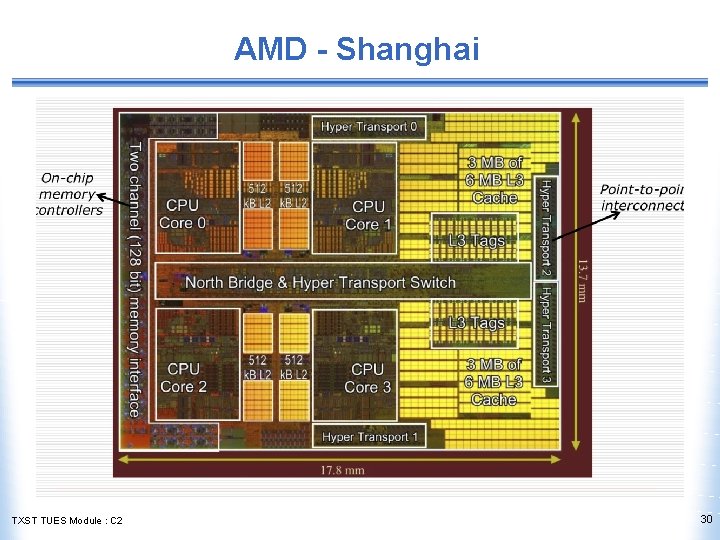

AMD Shanghai

CMP Architectural Considerations

In a way, each multicore chip is an SMP:

- memory => cache
- processor => core

Architectural considerations are the same as for SMPs:

- Scalability
  - How many cores can we hook up to an L2 cache?
- Sharing
  - How do concurrent threads share data?
  - Through the LLC (last-level cache) or memory
  - Cache coherence protocols
- Communication
  - How do threads communicate?
  - Semaphores and locks; use the cache if possible

Simultaneous Multithreading (SMT)

- Many architectures today support multiple hardware threads
- SMT uses the resources of a superscalar processor to exploit both ILP and thread-level parallelism
  - Having more instructions to choose from gives the scheduler more opportunities
  - No dependences between threads from different programs
  - Need to rename registers
- Intel calls its SMT technology Hyper-Threading
- On most machines today, you have SMT on every core
- In principle, a quad-core machine with Hyper-Threading gives you 8 processors
  - Logical cores
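A quick way to see logical cores on Linux, macOS, or the BSDs is to ask the OS how many processors are online. `sysconf(_SC_NPROCESSORS_ONLN)` is a widely supported extension rather than part of POSIX, and it counts hardware threads, so on a quad-core part with 2-way Hyper-Threading enabled it would typically report 8. `logical_processors` is a hypothetical helper name.

```c
#include <assert.h>
#include <unistd.h>

/* Number of logical processors (hardware threads) currently online.
 * With SMT enabled this exceeds the number of physical cores. */
long logical_processors(void) {
    long n = sysconf(_SC_NPROCESSORS_ONLN);   /* -1 if the value is unavailable */
    return n > 0 ? n : 1;                       /* fall back to 1 if unknown */
}
```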

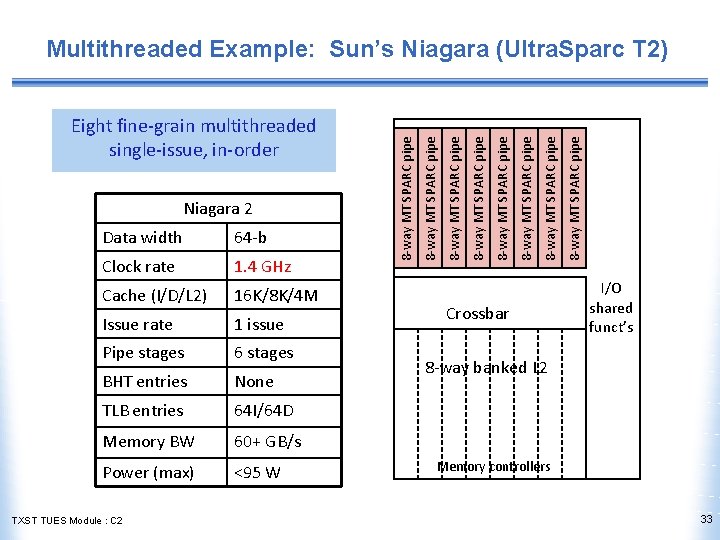

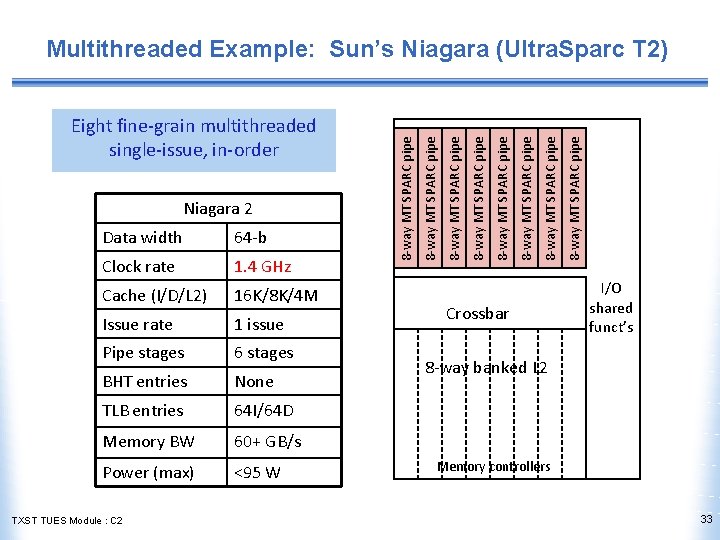

Multithreaded Example: Sun's Niagara (UltraSPARC T2)

- Eight fine-grain multithreaded, single-issue, in-order cores

[Figure: eight 8-way multithreaded SPARC pipes connected through a crossbar to an 8-way banked L2 cache, memory controllers, and shared I/O functions]

| | Niagara 2 |
|---|---|
| Data width | 64-b |
| Clock rate | 1.4 GHz |
| Cache (I/D/L2) | 16K/8K/4M |
| Issue rate | 1 issue |
| Pipe stages | 6 stages |
| BHT entries | None |
| TLB entries | 64I/64D |
| Memory BW | 60+ GB/s |
| Power (max) | <95 W |

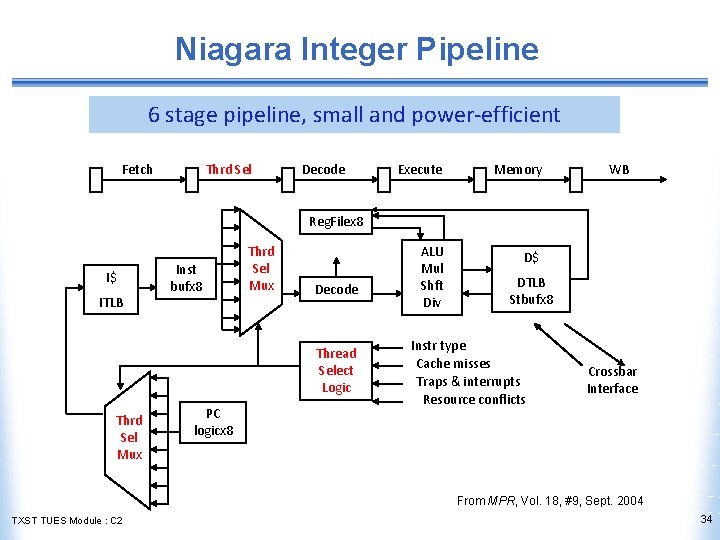

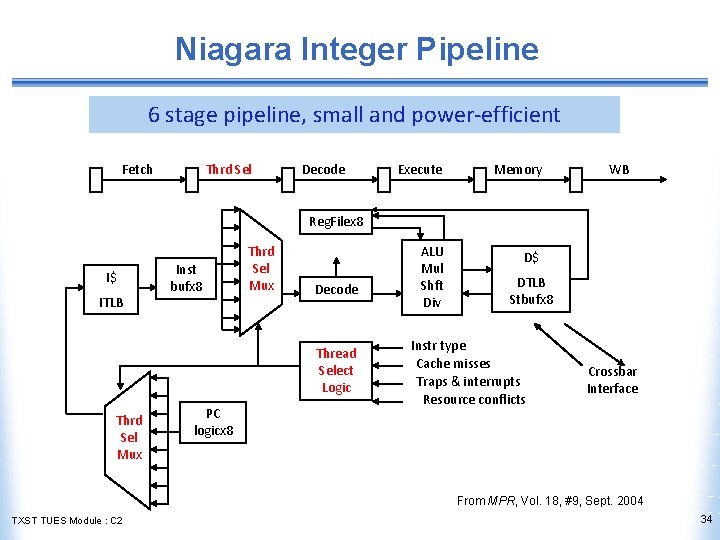

Niagara Integer Pipeline

- 6-stage pipeline, small and power-efficient

[Figure: stages Fetch, Thread Select, Decode, Execute (ALU, Mul, Shift, Div), Memory (D$, DTLB, store buffers x8), and Writeback; per-thread (x8) PC logic, instruction buffers, and register files; thread-select logic driven by instruction type, cache misses, traps & interrupts, and resource conflicts; crossbar interface]

From MPR, Vol. 18, #9, Sept. 2004

SMT Issues

- The processor must duplicate the state hardware for each thread
  - A separate register file, PC, instruction buffer, and store buffer for each thread
- The caches, TLBs, and BHT can be shared
  - Although the miss rates may increase if they are not sized accordingly
- Memory can be shared through virtual memory mechanisms
- Hardware must support efficient thread context switching

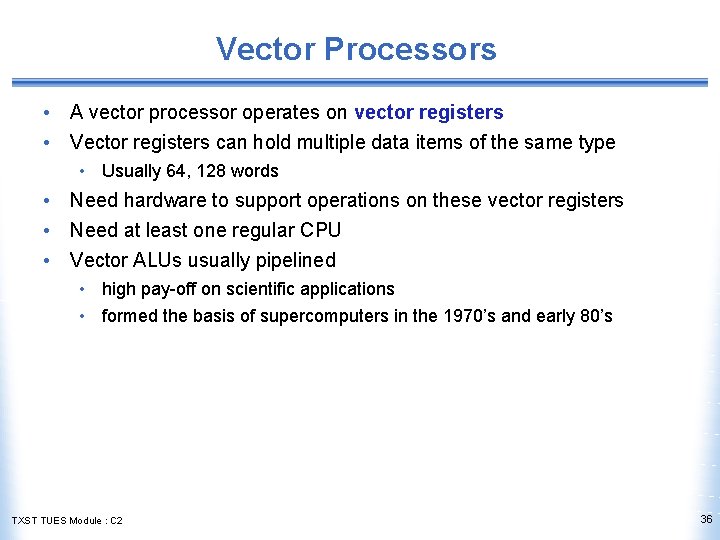

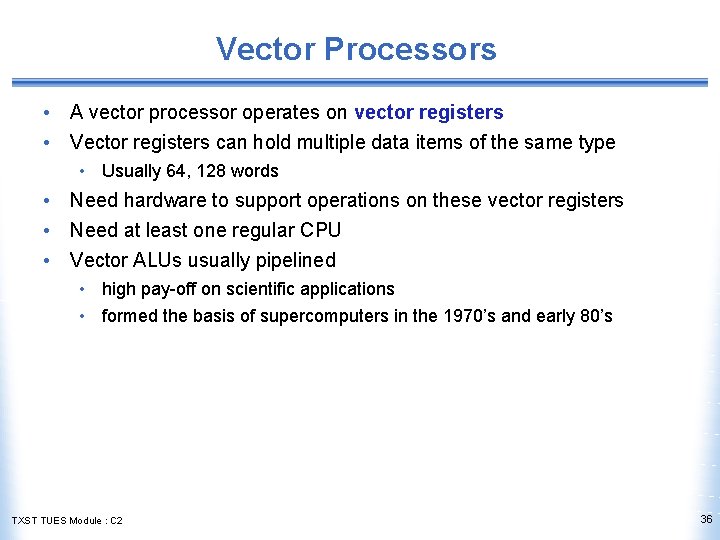

Vector Processors

- A vector processor operates on vector registers
  - Vector registers can hold multiple data items of the same type
  - Usually 64 or 128 words
- Needs hardware to support operations on these vector registers
- Needs at least one regular CPU
- Vector ALUs are usually pipelined
- High payoff on scientific applications
- Formed the basis of supercomputers in the 1970s and early 1980s
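Vector registers hold a fixed number of elements, so a loop longer than a register is processed in strips ("strip mining"). This sketch assumes a maximum vector length `MVL` of 64 and emulates each strip with a scalar inner loop that stands in for one set of vector load, multiply-add, and store instructions. The function name and its return value (the number of strips) are illustrative.

```c
#include <assert.h>
#include <stddef.h>

#define MVL 64   /* assumed maximum vector length (elements per vector register) */

/* y = a*x + y, processed in strips of at most MVL elements.
 * Returns the number of strips (i.e., vector instruction groups) used. */
size_t daxpy_strip_mined(size_t n, double a, const double *x, double *y) {
    assert(n == 0 || (x != NULL && y != NULL));
    size_t strips = 0;
    for (size_t low = 0; low < n; low += MVL) {
        size_t vl = (n - low < MVL) ? n - low : MVL;   /* vector length for this strip */
        /* On a vector machine, this inner loop is a single vector operation. */
        for (size_t i = 0; i < vl; i++)
            y[low + i] = a * x[low + i] + y[low + i];
        strips++;
    }
    return strips;
}
```

With n = 130, the loop runs three strips of 64, 64, and 2 elements.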

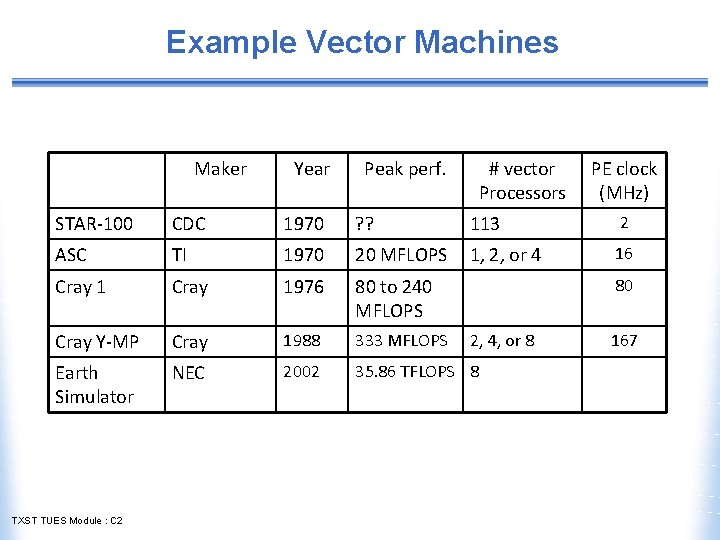

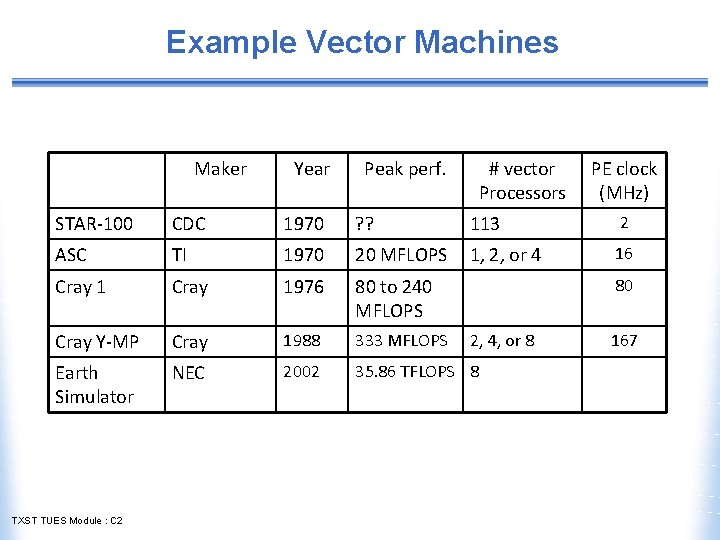

Example Vector Machines

| Machine | Maker | Year | Peak perf. | # vector processors | PE clock (MHz) |
|---|---|---|---|---|---|
| STAR-100 | CDC | 1970 | ?? | 2 | 113 |
| ASC | TI | 1970 | 20 MFLOPS | 1, 2, or 4 | 16 |
| Cray 1 | Cray | 1976 | 80 to 240 MFLOPS | | 80 |
| Cray Y-MP | Cray | 1988 | 333 MFLOPS | 2, 4, or 8 | 167 |
| Earth Simulator | NEC | 2002 | 35.86 TFLOPS | 8 | |

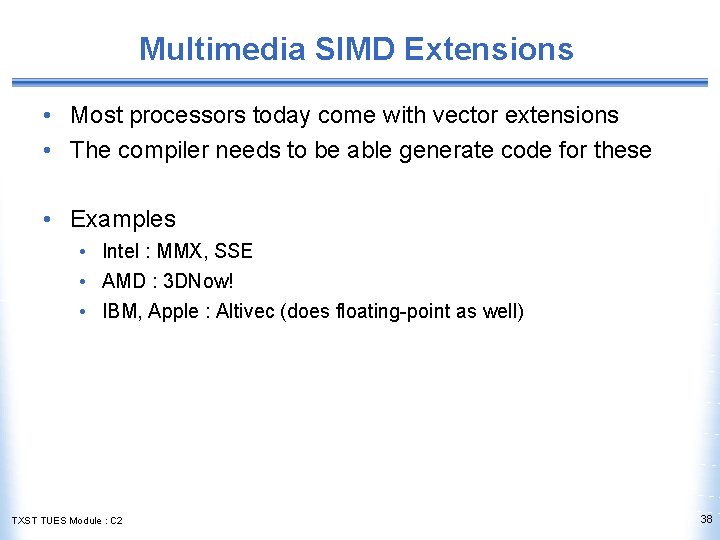

Multimedia SIMD Extensions

- Most processors today come with vector extensions
- The compiler needs to be able to generate code for these
- Examples
  - Intel: MMX, SSE
  - AMD: 3DNow!
  - IBM, Apple: AltiVec (does floating-point as well)
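As a sketch of what these extensions look like to a programmer, the function below adds two float arrays with SSE intrinsics from `<xmmintrin.h>` (four floats per 128-bit register). It falls back to a scalar loop when `__SSE__` is not defined, and uses the same loop for leftover elements. `add_floats` is a hypothetical name; in practice a compiler can often auto-vectorize the plain scalar loop at higher optimization levels.

```c
#include <assert.h>
#include <stddef.h>
#if defined(__SSE__)
#include <xmmintrin.h>   /* SSE intrinsics: __m128, _mm_loadu_ps, _mm_add_ps, ... */
#endif

/* c[i] = a[i] + b[i] for i in [0, n). */
void add_floats(size_t n, const float *a, const float *b, float *c) {
    assert(n == 0 || (a != NULL && b != NULL && c != NULL));
    size_t i = 0;
#if defined(__SSE__)
    for (; i + 4 <= n; i += 4) {
        __m128 va = _mm_loadu_ps(a + i);            /* unaligned load of 4 floats */
        __m128 vb = _mm_loadu_ps(b + i);
        _mm_storeu_ps(c + i, _mm_add_ps(va, vb));   /* 4 additions in one instruction */
    }
#endif
    for (; i < n; i++)                               /* remainder, or scalar fallback */
        c[i] = a[i] + b[i];
}
```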

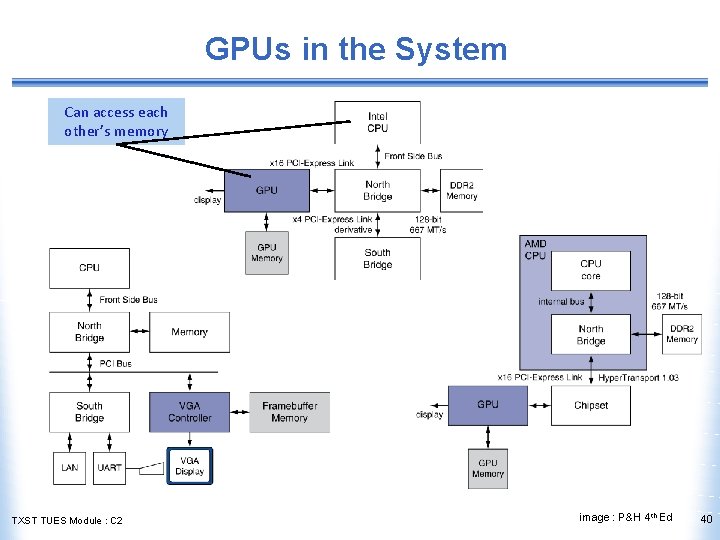

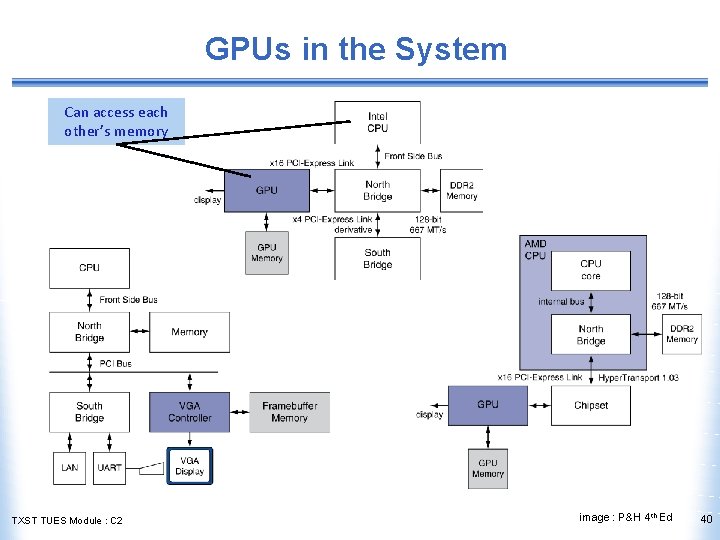

GPUs

- Graphics cards have come a long way since the early days of 8-bit graphics
- Most machines today come with a graphics processing unit that is highly powerful and also capable of general-purpose computation
- NVIDIA is leading the market (GTX, Tesla)
- AMD is competing (ATI)
- Intel is somewhat behind
  - May change with MICs (Many Integrated Core)

GPUs in the System

- CPU and GPU can access each other's memory

image: P&H 4th Ed.

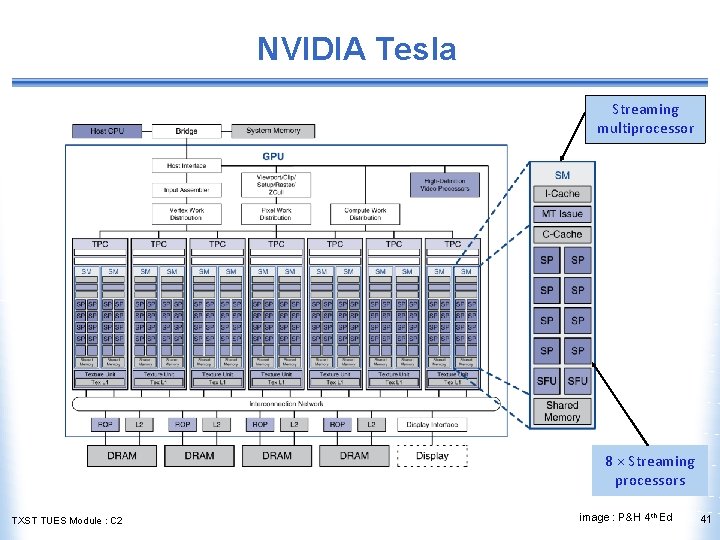

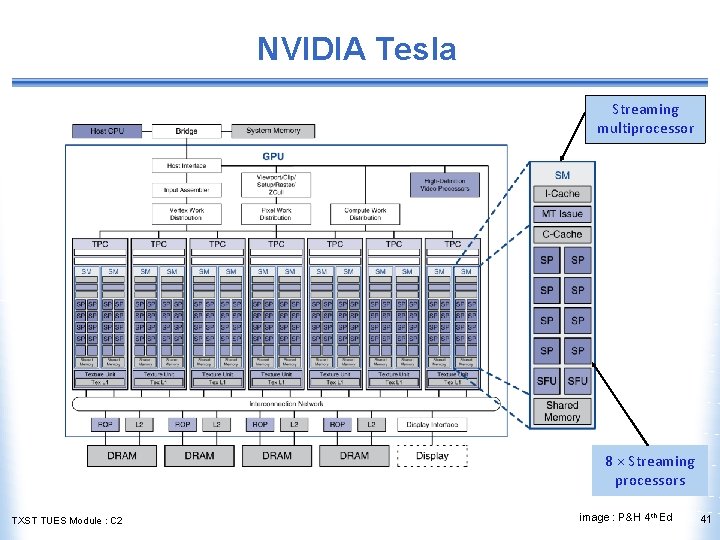

NVIDIA Tesla

[Figure: a streaming multiprocessor containing 8 streaming processors]

image: P&H 4th Ed.

GPU Architectures

- Many processing cores
  - Cores not as powerful as CPU cores
- Processing is highly data-parallel
- Use thread switching to hide memory latency
- Less reliance on multi-level caches
- Graphics memory is wide and high-bandwidth
- Trend toward heterogeneous CPU/GPU systems in HPC
  - See the TOP500 list
- Programming languages/APIs
  - Compute Unified Device Architecture (CUDA) from NVIDIA
  - OpenCL for ATI (OpenCL itself is a vendor-neutral standard)

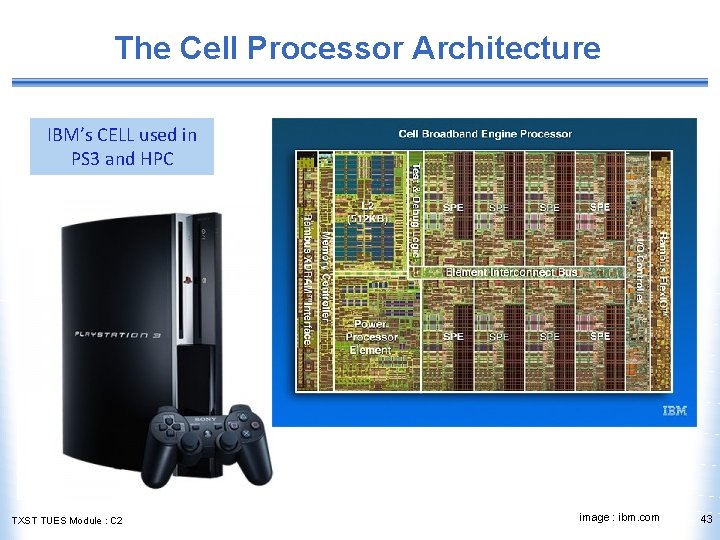

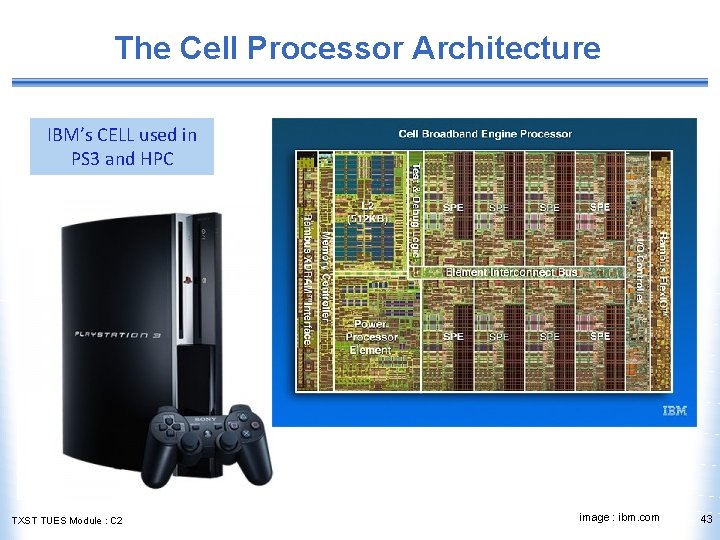

The Cell Processor Architecture

- IBM's Cell, used in the PS3 and in HPC

image: ibm.com

Types of Parallelism

- Models of parallelism
  - Message passing
  - Shared memory
- Classification based on decomposition
  - Data parallelism
  - Task parallelism
  - Pipelined parallelism
- TLP (thread-level parallelism) vs. ILP (instruction-level parallelism)
- Other related terms
  - Massively parallel
  - Petascale, exascale computing
  - Embarrassingly parallel

Flynn's Taxonomy

- SISD: single instruction, single data stream
  - aka uniprocessor
- SIMD: single instruction, multiple data streams
  - Single control unit broadcasting operations to multiple datapaths
- MISD: multiple instruction, single data
  - No such machine
- MIMD: multiple instructions, multiple data streams
  - aka multiprocessors (SMPs, clusters)

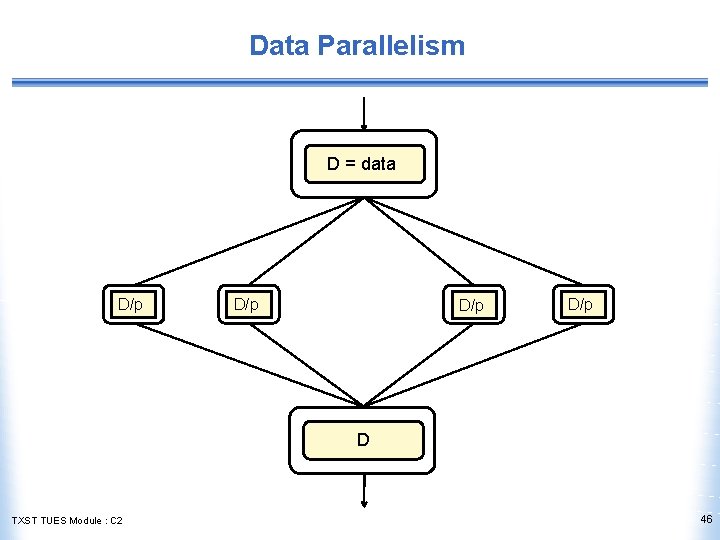

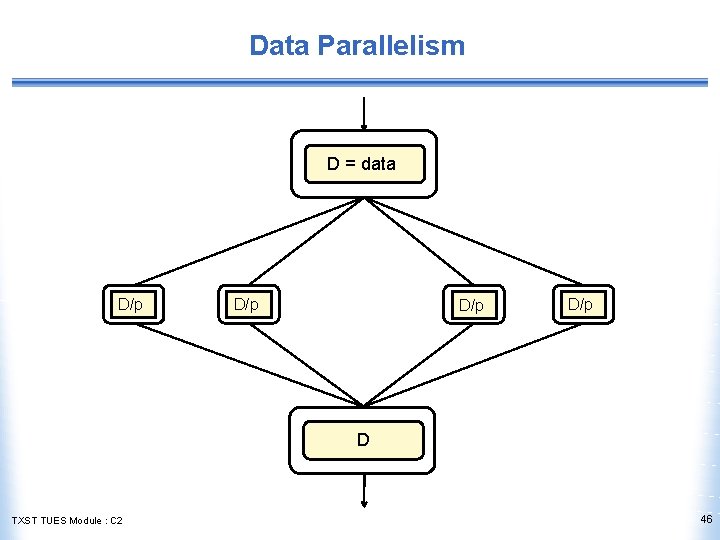

Data Parallelism

[Figure: data set D divided into p chunks of size D/p]

Data Parallelism

- Typically, the same task is applied to different parts of the data

[Figure: data set D divided into chunks of size D/p; threads are spawned, each processes one chunk, and then they synchronize]
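A minimal pthreads sketch of the spawn/synchronize pattern in the figure: a data set of n elements is split into p contiguous chunks of roughly n/p elements, each thread applies the same task (summing) to its own chunk, and the main thread joins the threads and combines their partial results. `parallel_sum`, `chunk_t`, and `MAX_THREADS` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define MAX_THREADS 16

typedef struct {
    const long *data;
    size_t lo, hi;    /* this thread's chunk: data[lo, hi) */
    long partial;     /* this thread's result */
} chunk_t;

/* The same task, applied to a different part of the data in each thread. */
static void *sum_chunk(void *arg) {
    chunk_t *c = arg;
    long s = 0;
    for (size_t i = c->lo; i < c->hi; i++) s += c->data[i];
    c->partial = s;
    return NULL;
}

long parallel_sum(const long *data, size_t n, int p) {
    assert(p >= 1 && p <= MAX_THREADS);
    pthread_t tid[MAX_THREADS];
    chunk_t chunk[MAX_THREADS];
    for (int t = 0; t < p; t++) {
        chunk[t].data = data;
        chunk[t].lo = n * (size_t)t / (size_t)p;          /* contiguous, nearly equal */
        chunk[t].hi = n * (size_t)(t + 1) / (size_t)p;
        pthread_create(&tid[t], NULL, sum_chunk, &chunk[t]);   /* spawn */
    }
    long total = 0;
    for (int t = 0; t < p; t++) {
        pthread_join(tid[t], NULL);                               /* synchronize */
        total += chunk[t].partial;
    }
    return total;
}
```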

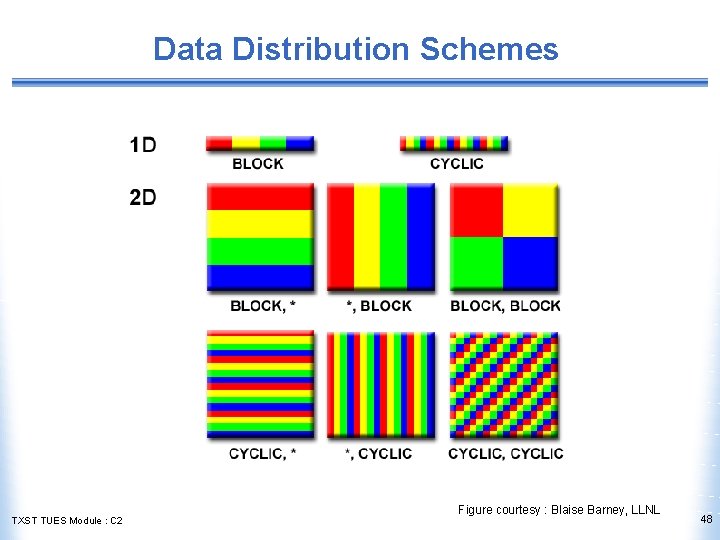

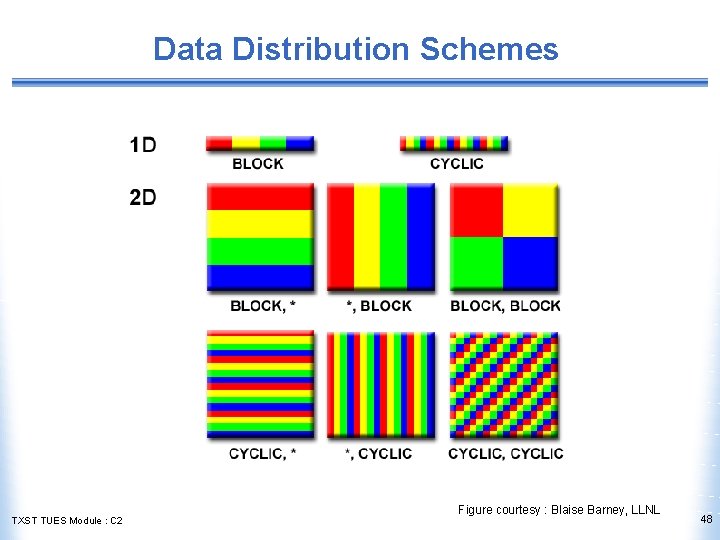

Data Distribution Schemes

Figure courtesy: Blaise Barney, LLNL

Example: Data-Parallel Code in OpenMP

```fortran
!$omp parallel do private(i, j)
do j = 1, N
   do i = 1, M
      a(i, j) = 17
   enddo
   b(j) = 17
   c(j) = 17
   d(j) = 17
enddo
!$omp end parallel do
```

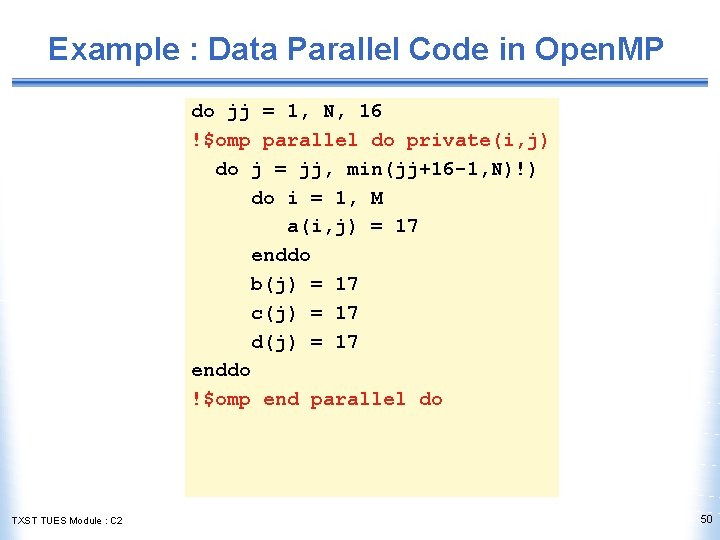

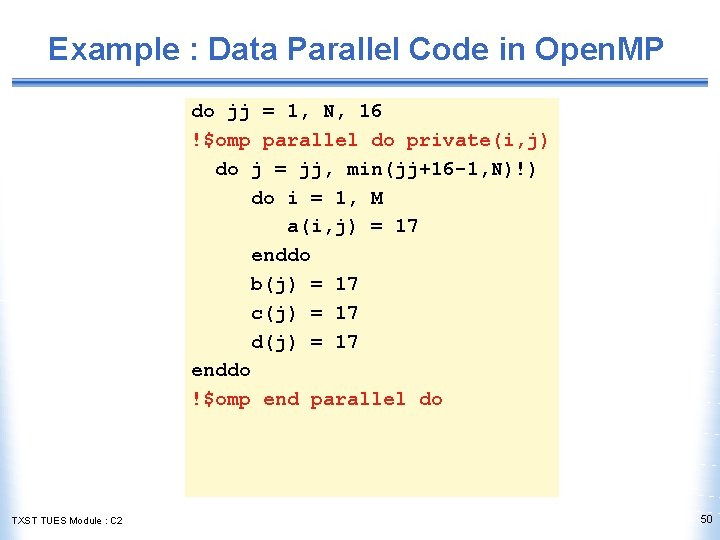

Example: Data-Parallel Code in OpenMP

```fortran
do jj = 1, N, 16
!$omp parallel do private(i, j)
   do j = jj, min(jj + 16 - 1, N)
      do i = 1, M
         a(i, j) = 17
      enddo
      b(j) = 17
      c(j) = 17
      d(j) = 17
   enddo
!$omp end parallel do
enddo
```

Shared-Cache and Data Parallelization

[Figure: data set D divided into k chunks of size D/k, with D/k ≤ cache capacity]

- Shared caches make the task of finding k much more difficult
  - Minimizing communication is no longer the sole objective
- Parallel algorithms for CMPs need to be more cache-aware

Data Parallelism

- This type of parallelism is sometimes referred to as loop-level parallelism
- Quite common in scientific computation
- Also sorting: which sorting algorithms?

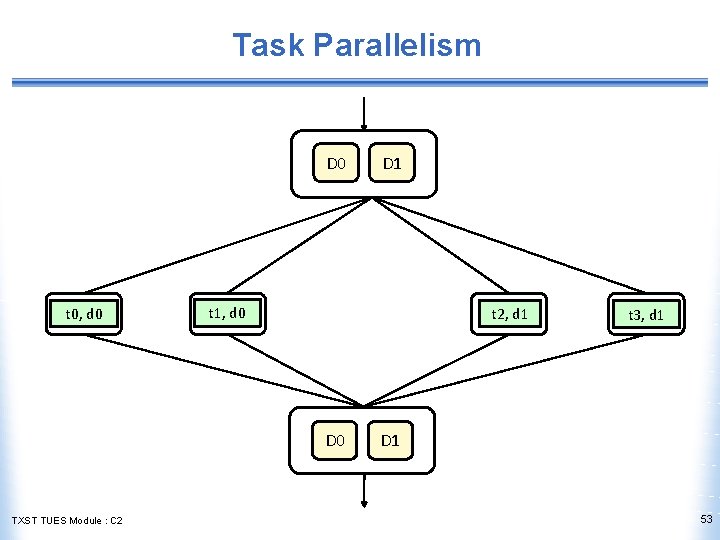

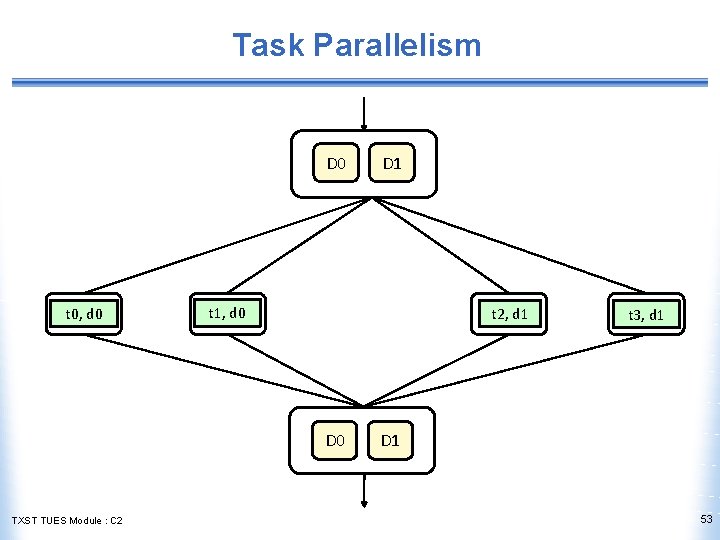

Task Parallelism

[Figure: different tasks on different data: tasks t0 and t1 operate on data D0; tasks t2 and t3 operate on data D1]

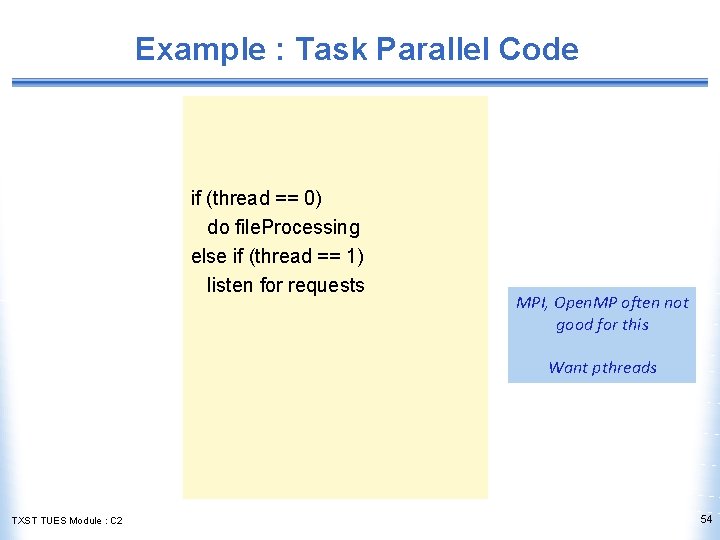

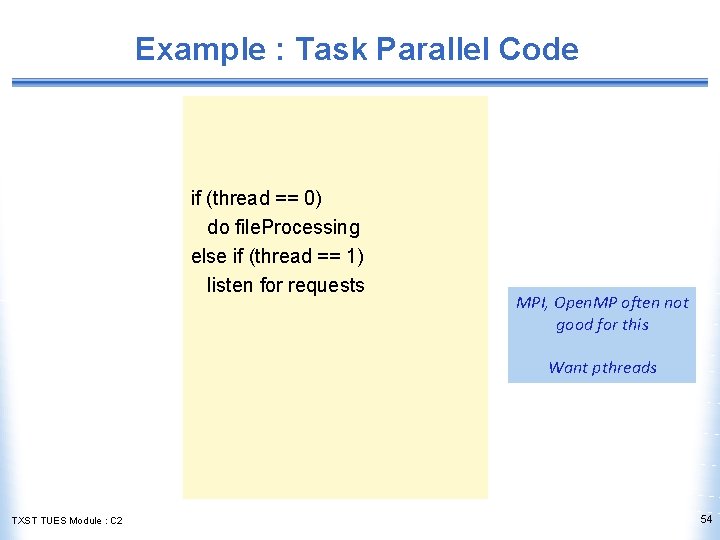

Example: Task-Parallel Code

```
if (thread == 0)
    do fileProcessing
else if (thread == 1)
    listen for requests
```

- MPI and OpenMP are often not a good fit for this
- Want pthreads
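The pseudocode can be made concrete with pthreads by giving each thread a different start function. `file_processing` and `listen_for_requests` here are hypothetical stand-ins that only count iterations; a real server would perform I/O. Because the two tasks touch disjoint data, no locking is needed.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical per-task state; each task writes only its own counter. */
static int files_processed = 0;
static int requests_handled = 0;

static void *file_processing(void *arg) {        /* task for thread 0 */
    (void)arg;
    for (int i = 0; i < 3; i++) files_processed++;    /* pretend to process 3 files */
    return NULL;
}

static void *listen_for_requests(void *arg) {    /* task for thread 1 */
    (void)arg;
    for (int i = 0; i < 5; i++) requests_handled++;   /* pretend to serve 5 requests */
    return NULL;
}

/* Task parallelism: each thread runs a different function. */
void run_tasks(void) {
    pthread_t t0, t1;
    files_processed = 0;
    requests_handled = 0;
    pthread_create(&t0, NULL, file_processing, NULL);
    pthread_create(&t1, NULL, listen_for_requests, NULL);
    pthread_join(t0, NULL);
    pthread_join(t1, NULL);
}
```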

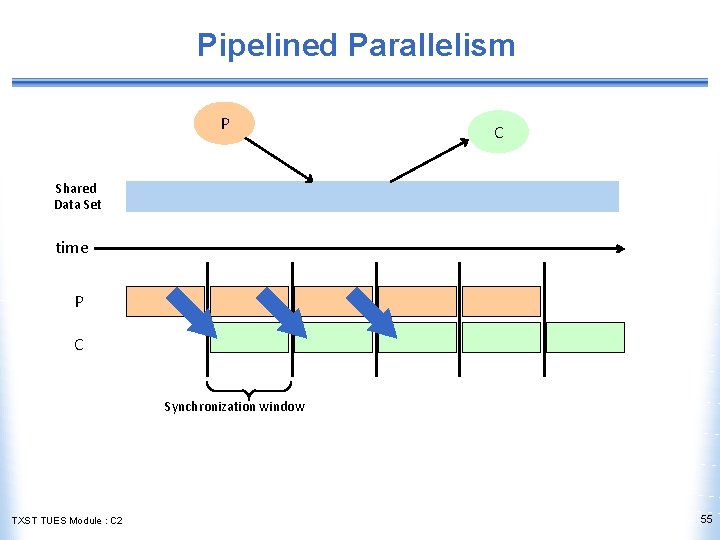

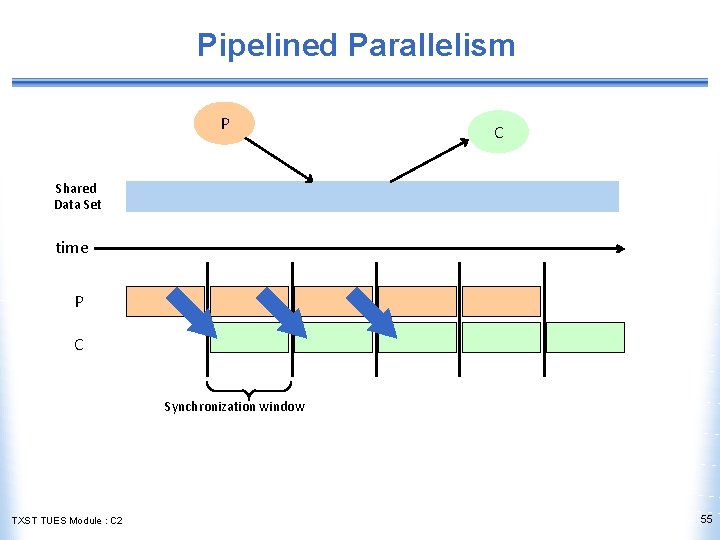

Pipelined Parallelism

[Figure: a producer (P) and a consumer (C) working on a shared data set over time, separated by a synchronization window]
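One common way to implement a producer/consumer pipeline is a bounded buffer guarded by a mutex and two condition variables. In this sketch the buffer size `WINDOW` (assumed to be 4) plays the role of the synchronization window: the producer may run at most `WINDOW` items ahead of the consumer. All names (`channel_t`, `chan_put`, `chan_get`, `run_pipeline`) are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define WINDOW 4   /* assumed buffer size: how far the producer may run ahead */

typedef struct {
    int buf[WINDOW];
    size_t head, tail, count;
    pthread_mutex_t m;
    pthread_cond_t not_full, not_empty;
} channel_t;

static channel_t ch = {
    .m = PTHREAD_MUTEX_INITIALIZER,
    .not_full = PTHREAD_COND_INITIALIZER,
    .not_empty = PTHREAD_COND_INITIALIZER,
};

static void chan_put(channel_t *c, int v) {
    pthread_mutex_lock(&c->m);
    while (c->count == WINDOW)                 /* producer too far ahead: wait */
        pthread_cond_wait(&c->not_full, &c->m);
    c->buf[c->tail] = v;
    c->tail = (c->tail + 1) % WINDOW;
    c->count++;
    pthread_cond_signal(&c->not_empty);
    pthread_mutex_unlock(&c->m);
}

static int chan_get(channel_t *c) {
    pthread_mutex_lock(&c->m);
    while (c->count == 0)                      /* nothing produced yet: wait */
        pthread_cond_wait(&c->not_empty, &c->m);
    int v = c->buf[c->head];
    c->head = (c->head + 1) % WINDOW;
    c->count--;
    pthread_cond_signal(&c->not_full);
    pthread_mutex_unlock(&c->m);
    return v;
}

static int n_items;
static long consumed_sum;

static void *producer(void *arg) {            /* stage P */
    (void)arg;
    for (int i = 1; i <= n_items; i++) chan_put(&ch, i * i);
    return NULL;
}

static void *consumer(void *arg) {            /* stage C */
    (void)arg;
    long s = 0;
    for (int i = 1; i <= n_items; i++) s += chan_get(&ch);
    consumed_sum = s;
    return NULL;
}

long run_pipeline(int n) {
    pthread_t p, c;
    assert(n >= 0);
    n_items = n;
    pthread_create(&p, NULL, producer, NULL);
    pthread_create(&c, NULL, consumer, NULL);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    return consumed_sum;
}
```

`run_pipeline(100)` returns the sum of the squares 1 through 100, which is 338350, regardless of how the two threads are scheduled.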

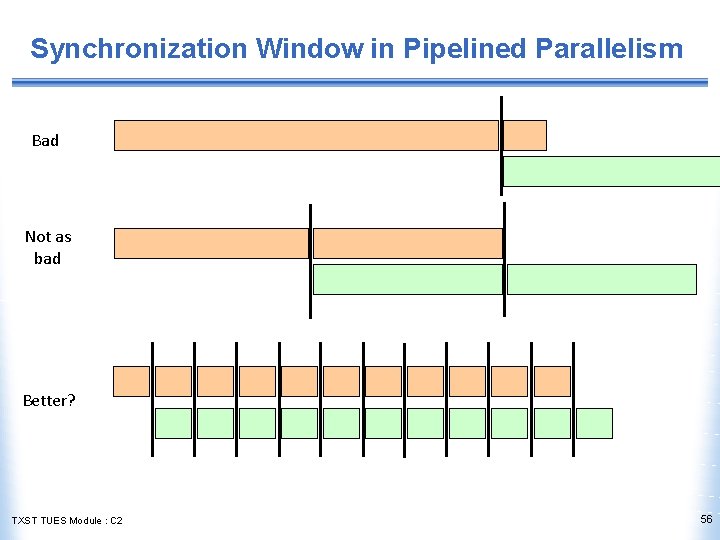

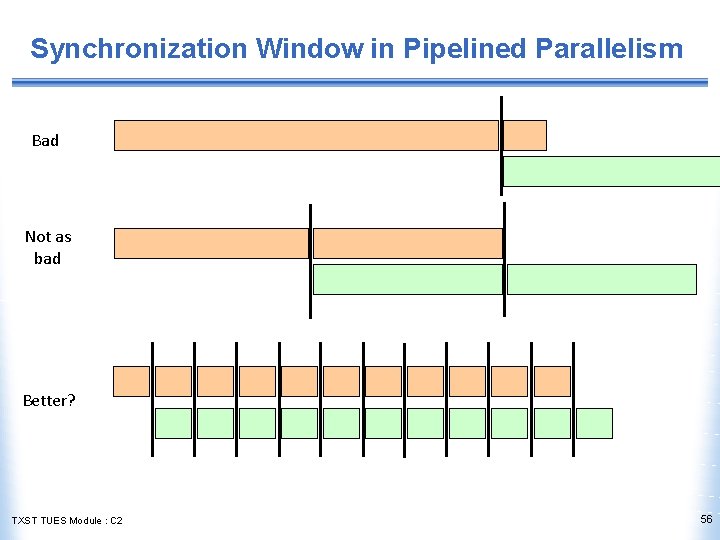

Synchronization Window in Pipelined Parallelism

[Figure: three synchronization-window sizes labeled Bad, Not as bad, and Better?]

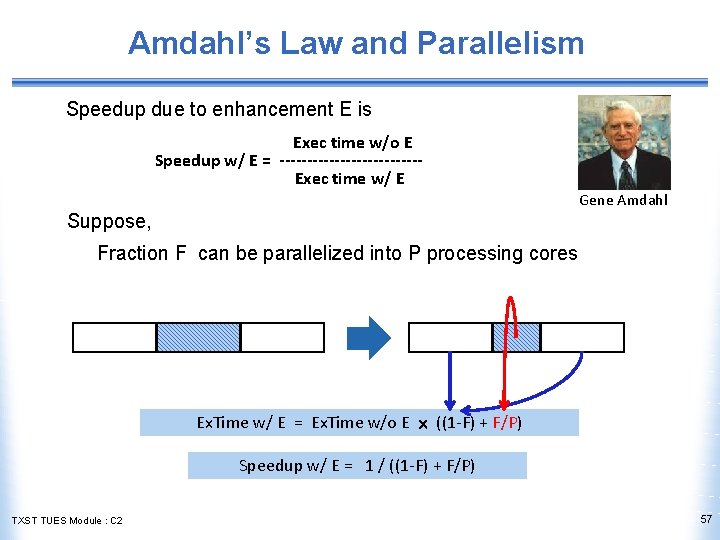

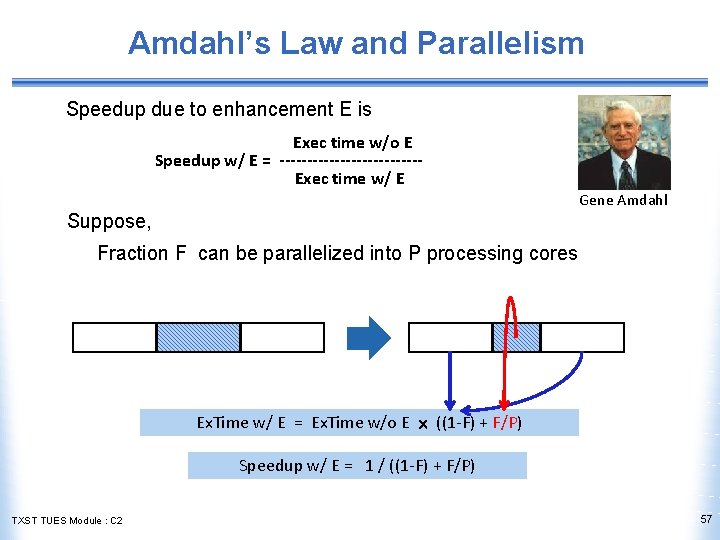

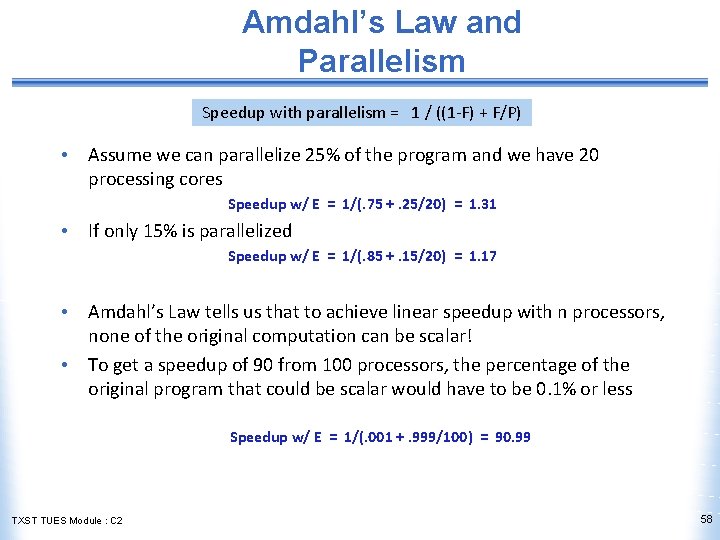

Amdahl's Law and Parallelism

Speedup due to enhancement E is

$$\text{Speedup w/ E} = \frac{\text{Exec time w/o E}}{\text{Exec time w/ E}}$$

Suppose a fraction F of the program can be parallelized across P processing cores. Then

$$\text{Exec time w/ E} = \text{Exec time w/o E} \times \left( (1 - F) + \frac{F}{P} \right)$$

$$\text{Speedup w/ E} = \frac{1}{(1 - F) + \frac{F}{P}}$$

(Photo: Gene Amdahl)

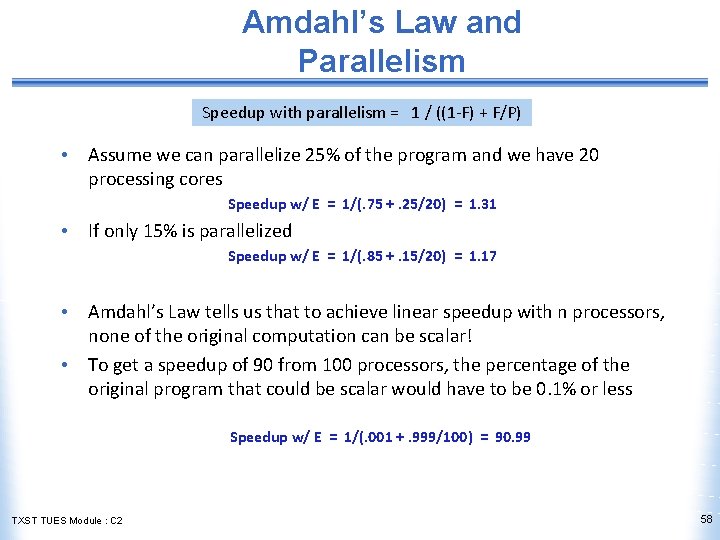

Amdahl's Law and Parallelism

Speedup with parallelism = 1 / ((1 − F) + F/P)

- Assume we can parallelize 25% of the program and we have 20 processing cores:
  Speedup w/ E = 1/(0.75 + 0.25/20) = 1.31
- If only 15% is parallelized:
  Speedup w/ E = 1/(0.85 + 0.15/20) = 1.17
- Amdahl's Law tells us that to achieve linear speedup with n processors, none of the original computation can be scalar!
- To get a speedup of 90 from 100 processors, the percentage of the original program that is scalar would have to be 0.1% or less:
  Speedup w/ E = 1/(0.001 + 0.999/100) = 90.99
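The worked numbers above can be reproduced directly from the formula. `amdahl_speedup` is a hypothetical helper; `f` is the parallel fraction F and `p` the number of cores P.

```c
#include <assert.h>

/* Amdahl's Law: speedup when fraction f of the execution time is
 * parallelized perfectly across p cores and (1 - f) stays serial. */
double amdahl_speedup(double f, int p) {
    assert(f >= 0.0 && f <= 1.0 && p >= 1);
    return 1.0 / ((1.0 - f) + f / p);
}
```

`amdahl_speedup(0.25, 20)` is about 1.31, `amdahl_speedup(0.15, 20)` about 1.17, and `amdahl_speedup(0.999, 100)` about 90.99.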

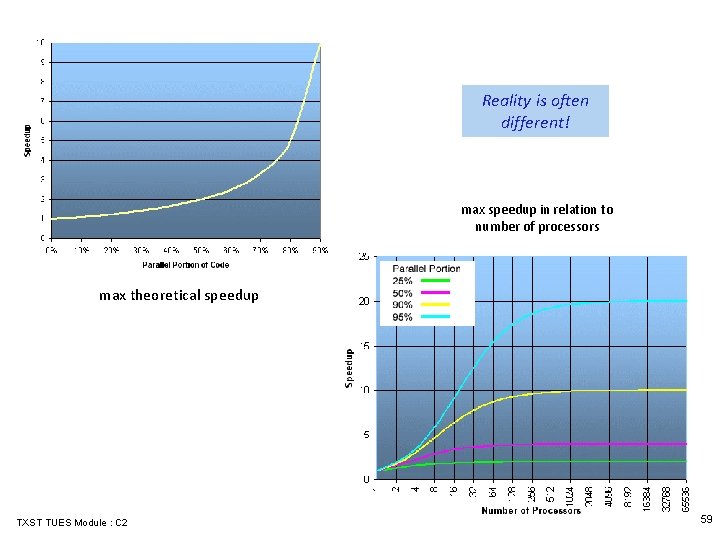

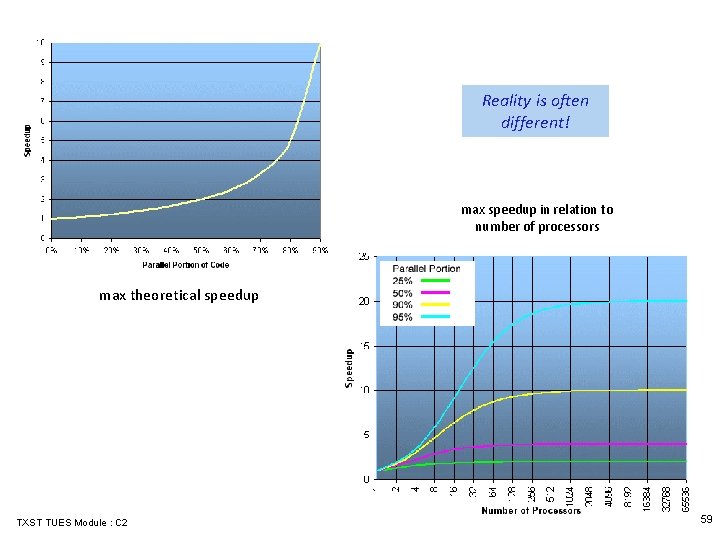

Reality is often different!

[Figure: maximum speedup as a function of the number of processors, compared with the maximum theoretical speedup]

Scaling

- Getting good speedup on a multiprocessor while keeping the problem size fixed is harder than getting good speedup by increasing the size of the problem
- Strong scaling
  - Speedup achieved on a multiprocessor without increasing the size of the problem
- Weak scaling
  - Speedup achieved on a multiprocessor by increasing the size of the problem in proportion to the increase in the number of processors
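Amdahl's Law models strong scaling. A common model for weak scaling is Gustafson's law: if the parallel work grows with the number of processors while the serial part stays fixed, the scaled speedup is (1 − F) + F·P, where F is the parallel fraction of the time measured on the P-processor run. The sketch contrasts the two under these assumptions; both function names are hypothetical.

```c
#include <assert.h>

/* Strong scaling (fixed problem size): Amdahl's Law. */
double strong_speedup(double f, int p) {
    assert(f >= 0.0 && f <= 1.0 && p >= 1);
    return 1.0 / ((1.0 - f) + f / p);
}

/* Weak scaling (problem grows with p): Gustafson's scaled speedup,
 * with f the parallel fraction of time on the p-processor run. */
double weak_speedup(double f, int p) {
    assert(f >= 0.0 && f <= 1.0 && p >= 1);
    return (1.0 - f) + f * p;
}
```

With F = 0.95 and P = 100, strong scaling gives a speedup of about 16.8, while weak scaling gives about 95.05, consistent with the point that good speedup is easier to obtain by growing the problem.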

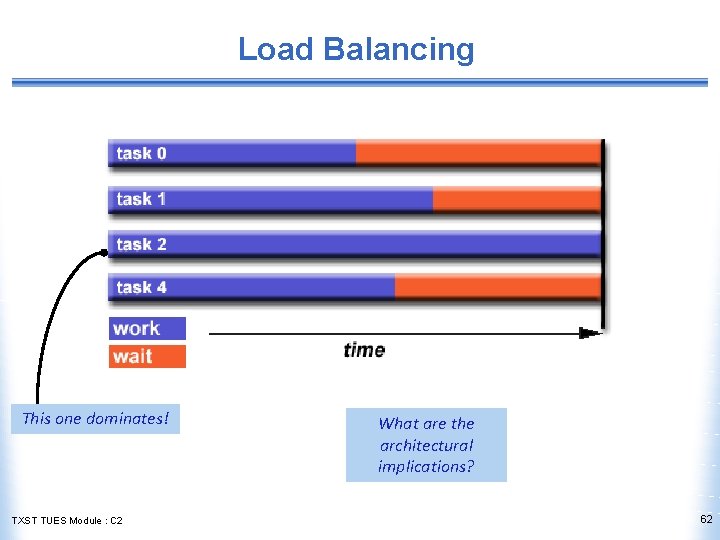

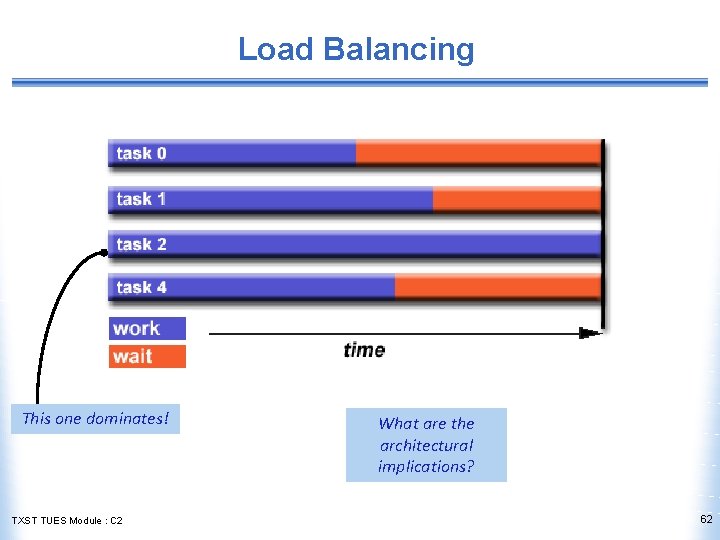

Load Balancing

- Load balancing is another important factor in parallel computing
  - A single processor with twice the load of the others cuts the speedup almost in half
  - If the complexity of the parallel threads varies, the complexity of the overall algorithm is dominated by the thread with the worst complexity
- Granularity of parallel tasks is important
  - Adapt granularity based on architectural characteristics
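The "twice the load" claim follows from a simple model in which the parallel time is set by the most heavily loaded processor. This sketch assumes no communication or scheduling overhead and takes the serial time to be the total work; `balanced_speedup` is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>

/* Speedup when processor i receives load[i] units of work:
 * serial time = total work, parallel time = largest single load. */
double balanced_speedup(const double *load, size_t p) {
    assert(p >= 1);
    double total = 0.0, max = 0.0;
    for (size_t i = 0; i < p; i++) {
        total += load[i];
        if (load[i] > max) max = load[i];
    }
    assert(max > 0.0);
    return total / max;
}
```

With 100 processors, perfectly balanced unit loads give a speedup of 100; doubling one processor's load gives 101 / 2 = 50.5, roughly half.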

Load Balancing

[Figure: one thread carries far more work than the others, annotated "This one dominates!"]

What are the architectural implications?