Internship at AMD: Exploring Coherency in GPU Ray Casting

- Slides: 92

Internship at AMD: Exploring Coherency in GPU Ray Casting

Myungbae Son, SGLab

Table of Contents
- Background
- Research at AMD
  - Related work
  - Approach
  - Results
- Future Plan

Background

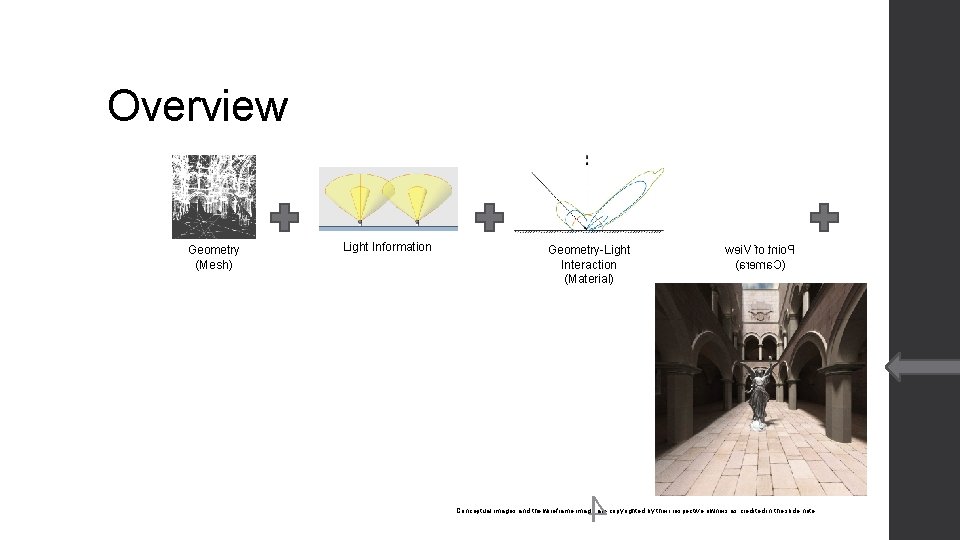

Overview
- Geometry (Mesh)
- Light Information
- Geometry-Light Interaction (Material)
- Point of View (Camera)

Conceptual images and the wireframe image are copyrighted by their respective owners as credited in the slide note.

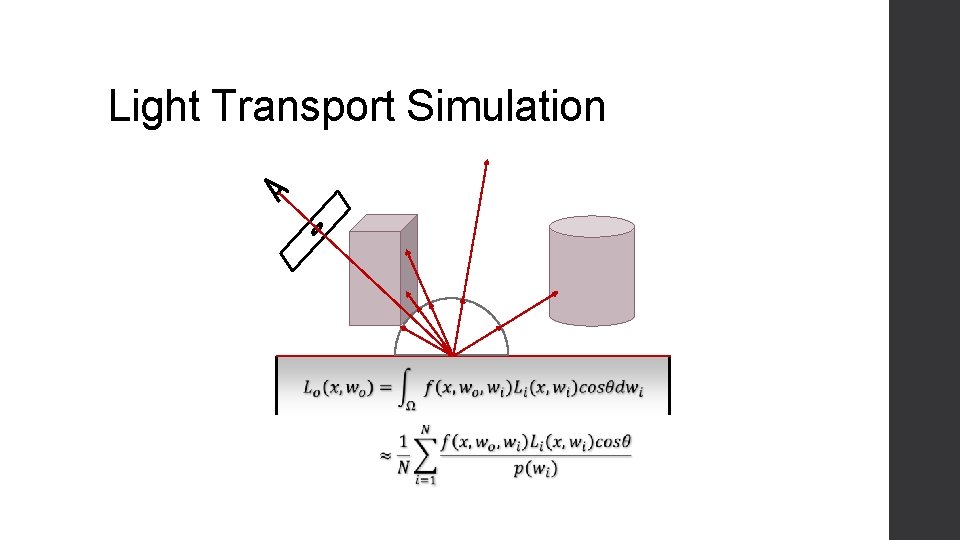

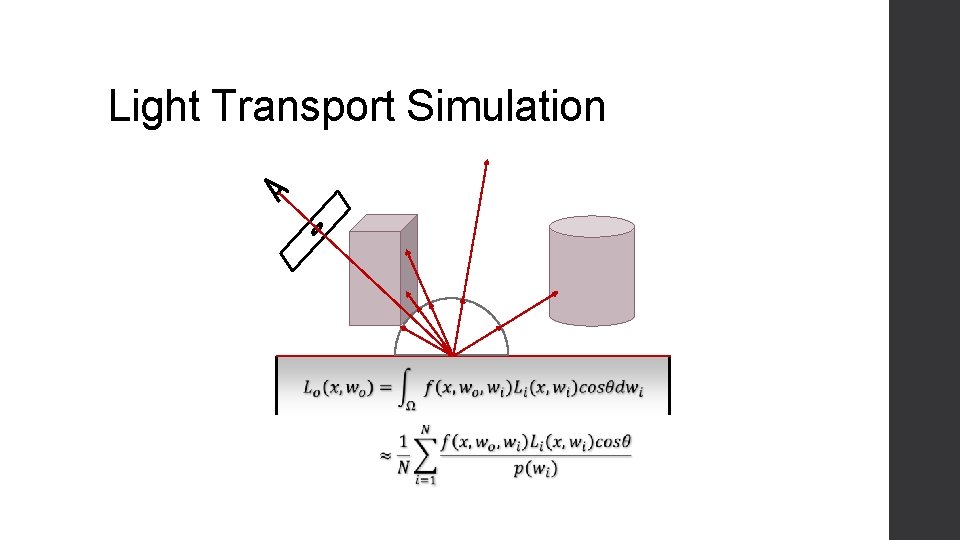

Light Transport Simulation

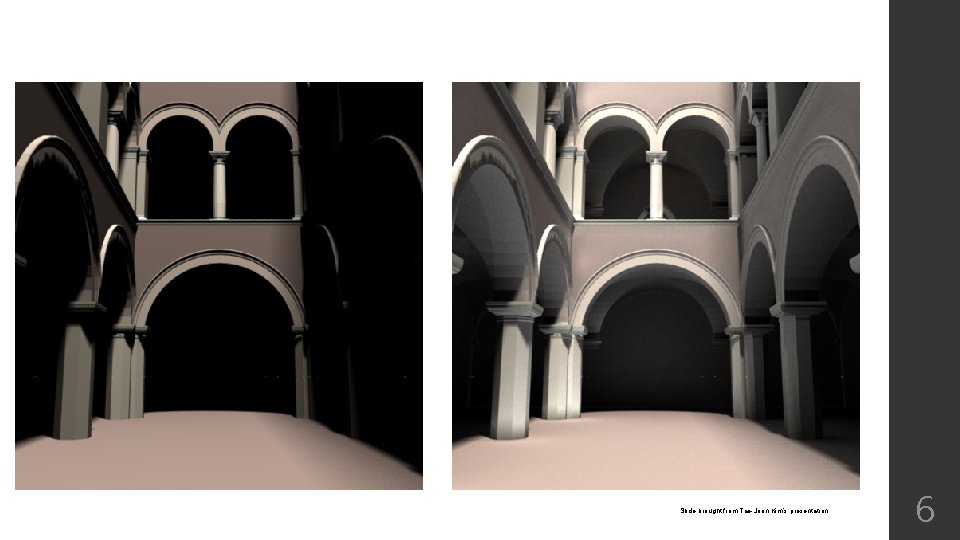

Slide borrowed from Tae-Joon Kim’s presentation.

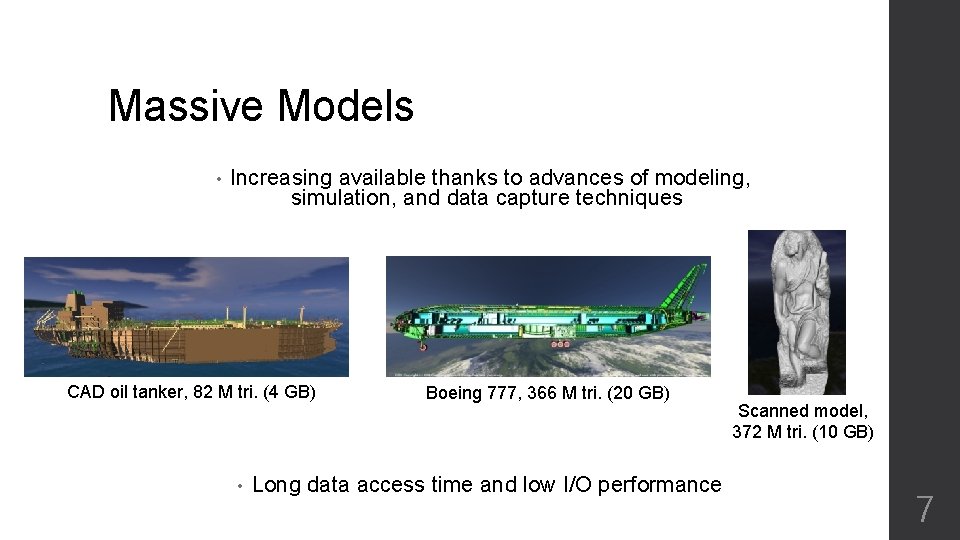

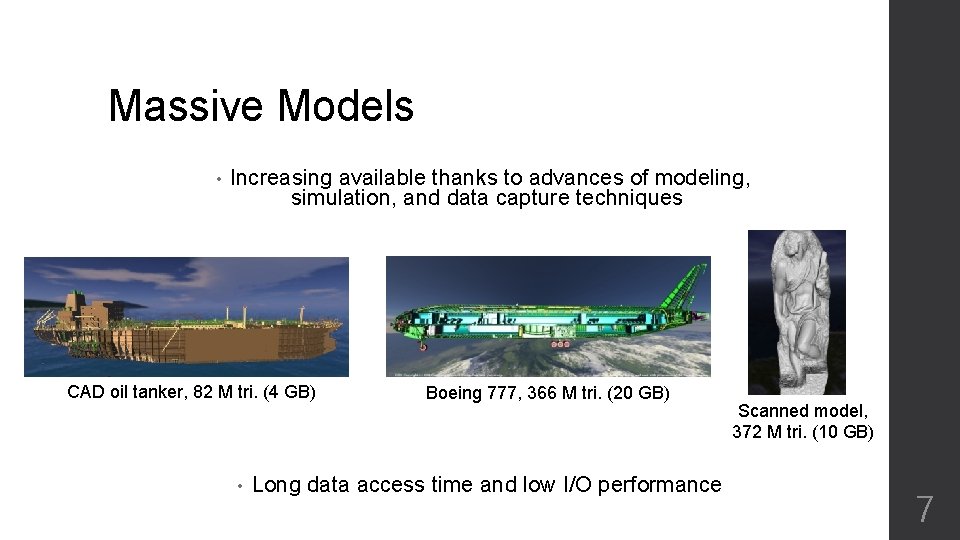

Massive Models
- Increasingly available thanks to advances in modeling, simulation, and data capture techniques
  - CAD: oil tanker, 82 M tri. (4 GB); Boeing 777, 366 M tri. (20 GB)
  - Scanned model, 372 M tri. (10 GB)
- Long data access times and low I/O performance

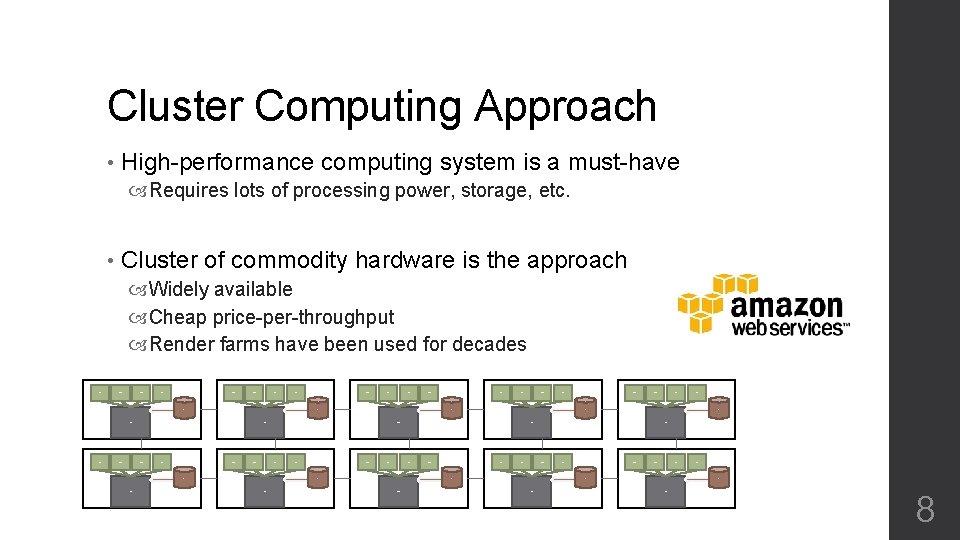

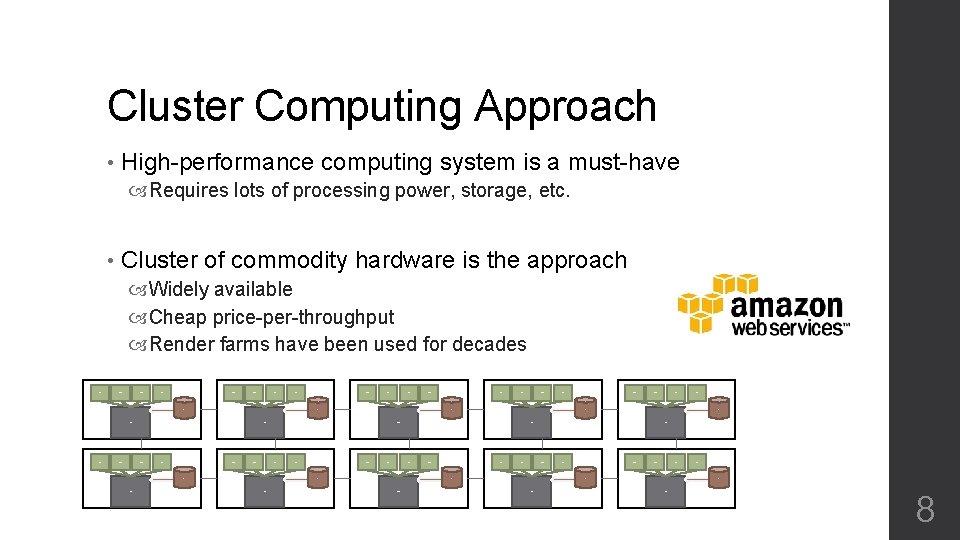

Cluster Computing Approach
- A high-performance computing system is a must
  - Requires lots of processing power, storage, etc.
- A cluster of commodity hardware is the approach of choice
  - Widely available
  - Low price per unit of throughput
  - Render farms have been used for decades

[Figure: a cluster of nodes built from CPUs, GPUs, and disks]

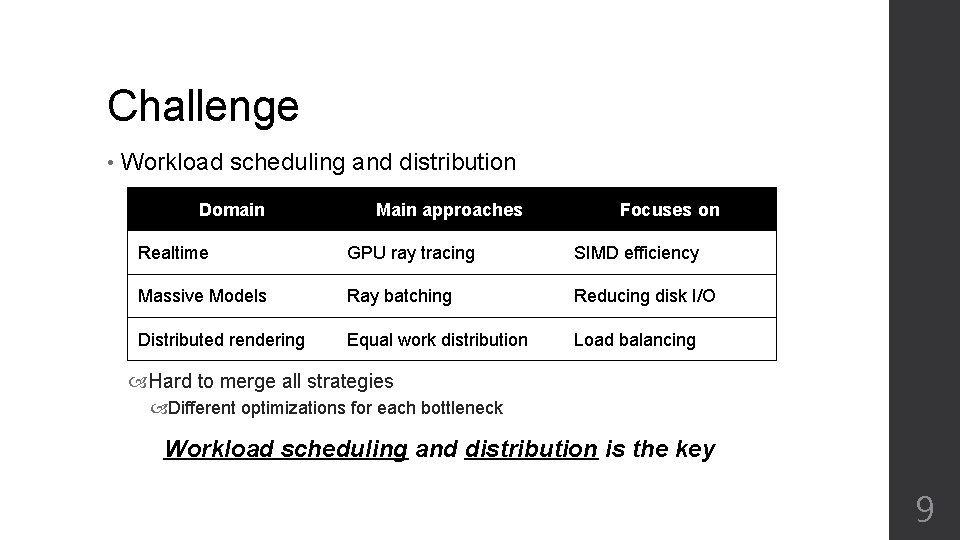

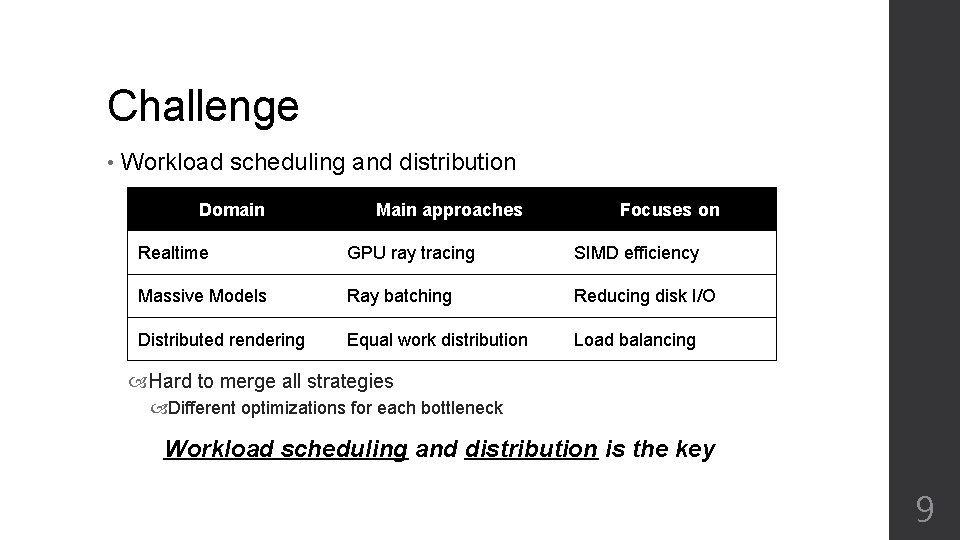

Challenge
- Workload scheduling and distribution

| Domain | Main approaches | Focuses on |
|---|---|---|
| Realtime GPU ray tracing | | SIMD efficiency |
| Massive models | Ray batching | Reducing disk I/O |
| Distributed rendering | Equal work distribution | Load balancing |

- Hard to merge all strategies: each bottleneck calls for a different optimization
- Workload scheduling and distribution is the key

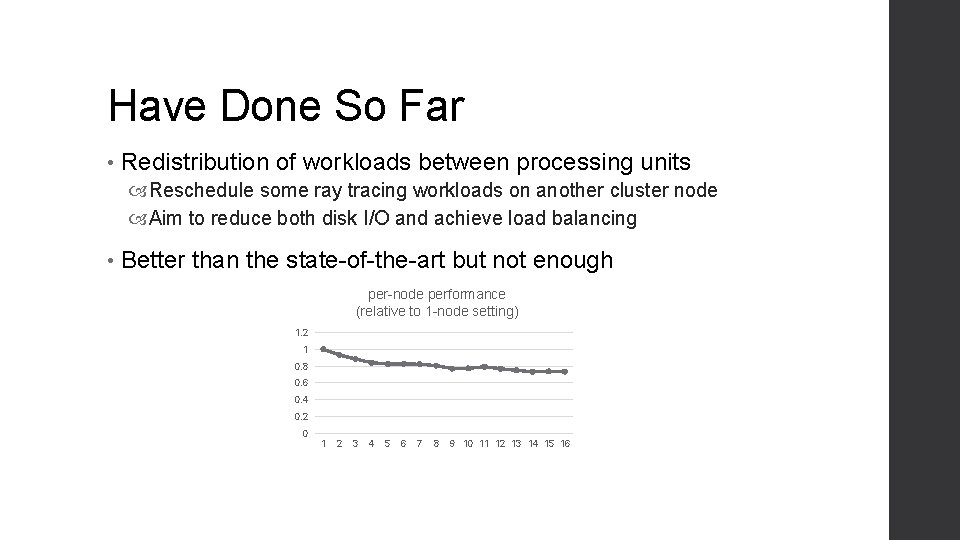

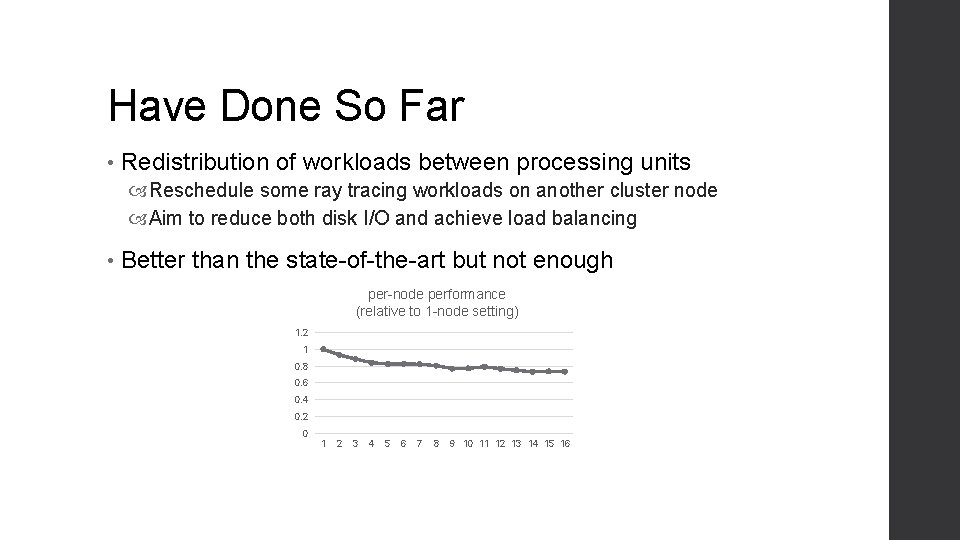

Have Done So Far
- Redistribution of workloads between processing units
  - Reschedule some ray tracing workloads on another cluster node
  - Aim to both reduce disk I/O and achieve load balancing
- Better than the state of the art, but per-node performance (relative to the 1-node setting) is not yet sufficient

[Chart: per-node performance relative to the 1-node setting (y: 0 to 1.2) vs. number of nodes (x: 1 to 16)]

Research at AMD

AMD Internship
- Motivation
  - For a deeper understanding of ray casting
  - For experience in optimizing GPU applications
  - For refreshing myself with a different topic
  - For my future career
- Worked at AMD Research in Sunnyvale, CA
  - Worked here due to a visa problem
  - Remote internship with Takahiro Harada

Background: GPU vs. Renderer

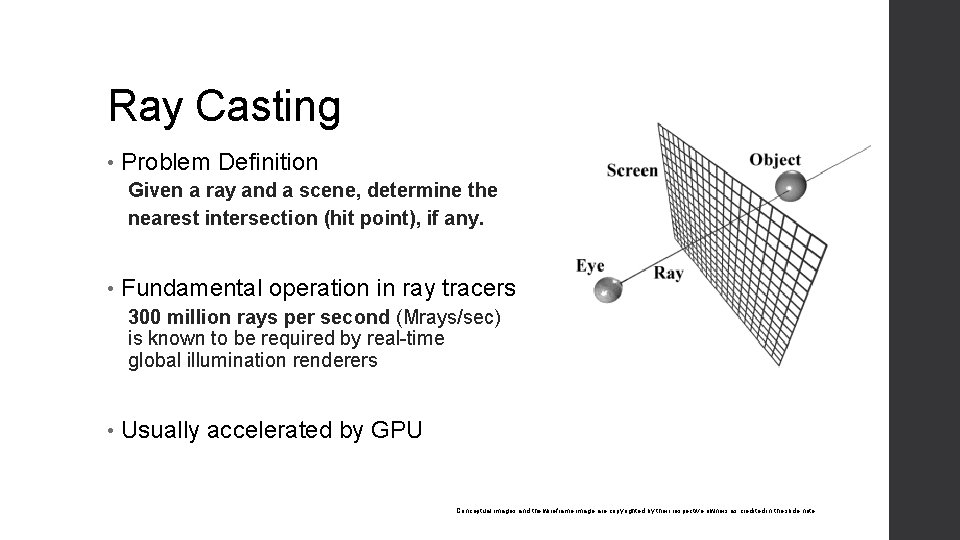

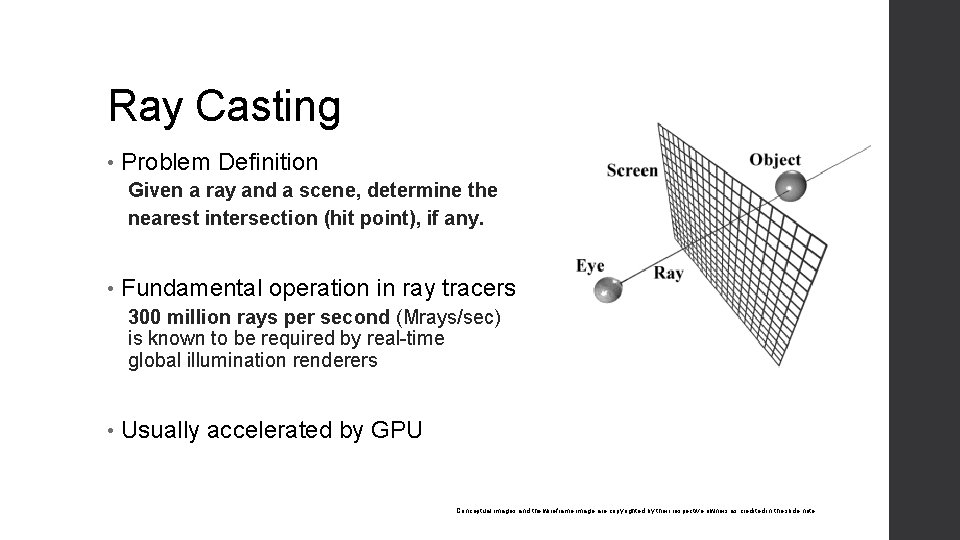

Ray Casting
- Problem definition
  - Given a ray and a scene, determine the nearest intersection (hit point), if any.
- Fundamental operation in ray tracers
  - 300 million rays per second (300 Mrays/sec) is known to be required by real-time global illumination renderers
- Usually accelerated by the GPU

Conceptual images and the wireframe image are copyrighted by their respective owners as credited in the slide note.
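To make the problem definition concrete, here is a minimal brute-force sketch in C. It assumes triangle primitives and uses the standard Möller-Trumbore ray/triangle test (our choice; the slides do not name a test). It loops over every triangle and keeps the nearest hit, which is exactly the per-ray work an acceleration hierarchy is meant to avoid. The names `Ray`, `Triangle`, and `castRayBruteForce` are hypothetical.

```c
#include <assert.h>
#include <math.h>
#include <stddef.h>

typedef struct { float x, y, z; } Vec3;
typedef struct { Vec3 org, dir; } Ray;
typedef struct { Vec3 v0, v1, v2; } Triangle;

static Vec3 sub(Vec3 a, Vec3 b) { Vec3 r = { a.x - b.x, a.y - b.y, a.z - b.z }; return r; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 cross(Vec3 a, Vec3 b)
{
    Vec3 r = { a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x };
    return r;
}

/* Moller-Trumbore test: returns the hit distance t > 0, or INFINITY on a miss. */
static float intersectTriangle(const Ray *r, const Triangle *tri)
{
    const float eps = 1e-7f;
    Vec3 e1 = sub(tri->v1, tri->v0), e2 = sub(tri->v2, tri->v0);
    Vec3 p = cross(r->dir, e2);
    float det = dot(e1, p);
    if (fabsf(det) < eps) return INFINITY;        /* ray parallel to the triangle */
    float inv = 1.0f / det;
    Vec3 s = sub(r->org, tri->v0);
    float u = dot(s, p) * inv;                    /* first barycentric coordinate */
    if (u < 0.0f || u > 1.0f) return INFINITY;
    Vec3 q = cross(s, e1);
    float v = dot(r->dir, q) * inv;               /* second barycentric coordinate */
    if (v < 0.0f || u + v > 1.0f) return INFINITY;
    float t = dot(e2, q) * inv;                   /* distance along the ray */
    return t > eps ? t : INFINITY;
}

/* Nearest hit over all triangles: returns the triangle index, or -1 if none. */
static long castRayBruteForce(const Ray *r, const Triangle *tris, size_t n, float *tHit)
{
    assert(tHit != NULL);
    long best = -1;
    float bestT = INFINITY;
    for (size_t i = 0; i < n; ++i) {
        float t = intersectTriangle(r, &tris[i]);
        if (t < bestT) { bestT = t; best = (long)i; }
    }
    *tHit = bestT;
    return best;
}
```

With two stacked triangles at z = 3 and z = 1 and a ray from the origin along +z, the call returns the z = 1 triangle at distance 1; the same ray pointing along -z reports no hit.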

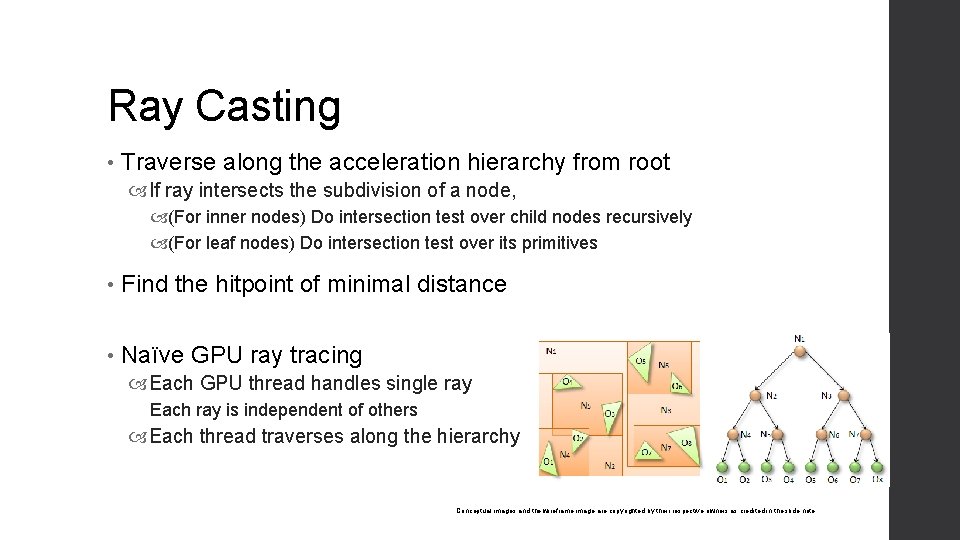

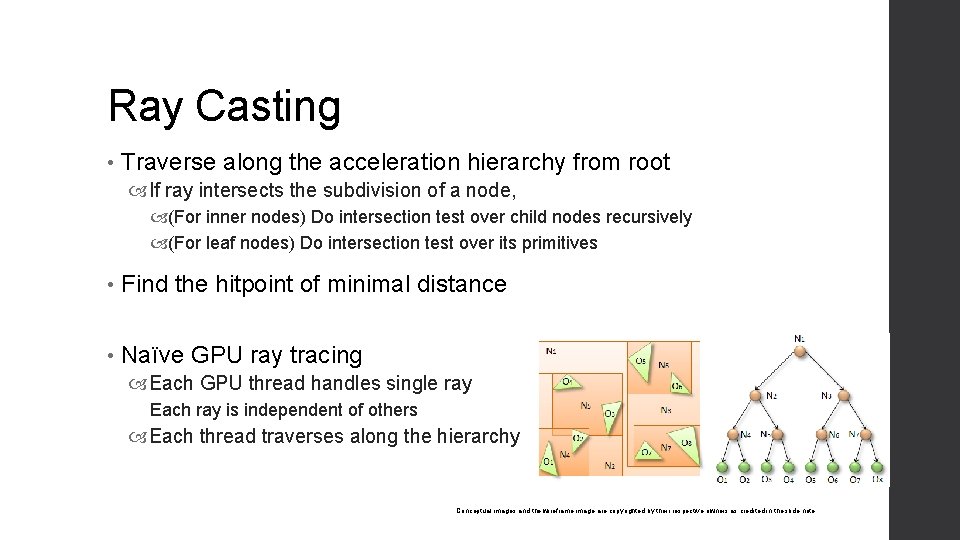

Ray Casting
- Traverse the acceleration hierarchy, starting from the root
  - If the ray intersects the bounding volume of a node:
    - (Inner nodes) recursively test the child nodes
    - (Leaf nodes) test the node's primitives
- Find the hit point with the minimal distance
- Naïve GPU ray tracing
  - Each GPU thread handles a single ray
  - Each ray is independent of the others
  - Each thread traverses the hierarchy on its own

Conceptual images and the wireframe image are copyrighted by their respective owners as credited in the slide note.

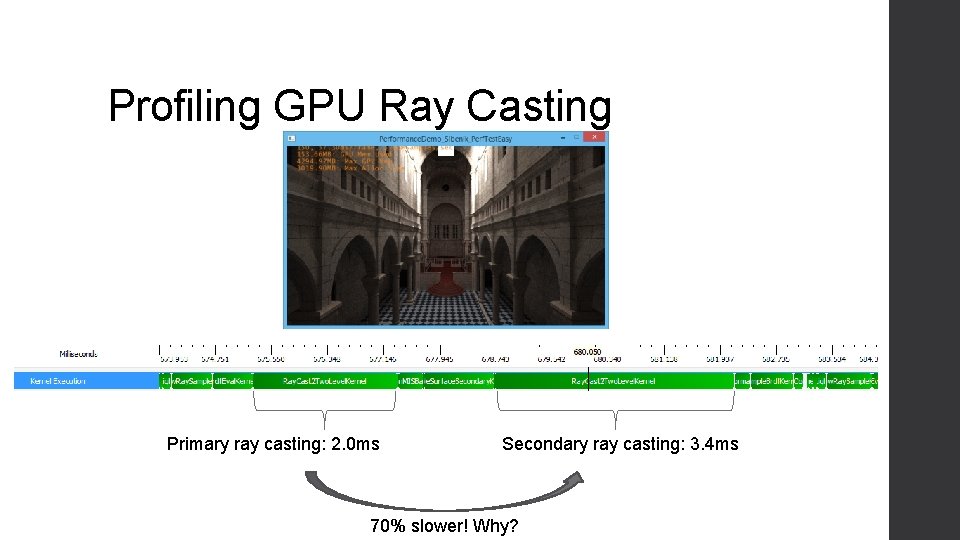

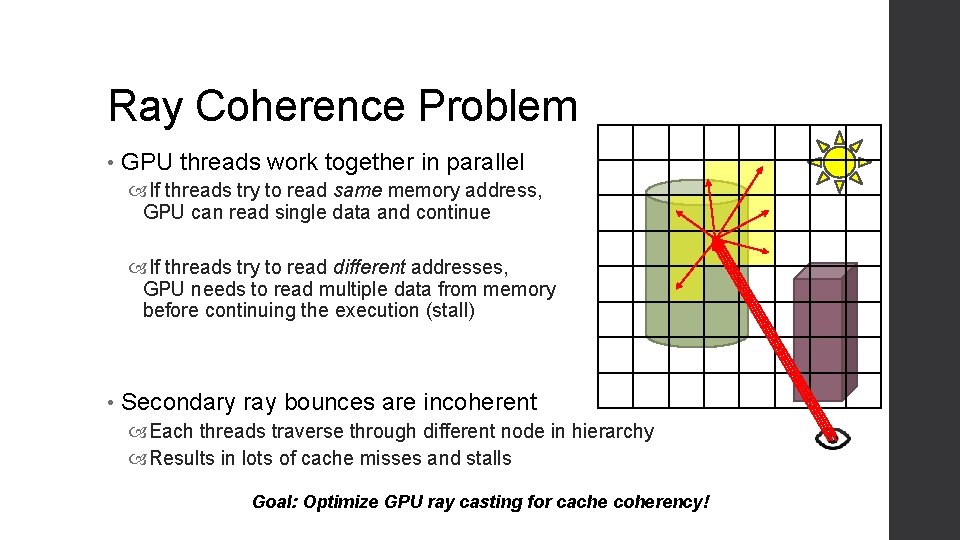

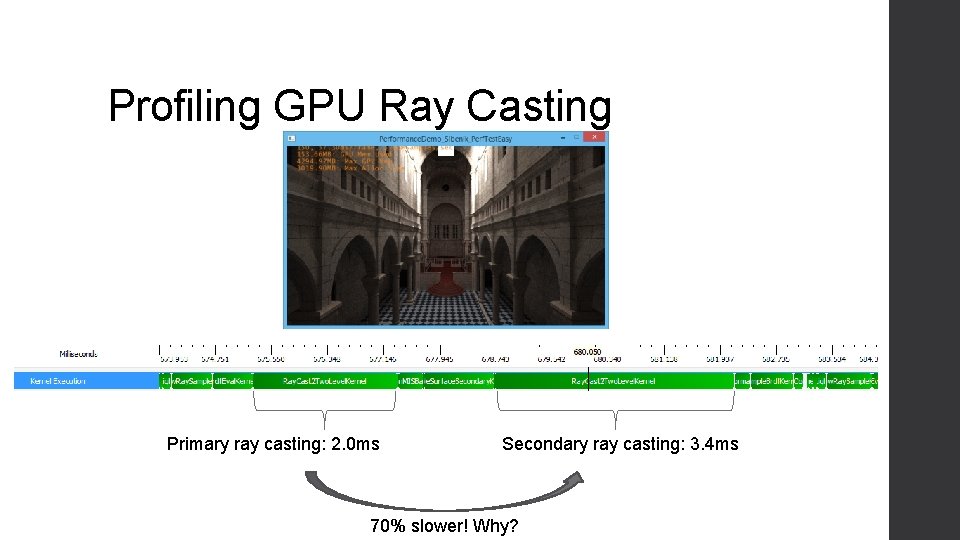

Profiling GPU Ray Casting
- Primary ray casting: 2.0 ms
- Secondary ray casting: 3.4 ms (70% slower!)
- Why?

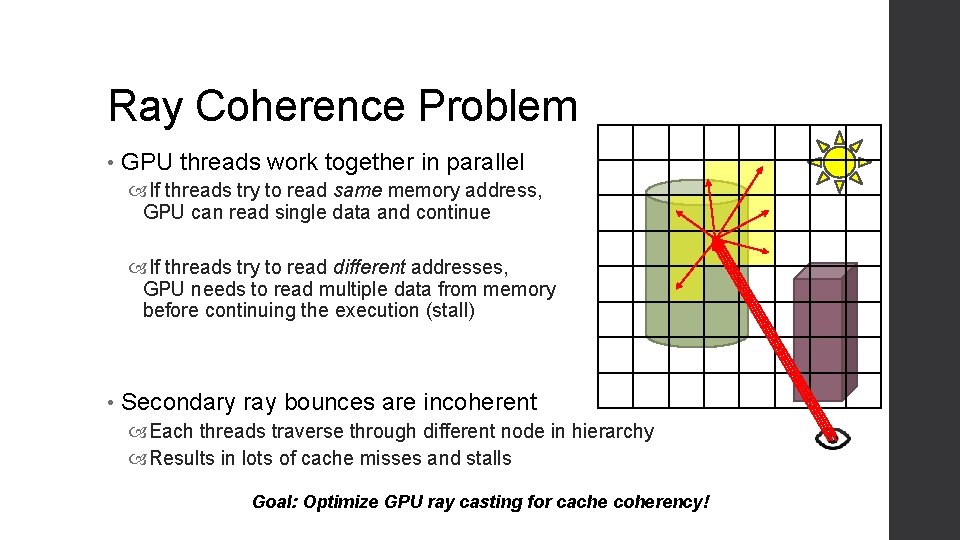

Ray Coherence Problem
- GPU threads work together in parallel
  - If threads read the same memory address, the GPU can fetch the data once and continue
  - If threads read different addresses, the GPU must fetch multiple pieces of data from memory before it can continue execution (stall)
- Secondary ray bounces are incoherent
  - Each thread traverses a different node of the hierarchy
  - This results in many cache misses and stalls
- Goal: optimize GPU ray casting for cache coherency!
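A simplified model of this effect: if each lane of a 64-wide AMD wavefront reads one BVH node in the same instruction, the number of distinct cache lines touched approximates the number of memory fetches the instruction needs. The 64-byte line size and the function name `linesTouched` are assumptions for illustration; real hardware behavior also depends on cache hits, alignment, and node size.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define LINE_BYTES 64u   /* assumed cache-line size */
#define MAX_LANES 64u    /* lanes in one AMD GCN wavefront */

/* Counts the distinct cache lines touched when lane i reads addr[i]:
   a rough proxy for the memory fetches one load instruction needs. */
static size_t linesTouched(const uint64_t *addr, size_t lanes)
{
    uint64_t seen[MAX_LANES];
    size_t count = 0;
    assert(lanes <= MAX_LANES);
    for (size_t i = 0; i < lanes; ++i) {
        uint64_t line = addr[i] / LINE_BYTES;
        size_t j = 0;
        while (j < count && seen[j] != line) ++j;   /* already fetched? */
        if (j == count) seen[count++] = line;       /* new line: one more fetch */
    }
    return count;
}
```

When all 64 lanes read the same node (coherent primary rays), one line is fetched; when every lane reads a different 64-byte node (incoherent secondary rays), 64 lines are fetched and the wavefront waits for all of them.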

Approaches
- Applying CPU techniques for cache-coherent ray casting
  - Ray streaming
    - MBVH RS [Tsakok et al., HPG 09]
    - DRST [Barringer et al., TOG 14]
  - Partition-based parallel ray casting

Approaches
- Improving existing GPU implementations (“single ray traversal”)
  - Traversal stack optimization
  - Stackless traversal
  - Cache-coherent implicit scheduling

Aila et al., Architecture considerations for tracing incoherent rays, HPG 10

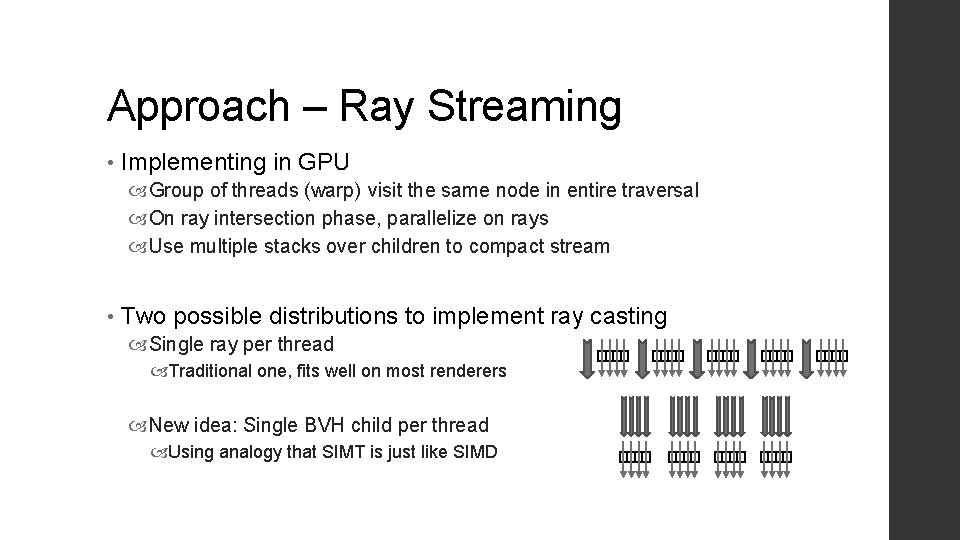

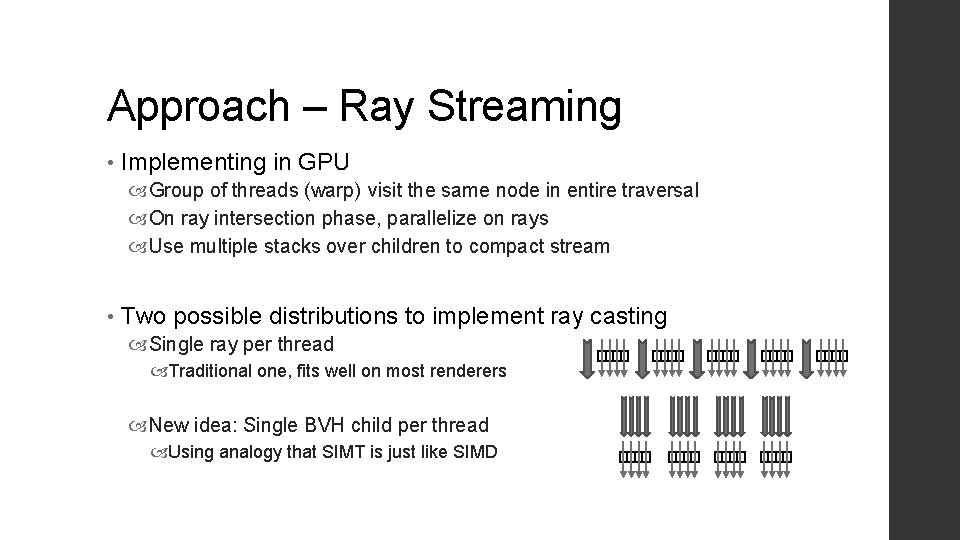

Approach – Ray Streaming
- Implementing on the GPU
  - A group of threads (warp) visits the same node throughout the entire traversal
  - In the ray intersection phase, parallelize over rays
  - Use multiple stacks over children to compact the stream
- Two possible work distributions for ray casting
  - Single ray per thread: the traditional one, fits well in most renderers
  - New idea: single BVH child per thread, using the analogy that SIMT is just like SIMD

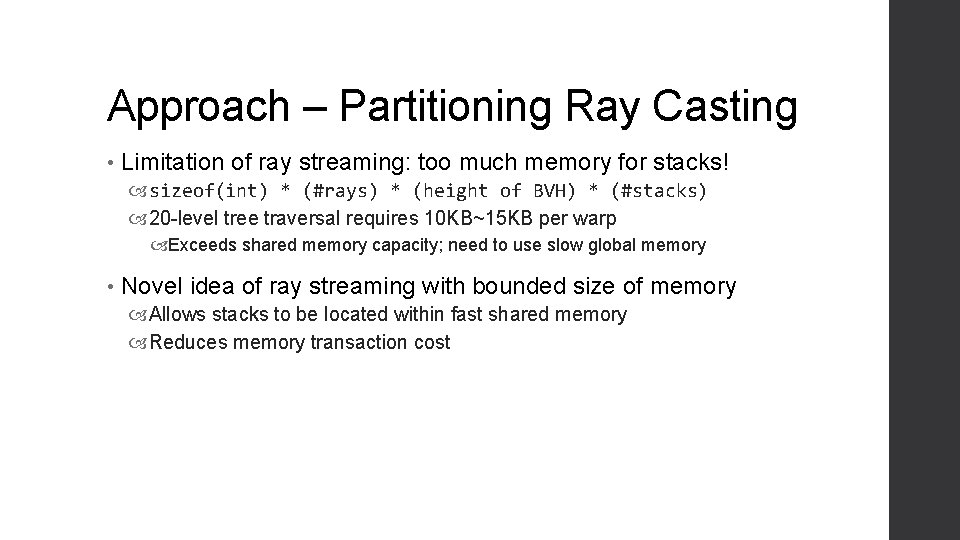

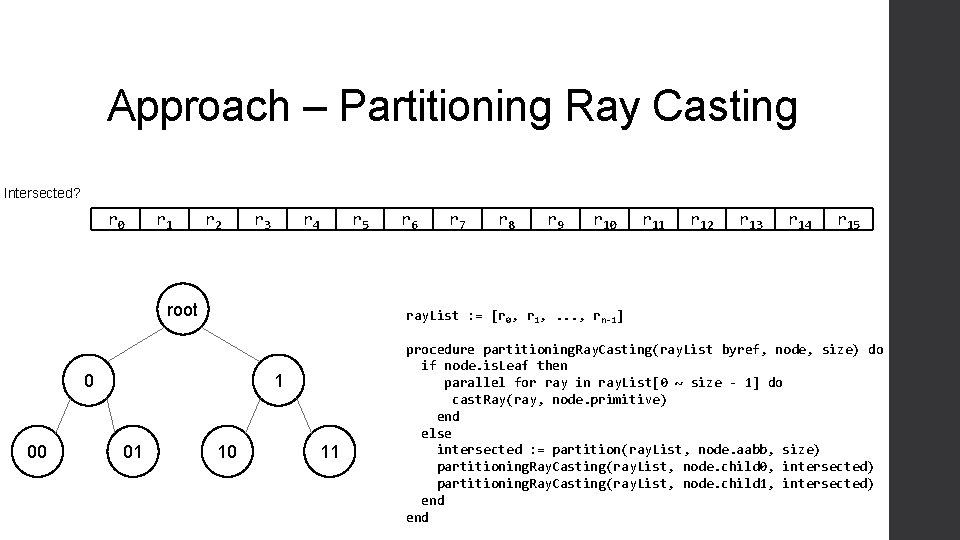

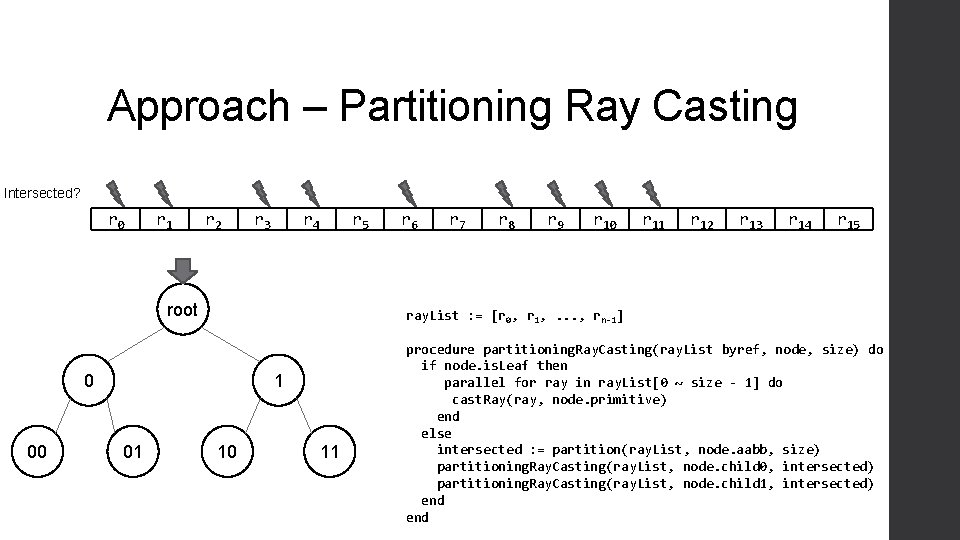

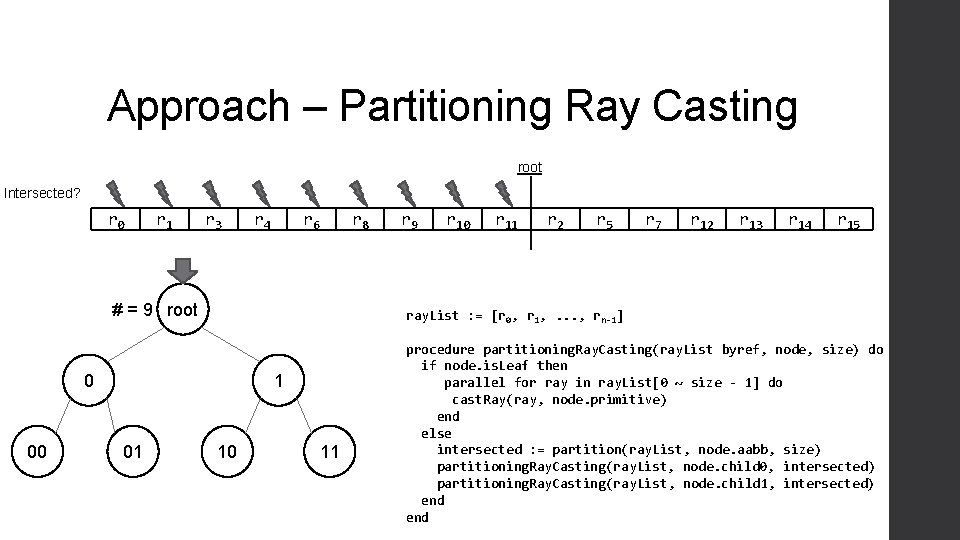

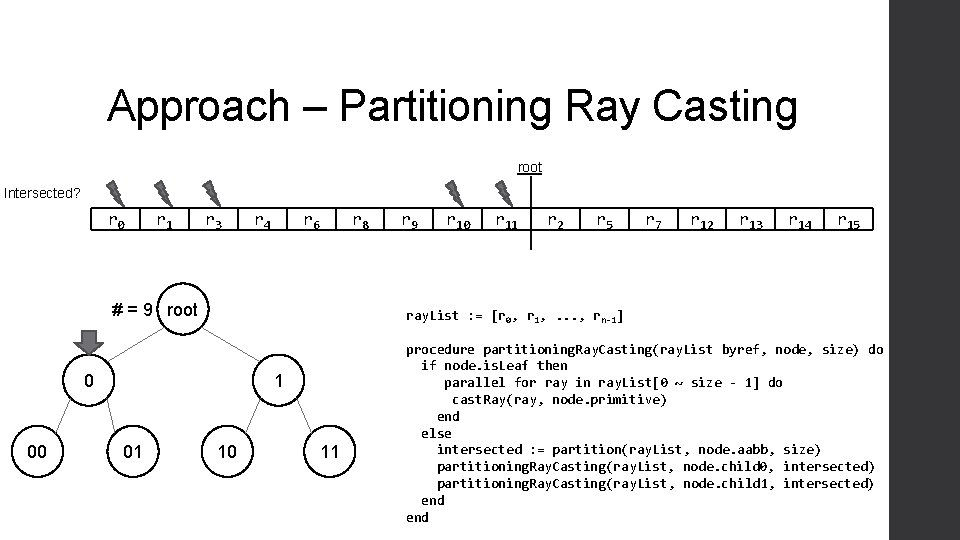

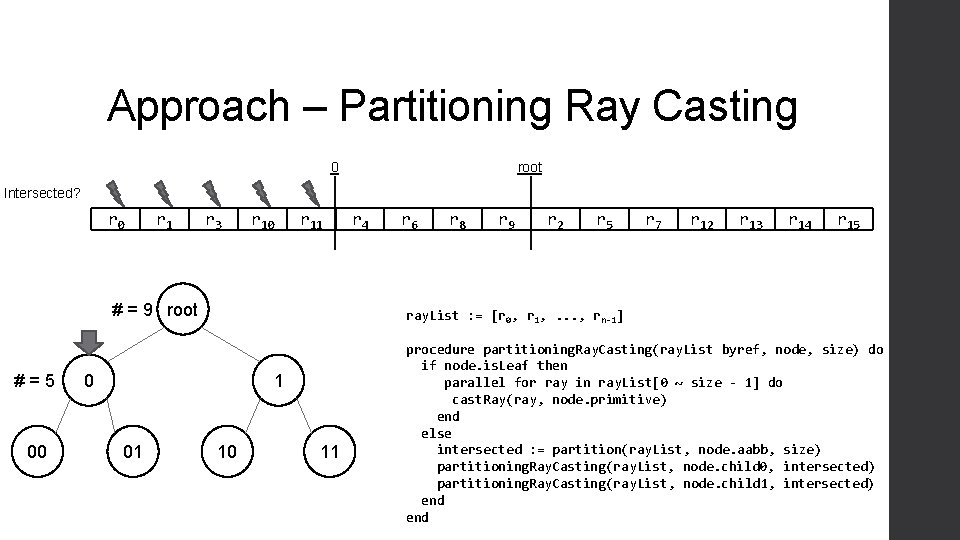

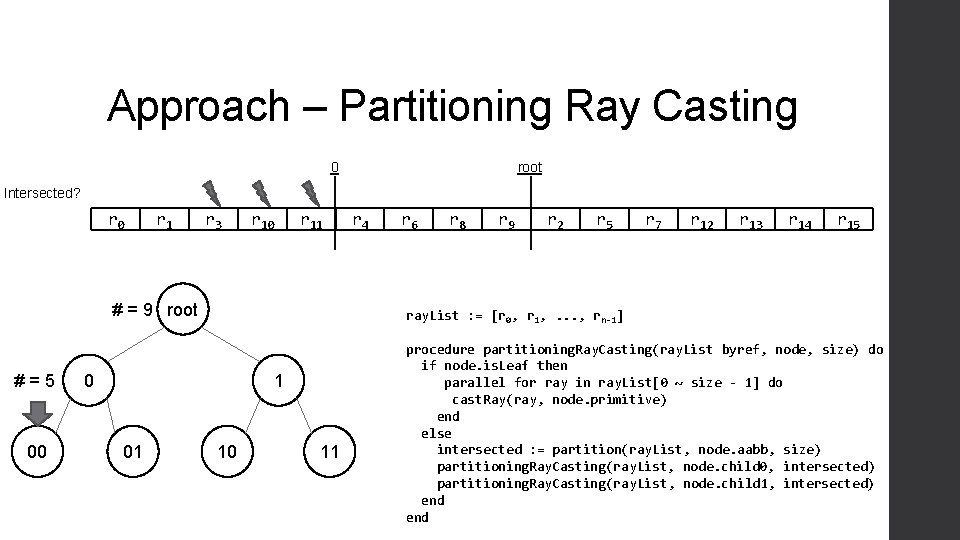

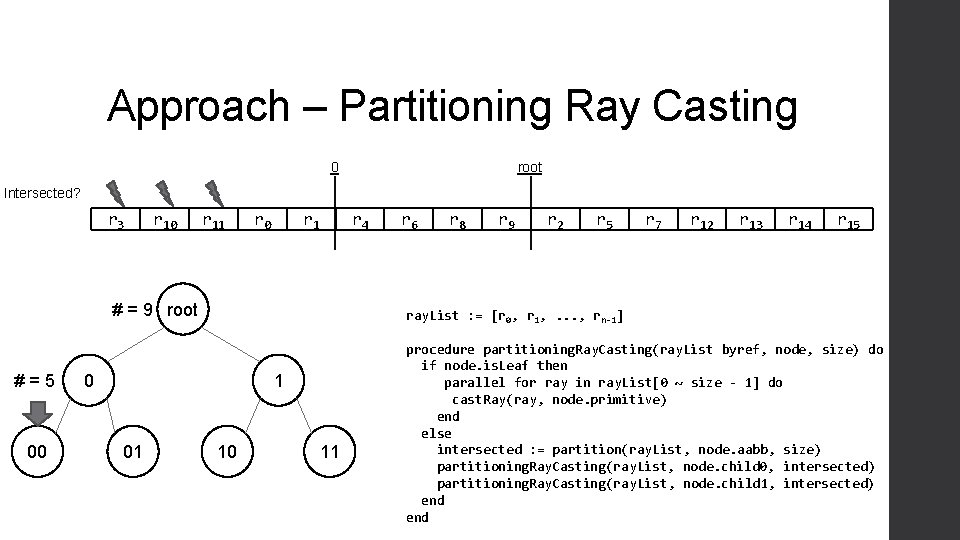

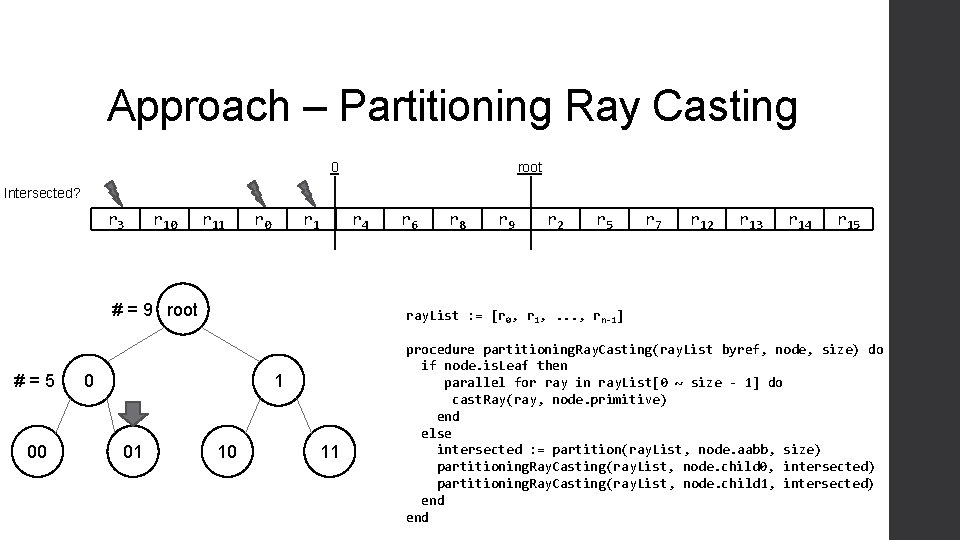

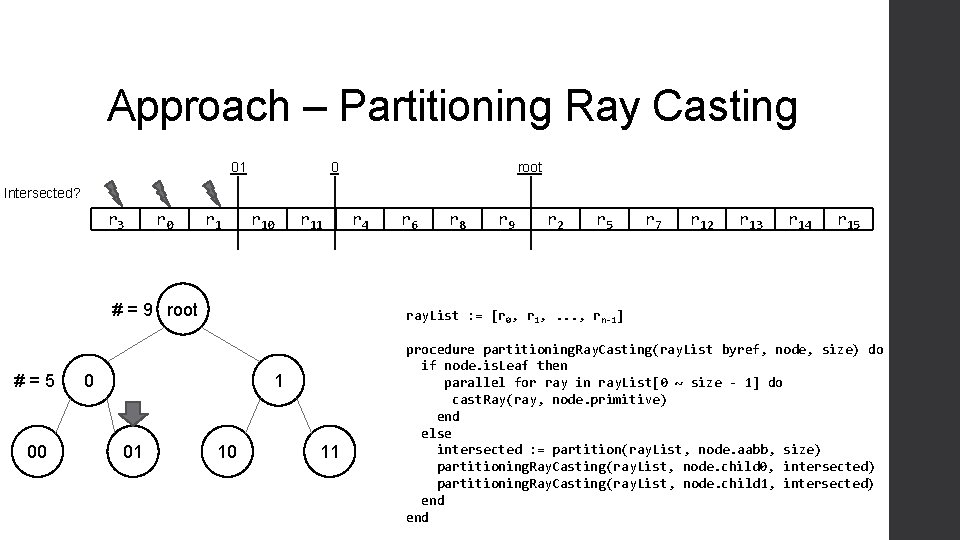

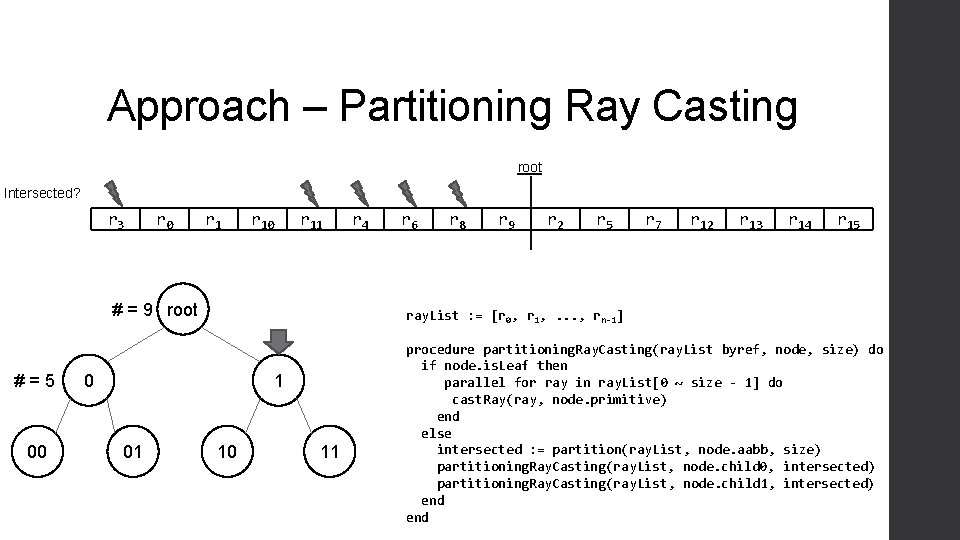

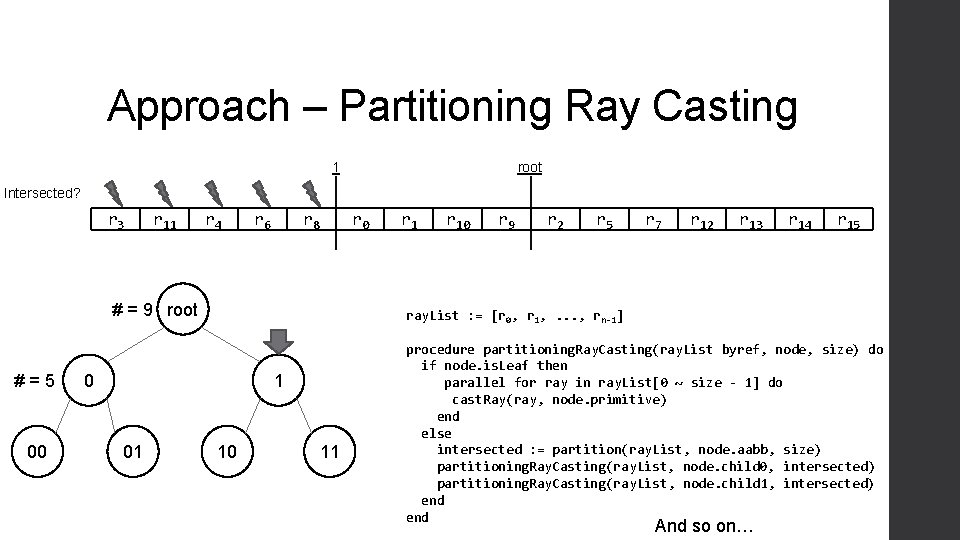

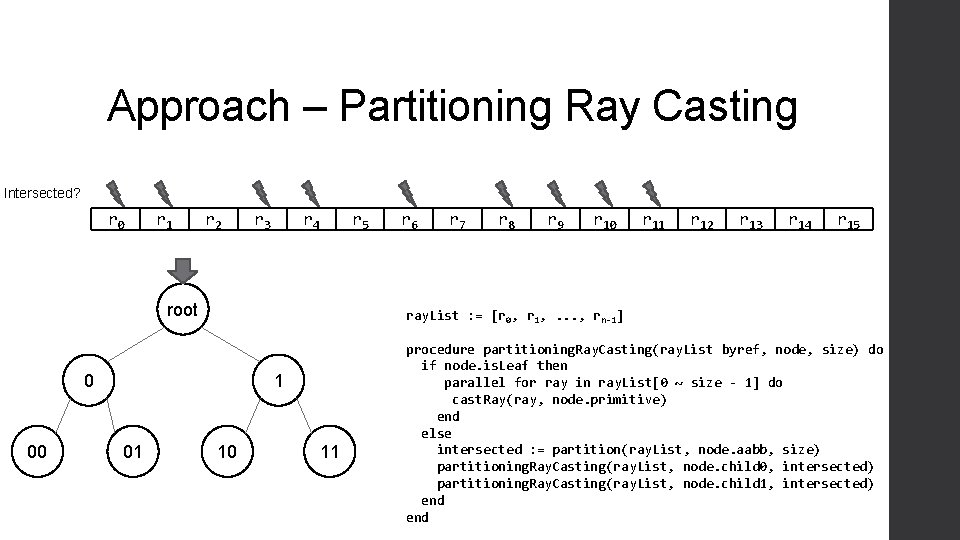

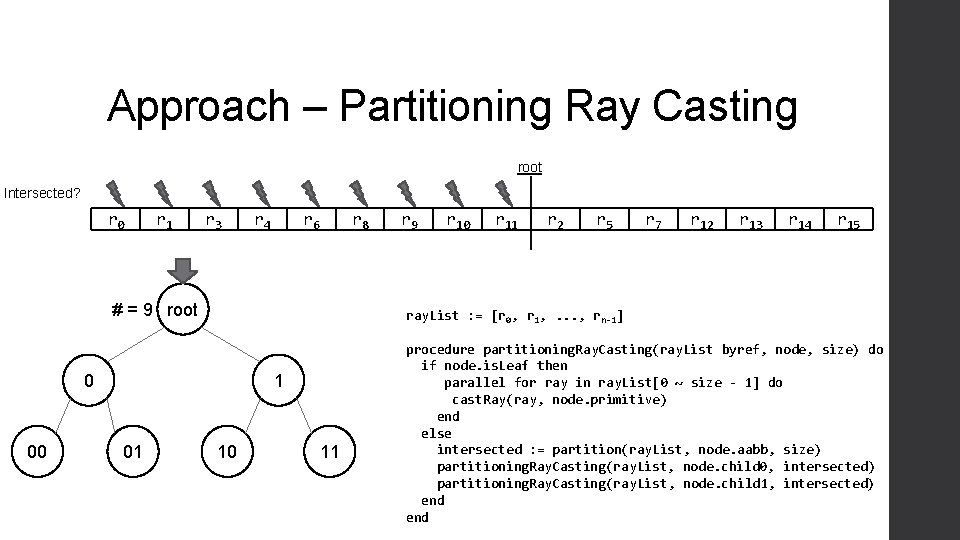

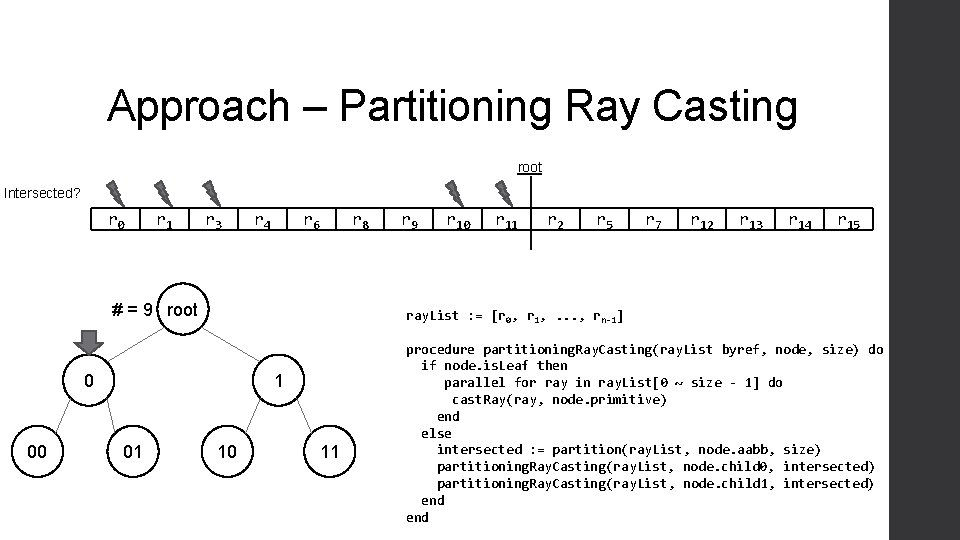

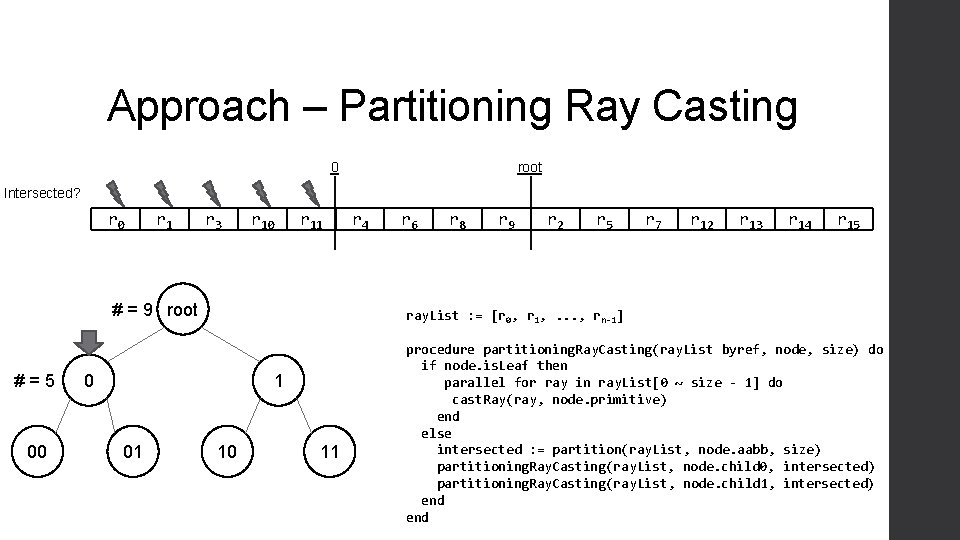

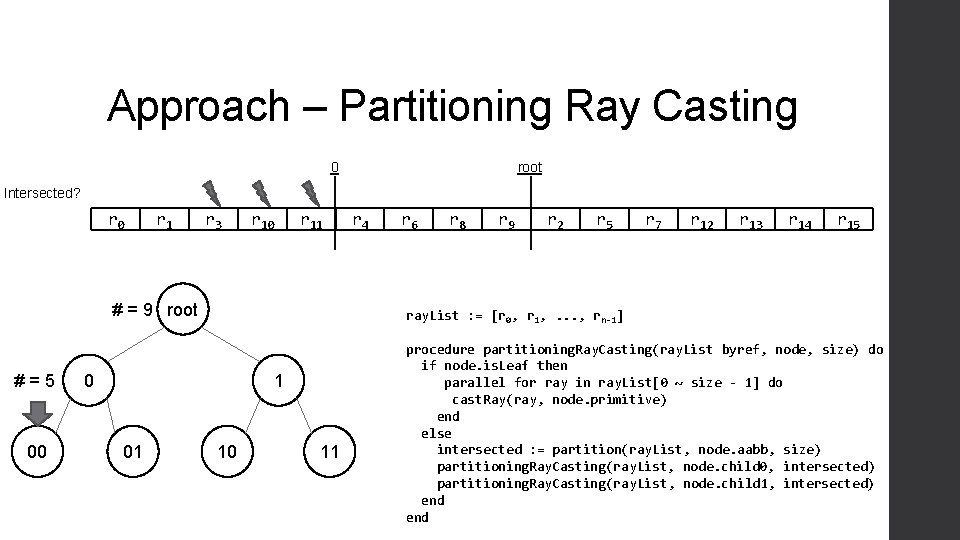

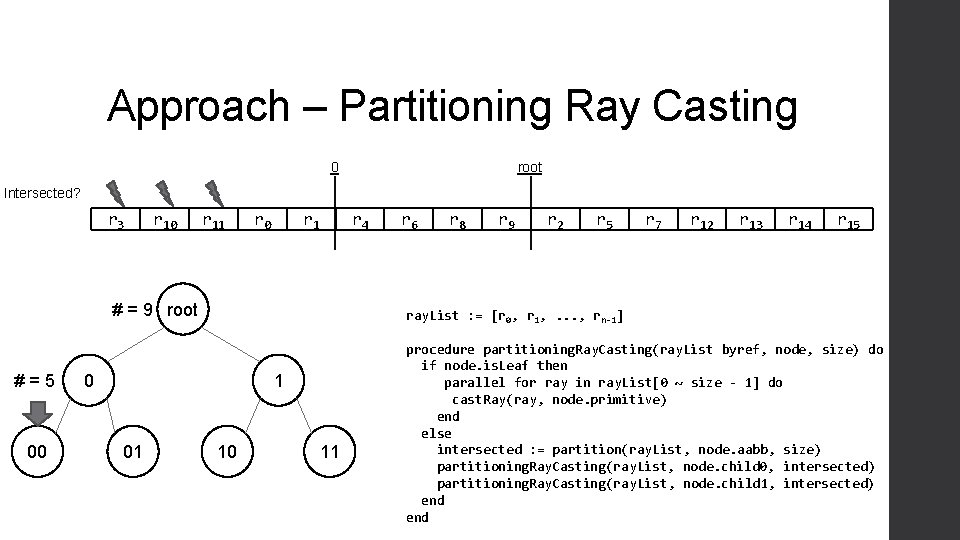

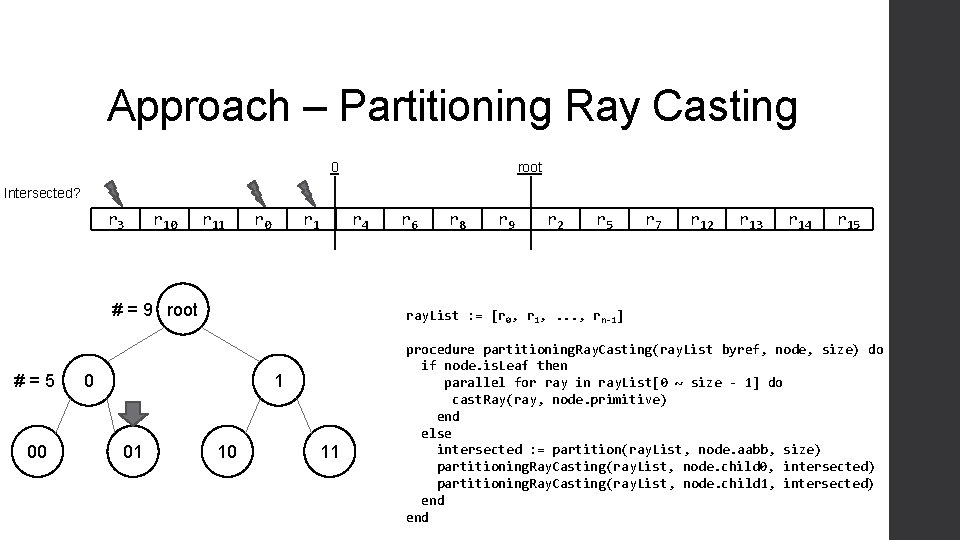

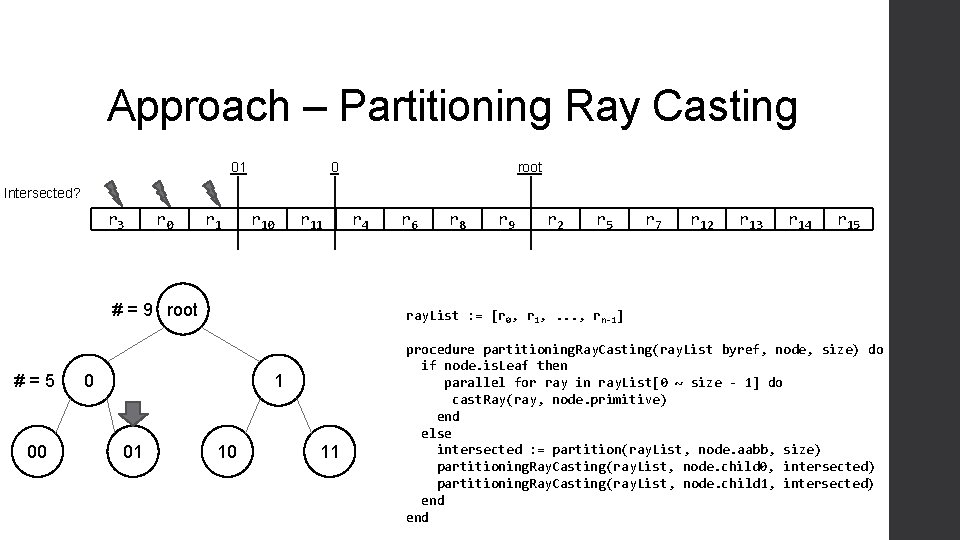

Approach – Partitioning Ray Casting
- Limitation of ray streaming: the stacks need too much memory!
  - sizeof(int) * (#rays) * (height of BVH) * (#stacks)
  - A 20-level tree traversal requires 10 KB~15 KB per warp
  - This exceeds shared memory capacity, so slow global memory must be used
- Novel idea: ray streaming with a bounded amount of memory
  - Allows the stacks to be located in fast shared memory
  - Reduces memory transaction cost
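As a quick check of the quoted range, assume a warp of 64 rays (one AMD wavefront), 4-byte `int` stack entries, and a 20-level BVH. The formula then gives 5 KB per stack, so 10 KB~15 KB corresponds to two or three stacks (the stack count is our assumption, not stated on the slide):

$$
\underbrace{4\ \text{B}}_{\texttt{sizeof(int)}} \times \underbrace{64}_{\text{rays}} \times \underbrace{20}_{\text{BVH height}} \times n_{\text{stacks}} = 5120\ \text{B} \times n_{\text{stacks}},
\qquad
n_{\text{stacks}} = 2 \Rightarrow 10\,240\ \text{B} = 10\ \text{KB},
\qquad
n_{\text{stacks}} = 3 \Rightarrow 15\,360\ \text{B} = 15\ \text{KB}
$$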

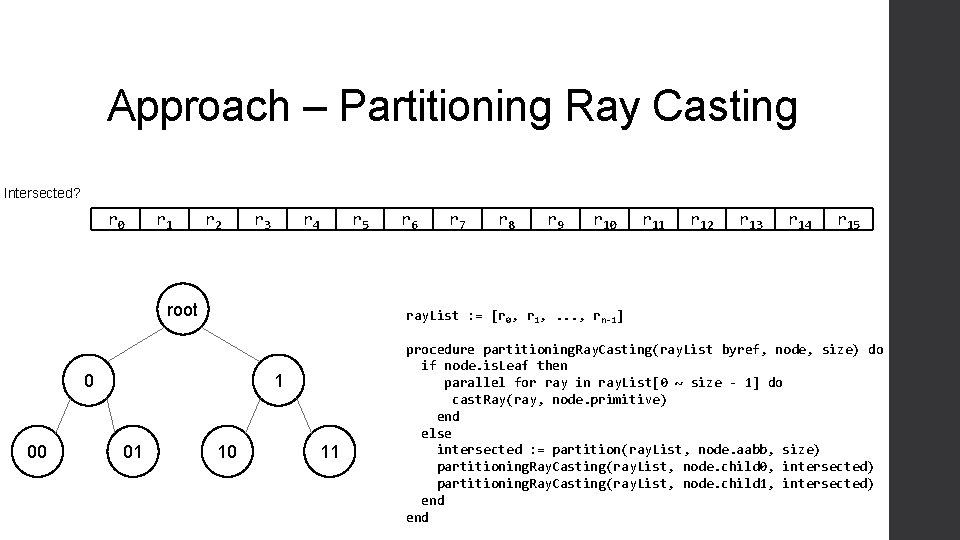

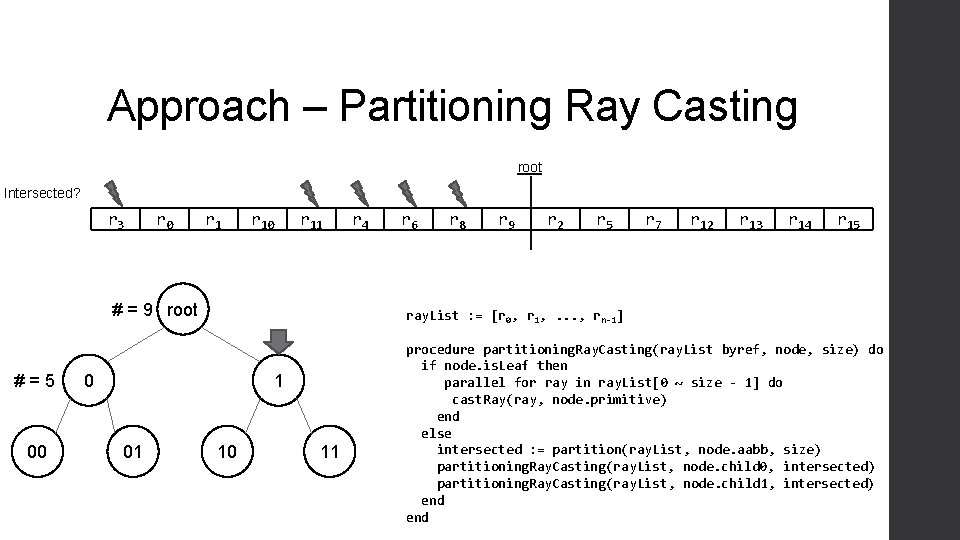

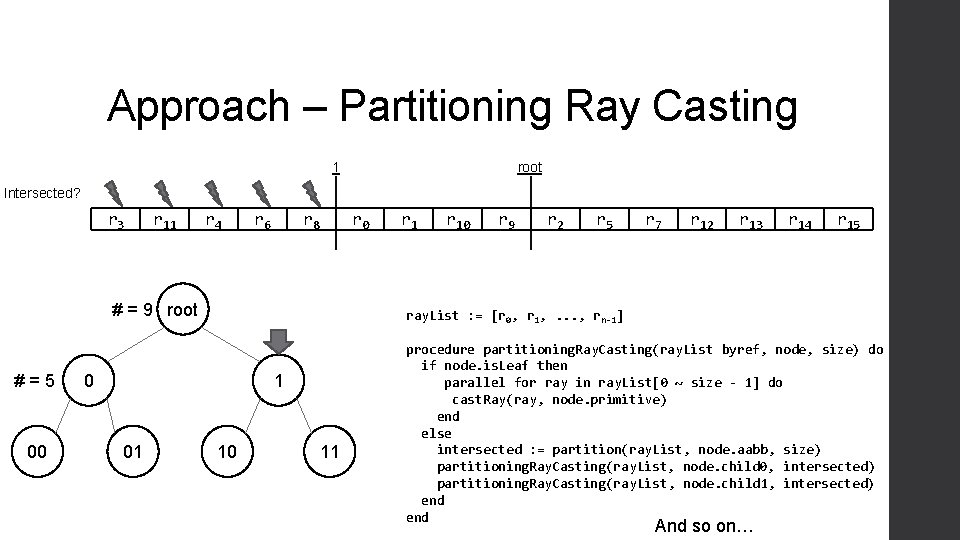

Approach – Partitioning Ray Casting

[Figure: a ray list r0 to r15 and a BVH with nodes root, 0, 1, 00, 01, 10, 11; each ray is checked for intersection with the current node]

```
rayList := [r0, r1, ..., rn-1]

procedure partitioningRayCasting(rayList byref, node, size) do
    if node.isLeaf then
        parallel for ray in rayList[0 ~ size - 1] do
            castRay(ray, node.primitive)
        end
    else
        intersected := partition(rayList, node.aabb, size)
        partitioningRayCasting(rayList, node.child0, intersected)
        partitioningRayCasting(rayList, node.child1, intersected)
    end
end
```

Approach – Partitioning Ray Casting (continued)

[Animation frames: partitioning at the root moves the 9 of the 16 rays that intersect it to the front of rayList (# = 9). Recursing into node 0 partitions this 9-ray prefix again, leaving 5 rays in front (# = 5), which are then handed to leaves 00 and 01. The recursion then returns to the root, and node 1 re-partitions the same 9-ray prefix. And so on…]
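The recursion above can be exercised with a minimal sequential C sketch. It is a toy under stated assumptions: each "ray" is reduced to a 1D coordinate, each node's `aabb` to an interval `[lo, hi]`, the leaf's `castRay` is replaced by a hit counter, and `partition` is a plain sequential two-pointer partition standing in for the parallel in-place GPU partition. The types `ToyRay` and `ToyNode` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Toy stand-ins (assumptions): a ray is a 1D coordinate x, a node's bounding
   volume is the interval [lo, hi], and a leaf just counts the rays it receives. */
typedef struct { float x; int id; } ToyRay;
typedef struct { float lo, hi; int isLeaf; int child[2]; int hits; } ToyNode;

/* Sequential two-pointer partition, standing in for the parallel GPU version:
   moves rays inside [lo, hi] to the front and returns how many there are. */
static size_t partition(ToyRay *rays, size_t size, const ToyNode *node)
{
    size_t i = 0, j = size;
    while (i < j) {
        if (rays[i].x >= node->lo && rays[i].x <= node->hi) { ++i; continue; }
        --j;                                 /* send rays[i] to the back */
        ToyRay tmp = rays[i]; rays[i] = rays[j]; rays[j] = tmp;
    }
    return i;
}

/* Mirrors partitioningRayCasting(rayList, node, size) from the slide. */
static void partitioningRayCasting(ToyRay *rays, ToyNode *nodes, int nodeIdx, size_t size)
{
    ToyNode *node = &nodes[nodeIdx];
    if (size == 0) return;                   /* added shortcut: no rays for this subtree */
    if (node->isLeaf) {
        /* castRay(ray, node.primitive) for rays[0 .. size-1] would go here. */
        node->hits += (int)size;
        return;
    }
    size_t intersected = partition(rays, size, node);
    assert(intersected <= size);
    partitioningRayCasting(rays, nodes, node->child[0], intersected);
    partitioningRayCasting(rays, nodes, node->child[1], intersected);
}
```

Because `partition` only permutes the prefix `rays[0 .. size-1]`, the second recursive call still sees exactly the rays that intersected the parent. As in the pseudocode, a leaf receives every ray that intersected its parent and leaves the exact test to `castRay`.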

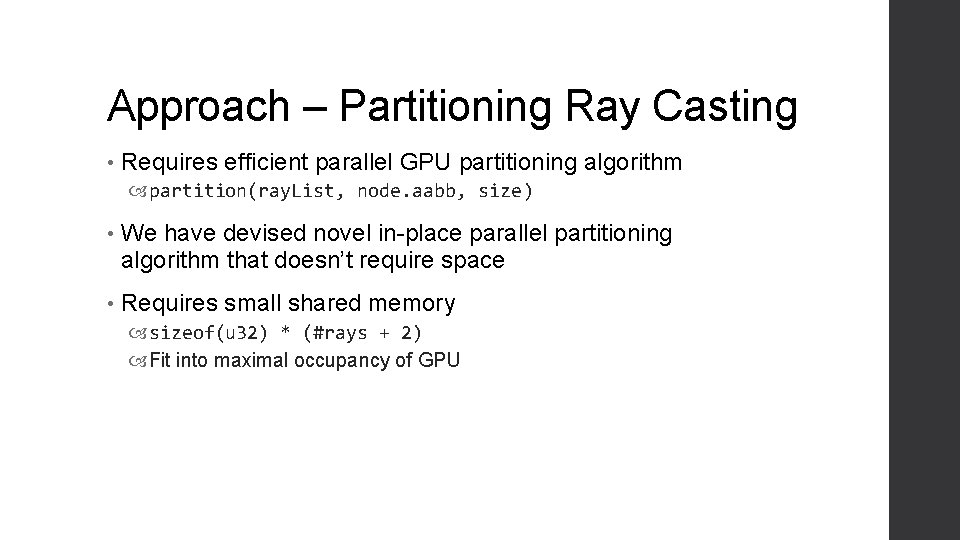

Approach – Partitioning Ray Casting
- Requires an efficient parallel GPU partitioning algorithm: partition(rayList, node.aabb, size)
- We devised a novel in-place parallel partitioning algorithm that requires no additional buffer
- It needs only a small amount of shared memory, sizeof(u32) * (#rays + 2), which fits within the budget for maximal GPU occupancy

Approach – Stack Optimization
- The GPU has several memory areas that differ in size and speed
  - Cache (L1~L3)
  - Local memory (registers)
  - Shared memory
  - Global memory
  - Texture cache
  - etc.
- Placing data in faster memory may increase speed

Approach – Stack Optimization
- Traversal overhead comes from stack manipulation
- Tried several memory spaces for the stack: global, shared, local
  - Speed: shared > global > local
  - Reason: local memory (registers) cannot be dynamically indexed, so it is not suitable for arrays
- Tried to decrease the stack size with a smaller data type (u32 -> u16)
  - This does not improve performance due to cache interference

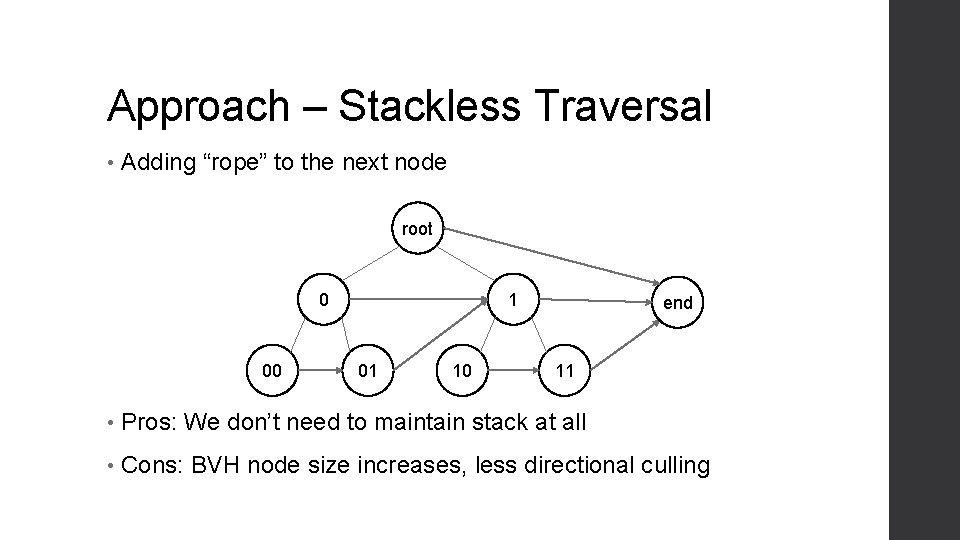

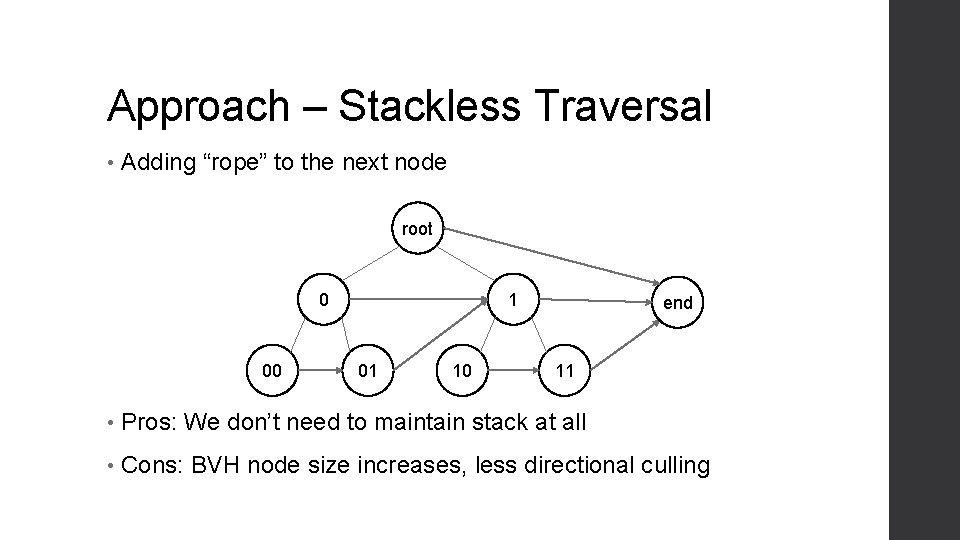

Approach – Stackless Traversal
- Add a “rope” from each node to the next node
- Pros: no stack needs to be maintained at all
- Cons: BVH node size increases; less directional culling

[Figure: BVH with root, inner nodes 0 and 1, and leaves 00, 01, 10, 11, linked by ropes that end at "end"]

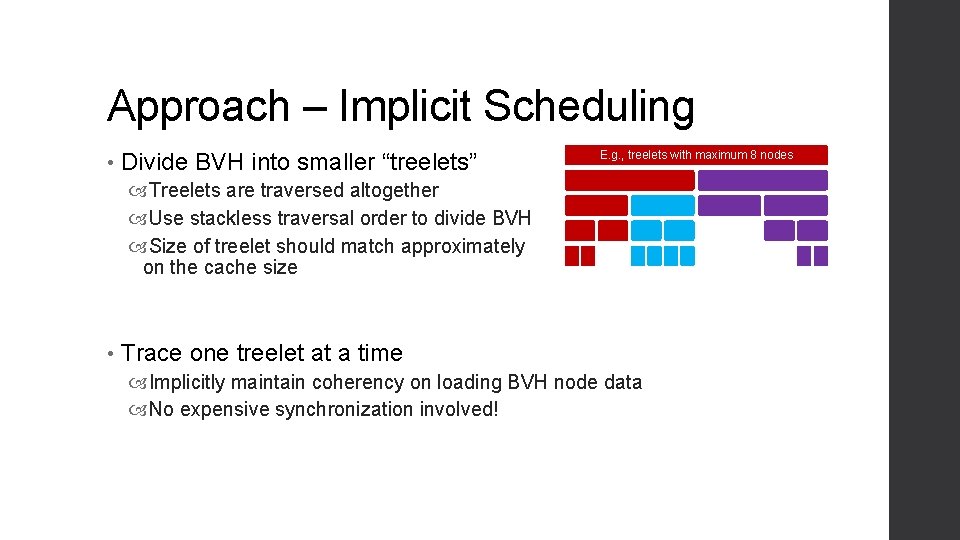

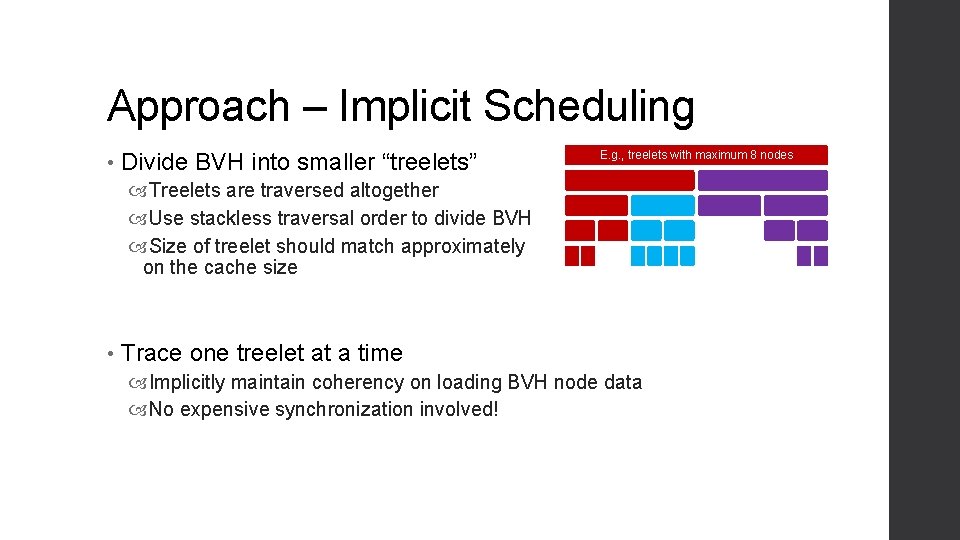

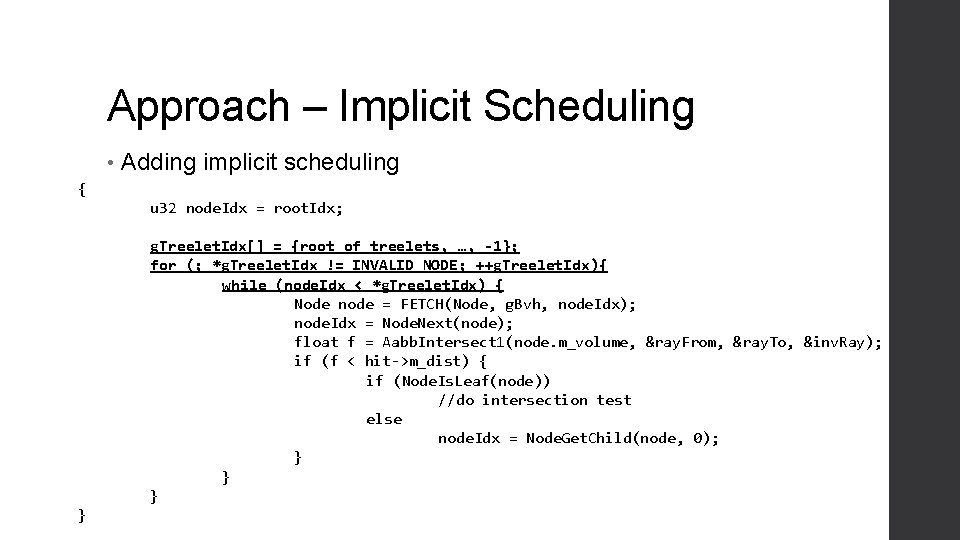

Approach – Implicit Scheduling
- Divide the BVH into smaller “treelets”
  - E.g., treelets with at most 8 nodes
  - Each treelet is traversed as a whole
  - Use the stackless traversal order to divide the BVH
  - Treelet size should approximately match the cache size
- Trace one treelet at a time
  - Implicitly maintains coherency when loading BVH node data
  - No expensive synchronization involved!
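Implicit scheduling can be sketched on top of a toy 1D rope layout (defined here so the block stands alone). Because nodes are stored in depth-first order, both the first child and the rope of a node have larger indices than the node itself, so a treelet is simply a contiguous index range and a ray can pause at a treelet boundary and resume later. The treelet size, `RayState`, and all other names are assumptions; on a GPU each ray would be a thread and the inner ray loop would run in parallel.

```c
#include <assert.h>
#include <math.h>
#include <stddef.h>

typedef unsigned int u32;
#define INVALID_NODE 0xFFFFFFFFu

/* Toy 1D rope layout (assumption): nodes in depth-first order, leaves hold a point. */
typedef struct { float lo, hi; int isLeaf; u32 child0, next; float point; } RopeNode;

/* Per-ray traversal state that survives between treelets. */
typedef struct { float org; u32 nodeIdx; float best; u32 hit; } RayState;

static float entryDistance(float org, float lo, float hi)
{
    if (hi < org) return INFINITY;
    return lo > org ? lo - org : 0.0f;
}

/* Runs the stackless loop for one ray, but only on nodes before `boundary`. */
static void advance(const RopeNode *nodes, RayState *r, u32 boundary)
{
    while (r->nodeIdx < boundary) {       /* INVALID_NODE is never < boundary */
        const RopeNode *n = &nodes[r->nodeIdx];
        u32 current = r->nodeIdx;
        r->nodeIdx = n->next;
        if (entryDistance(r->org, n->lo, n->hi) < r->best) {
            if (n->isLeaf) {
                float t = n->point >= r->org ? n->point - r->org : INFINITY;
                if (t < r->best) { r->best = t; r->hit = current; }
            } else {
                r->nodeIdx = n->child0;
            }
        }
    }
}

/* All rays finish treelet k before any ray enters treelet k+1. Each boundary
   is the first node index of the next treelet (INVALID_NODE for the last). */
static void traceImplicit(const RopeNode *nodes, size_t nodeCount, size_t treeletSize,
                          RayState *rays, size_t rayCount)
{
    assert(treeletSize > 0);
    for (size_t start = 0; start < nodeCount; start += treeletSize) {
        size_t end = start + treeletSize;
        u32 boundary = end >= nodeCount ? INVALID_NODE : (u32)end;
        for (size_t i = 0; i < rayCount; ++i)  /* one GPU thread per ray */
            advance(nodes, &rays[i], boundary);
    }
}
```

Since every ray works inside the same treelet at the same time, the node data being read is shared across rays. The ordering falls out of the loop structure, so no barrier appears in the sketch.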

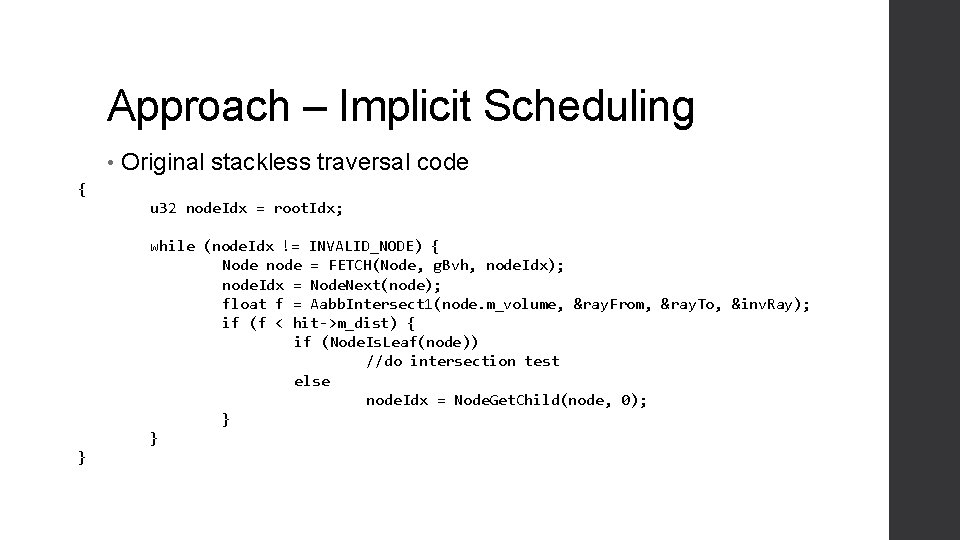

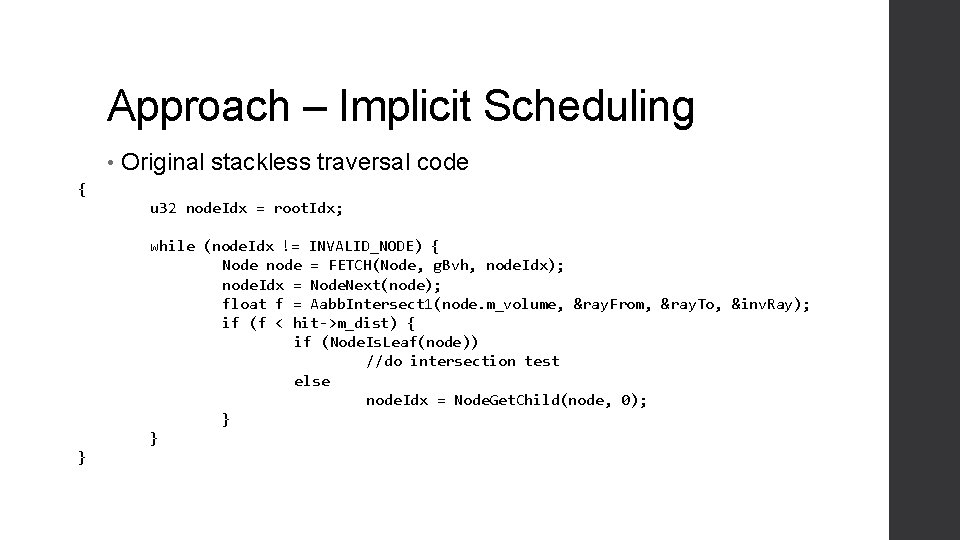

Approach – Implicit Scheduling
- Original stackless traversal code

```c
{
    u32 nodeIdx = rootIdx;
    while (nodeIdx != INVALID_NODE)
    {
        Node node = FETCH(Node, gBvh, nodeIdx);
        // By default, follow the rope to the next node (skip this subtree)
        nodeIdx = NodeNext(node);
        float f = AabbIntersect1(node.m_volume, &rayFrom, &rayTo, &invRay);
        if (f < hit->m_dist)
        {
            if (NodeIsLeaf(node))
            {
                // do intersection test
            }
            else
            {
                // The node is hit: descend into its first child instead
                nodeIdx = NodeGetChild(node, 0);
            }
        }
    }
}
```

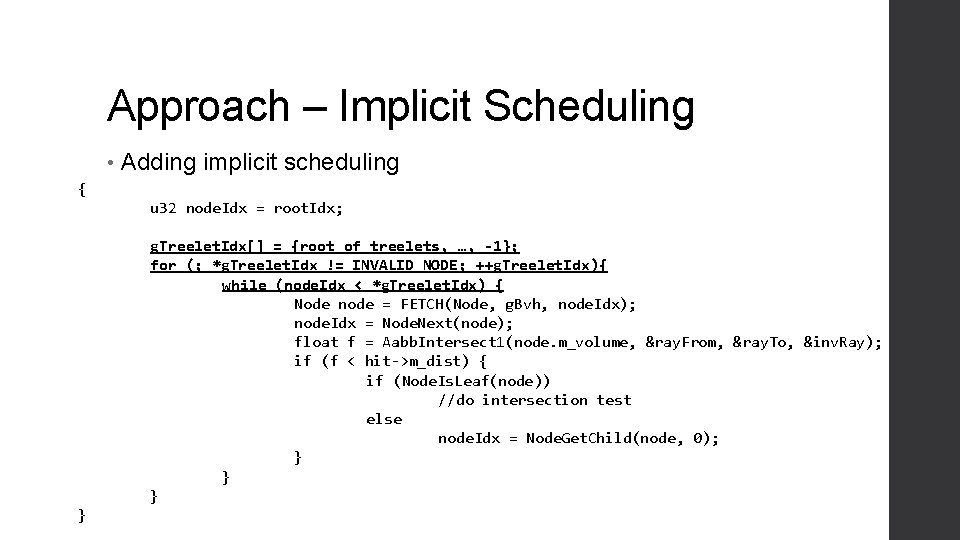

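Approach – Implicit Scheduling
- Adding implicit scheduling

```c
{
    u32 nodeIdx = rootIdx;
    // Treelet boundaries in stackless (depth-first) order, terminated by -1
    gTreeletIdx[] = {root_of_treelets, …, -1};
    // Assumes INVALID_NODE == (u32)-1: the sentinel then also acts as the final
    // boundary, so the nodes of the last treelet are still traversed
    for (;; ++gTreeletIdx)
    {
        // Traverse only nodes that lie before the next treelet boundary
        while (nodeIdx < *gTreeletIdx)
        {
            Node node = FETCH(Node, gBvh, nodeIdx);
            nodeIdx = NodeNext(node);
            float f = AabbIntersect1(node.m_volume, &rayFrom, &rayTo, &invRay);
            if (f < hit->m_dist)
            {
                if (NodeIsLeaf(node))
                {
                    // do intersection test
                }
                else
                {
                    nodeIdx = NodeGetChild(node, 0);
                }
            }
        }
        if (*gTreeletIdx == INVALID_NODE)
            break;
    }
}
```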

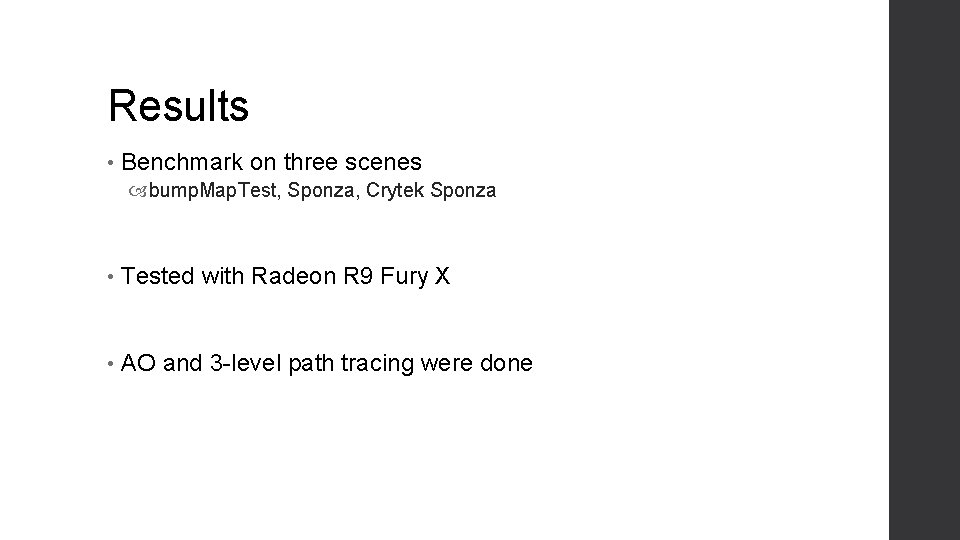

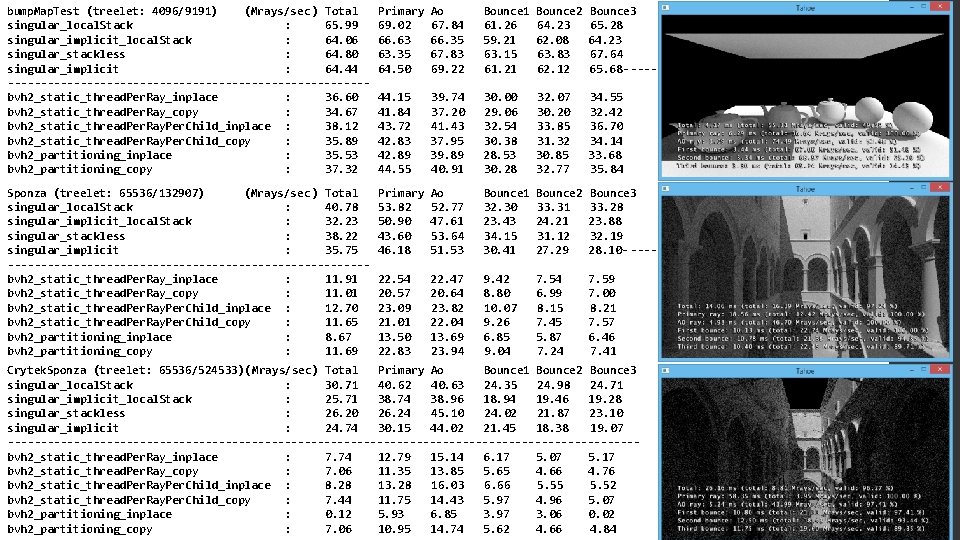

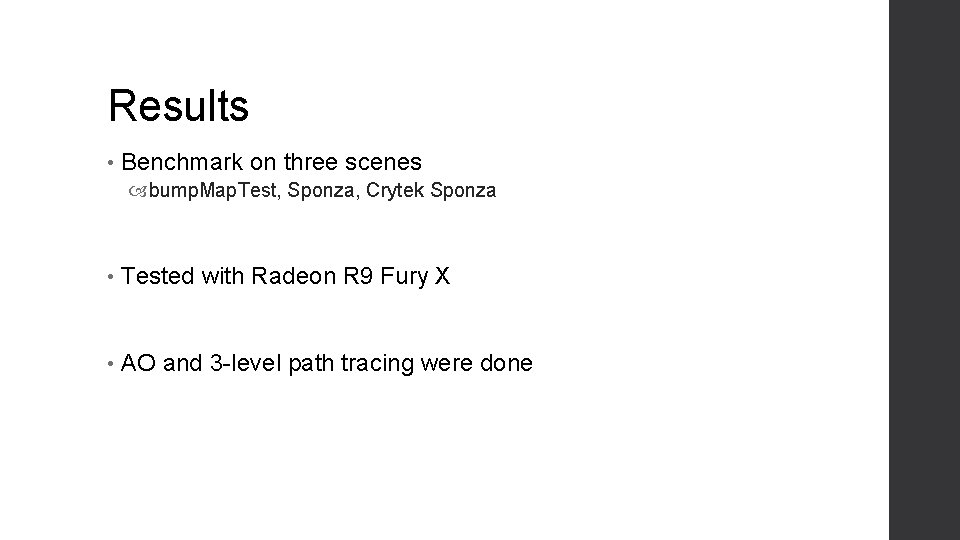

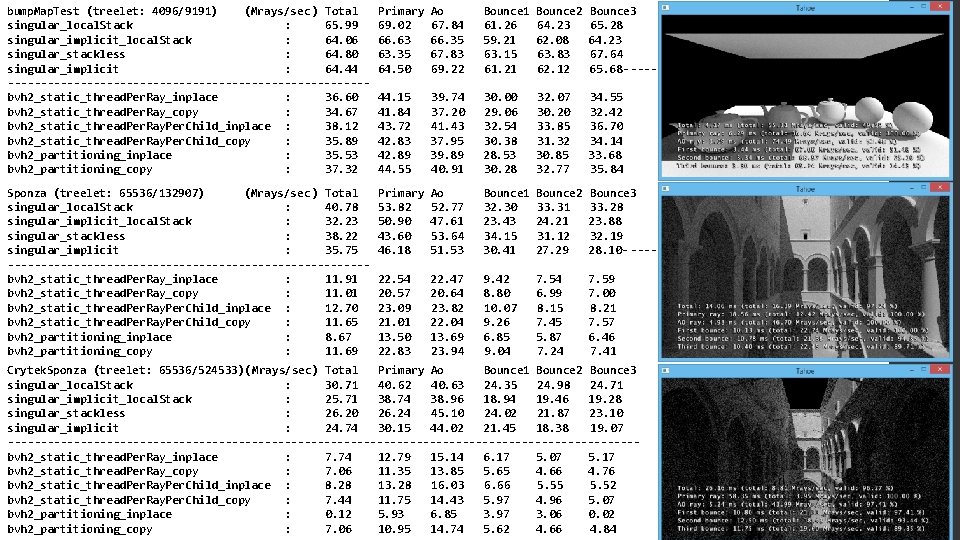

Results
- Benchmarked on three scenes: bumpMapTest, Sponza, Crytek Sponza
- Tested on a Radeon R9 Fury X
- Ran AO and 3-level path tracing

bumpMapTest (treelet: 4096/9191), Mrays/sec

| Method | Total | Primary | AO | Bounce 1 | Bounce 2 | Bounce 3 |
|---|---|---|---|---|---|---|
| singular_localStack | 65.99 | 69.02 | 67.84 | 61.26 | 64.23 | 65.28 |
| singular_implicit_localStack | 64.06 | 66.63 | 66.35 | 59.21 | 62.08 | 64.23 |
| singular_stackless | 64.80 | 63.35 | 67.83 | 63.15 | 63.83 | 67.64 |
| singular_implicit | 64.44 | 64.50 | 69.22 | 61.21 | 62.12 | 65.68 |
| bvh2_static_threadPerRay_inplace | 36.60 | 44.15 | 39.74 | 30.00 | 32.07 | 34.55 |
| bvh2_static_threadPerRay_copy | 34.67 | 41.84 | 37.20 | 29.06 | 30.20 | 32.42 |
| bvh2_static_threadPerRayPerChild_inplace | 38.12 | 43.72 | 41.43 | 32.54 | 33.85 | 36.70 |
| bvh2_static_threadPerRayPerChild_copy | 35.89 | 42.83 | 37.95 | 30.38 | 31.32 | 34.14 |
| bvh2_partitioning_inplace | 35.53 | 42.89 | 39.89 | 28.53 | 30.85 | 33.68 |
| bvh2_partitioning_copy | 37.32 | 44.55 | 40.91 | 30.28 | 32.77 | 35.84 |

Sponza (treelet: 65536/132907), Mrays/sec

| Method | Total | Primary | AO | Bounce 1 | Bounce 2 | Bounce 3 |
|---|---|---|---|---|---|---|
| singular_localStack | 40.78 | 53.82 | 52.77 | 32.30 | 33.31 | 33.28 |
| singular_implicit_localStack | 32.23 | 50.90 | 47.61 | 23.43 | 24.21 | 23.88 |
| singular_stackless | 38.22 | 43.60 | 53.64 | 34.15 | 31.12 | 32.19 |
| singular_implicit | 35.75 | 46.18 | 51.53 | 30.41 | 27.29 | 28.10 |
| bvh2_static_threadPerRay_inplace | 11.91 | 22.54 | 22.47 | 9.42 | 7.54 | 7.59 |
| bvh2_static_threadPerRay_copy | 11.01 | 20.57 | 20.64 | 8.80 | 6.99 | 7.00 |
| bvh2_static_threadPerRayPerChild_inplace | 12.70 | 23.09 | 23.82 | 10.07 | 8.15 | 8.21 |
| bvh2_static_threadPerRayPerChild_copy | 11.65 | 21.01 | 22.04 | 9.26 | 7.45 | 7.57 |
| bvh2_partitioning_inplace | 8.67 | 13.50 | 13.69 | 6.85 | 5.87 | 6.46 |
| bvh2_partitioning_copy | 11.69 | 22.83 | 23.94 | 9.04 | 7.24 | 7.41 |

CrytekSponza (treelet: 65536/524533), Mrays/sec

| Method | Total | Primary | AO | Bounce 1 | Bounce 2 | Bounce 3 |
|---|---|---|---|---|---|---|
| singular_localStack | 30.71 | 40.62 | 40.63 | 24.35 | 24.98 | 24.71 |
| singular_implicit_localStack | 25.71 | 38.74 | 38.96 | 18.94 | 19.46 | 19.28 |
| singular_stackless | 26.20 | 26.24 | 45.10 | 24.02 | 21.87 | 23.10 |
| singular_implicit | 24.74 | 30.15 | 44.02 | 21.45 | 18.38 | 19.07 |
| bvh2_static_threadPerRay_inplace | 7.74 | 12.79 | 15.14 | 6.17 | 5.07 | 5.17 |
| bvh2_static_threadPerRay_copy | 7.06 | 11.35 | 13.85 | 5.65 | 4.66 | 4.76 |
| bvh2_static_threadPerRayPerChild_inplace | 8.28 | 13.28 | 16.03 | 6.66 | 5.55 | 5.52 |
| bvh2_static_threadPerRayPerChild_copy | 7.44 | 11.75 | 14.43 | 5.97 | 4.96 | 5.07 |
| bvh2_partitioning_inplace | 0.12 | 5.93 | 6.85 | 3.97 | 3.06 | 0.02 |
| bvh2_partitioning_copy | 7.06 | 10.95 | 14.74 | 5.62 | 4.66 | 4.84 |

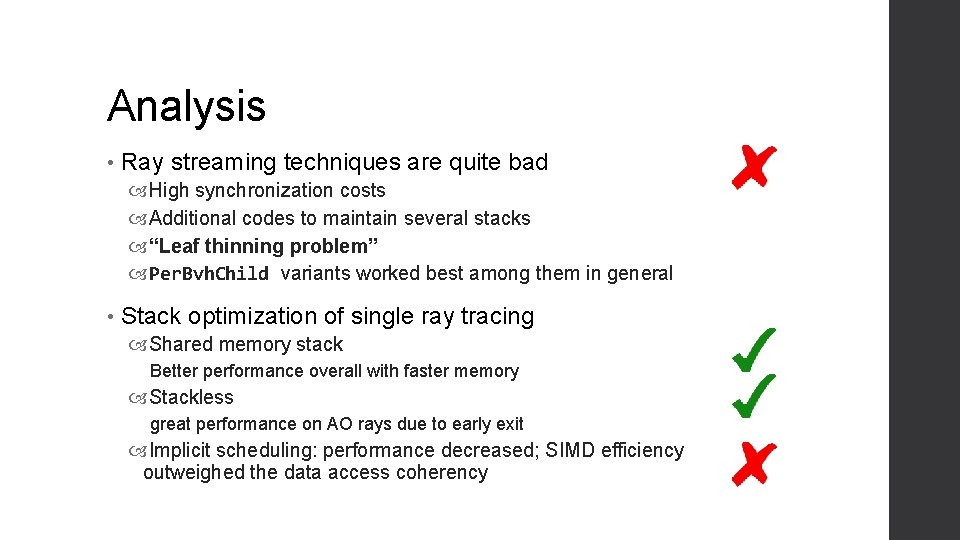

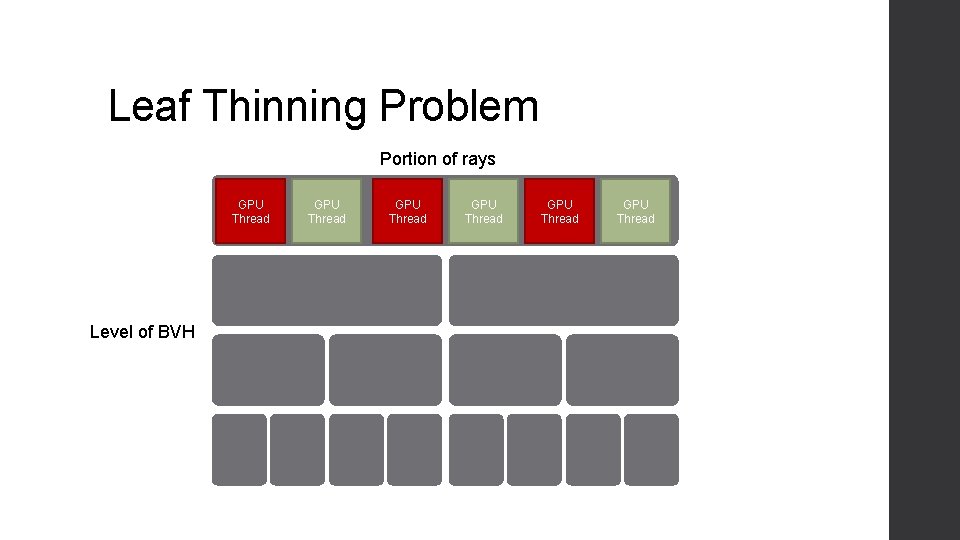

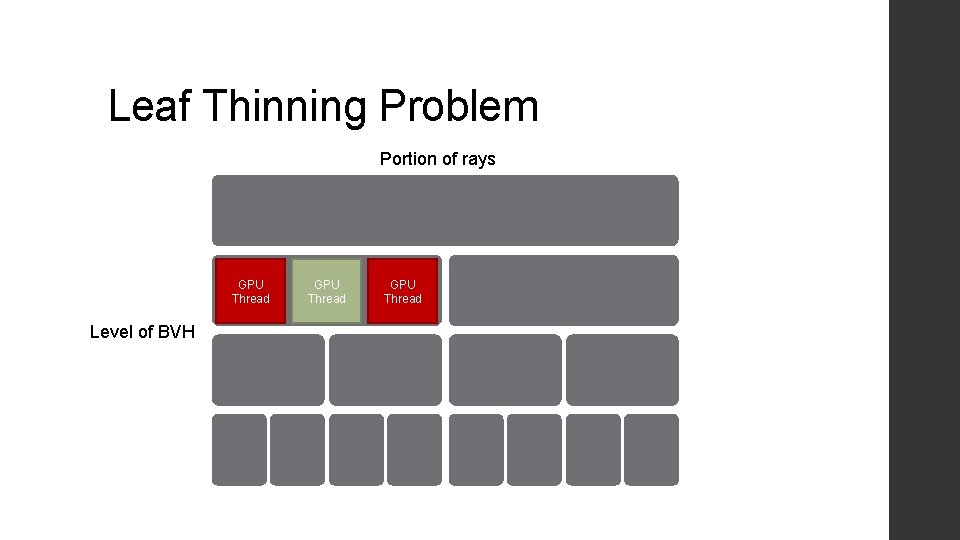

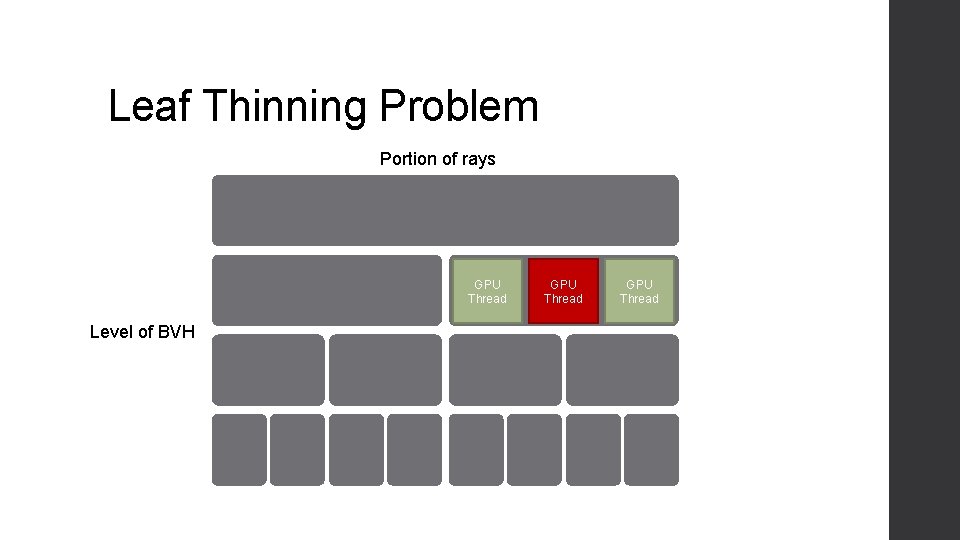

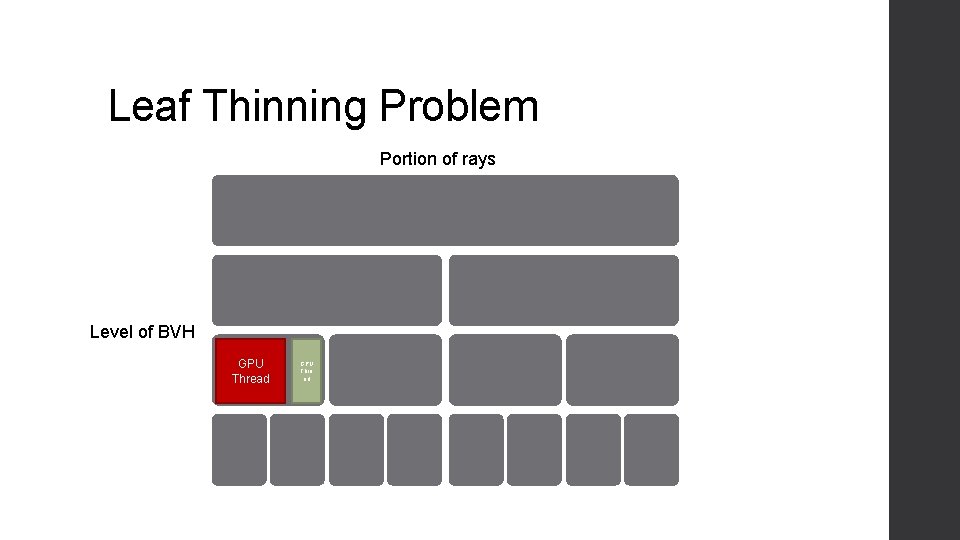

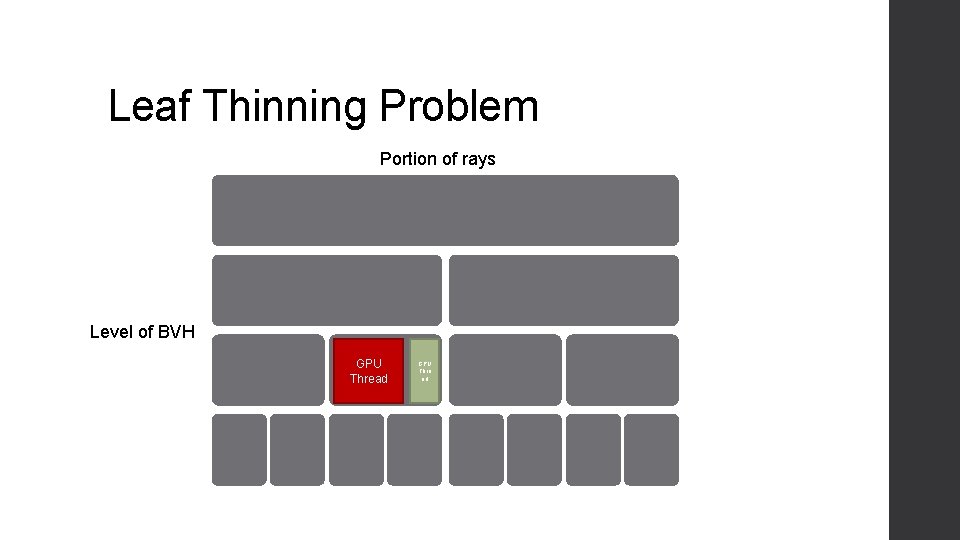

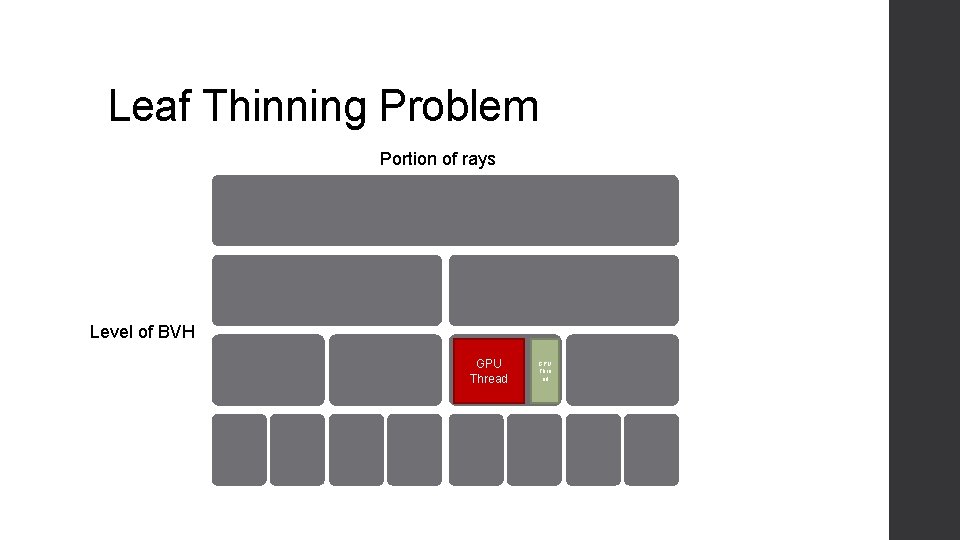

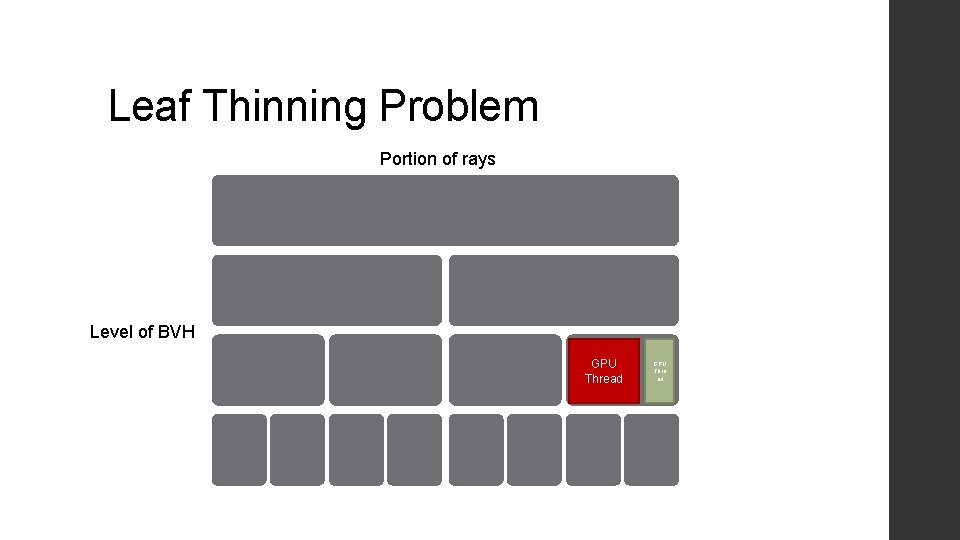

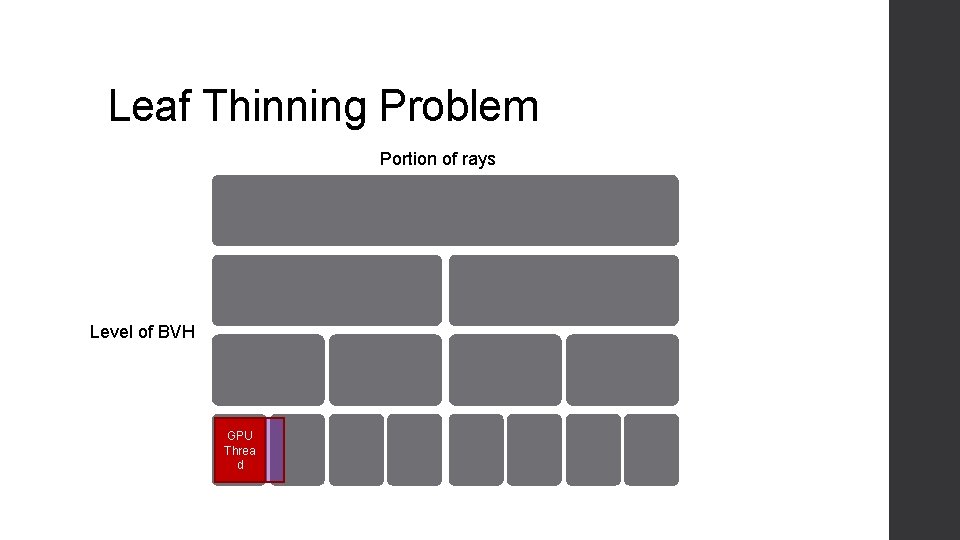

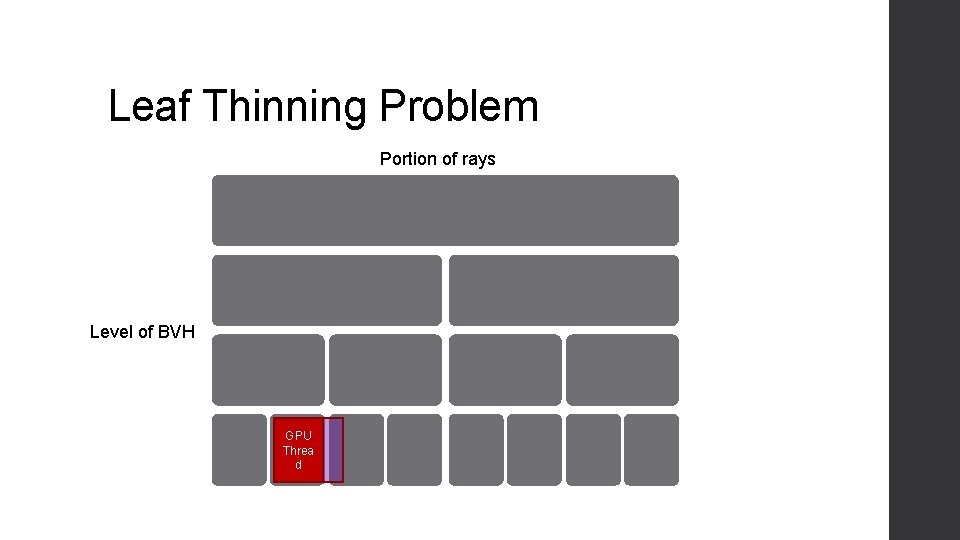

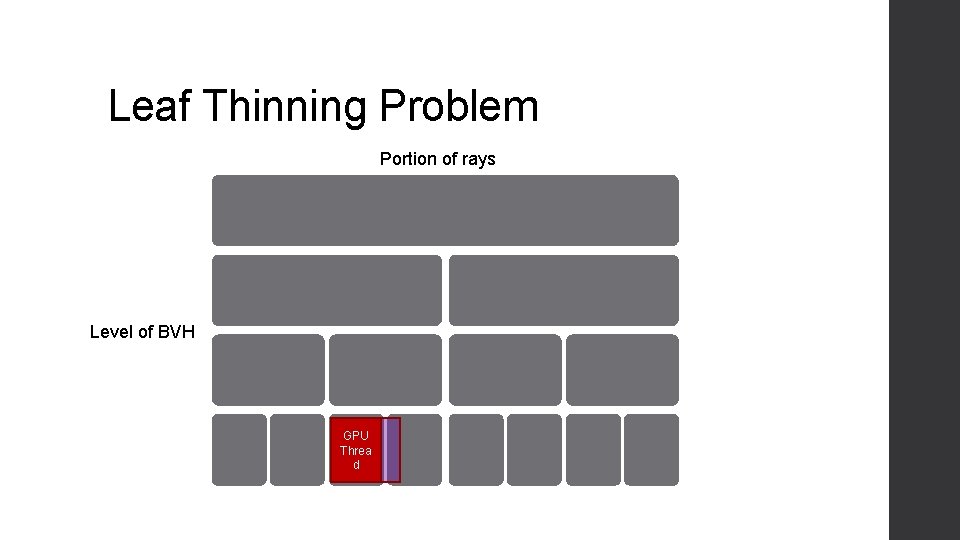

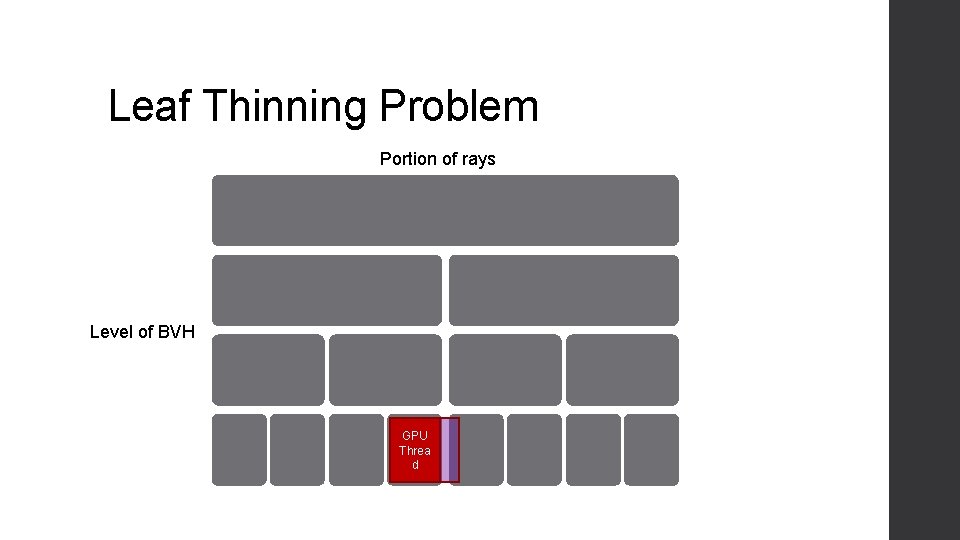

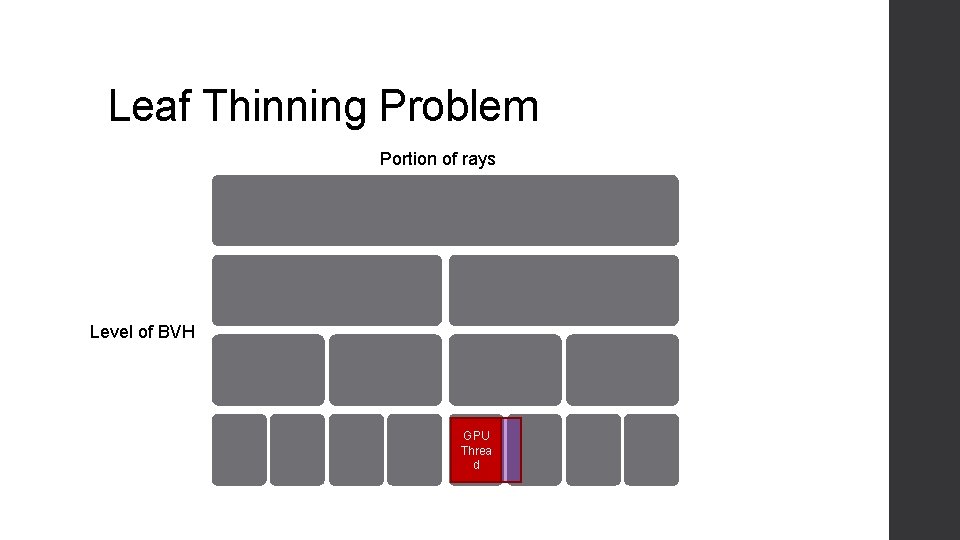

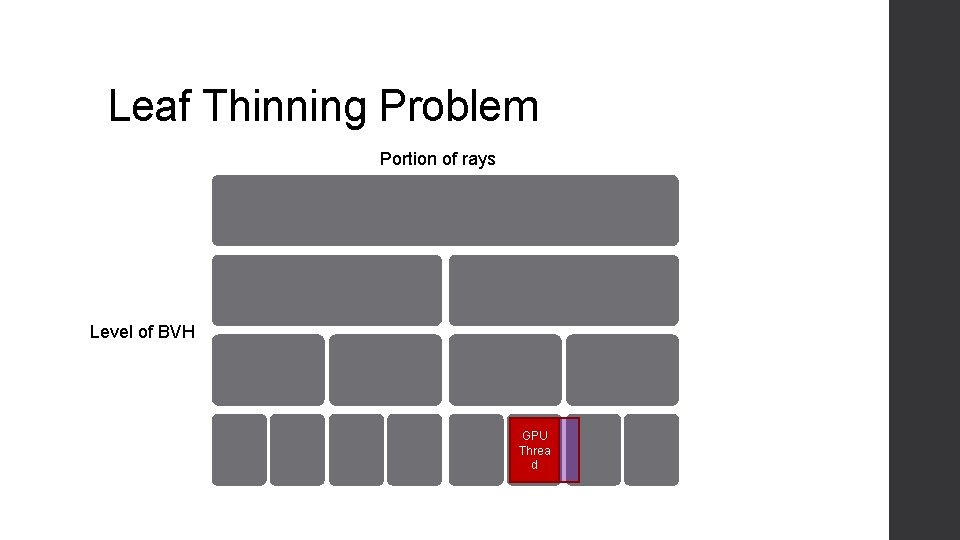

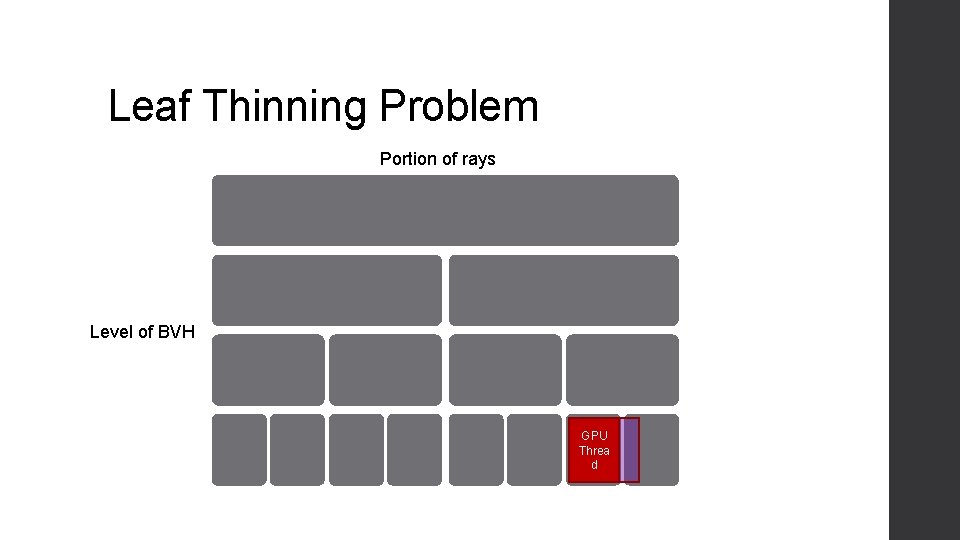

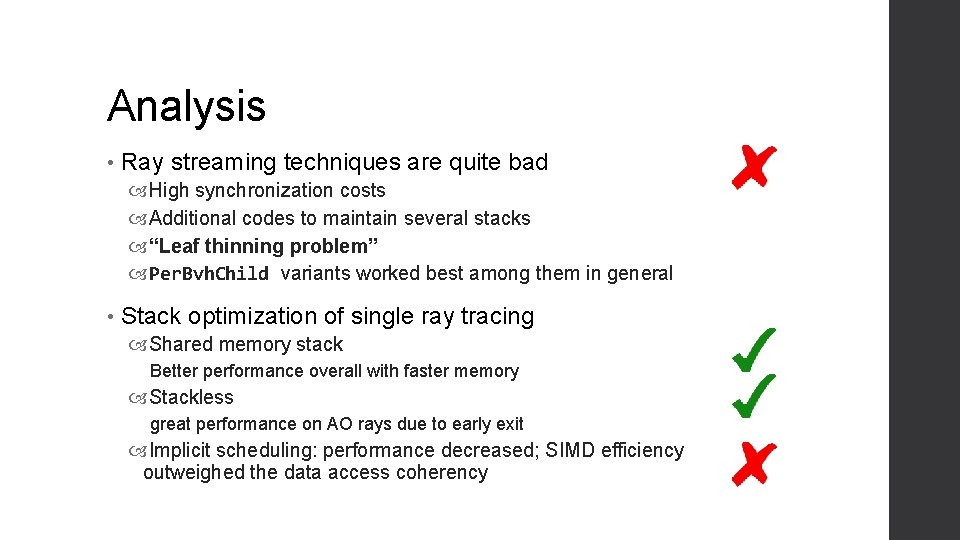

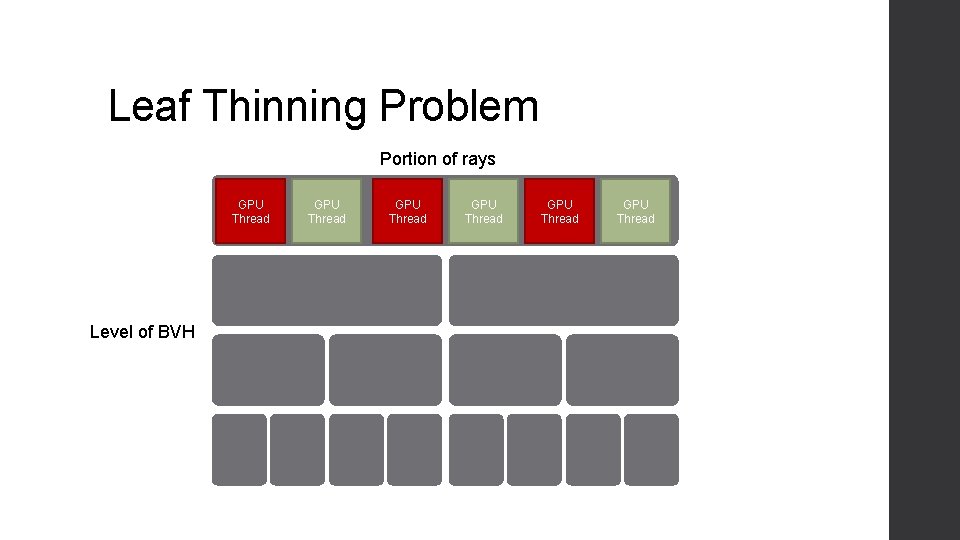

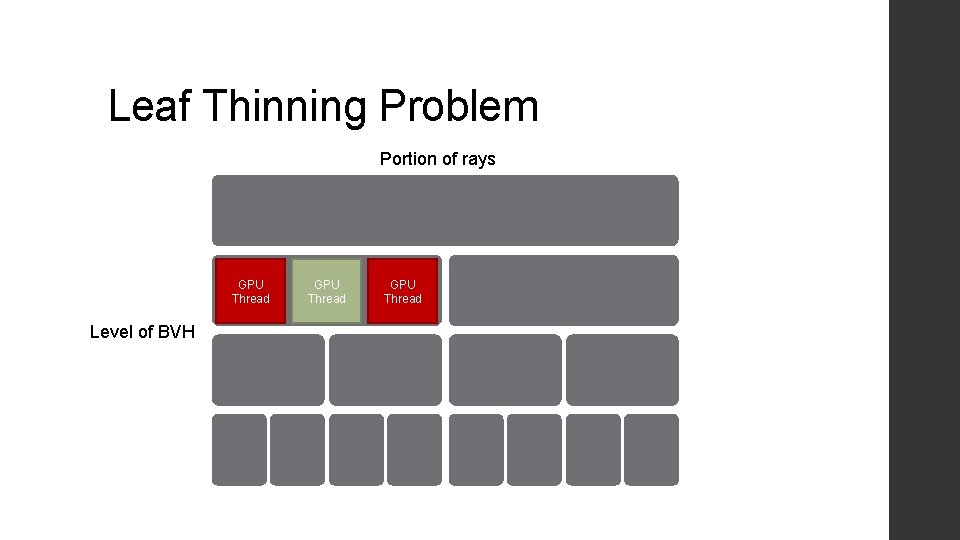

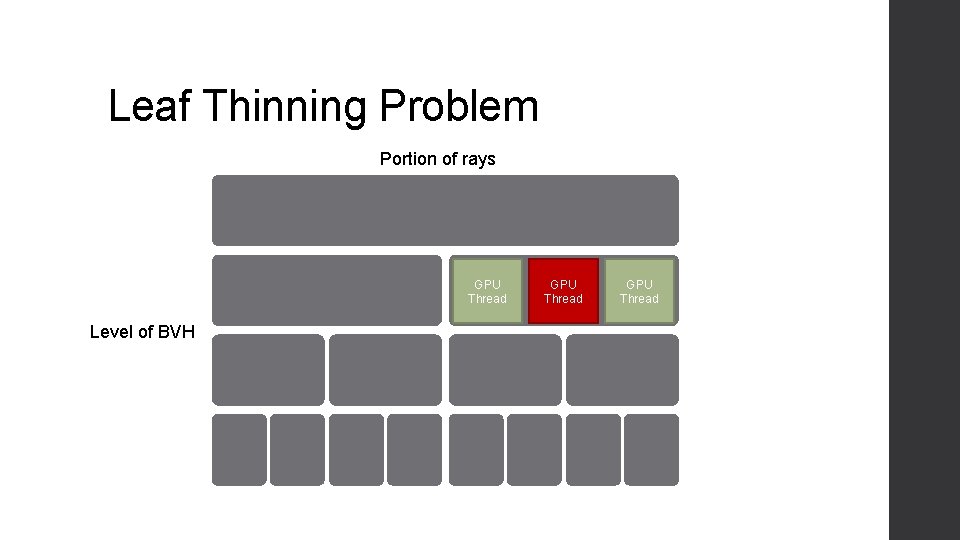

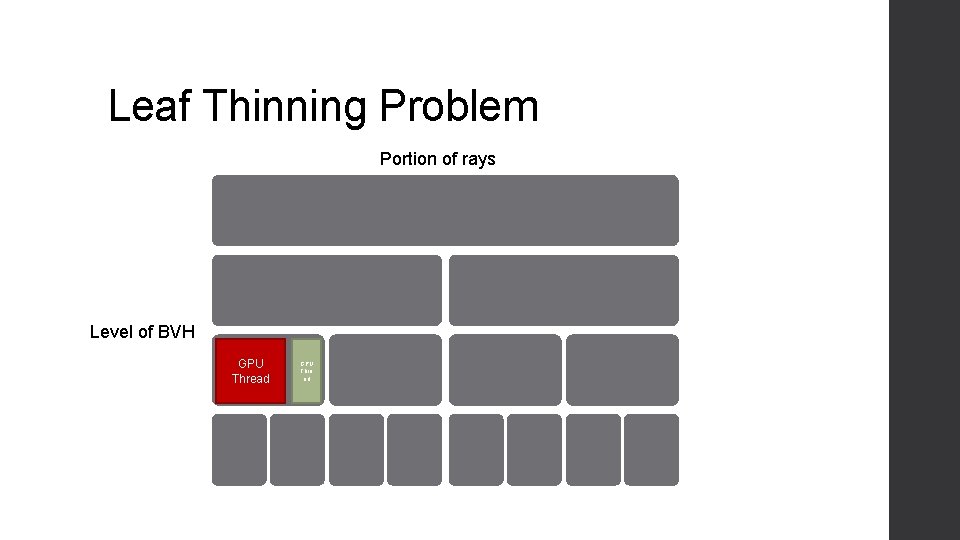

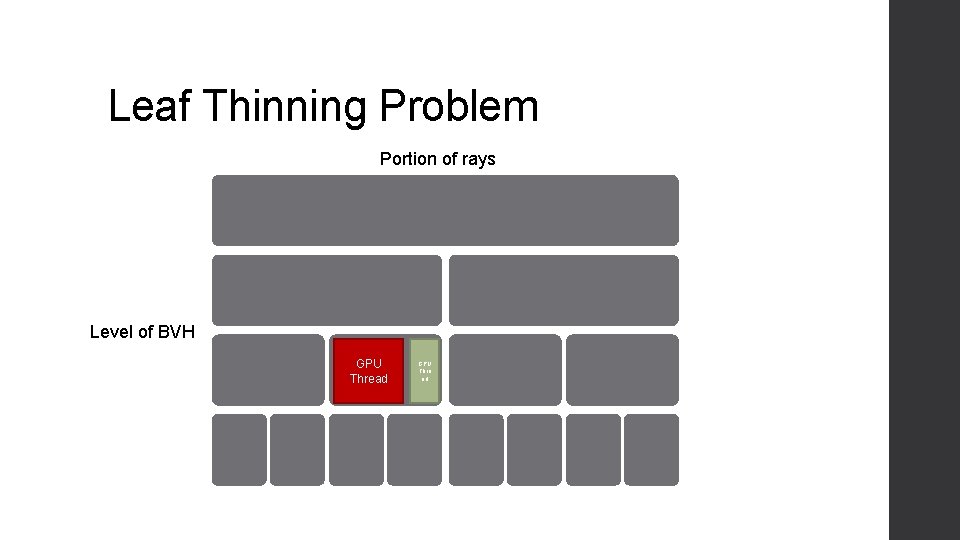

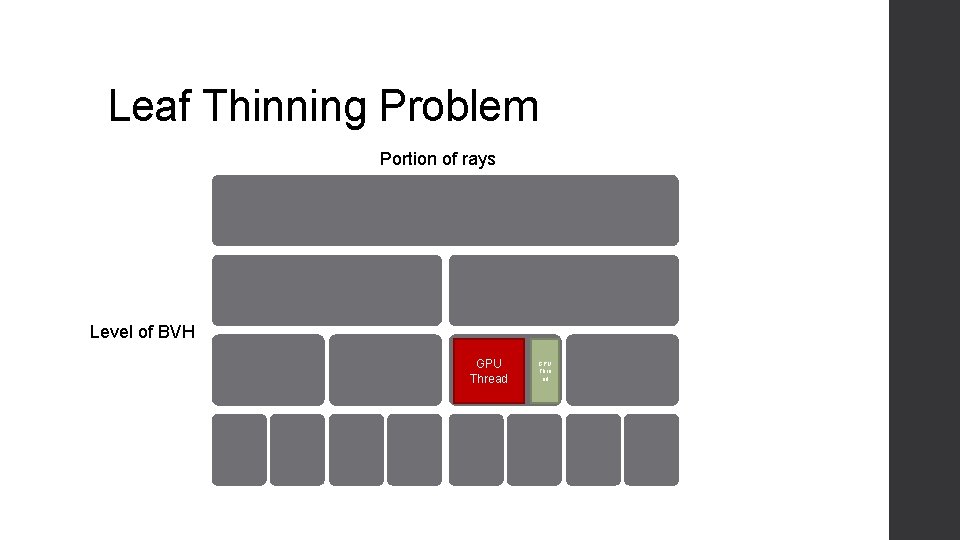

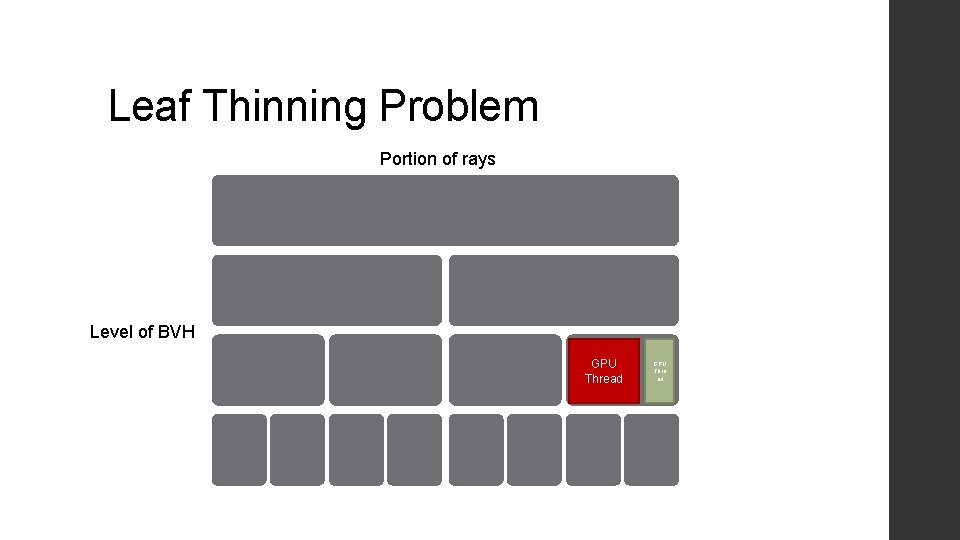

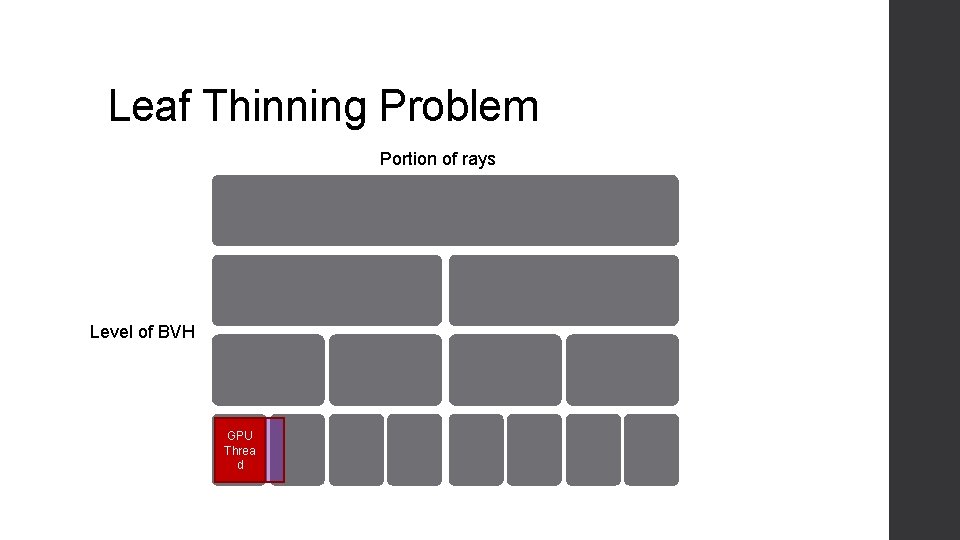

Analysis
- Ray streaming techniques performed poorly
  - High synchronization costs
  - Additional code to maintain several stacks
  - “Leaf thinning problem”
  - PerBvhChild variants generally worked best among them
- Stack optimization of single-ray tracing
  - Shared memory stack: better performance overall thanks to faster memory
  - Stackless: great performance on AO rays due to early exit
  - Implicit scheduling: performance decreased; the loss of SIMD efficiency outweighed the gain in data access coherency

Future Work
- Partitioning-based ray streaming may be applied to many-core CPUs
- Implicit ray casting may be improved
- Other optimizations
- May be continued in the next AMD internship (if possible)

Summary
- Ported various ray streaming methods to the GPU
  - Generally performed poorly, as reported in [Aila 09]
  - Devised the PerBvhChild variant, the fastest among the RS techniques
- Implemented and compared variants of single-ray tracing
  - Stack-based tracing with different memories
  - Stackless traversal and implicit scheduling
  - Local-stack tracing showed the best performance among them
  - Focused on the recent Fiji architecture
- Devised novel partitioning-based ray streaming
  - BVH traversal with bounded memory usage
  - A novel partitioning algorithm for the GPU
  - May achieve a speedup over other RS methods in memory-limited situations (e.g., out-of-core rendering)

Learned About
- Rendering techniques and principles in AMD FireRender
- Industry-level priorities in software design decisions
- International co-working skills
- Programming techniques
  - OpenCL
  - Debugging, profiling, and optimizing GPU code
  - Various aspects of GPUs in general
  - Architectural differences between AMD and NVIDIA GPUs

Future Plan

Future Plan
- Get back to the original topic
- Target conferences
  - SIGGRAPH 2016: late Jan.
  - PG/EGSR/SIGGRAPH Asia 2016: early April

Q&A

End of slides

Backup

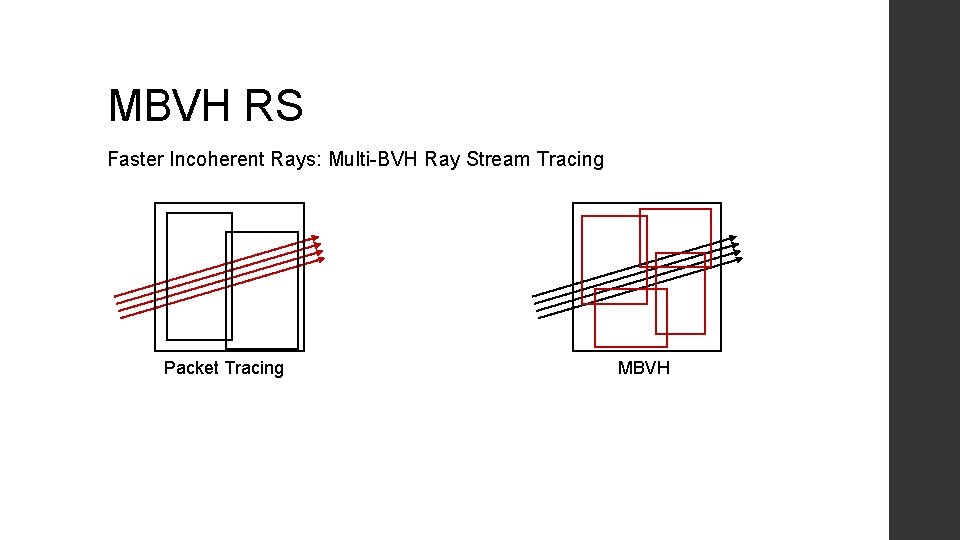

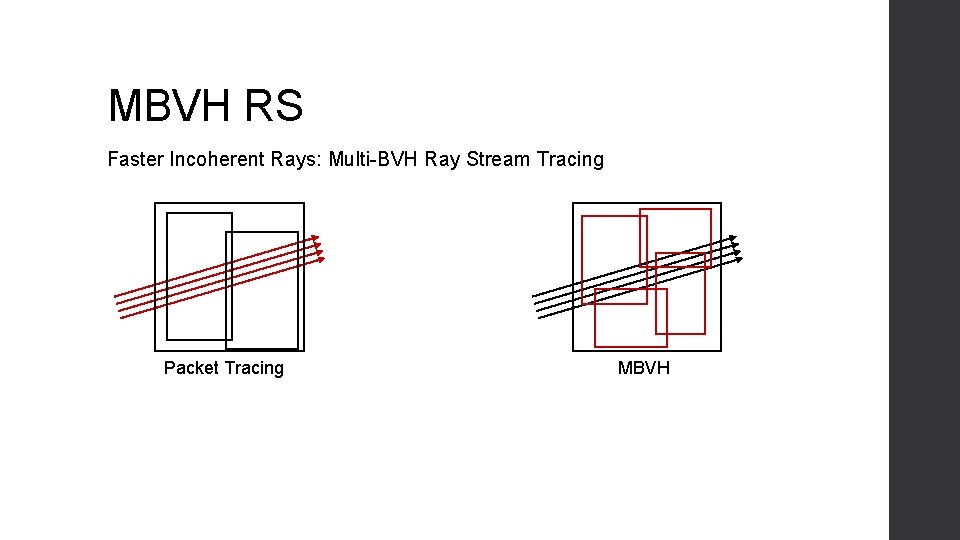

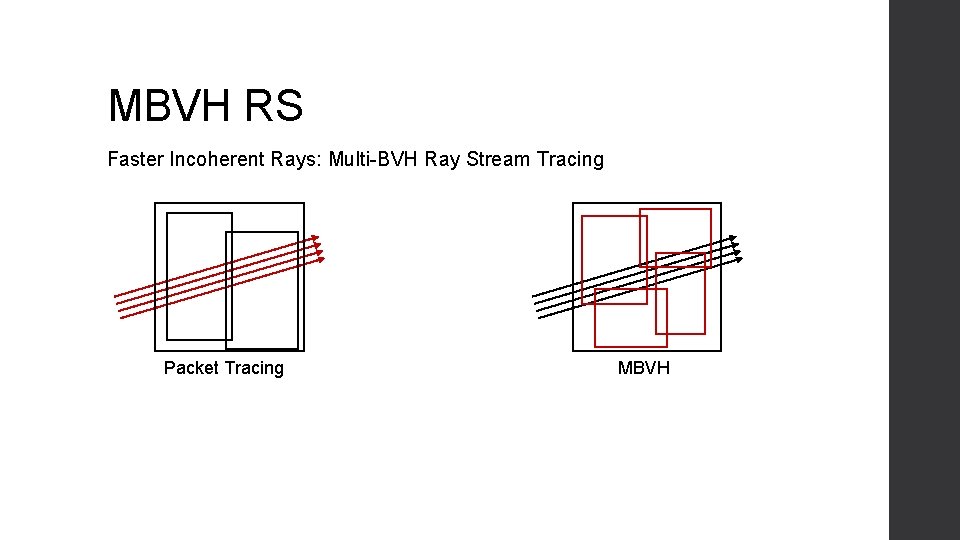

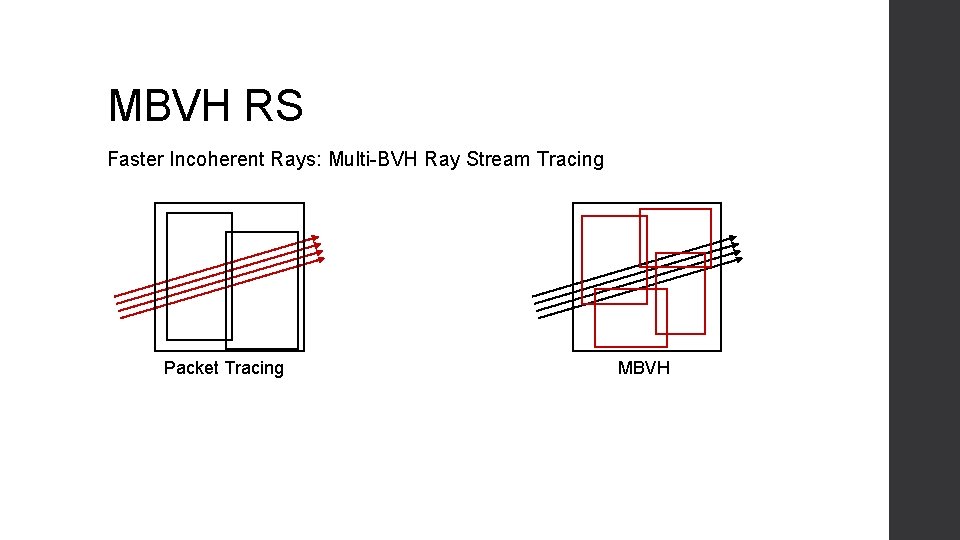

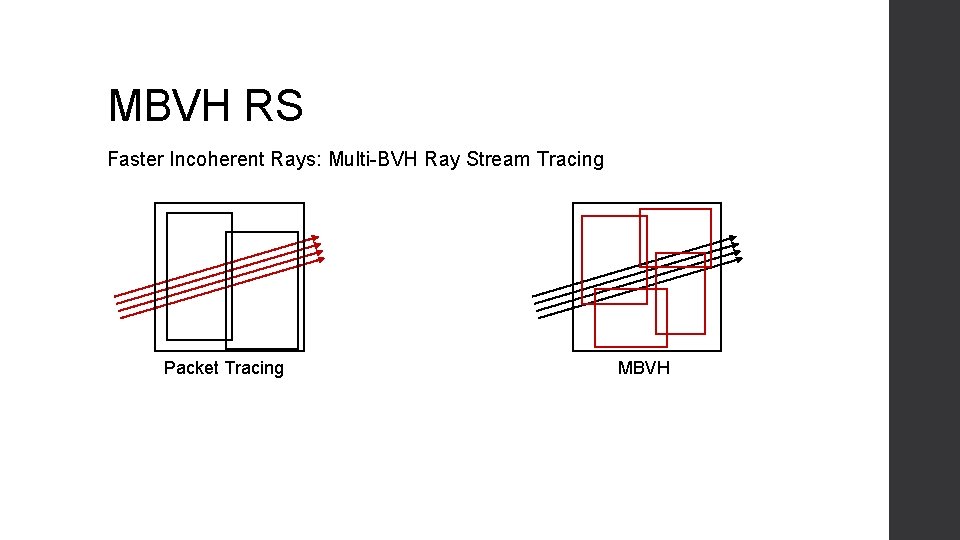

MBVH RS (Faster Incoherent Rays: Multi-BVH Ray Stream Tracing)
- Packet tracing

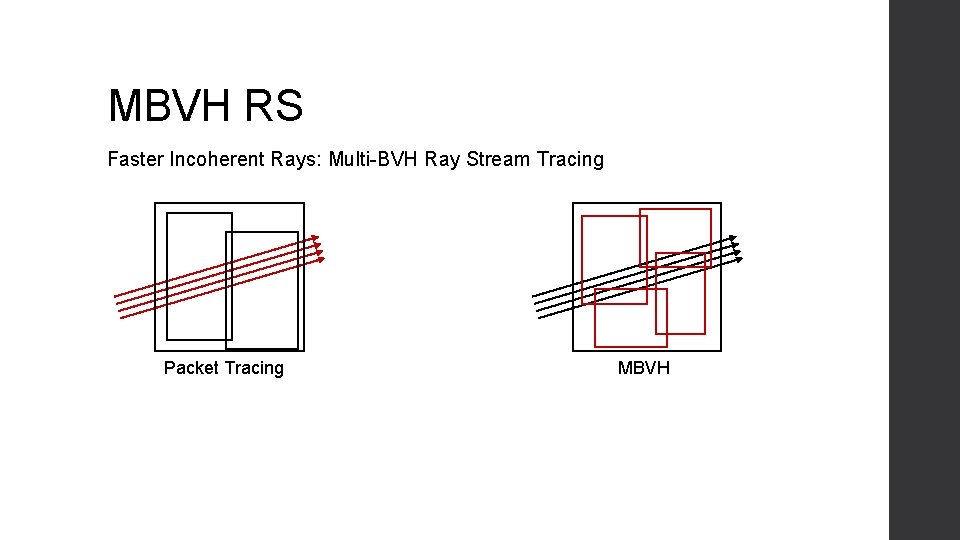

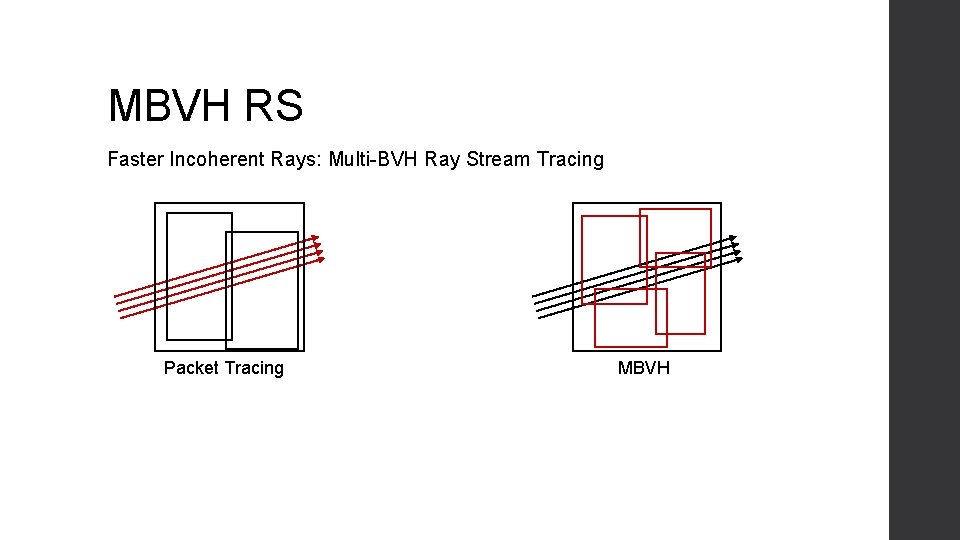

MBVH RS (Faster Incoherent Rays: Multi-BVH Ray Stream Tracing)
- Packet tracing
- MBVH

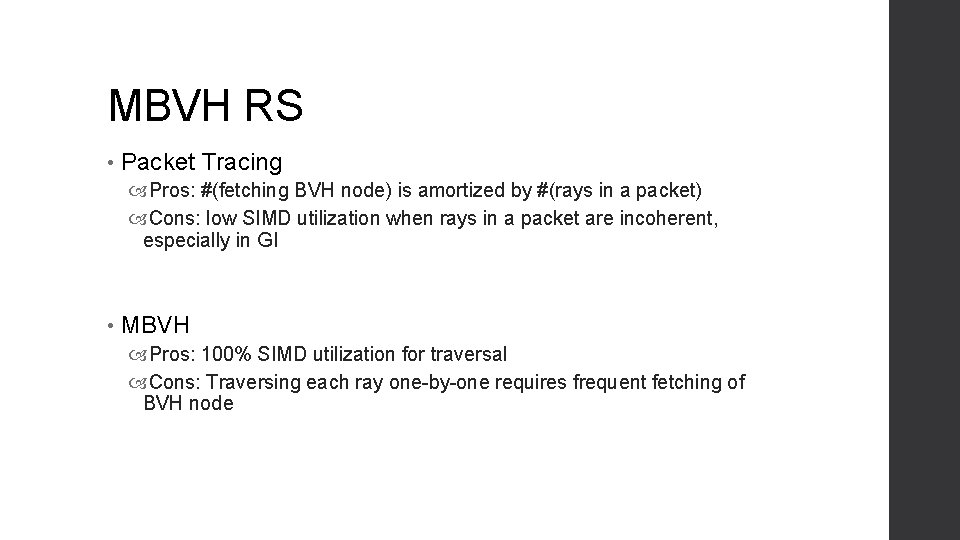

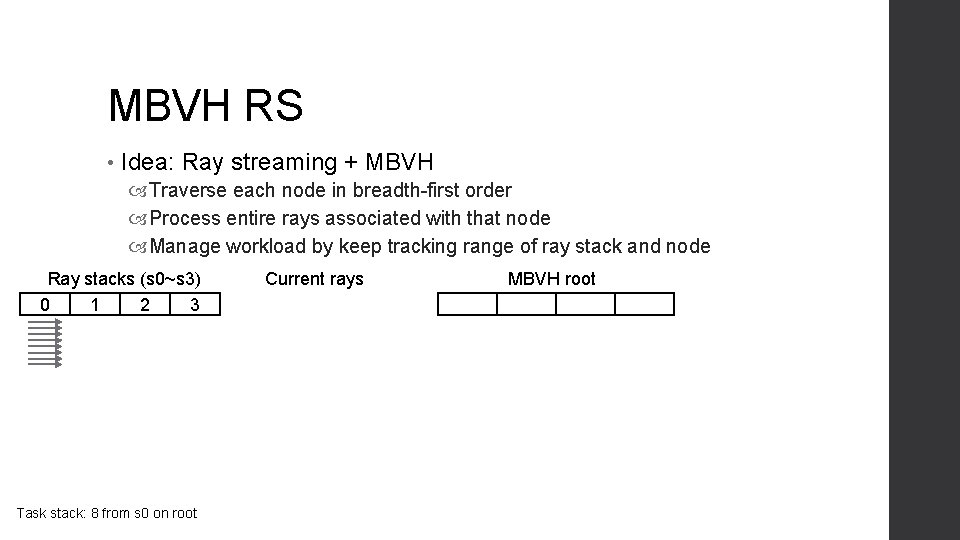

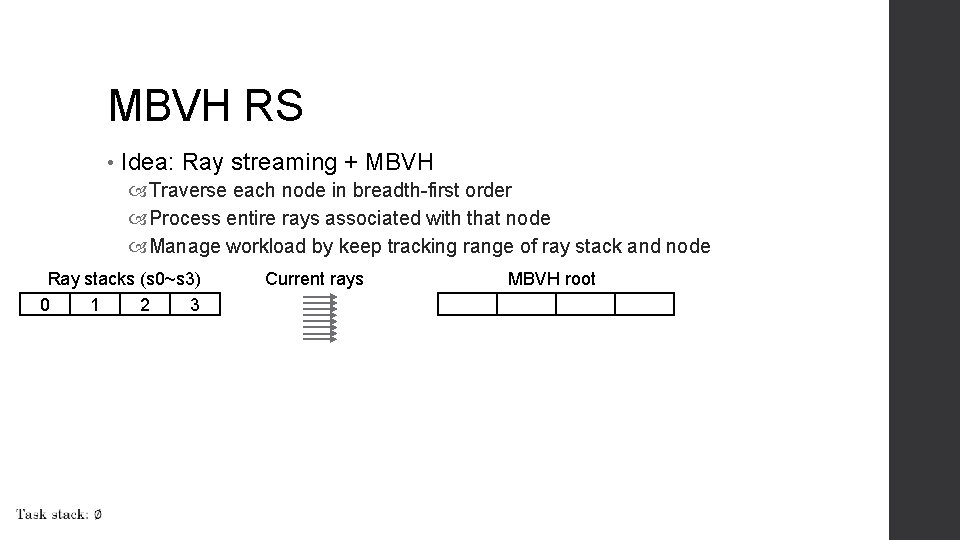

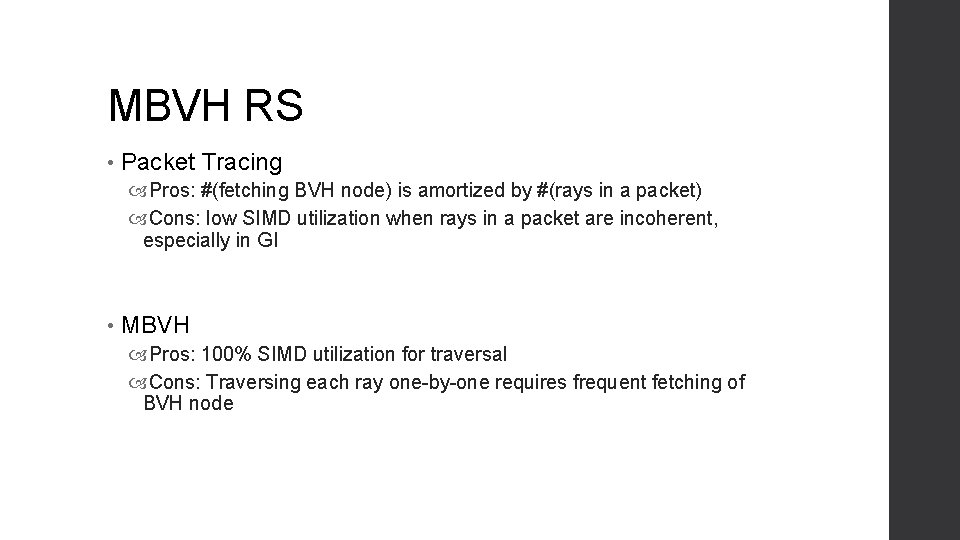

MBVH RS
- Packet tracing
  - Pros: the cost of fetching a BVH node is amortized over the rays in a packet
  - Cons: low SIMD utilization when the rays in a packet are incoherent, especially in GI
- MBVH
  - Pros: 100% SIMD utilization for traversal
  - Cons: traversing rays one by one requires frequent fetching of BVH nodes

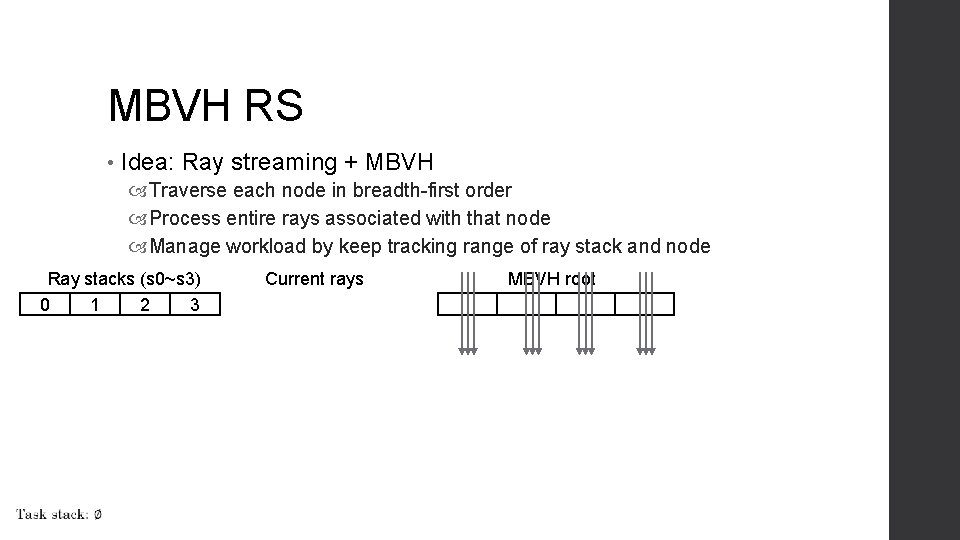

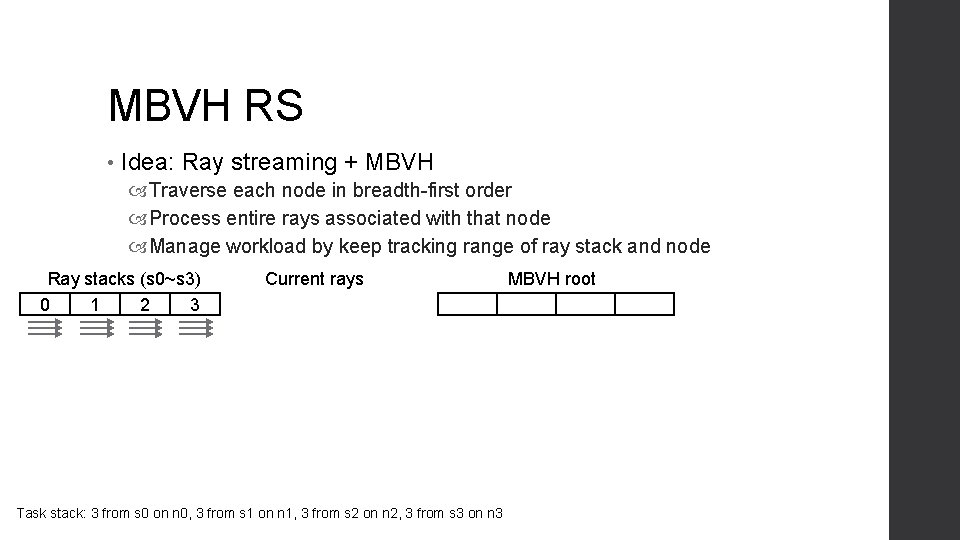

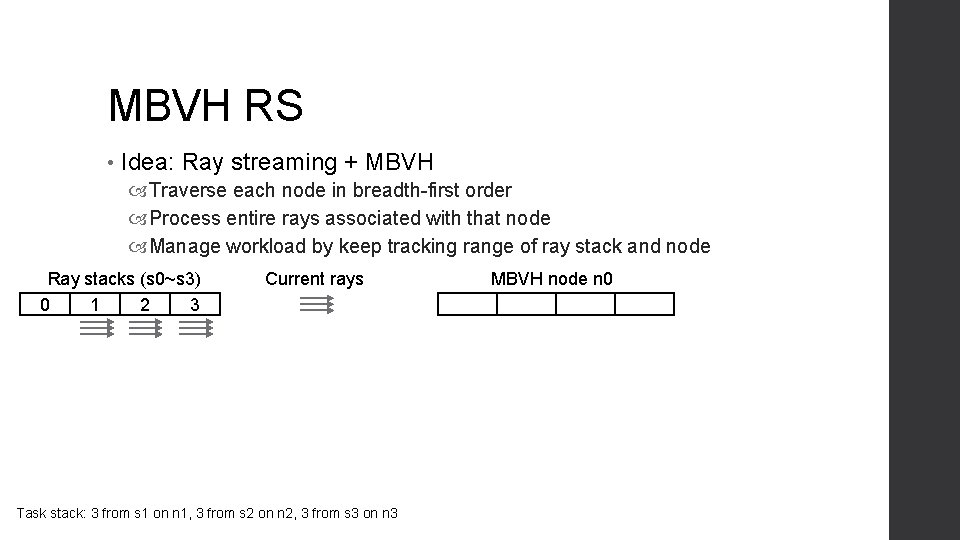

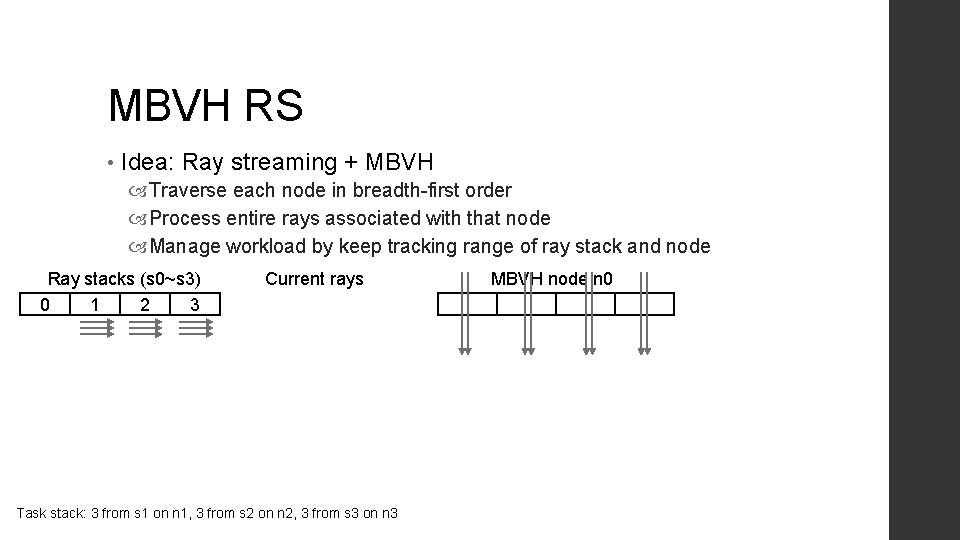

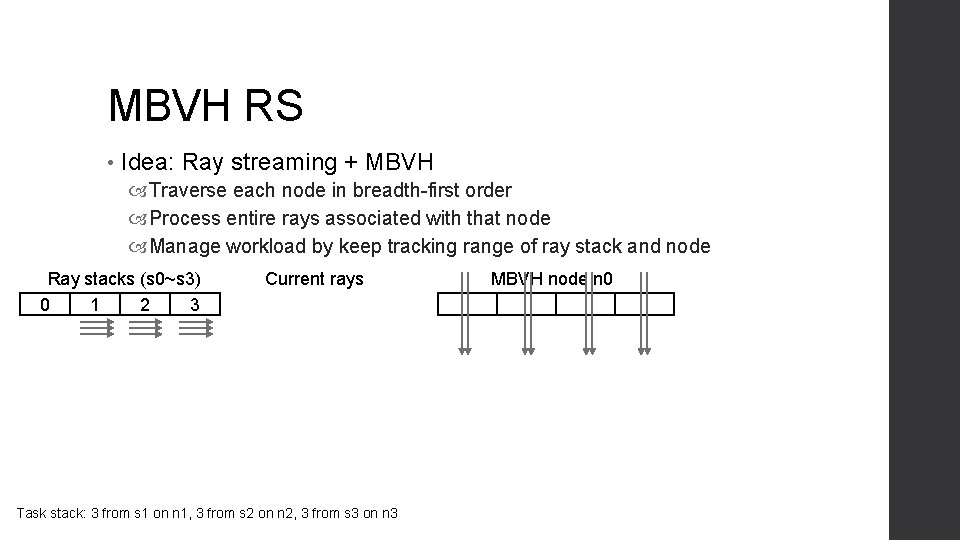

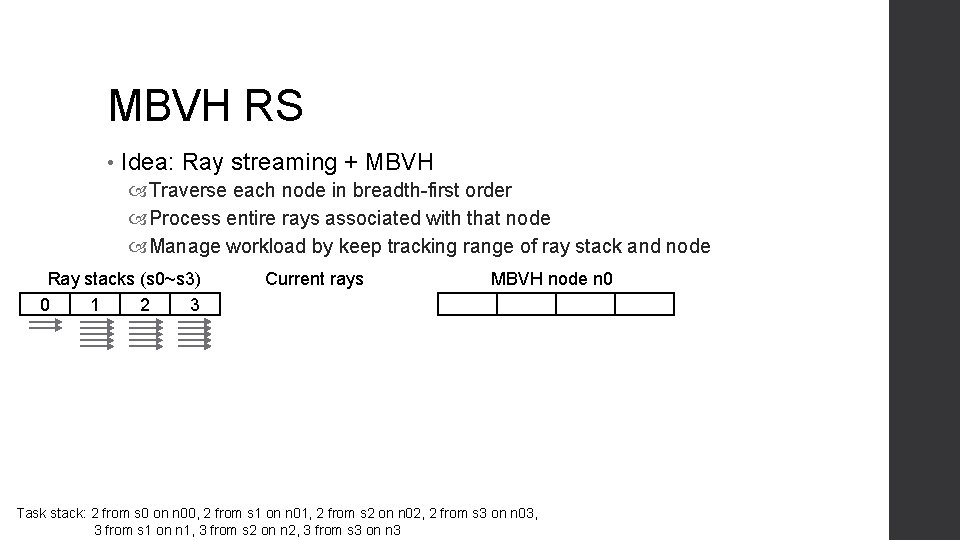

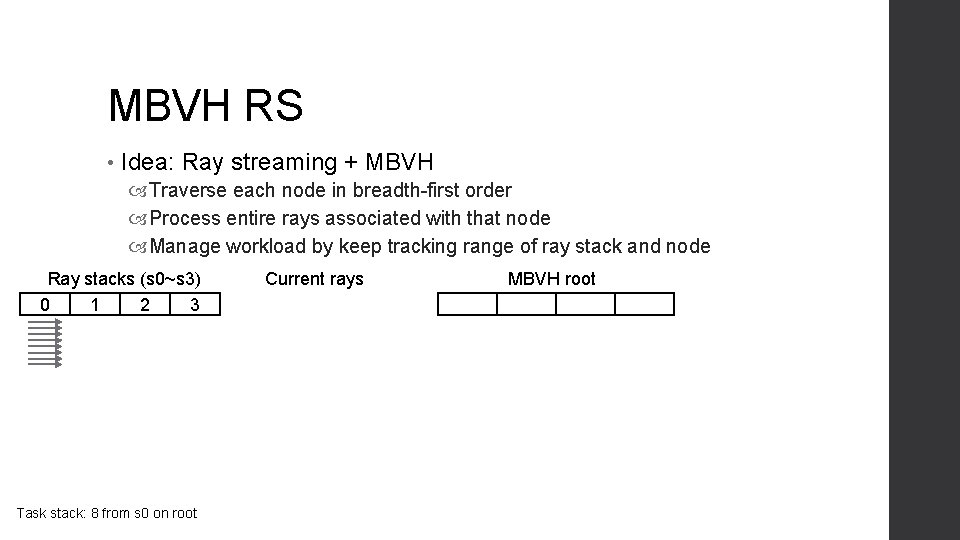

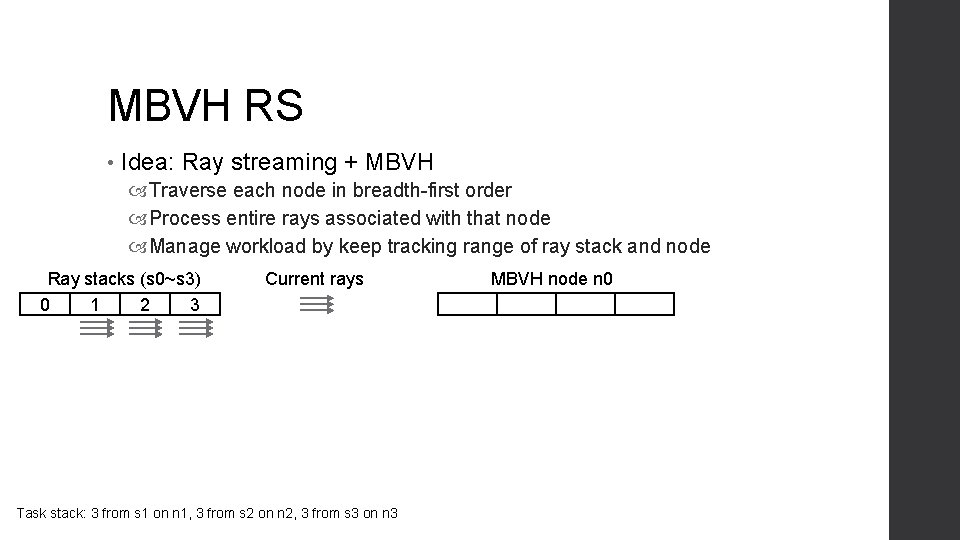

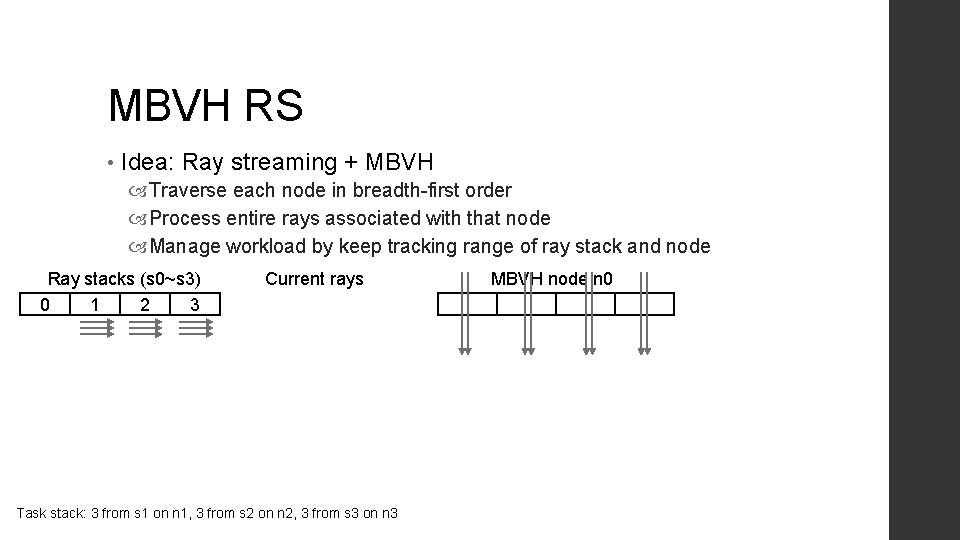

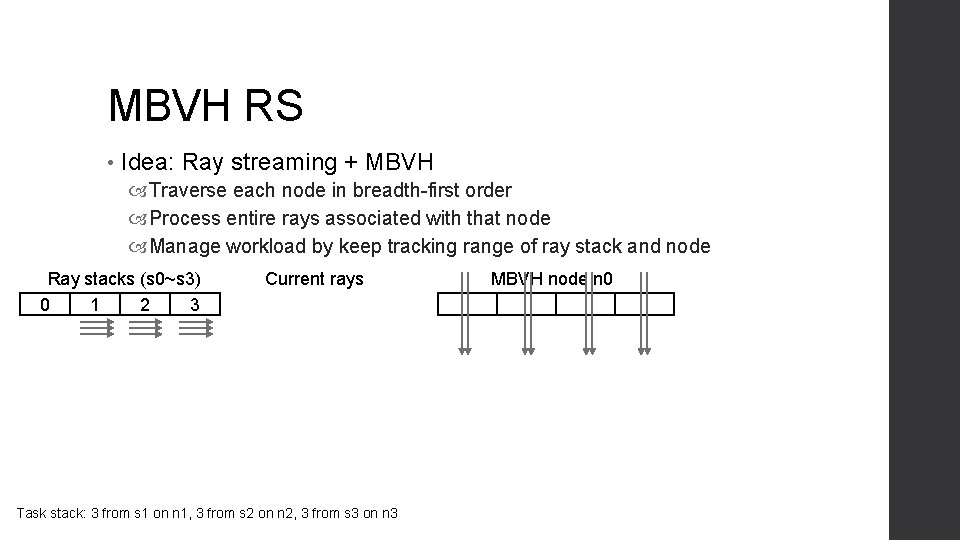

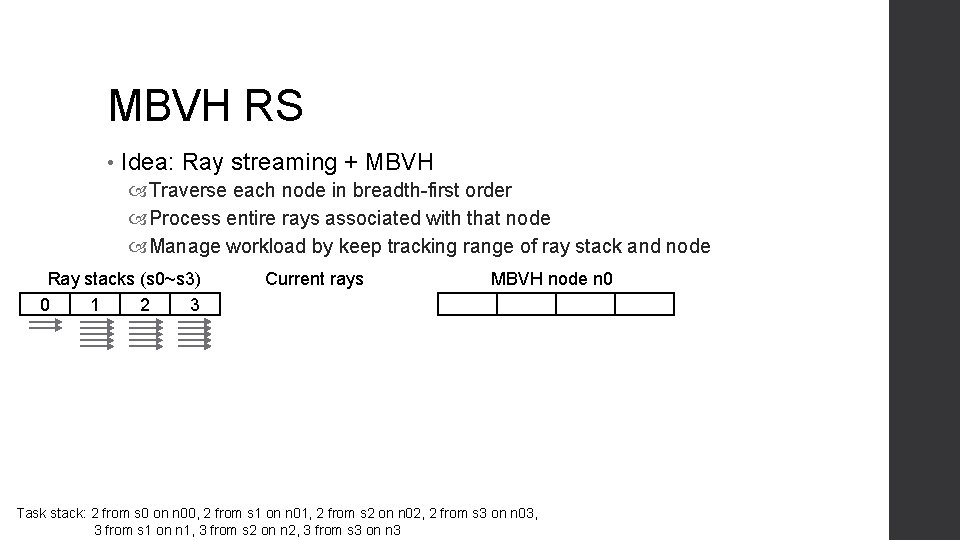

MBVH RS
- Idea: ray streaming + MBVH
  - Traverse each node in breadth-first order
  - Process all rays associated with that node at once
  - Manage the workload by keeping track of the range of each ray stack and its node
- Ray stacks s0~s3 (one per child slot 0 1 2 3); current rays at the MBVH root
- Task stack: 8 from s0 on root
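A simplified sequential sketch of stream traversal with a task stack. It assumes a binary toy tree instead of a 4-wide MBVH, and 1D toy rays and intervals in place of real rays and boxes. Each task names a node and a segment of a shared ray-index buffer; processing an inner node compacts that segment into one new segment per child (the per-child "ray stacks") and pushes a task for each non-empty one. All names are hypothetical.

```c
#include <assert.h>
#include <math.h>
#include <stddef.h>

#define ARITY 2          /* toy binary tree; MBVH RS uses 4-wide nodes */
#define MAX_IDS 1024     /* capacity of the shared ray-index buffer */
#define MAX_TASKS 64

/* Toy 1D scene (assumption): ray r starts at org[r] and travels toward +x. */
typedef struct { float lo, hi; int isLeaf; int child[ARITY]; float point; } StreamNode;

/* A task: "process ids[begin .. begin+count-1] against node". */
typedef struct { int node; size_t begin, count; } Task;

static void traceStream(const StreamNode *nodes, const float *org, size_t rayCount, float *best)
{
    size_t ids[MAX_IDS];      /* per-child ray stacks, stored back to back */
    Task tasks[MAX_TASKS];
    size_t used = 0;
    int top = 0;
    assert(rayCount <= MAX_IDS);
    for (size_t r = 0; r < rayCount; ++r) { ids[used++] = r; best[r] = INFINITY; }
    tasks[top++] = (Task){ 0, 0, rayCount };          /* all rays start at the root */
    while (top > 0) {
        Task t = tasks[--top];
        used = t.begin + t.count;    /* release segments of finished subtrees */
        const StreamNode *n = &nodes[t.node];
        if (n->isLeaf) {             /* intersect the whole stream with the primitive */
            for (size_t k = 0; k < t.count; ++k) {
                size_t r = ids[t.begin + k];
                float d = n->point >= org[r] ? n->point - org[r] : INFINITY;
                if (d < best[r]) best[r] = d;
            }
            continue;
        }
        /* Compact the stream into one segment per child (last child first,
           so child 0 ends up on top of the task stack). */
        for (int c = ARITY - 1; c >= 0; --c) {
            const StreamNode *ch = &nodes[n->child[c]];
            size_t begin = used;
            for (size_t k = 0; k < t.count; ++k) {
                size_t r = ids[t.begin + k];
                if (ch->hi >= org[r]) { assert(used < MAX_IDS); ids[used++] = r; }
            }
            if (used > begin) {
                assert(top < MAX_TASKS);
                tasks[top++] = (Task){ n->child[c], begin, used - begin };
            }
        }
    }
}
```

Segments are appended and released in stack order, so resetting `used` when a task is popped reclaims the memory of finished subtrees. The buffer can still grow with the number of rays, the tree depth, and the branching factor, which is the memory problem that partitioning ray casting addresses.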

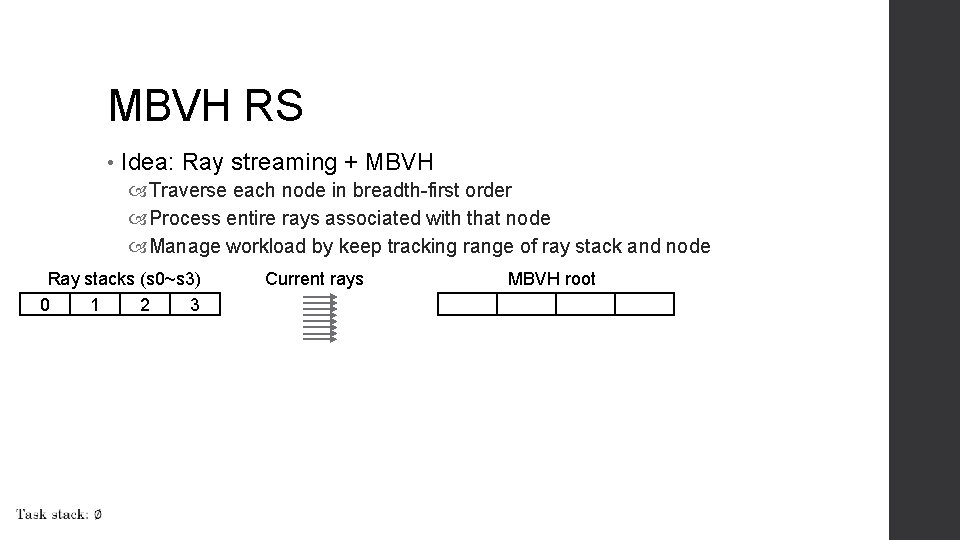

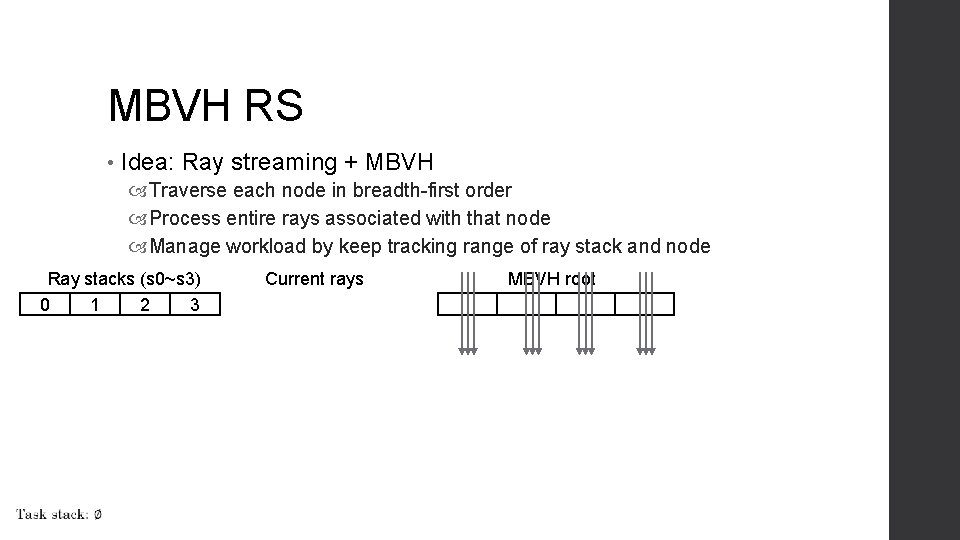

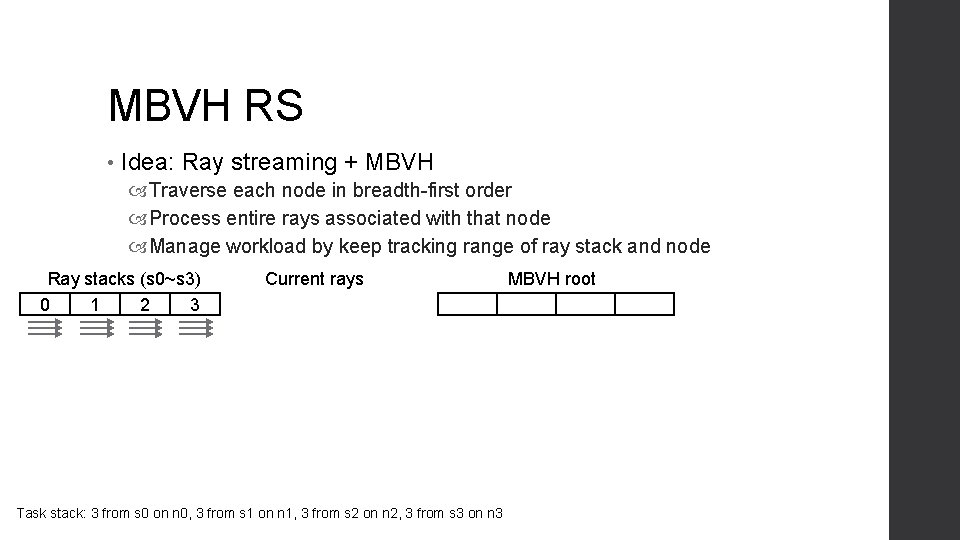

MBVH RS (continued): current node is the MBVH root
- Task stack: 3 from s0 on n0, 3 from s1 on n1, 3 from s2 on n2, 3 from s3 on n3

MBVH RS (continued): current node is n0
- Task stack: 3 from s1 on n1, 3 from s2 on n2, 3 from s3 on n3

MBVH RS (continued): current node is n0
- Task stack: 2 from s0 on n00, 2 from s1 on n01, 2 from s2 on n02, 2 from s3 on n03, 3 from s1 on n1, 3 from s2 on n2, 3 from s3 on n3

DRST (Dynamic Ray Stream Traversal)
- A similar stack-based ray streaming method
- Differences from MBVH RS
  1. One stack per traversal order (not per SIMD slot)
  2. Left-to-right vs. right-to-left traversal order is decided on a per-ray basis

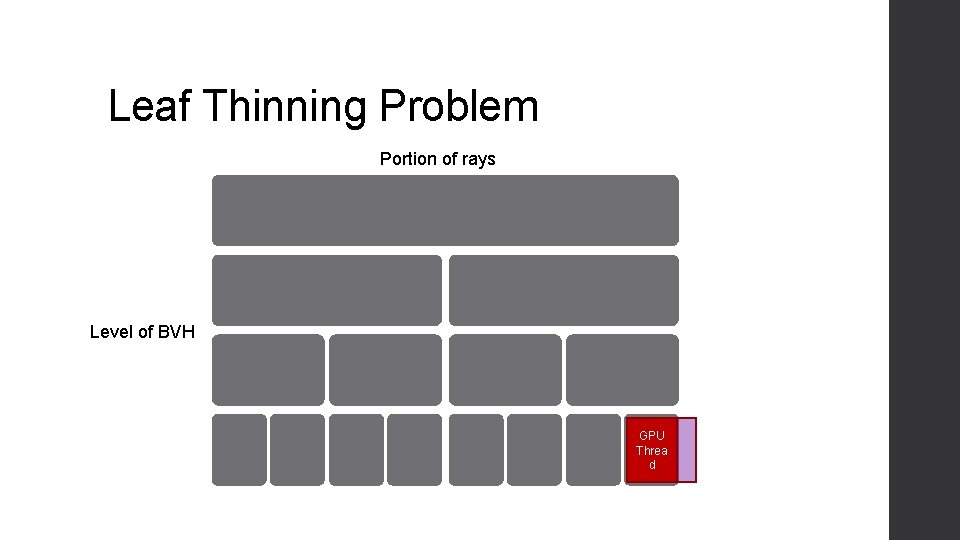

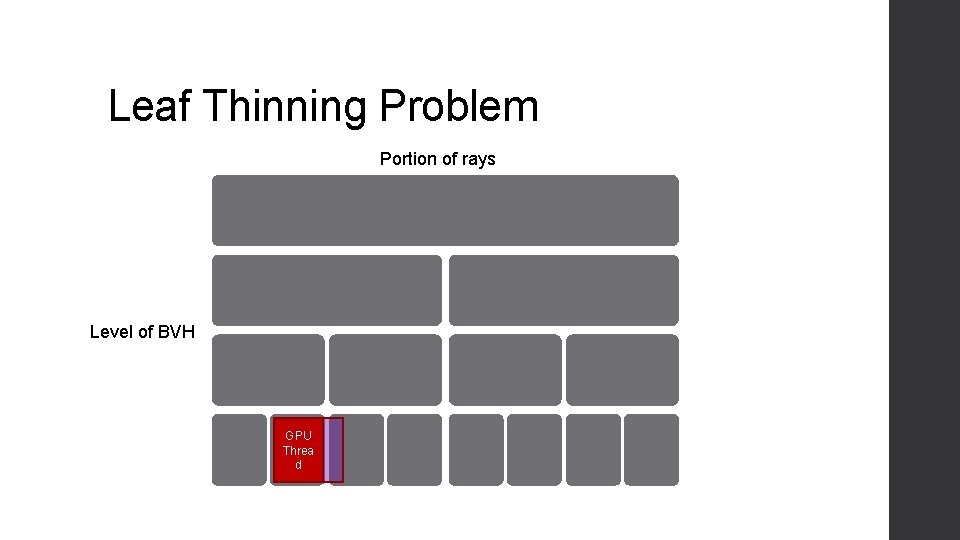

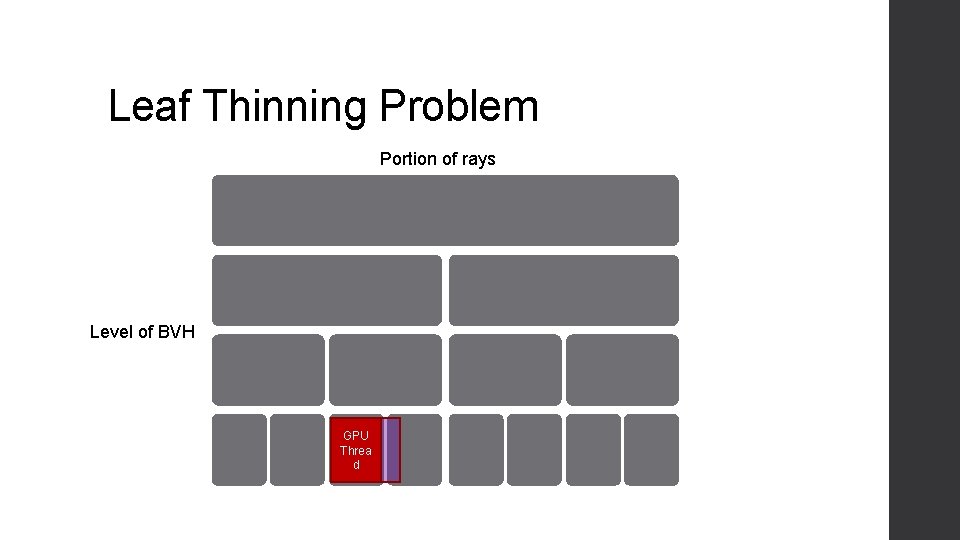

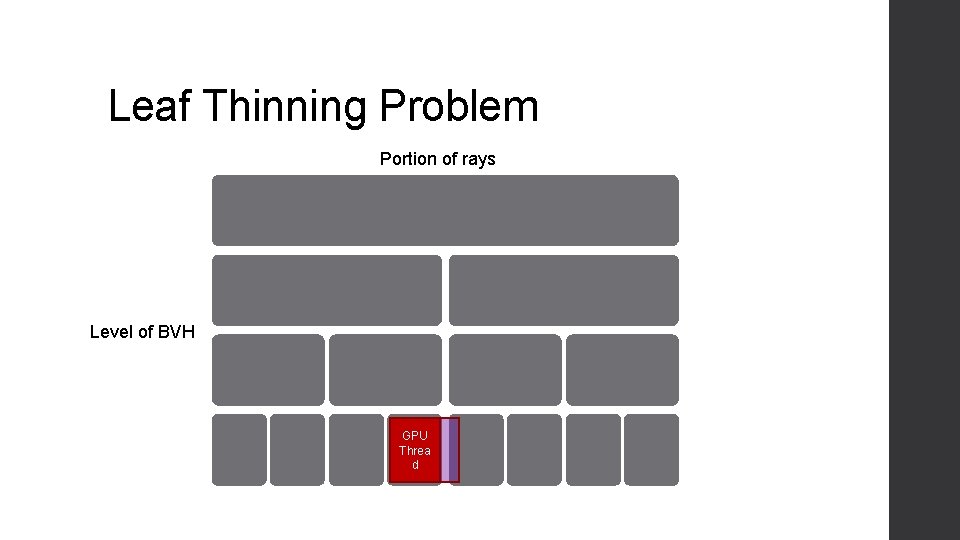

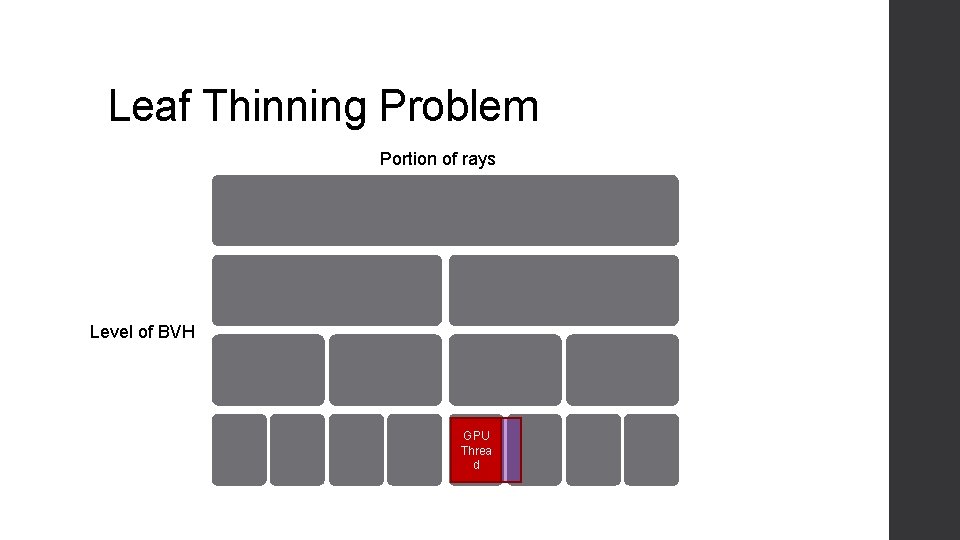

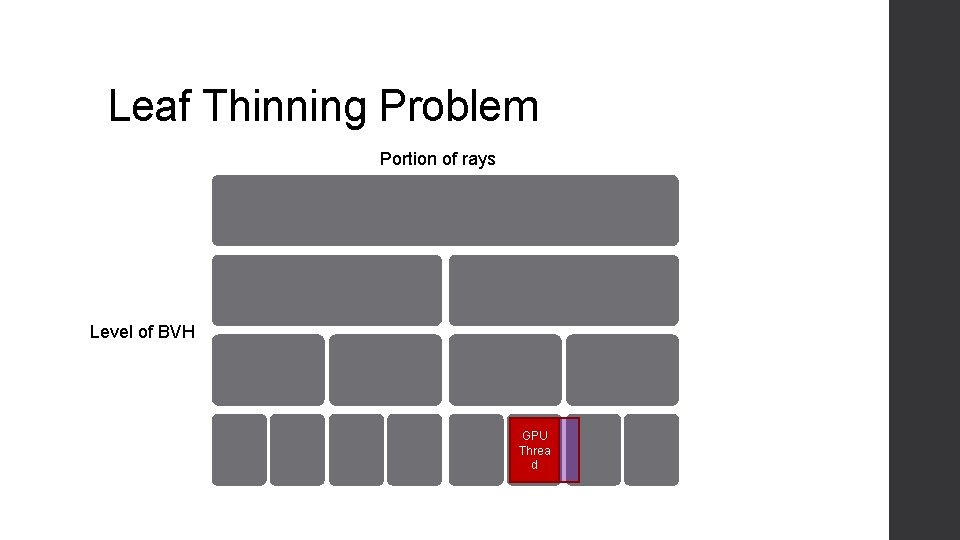

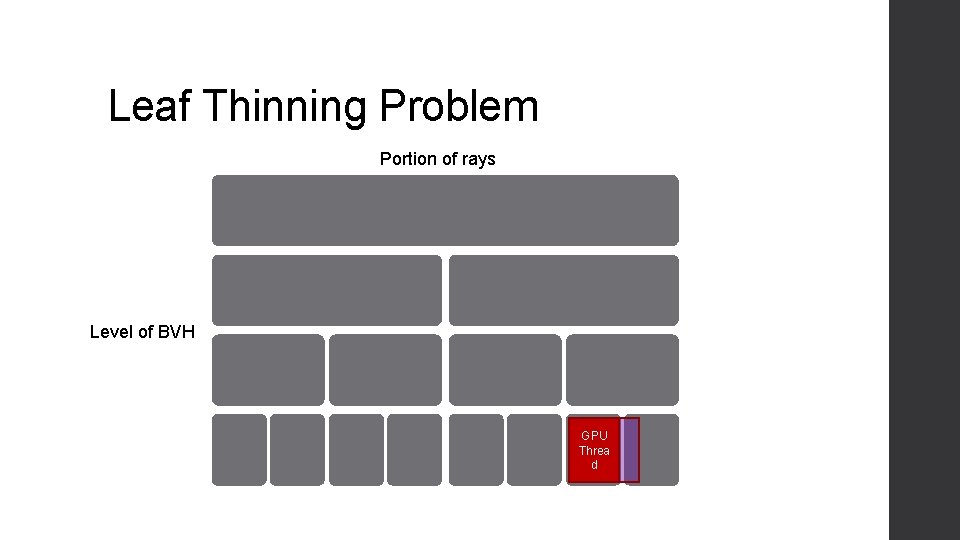

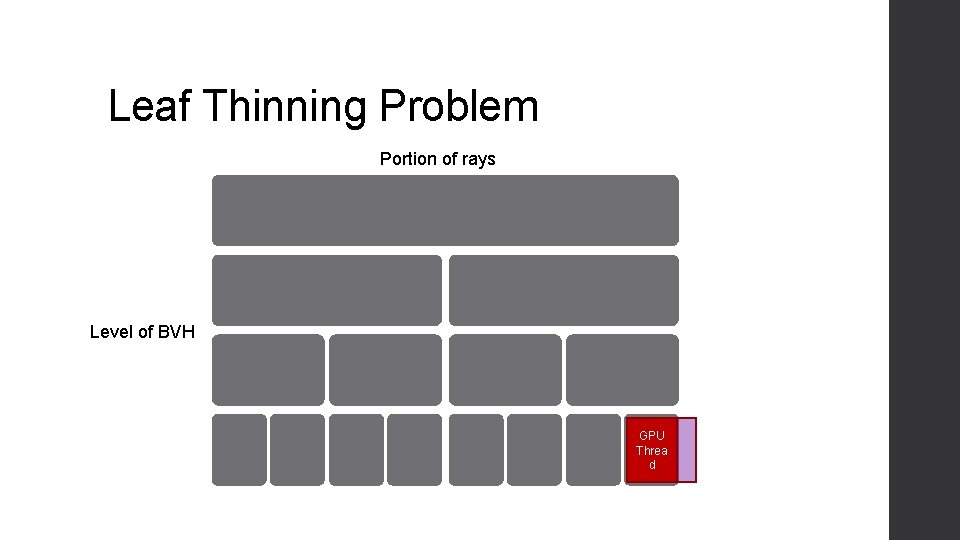

Leaf Thinning Problem

[Figure (animated over several slides): portion of rays vs. level of BVH, showing the GPU threads assigned at each level]

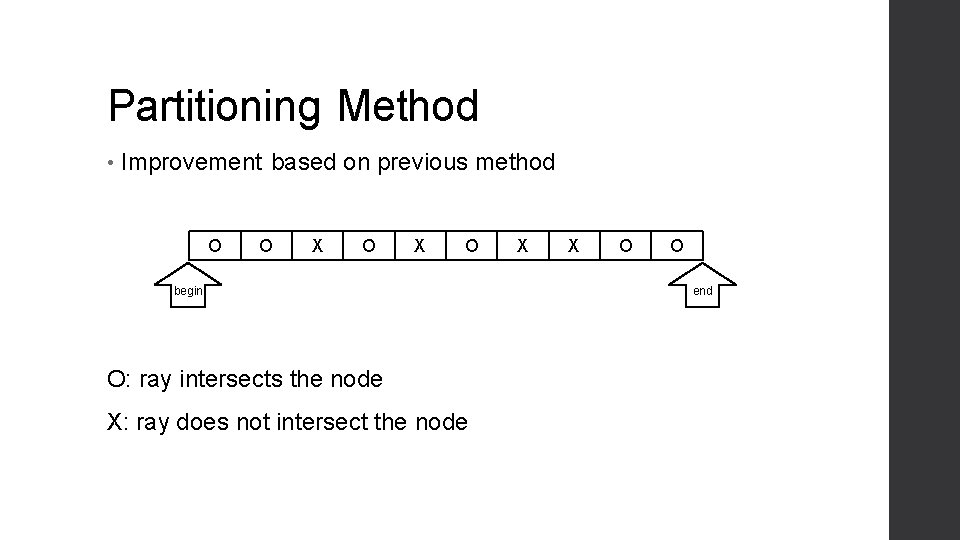

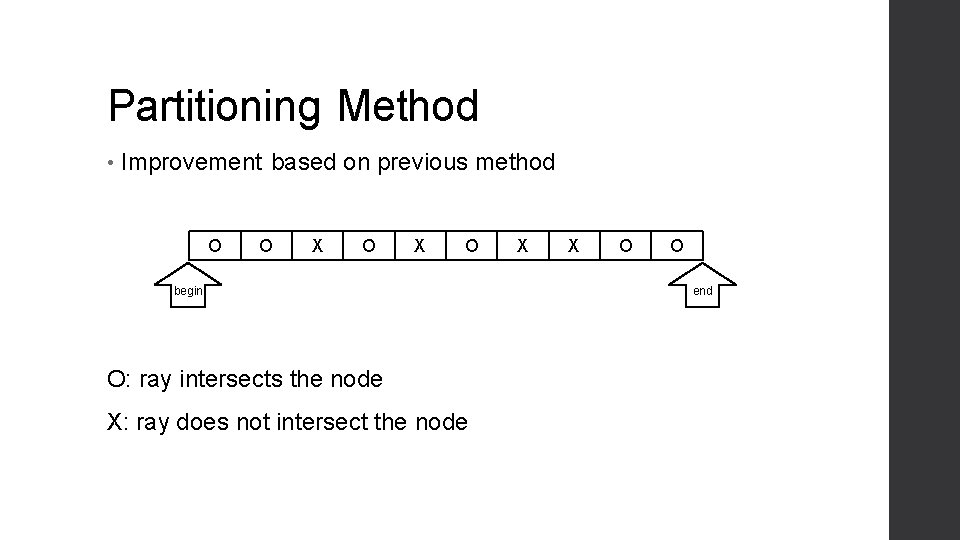

Partitioning Method
- Improvement based on the previous method
- Notation: O = ray intersects the node, X = ray does not intersect the node

[Figure: ray list O O X O … X X O O between the begin and end pointers]

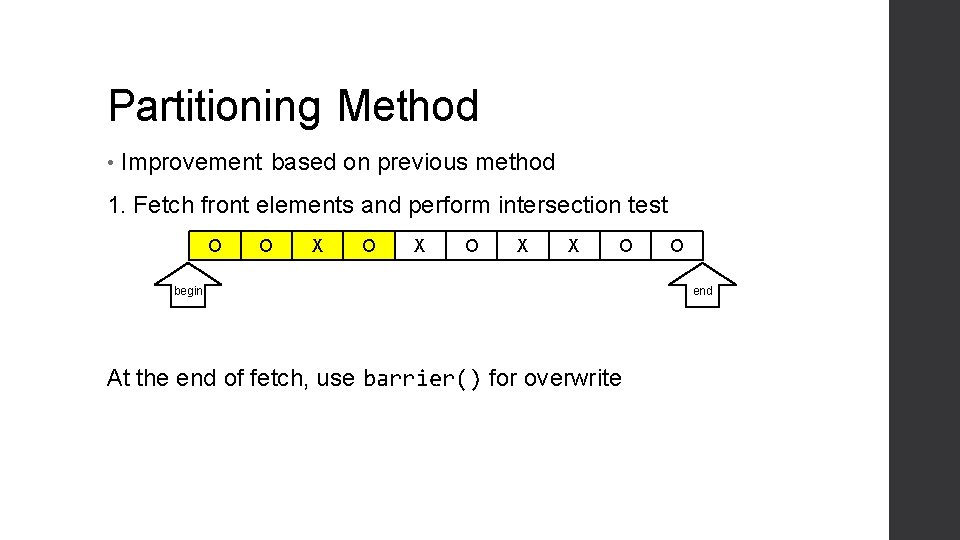

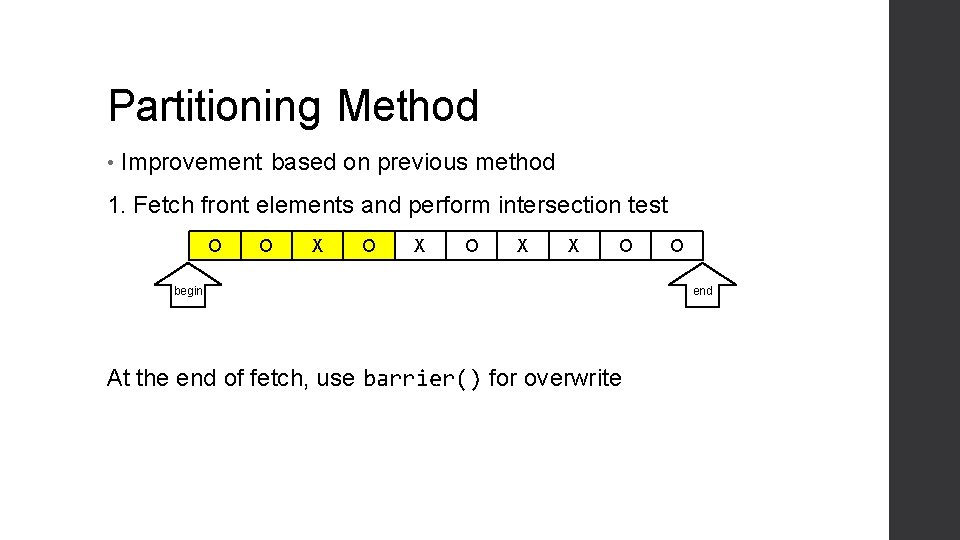

Partitioning Method
- Improvement based on the previous method
  1. Fetch the front elements and perform the intersection test
     - At the end of the fetch, call barrier() before anything is overwritten

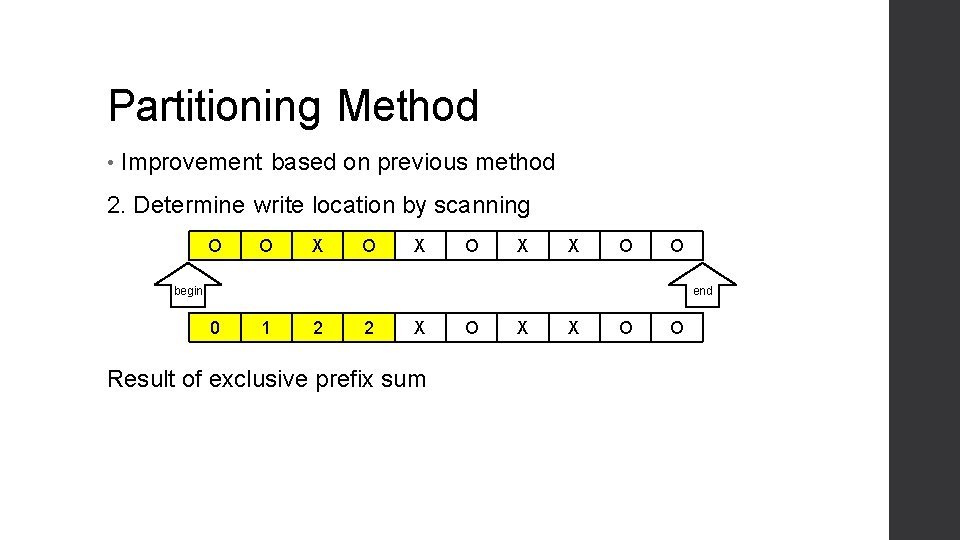

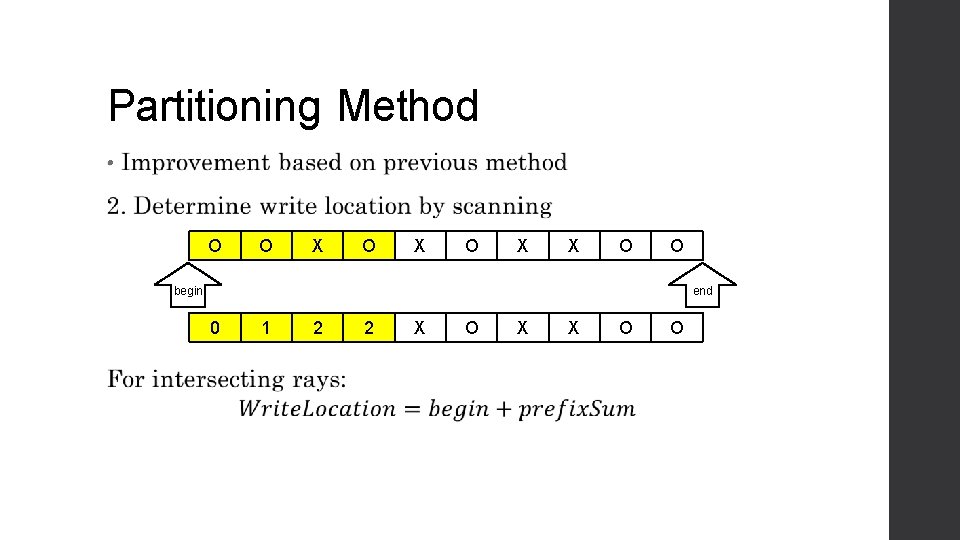

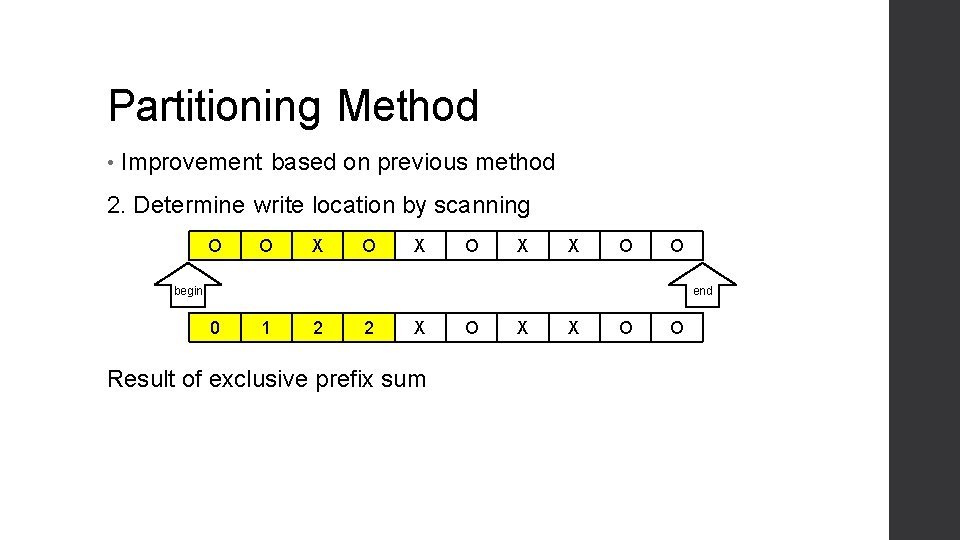

Partitioning Method
- Improvement based on the previous method
  2. Determine the write locations by scanning
     - Exclusive prefix sum over the front block O O X O: 0 1 2 2

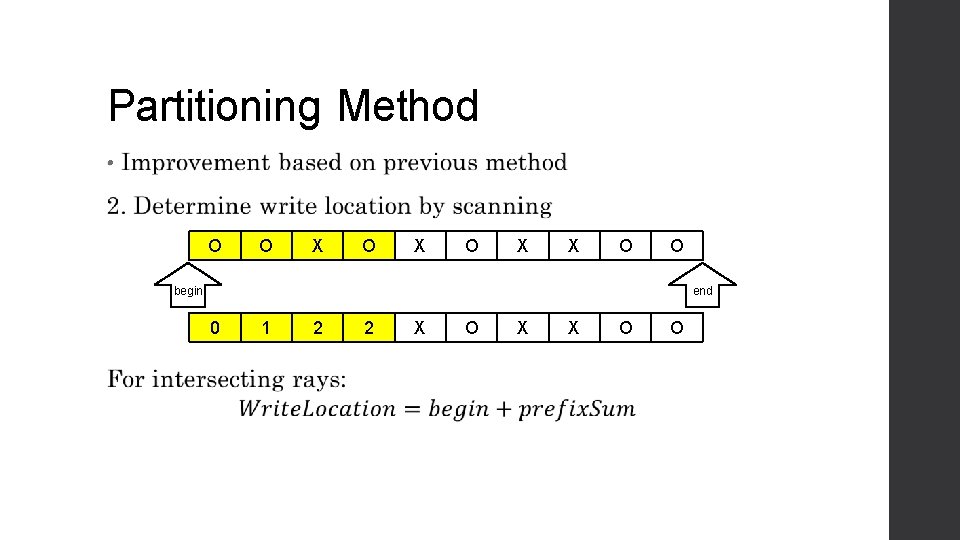

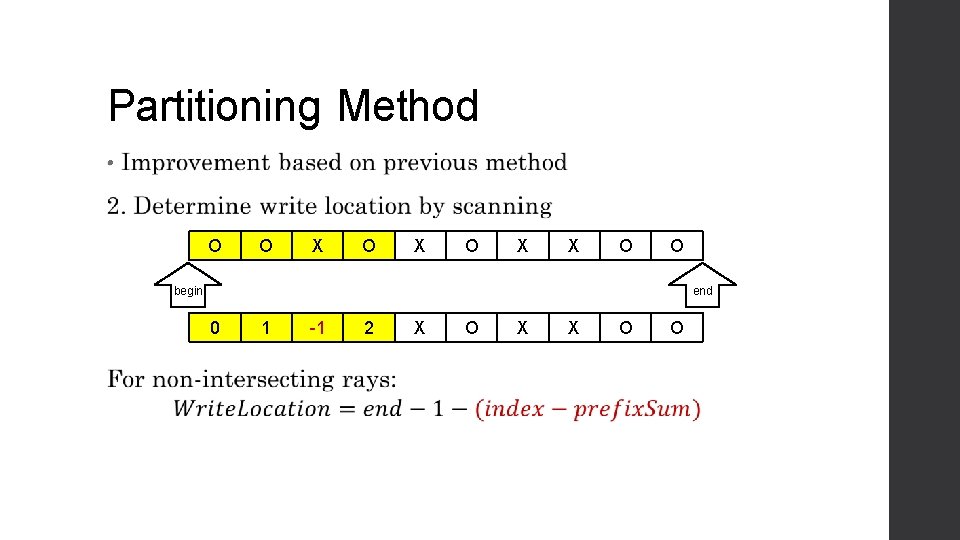

Partitioning Method (continued)

[Figure: the write offsets for the front block O O X O become 0 1 -1 2, with the X element marked -1]

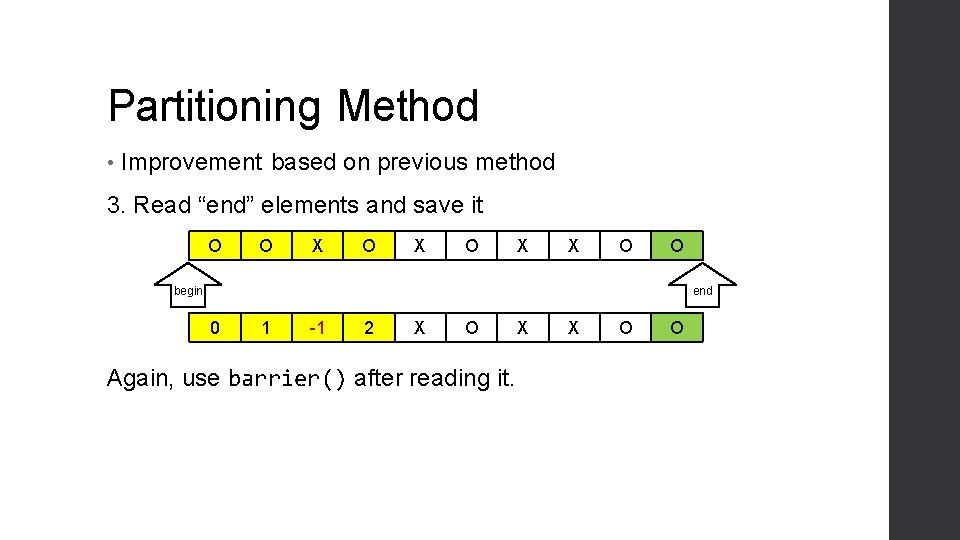

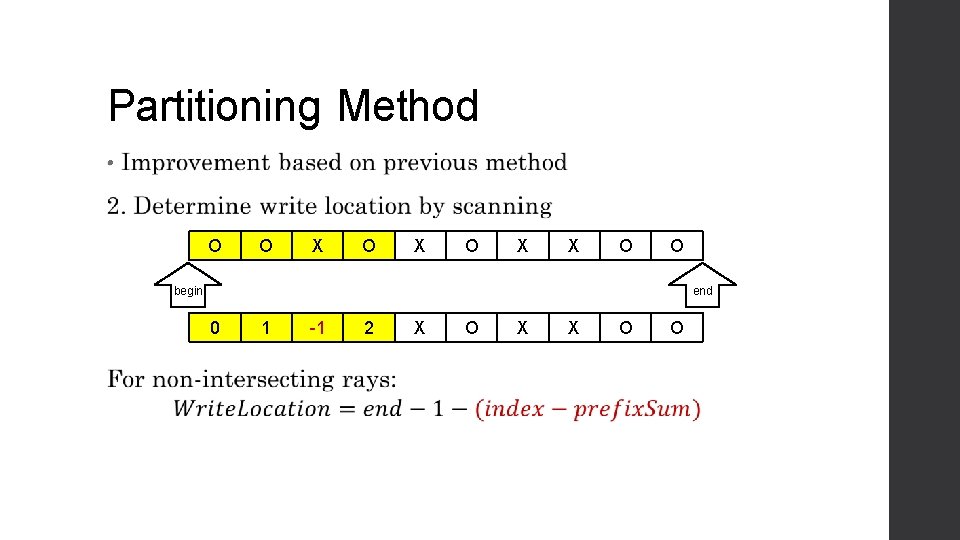

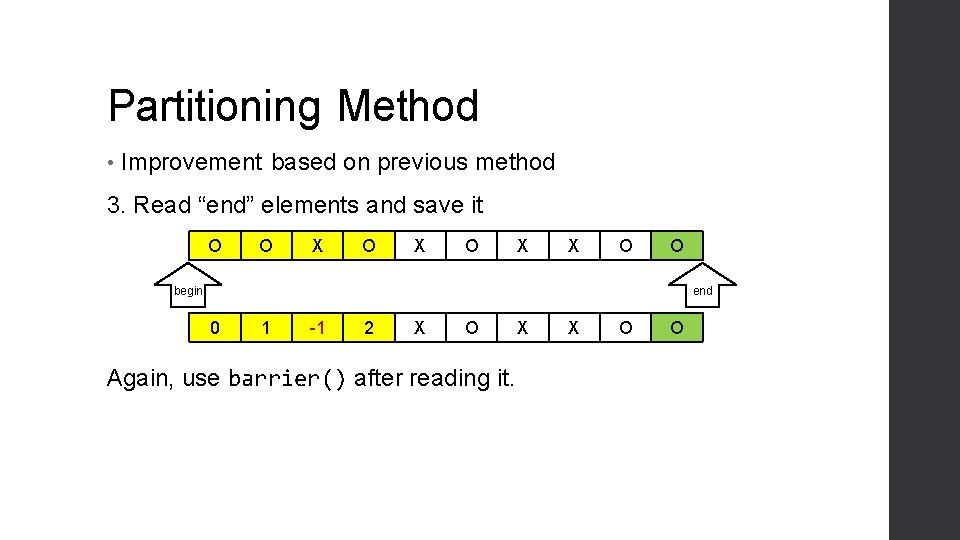

Partitioning Method
- Improvement based on the previous method
  3. Read the “end” elements and save them
     - Again, call barrier() after reading them

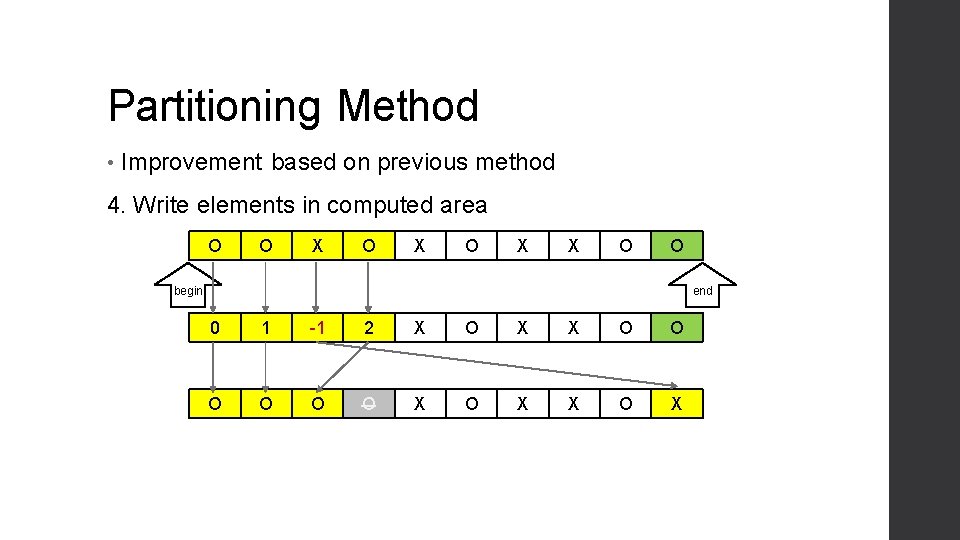

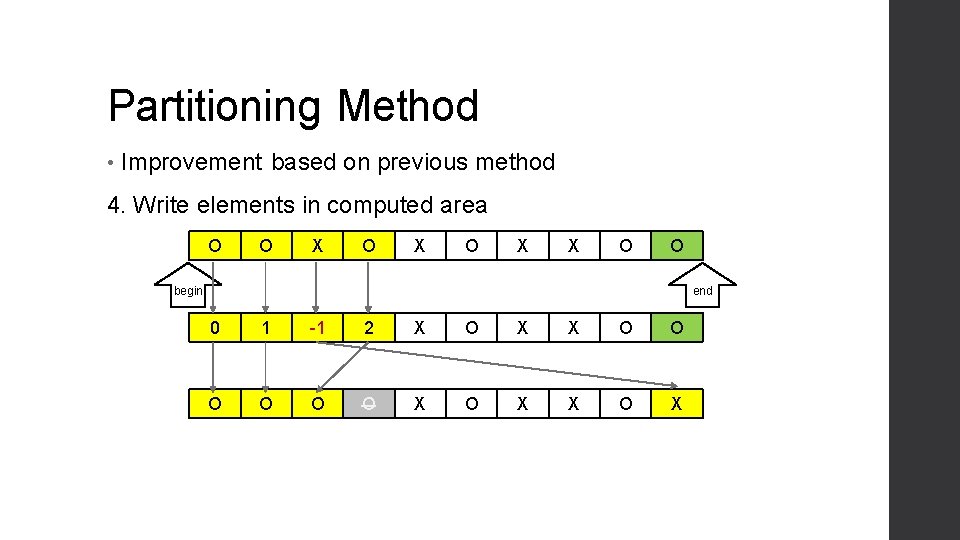

Partitioning Method
- Improvement based on the previous method
  4. Write the elements to the computed locations

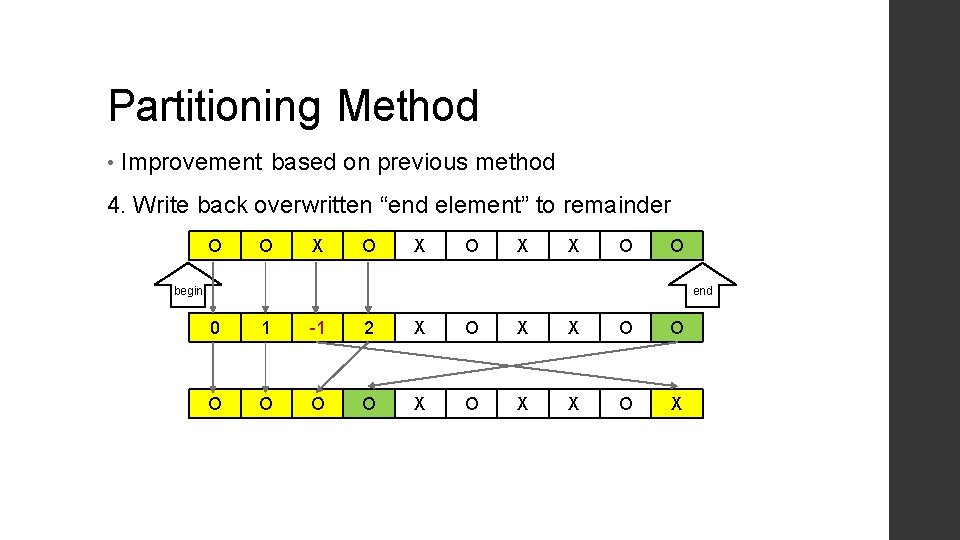

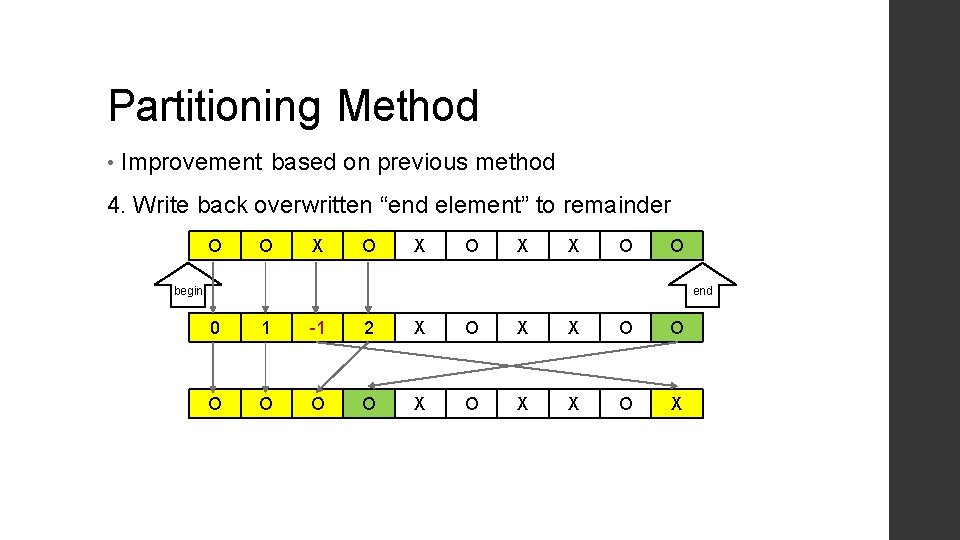

Partitioning Method
- Improvement based on the previous method
  4. Write the overwritten “end” elements back to the remainder

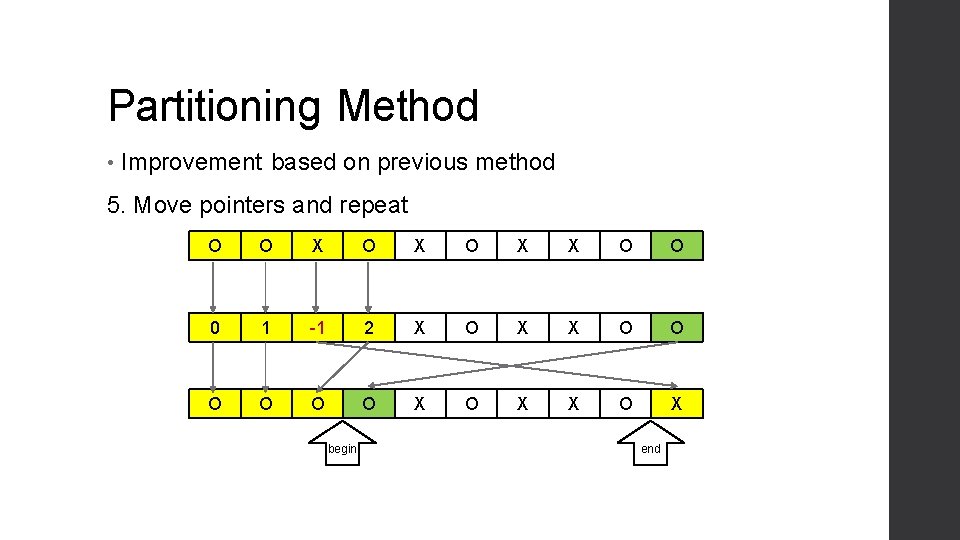

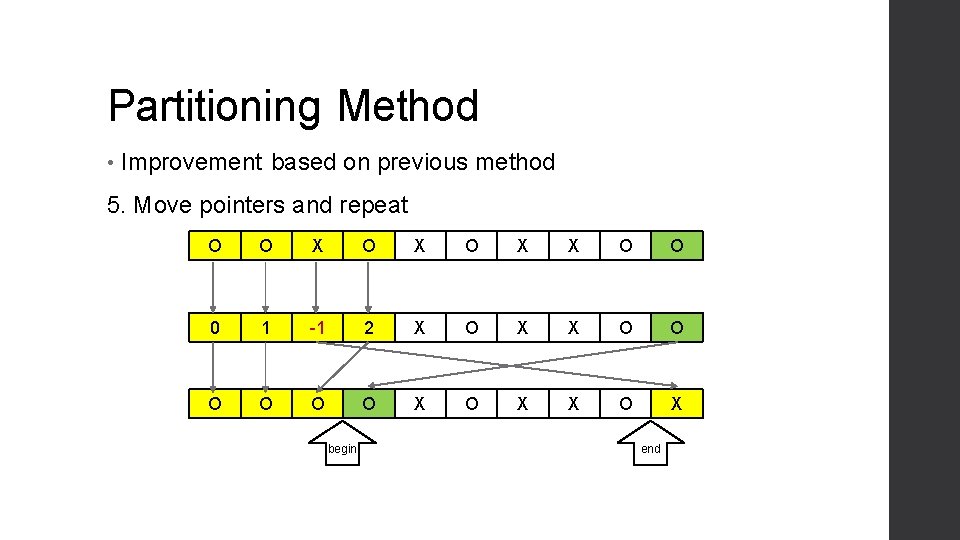

Partitioning Method
- Improvement based on the previous method
  5. Move the pointers and repeat
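The steps above can be simulated sequentially in C. This is our reading of the slides, not the author's kernel: the loop over `t` plays the role of the threads in a work-group, `BLOCK` is an assumed work-group width, the `HitTest` callback and `isEvenId` stand in for the ray/AABB test, and the final tail of fewer than `2 * BLOCK` elements is handled with a small buffer for simplicity.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define BLOCK 4u   /* stands in for the number of threads in a work-group */

/* Hypothetical predicate: nonzero if ray `id` intersects the current node. */
typedef int (*HitTest)(uint32_t id, const void *ctx);

/* Example predicate used in place of a real ray/AABB test. */
static int isEvenId(uint32_t id, const void *ctx) { (void)ctx; return id % 2u == 0u; }

/* Moves every id for which hit() holds to the front of a[0 .. n-1] and returns
   their count; order within each side is not preserved. */
static size_t blockPartition(uint32_t *a, size_t n, HitTest hit, const void *ctx)
{
    size_t begin = 0, end = n;
    /* With at least 2 * BLOCK unprocessed elements, the front block and the
       "end" region written by the misses can never overlap. */
    while (end - begin >= 2 * BLOCK) {
        uint32_t front[BLOCK], saved[BLOCK];
        size_t flag[BLOCK], offset[BLOCK], hits = 0;
        /* 1. Fetch the front block and test each element (one per thread). */
        for (size_t t = 0; t < BLOCK; ++t) {
            front[t] = a[begin + t];
            flag[t] = hit(front[t], ctx) ? 1u : 0u;
        }
        /* 2. Exclusive prefix sum of the hit flags gives each hit its slot. */
        for (size_t t = 0; t < BLOCK; ++t) { offset[t] = hits; hits += flag[t]; }
        size_t misses = BLOCK - hits;
        /* 3. Save the unprocessed "end" elements that the misses will overwrite. */
        for (size_t t = 0; t < misses; ++t) saved[t] = a[end - misses + t];
        /* 4. Hits go to the front; a miss goes to the back, after the
              (t - offset[t]) misses that precede it. */
        for (size_t t = 0; t < BLOCK; ++t) {
            if (flag[t]) a[begin + offset[t]] = front[t];
            else         a[end - misses + (t - offset[t])] = front[t];
        }
        /* 4 (cont.). Write the saved elements into the rest of the front block. */
        for (size_t t = 0; t < misses; ++t) a[begin + hits + t] = saved[t];
        /* 5. Move the pointers and repeat. */
        begin += hits;
        end -= misses;
    }
    /* Tail of fewer than 2 * BLOCK elements: simplified with a small buffer. */
    uint32_t tail[2 * BLOCK];
    size_t r = end - begin, lo = 0, hi = r;
    assert(r < 2 * BLOCK);
    for (size_t t = 0; t < r; ++t) {
        uint32_t v = a[begin + t];
        if (hit(v, ctx)) tail[lo++] = v; else tail[--hi] = v;
    }
    for (size_t t = 0; t < r; ++t) a[begin + t] = tail[t];
    return begin + lo;
}
```

Each iteration shrinks the unprocessed range `[begin, end)` by exactly one block, and apart from the tail buffer only per-thread registers (`front`, `saved`) and the scan results are needed, which is in the spirit of the small shared-memory footprint claimed for the in-place algorithm.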