Internet traffic measurement: from packets to insight


- Slides: 44

Internet traffic measurement: from packets to insight

George Varghese (based on Cristi Estan’s work), University of California, San Diego, May 2011

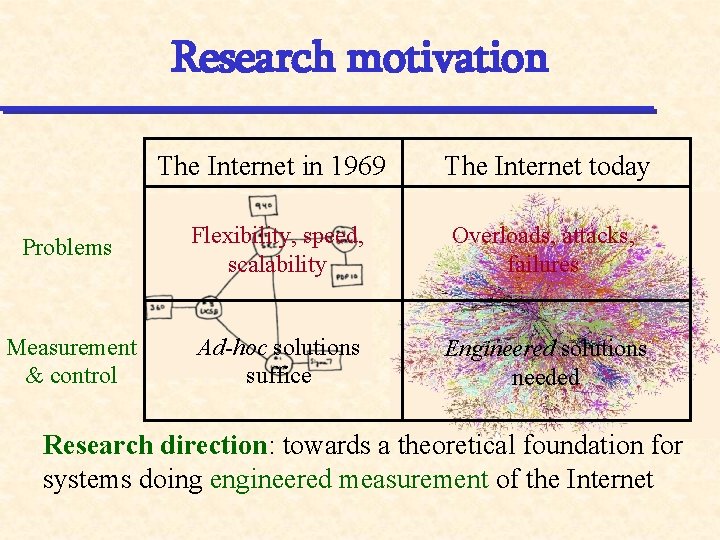

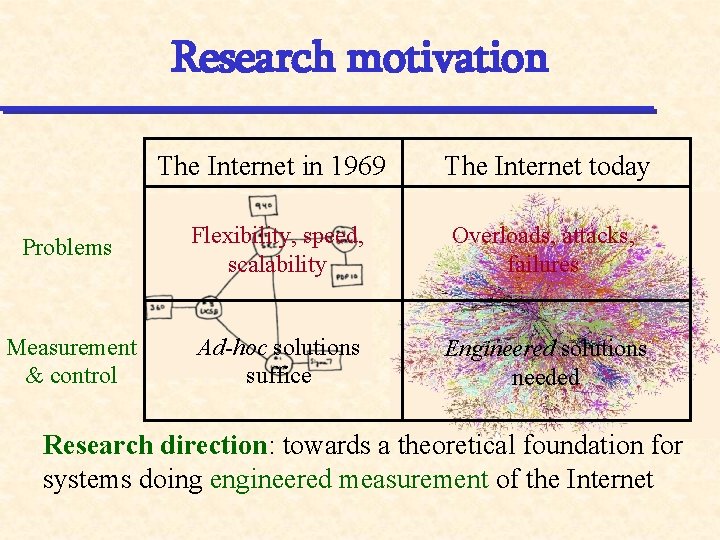

Research motivation

| | The Internet in 1969 | The Internet today |
|---|---|---|
| Problems | Flexibility, speed, scalability | Overloads, attacks, failures |
| Measurement & control | Ad-hoc solutions suffice | Engineered solutions needed |

Research direction: towards a theoretical foundation for systems doing engineered measurement of the Internet

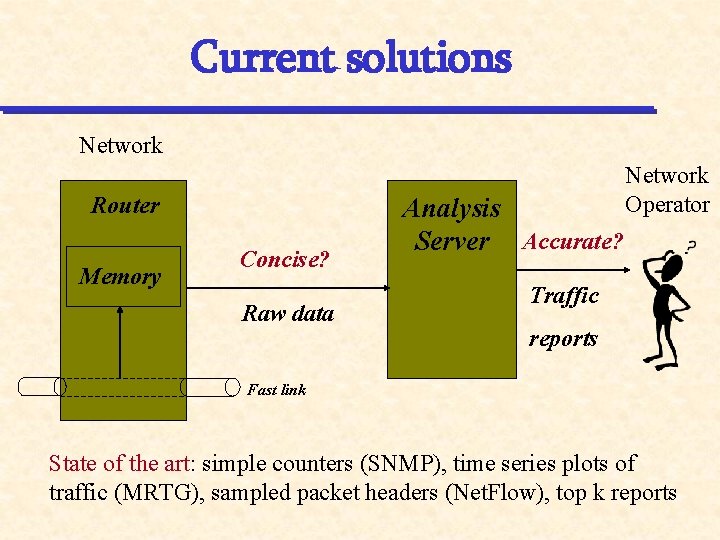

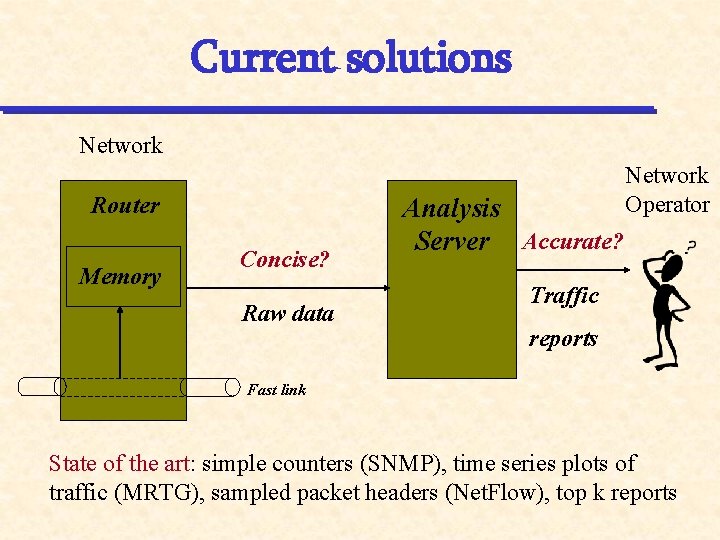

Current solutions

Diagram labels: network, router, fast link, memory, raw data, analysis server, traffic reports, network operator. Questions posed on the diagram: Concise? Accurate?

State of the art: simple counters (SNMP), time series plots of traffic (MRTG), sampled packet headers (NetFlow), top-k reports

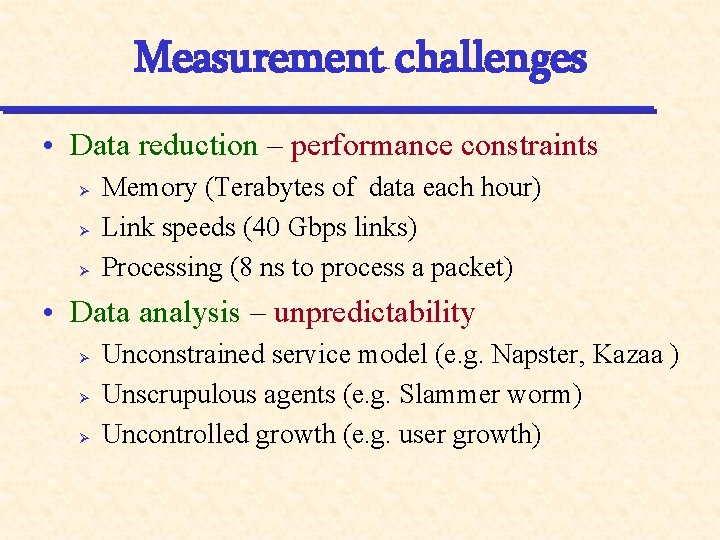

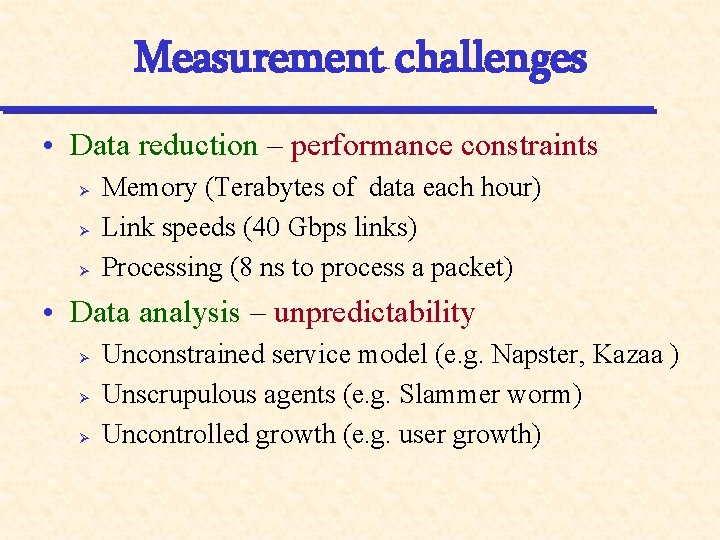

Measurement challenges

- Data reduction: performance constraints
  - Memory (terabytes of data each hour)
  - Link speeds (40 Gbps links)
  - Processing (8 ns to process a packet)
- Data analysis: unpredictability
  - Unconstrained service model (e.g. Napster, Kazaa)
  - Unscrupulous agents (e.g. Slammer worm)
  - Uncontrolled growth (e.g. user growth)

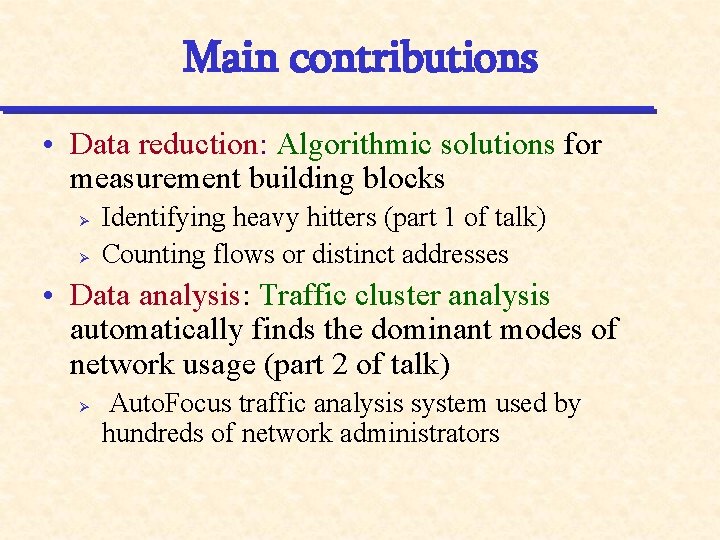

Main contributions

- Data reduction: algorithmic solutions for measurement building blocks
  - Identifying heavy hitters (part 1 of talk)
  - Counting flows or distinct addresses
- Data analysis: traffic cluster analysis automatically finds the dominant modes of network usage (part 2 of talk)
  - AutoFocus traffic analysis system used by hundreds of network administrators

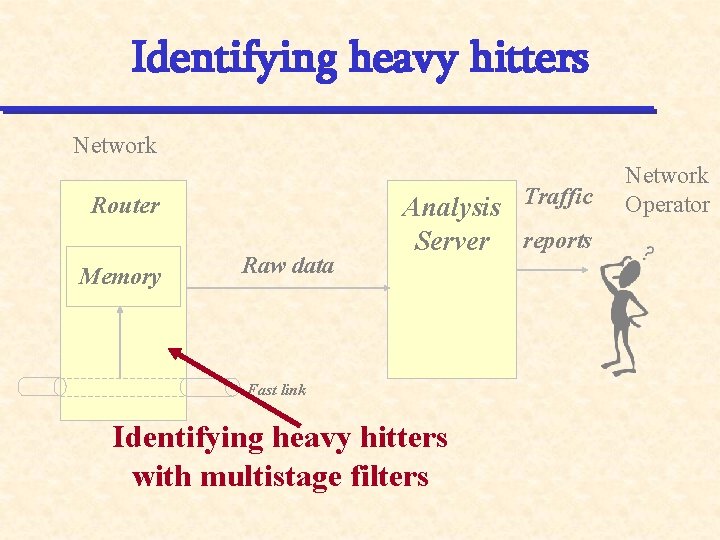

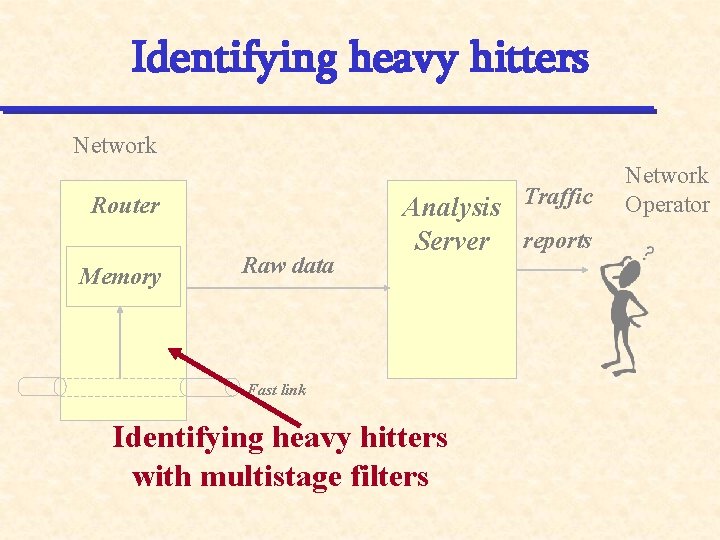

Identifying heavy hitters

Diagram labels: network, router, fast link, memory, raw data, analysis server, traffic reports, network operator. Highlighted step: identifying heavy hitters with multistage filters.

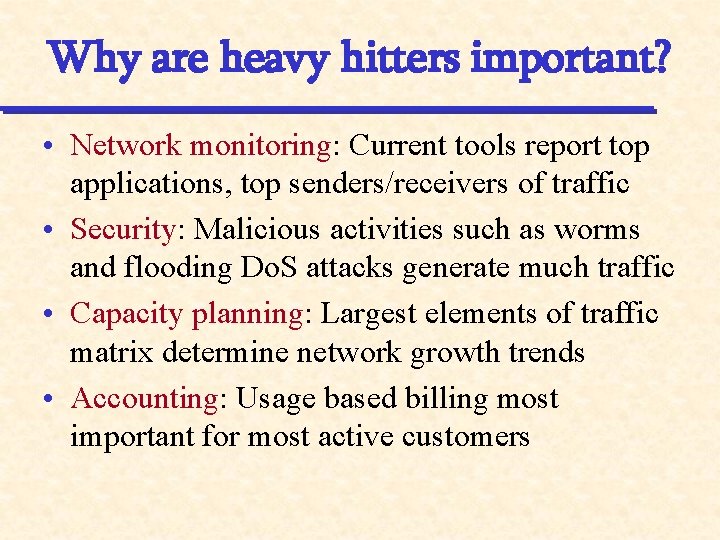

Why are heavy hitters important?

- Network monitoring: current tools report top applications, top senders/receivers of traffic
- Security: malicious activities such as worms and flooding DoS attacks generate much traffic
- Capacity planning: largest elements of traffic matrix determine network growth trends
- Accounting: usage based billing most important for most active customers

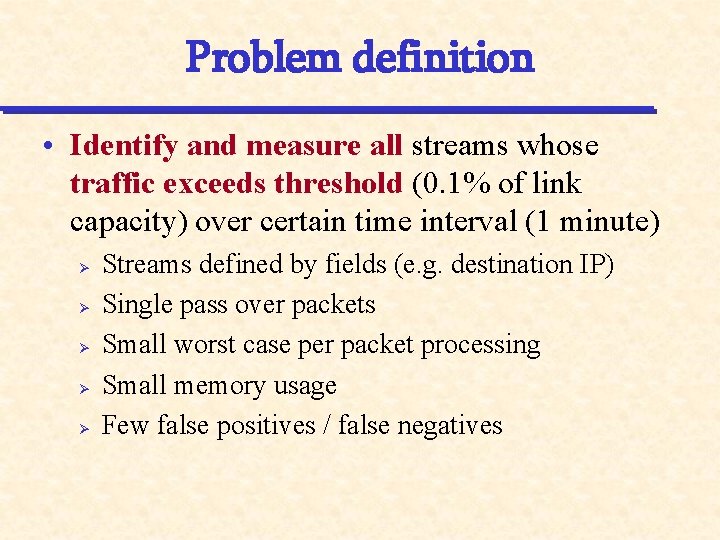

Problem definition

- Identify and measure all streams whose traffic exceeds a threshold (0.1% of link capacity) over a certain time interval (1 minute)
  - Streams defined by fields (e.g. destination IP)
  - Single pass over packets
  - Small worst case per packet processing
  - Small memory usage
  - Few false positives / false negatives

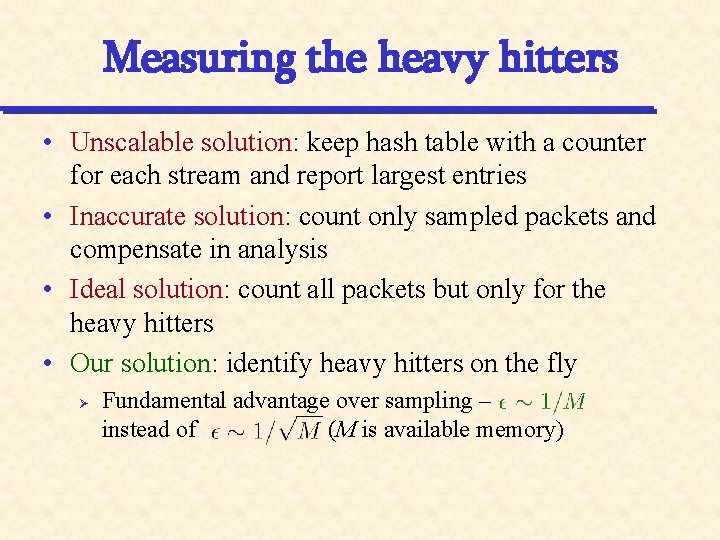

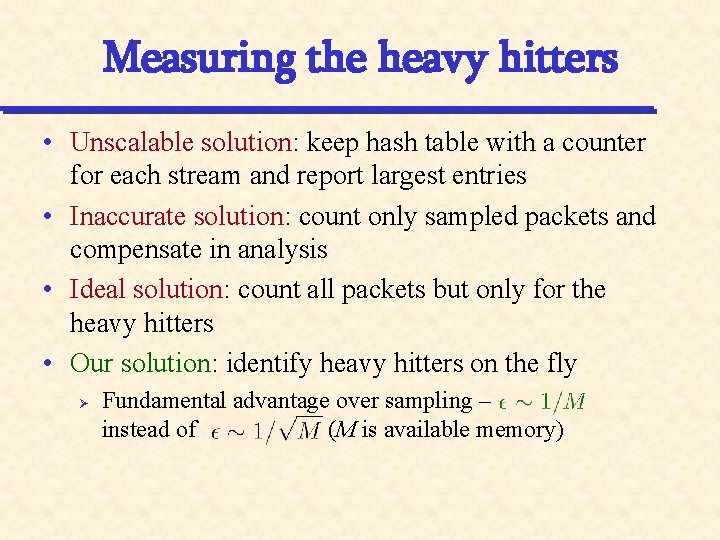

Measuring the heavy hitters

- Unscalable solution: keep hash table with a counter for each stream and report largest entries
- Inaccurate solution: count only sampled packets and compensate in analysis
- Ideal solution: count all packets but only for the heavy hitters
- Our solution: identify heavy hitters on the fly
  - Fundamental advantage over sampling: error proportional to 1/M instead of 1/√M (M is available memory)

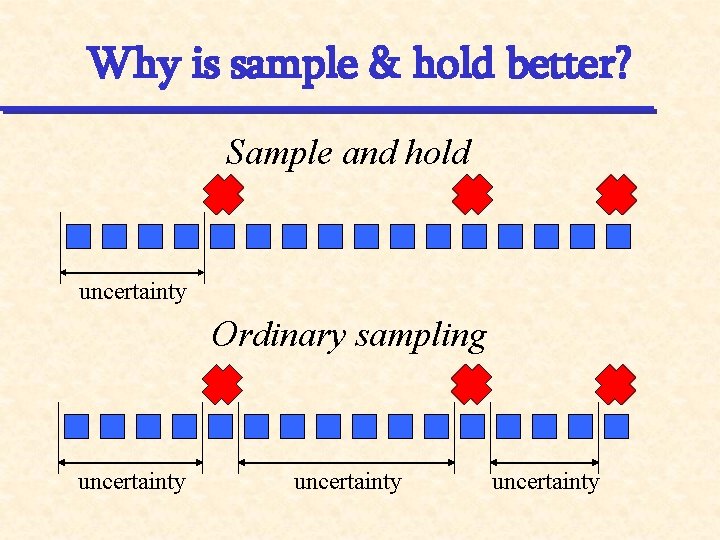

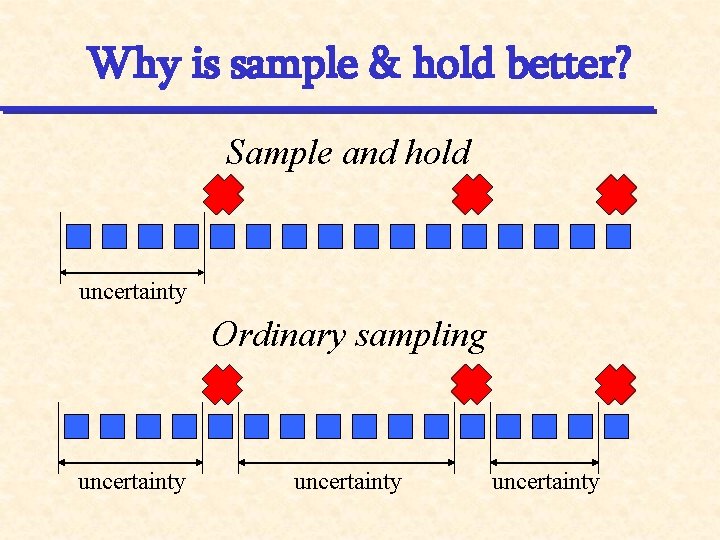

Why is sample & hold better?

Figure labels: sample and hold uncertainty, ordinary sampling uncertainty
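A minimal sketch of sample and hold may help here. The slides do not spell out the sampling rule, so the per-byte sampling probability, the function name and the fixed seed below are assumptions made for illustration: a packet from an unknown flow creates a table entry with some probability, and every later packet of that flow is counted exactly.

```python
import random


def sample_and_hold(packets, byte_sampling_prob, rng=None):
    """Return {flow_id: bytes counted} for an iterable of (flow_id, size) packets."""
    rng = rng or random.Random(0)  # fixed seed only to make the sketch repeatable
    flow_memory = {}
    for flow_id, size in packets:
        if flow_id in flow_memory:
            # "Hold": once a flow has an entry, all of its packets are counted.
            flow_memory[flow_id] += size
            continue
        # "Sample": assumed rule, each byte is picked with probability p,
        # so a packet of `size` bytes is picked with probability 1 - (1 - p)**size.
        p_packet = 1.0 - (1.0 - byte_sampling_prob) ** size
        if rng.random() < p_packet:
            flow_memory[flow_id] = size
    return flow_memory
```

Because counting is exact after the first sampled packet, the only uncertainty is the traffic a flow sent before it was sampled, so the counted value never exceeds the true value.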

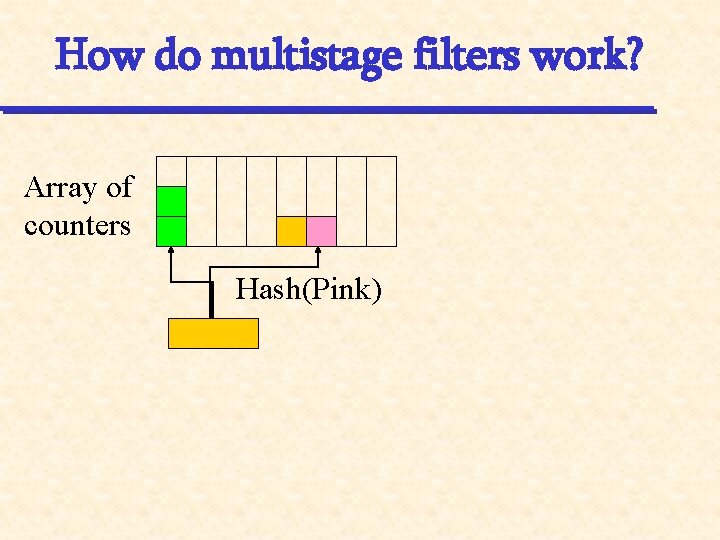

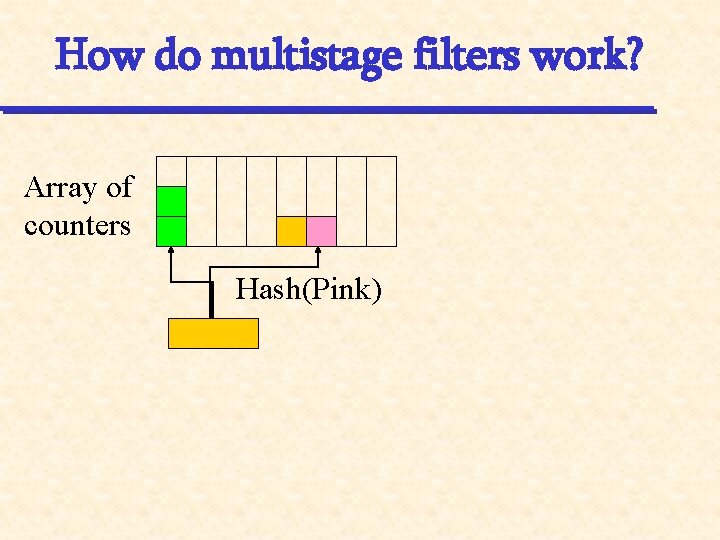

How do multistage filters work?

Figure labels: array of counters, Hash(Pink)

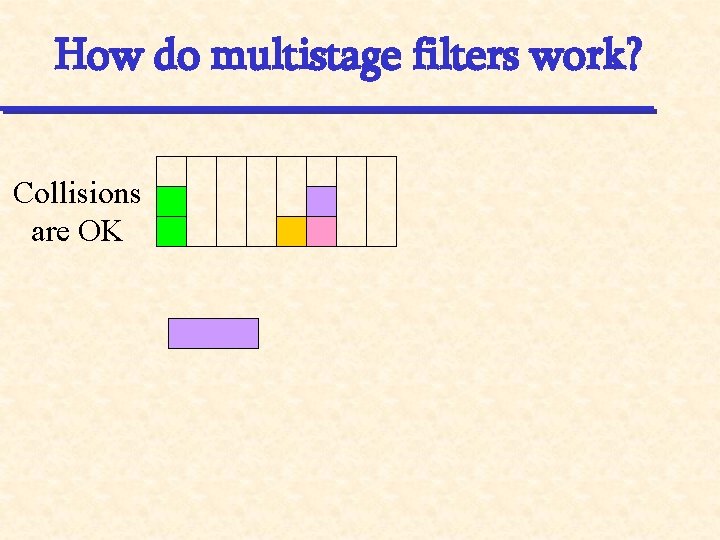

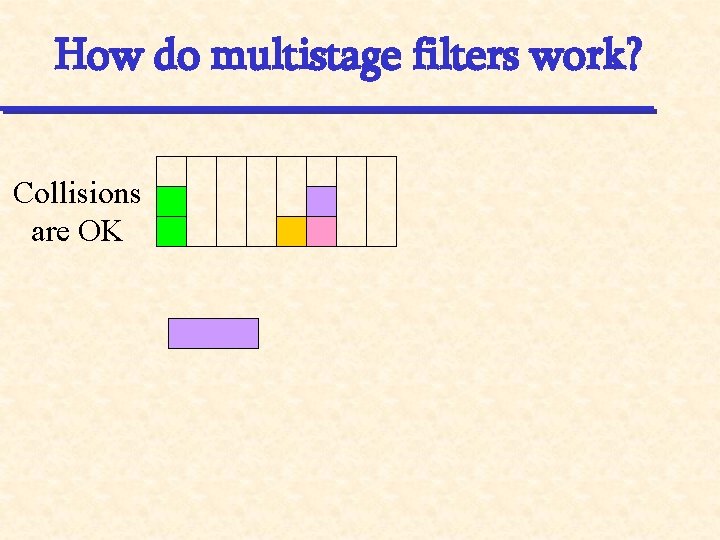

How do multistage filters work?

Figure note: collisions are OK

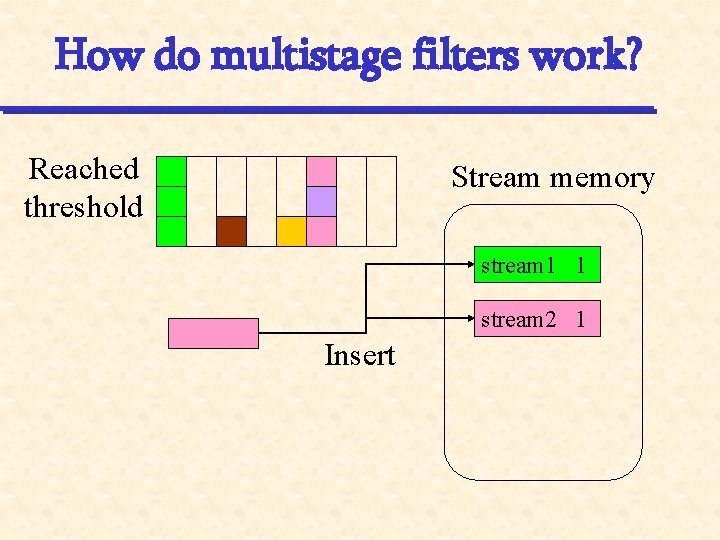

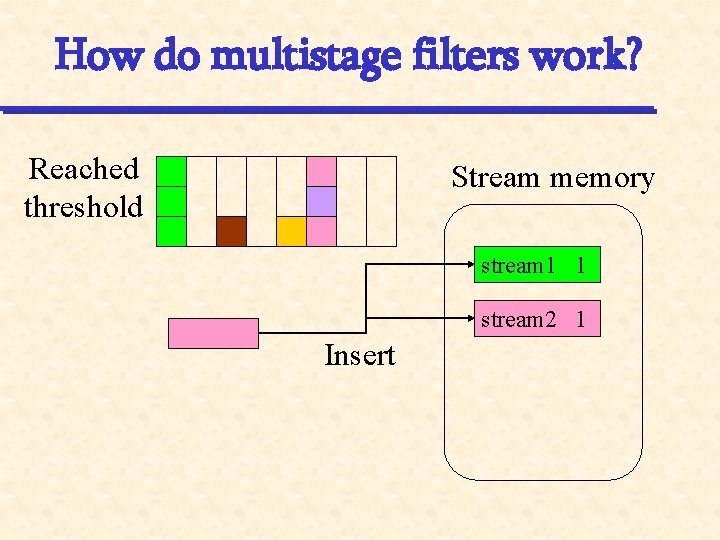

How do multistage filters work?

Figure labels: reached threshold; insert into stream memory (stream 1: 1, stream 2: 1)

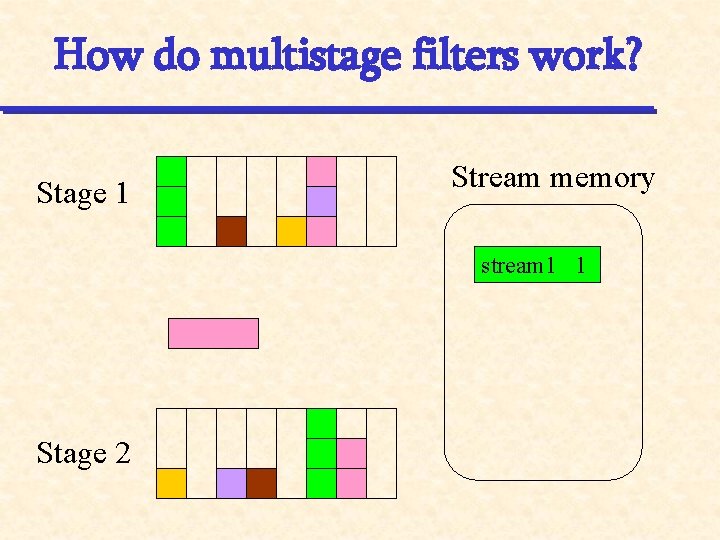

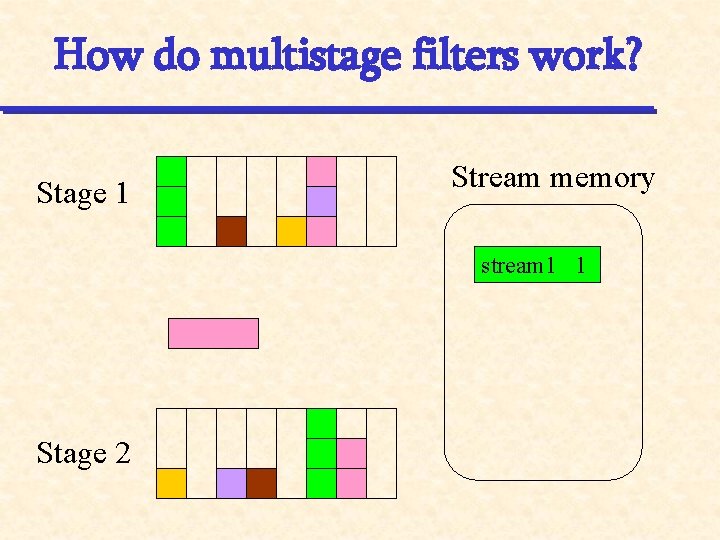

How do multistage filters work?

Figure labels: Stage 1, Stage 2; stream memory (stream 1: 1)
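The steps shown on these slides can be sketched roughly as follows. This is an illustration under assumptions, not the prototype's code: the class and method names are made up, the per-stage hash is a salted BLAKE2 digest chosen for convenience, and packets of streams already in stream memory bypass the counters.

```python
import hashlib


class MultistageFilter:
    """Parallel multistage filter: d stages of b counters plus a stream memory."""

    def __init__(self, num_stages, counters_per_stage, threshold):
        self.d = num_stages
        self.b = counters_per_stage
        self.threshold = threshold
        self.stages = [[0] * counters_per_stage for _ in range(num_stages)]
        self.flow_memory = {}  # streams that passed the filter -> bytes counted

    def _bucket(self, stage, flow_id):
        # Assumed hash: one independent function per stage via a per-stage salt.
        digest = hashlib.blake2b(str(flow_id).encode(), digest_size=8,
                                 salt=stage.to_bytes(8, "little")).digest()
        return int.from_bytes(digest, "little") % self.b

    def process(self, flow_id, size):
        if flow_id in self.flow_memory:
            self.flow_memory[flow_id] += size  # already tracked exactly
            return
        buckets = [self._bucket(s, flow_id) for s in range(self.d)]
        for s, idx in enumerate(buckets):
            self.stages[s][idx] += size  # collisions are OK
        # Insert only if the stream's counter reached the threshold in every stage.
        if all(self.stages[s][idx] >= self.threshold
               for s, idx in enumerate(buckets)):
            self.flow_memory[flow_id] = size
```

A stream that sends at least the threshold always pushes all of its own counters over the threshold, so there are no false negatives; a small stream passes only if it shares heavy buckets in every stage.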

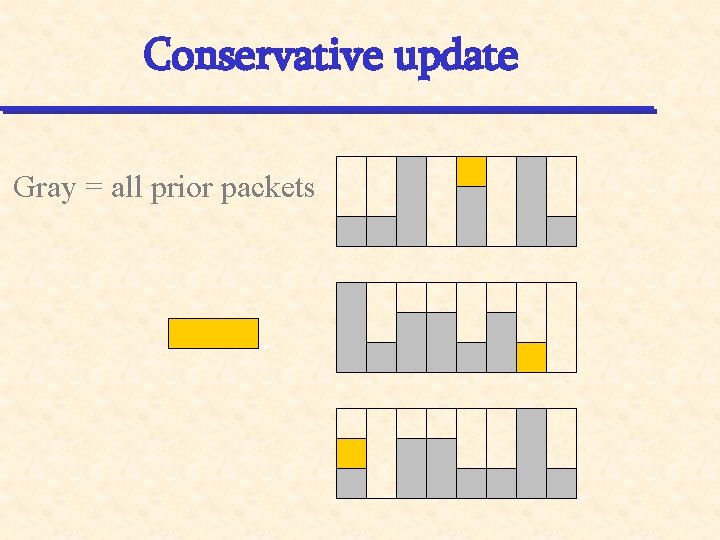

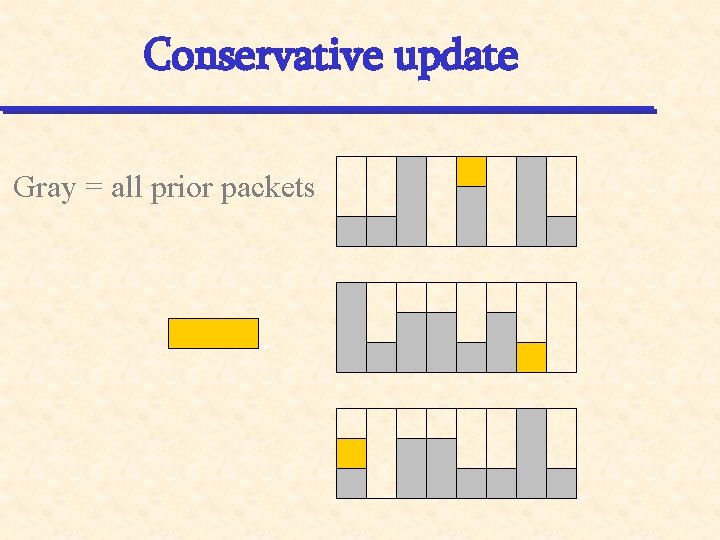

Conservative update

Figure legend: gray = all prior packets

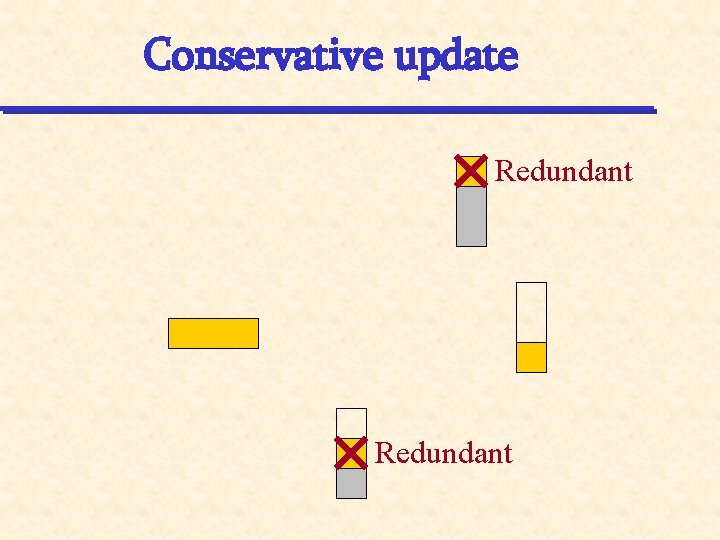

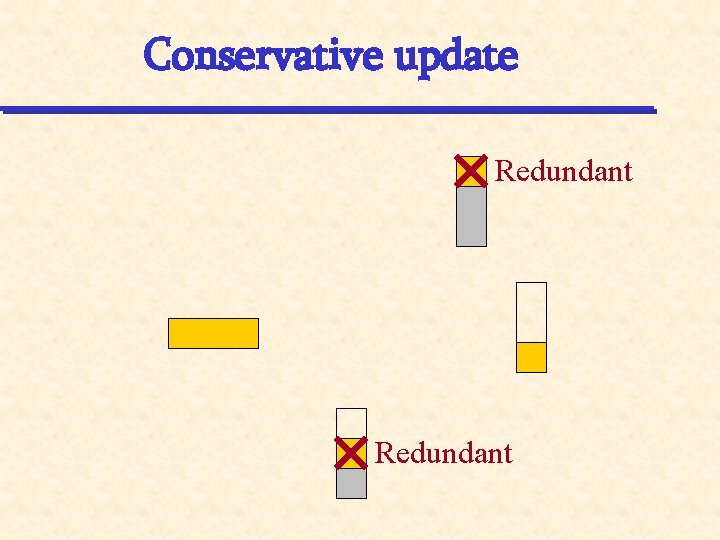

Conservative update

Figure label: redundant

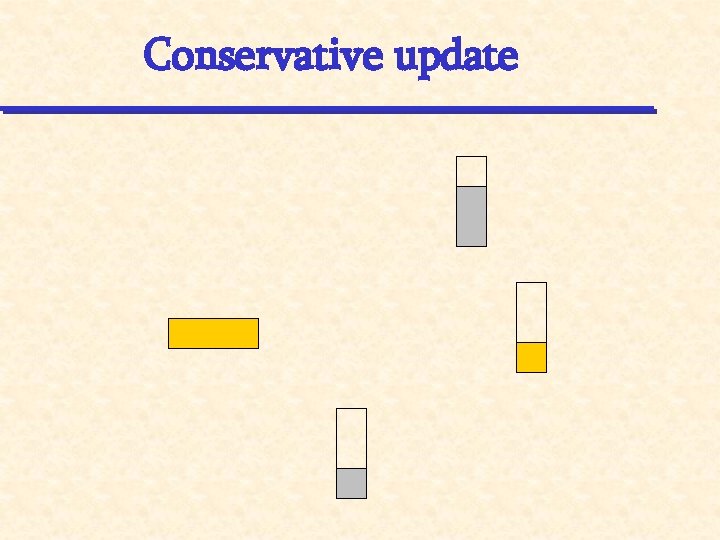

Conservative update
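As a rough illustration of conservative update (the function name is hypothetical, and the input is assumed to be the list of counters a stream hashes to, one per stage): only the smallest counter needs the full increment, and the others are raised just enough to stay at or above it, since anything more is redundant.

```python
def conservative_update(counters, size):
    """Return the new values of a stream's counters after a packet of `size` bytes."""
    # The smallest counter is the tightest upper bound on the stream's traffic,
    # so it receives the normal increment.
    new_min = min(counters) + size
    # Larger counters only need to be at least as large as the new minimum.
    return [max(c, new_min) for c in counters]
```

For counters `[3, 7, 12]` and a 2-byte packet, a plain update gives `[5, 9, 14]` while the conservative update gives `[5, 7, 12]`, which leaves less room for small streams to pass by collision.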

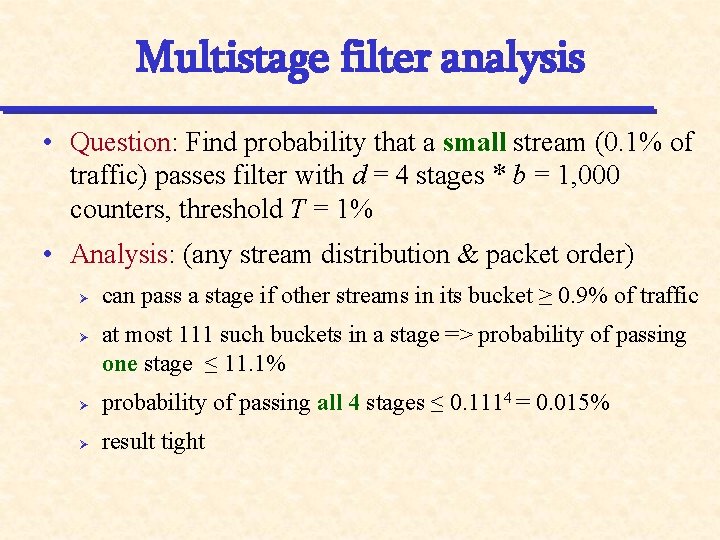

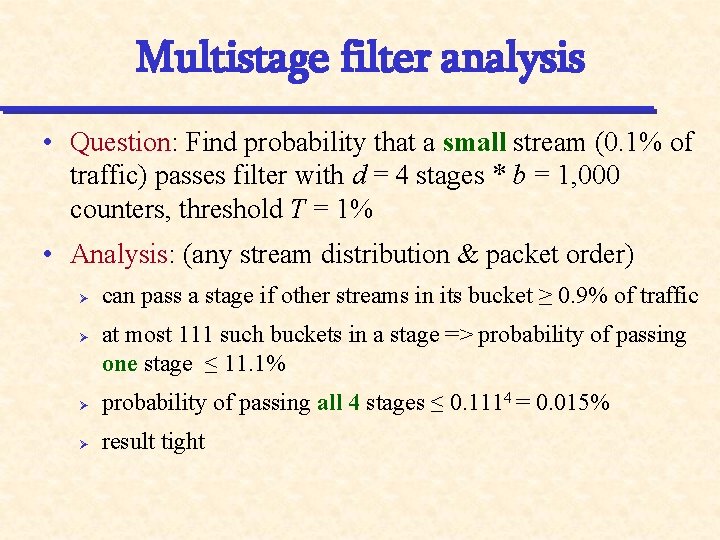

Multistage filter analysis

- Question: find the probability that a small stream (0.1% of traffic) passes a filter with d = 4 stages of b = 1,000 counters each, threshold T = 1%
- Analysis (any stream distribution & packet order):
  - the stream can pass a stage only if the other streams in its bucket carry ≥ 0.9% of traffic
  - at most 111 such buckets in a stage => probability of passing one stage ≤ 11.1%
  - probability of passing all 4 stages ≤ 0.111^4 ≈ 0.015%
  - result tight
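The arithmetic of this bound can be reproduced in a few lines. The function and parameter names are illustrative, and shares are expressed as fractions of total traffic:

```python
def pass_probability_bound(stream_share, threshold_share, buckets, stages):
    """Worst-case probability that a small stream passes all stages."""
    # Traffic that other streams must add to the small stream's bucket.
    needed = threshold_share - stream_share
    # At most this many buckets per stage can hold that much other traffic.
    max_heavy_buckets = int(1.0 / needed)
    per_stage = min(1.0, max_heavy_buckets / buckets)
    # Stages use independent hash functions, so the per-stage bounds multiply.
    return per_stage ** stages


bound = pass_probability_bound(0.001, 0.01, buckets=1000, stages=4)
print(f"{bound:.4%}")
```

With the slide's numbers, `max_heavy_buckets` is 111 and the per-stage bound is 0.111, so the result is 0.111^4.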

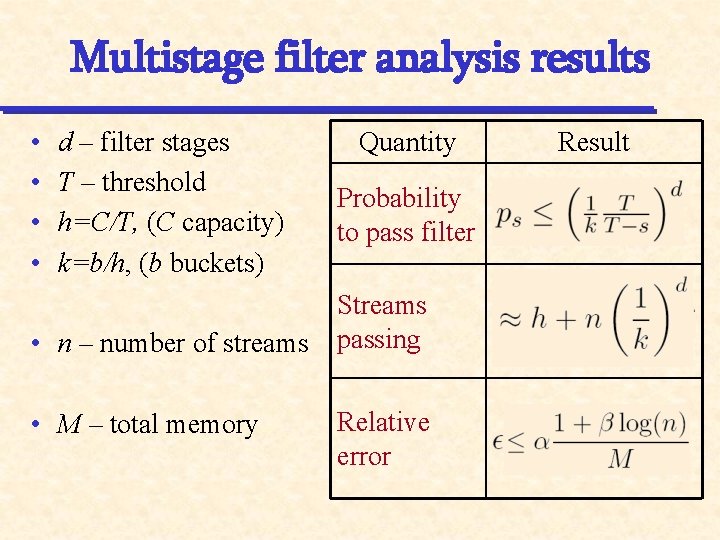

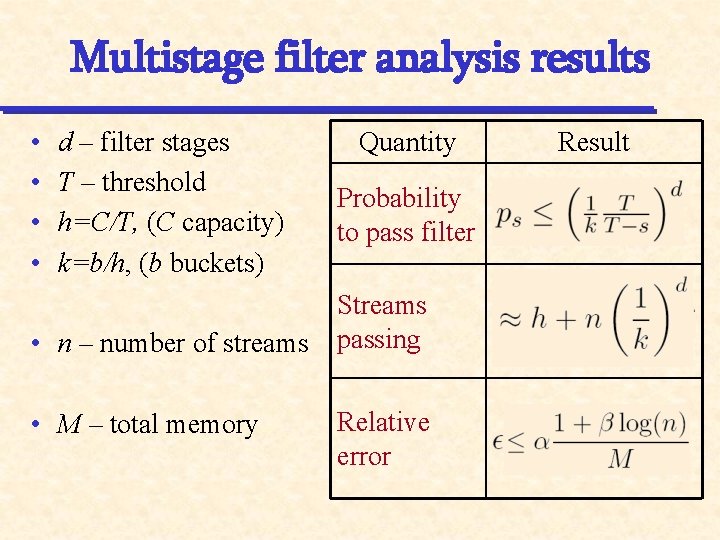

Multistage filter analysis results

- d: filter stages
- T: threshold
- h = C/T (C: capacity)
- k = b/h (b: buckets)
- n: number of streams
- M: total memory

Table columns: Quantity, Result. Quantities listed: probability to pass filter, streams passing, relative error.

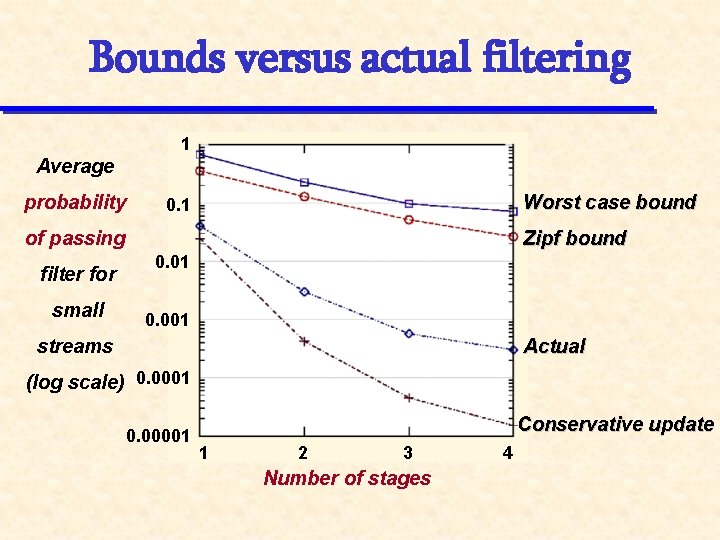

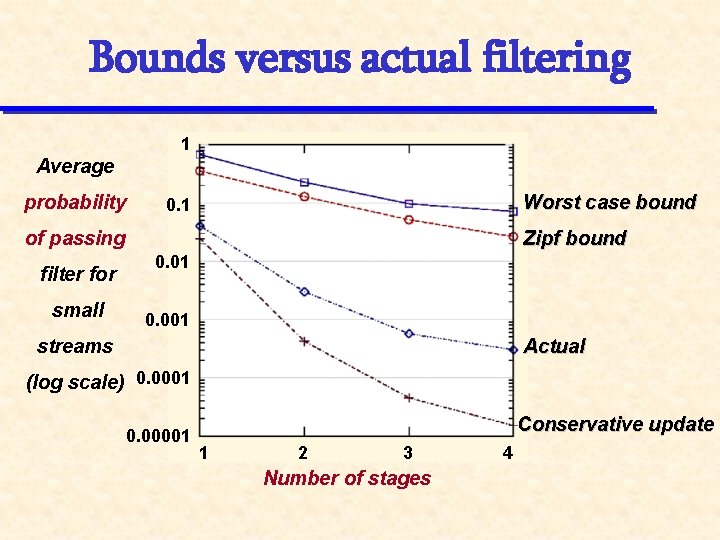

Bounds versus actual filtering

Plot: average probability of passing filter for small streams (log scale, 1 down to 0.00001) versus number of stages (1 to 4). Series: worst case bound, Zipf bound, actual, conservative update.

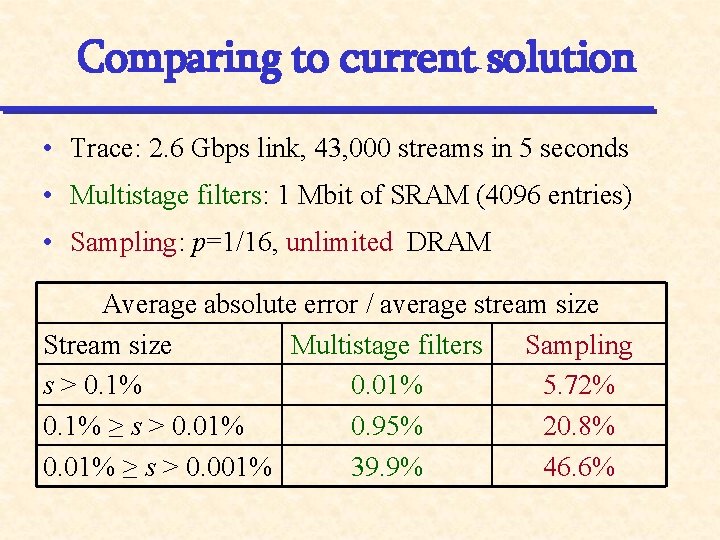

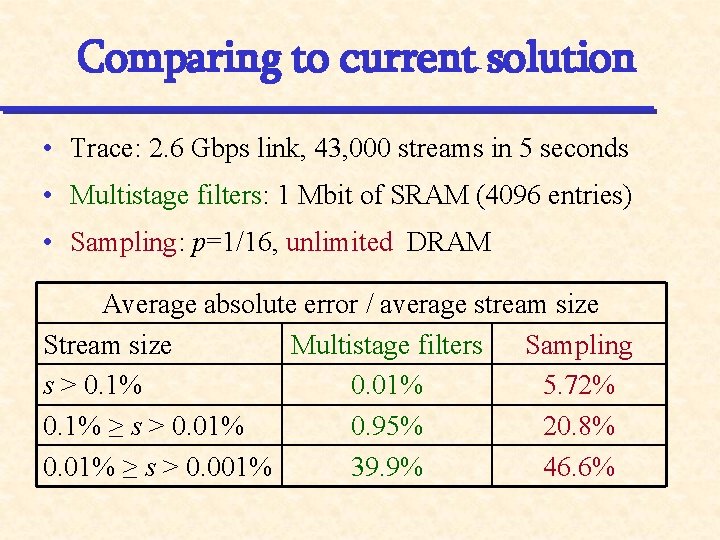

Comparing to current solution

- Trace: 2.6 Gbps link, 43,000 streams in 5 seconds
- Multistage filters: 1 Mbit of SRAM (4096 entries)
- Sampling: p = 1/16, unlimited DRAM

Average absolute error / average stream size:

| Stream size | Multistage filters | Sampling |
|---|---|---|
| s > 0.1% | 0.01% | 5.72% |
| 0.1% ≥ s > 0.01% | 0.95% | 20.8% |
| 0.01% ≥ s > 0.001% | 39.9% | 46.6% |

Summary for heavy hitters

- Heavy hitters important for measurement processes
- More accurate results than random sampling: error proportional to 1/M instead of 1/√M
- Multistage filters with conservative update outperform theoretical bounds
- Prototype implemented at 10 Gbps

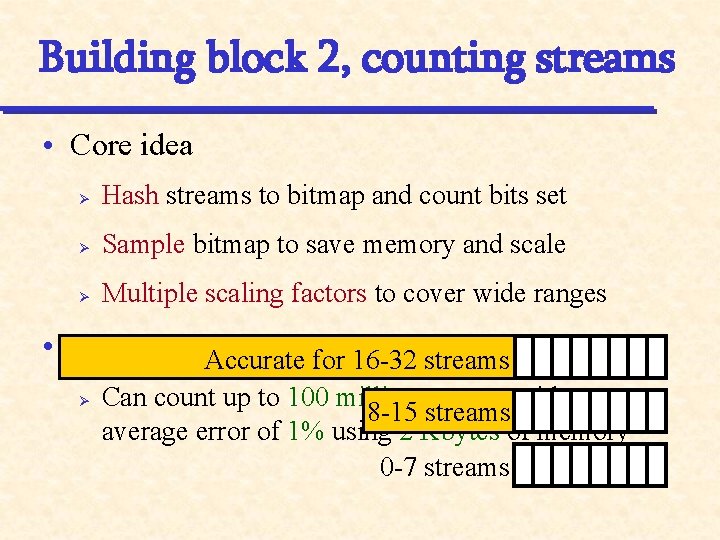

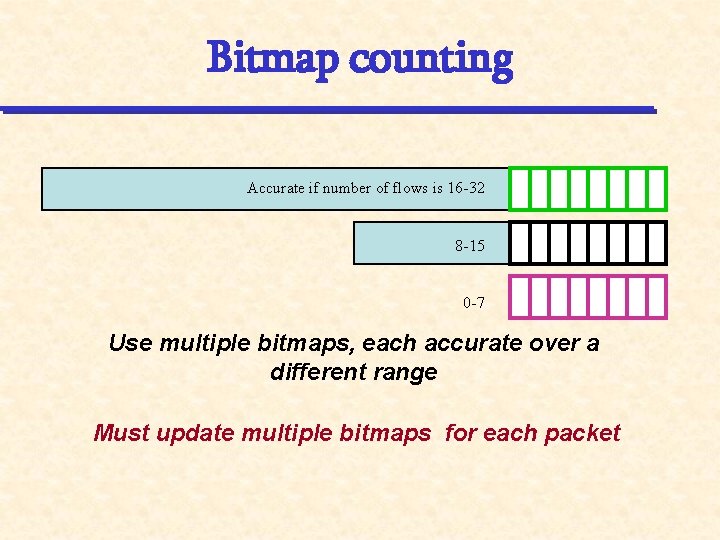

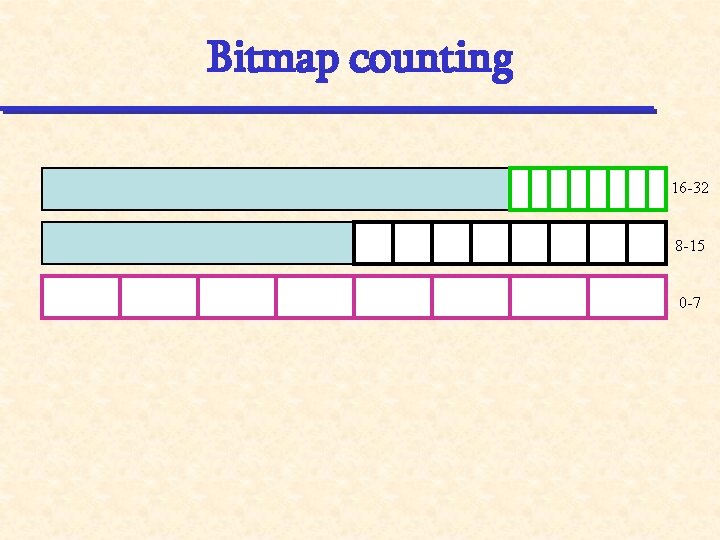

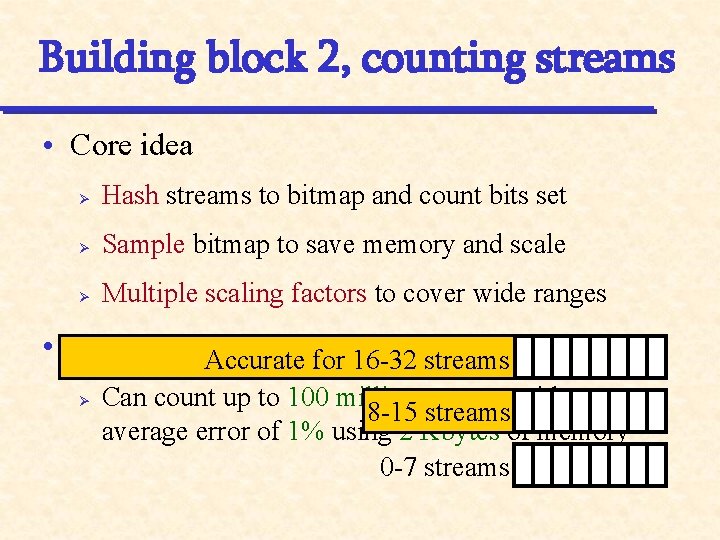

Building block 2, counting streams

- Core idea
  - Hash streams to bitmap and count bits set
  - Sample bitmap to save memory and scale
  - Multiple scaling factors to cover wide ranges
- Result
  - Can count up to 100 million streams with an average error of 1% using 2 Kbytes of memory

Figure labels: accurate for 16-32 streams, 8-15 streams, 0-7 streams

Bitmap counting

Hash based on flow identifier. Estimate based on the number of bits set. Does not work if there are too many flows.
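A minimal sketch of this direct bitmap follows. The slide does not give the estimator, so the formula used here, b·ln(b/z) for b bits of which z are still zero, is the standard linear-counting estimate and is an assumption about what the slide intends; the function name and the BLAKE2 hash are likewise illustrative.

```python
import hashlib
import math


def bitmap_estimate(flow_ids, num_bits):
    """Estimate the number of distinct flows from a bitmap of `num_bits` bits."""
    bitmap = [False] * num_bits
    for flow_id in flow_ids:
        digest = hashlib.blake2b(str(flow_id).encode(), digest_size=8).digest()
        # All packets of a flow hash to the same bit, so duplicates are ignored.
        bitmap[int.from_bytes(digest, "little") % num_bits] = True
    zeros = bitmap.count(False)
    if zeros == 0:
        return None  # bitmap saturated: too many flows for this size
    # Correct for collisions: with n flows, about b * exp(-n / b) bits stay zero.
    return num_bits * math.log(num_bits / zeros)
```

The `None` result is the "too many flows" case: once every bit is set, the bitmap carries no information about how far past its capacity the count is.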

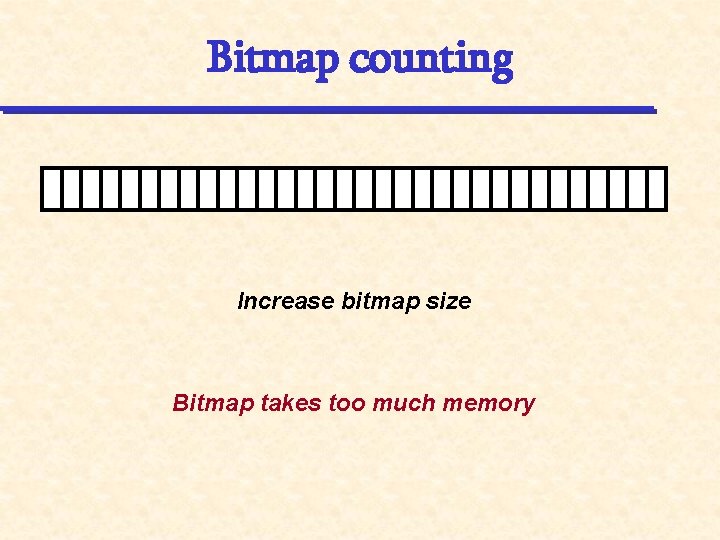

Bitmap counting

Increase bitmap size. Bitmap takes too much memory.

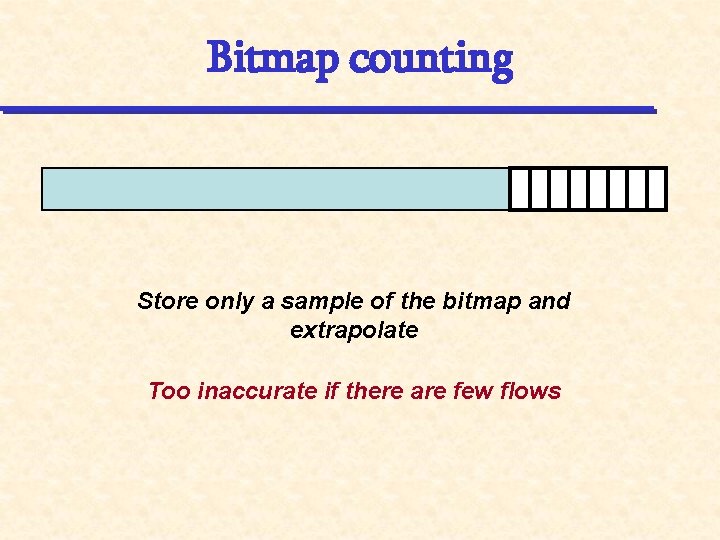

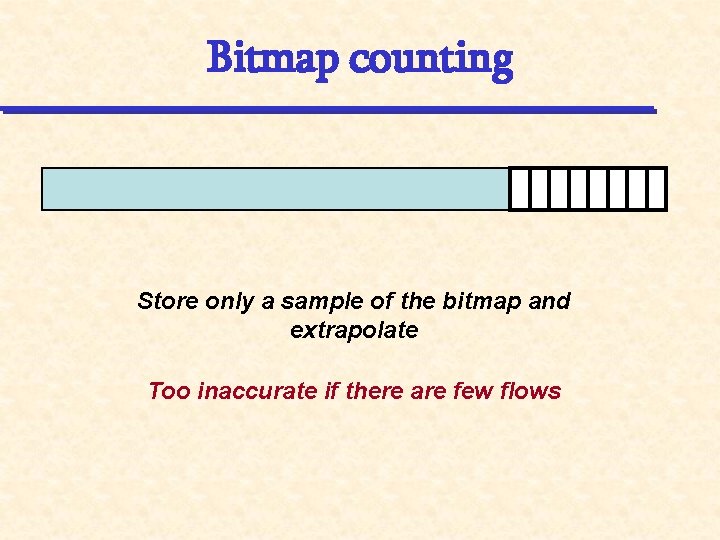

Bitmap counting

Store only a sample of the bitmap and extrapolate. Too inaccurate if there are few flows.
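One way to sketch the sampled bitmap: hash flows into a large virtual bitmap, store only a slice of it, and scale the estimate by the sampling factor. The function name, the choice of the first `num_bits` positions as the stored slice, and the b·ln(b/z) estimator are assumptions for illustration.

```python
import hashlib
import math


def sampled_bitmap_estimate(flow_ids, num_bits, sampling_factor):
    """Estimate distinct flows while storing 1/sampling_factor of a virtual bitmap."""
    virtual_size = num_bits * sampling_factor
    bitmap = [False] * num_bits  # only this slice of the virtual bitmap is kept
    for flow_id in flow_ids:
        digest = hashlib.blake2b(str(flow_id).encode(), digest_size=8).digest()
        position = int.from_bytes(digest, "little") % virtual_size
        if position < num_bits:  # flows hashing outside the slice are ignored
            bitmap[position] = True
    zeros = bitmap.count(False)
    if zeros == 0:
        return None  # stored slice saturated
    # Estimate the flows that fell into the slice, then extrapolate.
    return sampling_factor * num_bits * math.log(num_bits / zeros)
```

With only a handful of flows, few or none land in the stored slice and the extrapolated value jumps in steps of roughly `sampling_factor`, which is the inaccuracy the slide points out.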

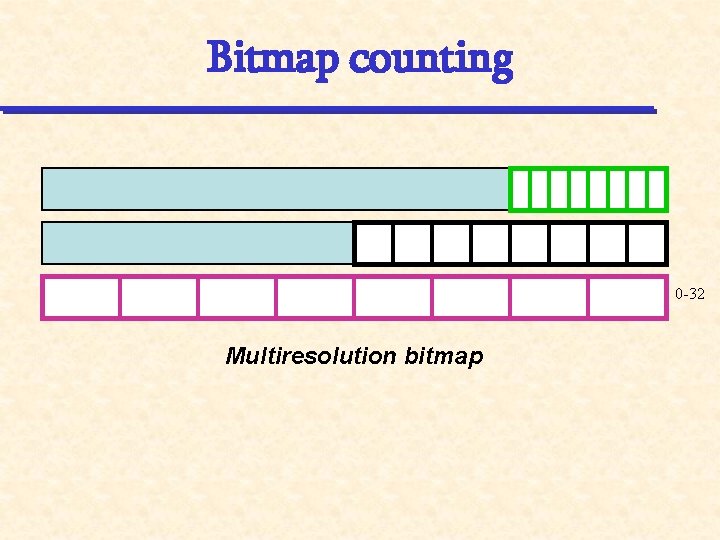

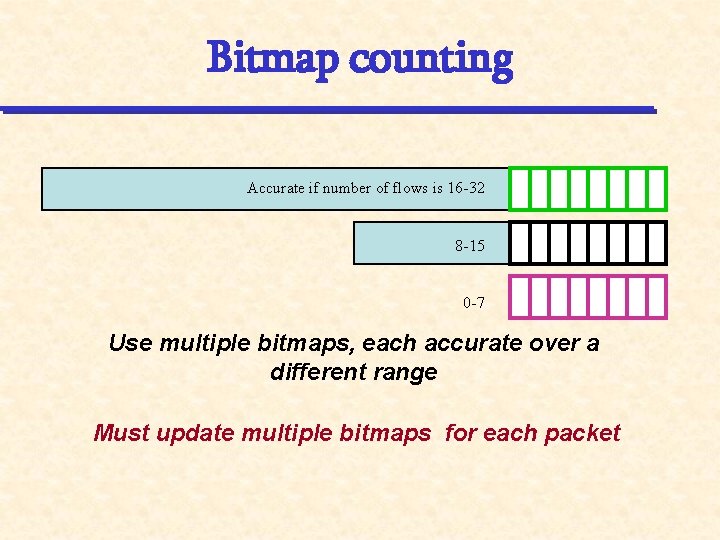

Bitmap counting

Use multiple bitmaps, each accurate over a different range (figure labels: accurate if number of flows is 16-32, 8-15, 0-7). Must update multiple bitmaps for each packet.

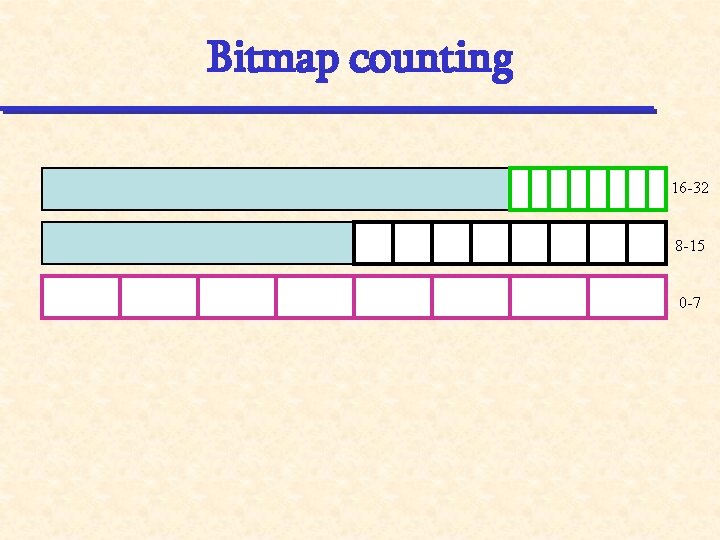

Bitmap counting

Figure labels: 16-32, 8-15, 0-7

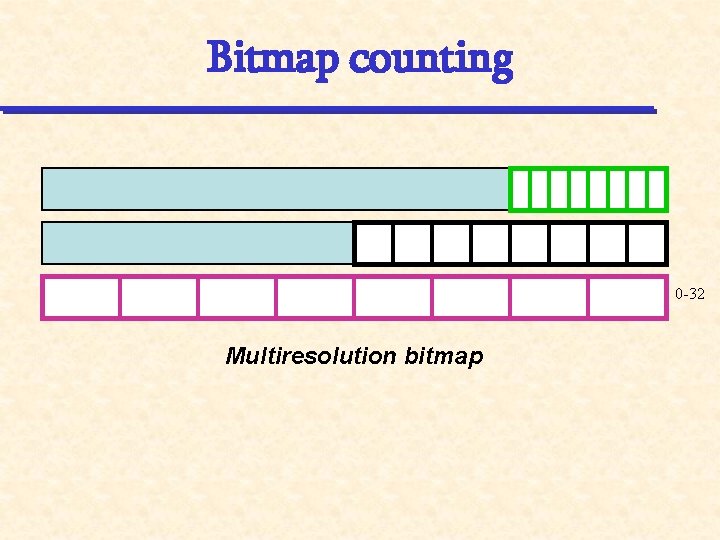

Bitmap counting

Multiresolution bitmap (figure label: 0-32)

Future work

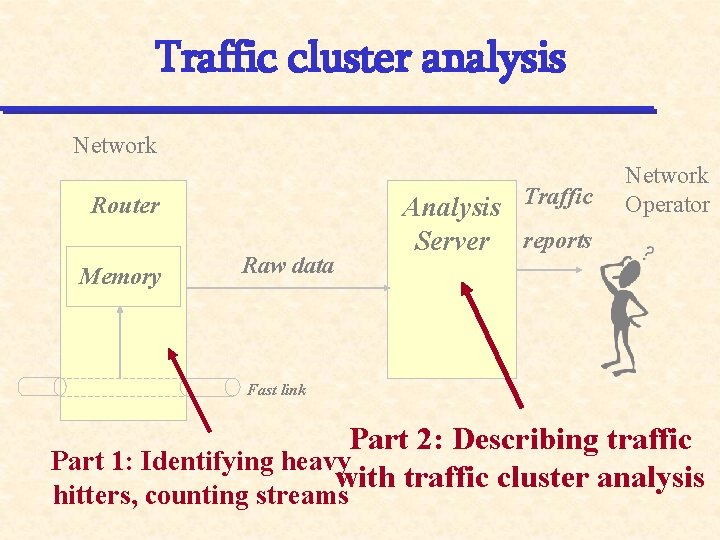

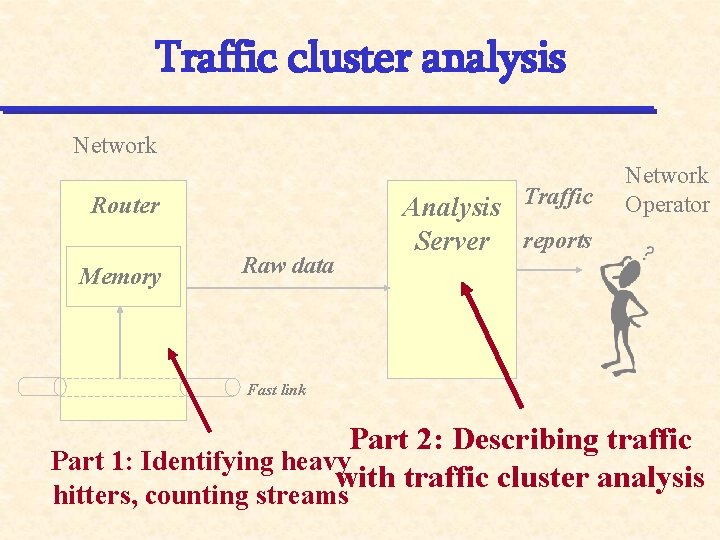

Traffic cluster analysis

Diagram labels: network, router, fast link, memory, raw data, analysis server, traffic reports, network operator

- Part 1: Identifying heavy hitters, counting streams
- Part 2: Describing traffic with traffic cluster analysis

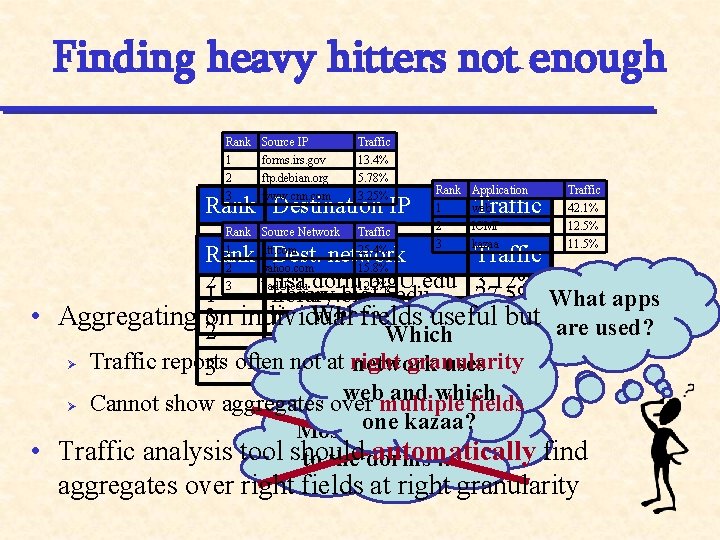

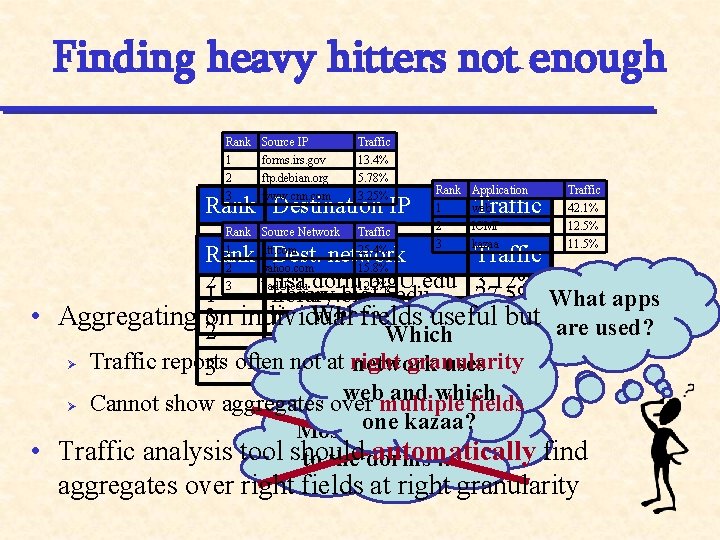

Finding heavy hitters not enough

| Report | Rank 1 | Rank 2 | Rank 3 |
|---|---|---|---|
| Source IP | forms.irs.gov (13.4%) | ftp.debian.org (5.78%) | www.cnn.com (3.25%) |
| Destination IP | jeff.dorm.bigU.edu (11.9%) | lisa.dorm.bigU.edu (3.12%) | risc.cs.bigU.edu (2.83%) |
| Source network | att.com (25.4%) | yahoo.com (15.8%) | badU.edu (12.2%) |
| Dest. network | library.bigU.edu (27.5%) | cs.bigU.edu (18.1%) | dorm.bigU.edu (17.8%) |
| Application | web (42.1%) | ICMP (12.5%) | kazaa (11.5%) |

Callouts: What apps are used? Where does the traffic come from? Which network uses web and which one kazaa? Most traffic goes to the dorms …

- Aggregating on individual fields useful but
  - Traffic reports often not at right granularity
  - Cannot show aggregates over multiple fields
- Traffic analysis tool should automatically find aggregates over right fields at right granularity

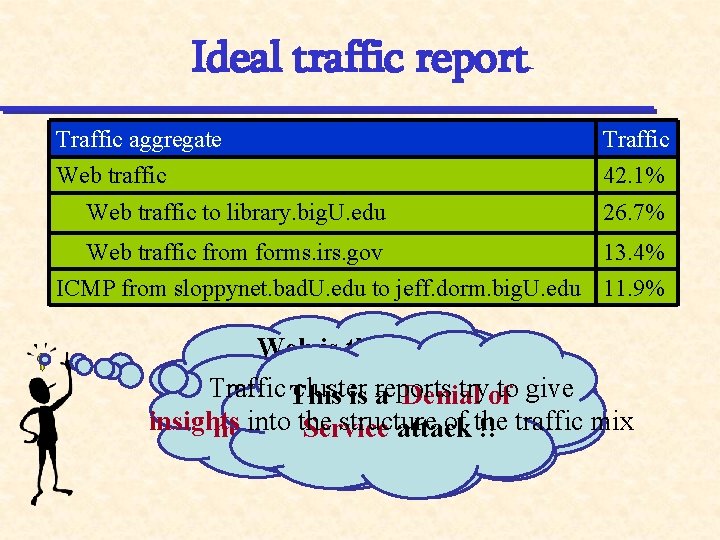

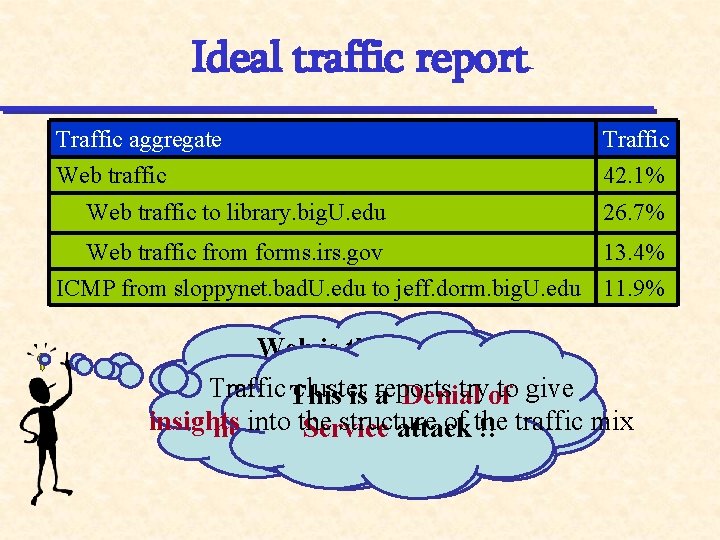

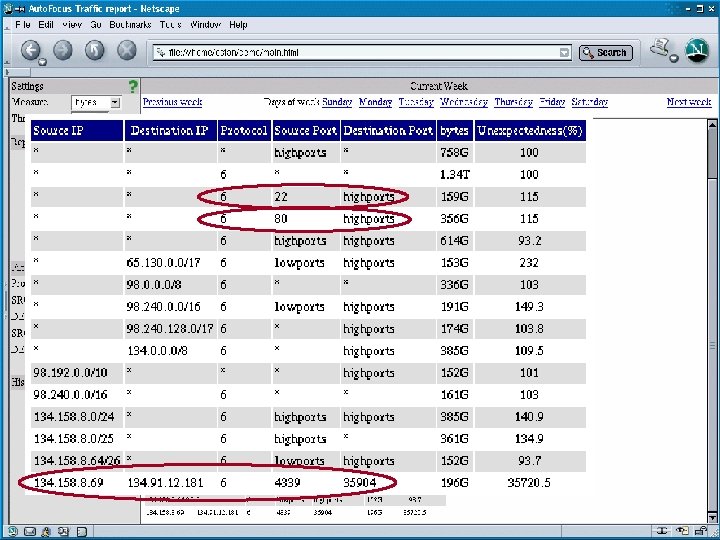

Ideal traffic report

| Traffic aggregate | Traffic | Callout |
|---|---|---|
| Web traffic | 42.1% | Web is the dominant application |
| Web traffic to library.bigU.edu | 26.7% | The library is a heavy user of web |
| Web traffic from forms.irs.gov | 13.4% | That’s a big flash crowd! |
| ICMP from sloppynet.badU.edu to jeff.dorm.bigU.edu | 11.9% | This is a Denial of Service attack!! |

Traffic cluster reports try to give insights into the structure of the traffic mix

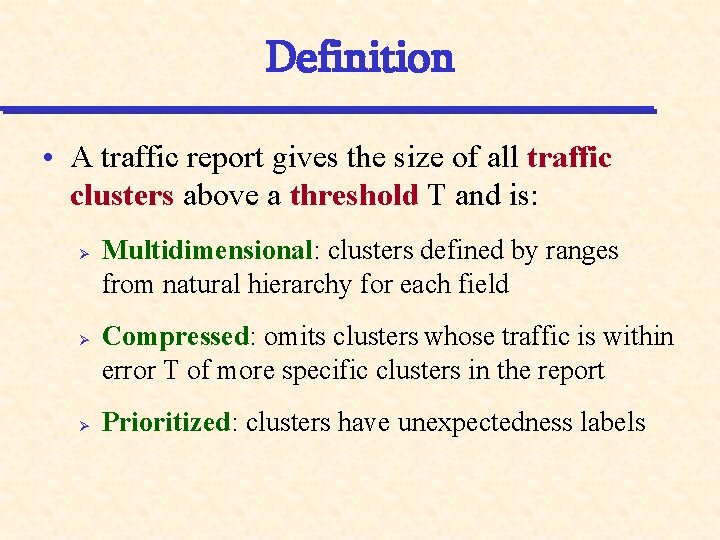

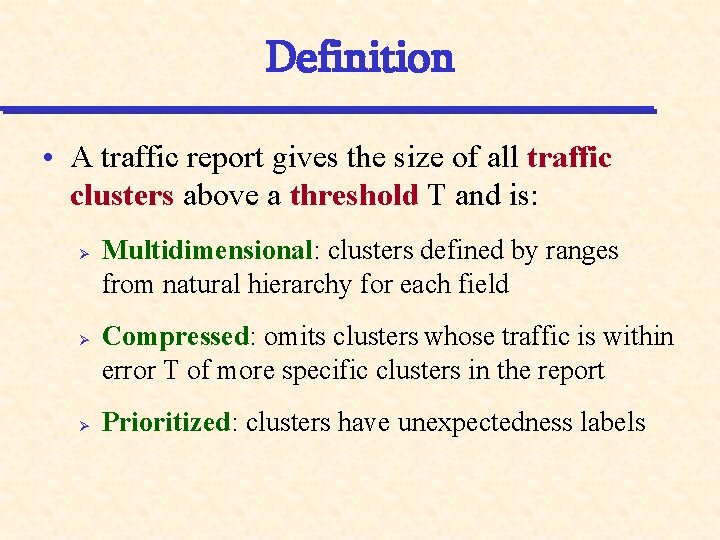

Definition

- A traffic report gives the size of all traffic clusters above a threshold T and is:
  - Multidimensional: clusters defined by ranges from natural hierarchy for each field
  - Compressed: omits clusters whose traffic is within error T of more specific clusters in the report
  - Prioritized: clusters have unexpectedness labels

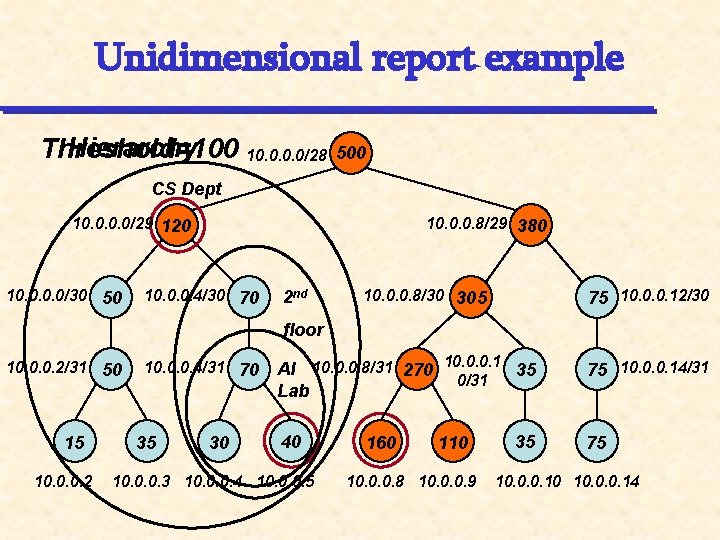

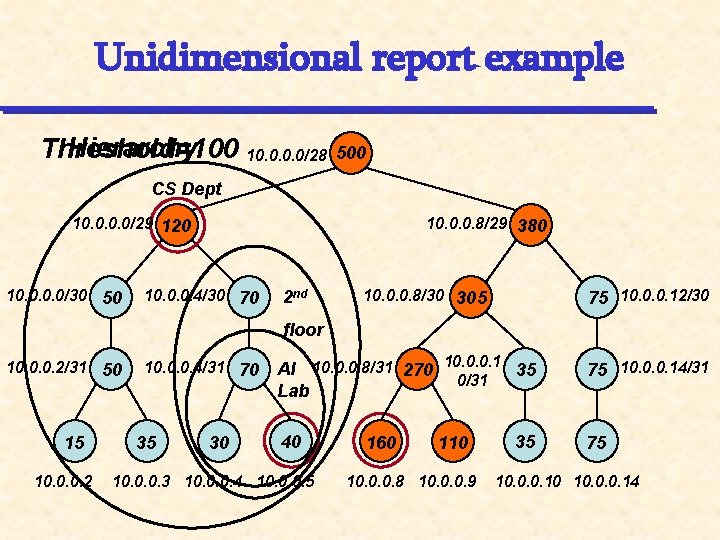

Unidimensional report example

Hierarchy (threshold = 100):

- 10.0.0.0/28: 500
  - 10.0.0.0/29: 120
    - 10.0.0.0/30: 50
      - 10.0.0.2/31: 50
        - 10.0.0.2: 15
        - 10.0.0.3: 35
    - 10.0.0.4/30: 70
      - 10.0.0.4/31: 70
        - 10.0.0.4: 30
        - 10.0.0.5: 40
  - 10.0.0.8/29: 380
    - 10.0.0.8/30: 305
      - 10.0.0.8/31: 270
        - 10.0.0.8: 160
        - 10.0.0.9: 110
      - 10.0.0.10/31: 35
        - 10.0.0.10: 35
    - 10.0.0.12/30: 75
      - 10.0.0.14/31: 75
        - 10.0.0.14: 75

Figure labels: CS Dept, 2nd floor, AI Lab
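The per-prefix totals in this hierarchy follow from the per-host traffic. A short sketch, assuming IPv4 addresses and using hypothetical function names, adds each host's traffic to every enclosing prefix and keeps the clusters at or above the threshold:

```python
import ipaddress
from collections import defaultdict


def cluster_traffic(host_traffic, shortest_prefix):
    """Sum per-host traffic into every enclosing prefix of the IPv4 hierarchy."""
    totals = defaultdict(int)
    for host, traffic in host_traffic.items():
        for prefix_len in range(shortest_prefix, 33):
            # strict=False masks the host bits, giving the enclosing network.
            net = ipaddress.ip_network(f"{host}/{prefix_len}", strict=False)
            totals[net] += traffic
    return dict(totals)


def large_clusters(totals, threshold):
    """Keep only the clusters whose traffic reaches the threshold."""
    return {net: t for net, t in totals.items() if t >= threshold}


hosts = {"10.0.0.2": 15, "10.0.0.3": 35, "10.0.0.4": 30, "10.0.0.5": 40,
         "10.0.0.8": 160, "10.0.0.9": 110, "10.0.0.10": 35, "10.0.0.14": 75}
totals = cluster_traffic(hosts, shortest_prefix=28)
for net, traffic in sorted(large_clusters(totals, 100).items(),
                           key=lambda item: (item[0].prefixlen, item[0])):
    print(net, traffic)
```

With the host values from the figure, seven clusters reach the threshold of 100, including the two individual hosts 10.0.0.8 and 10.0.0.9.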

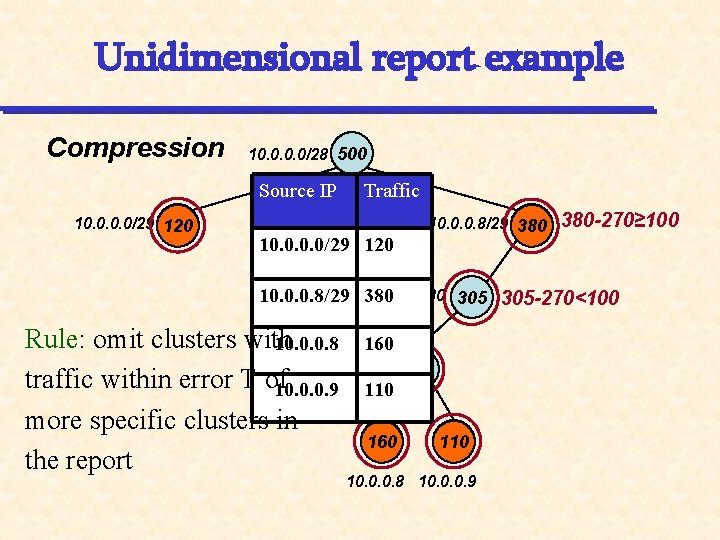

Unidimensional report example

Compression, for the clusters above the threshold: 10.0.0.0/28 (500), 10.0.0.0/29 (120), 10.0.0.8/29 (380), 10.0.0.8/30 (305), 10.0.0.8/31 (270), 10.0.0.8 (160), 10.0.0.9 (110)

- 10.0.0.8/29 is kept: 380 - 270 ≥ 100
- 10.0.0.8/30 is omitted: 305 - 270 < 100

Rule: omit clusters with traffic within error T of more specific clusters in the report

| Source IP | Traffic |
|---|---|
| 10.0.0.0/29 | 120 |
| 10.0.0.8/29 | 380 |
| 10.0.0.8 | 160 |
| 10.0.0.9 | 110 |
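The compression rule can be sketched for the unidimensional case as below. This is an illustrative reading of the rule rather than AutoFocus code: the function name is made up, and "more specific clusters in the report" is taken to mean the already reported clusters nested inside a cluster, counting each byte once.

```python
import ipaddress


def compress_report(cluster_traffic, threshold):
    """Apply the compression rule to {prefix: traffic}; return the reported clusters."""
    nets = {ipaddress.ip_network(p): t for p, t in cluster_traffic.items()
            if t >= threshold}
    report = {}
    # Visit the most specific clusters first so descendants are decided before ancestors.
    for net in sorted(nets, key=lambda n: n.prefixlen, reverse=True):
        inside = [r for r in report if r.subnet_of(net)]
        # Skip reported clusters nested in another reported cluster to avoid double counting.
        outermost = [r for r in inside
                     if not any(r != o and r.subnet_of(o) for o in inside)]
        explained = sum(report[r] for r in outermost)
        # Report the cluster only if it adds at least `threshold` beyond its descendants.
        if nets[net] - explained >= threshold:
            report[net] = nets[net]
    return {str(net): traffic for net, traffic in report.items()}


clusters = {"10.0.0.0/28": 500, "10.0.0.0/29": 120, "10.0.0.8/29": 380,
            "10.0.0.8/30": 305, "10.0.0.8/31": 270,
            "10.0.0.8": 160, "10.0.0.9": 110}
print(compress_report(clusters, threshold=100))
```

On the slide's numbers this keeps 10.0.0.0/29, 10.0.0.8/29 and the two hosts, and drops 10.0.0.0/28, 10.0.0.8/30 and 10.0.0.8/31 because their traffic is within 100 of what the reported descendants already account for.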

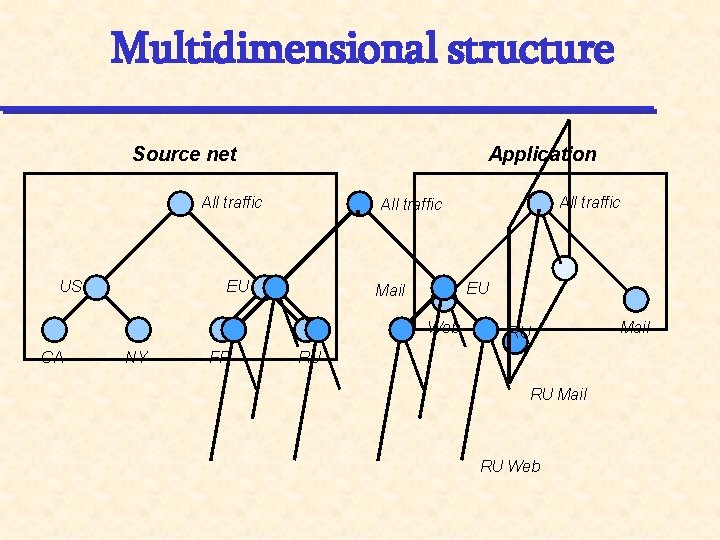

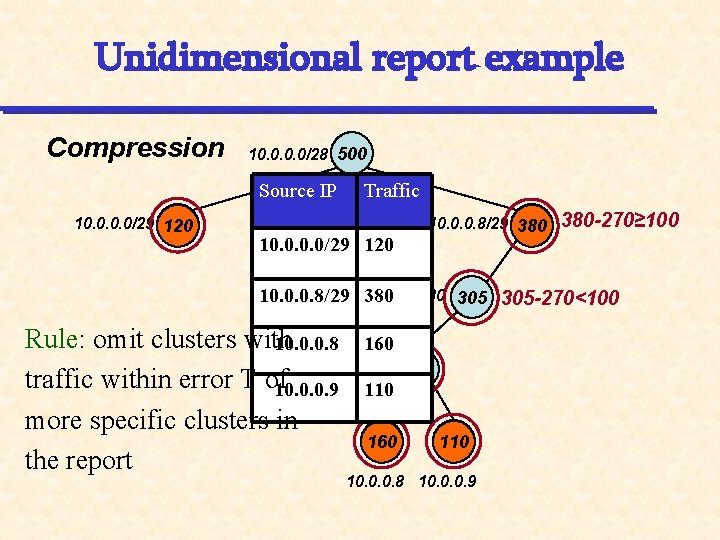

Multidimensional structure

Figure labels: source net hierarchy (All traffic, US, EU, CA, NY, FR, RU); application hierarchy (All traffic, Mail, Web); combined clusters (EU Mail, RU Mail, RU Web)

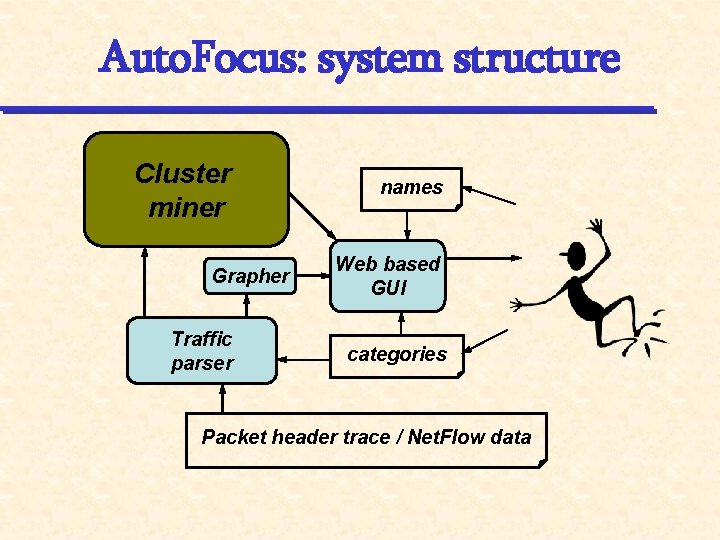

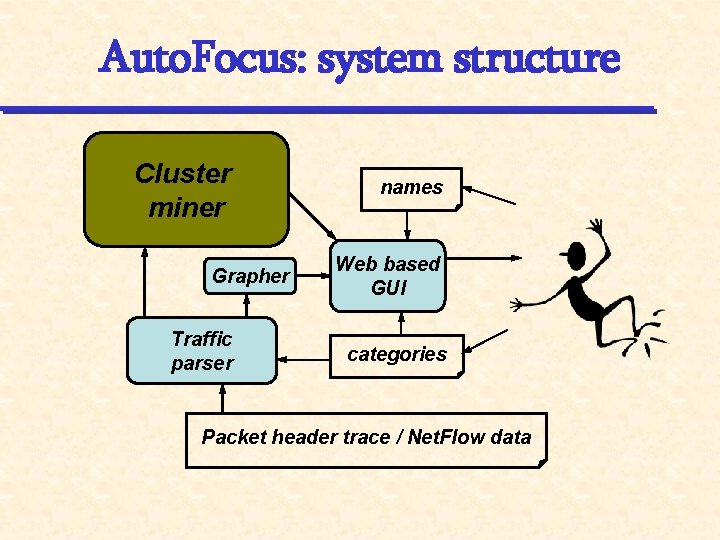

AutoFocus: system structure

Diagram labels: traffic parser, cluster miner, grapher, web based GUI. Inputs: packet header trace / NetFlow data, names, categories.

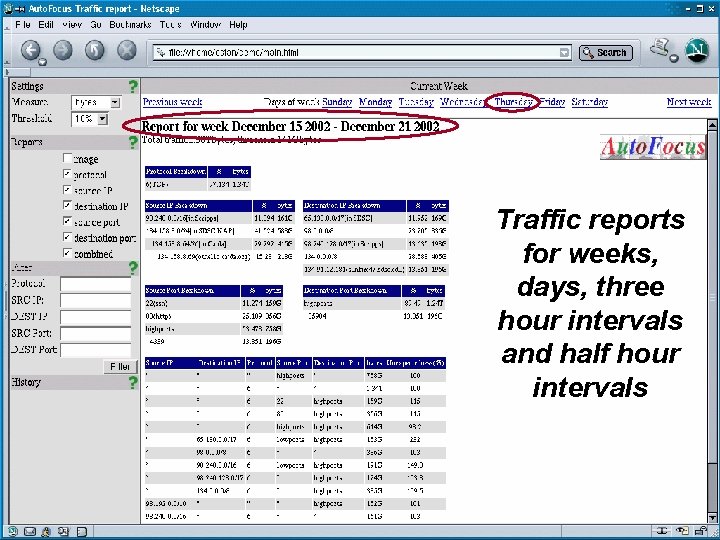

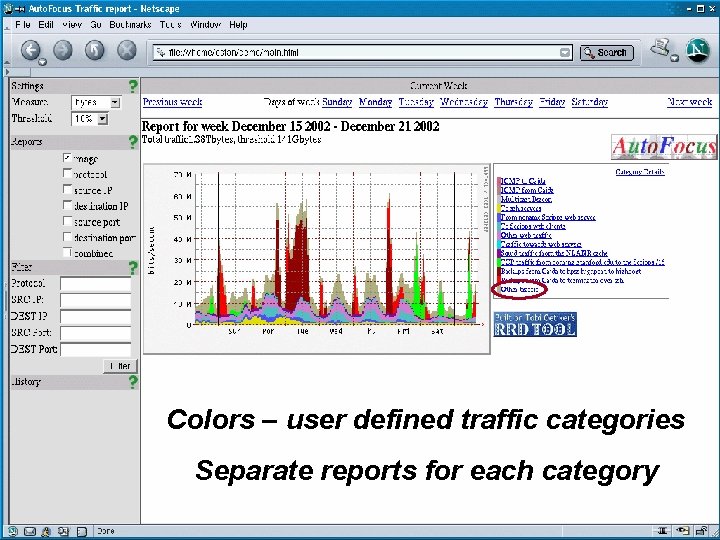

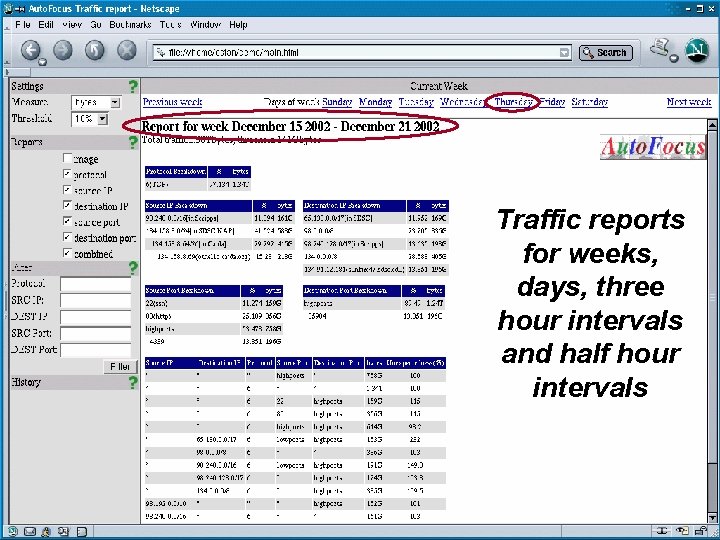

Traffic reports for weeks, days, three hour intervals and half hour intervals

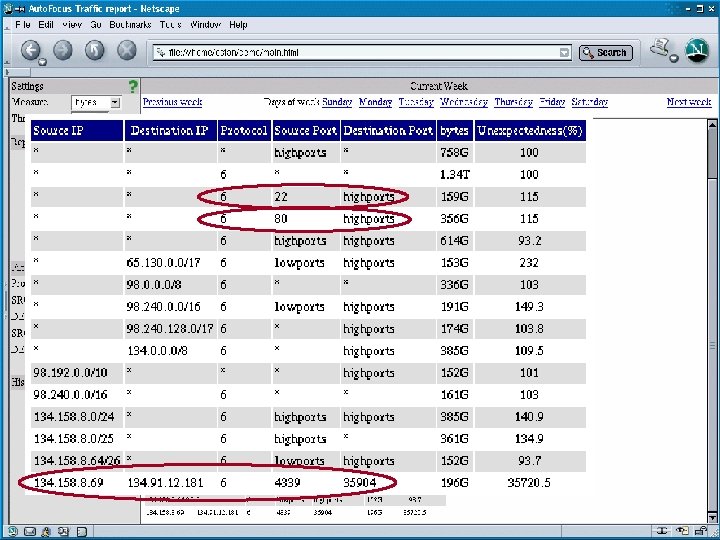

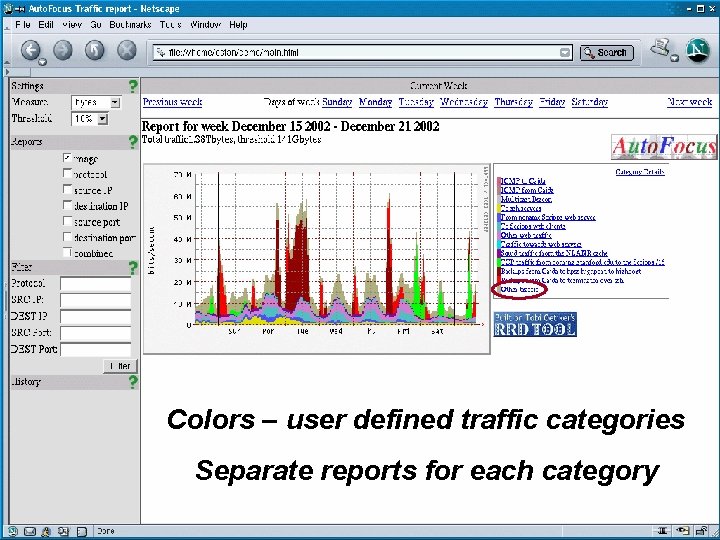

Colors: user defined traffic categories. Separate reports for each category.

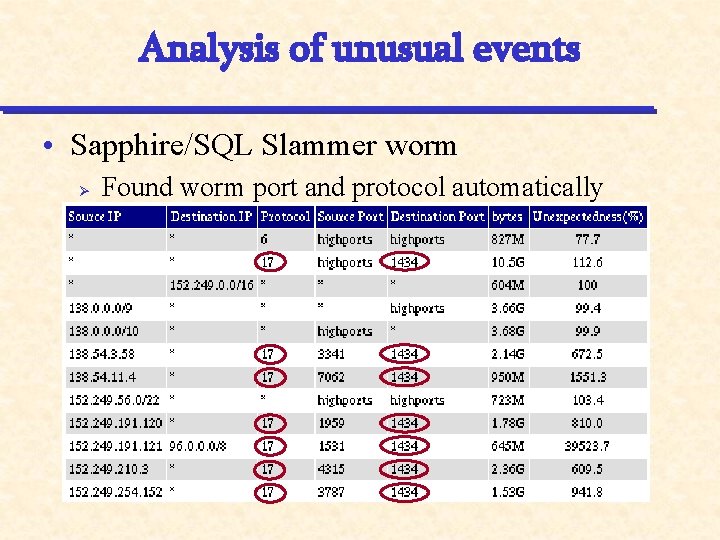

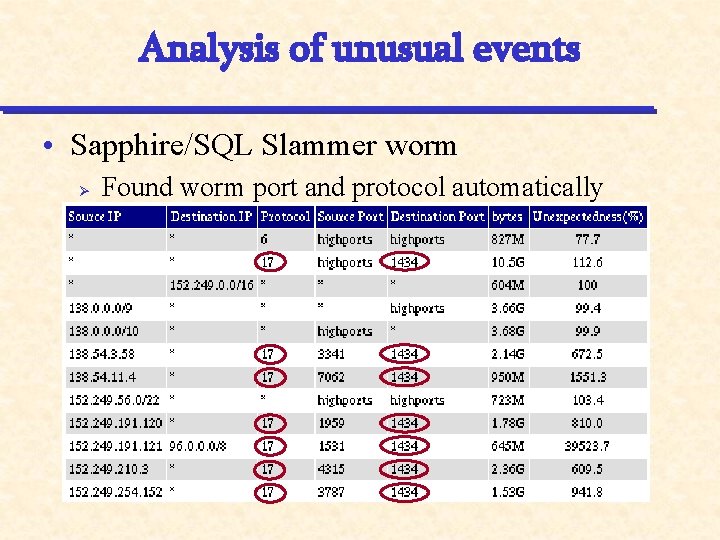

Analysis of unusual events

- Sapphire/SQL Slammer worm
  - Found worm port and protocol automatically

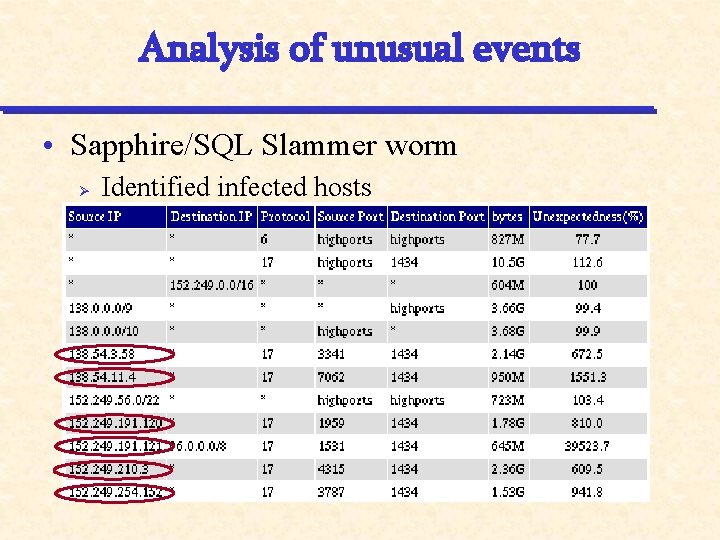

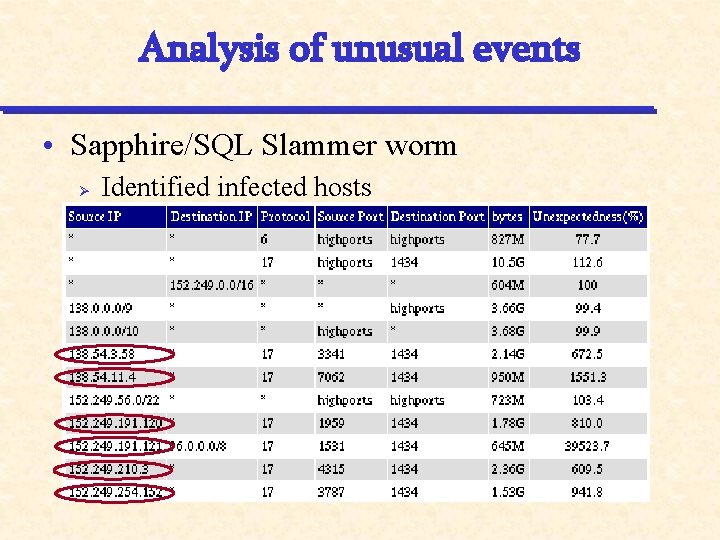

Analysis of unusual events

- Sapphire/SQL Slammer worm
  - Identified infected hosts

![Related work](https://slidetodoc.com/presentation_image_h2/7d852dbbde776d267890656b999764ea/image-44.jpg)

Related work

- Databases [FS+98]: iceberg queries
  - Limited analysis, no conservative update
- Theory [GM98, CCF02]: synopses, sketches
  - Less accurate than multistage filters
- Data mining [AIS93]: association rules
  - No/limited hierarchy, no compression
- Databases [GCB+97]: data cube
  - No automatic generation of “interesting” clusters