Interfacing Processors and Peripherals Andreas Klappenecker CPSC 321

- Slides: 16

Interfacing Processors and Peripherals Andreas Klappenecker CPSC 321 Computer Architecture

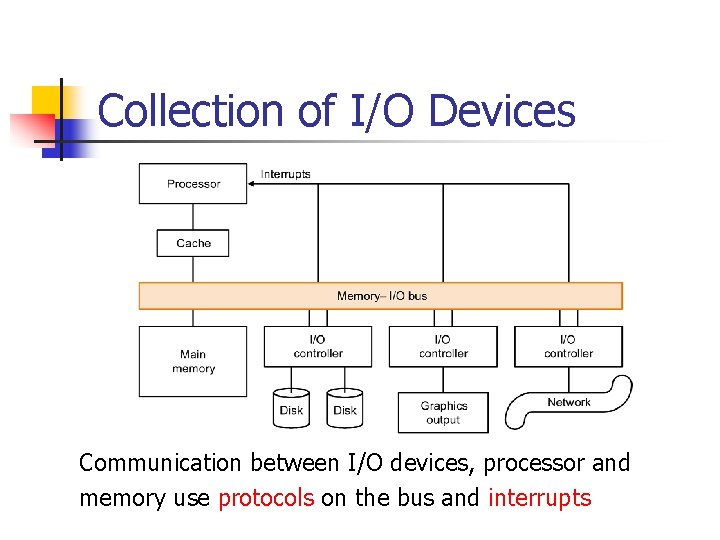

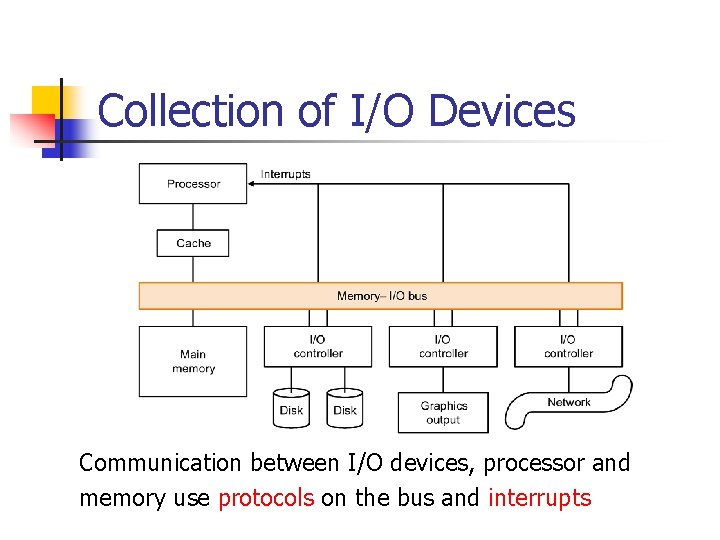

Collection of I/O Devices

Communication between I/O devices, the processor, and memory uses protocols on the bus and interrupts.

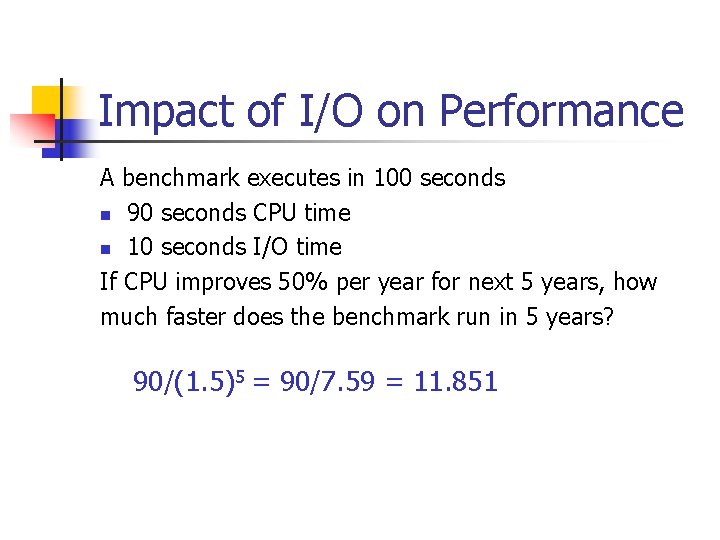

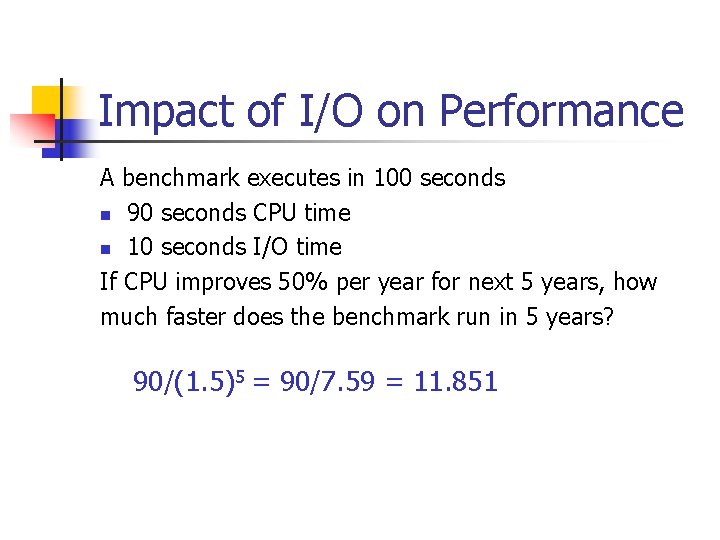

Impact of I/O on Performance

A benchmark executes in 100 seconds:

- 90 seconds CPU time
- 10 seconds I/O time

If the CPU improves 50% per year for the next 5 years, how much faster does the benchmark run in 5 years?

CPU time after 5 years: 90/(1.5)^5 = 90/7.59 = 11.85 seconds
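The slide stops at the new CPU time. The short sketch below finishes the arithmetic under the assumption that the 10 seconds of I/O time does not improve at all; the function name `time_after_speedup` is made up for this illustration.

```python
def time_after_speedup(cpu_s, io_s, yearly_gain, years):
    """Return (new CPU time, new total time) when only the CPU gets faster."""
    cpu_after = cpu_s / (1.0 + yearly_gain) ** years
    # Assumption: I/O time stays constant over the whole period.
    return cpu_after, cpu_after + io_s


cpu_after, total_after = time_after_speedup(90.0, 10.0, 0.50, 5)
speedup = 100.0 / total_after      # old total time / new total time
io_share = 10.0 / total_after      # fraction of the new run spent on I/O

print(f"CPU time: {cpu_after:.2f} s, total: {total_after:.2f} s")
print(f"speedup: {speedup:.2f}x, I/O share: {io_share:.0%}")
```

Under that assumption the benchmark runs roughly 4.6 times faster even though the CPU alone is about 7.6 times faster, and I/O grows from 10% to almost half of the run time.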

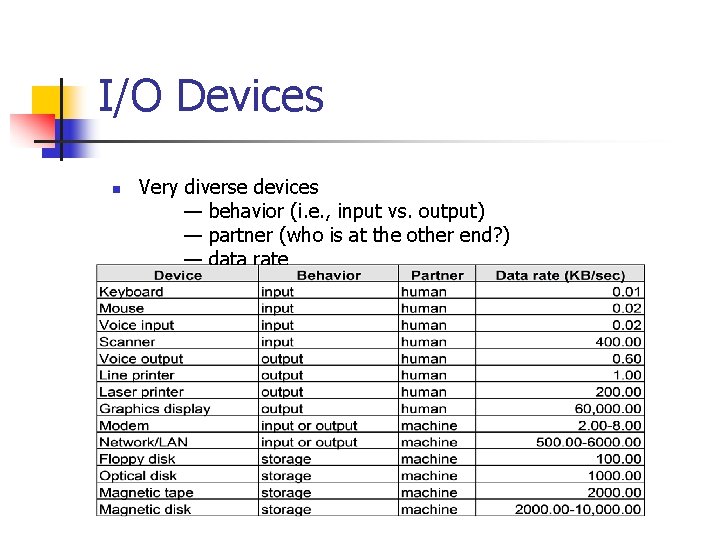

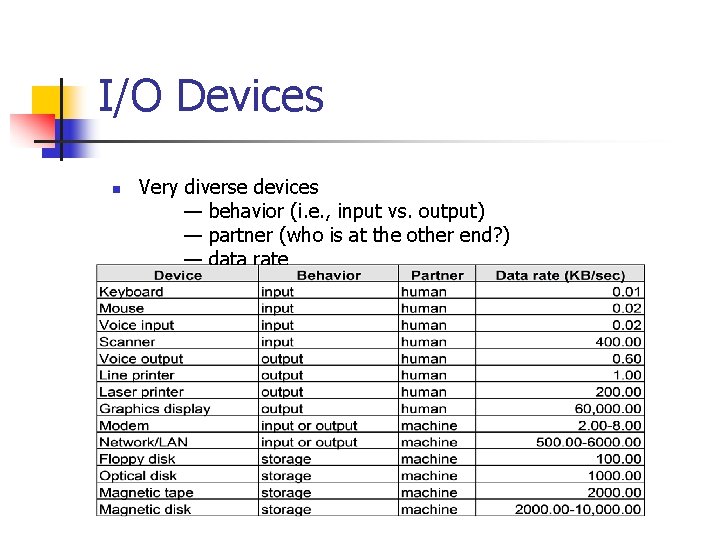

I/O Devices

- Very diverse devices:
  - behavior (i.e., input vs. output)
  - partner (who is at the other end?)
  - data rate

Communicating with Processor

- Polling
  - simple
  - I/O device puts information in a status register
  - processor retrieves the information
  - processor checks the status periodically
- Interrupt-driven I/O
  - device notifies the processor that it has completed some operation by causing an interrupt
  - similar to an exception, except that it is asynchronous
  - processor must be notified of the device causing the interrupt
  - interrupts must be prioritized
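The polling bullets above can be sketched as a loop that keeps reading a status register until the device reports that it is ready. Everything here is hypothetical: `SimulatedDevice`, `read_status`, `read_data`, and `poll_for_data` are names invented for the illustration, not a real device interface.

```python
class SimulatedDevice:
    """Hypothetical device with a one-bit status register and a data register."""

    def __init__(self, ready_after_checks, value):
        self._checks_left = ready_after_checks
        self._value = value

    def read_status(self):
        # Each call models one poll; the device reports "not ready" a fixed
        # number of times before it reports "ready".
        if self._checks_left > 0:
            self._checks_left -= 1
            return False
        return True

    def read_data(self):
        return self._value


def poll_for_data(device, max_polls=1000):
    """Check the status register repeatedly; return (data, polls used)."""
    for polls in range(1, max_polls + 1):
        if device.read_status():
            return device.read_data(), polls
    raise TimeoutError("device never became ready")


data, polls = poll_for_data(SimulatedDevice(ready_after_checks=3, value=0x2A))
print(data, polls)
```

Every unsuccessful poll is processor time spent doing no useful work, which is the cost that interrupt-driven I/O avoids.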

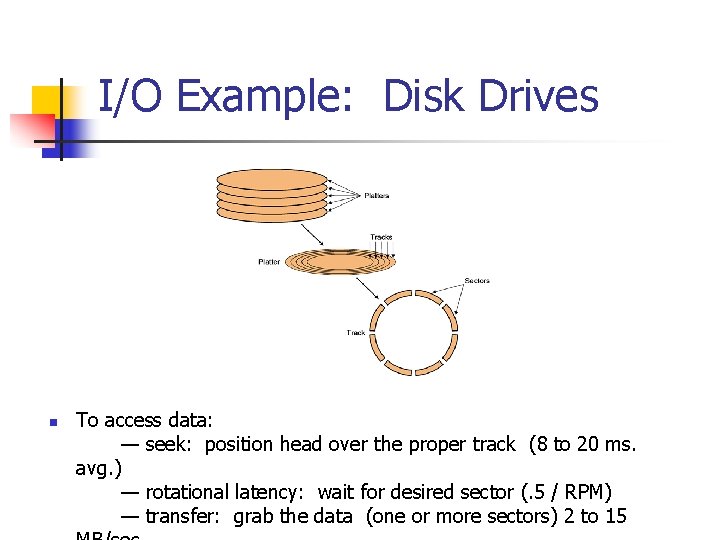

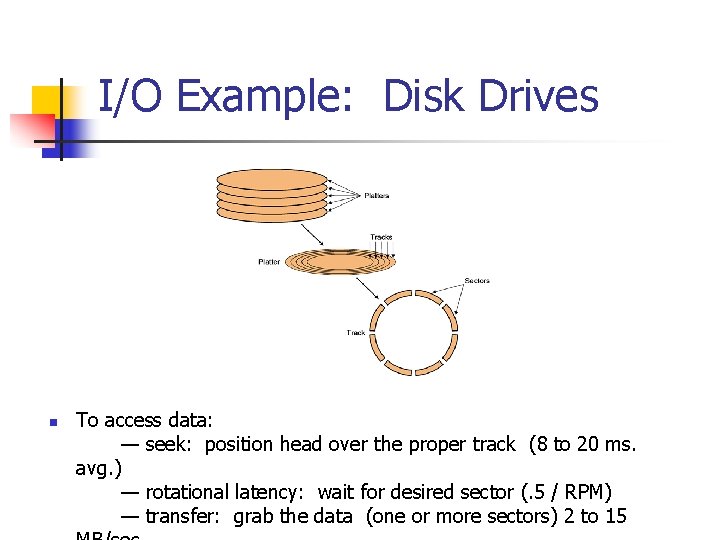

I/O Example: Disk Drives

- To access data:
  - seek: position head over the proper track (8 to 20 ms avg.)
  - rotational latency: wait for desired sector (0.5 / RPM)
  - transfer: grab the data (one or more sectors) 2 to 15
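As a rough illustration of how the three components add up, the sketch below converts the 0.5 / RPM formula (which yields minutes) to milliseconds and sums the parts. The 5400 RPM drive, the 12 ms seek, and the 1 ms transfer time are assumed values chosen for the example, not figures from the slides.

```python
def avg_rotational_latency_ms(rpm):
    """Average rotational latency: half a rotation, in milliseconds."""
    minutes = 0.5 / rpm               # formula from the slide, result in minutes
    return minutes * 60.0 * 1000.0    # minutes -> seconds -> milliseconds


def avg_access_time_ms(seek_ms, rpm, transfer_ms):
    """Seek time + average rotational latency + transfer time."""
    return seek_ms + avg_rotational_latency_ms(rpm) + transfer_ms


latency = avg_rotational_latency_ms(5400)        # assumed 5400 RPM drive
access = avg_access_time_ms(12.0, 5400, 1.0)     # assumed seek and transfer times
print(f"rotational latency: {latency:.2f} ms, access time: {access:.2f} ms")
```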

I/O Example: Buses

- Shared communication link (one or more wires)
- Difficult design:
  - may be a bottleneck
  - tradeoffs (buffers for higher bandwidth increase latency)
  - support for many different devices
  - cost
- Types of buses:
  - processor-memory (short, high speed, custom design)
  - backplane (high speed, often standardized, e.g., PCI)
  - I/O (lengthy, different devices, standardized, e.g., SCSI)
- Synchronous vs. asynchronous:
  - synchronous: uses a clock and a synchronous protocol; fast and small, but every device must operate at the same rate, and clock skew requires the bus to be short

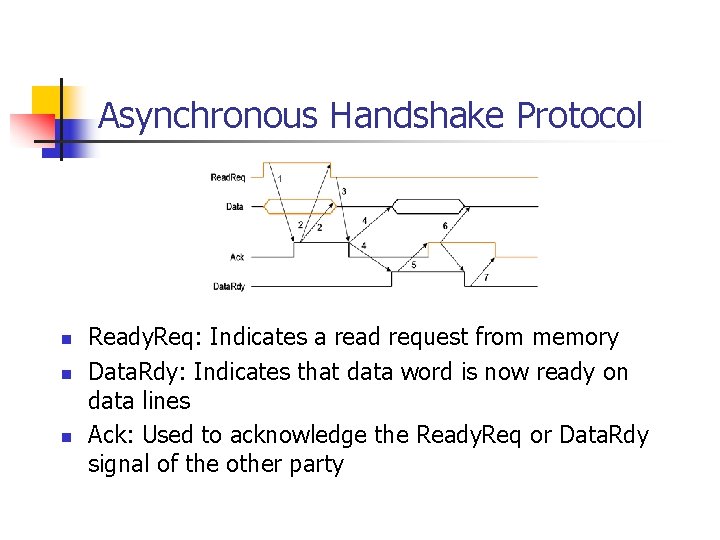

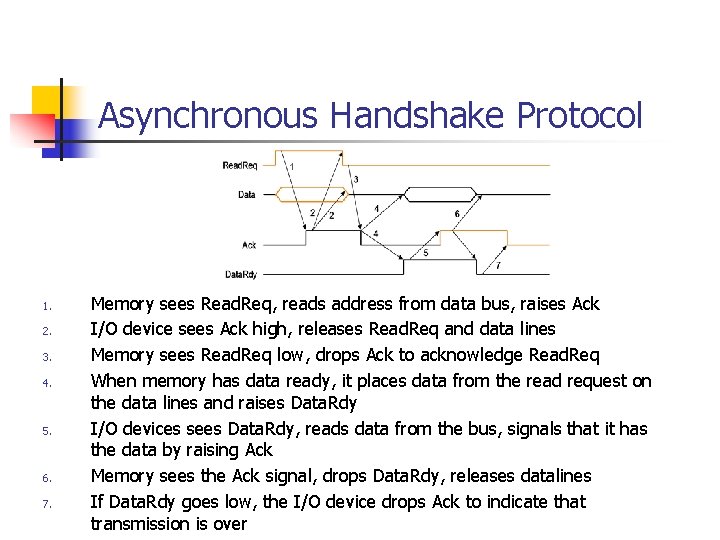

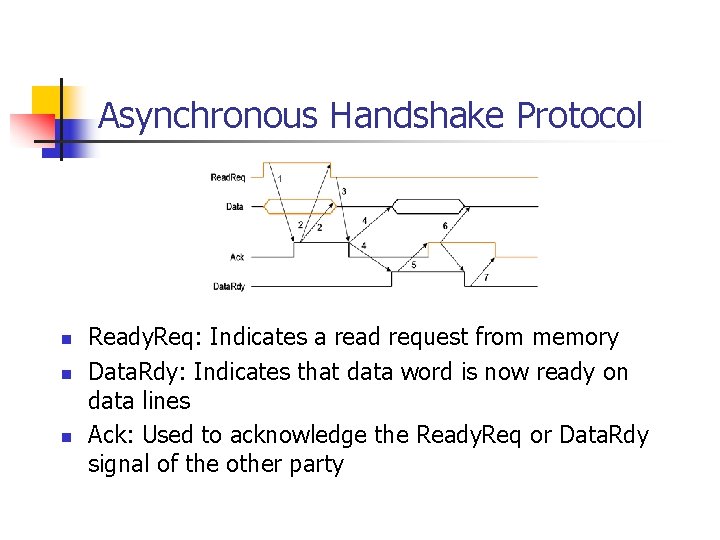

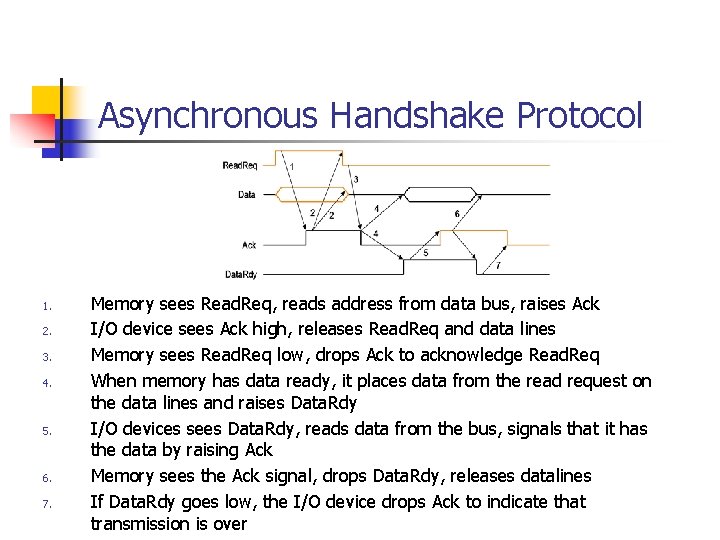

Asynchronous Handshake Protocol

- ReadReq: indicates a read request for memory
- DataRdy: indicates that the data word is now ready on the data lines
- Ack: used to acknowledge the ReadReq or DataRdy signal of the other party

Asynchronous Handshake Protocol

1. Memory sees ReadReq, reads the address from the data bus, and raises Ack
2. I/O device sees Ack high, releases ReadReq and the data lines
3. Memory sees ReadReq low, drops Ack to acknowledge ReadReq
4. When memory has the data ready, it places the data from the read request on the data lines and raises DataRdy
5. I/O device sees DataRdy, reads the data from the bus, and signals that it has the data by raising Ack
6. Memory sees the Ack signal, drops DataRdy, and releases the data lines
7. When DataRdy goes low, the I/O device drops Ack to indicate that the transmission is over
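The seven steps can be traced with a small sequential simulation. This is only a sketch: real hardware runs the two sides concurrently, while the code below simply applies each step in order and records the signal levels. The function name, the dictionary-based memory, and the address and data values are assumptions made for the illustration.

```python
def async_read_handshake(address, memory):
    """Walk through the seven steps of an asynchronous read.

    Returns the word the device read and a trace of
    (step, ReadReq, Ack, DataRdy) tuples.
    """
    # Starting point: the I/O device has raised ReadReq and put the
    # address on the shared data lines.
    sig = {"ReadReq": 1, "Ack": 0, "DataRdy": 0}
    data_lines = address
    trace = []

    def record(step):
        trace.append((step, sig["ReadReq"], sig["Ack"], sig["DataRdy"]))

    # 1. Memory sees ReadReq, reads the address, raises Ack.
    latched_address = data_lines
    sig["Ack"] = 1
    record(1)
    # 2. Device sees Ack high, releases ReadReq and the data lines.
    sig["ReadReq"] = 0
    data_lines = None
    record(2)
    # 3. Memory sees ReadReq low, drops Ack.
    sig["Ack"] = 0
    record(3)
    # 4. Memory places the data on the data lines and raises DataRdy.
    data_lines = memory[latched_address]
    sig["DataRdy"] = 1
    record(4)
    # 5. Device sees DataRdy, reads the data, raises Ack.
    received = data_lines
    sig["Ack"] = 1
    record(5)
    # 6. Memory sees Ack, drops DataRdy, releases the data lines.
    sig["DataRdy"] = 0
    data_lines = None
    record(6)
    # 7. Device sees DataRdy low, drops Ack: the transmission is over.
    sig["Ack"] = 0
    record(7)
    return received, trace


word, trace = async_read_handshake(0x10, {0x10: 0xCAFE})
for row in trace:
    print(row)
```

After step 7 all three signals are low again, so the bus is ready for the next request.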

Synchronous vs. Asynchronous Buses

- Compare the maximum bandwidth of a synchronous bus and an asynchronous bus
- Synchronous bus
  - has a clock cycle time of 50 ns
  - each transmission takes 1 clock cycle
- Asynchronous bus
  - requires 40 ns per handshake
- Find the bandwidth of each bus when performing one-word reads from a 200 ns memory

Synchronous Bus

1. Send address to memory: 50 ns
2. Read memory: 200 ns
3. Send data to device: 50 ns

- Total: 300 ns
- Max. bandwidth: 4 bytes/300 ns = 13.3 MB/second

Asynchronous Bus

- Apparently much slower, because each step of the protocol takes 40 ns and the memory access takes 200 ns
- Notice that several steps overlap with the memory access time
- Memory receives the address at step 1 and does not need to put the data on the bus until step 5, so steps 2, 3, and 4 can overlap with the memory access
  - Step 1: 40 ns
  - Steps 2, 3, 4: max(3 x 40 ns = 120 ns, 200 ns) = 200 ns
  - Steps 5, 6, 7: 3 x 40 ns = 120 ns
- Total time: 360 ns
- Max. bandwidth: 4 bytes/360 ns = 11.1 MB/second
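The two bandwidth figures can be reproduced with a few lines of arithmetic. The sketch below uses the numbers from the slides (4-byte word, 200 ns memory, 50 ns clock cycle, 40 ns handshake step); the constant and function names are made up for the illustration.

```python
WORD_BYTES = 4      # one-word read
MEMORY_NS = 200     # memory access time


def bandwidth_mb_per_s(num_bytes, time_ns):
    """Bytes per nanosecond converted to MB/second (1 MB = 10**6 bytes)."""
    return num_bytes / time_ns * 1000.0


# Synchronous bus: send address, read memory, send data.
sync_ns = 50 + MEMORY_NS + 50

# Asynchronous bus: step 1, then steps 2-4 overlapped with the memory
# access, then steps 5-7.
async_ns = 40 + max(3 * 40, MEMORY_NS) + 3 * 40

sync_bw = bandwidth_mb_per_s(WORD_BYTES, sync_ns)
async_bw = bandwidth_mb_per_s(WORD_BYTES, async_ns)
print(f"synchronous:  {sync_ns} ns, {sync_bw:.1f} MB/s")
print(f"asynchronous: {async_ns} ns, {async_bw:.1f} MB/s")
```

Because steps 2 to 4 hide behind the memory access, the asynchronous bus is only about 20% slower here, even though it needs seven handshake steps.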

Other important issues

- Bus arbitration:
  - daisy chain arbitration (not very fair)
  - centralized arbitration (requires an arbiter), e.g., PCI
  - self selection, e.g., NuBus used in Macintosh
  - collision detection, e.g., Ethernet
- Operating system:
  - polling, interrupts, DMA
- Performance analysis techniques:
  - queuing theory
  - simulation
  - analysis, i.e., find the weakest link (see “I/O System Design”)
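To see why daisy chain arbitration is described as not very fair, consider a simplified model in which the grant signal enters the chain at the device closest to the arbiter and is passed on only by devices that are not requesting the bus. The function names and the request pattern below are assumptions made for this sketch.

```python
def daisy_chain_grant(requests):
    """Return the index of the device that wins the bus, or None.

    Index 0 is the device closest to the arbiter, so it sees the
    grant signal first.
    """
    for position, wants_bus in enumerate(requests):
        if wants_bus:
            return position   # this device keeps the grant and does not pass it on
    return None


def count_wins(requests, rounds):
    """Repeat arbitration with the same request pattern and tally the winners."""
    wins = [0] * len(requests)
    for _ in range(rounds):
        winner = daisy_chain_grant(requests)
        if winner is not None:
            wins[winner] += 1
    return wins


# Devices 0 and 2 both request the bus in every round.
wins = count_wins([True, False, True], rounds=5)
print(wins)
```

In this model a device far down the chain is never granted the bus while a device nearer the arbiter keeps requesting it.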

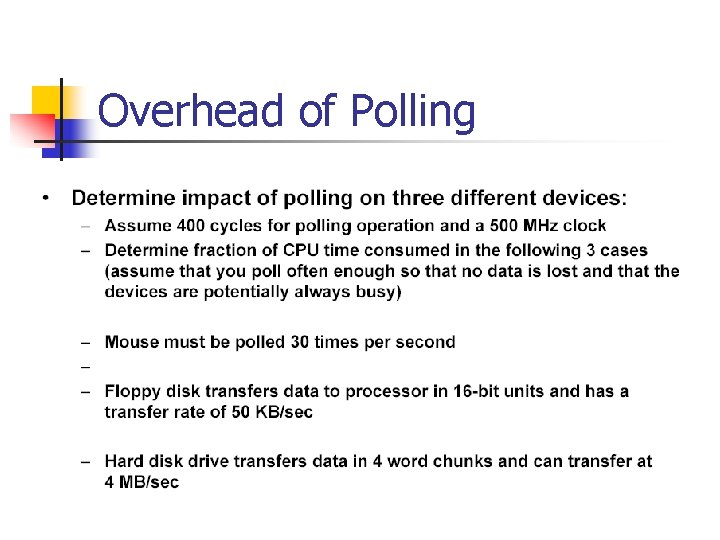

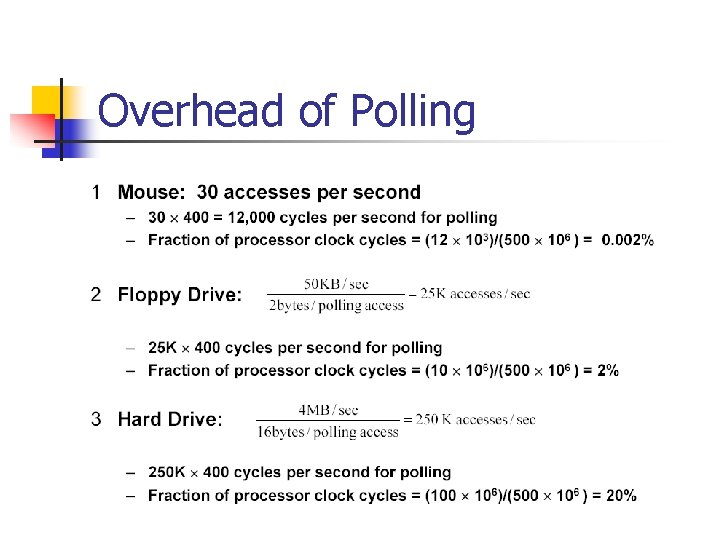

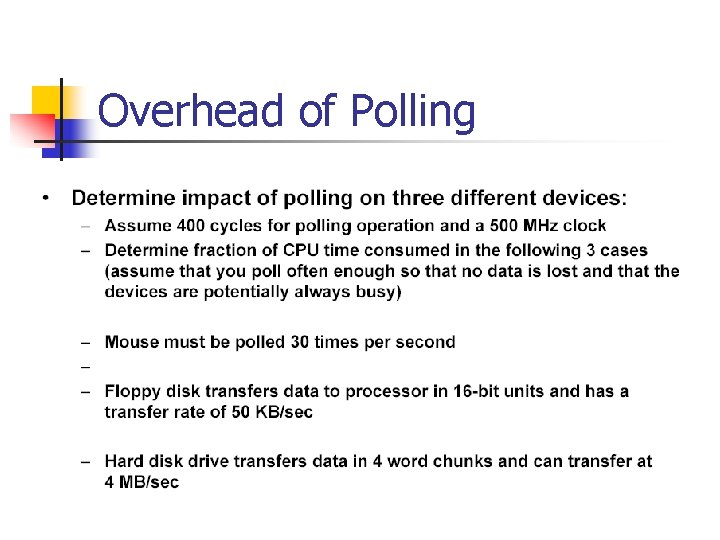

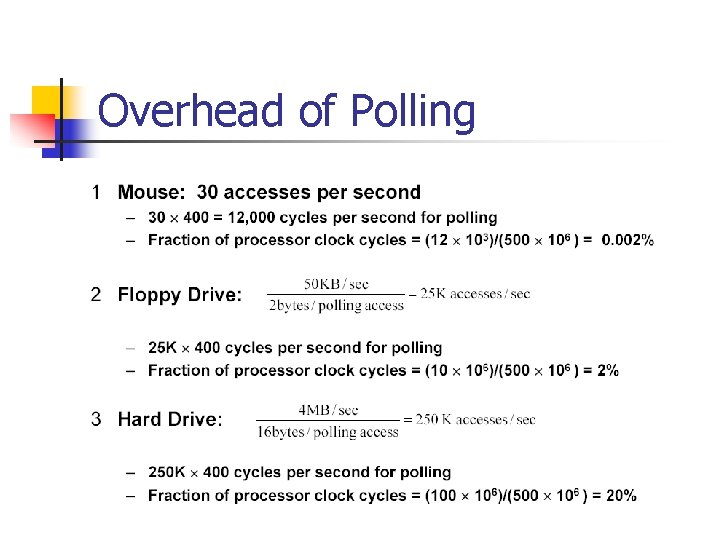

Overhead of Polling

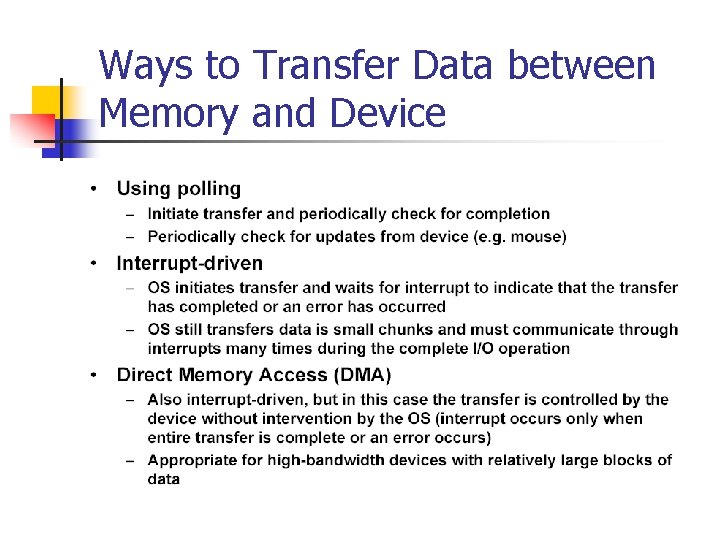

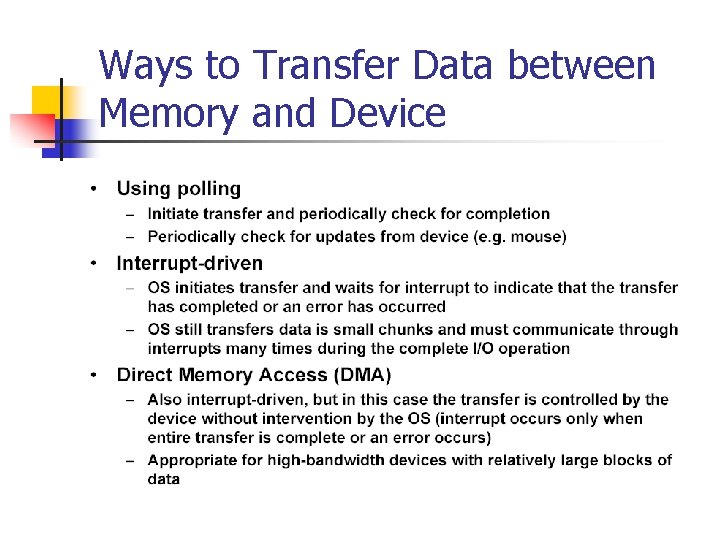

Ways to Transfer Data between Memory and Device