Interconnection Networks ECE 6101 Yalamanchili Spring 2005 Overview

- Slides: 67

Interconnection Networks ECE 6101: Yalamanchili Spring 2005

Overview · Physical Layer and Message Switching · Network Topologies · Metrics · Deadlock & Livelock · Routing Layer · The Messaging Layer ECE 6101: Yalamanchili Spring 2004 2

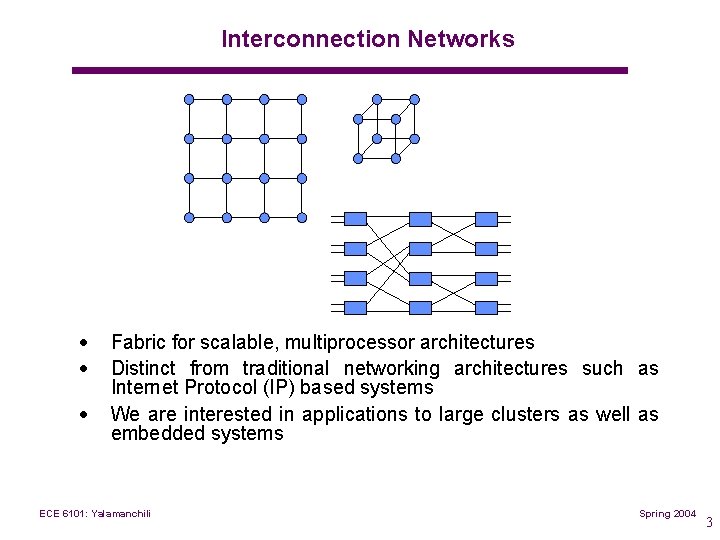

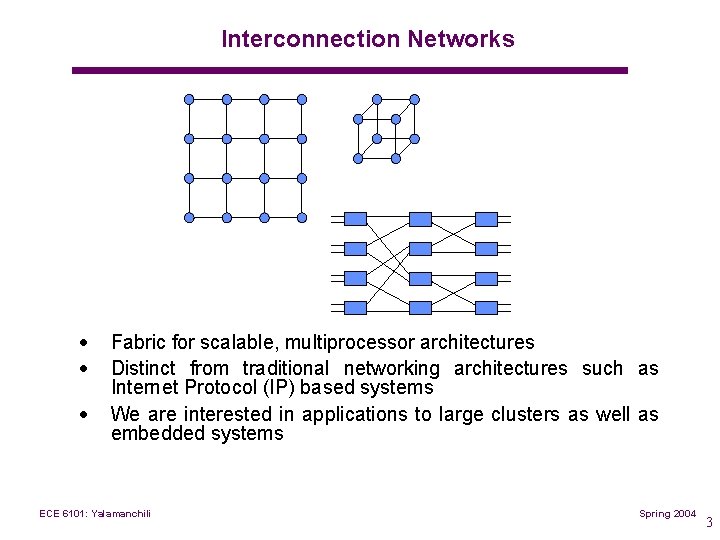

Interconnection Networks · · · Fabric for scalable, multiprocessor architectures Distinct from traditional networking architectures such as Internet Protocol (IP) based systems We are interested in applications to large clusters as well as embedded systems ECE 6101: Yalamanchili Spring 2004 3

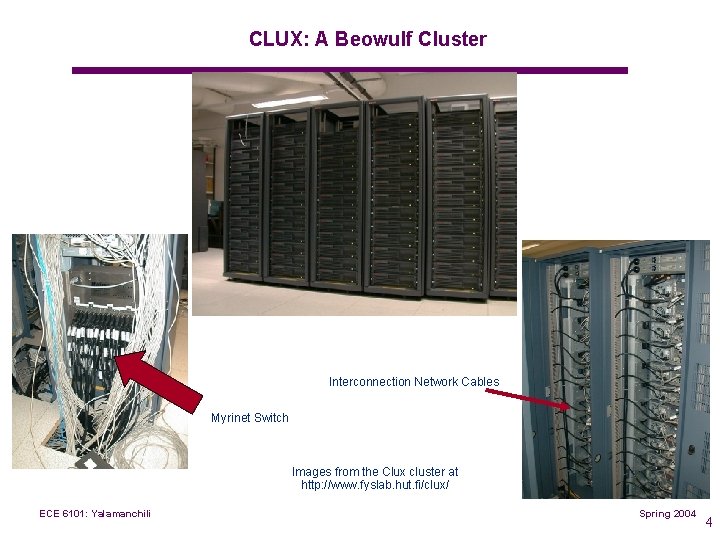

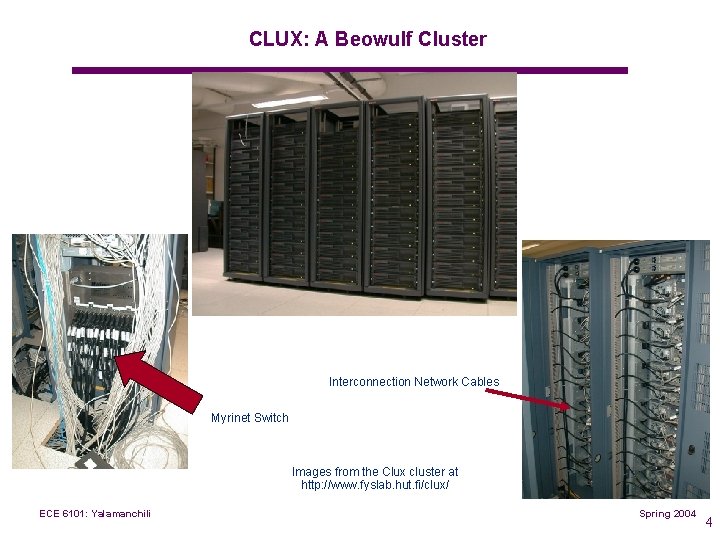

CLUX: A Beowulf Cluster Interconnection Network Cables Myrinet Switch Images from the Clux cluster at http: //www. fyslab. hut. fi/clux/ ECE 6101: Yalamanchili Spring 2004 4

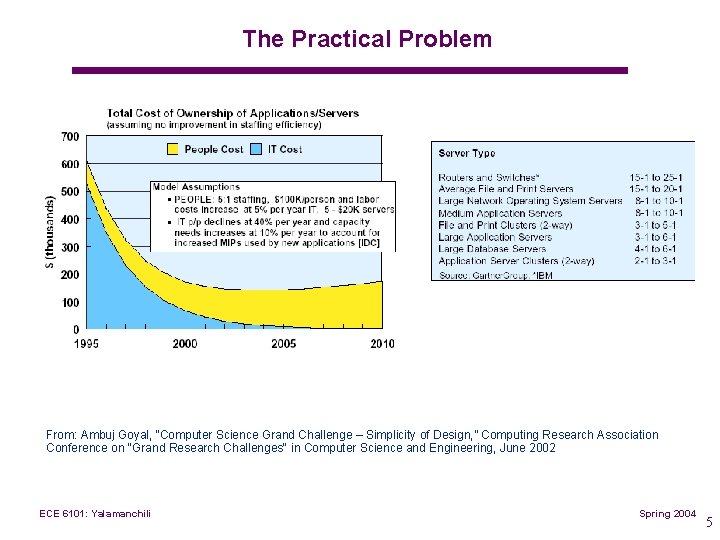

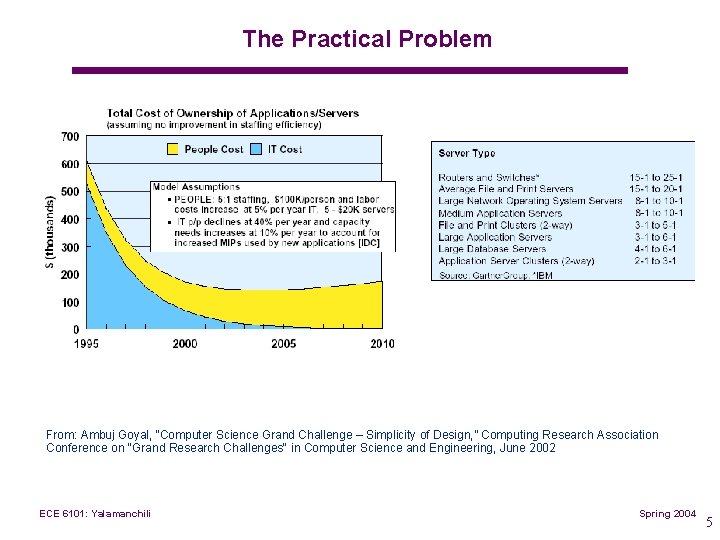

The Practical Problem From: Ambuj Goyal, “Computer Science Grand Challenge – Simplicity of Design, ” Computing Research Association Conference on "Grand Research Challenges" in Computer Science and Engineering, June 2002 ECE 6101: Yalamanchili Spring 2004 5

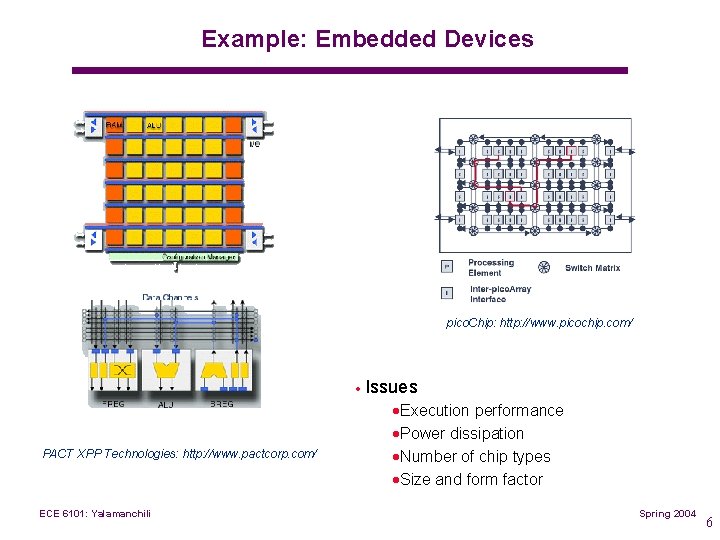

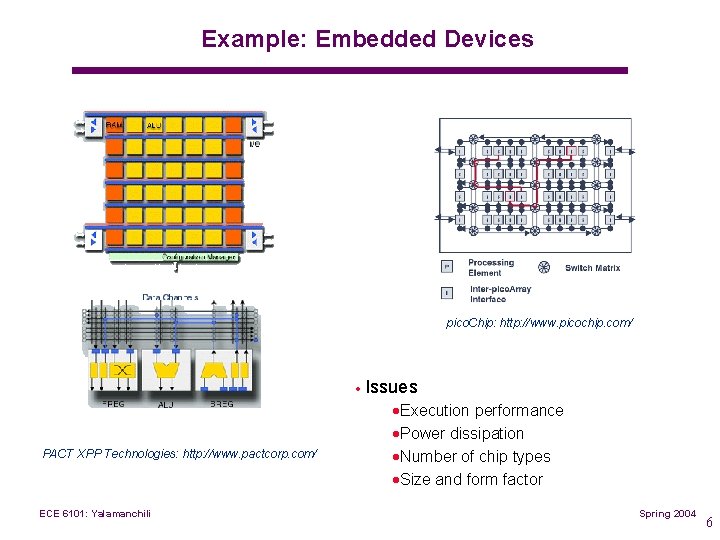

Example: Embedded Devices pico. Chip: http: //www. picochip. com/ · Issues PACT XPP Technologies: http: //www. pactcorp. com/ ECE 6101: Yalamanchili ·Execution performance ·Power dissipation ·Number of chip types ·Size and form factor Spring 2004 6

Physical Layer and Message Switching ECE 6101: Yalamanchili Spring 2005

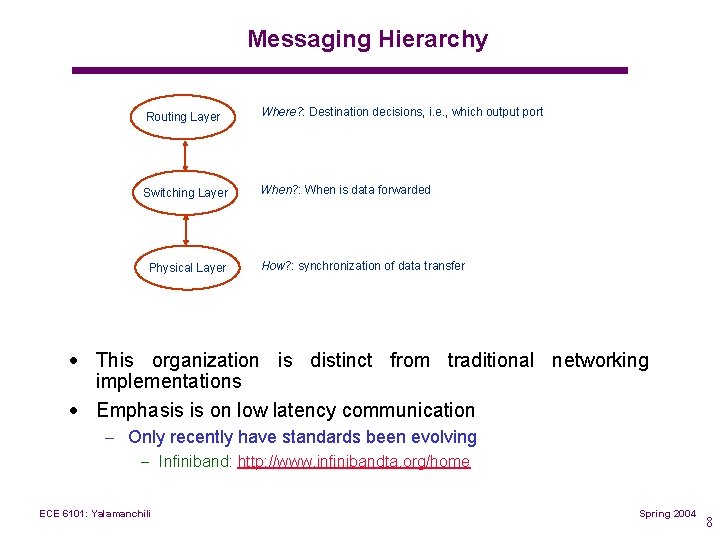

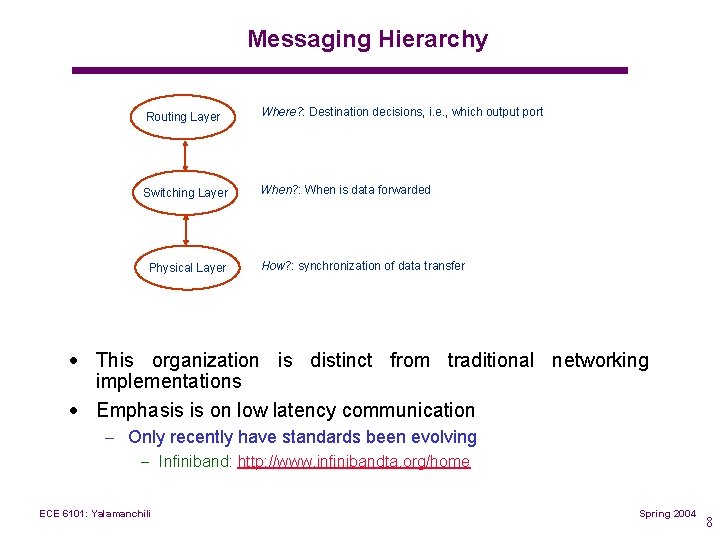

Messaging Hierarchy Routing Layer Where? : Destination decisions, i. e. , which output port Switching Layer When? : When is data forwarded Physical Layer How? : synchronization of data transfer · This organization is distinct from traditional networking implementations · Emphasis is on low latency communication – Only recently have standards been evolving – Infiniband: http: //www. infinibandta. org/home ECE 6101: Yalamanchili Spring 2004 8

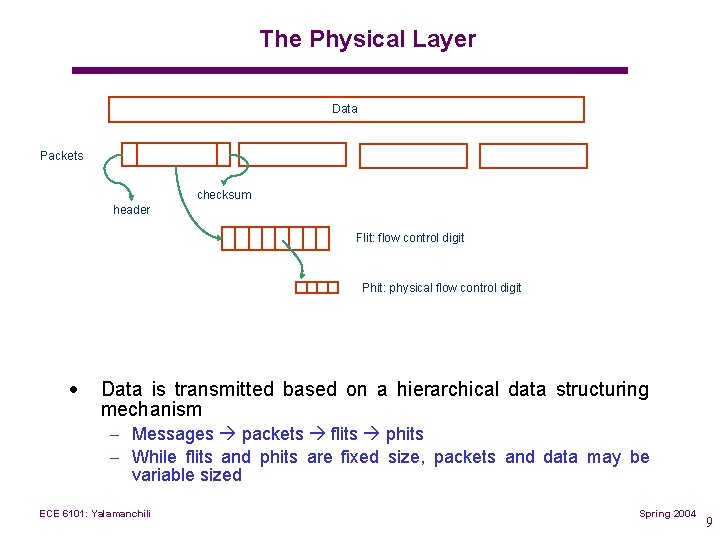

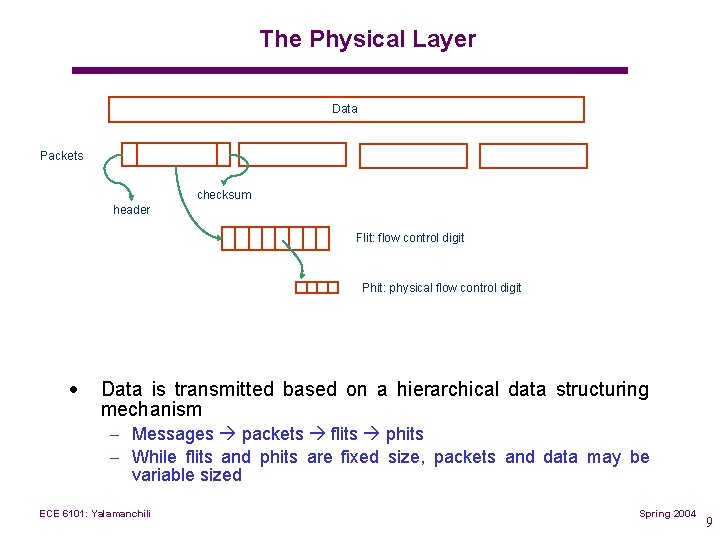

The Physical Layer Data Packets checksum header Flit: flow control digit Phit: physical flow control digit · Data is transmitted based on a hierarchical data structuring mechanism – Messages packets flits phits – While flits and phits are fixed size, packets and data may be variable sized ECE 6101: Yalamanchili Spring 2004 9

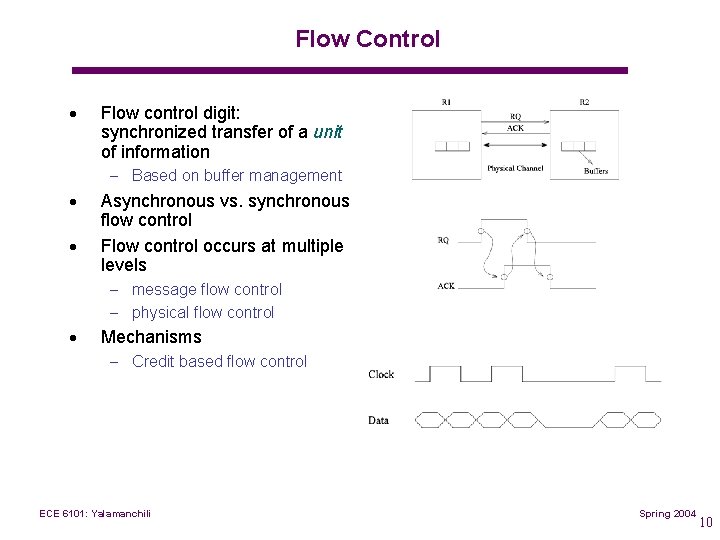

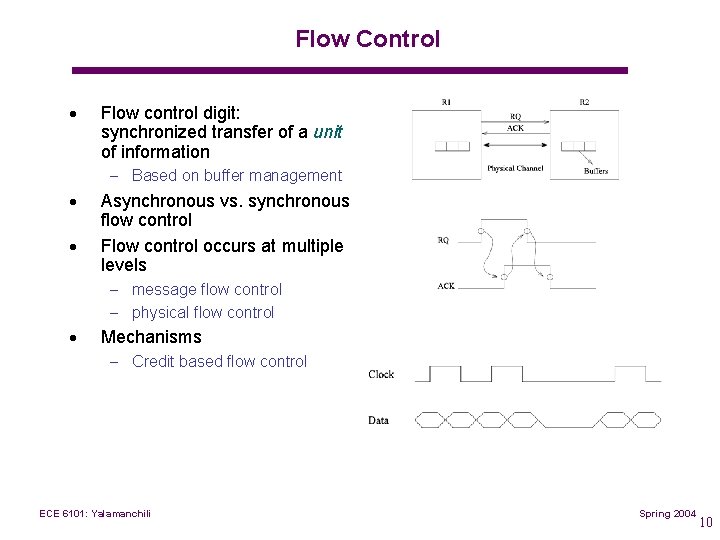

Flow Control · Flow control digit: synchronized transfer of a unit of information – Based on buffer management · · Asynchronous vs. synchronous flow control Flow control occurs at multiple levels – message flow control – physical flow control · Mechanisms – Credit based flow control ECE 6101: Yalamanchili Spring 2004 10

Switching Layer · Comprised of three sets of techniques – switching techniques – flow control – buffer management · Organization and operation of routers are largely determined by the switching layer · Connection Oriented vs. Connectionless communication ECE 6101: Yalamanchili Spring 2004 11

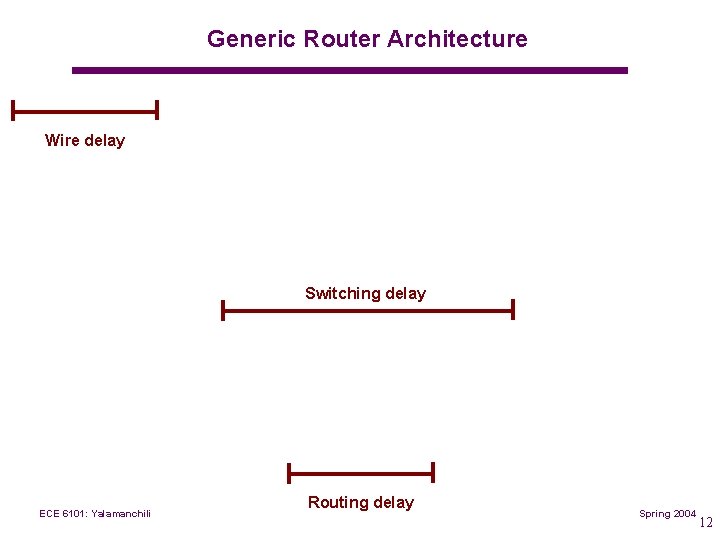

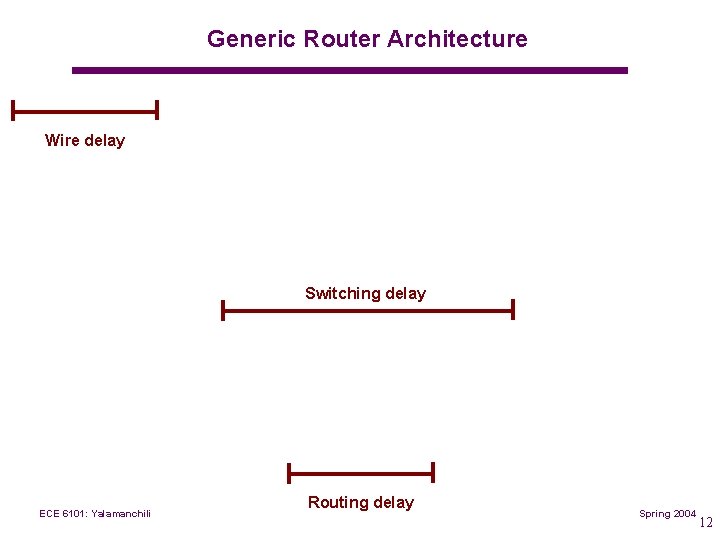

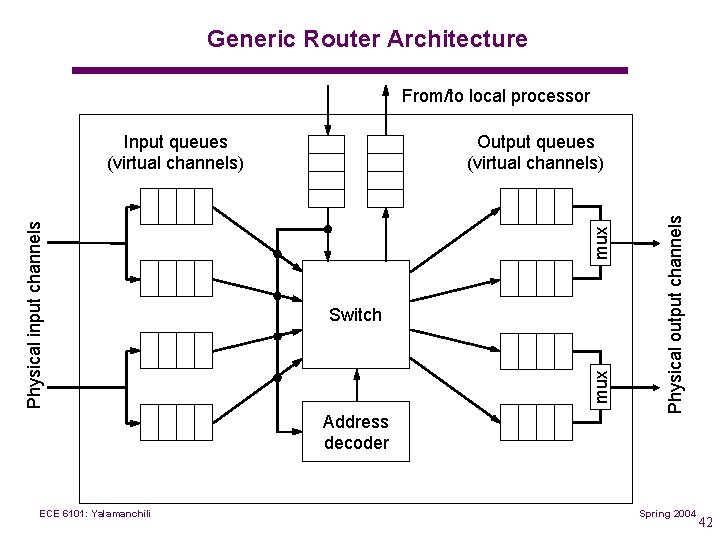

Generic Router Architecture Wire delay Switching delay ECE 6101: Yalamanchili Routing delay Spring 2004 12

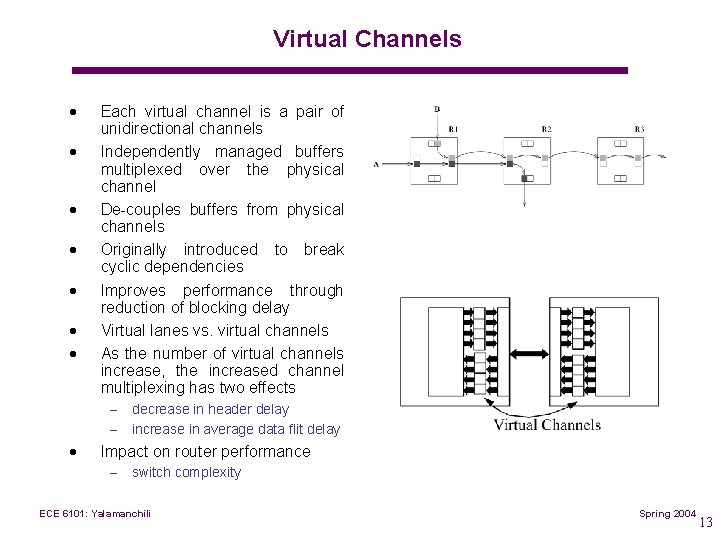

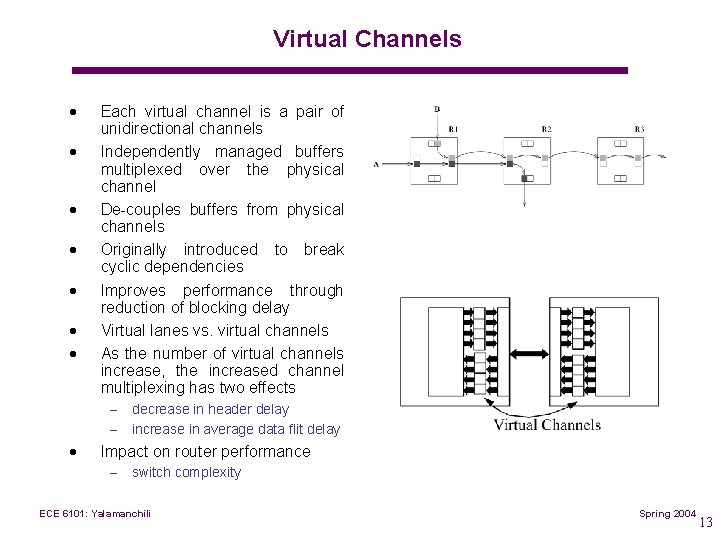

Virtual Channels · · · · Each virtual channel is a pair of unidirectional channels Independently managed buffers multiplexed over the physical channel De couples buffers from physical channels Originally introduced to break cyclic dependencies Improves performance through reduction of blocking delay Virtual lanes vs. virtual channels As the number of virtual channels increase, the increased channel multiplexing has two effects – – · decrease in header delay increase in average data flit delay Impact on router performance – switch complexity ECE 6101: Yalamanchili Spring 2004 13

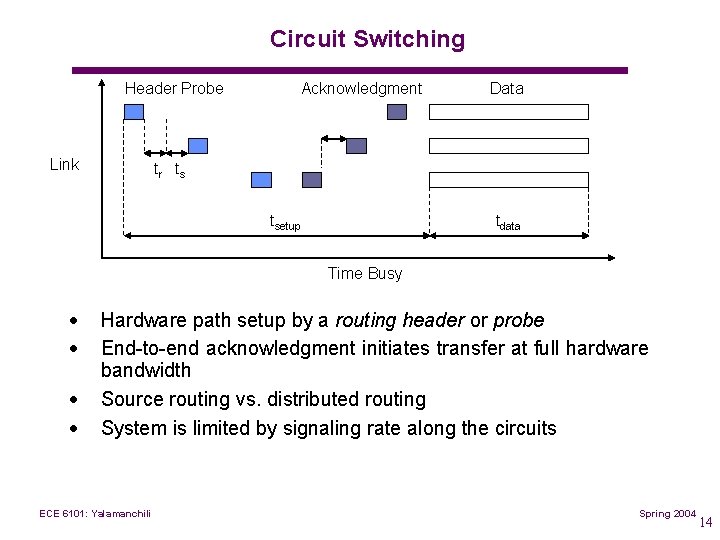

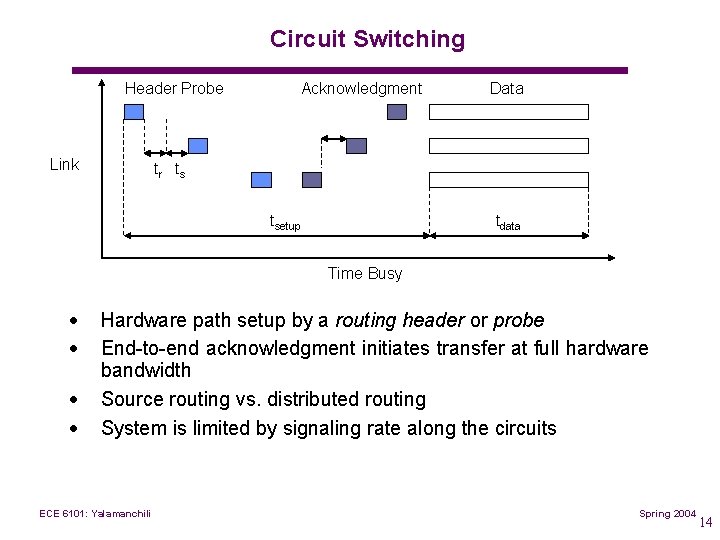

Circuit Switching Header Probe Link Acknowledgment Data tr ts tsetup tdata Time Busy · · Hardware path setup by a routing header or probe End to end acknowledgment initiates transfer at full hardware bandwidth Source routing vs. distributed routing System is limited by signaling rate along the circuits ECE 6101: Yalamanchili Spring 2004 14

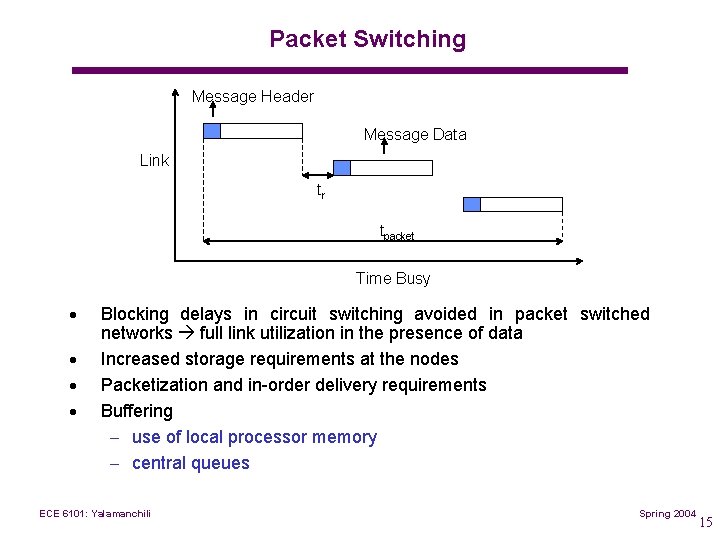

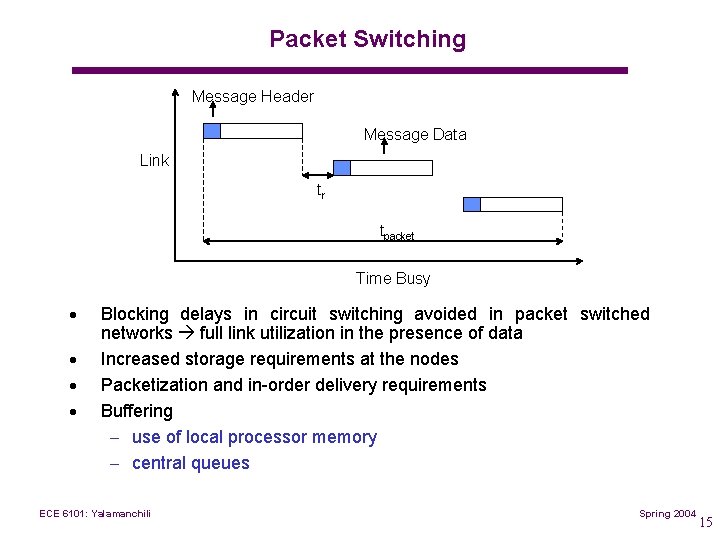

Packet Switching Message Header Message Data Link tr tpacket Time Busy · · Blocking delays in circuit switching avoided in packet switched networks full link utilization in the presence of data Increased storage requirements at the nodes Packetization and in order delivery requirements Buffering – use of local processor memory – central queues ECE 6101: Yalamanchili Spring 2004 15

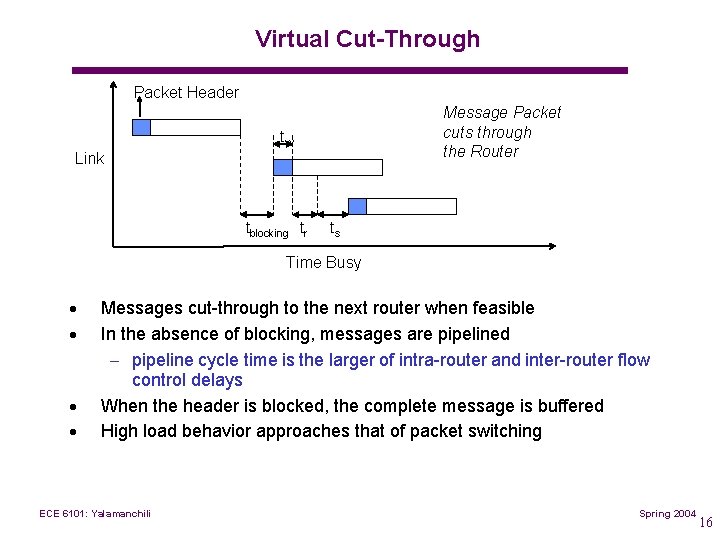

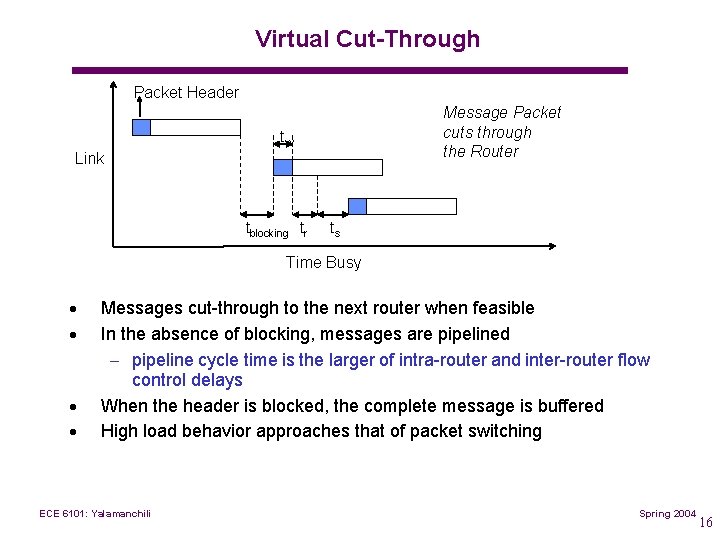

Virtual Cut-Through Packet Header Message Packet cuts through the Router tw Link tblocking tr ts Time Busy · · Messages cut through to the next router when feasible In the absence of blocking, messages are pipelined – pipeline cycle time is the larger of intra router and inter router flow control delays When the header is blocked, the complete message is buffered High load behavior approaches that of packet switching ECE 6101: Yalamanchili Spring 2004 16

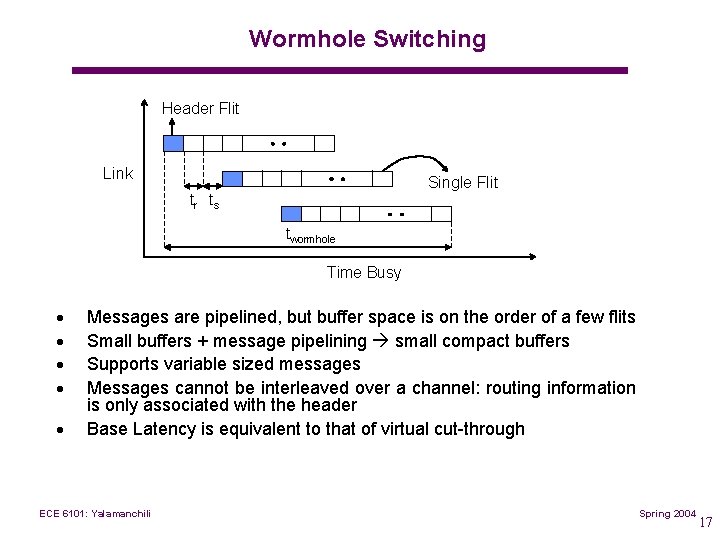

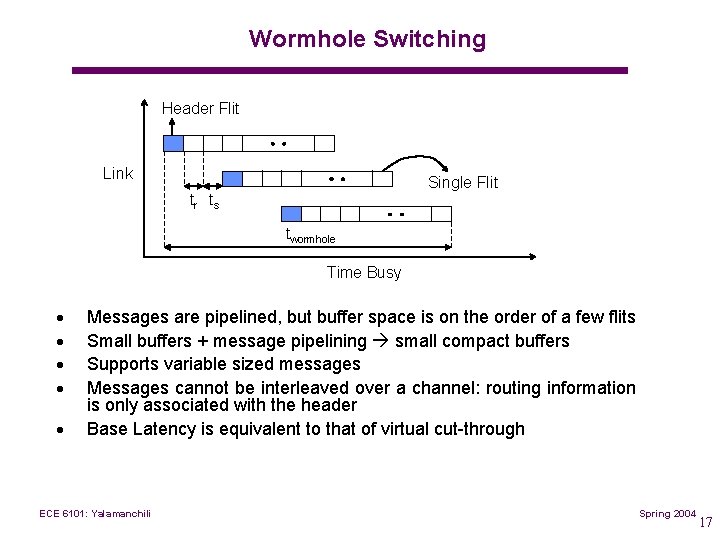

Wormhole Switching Header Flit Link Single Flit tr ts twormhole Time Busy · · · Messages are pipelined, but buffer space is on the order of a few flits Small buffers + message pipelining small compact buffers Supports variable sized messages Messages cannot be interleaved over a channel: routing information is only associated with the header Base Latency is equivalent to that of virtual cut through ECE 6101: Yalamanchili Spring 2004 17

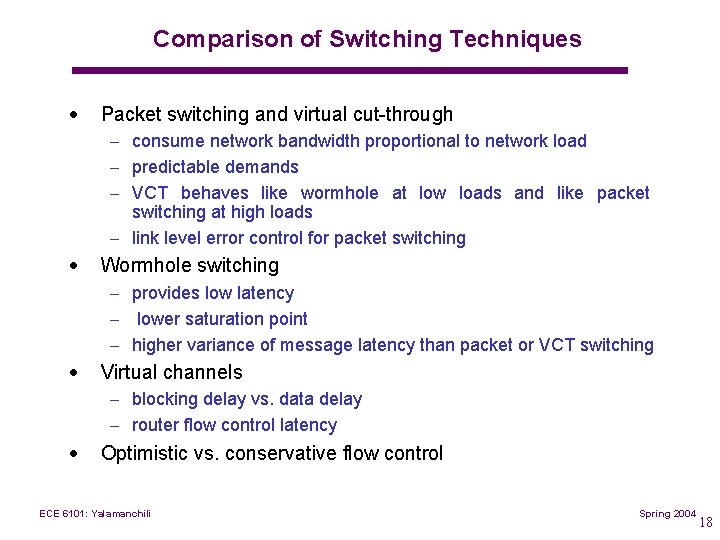

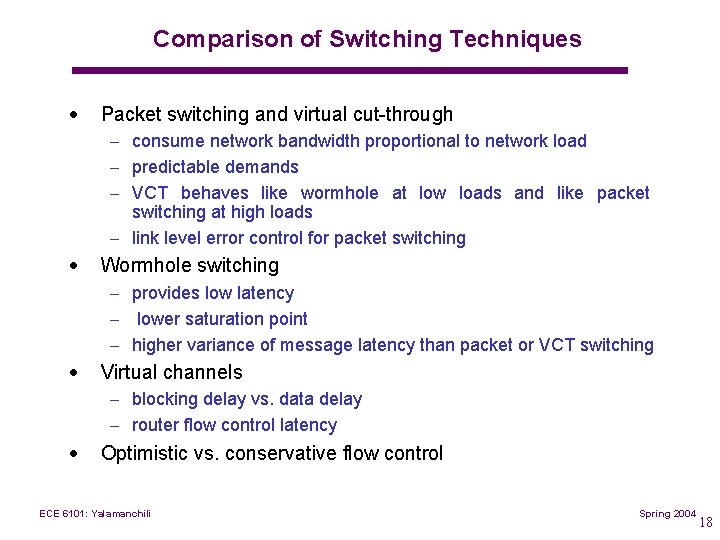

Comparison of Switching Techniques · Packet switching and virtual cut through – consume network bandwidth proportional to network load – predictable demands – VCT behaves like wormhole at low loads and like packet switching at high loads – link level error control for packet switching · Wormhole switching – provides low latency – lower saturation point – higher variance of message latency than packet or VCT switching · Virtual channels – blocking delay vs. data delay – router flow control latency · Optimistic vs. conservative flow control ECE 6101: Yalamanchili Spring 2004 18

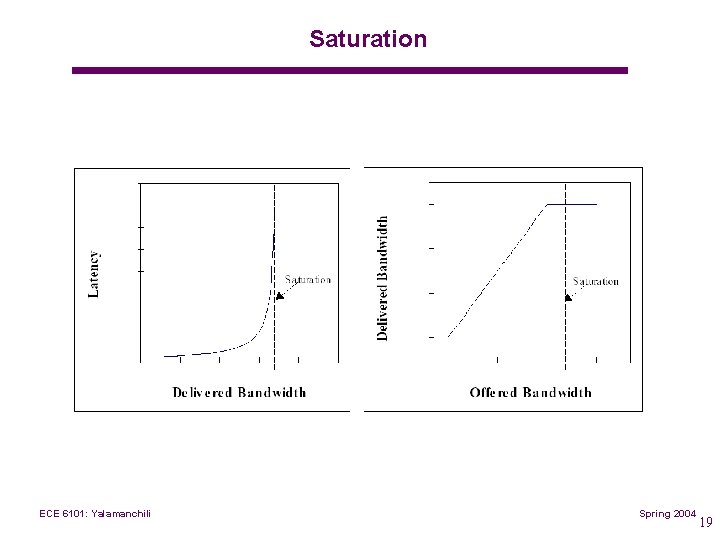

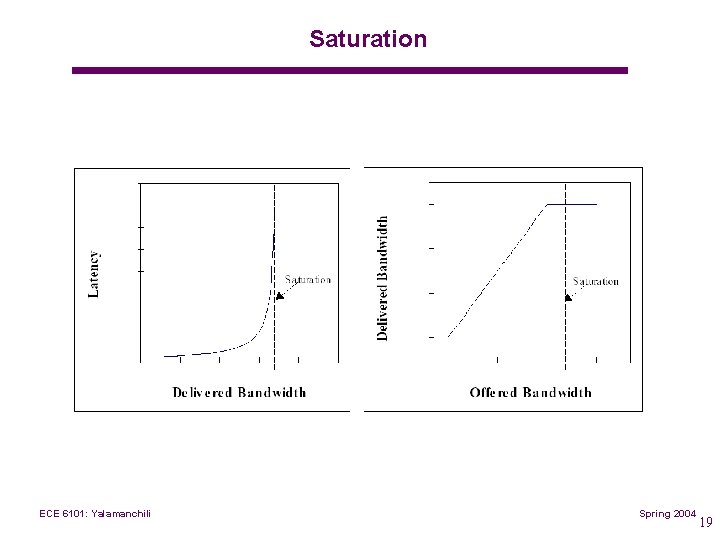

Saturation ECE 6101: Yalamanchili Spring 2004 19

Network Topologies ECE 6101: Yalamanchili Spring 2005

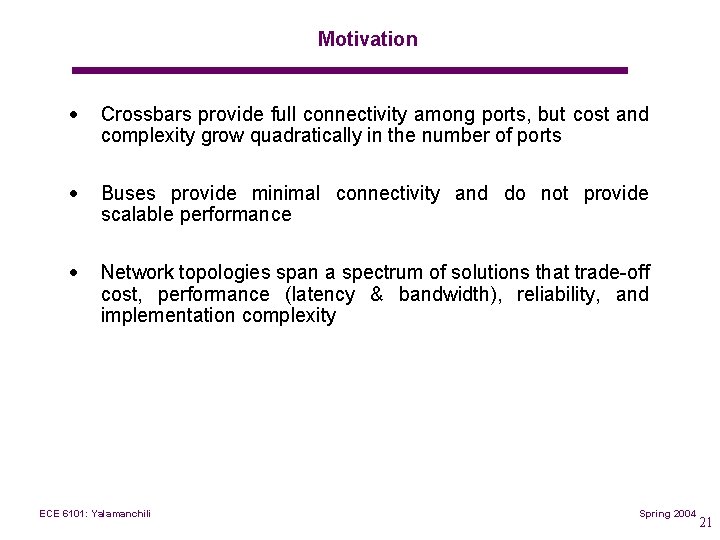

Motivation · Crossbars provide full connectivity among ports, but cost and complexity grow quadratically in the number of ports · Buses provide minimal connectivity and do not provide scalable performance · Network topologies span a spectrum of solutions that trade off cost, performance (latency & bandwidth), reliability, and implementation complexity ECE 6101: Yalamanchili Spring 2004 21

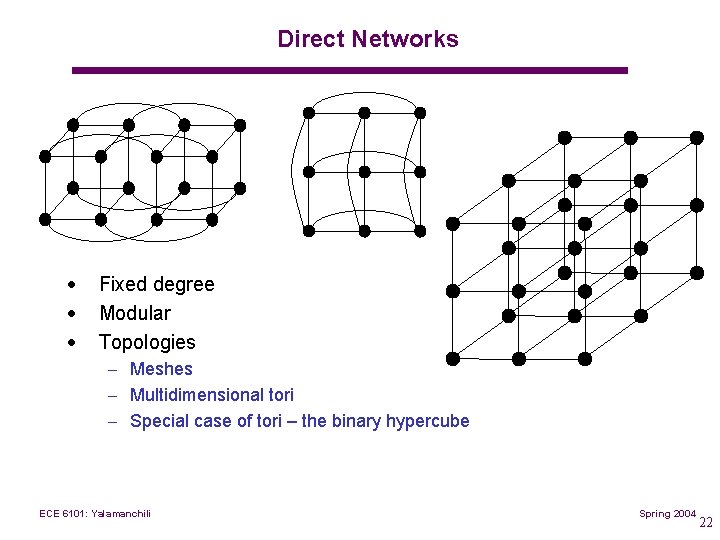

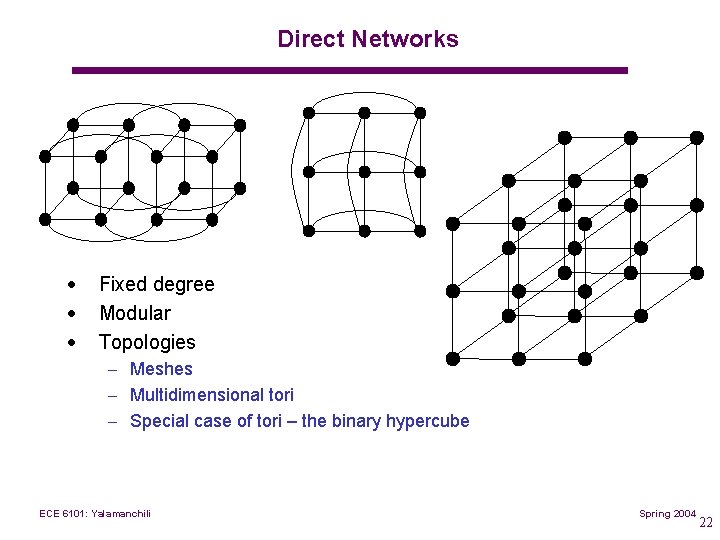

Direct Networks · · · Fixed degree Modular Topologies – Meshes – Multidimensional tori – Special case of tori – the binary hypercube ECE 6101: Yalamanchili Spring 2004 22

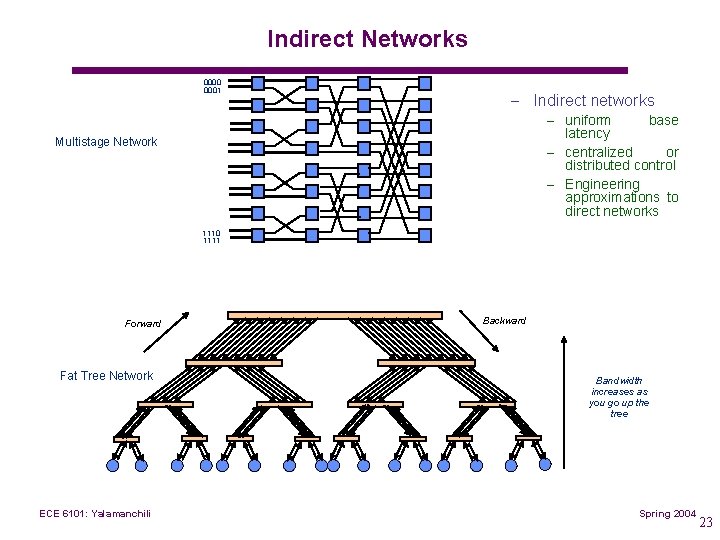

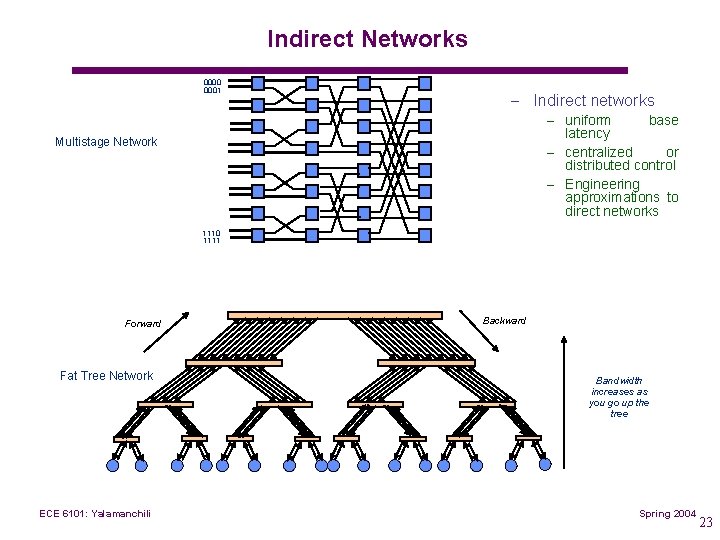

Indirect Networks 0000 0001 – Indirect networks – uniform base latency – centralized or distributed control – Engineering approximations to direct networks Multistage Network 1110 1111 Forward Fat Tree Network ECE 6101: Yalamanchili Backward Bandwidth increases as you go up the tree Spring 2004 23

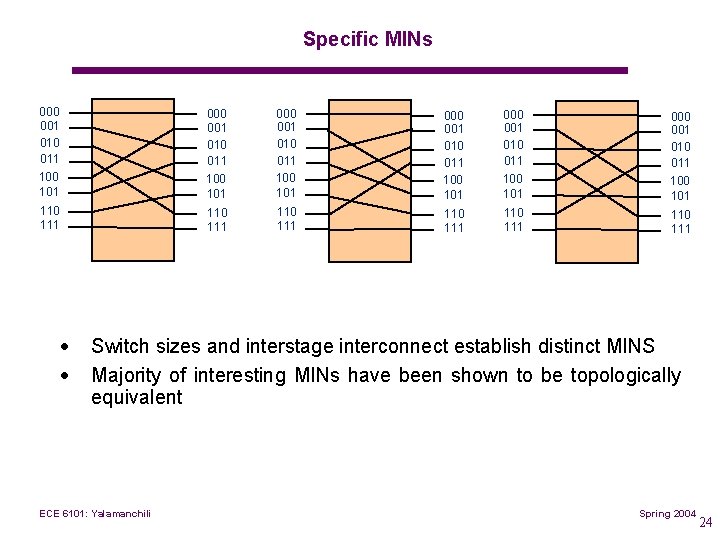

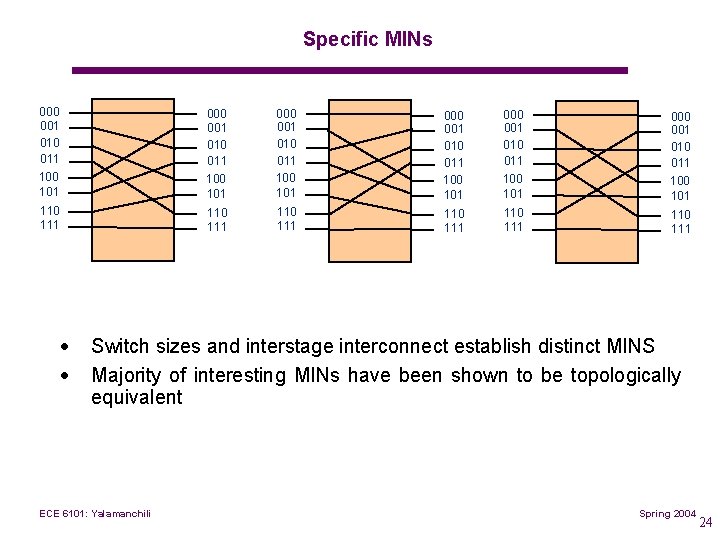

Specific MINs 000 001 010 011 100 101 000 001 010 011 100 101 110 111 110 111 · · Switch sizes and interstage interconnect establish distinct MINS Majority of interesting MINs have been shown to be topologically equivalent ECE 6101: Yalamanchili Spring 2004 24

Metrics ECE 6101: Yalamanchili Spring 2005

Evaluation Metrics · Latency – Message transit time – Determined by switching technique and traffic patterns · Node degree (channel width) – Number of input/output channels – This metric is determined by packaging constraints – pin/wiring constraints · Diameter · Path diversity – A measure of reliability ECE 6101: Yalamanchili Spring 2004 26

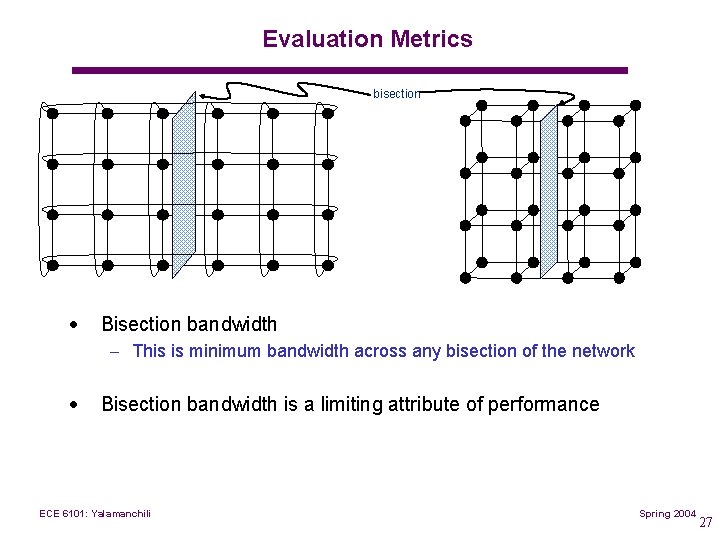

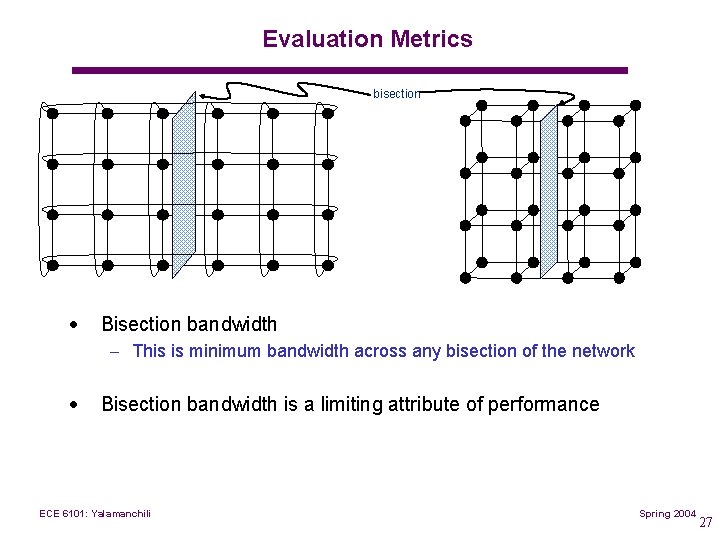

Evaluation Metrics bisection · Bisection bandwidth – This is minimum bandwidth across any bisection of the network · Bisection bandwidth is a limiting attribute of performance ECE 6101: Yalamanchili Spring 2004 27

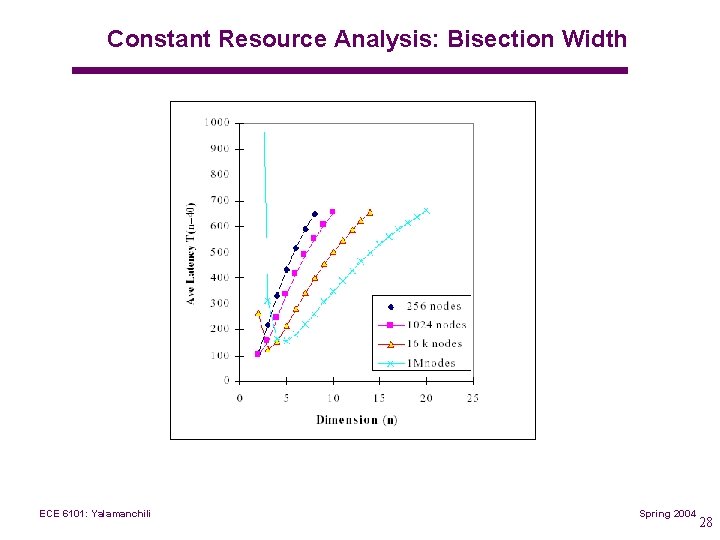

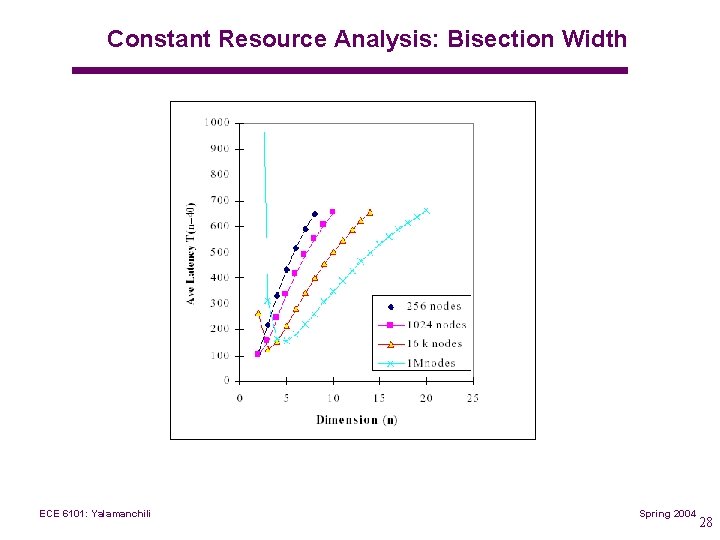

Constant Resource Analysis: Bisection Width ECE 6101: Yalamanchili Spring 2004 28

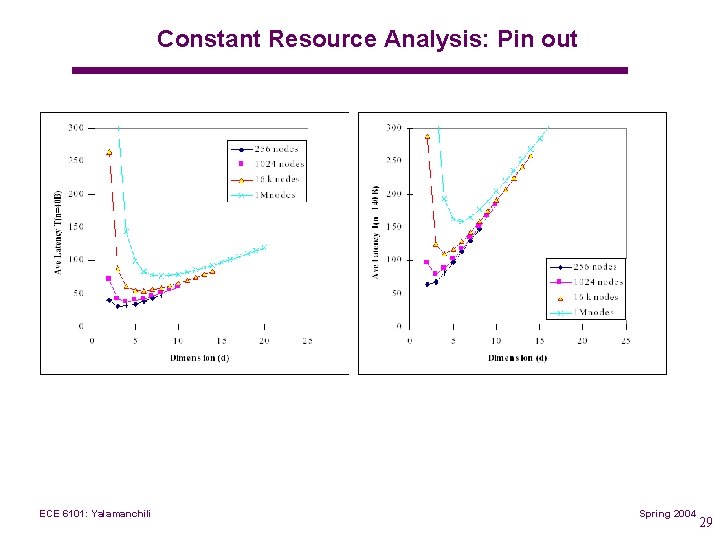

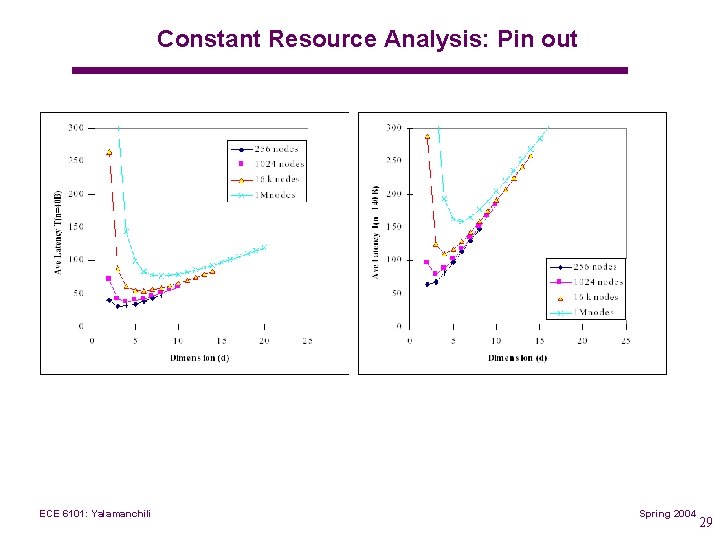

Constant Resource Analysis: Pin out ECE 6101: Yalamanchili Spring 2004 29

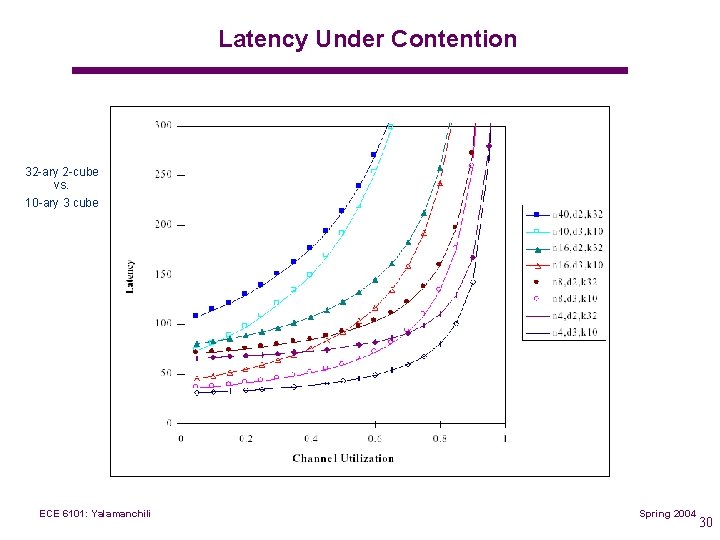

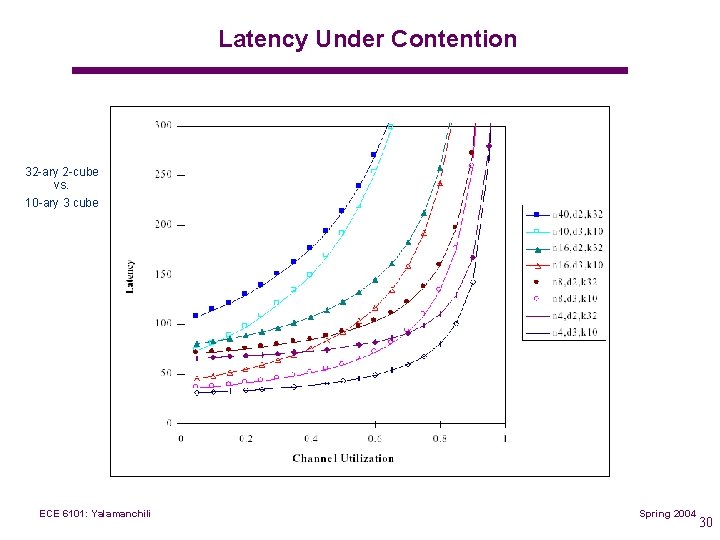

Latency Under Contention 32 ary 2 cube vs. 10 ary 3 cube ECE 6101: Yalamanchili Spring 2004 30

Deadlock and Livelock ECE 6101: Yalamanchili Spring 2005

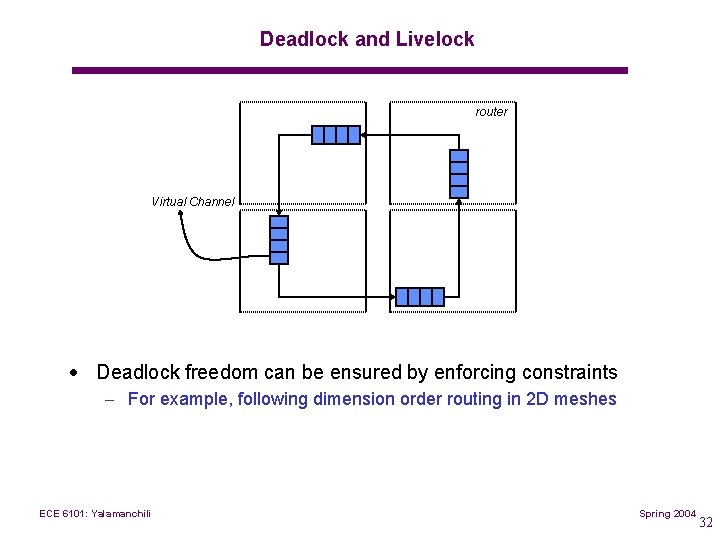

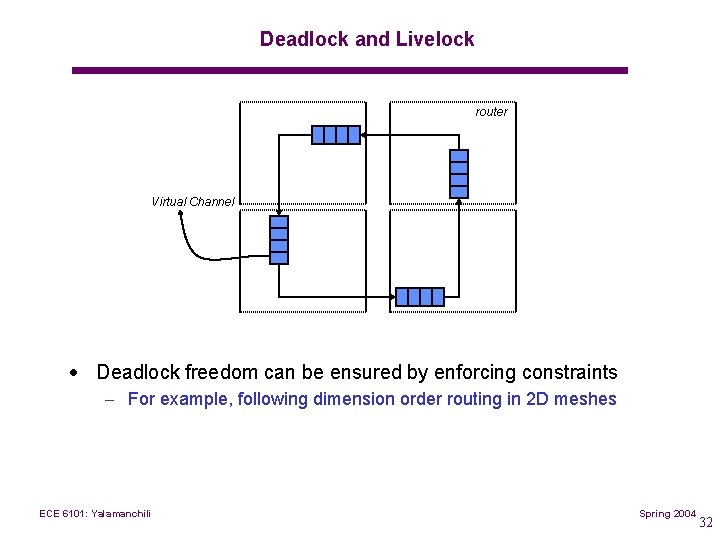

Deadlock and Livelock router Virtual Channel · Deadlock freedom can be ensured by enforcing constraints – For example, following dimension order routing in 2 D meshes ECE 6101: Yalamanchili Spring 2004 32

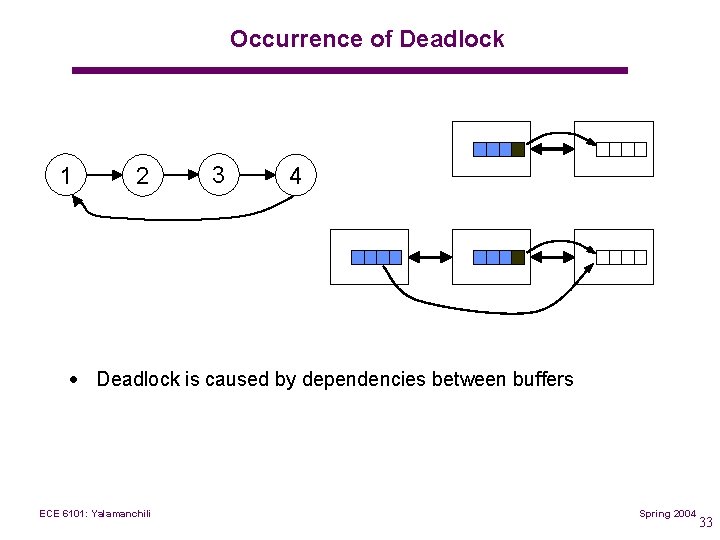

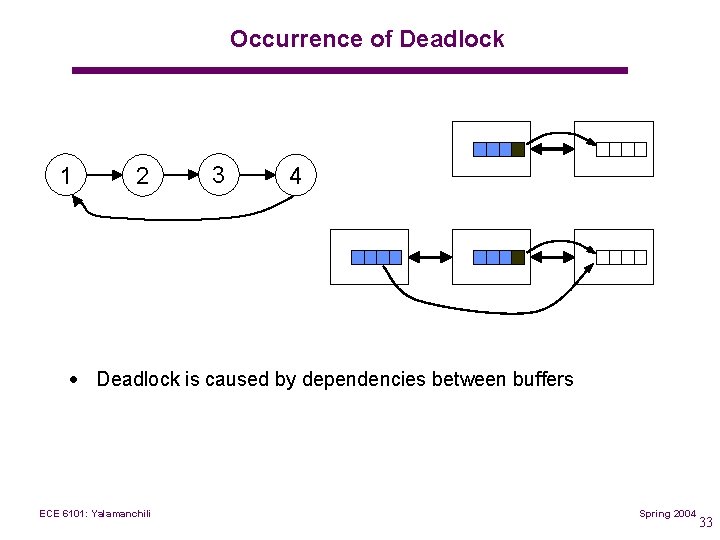

Occurrence of Deadlock 1 2 3 4 · Deadlock is caused by dependencies between buffers ECE 6101: Yalamanchili Spring 2004 33

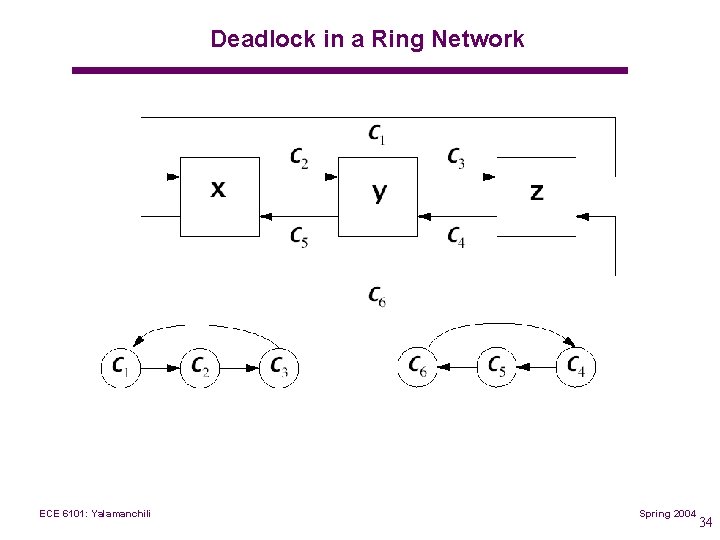

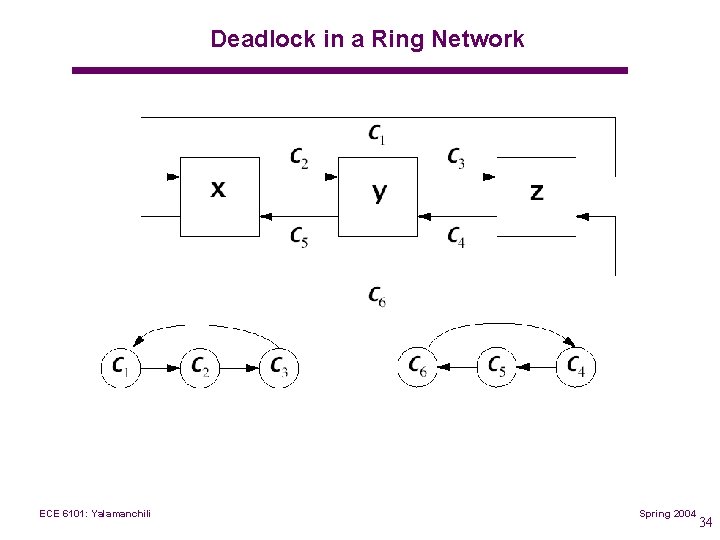

Deadlock in a Ring Network ECE 6101: Yalamanchili Spring 2004 34

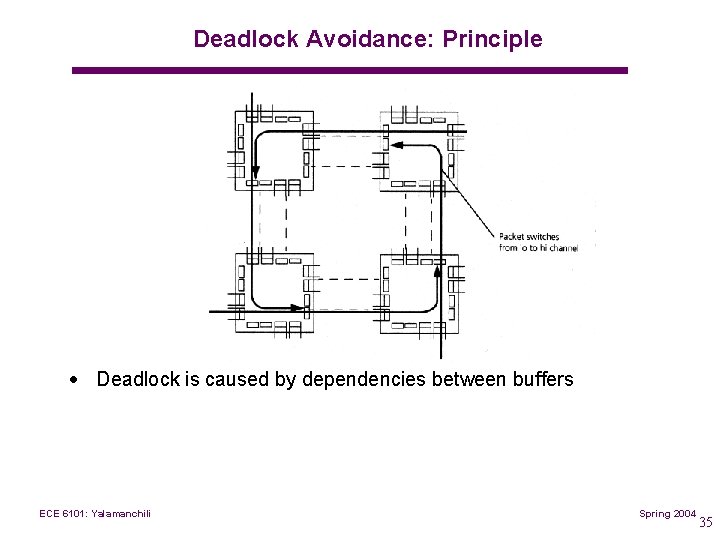

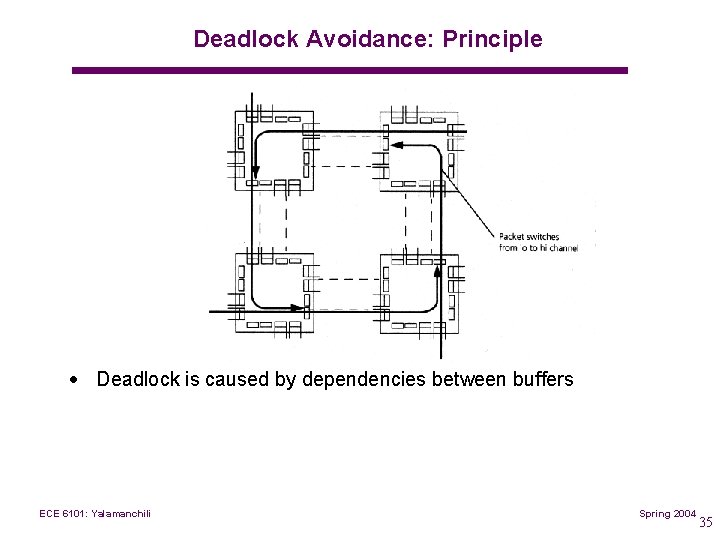

Deadlock Avoidance: Principle · Deadlock is caused by dependencies between buffers ECE 6101: Yalamanchili Spring 2004 35

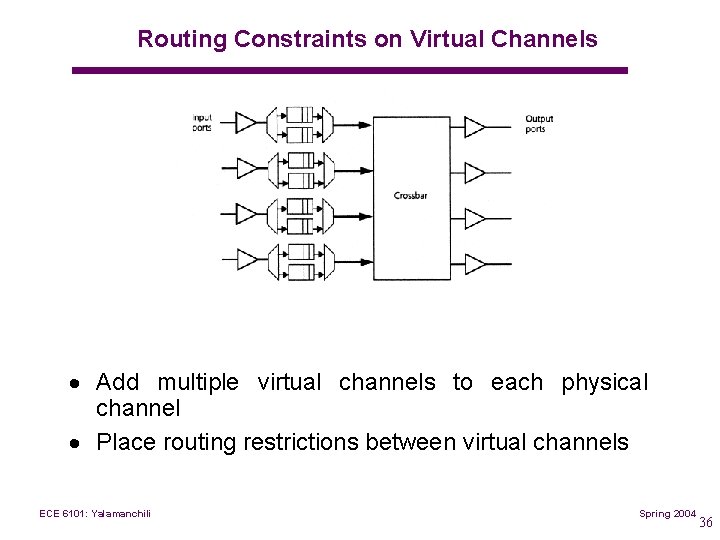

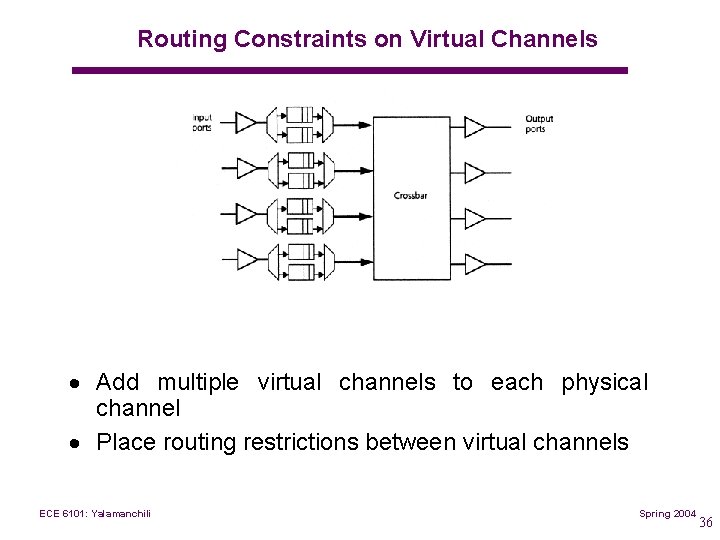

Routing Constraints on Virtual Channels · Add multiple virtual channels to each physical channel · Place routing restrictions between virtual channels ECE 6101: Yalamanchili Spring 2004 36

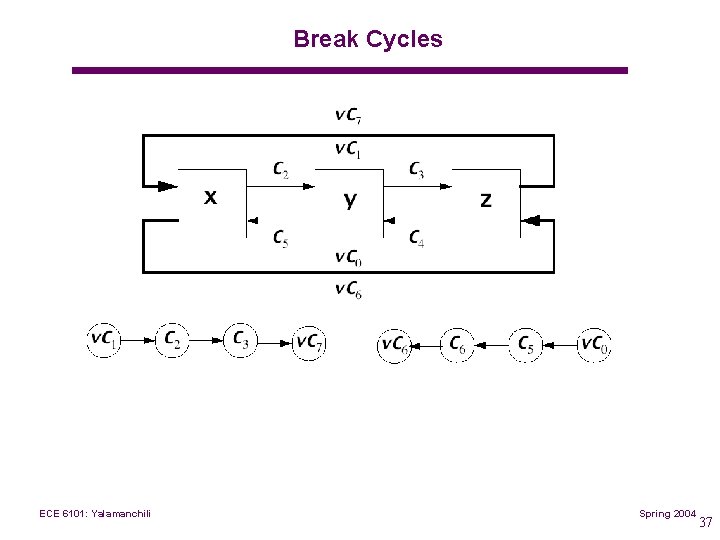

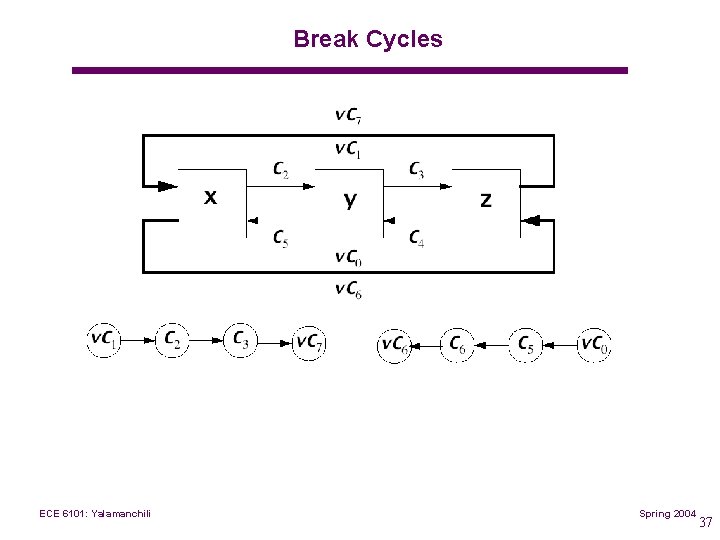

Break Cycles ECE 6101: Yalamanchili Spring 2004 37

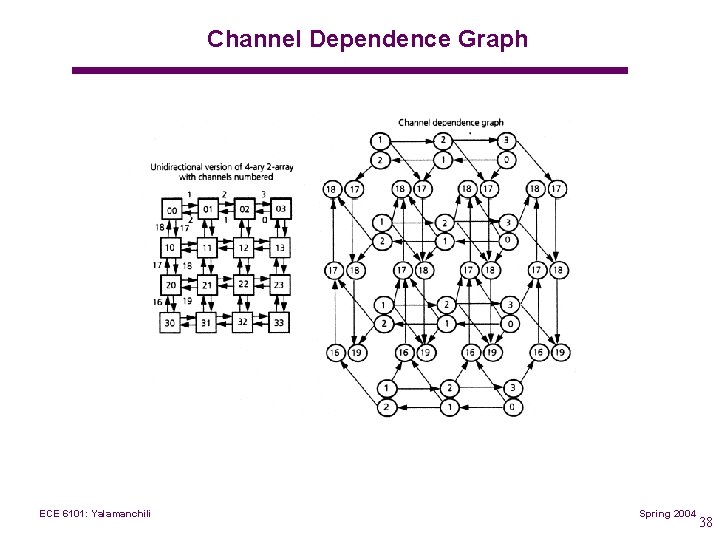

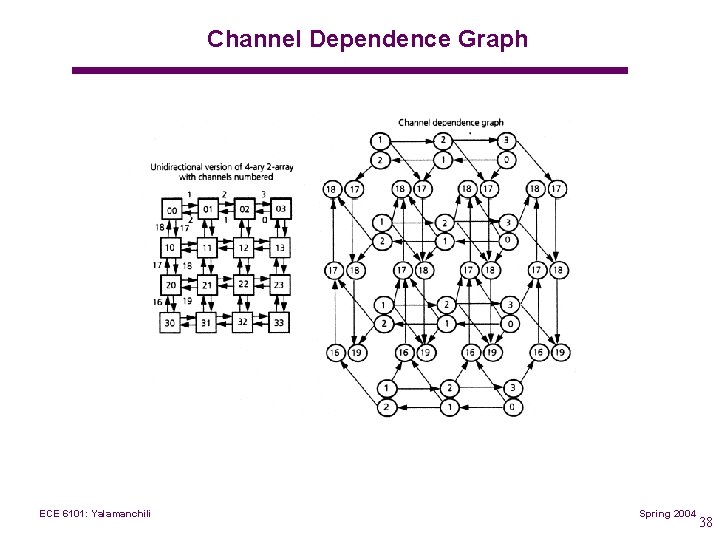

Channel Dependence Graph ECE 6101: Yalamanchili Spring 2004 38

Routing Layer ECE 6101: Yalamanchili Spring 2005

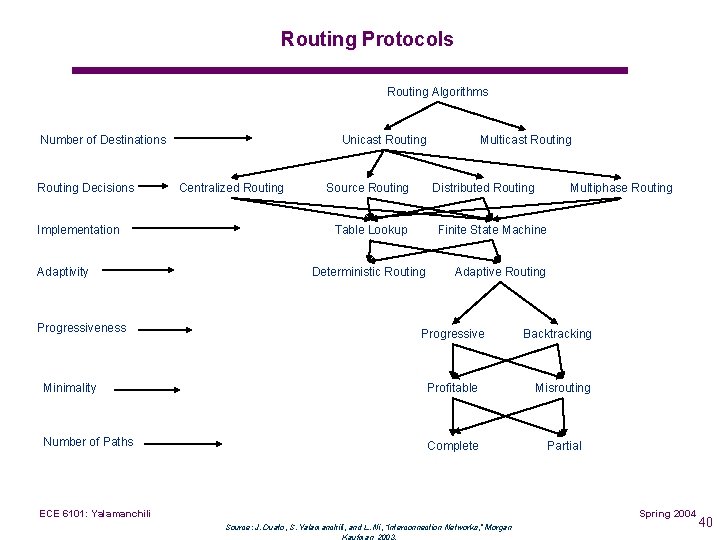

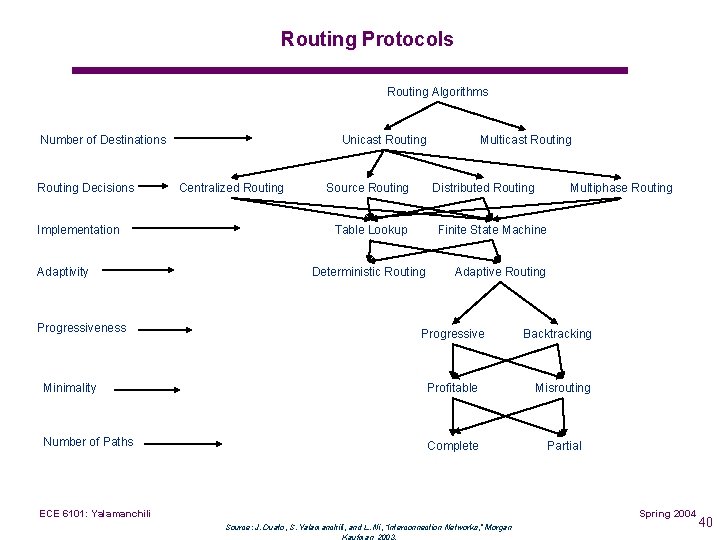

Routing Protocols Routing Algorithms Number of Destinations Routing Decisions Implementation Adaptivity Progressiveness Unicast Routing Centralized Routing Source Routing Multicast Routing Distributed Routing Table Lookup Multiphase Routing Finite State Machine Deterministic Routing Adaptive Routing Progressive Backtracking Minimality Profitable Misrouting Number of Paths Complete Partial ECE 6101: Yalamanchili Spring 2004 Source: J. Duato, S. Yalamanchili, and L. Ni, “Interconnection Networks, ” Morgan Kaufman 2003. 40

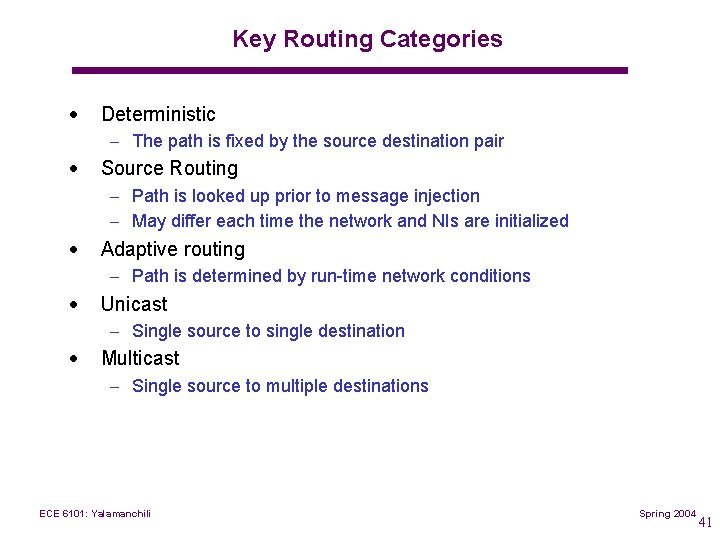

Key Routing Categories · Deterministic – The path is fixed by the source destination pair · Source Routing – Path is looked up prior to message injection – May differ each time the network and NIs are initialized · Adaptive routing – Path is determined by run time network conditions · Unicast – Single source to single destination · Multicast – Single source to multiple destinations ECE 6101: Yalamanchili Spring 2004 41

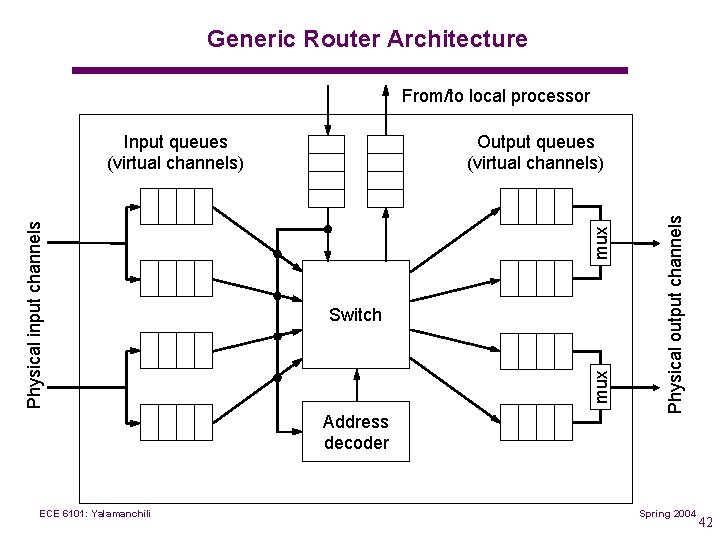

Generic Router Architecture From/to local processor Switch Address decoder ECE 6101: Yalamanchili Physical output channels mux Output queues (virtual channels) mux Physical input channels Input queues (virtual channels) Spring 2004 42

Software Layer ECE 6101: Yalamanchili Spring 2005

The Message Layer · Message layer background – Cluster computers – Myrinet SAN – Design properties · End to End communication path – Injection – Network transmission – Ejection · Overall performance ECE 6101: Yalamanchili Spring 2004 44

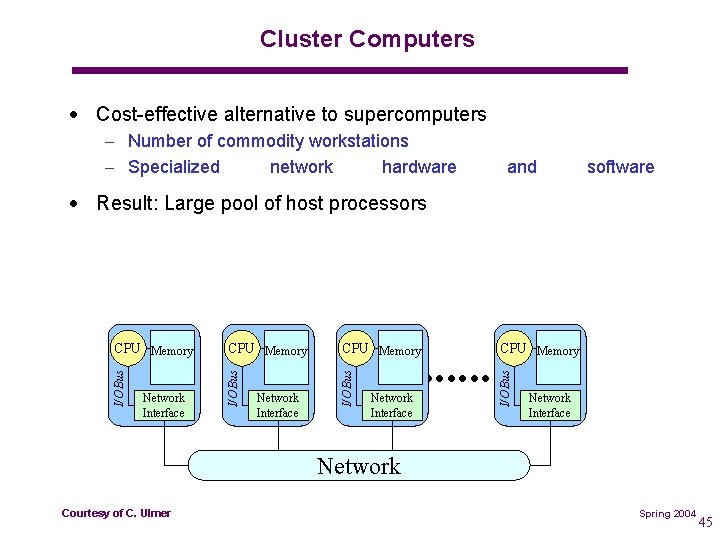

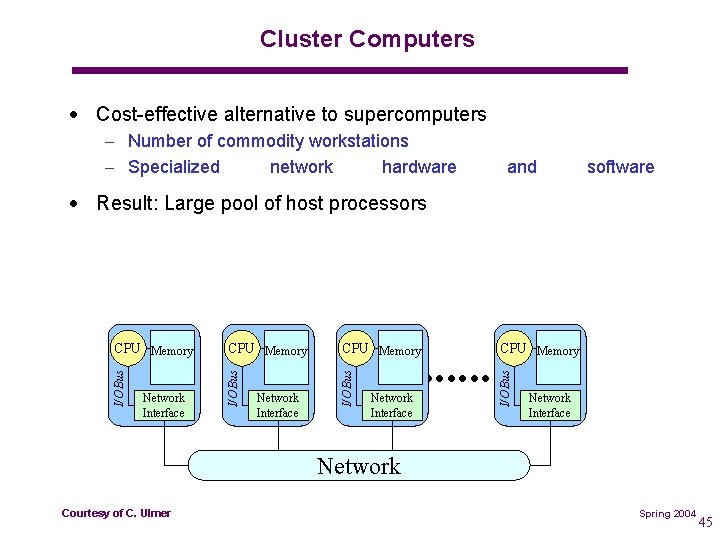

Cluster Computers · Cost effective alternative to supercomputers – Number of commodity workstations – Specialized network hardware and software CPU Memory I/O Bus · Result: Large pool of host processors Network Interface Network of C. Ulmer ECE Courtesy 6101: Yalamanchili Spring 2004 45

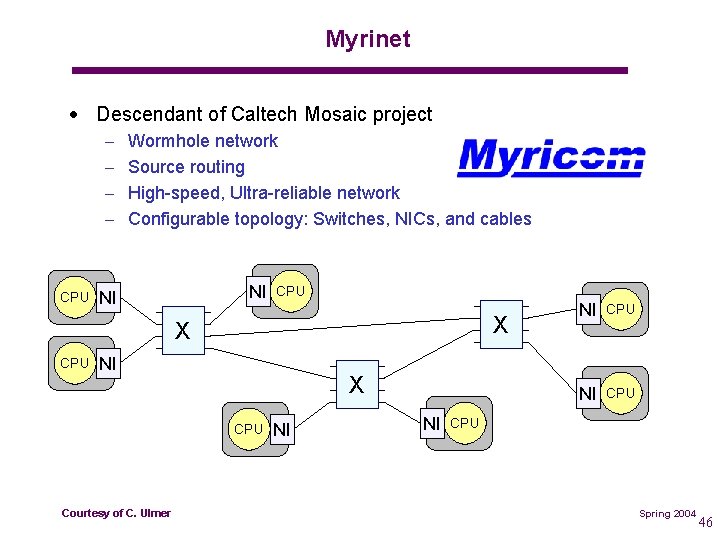

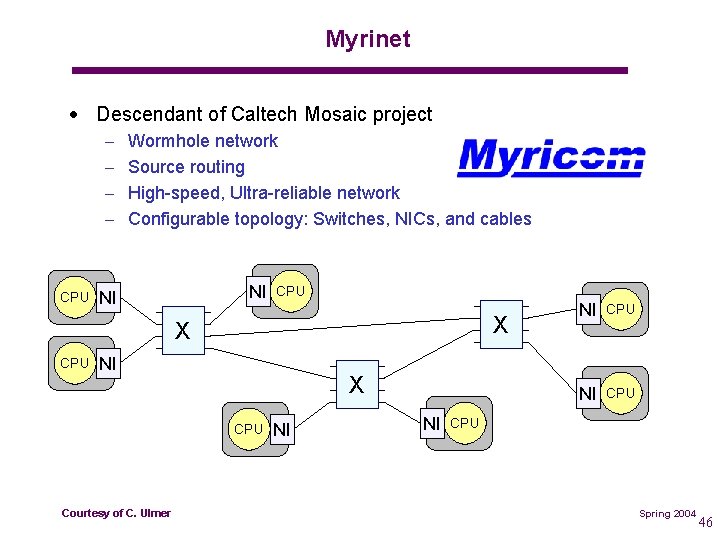

Myrinet · Descendant of Caltech Mosaic project – – CPU Wormhole network Source routing High speed, Ultra reliable network Configurable topology: Switches, NICs, and cables NI NI CPU X X CPU NI X CPU of C. Ulmer ECE Courtesy 6101: Yalamanchili NI NI NI CPU CPU Spring 2004 46

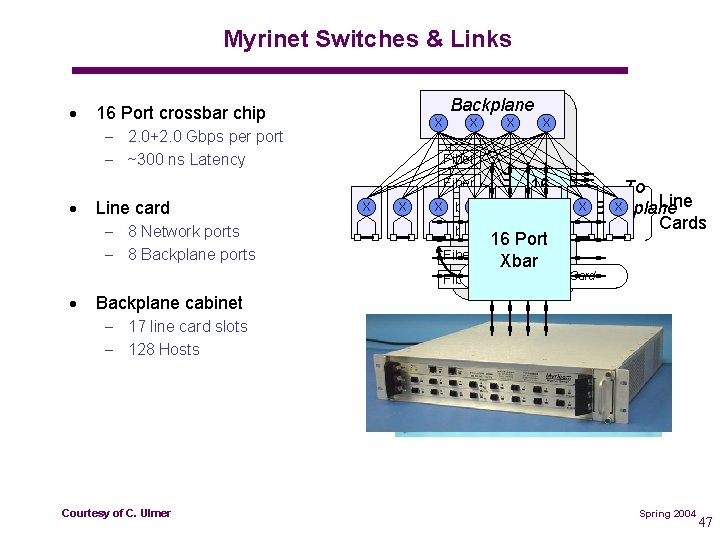

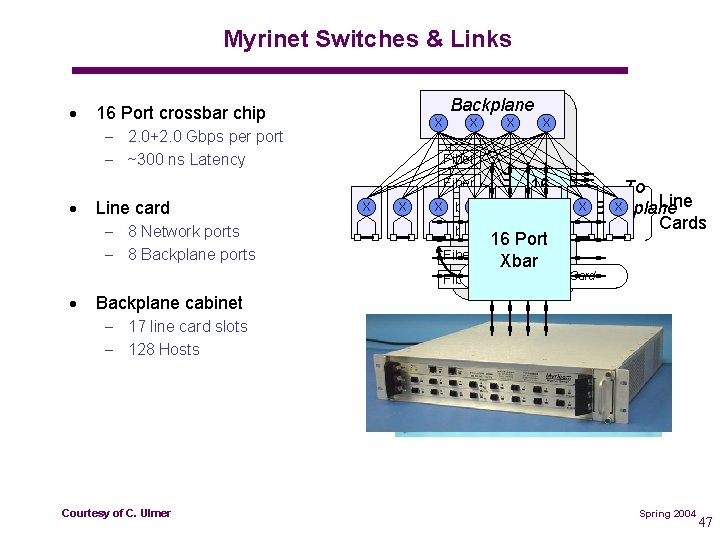

Myrinet Switches & Links · 16 Port crossbar chip X – 2. 0+2. 0 Gbps per port – ~300 ns Latency Backplane Fiber X Fiber · Line card – 8 Network ports – 8 Backplane ports X X X Fiber · X X 16 X Xbar X To Line X Backplane Cards 16 Port Line Xbar 8 Hosts / Line Card Backplane cabinet – 17 line card slots – 128 Hosts of C. Ulmer ECE Courtesy 6101: Yalamanchili Spring 2004 47

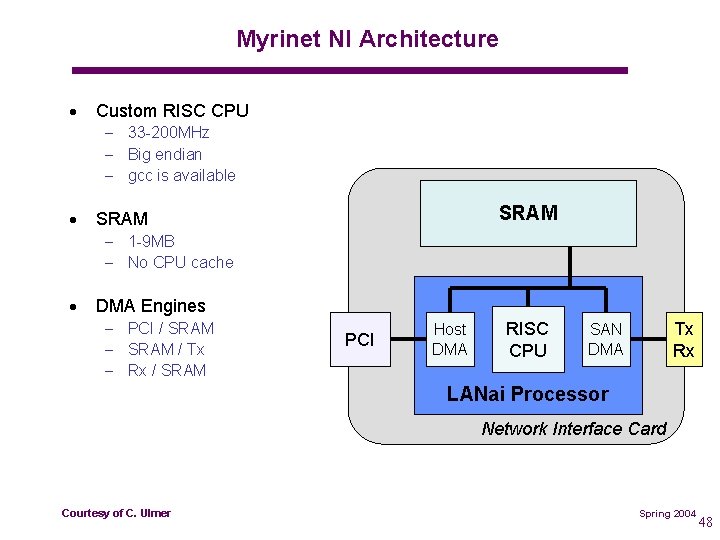

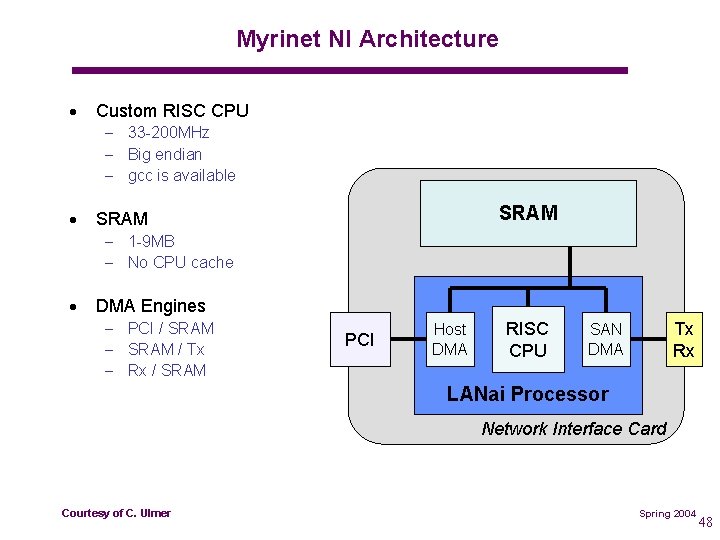

Myrinet NI Architecture · Custom RISC CPU – 33 200 MHz – Big endian – gcc is available · SRAM – 1 9 MB – No CPU cache · DMA Engines – PCI / SRAM – SRAM / Tx – Rx / SRAM PCI Host DMA RISC CPU Tx Rx SAN DMA LANai Processor Network Interface Card of C. Ulmer ECE Courtesy 6101: Yalamanchili Spring 2004 48

Message Layers of C. Ulmer ECE Courtesy 6101: Yalamanchili Spring 2005

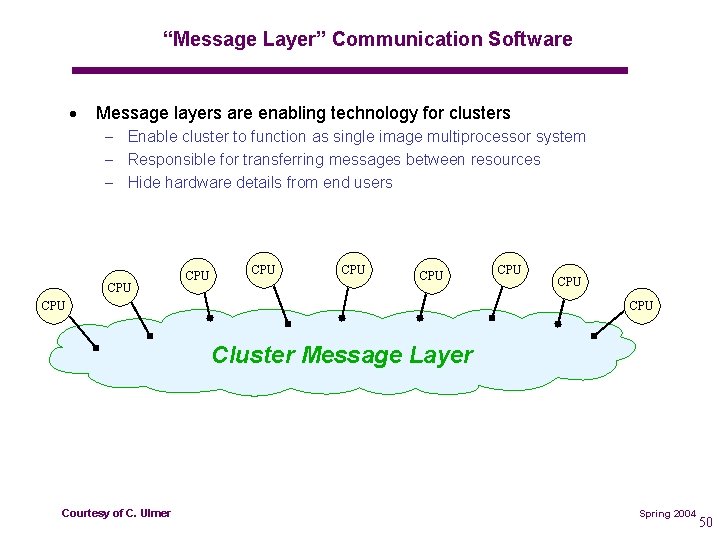

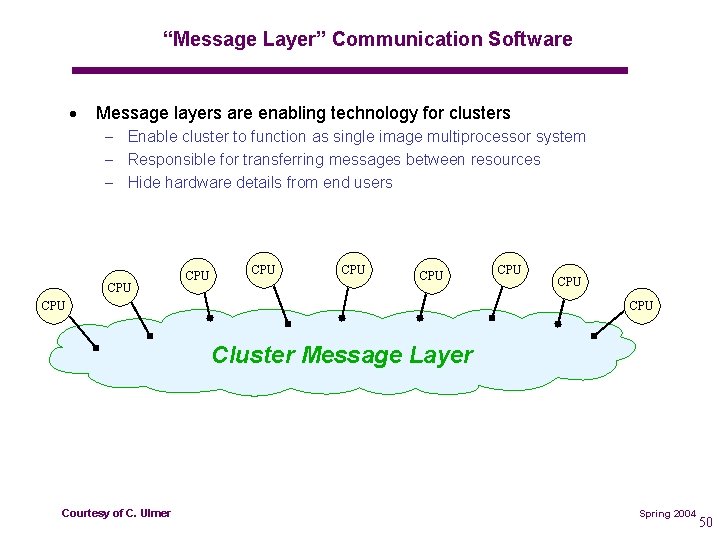

“Message Layer” Communication Software · Message layers are enabling technology for clusters – Enable cluster to function as single image multiprocessor system – Responsible for transferring messages between resources – Hide hardware details from end users CPU CPU CPU Cluster Message Layer of C. Ulmer ECE Courtesy 6101: Yalamanchili Spring 2004 50

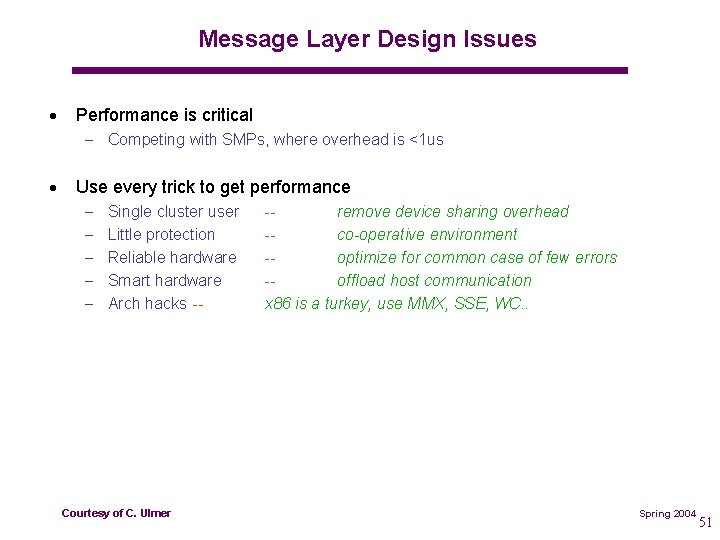

Message Layer Design Issues · Performance is critical – Competing with SMPs, where overhead is <1 us · Use every trick to get performance – – – Single cluster user Little protection Reliable hardware Smart hardware Arch hacks of C. Ulmer ECE Courtesy 6101: Yalamanchili remove device sharing overhead co-operative environment optimize for common case of few errors offload host communication x 86 is a turkey, use MMX, SSE, WC. . Spring 2004 51

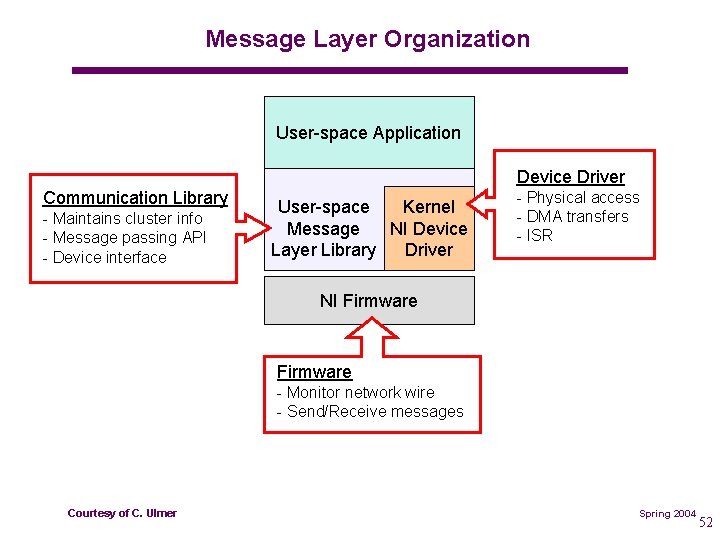

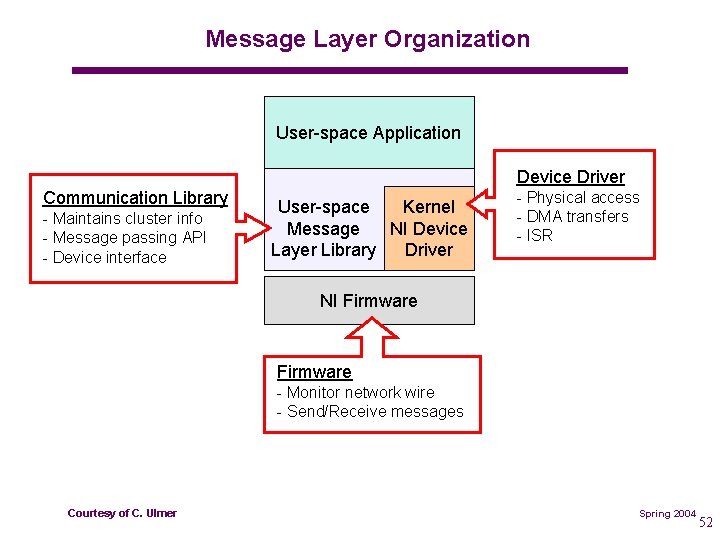

Message Layer Organization User space Application Device Driver Communication Library Maintains cluster info Message passing API Device interface User space Kernel Message NI Device Layer Library Driver Physical access DMA transfers ISR NI Firmware Monitor network wire Send/Receive messages Courtesy of C. Ulmer ECE 6101: Yalamanchili Spring 2004 52

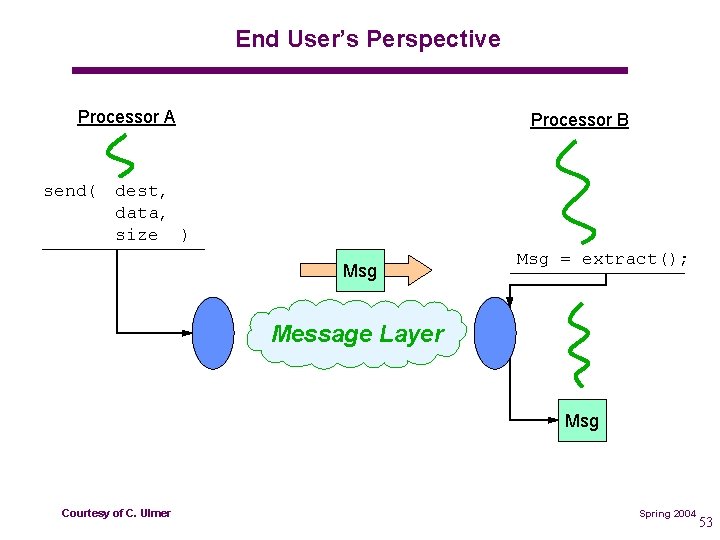

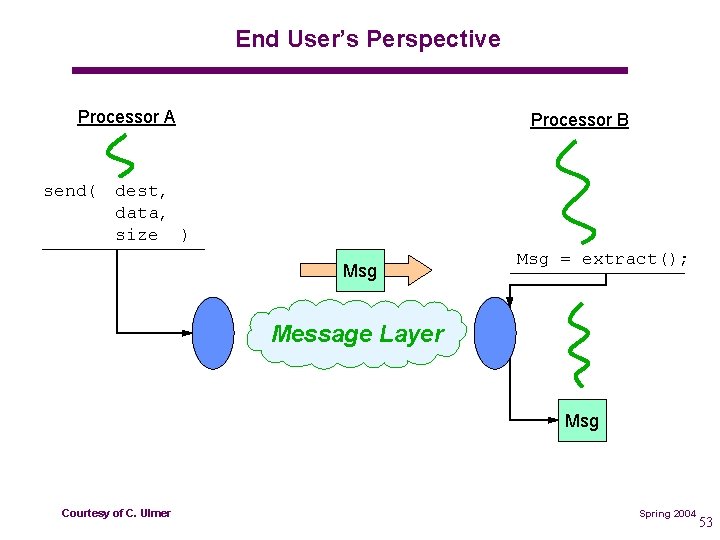

End User’s Perspective Processor A send( Processor B dest, data, size ) Msg = extract(); Message Layer Msg of C. Ulmer ECE Courtesy 6101: Yalamanchili Spring 2004 53

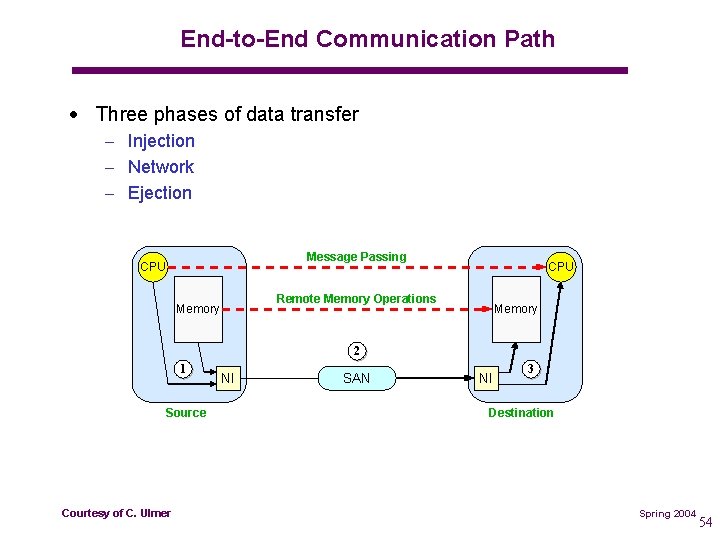

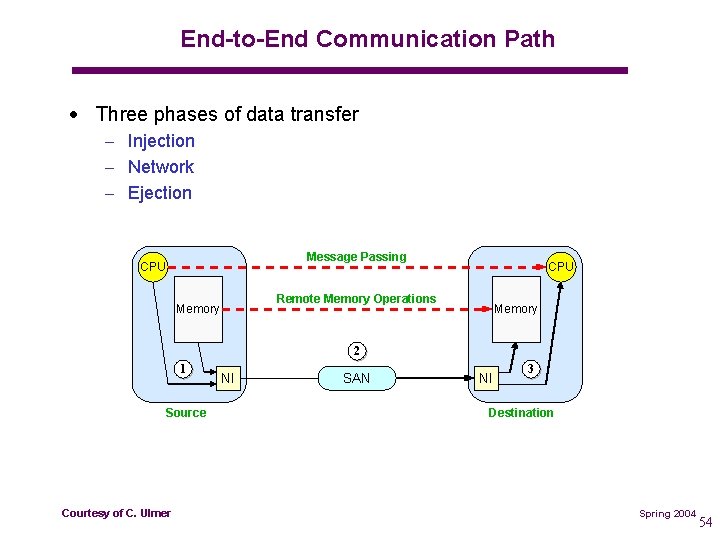

End-to-End Communication Path · Three phases of data transfer – Injection – Network – Ejection Message Passing CPU Remote Memory Operations Memory 2 1 Source of C. Ulmer ECE Courtesy 6101: Yalamanchili NI SAN NI 3 Destination Spring 2004 54

Bandwidth (MBytes/s) TPIL Performance: LANai 9 NI with Pentium III-550 MHz Host of C. Ulmer ECE Courtesy 6101: Yalamanchili Injection Size (Bytes) Spring 2004 55

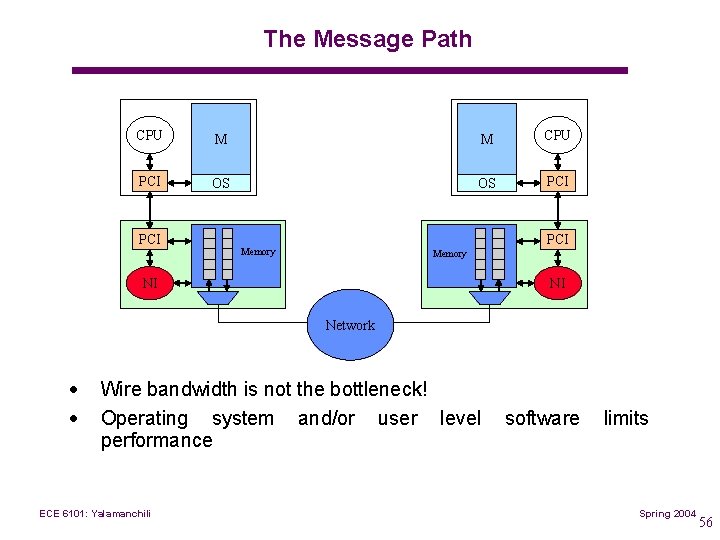

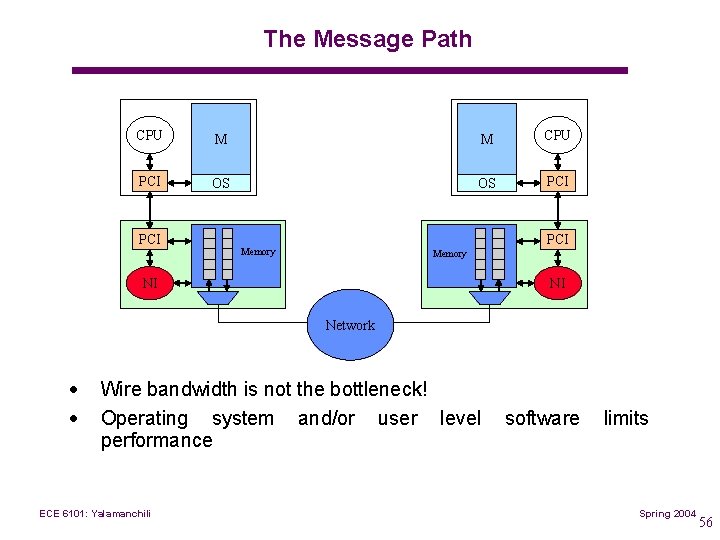

The Message Path CPU M M CPU PCI OS OS PCI PCI Memory NI NI Network · · Wire bandwidth is not the bottleneck! Operating system and/or user level performance ECE 6101: Yalamanchili software limits Spring 2004 56

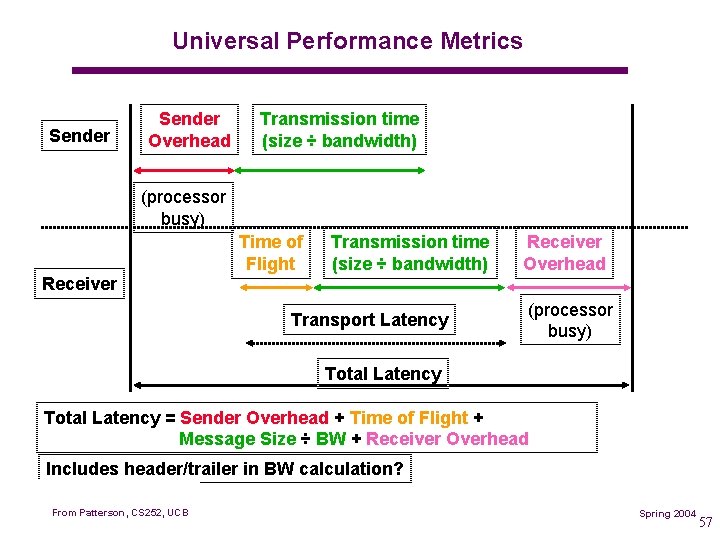

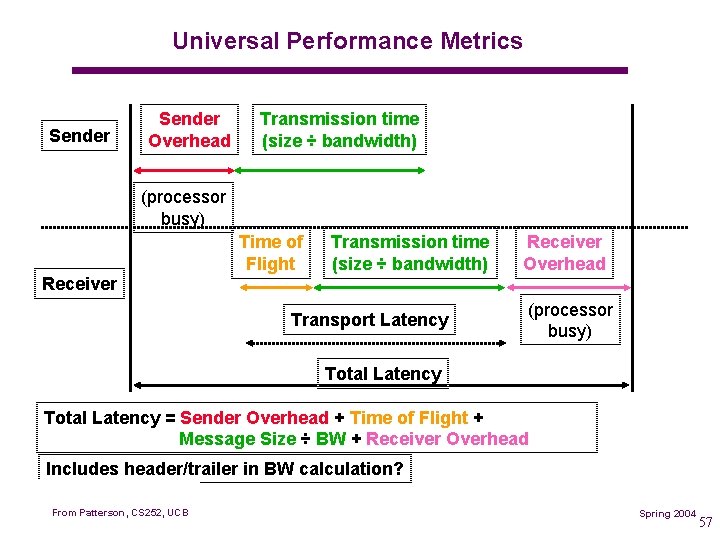

Universal Performance Metrics Sender Overhead Transmission time (size ÷ bandwidth) (processor busy) Time of Flight Transmission time (size ÷ bandwidth) Receiver Overhead Receiver Transport Latency (processor busy) Total Latency = Sender Overhead + Time of Flight + Message Size ÷ BW + Receiver Overhead Includes header/trailer in BW calculation? From Patterson, CS 252, UCB ECE 6101: Yalamanchili Spring 2004 57

Simplified Latency Model · Total Latency Overhead + Message Size / BW · Overhead = Sender Overhead + Time of Flight + Receiver Overhead · Can relate overhead to network bandwidth utilization From Patterson, CS 252, UCB ECE 6101: Yalamanchili Spring 2004 58

Commercial Example ECE 6101: Yalamanchili Spring 2005

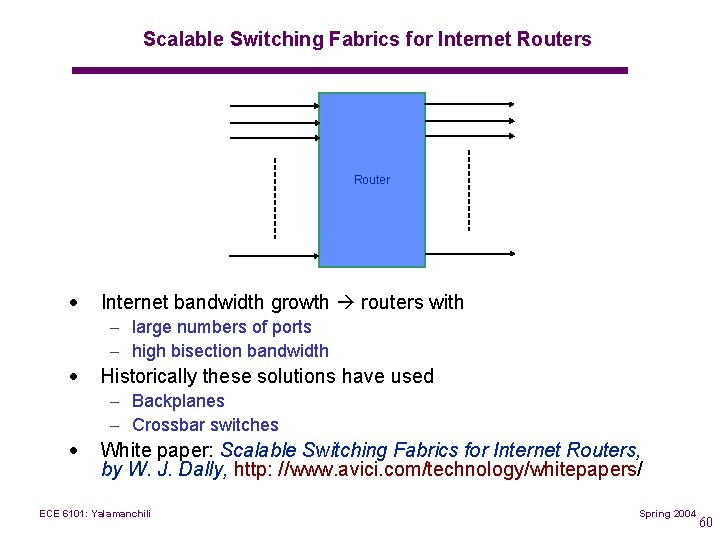

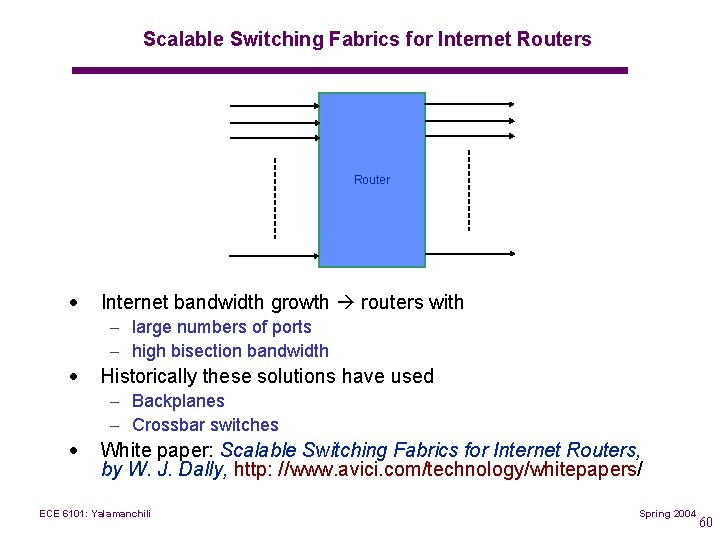

Scalable Switching Fabrics for Internet Routers Router · Internet bandwidth growth routers with – large numbers of ports – high bisection bandwidth · Historically these solutions have used – Backplanes – Crossbar switches · White paper: Scalable Switching Fabrics for Internet Routers, by W. J. Dally, http: //www. avici. com/technology/whitepapers/ ECE 6101: Yalamanchili Spring 2004 60

Requirements · Scalable – Incremental – Economical cost linear in the number of nodes · Robust – Fault tolerant path diversity + reconfiguration – Non blocking features · Performance – High bisection bandwidth – Quality of Service (Qo. S) – Bounded delay ECE 6101: Yalamanchili Spring 2004 61

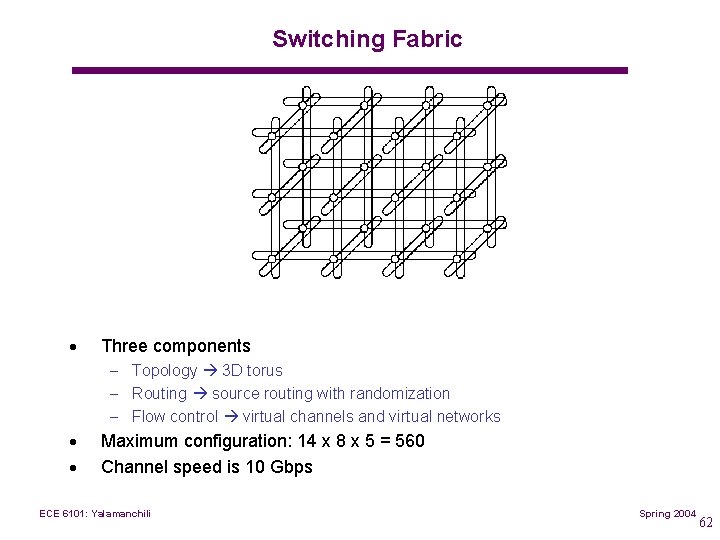

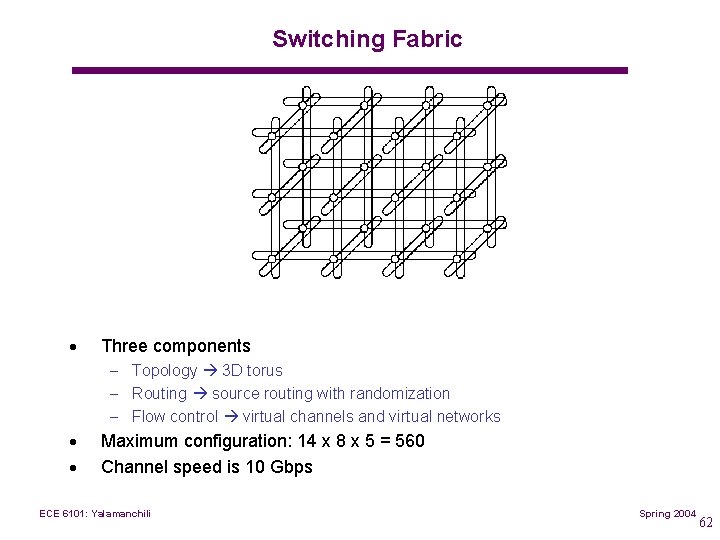

Switching Fabric · Three components – Topology 3 D torus – Routing source routing with randomization – Flow control virtual channels and virtual networks · · Maximum configuration: 14 x 8 x 5 = 560 Channel speed is 10 Gbps ECE 6101: Yalamanchili Spring 2004 62

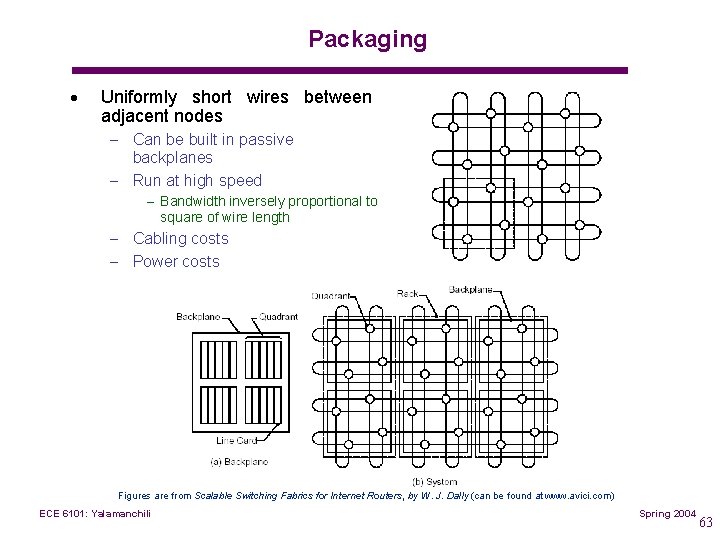

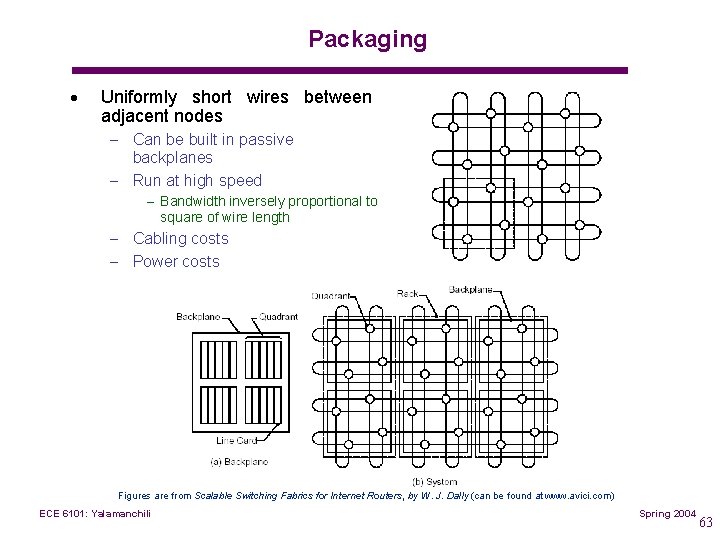

Packaging · Uniformly short wires between adjacent nodes – Can be built in passive backplanes – Run at high speed – Bandwidth inversely proportional to square of wire length – Cabling costs – Power costs Figures are from Scalable Switching Fabrics for Internet Routers, by W. J. Dally (can be found at www. avici. com) ECE 6101: Yalamanchili Spring 2004 63

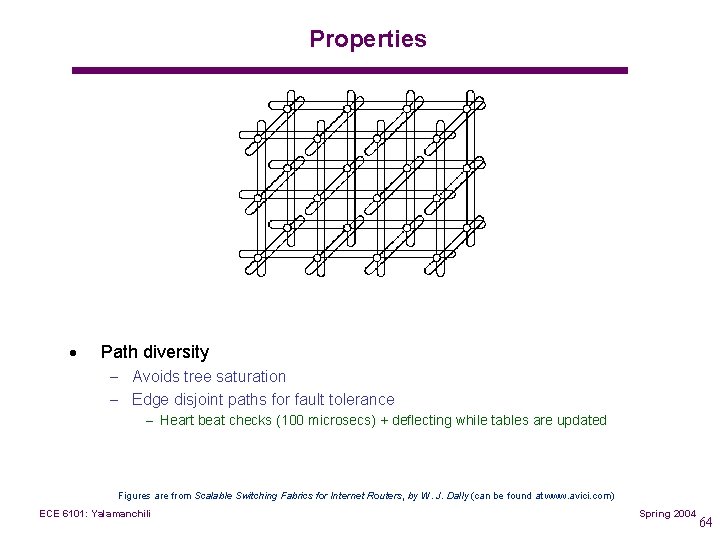

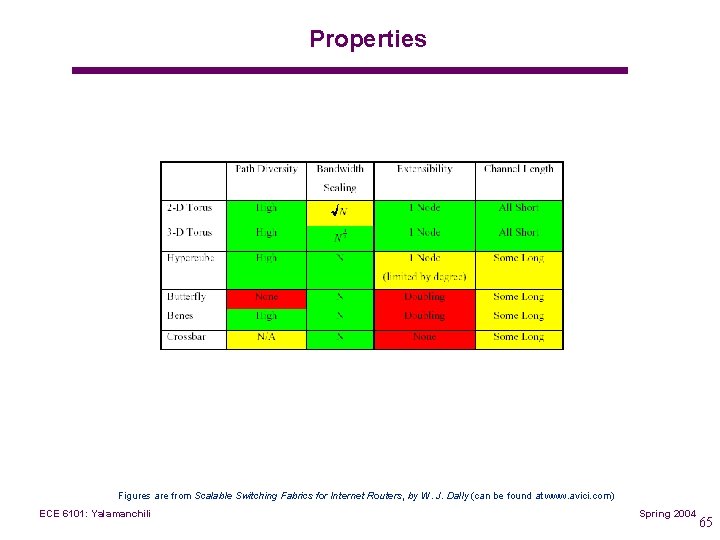

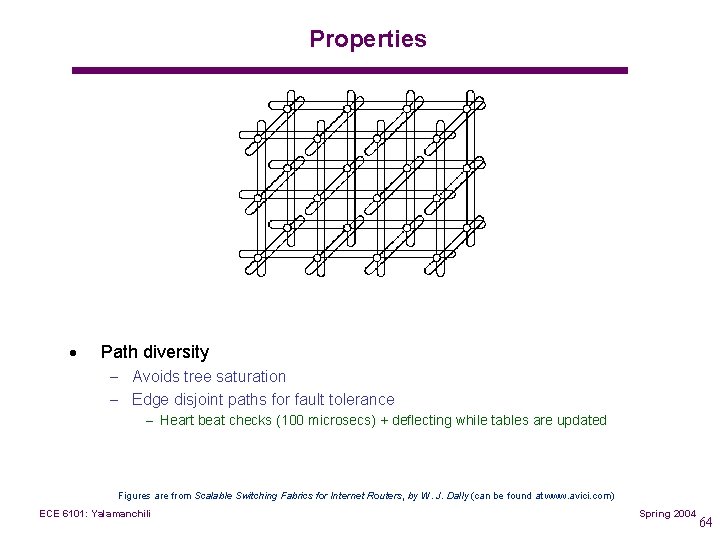

Properties · Path diversity – Avoids tree saturation – Edge disjoint paths for fault tolerance – Heart beat checks (100 microsecs) + deflecting while tables are updated Figures are from Scalable Switching Fabrics for Internet Routers, by W. J. Dally (can be found at www. avici. com) ECE 6101: Yalamanchili Spring 2004 64

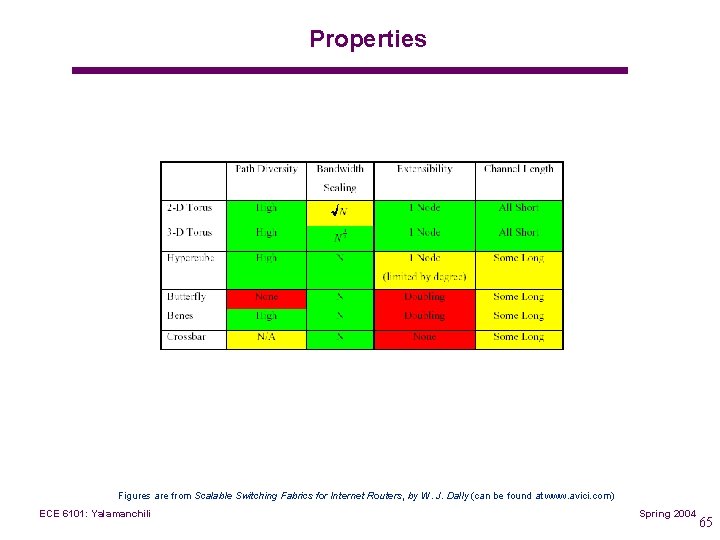

Properties Figures are from Scalable Switching Fabrics for Internet Routers, by W. J. Dally (can be found at www. avici. com) ECE 6101: Yalamanchili Spring 2004 65

Use of Virtual Channels · Virtual channels aggregated into virtual networks – Two networks for each output port · Distinct networks prevent undesirable coupling – Only bandwidth on a link is shared – Fair arbitration mechanisms · Distinct networks enable Qo. S constraints to be met – Separate best effort and constant bit rate traffic ECE 6101: Yalamanchili Spring 2004 66

Summary · Distinguish between traditional networking performance multiprocessor communication · Hierarchy of implementations and high – Physical, switching and routing – Protocol families and protocol layers (the protocol stack) · Datapath and architecture of the switches · Metrics – Bisection bandwidth – Reliability – Traditional latency and bandwidth ECE 6101: Yalamanchili Spring 2004 67