Interconnection network interface and a case study


Network interface design issues

- Networking requirements from the user's perspective
  - In-order message delivery
  - Reliable delivery
    - Error control
    - Flow control
  - Deadlock freedom
- Typical network hardware features
  - Arbitrary delivery order (adaptive/multipath routing)
  - Finite buffering
  - Limited fault handling
- How and where should we bridge the gap?
  - In the network hardware, in the network system software, or with a combined hardware/systems/software approach?

The Internet approach

- How does the Internet realize these functions?
  - There is no deadlock issue (packets are dropped when buffers fill).
  - Reliability, flow control, and in-order delivery are handled at the TCP layer.
  - The network layer (IP) provides best-effort service.
    - IP is implemented in software as well.
- Drawbacks:
  - Too many layers of software
  - Users must go through the OS to access the communication hardware (system calls can cause context switches).

Approach in HPC networks

- Where should these functions be realized?
  - High-performance networking
    - Most functionality below the network layer is done by the hardware (or close to the hardware).
      - This provides the APIs for network transactions.
    - If there is a mismatch between what the network provides and what users want, a software messaging layer is created to bridge the gap.

Messaging layer

- Bridge between the hardware functionality and the user communication requirements
  - Typical network hardware features
    - Arbitrary delivery order (adaptive/multipath routing)
    - Finite buffering
    - Limited fault handling
  - Typical user communication requirements
    - In-order delivery
    - End-to-end flow control
    - Reliable transmission
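As an illustration of one gap the messaging layer closes, the sketch below restores in-order delivery on top of a network that may deliver packets in any order. It assumes the sender tags every packet with a consecutive sequence number starting at 0; the class name `InOrderReceiver` and its methods are hypothetical and do not come from any real messaging library.

```python
class InOrderReceiver:
    """Hypothetical receiver that hands packets to the user in sequence order."""

    def __init__(self):
        self.next_seq = 0   # sequence number the application expects next
        self.pending = {}   # out-of-order packets buffered until their turn

    def receive(self, seq, payload):
        """Accept one packet; return the payloads that are now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []       # duplicate, for example from a retransmission
        self.pending[seq] = payload
        delivered = []
        # Drain every packet that has become contiguous with what was delivered.
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered


receiver = InOrderReceiver()
arrival_order = [(1, "b"), (0, "a"), (3, "d"), (2, "c")]  # as delivered by the network
delivered = []
for seq, payload in arrival_order:
    delivered.extend(receiver.receive(seq, payload))
print(delivered)  # ['a', 'b', 'c', 'd']
```

The `pending` dictionary is exactly the kind of buffer management that shows up as software cost: with finite buffering, a real messaging layer would also need end-to-end flow control to bound how many packets can be outstanding.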

Communication cost

- Communication cost = hardware cost + software cost (messaging layer cost)
  - Hardware message time: msize / bandwidth
  - Software time:
    - Buffer management
    - End-to-end flow control
    - Running protocols
  - Which one dominates?
    - It depends on how much the software has to do.
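The cost model above can be sketched numerically. The bandwidth and the fixed per-message software overhead below are made-up illustrative values, not measurements from any machine, and treating the software cost as a constant per message is a simplifying assumption.

```python
def message_cost(msize_bytes, bandwidth_bytes_per_s, software_overhead_s):
    """Return (hardware time, total time, software share of the total)."""
    hardware_s = msize_bytes / bandwidth_bytes_per_s  # msize / bandwidth
    total_s = hardware_s + software_overhead_s        # hardware cost + software cost
    return hardware_s, total_s, software_overhead_s / total_s


# Hypothetical parameters chosen only for illustration.
BANDWIDTH = 1e9   # bytes per second
OVERHEAD = 5e-6   # seconds of messaging-layer work per message

for msize in (1_000, 1_000_000):
    hardware_s, total_s, share = message_cost(msize, BANDWIDTH, OVERHEAD)
    print(f"{msize:>9} B: hardware {hardware_s:.2e} s, "
          f"total {total_s:.2e} s, software share {share:.1%}")
```

Under these assumed numbers the software cost dominates short messages and becomes negligible for long ones, which is one way to read "it depends on how much the software has to do".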

Network software/hardware interaction: a case study

- A case study of communication performance issues on the CM-5
  - V. Karamcheti and A. A. Chien, "Software Overhead in Messaging Layers: Where Does the Time Go?", ACM ASPLOS-VI, 1994.

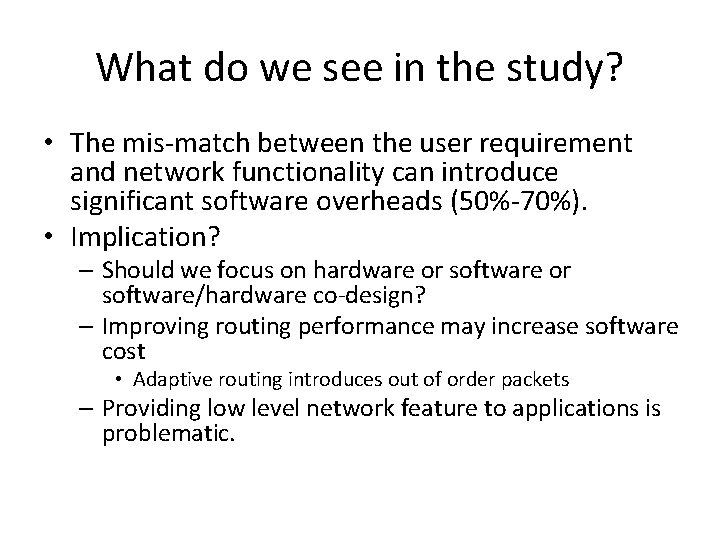

What do we see in the study?

- The mismatch between user requirements and network functionality can introduce significant software overhead (50%-70%).
- Implications?
  - Should we focus on hardware or on software/hardware co-design?
  - Improving routing performance may increase software cost.
    - Adaptive routing introduces out-of-order packets.
  - Exposing low-level network features directly to applications is problematic.

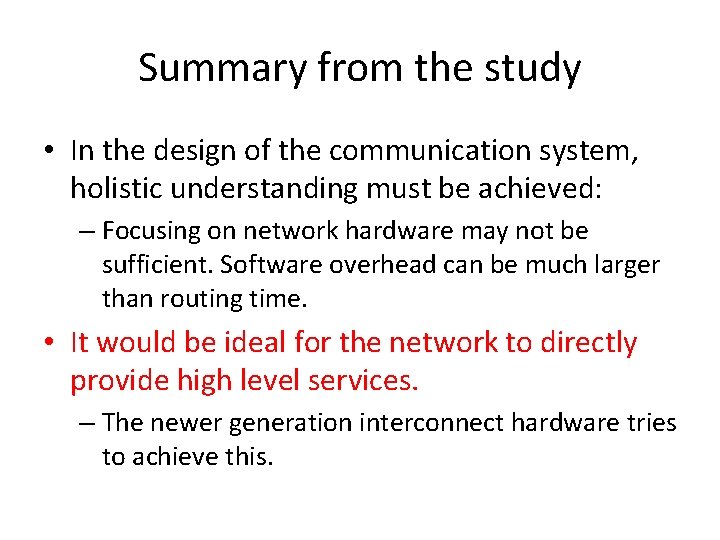

Summary from the study

- Designing a communication system requires a holistic understanding:
  - Focusing on network hardware alone may not be sufficient. Software overhead can be much larger than routing time.
- Ideally, the network would directly provide high-level services.
  - Newer generations of interconnect hardware try to achieve this.

Case study

- IBM Blue Gene/L system
- InfiniBand

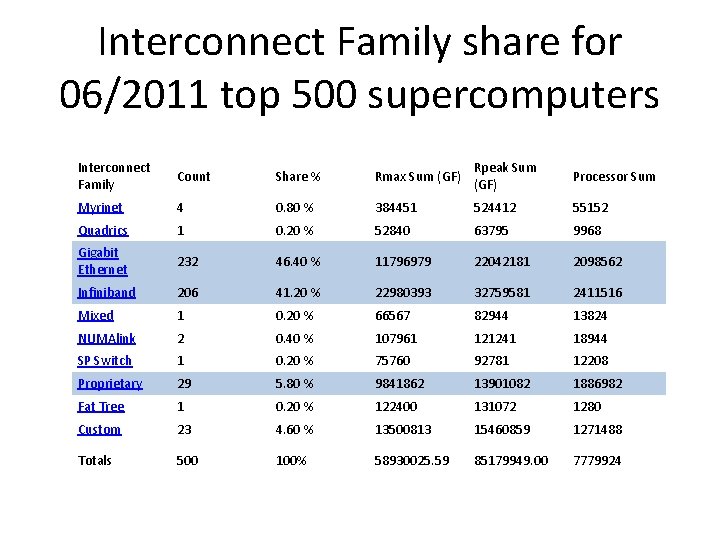

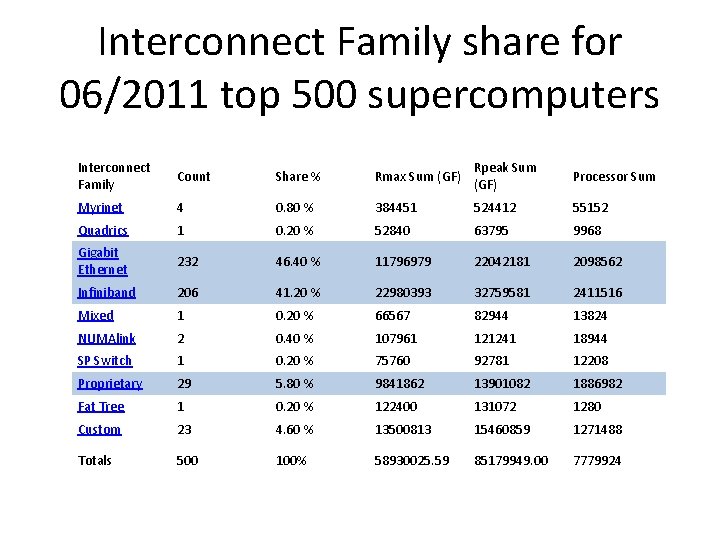

Interconnect family share for the 06/2011 Top 500 supercomputers

| Interconnect Family | Count | Share % | Rmax Sum (GF) | Rpeak Sum (GF) | Processor Sum |
|---|---|---|---|---|---|
| Myrinet | 4 | 0.80% | 384451 | 524412 | 55152 |
| Quadrics | 1 | 0.20% | 52840 | 63795 | 9968 |
| Gigabit Ethernet | 232 | 46.40% | 11796979 | 22042181 | 2098562 |
| Infiniband | 206 | 41.20% | 22980393 | 32759581 | 2411516 |
| Mixed | 1 | 0.20% | 66567 | 82944 | 13824 |
| NUMAlink | 2 | 0.40% | 107961 | 121241 | 18944 |
| SP Switch | 1 | 0.20% | 75760 | 92781 | 12208 |
| Proprietary | 29 | 5.80% | 9841862 | 13901082 | 1886982 |
| Fat Tree | 1 | 0.20% | 122400 | 131072 | 1280 |
| Custom | 23 | 4.60% | 13500813 | 15460859 | 1271488 |
| Totals | 500 | 100% | 58930025.59 | 85179949.00 | 7779924 |

Overview of the IBM Blue Gene/L System Architecture

- Design objectives
- Hardware overview
  - System architecture
  - Node architecture
  - Interconnect architecture

Highlights

- A 64K-node, highly integrated supercomputer based on system-on-a-chip technology
  - Two ASICs
    - Blue Gene/L Compute (BLC), Blue Gene/L Link (BLL)
- Distributed-memory, massively parallel processing (MPP) architecture
- Uses the message-passing programming model (MPI)
- 360 Tflops peak performance
- Optimized for cost/performance

Design objectives

- Objective 1: a 360-Tflops supercomputer
  - Earth Simulator (Japan, fastest supercomputer from 2002 to 2004): 35.86 Tflops
- Objective 2: power efficiency
  - Performance/rack = performance/watt × watt/rack
    - Watt/rack is a constant of around 20 kW.
    - Performance/watt therefore determines performance/rack.
- Power efficiency:
  - 360 Tflops => 20 megawatts with conventional processors
  - Need a low-power processor design (2-10 times better power efficiency)
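The power argument can be checked with a few lines of arithmetic. The sketch uses only the figures quoted above (360 Tflops, 20 MW with conventional processors, about 20 kW per rack). The rack counts it produces are consequences of those figures under the stated relation, not published Blue Gene/L specifications, and the function name `racks_needed` is introduced here for illustration.

```python
TARGET_FLOPS = 360e12          # 360 Tflops design objective
CONVENTIONAL_POWER_W = 20e6    # 20 MW with conventional processors
WATTS_PER_RACK = 20e3          # roughly constant 20 kW per rack

# Implied efficiency of conventional processors, in flops per watt.
conventional_flops_per_watt = TARGET_FLOPS / CONVENTIONAL_POWER_W


def racks_needed(flops_per_watt):
    """Racks required to reach the target at a given power efficiency."""
    # performance/rack = performance/watt * watt/rack
    perf_per_rack = flops_per_watt * WATTS_PER_RACK
    return TARGET_FLOPS / perf_per_rack


for improvement in (1, 2, 10):
    racks = racks_needed(improvement * conventional_flops_per_watt)
    print(f"{improvement:>2}x efficiency: {racks:.0f} racks")
```

Because watt/rack is fixed, the number of racks falls in direct proportion to any gain in performance/watt, which is why the design targets low-power processors.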

Design objectives (continued)

- Objective 3: extreme scalability
  - Optimizing for cost/performance means using low-power, less powerful processors, which in turn means needing a lot of processors.
    - Up to 65536 processors.
  - Interconnect scalability
  - Reliability, availability, and serviceability
  - Application scalability

Blue Gene/L system components

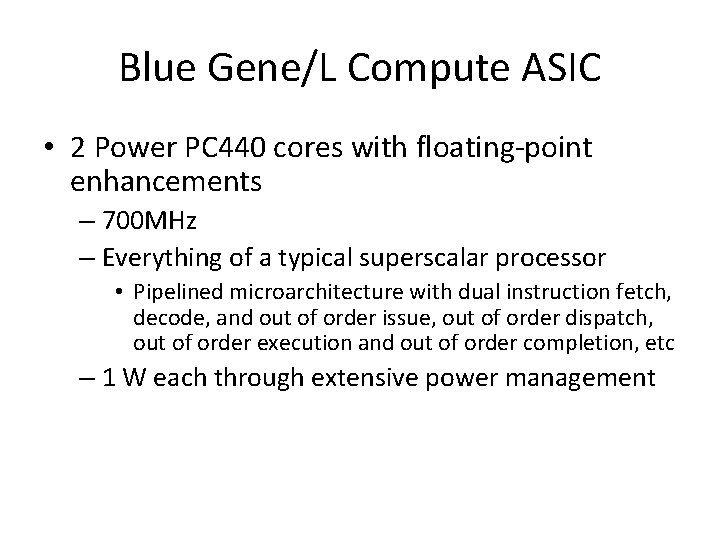

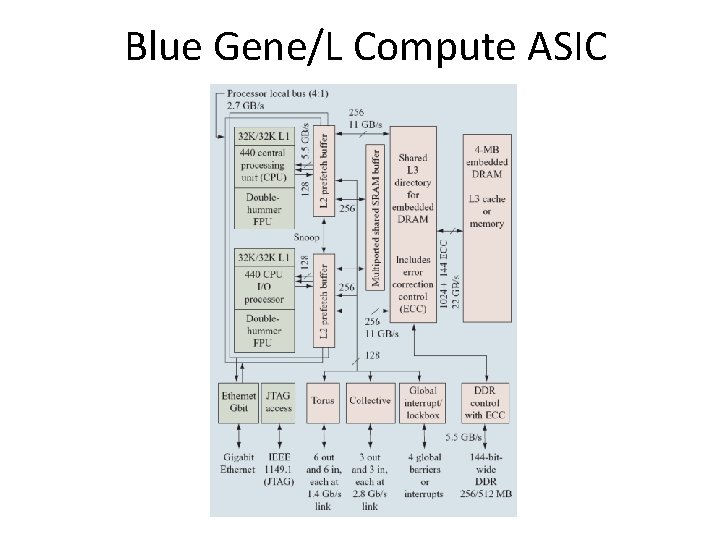

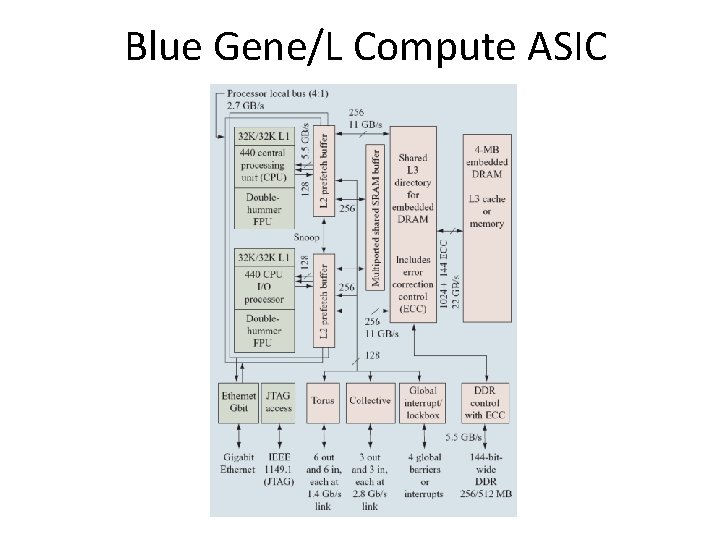

Blue Gene/L Compute ASIC

- 2 PowerPC 440 cores with floating-point enhancements
  - 700 MHz
  - Everything found in a typical superscalar processor
    - Pipelined microarchitecture with dual instruction fetch and decode, and out-of-order issue, dispatch, execution, and completion, etc.
  - 1 W each through extensive power management

Memory system on a BG/L node

- BG/L only supports the distributed-memory paradigm.
- There is no need for efficient cache coherence support on each node.
  - Coherence is enforced by software if needed.
- The two cores operate in one of two modes:
  - Communication coprocessor mode
    - Needs coherence, managed in system-level libraries
  - Virtual node mode
    - Memory is physically partitioned (not shared).

Blue Gene/L networks

- Five networks
  - 100 Mbps Ethernet control network for diagnostics, debugging, and some other things
  - 1000 Mbps Ethernet for I/O
  - Three high-bandwidth, low-latency networks for data transmission and synchronization
    - 3-D torus network for point-to-point communication
    - Collective network for global operations
    - Barrier network
- All network logic is integrated in the BG/L node ASIC.
  - Memory-mapped interfaces from user space

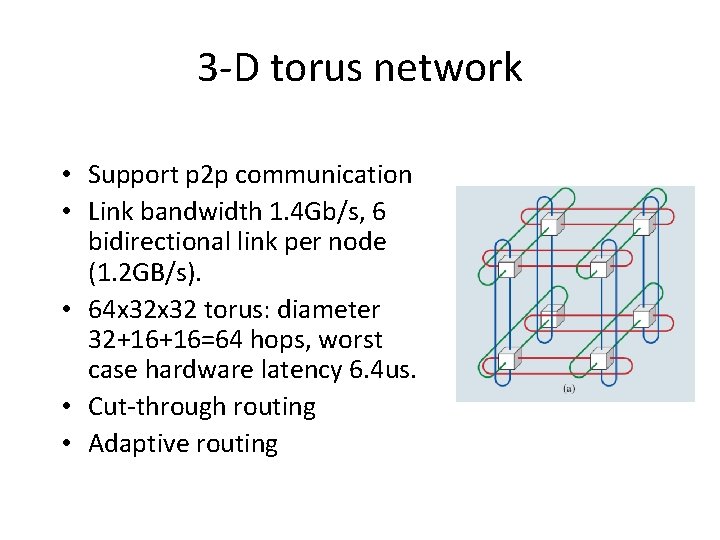

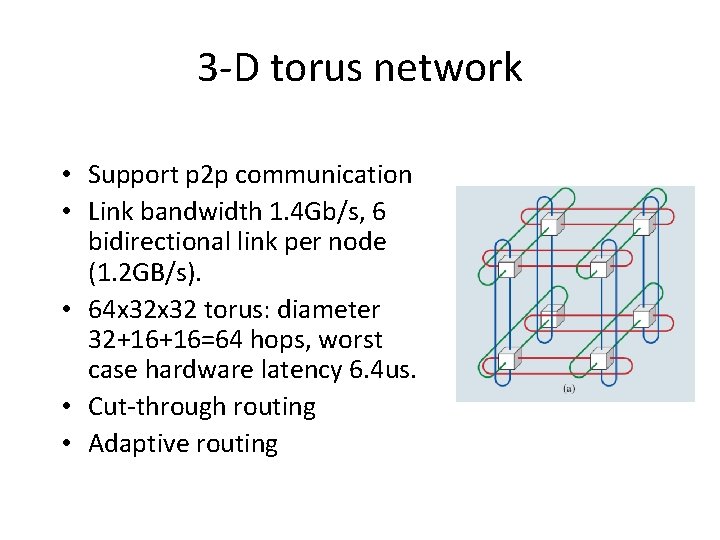

3-D torus network

- Supports point-to-point (p2p) communication
- Link bandwidth 1.4 Gb/s, 6 bidirectional links per node (1.2 GB/s)
- 64 x 32 x 32 torus: diameter 32+16+16=64 hops, worst-case hardware latency 6.4 us
- Cut-through routing
- Adaptive routing
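The diameter figure can be reproduced with a short sketch. It assumes the three-dimensional 64 x 32 x 32 torus implied by the 32+16+16 sum (64 x 32 x 32 = 65536 nodes) and shortest-path routing that may use the wraparound links. The function names are introduced here for illustration, and the per-hop latency is simply the quoted worst case divided by the diameter, not a measured value.

```python
def torus_hops(src, dst, dims):
    """Minimal hop count between two nodes of a torus with wraparound links."""
    hops = 0
    for s, d, n in zip(src, dst, dims):
        direct = abs(s - d)
        hops += min(direct, n - direct)  # go whichever way around the ring is shorter
    return hops


def torus_diameter(dims):
    """Largest minimal hop count between any two nodes: half of each ring."""
    return sum(n // 2 for n in dims)


DIMS = (64, 32, 32)
diameter = torus_diameter(DIMS)
print(diameter)  # 64

# Average per-hop latency implied by the 6.4 us worst-case figure.
per_hop_ns = 6.4e-6 / diameter * 1e9
print(f"{per_hop_ns:.0f} ns per hop")
```

For example, `torus_hops((0, 0, 0), (32, 16, 16), DIMS)` returns 64, the farthest possible pair, while `torus_hops((0, 0, 0), (63, 0, 0), DIMS)` returns 1 because of the wraparound link.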

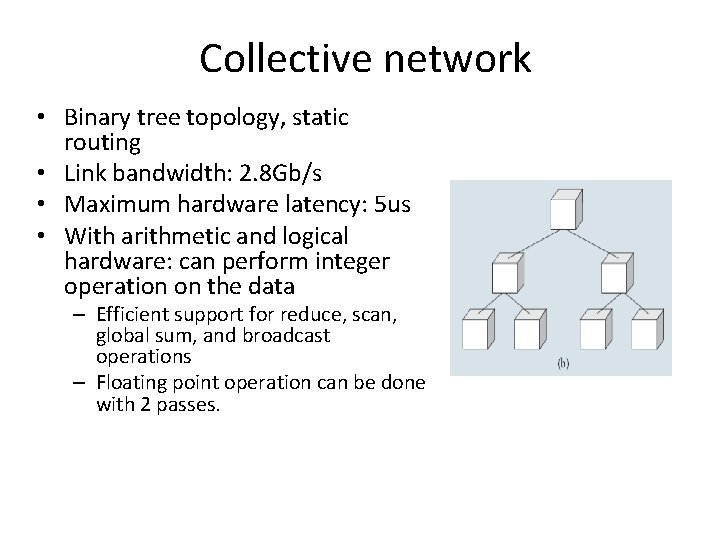

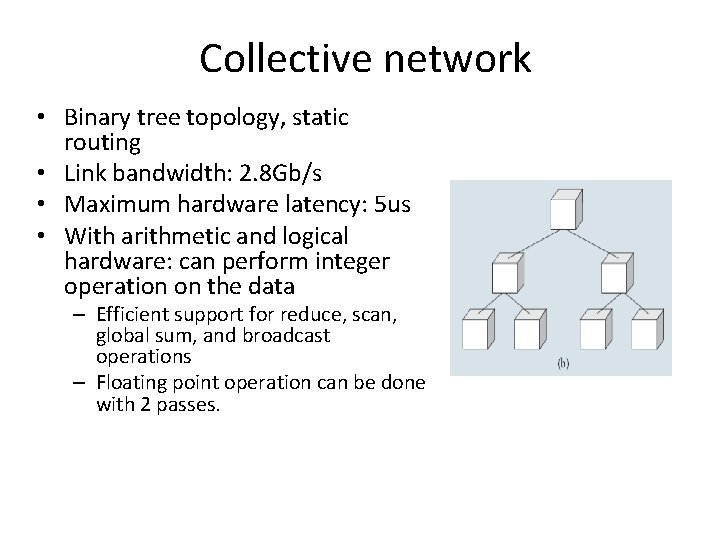

Collective network

- Binary tree topology, static routing
- Link bandwidth: 2.8 Gb/s
- Maximum hardware latency: 5 us
- Includes arithmetic and logical hardware that can perform integer operations on the data
  - Efficient support for reduce, scan, global sum, and broadcast operations
  - Floating-point operations can be done in 2 passes.
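One plausible way to build a floating-point sum out of two integer-only passes is sketched below. The scheme is an assumption for illustration, since the slide only states that two passes are needed: pass 1 is an integer max-reduction over the exponents, and pass 2 is an integer sum of the values rescaled to that common exponent. `tree_reduce` models the combining tree in software; both function names are hypothetical.

```python
import math


def tree_reduce(values, op):
    """Combine a non-empty list pairwise, level by level, like a binary tree."""
    level = list(values)
    while len(level) > 1:
        nxt = [op(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:          # an unpaired node passes its value upward
            nxt.append(level[-1])
        level = nxt
    return level[0]


def two_pass_float_sum(xs, mantissa_bits=52):
    """Sum floats using only integer max and integer add in the reductions."""
    # Pass 1: integer max-reduction over the binary exponents.
    exponents = [math.frexp(x)[1] for x in xs]
    max_exp = tree_reduce(exponents, max)
    # Pass 2: convert each value to a fixed-point integer aligned to max_exp,
    # then integer-add across the tree.
    scale = mantissa_bits - max_exp
    fixed = [int(round(math.ldexp(x, scale))) for x in xs]
    total = tree_reduce(fixed, lambda a, b: a + b)
    return math.ldexp(total, -scale)


print(two_pass_float_sum([1.5, 2.25, -0.75, 4.0]))  # 7.0
```

Values much smaller than the largest one lose low-order bits when aligned to the common exponent, so the result can differ slightly from a sequential floating-point sum.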

Barrier network

- Hardware support for global synchronization
- 1.5 us for a barrier on 64K nodes

IBM Blue Gene/L summary

- Optimizes cost/performance, which limits the applications it suits.
  - Uses a low-power design
    - Lower frequency, system-on-a-chip
    - Great performance-per-watt metric
- Scalability support
  - Hardware support for global communication and barriers
  - Low-latency, high-bandwidth support