Interactive Computer Graphics

- Slides: 44

Interactive Computer Graphics

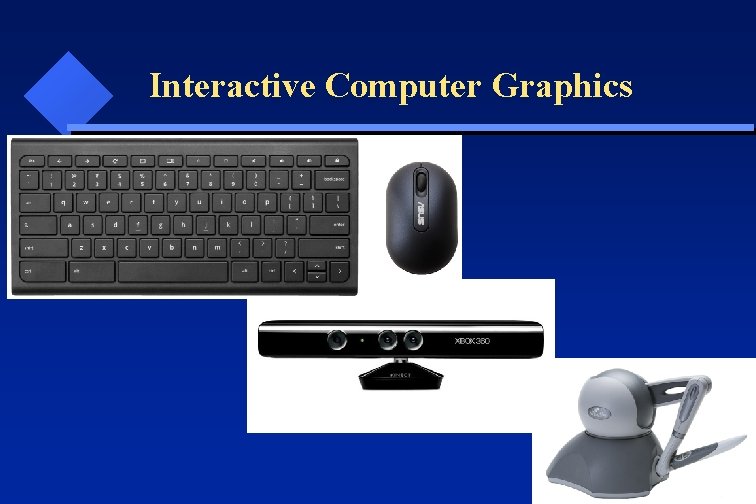

Interactive computer graphics allows users to control how graphical elements are displayed. Human-Computer Interaction (HCI) is critical to interactive computer graphics and visualization.

HCI

Three types of HCI:
- CLI: command-line interface (keyboard)
- GUI: graphical user interface (mouse)
- NUI: natural user interface with audio/video input (e.g., Kinect)

A GOOD user interface (UI) allows users to perform interactive tasks with ease and joy. WYSIWYG (What You See Is What You Get).
- Four basic interaction TASKS: position, select, quantify, text.
- Basic design PRINCIPLES: Look (appearance) and Feel (action).

HCI Hardware

HCI Hardware: Keyboards
- QWERTY (arranged to slow down typing)
- Dvorak (keys ordered by frequency of use)
- Alphabetic order

HCI Hardware: Locators
- Relative devices: mice, trackballs, joysticks
- Absolute devices: data tablets, touch screens, Kinect, Leap Motion
- Direct devices: light pens, touch screens, Kinect, Leap Motion

HCI Hardware: Locators
- Indirect devices: mice, trackballs, joysticks
- Continuous devices: mice, trackballs, joysticks, Kinect, Leap Motion
- Discrete devices: control keys
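The difference between relative and absolute locators can be sketched as two ways of updating a cursor. This is a minimal illustration, assuming a hypothetical `Cursor` type and integer pixel coordinates: a relative device reports motion deltas that are accumulated, while an absolute device reports a position that is mapped straight onto the screen.

```cpp
#include <algorithm>

// Hypothetical cursor model (not from any toolkit).
struct Cursor {
    int x = 0, y = 0;
    int width, height;  // screen size in pixels
    Cursor(int w, int h) : width(w), height(h) {}

    // Relative device (mouse, trackball): add the reported delta to the
    // current position, then clamp so the cursor stays on screen.
    void applyRelative(int dx, int dy) {
        x = std::clamp(x + dx, 0, width - 1);
        y = std::clamp(y + dy, 0, height - 1);
    }

    // Absolute device (tablet, touch screen): scale a device coordinate in
    // [0, deviceW) x [0, deviceH) onto the screen; the old position is ignored.
    void applyAbsolute(int devX, int devY, int deviceW, int deviceH) {
        x = devX * width / deviceW;
        y = devY * height / deviceH;
    }
};
```

Because a relative device only knows motion, picking it up and putting it down elsewhere does not move the cursor. An absolute device always reports the same screen point for the same physical spot.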

HCI Hardware: Valuators
- Bounded: volume control on a radio
- Unbounded: clock, dial

Choice devices:
- Function keys
- Foot switches
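A rough sketch of the two valuator kinds, using hypothetical types. A bounded valuator stops at its end points, like a radio's volume knob. An unbounded valuator simply keeps accumulating, like a dial that can be turned indefinitely.

```cpp
#include <algorithm>

// Bounded valuator: the value can never leave [minValue, maxValue].
struct BoundedValuator {
    double value, minValue, maxValue;
    void turn(double delta) {
        value = std::clamp(value + delta, minValue, maxValue);
    }
};

// Unbounded valuator: every turn is added, with no limit.
struct UnboundedValuator {
    double value = 0.0;
    void turn(double delta) { value += delta; }
};
```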

HCI Hardware: Valuators

Haptic devices:
- Pressure-sensitive stylus
- Force-feedback controls (haptic), e.g., the PHANTOM from SensAble

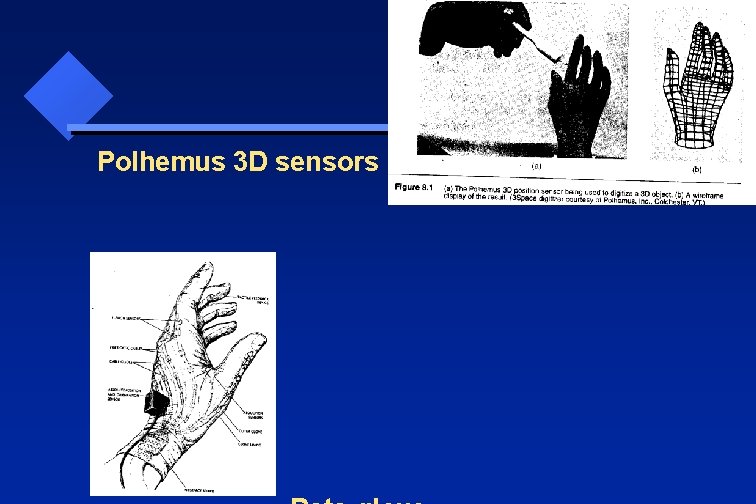

3D Interaction Devices
- Joysticks with a shaft that twists for a third dimension
- Kinect 3D camera, Leap Motion
- VR (virtual reality): immersive, head-mounted, with sensors/markers for tracking

Polhemus 3D sensors

Interactive Tasks

Interactive Tasks
- Position: by pointing (GRAPHICS)
- Selection: by name (DB) or by pointing
- GUI: hierarchical pull-down menus, radio buttons, e.g., Format -> Paragraph…
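Selection by pointing is usually a hit test: find which object lies under the pointer. The sketch below makes several assumptions. It uses a hypothetical `Item` type with a screen-space bounding box, and it treats later items in the list as drawn on top, so they win when boxes overlap.

```cpp
#include <string>
#include <vector>

// Hypothetical selectable object with an axis-aligned bounding box.
struct Item {
    std::string name;
    int left, top, right, bottom;
};

// Returns the index of the topmost item containing (x, y), or -1 if none.
// The search runs from the last item to the first, so the item drawn on
// top is found before anything it covers.
int pick(const std::vector<Item>& items, int x, int y) {
    for (int i = static_cast<int>(items.size()) - 1; i >= 0; --i) {
        const Item& it = items[i];
        if (x >= it.left && x <= it.right && y >= it.top && y <= it.bottom)
            return i;
    }
    return -1;
}
```

Real scene graphs often test exact shapes instead of boxes. The bounding box here only keeps the idea short.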

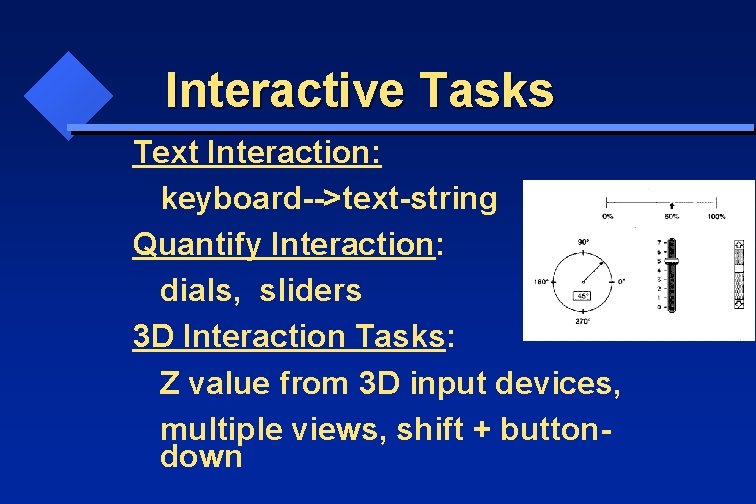

Interactive Tasks
- Text interaction: keyboard --> text string
- Quantify interaction: dials, sliders
- 3D interaction tasks: Z value from 3D input devices, multiple views, Shift + button-down
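The "Shift + button-down" technique gets a third coordinate from a 2D mouse. This is a minimal sketch under stated assumptions: `dragObject` and `Point3` are hypothetical names, and the mapping (a plain drag moves x/y, while a Shift-drag turns vertical motion into z) is one plausible choice, not a standard.

```cpp
// Hypothetical 3D position of the object being dragged.
struct Point3 { double x, y, z; };

// dx, dy: mouse motion in pixels (screen y grows downward).
// With Shift held, vertical motion changes z instead of x/y.
void dragObject(Point3& p, int dx, int dy, bool shiftDown, double unitsPerPixel) {
    if (shiftDown) {
        p.z += -dy * unitsPerPixel;   // dragging up (negative dy) increases z
    } else {
        p.x += dx * unitsPerPixel;
        p.y += dy * unitsPerPixel;
    }
}
```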

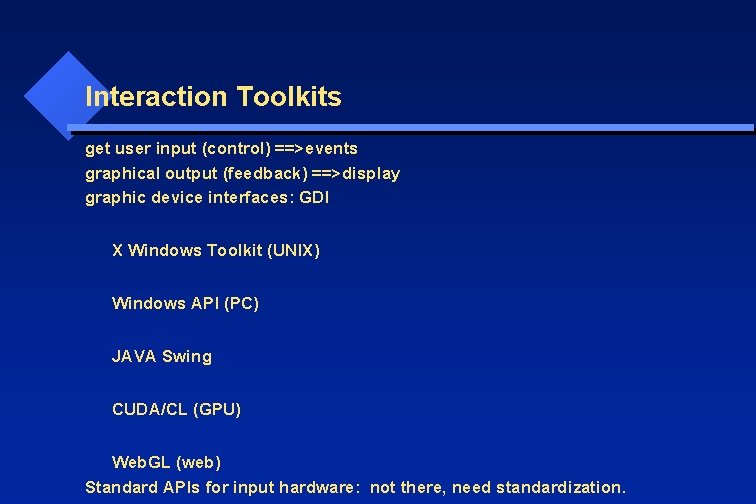

Interaction Toolkits
- Get user input (control) ==> events
- Graphical output (feedback) ==> display
- Graphics device interfaces (GDI): X Window Toolkit (UNIX), Windows API (PC), Java Swing, CUDA/OpenCL (GPU), WebGL (web)
- Standard APIs for input hardware: not yet available; standardization is needed.

NUI

Natural User Interfaces:
- Voice controls
- Kinect 3D sensor
- Leap Motion

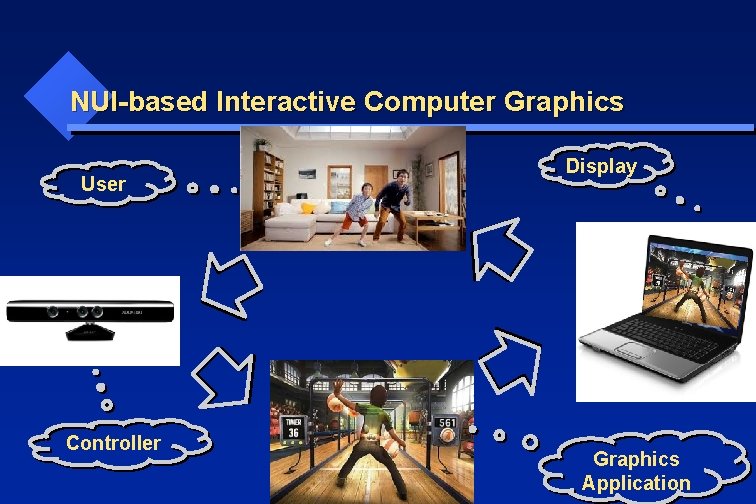

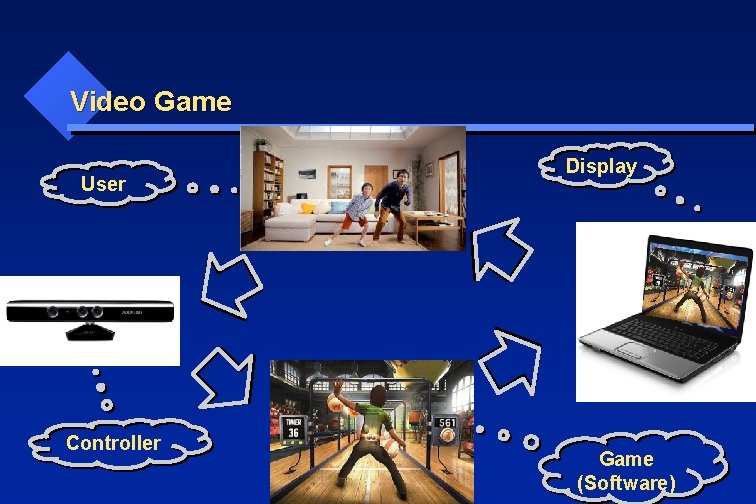

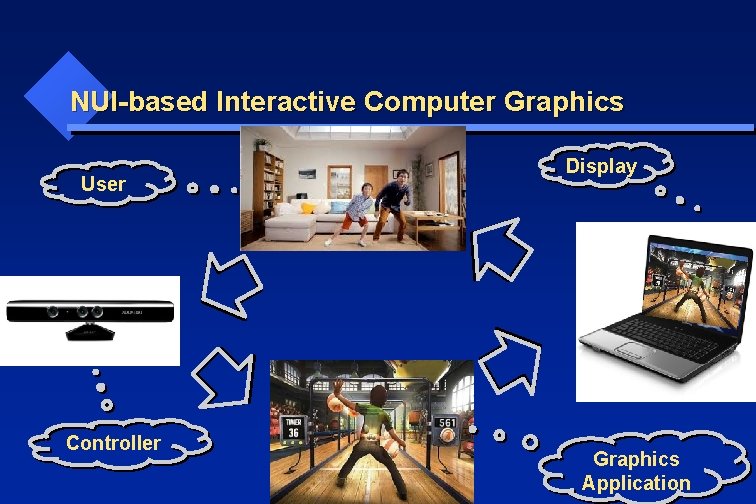

NUI-based Interactive Computer Graphics (diagram: User, Controller, Display, Graphics Application)

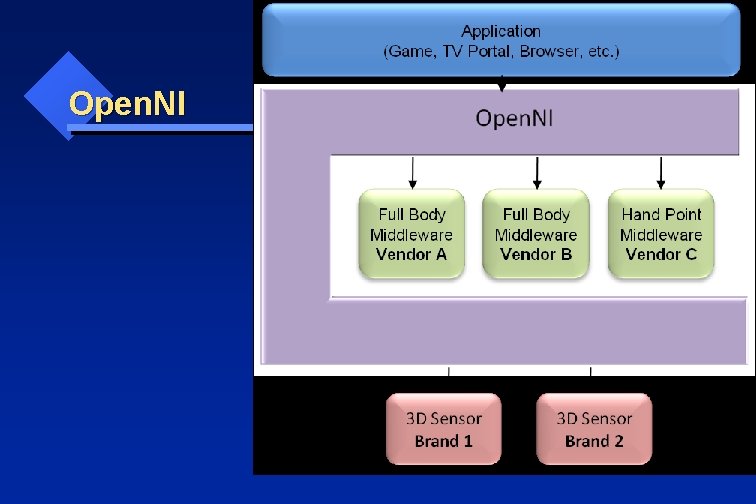

NUI: three parts of an NUI
- Hardware: e.g., Kinect
- Software: drivers (OpenNI), middleware
- Application: integration of the hardware-enabling software with applications

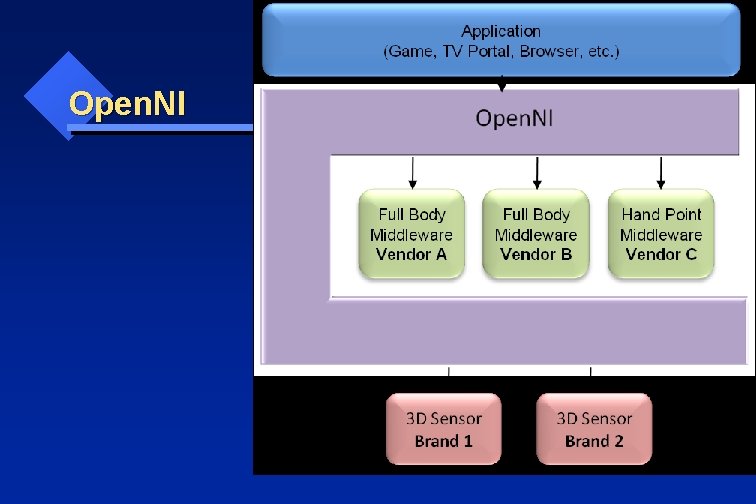

OpenNI

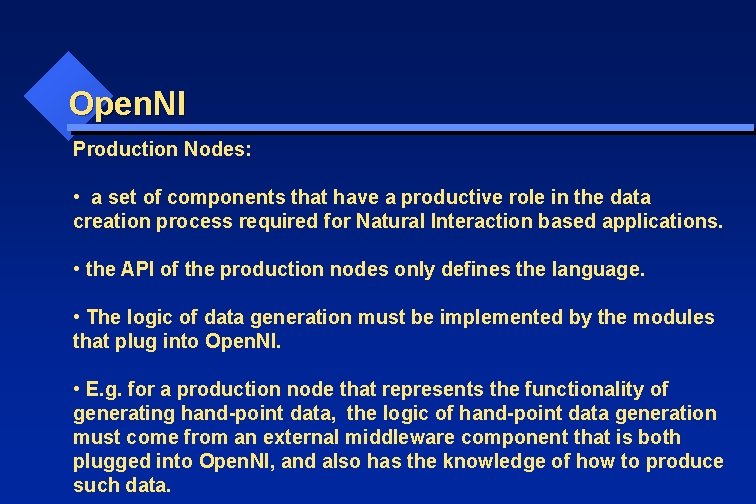

OpenNI Production Nodes:
- A set of components that have a productive role in the data creation process required for Natural Interaction based applications.
- The API of the production nodes only defines the language, i.e., the interface.
- The logic of data generation must be implemented by the modules that plug into OpenNI.
- E.g., for a production node that represents the functionality of generating hand-point data, the logic of hand-point data generation must come from an external middleware component that is both plugged into OpenNI and knows how to produce such data.
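The split between "the framework defines the interface" and "a plugged-in module supplies the logic" maps naturally onto an abstract class plus a registry. The sketch below only illustrates that pattern. `HandPointNode`, `DemoMiddleware`, and `Framework` are hypothetical names and are not the OpenNI API.

```cpp
#include <map>
#include <memory>
#include <string>
#include <utility>

struct HandPoint { float x, y, z; };

// The interface ("language") defined by the framework: it says what a
// hand-point node provides, not how the data is produced.
class HandPointNode {
public:
    virtual ~HandPointNode() = default;
    virtual HandPoint currentHand() = 0;
};

// A hypothetical middleware module that knows how to produce the data.
// Here it just returns a canned point.
class DemoMiddleware : public HandPointNode {
public:
    HandPoint currentHand() override { return {0.1f, 0.2f, 1.5f}; }
};

// Minimal stand-in for module registration inside the framework.
class Framework {
public:
    void registerModule(const std::string& type, std::unique_ptr<HandPointNode> m) {
        modules_[type] = std::move(m);
    }
    // Returns the registered implementation, or nullptr if no module
    // provides this node type.
    HandPointNode* create(const std::string& type) {
        auto it = modules_.find(type);
        return it == modules_.end() ? nullptr : it->second.get();
    }
private:
    std::map<std::string, std::unique_ptr<HandPointNode>> modules_;
};
```

The application only ever talks to `HandPointNode`. Swapping in a different middleware changes where the data comes from without changing application code.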

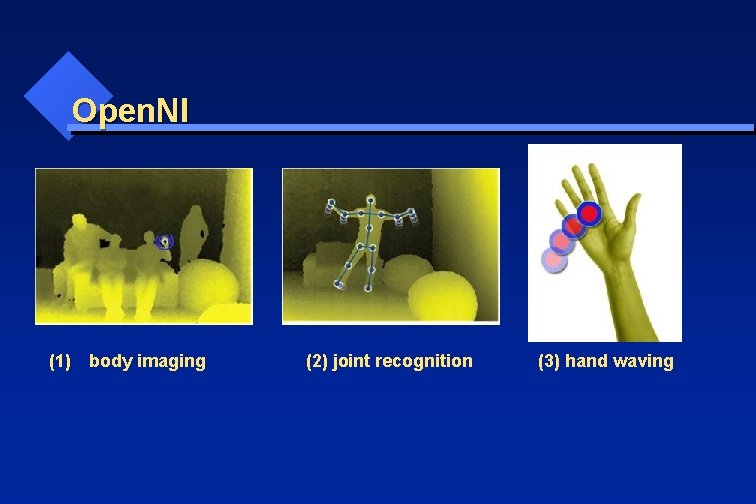

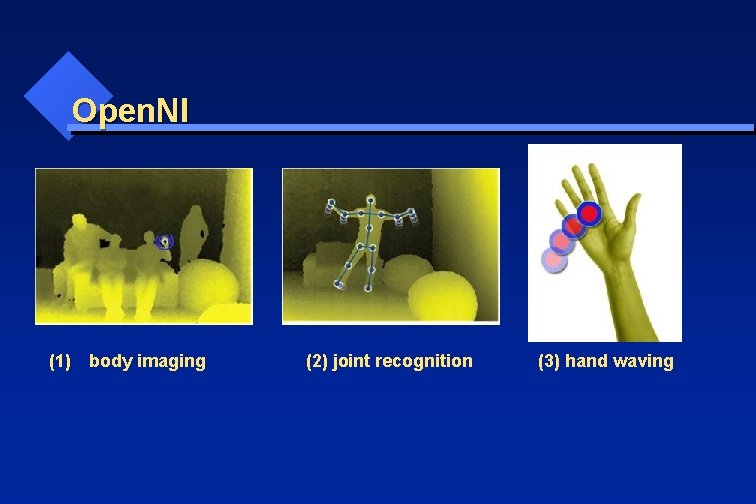

OpenNI: (1) body imaging, (2) joint recognition, (3) hand waving

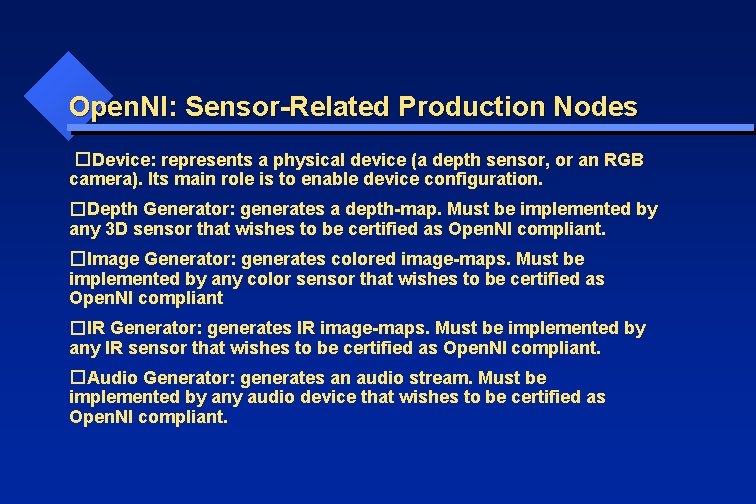

OpenNI: Sensor-Related Production Nodes
- Device: represents a physical device (a depth sensor or an RGB camera). Its main role is to enable device configuration.
- Depth Generator: generates a depth map. Must be implemented by any 3D sensor that wishes to be certified as OpenNI compliant.
- Image Generator: generates colored image maps. Must be implemented by any color sensor that wishes to be certified as OpenNI compliant.
- IR Generator: generates IR image maps. Must be implemented by any IR sensor that wishes to be certified as OpenNI compliant.
- Audio Generator: generates an audio stream. Must be implemented by any audio device that wishes to be certified as OpenNI compliant.

OpenNI: Middleware-Related Production Nodes
- Gestures Alert Generator: generates callbacks to the application when specific gestures are identified.
- Scene Analyzer: analyzes a scene, including separating the foreground from the background, identifying figures in the scene, and detecting the floor plane. The Scene Analyzer's main output is a labeled depth map, in which each pixel holds a label stating whether it represents a figure or is part of the background.
- Hand Point Generator: supports hand detection and tracking. This node generates callbacks that provide alerts when a hand point (meaning, a palm) is detected, and when a hand point currently being tracked changes its location.
- User Generator: generates a representation of a (full or partial) body in the 3D scene.
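To make the idea of a labeled depth map concrete, the sketch below produces one with a naive depth cut-off. This is a deliberately simple stand-in, not the algorithm any OpenNI middleware uses. It assumes depth values in millimetres, treats 0 as "no reading", and labels each pixel 1 (figure) or 0 (background).

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Naive foreground/background separation by depth threshold.
// Pixels nearer than cutoffMm (and with a valid reading) become "figure".
std::vector<std::uint8_t> labelDepthMap(const std::vector<std::uint16_t>& depthMm,
                                        std::uint16_t cutoffMm) {
    std::vector<std::uint8_t> labels(depthMm.size(), 0);
    for (std::size_t i = 0; i < depthMm.size(); ++i) {
        std::uint16_t d = depthMm[i];
        labels[i] = (d != 0 && d < cutoffMm) ? 1 : 0;
    }
    return labels;
}
```

A real scene analyzer also separates individual figures, giving each one its own label, and detects the floor plane. This sketch only shows the output format: one label per depth pixel.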

OpenNI: Recording Production Nodes
- Recorder: implements data recordings
- Player: reads data from a recording and plays it
- Codec: used to compress and decompress data in recordings
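The recorder, player, and codec roles can be sketched together. Run-length encoding is swapped in here only because it is short; it is not the codec OpenNI ships. Depth frames often contain long runs of equal values, such as 0 where there is no reading, which RLE stores as (value, count) pairs. All names are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

using Frame = std::vector<std::uint16_t>;
using Encoded = std::vector<std::pair<std::uint16_t, std::uint32_t>>;

// Codec: compress a frame into (value, run length) pairs.
Encoded compress(const Frame& f) {
    Encoded out;
    for (std::uint16_t v : f) {
        if (!out.empty() && out.back().first == v) ++out.back().second;
        else out.emplace_back(v, 1u);
    }
    return out;
}

// Codec: expand the pairs back into the original frame.
Frame decompress(const Encoded& e) {
    Frame out;
    for (const auto& [value, count] : e) out.insert(out.end(), count, value);
    return out;
}

// Recorder stores compressed frames; Player decodes them on request.
struct Recording {
    std::vector<Encoded> frames;
    void record(const Frame& f) { frames.push_back(compress(f)); }
    Frame play(std::size_t i) const { return decompress(frames.at(i)); }
};
```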

OpenNI: Capabilities

OpenNI supports the registration of multiple middleware components and devices. It is released with a specific set of capabilities, with the option of adding further capabilities in the future. Each module can declare the capabilities it supports. Currently supported capabilities:
- Alternative View: enables any type of map generator to transform its data to appear as if the sensor were placed in another location.
- Cropping: enables a map generator to output a selected area of the frame.
- Frame Sync: enables two sensors producing frame data (for example, depth and image) to synchronize their frames so that they arrive at the same time.
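Declaring and querying capabilities, plus what cropping does to a map, can be sketched as follows. `MapGenerator` and `isCapabilitySupported` are hypothetical names chosen for illustration, not OpenNI declarations. The sketch assumes the map is stored row-major and that the crop rectangle lies inside it.

```cpp
#include <cstddef>
#include <set>
#include <string>
#include <vector>

struct MapGenerator {
    int width, height;
    std::vector<int> map;                 // width * height values, row-major
    std::set<std::string> capabilities;   // declared by the module, e.g. {"Cropping"}

    // The application checks before relying on an optional capability.
    bool isCapabilitySupported(const std::string& name) const {
        return capabilities.count(name) > 0;
    }

    // Cropping: output only the rectangle [x0, x0+w) x [y0, y0+h).
    std::vector<int> crop(int x0, int y0, int w, int h) const {
        std::vector<int> out;
        out.reserve(static_cast<std::size_t>(w) * h);
        for (int y = y0; y < y0 + h; ++y)
            for (int x = x0; x < x0 + w; ++x)
                out.push_back(map[y * width + x]);
        return out;
    }
};
```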

OpenNI: Capabilities

Currently supported capabilities:
- Mirror: enables mirroring of the data produced by a generator.
- Pose Detection: enables a user generator to recognize when the user is posed in a specific position.
- Skeleton: enables a user generator to output the skeletal data of the user. This data includes the location of the skeletal joints, the ability to track skeleton positions, and the user calibration capabilities.
- User Position: enables a Depth Generator to optimize the output depth map that is generated for a specific area of the scene.
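As an illustration of what a mirror capability does to its output, the hypothetical helper below reverses each row of a row-major map in place. The result looks like a mirror image, which is handy when users should see their own movements mirrored on screen.

```cpp
#include <utility>
#include <vector>

// Flip a row-major width x height map horizontally, in place.
void mirrorInPlace(std::vector<int>& map, int width, int height) {
    for (int y = 0; y < height; ++y) {
        int left = y * width;
        int right = left + width - 1;
        while (left < right) std::swap(map[left++], map[right--]);
    }
}
```

Applying the mirror twice restores the original map.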

OpenNI: Capabilities

Currently supported capabilities:
- Error State: enables a node to report that it is in "Error" status, meaning that on a practical level, the node may not function properly.
- Lock Aware: enables a node to be locked outside the context boundary.
- Hand Touching FOV Edge: alerts when the hand point reaches the boundaries of the field of view.
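A check in the spirit of "Hand Touching FOV Edge" might look like this. It assumes the tracked hand point is given in normalized image coordinates (0 to 1 on each axis) and uses a hypothetical `margin` parameter. The names are illustrative, not OpenNI's.

```cpp
// Which edges of the field of view the hand point is close to.
struct EdgeFlags { bool left, right, top, bottom; };

// u, v: hand position normalized to [0, 1]; margin: how close counts as touching.
EdgeFlags touchingFovEdge(double u, double v, double margin) {
    return { u <= margin, u >= 1.0 - margin, v <= margin, v >= 1.0 - margin };
}

bool touchesAnyEdge(const EdgeFlags& e) {
    return e.left || e.right || e.top || e.bottom;
}
```

An application could use such an alert to warn the user before the hand leaves the sensor's view and tracking is lost.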

OpenNI: Generating and Reading Data
- Production nodes that also produce data are called generators.
- Once created, generators do not immediately start generating data, so that the application can first set the required configuration.
- The xn::Generator::StartGenerating() function is used to begin generating data.
- xn::Generator::StopGenerating() stops it.
- Data generators "hide" new data internally until explicitly requested to expose the most updated data to the application, using the UpdateData request function.
- OpenNI enables the application to wait for new data to be available and then update it using the xn::Generator::WaitAndUpdateData() function.
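The start / stop / wait-and-update semantics can be imitated with a background thread and a condition variable. This is a mock with hypothetical method names that mirror the behavior described above; it is not OpenNI code. New frames stay hidden in `pending_` until `waitAndUpdateData()` exposes them, so the application always reads a stable value between updates.

```cpp
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>

class MockGenerator {
public:
    ~MockGenerator() { stopGenerating(); }

    // Nothing is produced before this call (call it at most once here).
    void startGenerating() {
        running_ = true;
        worker_ = std::thread([this] {
            for (int frame = 1; ; ++frame) {
                std::this_thread::sleep_for(std::chrono::milliseconds(5));
                std::lock_guard<std::mutex> lk(m_);
                if (!running_) return;
                pending_ = frame;       // new data, still hidden from data()
                hasNew_ = true;
                cv_.notify_one();
            }
        });
    }

    void stopGenerating() {
        {
            std::lock_guard<std::mutex> lk(m_);
            running_ = false;
        }
        if (worker_.joinable()) worker_.join();
    }

    // Blocks until fresh data exists, then exposes it via data().
    // In this sketch, waiting after stopGenerating() with no pending data
    // would block forever.
    void waitAndUpdateData() {
        std::unique_lock<std::mutex> lk(m_);
        cv_.wait(lk, [this] { return hasNew_; });
        current_ = pending_;
        hasNew_ = false;
    }

    int data() const { return current_; }   // last exposed frame (0 = none yet)

private:
    std::mutex m_;
    std::condition_variable cv_;
    std::thread worker_;
    bool running_ = false;
    bool hasNew_ = false;
    int pending_ = 0;
    int current_ = 0;
};
```

Separating "produced" from "exposed" data is the design point. The application controls when its view of the data changes, for example once per rendered frame.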

Interactive Game with Kinect

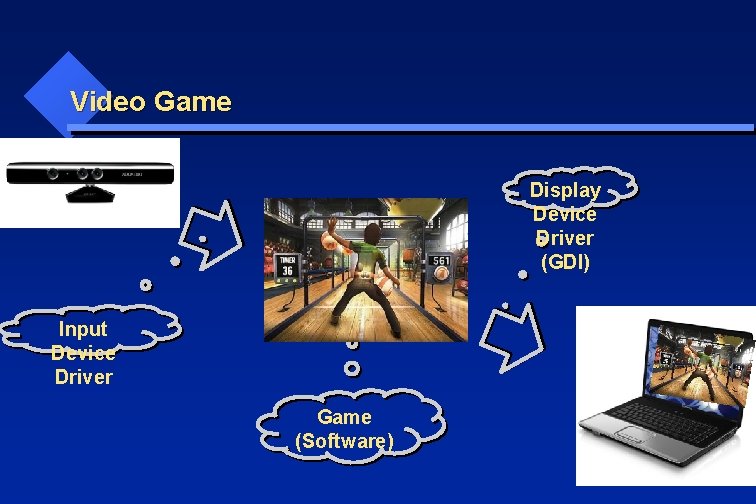

Video Game

Interactive animation: user -> interface -> game object action -> feedback (A/V, haptic). Game objects can represent data.
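The chain user -> interface -> game object action -> feedback can be sketched as one frame of a game loop. The input names and feedback strings below are hypothetical. The feedback is plain text standing in for audio/visual or haptic output.

```cpp
#include <string>
#include <vector>

enum class Input { Left, Right, Jump };

struct GameObject {
    int x = 0;
    bool airborne = false;
};

// Interface: map a raw input to an action on the game object and return
// the feedback the player should receive.
std::string handleInput(GameObject& obj, Input in) {
    switch (in) {
        case Input::Left:  obj.x -= 1; return "moved left";
        case Input::Right: obj.x += 1; return "moved right";
        case Input::Jump:  obj.airborne = true; return "jump sound";
    }
    return "";
}

// One frame of the loop: process every pending input, collect feedback.
std::vector<std::string> runFrame(GameObject& obj, const std::vector<Input>& inputs) {
    std::vector<std::string> feedback;
    for (Input in : inputs) feedback.push_back(handleInput(obj, in));
    return feedback;
}
```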

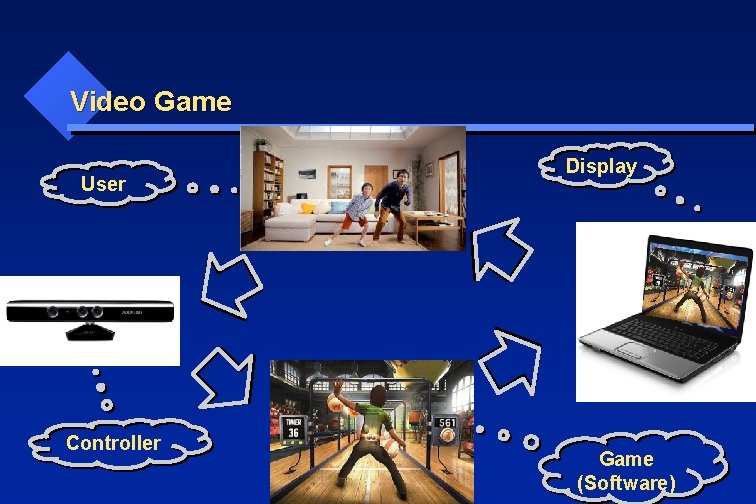

Video Game (diagram: User, Controller, Display, Game (Software))

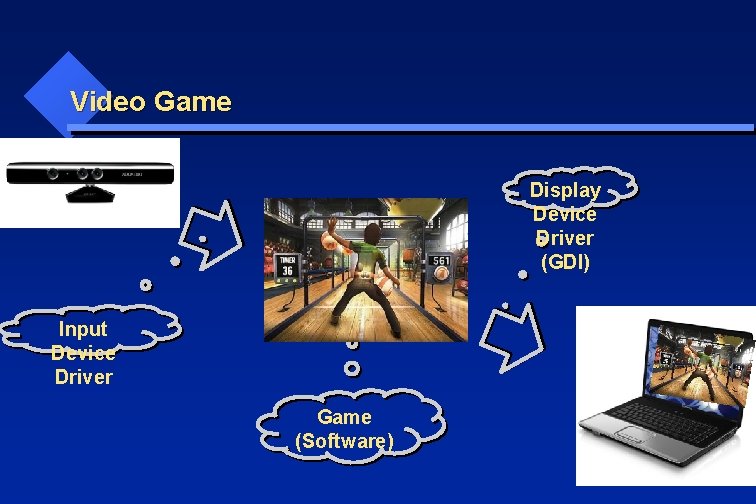

Video Game (diagram: Display Device Driver (GDI), Input Device Driver, Game (Software))

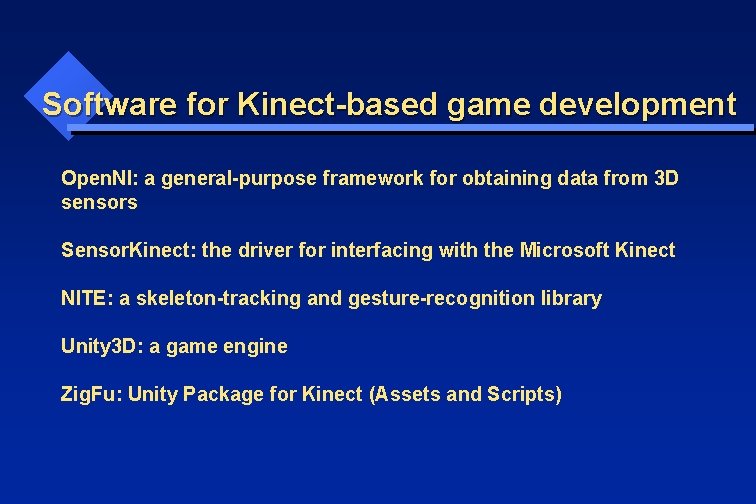

Software for Kinect-based game development
- OpenNI: a general-purpose framework for obtaining data from 3D sensors
- SensorKinect: the driver for interfacing with the Microsoft Kinect
- NITE: a skeleton-tracking and gesture-recognition library
- Unity 3D: a game engine
- ZigFu: Unity package for Kinect (assets and scripts)

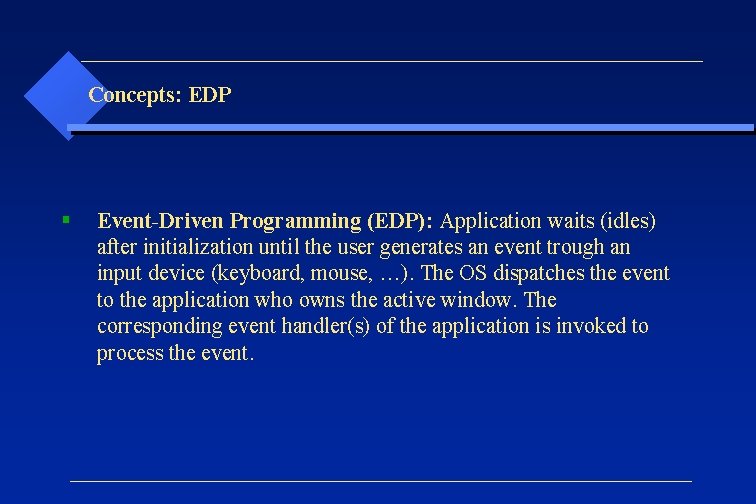

EDP (Event-driven Programming)

Concepts: EDP

Event-Driven Programming (EDP): after initialization, the application waits (idles) until the user generates an event through an input device (keyboard, mouse, …). The OS dispatches the event to the application that owns the active window. The application's corresponding event handler(s) are then invoked to process the event.
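The OS-side dispatch step can be modeled as a queue drained into whichever application owns the active window. This is a simplified sketch with hypothetical types. Real window systems add per-window queues, focus rules, and much more.

```cpp
#include <cstddef>
#include <queue>
#include <string>
#include <vector>

struct Event { std::string type; };

struct Application {
    std::string name;
    std::vector<std::string> handled;   // events this application processed
    void onEvent(const Event& e) { handled.push_back(e.type); }  // event handler
};

// Deliver every queued event to the application owning the active window.
// Applications without focus stay idle.
void dispatchAll(std::queue<Event>& systemQueue,
                 std::vector<Application>& apps, std::size_t activeWindow) {
    while (!systemQueue.empty()) {
        apps.at(activeWindow).onEvent(systemQueue.front());
        systemQueue.pop();
    }
}
```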

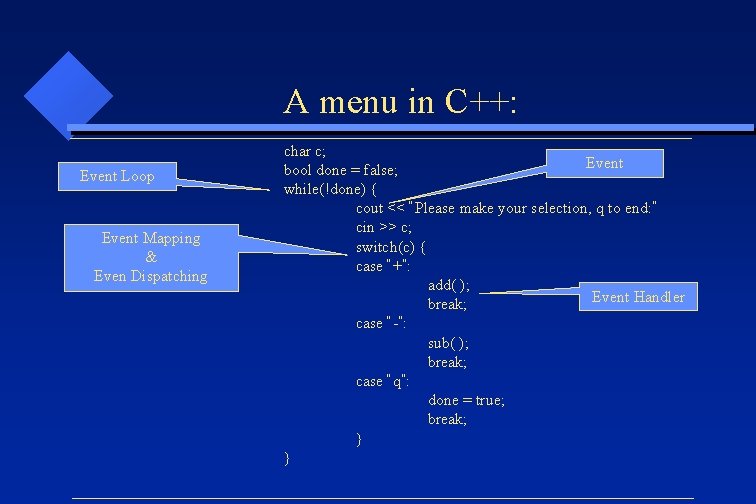

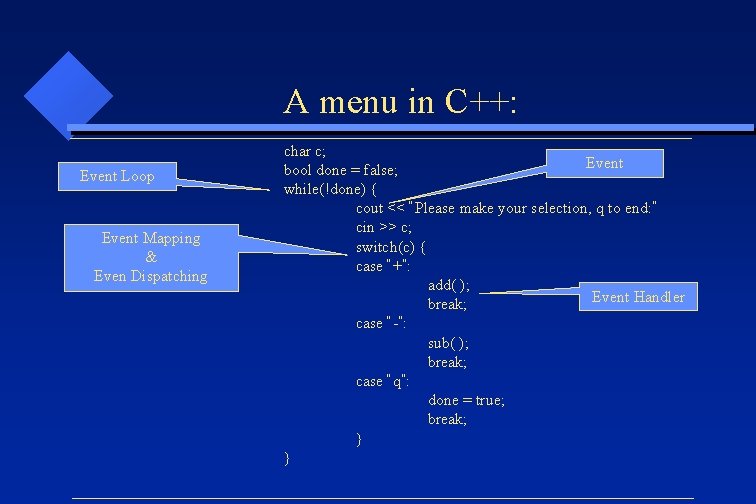

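A menu in C++, annotated with the event loop, the event, event mapping & event dispatching, and the event handlers:

```cpp
#include <iostream>

// Hypothetical event handlers (their bodies are not shown on the slide).
void add() { std::cout << "add\n"; }
void sub() { std::cout << "sub\n"; }

int main() {
    char c;                 // the event: one character typed by the user
    bool done = false;
    while (!done) {         // event loop
        std::cout << "Please make your selection, q to end: ";
        if (!(std::cin >> c)) break;   // stop at end of input
        switch (c) {        // event mapping & event dispatching
            case '+': add(); break;    // event handler
            case '-': sub(); break;
            case 'q': done = true; break;
        }
    }
    return 0;
}
```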

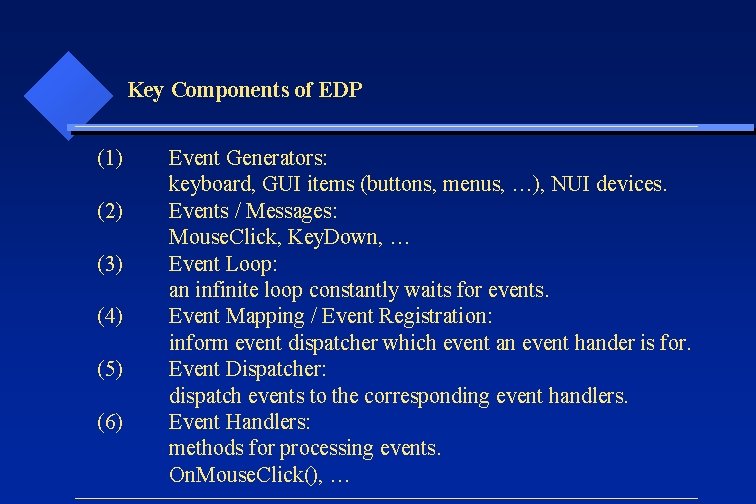

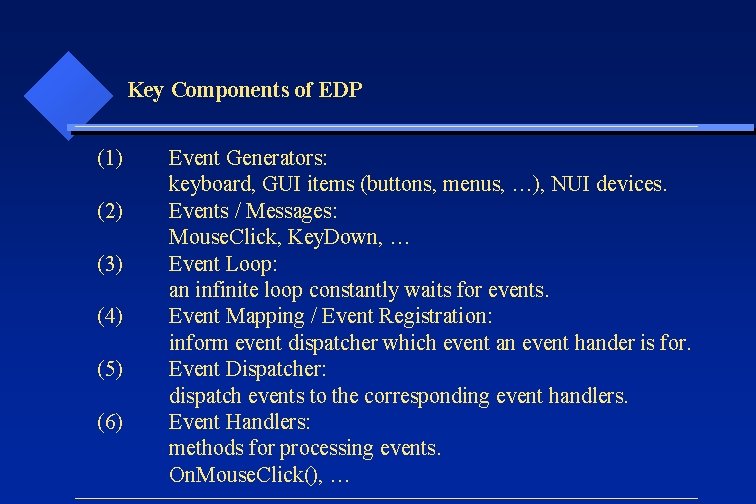

Key Components of EDP
(1) Event generators: keyboard, GUI items (buttons, menus, …), NUI devices.
(2) Events / messages: MouseClick, KeyDown, …
(3) Event loop: an infinite loop that constantly waits for events.
(4) Event mapping / event registration: informs the event dispatcher which event an event handler is for.
(5) Event dispatcher: dispatches events to the corresponding event handlers.
(6) Event handlers: methods for processing events, e.g., OnMouseClick(), …
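Components (2), (4), (5), and (6) can be sketched as a small dispatcher class. Event types are plain strings here for brevity; real toolkits use their own predefined event types. `EventDispatcher` and its `on`/`dispatch` methods are hypothetical names.

```cpp
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

struct Event { std::string type; int x = 0, y = 0; };   // (2) event

class EventDispatcher {
public:
    using Handler = std::function<void(const Event&)>;   // (6) event handler

    // (4) Event mapping / registration: remember which handler serves which event.
    void on(const std::string& type, Handler h) {
        handlers_[type].push_back(std::move(h));
    }

    // (5) Event dispatching: call every handler registered for this event type.
    // Returns how many handlers ran (0 means the event was ignored).
    int dispatch(const Event& e) const {
        auto it = handlers_.find(e.type);
        if (it == handlers_.end()) return 0;
        for (const Handler& h : it->second) h(e);
        return static_cast<int>(it->second.size());
    }

private:
    std::map<std::string, std::vector<Handler>> handlers_;
};
```

An event loop (3) would repeatedly read events from the generators (1) and pass each one to `dispatch`.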

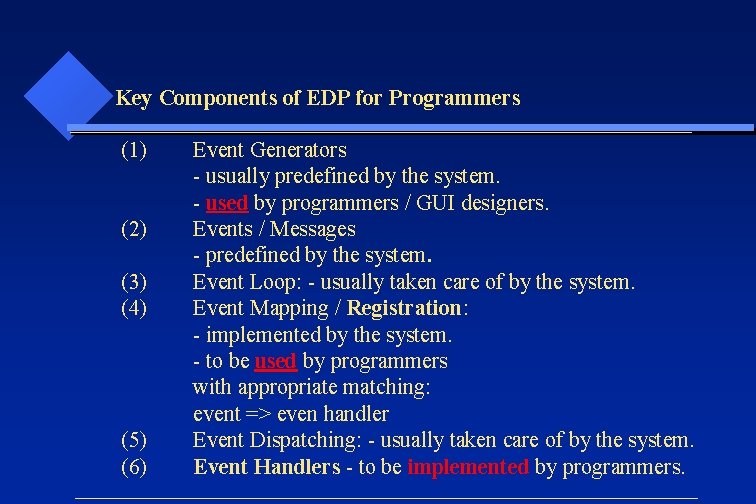

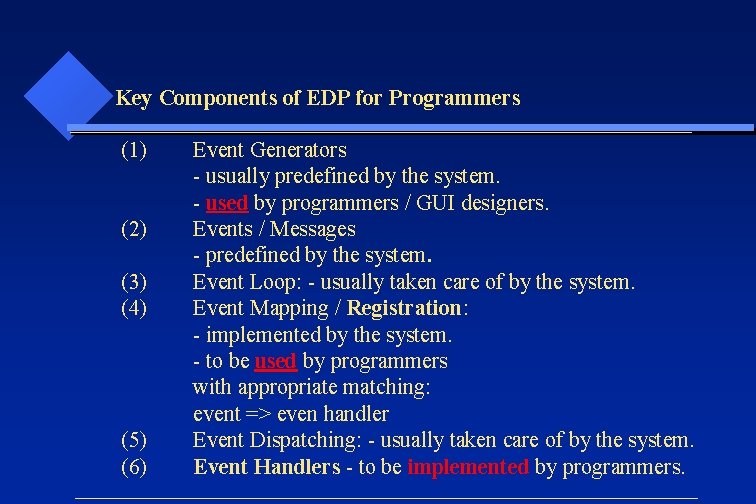

Key Components of EDP for Programmers
(1) Event generators: usually predefined by the system; used by programmers / GUI designers.
(2) Events / messages: predefined by the system.
(3) Event loop: usually taken care of by the system.
(4) Event mapping / registration: implemented by the system; used by programmers with the appropriate matching: event => event handler.
(5) Event dispatching: usually taken care of by the system.
(6) Event handlers: to be implemented by programmers.

EDP Programming

Most common EDP steps:
(1) Select input devices.
(2) Identify the event generators and events to use.
(3) Map events to event handlers.
(4) Implement event handlers.

Summary
- HCI: CLI, GUI, NUI
- HCI hardware
- Interactive tasks
- NUI
- Kinect-based games
- EDP

Interactive Computer Graphics for Visualization