Intelligent Learning -- A Brief Introduction to Artificial Neural Networks
Chiung-Yao Fang

Learning
- What is learning?
  - Machine learning is programming computers to optimize a performance criterion using example data or past experience.
  - There is no need to "learn" to calculate payroll.
  - Incremental learning, active learning, ...
- Types of learning
  - Supervised learning
  - Unsupervised learning
  - Reinforcement learning

1/10/2022

Understanding the Brain
- Levels of analysis (Marr, 1982)
  1. Computational theory
  2. Representation and algorithm
  3. Hardware implementation
- Example: sorting
  - The same computational theory may have multiple representations and algorithms.
  - A given representation and algorithm may have multiple hardware implementations.
- Reverse engineering: from hardware to theory
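The sorting example can be made concrete with a small sketch. The two functions below (names chosen here for illustration, not taken from the slides) share one computational theory, "return the items in ascending order", but use different algorithms.

```python
def insertion_sort(items):
    """Sort by inserting each element into an already-sorted prefix."""
    result = list(items)
    for i in range(1, len(result)):
        key = result[i]
        j = i - 1
        # Shift larger elements one slot to the right.
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key
    return result


def merge_sort(items):
    """Sort by recursively splitting the list and merging sorted halves."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left, right = merge_sort(items[:mid]), merge_sort(items[mid:])
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```

Both functions return the same result for any input list; only the algorithm level differs, which is the distinction Marr's second level captures.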

Understanding the Brain
- Parallel processing: SIMD vs MIMD
  - SIMD: single instruction, multiple data machines
    - All processors execute the same instruction but on different pieces of data.
  - MIMD: multiple instruction, multiple data machines
    - Different processors may execute different instructions on different data.
- Neural net:
  - NIMD: neural instruction, multiple data machines
    - Each processor corresponds to a neuron, its local parameters correspond to the neuron's synaptic weights, and the whole structure is a neural network.
- Learning: update by training/experience
- Learning from examples

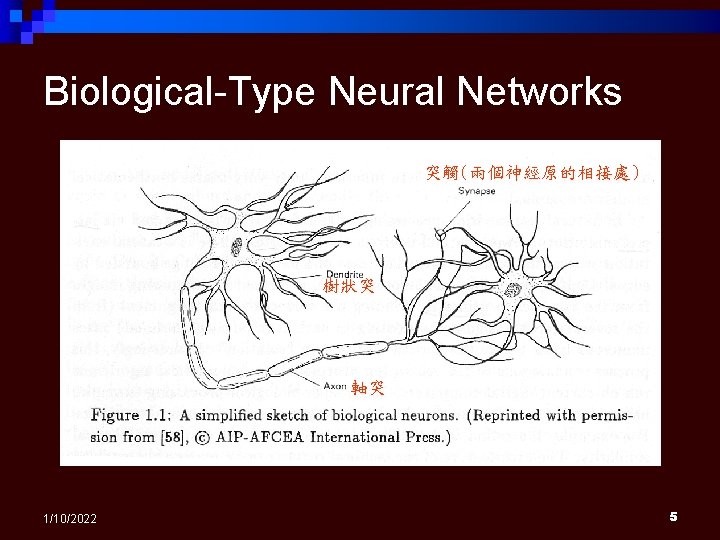

Biological-Type Neural Networks
Figure labels: synapse (the junction between two neurons), dendrite, axon

Application-Driven Neural Networks
- Three main characteristics:
  - Adaptiveness and self-organization
  - Nonlinear network processing
  - Parallel processing

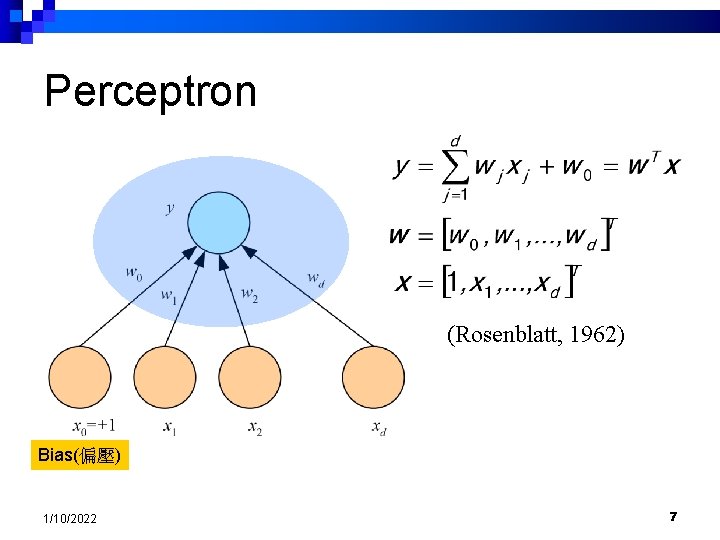

Perceptron (Rosenblatt, 1962)
Figure label: bias

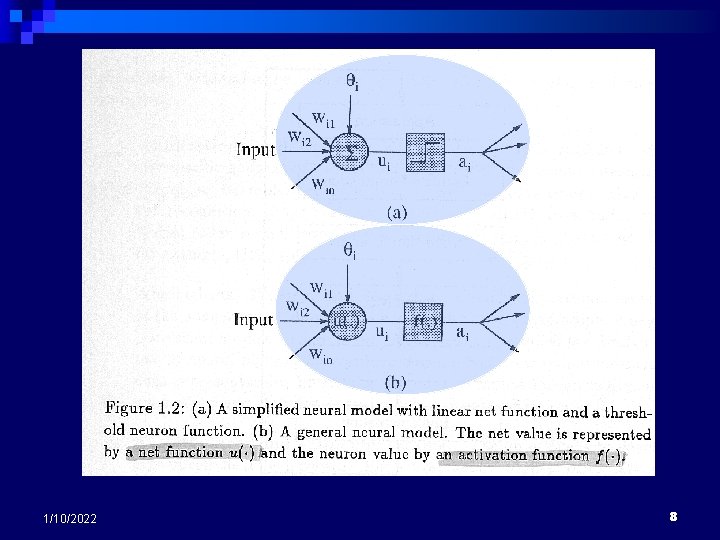


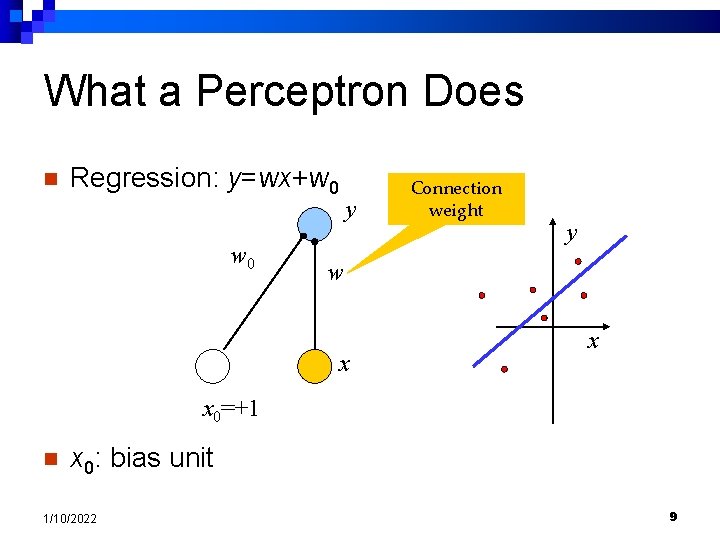

What a Perceptron Does
- Regression: $y = wx + w_0$
- $w$: connection weight
- $x_0 = +1$: bias unit

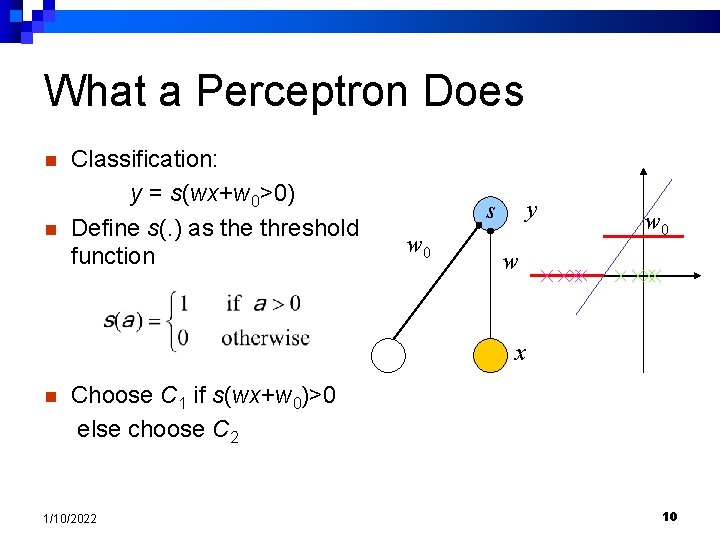

What a Perceptron Does
- Classification: $y = s(wx + w_0)$
- Define $s(\cdot)$ as the threshold function
- Choose $C_1$ if $s(wx + w_0) > 0$, else choose $C_2$
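A minimal sketch of the two uses of a single-input perceptron. The function names are illustrative, and the 0/1 form of the threshold function is an assumption, since the slide only names it.

```python
def perceptron_output(x, w, w0):
    """Regression output y = w*x + w0 (w0 is the weight of the bias unit x0 = +1)."""
    return w * x + w0


def threshold(a):
    """Assumed threshold function s(a): 1 when a > 0, otherwise 0."""
    return 1 if a > 0 else 0


def classify(x, w, w0):
    """Choose C1 if s(w*x + w0) > 0, else choose C2."""
    return "C1" if threshold(perceptron_output(x, w, w0)) > 0 else "C2"
```

With `w = 1.0` and `w0 = -1.0`, the decision boundary sits at `x = 1`: inputs above it fall in `C1`, the rest in `C2`.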

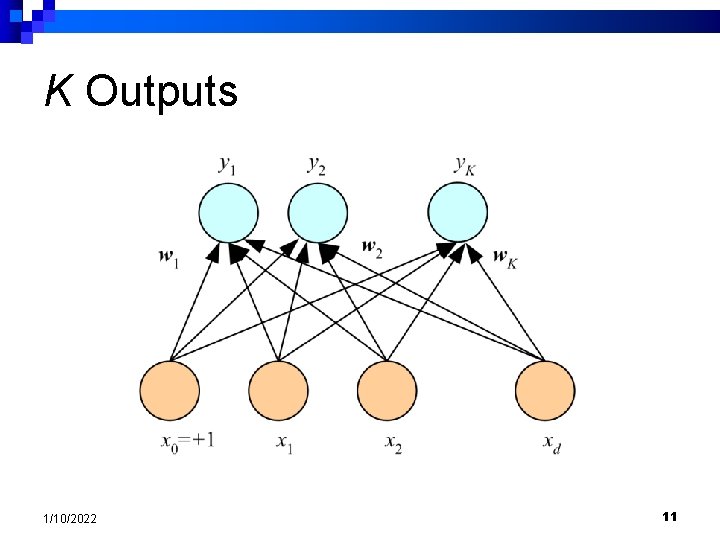

K Outputs

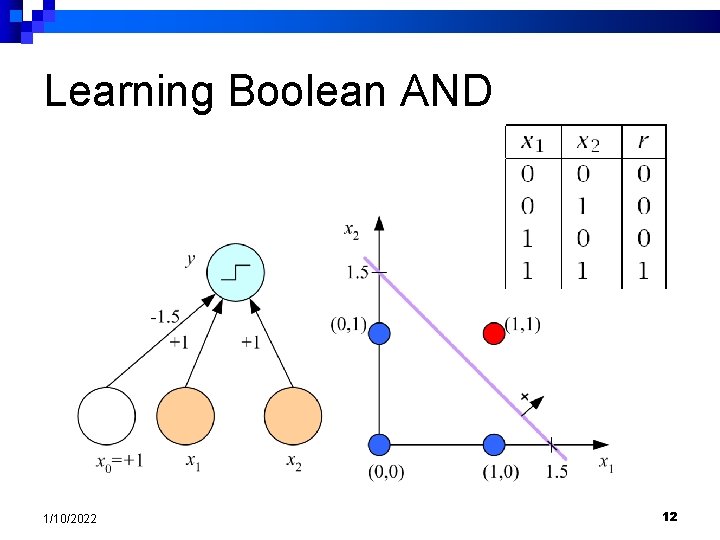

Learning Boolean AND
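As a hedged illustration, a threshold perceptron can learn AND with the classic perceptron update rule. The zero initial weights, learning rate of 1, and helper names below are assumptions made for this sketch, not values from the slide.

```python
# Truth table for Boolean AND: ((x1, x2), target)
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, inputs):
    """Threshold perceptron: output 1 when w0 + w1*x1 + w2*x2 > 0."""
    w0, w1, w2 = weights
    x1, x2 = inputs
    return 1 if w0 + w1 * x1 + w2 * x2 > 0 else 0


def train_perceptron(data, learning_rate=1, max_epochs=100):
    """Perceptron rule: w <- w + learning_rate * (target - output) * x."""
    weights = [0, 0, 0]  # [w0 (bias), w1, w2]
    for _ in range(max_epochs):
        errors = 0
        for (x1, x2), target in data:
            delta = target - predict(weights, (x1, x2))
            if delta != 0:
                errors += 1
                # The bias unit x0 is fixed at 1.
                for k, x in enumerate((1, x1, x2)):
                    weights[k] += learning_rate * delta * x
        if errors == 0:  # every pattern classified correctly
            break
    return weights


and_weights = train_perceptron(AND_DATA)
```

Because AND is linearly separable, the loop stops after a few epochs with weights that reproduce the truth table.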

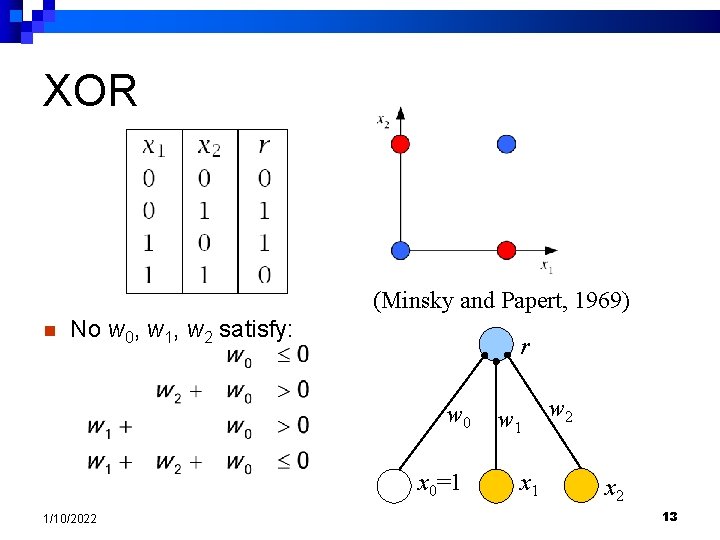

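XOR (Minsky and Papert, 1969)
- No $w_0$, $w_1$, $w_2$ satisfy the constraints that XOR places on a single perceptron.
Figure labels: inputs $x_0 = 1$, $x_1$, $x_2$ with weights $w_0$, $w_1$, $w_2$; desired output $r$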
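The claim can be checked by writing out the four constraints. The derivation below assumes a threshold unit that outputs 1 exactly when $w_1 x_1 + w_2 x_2 + w_0 > 0$.

$$
\begin{aligned}
(x_1, x_2) = (0, 0),\ r = 0 &: \quad w_0 \le 0 \\
(x_1, x_2) = (0, 1),\ r = 1 &: \quad w_2 + w_0 > 0 \\
(x_1, x_2) = (1, 0),\ r = 1 &: \quad w_1 + w_0 > 0 \\
(x_1, x_2) = (1, 1),\ r = 0 &: \quad w_1 + w_2 + w_0 \le 0
\end{aligned}
$$

Adding the second and third lines gives $w_1 + w_2 + 2w_0 > 0$, while adding the first and fourth gives $w_1 + w_2 + 2w_0 \le 0$. The two cannot hold together, so no single perceptron computes XOR.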

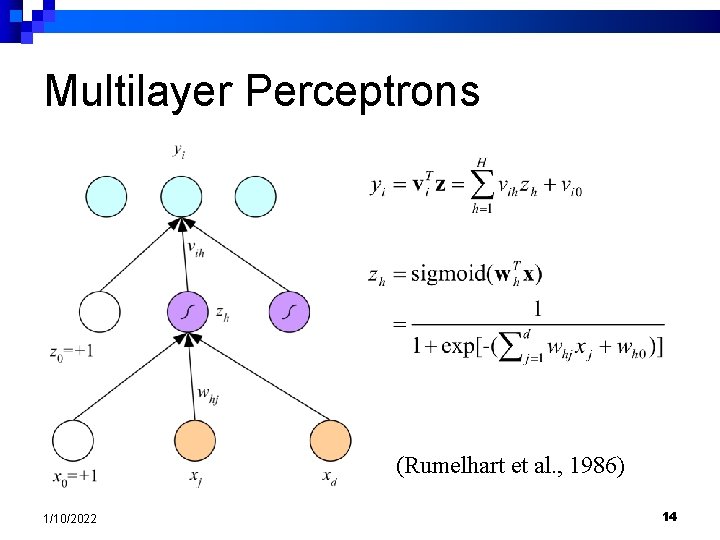

Multilayer Perceptrons (Rumelhart et al., 1986)

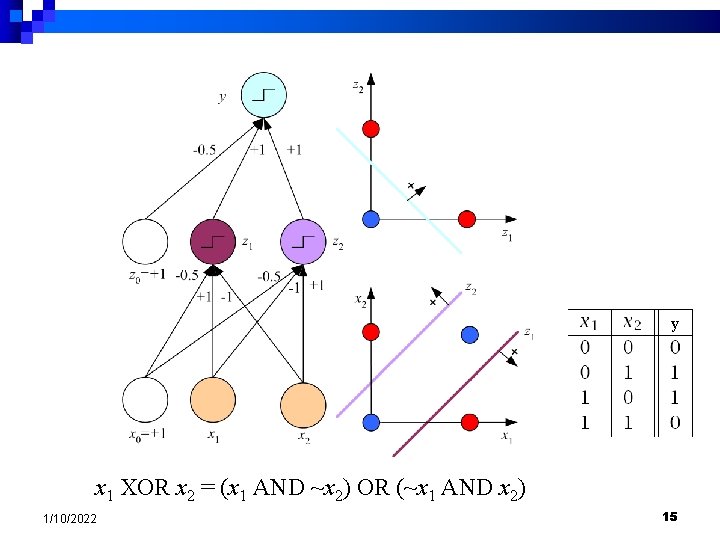

$x_1$ XOR $x_2$ = ($x_1$ AND ~$x_2$) OR (~$x_1$ AND $x_2$)
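The decomposition maps directly onto a two-layer network of threshold units. The weights below are hand-picked for this sketch (one of many valid choices) rather than taken from the slide.

```python
def step(a):
    """Threshold unit: fires (1) when its net input is positive."""
    return 1 if a > 0 else 0


def xor_mlp(x1, x2):
    """XOR built from two hidden threshold units and one output unit."""
    h1 = step(x1 - x2 - 0.5)    # x1 AND (NOT x2)
    h2 = step(x2 - x1 - 0.5)    # (NOT x1) AND x2
    return step(h1 + h2 - 0.5)  # h1 OR h2
```

Each hidden unit solves a linearly separable subproblem, and the output unit combines them, which is why one hidden layer is enough here.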

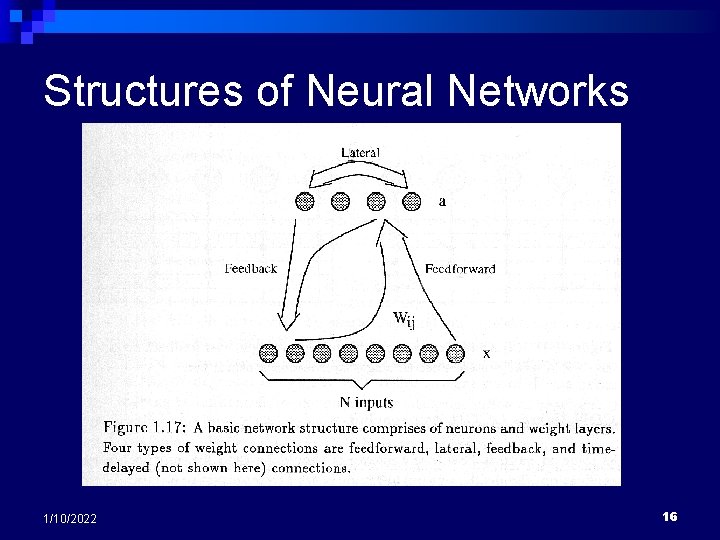

Structures of Neural Networks

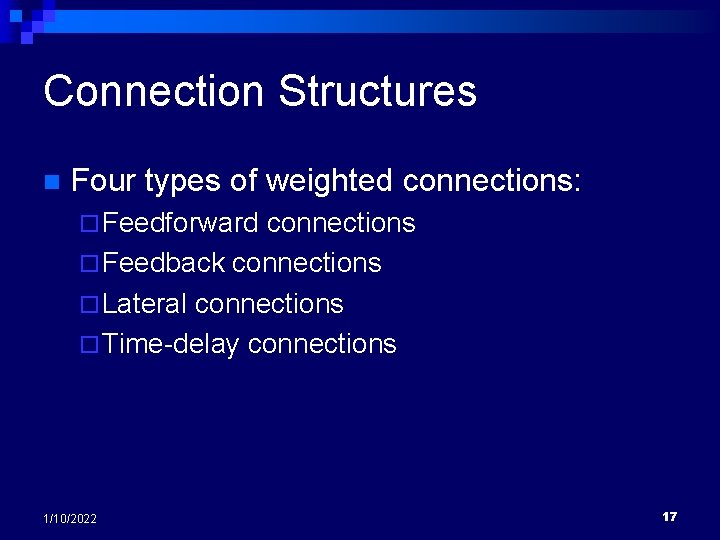

Connection Structures
- Four types of weighted connections:
  - Feedforward connections
  - Feedback connections
  - Lateral connections
  - Time-delay connections

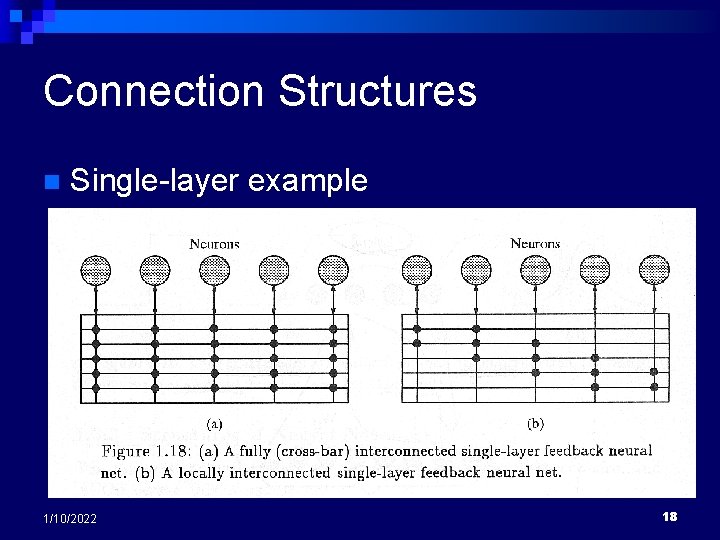

Connection Structures
- Single-layer example

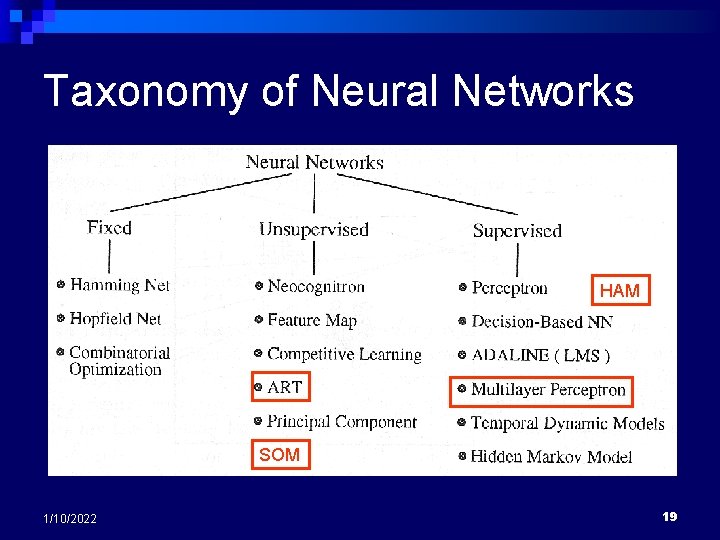

Taxonomy of Neural Networks
Figure labels: HAM, SOM

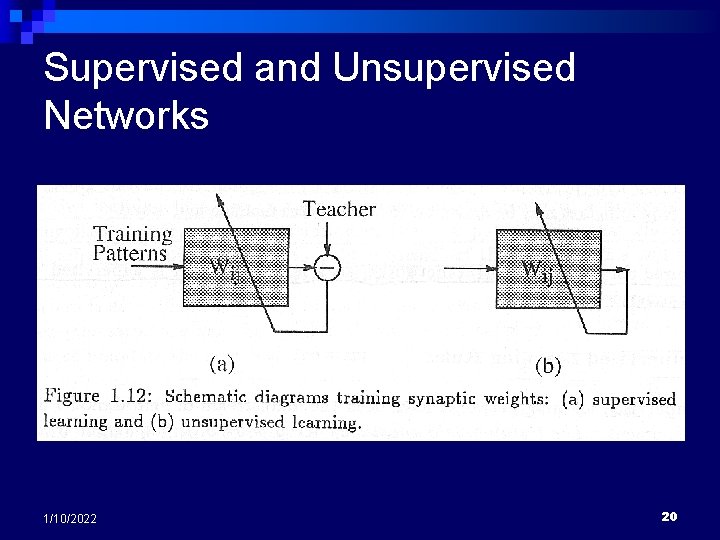

Supervised and Unsupervised Networks

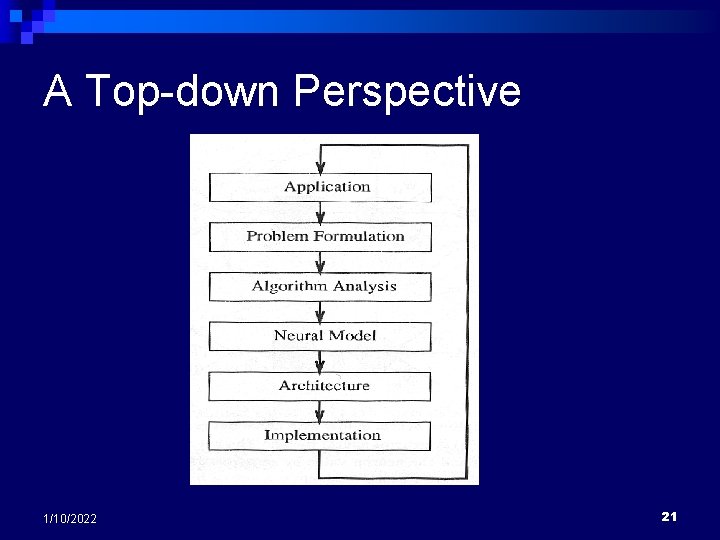

A Top-down Perspective

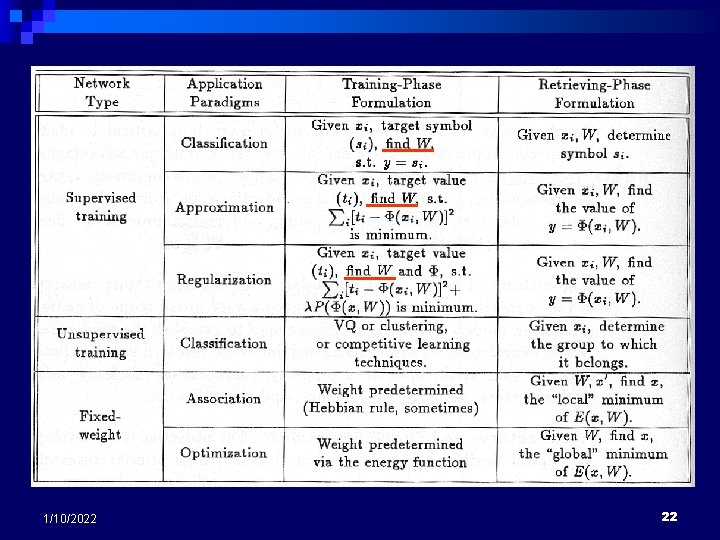


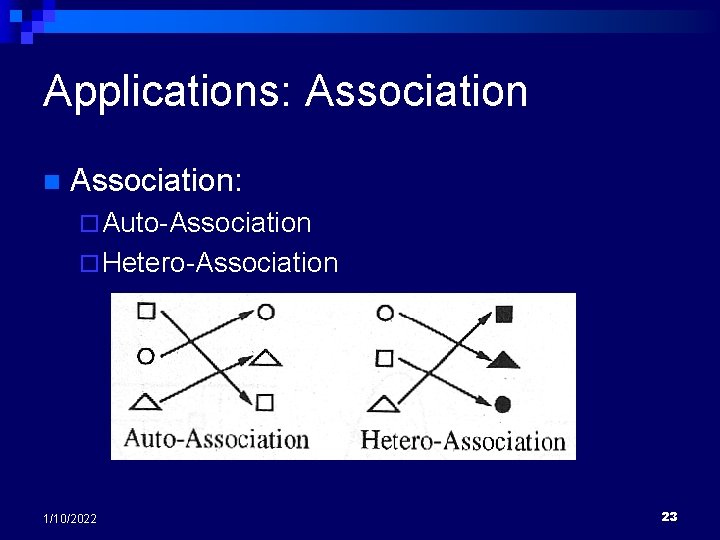

Applications: Association
- Association:
  - Auto-association
  - Hetero-association

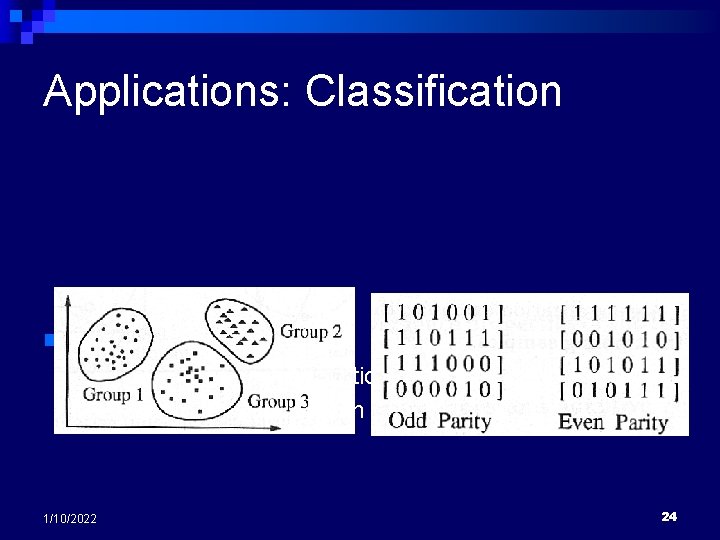

Applications: Classification
- Classification:
  - Unsupervised classification (clustering)
  - Supervised classification

Applications: Pattern Completion
- Two kinds of pattern completion problems:
  - Static pattern completion
    - Multilayer nets, Boltzmann machines, and Hopfield nets
  - Temporal pattern completion
    - Markov models and time-delay dynamic networks

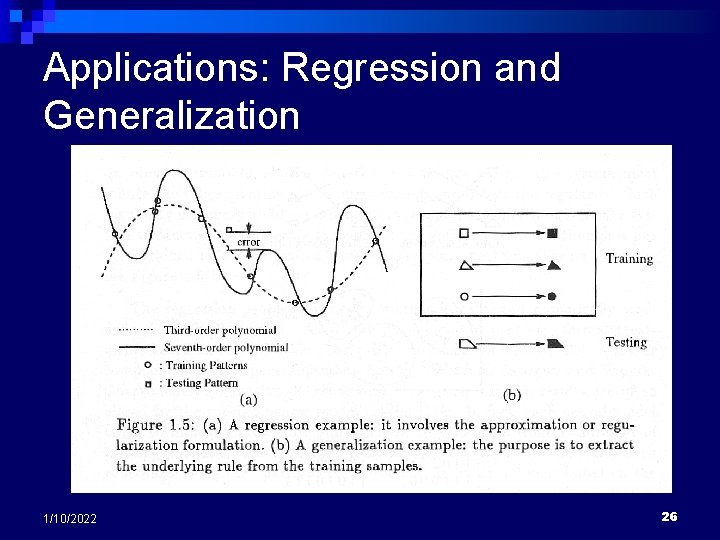

Applications: Regression and Generalization

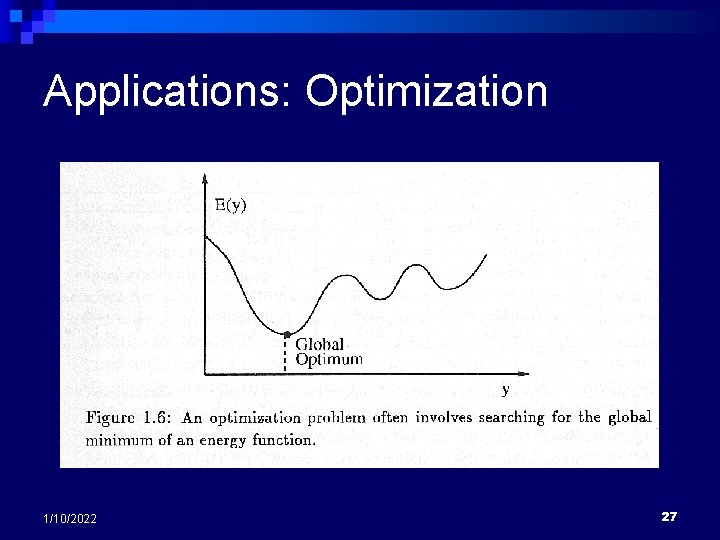

Applications: Optimization

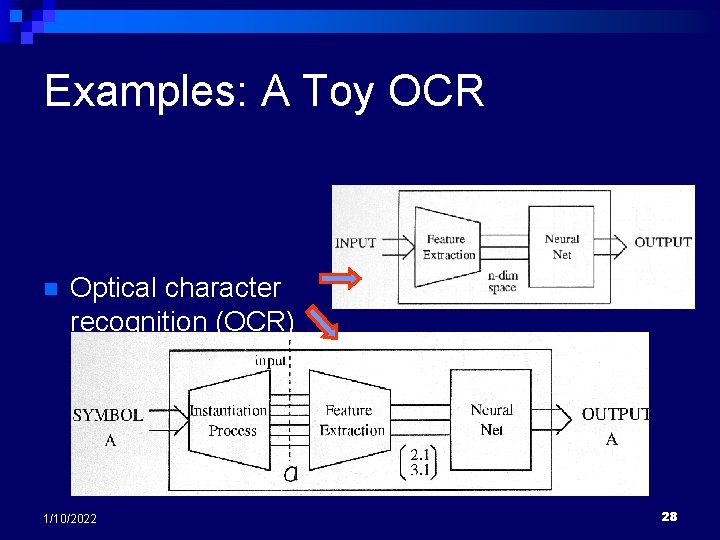

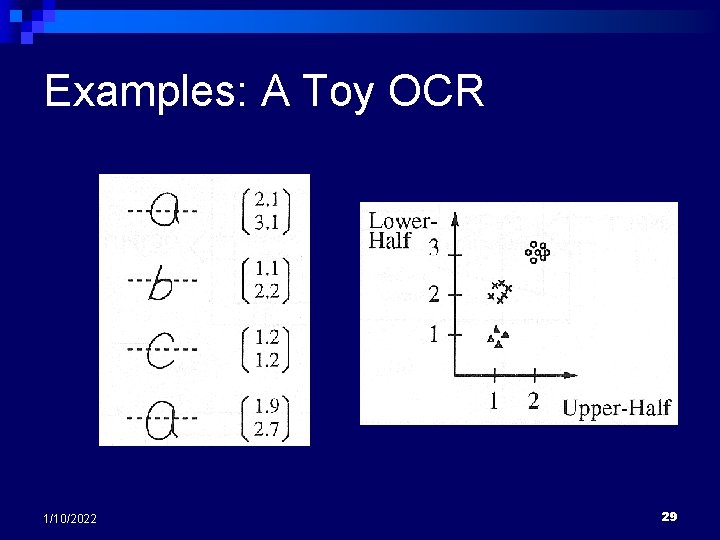

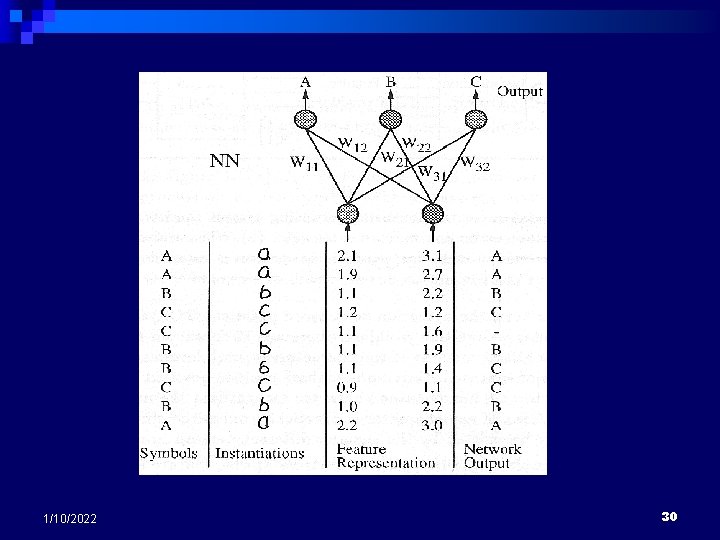

Examples: A Toy OCR
- Optical character recognition (OCR)
  - Supervised learning
    - The retrieving phase
    - The training phase

Examples: A Toy OCR


Supervised Learning Neural Networks
- Backpropagation
- HAM

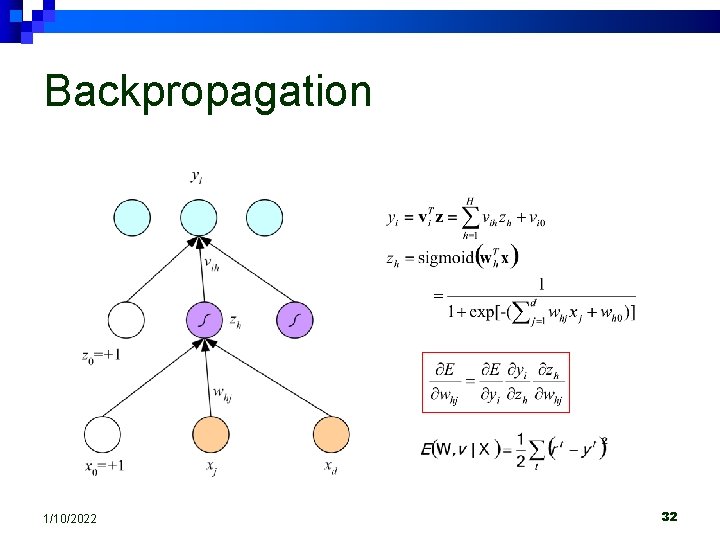

Backpropagation

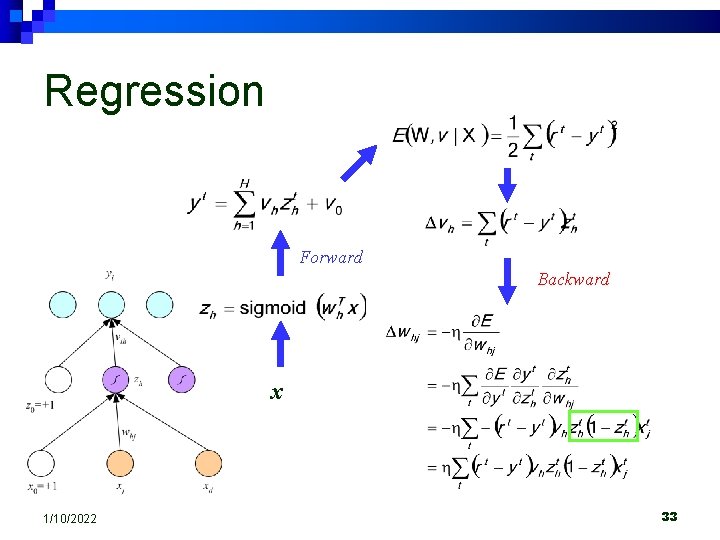

Regression
Figure labels: forward, backward, $x$

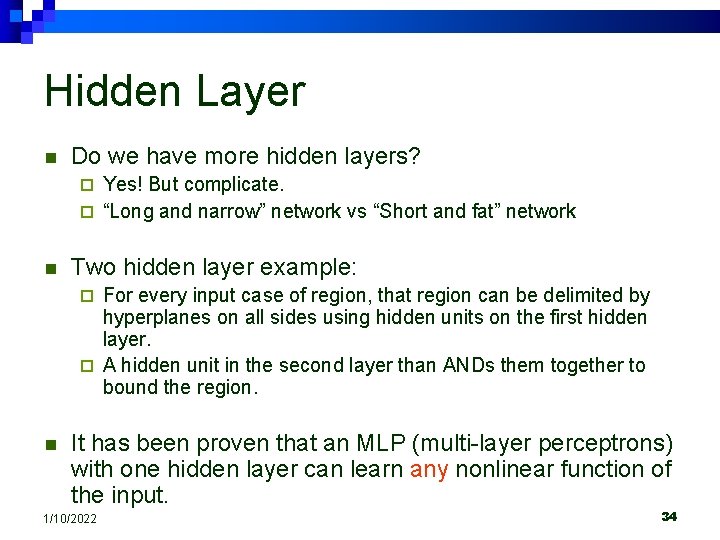

Hidden Layer
- Can we use more hidden layers? Yes, but the network becomes more complicated.
  - "Long and narrow" network vs "short and fat" network
- Two-hidden-layer example:
  - For every input case or region, that region can be delimited by hyperplanes on all sides using hidden units on the first hidden layer.
  - A hidden unit in the second layer then ANDs them together to bound the region.
- It has been proven that an MLP (multilayer perceptron) with one hidden layer can approximate any continuous nonlinear function of the input, given enough hidden units.
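The two-hidden-layer idea can be sketched with threshold units. The region (the open unit square), the weights, and the function names are assumptions chosen for illustration: four first-layer units each test one bounding hyperplane, and one second-layer unit ANDs them.

```python
def step(a):
    """Threshold unit: fires (1) when its net input is positive."""
    return 1 if a > 0 else 0


def in_unit_square(x, y):
    """Return 1 when (x, y) lies inside the open unit square, else 0."""
    # First hidden layer: one unit per bounding hyperplane (here, a line).
    first_layer = [
        step(x),      # right of the line x = 0
        step(1 - x),  # left of the line x = 1
        step(y),      # above the line y = 0
        step(1 - y),  # below the line y = 1
    ]
    # Second hidden layer: fires only if all four first-layer units fire (AND).
    return step(sum(first_layer) - 3.5)
```

A more complex region would need more first-layer units, and a union of several regions would need one AND unit per region followed by an OR at the output.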

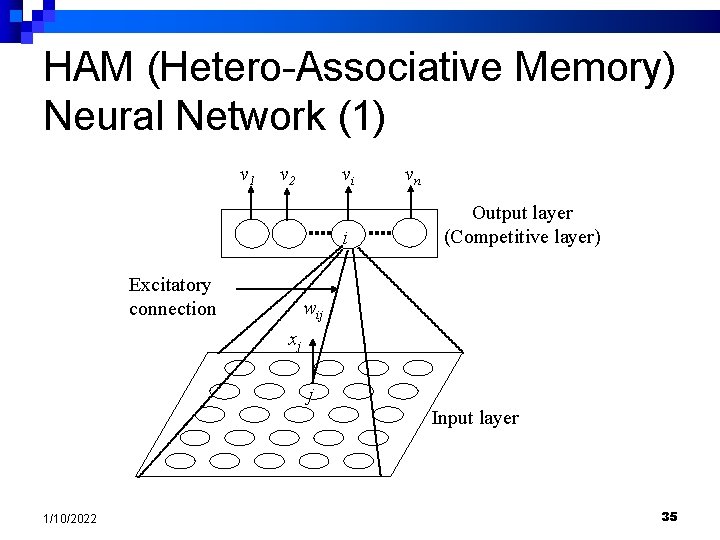

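HAM (Hetero-Associative Memory) Neural Network (1)
Figure labels: output layer (competitive layer) with outputs $v_1, v_2, \ldots, v_i, \ldots, v_n$; input layer with inputs $x_j$; excitatory connections with weights $w_{ij}$ between input neuron $j$ and output neuron $i$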

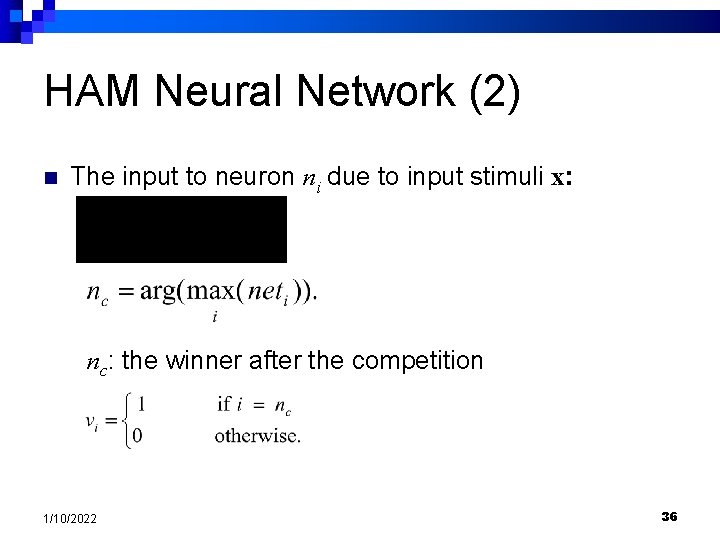

HAM Neural Network (2)
- The input to neuron $n_i$ due to input stimuli $x$
- $n_c$: the winner after the competition
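The slide's formula did not survive extraction, so the sketch below rests on an assumption: that the input to neuron $n_i$ is the weighted sum $\sum_j w_{ij} x_j$ and that the winner $n_c$ is the neuron with the largest such input. The function names are hypothetical.

```python
def net_inputs(weights, x):
    """Assumed net input to each output neuron i: sum over j of w_ij * x_j."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in weights]


def winner(weights, x):
    """Index c of the output neuron with the largest net input (winner-take-all)."""
    nets = net_inputs(weights, x)
    return max(range(len(nets)), key=nets.__getitem__)
```

For example, with `weights = [[1, 0, 0], [0, 1, 1]]` and `x = [0, 1, 1]`, the net inputs are `[0, 2]`, so neuron 1 wins the competition.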

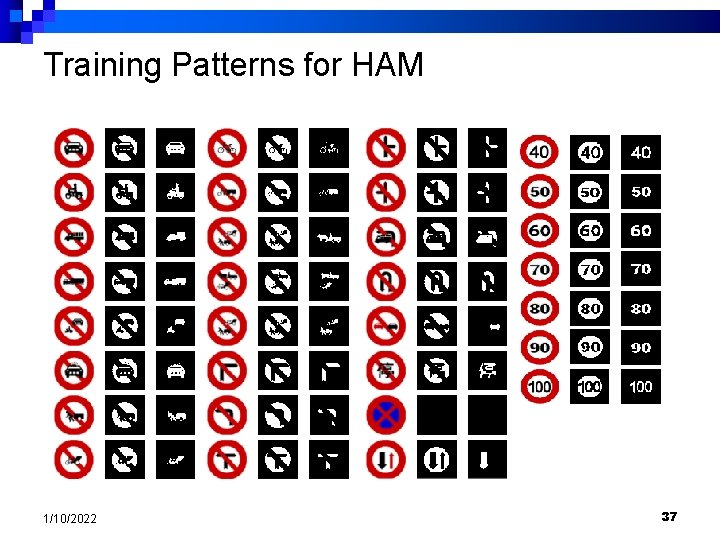

Training Patterns for HAM

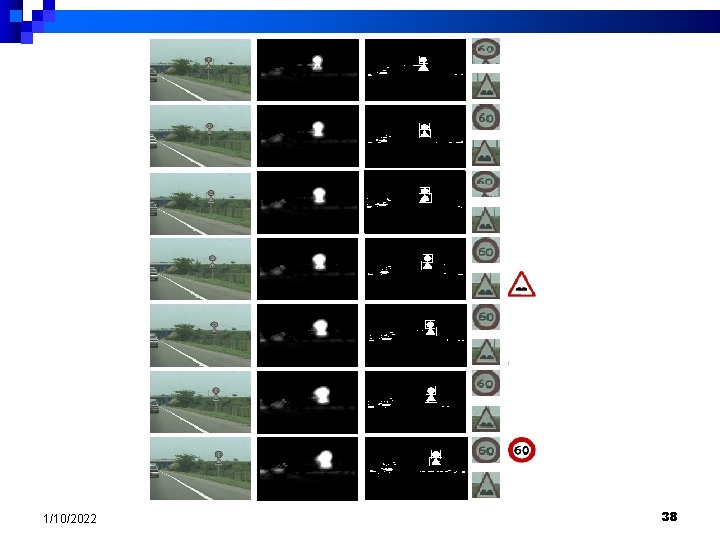


Unsupervised Learning Neural Networks
- SOM
- ART 1
- ART 2

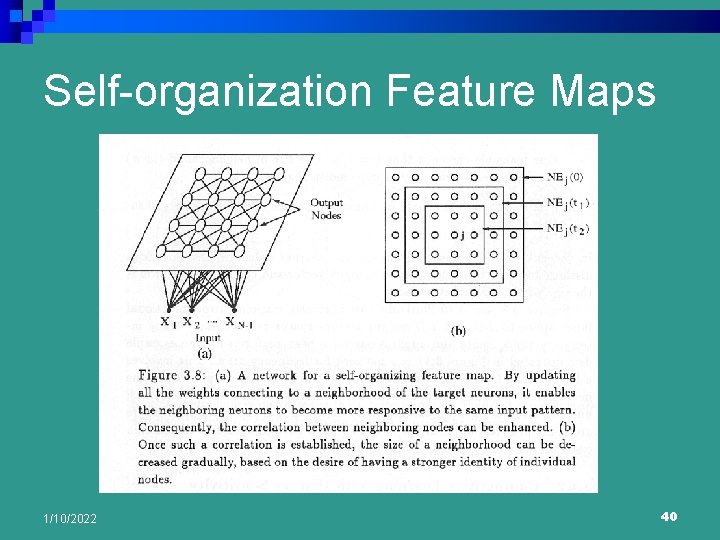

Self-Organizing Feature Maps

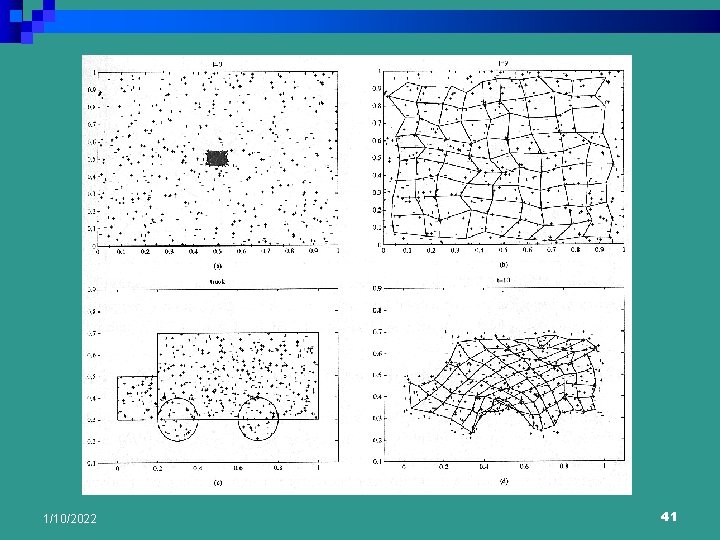


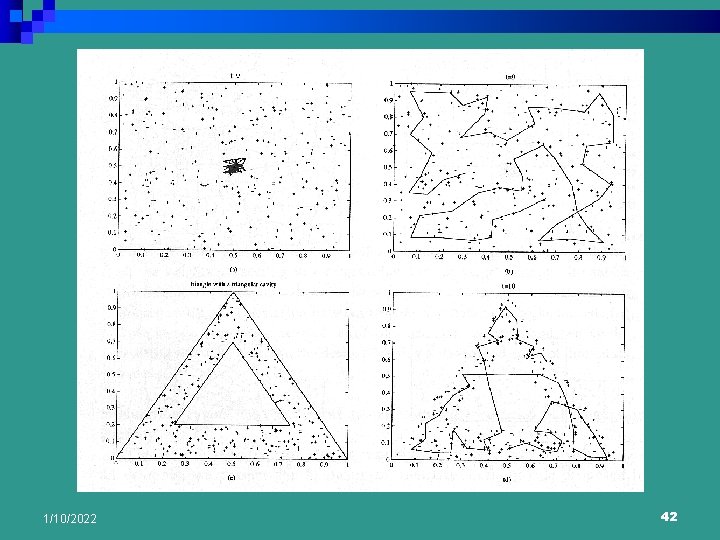


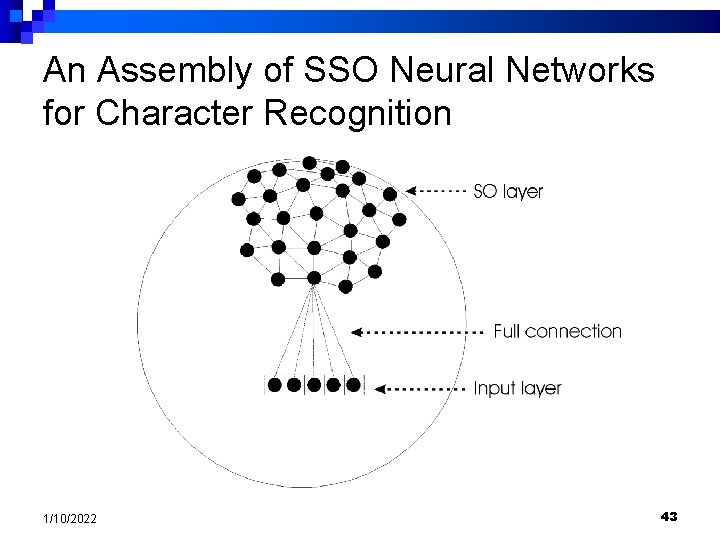

An Assembly of SSO Neural Networks for Character Recognition

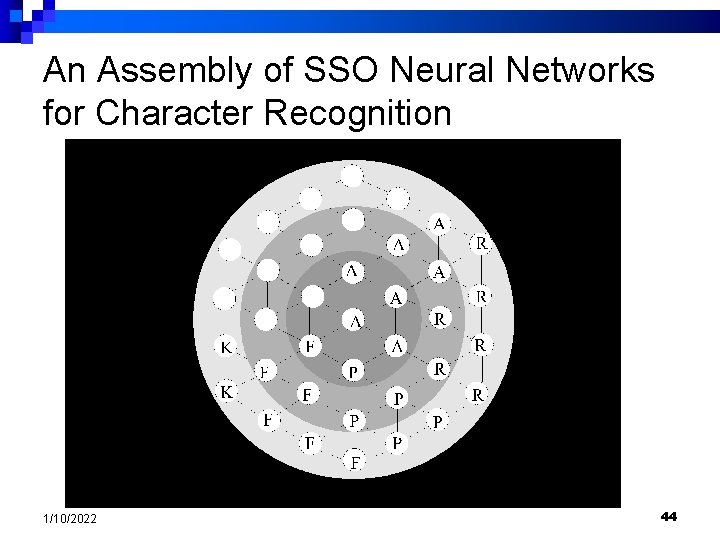

An Assembly of SSO Neural Networks for Character Recognition

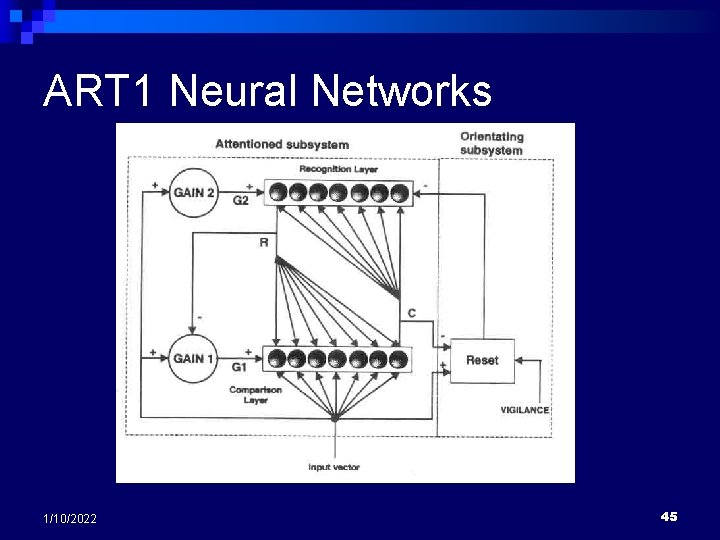

ART 1 Neural Networks

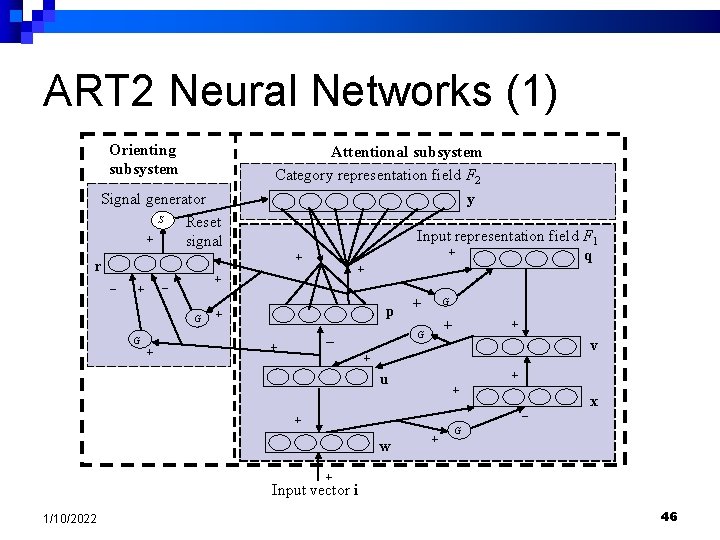

ART 2 Neural Networks (1)
Figure labels: orienting subsystem (signal generator $S$, reset signal); attentional subsystem with category representation field $F_2$ ($y$) and input representation field $F_1$; node labels $q$, $r$, $p$, $v$, $u$, $x$, $w$, and $G$, joined by excitatory (+) and inhibitory (-) connections; input vector $i$

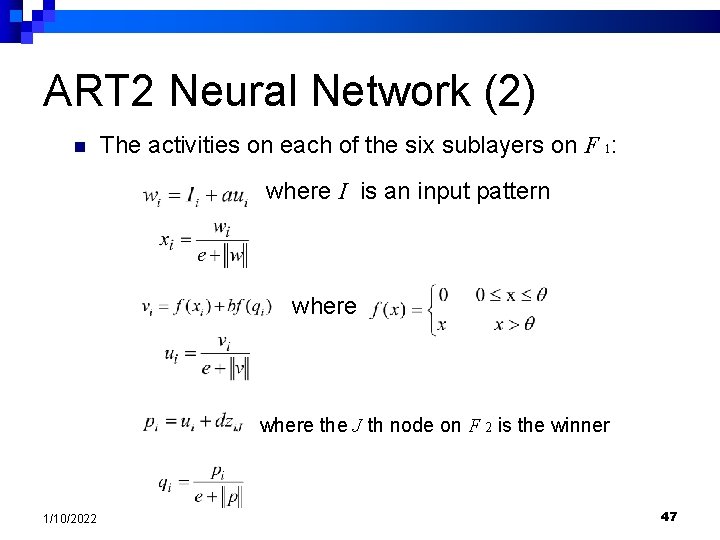

ART 2 Neural Network (2)
- The activities on each of the six sublayers on $F_1$, where $I$ is an input pattern and the $J$th node on $F_2$ is the winner

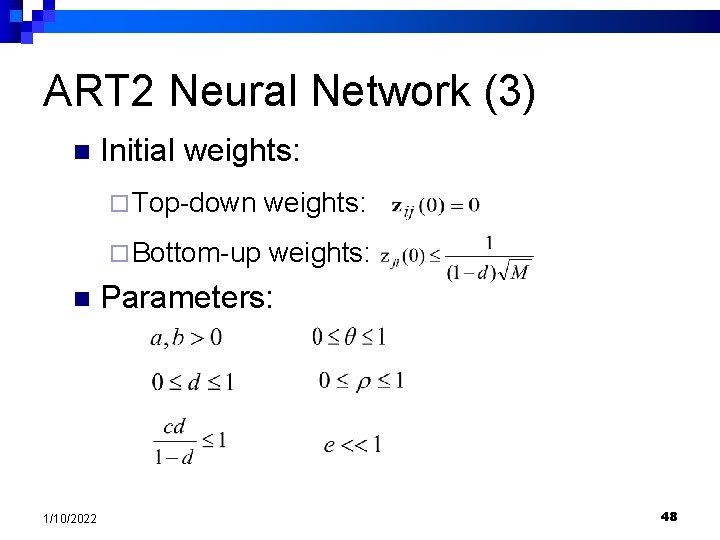

ART 2 Neural Network (3)
- Initial weights
  - Top-down weights
  - Bottom-up weights
- Parameters

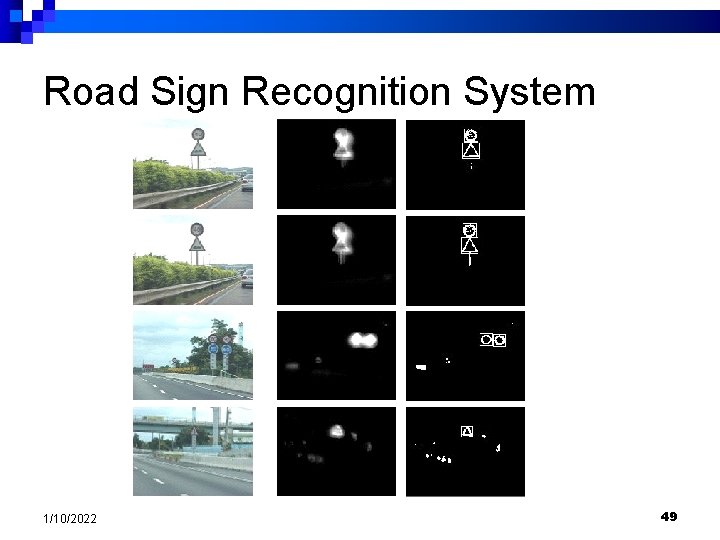

Road Sign Recognition System

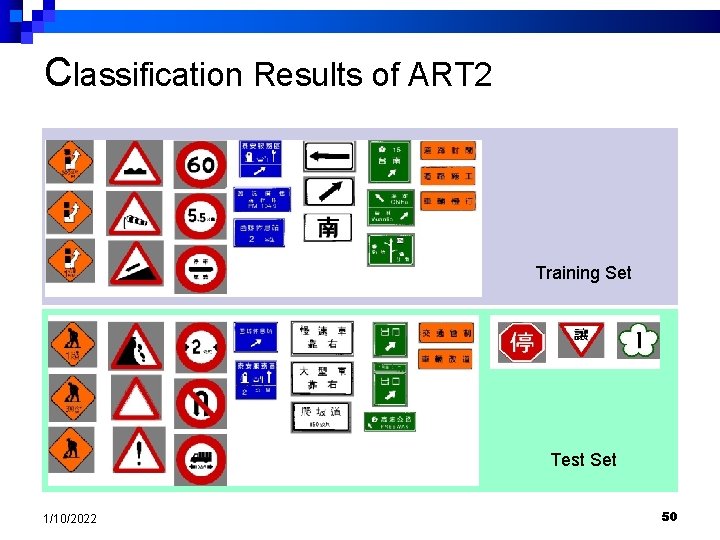

Classification Results of ART 2
Figure panels: training set, test set

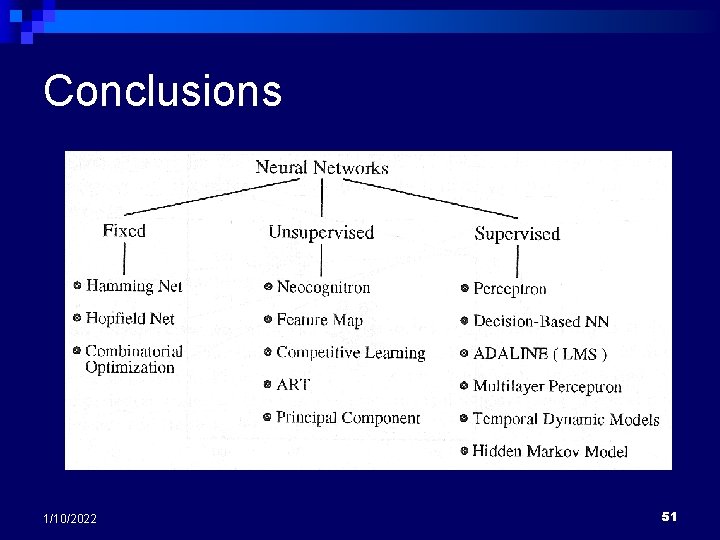

Conclusions

STA Neural Networks

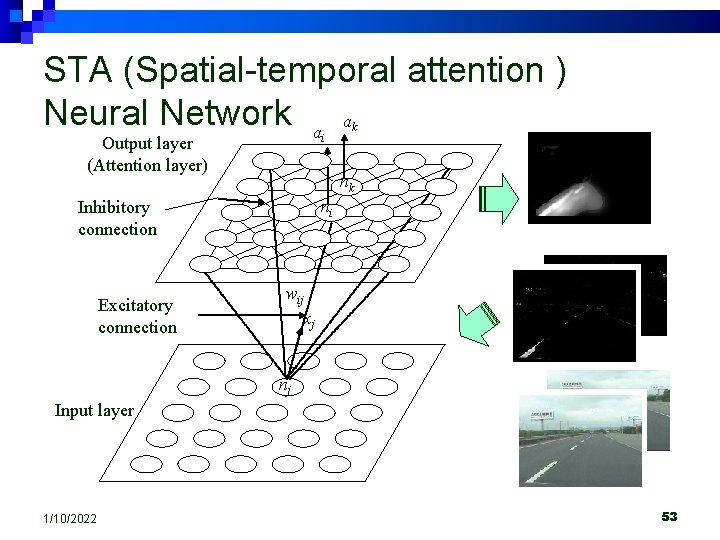

STA (Spatial-Temporal Attention) Neural Network
Figure labels: output layer (attention layer) with neurons $n_i$, $n_k$ and outputs $a_i$, $a_k$; input layer with neurons $n_j$ and inputs $x_j$; excitatory connections with weights $w_{ij}$; inhibitory connections

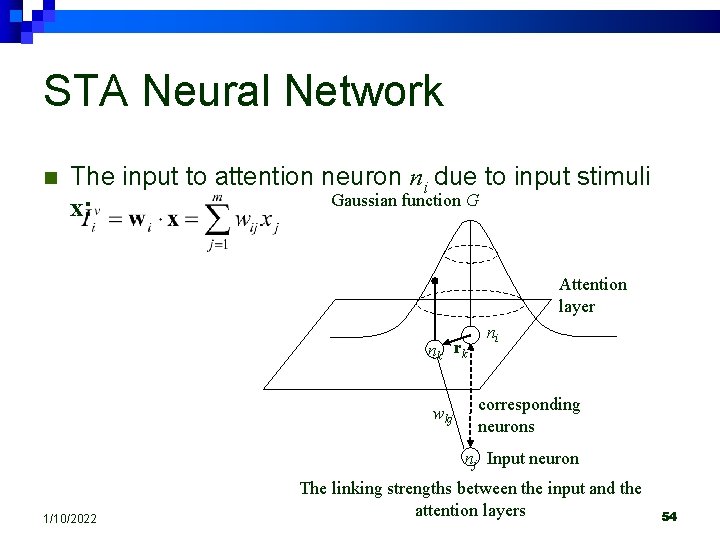

STA Neural Network
- The input to attention neuron $n_i$ due to input stimuli $x$
Figure: Gaussian function $G$; the linking strengths between the input and the attention layers (labels: attention layer, $n_k$, $r_k$, $w_{kj}$, $n_i$, corresponding neurons, $n_j$, input neuron)

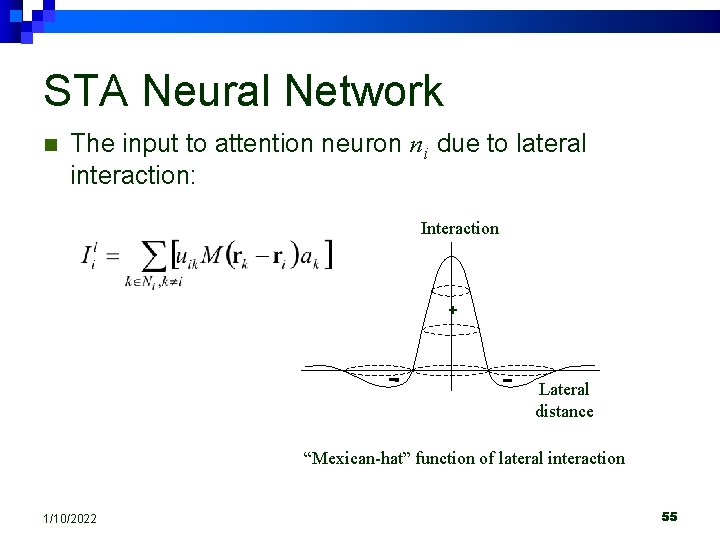

STA Neural Network
- The input to attention neuron $n_i$ due to lateral interaction
Figure: "Mexican-hat" function of lateral interaction (axes: lateral distance, interaction)

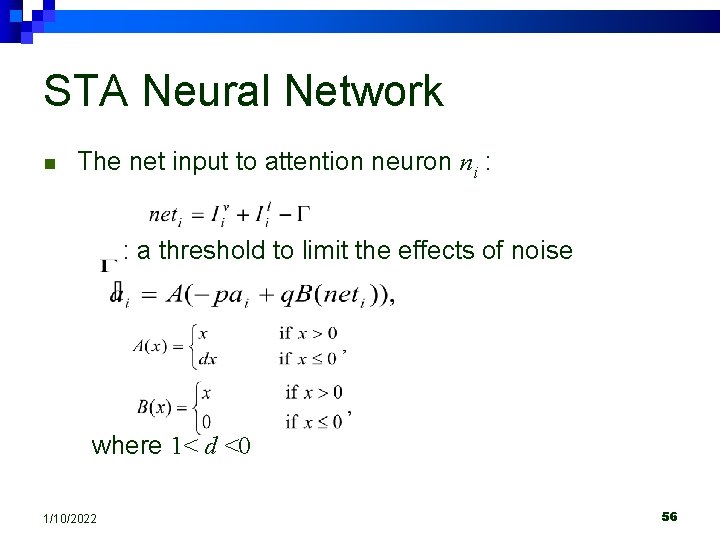

STA Neural Network
- The net input to attention neuron $n_i$
- A threshold limits the effects of noise
- where $0 < d < 1$

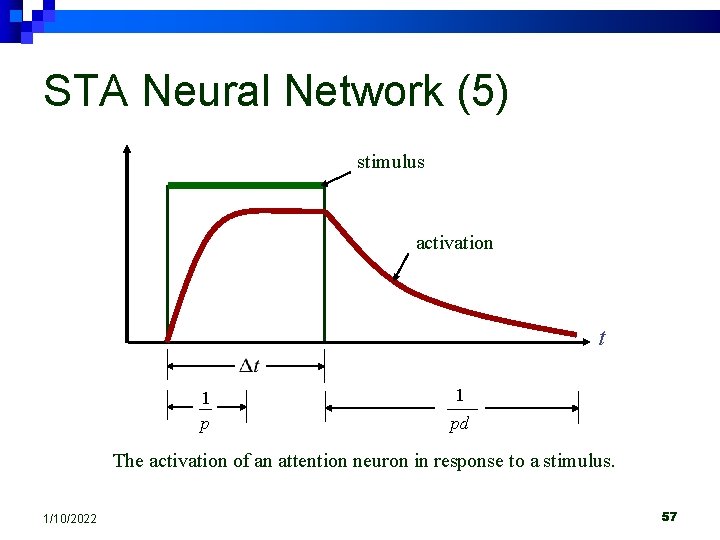

STA Neural Network (5)
Figure: the activation of an attention neuron in response to a stimulus (labels: stimulus, activation, t, 1, pd)

Results of STA Neural Networks

Experimental Results

Results of STA Neural Networks
