Intelligent Integration of Enterprise: Recurrent Neural Network



Teaching Assistant: 陳可馨

Outline
- Recurrent Neural Network (RNN)
- Long Short-term Memory (LSTM)
- Backprop through Time (BPTT)
- Demo
- Class Assignment & Homework

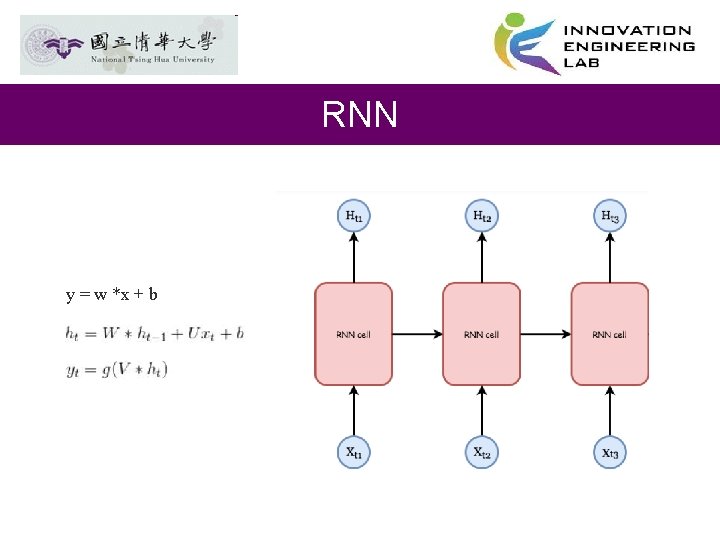

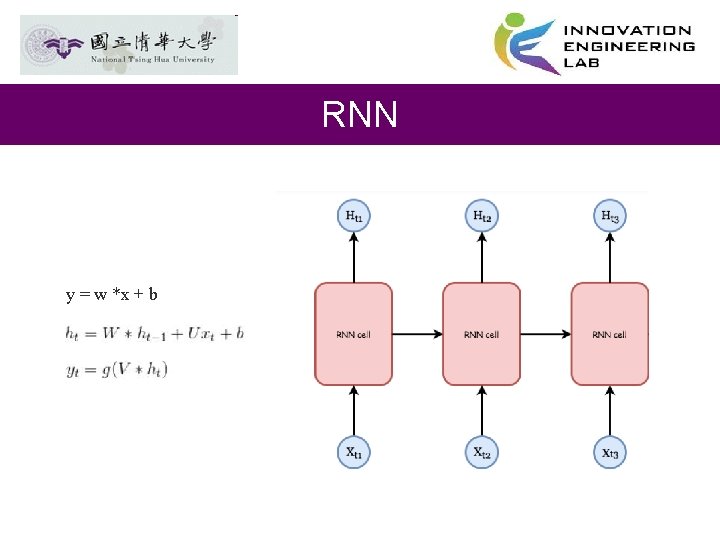

RNN: $y = w \cdot x + b$

Example Application: Slot Filling. Consider the sentence “I would like to arrive Taipei on November 2nd.” A ticket booking system should fill the slots as follows:
- Destination: Taipei
- Time of arrival: November 2nd

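Example Application: Solving slot filling with a feedforward network?
- Input: a word (each word is represented as a vector)
- Output: the probability distribution over the slots that the input word belongs to

For example, for the input “Taipei” the network should assign a high probability to the slot dest rather than to time of departure.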

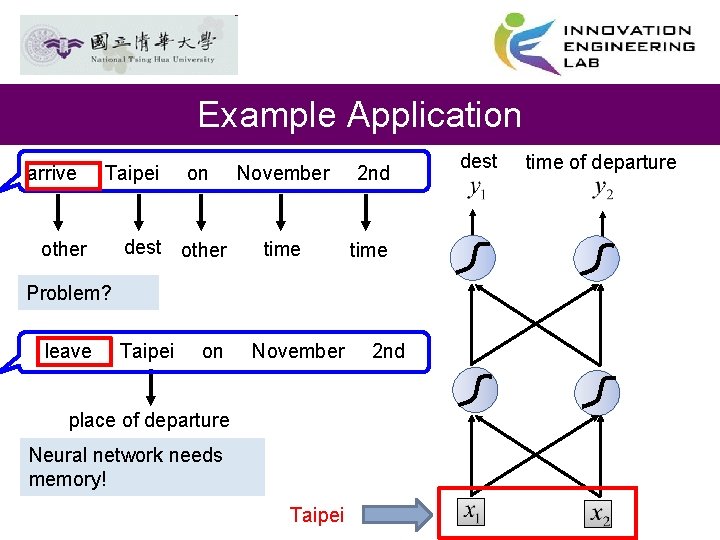

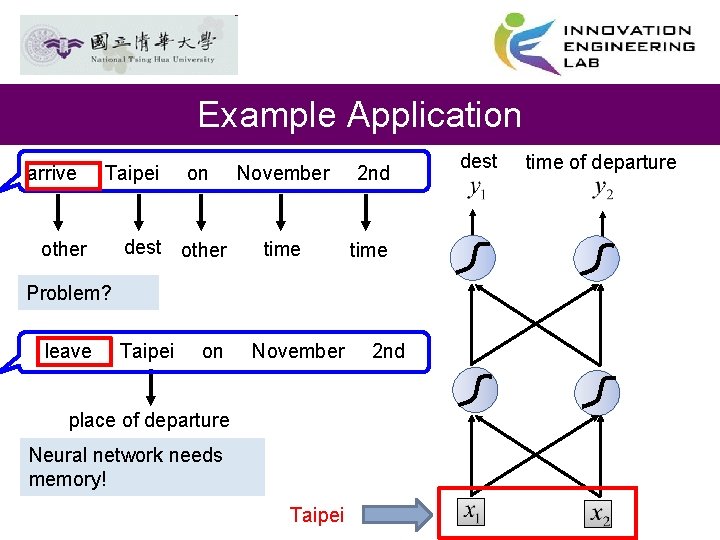

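Example Application: Problem? In “arrive Taipei on November 2nd”, the words are labeled other, dest, other, time, time, so “Taipei” is the destination. In “leave Taipei on November 2nd”, “Taipei” is the place of departure and “November 2nd” is the time of departure. A feedforward network always maps the same input “Taipei” to the same output, so it cannot distinguish the two cases. The neural network needs memory!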
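The problem can be made concrete with a small sketch. The vocabulary, slot names, one-hot (1-of-N) word vectors and random weights below are hypothetical choices for illustration only; the point is that a feedforward classifier sees only the current word, so “Taipei” gets exactly the same slot distribution in both sentences.

```python
import numpy as np

# Hypothetical toy vocabulary and slot set (not taken from the slides).
VOCAB = ["arrive", "leave", "Taipei", "on", "November", "2nd"]
SLOTS = ["dest", "place of departure", "time", "other"]

def one_hot(word):
    """1-of-N encoding: a vector with a single 1 at the word's index."""
    v = np.zeros(len(VOCAB))
    v[VOCAB.index(word)] = 1.0
    return v

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

rng = np.random.default_rng(0)
W = rng.normal(size=(len(SLOTS), len(VOCAB)))  # arbitrary, untrained weights
b = np.zeros(len(SLOTS))

def feedforward_slot_probs(word):
    """Slot distribution computed from the current word only (no memory)."""
    return softmax(W @ one_hot(word) + b)

sent_a = ["arrive", "Taipei", "on", "November", "2nd"]
sent_b = ["leave", "Taipei", "on", "November", "2nd"]
p_a = feedforward_slot_probs(sent_a[1])
p_b = feedforward_slot_probs(sent_b[1])
print(np.allclose(p_a, p_b))  # True: the preceding word is ignored
```

Whatever the weights are, the two outputs are identical, which is why some form of memory is needed.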

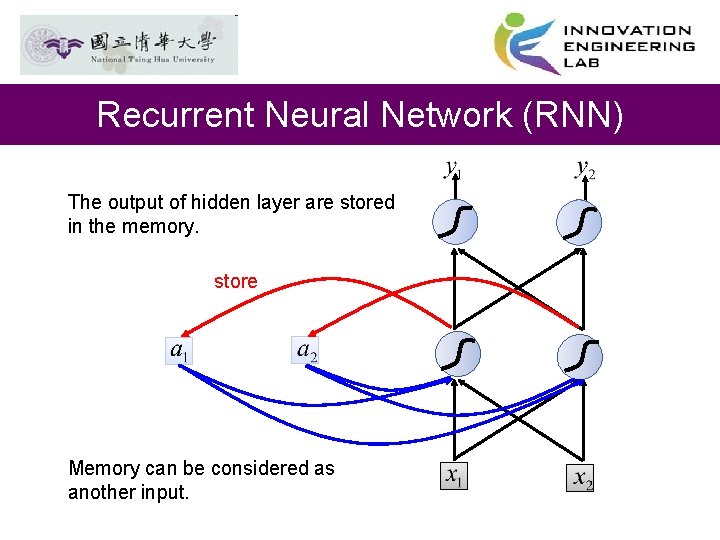

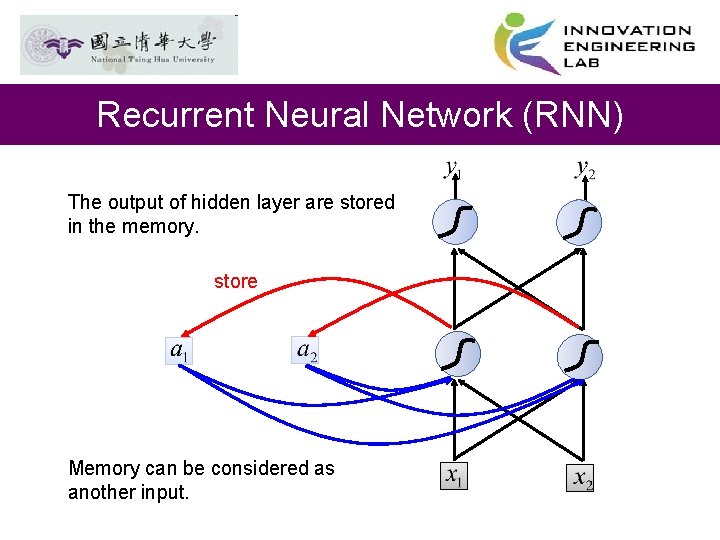

Recurrent Neural Network (RNN): The outputs of the hidden layer are stored in the memory, and the memory can be considered as another input at the next step.
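A minimal sketch of one RNN step, assuming NumPy, a tanh activation and randomly initialised weights (`W_x`, `W_h`, `b` are our own illustrative names). The hidden layer receives the current input and the stored memory; its output is then written back as the new memory.

```python
import numpy as np

def rnn_step(x, memory, W_x, W_h, b, activation=np.tanh):
    """One RNN step: the hidden layer sees the current input and the stored memory."""
    return activation(W_x @ x + W_h @ memory + b)  # also becomes the next memory

rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4
W_x = rng.normal(size=(n_hidden, n_in))      # input -> hidden
W_h = rng.normal(size=(n_hidden, n_hidden))  # memory -> hidden
b = np.zeros(n_hidden)

memory = np.zeros(n_hidden)                  # initial memory
for x in rng.normal(size=(5, n_in)):         # a sequence of 5 input vectors
    memory = rnn_step(x, memory, W_x, W_h, b)
print(memory.shape)  # (4,)
```

The memory is treated exactly like an extra input vector with its own weight matrix `W_h`.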

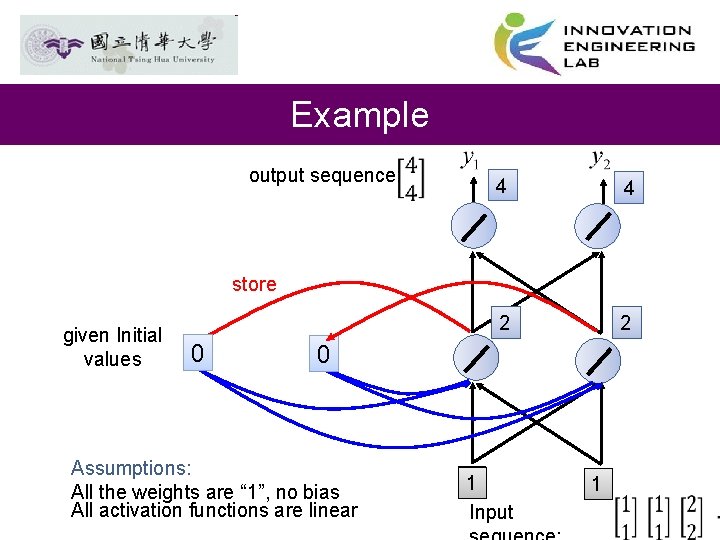

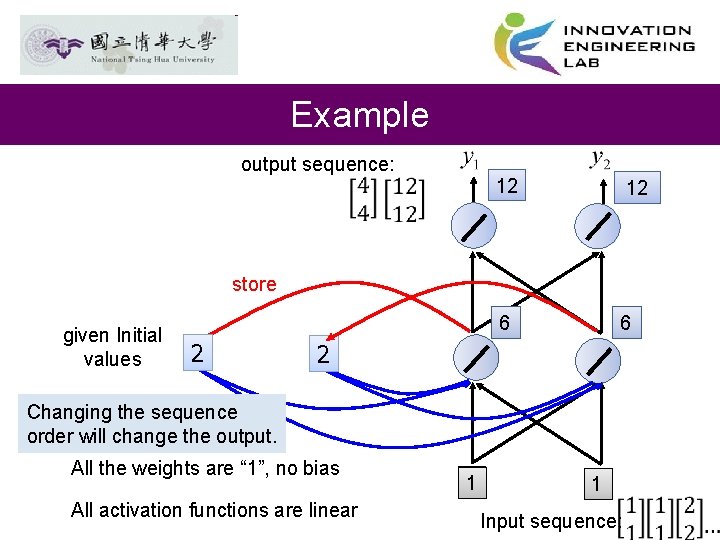

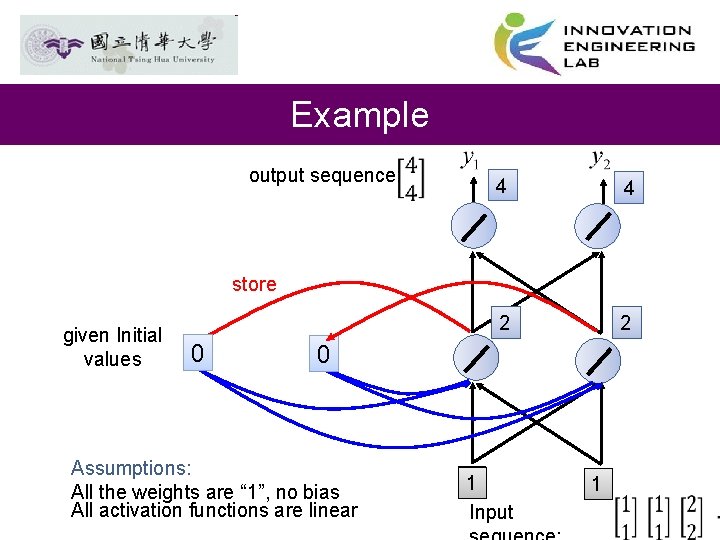

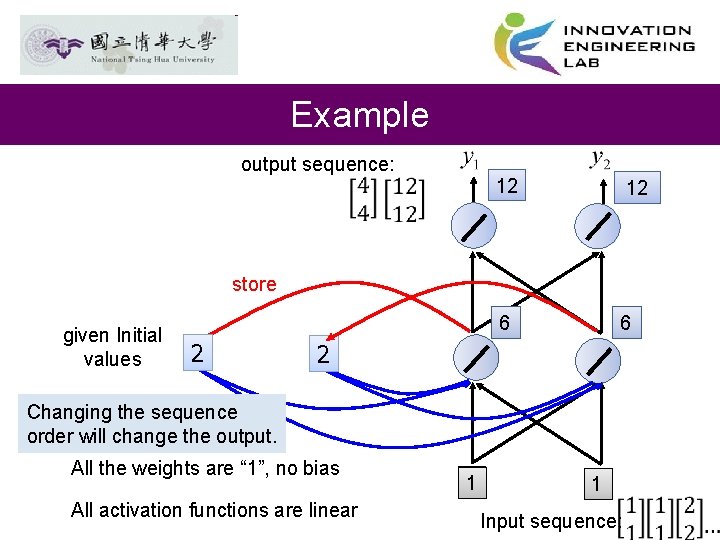

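Example: Assume all the weights are “1” with no bias, and all activation functions are linear. The memory is given initial values 0 0. For the input [1 1], each hidden neuron outputs 1 + 1 + 0 + 0 = 2, so each output neuron gives 2 + 2 = 4: the output sequence starts with 4 4. The hidden values 2 2 are stored in the memory.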

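Example (continued): With the same assumptions (all the weights are “1”, no bias, linear activations), the memory now holds 2 2. For the next input [1 1], each hidden neuron outputs 1 + 1 + 2 + 2 = 6, so the output is 12 12, and 6 6 is stored. Because the memory carries information from earlier inputs, changing the sequence order will change the output.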
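The toy example can be checked with a short sketch: 2 inputs, 2 hidden neurons and 2 outputs, all weights 1, no bias, linear activations, memory initialised to 0 0. The third input [2 2] and the reordered sequence are our own additions to show the effect of order.

```python
import numpy as np

def toy_rnn(inputs):
    """All weights 1, no bias, linear activations, memory starts at 0 0."""
    W_x = np.ones((2, 2))  # input -> hidden
    W_h = np.ones((2, 2))  # memory -> hidden
    W_y = np.ones((2, 2))  # hidden -> output
    memory = np.zeros(2)
    outputs = []
    for x in inputs:
        a = W_x @ np.asarray(x, dtype=float) + W_h @ memory  # linear hidden layer
        outputs.append(W_y @ a)
        memory = a  # store the hidden values for the next step
    return [y.tolist() for y in outputs]

print(toy_rnn([[1, 1], [1, 1], [2, 2]]))  # [[4.0, 4.0], [12.0, 12.0], [32.0, 32.0]]
print(toy_rnn([[2, 2], [1, 1], [1, 1]]))  # [[8.0, 8.0], [20.0, 20.0], [44.0, 44.0]]
```

The same three inputs in a different order give a different output sequence.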

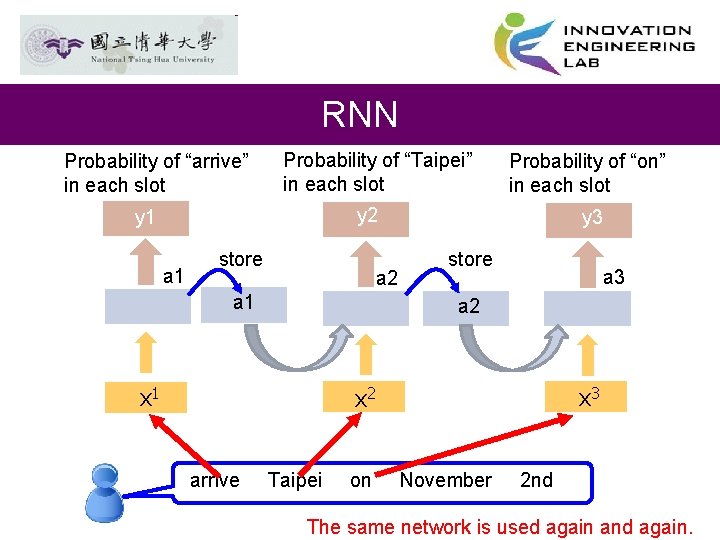

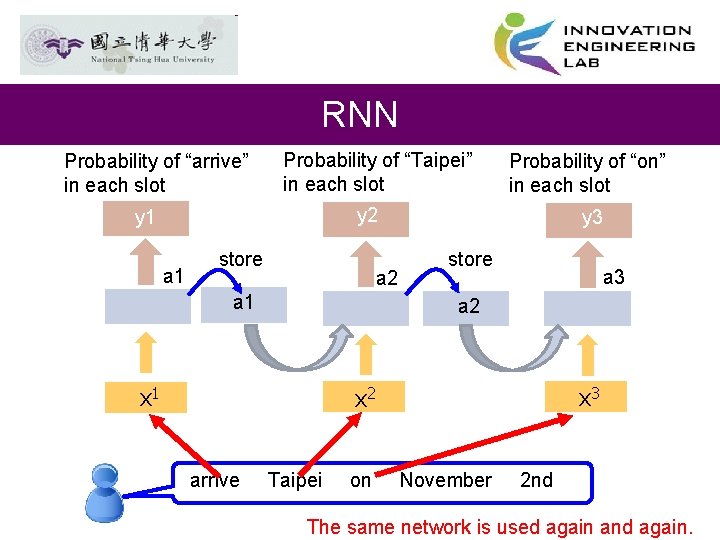

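RNN: The same network is used again and again. For the sentence “arrive Taipei on November 2nd”, the words are fed in as $x^1$ (“arrive”), $x^2$ (“Taipei”), $x^3$ (“on”), and so on. The outputs are $y^1$, the probability of “arrive” in each slot; $y^2$, the probability of “Taipei” in each slot; and $y^3$, the probability of “on” in each slot. After each step the hidden values ($a^1$, then $a^2$, ...) are stored and used together with the next input.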
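A sketch of the unrolled RNN for slot filling, assuming NumPy, tanh hidden units, a softmax output and random (untrained) weights; the vocabulary, slot names and helper names are hypothetical and biases are omitted for brevity. One set of weights is reused at every step, and because the memory differs, “Taipei” after “arrive” and “Taipei” after “leave” now get different slot distributions.

```python
import numpy as np

VOCAB = ["arrive", "leave", "Taipei", "on", "November", "2nd"]  # hypothetical
SLOTS = ["dest", "place of departure", "time", "other"]         # hypothetical

def one_hot(word):
    v = np.zeros(len(VOCAB))
    v[VOCAB.index(word)] = 1.0
    return v

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def rnn_slot_probs(words, W_x, W_h, W_y):
    """Run the same RNN at every step; return one slot distribution per word."""
    a = np.zeros(W_h.shape[0])                    # memory starts at zero
    ys = []
    for w in words:
        a = np.tanh(W_x @ one_hot(w) + W_h @ a)   # new hidden values, stored as memory
        ys.append(softmax(W_y @ a))               # probability of this word in each slot
    return np.array(ys)

rng = np.random.default_rng(0)
n_hidden = 8
W_x = rng.normal(size=(n_hidden, len(VOCAB)))
W_h = rng.normal(size=(n_hidden, n_hidden))
W_y = rng.normal(size=(len(SLOTS), n_hidden))

ya = rnn_slot_probs(["arrive", "Taipei", "on", "November", "2nd"], W_x, W_h, W_y)
yb = rnn_slot_probs(["leave", "Taipei", "on", "November", "2nd"], W_x, W_h, W_y)
print(ya.shape)                         # (5, 4)
print(np.abs(ya[1] - yb[1]).max())      # gap between the two "Taipei" predictions
```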

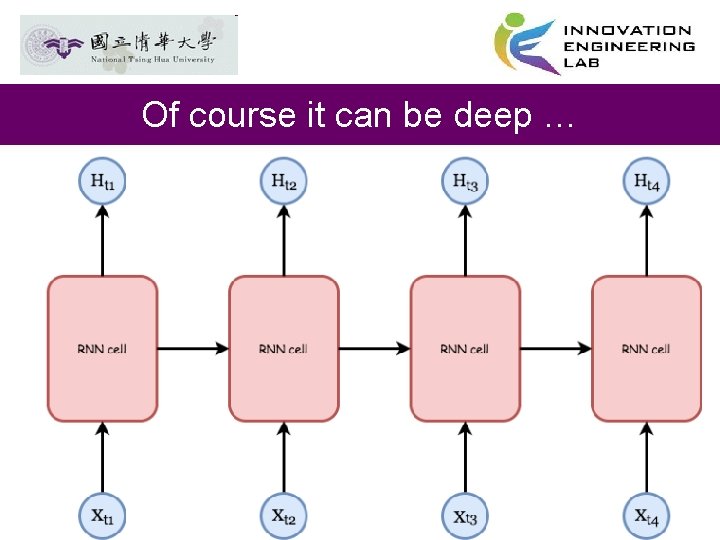

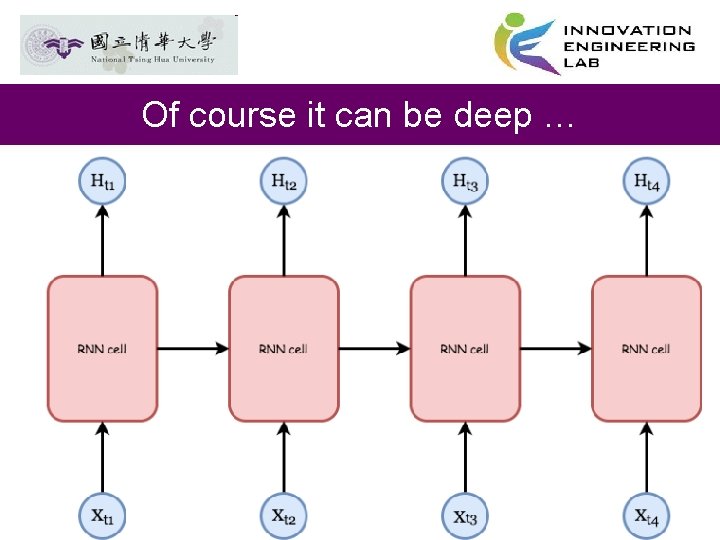

Of course, it can be deep: recurrent layers can be stacked, with the outputs of one layer feeding the next.
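A minimal sketch of a deep (stacked) RNN, assuming NumPy, tanh units and arbitrary layer sizes chosen for illustration. Each layer keeps its own memory, and the sequence of hidden values produced by one layer is the input sequence of the next.

```python
import numpy as np

def deep_rnn(xs, layers):
    """Stacked RNN: the hidden sequence of each layer is the input sequence of the next."""
    seq = list(xs)
    for W_x, W_h, b in layers:
        a = np.zeros(W_h.shape[0])        # each layer has its own memory
        out = []
        for x in seq:
            a = np.tanh(W_x @ x + W_h @ a + b)
            out.append(a)
        seq = out
    return np.array(seq)

rng = np.random.default_rng(0)
sizes = [3, 8, 8, 4]  # input size, then three recurrent layers (arbitrary)
layers = [(rng.normal(size=(m, n)), rng.normal(size=(m, m)), np.zeros(m))
          for n, m in zip(sizes[:-1], sizes[1:])]
top = deep_rnn(rng.normal(size=(6, 3)), layers)
print(top.shape)  # (6, 4): one top-layer vector per time step
```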

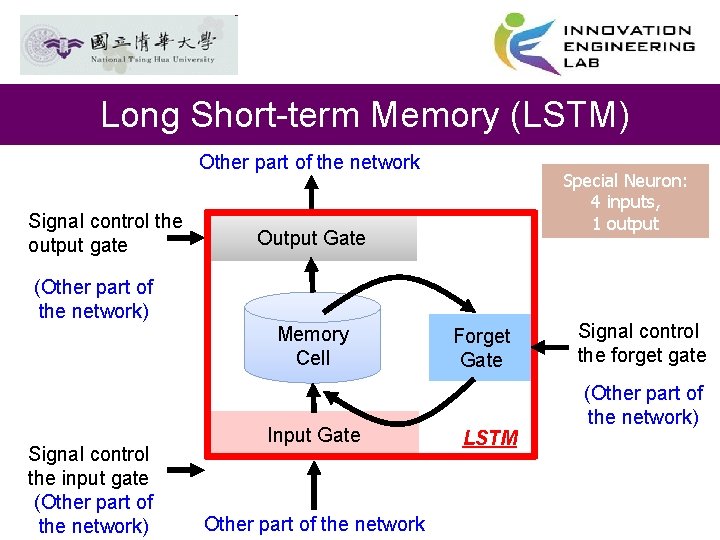

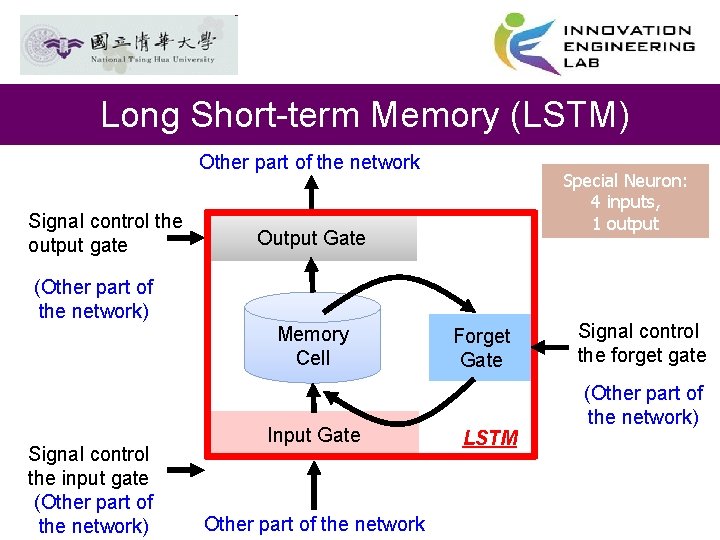

Long Short-term Memory (LSTM): The LSTM memory cell is a special neuron with 4 inputs and 1 output:
- the input to the memory cell, which comes from other parts of the network;
- a signal that controls the input gate (from other parts of the network);
- a signal that controls the forget gate (from other parts of the network);
- a signal that controls the output gate (from other parts of the network).

The value of the memory cell passes through the output gate to other parts of the network.

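The gate values multiply the signals they control. The activation function f of the gates is usually a sigmoid function, whose output lies between 0 and 1, so it mimics an open or closed gate. c is the value stored in the memory cell.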
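Written out, with the cell input denoted $z$ and the three gate signals $z_i$, $z_f$, $z_o$ (labels assumed here, following the usual notation for this diagram), $f$ the sigmoid gate activation, and $g$, $h$ the activation functions on the cell input and output, the memory cell updates its stored value $c$ to $c'$ and produces its output $a$ as:

$$c' = g(z)\,f(z_i) + c\,f(z_f)$$
$$a = h(c')\,f(z_o)$$

A forget gate value near 1 keeps the old memory, while a value near 0 erases it.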

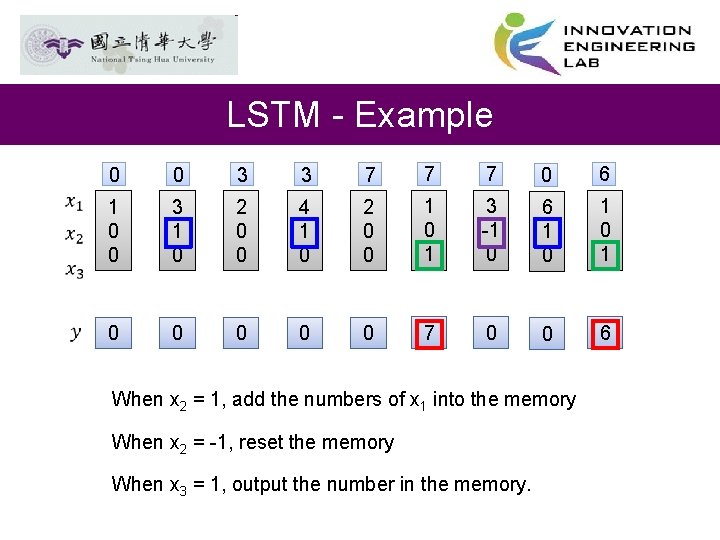

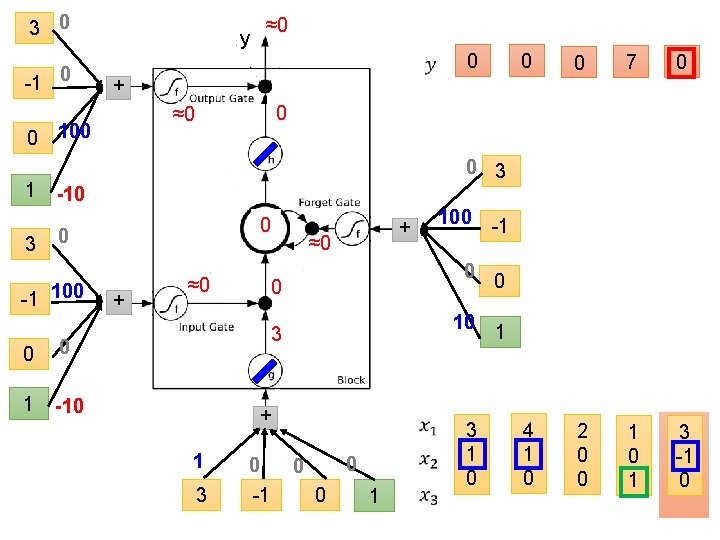

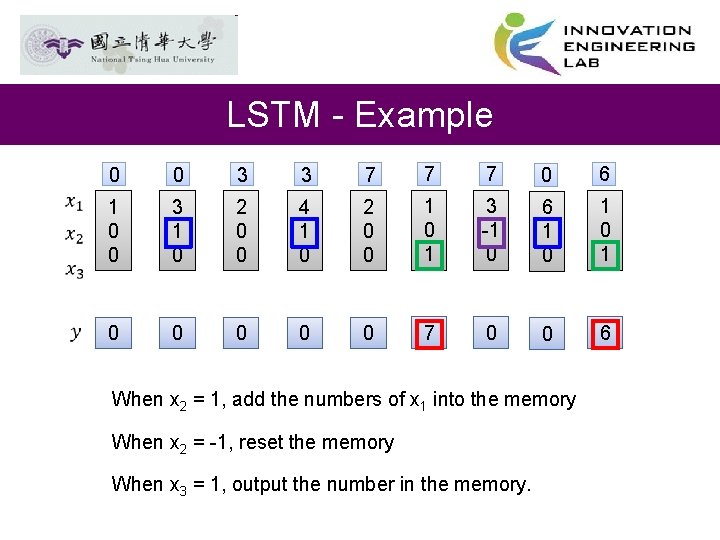

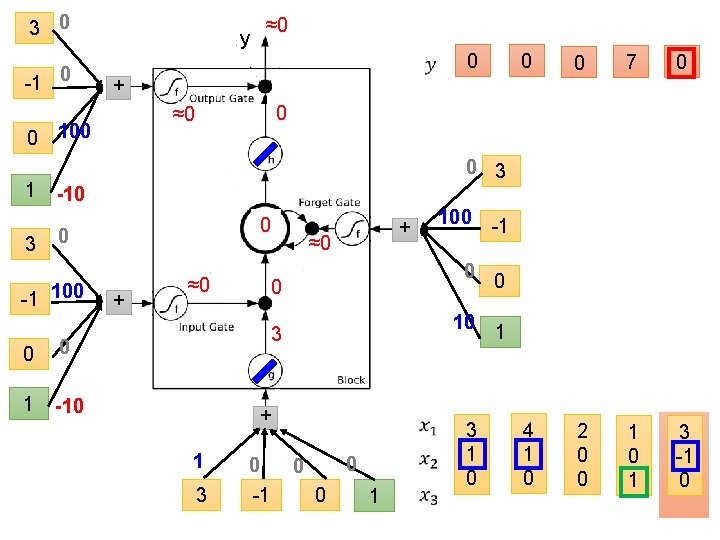

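LSTM - Example: The memory cell should behave as follows:
- When x2 = 1, add the number of x1 into the memory.
- When x2 = -1, reset the memory.
- When x3 = 1, output the number in the memory.

| Step | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| x1 | 1 | 3 | 2 | 4 | 2 | 1 | 3 | 6 | 1 |
| x2 | 0 | 1 | 0 | 1 | 0 | 0 | -1 | 1 | 0 |
| x3 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 |
| Memory (at the start of the step) | 0 | 0 | 3 | 3 | 7 | 7 | 7 | 0 | 6 |
| y | 0 | 0 | 0 | 0 | 0 | 7 | 0 | 0 | 6 |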

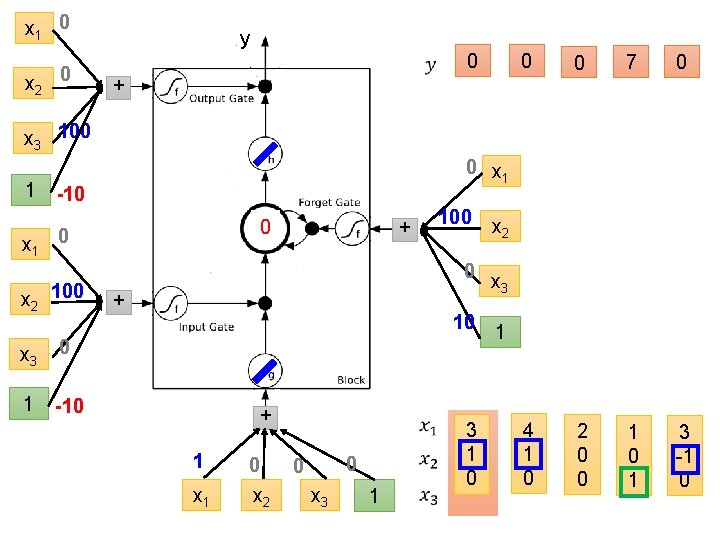

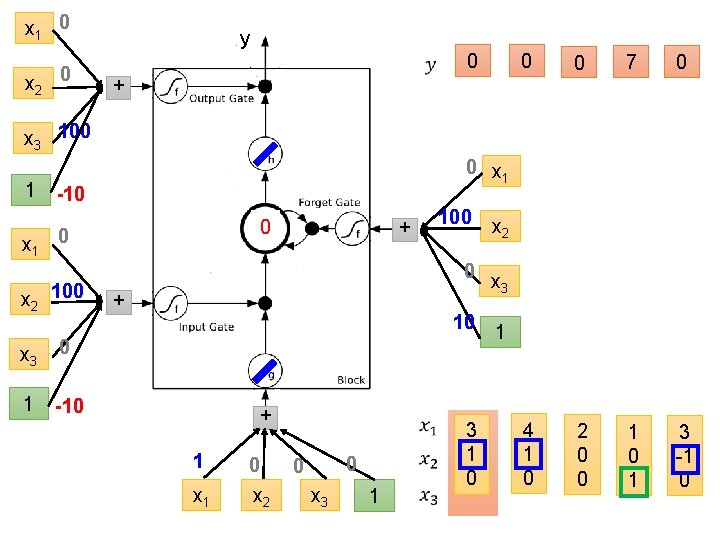

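LSTM - Example: the hand-set weights. The gates use a sigmoid activation; the cell input and the cell output are passed through unchanged (linear).
- Cell input: $z = x_1$
- Input gate: $z_i = 100\,x_2 - 10$
- Forget gate: $z_f = 100\,x_2 + 10$
- Output gate: $z_o = 100\,x_3 - 10$

So the input and forget gates are ≈1 when $x_2 = 1$, the forget gate is ≈0 when $x_2 = -1$, and the output gate is ≈1 only when $x_3 = 1$.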

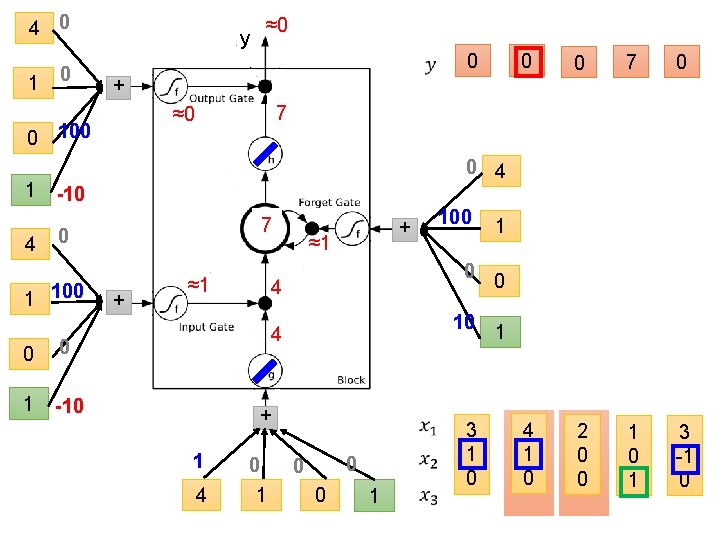

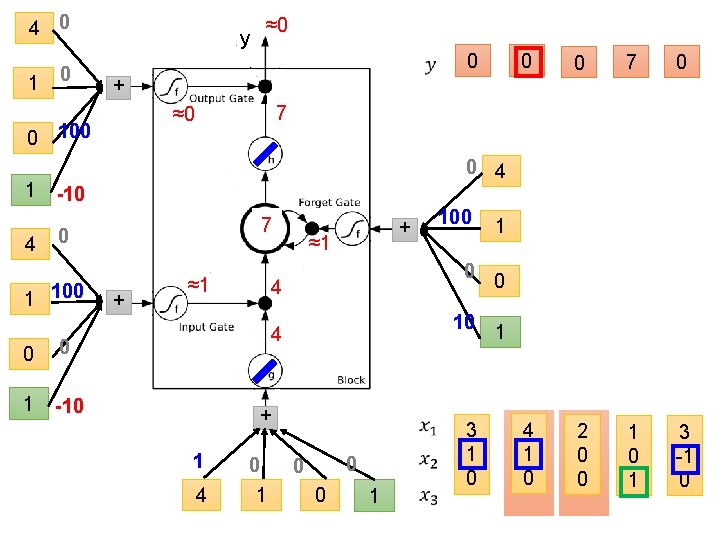

Step with input $(x_1, x_2, x_3) = (3, 1, 0)$: input gate ≈ 1, forget gate ≈ 1, output gate ≈ 0, so 3 is added and the memory goes from 0 to 3, while y ≈ 0.

Step with input $(x_1, x_2, x_3) = (4, 1, 0)$: input gate ≈ 1, forget gate ≈ 1, output gate ≈ 0, so 4 is added and the memory goes from 3 to 7, while y ≈ 0.

Step with input $(x_1, x_2, x_3) = (2, 0, 0)$: input gate ≈ 0, forget gate ≈ 1, output gate ≈ 0, so the memory stays at 7 and y ≈ 0.

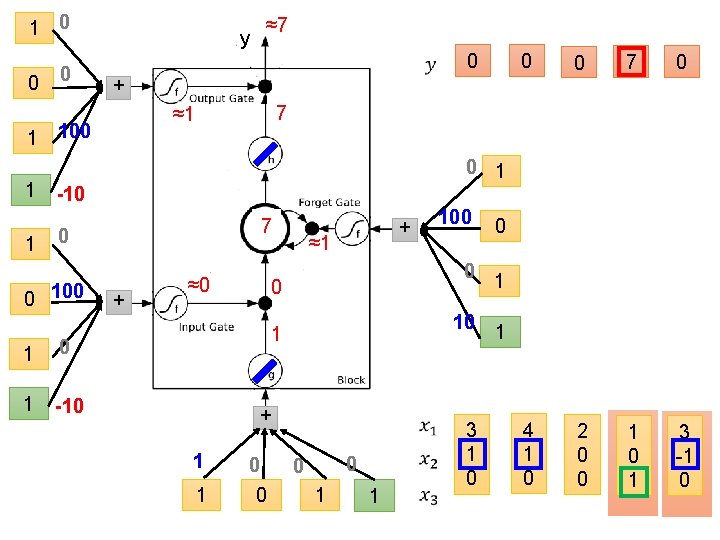

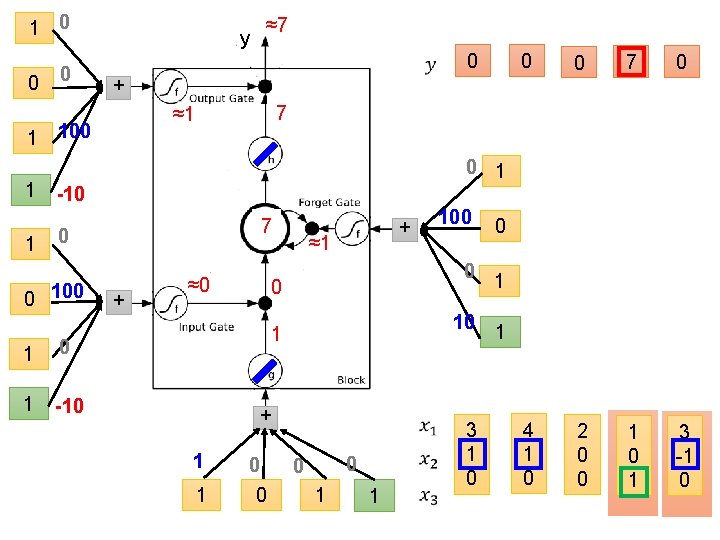

Step with input $(x_1, x_2, x_3) = (1, 0, 1)$: input gate ≈ 0, forget gate ≈ 1, output gate ≈ 1, so the memory stays at 7 and y ≈ 7.

Step with input $(x_1, x_2, x_3) = (3, -1, 0)$: input gate ≈ 0, forget gate ≈ 0, output gate ≈ 0, so the memory is reset from 7 to 0 and y ≈ 0.
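The whole LSTM example can be replayed in a few lines. This sketch assumes a single memory cell with sigmoid gates, linear cell input and output, and the hand-set weights cell input $z = x_1$, input gate $100x_2 - 10$, forget gate $100x_2 + 10$, output gate $100x_3 - 10$; the input sequence is the nine-step example (1,0,0), (3,1,0), ..., (1,0,1).

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def toy_lstm(sequence):
    """Single LSTM memory cell with hand-set weights; g and h are linear."""
    c = 0.0  # memory cell, initially 0
    memories, outputs = [], []
    for x1, x2, x3 in sequence:
        z = x1                                 # cell input
        gate_in = sigmoid(100 * x2 - 10)       # ≈1 only when x2 = 1
        gate_forget = sigmoid(100 * x2 + 10)   # ≈0 only when x2 = -1
        gate_out = sigmoid(100 * x3 - 10)      # ≈1 only when x3 = 1
        c = z * gate_in + c * gate_forget      # c' = g(z) f(z_i) + c f(z_f)
        memories.append(c)
        outputs.append(c * gate_out)           # a = h(c') f(z_o)
    return memories, outputs

seq = [(1, 0, 0), (3, 1, 0), (2, 0, 0), (4, 1, 0), (2, 0, 0),
       (1, 0, 1), (3, -1, 0), (6, 1, 0), (1, 0, 1)]
mem, y = toy_lstm(seq)
print([round(v) for v in mem])  # memory after each step: [0, 3, 3, 7, 7, 7, 0, 6, 6]
print([round(v) for v in y])    # [0, 0, 0, 0, 0, 7, 0, 0, 6]
```

Because the gates are only approximately 0 or 1, the raw values are close to, but not exactly, these integers.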

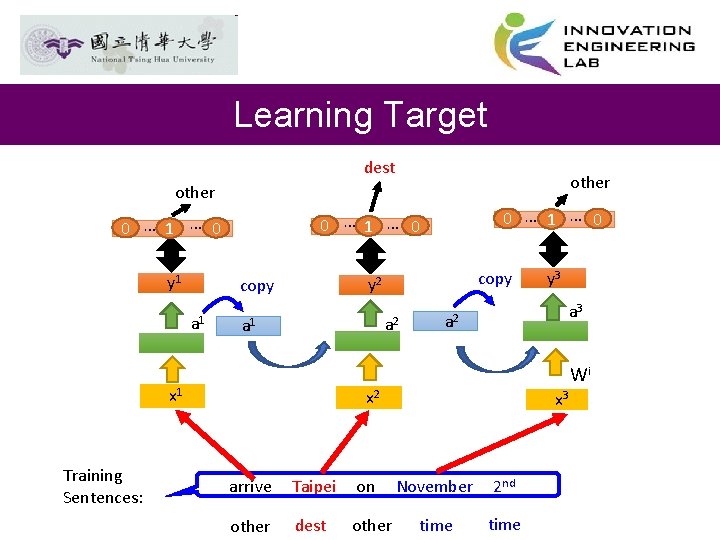

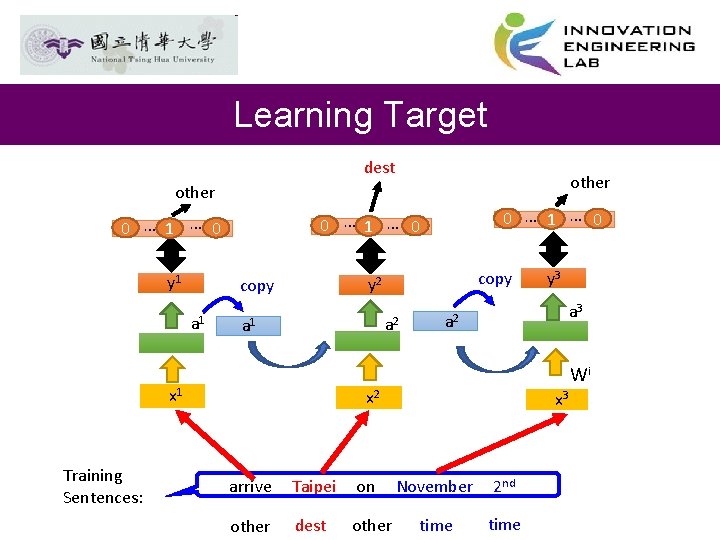

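Learning Target: Each training sentence comes with a label for every word; for “arrive Taipei on November 2nd” the labels are other, dest, other, time, time. At each step the target is a 1-of-N vector (1 for the correct slot, 0 elsewhere), and the network output should match it: $y^1$ should point to other, $y^2$ to dest, $y^3$ to other, and so on. The hidden values $a^1$, $a^2$ are copied into the memory, and $W^i$ denotes the input weights.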
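A common way to turn this learning target into a loss is to sum the cross-entropy between each output $y^t$ and its 1-of-N target over all time steps. A minimal sketch, assuming NumPy, a hypothetical three-slot label set and an untrained model that outputs uniform probabilities:

```python
import numpy as np

SLOTS = ["dest", "time", "other"]  # hypothetical slot set

def sequence_cross_entropy(probs, labels):
    """Sum over time steps of -log(probability assigned to the target slot)."""
    targets = [SLOTS.index(lbl) for lbl in labels]
    return float(-sum(np.log(p[t]) for p, t in zip(probs, targets)))

labels = ["other", "dest", "other", "time", "time"]  # arrive Taipei on November 2nd
probs = np.full((5, 3), 1 / 3)  # untrained model: uniform over the 3 slots
print(sequence_cross_entropy(probs, labels))  # 5 * log(3) ≈ 5.493
```

Training adjusts the shared weights so that this total loss decreases.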

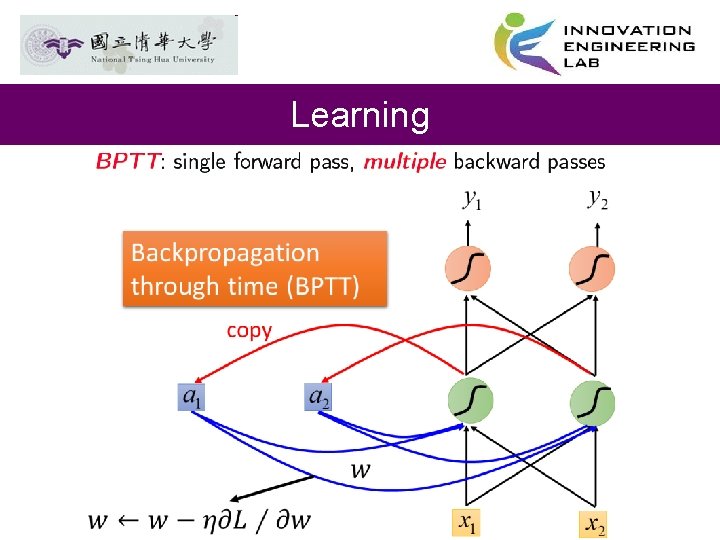

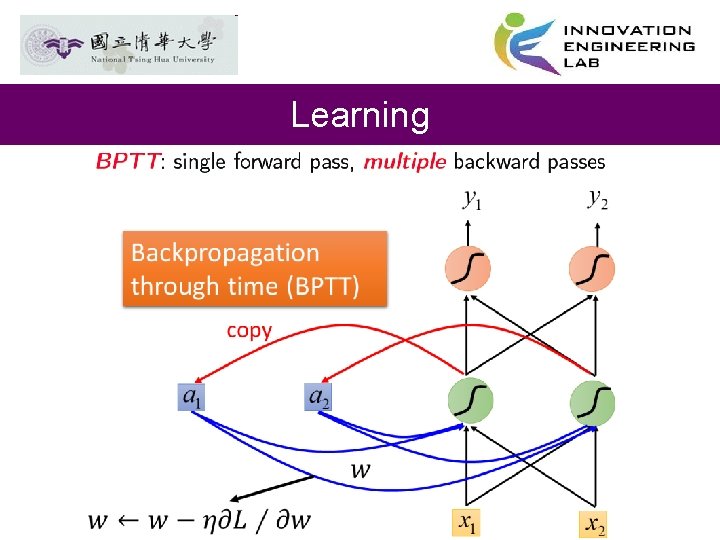

Learning

![Backprop through Time (BPTT), Razvan Pascanu, ICML’13](https://slidetodoc.com/presentation_image_h/9b696c8c6fb040e6f9692d2e91d280c5/image-23.jpg)

Backprop through Time (BPTT) [Razvan Pascanu, ICML’13]
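Backprop through time unrolls the recurrence and applies the chain rule backwards across the time steps. A minimal sketch, assuming a scalar linear RNN $h_t = w\,h_{t-1} + u\,x_t$ with a squared-error loss on the last state (our own toy setup, not the lecture's). The backward loop also shows why gradients can vanish or explode, the issue studied by Pascanu et al.: each step back multiplies the error by $w$, so the contribution from step $t$ scales with $w^{T-t}$.

```python
import numpy as np

def forward(w, u, xs, h0=0.0):
    """Scalar linear RNN h_t = w*h_{t-1} + u*x_t; returns [h_0, h_1, ..., h_T]."""
    hs = [h0]
    for x in xs:
        hs.append(w * hs[-1] + u * x)
    return hs

def bptt(w, u, xs, target):
    """Gradients of L = 0.5*(h_T - target)^2 w.r.t. w and u via backprop through time."""
    hs = forward(w, u, xs)
    dh = hs[-1] - target                 # dL/dh_T
    dw = du = 0.0
    for t in range(len(xs), 0, -1):      # walk back from step T to step 1
        dw += dh * hs[t - 1]             # w's local contribution at step t
        du += dh * xs[t - 1]             # u's local contribution at step t
        dh *= w                          # dL/dh_{t-1} = dL/dh_t * w
    return dw, du

xs = [1.0, -0.5, 2.0, 0.3]
w, u, target = 0.8, 0.5, 1.0
dw, du = bptt(w, u, xs, target)

def loss(w_, u_):
    return 0.5 * (forward(w_, u_, xs)[-1] - target) ** 2

# Check against centred finite differences.
eps = 1e-6
print(np.isclose(dw, (loss(w + eps, u) - loss(w - eps, u)) / (2 * eps)))  # True
print(np.isclose(du, (loss(w, u + eps) - loss(w, u - eps)) / (2 * eps)))  # True
```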

Demo

References
- http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/RNN.pdf

Class assignment: Please train a simple Recurrent Neural Network (RNN) to classify the MNIST dataset. You are asked to:
1. Split the dataset into training and testing sets.
2. Train the model for 4000 steps with a batch size of 50. Every 500 steps, print the loss and accuracy on the testing data.
3. Predict the labels of the top 10 samples (the first 10 images) of the testing data.

- Turn in your work as an .ipynb file, and please write some brief comments in your notebook to illustrate your results.
- File name: class7_Your Chinese Name
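One common way to feed an MNIST image to an RNN (an assumption; the assignment does not prescribe it) is to read the 28×28 image row by row, giving 28 time steps of 28 features, and classify from the last hidden state. This NumPy sketch only shows the untrained forward pass on a random stand-in image; the weight names and hidden size are illustrative.

```python
import numpy as np

def rnn_classify_image(image, W_x, W_h, b_h, W_y, b_y):
    """Read a 28x28 image row by row and classify it from the last hidden state."""
    a = np.zeros(W_h.shape[0])
    for row in image:                        # one image row per time step
        a = np.tanh(W_x @ row + W_h @ a + b_h)
    logits = W_y @ a + b_y
    e = np.exp(logits - logits.max())
    return e / e.sum()                       # probabilities for digits 0-9

rng = np.random.default_rng(0)
n_hidden = 64  # arbitrary
W_x = rng.normal(scale=0.1, size=(n_hidden, 28))
W_h = rng.normal(scale=0.1, size=(n_hidden, n_hidden))
b_h = np.zeros(n_hidden)
W_y = rng.normal(scale=0.1, size=(10, n_hidden))
b_y = np.zeros(10)

fake_image = rng.random((28, 28))  # stand-in for a normalised MNIST digit
probs = rnn_classify_image(fake_image, W_x, W_h, b_h, W_y, b_y)
print(probs.shape, int(probs.argmax()))
```

In the actual assignment, these weights would be learned by a framework's optimizer over the 4000 training steps.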

Homework: Please train a recurrent neural network (RNN) for sentiment classification on the IMDB dataset using Keras:
1. Split the dataset into training and testing sets.
2. Print the first movie review in the training set.
3. Create an RNN model that has one (or optionally two) LSTM layers and train it for at least 5 epochs.
4. Your accuracy should be greater than 0.80 on both the training and testing sets.

- Turn in your work as an .ipynb file, and please write some brief comments in your notebook to illustrate your results.
- File name: hw7_Your Chinese Name
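Two small helpers may be useful for steps 2 and 3. Keras's `imdb.load_data` returns reviews as lists of word indices, by default reserving 0 for padding, 1 for the start marker and 2 for out-of-vocabulary words, and shifting real words by `index_from=3`; `imdb.get_word_index()` gives the word-to-index mapping. The sketch below uses a tiny hypothetical `word_index` instead of the real one, and `decode_review` / `pad_pre` are our own names; `pad_pre` mimics the default `'pre'` padding and truncation of Keras `pad_sequences`.

```python
def decode_review(encoded, word_index, index_from=3):
    """Turn IMDB word indices back into text (assumes Keras load_data defaults)."""
    reverse = {idx + index_from: word for word, idx in word_index.items()}
    reverse.update({0: "<PAD>", 1: "<START>", 2: "<UNK>"})
    return " ".join(reverse.get(i, "<UNK>") for i in encoded)

def pad_pre(sequences, maxlen, value=0):
    """Pad or truncate each sequence to maxlen, keeping its end ('pre' style)."""
    out = []
    for seq in sequences:
        seq = list(seq)
        if len(seq) > maxlen:
            seq = seq[len(seq) - maxlen:]                # drop leading tokens
        out.append([value] * (maxlen - len(seq)) + seq)  # pad at the front
    return out

word_index = {"the": 1, "movie": 2, "was": 3, "great": 4}  # hypothetical stand-in
print(decode_review([1, 4, 5, 6, 7], word_index))  # <START> the movie was great
print(pad_pre([[1, 2, 3], [4, 5, 6, 7, 8]], maxlen=4))  # [[0, 1, 2, 3], [5, 6, 7, 8]]
```

Padding every review to the same length lets the reviews be stacked into one batch tensor for the LSTM layer.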
