
Intel® Software College

Confronting Manycore: Parallel Programming Beyond Multicore

Michael Wrinn, Intel Corporation
July 2, 2008
3/5/2021

Agenda
• The multicore/manycore landscape
  – Why the switch to multiple cores, and who is making it?
• The software challenge: manycore is more than “more multicore”
• Confronting the challenge at a conceptual level
  – Programming models, design patterns
• Confronting the challenge at the implementation level
  – Extending current languages
  – Explicitly parallel languages
• Call for action/help
  – Please work with us: sign up for the community

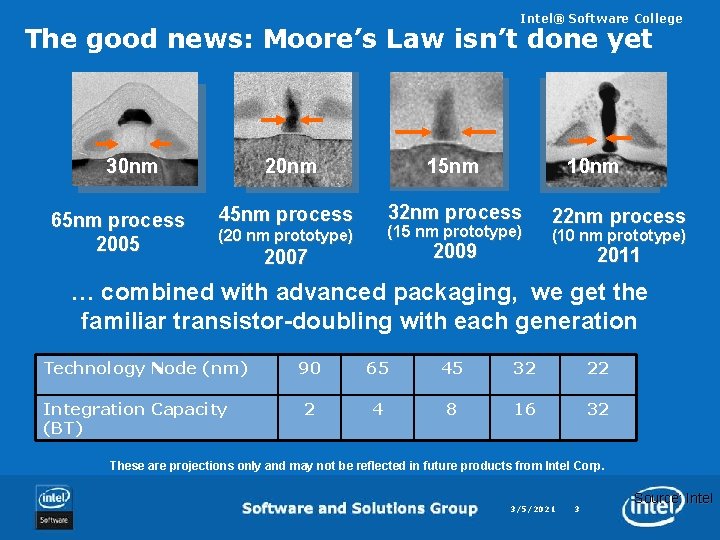

The good news: Moore’s Law isn’t done yet

Process roadmap:
• 65 nm process, 2005 (30 nm prototype)
• 45 nm process, 2007 (20 nm prototype)
• 32 nm process, 2009 (15 nm prototype)
• 22 nm process, 2011 (10 nm prototype)

…combined with advanced packaging, we get the familiar transistor doubling with each generation:

| Technology node (nm) | 90 | 65 | 45 | 32 | 22 |
|---|---|---|---|---|---|
| Integration capacity (billions of transistors) | 2 | 4 | 8 | 16 | 32 |

These are projections only and may not be reflected in future products from Intel Corp.
Source: Intel

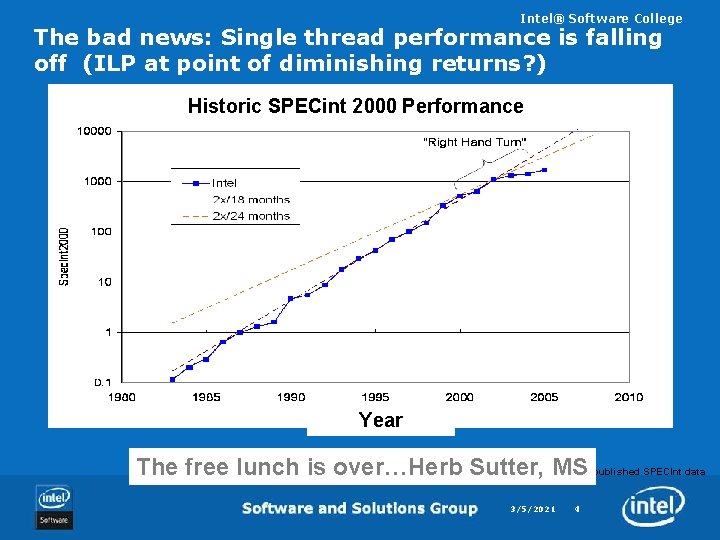

The bad news: single-thread performance is falling off (is ILP at the point of diminishing returns?)
Figure: historic SPECint 2000 performance by year. Source: published SPECint data.
“The free lunch is over…” (Herb Sutter, Microsoft)

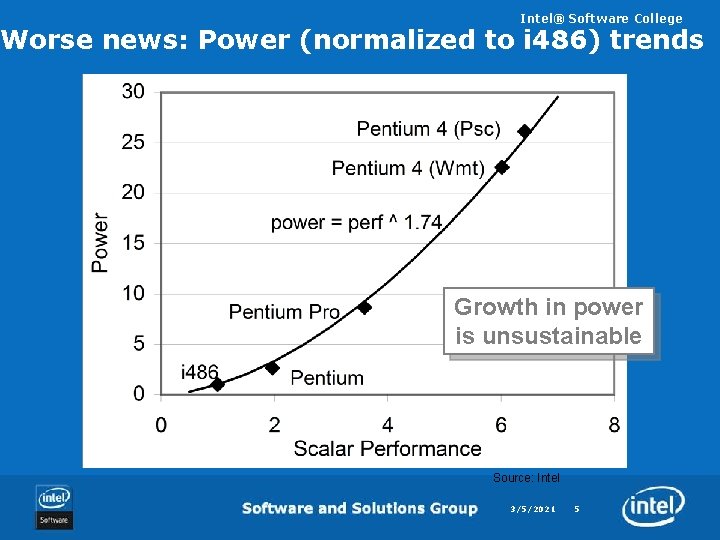

Worse news: power trends (normalized to i486)
Growth in power is unsustainable.
Source: Intel

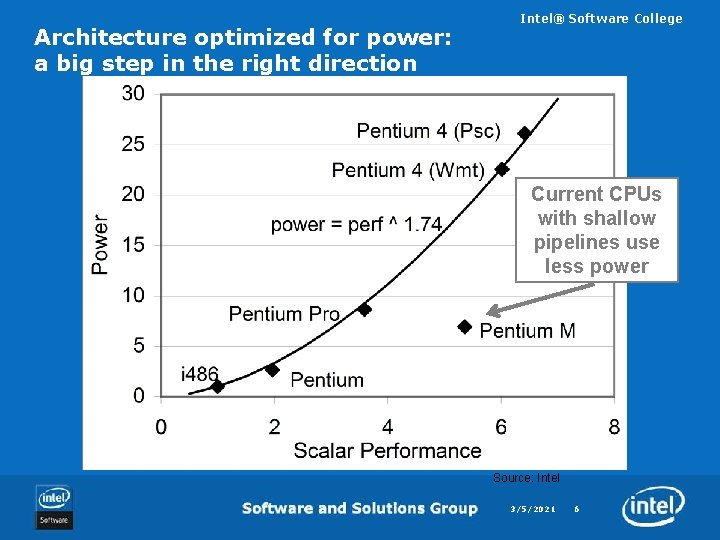

Architecture optimized for power: a big step in the right direction
Current CPUs with shallow pipelines use less power.
Source: Intel

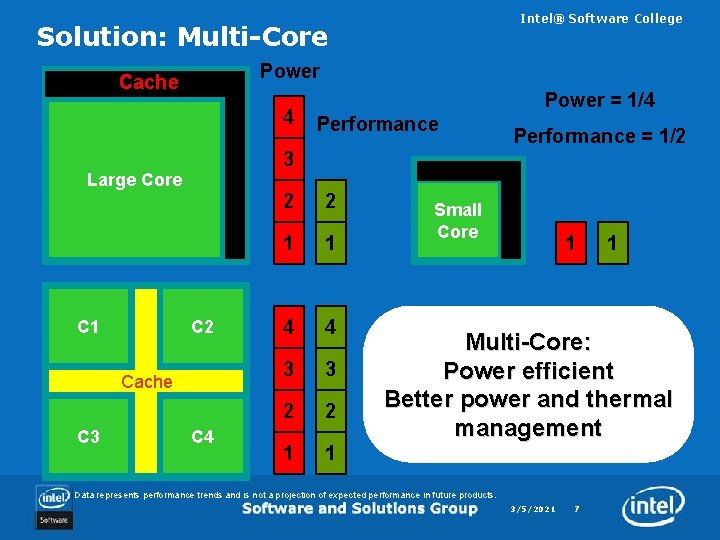

Solution: multi-core
Figure: one large core with its cache, compared with four small cores (C1 to C4) sharing a cache. Relative to the large core, each small core delivers Performance = 1/2 at Power = 1/4.
Multi-core: power efficient, with better power and thermal management.
Data represents performance trends and is not a projection of expected performance in future products.
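If the figure’s labels mean that each small core delivers 1/2 the large core’s performance at 1/4 of its power, the arithmetic behind the multi-core argument is short. This sketch assumes the work divides perfectly across the four small cores, which real programs rarely achieve:

```latex
\begin{aligned}
P_{\text{4 small}} &= 4 \times \tfrac{1}{4}\,P_{\text{large}} = P_{\text{large}} \\
\text{Perf}_{\text{4 small}} &\le 4 \times \tfrac{1}{2}\,\text{Perf}_{\text{large}} = 2\,\text{Perf}_{\text{large}}
\end{aligned}
```

For the same power budget, you get up to twice the throughput. The “up to” is the software challenge that the rest of this deck takes on.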

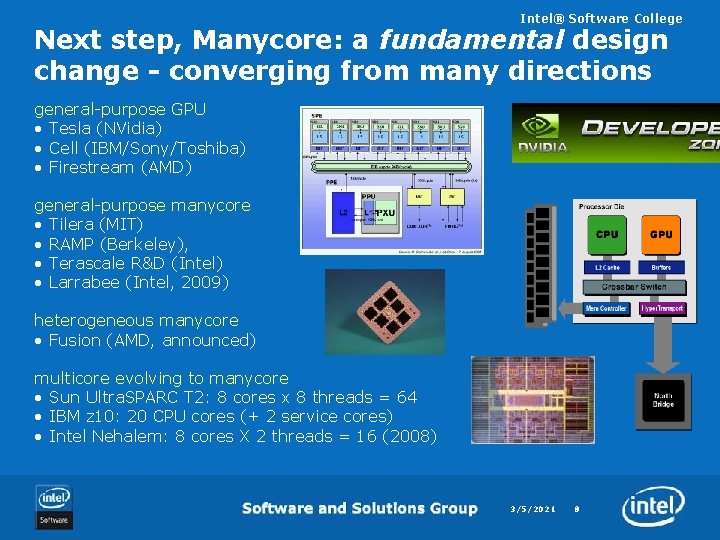

Next step, manycore: a fundamental design change, converging from many directions
• General-purpose GPU
  – Tesla (NVIDIA)
  – Cell (IBM/Sony/Toshiba)
  – FireStream (AMD)
• General-purpose manycore
  – Tilera (MIT)
  – RAMP (Berkeley)
  – Terascale R&D (Intel)
  – Larrabee (Intel, 2009)
• Heterogeneous manycore
  – Fusion (AMD, announced)
• Multicore evolving to manycore
  – Sun UltraSPARC T2: 8 cores x 8 threads = 64
  – IBM z10: 20 CPU cores (+ 2 service cores)
  – Intel Nehalem: 8 cores x 2 threads = 16 (2008)

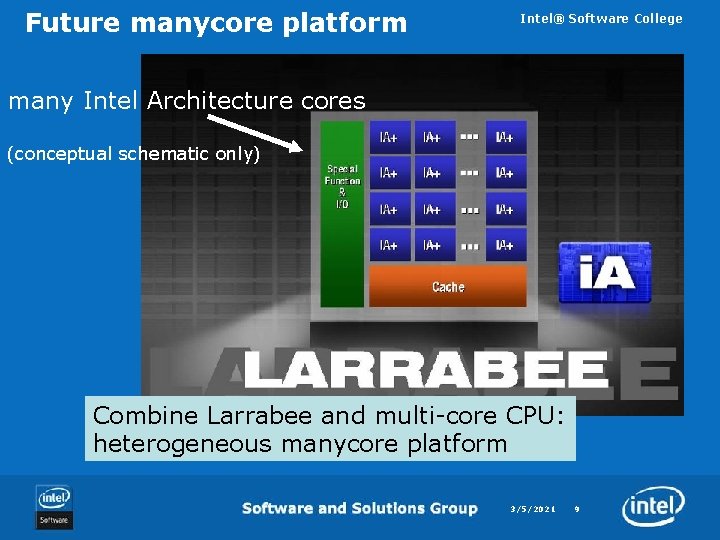

Future manycore platform
Figure: many Intel Architecture cores (conceptual schematic only).
Combine Larrabee and a multi-core CPU: a heterogeneous manycore platform.

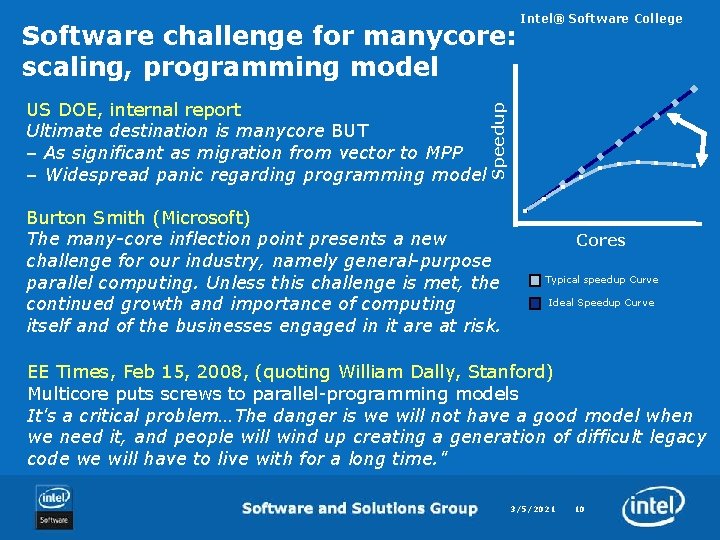

Software challenge for manycore: scaling, programming model
Figure: speedup versus number of cores, contrasting the ideal speedup curve with a typical speedup curve.

US DOE, internal report:
• The ultimate destination is manycore, BUT
  – it is as significant as the migration from vector to MPP
  – there is widespread panic regarding the programming model

Burton Smith (Microsoft): “The many-core inflection point presents a new challenge for our industry, namely general-purpose parallel computing. Unless this challenge is met, the continued growth and importance of computing itself and of the businesses engaged in it are at risk.”

EE Times, Feb 15, 2008, “Multicore puts screws to parallel-programming models” (quoting William Dally, Stanford): “It’s a critical problem… The danger is we will not have a good model when we need it, and people will wind up creating a generation of difficult legacy code we will have to live with for a long time.”
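The gap between the ideal and typical speedup curves is often modeled with Amdahl’s law, which the slide does not name. If a fraction f of the run time is inherently serial, the speedup on p cores is 1/(f + (1 - f)/p), and it can never exceed 1/f. Below is a minimal C sketch of that model. The function name is illustrative.

```c
/* Amdahl's law: predicted speedup on `cores` cores when `serial_fraction`
   (between 0 and 1) of the single-core run time cannot be parallelized.
   This is an idealized model. It ignores communication and synchronization
   costs, which bend real speedup curves down even further. */
double amdahl_speedup(double serial_fraction, int cores)
{
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores);
}
```

For example, with 5% serial work, amdahl_speedup(0.05, 64) is about 15.4. That is far below the ideal 64.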

Software challenge for manycore: traditional CS thinking is deeply sequential
The peanut butter & jelly example:
1. First, place a paper plate on the kitchen counter.
2. Then, get a loaf of white bread and a jar of peanut butter from the pantry, as well as a jar of grape jelly from the refrigerator, and place all of these things on the kitchen counter.
3. Next, take two slices of white bread out of the bread bag and put them on the paper plate.
4. Take a butter knife out of the kitchen drawer and place it on the paper plate as well.
5. Open the peanut butter jar, use the butter knife to remove some peanut butter, and spread it on one slice of bread.
6. Afterwards, open the jelly jar, take some jelly out with the knife, and smear it onto the other slice.
7. Place one slice of bread onto the other, so that the two sides with condiments face each other.
8. Enjoy your peanut butter and jelly sandwich!

Software challenge for manycore: traditional CS programs are hard to change
• Curriculum
  – What material gets dropped?
  – A new course, or a change to an existing one?
• Material is hard to find
  – Textbook? (parallelism was dropped from CLRS 2nd ed.!)
  – Lecture material, demos, labs?
• What is the right model/approach/language to teach?

Software challenge for manycore: academics are excited… and skeptical
• Dave Patterson, Berkeley, on the move to manycore: “Computer architecture is back…. End of La-Z-Boy era of programming.”
• Cannot assume that current models, for multicore SMP, will scale to manycore.
• Need to find new programming models for manycore processors.
• Charles Leiserson, MIT: “The Age of Serial Computing is over.”
• Karsten Schwan, Georgia Tech: “Since processors will be parallel, like the multi-core chips from Intel, we have to start educating and thinking in terms of parallel.”
• Edward Lee, Berkeley: “Intel, for example, has embarked on an active campaign to get leading computer science academic programs to put more emphasis on multi-threaded programming. If they are successful, and the next generation of programmers makes more intensive use of multithreading, then the next generation of computers will become nearly unusable.”

Manycore programming models: active R&D (in contrast to SMP multicore)
Conceptual models
• “View from Berkeley”: 13 dwarfs
• Design patterns; RMS (Intel)
• Alternatives to threads
• Everything old is new again?
  – Linda, MPI, functional languages, skeletons (templates, frameworks (Cactus)), actors…
Extensions to current languages
• X10 (IBM/DARPA)
• Ct (Intel CTG)
• OpenMP, TBB: will they continue to scale?
Explicitly parallel programming languages
• Erlang (Ericsson)
• TStreams (Intel/MIT)
• Cilk, Haskell, Charm++, Titanium, etc.

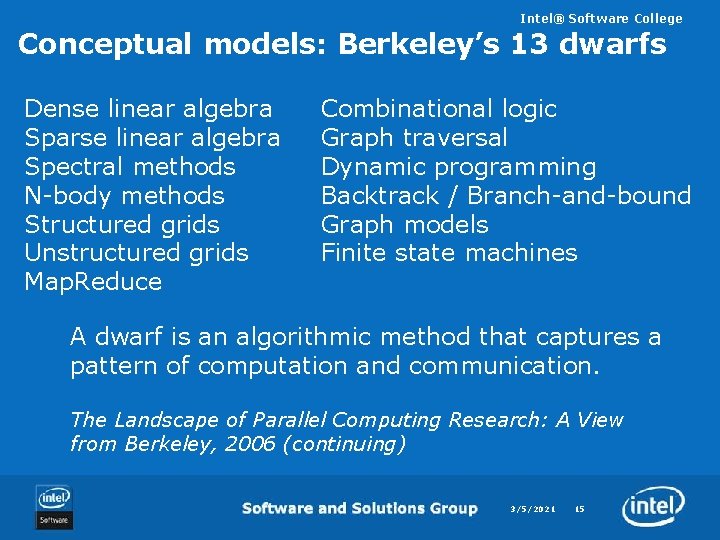

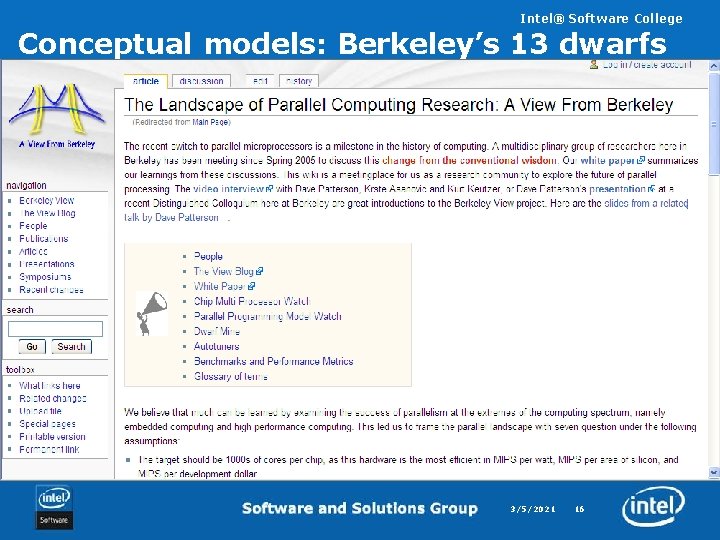

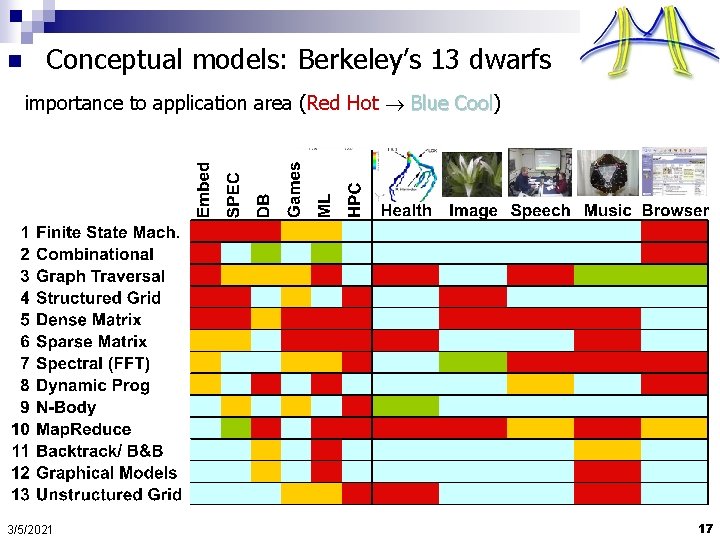

Conceptual models: Berkeley’s 13 dwarfs
A dwarf is an algorithmic method that captures a pattern of computation and communication.
• Dense linear algebra
• Sparse linear algebra
• Spectral methods
• N-body methods
• Structured grids
• Unstructured grids
• MapReduce
• Combinational logic
• Graph traversal
• Dynamic programming
• Backtrack / Branch-and-bound
• Graph models
• Finite state machines
Source: The Landscape of Parallel Computing Research: A View from Berkeley, 2006 (continuing)
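To make one dwarf concrete, here is a sketch of the structured-grids pattern: a single Jacobi sweep of a 1-D three-point stencil, written in C with OpenMP. The function name and the averaging rule are illustrative assumptions. The neighbour reads are the “communication” part of the pattern. On a distributed-memory machine they would become halo exchanges between processes.

```c
#include <stddef.h>

/* One Jacobi sweep of a 1-D three-point stencil. Each interior point of
   `out` becomes the average of the same point and its two neighbours in
   `in`. The two boundary values are copied unchanged. Because the loop
   reads only from `in` and writes only to `out`, the iterations are
   independent and can be split across threads. Without OpenMP the pragma
   is ignored and the loop runs serially. */
void stencil_sweep(const double *in, double *out, size_t n)
{
    if (n == 0)
        return;
    out[0] = in[0];
    out[n - 1] = in[n - 1];

    #pragma omp parallel for
    for (long i = 1; i < (long)n - 1; i++)
        out[i] = (in[i - 1] + in[i] + in[i + 1]) / 3.0;
}
```

The separate output array is what keeps the iterations independent. An in-place update would create a loop-carried dependency.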

Conceptual models: Berkeley’s 13 dwarfs (continued)

Conceptual models: Berkeley’s 13 dwarfs, importance to each application area (red = hot, blue = cool)

Conceptual models: patterns
A design pattern language for parallel algorithm design, with examples in MPI, OpenMP and Java. It represents the authors’ hypothesis for how programmers think about parallel programming.

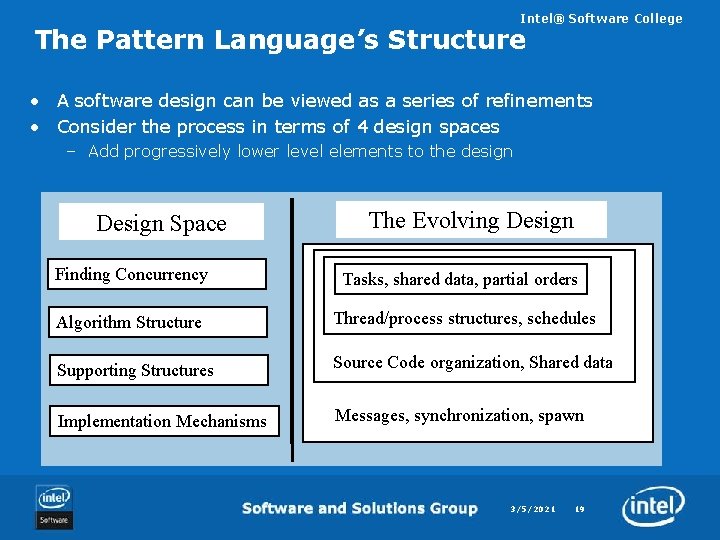

The pattern language’s structure
• A software design can be viewed as a series of refinements
• Consider the process in terms of 4 design spaces
  – Add progressively lower-level elements to the design

| Design space | The evolving design |
|---|---|
| Finding Concurrency | Tasks, shared data, partial orders |
| Algorithm Structure | Thread/process structures, schedules |
| Supporting Structures | Source code organization, shared data |
| Implementation Mechanisms | Messages, synchronization, spawn |

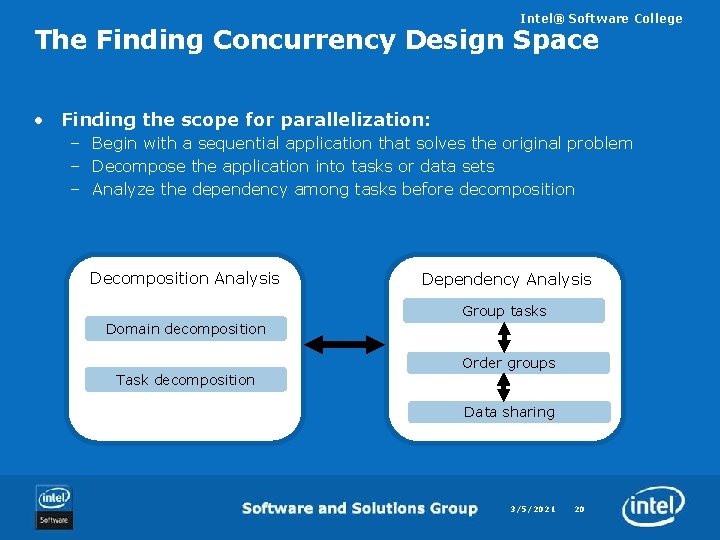

The Finding Concurrency design space
• Finding the scope for parallelization:
  – Begin with a sequential application that solves the original problem
  – Decompose the application into tasks or data sets
  – Analyze the dependencies among the resulting tasks
• Decomposition analysis: task decomposition, domain decomposition
• Dependency analysis: group tasks, order groups, data sharing
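Dependency analysis can be done mechanically once the tasks are known. In the C sketch below, each task lists the earlier tasks it depends on, and the tasks are grouped into levels. Tasks on the same level have no dependency path between them, so they may run concurrently. The function name and the bitmask encoding are illustrative choices. The bitmask limits the sketch to 32 tasks.

```c
/* Groups tasks into dependency levels.
   deps[i] is a bitmask of the tasks that task i must wait for (bit j means
   task j). Tasks must be numbered so that every dependency has a smaller
   index. level[i] is set to 1 + the highest level among task i's
   dependencies, or 0 if it has none. Tasks that share a level are
   independent.
   Returns the number of levels, i.e. the length of the longest dependency
   chain. Assumes ntasks <= 32 (one bit per task). */
int dependency_levels(const unsigned deps[], int ntasks, int level[])
{
    int depth = 0;
    for (int i = 0; i < ntasks; i++) {
        level[i] = 0;
        for (int j = 0; j < i; j++) {
            int depends_on_j = (deps[i] >> j) & 1u;
            if (depends_on_j && level[j] + 1 > level[i])
                level[i] = level[j] + 1;
        }
        if (level[i] + 1 > depth)
            depth = level[i] + 1;
    }
    return depth;
}
```

As an example, take the peanut-butter-and-jelly steps listed earlier, with these assumed dependencies:
• Steps 1 and 2 need nothing.
• Step 3 needs steps 1 and 2.
• Step 4 needs step 1.
• Steps 5 and 6 each need steps 3 and 4.
• Step 7 needs steps 5 and 6.
• Step 8 needs step 7.

That gives deps = {0x0, 0x0, 0x3, 0x1, 0xC, 0xC, 0x30, 0x40} and levels {0, 0, 1, 1, 2, 2, 3, 4}, so eight sequential steps collapse to five levels. Steps 5 and 6 only land on the same level if there are two knives. With the recipe’s single shared knife, step 6 must also wait for step 5, which is the data-sharing question in miniature.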

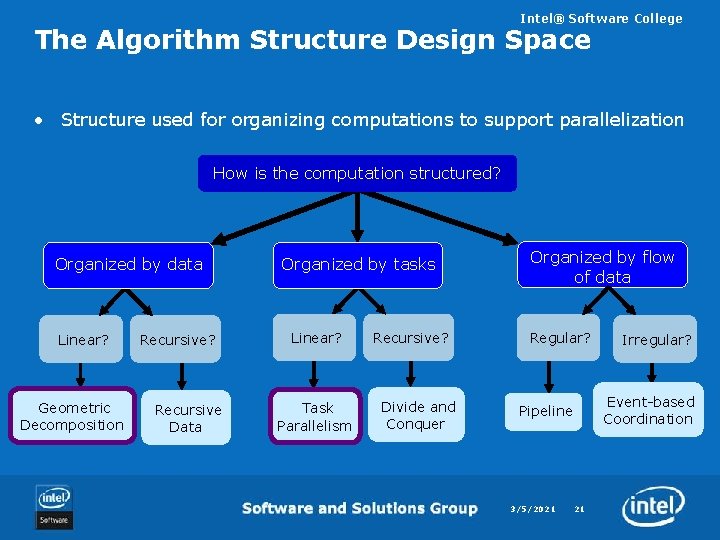

The Algorithm Structure design space
• Structure used for organizing computations to support parallelization
How is the computation structured?
• Organized by tasks
  – Linear? Task Parallelism
  – Recursive? Divide and Conquer
• Organized by data
  – Linear? Geometric Decomposition
  – Recursive? Recursive Data
• Organized by flow of data
  – Regular? Pipeline
  – Irregular? Event-based Coordination
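Here is a minimal sketch of the Divide and Conquer branch. It uses the OpenMP 3.0 task construct that appears later in this deck. A recursive array sum spawns a task for one half of the range and recurses on the other half. The function names and the cutoff value are illustrative assumptions. Compiled without OpenMP support, the pragmas are ignored and the code runs serially with the same result.

```c
#include <stddef.h>

/* Below this size, splitting further costs more than it saves
   (illustrative value, not tuned). */
#define CUTOFF 1000

/* Sums a[0..n-1] by splitting the range in half. A child task sums the
   left half while the current task sums the right half. Then the current
   task waits for its child. */
static long dc_sum_rec(const int *a, size_t n)
{
    if (n <= CUTOFF) {
        long s = 0;
        for (size_t i = 0; i < n; i++)
            s += a[i];
        return s;
    }
    long left = 0, right;
    size_t half = n / 2;

    /* `left` must be shared so that the child's result is visible here.
       `a` and `half` are captured by value (firstprivate by default). */
    #pragma omp task shared(left)
    left = dc_sum_rec(a, half);

    right = dc_sum_rec(a + half, n - half);

    #pragma omp taskwait  /* wait for the child task before using `left` */
    return left + right;
}

long dc_sum(const int *a, size_t n)
{
    long total = 0;
    #pragma omp parallel
    {
        /* One thread starts the recursion. The other threads in the team
           pick up the tasks it creates. */
        #pragma omp single
        total = dc_sum_rec(a, n);
    }
    return total;
}
```

The cutoff matters. Splitting all the way down to single elements would create far more tasks than cores, and scheduling overhead would swamp the useful work.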

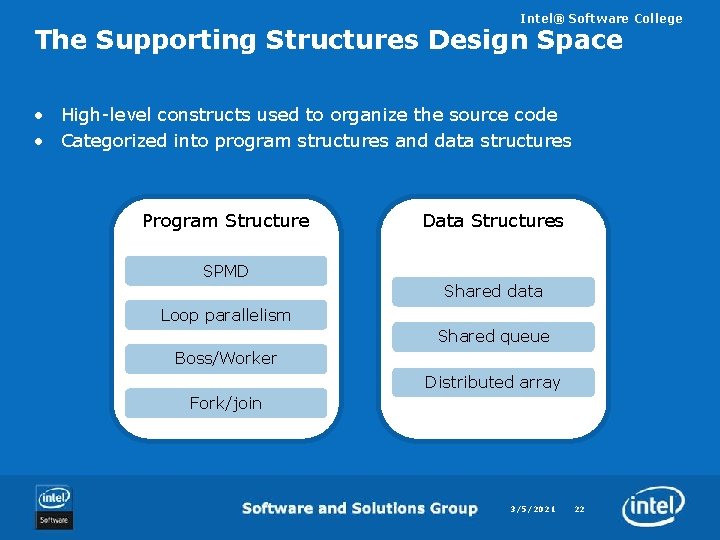

The Supporting Structures design space
• High-level constructs used to organize the source code
• Categorized into program structures and data structures
  – Program structures: SPMD, loop parallelism, boss/worker, fork/join
  – Data structures: shared data, shared queue, distributed array
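Loop parallelism is the supporting structure most programmers meet first. The C sketch below uses OpenMP, and the function name is illustrative. The iterations of a dot product are independent except for the running sum, and a reduction clause handles that sum.

```c
/* Loop parallelism: OpenMP splits the iterations among threads. The
   reduction clause gives each thread a private copy of `sum`, initialized
   to 0, and adds the copies together when the loop ends, so no lock is
   needed. Without OpenMP the pragma is ignored and the loop runs
   serially. */
double dot(const double *x, const double *y, long n)
{
    double sum = 0.0;
    #pragma omp parallel for reduction(+:sum)
    for (long i = 0; i < n; i++)
        sum += x[i] * y[i];
    return sum;
}
```

The partial sums may be combined in a different order from the serial loop. As a result, floating-point results can differ in the last bits from run to run.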

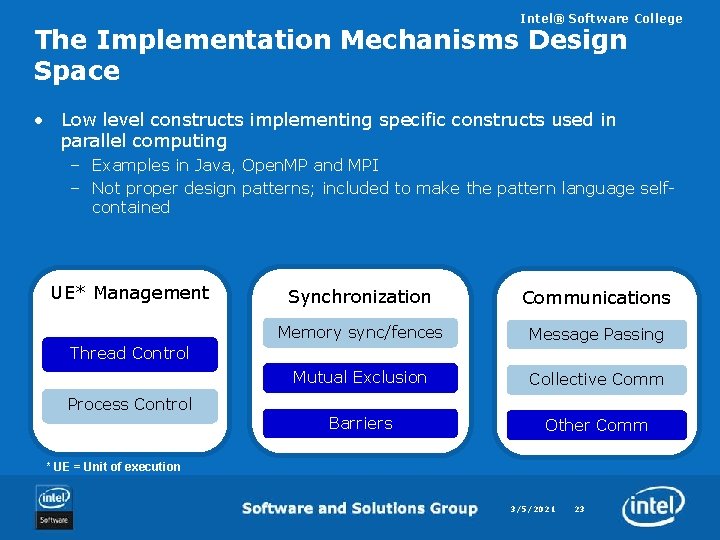

The Implementation Mechanisms design space
• Low-level constructs that implement the mechanisms used in parallel computing
  – Examples in Java, OpenMP and MPI
  – Not proper design patterns; included to make the pattern language self-contained
• UE* management: thread control, process control
• Synchronization: memory sync/fences, mutual exclusion, barriers
• Communications: message passing, collective communication, other communication
* UE = unit of execution
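The C sketch below uses OpenMP to show two of these synchronization mechanisms, mutual exclusion and barriers. The function is a hypothetical example:
1. Each thread counts matches privately.
2. Each thread merges its count into a shared total inside a critical section.
3. A barrier guarantees that every merge has finished before one thread reads the total.

```c
/* Counts the elements of a[0..n-1] that are greater than threshold. */
long count_above(const int *a, long n, int threshold)
{
    long total = 0;   /* shared by all threads in the team */
    long result = 0;  /* shared; written once, by one thread */

    #pragma omp parallel
    {
        long mine = 0;  /* private: declared inside the parallel region */

        #pragma omp for
        for (long i = 0; i < n; i++)
            if (a[i] > threshold)
                mine++;

        /* Mutual exclusion: only one thread at a time updates total. */
        #pragma omp critical
        total += mine;

        /* Barrier: no thread continues until every thread has merged. */
        #pragma omp barrier

        /* One thread reads the now-complete total. */
        #pragma omp single
        result = total;
    }
    return result;
}
```

Real code would simply read total after the parallel region ends, since the end of the region is itself an implicit barrier. The explicit barrier is here to show why a read inside the region needs one.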

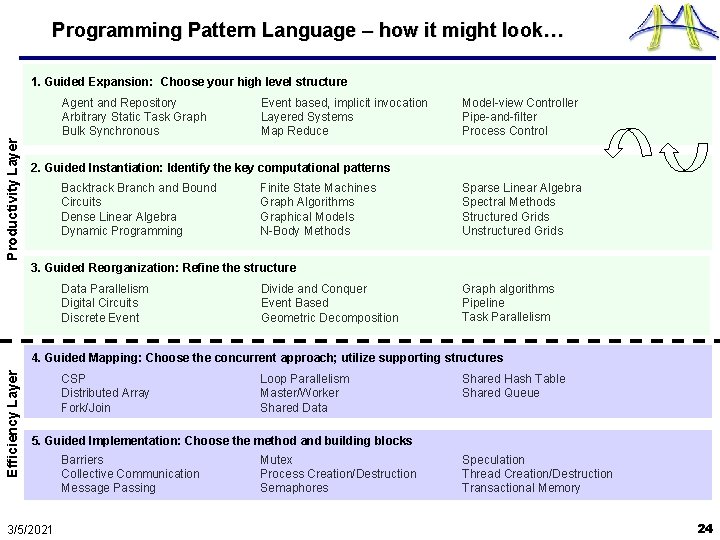

Programming pattern language: how it might look…
The steps run from the productivity layer (top) down to the efficiency layer (bottom).
1. Guided expansion: choose your high-level structure
   – Agent and Repository, Arbitrary Static Task Graph, Bulk Synchronous, Event-based/Implicit Invocation, Layered Systems, MapReduce, Model-View-Controller, Pipe-and-Filter, Process Control
2. Guided instantiation: identify the key computational patterns
   – Backtrack/Branch and Bound, Circuits, Dense Linear Algebra, Dynamic Programming, Finite State Machines, Graph Algorithms, Graphical Models, N-Body Methods, Sparse Linear Algebra, Spectral Methods, Structured Grids, Unstructured Grids
3. Guided reorganization: refine the structure
   – Data Parallelism, Digital Circuits, Discrete Event, Divide and Conquer, Event Based, Geometric Decomposition, Graph Algorithms, Pipeline, Task Parallelism
4. Guided mapping: choose the concurrent approach; utilize supporting structures
   – CSP, Distributed Array, Fork/Join, Loop Parallelism, Master/Worker, Shared Data, Shared Hash Table, Shared Queue
5. Guided implementation: choose the method and building blocks
   – Barriers, Collective Communication, Message Passing, Mutex, Process Creation/Destruction, Semaphores, Speculation, Thread Creation/Destruction, Transactional Memory

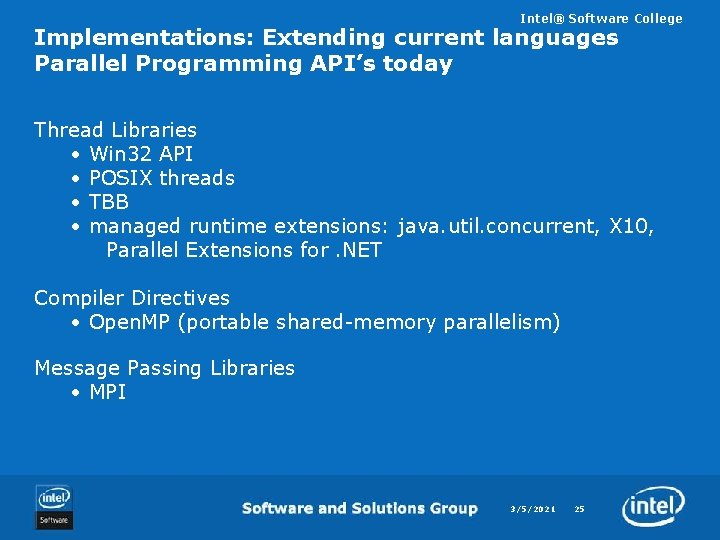

Implementations: extending current languages
Parallel programming APIs today:
• Thread libraries
  – Win32 API
  – POSIX threads
  – TBB
  – Managed runtime extensions: java.util.concurrent, X10, Parallel Extensions for .NET
• Compiler directives
  – OpenMP (portable shared-memory parallelism)
• Message-passing libraries
  – MPI
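The thread-library style contrasts with the directive-based approach. With POSIX threads, the programmer partitions the work, creates the threads and joins them by hand. The sketch below assumes a fixed thread count, and the struct and function names are illustrative. On older glibc versions, link with -pthread.

```c
#include <pthread.h>
#include <stddef.h>

#define NTHREADS 4  /* illustrative; real code would query the core count */

/* One contiguous slice of the array and the partial sum computed for it. */
struct chunk {
    const int *a;
    size_t lo, hi;  /* half-open range [lo, hi) */
    long sum;
};

static void *sum_chunk(void *arg)
{
    struct chunk *c = arg;
    long s = 0;
    for (size_t i = c->lo; i < c->hi; i++)
        s += c->a[i];
    c->sum = s;  /* each thread writes only its own chunk, so no lock is needed */
    return NULL;
}

/* Sums a[0..n-1] by splitting it into NTHREADS slices, one per thread. */
long threaded_sum(const int *a, size_t n)
{
    pthread_t tid[NTHREADS];
    int started[NTHREADS];
    struct chunk ch[NTHREADS];
    long total = 0;

    for (int t = 0; t < NTHREADS; t++) {
        ch[t].a = a;
        ch[t].lo = n * t / NTHREADS;
        ch[t].hi = n * (t + 1) / NTHREADS;
        ch[t].sum = 0;
        started[t] = pthread_create(&tid[t], NULL, sum_chunk, &ch[t]) == 0;
        if (!started[t])
            sum_chunk(&ch[t]);  /* creation failed: do this slice ourselves */
    }
    for (int t = 0; t < NTHREADS; t++) {
        if (started[t])
            pthread_join(tid[t], NULL);  /* wait, then read the slice's result */
        total += ch[t].sum;
    }
    return total;
}
```

Compare this with the one-line OpenMP reduction. Partitioning, thread lifetime and result collection are all explicit here, which is more control but also more code to get wrong.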

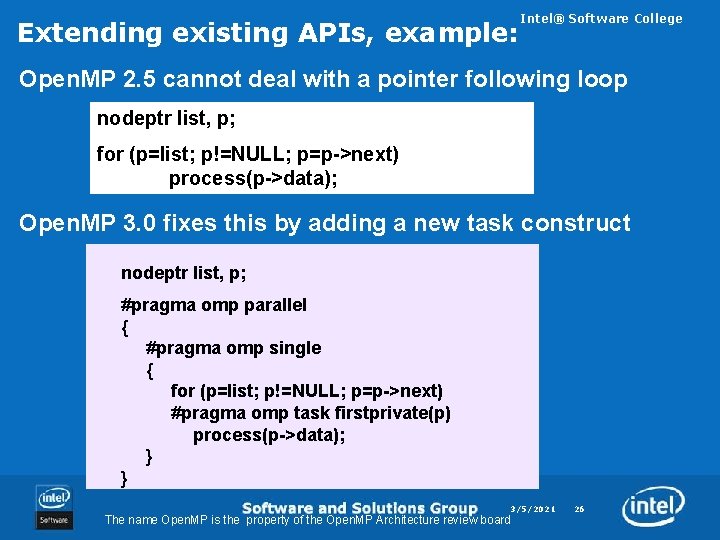

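Extending existing APIs, example: OpenMP

OpenMP 2.5 cannot deal with a pointer-following loop. Its loop worksharing construct (#pragma omp for) accepts only loops in canonical form, whose iteration count can be computed before the loop starts:

```c
nodeptr list, p;
for (p = list; p != NULL; p = p->next)
    process(p->data);
```

OpenMP 3.0 fixes this by adding a new task construct. One thread walks the list inside a single region and packages the work for each node as a task that any thread in the team may execute:

```c
nodeptr list, p;
#pragma omp parallel
{
    #pragma omp single
    {
        for (p = list; p != NULL; p = p->next)
            #pragma omp task firstprivate(p)
            process(p->data);
    }
}
```

The name OpenMP is the property of the OpenMP Architecture Review Board.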
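The slide’s fragment leaves nodeptr and process() undefined. Below is a self-contained sketch of the same task-based traversal under assumed definitions. The node layout, struct item and the squaring done by process() are all hypothetical.

```c
#include <stddef.h>

/* Hypothetical work item and list node; the slide does not define them. */
struct item { int in; int out; };
typedef struct node { struct item *data; struct node *next; } *nodeptr;

/* Hypothetical per-node work: squares the input. */
static void process(struct item *d)
{
    d->out = d->in * d->in;
}

void process_list(nodeptr list)
{
    nodeptr p;  /* declared outside the parallel region, so shared */
    #pragma omp parallel
    {
        #pragma omp single
        {
            for (p = list; p != NULL; p = p->next) {
                /* firstprivate(p): the task keeps the value p had when the
                   task was created, even after the loop advances p. */
                #pragma omp task firstprivate(p)
                process(p->data);
            }
        }   /* implicit barrier at the end of single: all tasks finish here */
    }
}
```

Only the thread executing the single region advances p, so the shared pointer is never updated concurrently. Each task works on a different node, so the tasks need no further synchronization.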

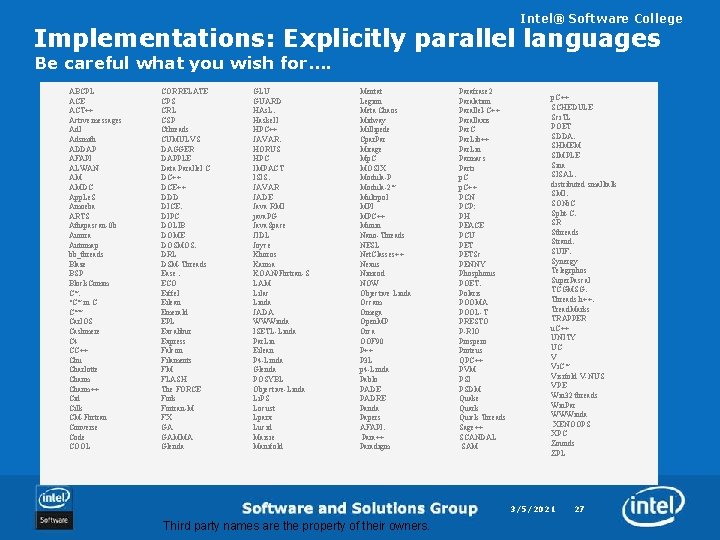

Implementations: explicitly parallel languages
Be careful what you wish for…
ABCPL, ACE, ACT++, Active messages, Adl, Adsmith, ADDAP, AFAPI, ALWAN, AM, AMDC, AppLeS, Amoeba, ARTS, Athapascan-0b, Aurora, Automap, bb_threads, Blaze, BSP, BlockComm, C*, C* in C, C**, CarlOS, Cashmere, C4, CC++, Chu, Charlotte, Charm++, Cid, Cilk, CM-Fortran, Converse, Code, COOL, CORRELATE, CPS, CRL, CSP, Cthreads, CUMULVS, DAGGER, DAPPLE, Data Parallel C, DC++, DCE++, DDD, DICE, DIPC, DOLIB, DOME, DOSMOS, DRL, DSM-Threads, Ease, ECO, Eiffel, Eilean, Emerald, EPL, Excalibur, Express, Falcon, Filaments, FM, FLASH, The FORCE, Fork, Fortran-M, FX, GA, GAMMA, Glenda, GLU, GUARD, HAsL, Haskell, HPC++, JAVAR, HORUS, HPC, IMPACT, ISIS, JAVAR, JADE, Java RMI, javaPG, JavaSpace, JIDL, Joyce, Khoros, Karma, KOAN/Fortran-S, LAM, Lilac, Linda, JADA, WWWinda, ISETL-Linda, ParLin, Eilean, P4-Linda, Glenda, POSYBL, Objective-Linda, LiPS, Locust, Lparx, Lucid, Maisie, Manifold, Mentat, Legion, Meta Chaos, Midway, Millipede, CparPar, Mirage, MpC, MOSIX, Modula-P, Modula-2*, Multipol, MPI, MPC++, Munin, Nano-Threads, NESL, NetClasses++, Nexus, Nimrod, NOW, Objective Linda, Occam, Omega, OpenMP, Orca, OOF90, P++, P3L, p4-Linda, Pablo, PADE, PADRE, Panda, Papers, AFAPI, Para++, Paradigm, Parafrase2, Paralation, Parallel-C++, Parallaxis, ParC, ParLib++, ParLin, Parmacs, Parti, pC++, PCN, PCP, PH, PEACE, PCU, PETSc, PENNY, Phosphorus, POET, Polaris, POOMA, POOL-T, PRESTO, P-RIO, Prospero, Proteus, QPC++, PVM, PSI, PSDM, Quake, Quark, Quick Threads, Sage++, SCANDAL, SAM, pC++, SCHEDULE, SciTL, POET, SDDA, SHMEM, SIMPLE, Sina, SISAL, distributed smalltalk, SMI, SONiC, Split-C, SR, Sthreads, Strand, SUIF, Synergy, Telegrphos, SuperPascal, TCGMSG, Threads.h++, TreadMarks, TRAPPER, uC++, UNITY, UC, V, ViC*, Visifold, V-NUS, VPE, Win32 threads, WinPar, WWWinda, XENOOPS, XPC, Zounds, ZPL
Third party names are the property of their owners.

Software challenge for manycore: what is Intel doing about this?
• Research funding
  – UPCRC at Berkeley & Illinois (jointly with Microsoft)
  – PPL at Stanford (with several others)
• Tools
  – Intel Thread Checker, Thread Profiler
  – Threading Building Blocks (open source)
• University Program
  – Multi-core content and training
  – Curriculum development grants

Intel® Software College Intel Academic Community – Academic Community Collaborating Worldwide In 69 countries around the world , at more than 700 Universities 1150 professors are teaching 35000 new software professionals Multi-core programming 3/5/2021 29

Intel University Program
• Courseware to drop into existing courses
• Continual technology updates
• Free Intel® Software Development Tools licenses for the classroom
• Wiki and blog for collaboration
• Forums for specific questions
academiccommunity.intel.com

Call to action
Join the effort: academiccommunity.intel.com
