


Integrated Memory Controllers with Parallel Coherence Streams

Mainak Chaudhuri, IIT Kanpur
Mark Heinrich, University of Central Florida

Talk in One Slide
- Ever-increasing on-die integration
  - Faster memory controllers and coherence processors
  - Leads to new trade-offs in the domain of programmable coherence engines for scalable directory-based DSM multiprocessors
  - We show that multiple coherence engines are unnecessary in such environments
  - We develop a useful analytical model to quickly decide the coherence bandwidth requirement of parallel applications

Sketch
- Background
- Memory controller architecture
- Analytical model
- Evaluation framework
- Simulation results
  - Validation of model for directory protocols
  - Directory-less broadcast protocols
  - Multiprogramming
- Summary

Background: Integrated MC
- A direct solution to reduce round-trip cache miss latency
  - Other advantages related to maintenance and glueless multiprocessing
- Widely accepted in high-end industry
  - Alpha 21364, IBM Power5, AMD Opteron, Sun UltraSPARC III and IV, Sun Niagara
- Shared memory multiprocessors employing iMC are naturally DSMs
  - Bandwidth-thrifty directory coherence is the natural choice

Background: Directory Processing
- Home-based coherence protocols
  - Each cache block has a home node, given by the upper few bits of the physical address
  - Each coherence request (a miss or a dirty eviction from the last level of cache) is first sent to the home node of the cache block
  - At the home node, the sharing information of the cache block is maintained in a data structure called the directory (in SRAM or DRAM)
  - The coherence controller of the home node looks up the directory and takes the appropriate actions
- Each node has at least one embedded directory coherence controller
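As a minimal sketch of the home-node mapping, the home can be read off the top address bits. The sketch assumes a hypothetical 40-bit physical address space, 16 nodes (the largest configuration studied here), and 128-byte cache lines. The names `home_node`, `line_address`, and `PHYS_ADDR_BITS` are illustrative only.

```python
# Hypothetical parameters: the slides only say the home node is given by
# "upper few bits of physical address"; widths below are assumptions.
NUM_NODES = 16
PHYS_ADDR_BITS = 40
NODE_BITS = (NUM_NODES - 1).bit_length()  # 4 bits select one of 16 homes
CACHE_LINE_BYTES = 128


def home_node(paddr: int) -> int:
    """Return the home node id held in the top NODE_BITS of the address."""
    return (paddr >> (PHYS_ADDR_BITS - NODE_BITS)) & (NUM_NODES - 1)


def line_address(paddr: int) -> int:
    """Align an address down to its (assumed 128-byte) cache-line boundary."""
    return paddr & ~(CACHE_LINE_BYTES - 1)


if __name__ == "__main__":
    addr = (5 << 36) | 0x1234_5678  # an address whose top 4 bits encode node 5
    print(home_node(addr), hex(line_address(addr)))
```

Because the home is a pure function of the address, every node can route a miss or dirty eviction to the correct home without consulting any shared state.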

Background: Directory Processing
- Two different trends in directory coherence controller architecture
  - Hardwired controllers
    - Less flexible, tedious to verify, often on the project's critical path, but high performance
    - MIT Alewife, KSR1, SGI Origin, Stanford DASH
  - Custom programmable controllers
    - Execute protocol software on a protocol processor embedded in the memory controller
    - Flexible in the choice of protocol and easier to verify, at some loss of performance
    - Compaq Piranha, Opteron-Horus, Stanford FLASH, Sequent STiNG, Sun S3.mp


Background: Flexible Processing
- Past research reports up to 12% performance loss [Stanford FLASH]
  - This is the main reason why industry is shy of pursuing this option
- Coherence controller occupancy has emerged as the most important parameter
  - Naturally, hardwired controllers get the upper hand
  - Past research has established the importance of multiple hardwired controllers in SMP nodes

Background: Flexible Processing
- New technology often changes the trade-offs
  - Reconsider programmable directory controllers in the light of increased integration
    - Bring the programmable controller on die
    - Faster clock rates lead to lower occupancy
  - New research questions:
    - Can integrated programmable controllers offer enough coherence bandwidth?
    - Do we need multiple of them?
    - Can integrated controllers cope with the extra pressure of emerging multithreaded nodes?

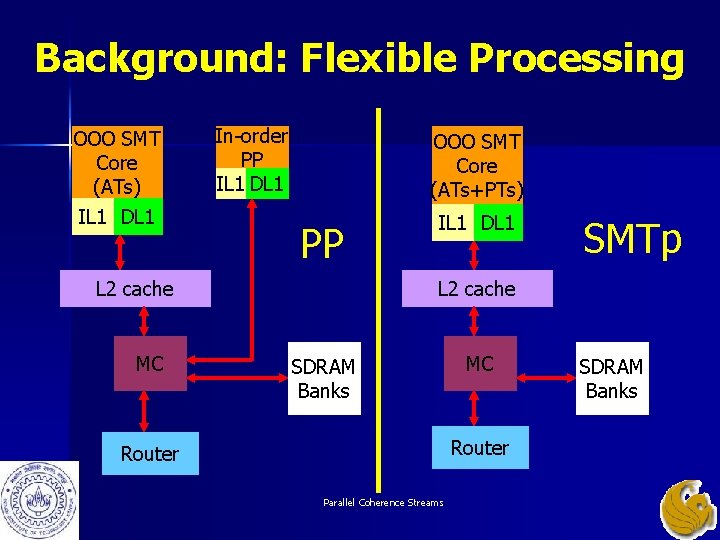

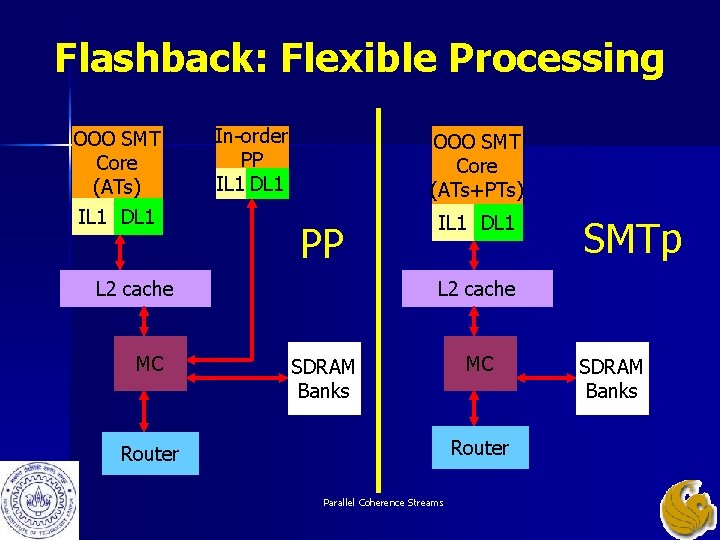

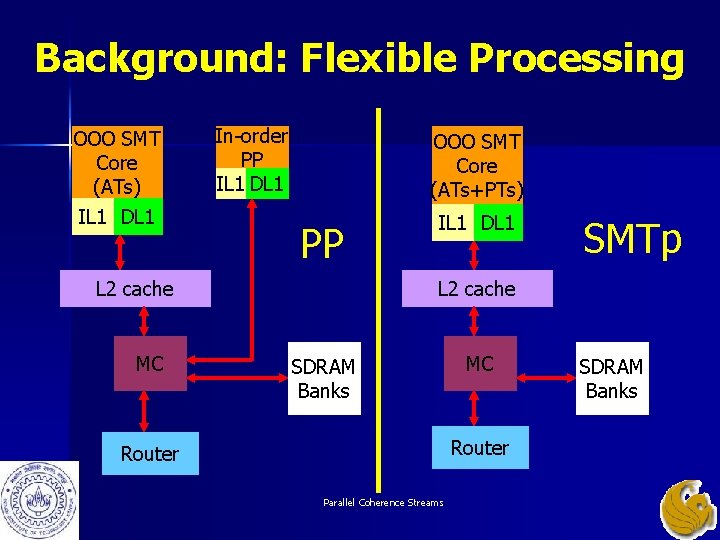

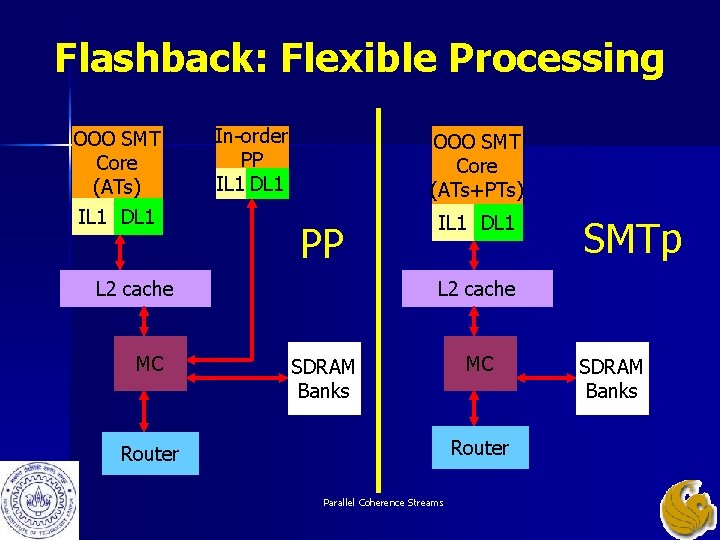

Background: Flexible Processing
- Executes coherence protocol handlers in software with hardware support
  - Does not require interrupts
- Two major architectures
  - Integrated custom protocol processor(s)
    - Can use one or more simple cores (this work considers one or two static dual-issue in-order cores, each with a dedicated single level of caches)
  - Reserved protocol thread context(s) in a simultaneous multithreaded (SMT) node
    - SMTp: SMT with one or more protocol contexts
    - Eliminates the protocol processor

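Background: Flexible Processing
[Figure: the two node organizations. PP: an out-of-order SMT core running application threads (ATs) with L1 instruction and data caches (IL1, DL1), an in-order protocol processor (PP) with its own IL1 and DL1, an L2 cache, the memory controller (MC), SDRAM banks, and the router. SMTp: an out-of-order SMT core running both ATs and protocol threads (PTs) with IL1 and DL1, an L2 cache, the MC, and SDRAM banks.]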

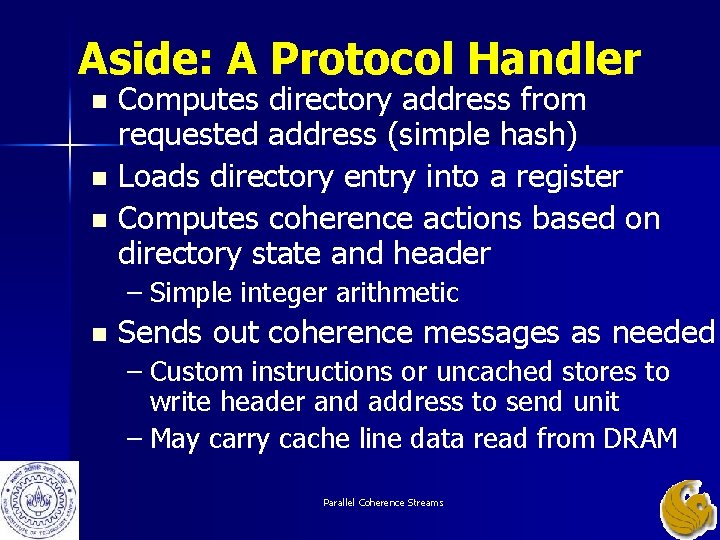

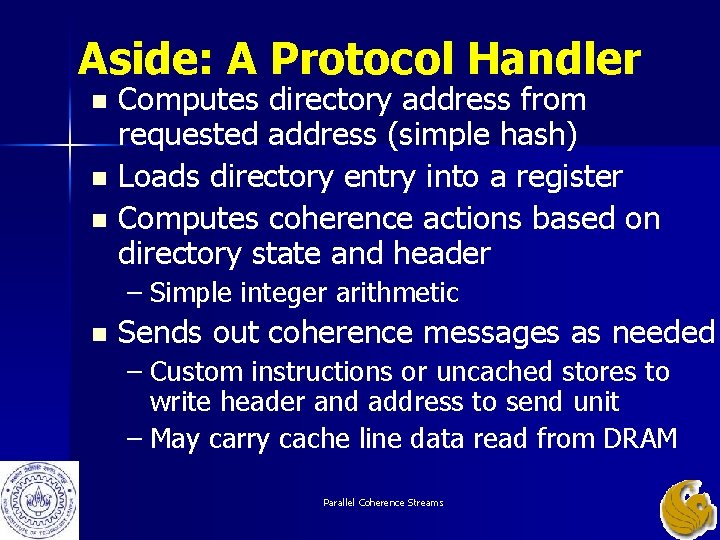

Aside: A Protocol Handler
- Computes the directory address from the requested address (a simple hash)
- Loads the directory entry into a register
- Computes coherence actions based on the directory state and the message header
  - Simple integer arithmetic
- Sends out coherence messages as needed
  - Custom instructions or uncached stores write the header and address to the send unit
  - Messages may carry cache line data read from DRAM
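The four handler steps can be mirrored in a short sketch of a home-side read handler. Everything concrete here is hypothetical: the directory entry layout (`state`, a `sharers` bit vector, `owner`), the `DIR_BASE` region, and the message names. The sketch also omits transient states, NAKs, and read-exclusive handling, so it illustrates the step structure rather than the protocol used in this work.

```python
from dataclasses import dataclass

LINE_SHIFT = 7            # 128-byte cache lines
DIR_ENTRY_BYTES = 8       # hypothetical 64-bit directory entry
DIR_BASE = 0x1000_0000    # hypothetical base address of the directory region

UNOWNED, SHARED, EXCLUSIVE = "UNOWNED", "SHARED", "EXCLUSIVE"


@dataclass
class DirEntry:
    state: str = UNOWNED
    sharers: int = 0      # bit i set => node i holds a shared copy
    owner: int = -1       # meaningful only in the EXCLUSIVE state


def dir_address(line_addr: int) -> int:
    """Step 1: simple hash from the requested line to its directory entry."""
    return DIR_BASE + (line_addr >> LINE_SHIFT) * DIR_ENTRY_BYTES


def handle_read(directory: dict, line_addr: int, requester: int) -> list:
    """Home-side handler for a read miss; returns the messages to send."""
    key = dir_address(line_addr)                     # step 1
    entry = directory.setdefault(key, DirEntry())    # step 2: load the entry
    msgs = []
    if entry.state == EXCLUSIVE:                     # step 3: integer tests
        # Dirty in another cache: forward the request to the owner.
        msgs.append(("FWD_READ", entry.owner, line_addr, requester))
        entry.sharers = (1 << entry.owner) | (1 << requester)
        entry.owner = -1
    else:
        # Clean at home: reply with the line read (speculatively) from DRAM.
        msgs.append(("DATA_REPLY", requester, line_addr))
        entry.sharers |= 1 << requester
    entry.state = SHARED  # simplification: no transient/pending state
    return msgs                                      # step 4: to the send unit


if __name__ == "__main__":
    directory = {}
    print(handle_read(directory, 0x4000, requester=3))
```

Even this toy version shows why handler occupancy is dominated by a directory load plus a few integer tests and message sends, which is the work a faster on-die clock shortens.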

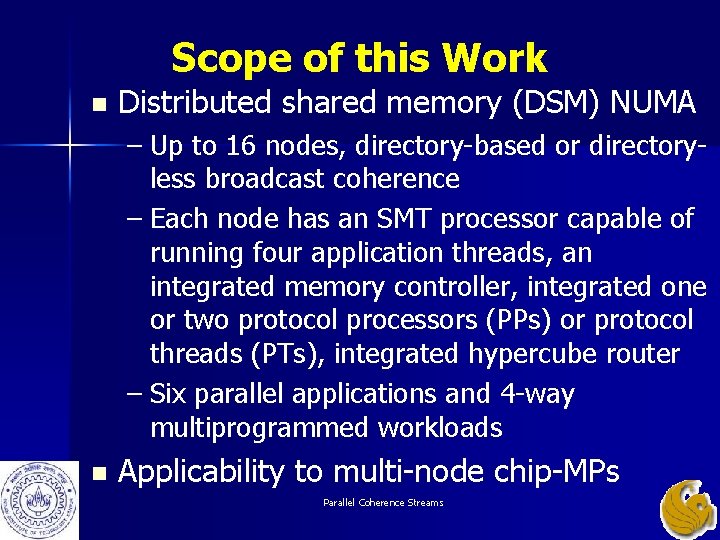

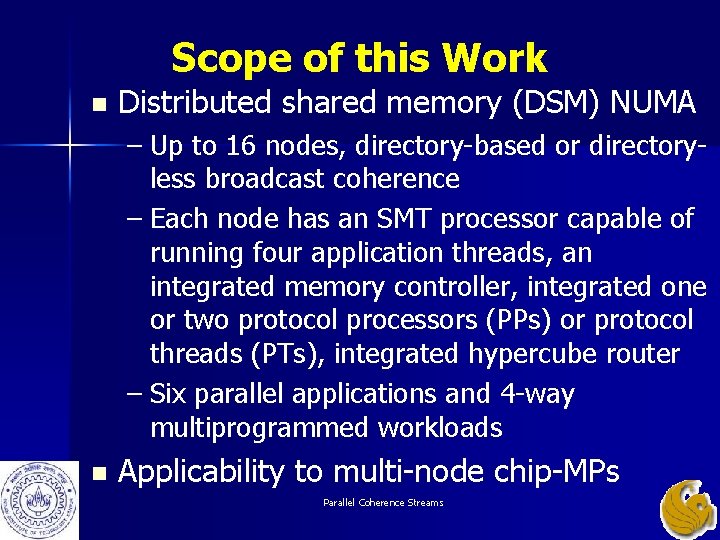

Scope of this Work
- Distributed shared memory (DSM) NUMA
  - Up to 16 nodes, directory-based or directory-less broadcast coherence
  - Each node has an SMT processor capable of running four application threads, an integrated memory controller, one or two integrated protocol processors (PPs) or protocol threads (PTs), and an integrated hypercube router
  - Six parallel applications and 4-way multiprogrammed workloads
- Applicability to multi-node chip multiprocessors (chip-MPs)

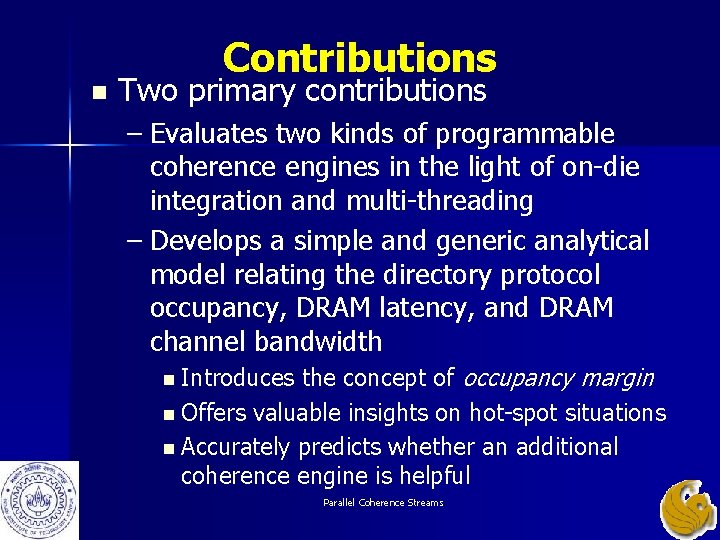

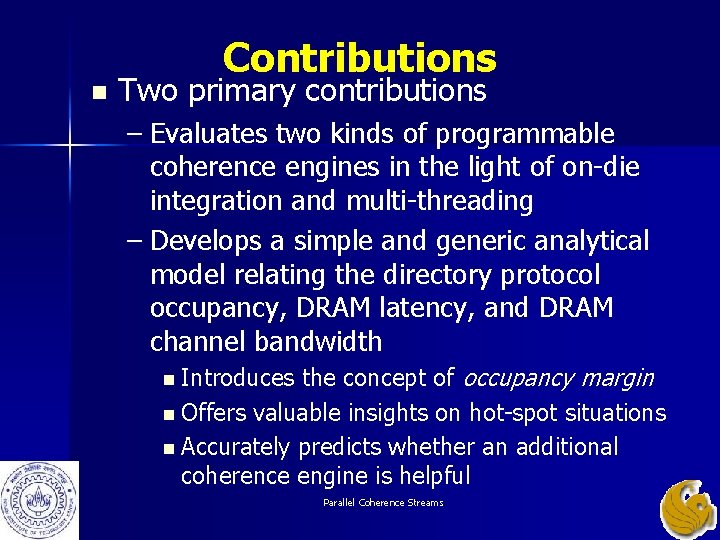

Contributions
- Two primary contributions
  - Evaluates two kinds of programmable coherence engines in the light of on-die integration and multithreading
  - Develops a simple and generic analytical model relating directory protocol occupancy, DRAM latency, and DRAM channel bandwidth
    - Introduces the concept of occupancy margin
    - Offers valuable insights on hot-spot situations
    - Accurately predicts whether an additional coherence engine is helpful

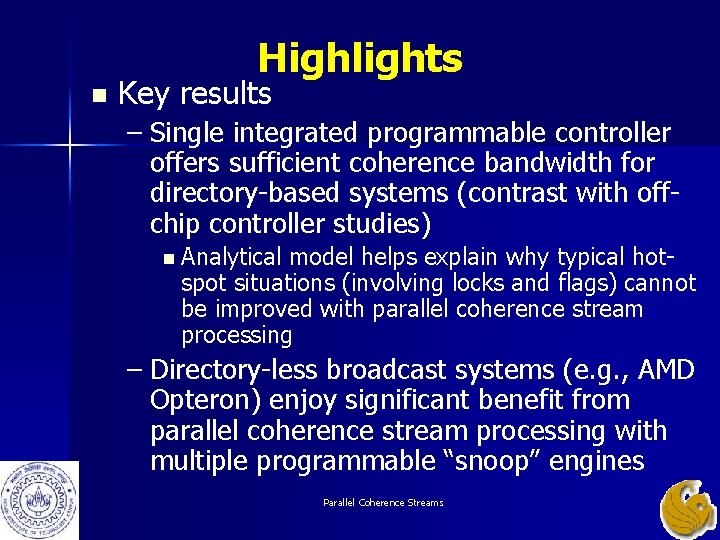

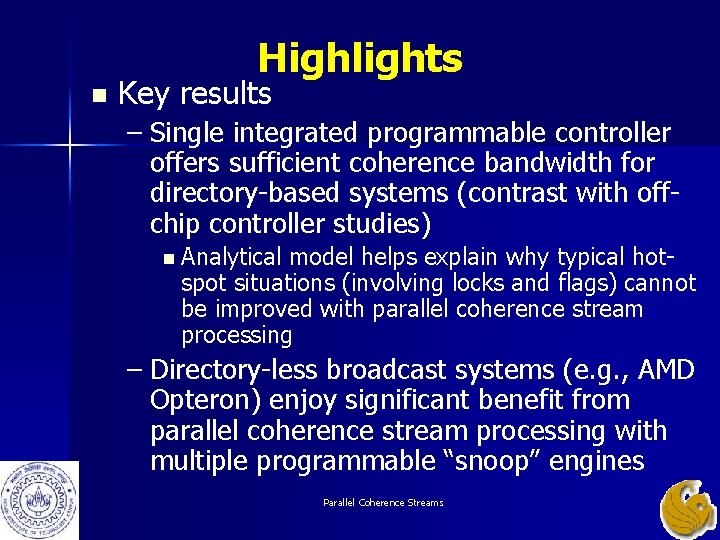

Highlights
- Key results
  - A single integrated programmable controller offers sufficient coherence bandwidth for directory-based systems (in contrast with studies of off-chip controllers)
    - The analytical model helps explain why typical hot-spot situations (involving locks and flags) cannot be improved with parallel coherence stream processing
  - Directory-less broadcast systems (e.g., AMD Opteron) enjoy significant benefit from parallel coherence stream processing with multiple programmable "snoop" engines

Sketch
- Background
- Memory controller architecture (current section)
- Analytical model
- Evaluation framework
- Simulation results
  - Validation of model for directory protocols
  - Directory-less broadcast protocols
  - Multiprogramming
- Summary

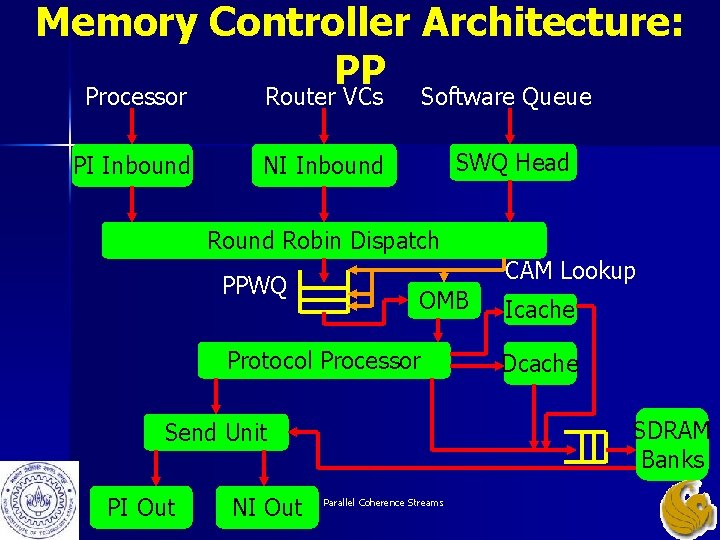

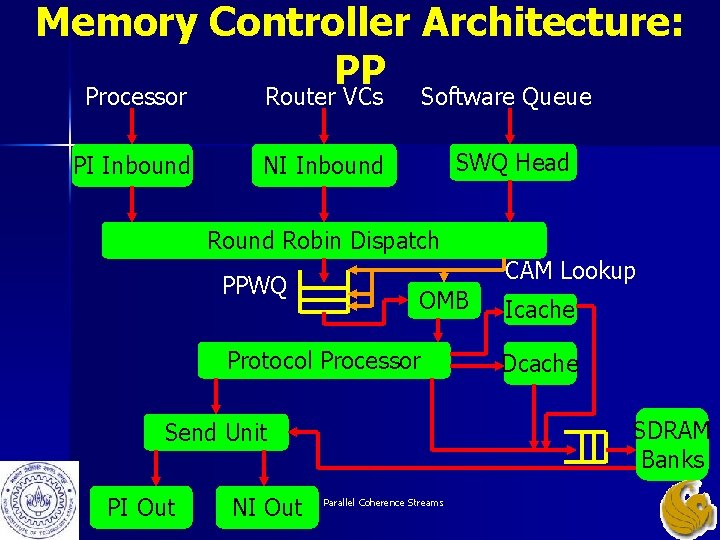

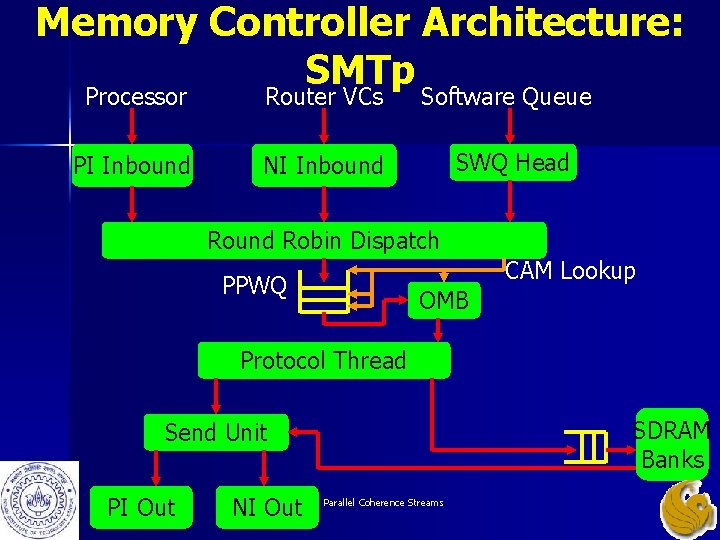

Memory Controller Architecture: PP
[Figure: block diagram of the memory controller with labels Processor, Router VCs, PI Inbound, NI Inbound, Software Queue (SWQ Head), Round-Robin Dispatch, CAM Lookup, PPWQ, OMB, Protocol Processor with Icache and Dcache, SDRAM Banks, Send Unit, PI Out, and NI Out.]

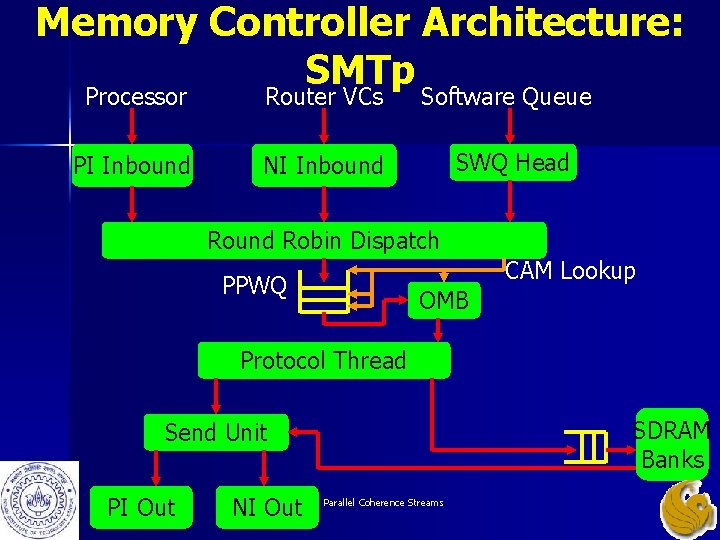

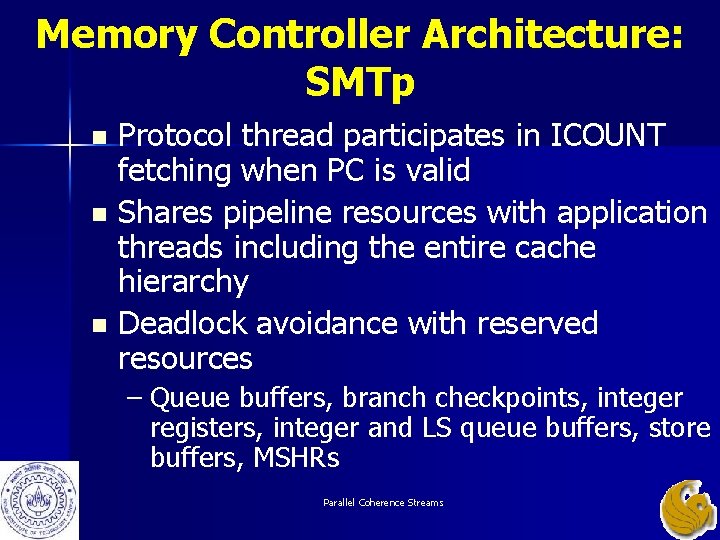

Memory Controller Architecture: SMTp
[Figure: the same block diagram with the protocol processor replaced by a protocol thread. Labels: Processor, Router VCs, PI Inbound, NI Inbound, Software Queue (SWQ Head), Round-Robin Dispatch, CAM Lookup, PPWQ, OMB, Protocol Thread, SDRAM Banks, Send Unit, PI Out, and NI Out.]

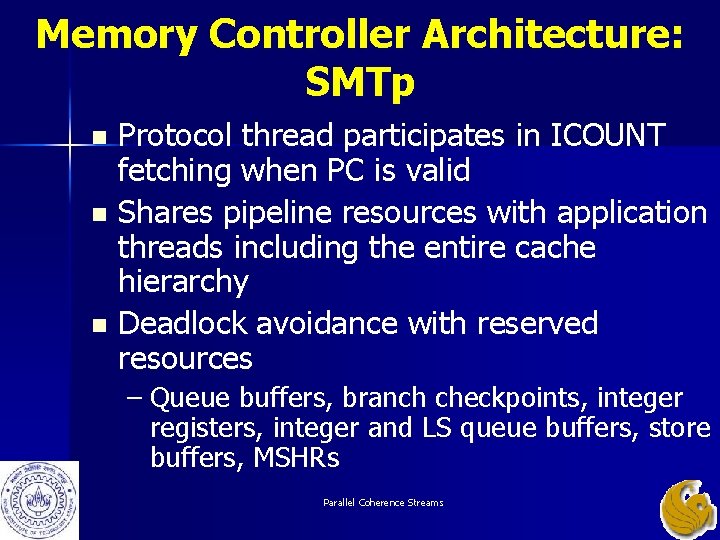

Memory Controller Architecture: SMTp
- The protocol thread participates in ICOUNT fetching when its PC is valid
- Shares pipeline resources with the application threads, including the entire cache hierarchy
- Deadlock avoidance with reserved resources
  - Queue buffers, branch checkpoints, integer registers, integer and load/store queue buffers, store buffers, MSHRs
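ICOUNT is the SMT fetch policy that gives priority to the thread with the fewest instructions in the front end (decode, rename, and the instruction queues). The sketch below shows how a protocol thread can take part only while its PC is valid. It assumes that application threads are always fetch-eligible and that ties go to the lower thread id; both are simplifications, and `HwThread`/`icount_pick` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HwThread:
    tid: int
    is_protocol: bool     # True for a protocol thread (PT), False for an AT
    pc_valid: bool        # PT only: a handler has been dispatched to it
    front_end_insts: int  # instructions in decode/rename/instruction queues


def icount_pick(threads: List[HwThread]) -> Optional[int]:
    """Return the tid that fetches this cycle, or None if no thread can."""
    # A protocol thread competes only while its PC is valid; application
    # threads are assumed always fetch-eligible in this sketch.
    eligible = [t for t in threads if not t.is_protocol or t.pc_valid]
    if not eligible:
        return None
    # ICOUNT: favour the thread with the fewest front-end instructions;
    # ties go to the lower tid (an arbitrary choice here).
    return min(eligible, key=lambda t: (t.front_end_insts, t.tid)).tid


if __name__ == "__main__":
    ts = [HwThread(0, False, True, 12), HwThread(1, False, True, 7),
          HwThread(4, True, False, 0)]
    print(icount_pick(ts))   # the idle protocol thread is skipped
    ts[2].pc_valid = True
    print(icount_pick(ts))   # with a handler pending it wins the fetch slot
```

A protocol thread with a pending handler usually has few instructions in flight, so ICOUNT tends to favour it naturally without a dedicated priority rule.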

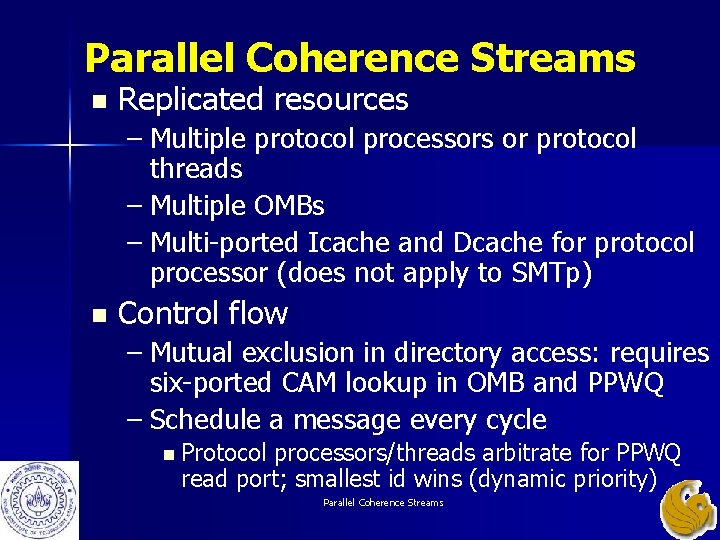

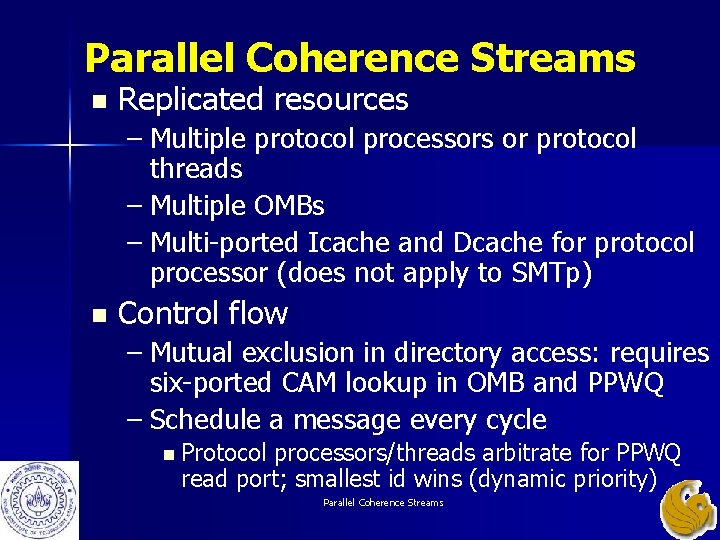

Parallel Coherence Streams
- Replicated resources
  - Multiple protocol processors or protocol threads
  - Multiple OMBs
  - Multi-ported Icache and Dcache for the protocol processor (does not apply to SMTp)
- Control flow
  - Mutual exclusion in directory access: requires a six-ported CAM lookup in the OMB and PPWQ
  - Schedule a message every cycle
    - Protocol processors/threads arbitrate for the PPWQ read port; the smallest id wins (dynamic priority)
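The control flow above can be sketched as a per-cycle dispatcher under simplifying assumptions. In this sketch, `busy_lines` stands in for the CAM lookup in the OMB and PPWQ, and the inbound queue heads are visited round-robin. The lowest-numbered idle engine receives the message, mirroring "smallest id wins". The function and parameter names are hypothetical.

```python
from typing import List, Optional, Set, Tuple


def dispatch_one(heads: List[Optional[int]], start: int,
                 busy_lines: Set[int], free_engines: List[int]
                 ) -> Tuple[Optional[Tuple[int, int, int]], int]:
    """Schedule at most one message this cycle.

    heads: line address at the head of each inbound queue (None if empty).
    start: round-robin pointer into `heads`.
    busy_lines: lines currently being handled (stand-in for the OMB/PPWQ CAM).
    free_engines: ids of idle protocol processors/threads.
    Returns ((queue, line, engine) or None, next round-robin pointer).
    """
    if not free_engines:
        return None, start
    n = len(heads)
    for i in range(n):
        q = (start + i) % n
        line = heads[q]
        if line is None or line in busy_lines:  # CAM hit: must wait
            continue
        engine = min(free_engines)              # smallest id wins
        free_engines.remove(engine)
        busy_lines.add(line)                    # enforce mutual exclusion
        return (q, line, engine), (q + 1) % n
    return None, start


if __name__ == "__main__":
    heads, busy, free = [0x100, 0x200, None], {0x100}, [1, 0]
    print(dispatch_one(heads, 0, busy, free))
    print(dispatch_one(heads, 2, busy, free))   # every head now conflicts
```

The second call returns no message because both non-empty heads hit in the busy set. This is the kind of idle schedule cycle that motivates the out-of-order issue described next.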

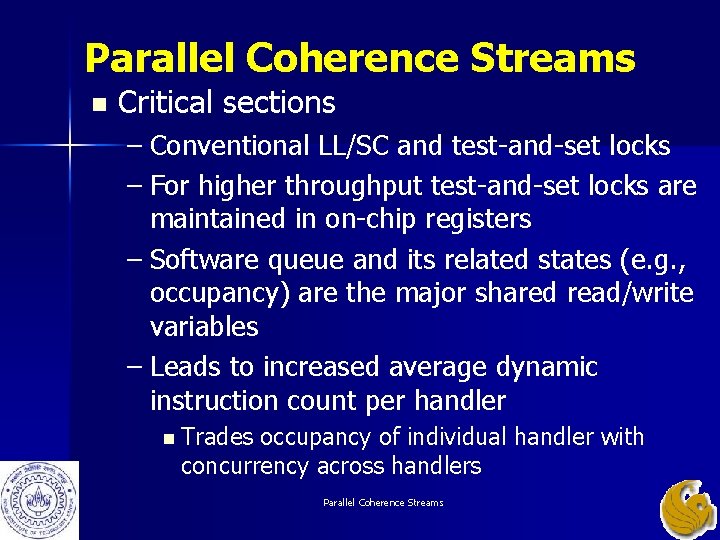

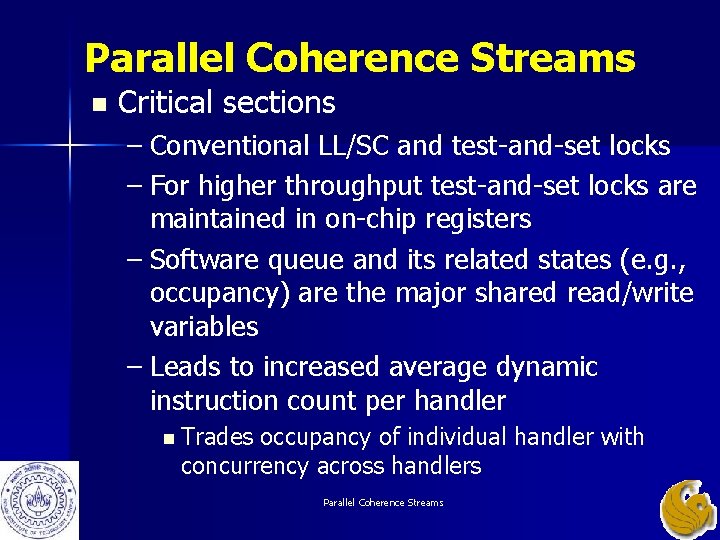

Parallel Coherence Streams
- Critical sections
  - Conventional LL/SC and test-and-set locks
  - For higher throughput, test-and-set locks are maintained in on-chip registers
  - The software queue and its related state (e.g., occupancy) are the major shared read/write variables
  - Leads to an increased average dynamic instruction count per handler
    - Trades the occupancy of an individual handler for concurrency across handlers

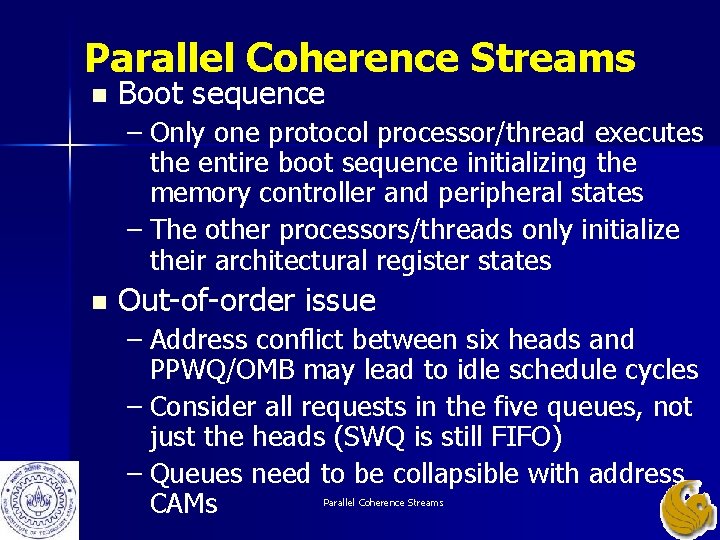

Parallel Coherence Streams
- Boot sequence
  - Only one protocol processor/thread executes the entire boot sequence, initializing the memory controller and peripheral state
  - The other processors/threads only initialize their architectural register state
- Out-of-order issue
  - Address conflicts between the six queue heads and the PPWQ/OMB may lead to idle schedule cycles
  - Consider all requests in the five queues, not just the heads (the SWQ is still FIFO)
  - Queues need to be collapsible, with address CAMs
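The sketch below shows one way out-of-order issue could look past the queue heads. It assumes the five hardware queues are scanned in index order, oldest entry first, before the software queue head, and that `busy_lines` models the PPWQ/OMB address CAMs. The scan order and all names, including `SWQ_INDEX`, are hypothetical. Removing an entry from the middle of a list plays the role of a collapsible queue.

```python
from typing import List, Optional, Set, Tuple

SWQ_INDEX = 5  # hypothetical index used to report a pick from the software queue


def pick_ooo(queues: List[List[int]], swq: List[int],
             busy_lines: Set[int]) -> Optional[Tuple[int, int]]:
    """Pick one non-conflicting request, looking past the queue heads.

    queues: the five hardware inbound queues, oldest entry first.
    swq: the software queue, which stays strictly FIFO (head only).
    busy_lines: line addresses in flight (stand-in for the PPWQ/OMB CAMs).
    """
    for qi, q in enumerate(queues):
        for pos, line in enumerate(q):
            if line not in busy_lines:
                q.pop(pos)             # collapsible queue: remove from the middle
                busy_lines.add(line)
                return qi, line
    if swq and swq[0] not in busy_lines:
        line = swq.pop(0)
        busy_lines.add(line)
        return SWQ_INDEX, line
    return None


if __name__ == "__main__":
    queues = [[0x100, 0x300], [0x100], [], [], []]
    swq, busy = [0x400], {0x100}
    print(pick_ooo(queues, swq, busy))  # bypasses the blocked 0x100 head
```

Entries to the same line are still issued in queue order: if an older entry is blocked because its line is busy, every younger entry for that line is blocked too.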

Sketch
- Background
- Memory controller architecture
- Analytical model (current section)
- Evaluation framework
- Simulation results
  - Validation of model for directory protocols
  - Directory-less broadcast protocols
  - Multiprogramming
- Summary

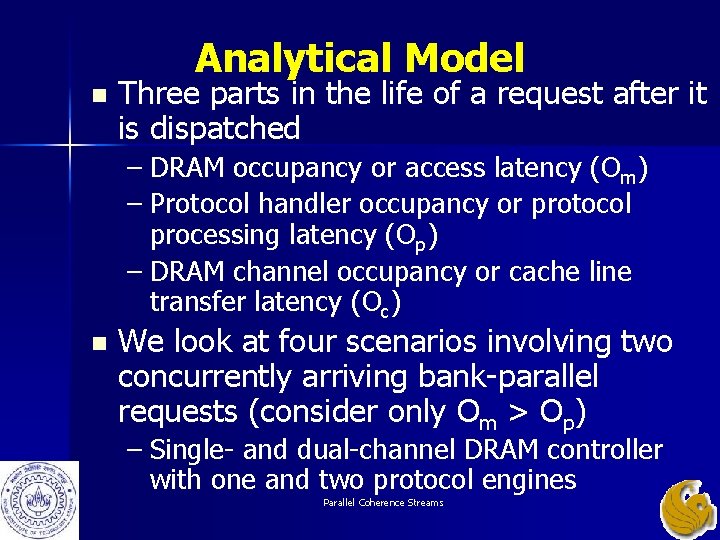

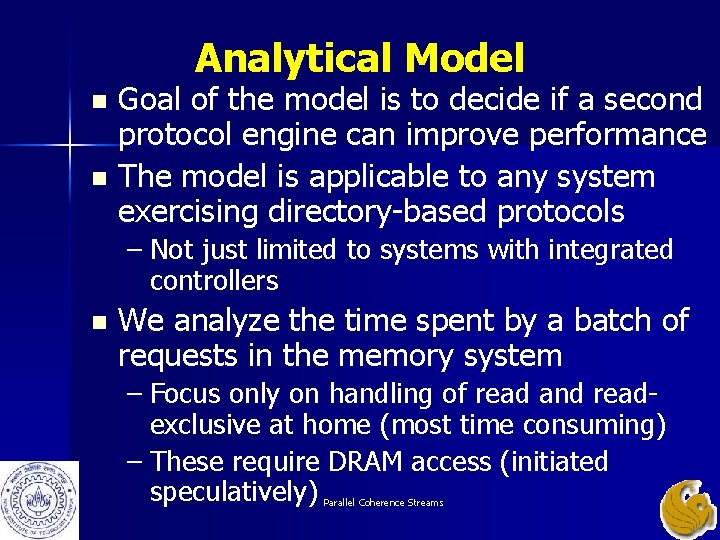

Analytical Model
- The goal of the model is to decide whether a second protocol engine can improve performance
- The model is applicable to any system exercising directory-based protocols
  - Not just limited to systems with integrated controllers
- We analyze the time spent by a batch of requests in the memory system
  - Focus only on the handling of read and read-exclusive requests at the home (the most time-consuming)
  - These require a DRAM access (initiated speculatively)

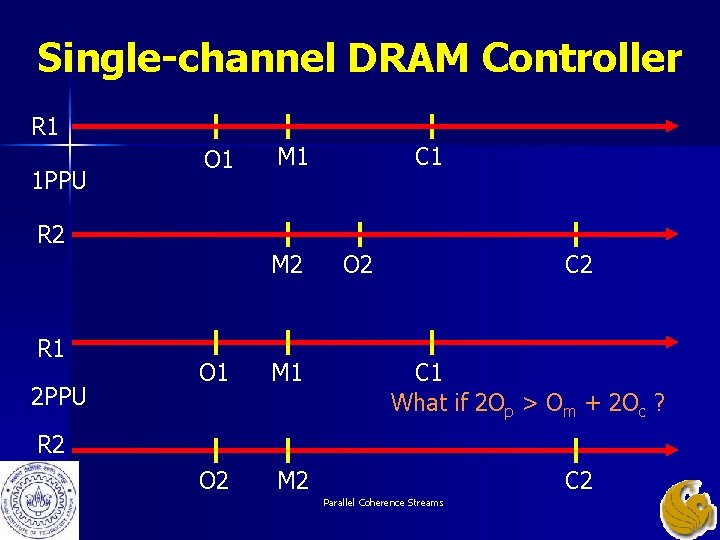

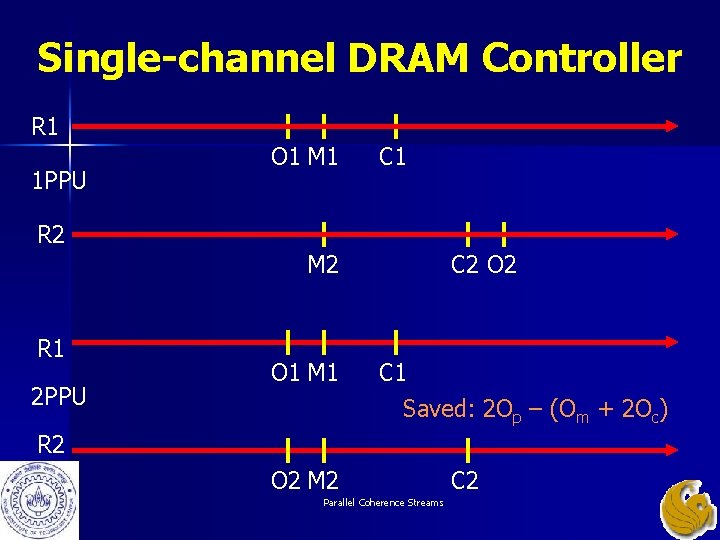

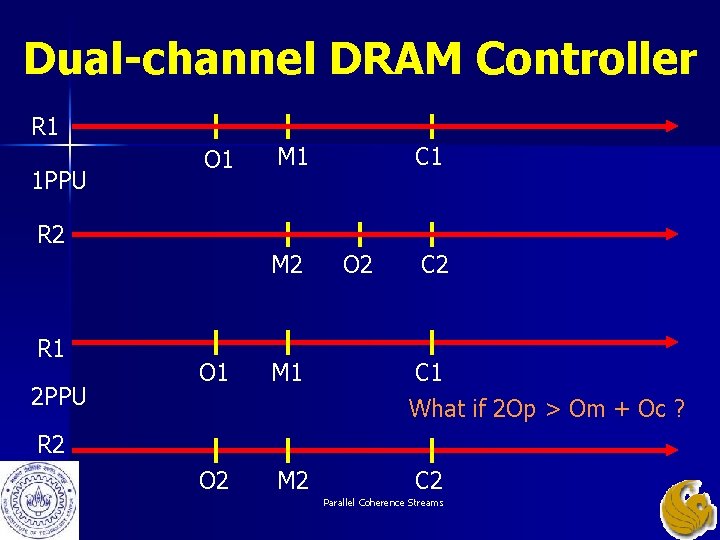

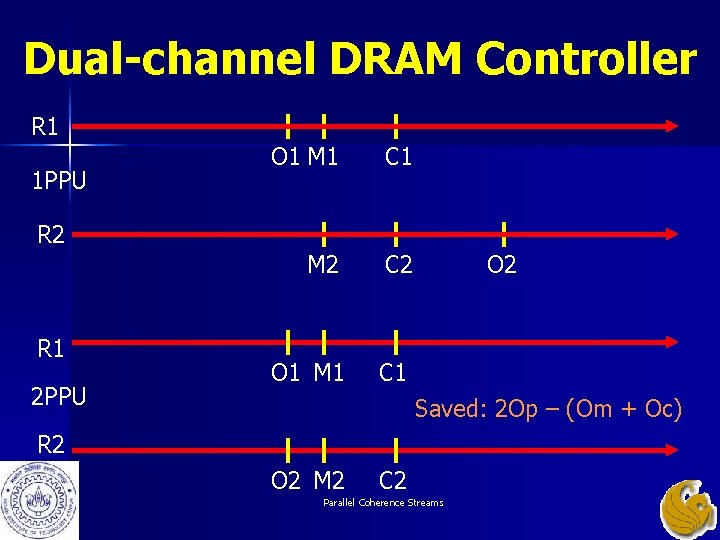

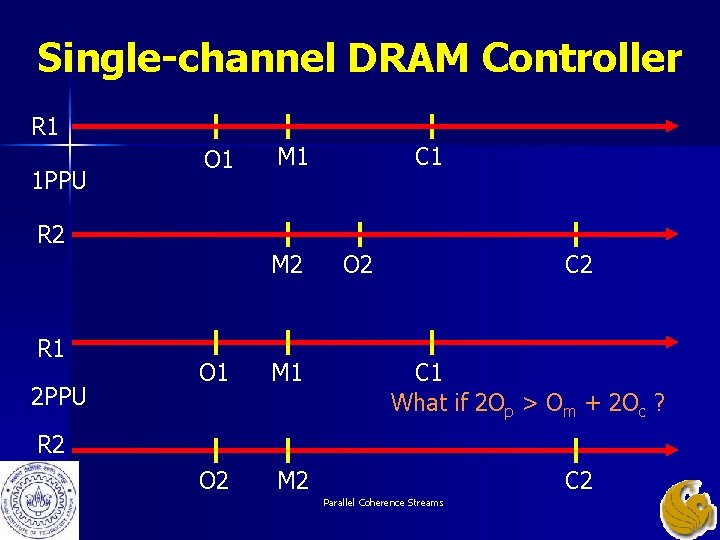

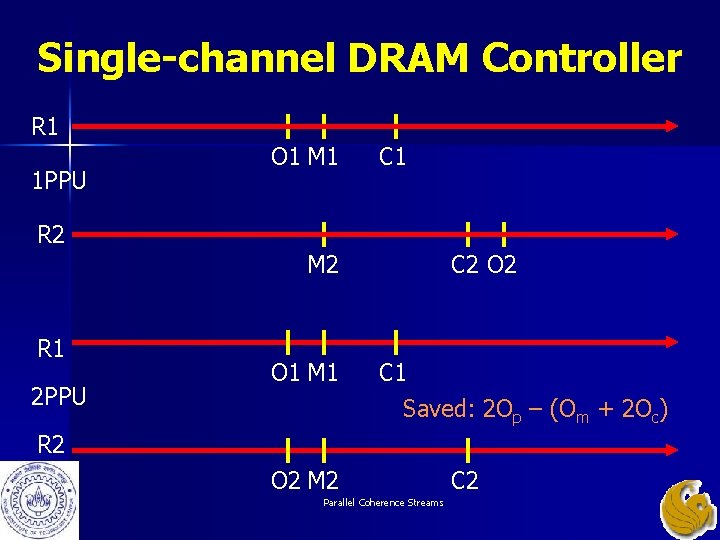

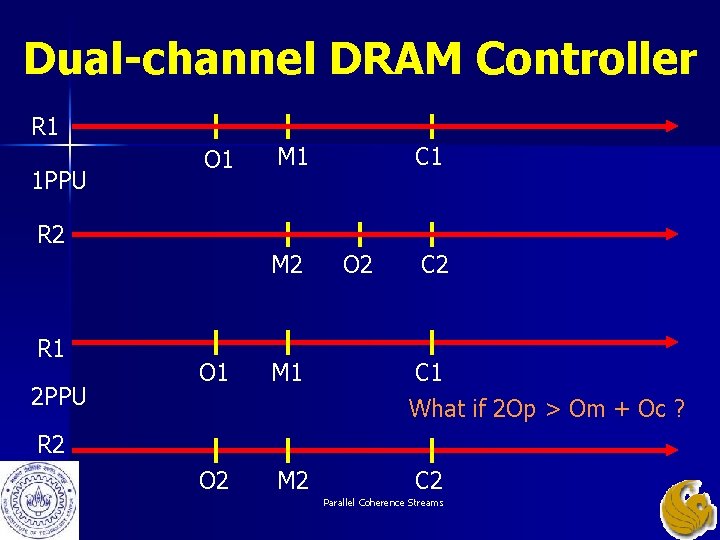

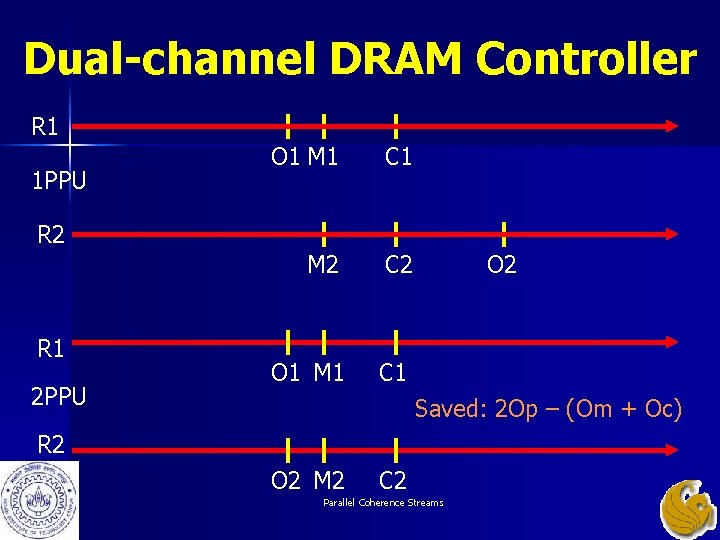

Analytical Model
- Three parts in the life of a request after it is dispatched
  - DRAM occupancy or access latency ($O_m$)
  - Protocol handler occupancy or protocol processing latency ($O_p$)
  - DRAM channel occupancy or cache line transfer latency ($O_c$)
- We look at four scenarios involving two concurrently arriving bank-parallel requests (considering only $O_m > O_p$)
  - Single- and dual-channel DRAM controllers with one and two protocol engines

Single-channel DRAM Controller
[Timeline figure: requests R1 and R2 with protocol occupancies O1, O2, DRAM accesses M1, M2, and channel transfers C1, C2, handled by 1 PPU and by 2 PPUs.]
What if $2O_p > O_m + 2O_c$?

Single-channel DRAM Controller
[Timeline figure: the same two requests with 1 PPU and with 2 PPUs, for the case $2O_p > O_m + 2O_c$.]
Saved: $2O_p - (O_m + 2O_c)$

Dual-channel DRAM Controller
[Timeline figure: requests R1 and R2 with protocol occupancies O1, O2, DRAM accesses M1, M2, and channel transfers C1, C2 on separate channels, handled by 1 PPU and by 2 PPUs.]
What if $2O_p > O_m + O_c$?

Dual-channel DRAM Controller
[Timeline figure: the same two requests with 1 PPU and with 2 PPUs, for the case $2O_p > O_m + O_c$.]
Saved: $2O_p - (O_m + O_c)$

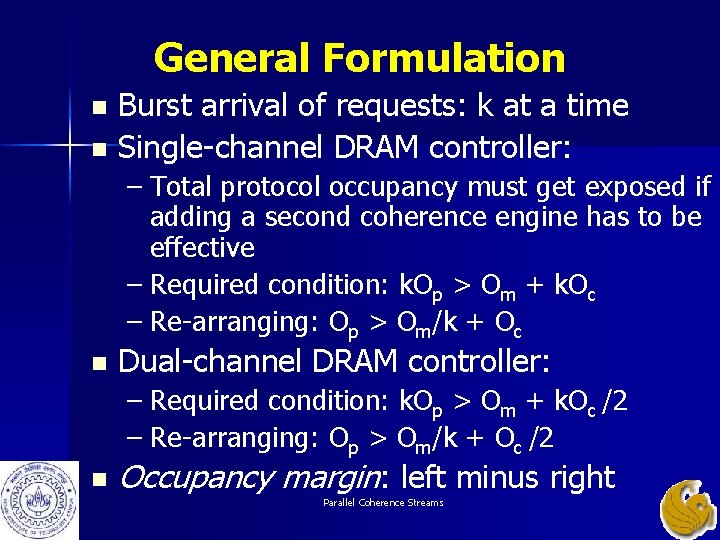

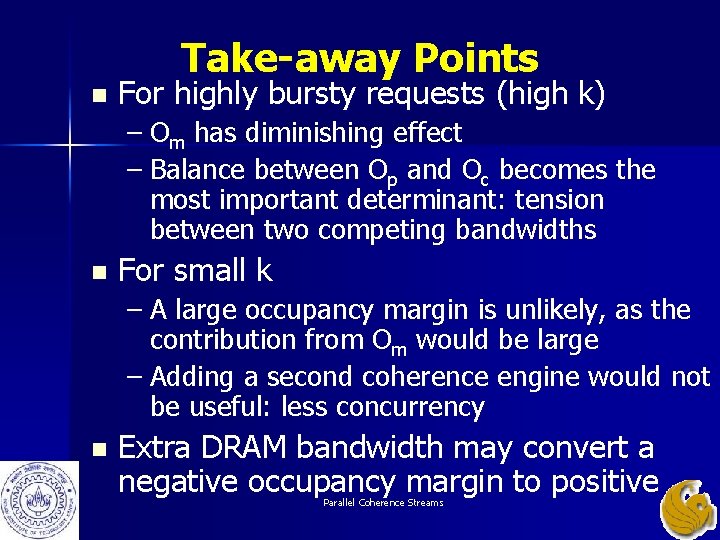

General Formulation
- Burst arrival of requests: $k$ at a time
- Single-channel DRAM controller:
  - The total protocol occupancy must be exposed if adding a second coherence engine is to be effective
  - Required condition: $k\,O_p > O_m + k\,O_c$
  - Rearranging: $O_p > O_m/k + O_c$
- Dual-channel DRAM controller:
  - Required condition: $k\,O_p > O_m + k\,O_c/2$
  - Rearranging: $O_p > O_m/k + O_c/2$
- Occupancy margin (OM): left-hand side minus right-hand side, i.e., $\mathrm{OM} = O_p - (O_m/k + O_c/w)$ for a $w$-channel DRAM controller
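The occupancy margin translates directly into a small helper. This sketch generalizes the single- and dual-channel cases to $w$ channels, as the row-buffer slide later does. The helper names are hypothetical; a positive result only says that a second engine *may* help under the model's assumptions.

```python
def occupancy_margin(op: float, om: float, oc: float, k: int, w: int = 1) -> float:
    """Occupancy margin OM = Op - (Om/k + Oc/w).

    op: protocol handler occupancy, om: DRAM access latency,
    oc: cache-line transfer time on one channel (all in ns),
    k: burst size, w: number of DRAM channels (1 or 2 on the slide).
    """
    if k < 1 or w < 1:
        raise ValueError("k and w must be positive")
    return op - (om / k + oc / w)


def second_engine_may_help(op: float, om: float, oc: float, k: int, w: int = 1) -> bool:
    """Equivalent to k*Op > Om + k*Oc/w, the required condition above."""
    return occupancy_margin(op, om, oc, k, w) > 0


if __name__ == "__main__":
    # Ocean at 400 MHz (Op, Om, kmax taken from the validation table), Oc = 20 ns.
    print(round(occupancy_margin(36.6, 54.4, 20.0, 7), 2))
    print(second_engine_may_help(36.6, 54.4, 20.0, 7))
```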

Take-away Points
- For highly bursty requests (high $k$)
  - $O_m$ has a diminishing effect
  - The balance between $O_p$ and $O_c$ becomes the most important determinant: a tension between two competing bandwidths
- For small $k$
  - A large occupancy margin is unlikely, as the contribution from $O_m$ would be large
  - Adding a second coherence engine would not be useful: there is less concurrency
- Extra DRAM bandwidth may convert a negative occupancy margin to a positive one
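The three take-aways can be seen by sweeping the burst size. The latencies below ($O_p$ = 25 ns, $O_m$ = 50 ns, $O_c$ = 20 ns) are hypothetical and chosen only for illustration. As $k$ grows, the margin approaches $O_p - O_c/w$. For these numbers a second channel ($w = 2$) flips the $k = 4$ margin from negative to positive.

```python
def occupancy_margin(op, om, oc, k, w=1):
    """OM = Op - (Om/k + Oc/w) for a burst of k bank-parallel requests."""
    return op - (om / k + oc / w)


def margin_table(op, om, oc, ks=(1, 2, 4, 8, 16, 64)):
    """Margins for single- (w=1) and dual-channel (w=2) DRAM per burst size k."""
    return [(k, occupancy_margin(op, om, oc, k, 1),
             occupancy_margin(op, om, oc, k, 2)) for k in ks]


if __name__ == "__main__":
    # Hypothetical latencies in ns, not measurements from this study.
    for k, m1, m2 in margin_table(op=25.0, om=50.0, oc=20.0):
        print(f"k={k:3d}  OM(w=1)={m1:7.2f}  OM(w=2)={m2:7.2f}")
```

With one channel, the margin in this example stays negative until $k = 16$. With two channels, it turns positive at $k = 4$, illustrating how extra DRAM bandwidth can expose protocol occupancy.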

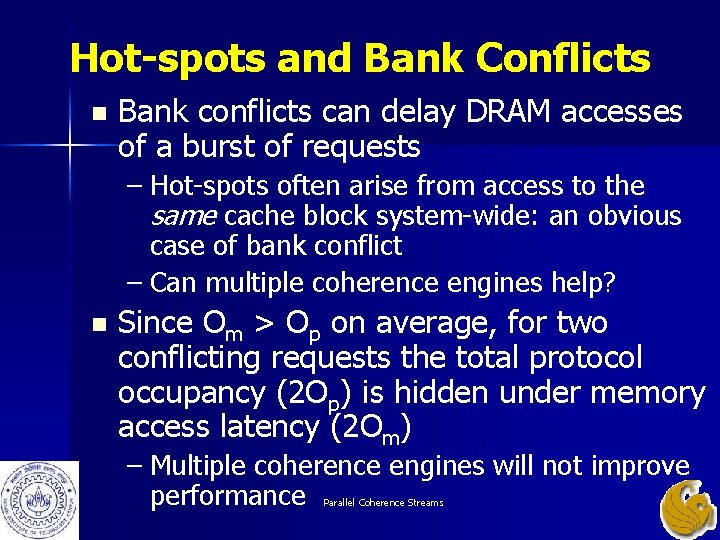

Hot-spots and Bank Conflicts
- Bank conflicts can delay the DRAM accesses of a burst of requests
  - Hot-spots often arise from accesses to the same cache block system-wide: an obvious case of bank conflict
  - Can multiple coherence engines help?
- Since $O_m > O_p$ on average, for two conflicting requests the total protocol occupancy ($2O_p$) is hidden under the serialized memory access latency ($2O_m$)
  - Multiple coherence engines will not improve performance

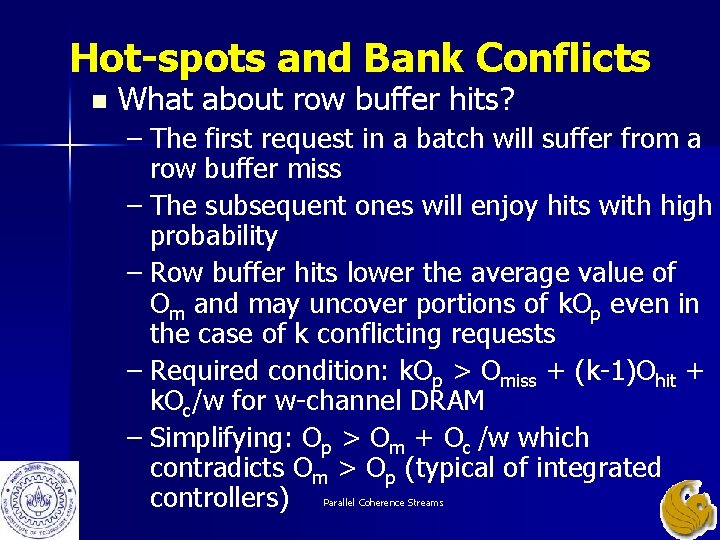

Hot-spots and Bank Conflicts
- What about row buffer hits?
  - The first request in a batch will suffer a row buffer miss
  - The subsequent ones will enjoy hits with high probability
  - Row buffer hits lower the average value of $O_m$ and may uncover portions of $k\,O_p$ even in the case of $k$ conflicting requests
  - Required condition for a $w$-channel DRAM: $k\,O_p > O_{miss} + (k-1)\,O_{hit} + k\,O_c/w$
  - Simplifying, with $O_m = (O_{miss} + (k-1)\,O_{hit})/k$ as the average DRAM latency: $O_p > O_m + O_c/w$, which contradicts $O_m > O_p$ (typical of integrated controllers)
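A quick numeric check of the row-buffer argument follows. The latencies are hypothetical: row miss 60 ns, row hit 35 ns, line transfer 20 ns, and a handler occupancy of 30 ns, i.e. below even the row-hit latency, as is typical when $O_m > O_p$. Under these numbers no burst of conflicting requests satisfies the condition, for either one or two channels.

```python
def conflicting_burst_helps(op, o_miss, o_hit, oc, k, w=1):
    """k*Op > Omiss + (k-1)*Ohit + k*Oc/w for k bank-conflicting requests."""
    return k * op > o_miss + (k - 1) * o_hit + k * oc / w


def average_om(o_miss, o_hit, k):
    """Average DRAM latency per request when only the first access misses."""
    return (o_miss + (k - 1) * o_hit) / k


if __name__ == "__main__":
    # Hypothetical latencies (ns): Op 30, row miss 60, row hit 35, transfer 20.
    for k in (2, 4, 8, 16):
        print(k, round(average_om(60.0, 35.0, k), 2),
              conflicting_burst_helps(30.0, 60.0, 35.0, 20.0, k))
```

Dividing both sides of the condition by $k$ gives exactly $O_p > O_m + O_c/w$ with the averaged $O_m$. Because the average never drops below $O_{hit}$, a handler faster than a row hit cannot be exposed.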

Sketch
- Background
- Memory controller architecture
- Analytical model
- Evaluation framework (current section)
- Simulation results
  - Validation of model for directory protocols
  - Directory-less broadcast protocols
  - Multiprogramming
- Summary

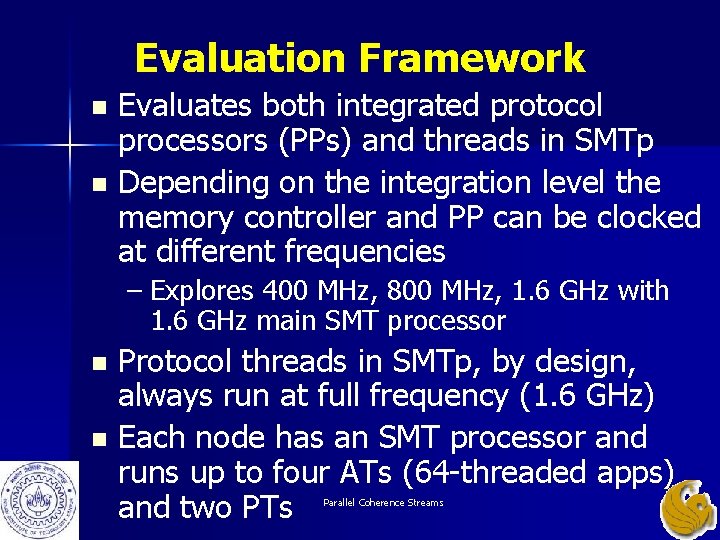

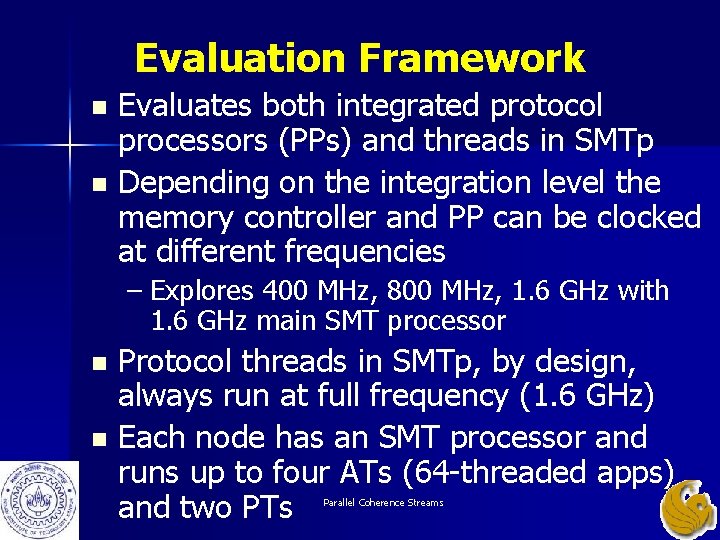

Evaluation Framework
- Evaluates both integrated protocol processors (PPs) and protocol threads (PTs) in SMTp
- Depending on the integration level, the memory controller and PP can be clocked at different frequencies
  - Explores 400 MHz, 800 MHz, and 1.6 GHz with a 1.6 GHz main SMT processor
- Protocol threads in SMTp, by design, always run at full frequency (1.6 GHz)
- Each node has an SMT processor and runs up to four ATs (64-threaded applications) and two PTs

Flashback: Flexible Processing
[Figure: the PP and SMTp node organizations (out-of-order SMT core with IL1/DL1, in-order PP with IL1/DL1 or protocol threads in the SMT core, L2 cache, MC, SDRAM banks, router), repeated from the Background section.]

Sketch
- Background
- Memory controller architecture
- Analytical model
- Evaluation framework
- Simulation results (current section)
  - Validation of model for directory protocols
  - Directory-less broadcast protocols
  - Multiprogramming
- Summary

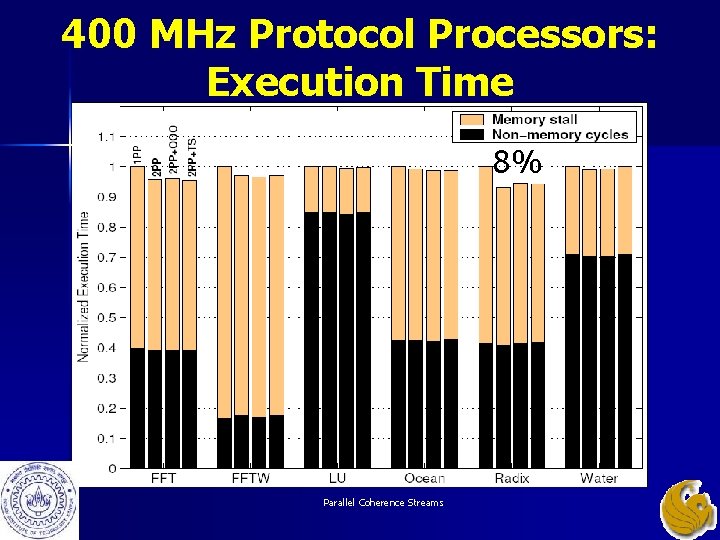

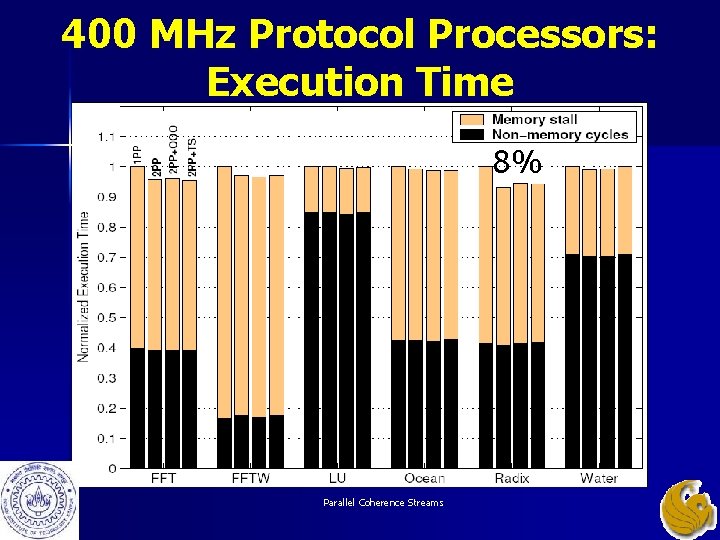

400 MHz Protocol Processors: Execution Time
[Chart; annotation: 8%]

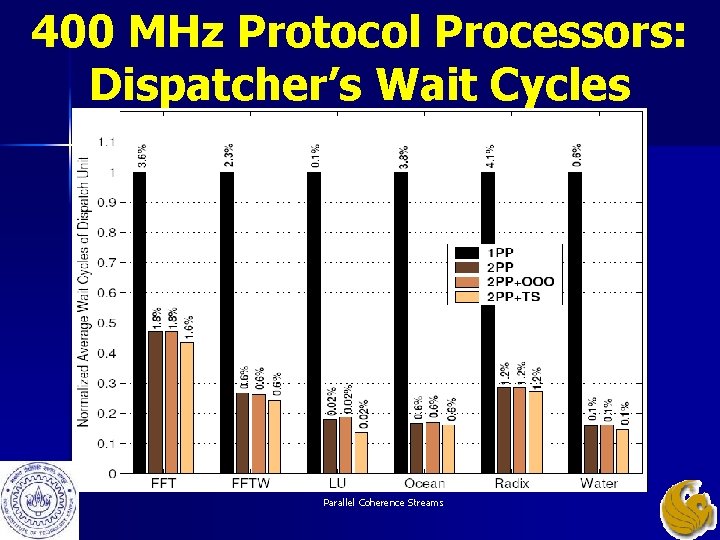

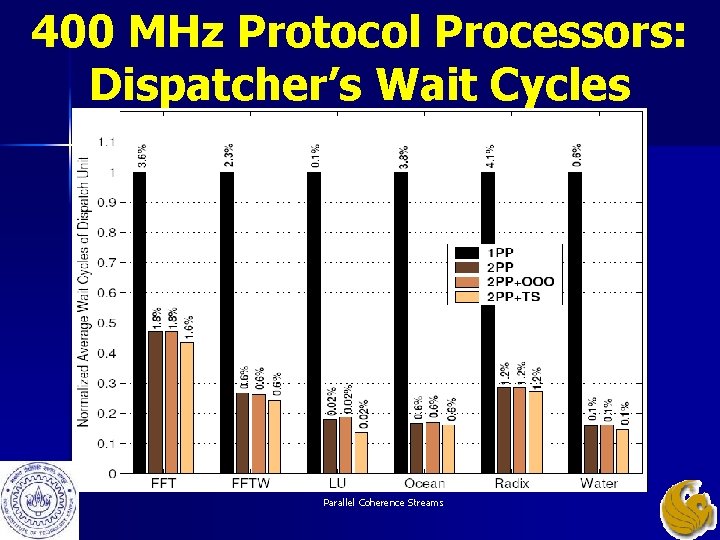

400 MHz Protocol Processors: Dispatcher’s Wait Cycles

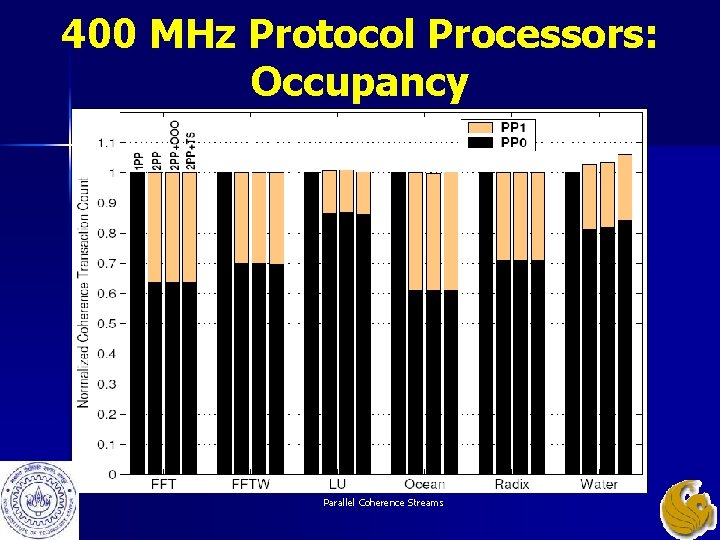

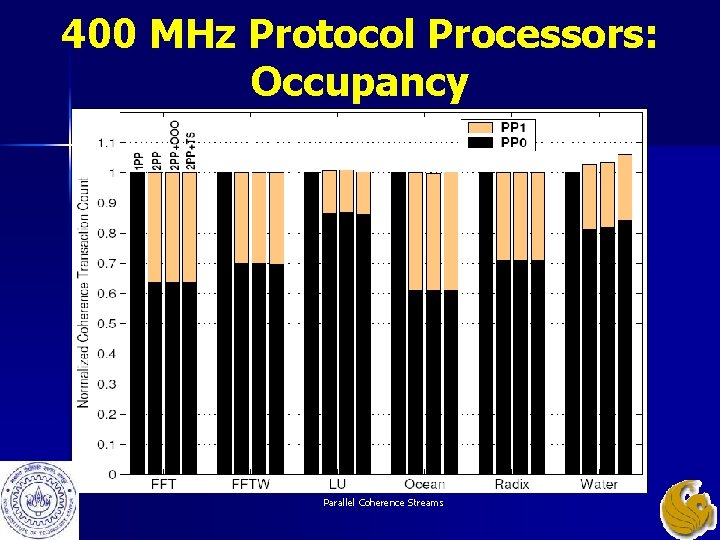

400 MHz Protocol Processors: Occupancy

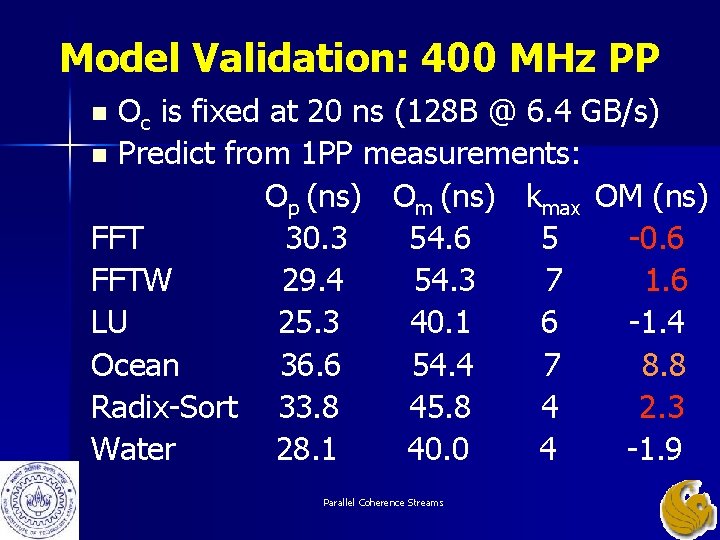

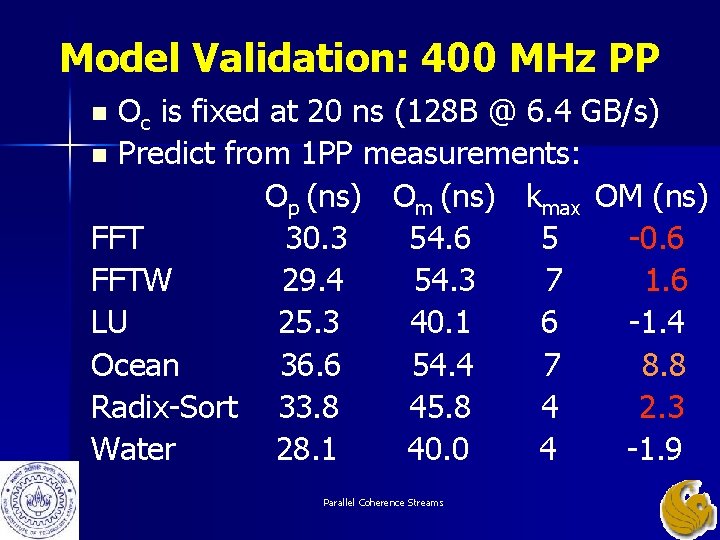

Model Validation: 400 MHz PP
- $O_c$ is fixed at 20 ns (128 B @ 6.4 GB/s)
- Predict from 1 PP measurements:

| Application | $O_p$ (ns) | $O_m$ (ns) | $k_{max}$ | OM (ns) |
|---|---|---|---|---|
| FFT | 30.3 | 54.6 | 5 | -0.6 |
| FFTW | 29.4 | 54.3 | 7 | 1.6 |
| LU | 25.3 | 40.1 | 6 | -1.4 |
| Ocean | 36.6 | 54.4 | 7 | 8.8 |
| Radix-Sort | 33.8 | 45.8 | 4 | 2.3 |
| Water | 28.1 | 40.0 | 4 | -1.9 |
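Recomputing the OM column from the measured $O_p$, $O_m$, and $k_{max}$ with the single-channel margin $O_p - (O_m/k_{max} + O_c)$ reproduces the values above to within rounding. The small sketch below performs that check, treating $k_{max}$ as the burst size $k$. The dictionary and function names are hypothetical; the numbers are copied from the table.

```python
OC_NS = 128 / 6.4  # 128-byte line over a 6.4 GB/s (= 6.4 B/ns) channel: 20 ns

# Single-PP measurements at 400 MHz and the OM column, copied from the table.
MEASURED = {
    # app:        (Op ns, Om ns, kmax, OM in table)
    "FFT":        (30.3, 54.6, 5, -0.6),
    "FFTW":       (29.4, 54.3, 7, 1.6),
    "LU":         (25.3, 40.1, 6, -1.4),
    "Ocean":      (36.6, 54.4, 7, 8.8),
    "Radix-Sort": (33.8, 45.8, 4, 2.3),
    "Water":      (28.1, 40.0, 4, -1.9),
}


def occupancy_margin(op, om, oc, k, w=1):
    """OM = Op - (Om/k + Oc/w); the table corresponds to w = 1."""
    return op - (om / k + oc / w)


def recompute():
    """Return {app: (recomputed OM, OM printed in the table)}."""
    return {app: (occupancy_margin(op, om, OC_NS, k), table_om)
            for app, (op, om, k, table_om) in MEASURED.items()}


if __name__ == "__main__":
    for app, (om_calc, om_table) in recompute().items():
        verdict = "may help" if om_calc > 0 else "no gain"
        print(f"{app:10s} OM={om_calc:6.2f} ns (table {om_table:5.1f})  {verdict}")
```

Under the model, only FFTW, Ocean, and Radix-Sort have a positive margin at 400 MHz, so these are the applications for which a second protocol processor is predicted to help.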

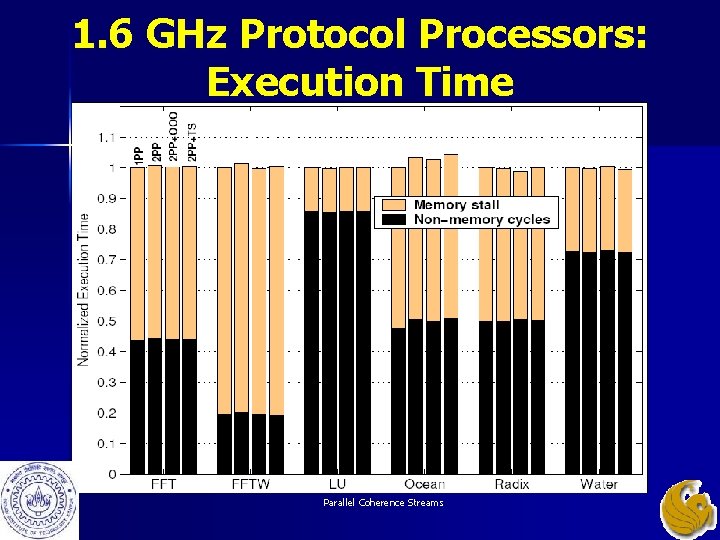

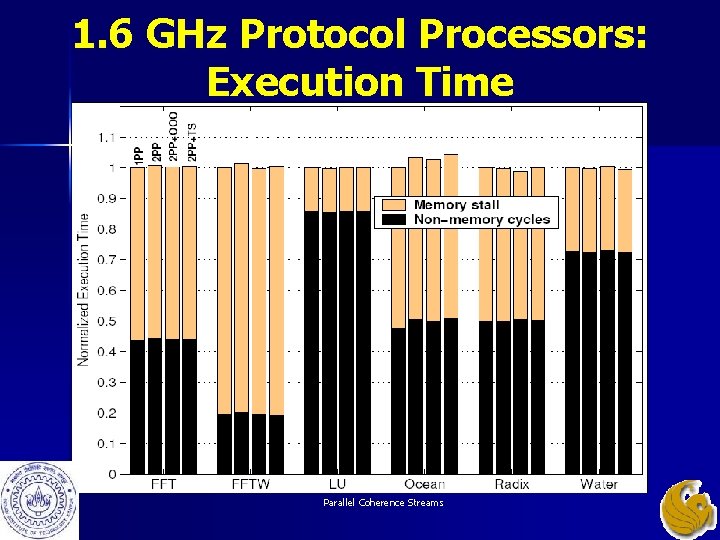

1.6 GHz Protocol Processors: Execution Time

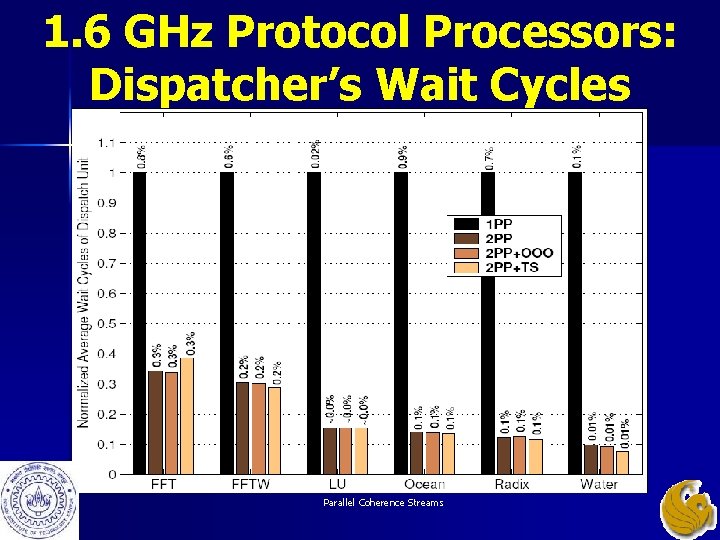

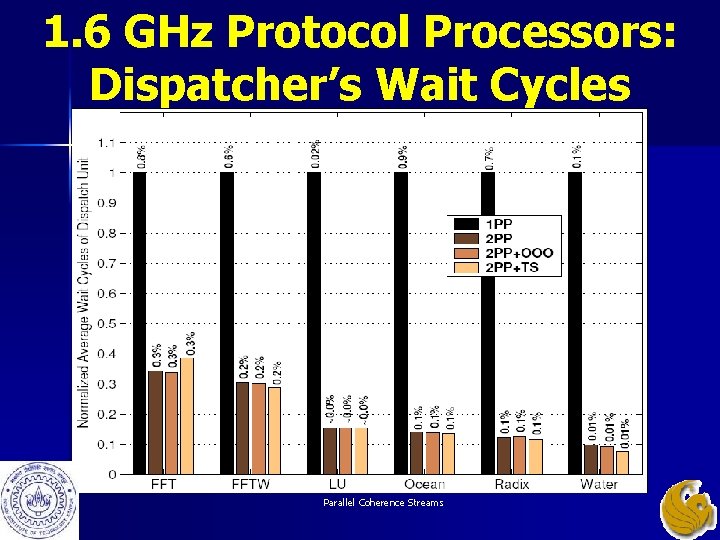

1.6 GHz Protocol Processors: Dispatcher’s Wait Cycles

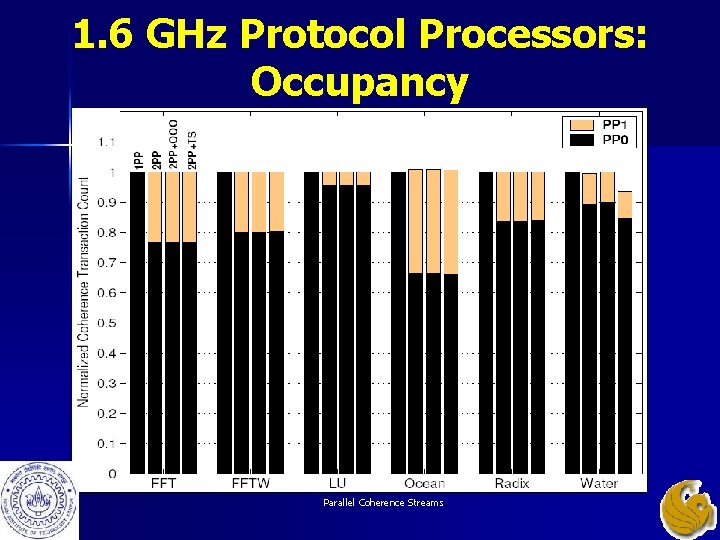

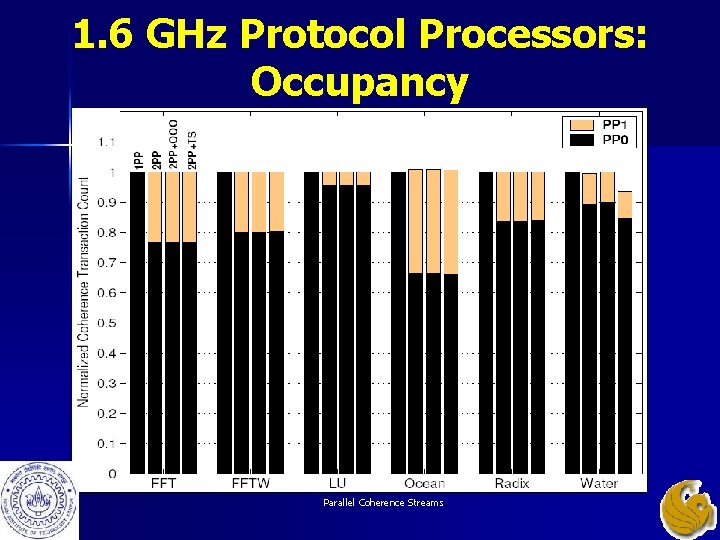

1.6 GHz Protocol Processors: Occupancy

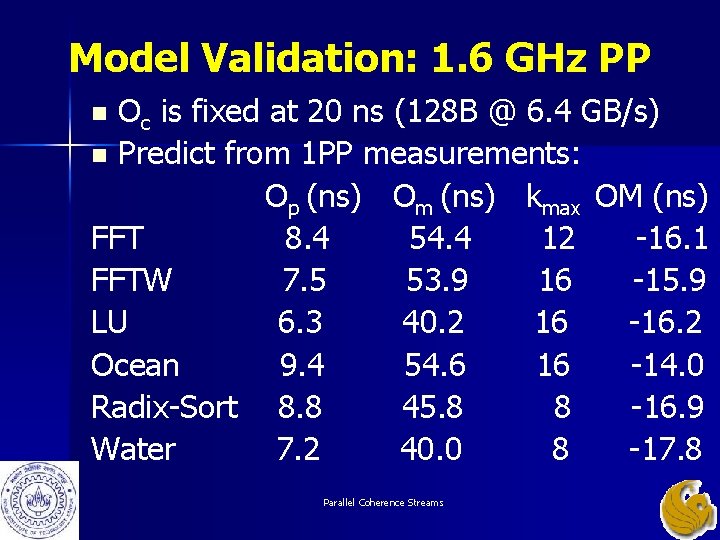

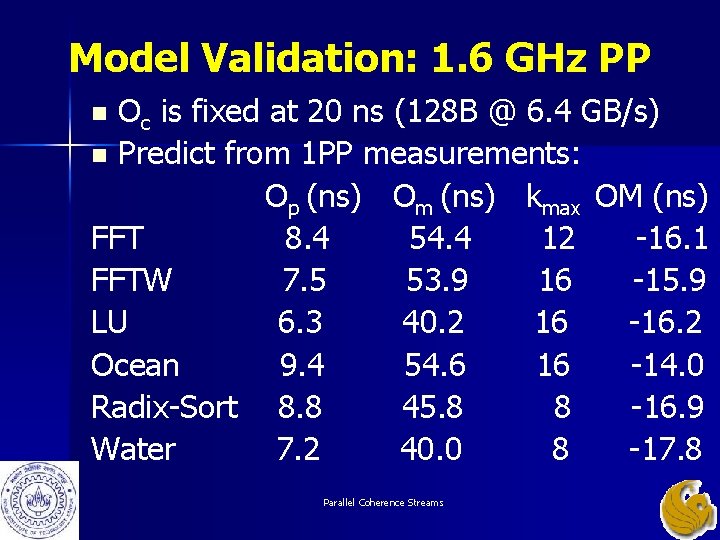

Model Validation: 1.6 GHz PP
- $O_c$ is fixed at 20 ns (128 B @ 6.4 GB/s)
- Predict from 1 PP measurements:

| Application | $O_p$ (ns) | $O_m$ (ns) | $k_{max}$ | OM (ns) |
|---|---|---|---|---|
| FFT | 8.4 | 54.4 | 12 | -16.1 |
| FFTW | 7.5 | 53.9 | 16 | -15.9 |
| LU | 6.3 | 40.2 | 16 | -16.2 |
| Ocean | 9.4 | 54.6 | 16 | -14.0 |
| Radix-Sort | 8.8 | 45.8 | 8 | -16.9 |
| Water | 7.2 | 40.0 | 8 | -17.8 |

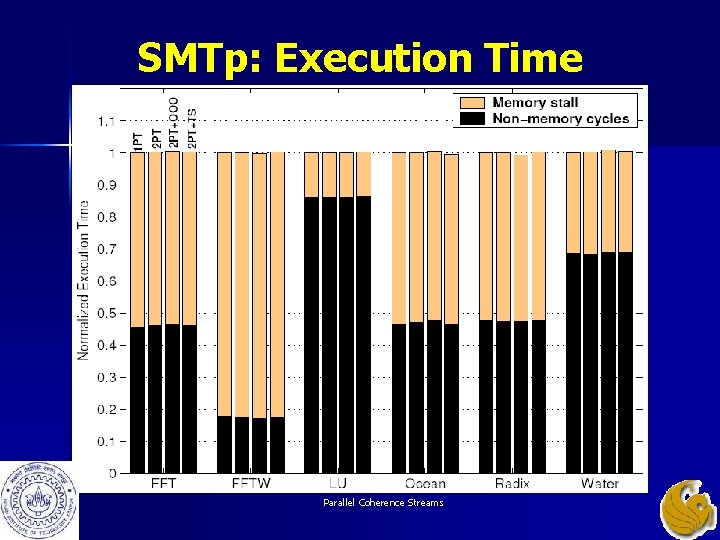

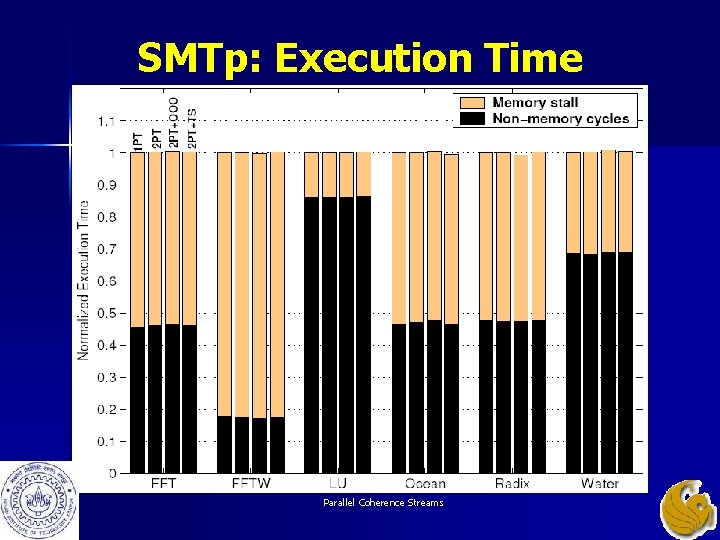

SMTp: Execution Time

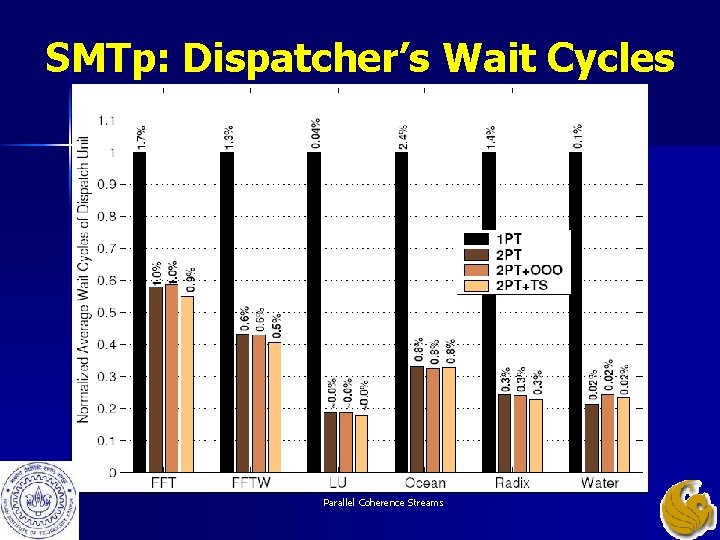

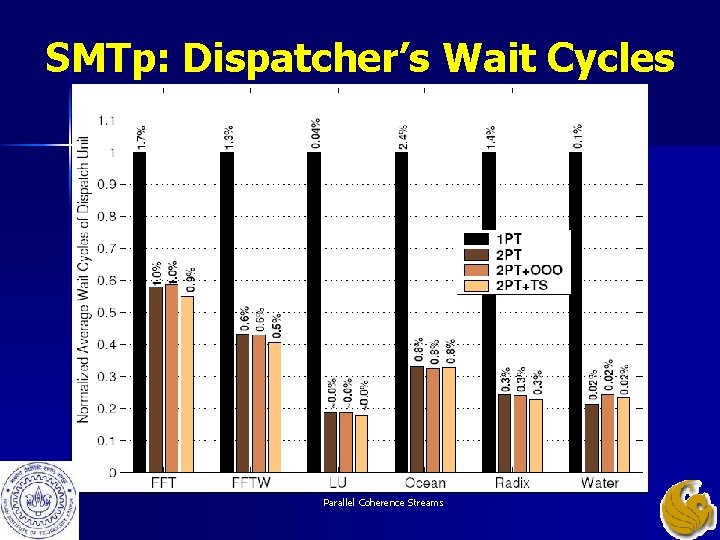

SMTp: Dispatcher’s Wait Cycles

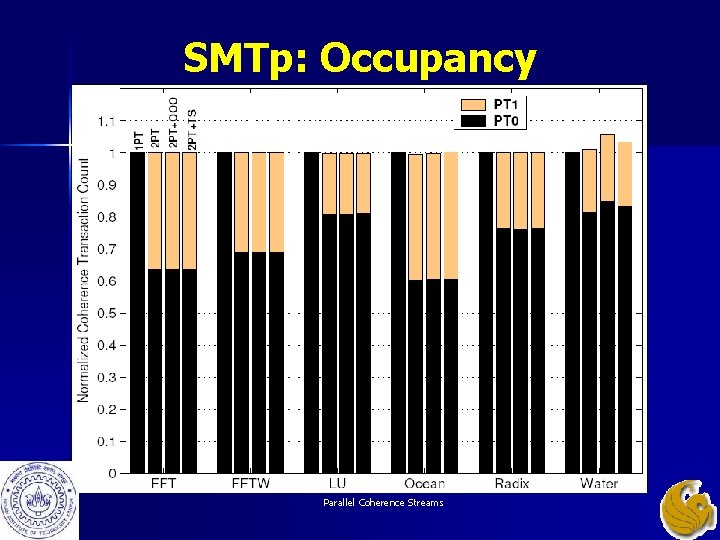

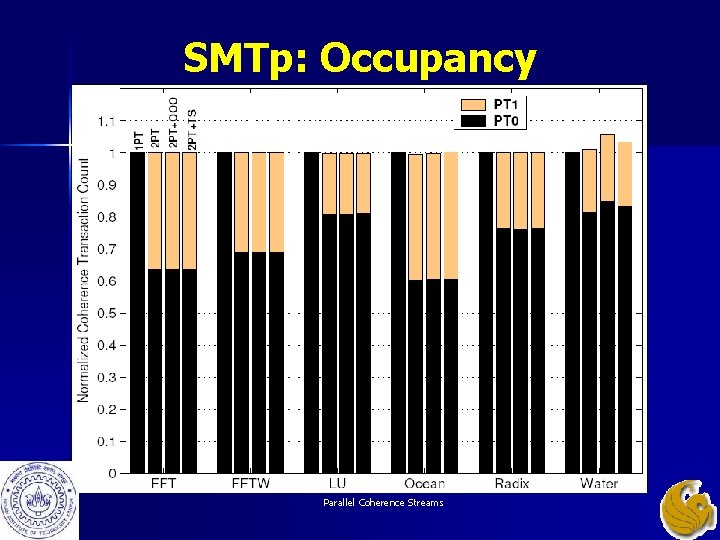

SMTp: Occupancy

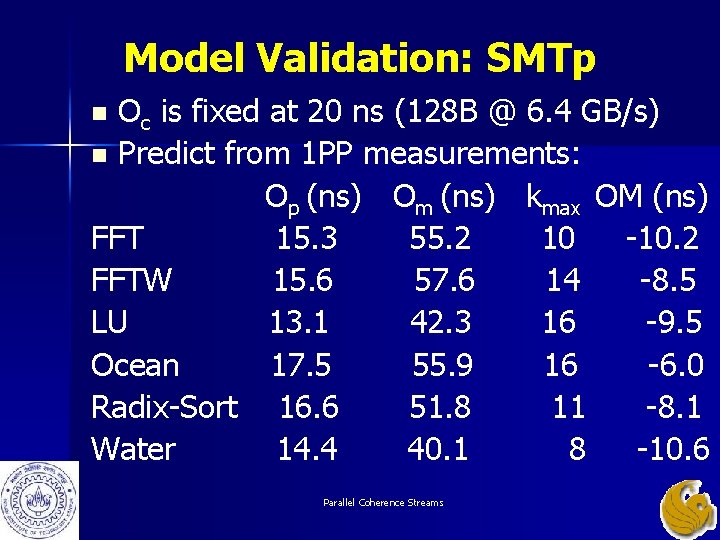

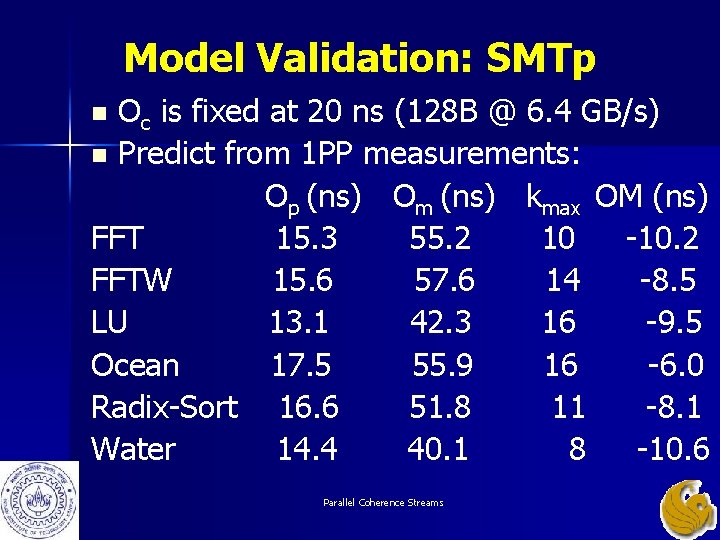

Model Validation: SMTp
- $O_c$ is fixed at 20 ns (128 B @ 6.4 GB/s)
- Predict from 1 PP measurements:

| Application | $O_p$ (ns) | $O_m$ (ns) | $k_{max}$ | OM (ns) |
|---|---|---|---|---|
| FFT | 15.3 | 55.2 | 10 | -10.2 |
| FFTW | 15.6 | 57.6 | 14 | -8.5 |
| LU | 13.1 | 42.3 | 16 | -9.5 |
| Ocean | 17.5 | 55.9 | 16 | -6.0 |
| Radix-Sort | 16.6 | 51.8 | 11 | -8.1 |
| Water | 14.4 | 40.1 | 8 | -10.6 |

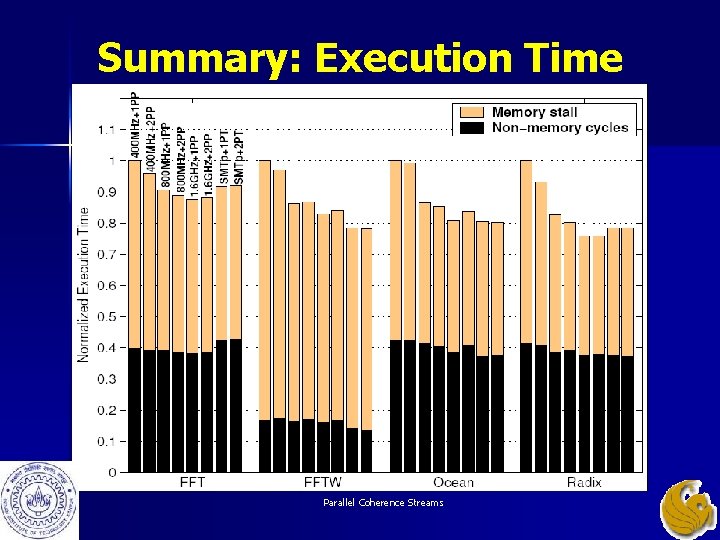

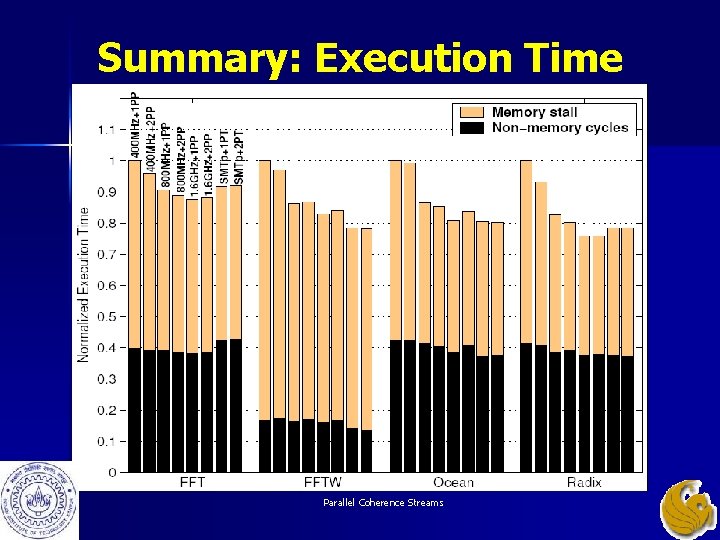

Summary: Execution Time

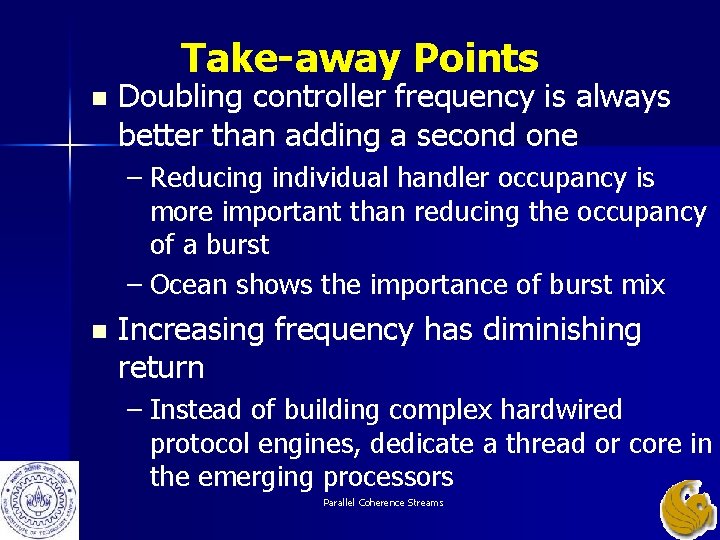

Take-away Points
- Doubling the controller frequency is always better than adding a second controller
  - Reducing the occupancy of an individual handler is more important than reducing the occupancy of a burst
  - Ocean shows the importance of the burst mix
- Increasing the frequency has diminishing returns
  - Instead of building complex hardwired protocol engines, dedicate a thread or core in the emerging processors

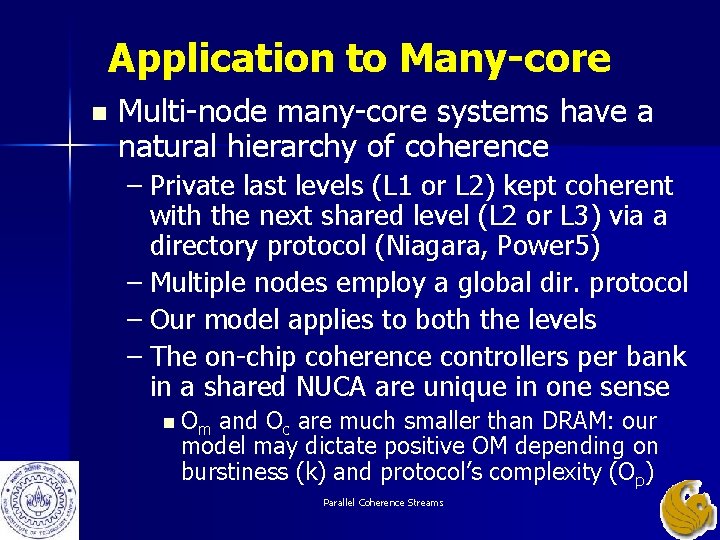

Application to Many-core
- Multi-node many-core systems have a natural hierarchy of coherence
  - Private last levels (L1 or L2) are kept coherent with the next shared level (L2 or L3) via a directory protocol (Niagara, Power5)
  - Multiple nodes employ a global directory protocol
  - Our model applies to both levels
  - The on-chip coherence controllers per bank in a shared NUCA cache are unique in one sense
    - $O_m$ and $O_c$ are much smaller than for DRAM: our model may predict a positive OM depending on burstiness ($k$) and the protocol's complexity ($O_p$)

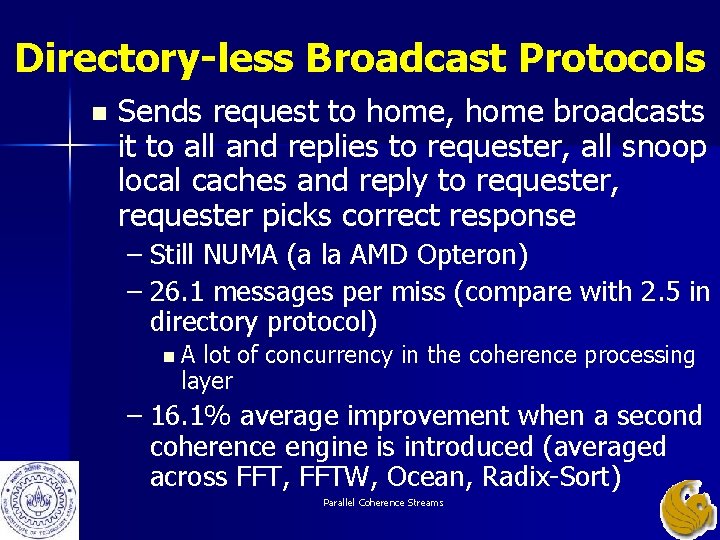

Directory-less Broadcast Protocols
- The requester sends the request to the home; the home broadcasts it to all nodes and replies to the requester; all nodes snoop their local caches and reply to the requester; the requester picks the correct response
  - Still NUMA (à la AMD Opteron)
  - 26.1 messages per miss (compared with 2.5 in the directory protocol)
- A lot of concurrency in the coherence processing layer
  - 16.1% average improvement when a second coherence engine is introduced (averaged across FFT, FFTW, Ocean, Radix-Sort)

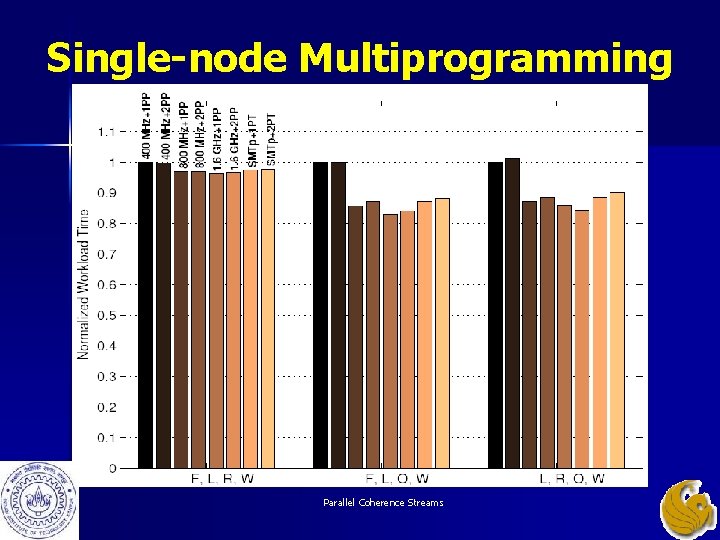

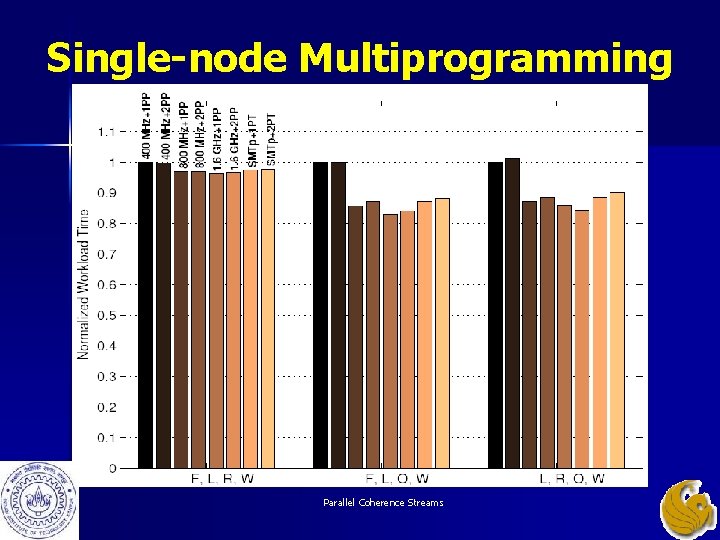

Single-node Multiprogramming
- Multiprogrammed workloads have a large data footprint and no sharing across threads
  - Their many outer-level cache misses exercise the coherence engine much more than parallel applications do
  - Our model correctly predicts that there is no gain in introducing a second controller
    - $O_p$ is too small to satisfy the inequalities
  - Take-away point: the coherence bandwidth requirement is not directly related to the cache miss rate

Single-node Multiprogramming

Prior Research
- Programmable coherence controllers
  - Stanford FLASH, Wisconsin Typhoon, Sequent STiNG, Sun S3.mp, Compaq Piranha CMP, Newisys Opteron-Horus
  - All controllers are off-chip (and hence lag by at least two process generations)
- Multiple coherence controllers
  - Explored with SMP nodes having off-chip directory controllers (IBM, UIUC, Purdue)
  - Local/remote address partitioning in Opteron-Horus, STiNG, and S3.mp

Summary
- A useful model for coherence layer designers
  - A simple and intuitive inequality governs the decision
  - DRAM latency contributes little to this decision in the case of highly bursty applications
  - Bank-conflicting requests enjoy little or no benefit from parallel coherence stream processing (the common case for hot locks/flags)
- Two controllers improve performance by up to 8% when the frequency ratio (main processor to protocol processor) is four
- Broadcast protocols enjoy a larger benefit

Acknowledgments
- Anshuman Gupta (UCSD)
  - Preliminary simulations (part of an independent study at IIT Kanpur)
- Varun Khaneja (AMD)
  - Development of the directory-less broadcast protocol (part of an MTech thesis at IIT Kanpur)
- IIT Kanpur Security Center
  - For hosting part of the simulation infrastructure

Integrated Memory Controllers with Parallel Coherence Streams

THANK YOU!

Mainak Chaudhuri, IIT Kanpur
Mark Heinrich, University of Central Florida
To appear in IEEE TPDS