Instructor Guni Sharon 1 CSCE689 Reinforcement Learning Stateless

- Slides: 39

Instructor: Guni Sharon 1

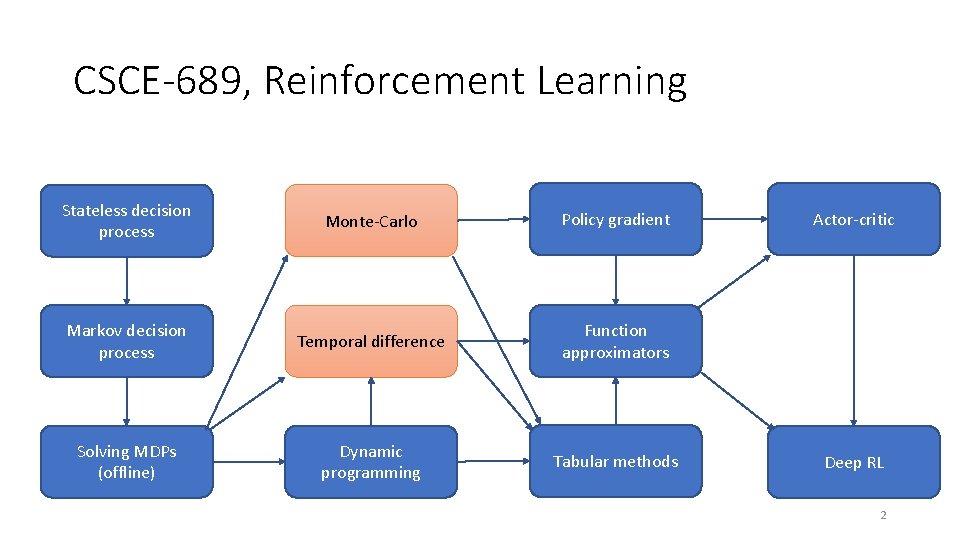

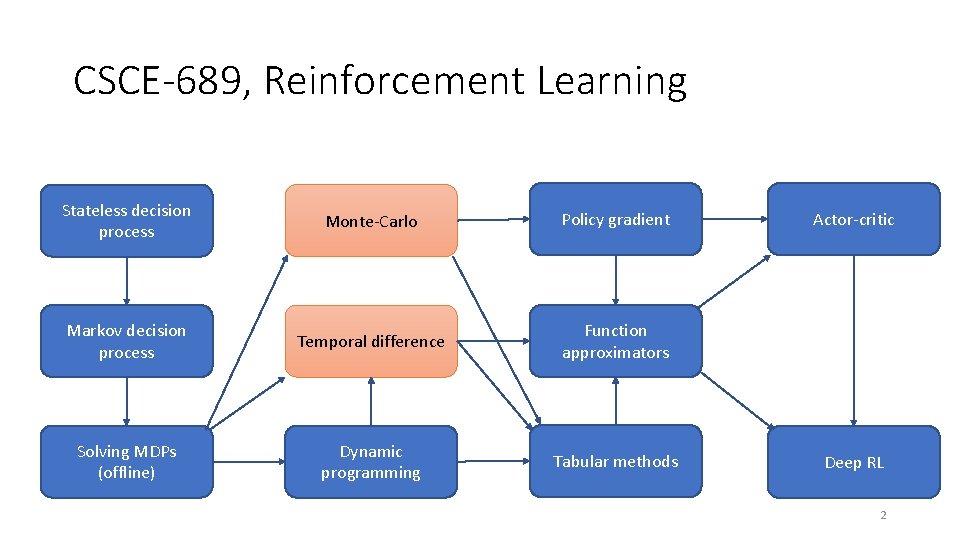

CSCE-689, Reinforcement Learning Stateless decision process Monte-Carlo Policy gradient Markov decision process Temporal difference Function approximators Solving MDPs (offline) Dynamic programming Tabular methods Actor-critic Deep RL 2

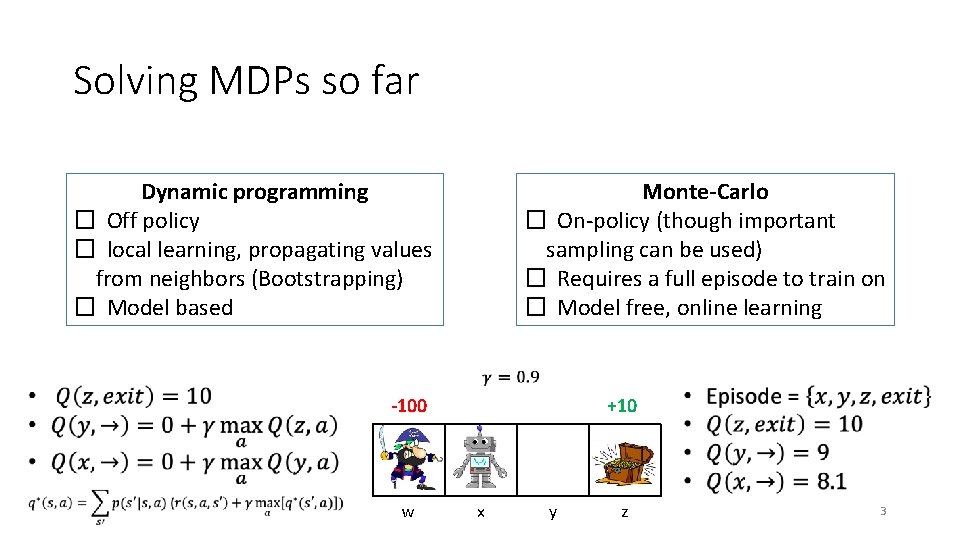

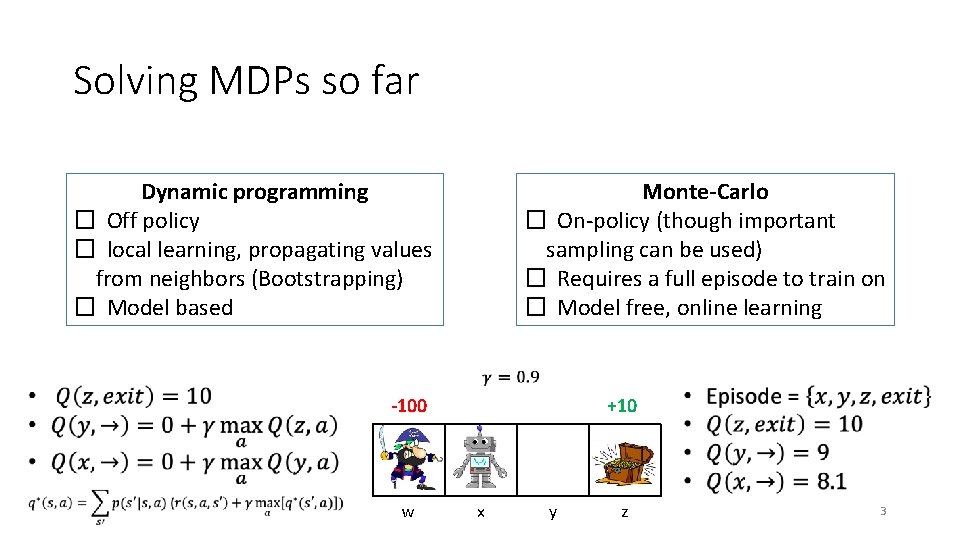

Solving MDPs so far Dynamic programming � Off policy � local learning, propagating values from neighbors (Bootstrapping) � Model based Monte-Carlo � On-policy (though important sampling can be used) � Requires a full episode to train on � Model free, online learning -100 w +10 x y z 3

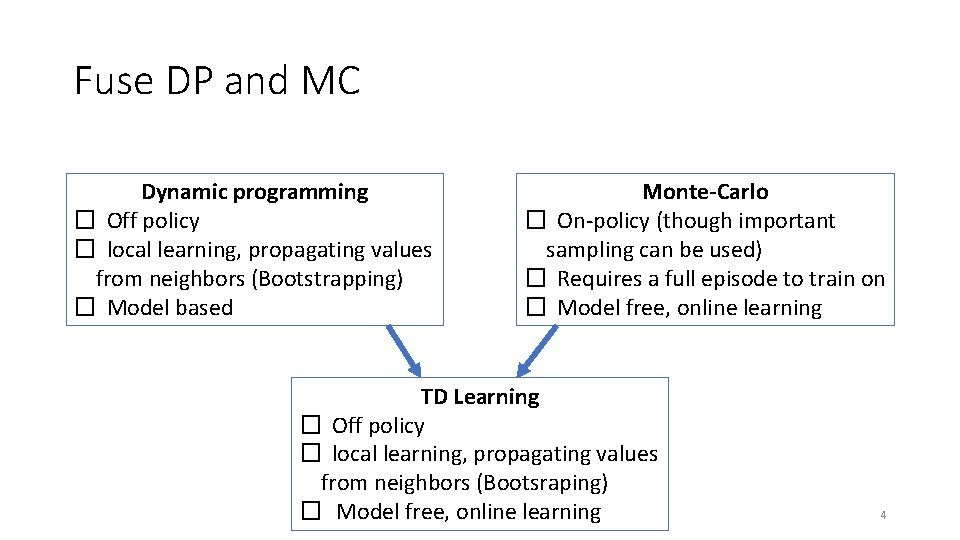

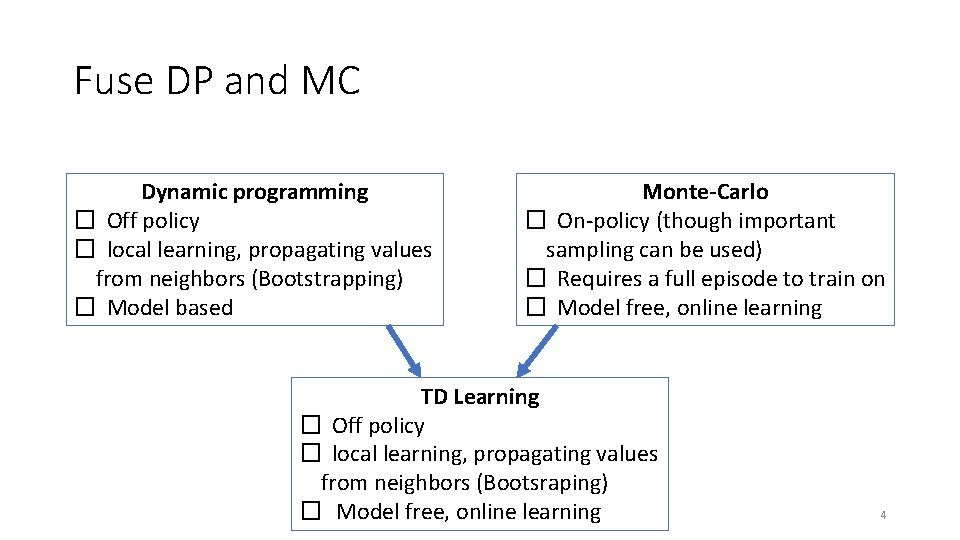

Fuse DP and MC Dynamic programming � Off policy � local learning, propagating values from neighbors (Bootstrapping) � Model based Monte-Carlo � On-policy (though important sampling can be used) � Requires a full episode to train on � Model free, online learning TD Learning � Off policy � local learning, propagating values from neighbors (Bootsraping) � Model free, online learning 4

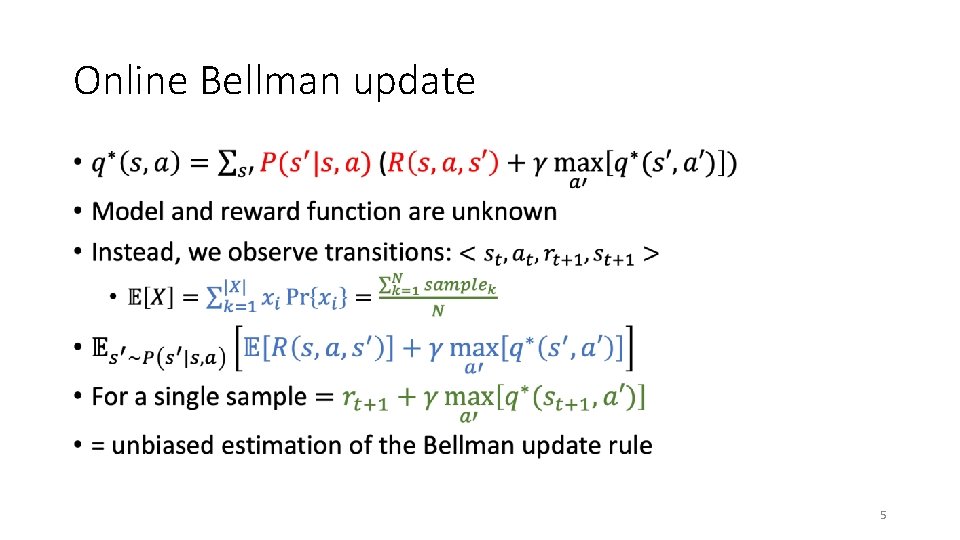

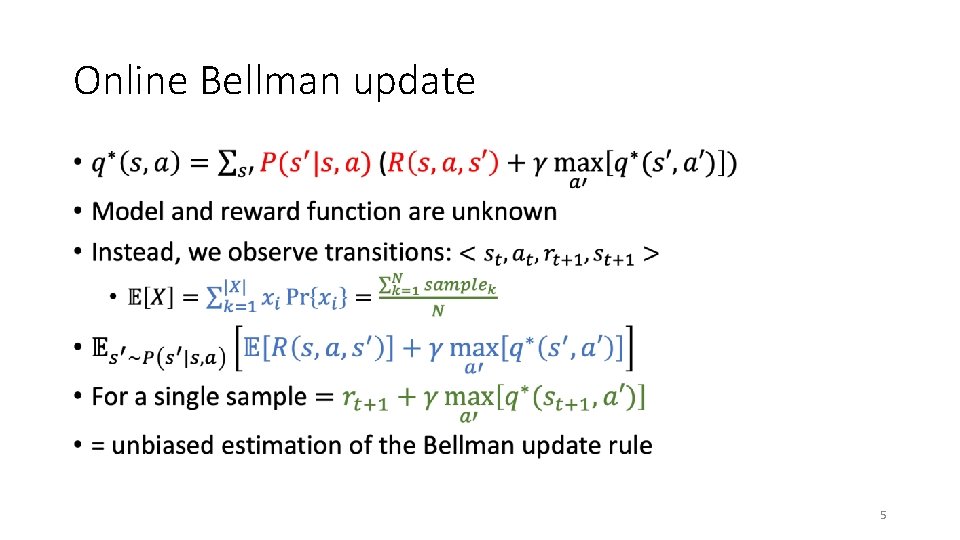

Online Bellman update • 5

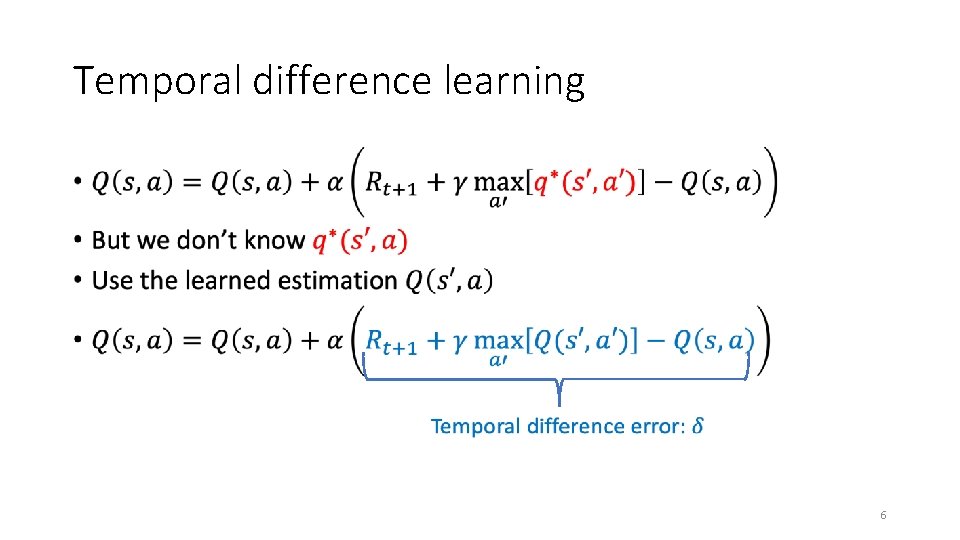

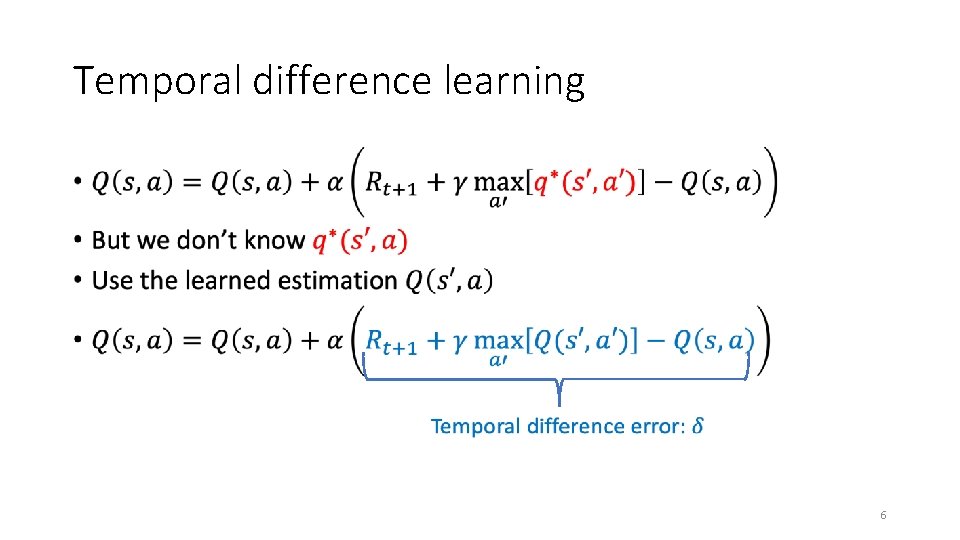

Temporal difference learning • 6

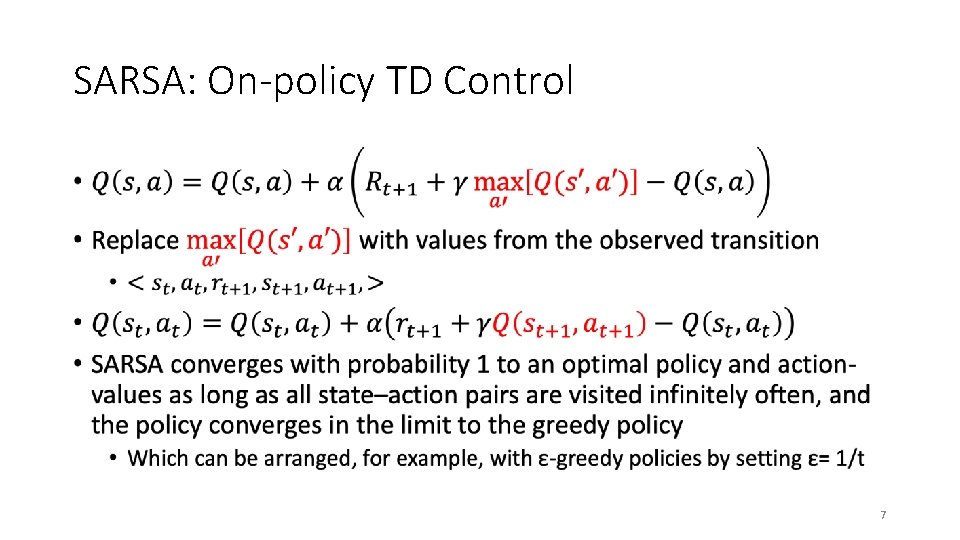

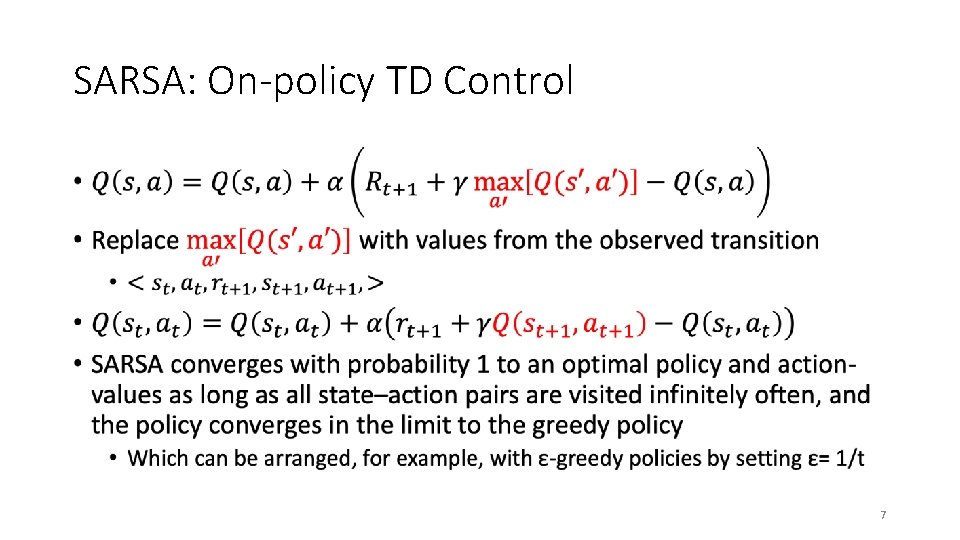

SARSA: On-policy TD Control • 7

SARSA: On-policy TD Control 8

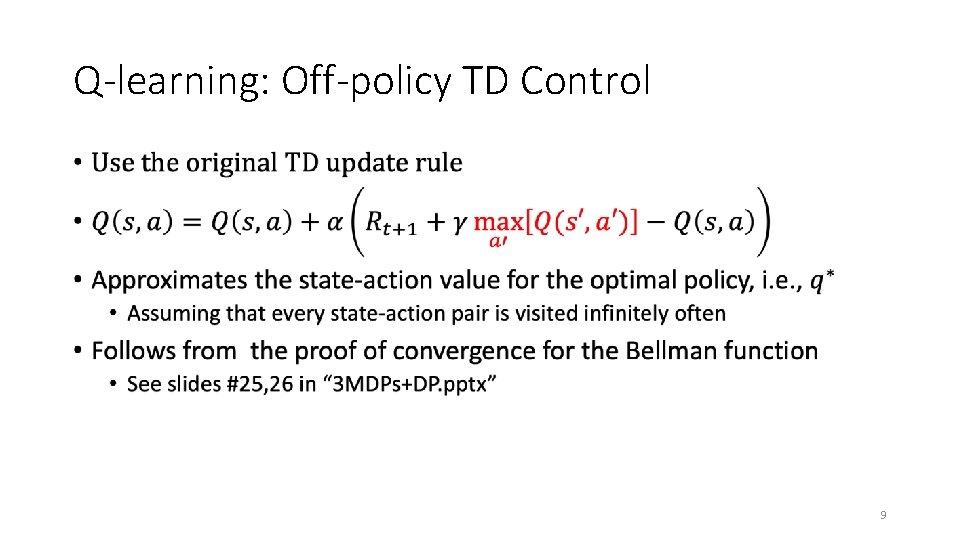

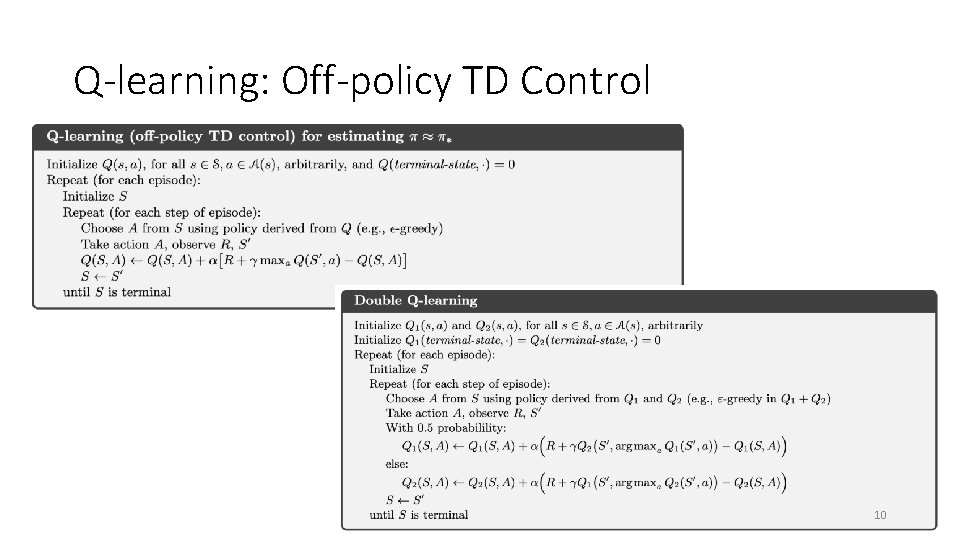

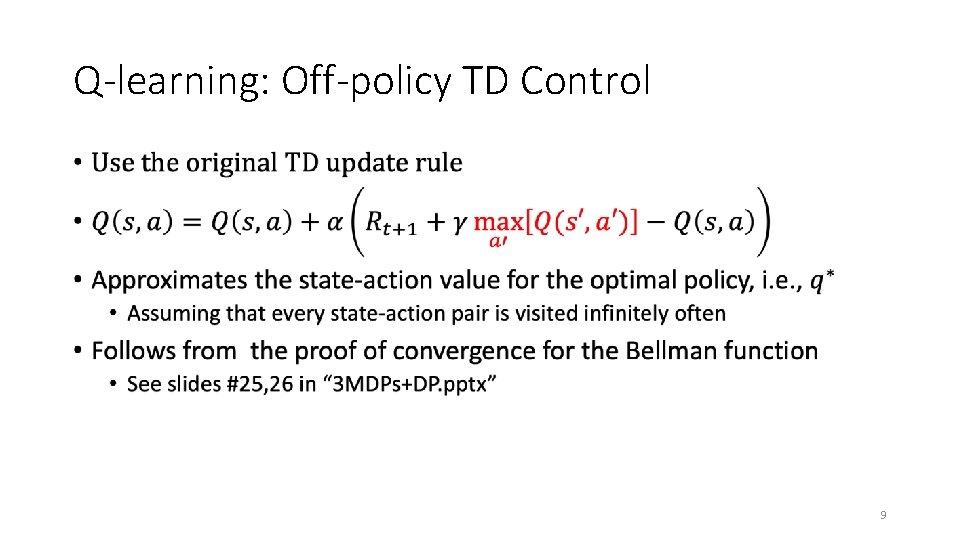

Q-learning: Off-policy TD Control • 9

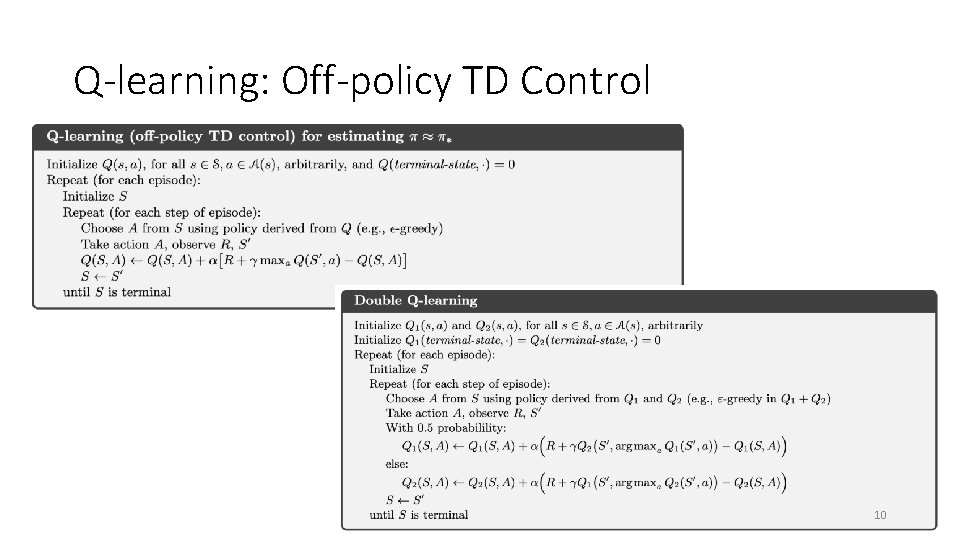

Q-learning: Off-policy TD Control 10

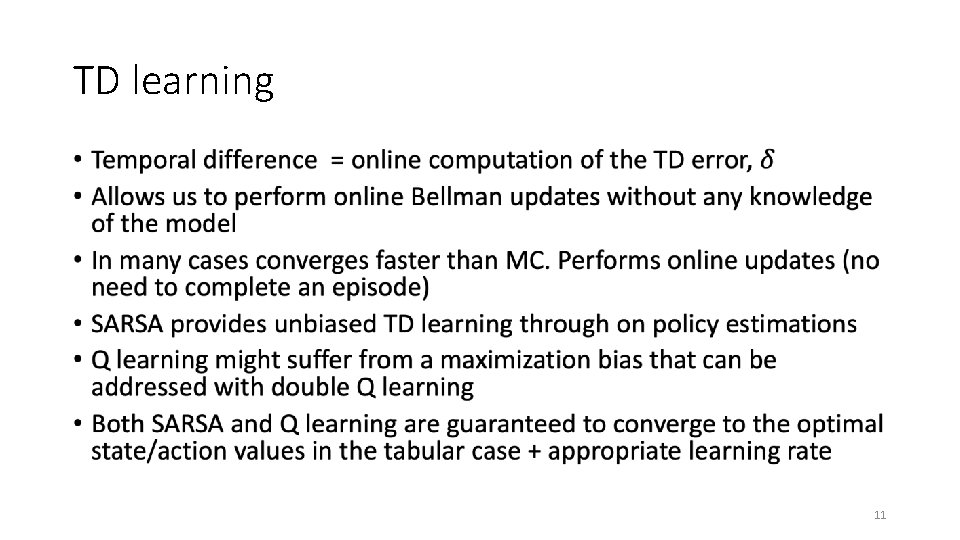

TD learning • 11

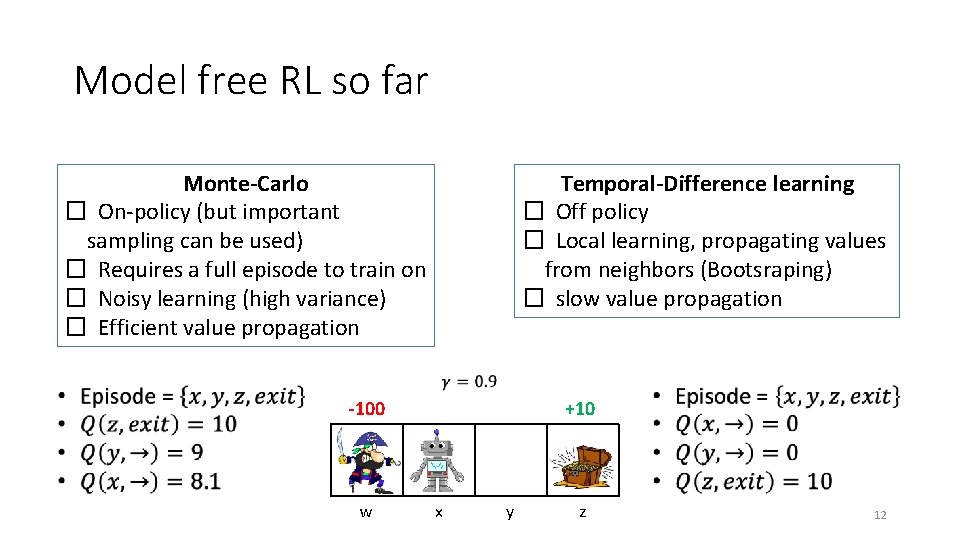

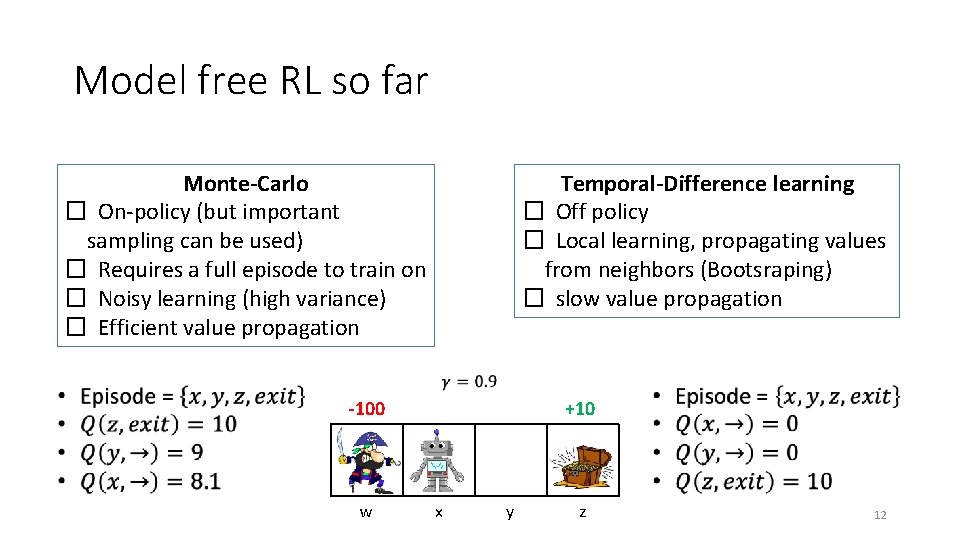

Model free RL so far Temporal-Difference learning � Off policy � Local learning, propagating values from neighbors (Bootsraping) � slow value propagation Monte-Carlo � On-policy (but important sampling can be used) � Requires a full episode to train on � Noisy learning (high variance) � Efficient value propagation -100 w +10 x y z 12

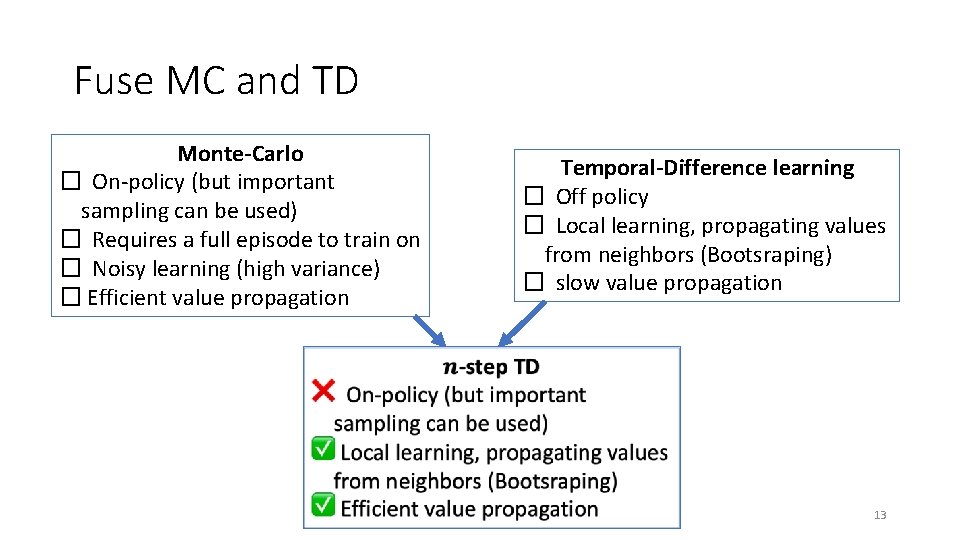

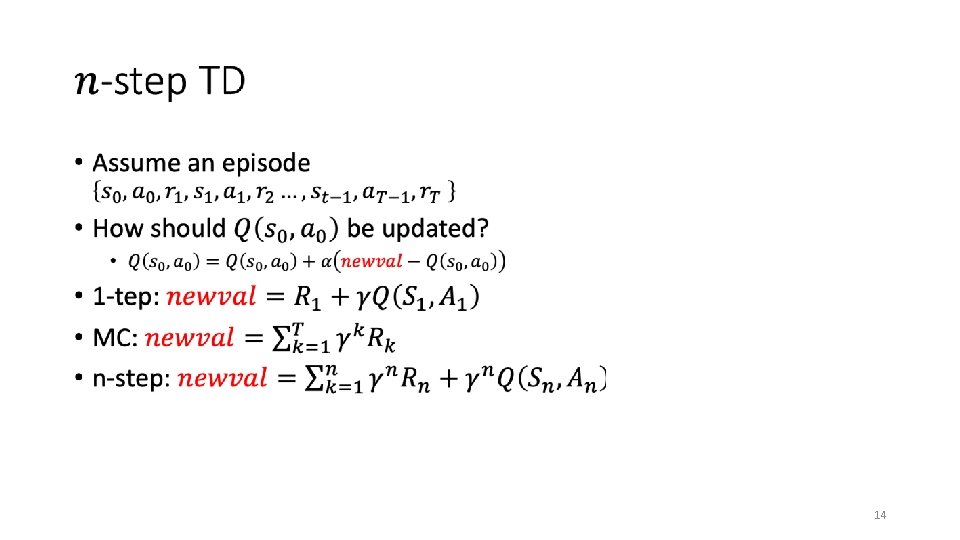

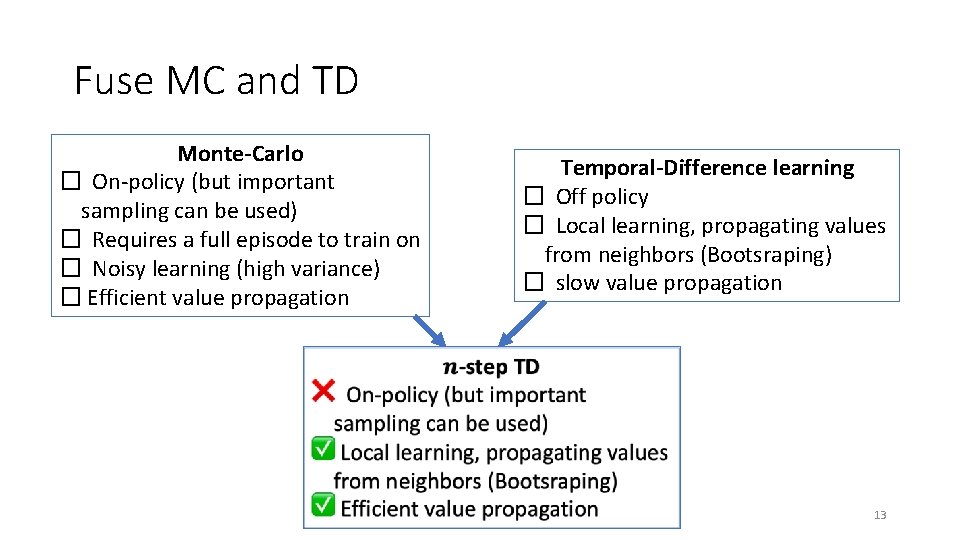

Fuse MC and TD Monte-Carlo � On-policy (but important sampling can be used) � Requires a full episode to train on � Noisy learning (high variance) � Efficient value propagation Temporal-Difference learning � Off policy � Local learning, propagating values from neighbors (Bootsraping) � slow value propagation 13

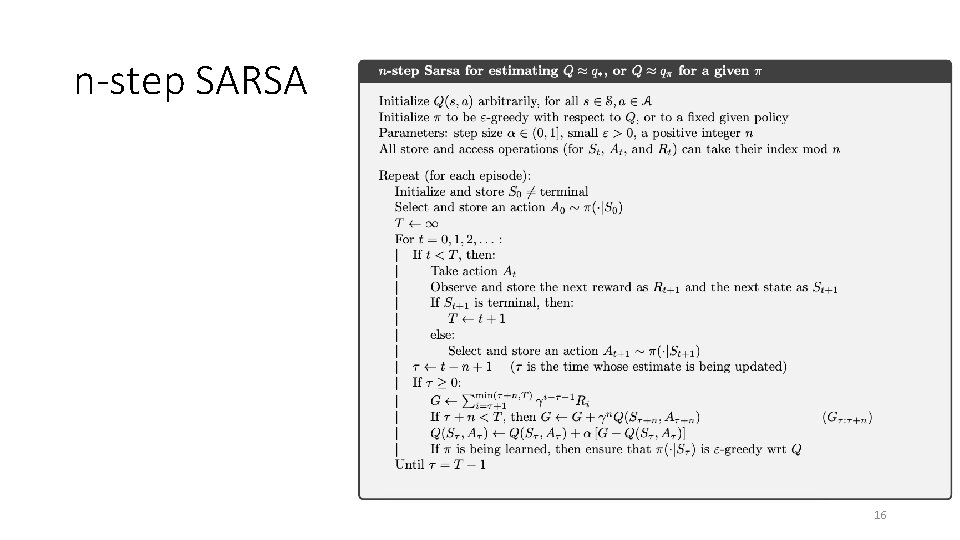

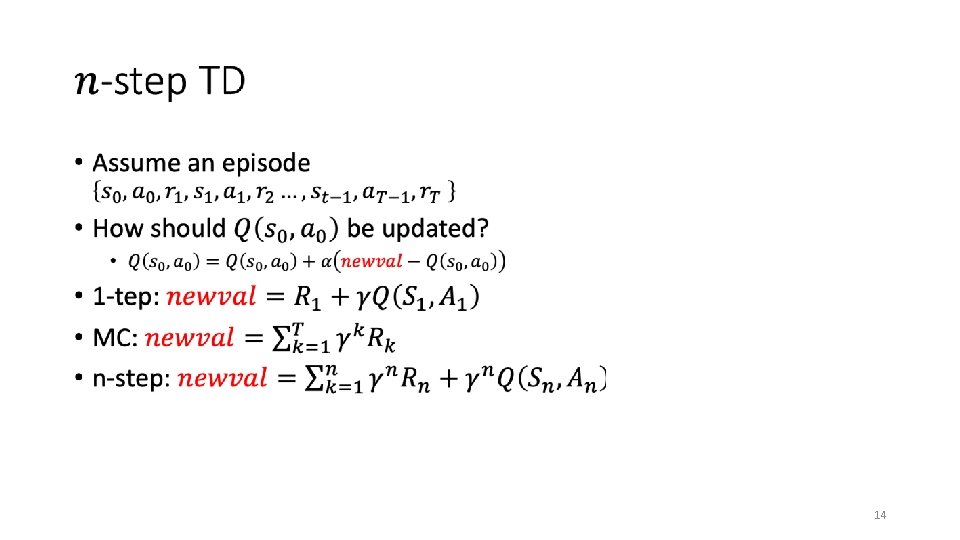

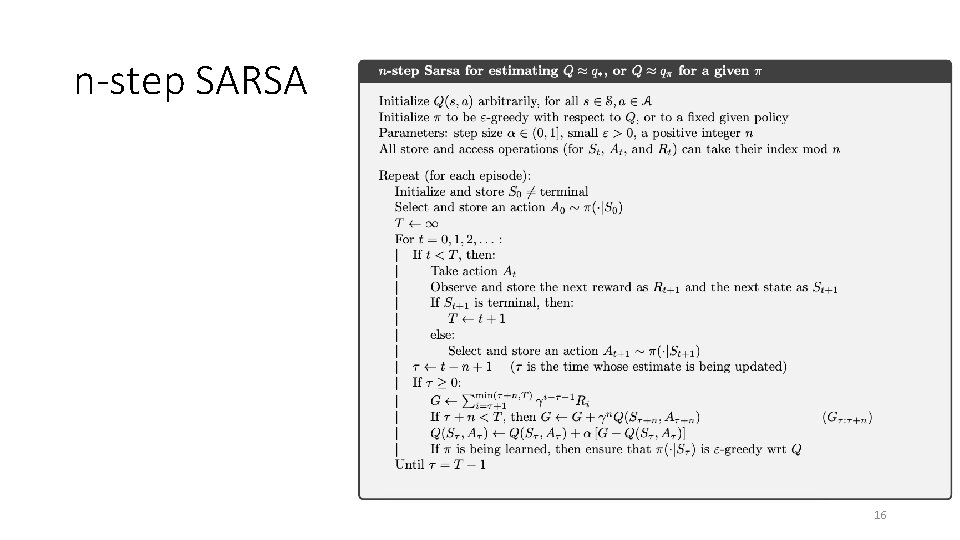

n-step SARSA 16

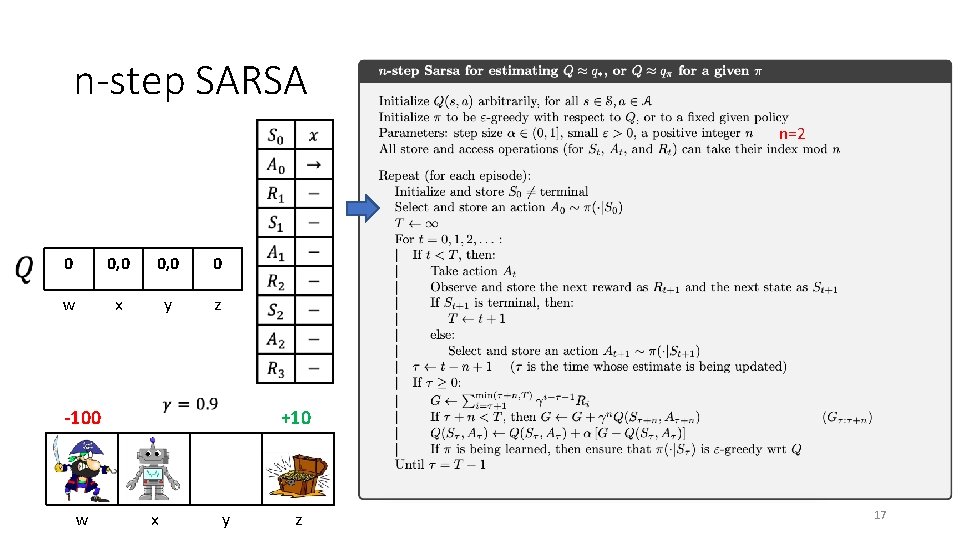

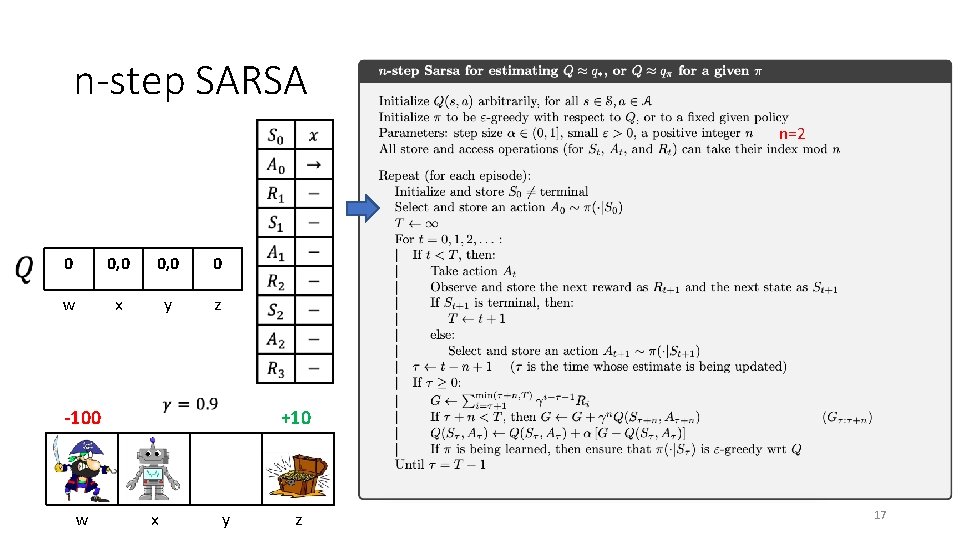

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 17

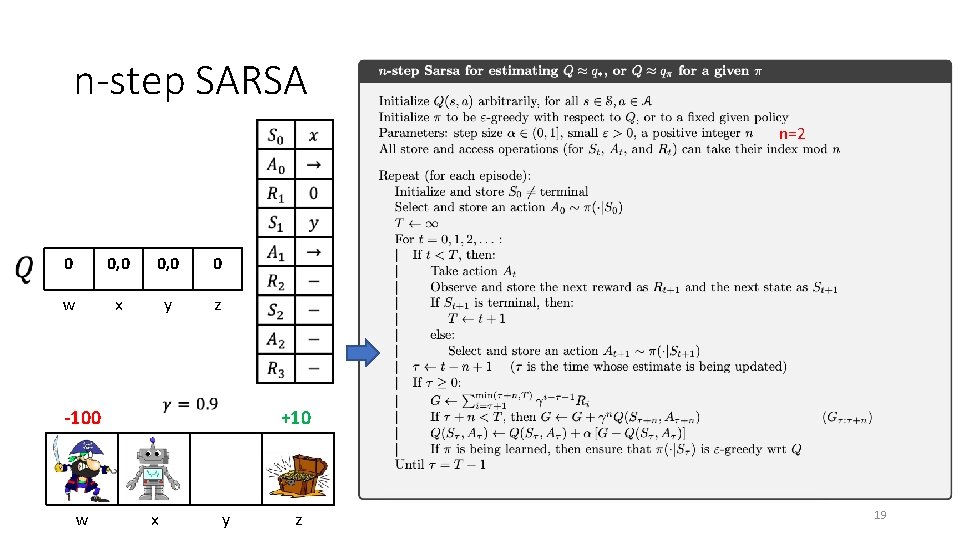

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 18

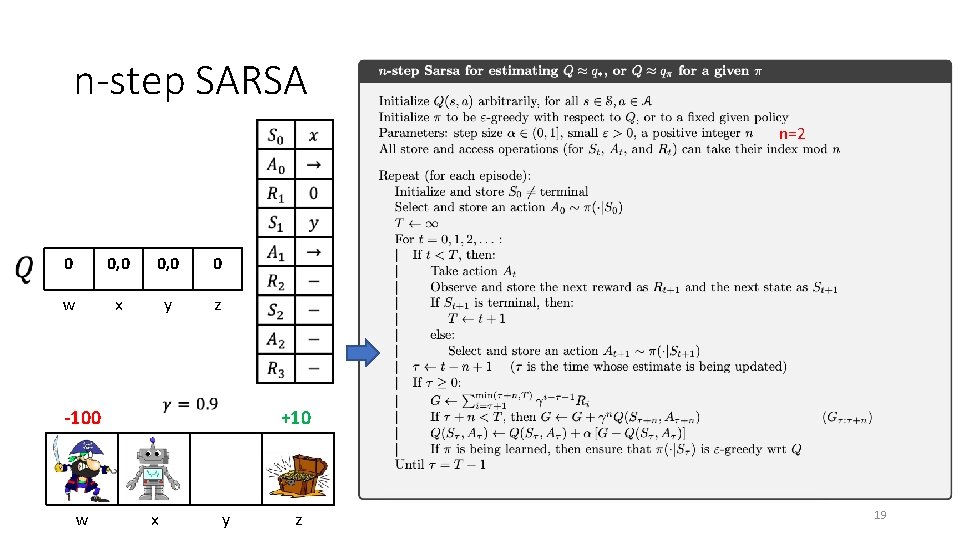

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 19

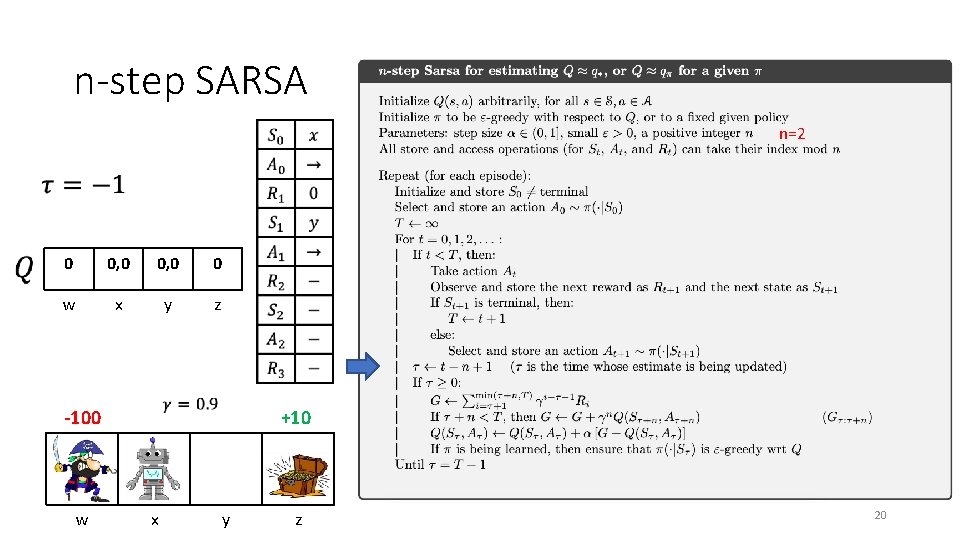

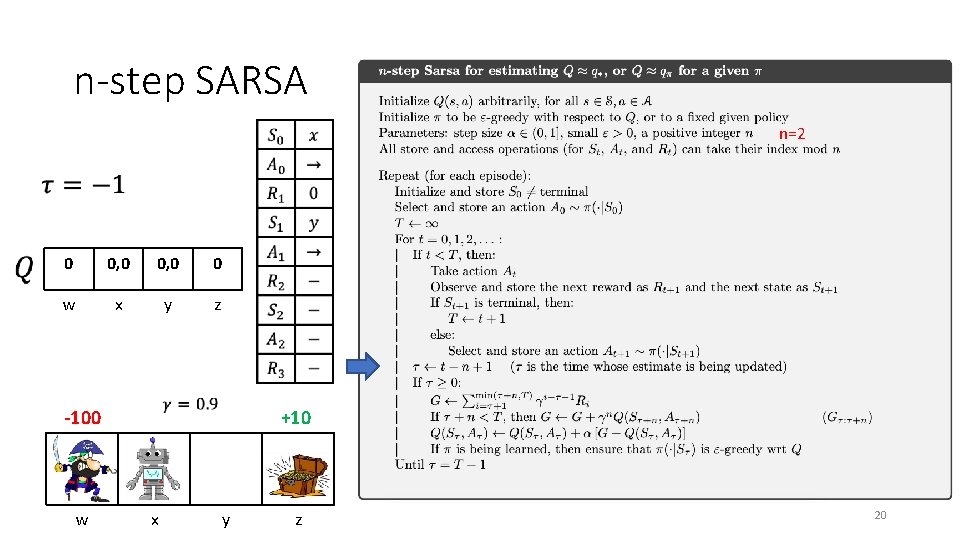

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 20

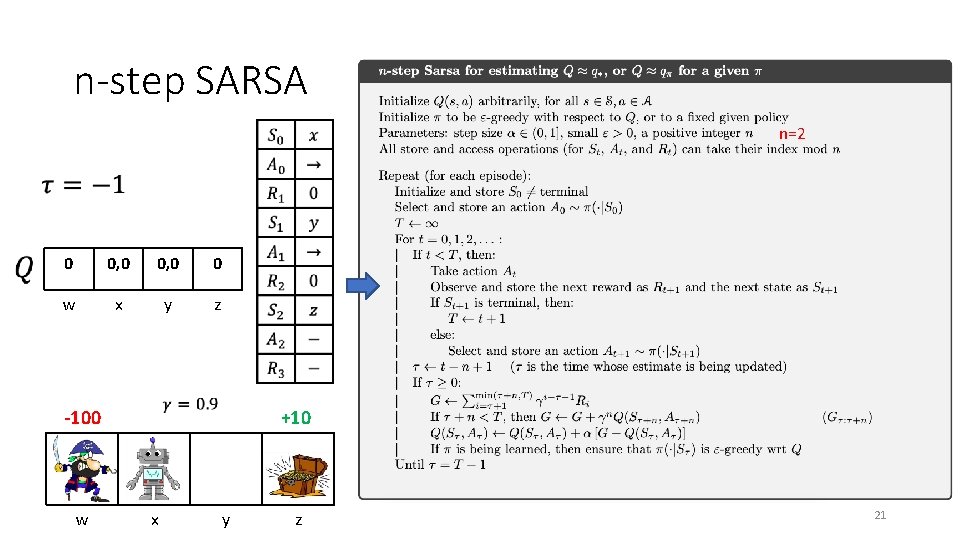

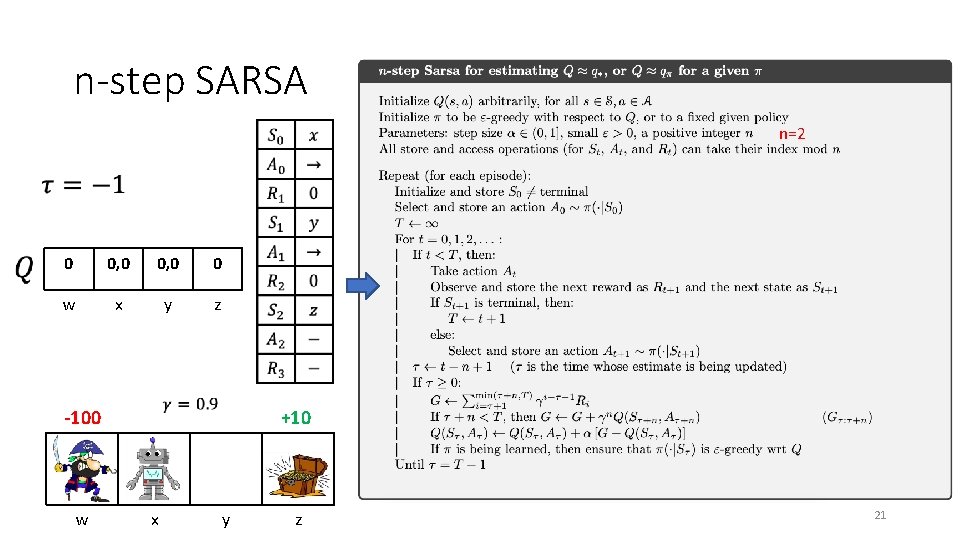

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 21

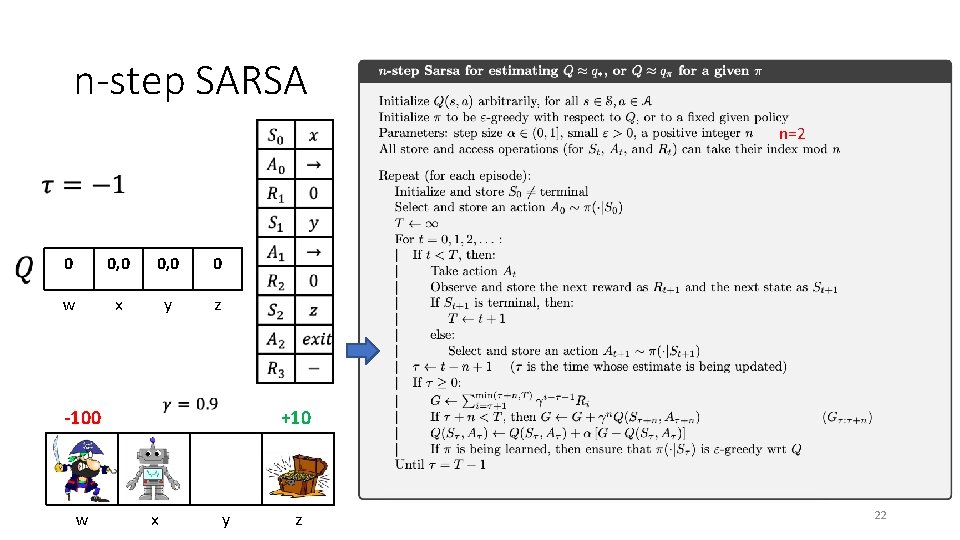

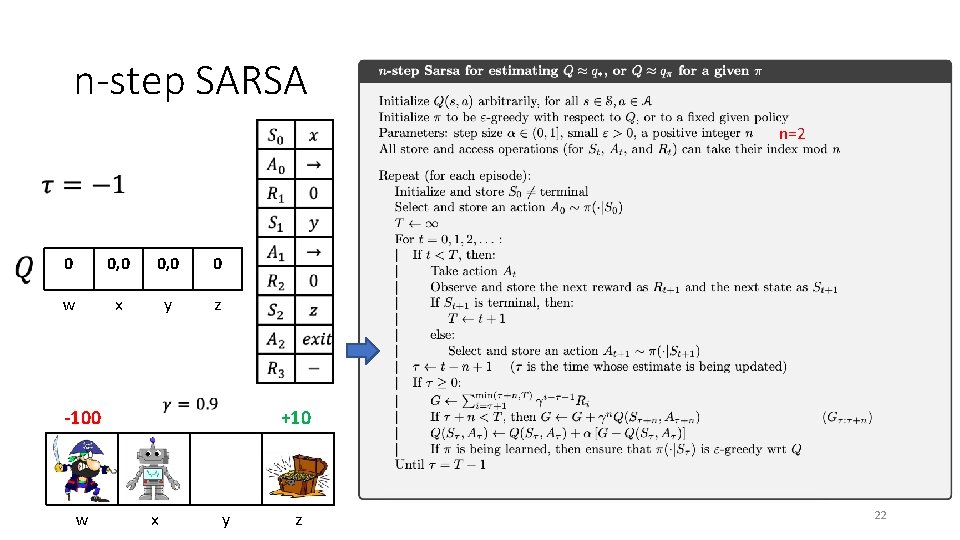

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 22

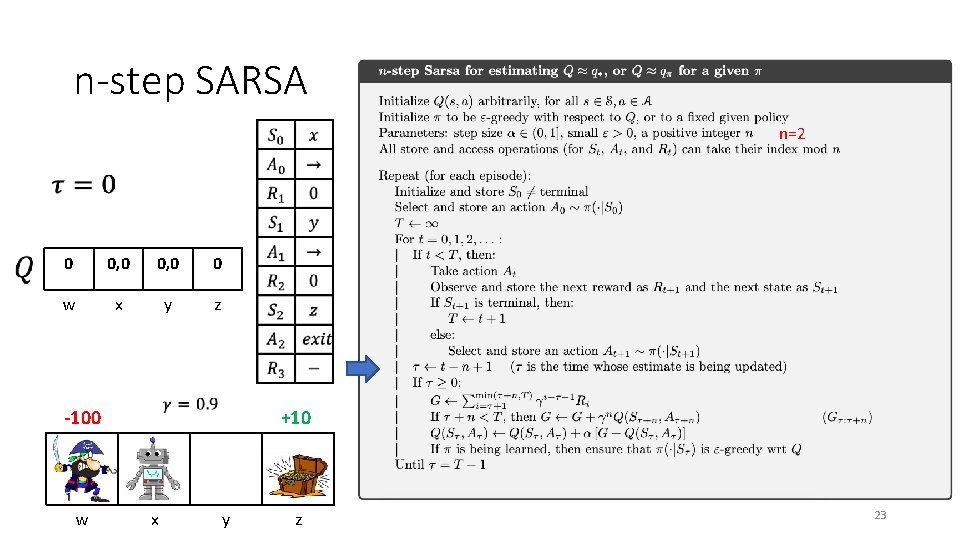

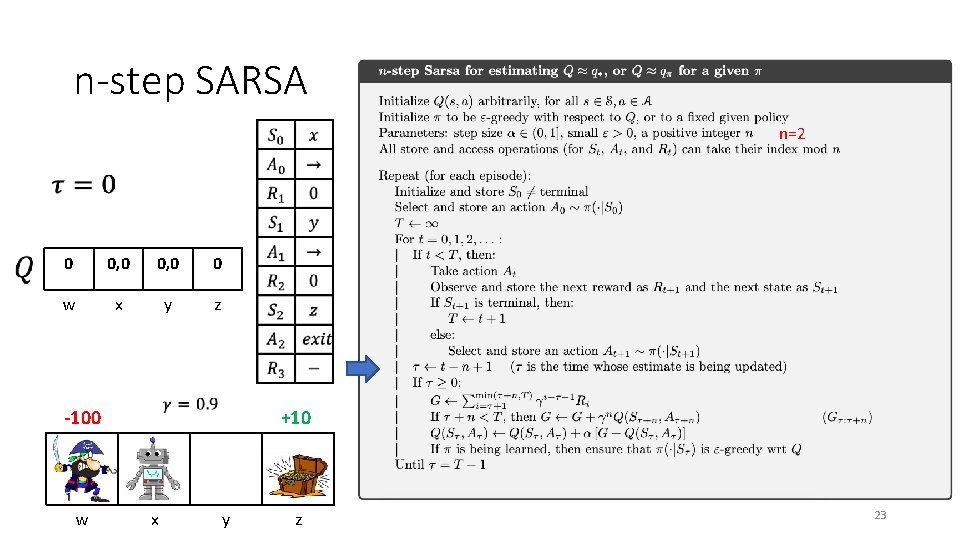

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 23

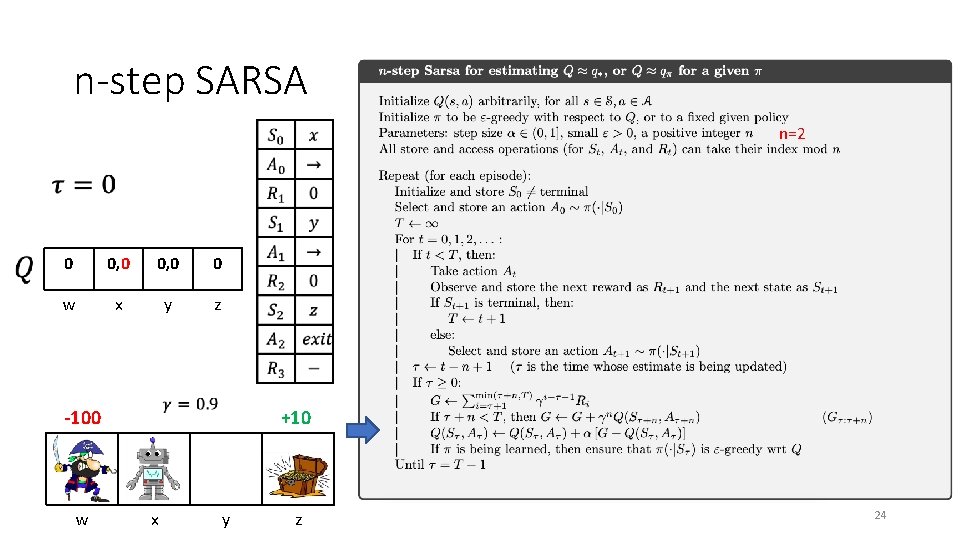

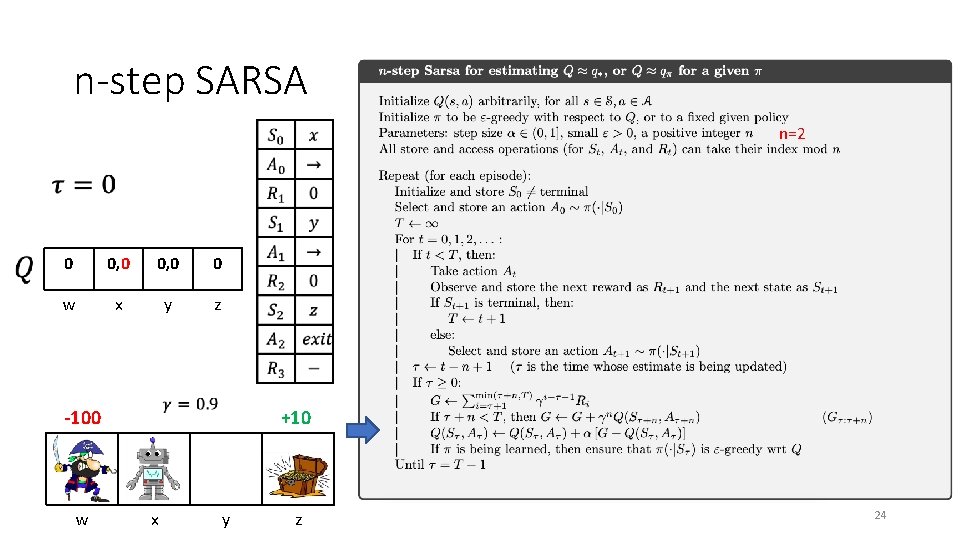

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 24

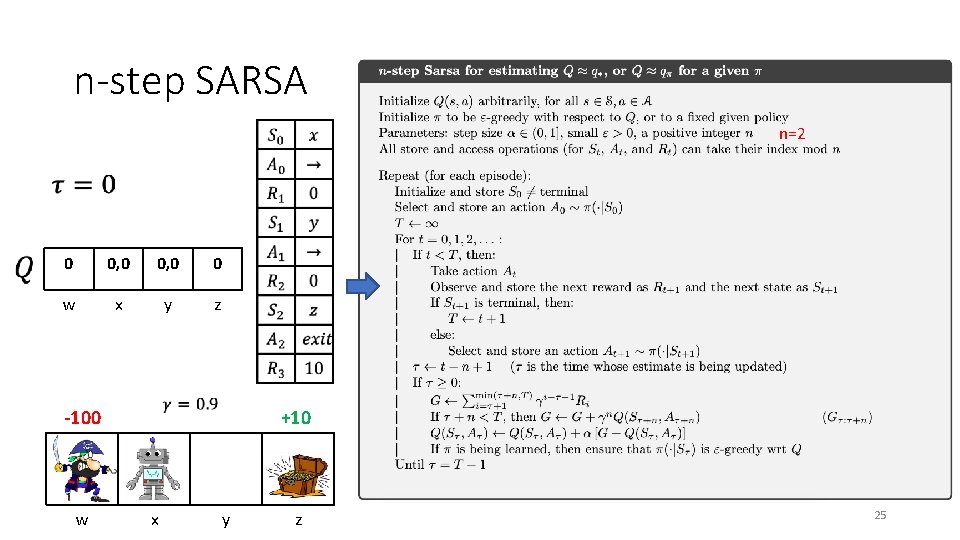

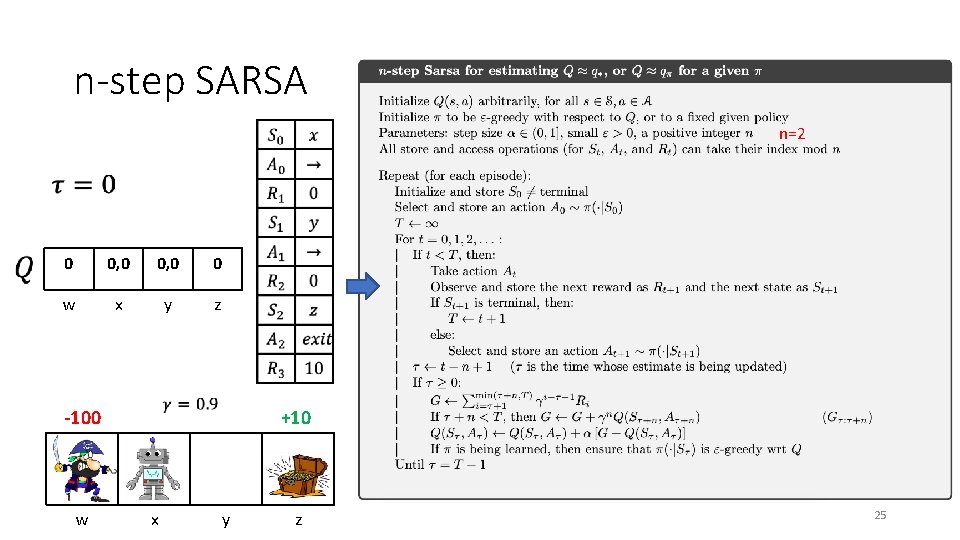

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 25

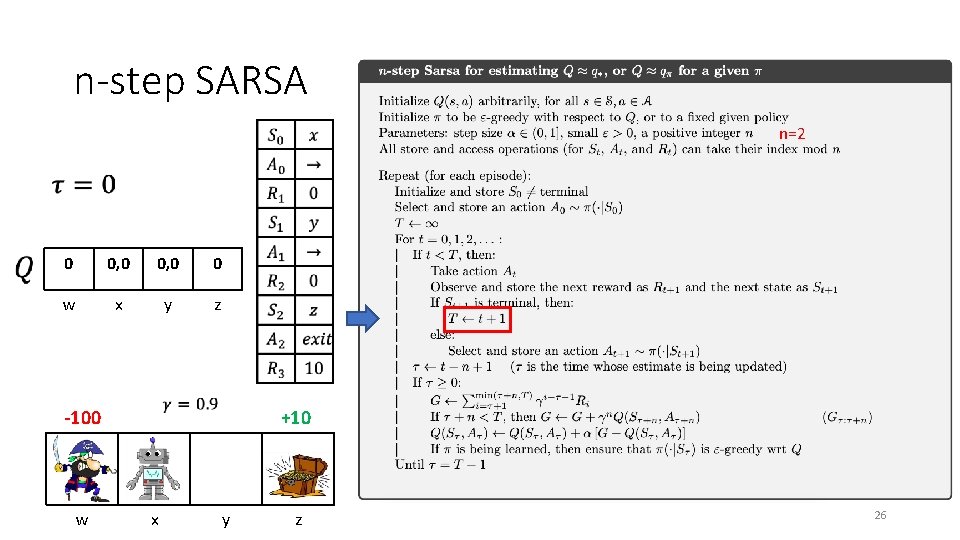

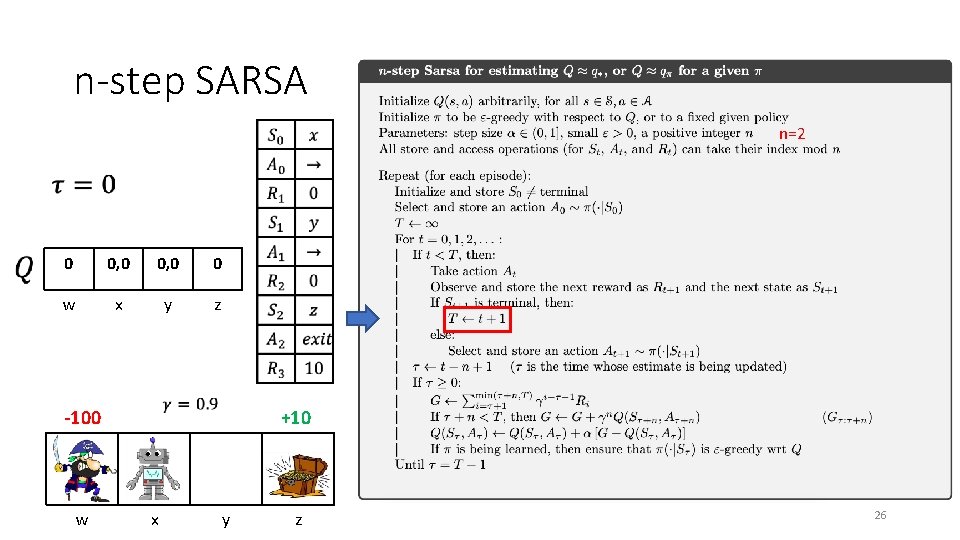

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 26

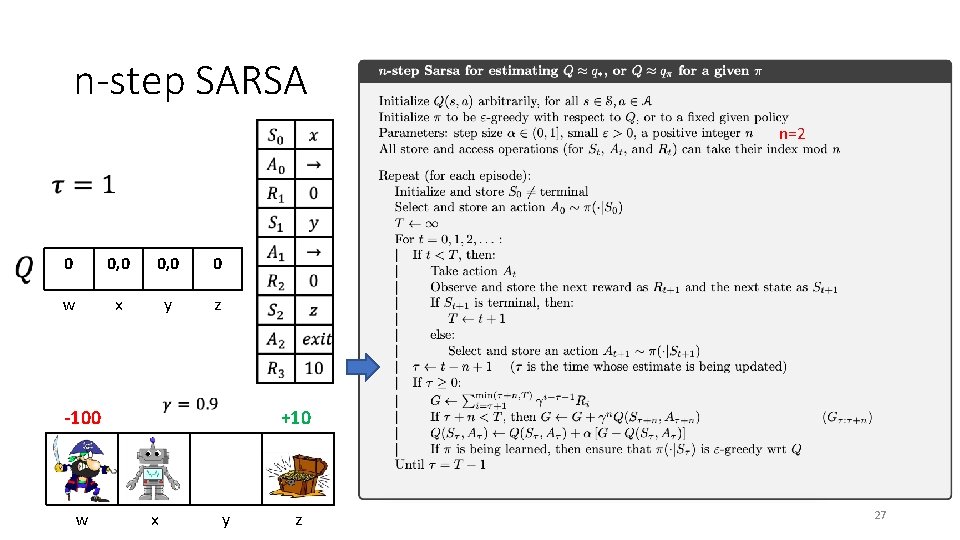

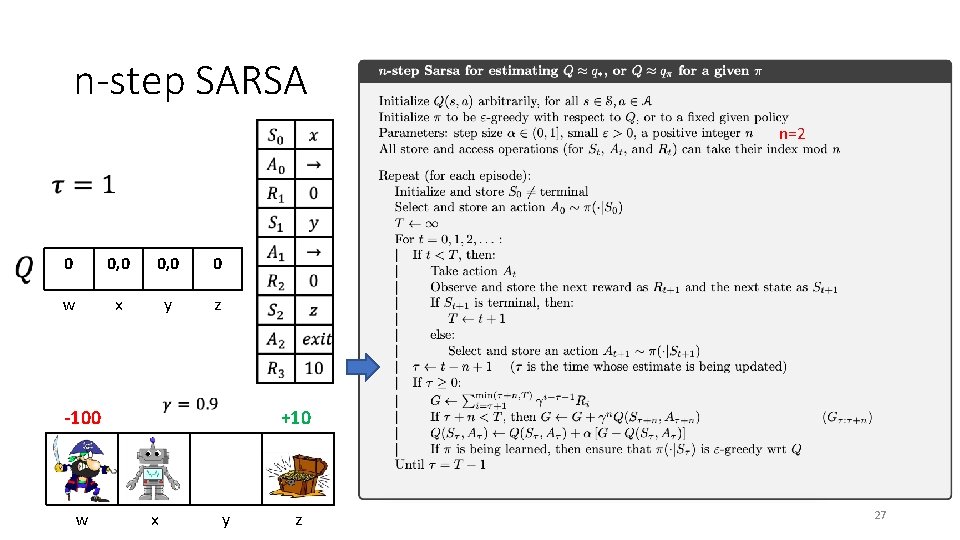

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z 27

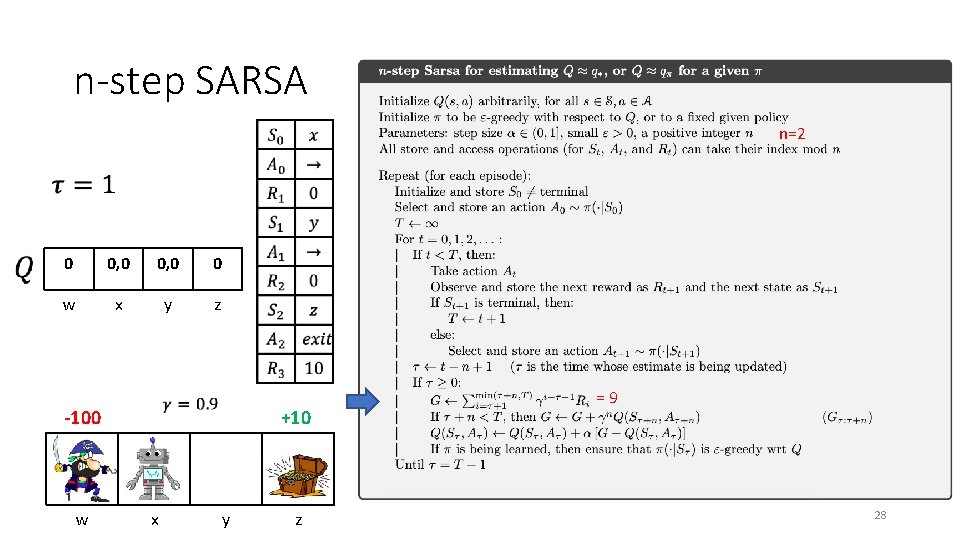

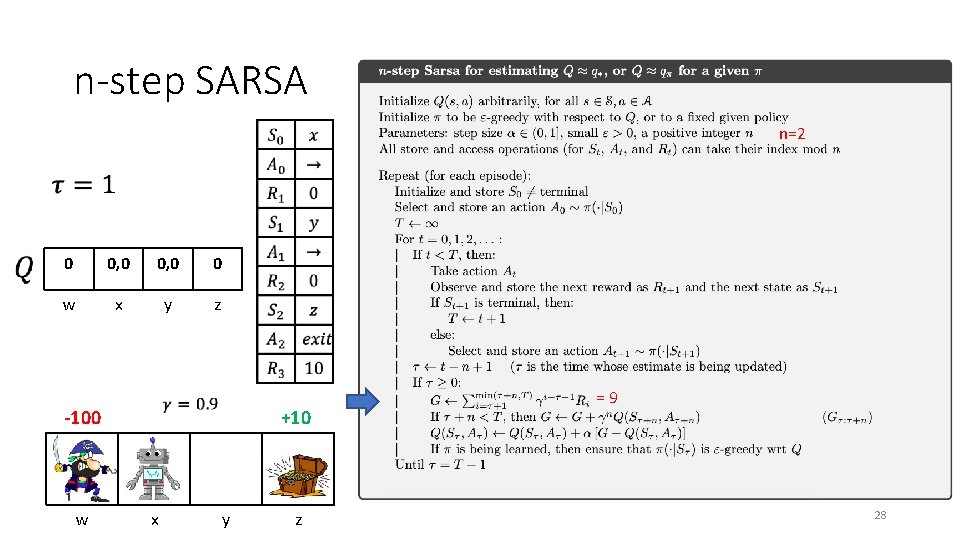

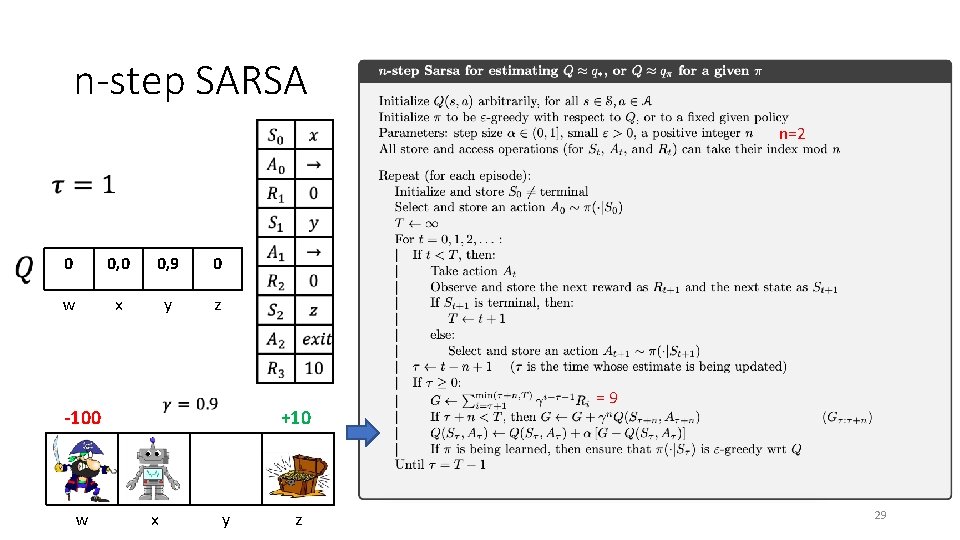

n-step SARSA n=2 0 0, 0 0 w x y z -100 w +10 x y z =9 28

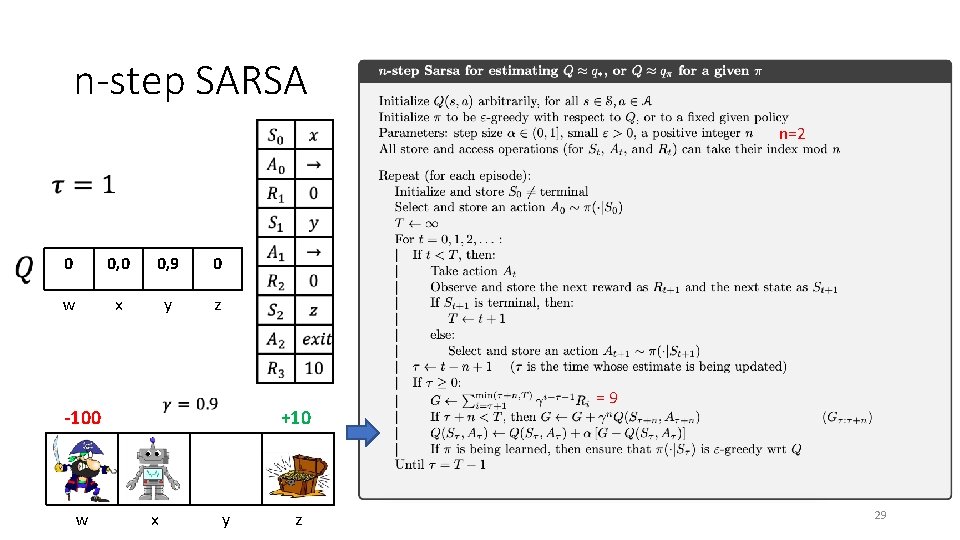

n-step SARSA n=2 0 0, 9 0 w x y z -100 w +10 x y z =9 29

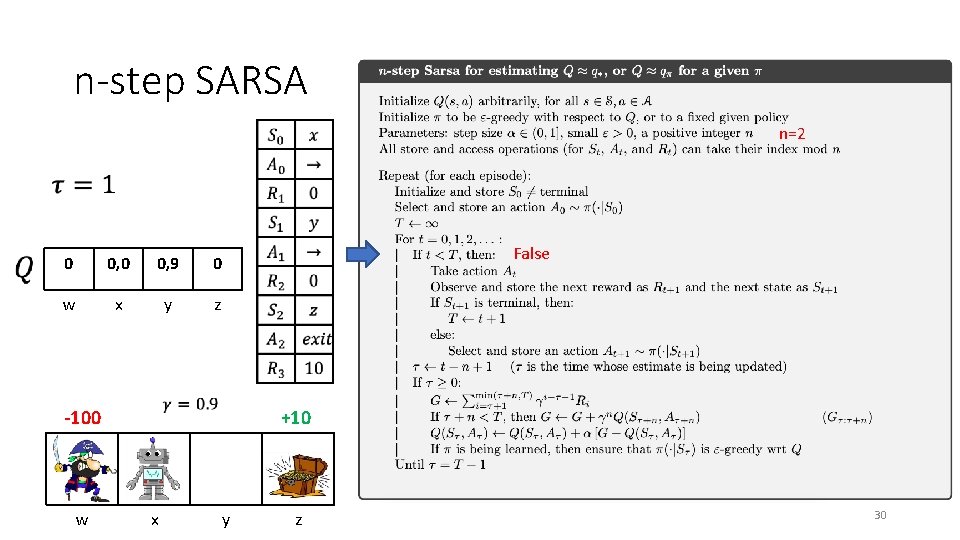

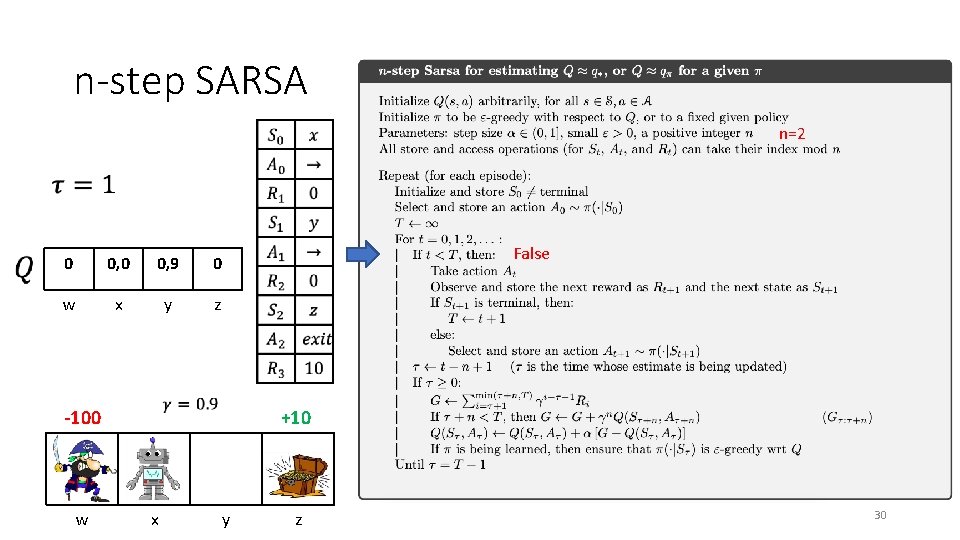

n-step SARSA n=2 0 0, 9 0 w x y z False -100 w +10 x y z 30

n-step SARSA n=2 0 0, 9 0 w x y z -100 w +10 x y z = 10 31

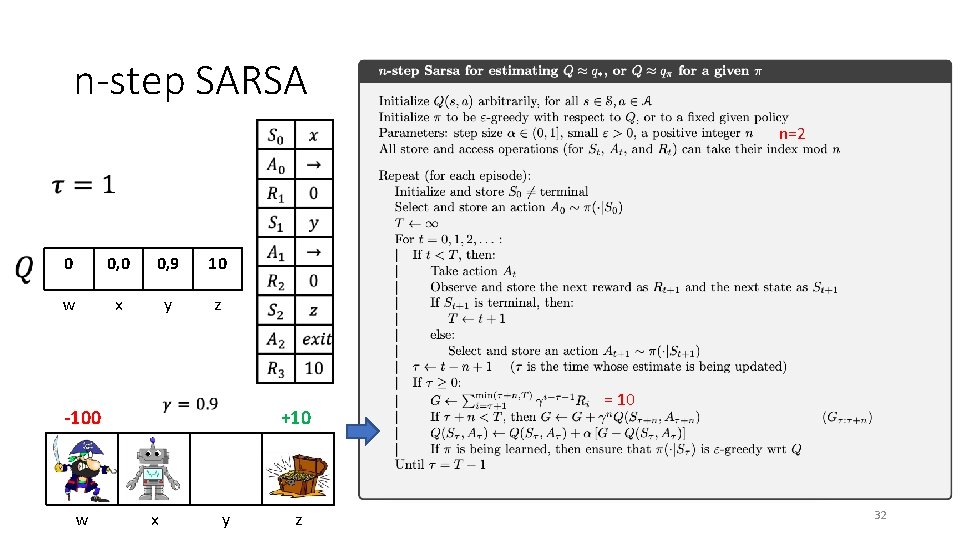

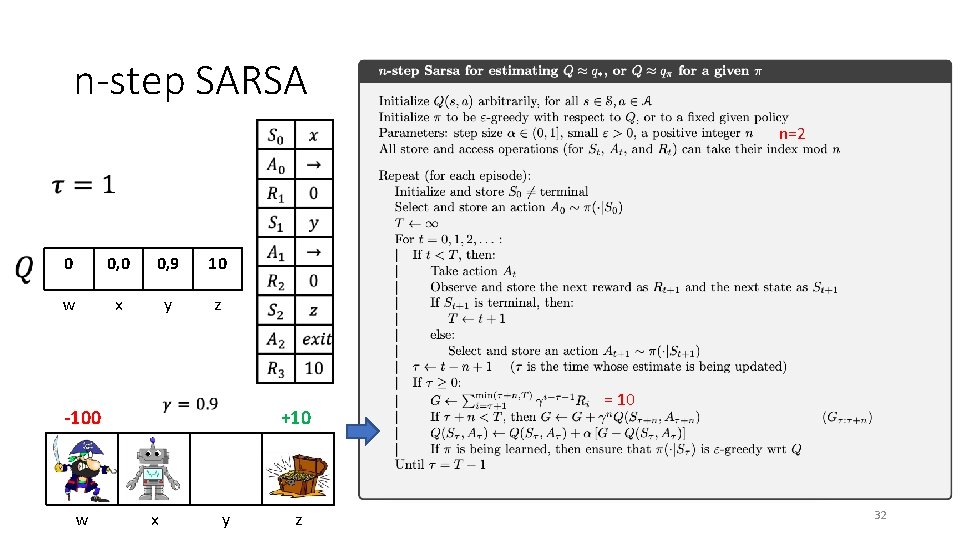

n-step SARSA n=2 0 0, 9 10 w x y z -100 w +10 x y z = 10 32

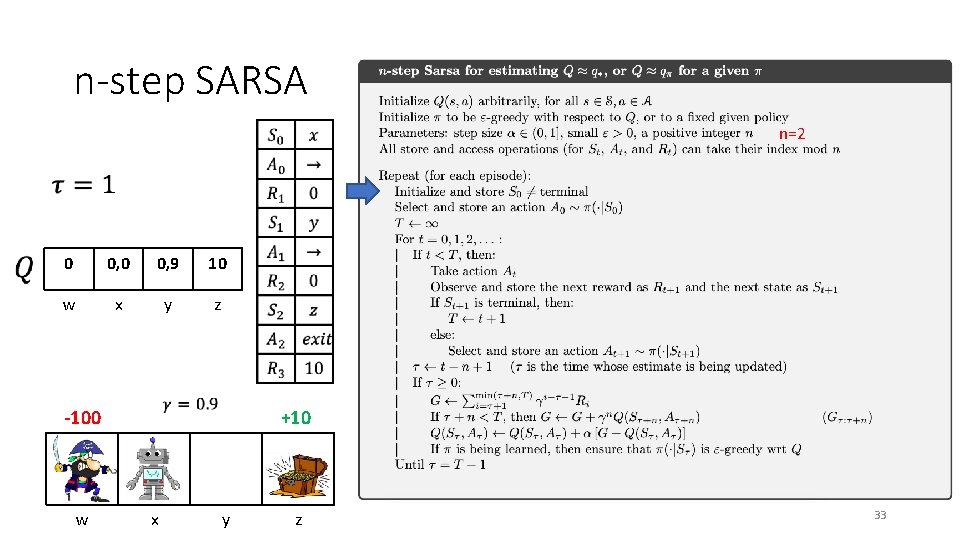

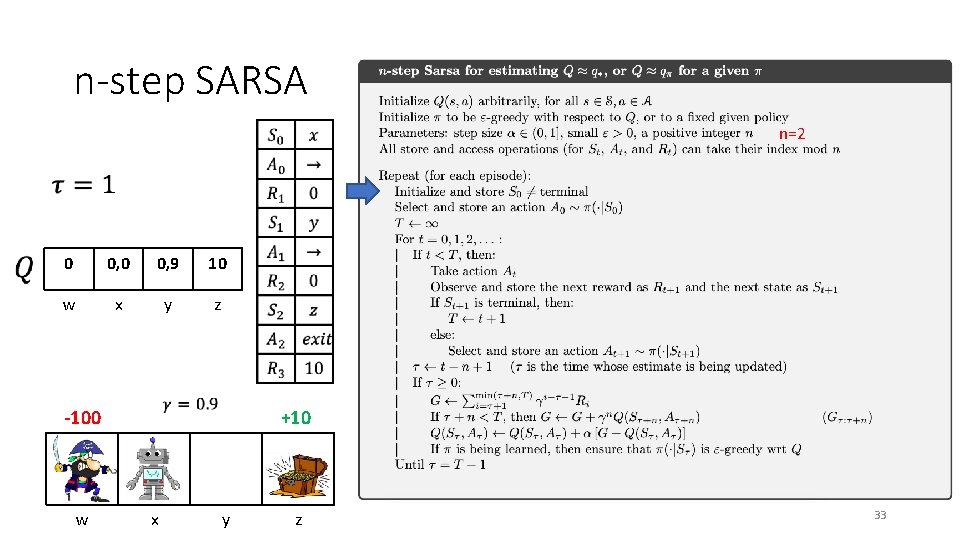

n-step SARSA n=2 0 0, 9 10 w x y z -100 w +10 x y z 33

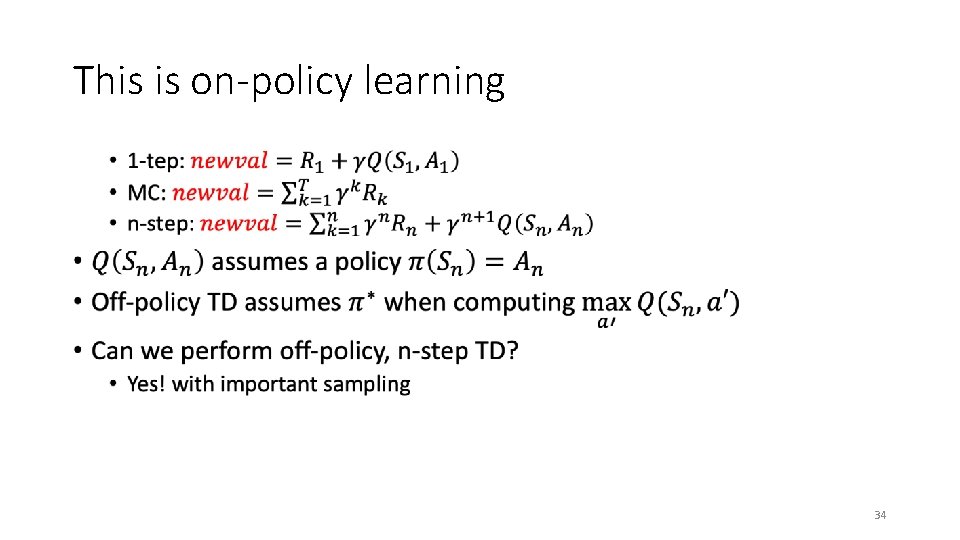

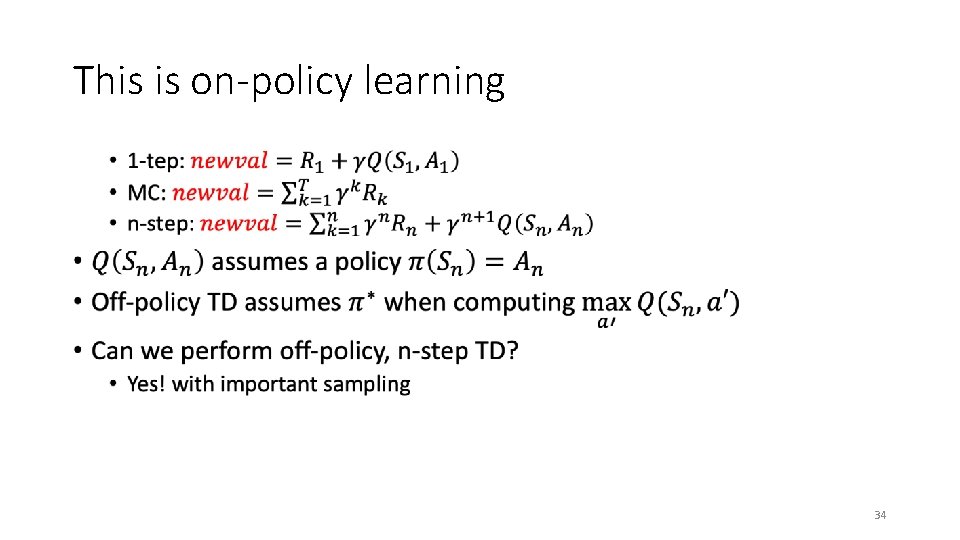

This is on-policy learning • 34

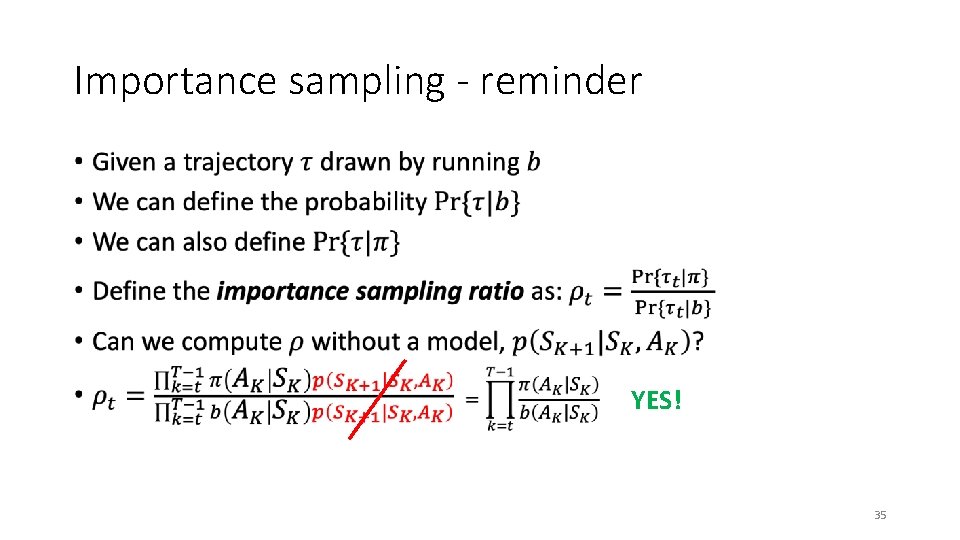

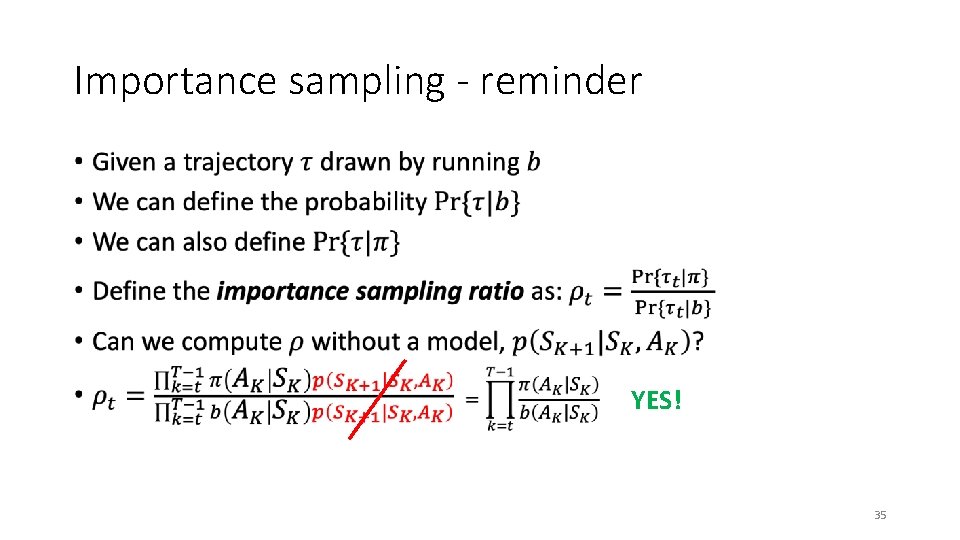

Importance sampling - reminder • YES! 35

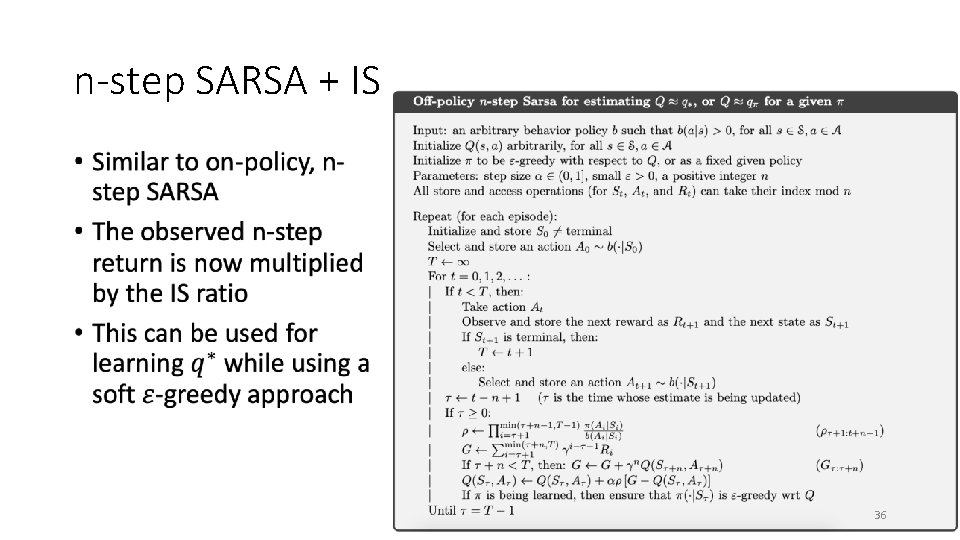

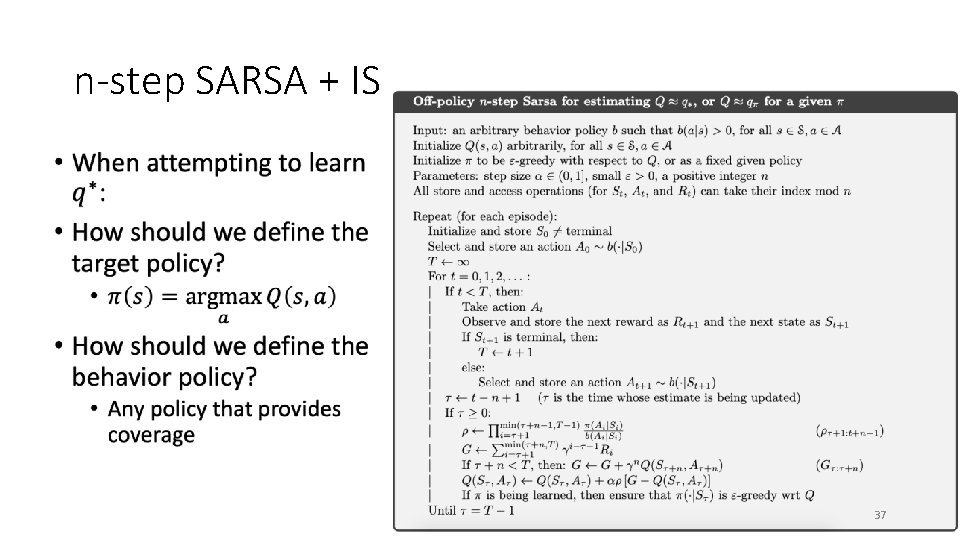

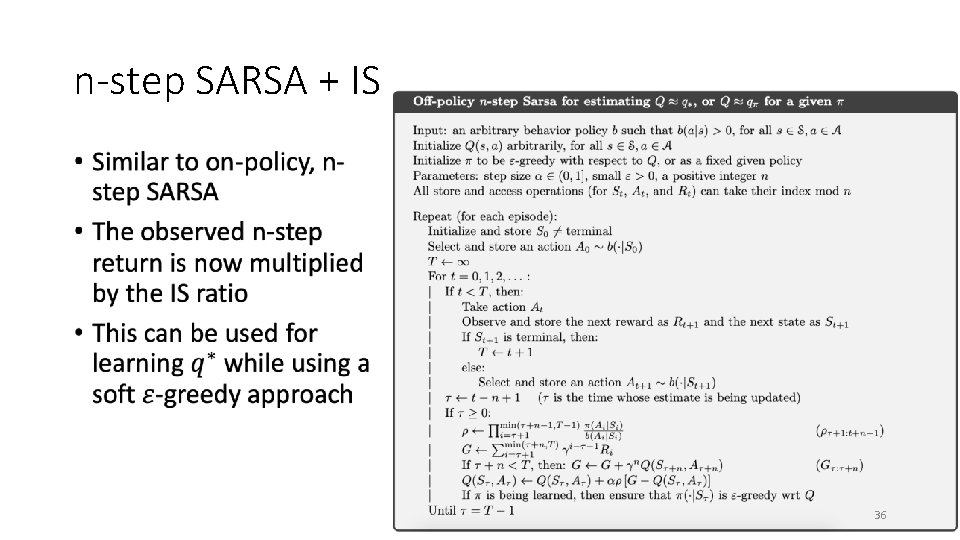

n-step SARSA + IS • 36

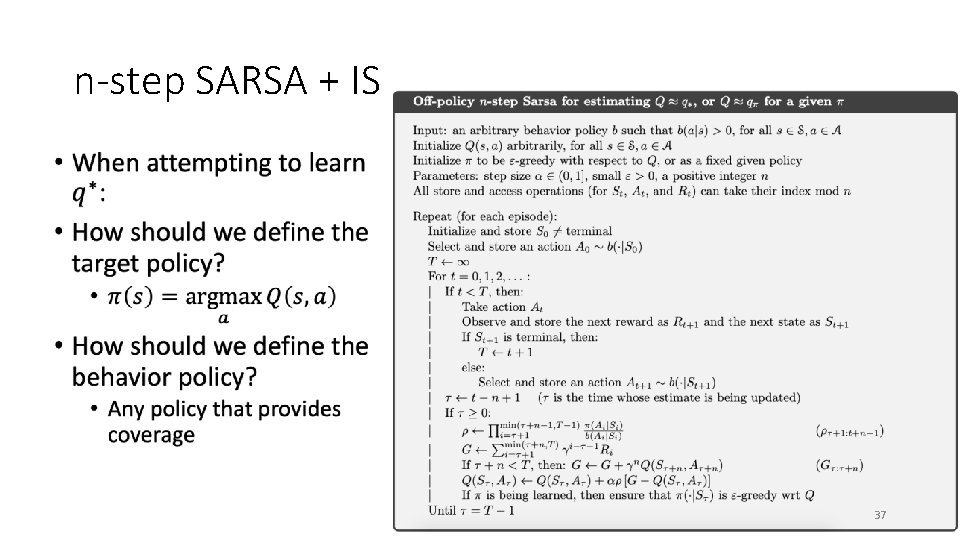

n-step SARSA + IS • 37

What did we learn? • TD learning results in slow propagation of future rewards across the state space • Especially problematic in sparse reward settings • MC, on the other hand, suffers from high variance in observed returns • Especially problematic in stochastic environments and long episodes • TD learning with n-step return gaps these two extremes • The best n is domain specific and is usually chosen empirically • Careful! Like MC, it is off-policy (use IS when needed) 38

What next? • Class: Learning and using the model • Assignments: • Tabular Q-Learning • SARSA • Due by Thursday, Mar. 11, 23: 59, through Canvas • Quiz (on Canvas): • n-step Bootstrapping • By Monday Mar. 8, 23: 59 • Project: • Start writing the project proposal document (due Friday, March 19 th at 11: 59 pm) 39