Instruction Level Parallelism: Scalar Processors, the Model So Far

- Slides: 21

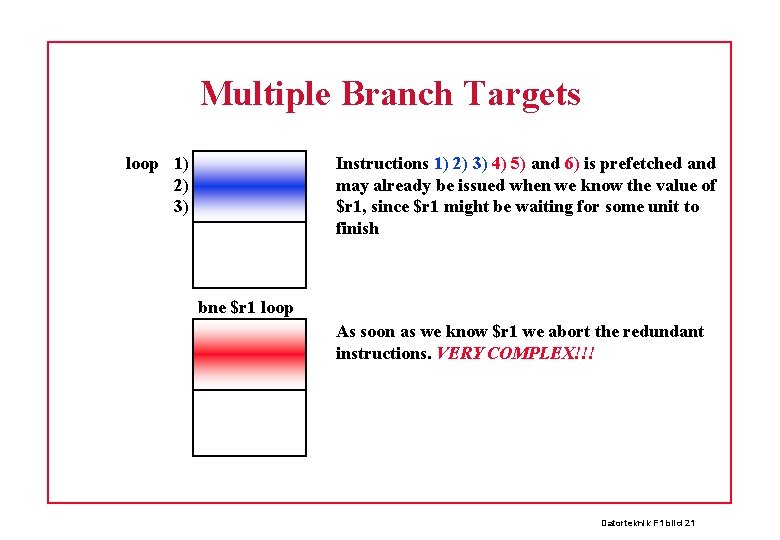

Instruction Level Parallelism

- Scalar processors: the model so far
- Superscalar: multiple execution units in parallel
- VLIW: multiple instructions read in parallel

Datorteknik F1, slide 1

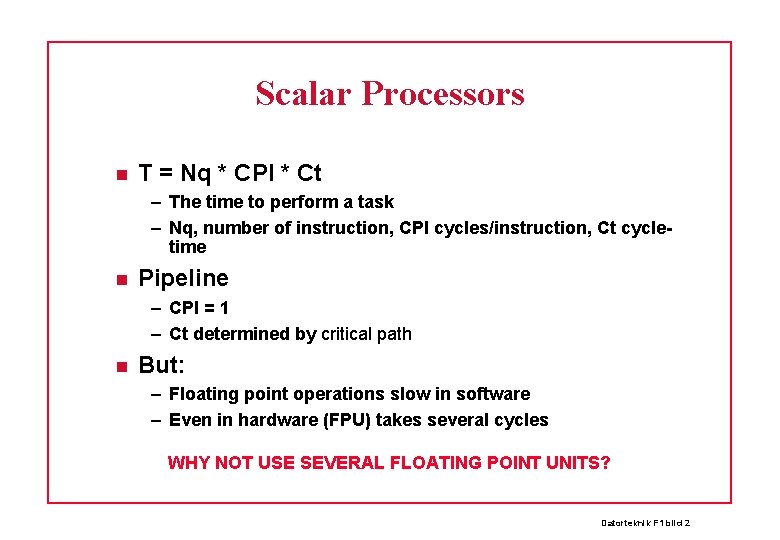

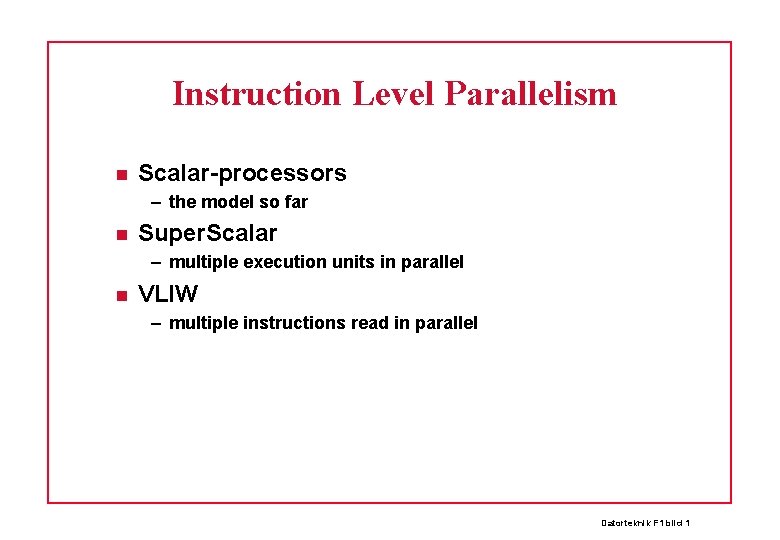

Scalar Processors

- $T = N_q \cdot \mathit{CPI} \cdot C_t$ is the time to perform a task, where $N_q$ is the number of instructions, $\mathit{CPI}$ the number of cycles per instruction and $C_t$ the cycle time.
- Pipeline:
  - $\mathit{CPI} = 1$
  - $C_t$ is determined by the critical path
- But:
  - floating-point operations are slow in software
  - even in hardware (an FPU) they take several cycles

WHY NOT USE SEVERAL FLOATING-POINT UNITS?
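As a rough worked example of T = Nq * CPI * Ct, the sketch below compares an ideal pipeline (CPI = 1) with one where floating-point operations stall. The instruction count, cycle time, fraction of FP instructions and stall length are made-up numbers chosen for illustration, not figures from the lecture.

```python
def execution_time(n_q, cpi, c_t):
    """T = Nq * CPI * Ct: instructions x cycles per instruction x cycle time (s)."""
    return n_q * cpi * c_t

# Hypothetical workload: one million instructions, 2 ns cycle time.
N_Q = 1_000_000
C_T = 2e-9

# Ideal pipeline: CPI = 1.
t_pipeline = execution_time(N_Q, 1.0, C_T)

# Assumption: 20 % of the instructions are floating-point operations that
# each stall a single FPU for 4 extra cycles, raising the average CPI.
FP_FRACTION = 0.20
FP_EXTRA_CYCLES = 4
cpi_with_fpu = 1.0 + FP_FRACTION * FP_EXTRA_CYCLES
t_fpu = execution_time(N_Q, cpi_with_fpu, C_T)

print(f"pipeline: {t_pipeline * 1e3:.2f} ms")   # pipeline: 2.00 ms
print(f"with FPU stalls: {t_fpu * 1e3:.2f} ms")  # with FPU stalls: 3.60 ms
```

If those extra FP cycles could overlap in several floating-point units, and there is enough independent work, the effective CPI would move back toward 1, which is the motivation for the question on the slide.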

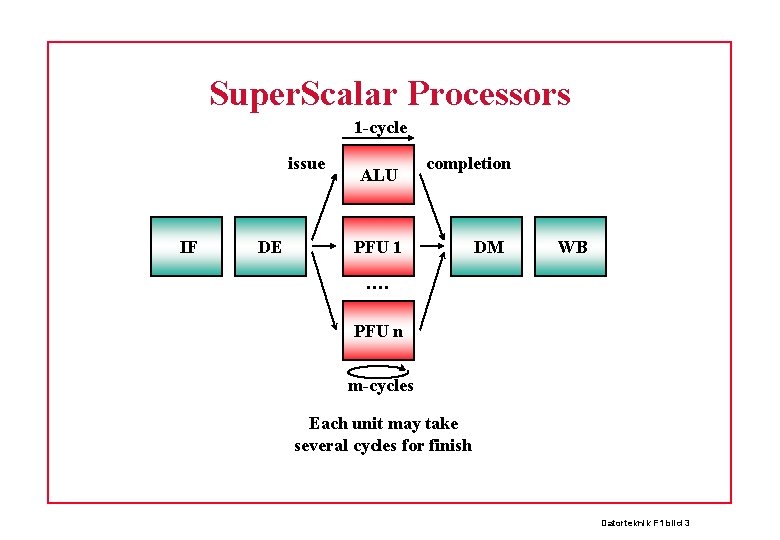

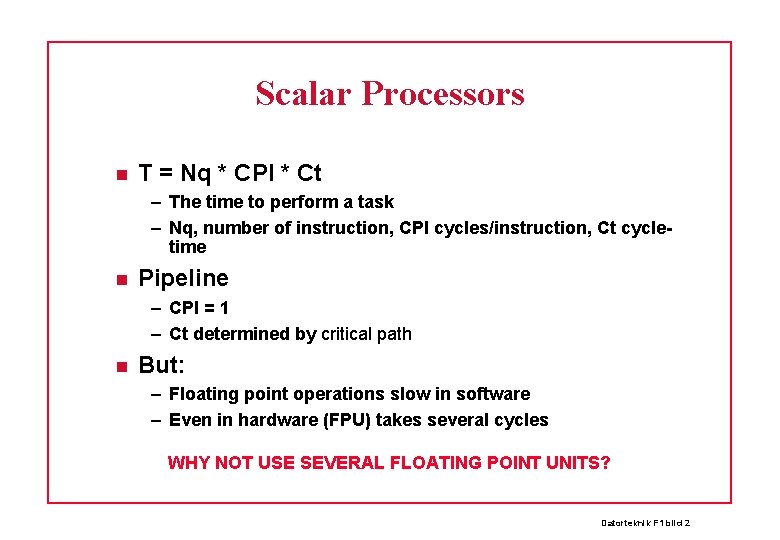

Superscalar Processors

Figure: superscalar pipeline. After IF and DE (1-cycle issue), instructions go to parallel units (ALU, PFU 1 … PFU n) that may need m cycles, and then through DM and WB (completion).

Each unit may take several cycles to finish.

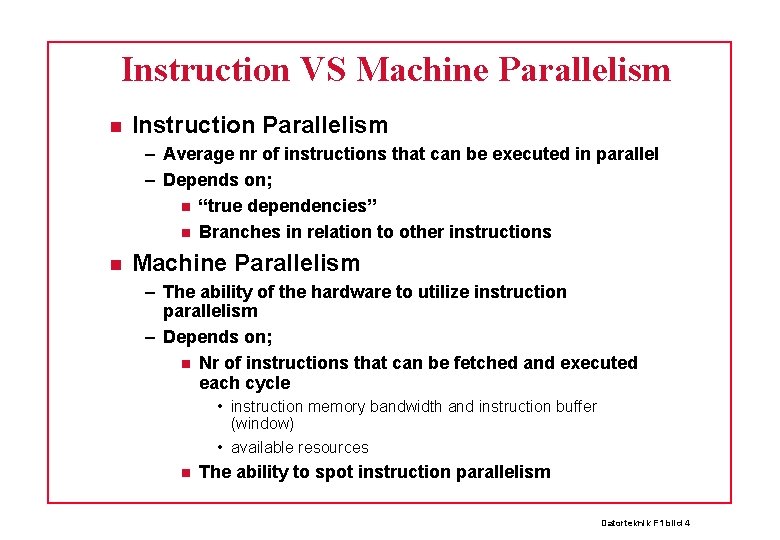

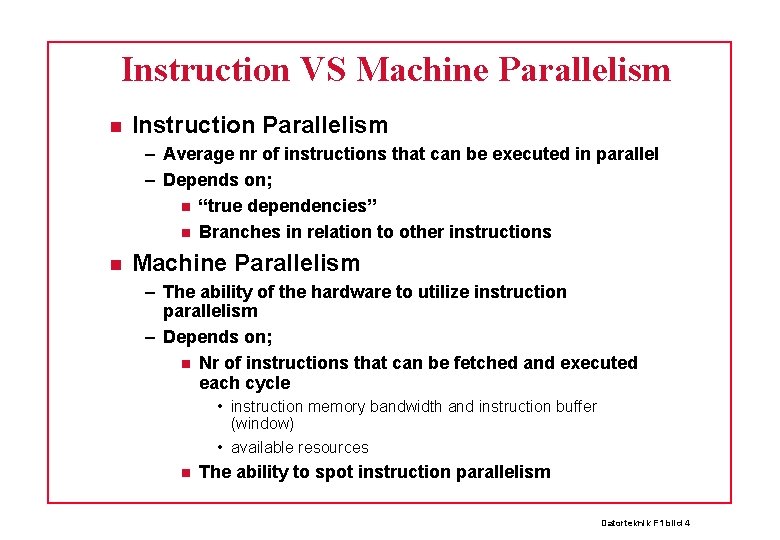

Instruction vs. Machine Parallelism

- Instruction parallelism: the average number of instructions that can be executed in parallel. It depends on:
  - "true dependencies"
  - branches in relation to other instructions
- Machine parallelism: the ability of the hardware to utilize instruction parallelism. It depends on:
  - the number of instructions that can be fetched and executed each cycle
    - instruction memory bandwidth and instruction buffer (window)
    - available resources
  - the ability to spot instruction parallelism

Example: one-instruction lookahead (“prefetch”)

1) `add $t0, $t1, $t2`
2) `addi $t0, $t0, 1` (depends on instruction 1)
3) `sub $t3, $t1, $t2`
4) `subi $t3, $t3, 1` (depends on instruction 3)

Instructions 1) and 2) are dependent, as are 3) and 4). Looking one instruction ahead, however, we find that 1) and 3) are independent, so they can be executed concurrently (and likewise 2) and 4)).
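A small sketch of how the size of the instruction window (the lookahead) changes what can be issued together, using the four instructions from the example. The (destination, sources) encoding, the `depends` and `schedule` helpers, the one-cycle latency and the greedy in-window policy are all simplifying assumptions for illustration, not a description of a real processor.

```python
# Each instruction: (destination register, tuple of source registers).
PROGRAM = [
    ("$t0", ("$t1", "$t2")),  # 1) add  $t0, $t1, $t2
    ("$t0", ("$t0",)),        # 2) addi $t0, $t0, 1
    ("$t3", ("$t1", "$t2")),  # 3) sub  $t3, $t1, $t2
    ("$t3", ("$t3",)),        # 4) subi $t3, $t3, 1
]


def depends(earlier, later):
    """True if `later` must wait for `earlier` (RAW, WAR or WAW)."""
    e_dest, e_srcs = earlier
    l_dest, l_srcs = later
    return e_dest in l_srcs or l_dest in e_srcs or e_dest == l_dest


def schedule(program, window, width):
    """Greedy issue with an instruction window.

    Each cycle, look at the `window` oldest unissued instructions and issue
    up to `width` of them whose predecessors have all issued in earlier
    cycles. Every instruction is assumed to take exactly one cycle.
    Returns a list of cycles, each a list of 1-based instruction numbers.
    """
    issued = set()
    cycles = []
    while len(issued) < len(program):
        candidates = [i for i in range(len(program)) if i not in issued][:window]
        this_cycle = []
        for i in candidates:
            if len(this_cycle) == width:
                break
            if all(j in issued for j in range(i) if depends(program[j], program[i])):
                this_cycle.append(i)
        issued.update(this_cycle)
        cycles.append([i + 1 for i in this_cycle])
    return cycles


print(schedule(PROGRAM, window=1, width=2))  # [[1], [2], [3], [4]]
print(schedule(PROGRAM, window=3, width=2))  # [[1, 3], [2, 4]]
```

With a window of one, two execution units are useless here; a window wide enough to see past the blocked instruction 2) lets 1) and 3) go together. Since there is no register renaming in this sketch, WAR and WAW are treated as ordering constraints too.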

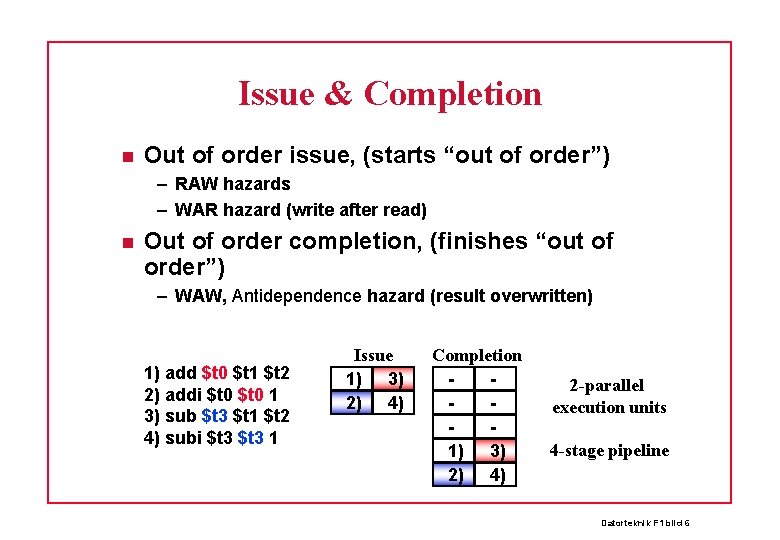

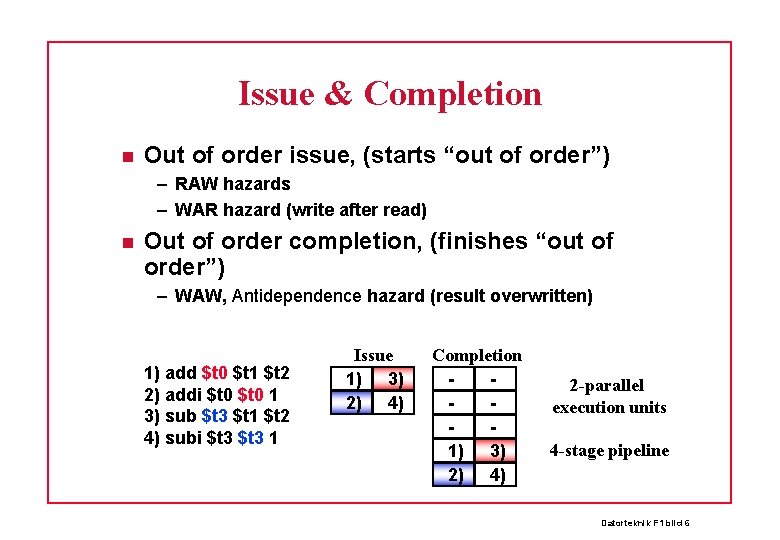

Issue & Completion

- Out-of-order issue (instructions start "out of order"):
  - RAW hazards (read after write, true dependence)
  - WAR hazards (write after read, antidependence)
- Out-of-order completion (instructions finish "out of order"):
  - WAW hazards (write after write, output dependence): a newer result can be overwritten by an older instruction's result

Example with 2 parallel execution units and a 4-stage pipeline:

1) `add $t0, $t1, $t2`
2) `addi $t0, $t0, 1`
3) `sub $t3, $t1, $t2`
4) `subi $t3, $t3, 1`

Issue order: 1) 3) 2) 4). Completion order: 1) 3) 2) 4).
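To check that issuing the example in the order 1) 3) 2) 4) is safe, one can classify the hazards between pairs of instructions in program order. The `hazards` helper below is hypothetical and assumes a simple model where each instruction is a destination register plus a tuple of source registers; immediates are ignored.

```python
def hazards(earlier, later):
    """Classify the data hazards between two instructions in program order.

    Each instruction is (destination register, tuple of source registers).
    """
    e_dest, e_srcs = earlier
    l_dest, l_srcs = later
    found = set()
    if e_dest in l_srcs:
        found.add("RAW")  # later reads what earlier writes (true dependence)
    if l_dest in e_srcs:
        found.add("WAR")  # later overwrites what earlier still reads (antidependence)
    if e_dest == l_dest:
        found.add("WAW")  # both write the same register (output dependence)
    return found


i1 = ("$t0", ("$t1", "$t2"))  # 1) add  $t0, $t1, $t2
i2 = ("$t0", ("$t0",))        # 2) addi $t0, $t0, 1
i3 = ("$t3", ("$t1", "$t2"))  # 3) sub  $t3, $t1, $t2
i4 = ("$t3", ("$t3",))        # 4) subi $t3, $t3, 1

print(sorted(hazards(i1, i2)))  # ['RAW', 'WAW']
print(sorted(hazards(i2, i3)))  # []
```

Since 2) and 3) share no hazard, swapping them is safe, while the pairs 1) before 2) and 3) before 4) must keep their order.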

Tomasulo’s Algorithm

Program:

1) `mul $r1, 2, 3`
2) `mul $r2, $r1, 4`
3) `mul $r2, 5, 6`

Figure: pipeline IF, DE, DM, WB with three units A, B and C after DE, all IDLE.

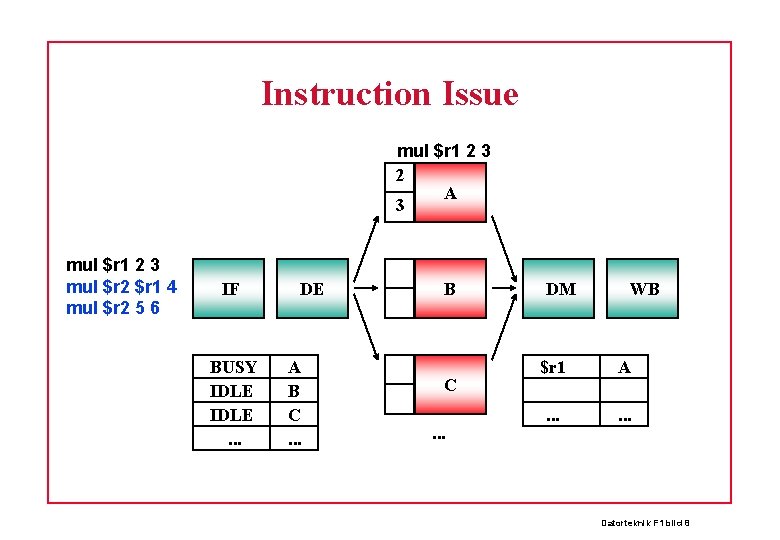

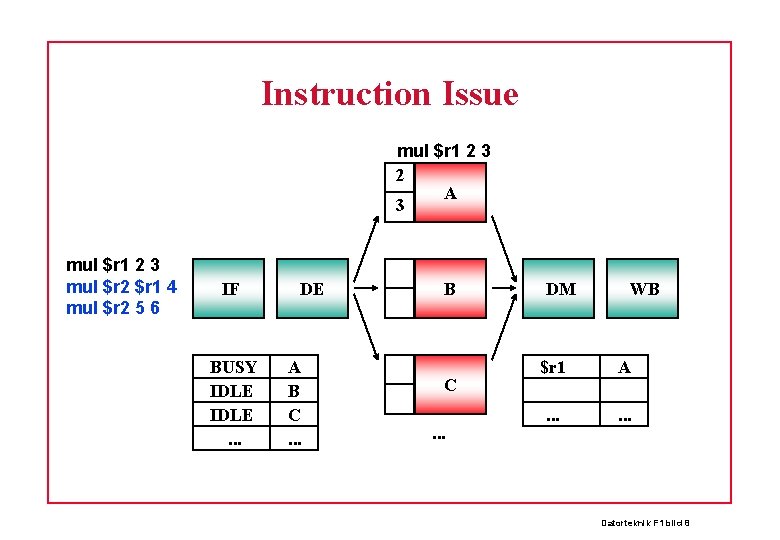

Instruction Issue

`mul $r1, 2, 3` is issued to unit A with the operands 2 and 3. A becomes BUSY (B and C stay IDLE), and the register status records that `$r1` will be written by A.

Instruction Issue

`mul $r2, $r1, 4` is issued to unit B. Its operand `$r1` is not ready yet, so B receives the tag A instead of a value (`mul $r2, A, 4`) and WAITs for A. The register status now records that `$r1` will be written by A and `$r2` by B.

Instruction Issue

`mul $r2, 5, 6` is issued to unit C, which becomes BUSY. The register status for `$r2` changes from B to C: register `$r2` gets the newer value, the one from C.

Clock until A and C finish

A finishes and writes 6 to `$r1`; C finishes and writes 30 to `$r2`. Both return to IDLE. The value 6 is forwarded to B, which now computes `mul $r2, 6, 4` and is BUSY.

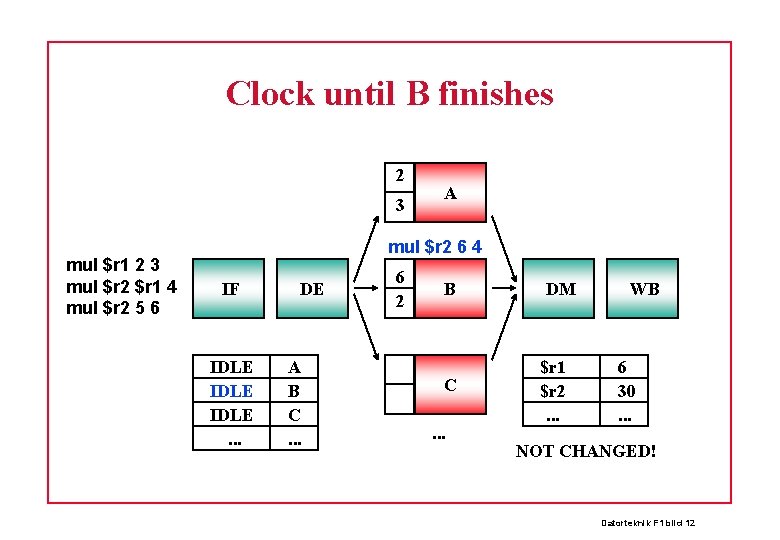

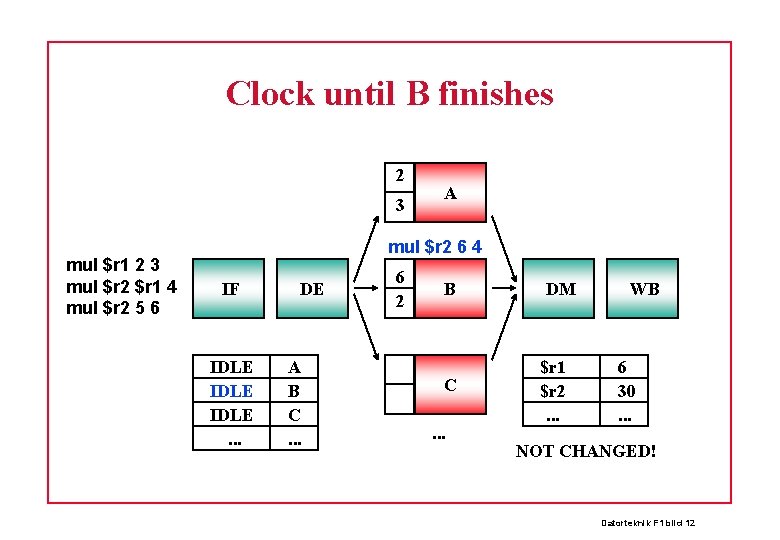

Clock until B finishes

B computes 6 × 4 = 24, but `$r2` is NOT CHANGED and keeps the value 30: the register status shows that the newest value of `$r2` came from C, not from B. All units are IDLE.
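The walk-through on the Tomasulo slides can be replayed with a much simplified sketch of tag-based issue. Assumptions: each of the units A, B and C acts as both reservation station and multiplier (as drawn on the slides), every instruction is a two-operand `mul`, and the completion order is chosen by hand. The class and method names are hypothetical; a real implementation adds a common data bus, several stations per unit and more.

```python
class Tomasulo:
    """Simplified Tomasulo-style tag tracking for the three-unit example."""

    def __init__(self, units):
        self.regs = {}        # architectural register values
        self.reg_status = {}  # register -> unit that will write its newest value
        # unit -> [dest, operands] while BUSY/WAIT, or None when IDLE;
        # each operand is ("val", number) or ("tag", producing unit)
        self.stations = {u: None for u in units}

    def issue(self, unit, dest, *srcs):
        ops = []
        for s in srcs:
            if isinstance(s, str) and s in self.reg_status:
                ops.append(("tag", self.reg_status[s]))  # wait for producer
            elif isinstance(s, str):
                ops.append(("val", self.regs[s]))        # read register file
            else:
                ops.append(("val", s))                   # immediate operand
        self.stations[unit] = [dest, ops]
        self.reg_status[dest] = unit                     # newest writer wins

    def ready(self, unit):
        st = self.stations[unit]
        return st is not None and all(kind == "val" for kind, _ in st[1])

    def complete(self, unit):
        if not self.ready(unit):
            raise ValueError(f"unit {unit} cannot finish yet")
        dest, ops = self.stations[unit]
        result = ops[0][1] * ops[1][1]  # every unit multiplies here
        self.stations[unit] = None      # unit returns to IDLE
        # Broadcast: any station waiting on this unit's tag grabs the value.
        for st in self.stations.values():
            if st is not None:
                st[1] = [("val", result) if op == ("tag", unit) else op
                         for op in st[1]]
        # Write the register only if this unit is still its newest writer.
        if self.reg_status.get(dest) == unit:
            self.regs[dest] = result
            del self.reg_status[dest]
        return result


m = Tomasulo("ABC")
m.issue("A", "$r1", 2, 3)       # mul $r1, 2, 3
m.issue("B", "$r2", "$r1", 4)   # mul $r2, $r1, 4  -> B holds tag A
m.issue("C", "$r2", 5, 6)       # mul $r2, 5, 6    -> $r2 now tagged C
m.complete("A")                 # $r1 = 6, forwarded to B
m.complete("C")                 # $r2 = 30
b_result = m.complete("B")      # 24, but $r2 is not overwritten
print(m.regs)                   # {'$r1': 6, '$r2': 30}
```

The check in `complete` is what prevents the WAW hazard: B's late result is computed and broadcast, but it never overwrites the newer value of `$r2`.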

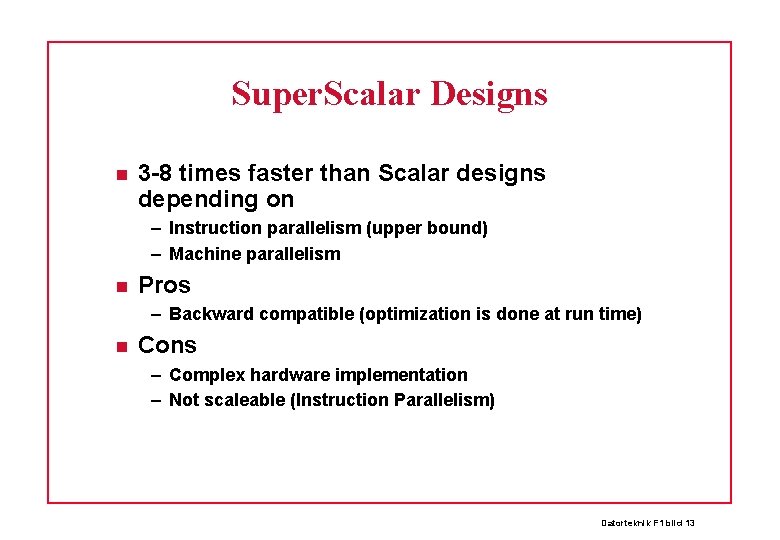

Superscalar Designs

- 3 to 8 times faster than scalar designs, depending on:
  - instruction parallelism (upper bound)
  - machine parallelism
- Pros:
  - backward compatible (optimization is done at run time)
- Cons:
  - complex hardware implementation
  - not scalable (limited by instruction parallelism)

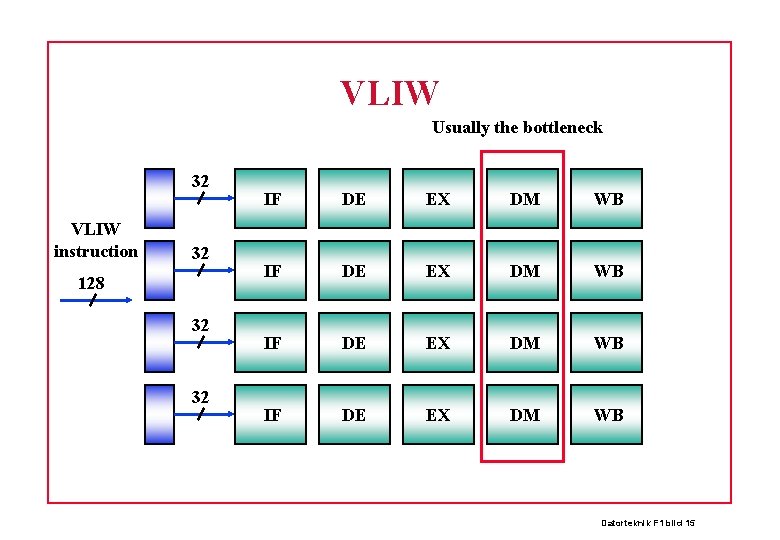

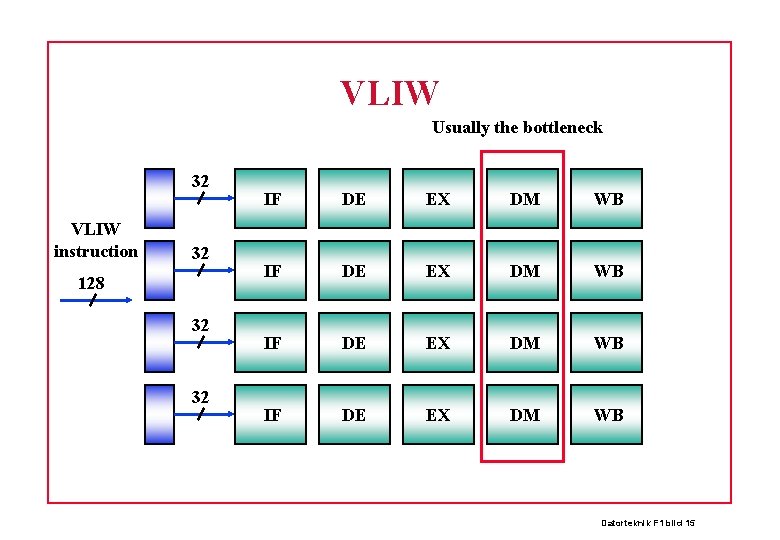

VLIW

Why not let the compiler do the work? Use a Very Long Instruction Word (VLIW):

- It consists of several instructions to be executed in parallel.
- Each time we read one VLIW instruction, we actually issue all the instructions contained in it.

VLIW

Figure: VLIW pipeline (IF, DE, EX, DM, WB) in which one 128-bit VLIW instruction holds four 32-bit instructions; instruction fetch is marked as usually the bottleneck.

VLIW

- Let the compiler do the instruction issuing.
  - It can take its time, since this is done only once, so ADVANCED methods can be used.
- What if we change the architecture?
  - Recompile the code.
  - This could be done the first time a program is loaded, so it is only recompiled when the architecture has changed.
- We could also tell the compiler about the cache configuration: number of levels, line size, number of lines, replacement strategy, write-back/write-through, etc.

Hot research area!
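With VLIW the compiler, not the hardware, decides what goes into each long instruction word. A minimal sketch of that idea, reusing the four-instruction example from earlier and assuming four 32-bit slots per word (as in the 128-bit figure), one-cycle operations, no register renaming and a simple greedy packer. `pack`, `depends` and the NOP padding are illustrative choices, not a real compiler's algorithm.

```python
NOP = "nop"

# Each instruction: (assembly text, destination register, source registers).
PROGRAM = [
    ("add $t0, $t1, $t2", "$t0", ("$t1", "$t2")),
    ("addi $t0, $t0, 1", "$t0", ("$t0",)),
    ("sub $t3, $t1, $t2", "$t3", ("$t1", "$t2")),
    ("subi $t3, $t3, 1", "$t3", ("$t3",)),
]


def depends(earlier, later):
    """True if `later` has a RAW, WAR or WAW dependence on `earlier`."""
    _, e_dest, e_srcs = earlier
    _, l_dest, l_srcs = later
    return e_dest in l_srcs or l_dest in e_srcs or e_dest == l_dest


def pack(program, width):
    """Greedy compile-time packing into VLIW words of `width` slots."""
    words = []   # each word is a list of instruction texts
    placed = []  # index of the word each instruction ended up in
    for i, ins in enumerate(program):
        # Must come after every word holding an instruction it depends on.
        earliest = max((placed[j] + 1 for j in range(i)
                        if depends(program[j], ins)), default=0)
        k = earliest
        while k < len(words) and len(words[k]) == width:
            k += 1  # word full: try the next one
        if k == len(words):
            words.append([])
        words[k].append(ins[0])
        placed.append(k)
    # Unused slots are filled with NOPs so every word has `width` slots.
    return [w + [NOP] * (width - len(w)) for w in words]


for word in pack(PROGRAM, width=4):
    print(" | ".join(word))
# add $t0, $t1, $t2 | sub $t3, $t1, $t2 | nop | nop
# addi $t0, $t0, 1 | subi $t3, $t3, 1 | nop | nop
```

The NOPs show the cost of limited instruction parallelism: here only half of each 128-bit word does useful work, and the hardware needs no dependence checking because the compiler already did it.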

VLIW

- Pros:
  - we get high bandwidth to instruction memory
  - cheap compared to superscalar: not much extra hardware needed
  - more parallelism:
    - we spot parallelism at a higher level (C, Modula, Java?)
    - we can use advanced algorithms for optimization
  - new architectures can be utilized by recompilation
- Cons:
  - software compatibility
  - it has not “HIT THE MARKET” (yet)

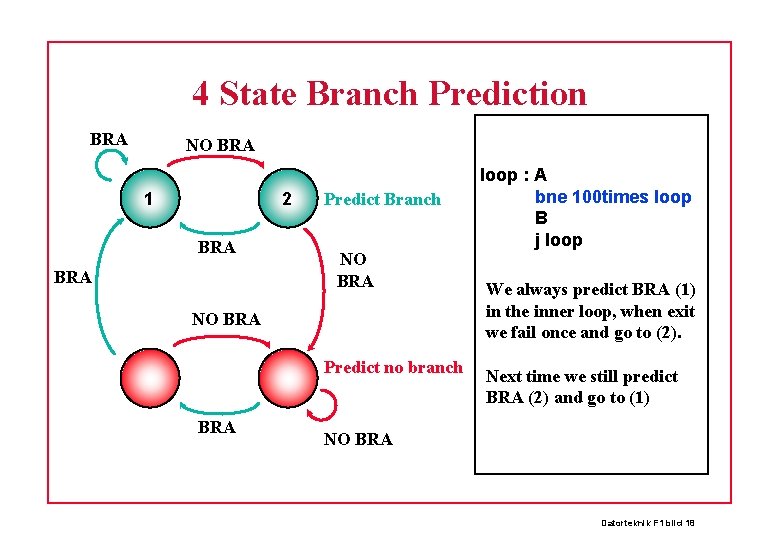

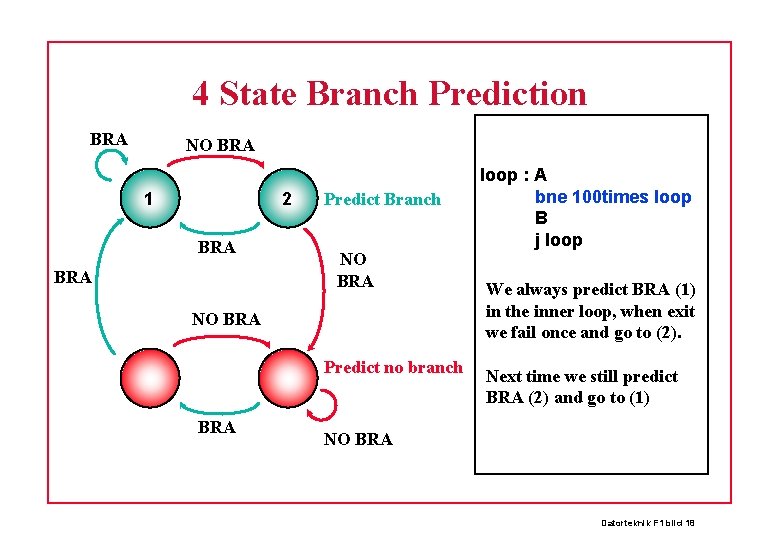

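4-State Branch Prediction

Figure: state diagram with four states. In states 1 and 2 we predict branch (BRA); in the other two states we predict no branch (NO BRA). Transitions are labelled BRA (branch taken) and NO BRA (branch not taken).

Example code: `loop: A`, then `bne …, loop` (taken until the loop has run 100 times), then `B` and `j loop`.

We always predict BRA (state 1) in the inner loop. When the loop exits, we mispredict once and move to state 2. The next time, we still predict BRA (state 2), and the taken branch moves us back to state 1, so each pass through the inner loop costs only one misprediction.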
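The 4-state scheme can be sketched as a 2-bit saturating counter and compared with a 1-bit predictor that only remembers the last outcome. The figure's transitions are not fully recoverable, so this assumes the common saturating-counter transitions, with the slide's states 1 and 2 mapped to counter values 3 and 2. The branch trace (inner loop of 100 iterations, outer loop run 10 times) is a made-up example.

```python
def mispredictions(outcomes, bits):
    """Count mispredictions of an n-bit saturating-counter predictor.

    bits=1: remember the last outcome. bits=2: the 4-state scheme, where
    the two highest states predict "branch taken".
    """
    max_state = (1 << bits) - 1       # 1 for 1 bit, 3 for 2 bits
    threshold = (max_state + 1) // 2  # state >= threshold -> predict taken
    state = max_state                 # start in "strongly taken"
    misses = 0
    for taken in outcomes:
        if (state >= threshold) != taken:
            misses += 1
        # Move one step toward the actual outcome, saturating at the ends.
        state = min(state + 1, max_state) if taken else max(state - 1, 0)
    return misses


# Inner-loop bne: taken 99 times, then falls through once; outer loop runs 10 times.
inner = [True] * 99 + [False]
trace = inner * 10

print(mispredictions(trace, bits=1))  # 19: two misses per pass after the first
print(mispredictions(trace, bits=2))  # 10: one miss per pass
```

The 1-bit predictor pays twice per pass (at the loop exit and again on re-entry), while the 2-bit version only drops to the "weak" taken state on exit and recovers on the next taken branch.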

Branch Prediction

- The 4 states are stored in 2 bits in the instruction cache, together with the conditional branch instruction.
- We predict the branch:
  - we prefetch the predicted instructions
  - we issue them before we know whether the branch is taken!
- When the prediction fails, we abort the issued instructions.

Branch Prediction

Example: instructions 1) 2) 3) start at `loop`, followed by `bne $r1, loop`, which is predicted taken; instructions 4) 5) 6) follow the branch.

Instructions 1), 2) and 3) are prefetched and may already be issued by the time the value of `$r1` is known, since `$r1` might be waiting for some unit to finish. If the prediction fails, we have to abort the issued instructions and start fetching 4), 5) and 6).
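The abort-on-misprediction rule can be sketched as a small function that issues the predicted path first and decides what to keep once the branch outcome is known. The function name and the list-of-labels model are hypothetical simplifications; real hardware tracks speculative instructions in structures such as a reorder buffer.

```python
def resolve_branch(predicted_taken, actual_taken, taken_path, fallthrough_path):
    """Speculative issue past a conditional branch (simplified sketch).

    The predicted path is issued before the branch condition is known.
    Returns (aborted, to_execute): the speculatively issued instructions that
    must be aborted, and the instructions that end up being executed.
    """
    issued = list(taken_path if predicted_taken else fallthrough_path)
    if predicted_taken == actual_taken:
        return [], issued  # correct prediction: the early work is kept
    # Misprediction: abort everything issued on the wrong path and
    # start fetching the other path instead.
    correct = list(taken_path if actual_taken else fallthrough_path)
    return issued, correct


loop_body = ["1)", "2)", "3)"]   # instructions at label loop
after_loop = ["4)", "5)", "6)"]  # instructions after bne $r1, loop

print(resolve_branch(True, True, loop_body, after_loop))
# ([], ['1)', '2)', '3)'])
print(resolve_branch(True, False, loop_body, after_loop))
# (['1)', '2)', '3)'], ['4)', '5)', '6)'])
```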

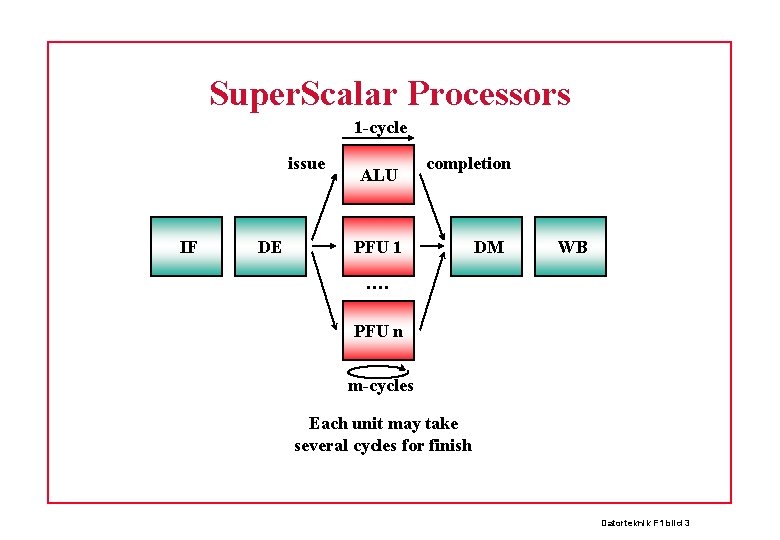

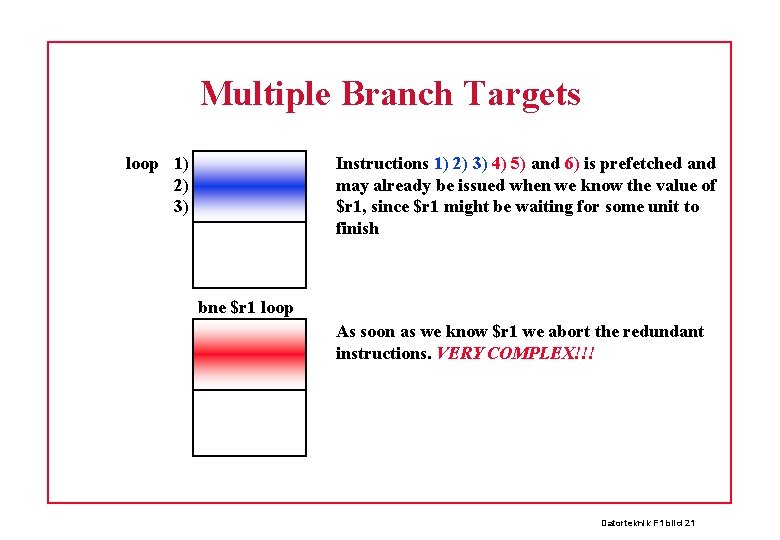

Multiple Branch Targets

Example: the same loop, with 1) 2) 3) at `loop`, then `bne $r1, loop`, then 4) 5) 6).

Instructions 1) to 6), from both branch targets, are prefetched and may already be issued by the time the value of `$r1` is known, since `$r1` might be waiting for some unit to finish. As soon as we know `$r1`, we abort the redundant instructions on the path not taken. VERY COMPLEX!!!