
Institute of Cognitive Sciences and Technologies - CNR
Department of Phonetics and Dialectology - Padova

Emotions and Voice Quality: Experiments with Sinusoidal Modeling

Authors: Carlo Drioli, Graziano Tisato, Piero Cosi, Fabio Tesser

Voice quality: functions, analysis and synthesis
VOQUAL’03, Geneva, August 27-29, 2003

Outline
§ Objectives and motivations
§ Voice material
§ Acoustic indexes and statistical analysis
§ Neutral to emotive utterance mapping
§ Experimental results

Objectives and motivations
§ Long-term goals:
- emotive speech analysis/synthesis
- improvement of ASR/TTS systems
§ Short-term goal:
- preliminary evaluation of processing tools for the reproduction of different voice qualities
§ Focus of talk:
- analysis/synthesis of different voice qualities corresponding to different emotive intentions
§ Method:
- analysis of voice quality acoustic correlates
- definition of a sinusoidal modeling framework to control voice timbre and phonation quality

Voice material
An emotive voice corpus was recorded with the following characteristics:
§ two phonological structures ’VCV: /’aba/ and /’ava/.
§ neutral (N) plus six emotional states: anger (A), joy (J), fear (F), sadness (SA), disgust (D), surprise (SU).
§ 1 speaker, 7 recordings for each emotive intention, for each word.

Analysis of emotive speech: acoustic correlates
Cue extraction and analysis:
• Intensity, duration, pitch range, formants.
• F0 stressed vowel mean and F0 mid values are strongly correlated.
[Figure: F0 mean (global and for stressed vowel), F0 “mid”, and F0 range for anger (A), joy (J), fear (F), sadness (SA), disgust (D), surprise (SU), and neutral (N)]

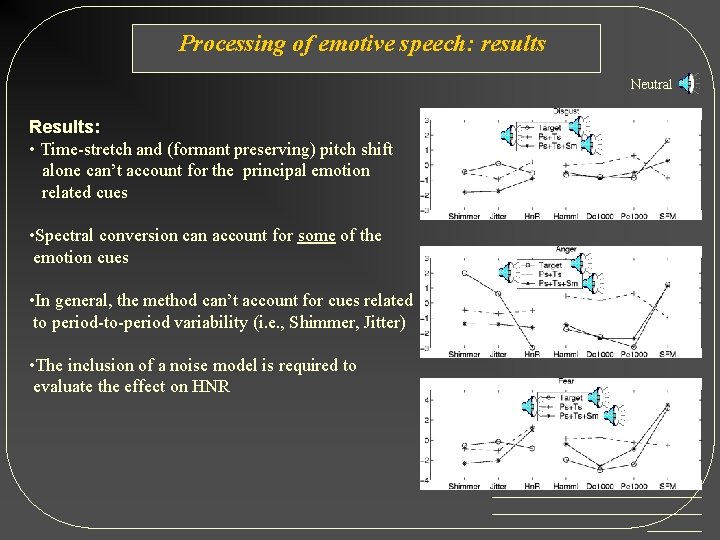

Analysis of emotive speech: acoustic correlates
Cue extraction and analysis (acoustic correlates of voice quality):
• Shimmer, Jitter
• HNR
• Hammarberg’s index (Hamm. I): difference between energy max in the 0-2000 Hz and 2000-5000 Hz frequency bands
• Spectral flatness (SFM): ratio of the geometric to the arithmetic mean
• Drop-off of spectral energy above 1000 Hz (Do1000): LS approx. of the spectral tilt above 1000 Hz
• High- versus low-frequency range relative energy amount (Pe1000)
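The spectral indexes listed on this slide can be illustrated with a minimal NumPy sketch computed on a single analysis frame. This is not the authors' implementation: the function name `spectral_indexes`, the Hann window, the dB scale, the slope unit (dB per kHz), and in particular the exact form of Pe1000 (taken here as the energy above 1000 Hz relative to the energy below, in dB) are all assumptions made for illustration.

```python
import numpy as np

def spectral_indexes(frame, sr):
    """Illustrative voice-quality indexes for one frame (assumed definitions)."""
    frame = np.asarray(frame, dtype=float)
    windowed = frame * np.hanning(len(frame))
    # Power spectrum with a small floor so that log() is always defined
    power = np.abs(np.fft.rfft(windowed)) ** 2 + 1e-12
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sr)
    level_db = 10.0 * np.log10(power)

    # Hammarberg index: max level in 0-2000 Hz minus max level in 2000-5000 Hz
    low = freqs < 2000.0
    high = (freqs >= 2000.0) & (freqs <= 5000.0)
    hammarberg_db = level_db[low].max() - level_db[high].max()

    # Spectral flatness: geometric mean over arithmetic mean of the power spectrum
    sfm = np.exp(np.mean(np.log(power))) / np.mean(power)

    # Do1000: slope of a least-squares line fitted to the dB spectrum above 1000 Hz
    above = freqs > 1000.0
    slope_db_per_khz, _ = np.polyfit(freqs[above] / 1000.0, level_db[above], 1)

    # Pe1000 (assumed form): energy above 1000 Hz relative to energy below, in dB
    pe1000_db = 10.0 * np.log10(power[above].sum() / power[~above].sum())

    return {
        "hammarberg_db": hammarberg_db,
        "sfm": sfm,
        "do1000_db_per_khz": slope_db_per_khz,
        "pe1000_db": pe1000_db,
    }
```

For a frame sampled at 16 kHz, `spectral_indexes(frame, 16000)` returns a dictionary of the four values. A strongly harmonic frame gives an SFM close to 0, while a noise-like frame gives a markedly higher value.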

Analysis of emotive speech: voice quality
Voice quality characterization:
Voice quality patterns (distance from Neutral):
• Anger: harsh voice (/’a/)
• Disgust: creaky voice (/a/)
• Joy, Fear, Surprise: breathy voice
Discriminant analysis (classification matrix for stressed vowel):
• Classification scores: 60/70% for stressed and unstressed vowel
• Best score: Fear, Anger

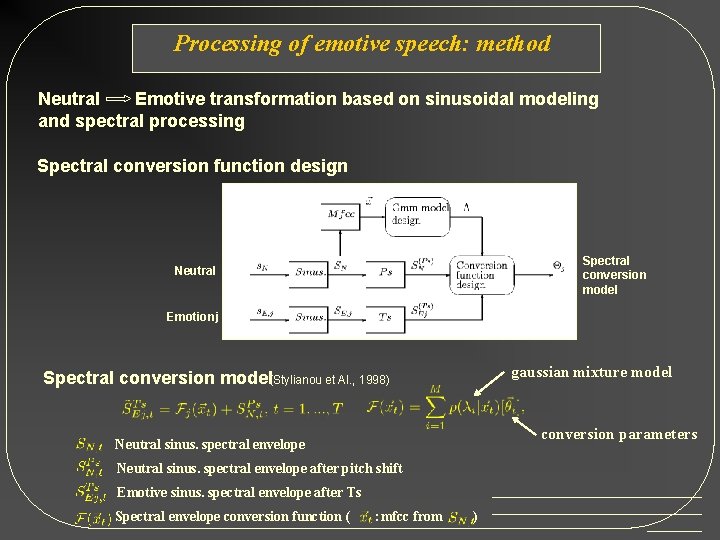

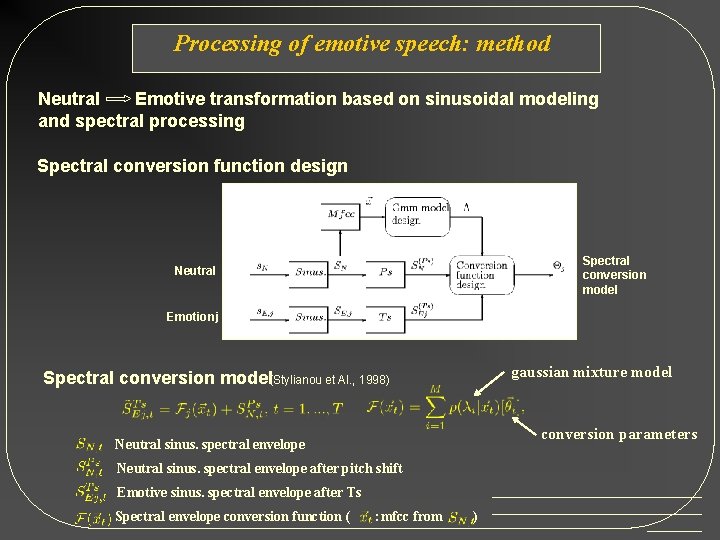

Processing of emotive speech: method
Neutral → Emotive transformation based on sinusoidal modeling and spectral processing
Spectral conversion function design:
• Spectral conversion model, Neutral → Emotion j (Stylianou et al., 1998): Gaussian mixture model, conversion parameters
• Neutral sinusoidal spectral envelope after pitch shift
• Emotive sinusoidal spectral envelope after Ts
• Spectral envelope conversion function, with MFCCs as arguments
[Block diagram and conversion-function equation not recoverable from the extracted slide]
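The conversion function itself did not survive on this slide. As a hedged illustration, the sketch below applies the general form of GMM-based conversion function associated with Stylianou et al. (1998), F(x) = Σ_i P(C_i | x) [ν_i + Γ_i Σ_i⁻¹ (x − μ_i)], to one feature vector such as an MFCC frame. The names `gmm_posteriors` and `convert` and the parameter layout are assumptions, and training of the parameters (GMM fitting and the least-squares estimate of ν_i and Γ_i) is not shown.

```python
import numpy as np

def gmm_posteriors(x, weights, means, covs):
    """Posterior probability P(C_i | x) of each Gaussian component."""
    d = x.shape[0]
    log_p = np.empty(len(weights))
    for i in range(len(weights)):
        diff = x - means[i]
        _, logdet = np.linalg.slogdet(covs[i])
        maha = diff @ np.linalg.solve(covs[i], diff)
        log_p[i] = np.log(weights[i]) - 0.5 * (d * np.log(2 * np.pi) + logdet + maha)
    log_p -= log_p.max()          # stabilise the exponentials
    p = np.exp(log_p)
    return p / p.sum()

def convert(x, weights, means, covs, nu, gamma):
    """Map a source vector x to the target space with a trained GMM.

    weights: (m,), means: (m, d), covs: (m, d, d) describe the source GMM;
    nu: (m, d) and gamma: (m, d, d) are the conversion parameters.
    """
    x = np.asarray(x, dtype=float)
    means, covs = np.asarray(means, float), np.asarray(covs, float)
    nu, gamma = np.asarray(nu, float), np.asarray(gamma, float)
    post = gmm_posteriors(x, np.asarray(weights, float), means, covs)
    y = np.zeros(nu.shape[1])
    for i in range(len(post)):
        # Component-wise linear regression, weighted by the posterior
        y += post[i] * (nu[i] + gamma[i] @ np.linalg.solve(covs[i], x - means[i]))
    return y
```

With ν_i = μ_i and Γ_i = Σ_i the function reduces to the identity mapping, which is a convenient sanity check before plugging in trained parameters.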

Processing of emotive speech: method
Neutral → Emotive transformation based on trained model
[Examples: Disgust (Ps+Ts), Disgust, Target Disgust; Sadness (Ps+Ts), Sadness, Target Sadness]
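Reading Ps and Ts as pitch shift and time stretch, the idea of a formant-preserving pitch shift in a sinusoidal model can be sketched as follows: the harmonic amplitudes of a frame define a spectral envelope, and the shifted harmonics are given the amplitudes that this envelope takes at their new frequencies. This is a simplified single-frame sketch under assumptions (purely harmonic partials, linear interpolation of log-amplitudes, phases ignored), not the processing chain used by the authors. The function names are hypothetical.

```python
import numpy as np

def pitch_shift_frame(f0, amps, ratio):
    """Shift f0 by `ratio` while keeping the spectral envelope in place.

    amps holds strictly positive amplitudes of harmonics 1..K of f0.
    """
    amps = np.asarray(amps, dtype=float)
    harm_freqs = f0 * np.arange(1, len(amps) + 1)
    new_f0 = f0 * ratio
    # Keep only new harmonics that fall inside the analysed band
    n_new = int(harm_freqs[-1] // new_f0)
    new_freqs = new_f0 * np.arange(1, n_new + 1)
    # Envelope = linear interpolation of log-amplitudes between harmonics;
    # np.interp holds the first value for frequencies below harm_freqs[0]
    new_amps = np.exp(np.interp(new_freqs, harm_freqs, np.log(amps)))
    return new_f0, new_freqs, new_amps

def synthesize(freqs, amps, duration, sr=16000):
    """Sum of stationary sinusoids; a longer duration acts as a time stretch."""
    t = np.arange(int(round(duration * sr))) / sr
    out = np.zeros_like(t)
    for f, a in zip(freqs, amps):
        out += a * np.sin(2 * np.pi * f * t)
    return out
```

In this stationary single-frame case a time stretch amounts to synthesising a longer duration from the same parameters. In a frame-based model it would instead re-time the sequence of analysis frames.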

Processing of emotive speech: results
Neutral → Emotive transformation based on sinusoidal modeling
[Examples: Neutral, Ps+Ts+Sc, and Target versions for Anger, Disgust, Joy, Fear, Surprise, Sadness]

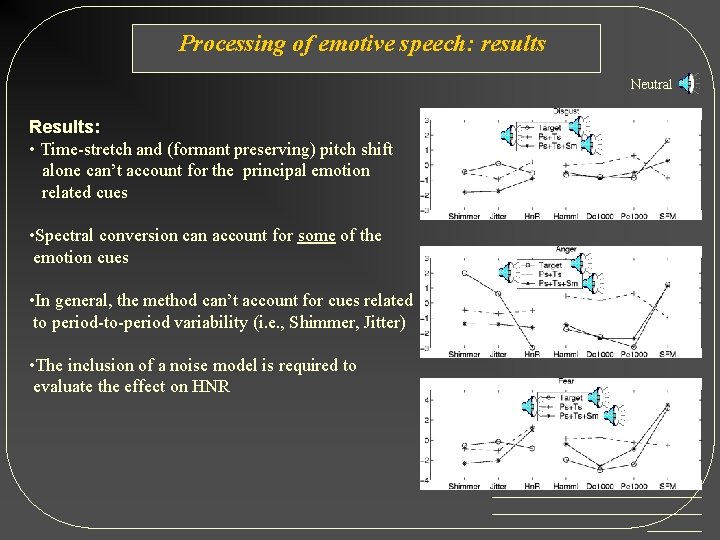

Processing of emotive speech: results
Results:
• Time-stretch and (formant preserving) pitch shift alone can’t account for the principal emotion related cues
• Spectral conversion can account for some of the emotion cues
• In general, the method can’t account for cues related to period-to-period variability (i.e., Shimmer, Jitter)
• The inclusion of a noise model is required to evaluate the effect on HNR
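The period-to-period cues mentioned in these results can be made concrete with a small sketch. The slides do not say which jitter and shimmer definitions were used, so the code assumes the common "local" form: the mean absolute difference between consecutive values divided by their mean, applied to glottal period durations for jitter and to per-period peak amplitudes for shimmer. The function name `local_perturbation` is hypothetical.

```python
import numpy as np

def local_perturbation(values):
    """Mean absolute consecutive difference, relative to the mean value.

    Pass period durations to obtain local jitter, or per-period peak
    amplitudes to obtain local shimmer (assumed definitions).
    """
    v = np.asarray(values, dtype=float)
    return np.mean(np.abs(np.diff(v))) / np.mean(v)

# Periods in milliseconds alternating between 5.0 and 5.1
jitter = local_perturbation([5.0, 5.1, 5.0, 5.1])
# Perfectly regular amplitudes give zero shimmer
shimmer = local_perturbation([0.8, 0.8, 0.8, 0.8])
```

A model whose frequency and amplitude tracks vary smoothly from frame to frame yields values near zero for both measures, which is consistent with the limitation stated on the slide.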

Conclusions
§ Sinusoidal framework was found adequate to process emotive information
§ Need refinements (e.g. noise model, harshness model) to account for all the acoustic correlates of emotions
§ Results of processing are perceptually good

Future work
§ Refinements of the model (i.e., noise model)
§ Adaptation to TTS system
§ Search for the existence of speaker-independent transformation patterns (using multi-speaker corpora).