Instance Selection

- Slides: 58

Instance Selection
1. Introduction
2. Training Set Selection vs. Prototype Selection
3. Prototype Selection Taxonomy
4. Description of Methods
5. Related and Advanced Topics
6. Experimental Comparative Analysis in PS

Introduction
• Instance selection (IS) is the complementary process to feature selection (FS): it reduces the number of instances rather than the number of features.
• The main challenge in scaling down the data is selecting or identifying the relevant data from an immense pool of instances.
• The goal of IS is to choose a subset of the data that achieves the original purpose of a data mining (DM) application as if the whole data set had been used.

Introduction
• IS vs. data sampling: IS is an intelligent operation that categorizes instances according to their degree of irrelevance or noise.
• The optimal outcome of IS is a minimal, model-independent data subset that can accomplish the same task with no loss of performance.
• An IS method obtains a subset S ⊂ T such that S contains no superfluous instances and Acc(S) is similar to Acc(T), where Acc(X) is the classification accuracy obtained using X as the training set.

Introduction
• IS has the following main functions:
  – Enabling: IS enables a DM algorithm to work with huge data sets.
  – Focusing: a concrete DM task focuses on only one aspect of interest of the domain, and IS focuses the data on the relevant part.
  – Cleaning: redundant and noisy instances are usually removed, improving the quality of the input data.

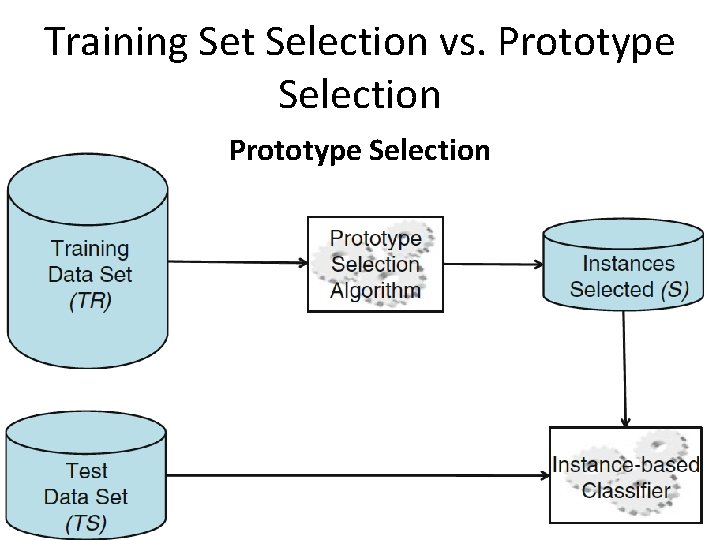

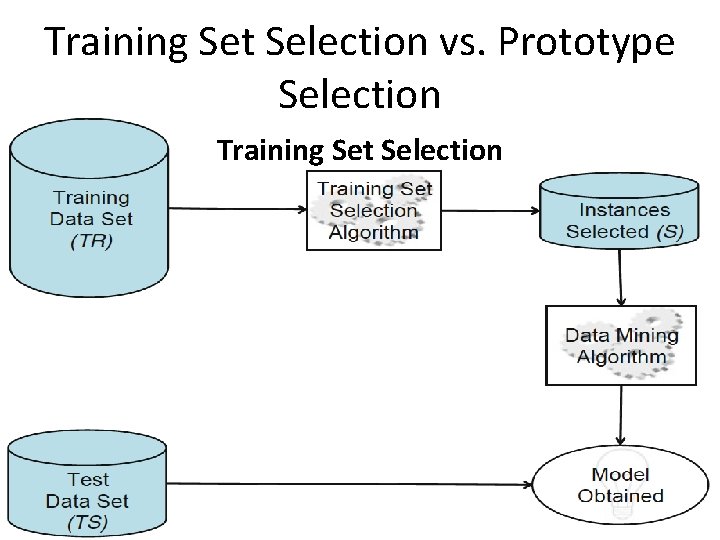

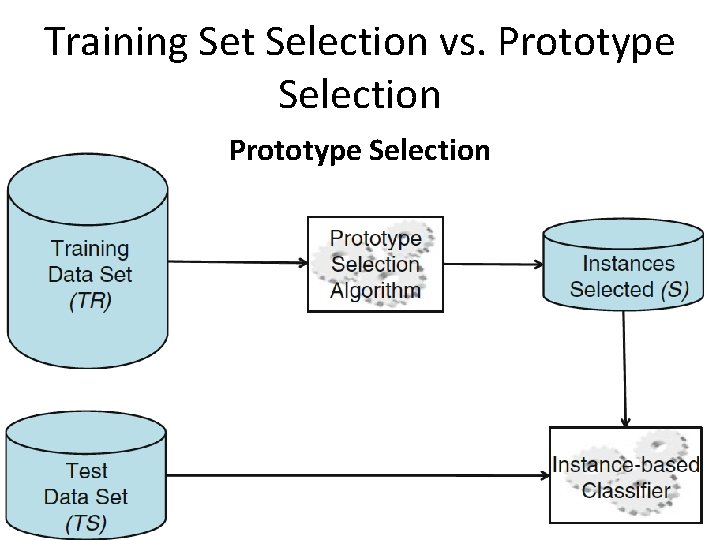

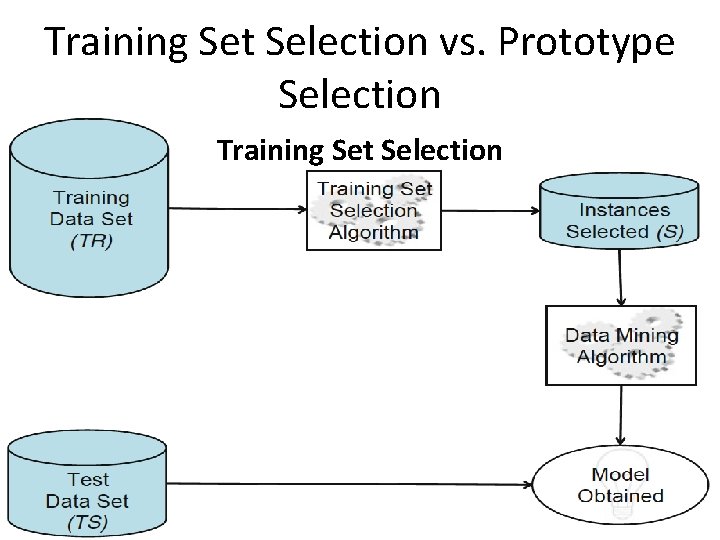

Training Set Selection vs. Prototype Selection
• Several terms have been used for selecting the most relevant data from the training set.
• Instance selection is the most general term and was conceived to work with other learning methods, such as decision trees, ANNs or SVMs.
• Nowadays, the literature draws a clear distinction between two cases: Prototype Selection (PS) and Training Set Selection (TSS).

Training Set Selection vs. Prototype Selection

Training Set Selection vs. Prototype Selection Training Set Selection

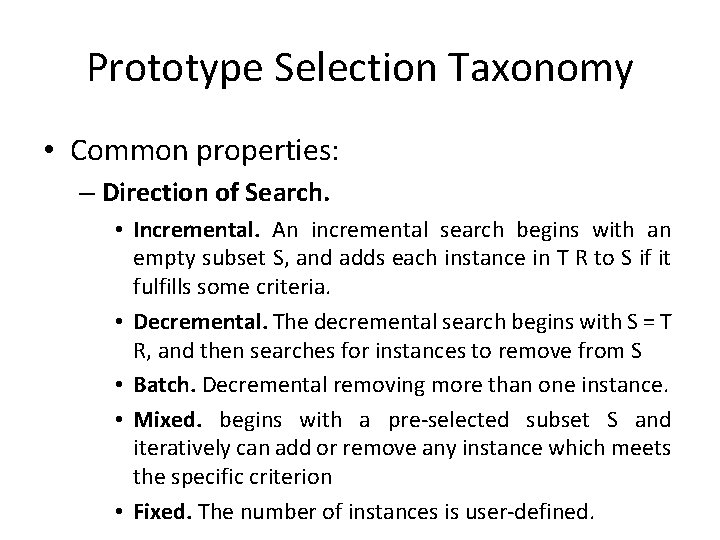

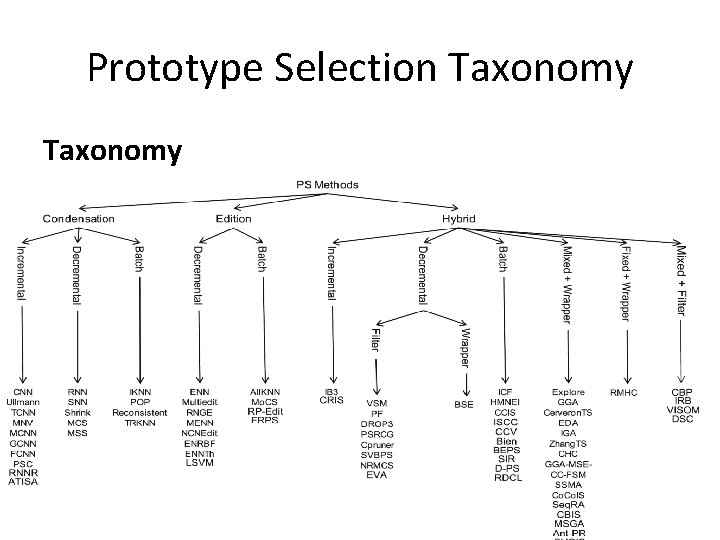

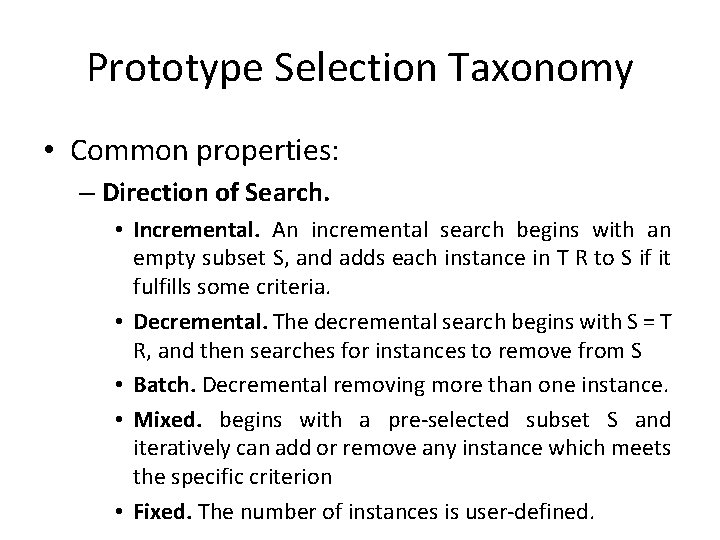

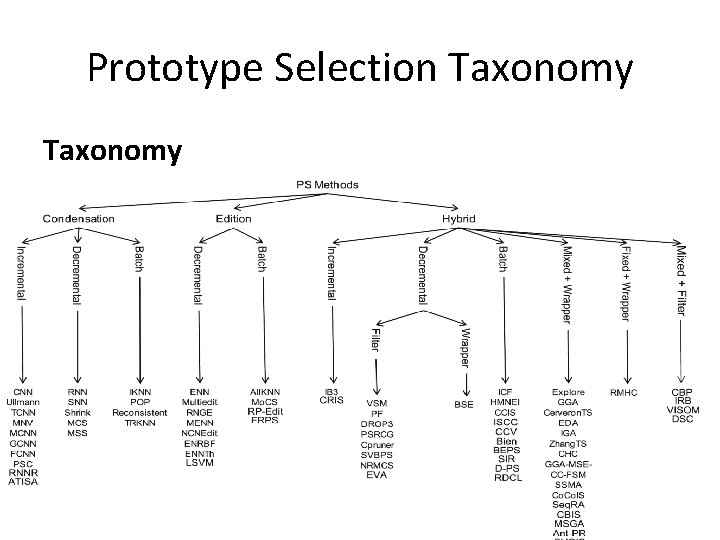

Prototype Selection Taxonomy
• Common properties:
  – Direction of Search.
    • Incremental. The search begins with an empty subset S and adds each instance in TR to S if it fulfills some criterion.
    • Decremental. The search begins with S = TR and then looks for instances to remove from S.
    • Batch. A decremental search that removes more than one instance at once.
    • Mixed. The search begins with a pre-selected subset S and iteratively adds or removes any instance that meets a specific criterion.
    • Fixed. The number of instances is user-defined.

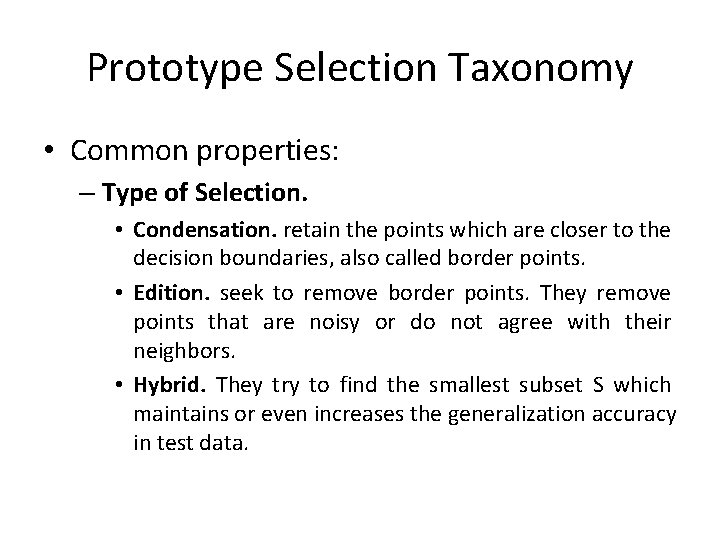

Prototype Selection Taxonomy
• Common properties:
  – Type of Selection.
    • Condensation. These methods retain the points closest to the decision boundaries, also called border points.
    • Edition. These methods seek to remove border points: they remove points that are noisy or that do not agree with their neighbors.
    • Hybrid. These methods try to find the smallest subset S that maintains or even increases the generalization accuracy on test data.

Prototype Selection Taxonomy
• Common properties:
  – Evaluation of Search.
    • Filter. The k-NN rule is applied to partial data to decide whether to add or remove an instance, and no leave-one-out validation scheme is used to estimate the generalization accuracy.
    • Wrapper. The k-NN rule is applied to the complete training set with the leave-one-out validation scheme. Combining these two factors yields a good estimate of the generalization accuracy, which helps to obtain better accuracy on test data.
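
As a rough illustration of the wrapper-style evaluation described above, the following sketch estimates the leave-one-out accuracy of the k-NN rule over a complete training set. The function name `loo_knn_accuracy`, the toy data and the use of Euclidean distance are assumptions made for this example only.

```python
import math
from collections import Counter

def loo_knn_accuracy(X, y, k=1):
    """Leave-one-out accuracy of the k-NN rule over the whole set (X, y)."""
    correct = 0
    for i, xi in enumerate(X):
        # Rank every other instance by distance to xi; xi itself is held out.
        others = sorted((j for j in range(len(X)) if j != i),
                        key=lambda j: math.dist(xi, X[j]))
        votes = Counter(y[j] for j in others[:k])
        if votes.most_common(1)[0][0] == y[i]:
            correct += 1
    return correct / len(X)

# Toy data (hypothetical): the last point carries label "b" but sits in the "a" cluster.
X = [(0.0, 0.0), (0.1, 0.0), (1.0, 1.0), (1.1, 1.0), (0.04, 0.1)]
y = ["a", "a", "b", "b", "b"]
print(loo_knn_accuracy(X, y, k=1))
```

A wrapper method would call an estimate like this each time it considers adding or removing an instance, which is why wrappers tend to be more expensive than filters.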

Prototype Selection Taxonomy
• Common properties:
  – Criteria to Compare PS Methods.
    • Storage reduction
    • Noise tolerance
    • Generalization accuracy
    • Time requirements

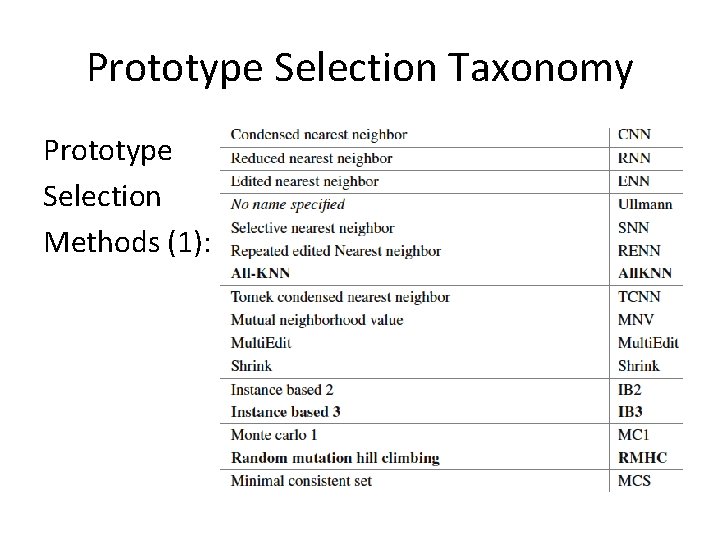

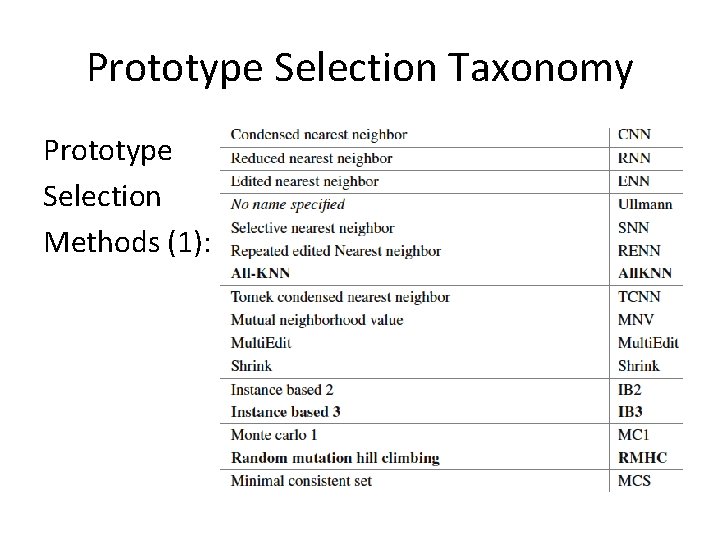

Prototype Selection Taxonomy Prototype Selection Methods (1):

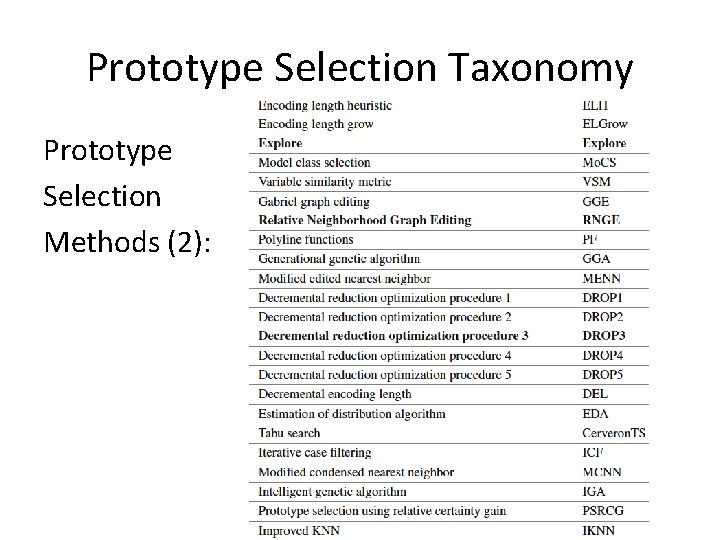

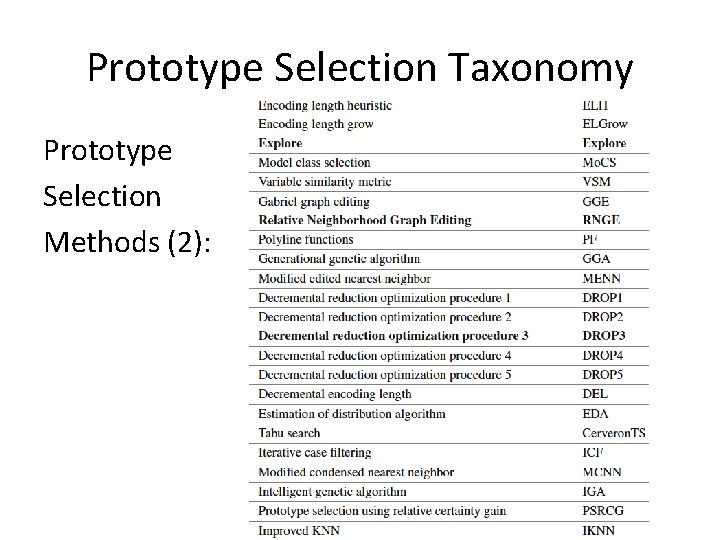

Prototype Selection Taxonomy Prototype Selection Methods (2):

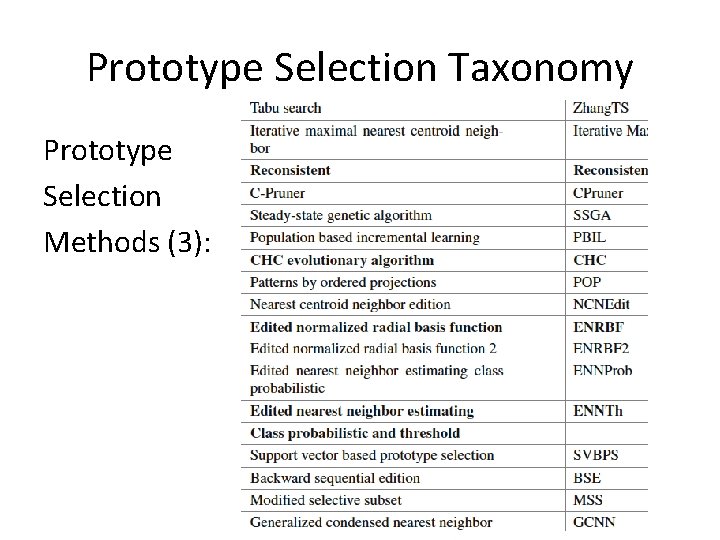

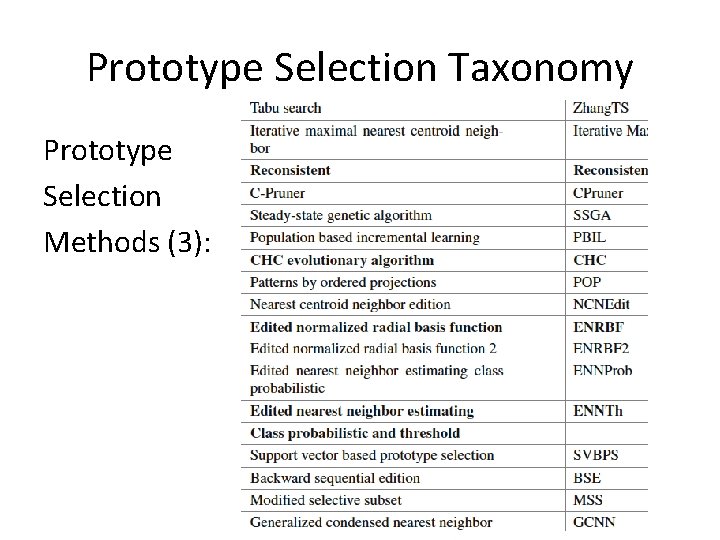

Prototype Selection Taxonomy Prototype Selection Methods (3):

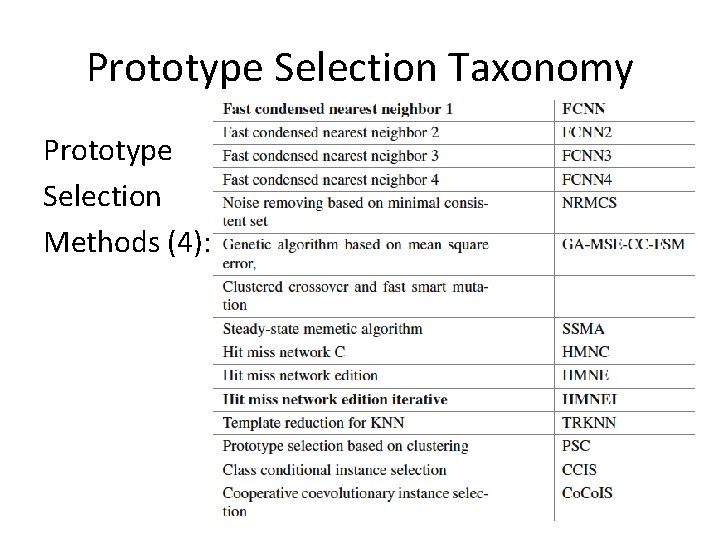

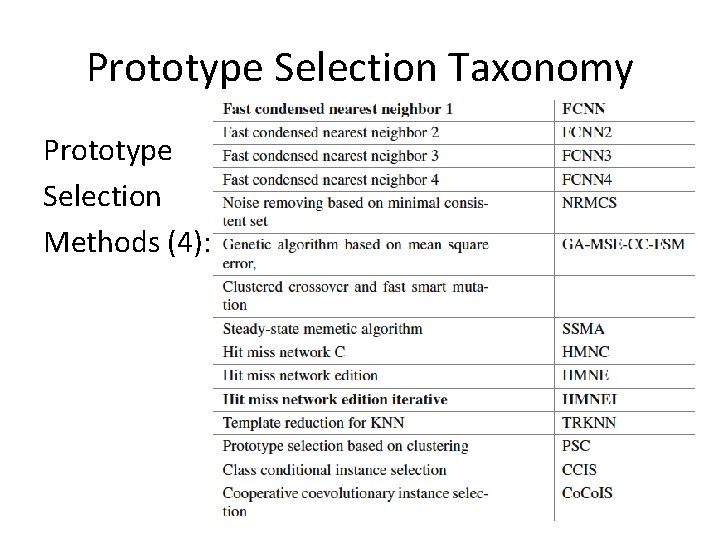

Prototype Selection Taxonomy Prototype Selection Methods (4):

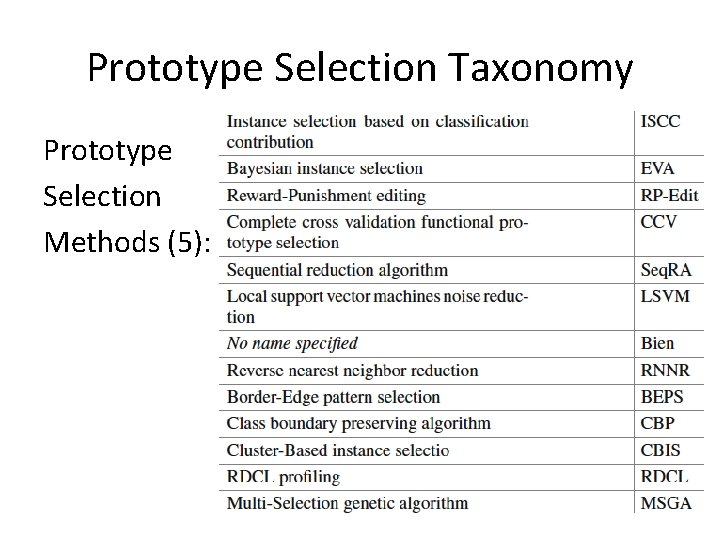

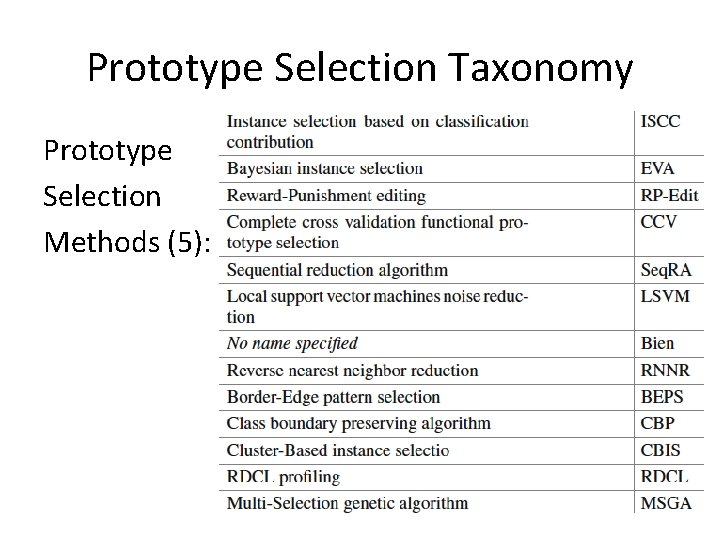

Prototype Selection Taxonomy Prototype Selection Methods (5):

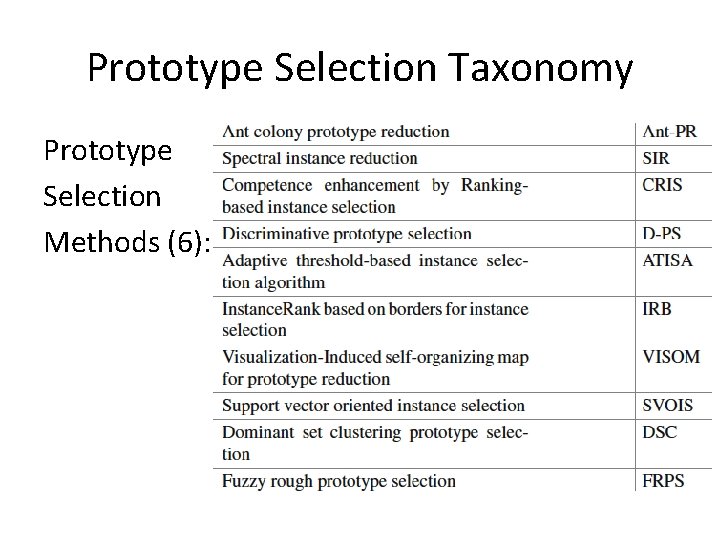

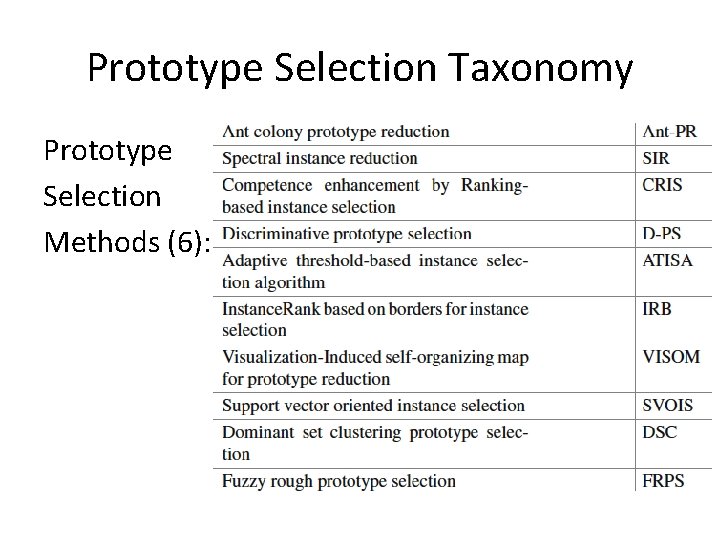

Prototype Selection Taxonomy Prototype Selection Methods (6):

Prototype Selection Taxonomy

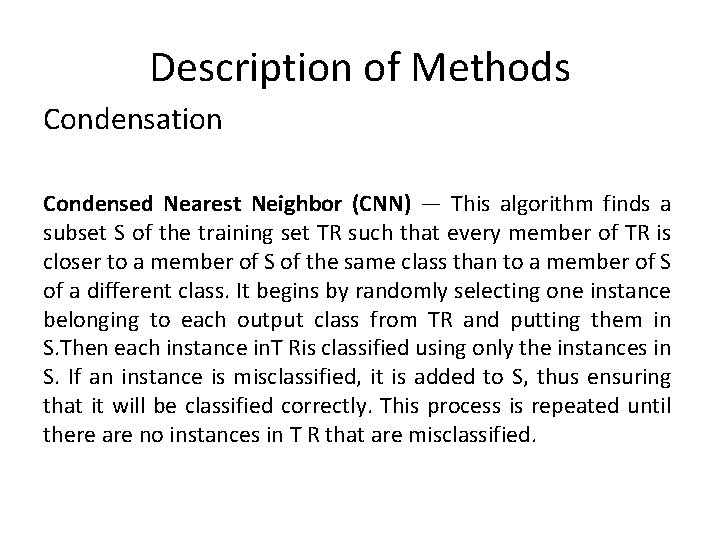

Description of Methods: Condensation
Condensed Nearest Neighbor (CNN): This algorithm finds a subset S of the training set TR such that every member of TR is closer to a member of S of the same class than to a member of S of a different class. It begins by randomly selecting one instance of each output class from TR and putting them in S. Then each instance in TR is classified using only the instances in S. If an instance is misclassified, it is added to S, thus ensuring that it will be classified correctly. This process is repeated until no instance in TR is misclassified.
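
The CNN procedure described above could be sketched as follows. This is a minimal illustration, assuming Euclidean distance and the 1-NN rule; the names `nearest_label` and `cnn_select`, the `seed` parameter and the toy data are hypothetical and not taken from the slides.

```python
import math
import random

def nearest_label(x, S, X, y):
    """Label of the instance in S (a list of indices into X) closest to x."""
    return y[min(S, key=lambda j: math.dist(x, X[j]))]

def cnn_select(X, y, seed=0):
    rng = random.Random(seed)
    # Start S with one randomly chosen instance per class.
    S = [rng.choice([i for i, c in enumerate(y) if c == label])
         for label in sorted(set(y))]
    changed = True
    while changed:                      # repeat until a full pass adds nothing
        changed = False
        for i in range(len(X)):
            # Instances already in S are trivially classified correctly.
            if i not in S and nearest_label(X[i], S, X, y) != y[i]:
                S.append(i)             # a misclassified instance joins S
                changed = True
    return sorted(S)

# Two well-separated toy clusters (hypothetical data).
X = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
y = [0, 0, 0, 0, 1, 1, 1, 1]
print(cnn_select(X, y))
```

On clusters this well separated the initial seed instances already classify everything correctly, so S keeps only one instance per class. The result depends on the random seed and on the order in which instances are visited.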

Description of Methods: Condensation
Fast Condensed Nearest Neighbor family (FCNN): The FCNN1 algorithm starts by inserting the centroid of each class into S. Then, for each prototype p in S, its nearest enemy inside its Voronoi region is found and added to S. This process is repeated until no enemies are found in an iteration. FCNN2 is similar to FCNN1 but, instead of adding the nearest enemy in each Voronoi region, it adds the centroid of the enemies found in the region. FCNN3 is similar to FCNN1 but, instead of adding one prototype per region in each iteration, it adds only one prototype (the one belonging to the Voronoi region with the most enemies). In FCNN3, S is initialized with only the centroid of the most populated class.

Description of Methods: Condensation
Reduced Nearest Neighbor (RNN): RNN starts with S = TR and removes each instance from S if the removal does not cause any other instance in TR to be misclassified by the instances remaining in S. It will always generate a subset of the result of the CNN algorithm.
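
A minimal sketch of the RNN removal rule as stated above, assuming Euclidean distance and the 1-NN rule. The helper names (`nearest_label`, `misclassified`, `rnn_select`) and the toy data are hypothetical, and "does not cause any other instance to be misclassified" is interpreted here as "introduces no new errors among the other instances of TR".

```python
import math

def nearest_label(x, S, X, y):
    """Label of the instance in S (a list of indices into X) closest to x."""
    return y[min(S, key=lambda j: math.dist(x, X[j]))]

def misclassified(S, X, y, skip):
    """Indices of TR (other than `skip`) that the 1-NN rule over S gets wrong."""
    return {t for t in range(len(X))
            if t != skip and nearest_label(X[t], S, X, y) != y[t]}

def rnn_select(X, y):
    S = list(range(len(X)))             # start with S = TR
    for i in range(len(X)):
        candidate = [j for j in S if j != i]
        if not candidate:
            continue                    # never empty S completely
        before = misclassified(S, X, y, skip=i)
        after = misclassified(candidate, X, y, skip=i)
        if after <= before:             # the removal introduced no new errors
            S = candidate
    return S

X = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
y = [0, 0, 0, 0, 1, 1, 1, 1]
print(rnn_select(X, y))
```

Each candidate removal re-classifies the whole training set, which makes this quadratic or worse in |TR|; that cost is consistent with the run-time concerns raised for RNN later in the deck.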

Description of Methods: Condensation
Patterns by Ordered Projections (POP): This algorithm eliminates the examples that do not lie on the limits of the regions to which they belong. To do so, each attribute is studied separately: the instances are sorted by that attribute, and a value called weakness, associated with each instance, is increased if the instance is not on a limit. Instances whose weakness equals the number of attributes are eliminated.

Description of Methods: Edition
Edited Nearest Neighbor (ENN): Wilson developed this algorithm, which starts with S = TR and then removes each instance in S whose class does not agree with the majority of its k nearest neighbors.
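
The ENN rule can be sketched in a few lines. The version below is an illustration only: it assumes Euclidean distance, decides every removal against the original training set (so removals happen in one batch), and uses a hypothetical function name `enn_select` and toy data.

```python
import math
from collections import Counter

def enn_select(X, y, k=3):
    """Return the indices kept by Wilson-style editing with k neighbors."""
    keep = []
    for i, xi in enumerate(X):
        # k nearest neighbors of xi among all other training instances.
        neighbors = sorted((j for j in range(len(X)) if j != i),
                           key=lambda j: math.dist(xi, X[j]))[:k]
        majority = Counter(y[j] for j in neighbors).most_common(1)[0][0]
        if majority == y[i]:
            keep.append(i)              # the instance agrees with its neighborhood
    return keep

# Two tight clusters plus one point (index 8) labeled 1 near the class-0 cluster.
X = [(0, 0), (0, 0.2), (0.2, 0), (0.2, 0.2),
     (5, 5), (5, 5.2), (5.2, 5), (5.2, 5.2), (1, 1)]
y = [0, 0, 0, 0, 1, 1, 1, 1, 1]
print(enn_select(X, y, k=3))
```

Here only the isolated point at index 8 is dropped, because its three nearest neighbors all belong to the other class.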

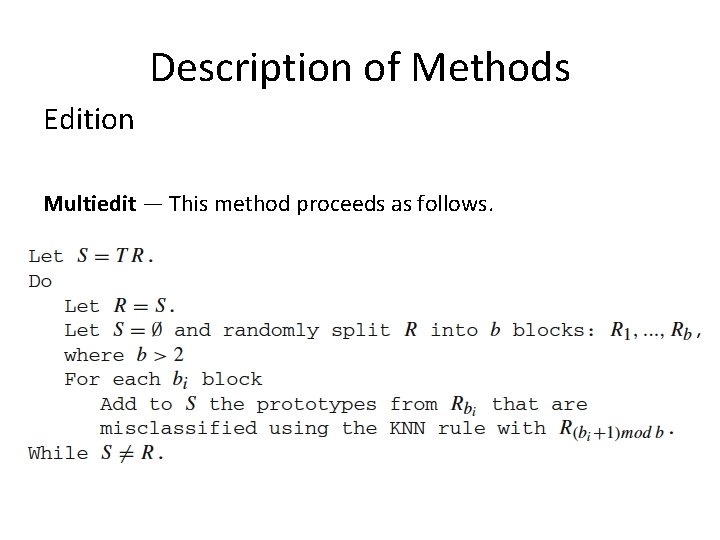

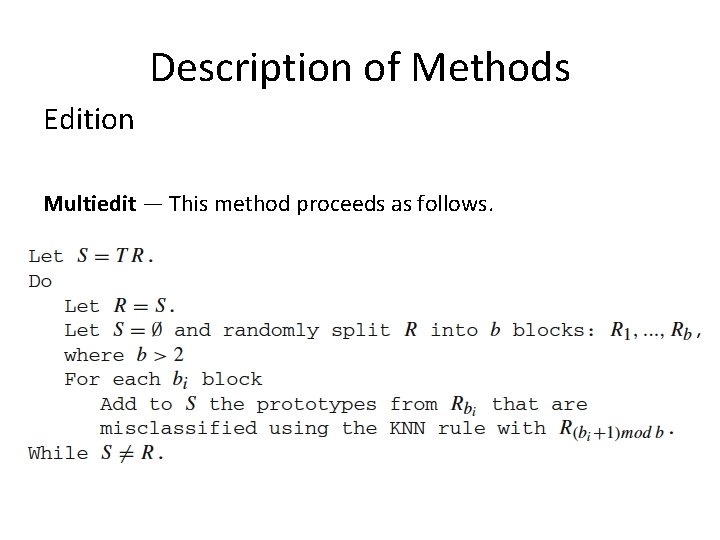

Description of Methods Edition Multiedit — This method proceeds as follows.

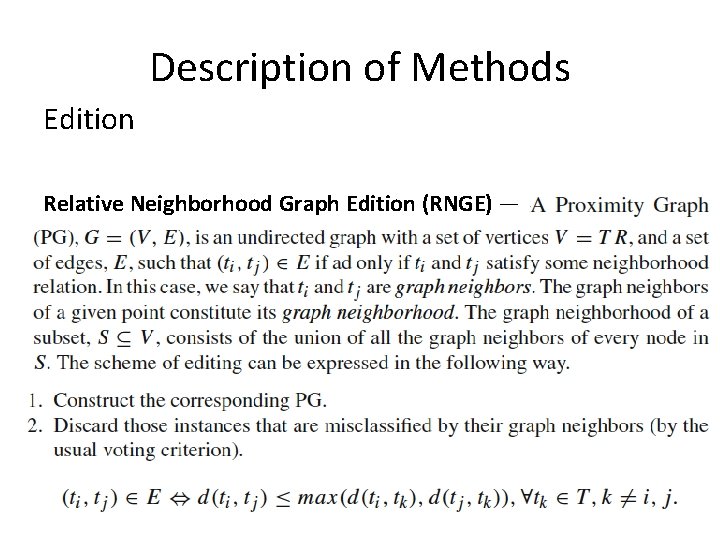

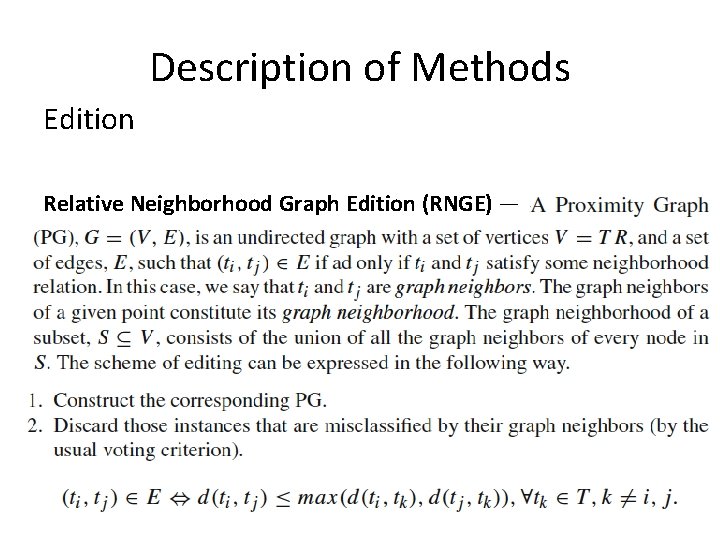

Description of Methods Edition Relative Neighborhood Graph Edition (RNGE) —

Description of Methods: Edition
All KNN: All KNN is an extension of ENN. For i = 1 to k, the algorithm flags as bad any instance that is not classified correctly by its i nearest neighbors. Once the loop has been completed k times, it removes the instances flagged as bad.
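
A minimal sketch of All KNN under the same assumptions as a basic ENN implementation (Euclidean distance, all decisions taken against the original training set). The function name `all_knn_select` and the toy data are hypothetical.

```python
import math
from collections import Counter

def all_knn_select(X, y, k=3):
    """Return the indices that survive All KNN editing."""
    bad = set()
    for i, xi in enumerate(X):
        ranked = sorted((j for j in range(len(X)) if j != i),
                        key=lambda j: math.dist(xi, X[j]))
        for m in range(1, k + 1):
            # Classify xi with its m nearest neighbors, for m = 1 .. k.
            majority = Counter(y[j] for j in ranked[:m]).most_common(1)[0][0]
            if majority != y[i]:
                bad.add(i)              # flagged as bad; removed after the loop
                break
    return [i for i in range(len(X)) if i not in bad]

X = [(0, 0), (0, 0.2), (0.2, 0), (0.2, 0.2),
     (5, 5), (5, 5.2), (5.2, 5), (5.2, 5.2), (1, 1)]
y = [0, 0, 0, 0, 1, 1, 1, 1, 1]
print(all_knn_select(X, y, k=3))
```

Because an instance must pass every neighborhood size from 1 to k, this procedure removes at least as many instances as ENN with the same k when ties are resolved the same way.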

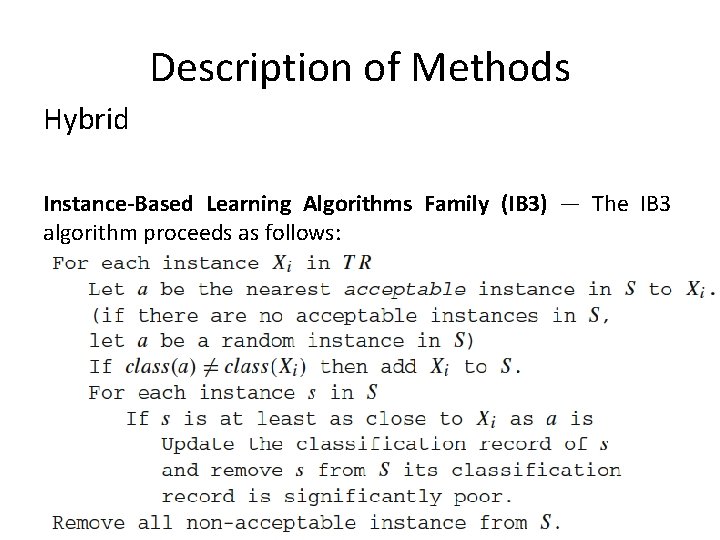

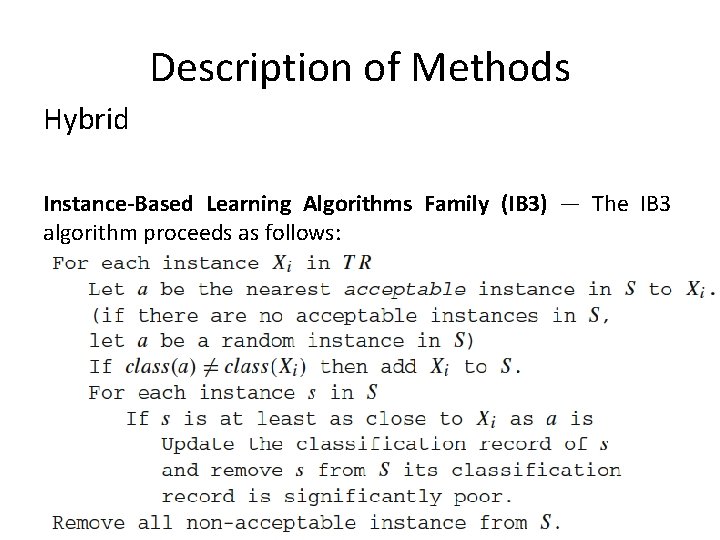

Description of Methods: Hybrid
Instance-Based Learning Algorithms Family (IB3): The IB3 algorithm proceeds as follows:

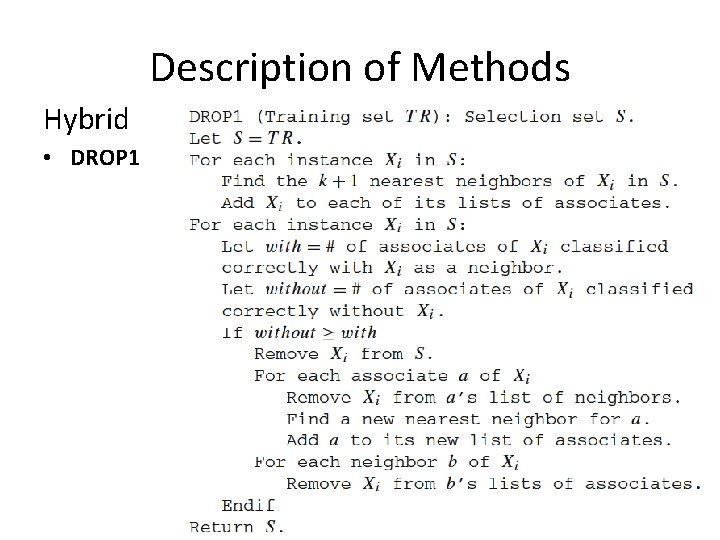

Description of Methods: Hybrid
Decremental Reduction Optimization Procedure Family (DROP): Each instance Xi has k nearest neighbors, where k is typically a small odd integer. Xi also has a nearest enemy, which is the nearest instance with a different output class. The instances that have Xi as one of their k nearest neighbors are called associates of Xi.

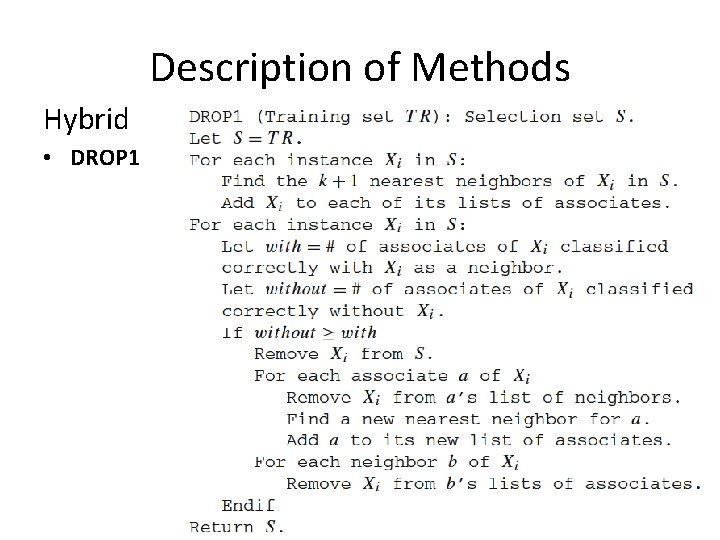

Description of Methods Hybrid • DROP 1

Description of Methods: Hybrid
• DROP2: In this method, the removal criterion can be restated as: remove Xi if at least as many of its associates in TR would be classified correctly without Xi. With this modification, each instance Xi in the original training set TR continues to maintain a list of its k + 1 nearest neighbors in S, even after Xi is removed from S. DROP2 also changes the order in which instances are removed: it initially sorts the instances in S by the distance to their nearest enemy.
• DROP3: A combination of the DROP2 and ENN algorithms. DROP3 applies a noise-filtering pass (Wilson's ENN editing) before sorting the instances in S. After this, it works identically to DROP2.

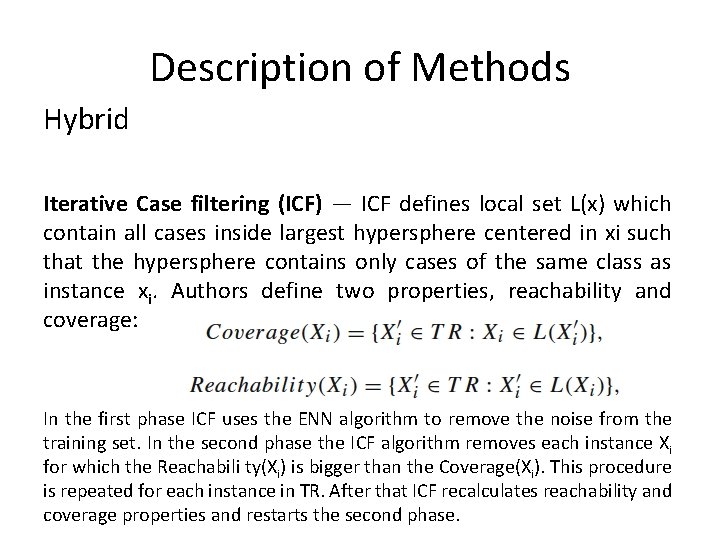

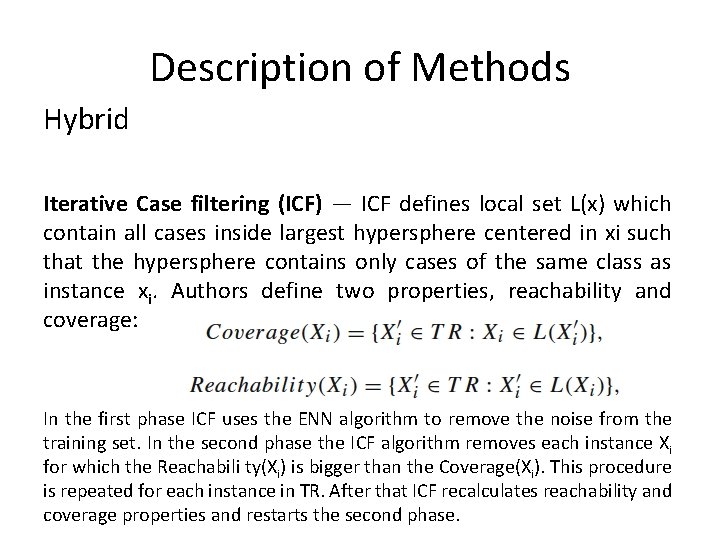

Description of Methods: Hybrid
Iterative Case Filtering (ICF): ICF defines the local set L(Xi), which contains all cases inside the largest hypersphere centered on Xi such that the hypersphere contains only cases of the same class as Xi. The authors define two properties, reachability and coverage. In the first phase, ICF uses the ENN algorithm to remove noise from the training set. In the second phase, ICF removes each instance Xi for which Reachability(Xi) is bigger than Coverage(Xi). This procedure is repeated for each instance in TR. After that, ICF recalculates the reachability and coverage properties and restarts the second phase.

Description of Methods: Hybrid
Random Mutation Hill Climbing (RMHC): It randomly selects a subset S from TR that contains a fixed number of instances s (s is a percentage of |TR|). In each iteration, the algorithm swaps an instance from S with another from TR - S. The change is kept if it yields better accuracy.
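
The swap-and-keep loop described above can be sketched as follows. This is an illustration under stated assumptions: accuracy is measured as the fraction of TR classified correctly by the 1-NN rule using only S, distance is Euclidean, and the names `accuracy_1nn` and `rmhc_select`, the `iterations` and `seed` parameters and the toy data are all hypothetical.

```python
import math
import random

def accuracy_1nn(S, X, y):
    """Fraction of TR classified correctly by the 1-NN rule using only S."""
    hits = 0
    for xi, yi in zip(X, y):
        nearest = min(S, key=lambda j: math.dist(xi, X[j]))
        hits += (y[nearest] == yi)
    return hits / len(X)

def rmhc_select(X, y, size, iterations=200, seed=0):
    rng = random.Random(seed)
    S = rng.sample(range(len(X)), size)     # random subset of fixed size
    best = accuracy_1nn(S, X, y)
    for _ in range(iterations):
        outside = [i for i in range(len(X)) if i not in S]
        if not outside:
            break                           # S already equals TR
        candidate = list(S)
        # Swap one random member of S with a random instance from TR - S.
        candidate[rng.randrange(size)] = rng.choice(outside)
        score = accuracy_1nn(candidate, X, y)
        if score > best:                    # keep the change only if it improves
            S, best = candidate, score
    return S, best

X = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
y = [0, 0, 0, 0, 1, 1, 1, 1]
print(rmhc_select(X, y, size=2, iterations=50))
```

The subset size never changes, which is what places RMHC in the fixed direction-of-search category of the taxonomy.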

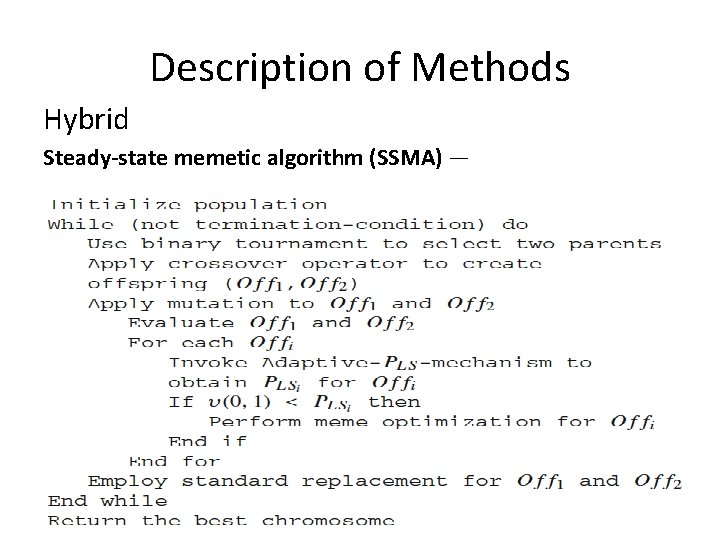

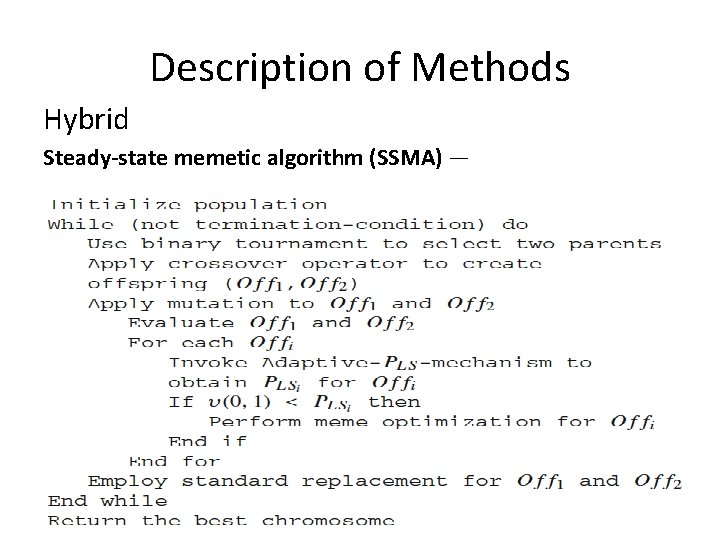

Description of Methods Hybrid Steady-state memetic algorithm (SSMA) —

Related and Advanced Topics: Prototype Generation
Prototype generation methods are not limited to selecting examples from the training set. They can also modify the values of the samples, changing their position in the d-dimensional space considered. Most of them use merging or divide-and-conquer strategies to create new artificial samples, or are based on clustering approaches, Learning Vector Quantization hybrids, advanced proposals and schemes based on evolutionary algorithms.

Related and Advanced Topics: Distance Metrics, Feature Weighting and Combinations with Feature Selection
This area refers to the combination of IS and PS methods with other well-known schemes used to improve accuracy in classification problems. For example, the weighting scheme combines PS with FS or Feature Weighting, where a vector of weights associated with the attributes determines and influences the distance computations.
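
To make the role of the weight vector concrete, here is a small sketch of a weighted Euclidean distance. The function name `weighted_distance` and the example vectors are assumptions for illustration; actual methods may use other distance functions or weighting schemes.

```python
import math

def weighted_distance(a, b, w):
    """Euclidean distance in which attribute i contributes with weight w[i]."""
    return math.sqrt(sum(wi * (ai - bi) ** 2 for ai, bi, wi in zip(a, b, w)))

a, b = (0.0, 0.0), (3.0, 4.0)
print(weighted_distance(a, b, (1, 1)))   # plain Euclidean distance
print(weighted_distance(a, b, (1, 0)))   # second attribute switched off
```

With weights restricted to 0 and 1 the weighting reduces to feature selection, while real-valued weights give feature weighting. Either way, the nearest neighbors that a PS method sees can change with the weights.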

Related and Advanced Topics: Hybridizations with Other Learning Methods and Ensembles
This family includes all the methods that simultaneously use instances and rules to classify a new object. If the values of the object are within the range of a rule, its consequent predicts the class; otherwise, if no rule matches the object, the most similar rule or instance stored in the database is used to estimate the class. This area also refers to ensemble learning, where an IS method is run several times and a classification decision is made according to the majority class obtained over several subsets and any performance measure given by a learner.

Related and Advanced Topics: Scaling-Up Approaches
One of the disadvantages of IS methods is that most of them have a prohibitive run time or cannot be applied at all to large data sets. Recent improvements in this field cover the stratification of data and the development of distributed approaches for PS.

Related and Advanced Topics: Data Complexity
This area studies how the complexity of the data changes when PS methods are applied prior to classification, and how to diagnose the benefits of applying PS methods by taking the complexity of the data into account.

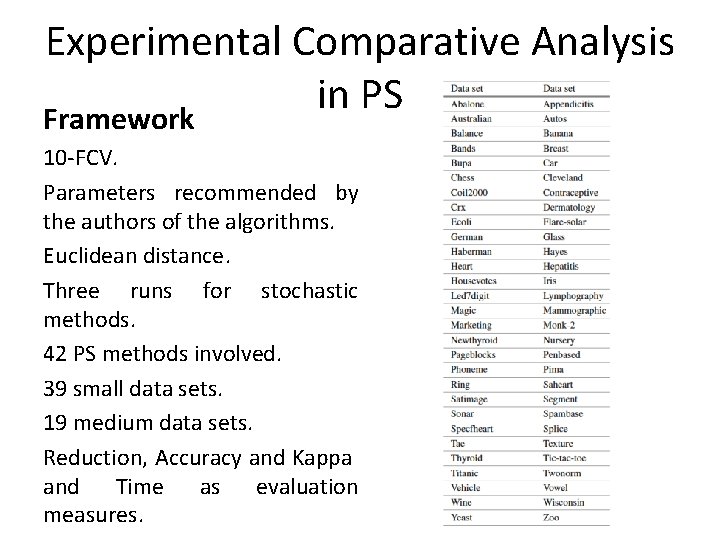

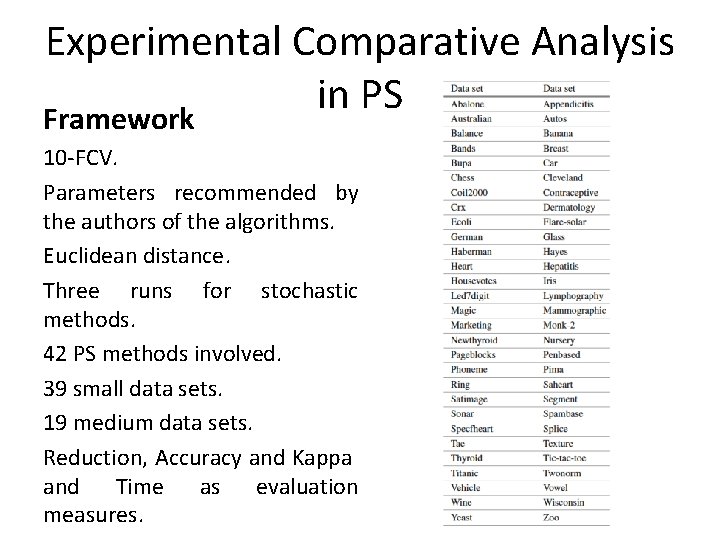

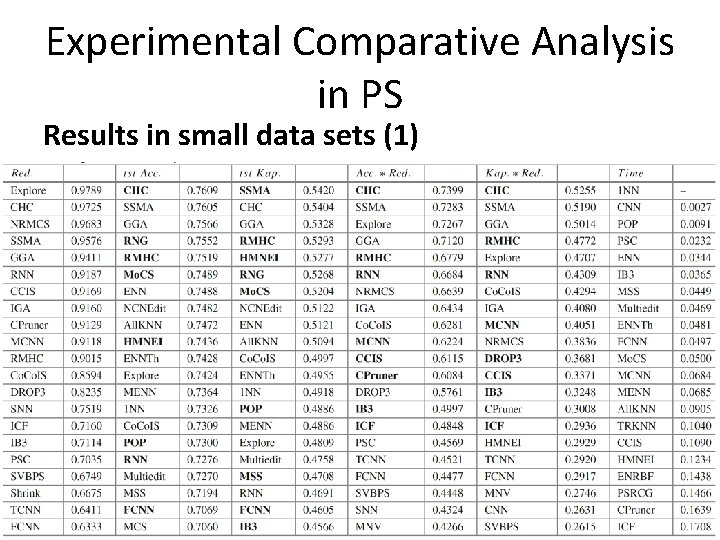

Experimental Comparative Analysis in PS: Framework
• 10-fold cross-validation (10-FCV).
• Parameters recommended by the authors of the algorithms.
• Euclidean distance.
• Three runs for stochastic methods.
• 42 PS methods involved.
• 39 small data sets.
• 19 medium data sets.
• Reduction, accuracy, kappa and time as evaluation measures.
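
Two of the evaluation measures listed above can be computed as in the sketch below. It assumes the usual definitions, reduction = 1 - |S| / |TR| and Cohen's kappa = (p_o - p_e) / (1 - p_e); the function names and the sample labels are hypothetical.

```python
from collections import Counter

def reduction_rate(n_selected, n_training):
    """Fraction of the training set discarded by the PS method."""
    return 1 - n_selected / n_training

def cohen_kappa(y_true, y_pred):
    """Agreement between predictions and true labels, corrected for chance."""
    n = len(y_true)
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n   # observed agreement
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    # Agreement expected by chance from the two marginal label distributions.
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    return (p_o - p_e) / (1 - p_e)      # undefined when p_e equals 1

print(reduction_rate(25, 100))
print(cohen_kappa([0, 0, 1, 1], [0, 0, 1, 0]))
```

Kappa penalizes classifiers that score well only by favoring the majority class, which is why it is reported alongside plain accuracy.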

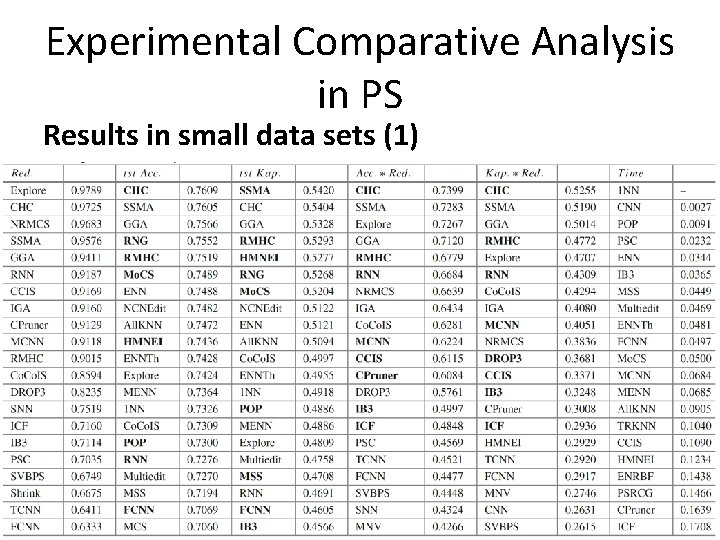

Experimental Comparative Analysis in PS Results in small data sets (1)

Experimental Comparative Analysis in PS Results in small data sets (2)

Experimental Comparative Analysis in PS: Analysis in small data sets
• Best condensation methods: FCNN and MCNN among the incremental methods, and RNN and MSS among the decremental methods.
• Best edition methods: ENN, RNGE and NCNEdit obtain the best results in accuracy/kappa, while MENN and ENNTh offer a good tradeoff when the reduction rate is considered.
• Best hybrid methods: CPruner, HMNEI, CCIS, SSMA, CHC and RMHC.
• Best global methods: in terms of accuracy or kappa, MoCS, RNGE and HMNEI. Considering the reduction-accuracy/kappa tradeoff, RMHC, RNN, CHC, Explore and SSMA.

Experimental Comparative Analysis in PS Results in medium data sets

Experimental Comparative Analysis in PS: Analysis in medium data sets
• Five techniques outperform 1NN in terms of accuracy/kappa over medium data sets: RMHC, SSMA, HMNEI, MoCS and RNGE.
• The run time of some techniques could become prohibitive when the data scales up. This is the case for RNN, RMHC, CHC and SSMA.
• The best methods in terms of accuracy or kappa are RNGE and HMNEI.
• The best methods considering the reduction-accuracy/kappa tradeoff are RMHC, RNN and SSMA.

Experimental Comparative Analysis in PS: Final suggestions
• For the reduction-accuracy tradeoff: the algorithms with the best behavior are RMHC and SSMA. The methods that give up some accuracy in exchange for a large reduction in time complexity are DROP3 and CCIS.
• If the interest is the accuracy rate: the best results are achieved with RNGE as the editor and HMNEI as the hybrid method.
• When the key factor is condensation: FCNN stands out, being one of the fastest.

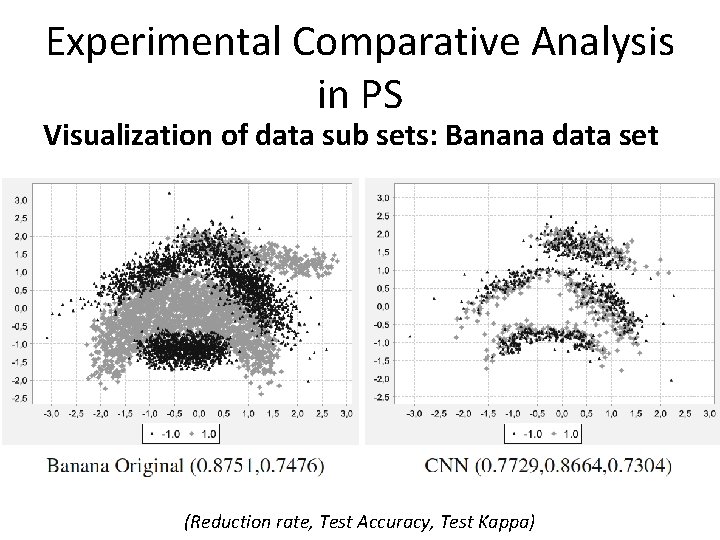

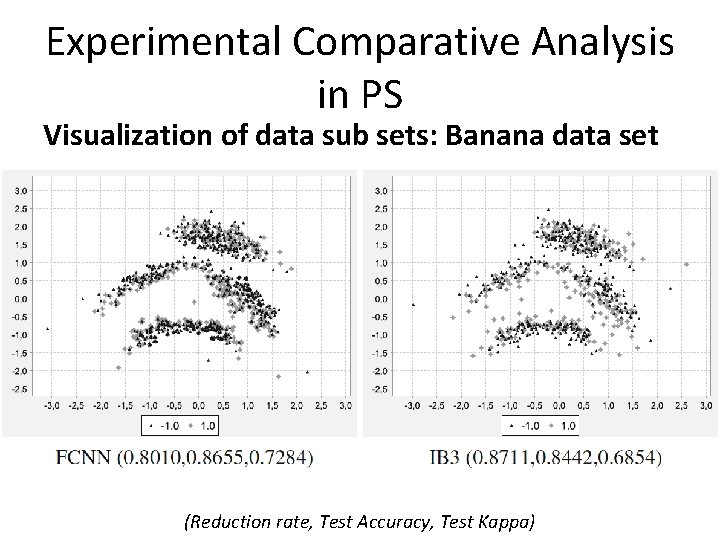

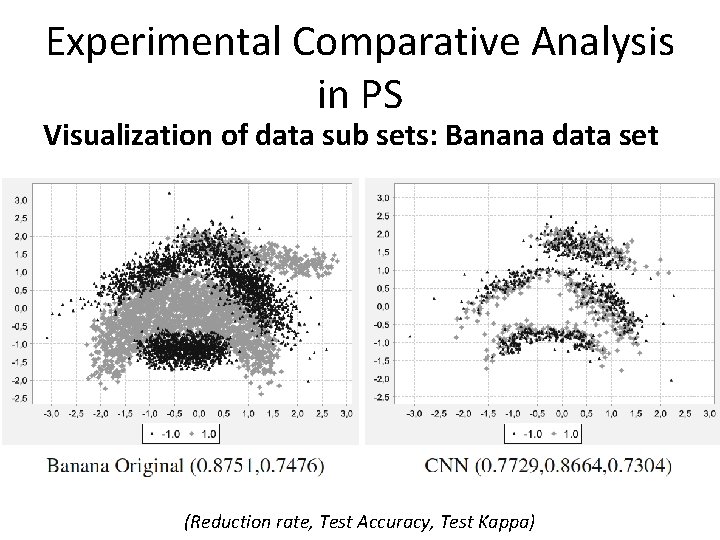

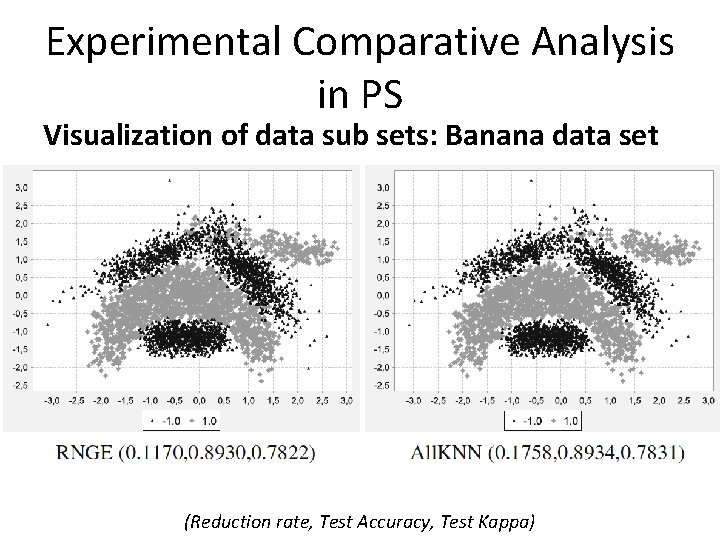

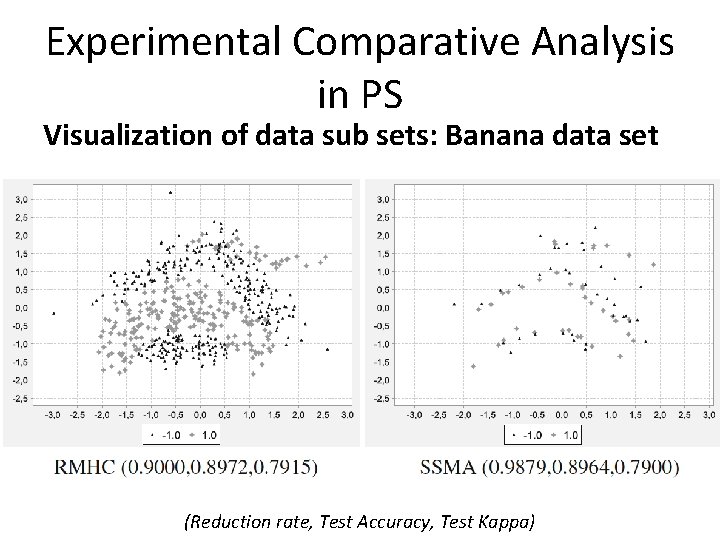

Experimental Comparative Analysis in PS: Visualization of data subsets
Banana data set (Reduction rate, Test Accuracy, Test Kappa)

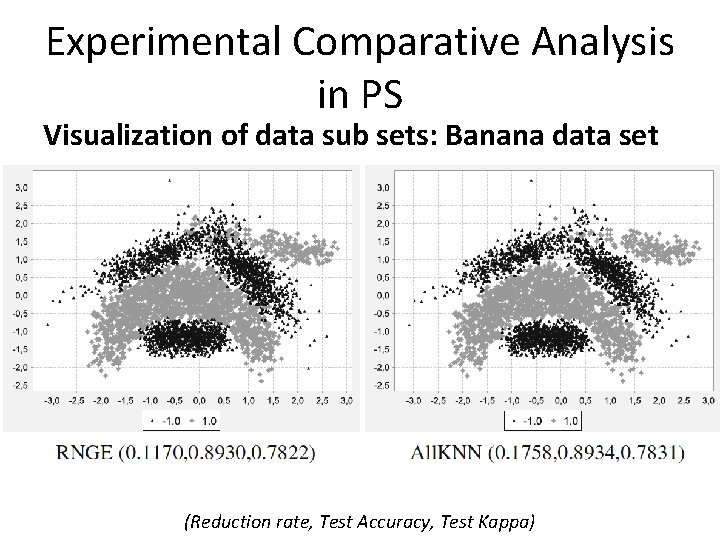

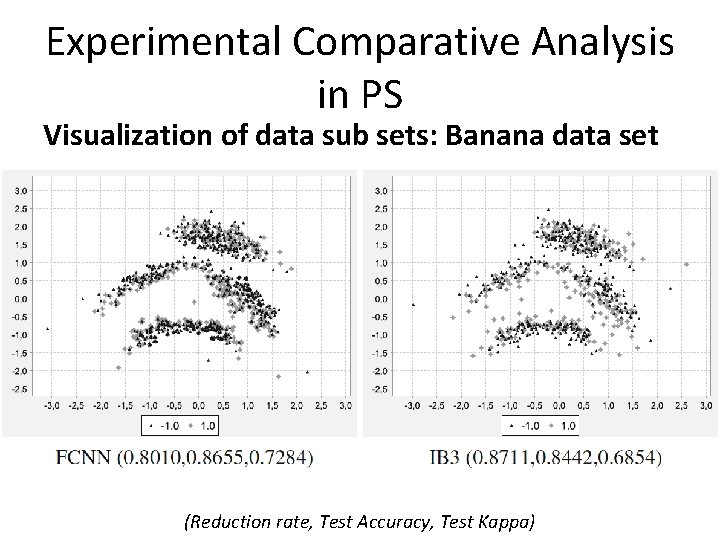

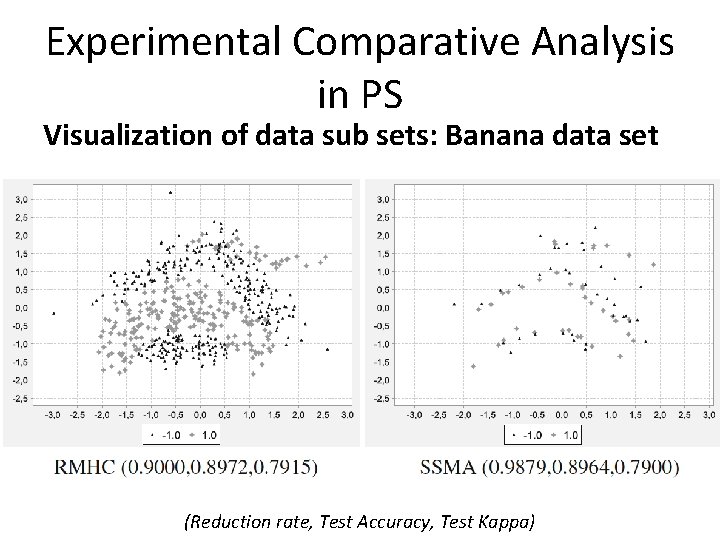
