
Innovative Emerging Computer Technologies
Computational Advances for Distributed Sensing
Presented by Jacob Barhen and Neena Imam
Computing and Computational Sciences Directorate

Center for Engineering Science Advanced Research (CESAR)
Fundamental theoretical, experimental, and computational research
Mission: support DOD and the Intelligence Community
Examples of current research topics:
- Missile defense: C2BMC, HALO-2 project, flash hyperspectral imaging
- Sensitivity and uncertainty analysis of complex simulation models
- Laser array synchronization (directed energy, ultraweak signal detection, communications, terahertz sources)
- Terascale computing devices: EnLight optical core processor, IBM multicore CELL BE, field-programmable gate arrays (FPGAs)
- Nanoscale science, hybrid nanoelectronics for high-performance computing (HPC)
- Anti-submarine warfare: source localization, sensor nets, Doppler-sensitive waveforms, LCCA beamforming, multisensor fusion
- Quantum optics applied to cryptography
- Computer networks, wireless reconfigurable sensor networks
CESAR sponsors: DARPA, DOE/SC, MDA, NSF, ONR, NAVSEA, other government agencies

For distributed sensing applications, some of the most promising advances in the computational area build upon the emergence of:
- multicore: CELL processor
- reconfigurable architectures: XtremeDSP FPGA and HyperX
- terascale optical core digital devices: EnLight

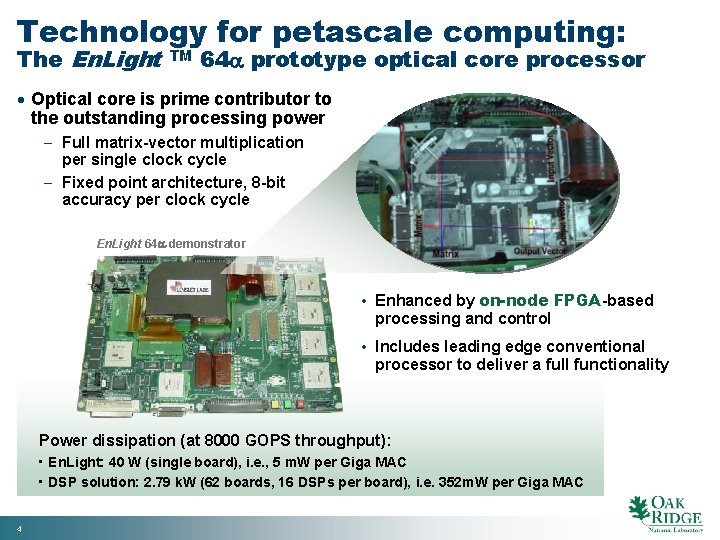

Technology for petascale computing: the EnLight™ 64 prototype optical core processor
- The optical core is the prime contributor to the processing power:
  - one full matrix-vector multiplication per clock cycle
  - fixed-point architecture, 8-bit accuracy per clock cycle
- EnLight 64 demonstrator:
  - enhanced by on-node FPGA-based processing and control
  - includes a leading-edge conventional processor to deliver full functionality
- Power dissipation (at 8000 GOPS throughput):
  - EnLight: 40 W (single board), i.e., 5 mW per Giga-MAC
  - DSP solution: 2.79 kW (62 boards, 16 DSPs per board), i.e., 352 mW per Giga-MAC
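The two technical claims on this slide (an 8-bit fixed-point matrix-vector product per clock cycle, and power per unit of throughput) can be illustrated with a small Python sketch. It is a hypothetical software model, not the EnLight datapath: the symmetric per-operand scaling, the [-127, 127] code range, and the helper names `quantize_int8`, `fixed_point_matvec` and `mw_per_gmac` are all assumptions, and 1 GOPS is treated as 1 giga-MAC per second, as the slide does. With the slide's rounded inputs, the DSP figure works out to about 349 mW per giga-MAC, close to the quoted 352 mW.

```python
def quantize_int8(values, scale):
    """Map real values to signed 8-bit integer codes in [-127, 127] using a shared scale."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def fixed_point_matvec(matrix, vector):
    """Emulate one 8-bit fixed-point matrix-vector product.

    Hypothetical software model (one scale per operand, wide integer accumulator);
    it does not describe how the EnLight optical core is actually built.
    """
    m_scale = max(abs(x) for row in matrix for x in row) / 127 or 1.0
    v_scale = max(abs(x) for x in vector) / 127 or 1.0
    q_vec = quantize_int8(vector, v_scale)
    result = []
    for row in matrix:
        q_row = quantize_int8(row, m_scale)
        acc = sum(a * b for a, b in zip(q_row, q_vec))  # exact integer multiply-accumulate
        result.append(acc * m_scale * v_scale)          # rescale back to real units
    return result


def mw_per_gmac(power_watts, throughput_gops):
    """Power efficiency in milliwatts per giga-MAC (1 GOPS treated as 1 giga-MAC/s)."""
    return power_watts * 1000.0 / throughput_gops


if __name__ == "__main__":
    a = [[1.27, -0.5], [0.3, 1.0]]
    x = [1.0, 0.33]
    print(fixed_point_matvec(a, x))     # close to the exact product [1.105, 0.63]
    print(mw_per_gmac(40.0, 8000.0))    # EnLight single board
    print(mw_per_gmac(2790.0, 8000.0))  # 62-board DSP solution
```

The quantization error shows up only in the third decimal place here, which is the kind of trade-off an 8-bit fixed-point core makes in exchange for throughput.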

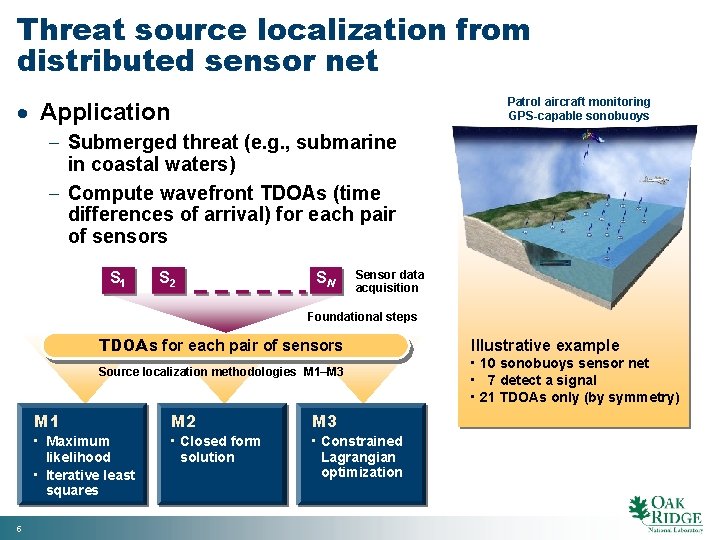

Threat source localization from a distributed sensor net
Patrol aircraft monitoring GPS-capable sonobuoys
Application:
- submerged threat (e.g., a submarine in coastal waters)
- compute wavefront TDOAs (time differences of arrival) for each pair of sensors S1, S2, …, SN
Foundational steps: sensor data acquisition → TDOAs for each pair of sensors → source localization methodologies M1–M3
Localization methods:
- maximum likelihood
- iterative least squares
- closed-form solution
- constrained Lagrangian optimization
Illustrative example:
- 10-sonobuoy sensor net
- 7 sonobuoys detect a signal
- only 21 TDOAs are needed (by symmetry, 7 × 6 / 2 = 21 sensor pairs)
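Of the localization methods listed, iterative least squares is the simplest to sketch. The Python below is a generic Gauss-Newton fix in two dimensions, assuming the TDOAs have already been converted to range differences (c × TDOA, with c a known sound speed) and ordered lexicographically by sensor pair. The sensor geometry, the initial guess and all function names are hypothetical, and this is not presented as the CESAR implementation.

```python
import math
from itertools import combinations


def range_differences(source, sensors):
    """|x - p_i| - |x - p_j| for every sensor pair (i < j), in lexicographic pair order."""
    dist = [math.dist(source, p) for p in sensors]
    return [dist[i] - dist[j] for i, j in combinations(range(len(sensors)), 2)]


def localize_gauss_newton(sensors, range_diffs, guess, iters=20, tol=1e-9):
    """Iterative least-squares (Gauss-Newton) 2-D source fix from pairwise range differences.

    range_diffs[k] belongs to the k-th pair of combinations(range(n), 2) and equals
    c * TDOA for that pair. The guess must not coincide with a sensor position.
    """
    pairs = list(combinations(range(len(sensors)), 2))
    x, y = guess
    for _ in range(iters):
        # Accumulate the 2x2 normal equations J^T J and the vector J^T r.
        a11 = a12 = a22 = b1 = b2 = 0.0
        for (i, j), d in zip(pairs, range_diffs):
            (xi, yi), (xj, yj) = sensors[i], sensors[j]
            ri = math.hypot(x - xi, y - yi)
            rj = math.hypot(x - xj, y - yj)
            res = (ri - rj) - d                  # model minus measurement
            jx = (x - xi) / ri - (x - xj) / rj   # d(res)/dx
            jy = (y - yi) / ri - (y - yj) / rj   # d(res)/dy
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            b1 += jx * res
            b2 += jy * res
        # Solve (J^T J) delta = -J^T r with the explicit 2x2 inverse.
        det = a11 * a22 - a12 * a12
        dx = -(a22 * b1 - a12 * b2) / det
        dy = -(a11 * b2 - a12 * b1) / det
        x, y = x + dx, y + dy
        if math.hypot(dx, dy) < tol:
            break
    return x, y


if __name__ == "__main__":
    sensors = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0), (100.0, 100.0)]
    diffs = range_differences((40.0, 60.0), sensors)
    print(localize_gauss_newton(sensors, diffs, guess=(50.0, 50.0)))
```

With four sensors at the corners of a 100 × 100 square and noise-free range differences, a start at the centre converges to the source at (40, 60) within a few iterations. Real TDOAs are noisy, so a weighted or maximum-likelihood variant would normally replace the plain sum of squares.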

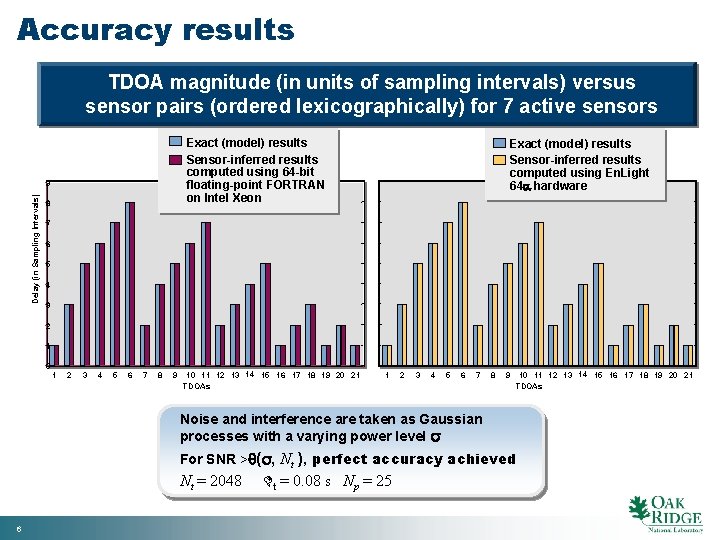

Accuracy results
TDOA magnitude (in units of sampling intervals) versus sensor pairs (ordered lexicographically) for 7 active sensors
[Figure, two panels, each plotting delay (in sampling intervals, 0–9) against TDOA index (1–21). Panel 1: exact (model) results and sensor-inferred results computed using 64-bit floating-point FORTRAN on Intel Xeon. Panel 2: exact (model) results and sensor-inferred results computed using EnLight 64 hardware.]
- Noise and interference are modeled as Gaussian processes with varying power levels.
- For SNR > ( , Nt), perfect accuracy is achieved.
- Nt = 2048, t = 0.08 s, Np = 25
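The accuracy experiment can be mimicked in miniature: estimate each TDOA as the lag that maximizes the cross-correlation of two sensor records, add independent Gaussian noise at a chosen SNR, and count how often the integer-sample lag is exactly right. In this Python sketch the Gaussian source waveform, the SNR definition (unit-power source), the record length (512 samples rather than the slide's Nt = 2048, to keep pure Python fast) and all function names are assumptions.

```python
import random
from itertools import combinations


def tdoa_samples(x, y, max_lag):
    """Integer lag maximizing sum_n x[n] * y[n + lag]; a positive lag means y lags x."""
    best_lag, best_val = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        val = sum(x[n] * y[n + lag] for n in range(len(x)) if 0 <= n + lag < len(y))
        if val > best_val:
            best_lag, best_val = lag, val
    return best_lag


def noisy_pair(delay, snr_db, n, max_lag, rng):
    """Two records of one unit-power Gaussian source; y is x delayed by `delay` samples.

    Each record receives independent white Gaussian noise at the requested SNR.
    Requires |delay| <= max_lag.
    """
    source = [rng.gauss(0.0, 1.0) for _ in range(n + 2 * max_lag)]
    noise_std = 10 ** (-snr_db / 20.0)
    x = [s + rng.gauss(0.0, noise_std) for s in source[max_lag:max_lag + n]]
    y = [s + rng.gauss(0.0, noise_std) for s in source[max_lag - delay:max_lag - delay + n]]
    return x, y


def fraction_correct(delay, snr_db, trials=10, n=512, max_lag=10, seed=1):
    """Fraction of trials in which the estimated lag equals the true delay."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        x, y = noisy_pair(delay, snr_db, n, max_lag, rng)
        hits += int(tdoa_samples(x, y, max_lag) == delay)
    return hits / trials


if __name__ == "__main__":
    pairs = list(combinations(range(1, 8), 2))  # 7 active sensors, lexicographic pairs
    print(len(pairs), pairs[0], pairs[-1])
    for snr_db in (-20, -10, 0, 20):
        print(snr_db, fraction_correct(delay=4, snr_db=snr_db))
```

Sweeping the SNR shows the qualitative behaviour described on the slide: errors at low SNR, and exact integer-sample agreement once the SNR is high enough for the record length used.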

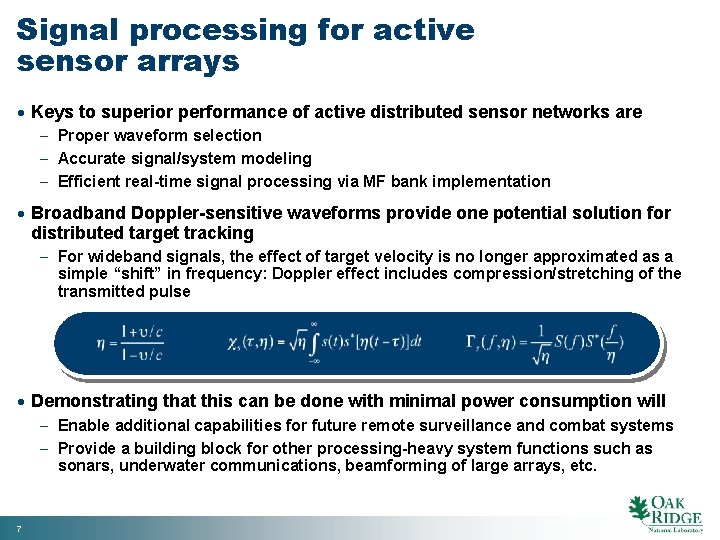

Signal processing for active sensor arrays
Keys to superior performance of active distributed sensor networks are:
- proper waveform selection
- accurate signal/system modeling
- efficient real-time signal processing via a matched-filter (MF) bank implementation
Broadband Doppler-sensitive waveforms provide one potential solution for distributed target tracking:
- For wideband signals, the effect of target velocity can no longer be approximated as a simple "shift" in frequency: the Doppler effect includes compression/stretching of the transmitted pulse.
Demonstrating that this can be done with minimal power consumption will:
- enable additional capabilities for future remote surveillance and combat systems
- provide a building block for other processing-heavy system functions such as sonar, underwater communications, and beamforming of large arrays
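The difference between a frequency shift and time compression can be made concrete with a zero-delay matched-filter bank over Doppler cells. This Python sketch assumes a two-way Doppler scale factor η = (c + v)/(c - v) for a closing speed v, c = 1500 m/s, a 2–4 kHz linear-FM pulse and 32 velocity cells; all parameters and names are illustrative rather than taken from the CESAR system, and a real receiver would also search over delay.

```python
import math

SOUND_SPEED = 1500.0  # m/s, nominal speed of sound in seawater (assumed)


def doppler_scale(v, c=SOUND_SPEED):
    """Two-way wideband Doppler scale factor for a closing radial speed v (m/s)."""
    return (c + v) / (c - v)


def lfm_pulse(t, f0=2000.0, bandwidth=2000.0, duration=0.05):
    """Real linear-FM pulse sweeping f0 .. f0 + bandwidth; zero outside [0, duration)."""
    if not 0.0 <= t < duration:
        return 0.0
    k = bandwidth / duration  # sweep rate in Hz/s
    return math.cos(2.0 * math.pi * (f0 * t + 0.5 * k * t * t))


def replica(eta, fs=20000.0, n=1100):
    """Samples of s(eta * t): compressed in time for eta > 1, stretched for eta < 1."""
    return [lfm_pulse(eta * i / fs) for i in range(n)]


def best_doppler_cell(echo, velocities):
    """Index of the Doppler cell whose scaled replica best matches the echo at zero delay."""
    scores = []
    for v in velocities:
        rep = replica(doppler_scale(v), n=len(echo))
        dot = sum(a * b for a, b in zip(echo, rep))
        norm = math.sqrt(sum(b * b for b in rep))
        scores.append(abs(dot) / norm)  # energy-normalized correlation
    return max(range(len(velocities)), key=scores.__getitem__)


if __name__ == "__main__":
    velocities = [-15.5 + k for k in range(32)]   # 32 hypothetical Doppler cells
    eta = doppler_scale(velocities[20])           # target closing at 4.5 m/s
    echo = replica(eta)
    print(best_doppler_cell(echo, velocities))
    # Under compression each frequency f moves by (eta - 1) * f, not by a constant.
    print((eta - 1.0) * 2000.0, (eta - 1.0) * 4000.0)
```

For v = 4.5 m/s the scale factor is about 1.006, which moves a 2 kHz component by roughly 12 Hz and a 4 kHz component by roughly 24 Hz, so no single frequency shift describes the whole band. The bank still picks the correct cell because, by the Cauchy-Schwarz inequality, the energy-normalized correlation is largest only for the replica that matches the echo.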

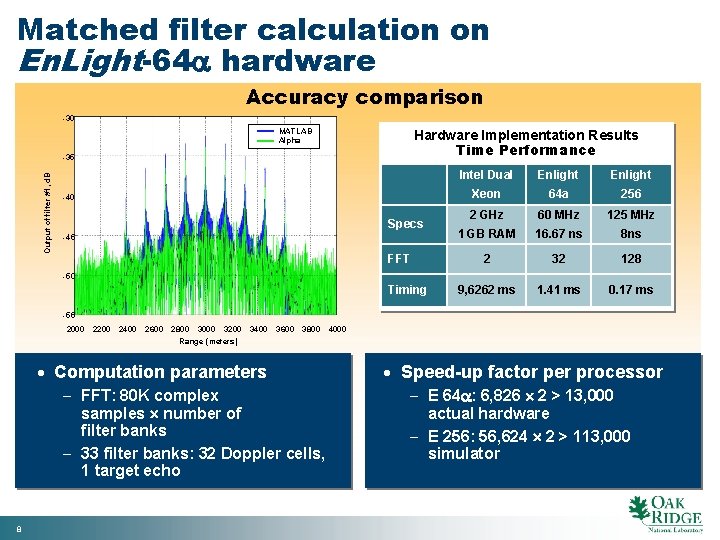

Matched filter calculation on EnLight 64 hardware
[Figure: accuracy comparison, output of filter #1 (dB, -55 to -30) versus range (2000–4000 meters); MATLAB results vs. EnLight 64α hardware implementation results]
FFT time performance:

| | Intel Dual Xeon | EnLight 64α | EnLight 256 |
|---|---|---|---|
| Clock | 2 GHz | 60 MHz | 125 MHz |
| Memory | 1 GB RAM | | |
| Clock period | | 16.67 ns | 8 ns |
| | 2 | 32 | 128 |
| Time | 9,626 ms | 1.41 ms | 0.17 ms |

Computation parameters:
- FFT: 80K complex samples
- Number of filter banks: 33 (32 Doppler cells, 1 target echo)
Speed-up factor per processor:
- E64: 6,826 × 2 > 13,000 (actual hardware)
- E256: 56,624 × 2 > 113,000 (simulator)
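A matched filter is usually evaluated in the frequency domain: transform the received block and the replica, multiply by the conjugate replica spectrum, and transform back. The Python sketch below shows that recipe with a textbook radix-2 FFT on a 128-sample toy problem (far smaller than the 80K-sample, 33-bank benchmark) and adds the speed-up arithmetic from the slide. The chirp replica, the sizes and the helper names are assumptions, and reading the factor of 2 as the two processors of the dual Xeon is an interpretation of "speed-up factor per processor".

```python
import cmath


def fft(a):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    if n % 2:
        raise ValueError("length must be a power of two")
    even, odd = fft(a[0::2]), fft(a[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def ifft(a):
    """Inverse FFT via the identity ifft(X) = conj(fft(conj(X))) / n."""
    n = len(a)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in a])]


def matched_filter(received, template):
    """Circular cross-correlation sum_n received[n] * conj(template[n - k]) via FFTs."""
    n = len(received)
    padded = list(template) + [0j] * (n - len(template))
    spectrum = [r * s.conjugate() for r, s in zip(fft(received), fft(padded))]
    return ifft(spectrum)


def speedup_per_processor(t_ref_ms, t_ms, ref_processors=2):
    """Wall-clock ratio scaled by the processor count of the reference machine."""
    return t_ref_ms / t_ms * ref_processors


if __name__ == "__main__":
    template = [cmath.exp(1j * cmath.pi * k * k / 32) for k in range(32)]  # toy chirp
    received = [0j] * 128
    received[37:37 + 32] = template  # echo delayed by 37 samples
    out = matched_filter(received, template)
    print(max(range(128), key=lambda k: abs(out[k])))  # estimated delay in samples
    print(speedup_per_processor(9626, 1.41), speedup_per_processor(9626, 0.17))
```

The correlation peak lands at the echo delay because every other circular shift overlaps the 32-sample chirp on fewer samples. Using the timing row of the table, the per-processor factors come out just above 13,000 and 113,000.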

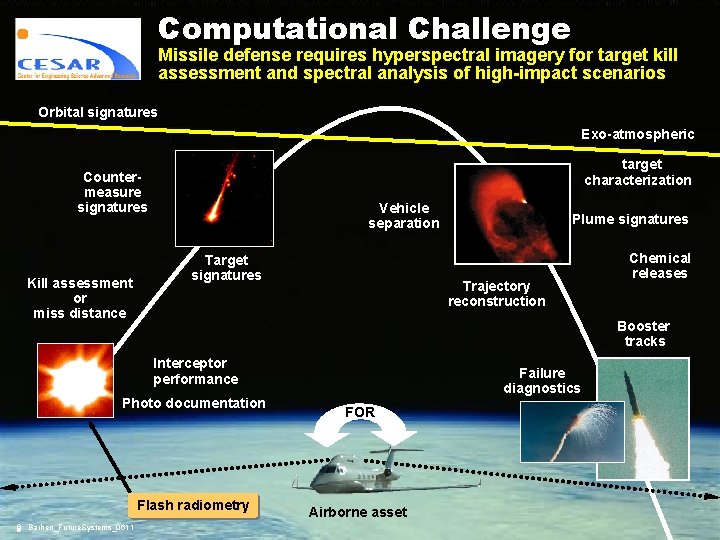

Computational challenge
Missile defense requires hyperspectral imagery for target kill assessment and spectral analysis of high-impact scenarios:
- orbital signatures
- exo-atmospheric target characterization
- countermeasure signatures
- kill assessment or miss distance
- vehicle separation
- target signatures
- trajectory reconstruction
- chemical releases
- booster tracks
- interceptor performance
- photo documentation
- flash radiometry
- plume signatures
- failure diagnostics
[Diagram labels: FOR, airborne asset]
Barhen_FutureSystems_0611

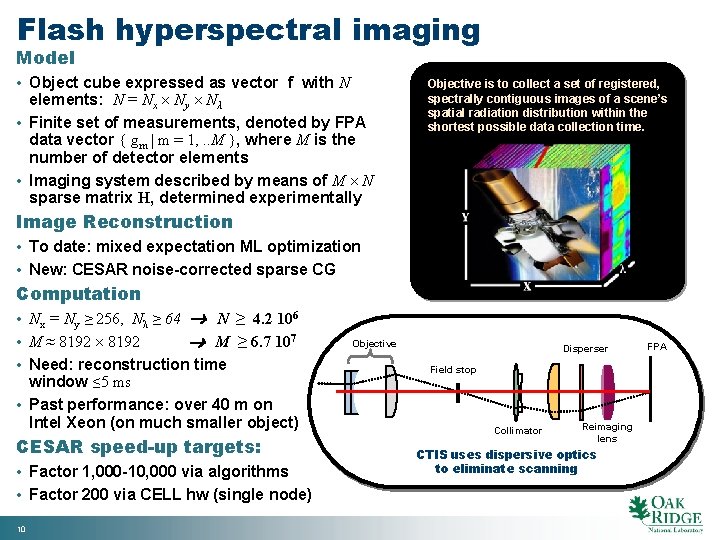

Flash hyperspectral imaging
Objective: collect a set of registered, spectrally contiguous images of a scene's spatial radiation distribution within the shortest possible data collection time.
Model:
- Object cube expressed as a vector f with N elements: $N = N_x N_y N_\lambda$
- Finite set of measurements, denoted by the FPA data vector $\{ g_m \mid m = 1, \dots, M \}$, where M is the number of detector elements
- Imaging system described by an $M \times N$ sparse matrix H, determined experimentally
Image reconstruction:
- To date: mixed-expectation ML optimization
- New: CESAR noise-corrected sparse CG
Computation:
- $N_x = N_y \ge 256$, $N_\lambda \ge 64$, so $N \ge 4.2 \times 10^6$
- $M \approx 8192^2$, so $M \ge 6.7 \times 10^7$
- Need: reconstruction time window ≤ 5 ms
- Past performance: over 40 min on Intel Xeon (on a much smaller object)
CESAR speed-up targets:
- factor of 1,000–10,000 via algorithms
- factor of 200 via CELL hardware (single node)
[Diagram: CTIS optical layout with objective, field stop, collimator, disperser, reimaging lens and FPA. CTIS (computed tomography imaging spectrometer) uses dispersive optics to eliminate scanning.]
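The slide does not describe CESAR's noise-corrected sparse CG, so the following is only a generic stand-in: plain conjugate gradient on the normal equations (CGLS) for g = H f, with H stored row-wise as (column, value) pairs so that neither H nor its transpose is ever formed densely. The storage layout, the tolerance and the function names are assumptions, and no noise correction is included.

```python
import math


def sparse_matvec(rows, x):
    """y = H x for H stored as a list of rows, each a list of (column, value) pairs."""
    return [sum(v * x[j] for j, v in row) for row in rows]


def sparse_rmatvec(rows, y, n_cols):
    """z = H^T y computed from the row storage, without forming the transpose."""
    z = [0.0] * n_cols
    for yi, row in zip(y, rows):
        for j, v in row:
            z[j] += v * yi
    return z


def cgls(rows, g, n_cols, iters=100, tol=1e-10):
    """Conjugate gradient on the normal equations H^T H f = H^T g (CGLS)."""
    f = [0.0] * n_cols
    r = list(g)                          # residual g - H f for the initial f = 0
    s = sparse_rmatvec(rows, r, n_cols)  # gradient direction H^T r
    p = list(s)
    gamma = sum(v * v for v in s)
    for _ in range(iters):
        if math.sqrt(gamma) < tol:
            break
        q = sparse_matvec(rows, p)
        qq = sum(v * v for v in q)
        if qq == 0.0:
            break
        alpha = gamma / qq
        f = [fk + alpha * pk for fk, pk in zip(f, p)]
        r = [rk - alpha * qk for rk, qk in zip(r, q)]
        s = sparse_rmatvec(rows, r, n_cols)
        gamma_new = sum(v * v for v in s)
        p = [sk + (gamma_new / gamma) * pk for sk, pk in zip(s, p)]
        gamma = gamma_new
    return f


if __name__ == "__main__":
    # Tiny 4 x 3 sparse "imaging matrix" with a known object vector.
    H = [[(0, 1.0), (1, 2.0)], [(1, 1.0), (2, -1.0)],
         [(0, 3.0), (2, 1.0)], [(0, 1.0), (1, 1.0), (2, 1.0)]]
    f_true = [1.0, -2.0, 0.5]
    g = sparse_matvec(H, f_true)
    print(cgls(H, g, n_cols=3))
```

Each iteration costs one multiply by H and one by its transpose, which is why sparsity of H dominates the cost at the problem sizes quoted above; pure Python would of course be far too slow for the 5 ms target.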

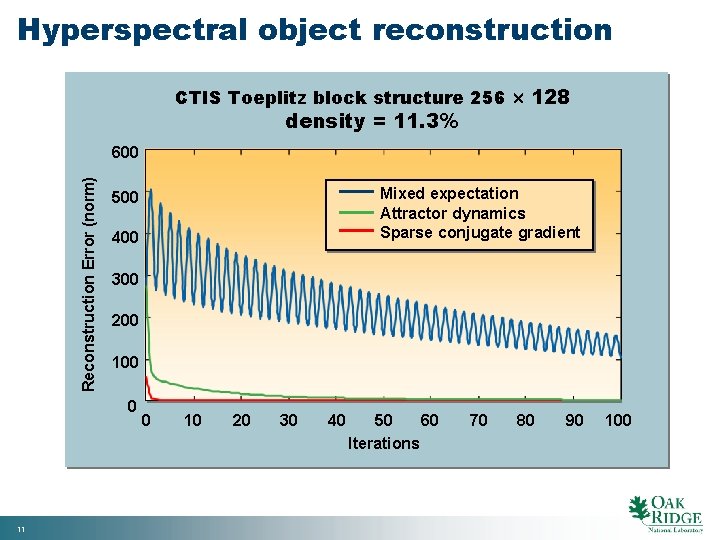

Hyperspectral object reconstruction
[Figure: CTIS system matrix with Toeplitz block structure, 256 × 128, density = 11.3%]
[Figure: reconstruction error (norm, 0–600) versus iterations (0–100) for mixed expectation, attractor dynamics, and sparse conjugate gradient]
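Block-Toeplitz structure means that the (i, j) block of the matrix depends only on i - j, so only the distinct blocks need to be stored, and the quoted density is simply the fraction of nonzero entries. This Python sketch assembles a small block-Toeplitz matrix from a dictionary of offset blocks and computes its density; the block sizes, values and function names are hypothetical and unrelated to the actual 256 × 128 CTIS matrix.

```python
def block_toeplitz(blocks, n_block_rows, n_block_cols):
    """Assemble a dense block-Toeplitz matrix whose (i, j) block is blocks[i - j].

    `blocks` maps an integer offset (i - j) to a p x q list of lists; offsets that
    are missing from the dictionary are treated as all-zero blocks.
    """
    sample = next(iter(blocks.values()))
    p, q = len(sample), len(sample[0])
    rows = [[0.0] * (q * n_block_cols) for _ in range(p * n_block_rows)]
    for bi in range(n_block_rows):
        for bj in range(n_block_cols):
            block = blocks.get(bi - bj)
            if block is None:
                continue
            for r in range(p):
                for c in range(q):
                    rows[bi * p + r][bj * q + c] = block[r][c]
    return rows


def density(matrix):
    """Fraction of entries that are nonzero."""
    total = sum(len(row) for row in matrix)
    nonzero = sum(1 for row in matrix for x in row if x != 0)
    return nonzero / total


if __name__ == "__main__":
    # Two stored 2 x 2 blocks generate a 6 x 6 matrix (3 x 3 blocks).
    m = block_toeplitz({0: [[1, 0], [0, 1]], 1: [[0, 2], [0, 0]]}, 3, 3)
    for row in m:
        print(row)
    print(density(m))
```

Here two stored blocks describe all nine block positions; for a large, sparse system matrix the same idea lets matrix-vector products reuse a handful of blocks instead of reading the full matrix.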

Contacts
- Jacob Barhen, Center for Engineering Science Advanced Research, Computer Science and Mathematics
  (865) 574-7131, barhenj@ornl.gov
  SIPRNET: barhenj@ornl.doe.sgov.gov
- Neena Imam, Center for Engineering Science Advanced Research, Computer Science and Mathematics
  (865) 574-8701, imamn@ornl.gov
