In-network Aggregation for Shared Machine Learning Clusters

- Slides: 21

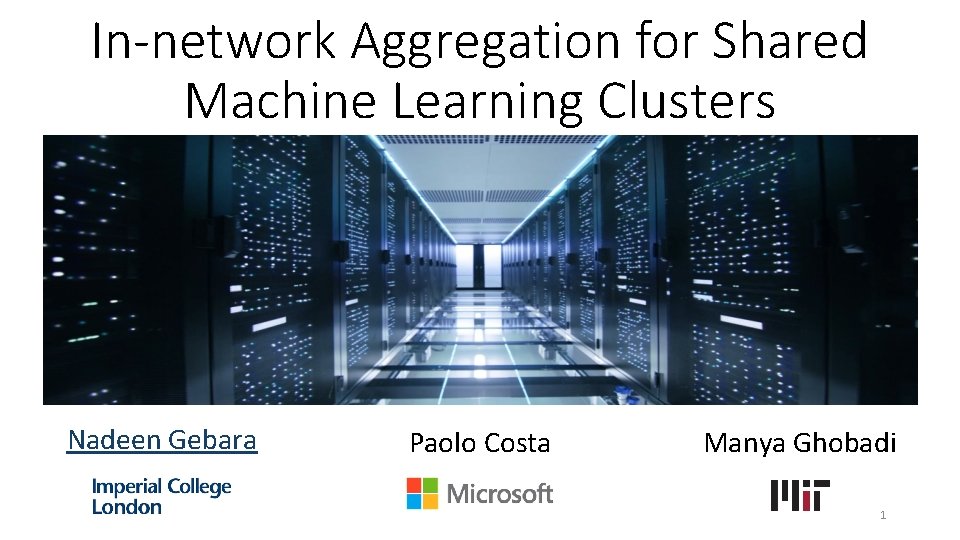

In-network Aggregation for Shared Machine Learning Clusters

Nadeen Gebara, Paolo Costa, Manya Ghobadi

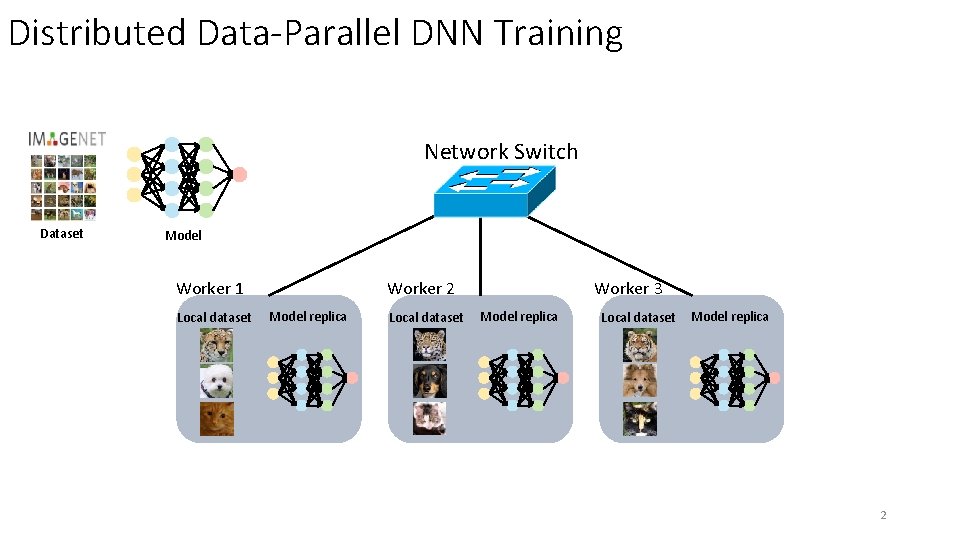

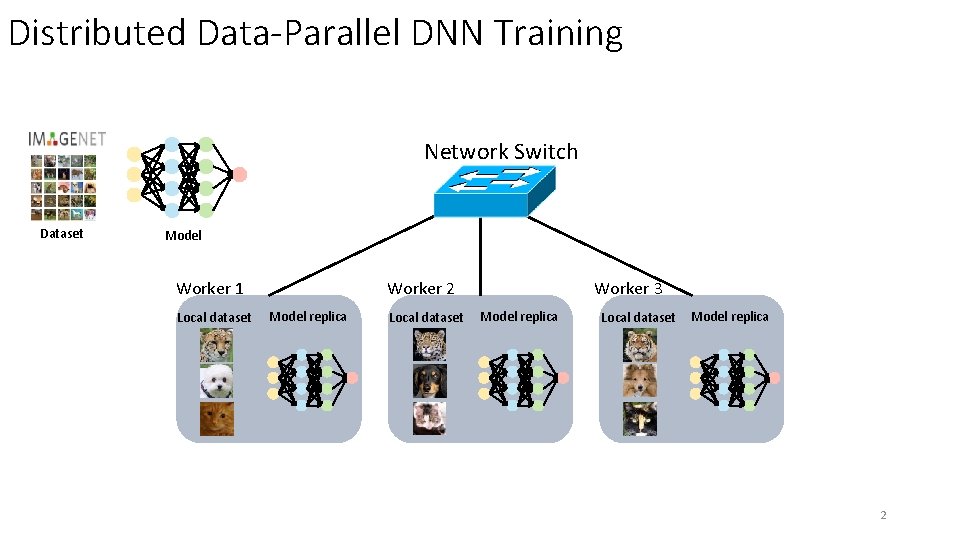

Distributed Data-Parallel DNN Training

Diagram: the dataset and the model are distributed across three workers connected by a network switch. Worker 1, Worker 2, and Worker 3 each hold a local dataset and a model replica.

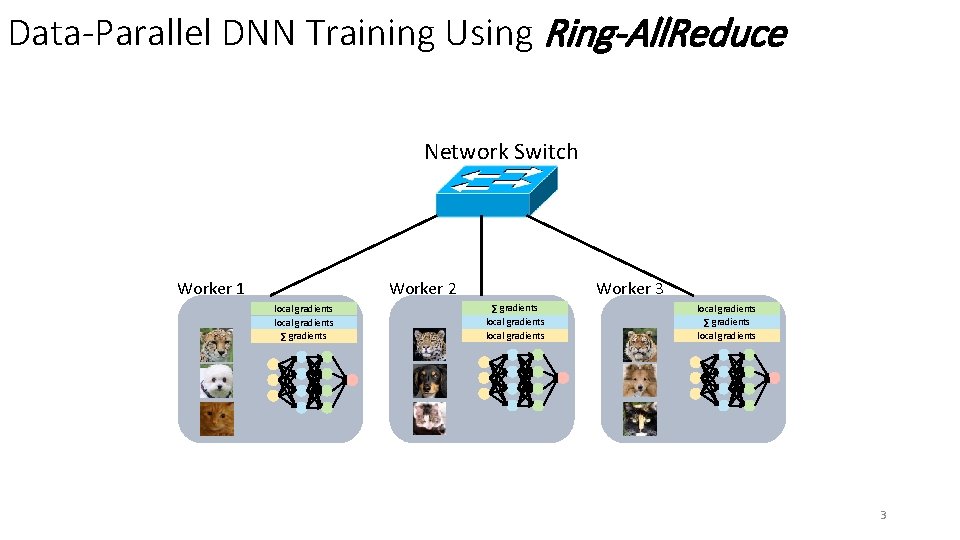

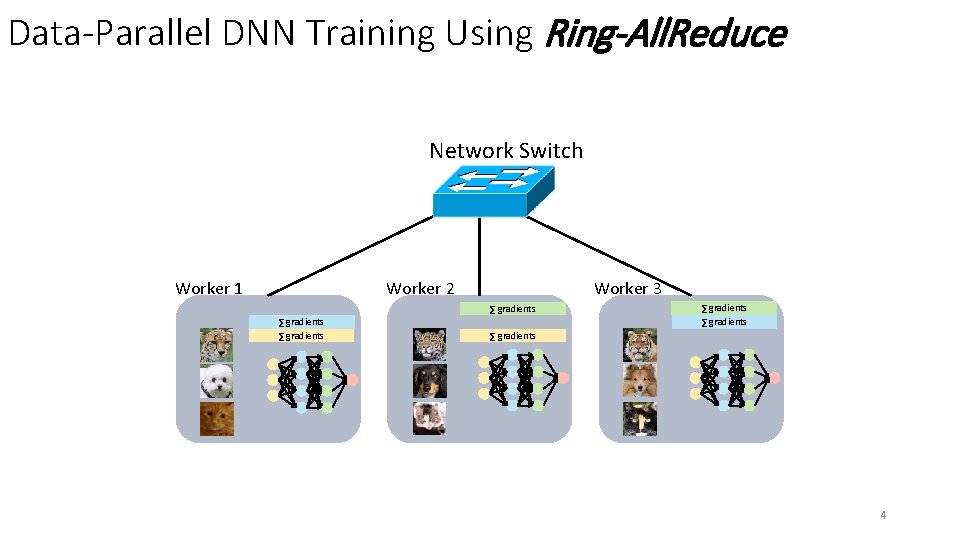

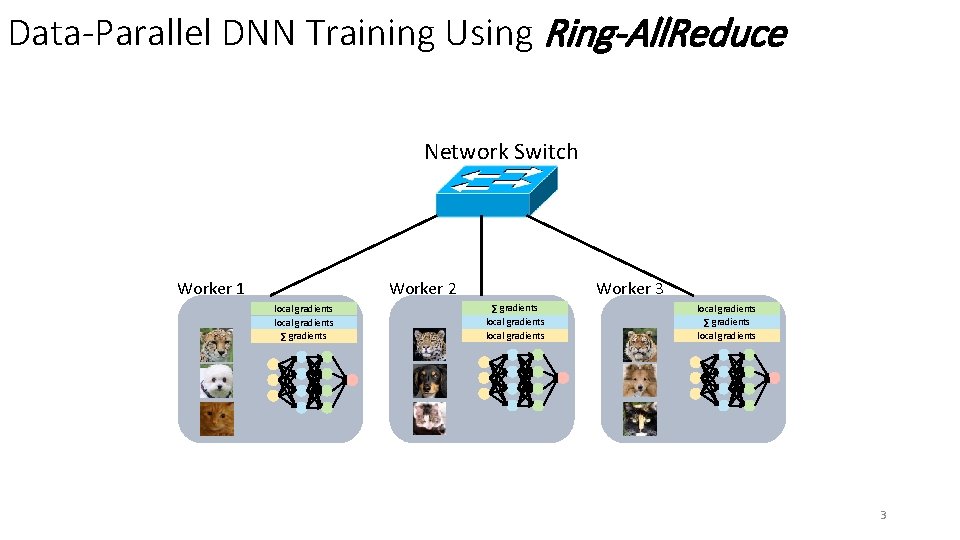

Data-Parallel DNN Training Using Ring-AllReduce

Diagram: Worker 1, Worker 2, and Worker 3, connected through a network switch, each hold local gradients and a running ∑ gradients. Local gradients are exchanged between workers.

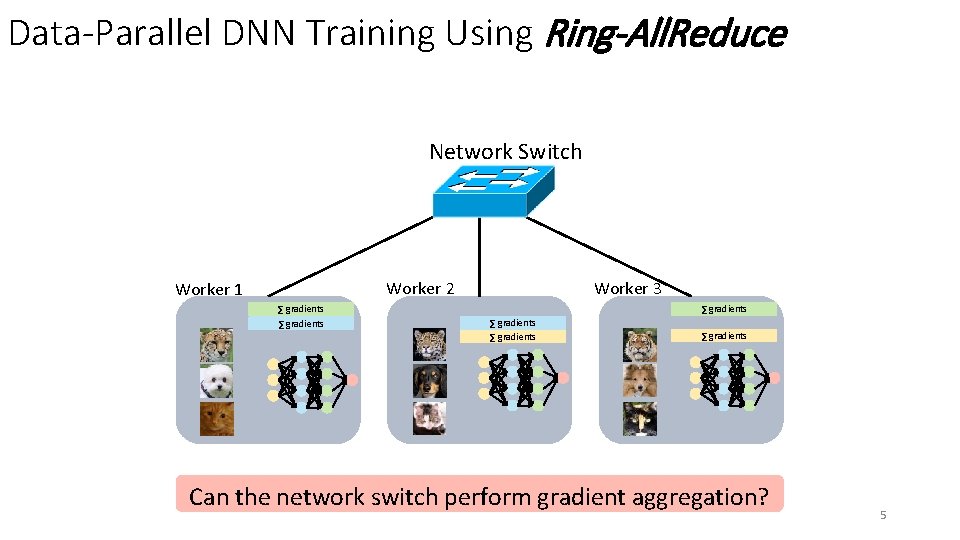

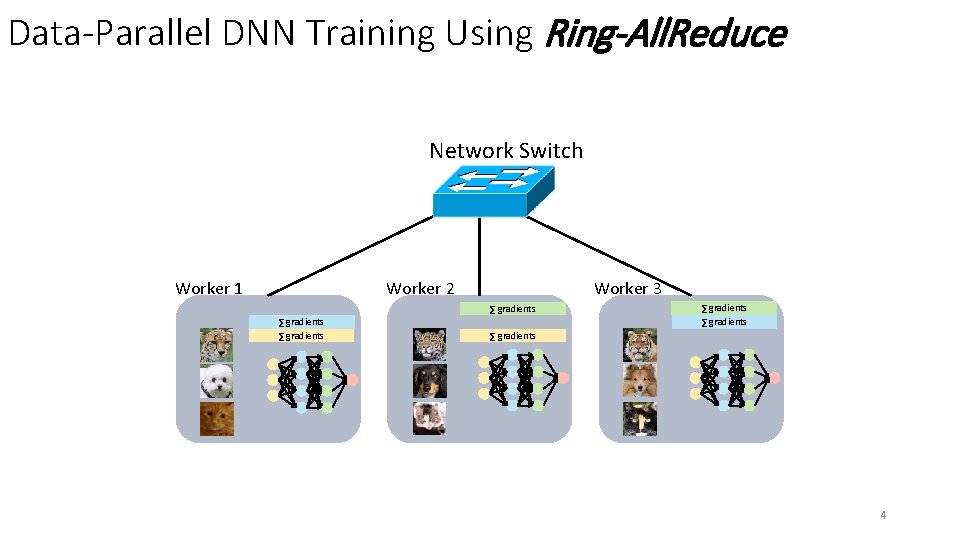

Data-Parallel DNN Training Using Ring-AllReduce

Diagram (next step): the exchange continues, and each worker's ∑ gradients accumulates more of the local gradients.
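The ring exchange pictured on these slides can be sketched in a few lines of Python. This is a minimal simulation of the textbook ring all-reduce (a reduce-scatter phase followed by an all-gather phase), not code from the talk. The function name `ring_allreduce` and the assumption that the gradient length divides evenly by the number of workers are illustrative choices.

```python
def ring_allreduce(grads):
    """Simulate ring all-reduce; grads holds one equal-length list per worker."""
    n = len(grads)
    size = len(grads[0])
    assert size % n == 0, "sketch assumes the gradient splits evenly into n chunks"
    c = size // n
    # Each worker's buffer is split into n chunks.
    buf = [[list(g[i * c:(i + 1) * c]) for i in range(n)] for g in grads]

    # Reduce-scatter: after n-1 steps, worker w holds the full sum of chunk (w+1) % n.
    for step in range(n - 1):
        # Snapshot what every worker sends before applying any receive.
        sends = [(w, (w - step) % n, buf[w][(w - step) % n]) for w in range(n)]
        for w, idx, chunk in sends:
            dst = (w + 1) % n
            buf[dst][idx] = [a + b for a, b in zip(buf[dst][idx], chunk)]

    # All-gather: circulate the fully reduced chunks until every worker has all of them.
    for step in range(n - 1):
        sends = [(w, (w + 1 - step) % n, buf[w][(w + 1 - step) % n]) for w in range(n)]
        for w, idx, chunk in sends:
            buf[(w + 1) % n][idx] = list(chunk)

    # Flatten each worker's chunks back into one gradient vector.
    return [[x for chunk in b for x in chunk] for b in buf]


result = ring_allreduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
```

With three workers, each one ends up holding the same summed gradient after two reduce-scatter steps and two all-gather steps, all carried over worker-to-worker links.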

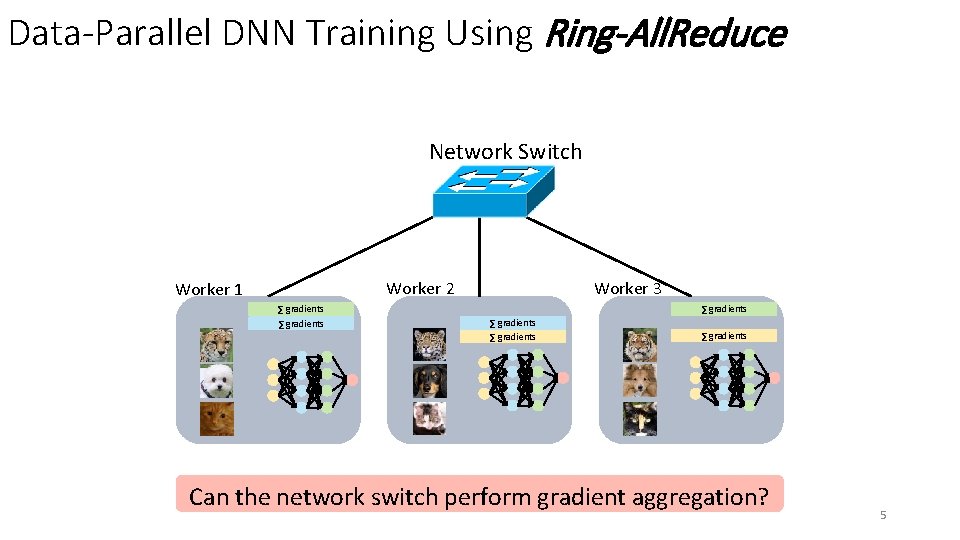

Data-Parallel DNN Training Using Ring-AllReduce

Diagram (final step): Worker 1, Worker 2, and Worker 3 each hold the complete ∑ gradients.

Can the network switch perform gradient aggregation?

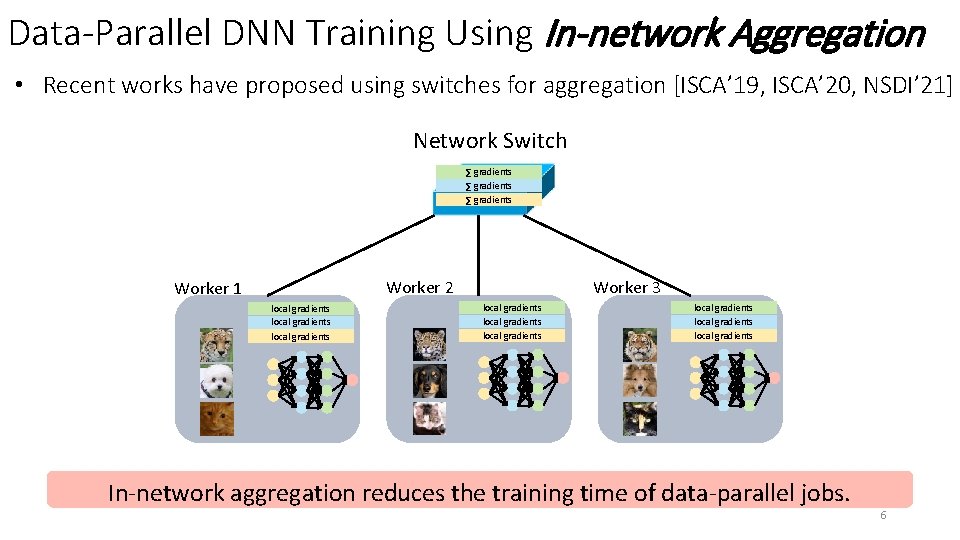

Data-Parallel DNN Training Using In-network Aggregation

- Recent works have proposed using switches for aggregation [ISCA’19, ISCA’20, NSDI’21].

Diagram: Worker 1, Worker 2, and Worker 3 send their local gradients to the network switch, which computes ∑ gradients and returns the result to the workers.

In-network aggregation reduces the training time of data-parallel jobs.
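One way to see where the saving comes from is to compare how much data each worker has to send. The sketch below uses the standard back-of-the-envelope figures, which are not stated on the slides: with ring all-reduce each of N workers sends 2(N-1)/N times the model size, while with switch-side aggregation each worker sends its gradients once. The function names are hypothetical.

```python
def switch_aggregate(local_grads):
    """Model a switch that sums one gradient vector per worker and returns the sum to each."""
    total = [sum(vals) for vals in zip(*local_grads)]
    return [list(total) for _ in local_grads]


def bytes_sent_per_worker(model_bytes, n_workers):
    """Return (ring all-reduce, in-network aggregation) bytes sent by one worker."""
    ring = 2 * (n_workers - 1) * model_bytes / n_workers
    in_network = model_bytes
    return ring, in_network


ring_mb, in_network_mb = bytes_sent_per_worker(400, 4)  # 400 MB model, 4 workers
```

For a 400 MB model and four workers, this gives 600 MB sent per worker for the ring against 400 MB with switch aggregation, and the gap approaches a factor of two as the number of workers grows.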

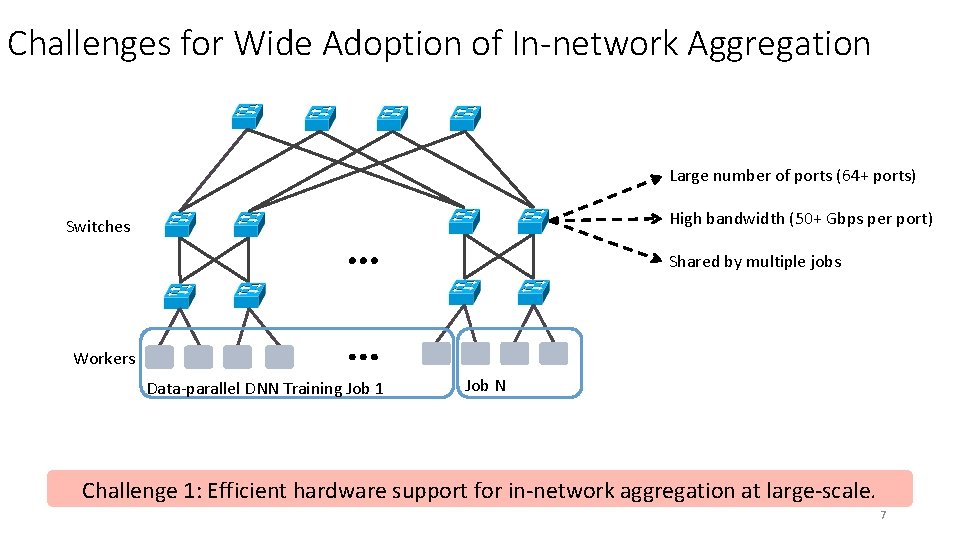

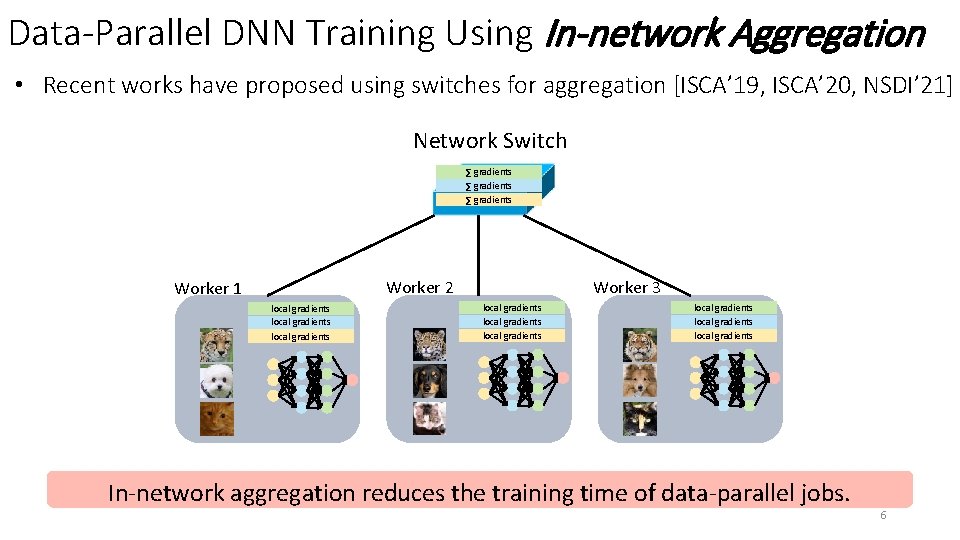

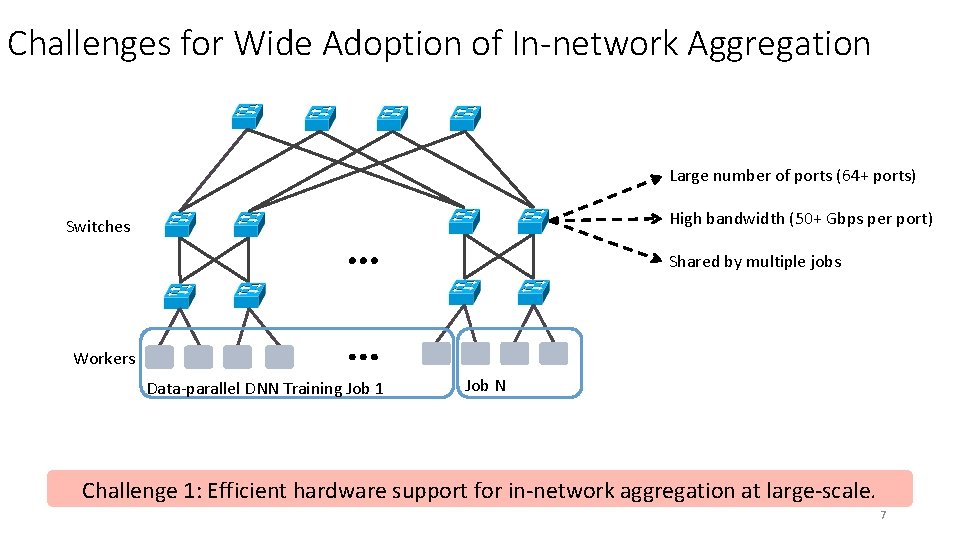

Challenges for Wide Adoption of In-network Aggregation

- Switches: large number of ports (64+ ports), high bandwidth (50+ Gbps per port), shared by multiple jobs.
- Workers: data-parallel DNN training, Job 1 through Job N.

Challenge 1: Efficient hardware support for in-network aggregation at large scale.

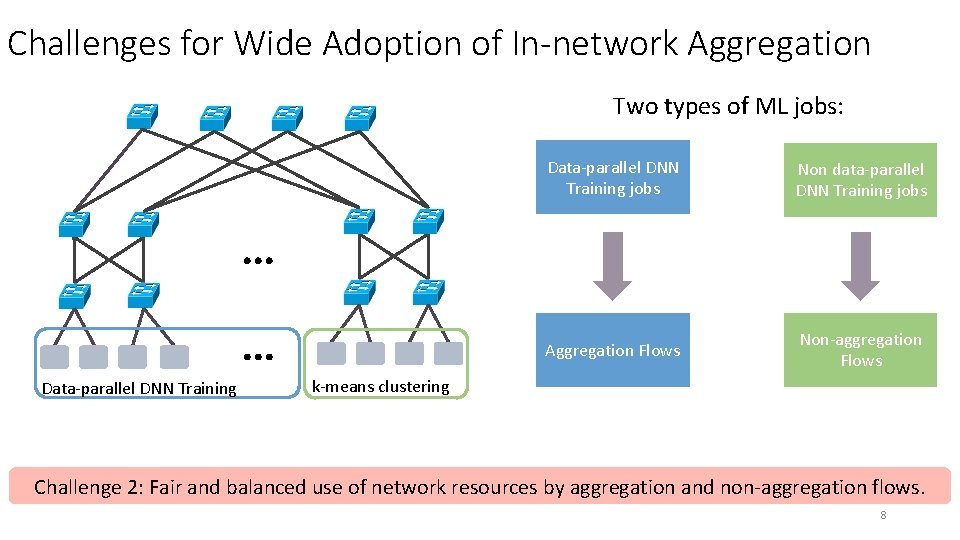

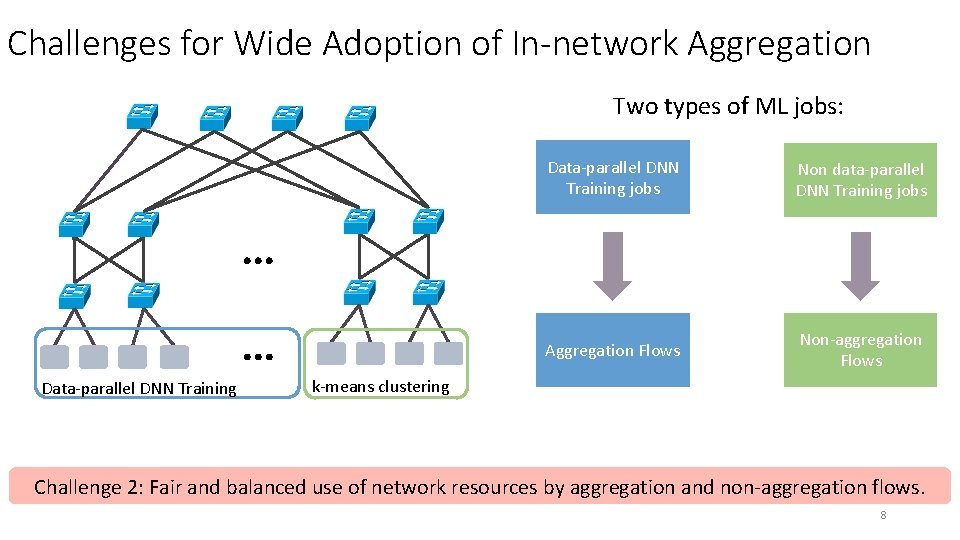

Challenges for Wide Adoption of In-network Aggregation

Two types of ML jobs:

- Data-parallel DNN training jobs, which generate aggregation flows.
- Non-data-parallel DNN training jobs, which generate non-aggregation flows (example shown: k-means clustering).

Challenge 2: Fair and balanced use of network resources by aggregation and non-aggregation flows.

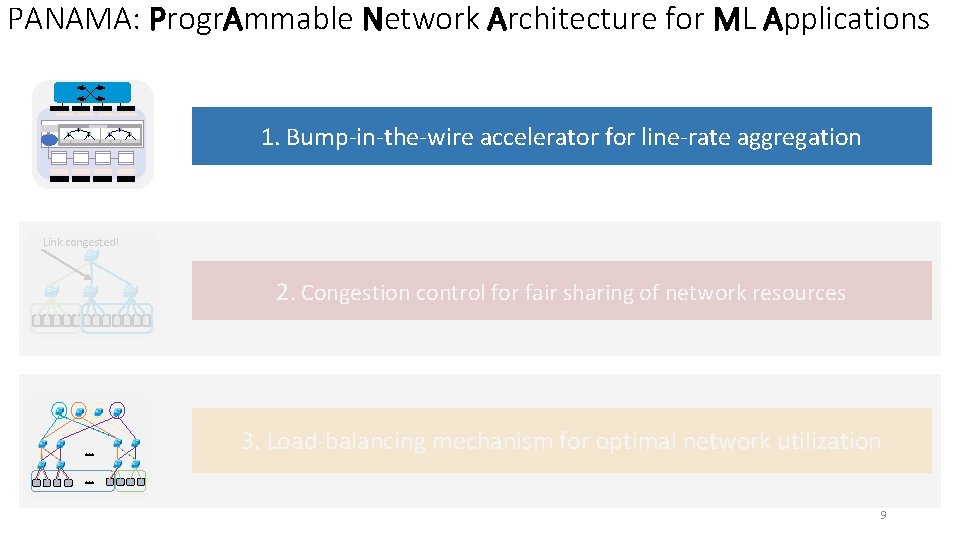

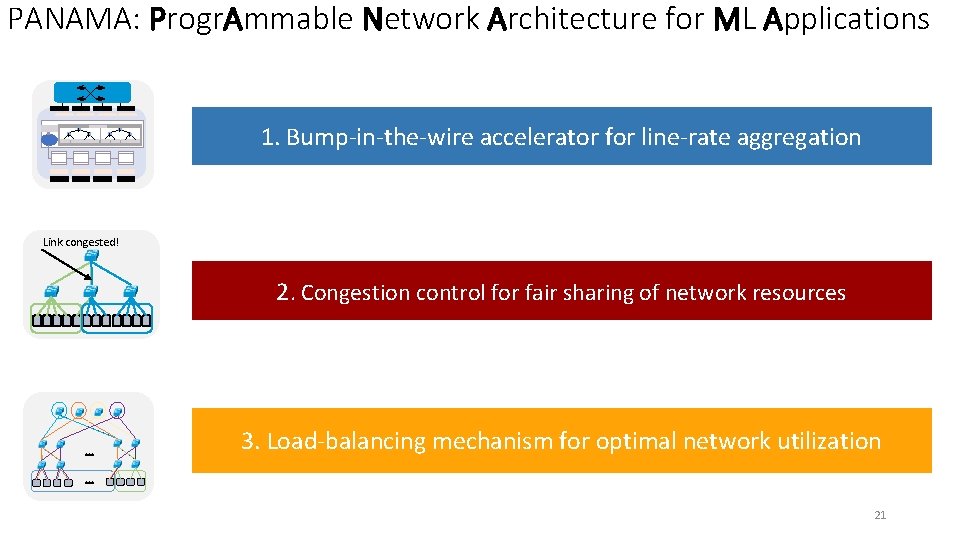

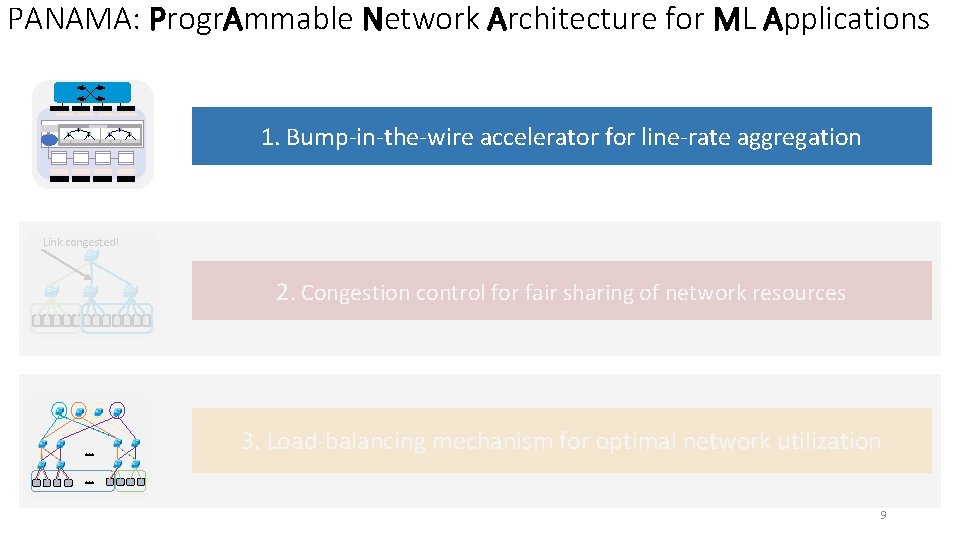

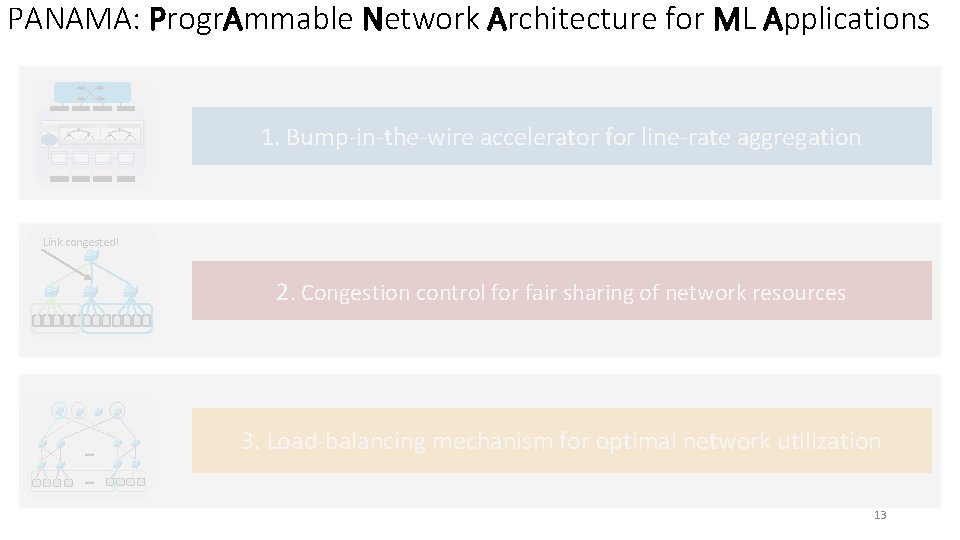

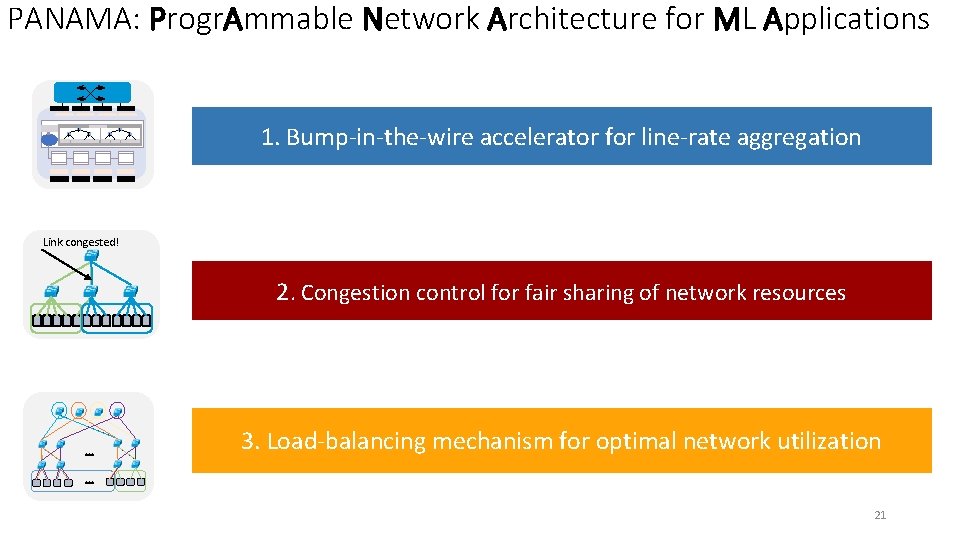

PANAMA: ProgrAmmable Network Architecture for ML Applications

1. Bump-in-the-wire accelerator for line-rate aggregation
2. Congestion control for fair sharing of network resources
3. Load-balancing mechanism for optimal network utilization

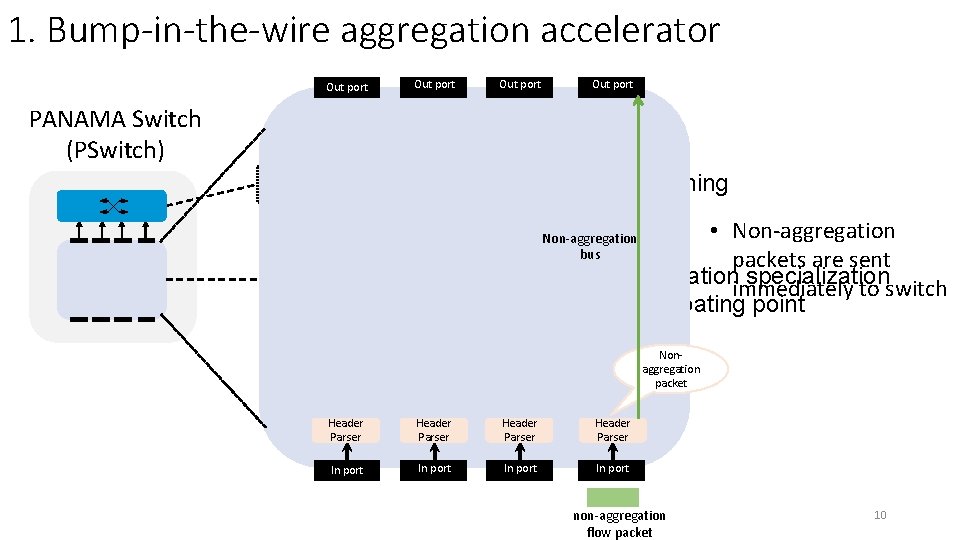

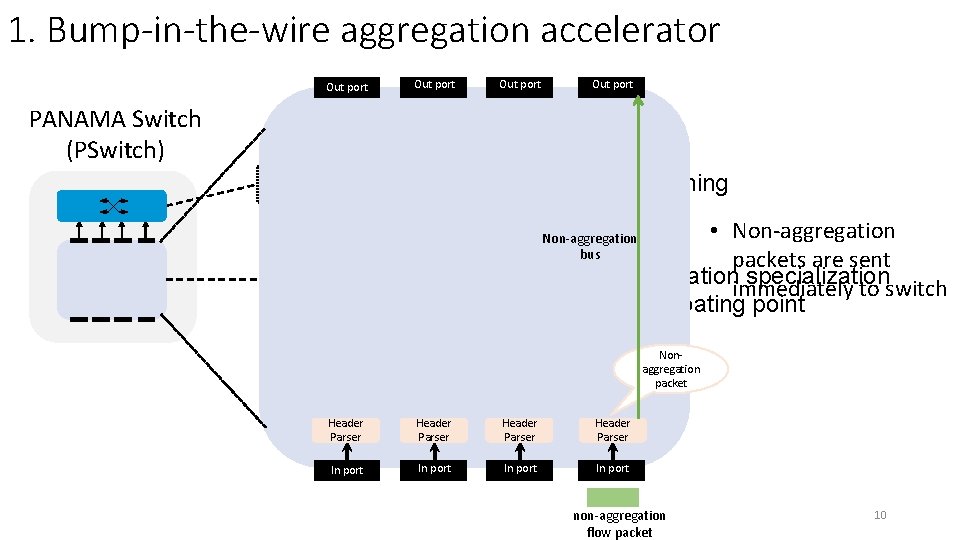

1. Bump-in-the-wire aggregation accelerator

- Network switch: routing and switching.
- PANAMA Switch (PSwitch): in-network aggregation specialization, with support for floating point computation.
- Non-aggregation packets are sent immediately to the switch.

Diagram: a non-aggregation flow packet enters the PSwitch at the in port, passes the header parser, and travels over the non-aggregation bus, bypassing the aggregation accelerator, to the out port.

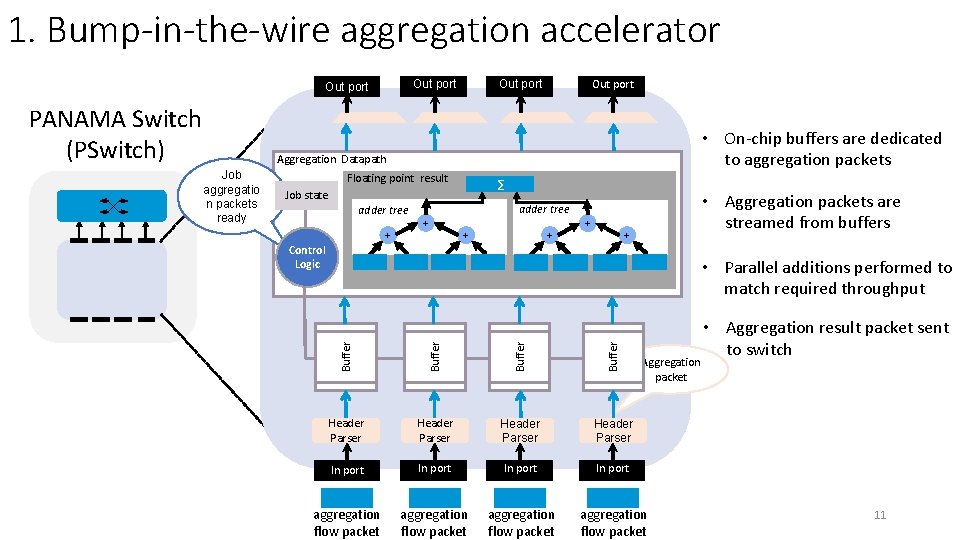

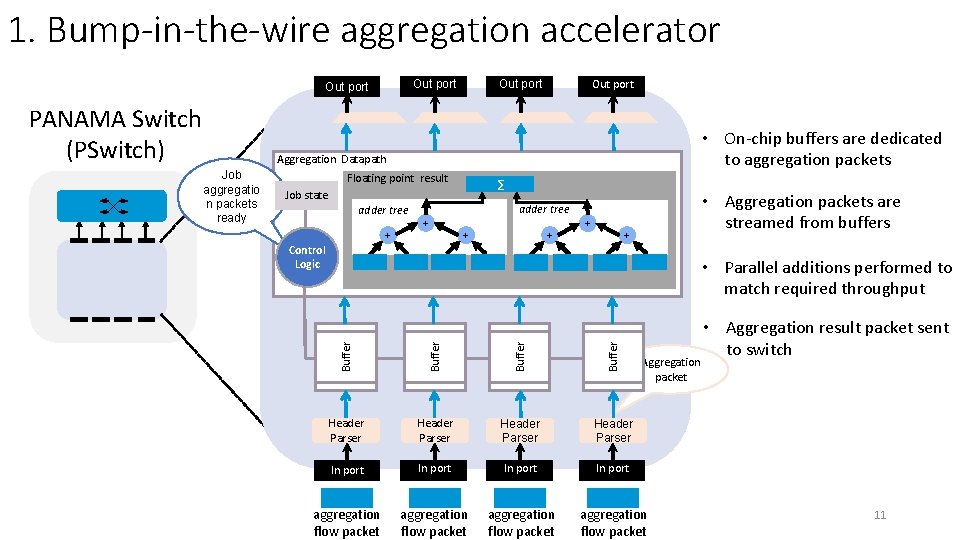

1. Bump-in-the-wire aggregation accelerator

- On-chip buffers are dedicated to aggregation packets.
- Aggregation packets are streamed from the buffers.
- Parallel additions are performed to match the required throughput.
- The aggregation result packet is sent to the switch.

Diagram: an aggregation flow packet enters the PSwitch at the in port and passes the header parser into the buffers. Once a job's aggregation packets are ready, the control logic, using the job state, streams them through the aggregation datapath (a floating point adder tree), and the result leaves through the out port.
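The two paths through the PSwitch can be modeled in software as follows. This is a behavioral sketch under stated assumptions, not the hardware design: the `Packet` fields, the use of `job_id=None` to mark non-aggregation traffic, the `seq` chunk number, and the class name `PSwitchSketch` are all hypothetical. The `adder_tree` helper mimics the pairwise, level-by-level summation of an adder tree.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Packet:
    job_id: Optional[int]  # None marks a non-aggregation packet (hypothetical encoding)
    seq: int = 0           # hypothetical chunk sequence number within a job
    values: tuple = ()     # floating point gradient values carried by the packet


def adder_tree(vectors):
    """Sum equal-length vectors pairwise, level by level, like a hardware adder tree."""
    level = [list(v) for v in vectors]
    while len(level) > 1:
        nxt = []
        for i in range(0, len(level) - 1, 2):
            nxt.append([a + b for a, b in zip(level[i], level[i + 1])])
        if len(level) % 2:
            nxt.append(level[-1])  # an odd vector passes through to the next level
        level = nxt
    return level[0]


class PSwitchSketch:
    def __init__(self, workers_per_job):
        self.workers_per_job = workers_per_job  # job state: expected fan-in per job
        self.buffers = defaultdict(list)        # (job_id, seq) -> buffered value vectors

    def receive(self, pkt):
        """Return the packet to forward to the switch, or None while still buffering."""
        if pkt.job_id is None:
            return pkt  # non-aggregation path: forward immediately
        key = (pkt.job_id, pkt.seq)
        self.buffers[key].append(pkt.values)
        if len(self.buffers[key]) < self.workers_per_job[pkt.job_id]:
            return None  # wait until every worker's packet for this chunk has arrived
        vectors = self.buffers.pop(key)
        return Packet(pkt.job_id, pkt.seq, tuple(adder_tree(vectors)))


ps = PSwitchSketch({7: 3})  # job 7 has three workers
outputs = [ps.receive(Packet(7, 0, v)) for v in [(1.0, 2.0), (2.0, 3.0), (4.5, 0.5)]]
```

Only the third packet for a chunk triggers an output. The first two are held in the buffer, which is why the slide dedicates on-chip buffers to aggregation traffic while other packets bypass the accelerator.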

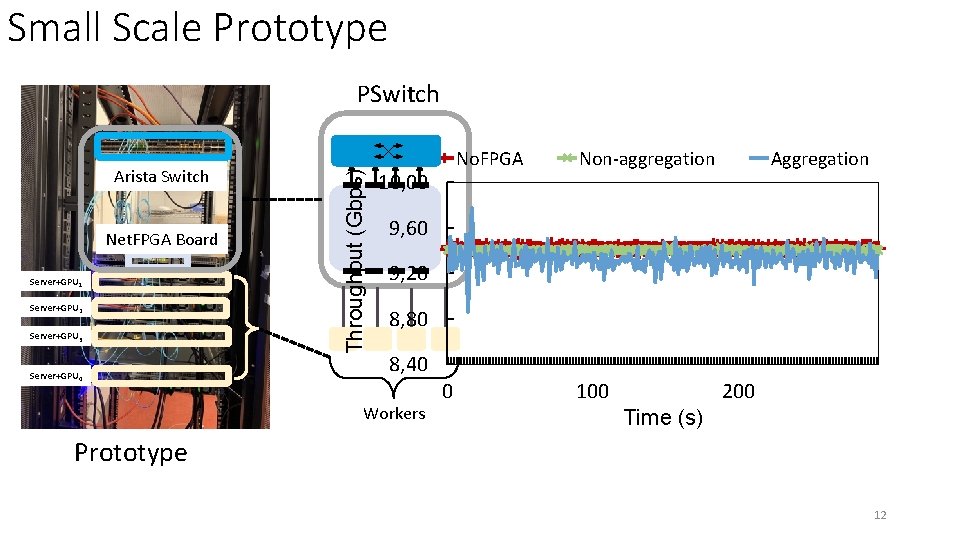

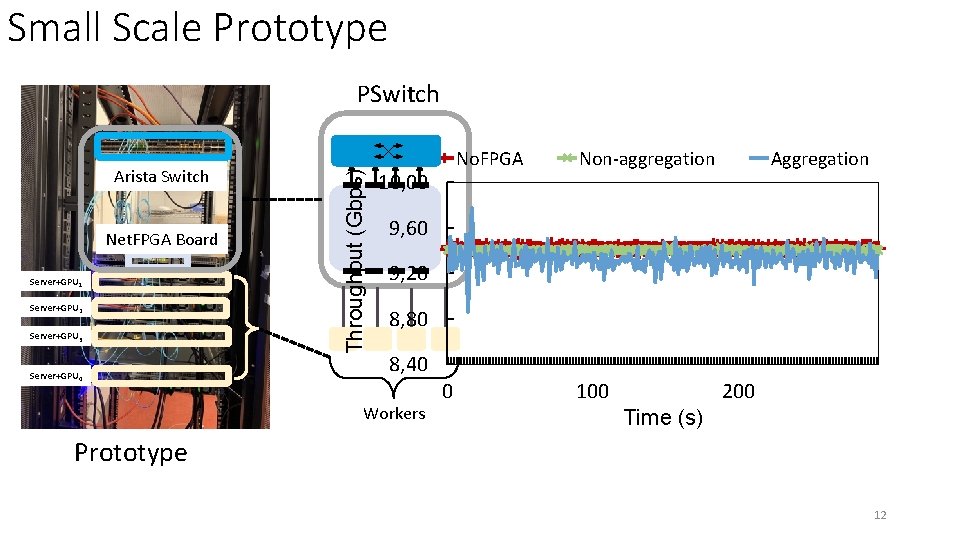

Small Scale Prototype

- Testbed: an Arista switch, a NetFPGA board, and four workers (Server+GPU 1 to Server+GPU 4).
- Plot: throughput (Gbps, axis from 8.40 to 10.00) over time (s, 0 to 200). Plot labels: PSwitch, NoFPGA, Non-aggregation, Aggregation.

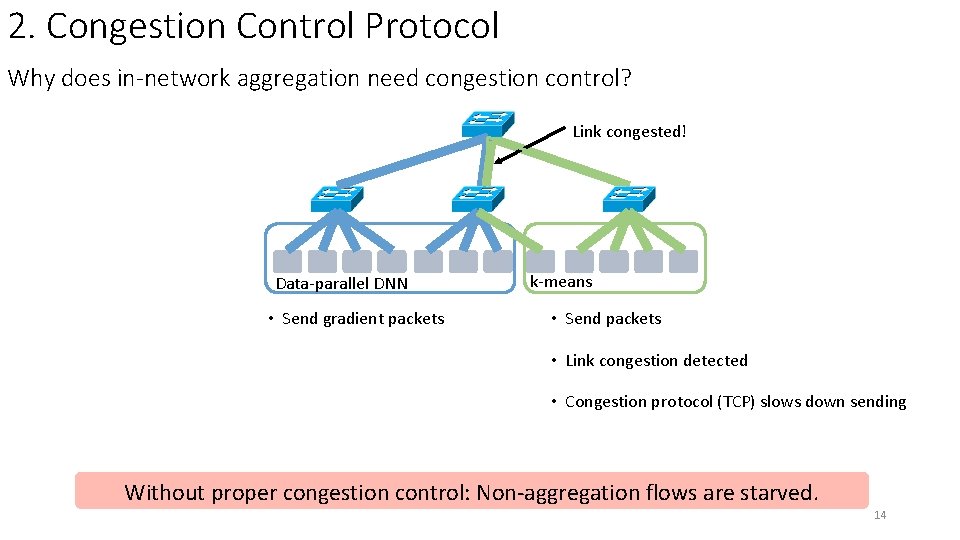

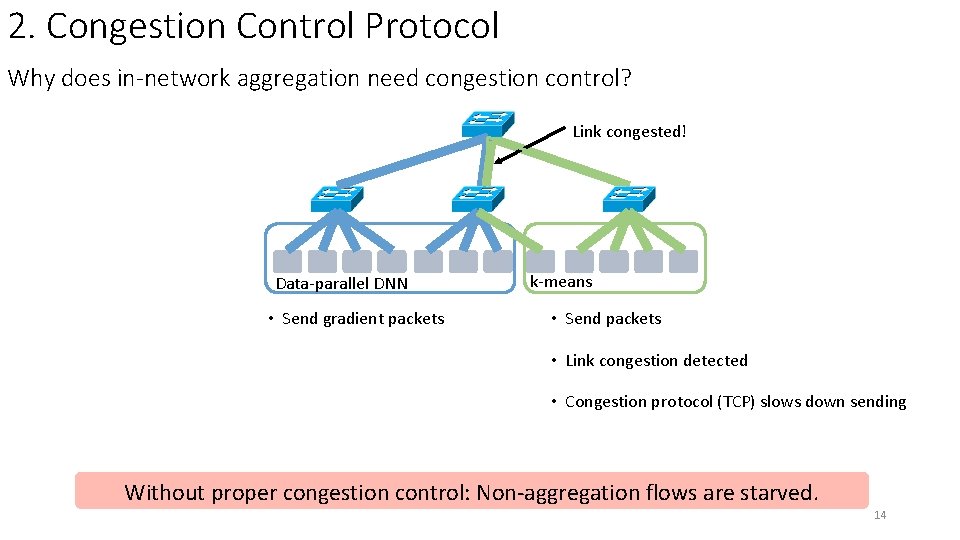

2. Congestion Control Protocol

Why does in-network aggregation need congestion control?

- Data-parallel DNN job: sends gradient packets.
- k-means job: sends packets, detects link congestion, and its congestion protocol (TCP) slows down sending.

Without proper congestion control, non-aggregation flows are starved.

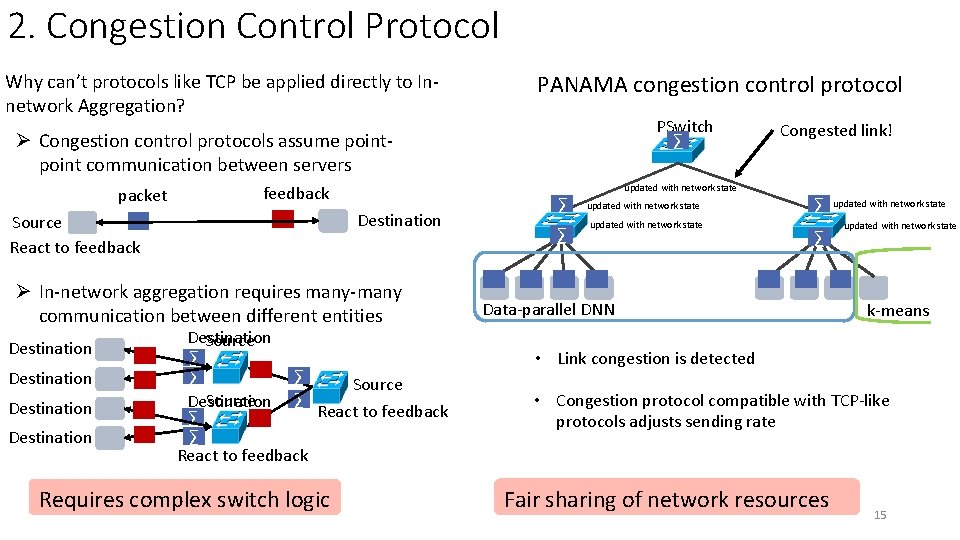

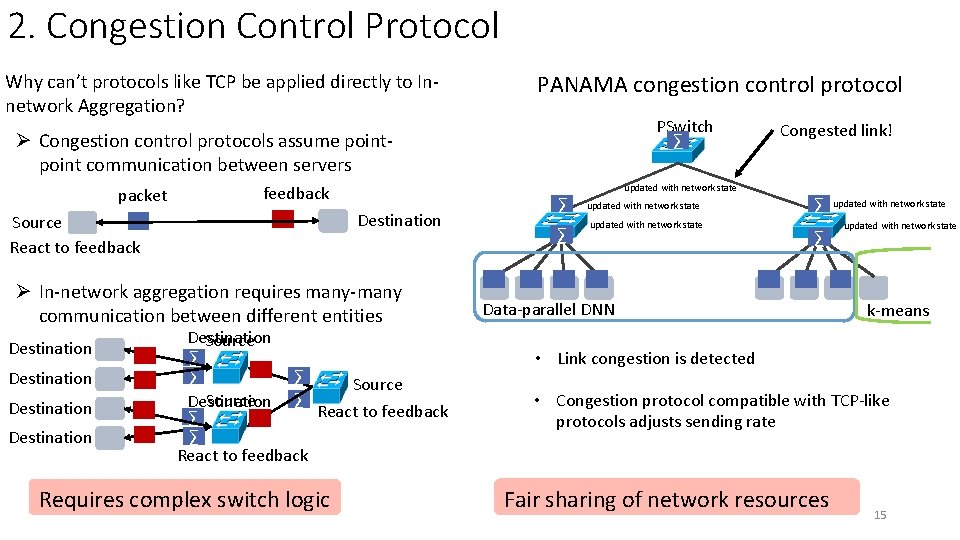

2. Congestion Control Protocol

Why can’t protocols like TCP be applied directly to in-network aggregation?

- Congestion control protocols assume point-to-point communication between servers: the source sends a packet, the destination returns feedback, and the source reacts to the feedback.
- In-network aggregation requires many-to-many communication between different entities: workers and aggregating switches (∑) act as both sources and destinations, and each would have to react to feedback. Applying such a protocol directly requires complex switch logic.

PANAMA congestion control protocol:

- Packets passing through a PSwitch are updated with network state.
- Link congestion is detected.
- The congestion protocol, compatible with TCP-like protocols, adjusts the sending rate.

Result: fair sharing of network resources between the data-parallel DNN job and the k-means job.
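The idea of carrying network state in aggregation packets and letting senders react can be illustrated with a small sketch. The slides do not specify the signal or the rate-adjustment rule, so everything here is an assumption chosen as a familiar TCP-like example: a single ECN-style congestion bit that is OR-ed across the packets being aggregated, and an additive-increase, multiplicative-decrease (AIMD) window update at the workers. The function names and parameters are hypothetical.

```python
def aggregate_congestion(input_marks, output_link_congested):
    """Assumed rule: the result packet is marked if any input was marked
    or if the link it leaves on is congested."""
    return any(input_marks) or output_link_congested


def aimd_update(window, congested, increase=1.0, decrease=0.5, min_window=1.0):
    """Assumed worker reaction: halve the window on congestion, else grow it by one."""
    if congested:
        return max(min_window, window * decrease)
    return window + increase


# A worker whose aggregated result came back marked backs off;
# an unmarked result lets it probe for more bandwidth.
mark = aggregate_congestion([False, True, False], output_link_congested=False)
next_window = aimd_update(10.0, mark)
```

Because the mark survives aggregation, every worker feeding the same result sees the same signal and backs off together, which is what lets a TCP flow sharing the congested link keep its share.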

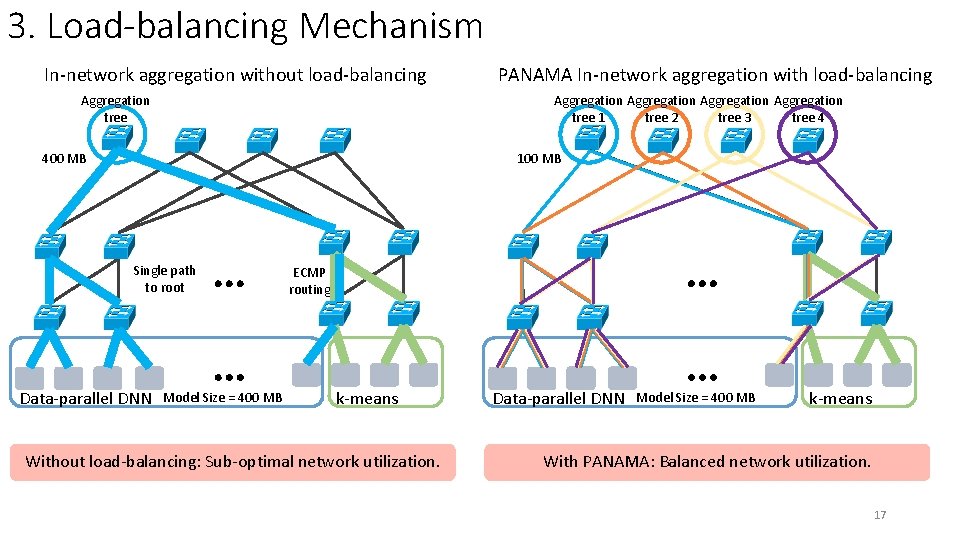

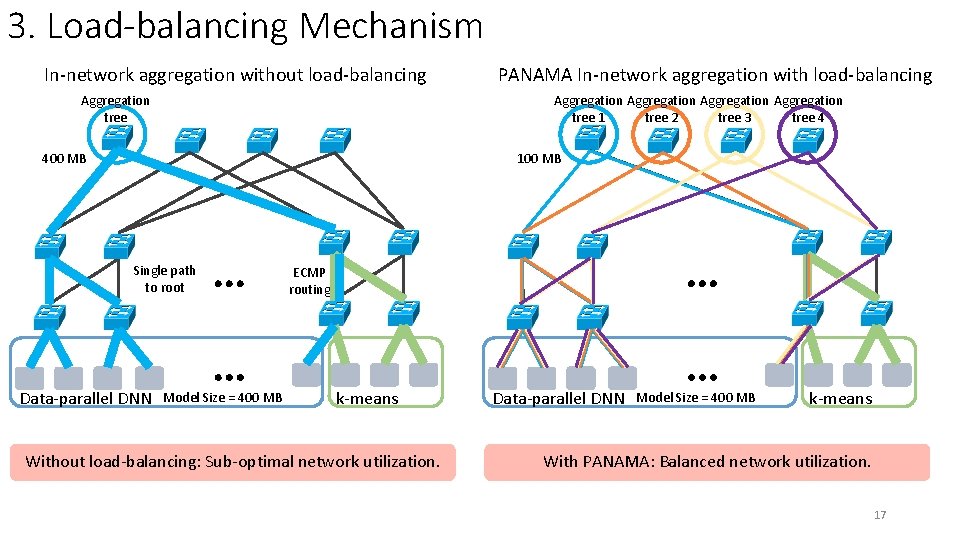

3. Load-balancing Mechanism

- In-network aggregation without load-balancing: the data-parallel DNN job (model size = 400 MB) uses a single aggregation tree, so all 400 MB follow a single path to the root, while k-means traffic uses ECMP routing. Result: sub-optimal network utilization.
- In-network aggregation with load-balancing (PANAMA): the same 400 MB model is spread over aggregation trees 1 to 4, consistent with the slide's 100 MB label per tree. Result: balanced network utilization.
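The arithmetic behind the 400 MB and 100 MB labels can be sketched by splitting a model's gradient chunks across several aggregation trees. The round-robin assignment and the 1 MB chunk size below are assumptions for illustration. The slides do not say how PANAMA maps chunks to trees.

```python
def assign_chunks_to_trees(num_chunks, num_trees):
    """Assumed policy: assign gradient chunks to aggregation trees round-robin."""
    trees = [[] for _ in range(num_trees)]
    for chunk in range(num_chunks):
        trees[chunk % num_trees].append(chunk)
    return trees


CHUNK_MB = 1  # hypothetical chunk size
single_tree = assign_chunks_to_trees(400 // CHUNK_MB, 1)
four_trees = assign_chunks_to_trees(400 // CHUNK_MB, 4)

load_single_mb = [len(t) * CHUNK_MB for t in single_tree]  # load on the one root path
load_four_mb = [len(t) * CHUNK_MB for t in four_trees]     # load per tree
```

With one tree the single root path carries all 400 MB. With four trees each carries 100 MB, leaving more room for other flows on any one path.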

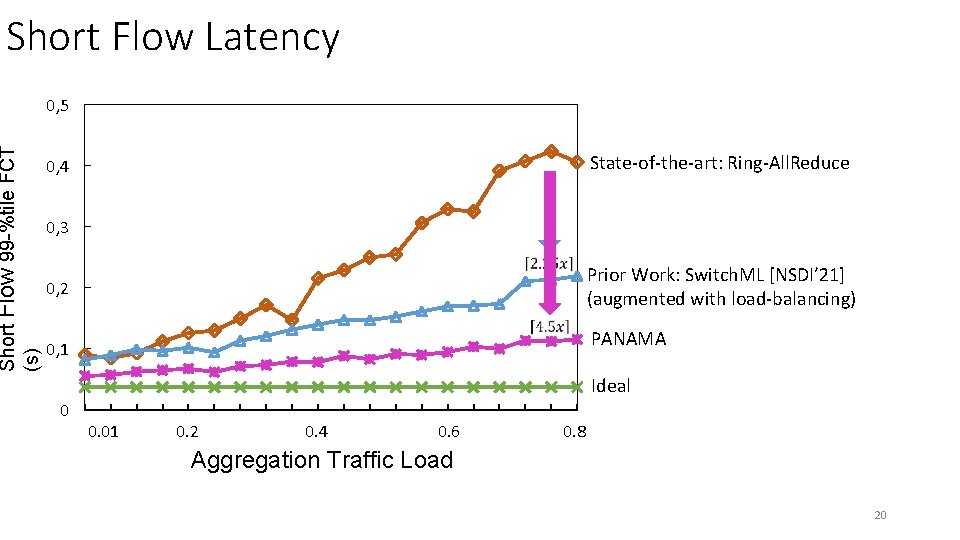

Putting It All Together

- PANAMA reduces the time-to-accuracy of data-parallel training jobs in large-scale shared clusters.
- PANAMA benefits latency-sensitive non-aggregation flows even more!

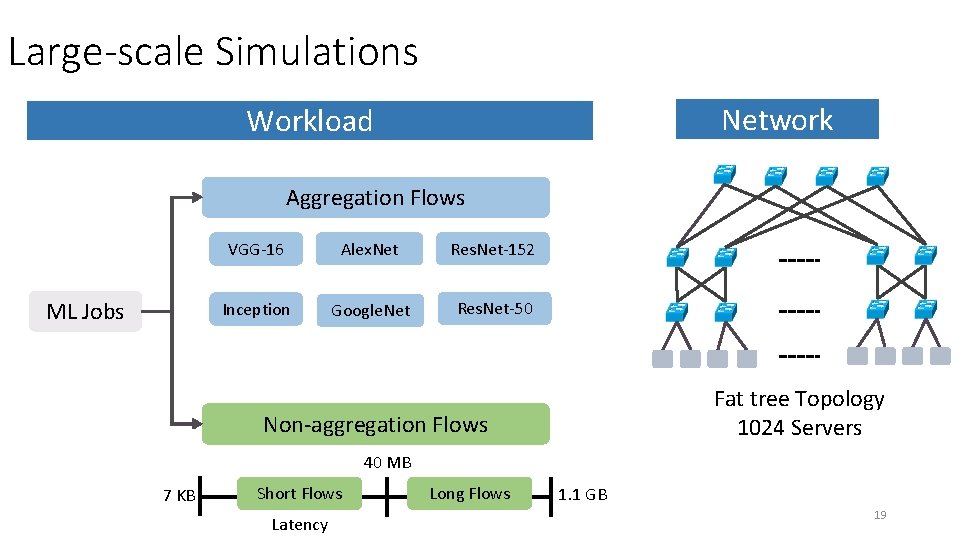

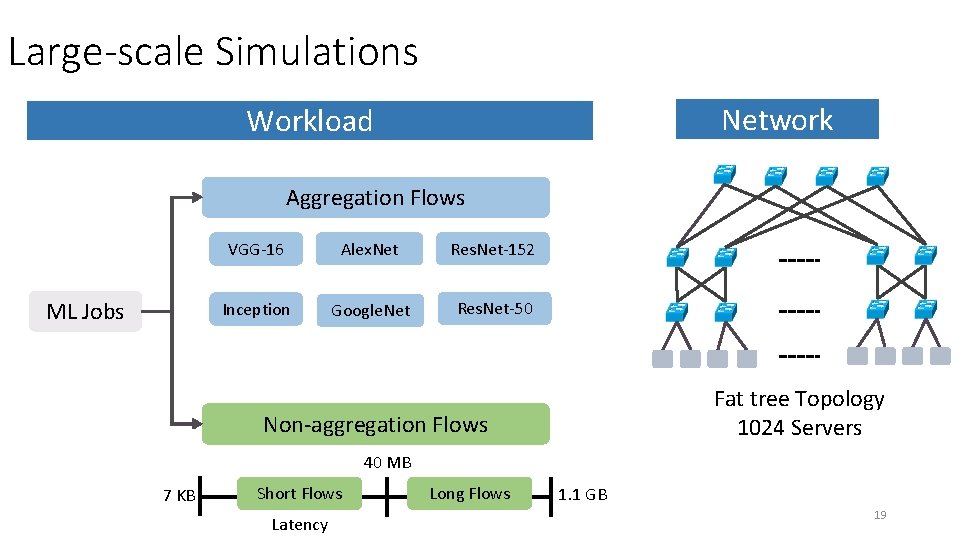

Large-scale Simulations

- Network: fat tree topology, 1024 servers.
- Workload, aggregation flows: ML jobs training VGG-16, AlexNet, ResNet-152, Inception, GoogleNet, and ResNet-50.
- Workload, non-aggregation flows: short flows (labeled "Latency") and long flows. Flow-size labels on the slide: 7 KB, 40 MB, and 1.1 GB.

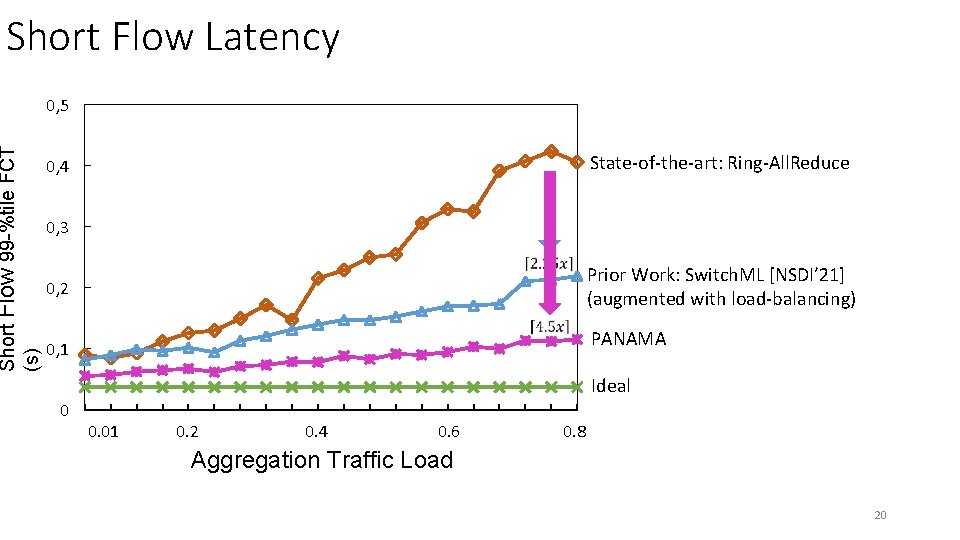

Short Flow Latency

Plot: short flow 99th-percentile FCT (s, axis from 0 to 0.5) versus aggregation traffic load (0.01 to 0.80). Series: State-of-the-art: Ring-AllReduce; Prior work: SwitchML [NSDI’21] (augmented with load-balancing); PANAMA; Ideal.
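For readers unfamiliar with the metric on the vertical axis, the sketch below shows one common way to compute a 99th-percentile flow completion time (FCT) from a list of per-flow measurements. The nearest-rank definition is an assumption, since the slides do not say which percentile definition the simulations use, and the sample data is synthetic.

```python
import math


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Synthetic FCTs in milliseconds: 1, 2, ..., 100.
fcts_ms = list(range(1, 101))
p99 = percentile_nearest_rank(fcts_ms, 99)
```

The tail percentile is used rather than the mean because a few slow short flows dominate user-visible latency even when the average looks healthy.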
