Information-Theoretic Listening, Paris Smaragdis, Machine Listening Group, MIT

- Slides: 23

Information-Theoretic Listening
Paris Smaragdis
Machine Listening Group, MIT Media Lab
9/17/2020

Outline
- Defining a global goal for computational audition
- Example 1: Developing a representation
- Example 2: Developing grouping functions
- Conclusions

Auditory Goals
- The goals of computational audition are all over the place; should they be?
- Lack of formal rigor in most theories
- Computational listening amounts to fitting psychoacoustic experiment data

Auditory Development
- What really made audition?
- How did our hearing evolve?
- How did our environment shape our hearing?
- Can we evolve, rather than instruct, a machine to listen?

Goals of our Sensory System
- Distinguish independent events
  - Object formation
  - Gestalt grouping
- Minimize thinking and effort
  - Perceive as few objects as possible
  - Think as little as possible

Entropy Minimization as a Sensory Goal
- Long history linking entropy and perception
  - Barlow, Attneave, Atick, Redlich, etc.
- Entropy can measure statistical dependencies
- Entropy can measure economy in both:
  - ‘thought’ (algorithmic entropy)
  - ‘information’ (Shannon entropy)
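Entropy captures statistical dependence through mutual information, I(X;Y) = H(X) + H(Y) - H(X,Y), which is zero exactly when X and Y are independent. A minimal sketch, assuming discrete paired samples and plug-in (count-based) probability estimates; `entropy_bits` and `mutual_information` are illustrative names, not taken from the talk.

```python
import math
from collections import Counter


def entropy_bits(probs):
    """Shannon entropy (bits) of an iterable of probabilities."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


def mutual_information(pairs):
    """Plug-in estimate of I(X;Y) = H(X) + H(Y) - H(X,Y), in bits, from (x, y) samples."""
    n = len(pairs)
    h_x = entropy_bits(c / n for c in Counter(x for x, _ in pairs).values())
    h_y = entropy_bits(c / n for c in Counter(y for _, y in pairs).values())
    h_xy = entropy_bits(c / n for c in Counter(pairs).values())
    return h_x + h_y - h_xy


independent = [(x, y) for x in (0, 1) for y in (0, 1)]  # every combination once
copied = [(0, 0), (1, 1)]                                 # Y is a copy of X
print(mutual_information(independent), mutual_information(copied))  # 0.0 1.0
```

Independent variables share no information, while a copy shares the full 1 bit of X.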

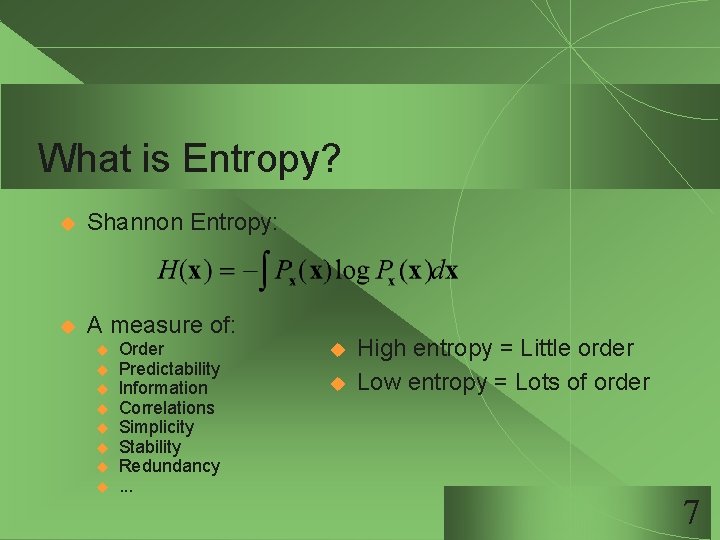

What is Entropy?
- Shannon Entropy: a measure of
  - Order, Predictability, Information, Correlations, Simplicity, Stability, Redundancy, ...
- High entropy = Little order
- Low entropy = Lots of order
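Shannon entropy is H(X) = -Σ p(x) log2 p(x), measured in bits. A minimal sketch that estimates it from the empirical symbol frequencies of a sequence (`shannon_entropy` is a hypothetical helper name): a constant, fully ordered sequence scores 0 bits, and spreading the same length over more equiprobable symbols raises the score.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Plug-in Shannon entropy, in bits, of a sequence's empirical symbol distribution."""
    counts = Counter(symbols)
    n = len(symbols)
    # p * log2(1 / p) written as (c / n) * log2(n / c) to avoid a negative zero.
    return sum((c / n) * math.log2(n / c) for c in counts.values())


print(shannon_entropy("aaaaaaaa"))  # one symbol, perfectly ordered -> 0.0
print(shannon_entropy("abababab"))  # two equiprobable symbols -> 1.0
print(shannon_entropy("abcdabcd"))  # four equiprobable symbols -> 2.0
```

This memoryless estimate rates `abababab` at 1 bit even though each symbol is perfectly predictable from the previous one: it ignores time dependencies, a limitation the Future Work slide also raises.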

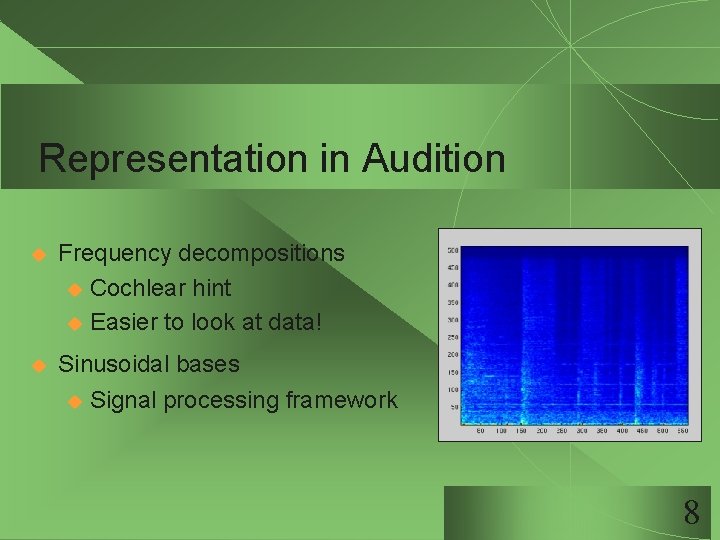

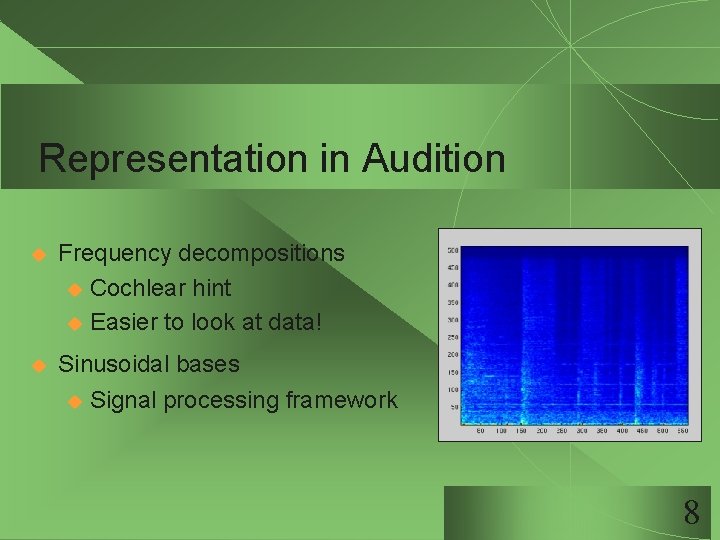

Representation in Audition
- Frequency decompositions
  - Cochlear hint
  - Easier to look at data!
- Sinusoidal bases
  - Signal processing framework
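A sinusoidal-basis decomposition can be illustrated with the discrete Fourier transform, which projects a signal onto complex exponentials. In this sketch (a naive O(N²) DFT written for clarity, not speed; `dft` is a hypothetical helper), a pure cosine with 3 cycles per 16-sample frame puts all of its energy into bin 3 and its mirror bin 13.

```python
import cmath
import math


def dft(x):
    """Naive DFT: inner product of x with each complex sinusoidal basis vector."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


N = 16
tone = [math.cos(2 * math.pi * 3 * t / N) for t in range(N)]  # 3 cycles per frame
magnitudes = [abs(c) for c in dft(tone)]
peaks = [k for k, m in enumerate(magnitudes) if m > 1e-6]
print(peaks)  # [3, 13]
```

For real-world audio, a library FFT such as `numpy.fft.rfft` would replace the naive loop.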

Evolving a Representation
- Develop a basis decomposition
- Bases should be statistically independent
  - Satisfies the minimal-entropy idea
- Decomposition should be data driven
  - Account for different domains

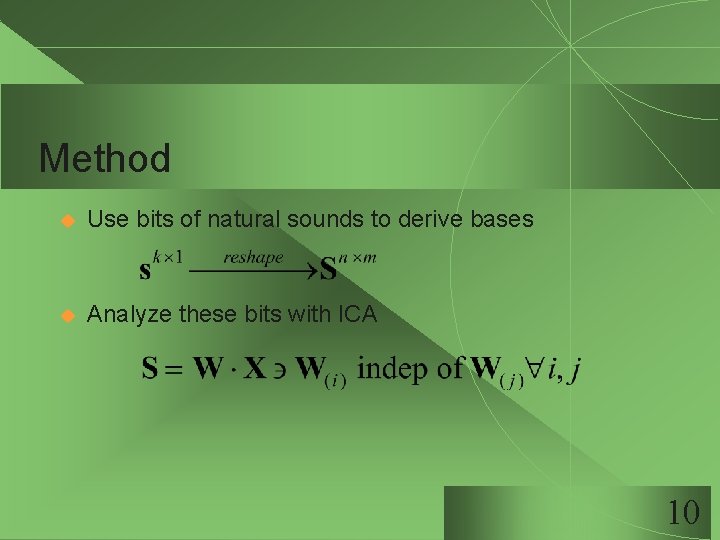

Method
- Use bits of natural sounds to derive bases
- Analyze these bits with ICA (independent component analysis)
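The slides do not spell out which ICA variant was used. As a minimal, hypothetical sketch of the minimum-entropy idea in two dimensions: whiten two mixed signals, then search for the rotation that minimizes the sum of the outputs' marginal entropies, estimated with histograms. Because a rotation leaves the joint entropy of whitened data unchanged, this is equivalent to minimizing the mutual information between the outputs. The uniform sources and the mixing matrix are synthetic stand-ins for "bits of natural sounds"; a real experiment would use many-dimensional sound snippets and an established ICA implementation.

```python
import math
import random


def whiten(x1, x2):
    """Center two signals and rotate/scale them to uncorrelated, unit-variance outputs."""
    n = len(x1)
    m1, m2 = sum(x1) / n, sum(x2) / n
    a = [v - m1 for v in x1]
    b = [v - m2 for v in x2]
    c11 = sum(v * v for v in a) / n
    c22 = sum(v * v for v in b) / n
    c12 = sum(u * v for u, v in zip(a, b)) / n
    # Angle of the covariance eigenvectors (closed form for a symmetric 2x2 matrix).
    phi = 0.5 * math.atan2(2 * c12, c11 - c22)
    cp, sp = math.cos(phi), math.sin(phi)
    l1 = c11 * cp * cp + 2 * c12 * cp * sp + c22 * sp * sp
    l2 = c11 * sp * sp - 2 * c12 * cp * sp + c22 * cp * cp
    z1 = [(cp * u + sp * v) / math.sqrt(l1) for u, v in zip(a, b)]
    z2 = [(-sp * u + cp * v) / math.sqrt(l2) for u, v in zip(a, b)]
    return z1, z2


def histogram_entropy(values, bins=40):
    """Differential entropy estimate (nats) from an equal-width histogram."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        counts[min(int((v - lo) / width), bins - 1)] += 1
    n = len(values)
    return sum((c / n) * math.log(n / c) for c in counts if c) + math.log(width)


def ica_2d(x1, x2, steps=90):
    """Search rotations of the whitened data for the minimum sum of marginal entropies."""
    z1, z2 = whiten(x1, x2)
    best = None
    for i in range(steps):
        theta = (math.pi / 2) * i / steps  # outputs repeat (up to sign/order) every pi/2
        c, s = math.cos(theta), math.sin(theta)
        y1 = [c * u + s * v for u, v in zip(z1, z2)]
        y2 = [-s * u + c * v for u, v in zip(z1, z2)]
        cost = histogram_entropy(y1) + histogram_entropy(y2)
        if best is None or cost < best[0]:
            best = (cost, y1, y2)
    return best[1], best[2]


# Synthetic stand-in data: two independent uniform sources, hypothetical 2x2 mixing.
rng = random.Random(0)
s1 = [rng.uniform(-1, 1) for _ in range(2000)]
s2 = [rng.uniform(-1, 1) for _ in range(2000)]
x1 = [1.0 * p + 0.6 * q for p, q in zip(s1, s2)]
x2 = [0.4 * p + 1.0 * q for p, q in zip(s1, s2)]
y1, y2 = ica_2d(x1, x2)
```

The recovered components match the sources only up to order, sign, and scale, which is the usual ICA ambiguity.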

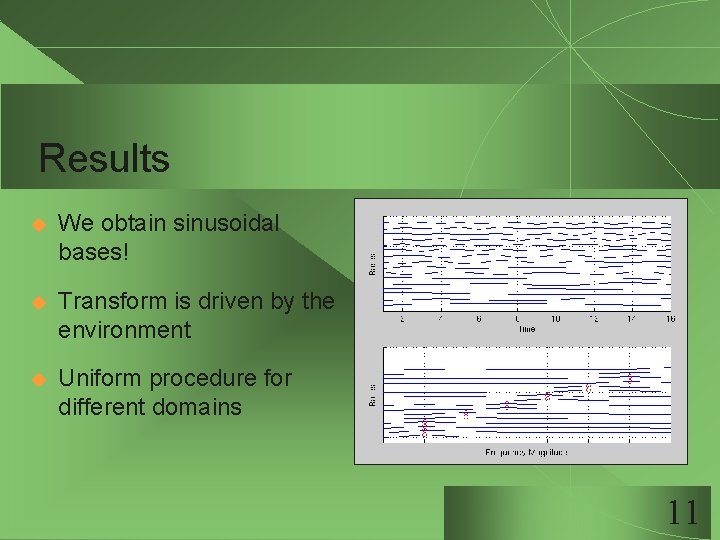

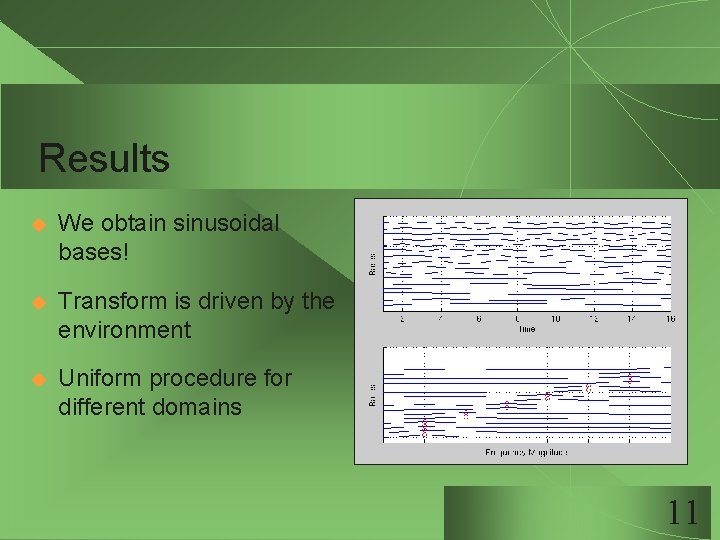

Results
- We obtain sinusoidal bases!
- Transform is driven by the environment
- Uniform procedure for different domains

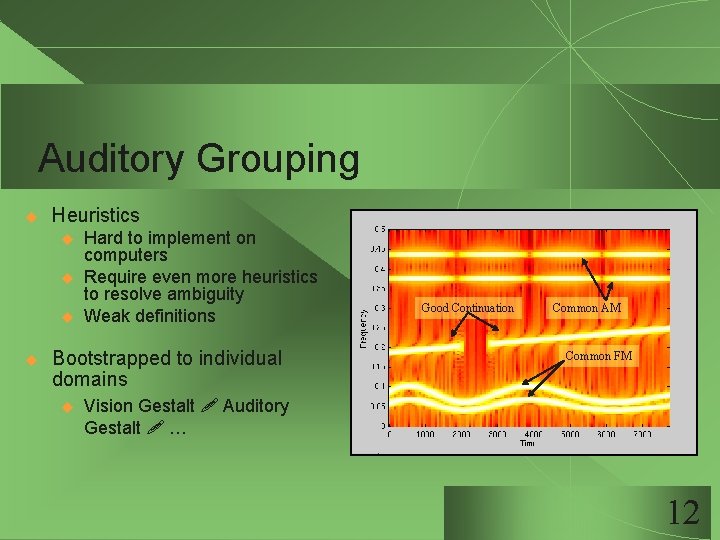

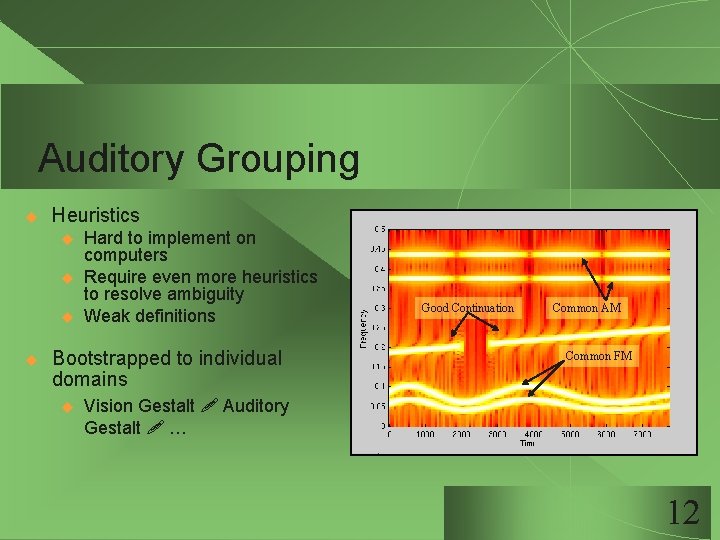

Auditory Grouping
- Heuristics
  - Hard to implement on computers
  - Require even more heuristics to resolve ambiguity
  - Weak definitions
  - Bootstrapped to individual domains
- Figure: Vision Gestalt vs. Auditory Gestalt examples (Good Continuation, Common AM, Common FM, …)

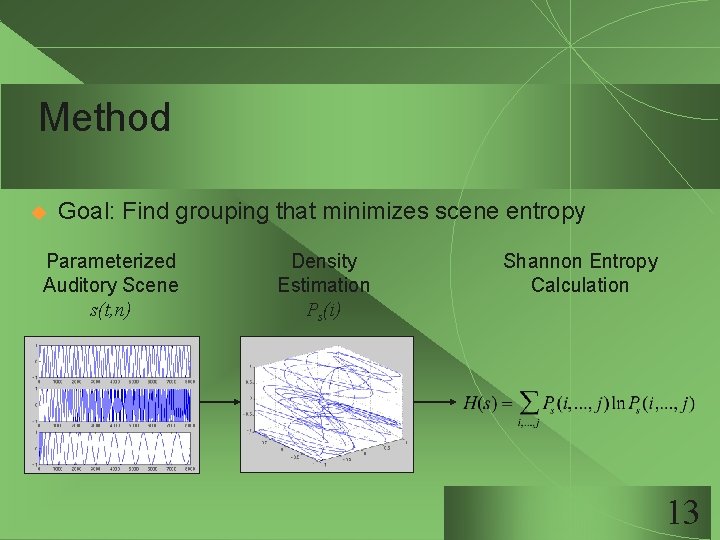

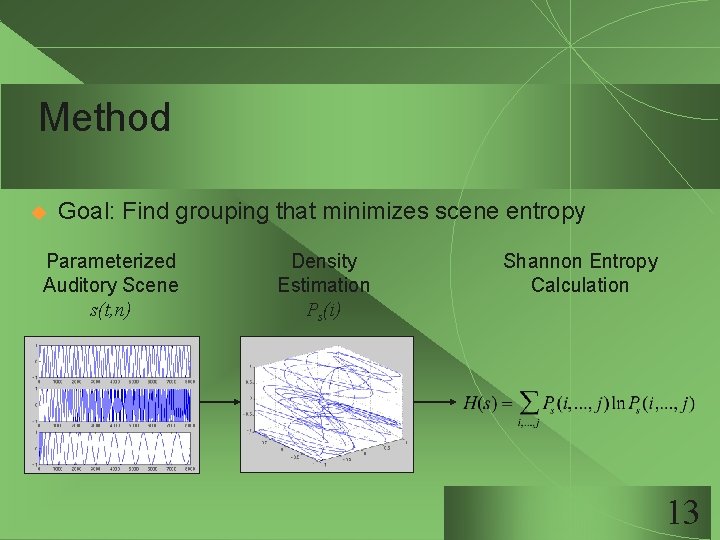

Method
- Goal: Find the grouping that minimizes scene entropy
- Parameterized auditory scene s(t, n) → Density estimation P_s(i) → Shannon entropy calculation
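The scene → density → entropy pipeline can be sketched on a toy amplitude-modulation scene. Everything here is a hypothetical assumption: the slides do not define s(t, n) or the meaning of n, so the scene is simply a pair of sampled amplitude envelopes, the density estimate is a fixed-grid 2-D histogram, and `coupling` (a separate knob, not the talk's n) blends the second envelope toward the first. The common-AM candidate gives the lowest entropy, i.e. the most economical description.

```python
import math
import random
from collections import Counter


def joint_entropy_bits(xs, ys, bins=16, lo=0.0, hi=1.0):
    """Stand-in for the density estimate P_s(i): a fixed-grid 2-D histogram.
    Returns the Shannon entropy of that histogram in bits."""
    width = (hi - lo) / bins

    def cell(v):
        return min(max(int((v - lo) / width), 0), bins - 1)

    counts = Counter((cell(x), cell(y)) for x, y in zip(xs, ys))
    n = len(xs)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


def amplitude_scene(coupling, length=4000, seed=0):
    """Hypothetical scene: sampled amplitude envelopes of two sines.
    coupling=1 gives common AM; coupling=0 gives independent envelopes."""
    rng = random.Random(seed)
    env1 = [rng.random() for _ in range(length)]
    other = [rng.random() for _ in range(length)]
    env2 = [coupling * u + (1 - coupling) * v for u, v in zip(env1, other)]
    return env1, env2


candidates = [0.0, 0.5, 1.0]
scores = {c: joint_entropy_bits(*amplitude_scene(c)) for c in candidates}
best = min(scores, key=scores.get)  # lowest-entropy scene description
print(best)  # 1.0
```

A fixed grid (rather than per-candidate min/max edges) keeps the entropies comparable across candidates.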

Common Modulation - Frequency
- Scene description (figure: frequency vs. time)
- Entropy measurement (figure, n = 0.5)

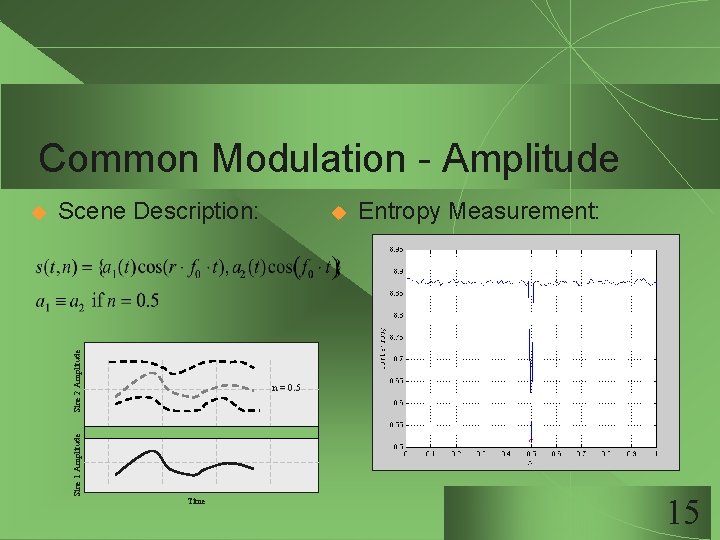

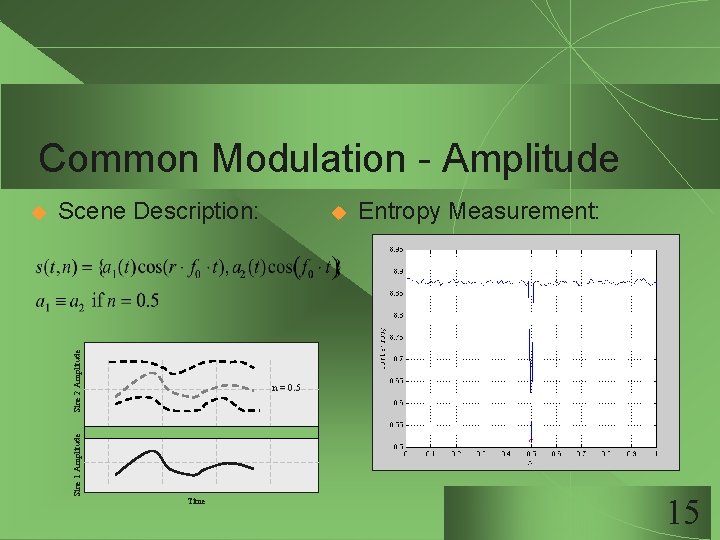

Common Modulation - Amplitude
- Scene description (figure: Sine 1 and Sine 2 amplitude vs. time)
- Entropy measurement (figure, n = 0.5)

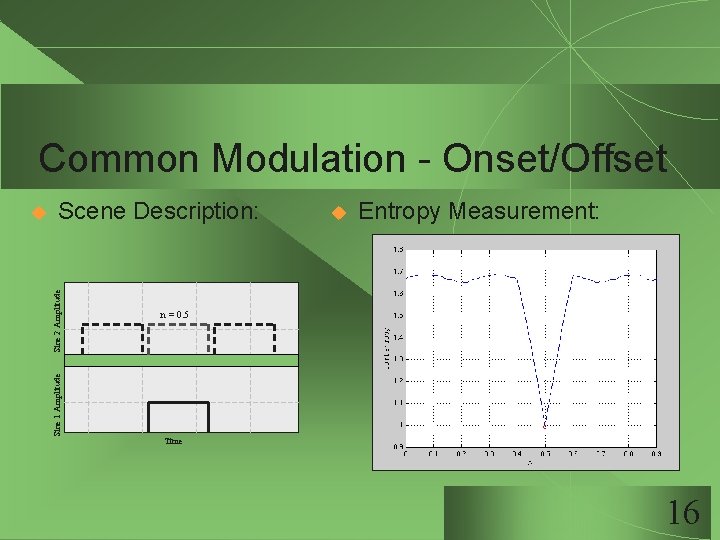

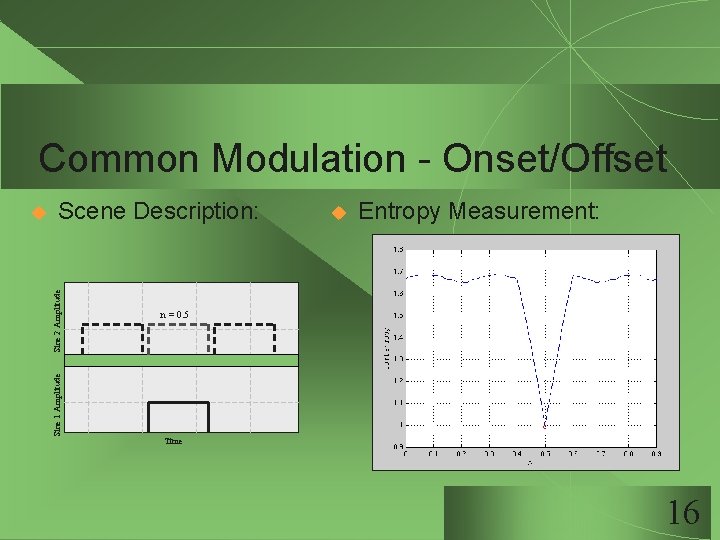

Common Modulation - Onset/Offset
- Scene description (figure: Sine 1 and Sine 2 amplitude vs. time)
- Entropy measurement (figure, n = 0.5)

Similarity/Proximity - Harmonicity I
- Scene description (figure: frequency vs. time)
- Entropy measurement (figure)

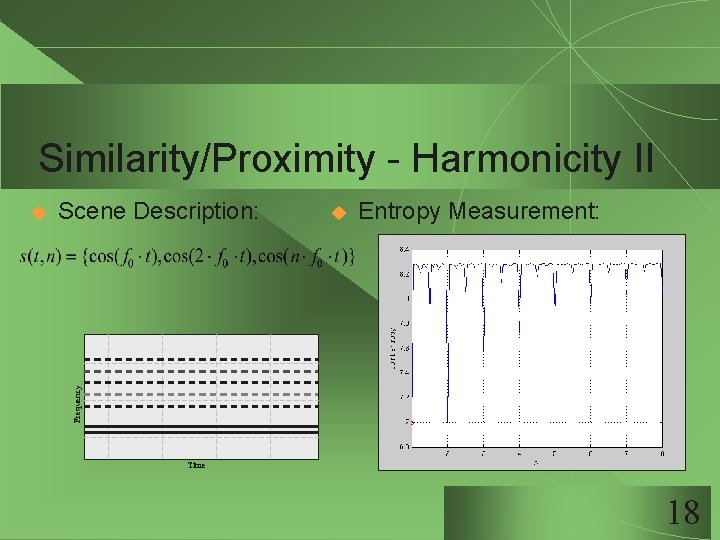

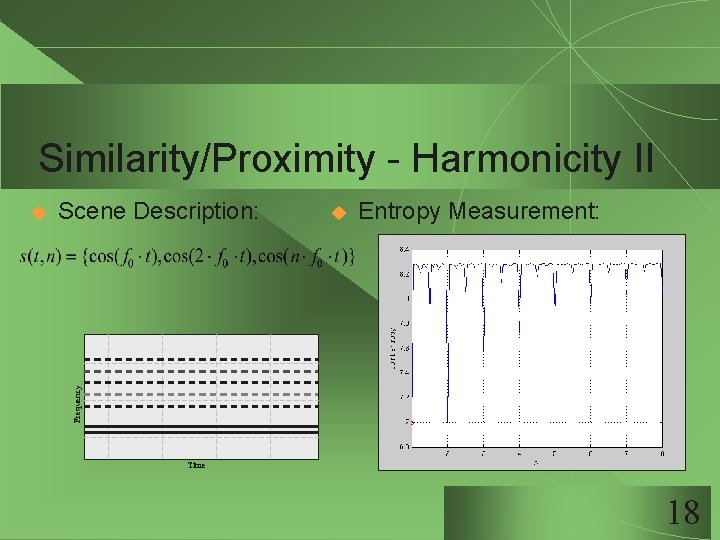

Similarity/Proximity - Harmonicity II
- Scene description (figure: frequency vs. time)
- Entropy measurement (figure)

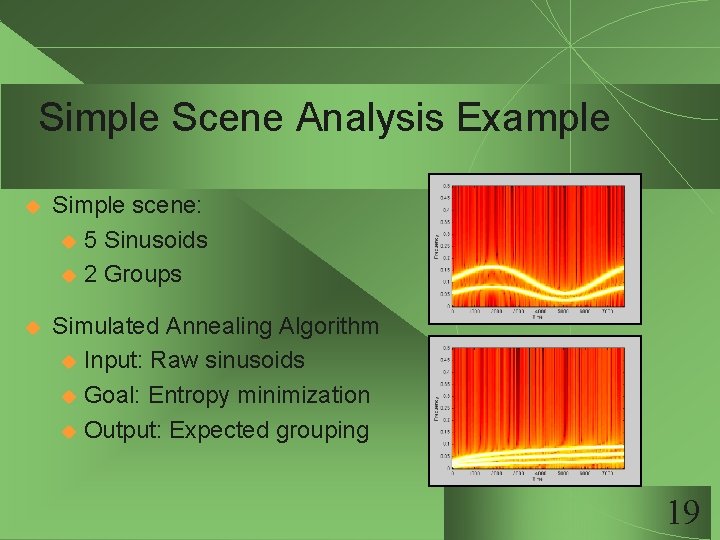

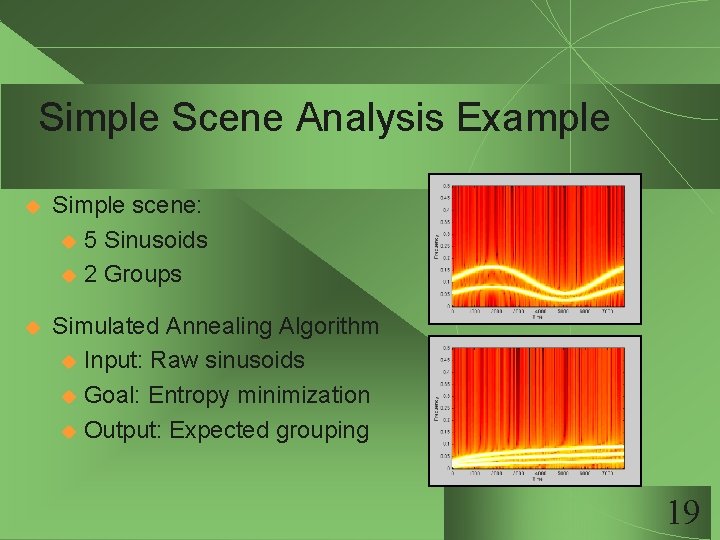

Simple Scene Analysis Example
- Simple scene:
  - 5 sinusoids
  - 2 groups
- Simulated annealing algorithm
  - Input: Raw sinusoids
  - Goal: Entropy minimization
  - Output: Expected grouping
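The search over groupings can be sketched as plain simulated annealing over group labels. This is a minimal sketch under loud assumptions: each sinusoid is summarized by a hypothetical modulation track, and because the slides do not give the scene-entropy cost in closed form, `grouping_cost` is a stand-in (a fixed price per group plus the within-group spread of the tracks). Swapping in an entropy estimate would only change `grouping_cost`.

```python
import math
import random


def grouping_cost(labels, tracks, group_penalty=1.0):
    """Hypothetical stand-in for scene entropy: a fixed price per group plus the
    squared spread of member modulation tracks around their group mean."""
    cost = 0.0
    for g in set(labels):
        members = [tracks[i] for i, lab in enumerate(labels) if lab == g]
        mean = [sum(col) / len(members) for col in zip(*members)]
        spread = sum((v - m) ** 2 for tr in members for v, m in zip(tr, mean))
        cost += group_penalty + spread
    return cost


def anneal_grouping(tracks, steps=3000, t0=1.0, cooling=0.995, seed=0):
    """Simulated annealing over group labels; returns the lowest-cost labeling seen."""
    rng = random.Random(seed)
    k = len(tracks)  # allow up to one group per component
    labels = [rng.randrange(k) for _ in range(k)]
    cost = grouping_cost(labels, tracks)
    best, best_cost = labels[:], cost
    temp = t0
    for _ in range(steps):
        cand = labels[:]
        cand[rng.randrange(k)] = rng.randrange(k)  # relabel one component
        cand_cost = grouping_cost(cand, tracks)
        delta = cand_cost - cost
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            labels, cost = cand, cand_cost
            if cost < best_cost:
                best, best_cost = labels[:], cost
        temp *= cooling  # geometric cooling schedule
    return best, best_cost


# Hypothetical scene: 5 sinusoids whose modulation tracks fall into 2 shared patterns.
track_a = [0.1 * t for t in range(10)]
track_b = [1.0 - 0.1 * t for t in range(10)]
tracks = [track_a, track_a, track_a, track_b, track_b]
labels, cost = anneal_grouping(tracks)
print(labels, cost)
```

The label values themselves are arbitrary; only which components share a label matters.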

Important Notes
- No definition of time
- Developed a concept of frequency
- No parameter estimation requirement
- Operations on data, not parameters
- No parameter setting!

Conclusions
- Elegant and consistent formulation
  - No constraint over data representation
  - Uniform over different domains (Cross-modal!)
  - No parameter estimation
  - No parameter tuning!
- Biological plausibility
  - Barlow et al.
- Insight into perception development

Future Work
- Good Cost Function?
  - Joint entropy vs. entropy of sums
  - Shannon entropy vs. Kolmogorov complexity
- Incorporate time
  - Joint statistics (cumulants, moments)
  - Sounds have time dependencies I’m ignoring
- Generalize to include perceptual functions
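The "joint entropy vs. entropy of sums" question can be made concrete with two independent fair bits: the pair (X, Y) carries 2 bits, while the sum X + Y carries only 1.5 bits because it cannot tell (0, 1) from (1, 0). In general H(X + Y) ≤ H(X, Y), since the sum is a deterministic function of the pair. A minimal sketch (`entropy_bits` is a hypothetical helper):

```python
import math
from collections import Counter
from itertools import product


def entropy_bits(samples):
    """Plug-in Shannon entropy (bits) of equally weighted samples."""
    counts = Counter(samples)
    n = len(samples)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


# Two independent fair bits, enumerated so every (x, y) pair is equally likely.
pairs = list(product([0, 1], repeat=2))
joint = entropy_bits(pairs)                       # H(X, Y)
of_sum = entropy_bits([x + y for x, y in pairs])  # H(X + Y)
print(joint, of_sum)  # 2.0 1.5
```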

Teasers
- Dissonance and Entropy
- Pitch Detection
- Instrument Recognition

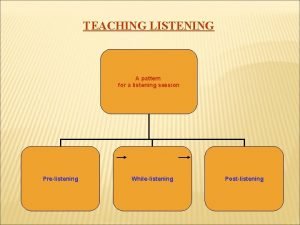

Pre while post listening

Pre while post listening Stages of listening lesson

Stages of listening lesson Active and passive listening

Active and passive listening Evaluative listening definition

Evaluative listening definition Speaking and listening: effective group discussions

Speaking and listening: effective group discussions Finite state machine vending machine example

Finite state machine vending machine example Mealy moore

Mealy moore Moore machine to mealy machine

Moore machine to mealy machine Chapter 10 energy, work and simple machines answer key

Chapter 10 energy, work and simple machines answer key It is a type of inclined plane that is wound around a post

It is a type of inclined plane that is wound around a post Ap psychology chapter 13 social psychology

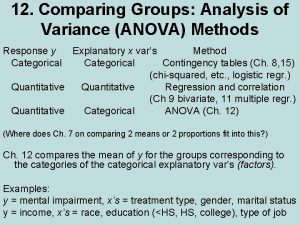

Ap psychology chapter 13 social psychology Within group variance vs between group

Within group variance vs between group Anova within group and between group

Anova within group and between group Primary group in sociology

Primary group in sociology Group 2 specialties

Group 2 specialties How to compare thermal stability of group 2 nitrates

How to compare thermal stability of group 2 nitrates Amino group and carboxyl group

Amino group and carboxyl group Amino group and carboxyl group

Amino group and carboxyl group In group out group

In group out group Group yourself or group yourselves

Group yourself or group yourselves Sumner's classification of social groups

Sumner's classification of social groups Joining together group theory and group skills

Joining together group theory and group skills Cafe voltaire paris

Cafe voltaire paris Villa paris glew

Villa paris glew