Information Theory and Games, Ch. 16: Information Theory

- Slides: 15

Information Theory and Games (Ch. 16)

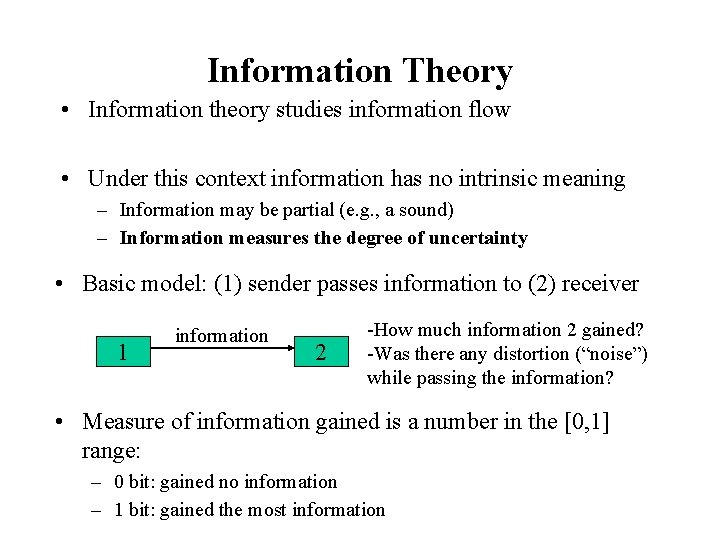

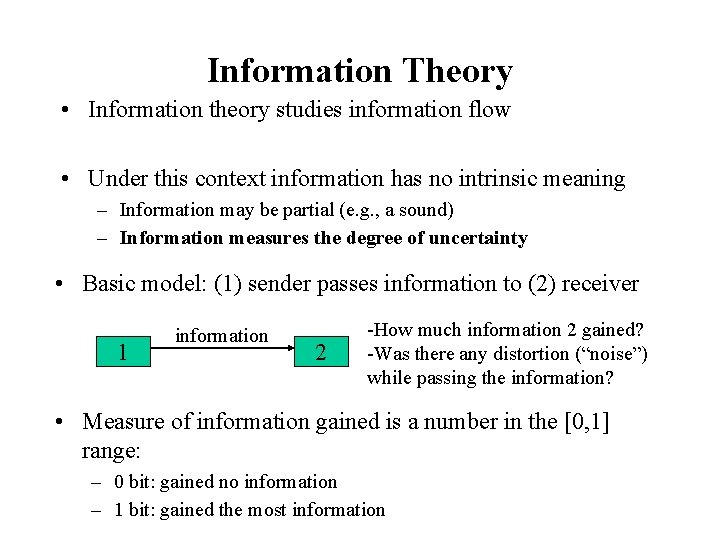

Information Theory
• Information theory studies the flow of information
• In this context, information has no intrinsic meaning
– Information may be partial (e.g., a sound)
– Information measures the degree of uncertainty
• Basic model: (1) a sender passes information to (2) a receiver (1 → information → 2)
– How much information did 2 gain?
– Was there any distortion (“noise”) while the information was passed?
• For an event with two possible outcomes (e.g., a coin flip), the information gained is a number in the [0, 1] range:
– 0 bits: gained no information
– 1 bit: gained the most information

Recall: Probability Distribution
• The events $E_1, E_2, \dots, E_k$ must meet the following conditions:
– One of them always occurs
– No two can occur at the same time
• The probabilities $p_1, \dots, p_k$ are numbers associated with these events, such that $0 \le p_i \le 1$ and $p_1 + \dots + p_k = 1$
A probability distribution assigns probabilities to events such that the two properties above hold.
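The numeric conditions on the $p_i$ can be checked mechanically. Below is a minimal Python sketch, and the function name is hypothetical. It can only check the numbers. It cannot check whether the events themselves are exhaustive and mutually exclusive.

```python
import math

def is_probability_distribution(probs, tol=1e-9):
    """Check the numeric conditions from the slide: every p_i lies in [0, 1]
    and p_1 + ... + p_k equals 1 (within a floating-point tolerance)."""
    if not probs:
        return False
    if any(p < 0 or p > 1 for p in probs):
        return False
    return math.isclose(sum(probs), 1.0, abs_tol=tol)

print(is_probability_distribution([0.5, 0.5]))   # fair coin: heads, tails
print(is_probability_distribution([1 / 6] * 6))  # fair six-sided die
print(is_probability_distribution([0.5, 0.3]))   # does not sum to 1
```

The tolerance matters because floating-point sums such as six copies of 1/6 may not equal exactly 1.0.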

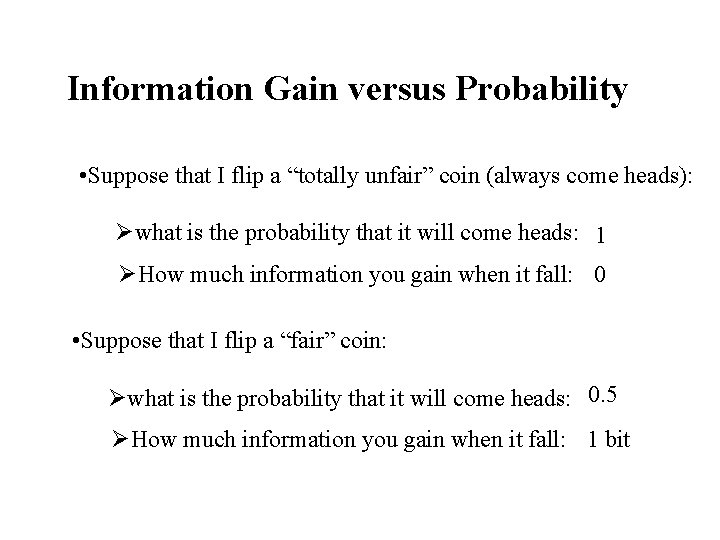

Information Gain versus Probability
• Suppose that I flip a “totally unfair” coin (it always comes up heads):
– What is the probability that it will come up heads? 1
– How much information do you gain when it lands? 0
• Suppose that I flip a “fair” coin:
– What is the probability that it will come up heads? 0.5
– How much information do you gain when it lands? 1 bit

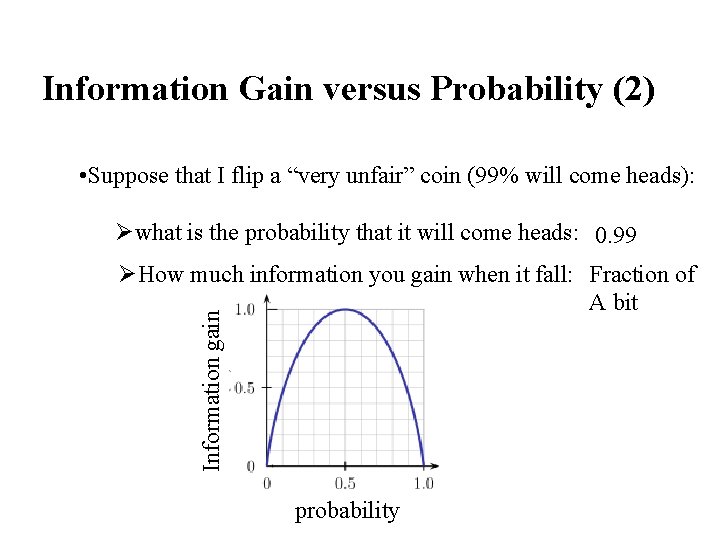

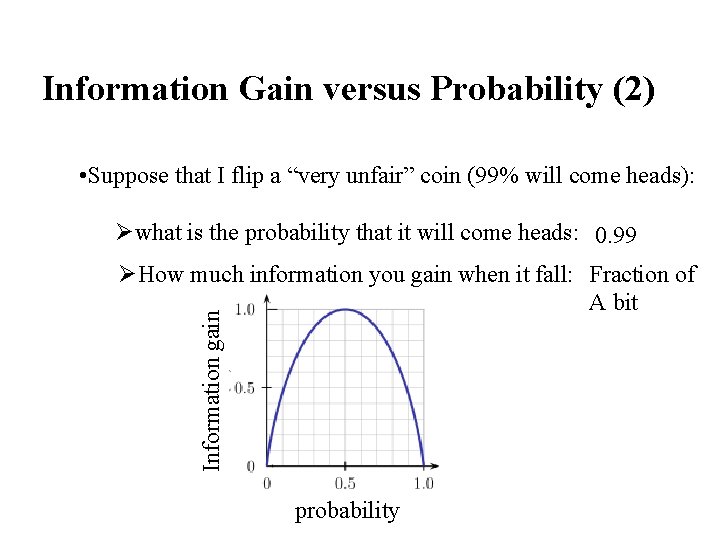

Information Gain versus Probability (2)
• Suppose that I flip a “very unfair” coin (it comes up heads 99% of the time):
– What is the probability that it will come up heads? 0.99
– How much information do you gain when it lands? A fraction of a bit
[Plot: information gain versus probability]

Information Gain versus Probability (3)
• Imagine a stranger, “JL”. Which of the following questions, once answered, will provide more information about JL?
– Did you have breakfast this morning?
– What is your favorite color?
• Hints:
– What are your chances of guessing the answer correctly?
– What if you knew JL and knew his preferences?

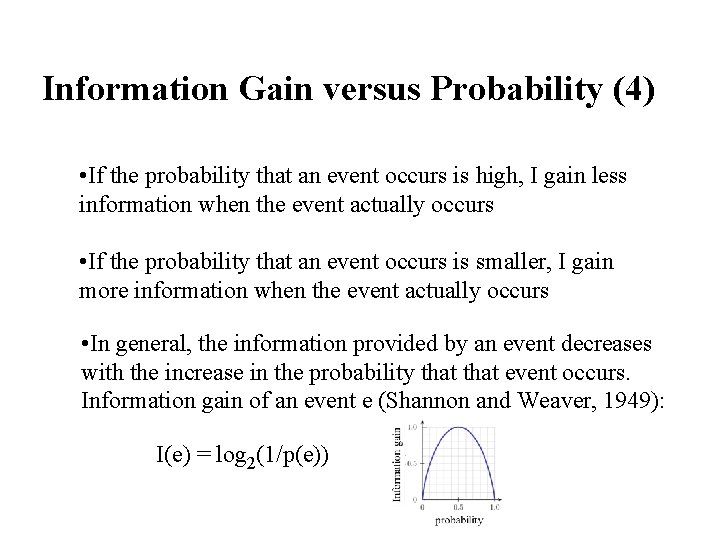

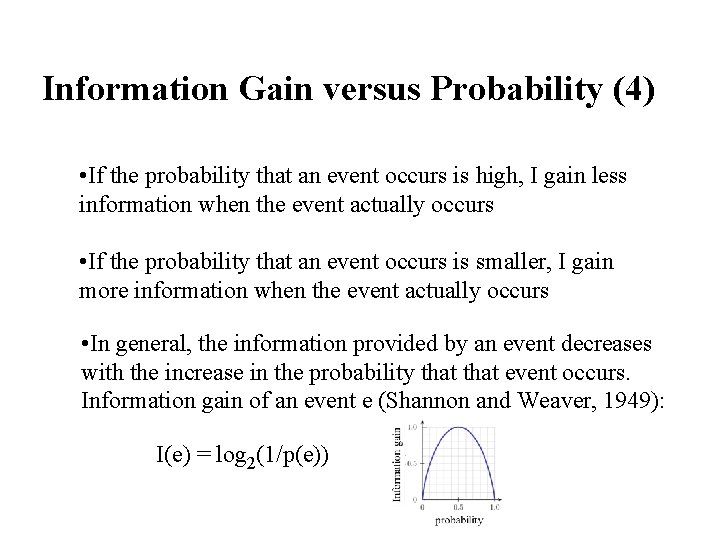

Information Gain versus Probability (4)
• If the probability that an event occurs is high, I gain less information when the event actually occurs
• If the probability that an event occurs is low, I gain more information when the event actually occurs
• In general, the information provided by an event decreases as the probability of that event increases
Information gain of an event e, measured in bits (Shannon and Weaver, 1949): $I(e) = \log_2\left(\frac{1}{p(e)}\right)$
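Plugging the coin examples from the earlier slides into $I(e) = \log_2(1/p(e))$ shows where the numbers 0, 1 bit, and "a fraction of a bit" come from. Below is a minimal Python sketch, and the function name is hypothetical.

```python
import math

def information_gain(p):
    """I(e) = log2(1 / p(e)) in bits, for an event with probability p in (0, 1]."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return math.log2(1 / p)

for label, p in [
    ("totally unfair coin lands heads", 1.0),
    ("fair coin lands heads", 0.5),
    ("99% coin lands heads", 0.99),
    ("99% coin lands tails", 0.01),
]:
    print(f"{label}: p = {p}, I = {information_gain(p):.4f} bits")
```

This prints 0.0000, 1.0000, 0.0145, and 6.6439 bits respectively. The rare outcome (tails on the 99% coin) carries far more information than the common one.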

Information, Uncertainty, and Meaningful Play
• Recall the discussion of the relation between uncertainty and games
– What happens if there is no uncertainty at all in a game (at both the macro level and the micro level)?
• What is the relation between uncertainty and information gain?
If there is no uncertainty, then the information gain is 0. As a result, the player’s actions are not meaningful!

Let’s Play Twenty Questions
• I am thinking of an animal
• You can ask only “yes/no” questions
• Winning condition:
– You guess the animal correctly after asking 20 questions or fewer, and
– You make no more than 3 attempts to guess the right animal

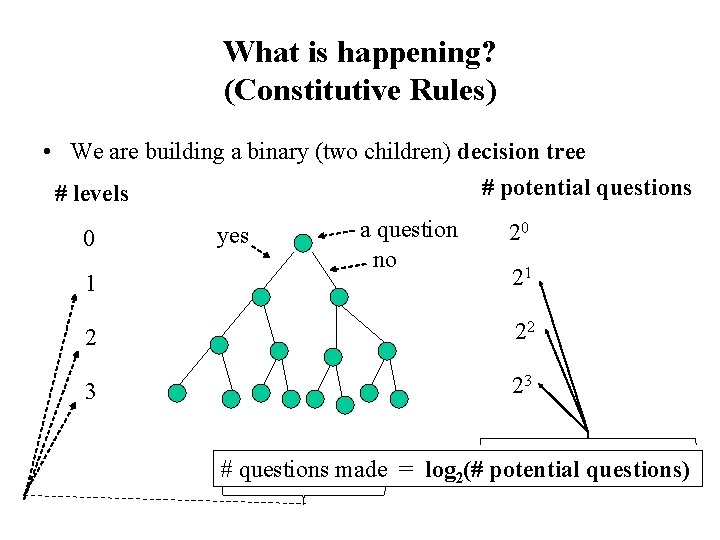

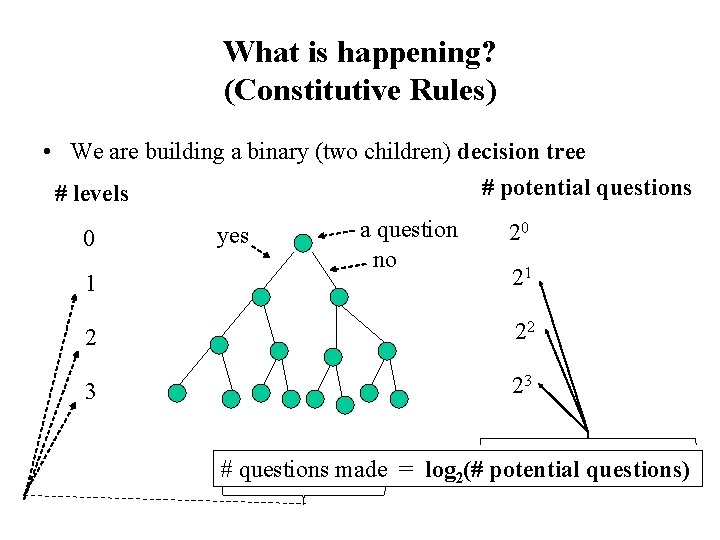

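What is happening? (Constitutive Rules)
• We are building a binary (two children per node) decision tree: each question is a node whose “yes” and “no” answers lead to the next level

| Number of levels | Number of potential questions |
|---|---|
| 0 | $2^0 = 1$ |
| 1 | $2^1 = 2$ |
| 2 | $2^2 = 4$ |
| 3 | $2^3 = 8$ |

Number of questions made = $\log_2$(number of potential questions)
• For example, 20 yes/no questions can distinguish up to $2^{20} = 1{,}048{,}576$ animals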
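Each answer moves one level down the binary tree and halves the set of remaining candidates. The sketch below makes this concrete. It is a Python illustration under two assumptions. First, every question has the hypothetical form "Is it in the first half of the remaining list?". Second, the animal names are placeholders. Real players ask about properties instead.

```python
def play_twenty_questions(candidates, secret):
    """Find `secret` among `candidates` by repeatedly asking the hypothetical
    yes/no question 'Is it in the first half of the remaining list?'.
    Each answer halves the remaining candidates (one level of the tree).
    Returns (guess, number_of_questions_asked)."""
    remaining = list(candidates)
    if secret not in remaining:
        raise ValueError("the secret must be one of the candidates")
    questions = 0
    while len(remaining) > 1:
        mid = len(remaining) // 2
        questions += 1
        if secret in remaining[:mid]:   # answer "yes"
            remaining = remaining[:mid]
        else:                           # answer "no"
            remaining = remaining[mid:]
    return remaining[0], questions

animals = [f"animal_{i}" for i in range(1024)]  # hypothetical list of 2**10 animals
print(play_twenty_questions(animals, "animal_700"))
```

With $2^{10} = 1024$ candidates, exactly $\log_2 1024 = 10$ questions are needed. When the count is not a power of two, at most $\lceil \log_2 N \rceil$ questions are needed.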

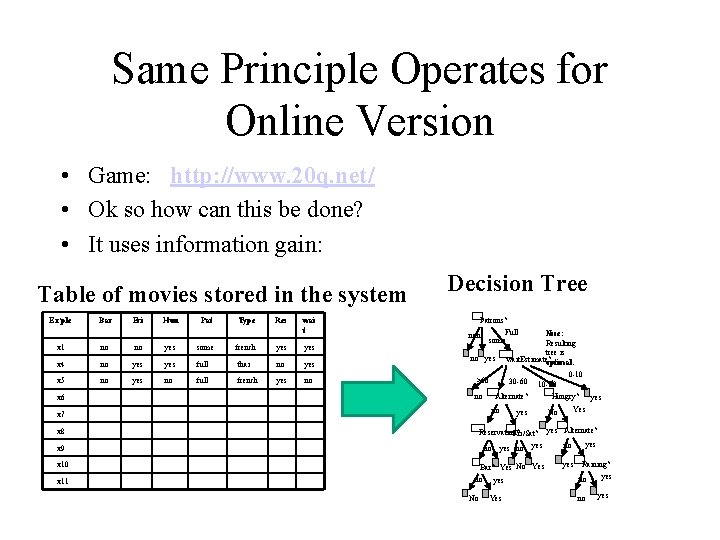

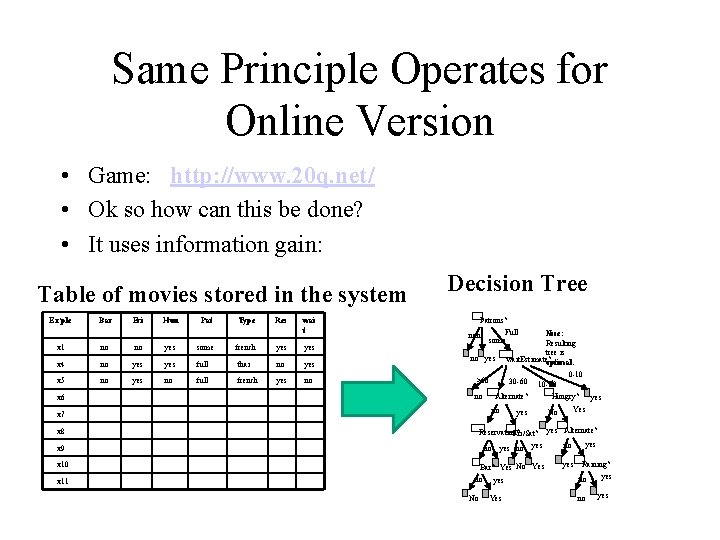

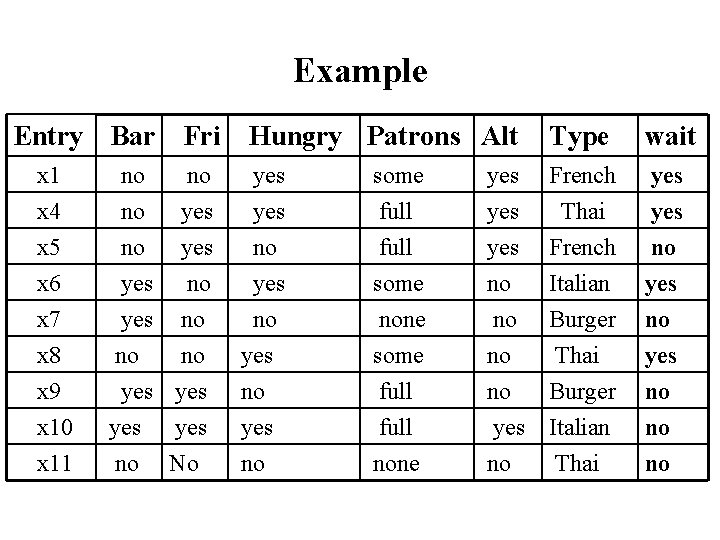

Same Principle Operates for Online Version
• Game: http://www.20q.net/
• OK, so how can this be done?
• It uses information gain:
– Table of movies stored in the system
[Table: example rows x1, x4, x5, x6, … with columns Bar, Fri, Hun, Pat, Type, Res, and wait]
[Figure: decision tree testing Patrons?, WaitEstimate? (>60, 30-60, 10-30, 0-10), Alternate?, Hungry?, Reservation?, Fri/Sat?, Bar?, and Raining?]
Nice: the resulting tree is optimal.
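The slide does not describe how 20q.net works internally, so what follows only illustrates the information-gain idea it mentions. Assume every remaining candidate is equally likely. Then the expected information gained from a yes/no question is the entropy of the way it splits the candidates. A good next question is the one that splits them most evenly. The animals, attributes, and function names are hypothetical.

```python
import math
from collections import Counter

def entropy(counts):
    """Expected information (bits) of a distribution given by raw counts."""
    total = sum(counts)
    return sum((c / total) * math.log2(total / c) for c in counts if c > 0)

def best_question(candidates, questions):
    """candidates: dict name -> dict of question -> bool answer.
    Assuming all candidates are equally likely, pick the question whose
    yes/no split has the highest expected information gain."""
    def gain(q):
        split = Counter(answers[q] for answers in candidates.values())
        return entropy(list(split.values()))
    return max(questions, key=gain)

# Hypothetical knowledge base (not the data used by 20q.net)
animals = {
    "dog":     {"mammal": True,  "flies": False, "lives in water": False},
    "cat":     {"mammal": True,  "flies": False, "lives in water": False},
    "dolphin": {"mammal": True,  "flies": False, "lives in water": True},
    "eagle":   {"mammal": False, "flies": True,  "lives in water": False},
    "shark":   {"mammal": False, "flies": False, "lives in water": True},
    "snake":   {"mammal": False, "flies": False, "lives in water": False},
}
questions = ["mammal", "flies", "lives in water"]
print(best_question(animals, questions))  # mammal
```

"mammal" splits the six animals 3/3, which gives 1 bit. "lives in water" splits them 2/4, about 0.92 bits, and "flies" splits them 1/5, about 0.65 bits.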

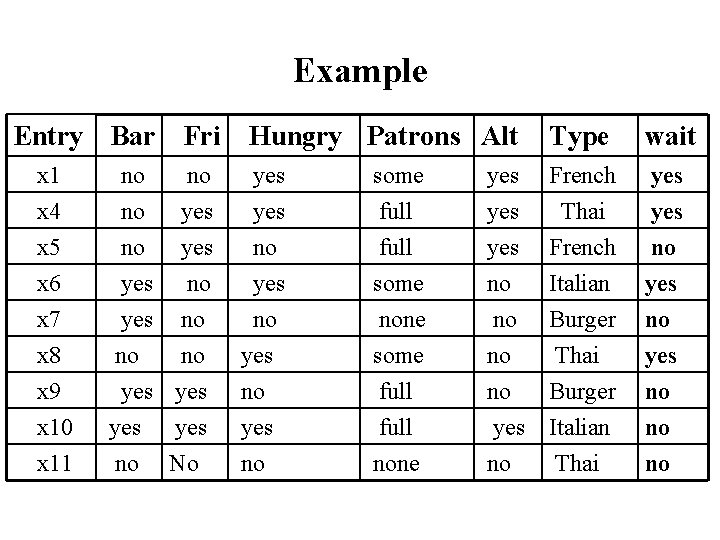

[Table: example entries x1 and x4 through x11, with attributes Bar, Fri, Hungry, Patrons, Alt, and Type, and target wait]

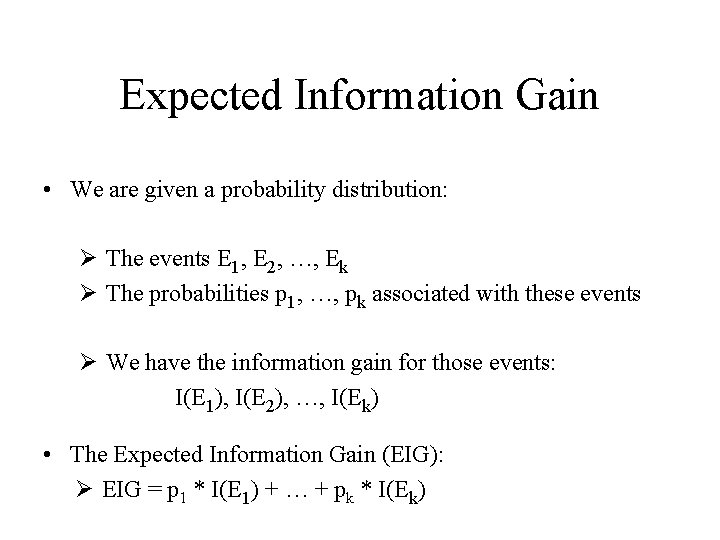

Expected Information Gain
• We are given a probability distribution:
– The events $E_1, E_2, \dots, E_k$
– The probabilities $p_1, \dots, p_k$ associated with these events
– The information gain for those events: $I(E_1), I(E_2), \dots, I(E_k)$
• The Expected Information Gain (EIG):
– $\mathrm{EIG} = p_1 \cdot I(E_1) + \dots + p_k \cdot I(E_k)$
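Combining the EIG formula with $I(E_i) = \log_2(1/p_i)$ gives a one-line function. The sketch below is in Python. The coin distributions come from the earlier slides. The numbers for the "JL" questions are hypothetical: 80% "yes" for breakfast, and 8 equally likely favorite colors.

```python
import math

def expected_information_gain(probs):
    """EIG = p_1 * I(E_1) + ... + p_k * I(E_k), with I(E_i) = log2(1 / p_i).
    Events with p_i = 0 never occur, so they contribute nothing."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

print(expected_information_gain([0.5, 0.5]))    # fair coin
print(expected_information_gain([1.0, 0.0]))    # totally unfair coin
print(expected_information_gain([0.99, 0.01]))  # 99% coin
print(expected_information_gain([0.8, 0.2]))    # hypothetical: breakfast, 80% "yes"
print(expected_information_gain([1 / 8] * 8))   # hypothetical: 8 equally likely colors
```

These come out to roughly 1, 0, 0.081, 0.722, and 3 bits. Under these assumptions, the favorite-color question is the more informative one.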

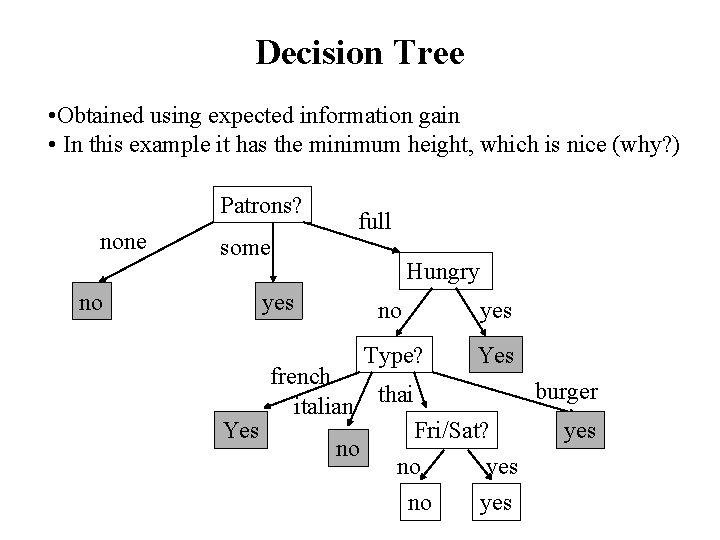

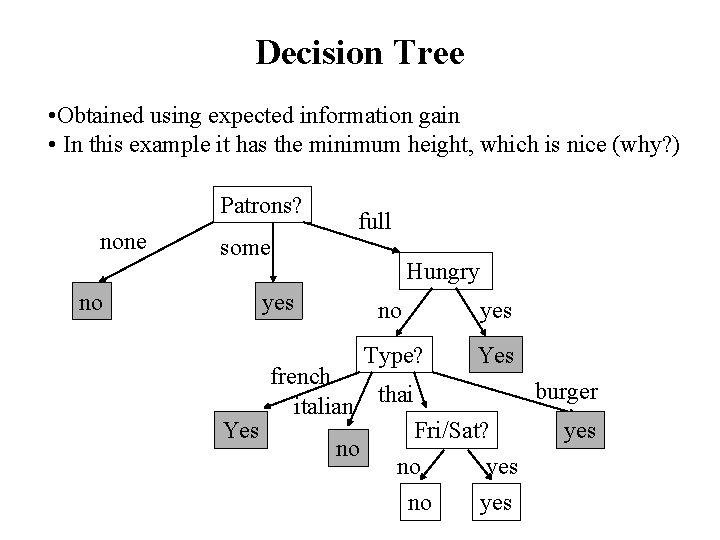

Decision Tree
• Obtained using expected information gain
• In this example it has the minimum height, which is nice (why?)
Tree: Patrons? (none → no; some → yes; full → Hungry? (no → no; yes → Type? (french → yes; italian → no; thai → Fri/Sat? (no → no; yes → yes); burger → yes)))
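The slide does not spell out the construction procedure. A common one is the ID3-style greedy procedure, which we assume here. It splits on the attribute with the highest information gain: the entropy of the labels before the split minus the expected entropy after it. It then recurses on each branch. The Python sketch below uses a tiny hypothetical dataset, not the slide's table. The function names are also hypothetical.

```python
import math
from collections import Counter

def label_entropy(labels):
    """Expected information (bits) of the class labels in a set of examples."""
    total = len(labels)
    return sum((c / total) * math.log2(total / c) for c in Counter(labels).values())

def attribute_gain(examples, attr, target):
    """Entropy of the target before splitting on `attr`, minus the
    probability-weighted entropy of each branch after the split."""
    before = label_entropy([e[target] for e in examples])
    after = 0.0
    for value in {e[attr] for e in examples}:
        subset = [e[target] for e in examples if e[attr] == value]
        after += len(subset) / len(examples) * label_entropy(subset)
    return before - after

def build_tree(examples, attrs, target):
    """Greedy ID3-style construction. Leaves are class labels; internal
    nodes are (attribute, {value: subtree})."""
    labels = [e[target] for e in examples]
    if len(set(labels)) == 1:
        return labels[0]                              # pure branch
    if not attrs:
        return Counter(labels).most_common(1)[0][0]   # majority vote
    best = max(attrs, key=lambda a: attribute_gain(examples, a, target))
    rest = [a for a in attrs if a != best]
    branches = {}
    for value in sorted({e[best] for e in examples}):
        subset = [e for e in examples if e[best] == value]
        branches[value] = build_tree(subset, rest, target)
    return (best, branches)

# Hypothetical toy data (not the slide's restaurant table)
data = [
    {"patrons": "none", "hungry": "no",  "wait": "no"},
    {"patrons": "none", "hungry": "yes", "wait": "no"},
    {"patrons": "some", "hungry": "no",  "wait": "yes"},
    {"patrons": "some", "hungry": "yes", "wait": "yes"},
    {"patrons": "full", "hungry": "yes", "wait": "yes"},
    {"patrons": "full", "hungry": "no",  "wait": "no"},
]
print(build_tree(data, ["patrons", "hungry"], "wait"))
```

On this toy data, patrons has the higher gain (about 0.67 bits versus about 0.08 for hungry), so it becomes the root. Only the full branch needs a second test, on hungry. The greedy choice tends to produce shallow trees, but it is not guaranteed to find the minimum-height tree in general.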

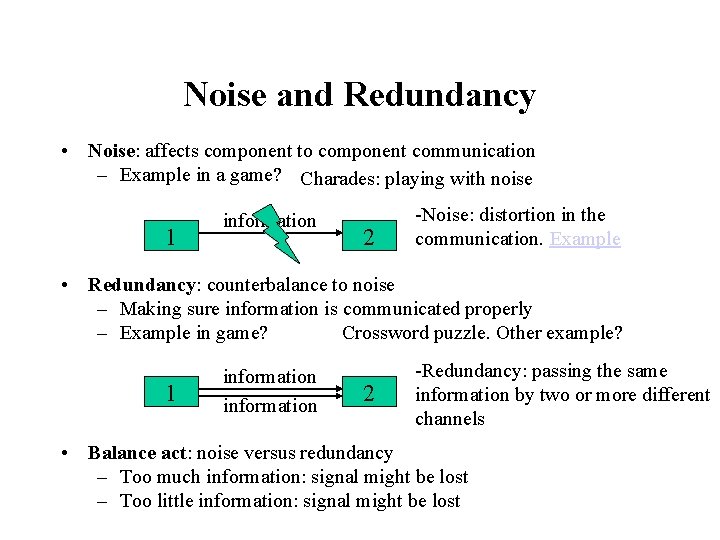

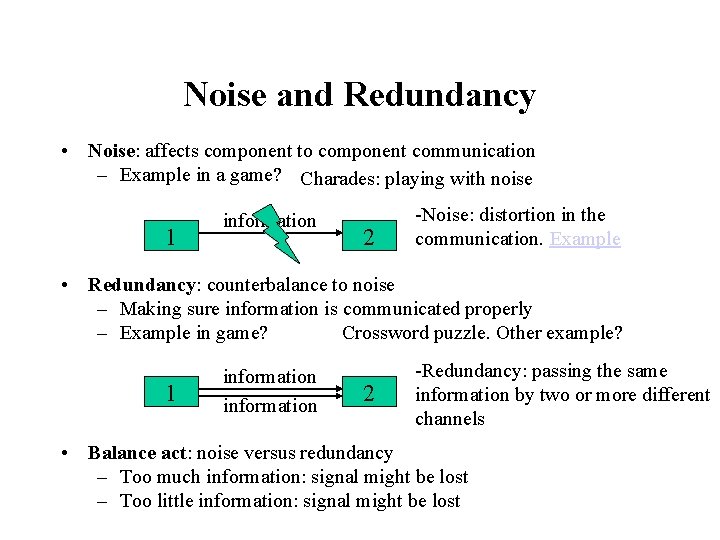

Noise and Redundancy
• Noise: affects component-to-component communication (1 → information → 2)
– Noise: distortion in the communication
– Example in a game? Charades: playing with noise
• Redundancy: a counterbalance to noise
– Making sure information is communicated properly (1 → information → 2)
– Redundancy: passing the same information through two or more different channels
– Example in a game? Crossword puzzle. Other examples?
• Balancing act: noise versus redundancy
– Too much information: the signal might be lost
– Too little information: the signal might be lost
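A simple way to see redundancy counterbalancing noise is a repetition code with majority voting. This is a standard textbook scheme that the slide itself does not mention. Below is a Python sketch. To keep the example reproducible, noise is modeled by flipping bits at chosen positions rather than at random, and all names are hypothetical.

```python
def encode(bits, copies=3):
    """Redundancy: send every bit `copies` times."""
    return [b for b in bits for _ in range(copies)]

def add_noise(signal, flip_positions):
    """Noise: flip the bits at the given positions (a deterministic
    stand-in for a noisy channel)."""
    return [1 - b if i in flip_positions else b for i, b in enumerate(signal)]

def decode(signal, copies=3):
    """Recover each original bit by majority vote over its copies."""
    out = []
    for start in range(0, len(signal), copies):
        block = signal[start:start + copies]
        out.append(1 if sum(block) > len(block) / 2 else 0)
    return out

message = [1, 0, 1, 1]
sent = encode(message)                      # 12 bits on the wire instead of 4
one_flip_each = add_noise(sent, {0, 4, 8})  # at most one flipped copy per bit
print(decode(one_flip_each) == message)     # True: redundancy absorbs the noise
two_flips = add_noise(sent, {0, 1})         # two copies of the first bit flipped
print(decode(two_flips) == message)         # False: too much noise for this redundancy
```

The trade-off mirrors the slide's balancing act. More copies tolerate more noise, but they also multiply how much must be sent.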