
Information Organization: Classification

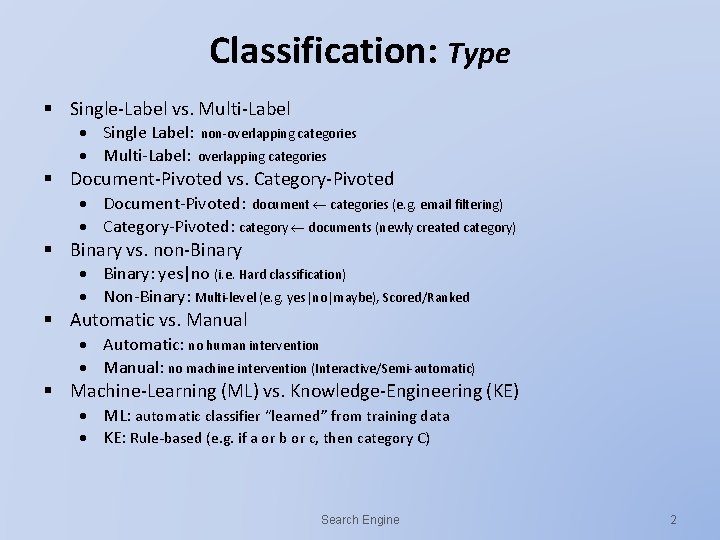

Classification: Type

- Single-Label vs. Multi-Label
  - Single-Label: non-overlapping categories
  - Multi-Label: overlapping categories
- Document-Pivoted vs. Category-Pivoted
  - Document-Pivoted: document → categories (e.g., email filtering)
  - Category-Pivoted: category → documents (e.g., a newly created category)
- Binary vs. Non-Binary
  - Binary: yes|no (i.e., hard classification)
  - Non-Binary: multi-level (e.g., yes|no|maybe), scored/ranked
- Automatic vs. Manual
  - Automatic: no human intervention
  - Manual: no machine intervention
  - In between: interactive/semi-automatic
- Machine Learning (ML) vs. Knowledge Engineering (KE)
  - ML: an automatic classifier "learned" from training data
  - KE: rule-based (e.g., if a or b or c, then category C)

Search Engine 2
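To make the KE (rule-based) and single- vs. multi-label distinctions concrete, here is a minimal Python sketch. The keyword rules in `RULES` and the functions `multi_label` and `single_label` are hypothetical illustrations, not part of the original material; each rule fires if any of its keywords appears, mirroring "if a or b or c, then category C".

```python
# Hypothetical KE-style rules: "if any keyword appears, then the category applies".
RULES = {
    "finance": {"bank", "market", "stock"},
    "sports": {"goal", "match", "team"},
}


def multi_label(text, rules=RULES):
    """Multi-label: return every category whose rule fires (categories may overlap)."""
    terms = set(text.lower().split())
    return {cat for cat, keywords in rules.items() if terms & keywords}


def single_label(text, rules=RULES):
    """Single-label: return only the category with the most matching keywords,
    or None if no rule fires (ties go to the alphabetically first category)."""
    terms = set(text.lower().split())
    best = max(sorted(rules), key=lambda cat: len(terms & rules[cat]))
    return best if terms & rules[best] else None


if __name__ == "__main__":
    doc = "Market rally lifts bank stock after team goal"
    print(multi_label(doc))   # both rules fire
    print(single_label(doc))  # finance has 3 keyword hits, sports has 2
```

Picking the category with the most keyword hits is only one assumed way to force a single label; a real system might rank or threshold the scores instead.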

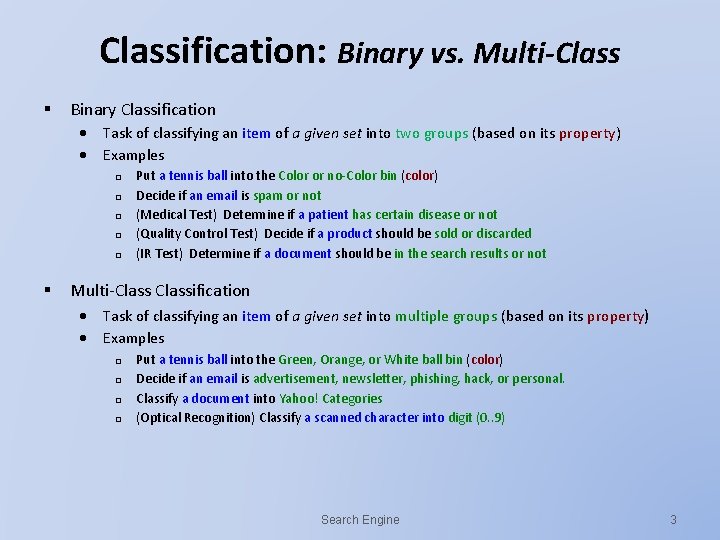

Classification: Binary vs. Multi-Class

- Binary Classification
  - Task of classifying an item of a given set into two groups (based on its properties)
  - Examples
    - (Color) Put a tennis ball into the color or no-color bin
    - Decide if an email is spam or not
    - (Medical test) Determine whether a patient has a certain disease
    - (Quality control test) Decide if a product should be sold or discarded
    - (IR test) Determine if a document should be in the search results
- Multi-Class Classification
  - Task of classifying an item of a given set into multiple groups (based on its properties)
  - Examples
    - (Color) Put a tennis ball into the green, orange, or white ball bin
    - Decide if an email is an advertisement, newsletter, phishing, hack, or personal
    - Classify a document into Yahoo! categories
    - (Optical recognition) Classify a scanned character as a digit (0..9)

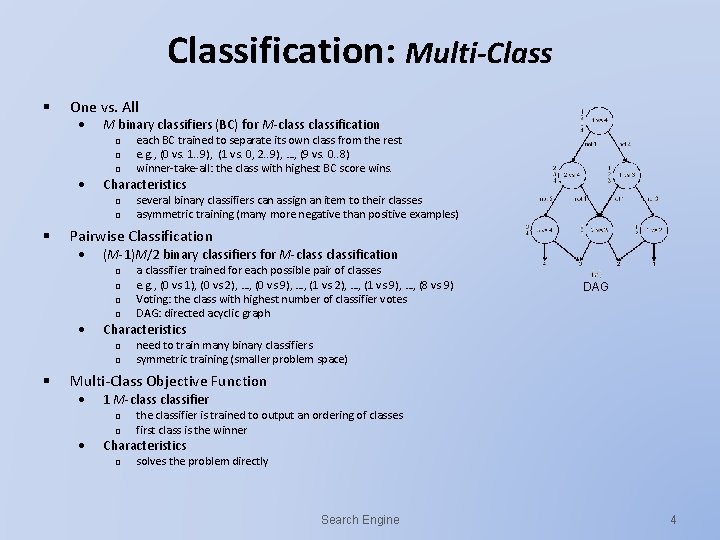

Classification: Multi-Class

- One vs. All
  - M binary classifiers (BC) for M-class classification
    - each BC is trained to separate its own class from the rest, e.g., (0 vs. 1..9), (1 vs. 0, 2..9), …, (9 vs. 0..8)
    - winner-take-all: the class with the highest BC score wins
  - Characteristics
    - several binary classifiers can assign an item to their classes
    - asymmetric training (many more negative than positive examples)
- Pairwise Classification
  - M(M-1)/2 binary classifiers for M-class classification
    - a classifier is trained for each possible pair of classes, e.g., (0 vs. 1), (0 vs. 2), …, (0 vs. 9), …, (1 vs. 2), …, (1 vs. 9), …, (8 vs. 9)
    - Voting: the class with the highest number of classifier votes wins
    - DAG: the pairwise decisions are combined in a directed acyclic graph
  - Characteristics
    - need to train many binary classifiers
    - symmetric training (smaller problem space)
- Multi-Class Objective Function
  - 1 M-class classifier
    - the classifier is trained to output an ordering of the classes
    - the first class is the winner
  - Characteristics
    - solves the problem directly
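The one-vs.-all and pairwise-voting schemes can be sketched in Python on a toy problem. Everything here is an assumption for illustration: three classes sit at hand-picked centroids on a number line (`CENTROIDS`), and each "binary classifier" is a hand-written distance comparison rather than a trained model. `one_vs_all_predict` applies winner-take-all over M scores; `pairwise_predict` runs all M(M-1)/2 pairwise decisions and takes a majority vote.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy problem: each class is represented by a centroid on a line.
CENTROIDS = {0: 0.0, 1: 5.0, 2: 10.0}


def one_vs_all_predict(x, centroids=CENTROIDS):
    """M binary scorers, one per class ('class c vs. the rest'); winner-take-all."""
    # Score of the 'c vs. rest' classifier: higher when x is closer to c's centroid.
    scores = {c: -abs(x - mu) for c, mu in centroids.items()}
    return max(scores, key=scores.get)


def pairwise_predict(x, centroids=CENTROIDS):
    """M(M-1)/2 binary classifiers, one per pair of classes; majority voting."""
    votes = Counter()
    for a, b in combinations(sorted(centroids), 2):
        # The (a vs. b) classifier votes for whichever of the two is closer.
        winner = a if abs(x - centroids[a]) <= abs(x - centroids[b]) else b
        votes[winner] += 1
    return votes.most_common(1)[0][0]


def pairwise_classifier_count(m):
    """Number of binary classifiers needed by the pairwise scheme."""
    return m * (m - 1) // 2


if __name__ == "__main__":
    print(one_vs_all_predict(4.0), pairwise_predict(4.0))
    print(pairwise_classifier_count(10))  # digits 0..9
```

`Counter.most_common` breaks vote ties by the order in which classes first received a vote, so ties are resolved arbitrarily in this sketch.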

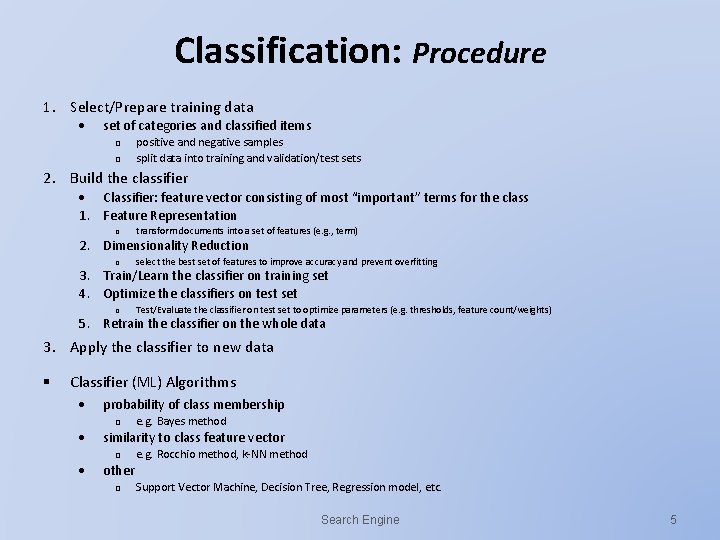

Classification: Procedure

1. Select/prepare a training data set of categories and classified items
   - positive and negative samples
   - split the data into training and validation/test sets
2. Build the classifier
   - Classifier: a feature vector consisting of the most "important" terms for the class
   1. Feature representation: transform documents into a set of features (e.g., terms)
   2. Dimensionality reduction: select the best set of features to improve accuracy and prevent overfitting
   3. Train/learn the classifier on the training set
   4. Optimize the classifier on the test set: test/evaluate it to optimize parameters (e.g., thresholds, feature count/weights)
   5. Retrain the classifier on the whole data set
3. Apply the classifier to new data

- Classifier (ML) algorithms
  - probability of class membership, e.g., Bayes method
  - similarity to the class feature vector, e.g., Rocchio method, k-NN method
  - other: Support Vector Machine, Decision Tree, Regression model, etc.
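A compact Python sketch of this procedure for one binary category follows. It is an illustration under assumed choices, not a reference implementation: documents are sets of lower-cased terms, the "most important" terms are assumed to be those whose document frequency in positive samples most exceeds that in negative samples, and the only tuned parameter is a score threshold. The data set `SAMPLES` and all function names are hypothetical.

```python
from collections import Counter

# Hypothetical toy data: (text, is_positive) for one binary category.
SAMPLES = [
    ("cheap pills offer", True),
    ("meeting agenda today", False),
    ("cheap offer now", True),
    ("project meeting notes", False),
    ("win cheap prize", True),
    ("agenda for project", False),
    ("offer win now", True),
    ("notes from meeting", False),
]


def features(text):
    """2.1 Feature representation: the set of lower-cased terms."""
    return set(text.lower().split())


def train(samples, n_terms):
    """2.2-2.3 Keep the n_terms terms whose document frequency in positive
    samples most exceeds that in negative samples (the class feature vector)."""
    pos = Counter(t for text, y in samples if y for t in features(text))
    neg = Counter(t for text, y in samples if not y for t in features(text))
    ranked = sorted(pos, key=lambda t: (pos[t] - neg[t], t), reverse=True)
    return set(ranked[:n_terms])


def predict(text, class_terms, threshold):
    """Positive if enough class terms occur in the document."""
    return len(features(text) & class_terms) >= threshold


def tune_threshold(class_terms, validation):
    """2.4 Pick the threshold with the best accuracy on held-out samples."""
    def accuracy(th):
        return sum(predict(text, class_terms, th) == y for text, y in validation)
    return max(range(len(class_terms) + 2), key=accuracy)


def build_classifier(samples, n_terms=3):
    # 1. Deterministic split: every third sample goes to validation.
    validation = [s for i, s in enumerate(samples) if i % 3 == 2]
    training = [s for i, s in enumerate(samples) if i % 3 != 2]
    threshold = tune_threshold(train(training, n_terms), validation)
    # 2.5 Retrain on the whole data set, keeping the tuned threshold.
    return train(samples, n_terms), threshold


if __name__ == "__main__":
    class_terms, threshold = build_classifier(SAMPLES)
    # 3. Apply the classifier to new data.
    print(predict("Cheap offer inside", class_terms, threshold))
```

The validation split is deterministic (every third sample) so the result is reproducible; a real setup would normally shuffle, use far more data, and possibly cross-validate.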

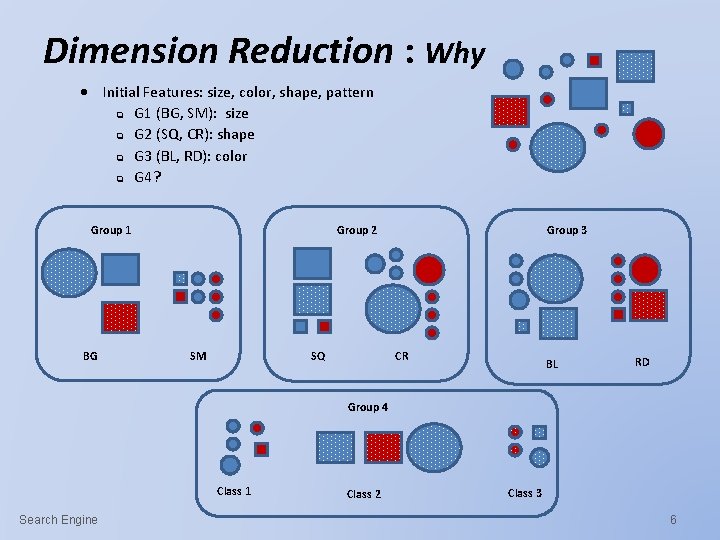

Dimension Reduction: Why

- Initial features: size, color, shape, pattern
  - G1 (BG, SM): size
  - G2 (SQ, CR): shape
  - G3 (BL, RD): color
  - G4: ?

Figure: items arranged into Groups 1 to 4 and Classes 1 to 3.

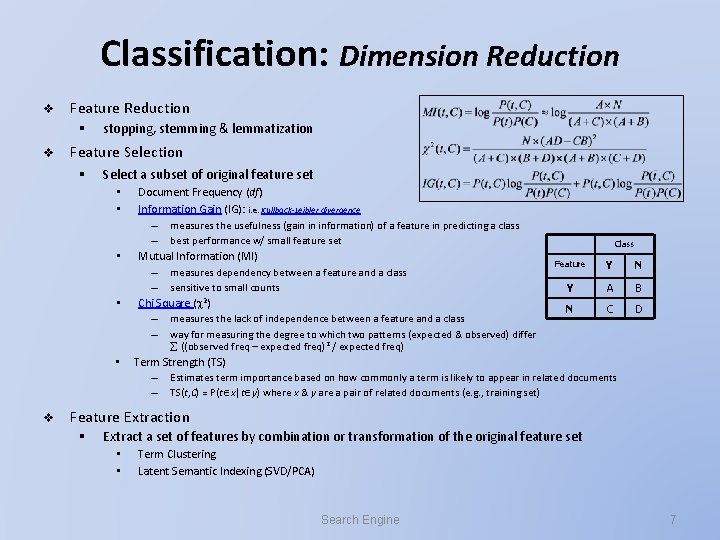

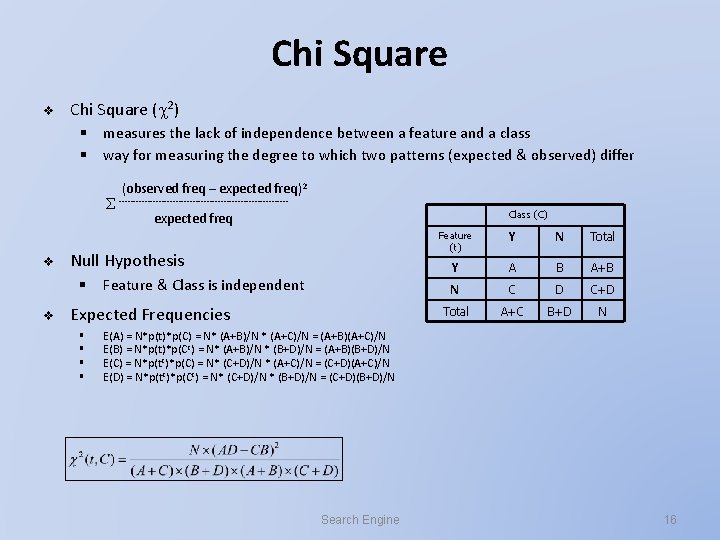

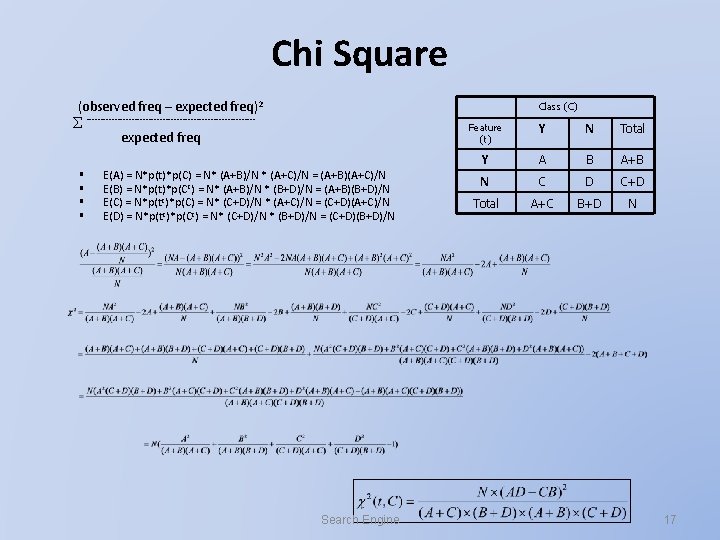

Classification: Dimension Reduction

- Feature Reduction
  - stopping, stemming & lemmatization
- Feature Selection
  - Select a subset of the original feature set
    - Document Frequency (df)
    - Information Gain (IG): the expected Kullback-Leibler divergence between the class distribution given the feature and the overall class distribution
      - measures the usefulness (gain in information) of a feature in predicting a class
      - best performance with a small feature set
    - Mutual Information (MI)
      - measures the dependency between a feature and a class
      - sensitive to small counts
    - Chi-square (χ²)
      - measures the lack of independence between a feature and a class
      - a way of measuring the degree to which two patterns (expected & observed) differ:
        $\chi^2 = \sum \frac{(\text{observed freq} - \text{expected freq})^2}{\text{expected freq}}$ over the cells of the feature/class table

        | Feature / Class | Y | N |
        |---|---|---|
        | Y | A | B |
        | N | C | D |
    - Term Strength (TS)
      - estimates term importance based on how likely a term is to appear in related documents
      - $TS(t, C) = P(t \in x \mid t \in y)$, where x and y are a pair of related documents (e.g., from the training set)
- Feature Extraction
  - Extract a set of features by combining or transforming the original feature set
    - Term Clustering
    - Latent Semantic Indexing (SVD/PCA)
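Two of the selection criteria above are easy to compute directly. The Python sketch below keeps terms whose document frequency reaches a cutoff, and estimates mutual information from the A/B/C counts of the 2x2 table with the common estimate $MI(t, C) = \log_2 \frac{A \cdot N}{(A+B)(A+C)}$. The function names and the cutoff are hypothetical.

```python
import math
from collections import Counter


def document_frequency(docs):
    """df(t): number of documents (given as term collections) that contain term t."""
    return Counter(t for terms in docs for t in set(terms))


def select_by_df(docs, min_df):
    """Keep only terms that occur in at least min_df documents."""
    return {t for t, n in document_frequency(docs).items() if n >= min_df}


def mutual_information(a, b, c, n):
    """Estimate MI(t, C) = log2(P(t, C) / (P(t) P(C))) from the 2x2 table:
    a = docs with t in C, b = docs with t not in C,
    c = docs in C without t, n = total number of docs."""
    if a == 0:
        return float("-inf")  # t never co-occurs with C
    return math.log2(a * n / ((a + b) * (a + c)))


if __name__ == "__main__":
    print(select_by_df([{"a", "b"}, {"a"}, {"c"}], min_df=2))
    print(mutual_information(5, 5, 5, 20))    # independent feature and class
    print(mutual_information(1, 0, 0, 1000))  # one lucky co-occurrence
```

With A = B = C = D, the estimate is 0 (feature and class independent). A term that appears in a single document, which happens to be in the class, scores log2(N): `mutual_information(1, 0, 0, 1000)` is about 9.97, far above `mutual_information(10, 0, 0, 20)` = 1.0, which illustrates why MI is sensitive to small counts.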

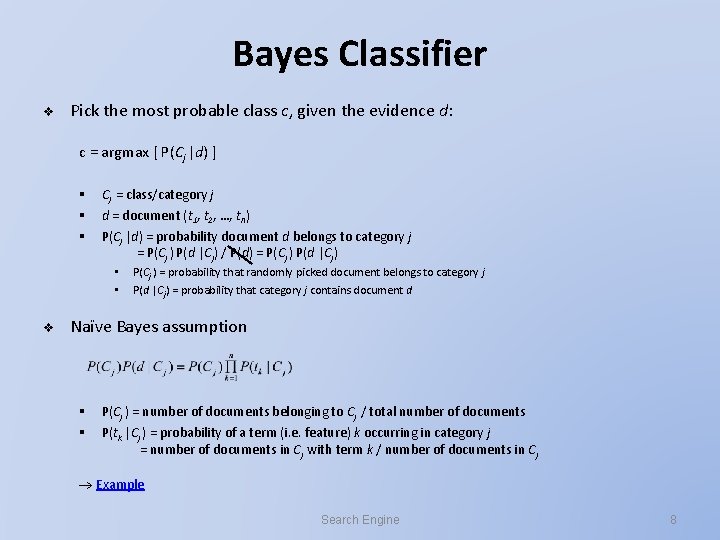

Bayes Classifier

- Pick the most probable class c, given the evidence d:
  $$c = \arg\max_{j} P(C_j \mid d)$$
  - $C_j$ = class/category j
  - $d$ = document $(t_1, t_2, \dots, t_n)$
  - $P(C_j \mid d)$ = probability that document d belongs to category j:
    $$P(C_j \mid d) = \frac{P(C_j)\,P(d \mid C_j)}{P(d)} \propto P(C_j)\,P(d \mid C_j)$$
    ($P(d)$ is the same for every class, so it can be dropped when comparing classes)
    - $P(C_j)$ = probability that a randomly picked document belongs to category j
    - $P(d \mid C_j)$ = probability of observing document d in category j
- Naïve Bayes assumption: terms occur independently of each other given the class, so $P(d \mid C_j) = \prod_{k=1}^{n} P(t_k \mid C_j)$
  - $P(C_j)$ = number of documents belonging to $C_j$ / total number of documents
  - $P(t_k \mid C_j)$ = probability of term (i.e., feature) k occurring in category j = number of documents in $C_j$ with term k / number of documents in $C_j$
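The estimates above translate into a short Python sketch. It follows the slide's definitions (priors from document counts, $P(t_k \mid C_j)$ from the fraction of class documents containing the term, product over the terms of d) but sums logarithms to avoid numeric underflow, and it adds add-one (Laplace) smoothing, an assumption not stated on the slide, so that a term unseen in a class does not force the whole product to zero. The toy documents and function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels):
    """Estimate P(C_j) and, per class, how many documents contain each term."""
    class_docs = Counter(labels)       # number of documents in each class
    term_docs = defaultdict(Counter)   # class -> term -> docs in class with term
    for terms, label in zip(docs, labels):
        for t in set(terms):
            term_docs[label][t] += 1
    priors = {c: k / len(docs) for c, k in class_docs.items()}
    return priors, class_docs, term_docs


def classify_nb(doc, model):
    """argmax_j of log P(C_j) + sum_k log P(t_k | C_j) over the document's terms."""
    priors, class_docs, term_docs = model

    def log_score(c):
        score = math.log(priors[c])
        for t in set(doc):
            # Add-one smoothing (an addition to the slide's estimate).
            score += math.log((term_docs[c][t] + 1) / (class_docs[c] + 2))
        return score

    return max(priors, key=log_score)


DOCS = [["cheap", "pills"], ["cheap", "offer"], ["meeting", "agenda"], ["project", "meeting"]]
LABELS = ["spam", "spam", "ham", "ham"]

if __name__ == "__main__":
    model = train_nb(DOCS, LABELS)
    print(classify_nb(["cheap", "offer"], model))
    print(classify_nb(["meeting"], model))
```

As on the slide, only the terms present in d enter the product; the full Bernoulli model would also multiply in $1 - P(t_k \mid C_j)$ for vocabulary terms absent from d.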

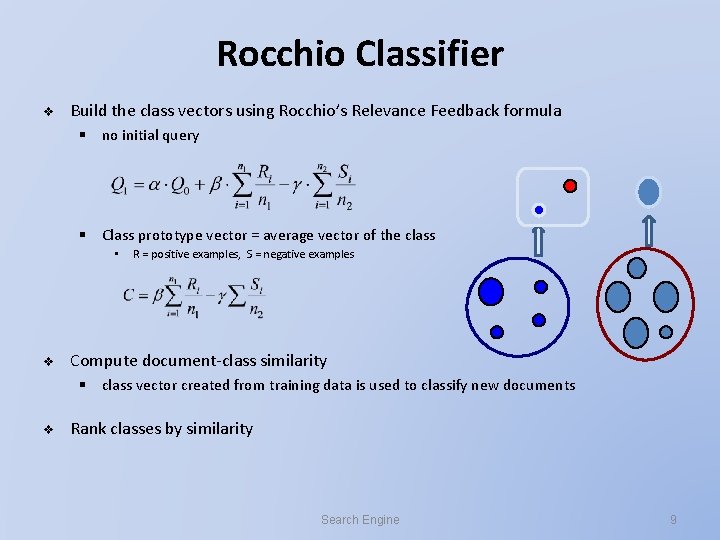

Rocchio Classifier

- Build the class vectors using Rocchio's relevance feedback formula
  - no initial query
  - class prototype vector = average vector of the class
    - R = positive examples, S = negative examples
- Compute document-class similarity
  - the class vectors created from the training data are used to classify new documents
- Rank classes by similarity
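One way to realize this in Python, assuming the usual Rocchio form with the query term dropped, $\vec{c}_j = \beta \frac{1}{|R|}\sum_{\vec{d} \in R}\vec{d} - \gamma \frac{1}{|S|}\sum_{\vec{d} \in S}\vec{d}$, with hypothetical weights β = 1.0 and γ = 0.25, negatives S taken as all training documents of the other classes, and cosine similarity for ranking. The term vectors and function names are made up for illustration.

```python
import math


def centroid(vectors):
    """Component-wise average of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def prototype(pos, neg, beta=1.0, gamma=0.25):
    """Rocchio class vector: beta * centroid(R) - gamma * centroid(S); no query term."""
    cp = centroid(pos)
    cn = centroid(neg) if neg else [0.0] * len(cp)
    return [beta * p - gamma * q for p, q in zip(cp, cn)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_classes(doc, training):
    """training: class -> list of term vectors. The negatives S of a class
    are all training vectors of the other classes."""
    protos = {}
    for c, pos in training.items():
        neg = [v for other, vs in training.items() if other != c for v in vs]
        protos[c] = prototype(pos, neg)
    return sorted(protos, key=lambda c: cosine(doc, protos[c]), reverse=True)


# Hypothetical term vectors over the vocabulary (goal, vote, team).
TRAINING = {
    "sports": [[3, 0, 1], [2, 0, 0]],
    "politics": [[0, 3, 1], [0, 2, 0]],
}

if __name__ == "__main__":
    print(rank_classes([1, 0, 1], TRAINING))
```

Because the prototypes are computed once from the training data, classifying a new document costs only one similarity per class, which is the main practical appeal of the method.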

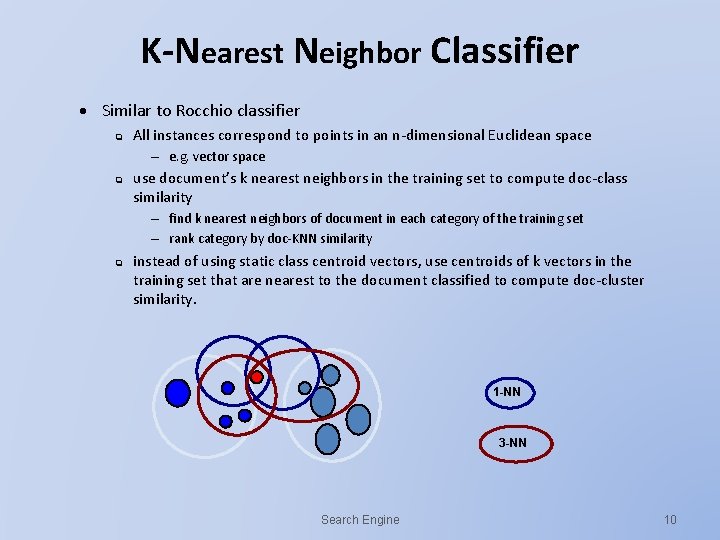

K-Nearest Neighbor Classifier

- Similar to the Rocchio classifier
  - all instances correspond to points in an n-dimensional Euclidean space, e.g., the vector space
  - use the document's k nearest neighbors in the training set to compute document-class similarity
    - find the k nearest neighbors of the document in each category of the training set
    - rank categories by document-kNN similarity
  - instead of using static class centroid vectors, use the centroid of the k training vectors nearest to the document being classified to compute document-cluster similarity

Figure: 1-NN and 3-NN.
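A minimal Python sketch of this centroid-of-neighbors variant, assuming Euclidean distance both for finding neighbors and for scoring (a smaller distance counts as higher similarity). The function name and the training vectors are hypothetical; `math.dist` requires Python 3.8 or later.

```python
import math


def knn_rank(doc, training, k=3):
    """Rank categories by the distance between doc and the centroid of its
    k nearest neighbours within each category (closer = more similar)."""
    scores = {}
    for category, vectors in training.items():
        # k nearest training vectors of this category (Euclidean distance).
        nearest = sorted(vectors, key=lambda v: math.dist(doc, v))[:k]
        center = [sum(col) / len(nearest) for col in zip(*nearest)]
        scores[category] = -math.dist(doc, center)
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical 2-D training data; [9, 9] is an outlier labelled A.
TRAINING = {
    "A": [[0, 0], [0, 1], [1, 0], [9, 9]],
    "B": [[5, 5], [6, 5], [5, 6]],
}

if __name__ == "__main__":
    print(knn_rank([1, 1], TRAINING))
    print(knn_rank([5, 5], TRAINING, k=1))
```

For the document [1, 1], only the three A vectors nearest to it enter A's centroid, so the outlier [9, 9] is ignored, whereas a static class centroid would be pulled toward it. The more common majority-vote form of k-NN is a different variant.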

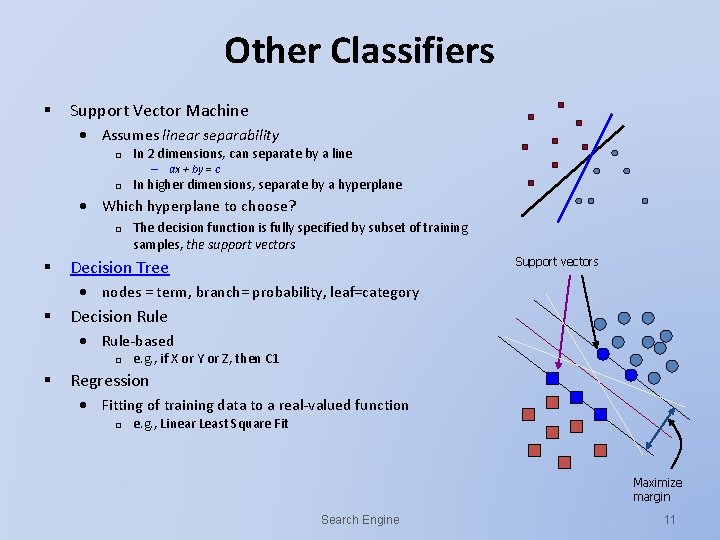

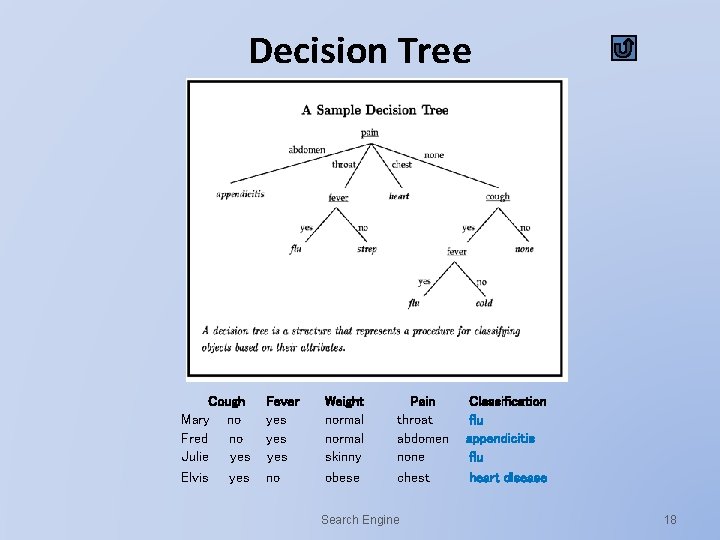

Other Classifiers

- Support Vector Machine
  - assumes linear separability
    - in 2 dimensions, the classes can be separated by a line, $ax + by = c$
    - in higher dimensions, they are separated by a hyperplane
  - Which hyperplane to choose? The one that maximizes the margin
    - the decision function is fully specified by a subset of the training samples, the support vectors
- Decision Tree
  - nodes = terms, branches = probabilities, leaves = categories
- Decision Rule-based
  - e.g., if X or Y or Z, then C1
- Regression
  - fitting of training data to a real-valued function
    - e.g., Linear Least Squares Fit
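The margin criterion can be checked numerically. The Python sketch below does not train an SVM (no optimizer is included); it only evaluates a given hyperplane $w \cdot x = c$, reporting which side a point falls on and the geometric margin $\min_i y_i (w \cdot x_i - c) / \lVert w \rVert$ over labelled points. An SVM solver searches for the (w, c) that maximizes this quantity. The points, labels, and candidate lines are hypothetical.

```python
import math


def decision(w, c, x):
    """Side of the hyperplane w . x = c that point x falls on: +1 or -1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > c else -1


def margin(w, c, points, labels):
    """Geometric margin of the hyperplane on labelled data: the smallest
    y * (w . x - c) / ||w||. Positive only if the hyperplane separates the data."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(
        y * (sum(wi * xi for wi, xi in zip(w, x)) - c) / norm
        for x, y in zip(points, labels)
    )


POINTS = [(0, 0), (1, 0), (3, 3), (4, 3)]
LABELS = [-1, -1, 1, 1]

if __name__ == "__main__":
    print(margin((1, 1), 3, POINTS, LABELS))  # line x + y = 3
    print(margin((1, 0), 2, POINTS, LABELS))  # line x = 2
```

Both candidate lines separate the four points, but x + y = 3 leaves a margin of √2 ≈ 1.41 while x = 2 leaves only 1, so the maximum-margin criterion prefers the former.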

Classification: Problems

- Noisy data
  - training data often contains noise
    - false positives and false negatives, e.g., medical tests
- Inconsistency
  - Classification structure: static, ordered
  - Categorization: is a tomato in the fruit or the vegetable category?
  - Category labels: retrieval, search, IR
  - Indexing inconsistency/errors
- Resource intensive
- Solutions?
  - Faceted classification
  - Fusion of IR & IO

SUPPLEMENTAL MATERIAL (optional): for curious-minded and advanced learners

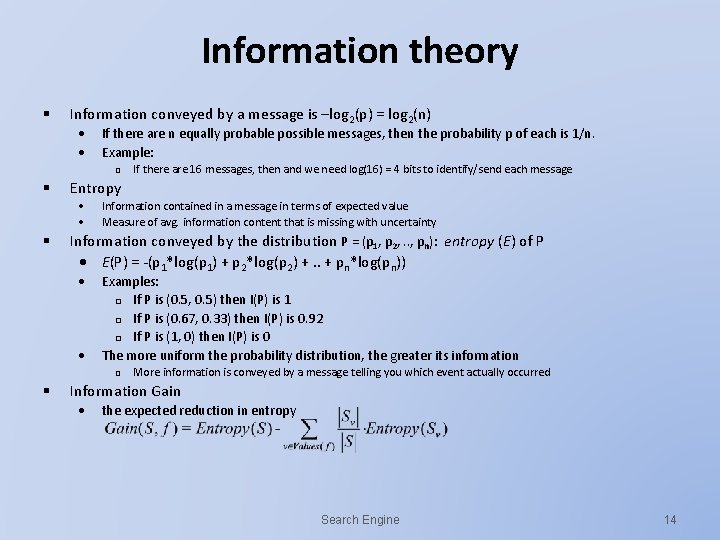

Information Theory

- Information conveyed by a message is $-\log_2(p) = \log_2(n)$
  - if there are n equally probable possible messages, then the probability p of each is 1/n
  - Example: if there are 16 messages, then we need $\log_2(16) = 4$ bits to identify/send each message
- Entropy
  - information contained in a message in terms of expected value
  - measure of the average information content that is missing when the outcome is uncertain
  - information conveyed by the distribution $P = (p_1, p_2, \dots, p_n)$ is the entropy E of P:
    $$E(P) = -\left(p_1 \log_2 p_1 + p_2 \log_2 p_2 + \dots + p_n \log_2 p_n\right)$$
  - Examples:
    - if P is (0.5, 0.5), then E(P) is 1
    - if P is (2/3, 1/3) ≈ (0.67, 0.33), then E(P) ≈ 0.92
    - if P is (1, 0), then E(P) is 0
  - the more uniform the probability distribution, the greater its entropy, i.e., the more information is conveyed by a message telling you which event actually occurred
- Information Gain
  - the expected reduction in entropy
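The two formulas above fit in a few lines of Python. Base-2 logarithms give results in bits, and zero probabilities are skipped following the usual convention $0 \cdot \log_2 0 = 0$; the function names are hypothetical.

```python
import math


def message_bits(n):
    """Information in one of n equally probable messages: -log2(1/n) = log2(n)."""
    return math.log2(n)


def entropy(probs):
    """E(P) = -(p1*log2(p1) + ... + pn*log2(pn)), taking 0*log2(0) as 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


if __name__ == "__main__":
    print(message_bits(16))
    for dist in ([0.5, 0.5], [2 / 3, 1 / 3], [1, 0]):
        print(dist, round(entropy(dist), 3))
```

For example, `message_bits(16)` is 4.0, `entropy([0.5, 0.5])` is 1.0, and `entropy([2/3, 1/3])` is about 0.918, matching the examples on the slide.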

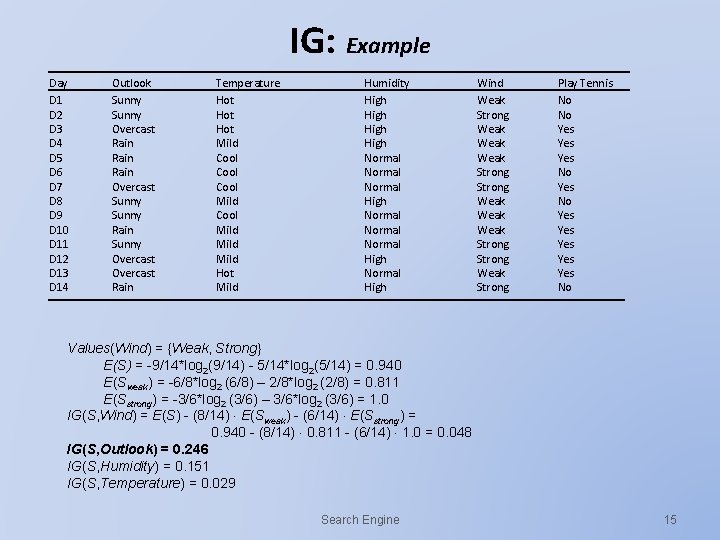

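IG: Example

| Day | Outlook | Temperature | Humidity | Wind | Play Tennis |
|---|---|---|---|---|---|
| D1 | Sunny | Hot | High | Weak | No |
| D2 | Sunny | Hot | High | Strong | No |
| D3 | Overcast | Hot | High | Weak | Yes |
| D4 | Rain | Mild | High | Weak | Yes |
| D5 | Rain | Cool | Normal | Weak | Yes |
| D6 | Rain | Cool | Normal | Strong | No |
| D7 | Overcast | Cool | Normal | Strong | Yes |
| D8 | Sunny | Mild | High | Weak | No |
| D9 | Sunny | Cool | Normal | Weak | Yes |
| D10 | Rain | Mild | Normal | Weak | Yes |
| D11 | Sunny | Mild | Normal | Strong | Yes |
| D12 | Overcast | Mild | High | Strong | Yes |
| D13 | Overcast | Hot | Normal | Weak | Yes |
| D14 | Rain | Mild | High | Strong | No |

- Values(Wind) = {Weak, Strong}

$$E(S) = -\tfrac{9}{14}\log_2\tfrac{9}{14} - \tfrac{5}{14}\log_2\tfrac{5}{14} = 0.940$$

$$E(S_{weak}) = -\tfrac{6}{8}\log_2\tfrac{6}{8} - \tfrac{2}{8}\log_2\tfrac{2}{8} = 0.811$$

$$E(S_{strong}) = -\tfrac{3}{6}\log_2\tfrac{3}{6} - \tfrac{3}{6}\log_2\tfrac{3}{6} = 1.0$$

$$IG(S, \mathrm{Wind}) = E(S) - \tfrac{8}{14}E(S_{weak}) - \tfrac{6}{14}E(S_{strong}) = 0.940 - \tfrac{8}{14}(0.811) - \tfrac{6}{14}(1.0) = 0.048$$

- IG(S, Outlook) = 0.246
- IG(S, Humidity) = 0.151
- IG(S, Temperature) = 0.029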
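The calculation can be reproduced for all four attributes with a short Python sketch. The rows in `DAYS` are the 14 days of the example (the classic PlayTennis data); `entropy` and `information_gain` are hypothetical helper names implementing $IG(S, A) = E(S) - \sum_{v} \frac{|S_v|}{|S|} E(S_v)$.

```python
import math
from collections import Counter

# (Outlook, Temperature, Humidity, Wind, PlayTennis) for days D1..D14.
DAYS = [
    ("Sunny", "Hot", "High", "Weak", "No"),
    ("Sunny", "Hot", "High", "Strong", "No"),
    ("Overcast", "Hot", "High", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Strong", "No"),
    ("Overcast", "Cool", "Normal", "Strong", "Yes"),
    ("Sunny", "Mild", "High", "Weak", "No"),
    ("Sunny", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "Normal", "Weak", "Yes"),
    ("Sunny", "Mild", "Normal", "Strong", "Yes"),
    ("Overcast", "Mild", "High", "Strong", "Yes"),
    ("Overcast", "Hot", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Strong", "No"),
]
ATTRIBUTES = ["Outlook", "Temperature", "Humidity", "Wind"]


def entropy(labels):
    """Entropy (bits) of the class-label distribution in a list of labels."""
    n = len(labels)
    return -sum(k / n * math.log2(k / n) for k in Counter(labels).values())


def information_gain(rows, attr_index):
    """IG(S, A) = E(S) - sum over values v of |S_v|/|S| * E(S_v)."""
    gain = entropy([row[-1] for row in rows])
    for value in {row[attr_index] for row in rows}:
        subset = [row[-1] for row in rows if row[attr_index] == value]
        gain -= len(subset) / len(rows) * entropy(subset)
    return gain


if __name__ == "__main__":
    for i, name in enumerate(ATTRIBUTES):
        print(f"IG(S, {name}) = {information_gain(DAYS, i):.3f}")
```

The printed gains agree with the slide to within 0.001; the slide rounds intermediate entropies, which is why Outlook prints as 0.247 rather than 0.246. Outlook has the highest gain, so an ID3-style decision tree would split on it first.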

Chi Square

- Chi-square (χ²)
  - measures the lack of independence between a feature and a class
  - a way of measuring the degree to which two patterns (expected & observed) differ:
    $$\chi^2 = \sum \frac{(\text{observed freq} - \text{expected freq})^2}{\text{expected freq}}$$
- Null hypothesis
  - feature & class are independent
- Expected frequencies ($\bar{t}$ = feature absent, $\bar{C}$ = not in the class)

| Feature (t) / Class (C) | Y | N | Total |
|---|---|---|---|
| Y | A | B | A+B |
| N | C | D | C+D |
| Total | A+C | B+D | N |

$$E(A) = N\,p(t)\,p(C) = N \cdot \frac{A+B}{N} \cdot \frac{A+C}{N} = \frac{(A+B)(A+C)}{N}$$

$$E(B) = N\,p(t)\,p(\bar{C}) = N \cdot \frac{A+B}{N} \cdot \frac{B+D}{N} = \frac{(A+B)(B+D)}{N}$$

$$E(C) = N\,p(\bar{t})\,p(C) = N \cdot \frac{C+D}{N} \cdot \frac{A+C}{N} = \frac{(C+D)(A+C)}{N}$$

$$E(D) = N\,p(\bar{t})\,p(\bar{C}) = N \cdot \frac{C+D}{N} \cdot \frac{B+D}{N} = \frac{(C+D)(B+D)}{N}$$
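The expected frequencies and the statistic can be computed straight from the four cell counts. This Python sketch also checks the result against the well-known shortcut $\chi^2 = \frac{N(AD - BC)^2}{(A+B)(C+D)(A+C)(B+D)}$ for a 2x2 table; the counts are made up, the function names are hypothetical, and the functions assume no row or column total is zero.

```python
def expected_counts(a, b, c, d):
    """Expected cell counts under the null hypothesis that feature and class are independent."""
    n = a + b + c + d
    return (
        (a + b) * (a + c) / n,  # E(A)
        (a + b) * (b + d) / n,  # E(B)
        (c + d) * (a + c) / n,  # E(C)
        (c + d) * (b + d) / n,  # E(D)
    )


def chi_square(a, b, c, d):
    """Sum of (observed - expected)^2 / expected over the four cells."""
    observed = (a, b, c, d)
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected_counts(a, b, c, d)))


def chi_square_closed_form(a, b, c, d):
    """Equivalent shortcut formula for a 2x2 contingency table."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


if __name__ == "__main__":
    print(expected_counts(40, 10, 10, 40))
    print(chi_square(40, 10, 10, 40), chi_square_closed_form(40, 10, 10, 40))
    print(chi_square(25, 25, 25, 25))
```

For A = 40, B = 10, C = 10, D = 40 every expected count is 25 and χ² = 36, whereas A = B = C = D = 25 gives χ² = 0, exactly what the null hypothesis predicts.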


Decision Tree

| Name | Cough | Pain | Classification |
|---|---|---|---|
| Mary | no | throat | flu |
| Fred | no | abdomen | appendicitis |
| Julie | yes | none | flu |
| Elvis | yes | chest | heart disease |

Other attributes in the example: Fever (yes/no) and Weight (normal, skinny, obese).
