Information Filtering Recommender Systems Lecture for CS 410

- Slides: 22

Information Filtering & Recommender Systems (Lecture for CS 410 Text Info Systems)

ChengXiang Zhai, Department of Computer Science, University of Illinois, Urbana-Champaign

Short vs. Long Term Info Need

- Short-term information need (ad hoc retrieval)
  - “Temporary need”, e.g., info about fixing a bug
  - Information source is relatively static
  - User “pulls” information
  - Application examples: library search, Web search, …
- Long-term information need (filtering)
  - “Stable need”, e.g., your hobby
  - Information source is dynamic
  - System “pushes” information to user
  - Applications: news filter, movie recommender, …

Part I: Content-Based Filtering (Adaptive Information Filtering)

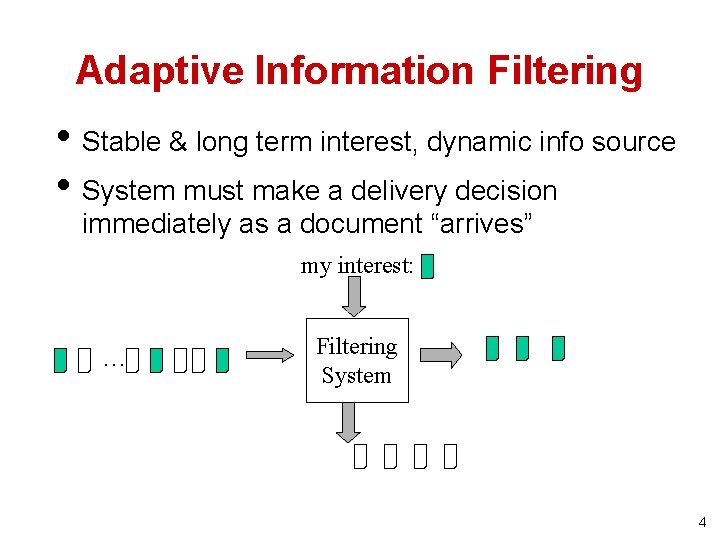

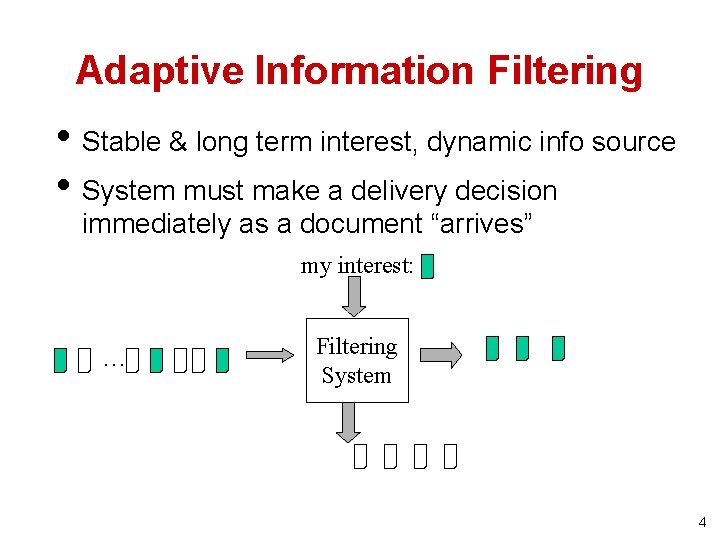

Adaptive Information Filtering

- Stable, long-term interest; dynamic info source
- System must make a delivery decision immediately as a document “arrives”

[Figure: a filtering system that holds the user's stated interest (“my interest: …”).]

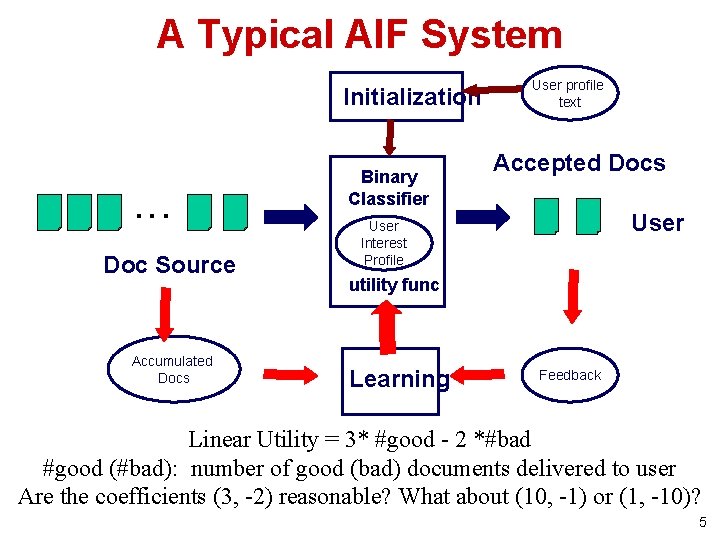

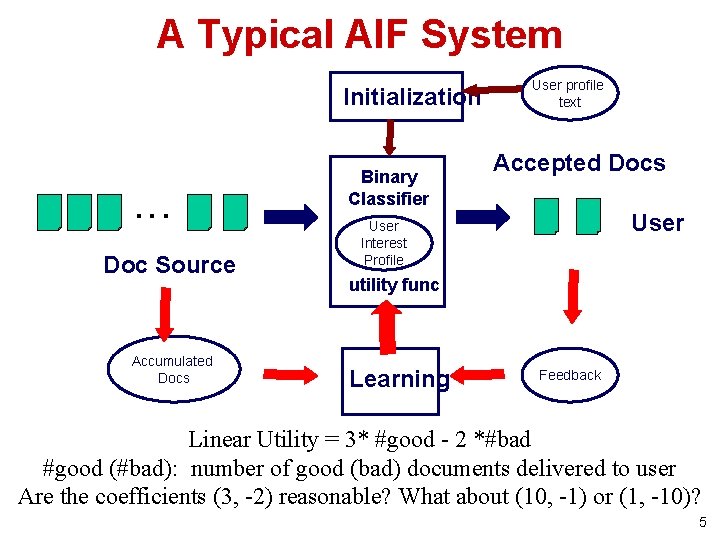

A Typical AIF System

[Figure: system diagram. Components: Doc Source, Accumulated Docs, Binary Classifier, User Interest Profile (initialized from the user profile text), Accepted Docs, utility function, Learning, Feedback.]

- Linear utility = 3 × #good − 2 × #bad, where #good (#bad) is the number of good (bad) documents delivered to the user
- Are the coefficients (3, −2) reasonable? What about (10, −1) or (1, −10)?
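The effect of the coefficients can be checked with a few lines of arithmetic. The sketch below is illustrative only; the function names `linear_utility` and `break_even_precision` are assumptions, not part of the lecture. It computes the linear utility and, for each coefficient pair, the fraction of delivered documents that must be good for delivery to break even.

```python
def linear_utility(num_good, num_bad, good_credit=3.0, bad_penalty=2.0):
    """Credit for each good delivered document minus a penalty for each bad one."""
    return good_credit * num_good - bad_penalty * num_bad


def break_even_precision(good_credit, bad_penalty):
    """Smallest fraction of good documents at which delivering is not a net loss.

    Solves good_credit * p - bad_penalty * (1 - p) = 0 for p.
    """
    return bad_penalty / (good_credit + bad_penalty)


# Compare the three coefficient pairs raised on the slide.
for good_credit, bad_penalty in [(3, 2), (10, 1), (1, 10)]:
    p = break_even_precision(good_credit, bad_penalty)
    print(f"({good_credit}, -{bad_penalty}): break-even precision = {p:.2f}")
```

Under (10, −1) the filter can deliver many bad documents and still come out ahead, so it favors coverage; under (1, −10) nearly every delivered document must be good, so it favors precision.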

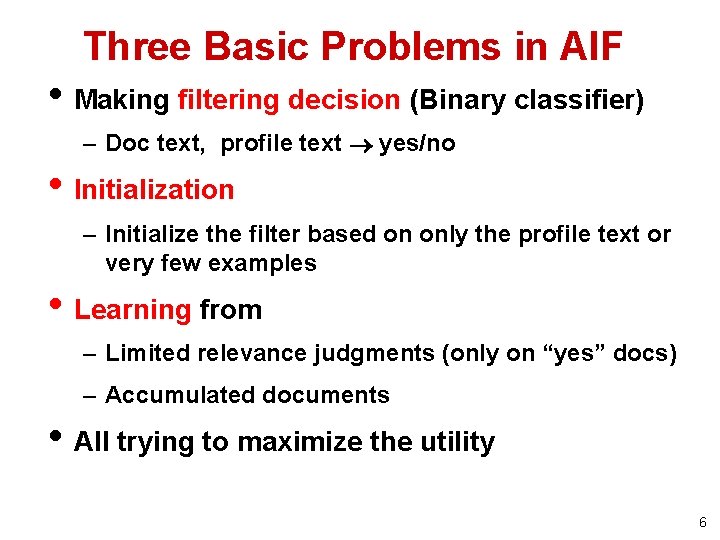

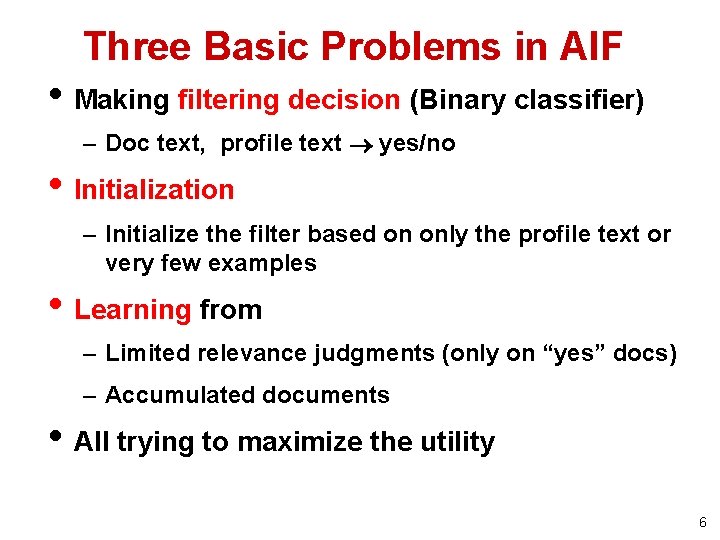

Three Basic Problems in AIF

- Making the filtering decision (binary classifier)
  - Doc text, profile text → yes/no
- Initialization
  - Initialize the filter based on only the profile text or very few examples
- Learning from
  - Limited relevance judgments (only on “yes” docs)
  - Accumulated documents
- All three try to maximize the utility

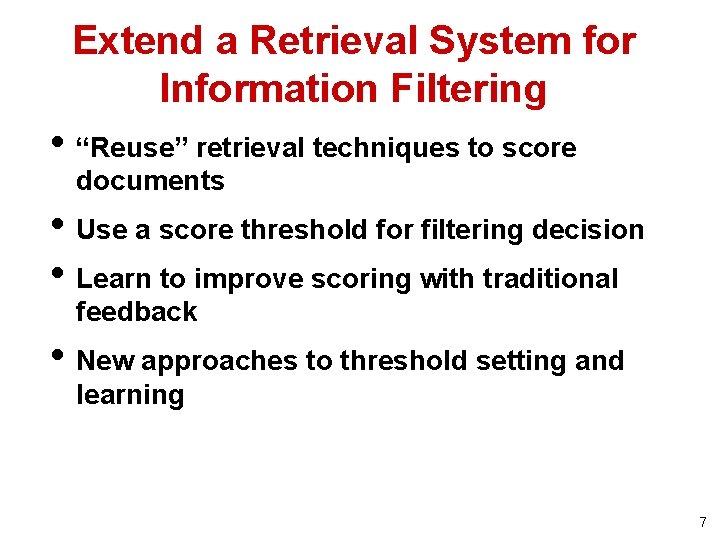

Extend a Retrieval System for Information Filtering

- “Reuse” retrieval techniques to score documents
- Use a score threshold for the filtering decision
- Learn to improve scoring with traditional feedback
- New approaches to threshold setting and learning

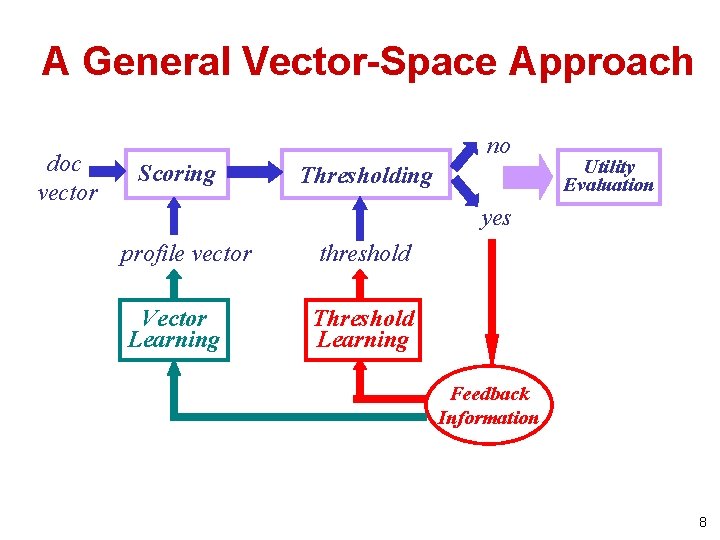

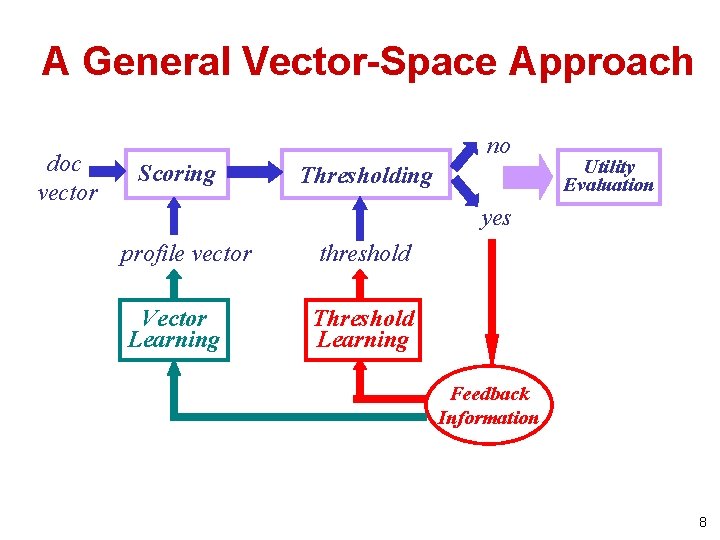

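A General Vector-Space Approach

[Figure: pipeline diagram. The doc vector and the profile vector feed Scoring; the score and the threshold feed Thresholding, which outputs yes or no; accepted (“yes”) documents go to Utility Evaluation; the resulting feedback information drives Vector Learning and Threshold Learning.]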
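The scoring and thresholding steps of this pipeline can be sketched as follows. The slide does not fix a scoring function, so cosine similarity over sparse term-weight dictionaries is an assumption here, as are the names `cosine_score` and `filter_decision` and the example weights.

```python
import math


def cosine_score(doc_vec, profile_vec):
    """Cosine similarity between two sparse vectors stored as {term: weight}."""
    dot = sum(w * profile_vec.get(term, 0.0) for term, w in doc_vec.items())
    norm_doc = math.sqrt(sum(w * w for w in doc_vec.values()))
    norm_profile = math.sqrt(sum(w * w for w in profile_vec.values()))
    if norm_doc == 0.0 or norm_profile == 0.0:
        return 0.0
    return dot / (norm_doc * norm_profile)


def filter_decision(doc_vec, profile_vec, threshold):
    """Deliver the document (True) only if its score reaches the threshold."""
    return cosine_score(doc_vec, profile_vec) >= threshold


# Hypothetical profile and incoming documents.
profile = {"filtering": 1.0, "recommender": 1.0}
on_topic = {"filtering": 2.0, "recommender": 1.0, "systems": 1.0}
off_topic = {"football": 1.0, "score": 1.0}

print(filter_decision(on_topic, profile, threshold=0.5))
print(filter_decision(off_topic, profile, threshold=0.5))
```

Vector learning and threshold learning would then adjust `profile` and `threshold` from feedback on the delivered documents; they are left out of this sketch.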

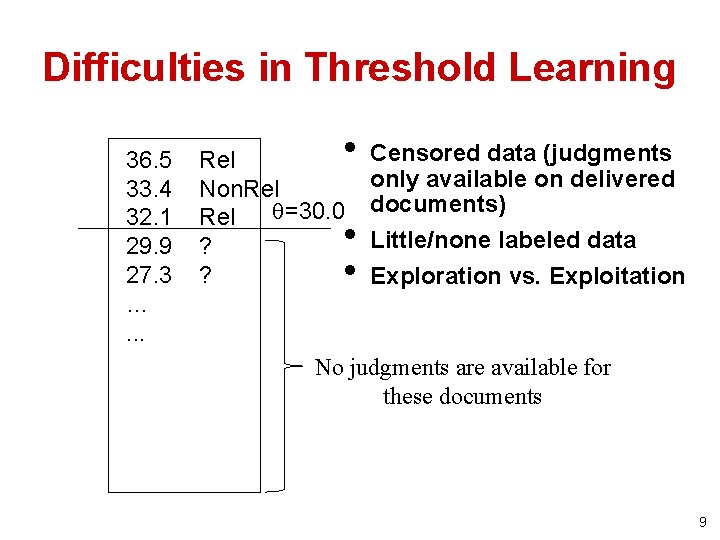

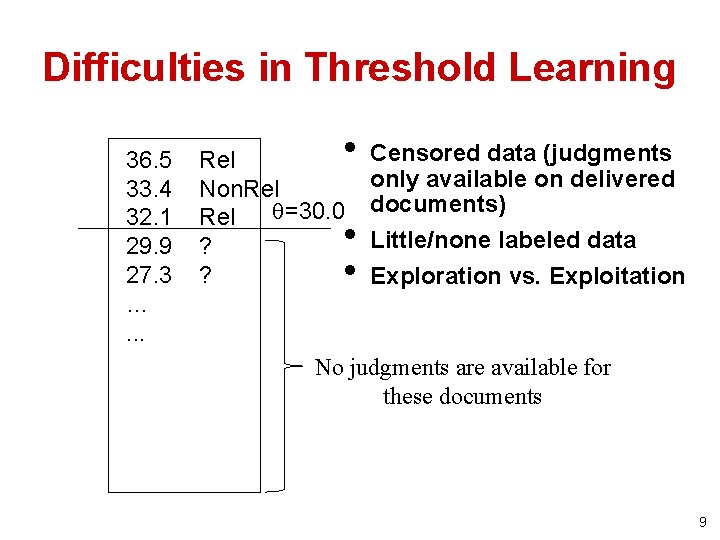

Difficulties in Threshold Learning

[Figure: documents ranked by score with threshold θ = 30.0. Above the threshold: 36.5 (Rel), 33.4 (NonRel), 32.1 (Rel). Below the threshold: 29.9 (?), 27.3 (?), … No judgments are available for the documents below the threshold.]

- Censored data (judgments only available on delivered documents)
- Little or no labeled data
- Exploration vs. exploitation

Empirical Utility Optimization

- Basic idea
  - Compute the utility on the training data for each candidate score threshold
  - Choose the threshold that gives the maximum utility on the training data set
- Difficulty: biased training sample!
  - We can only get an upper bound for the true optimal threshold
  - Could a discarded item possibly be interesting to the user?
- Solution
  - Heuristic adjustment (lowering) of the threshold
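A minimal sketch of the basic idea, assuming a linear utility with the (3, −2) coefficients, distinct document scores, and judgments only on delivered documents. The function name `best_threshold` and the sample data are illustrative.

```python
def best_threshold(judged, good_credit=3.0, bad_penalty=2.0):
    """Pick the score threshold that maximizes linear utility on judged documents.

    judged: list of (score, is_relevant) pairs for documents that were delivered.
    Returns (threshold, utility). Delivering nothing is the baseline, with
    threshold +infinity and utility 0.
    """
    ranked = sorted(judged, key=lambda pair: pair[0], reverse=True)
    best_theta, best_utility = float("inf"), 0.0
    utility = 0.0
    for score, is_relevant in ranked:
        # Utility of delivering every document scored at or above `score`.
        utility += good_credit if is_relevant else -bad_penalty
        if utility > best_utility:
            best_theta, best_utility = score, utility
    return best_theta, best_utility


# Hypothetical judged sample: (score, relevant?)
sample = [(36.5, True), (33.4, False), (32.1, True)]
print(best_threshold(sample))
```

Because the sample contains only documents that passed the earlier threshold, the result cannot fall below the lowest delivered score, which is why it acts as an upper bound on the true optimal threshold.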

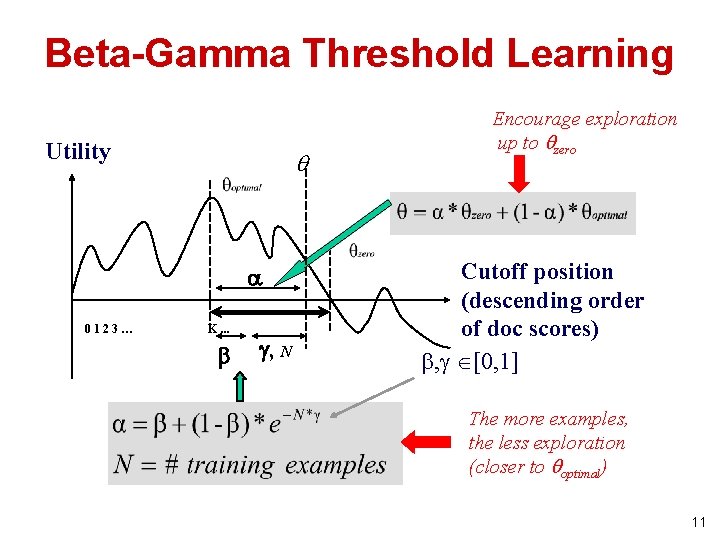

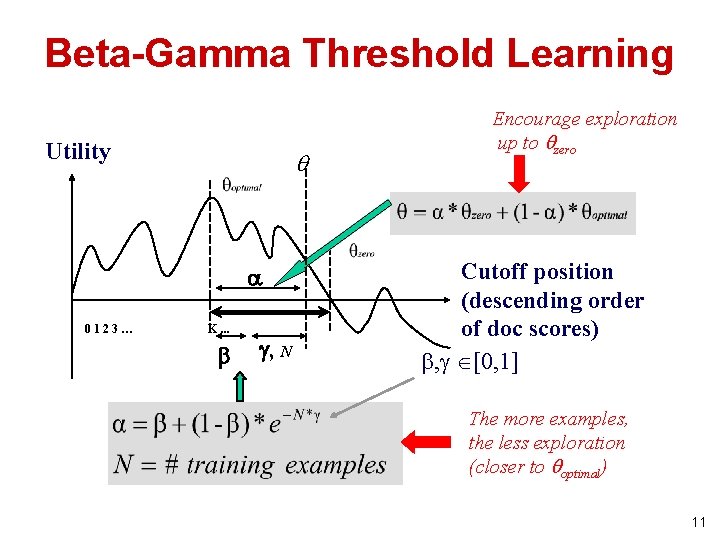

Beta-Gamma Threshold Learning

[Figure: utility plotted against cutoff position (0, 1, 2, 3, …, K, …, N), with documents in descending order of score.]

- Encourage exploration up to zero utility
- β, γ ∈ [0, 1]
- The more examples, the less exploration (closer to optimal)
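A minimal sketch of the interpolation, assuming the commonly cited form θ = α·θ_zero + (1 − α)·θ_optimal with α = β + (1 − β)·e^(−N·γ), where θ_optimal is the threshold that maximizes training utility, θ_zero is the lower threshold at which training utility drops to zero, and N is the number of training examples. The function name and the default values of `beta` and `gamma` are illustrative assumptions.

```python
import math


def beta_gamma_threshold(theta_optimal, theta_zero, num_examples,
                         beta=0.1, gamma=0.05):
    """Interpolate between the zero-utility and the utility-maximizing threshold.

    With few examples alpha is close to 1, so the threshold sits near
    theta_zero (more exploration). As num_examples grows, alpha decays
    toward beta and the threshold moves toward theta_optimal.
    """
    alpha = beta + (1.0 - beta) * math.exp(-num_examples * gamma)
    return alpha * theta_zero + (1.0 - alpha) * theta_optimal


# Hypothetical thresholds: the filter explores less as examples accumulate.
for n in (0, 10, 100):
    print(n, round(beta_gamma_threshold(32.1, 27.3, n), 2))
```

Here β sets how much exploration remains even with many examples, and γ sets how quickly exploration decays as examples accumulate.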

Beta-Gamma Threshold Learning (cont.)

- Pros
  - Explicitly addresses the exploration-exploitation tradeoff (“safe” exploration)
  - Arbitrary utility (with appropriate lower bound)
  - Empirically effective
- Cons
  - Purely heuristic
  - Zero-utility lower bound is often too conservative

Part II: Collaborative Filtering

What is Collaborative Filtering (CF)?

- Making filtering decisions for an individual user based on the judgments of other users
- Inferring an individual’s interests/preferences from those of other similar users
- General idea
  - Given a user u, find similar users {u1, …, um}
  - Predict u’s preferences based on the preferences of u1, …, um

CF: Assumptions

- Users with a common interest will have similar preferences
- Users with similar preferences probably share the same interest
- Examples
  - “interest is IR” => “favor SIGIR papers”
  - “favor SIGIR papers” => “interest is IR”
- A sufficiently large number of user preferences is available

CF: Intuitions

- User similarity (Kevin Chang vs. Jiawei Han)
  - If Kevin liked the paper, Jiawei will like the paper
  - ? If Kevin liked the movie, Jiawei will like the movie
  - Suppose Kevin and Jiawei viewed similar movies in the past six months …
- Item similarity
  - Since 90% of those who liked Star Wars also liked Independence Day, and you liked Star Wars
  - You may also like Independence Day

The content of items “didn’t matter”!

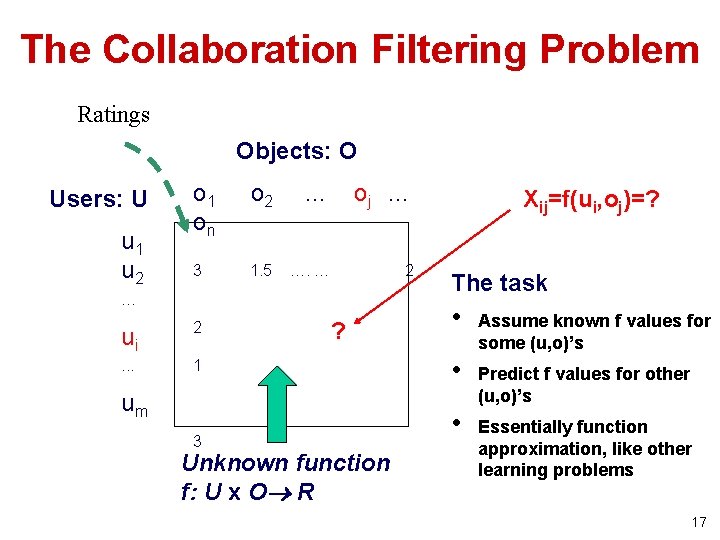

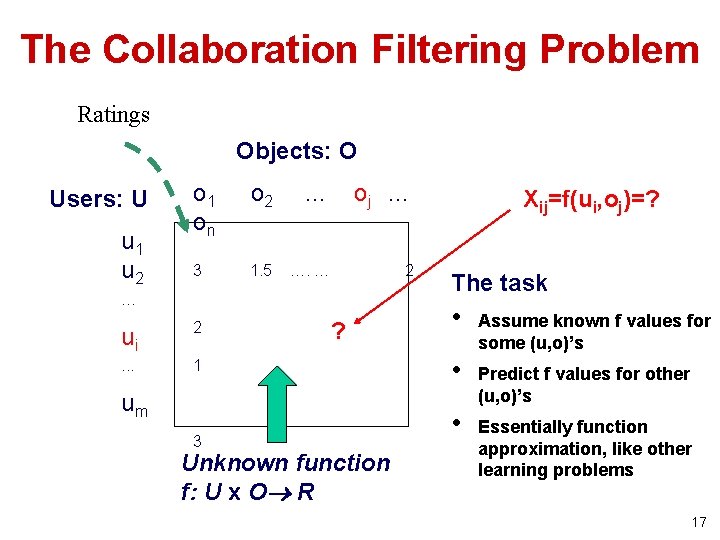

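The Collaborative Filtering Problem

[Figure: a ratings matrix with users U = {u1, u2, …, ui, …, um} as rows and objects O = {o1, o2, …, oj, …, on} as columns. A few cells hold known ratings (e.g., 3, 1.5, 2, 1); the rest are empty, and the cell for (ui, oj) is marked “?”.]

Unknown function f: U × O → R, with Xij = f(ui, oj) = ?

The task

- Assume known f values for some (u, o) pairs
- Predict f values for the other (u, o) pairs
- Essentially function approximation, like other learning problems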

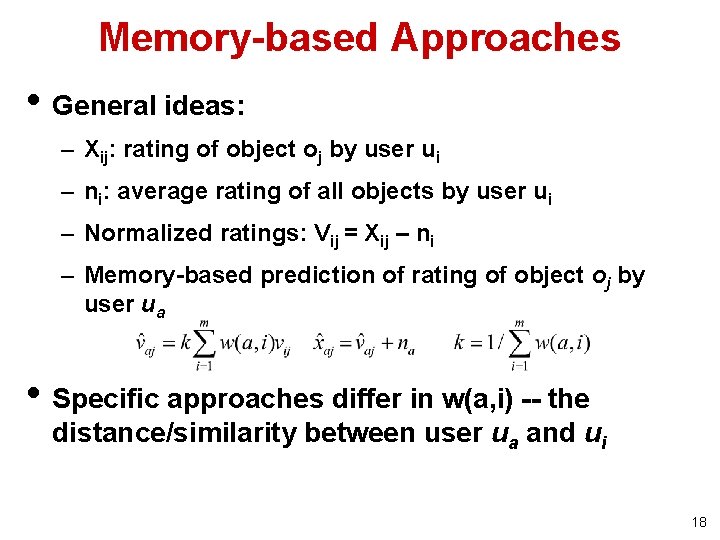

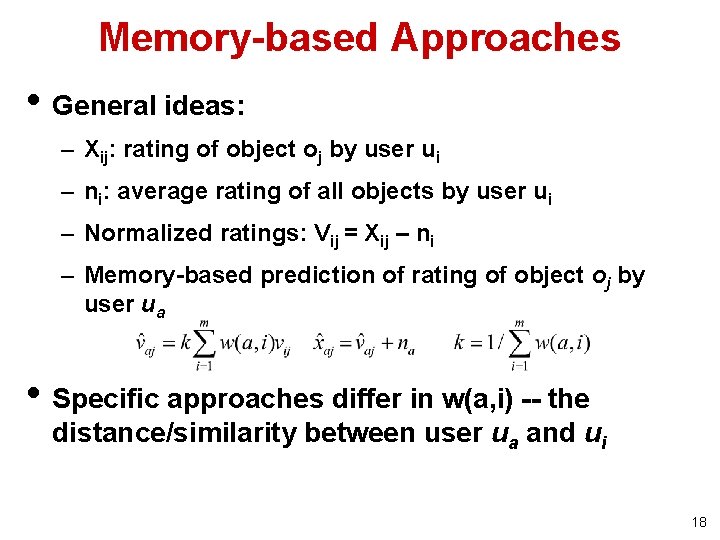

Memory-based Approaches

- General ideas:
  - Xij: rating of object oj by user ui
  - ni: average rating of all objects by user ui
  - Normalized ratings: Vij = Xij − ni
  - Memory-based prediction of the rating of object oj by user ua
- Specific approaches differ in w(a, i), the distance/similarity between users ua and ui
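A minimal sketch of memory-based prediction, assuming the standard weighted-deviation form: the predicted normalized rating is V̂aj = k · Σi w(a, i) · Vij with k = 1 / Σi |w(a, i)|, and the predicted rating is X̂aj = V̂aj + na. The dictionary layout, the function names, and the constant similarity used in the example are illustrative assumptions.

```python
def mean_rating(ratings):
    """n_i: average of all ratings given by one user ({object: rating})."""
    return sum(ratings.values()) / len(ratings)


def predict_rating(active, obj, all_ratings, similarity):
    """Predict the rating of `obj` by user `active`.

    all_ratings: {user: {object: rating}}
    similarity:  function (ratings_a, ratings_i) -> weight w(a, i)
    """
    n_a = mean_rating(all_ratings[active])
    weighted_sum, weight_norm = 0.0, 0.0
    for user, ratings in all_ratings.items():
        if user == active or obj not in ratings:
            continue  # only other users who rated the object contribute
        w = similarity(all_ratings[active], ratings)
        weighted_sum += w * (ratings[obj] - mean_rating(ratings))  # w * V_ij
        weight_norm += abs(w)
    if weight_norm == 0.0:
        return n_a  # no usable neighbors: fall back to the user's own average
    return n_a + weighted_sum / weight_norm


# Hypothetical ratings; every neighbor gets weight 1.0 for simplicity.
ratings = {
    "ua": {"o1": 4, "o2": 2},
    "u1": {"o1": 5, "o2": 3, "o3": 4},
    "u2": {"o1": 1, "o2": 3, "o3": 5},
}
print(predict_rating("ua", "o3", ratings, lambda a, i: 1.0))
```

Working with deviations from each user's mean corrects for users who rate systematically high or low; the choice of `similarity` is what distinguishes specific memory-based methods.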

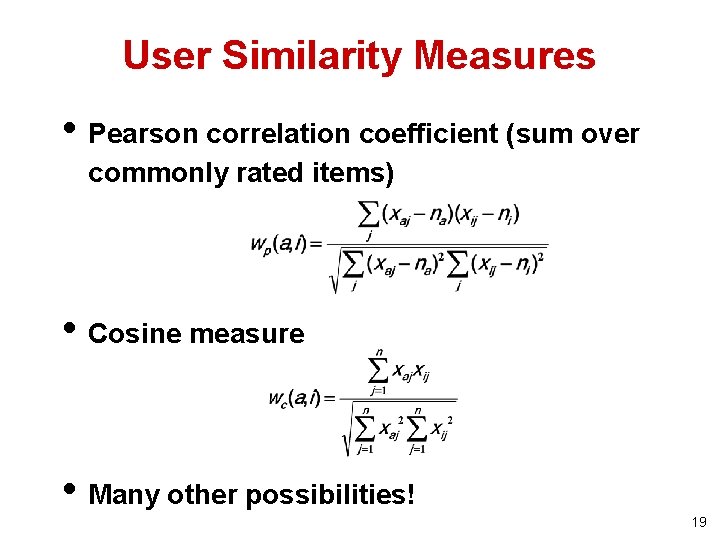

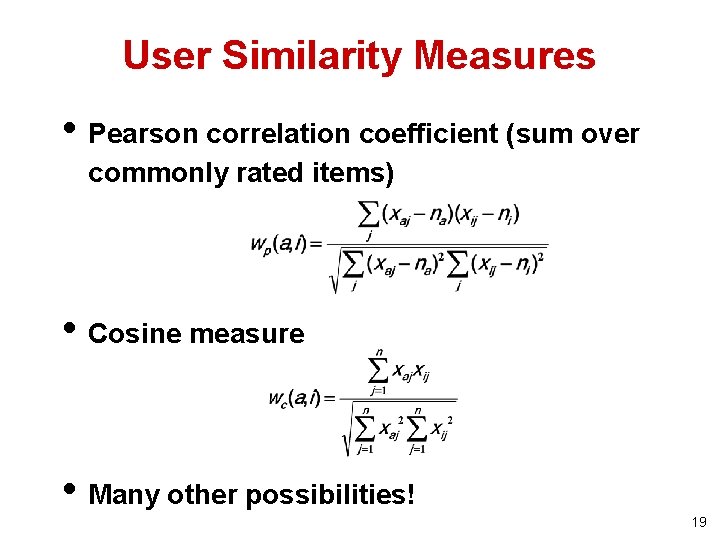

User Similarity Measures

- Pearson correlation coefficient (sum over commonly rated items)
- Cosine measure
- Many other possibilities!
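Both measures can be sketched over per-user rating dictionaries. The details below are assumptions rather than the lecture's exact definitions: the Pearson sums run over commonly rated items and use each user's overall average ni, and the cosine measure treats unrated items as zero. The function names are illustrative.

```python
import math


def pearson(ratings_a, ratings_i):
    """Pearson correlation of two users, summed over commonly rated items."""
    common = set(ratings_a) & set(ratings_i)
    if not common:
        return 0.0
    n_a = sum(ratings_a.values()) / len(ratings_a)
    n_i = sum(ratings_i.values()) / len(ratings_i)
    num = sum((ratings_a[o] - n_a) * (ratings_i[o] - n_i) for o in common)
    den = math.sqrt(sum((ratings_a[o] - n_a) ** 2 for o in common)
                    * sum((ratings_i[o] - n_i) ** 2 for o in common))
    return num / den if den > 0 else 0.0


def cosine(ratings_a, ratings_i):
    """Cosine of the two rating vectors, with unrated items counted as 0."""
    common = set(ratings_a) & set(ratings_i)
    dot = sum(ratings_a[o] * ratings_i[o] for o in common)
    norm_a = math.sqrt(sum(x * x for x in ratings_a.values()))
    norm_i = math.sqrt(sum(x * x for x in ratings_i.values()))
    return dot / (norm_a * norm_i) if norm_a > 0 and norm_i > 0 else 0.0


# Hypothetical users: b rates in step with a, c rates in the opposite order.
a = {"o1": 1, "o2": 2, "o3": 3}
b = {"o1": 2, "o2": 4, "o3": 6}
c = {"o1": 3, "o2": 2, "o3": 1}
print(pearson(a, b), pearson(a, c), cosine(a, b))
```

Pearson can be negative, which lets dissimilar users count against an item; cosine on raw non-negative ratings cannot.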

Improving User Similarity Measures

- Dealing with missing values: set to default ratings (e.g., average ratings)
- Inverse User Frequency (IUF): similar to IDF
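Both ideas can be sketched briefly. By analogy with IDF, the sketch assumes IUF(j) = log(M / mj), where M is the total number of users and mj is the number of users who rated object j; the default rating is assumed to be the user's own average. The function names are illustrative.

```python
import math


def inverse_user_frequency(all_ratings):
    """IUF per object: log(total users / users who rated the object)."""
    num_users = len(all_ratings)
    counts = {}
    for ratings in all_ratings.values():
        for obj in ratings:
            counts[obj] = counts.get(obj, 0) + 1
    return {obj: math.log(num_users / count) for obj, count in counts.items()}


def fill_with_default(ratings, objects, default=None):
    """Return ratings over `objects`, filling gaps with a default rating.

    If no default is given, the user's own average rating is used.
    """
    if default is None:
        default = sum(ratings.values()) / len(ratings)
    return {obj: ratings.get(obj, default) for obj in objects}


# Hypothetical ratings: everyone rated o1, only u1 rated o2.
ratings = {
    "u1": {"o1": 5, "o2": 3},
    "u2": {"o1": 4},
    "u3": {"o1": 2},
    "u4": {"o1": 1},
}
print(inverse_user_frequency(ratings))
print(fill_with_default(ratings["u1"], ["o1", "o2", "o3"]))
```

An object that everyone has rated gets an IUF of zero, so agreement on it contributes nothing to similarity, while agreement on rarely rated objects counts for more.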

Summary

- Filtering is “easy”
  - The user’s expectation is low
  - Any recommendation is better than none
  - This makes it practically useful
- Filtering is “hard”
  - Must make a binary decision, though ranking is also possible
  - Data sparseness (limited feedback information)
  - “Cold start” (little information about users at the beginning)

What you should know

- Filtering, retrieval, and browsing are three basic ways of accessing information
- How to extend a retrieval system to do adaptive filtering (adding threshold learning)
- What the exploration-exploitation tradeoff is
- How memory-based collaborative filtering works