Inference in Propositional Logic


Inference is the process of building a proof of a sentence. In other words, it is an implementation of the entailment relation between sentences. Inference is carried out by inference rules, which allow one formula to be inferred from a set of other formulas. For example, A |-- B means that B can be derived from A. An inference procedure is sound iff its inference rules are sound, and an inference rule is sound iff its conclusion is true whenever its premises are true.

PL inference rules

• Modus ponens: if sentence A and implication A => B hold, then B also holds, i.e. (A, A => B) |-- B. Example: let A mean “the lights are off” and A => B mean “if the lights are off, then there is no one in the office”. Then B, “there is no one in the office”, follows.
• AND-elimination: if a conjunction A1 & A2 & ... & An holds, then each of its conjuncts also holds, i.e. A1 & A2 & ... & An |-- Ai.
• AND-introduction: if each sentence in a list holds, then their conjunction also holds, i.e. A1, A2, ..., An |-- (A1 & A2 & ... & An).
• OR-introduction: if Ai holds, then any disjunction containing Ai also holds, i.e. Ai |-- (A1 v ... v Ai v ... v An).
• Double-negation elimination: if the negation of not A holds, then A holds, i.e. not (not A) |-- A.

PL inference rules (cont.)

• Unit resolution: (A v B), not B |-- A. Since (A v B) is equivalent to (not B => A), unit resolution is a variant of modus ponens.
• Resolution: (A v B), (not B v C) |-- (A v C). Since (A v B) is equivalent to (not A => B) and (not B v C) is equivalent to (B => C), chaining the two implications eliminates the intermediate B and gives (not A => C), which is equivalent to (A v C).

The soundness of each of these rules can be checked by means of the truth table method. Once a rule has been shown to be sound, it can be used to build proofs. A proof is a sequence of applications of inference rules, starting with the sentences initially contained in the KB.
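
The truth table method is easy to mechanize. The following minimal Python sketch checks each rule on small instances. It uses two or three variables rather than the general n-ary form, so AND-elimination is tested as A1 & A2 |-- A1, for example. The encoding of rules as Python truth functions and the names `RULES` and `is_sound` are our own. An invalid pattern, affirming the consequent, is included for contrast.

```python
from itertools import product

def implies(p, q):
    """Truth function of the implication p => q."""
    return (not p) or q

# rule name -> (number of variables, list of premises, conclusion);
# premises and conclusion are truth functions of the variables.
RULES = {
    "modus ponens": (2, [lambda a, b: a, lambda a, b: implies(a, b)], lambda a, b: b),
    "AND-elimination": (2, [lambda a, b: a and b], lambda a, b: a),
    "AND-introduction": (2, [lambda a, b: a, lambda a, b: b], lambda a, b: a and b),
    "OR-introduction": (2, [lambda a, b: a], lambda a, b: a or b),
    "double-negation elimination": (1, [lambda a: not (not a)], lambda a: a),
    "unit resolution": (2, [lambda a, b: a or b, lambda a, b: not b], lambda a, b: a),
    "resolution": (3, [lambda a, b, c: a or b, lambda a, b, c: (not b) or c],
                   lambda a, b, c: a or c),
    # Not a valid rule, included for contrast: B, A => B |-- A
    "affirming the consequent": (2, [lambda a, b: b, lambda a, b: implies(a, b)],
                                 lambda a, b: a),
}

def is_sound(n_vars, premises, conclusion):
    """Sound iff the conclusion is true in every truth-table row where all premises are true."""
    for row in product((False, True), repeat=n_vars):
        if all(p(*row) for p in premises) and not conclusion(*row):
            return False
    return True

for name, (n, prem, concl) in RULES.items():
    print(f"{name}: {'sound' if is_sound(n, prem, concl) else 'NOT sound'}")
```

Every rule is reported as sound except affirming the consequent. That pattern fails on the row where A is false and B is true.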

Example (adapted from Dean, Allen & Aloimonos, “Artificial Intelligence: Theory and Practice”)

Bob, Lisa, Jim and Mary got their dormitory rooms according to the results of a housing lottery. Determine how they were ranked relative to each other, given the following facts:
1. Lisa is not next to Bob in the ranking.
2. Jim is ranked immediately ahead of a biology major.
3. Bob is ranked immediately ahead of Jim.
4. Either Lisa or Mary is a biology major.
5. Either Lisa or Mary is ranked first.

We must start by deciding how to represent the problem in PL. Let the following two be the only types of propositions that we will use:
• X-ahead-of-Y, meaning “X is immediately ahead of Y”
• X-bio-major, meaning “X is a biology major”
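
Before working through the proof, it can help to confirm the expected answer by brute force. The Python sketch below is an illustration and not part of the method on these slides. It enumerates every ranking and every assignment of biology majors, then keeps those that satisfy facts 1 to 5. Using permutations silently encodes the background knowledge that the ranking is a strict linear order, which the logical proof has to state explicitly. The helper names are illustrative.

```python
from itertools import permutations, product

STUDENTS = ("Bob", "Lisa", "Jim", "Mary")

def ahead(order, x, y):
    """X-ahead-of-Y: x is ranked immediately ahead of y in the tuple `order`."""
    return order.index(x) + 1 == order.index(y)

def satisfies(order, bio):
    """Check the five facts; `bio` maps each student to the truth value of X-bio-major."""
    return (
        not ahead(order, "Lisa", "Bob") and not ahead(order, "Bob", "Lisa")   # fact 1
        and any(ahead(order, "Jim", s) and bio[s] for s in STUDENTS)         # fact 2
        and ahead(order, "Bob", "Jim")                                        # fact 3
        and (bio["Lisa"] or bio["Mary"])                                      # fact 4
        and order[0] in ("Lisa", "Mary")                                      # fact 5
    )

models = [
    (order, bio)
    for order in permutations(STUDENTS)
    for bio in (dict(zip(STUDENTS, flags)) for flags in product((False, True), repeat=4))
    if satisfies(order, bio)
]
rankings = {order for order, _ in models}
print(rankings)                               # {('Mary', 'Bob', 'Jim', 'Lisa')}
print(all(bio["Lisa"] for _, bio in models))  # True: Lisa must be a biology major
```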

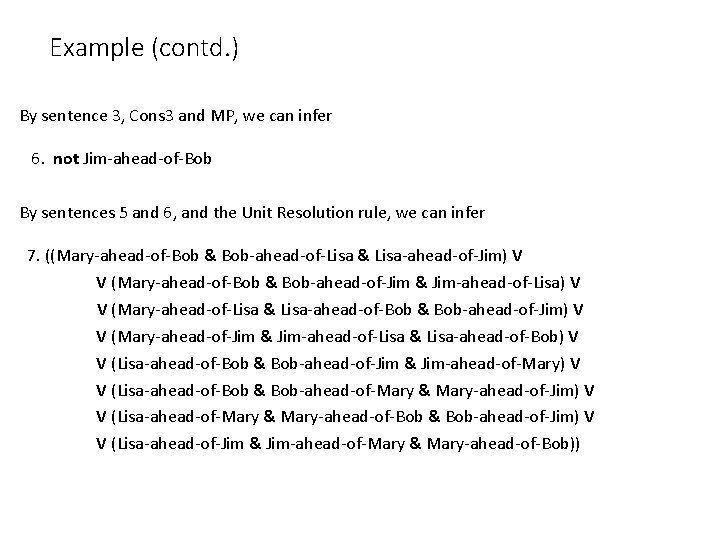

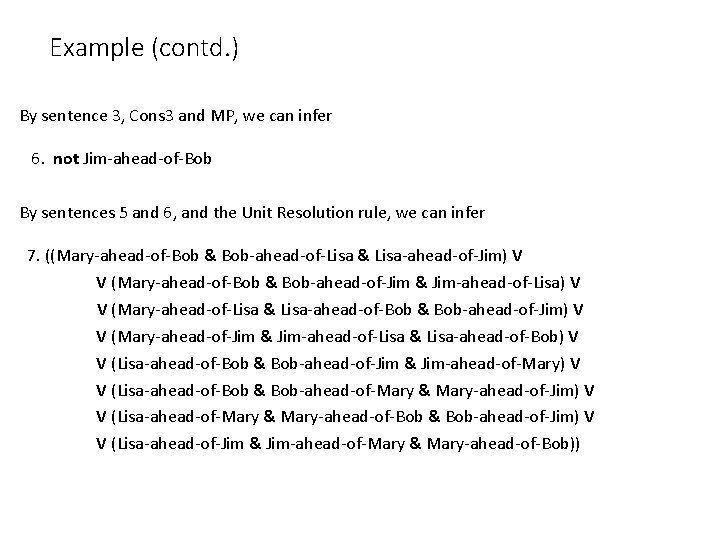

Statements representing the initial KB:
1. (not Lisa-ahead-of-Bob & not Bob-ahead-of-Lisa)
2. ((Jim-ahead-of-Mary & Mary-bio-major) V (Jim-ahead-of-Bob & Bob-bio-major) V (Jim-ahead-of-Lisa & Lisa-bio-major))
3. Bob-ahead-of-Jim
4. Mary-bio-major V Lisa-bio-major
5. ((Mary-ahead-of-Bob & Bob-ahead-of-Lisa & Lisa-ahead-of-Jim) V
   (Mary-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Lisa) V
   (Mary-ahead-of-Lisa & Lisa-ahead-of-Bob & Bob-ahead-of-Jim) V
   (Mary-ahead-of-Lisa & Lisa-ahead-of-Jim & Jim-ahead-of-Bob) V
   (Mary-ahead-of-Jim & Jim-ahead-of-Lisa & Lisa-ahead-of-Bob) V
   (Mary-ahead-of-Jim & Jim-ahead-of-Bob & Bob-ahead-of-Lisa) V
   (Lisa-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Mary) V
   (Lisa-ahead-of-Bob & Bob-ahead-of-Mary & Mary-ahead-of-Jim) V
   (Lisa-ahead-of-Mary & Mary-ahead-of-Jim & Jim-ahead-of-Bob) V
   (Lisa-ahead-of-Mary & Mary-ahead-of-Bob & Bob-ahead-of-Jim) V
   (Lisa-ahead-of-Jim & Jim-ahead-of-Bob & Bob-ahead-of-Mary) V
   (Lisa-ahead-of-Jim & Jim-ahead-of-Mary & Mary-ahead-of-Bob))

Example (contd.)

In addition to the explicit knowledge encoded by the five sentences above, the following background (or common-sense) knowledge is needed to solve the problem:
• BGRule 1: A student can be immediately ahead of at most one other student, and at most one student can be immediately ahead of any given student.
  Cons 1: Bob-ahead-of-Jim ==> not Bob-ahead-of-Lisa
  Cons 2: Bob-ahead-of-Jim ==> not Lisa-ahead-of-Jim
• BGRule 2: If X is immediately ahead of Y, then Y cannot be immediately ahead of X.
  Cons 3: Bob-ahead-of-Jim ==> not Jim-ahead-of-Bob

Example (contd.)

By sentence 3, Cons 3 and MP, we can infer
6. not Jim-ahead-of-Bob

By sentences 5 and 6 and the Unit Resolution rule (in several steps), we can infer
7. ((Mary-ahead-of-Bob & Bob-ahead-of-Lisa & Lisa-ahead-of-Jim) V
   (Mary-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Lisa) V
   (Mary-ahead-of-Lisa & Lisa-ahead-of-Bob & Bob-ahead-of-Jim) V
   (Mary-ahead-of-Jim & Jim-ahead-of-Lisa & Lisa-ahead-of-Bob) V
   (Lisa-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Mary) V
   (Lisa-ahead-of-Bob & Bob-ahead-of-Mary & Mary-ahead-of-Jim) V
   (Lisa-ahead-of-Mary & Mary-ahead-of-Bob & Bob-ahead-of-Jim) V
   (Lisa-ahead-of-Jim & Jim-ahead-of-Mary & Mary-ahead-of-Bob))

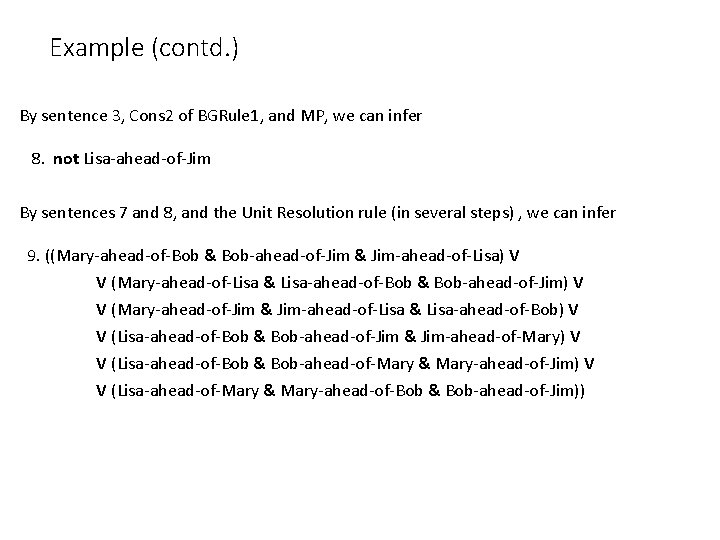

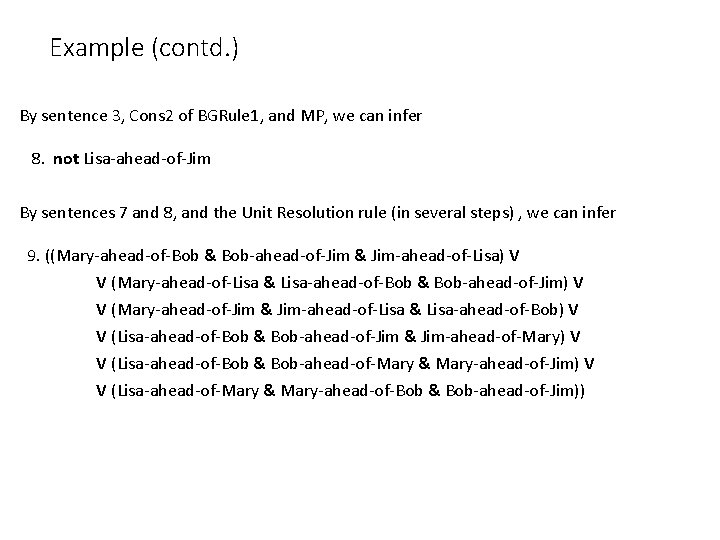

Example (contd.)

By sentence 3, Cons 2 of BGRule 1, and MP, we can infer
8. not Lisa-ahead-of-Jim

By sentences 7 and 8 and the Unit Resolution rule (in several steps), we can infer
9. ((Mary-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Lisa) V
   (Mary-ahead-of-Lisa & Lisa-ahead-of-Bob & Bob-ahead-of-Jim) V
   (Mary-ahead-of-Jim & Jim-ahead-of-Lisa & Lisa-ahead-of-Bob) V
   (Lisa-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Mary) V
   (Lisa-ahead-of-Bob & Bob-ahead-of-Mary & Mary-ahead-of-Jim) V
   (Lisa-ahead-of-Mary & Mary-ahead-of-Bob & Bob-ahead-of-Jim))

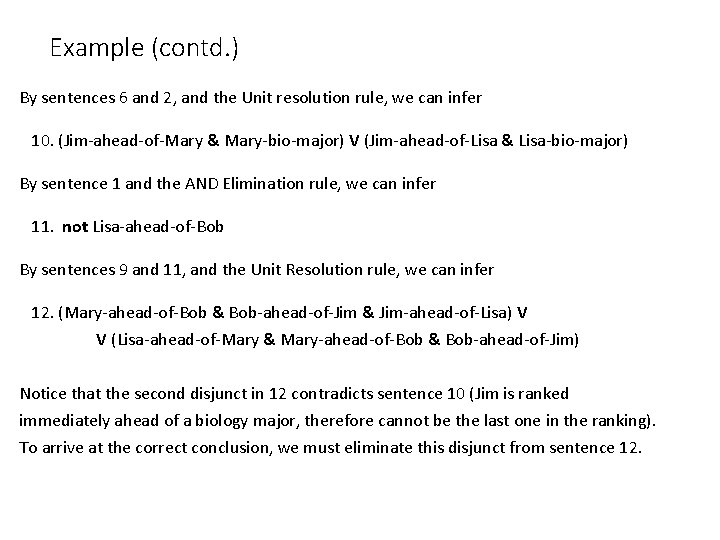

Example (contd.)

By sentences 6 and 2 and the Unit Resolution rule, we can infer
10. (Jim-ahead-of-Mary & Mary-bio-major) V (Jim-ahead-of-Lisa & Lisa-bio-major)

By sentence 1 and the AND-elimination rule, we can infer
11. not Lisa-ahead-of-Bob

By sentences 9 and 11 and the Unit Resolution rule (in several steps), we can infer
12. (Mary-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Lisa) V
    (Lisa-ahead-of-Mary & Mary-ahead-of-Bob & Bob-ahead-of-Jim)

Notice that the second disjunct in 12 contradicts sentence 10. Sentence 10 says that Jim is ranked immediately ahead of a biology major, so he cannot be last in the ranking, yet the second disjunct places him last. To arrive at the correct conclusion, we must eliminate this disjunct from sentence 12.
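
The bookkeeping behind sentences 7, 9 and 12 can be mimicked by a short Python sketch. Each disjunct of sentence 5 is treated as the set of X-ahead-of-Y atoms of one ranking. The sketch then drops every disjunct that contains an atom known to be false, which is the net effect of the repeated Unit Resolution steps. It is a simulation of the outcome, not a proof system, and the helper names `atoms` and `drop` are our own.

```python
from itertools import permutations

STUDENTS = ("Bob", "Lisa", "Jim", "Mary")

def atoms(order):
    """The X-ahead-of-Y atoms asserted by one disjunct (one ranking) of sentence 5."""
    return {f"{a}-ahead-of-{b}" for a, b in zip(order, order[1:])}

# Sentence 5: one disjunct per ranking whose first place is Lisa or Mary.
disjuncts = [p for p in permutations(STUDENTS) if p[0] in ("Lisa", "Mary")]

def drop(candidates, false_atom):
    """Remove every disjunct that contains an atom known to be false."""
    return [d for d in candidates if false_atom not in atoms(d)]

s7 = drop(disjuncts, "Jim-ahead-of-Bob")   # uses sentence 6
s9 = drop(s7, "Lisa-ahead-of-Jim")         # uses sentence 8
s12 = drop(s9, "Lisa-ahead-of-Bob")        # uses sentence 11
print(len(disjuncts), len(s7), len(s9), len(s12))  # 12 8 6 2
print(s12)
```

The four counts match the number of disjuncts in sentences 5, 7, 9 and 12.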

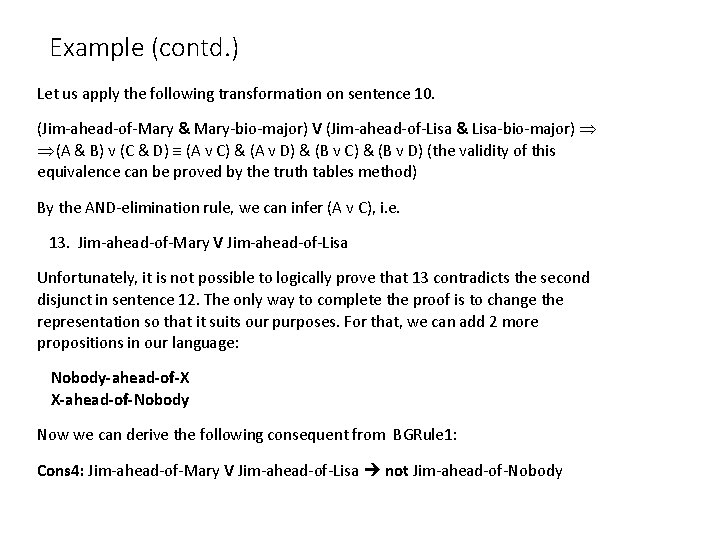

Example (contd.)

Let us apply the following transformation to sentence 10:
10. (Jim-ahead-of-Mary & Mary-bio-major) V (Jim-ahead-of-Lisa & Lisa-bio-major)

Write A = Jim-ahead-of-Mary, B = Mary-bio-major, C = Jim-ahead-of-Lisa and D = Lisa-bio-major. Sentence 10 then has the form (A & B) v (C & D), which is equivalent to
(A v C) & (A v D) & (B v C) & (B v D)
(the validity of this equivalence can be proved by the truth table method). By the AND-elimination rule, we can infer (A v C), i.e.
13. Jim-ahead-of-Mary V Jim-ahead-of-Lisa

Unfortunately, in the current representation it is not possible to prove logically that 13 contradicts the second disjunct in sentence 12, because “Jim is ranked last” is not expressed by any single proposition. The only way to complete the proof is to change the representation so that it suits our purposes. For that, we can add two more types of propositions to our language:
• Nobody-ahead-of-X (X is ranked first)
• X-ahead-of-Nobody (X is ranked last)

Now we can derive the following consequent from BGRule 1:
Cons 4: (Jim-ahead-of-Mary V Jim-ahead-of-Lisa) ==> not Jim-ahead-of-Nobody
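
The equivalence used above can be checked with the truth table method in a few lines of Python. The function names `lhs`, `rhs` and `equivalent` are illustrative.

```python
from itertools import product

def lhs(a, b, c, d):
    """(A & B) v (C & D)"""
    return (a and b) or (c and d)

def rhs(a, b, c, d):
    """(A v C) & (A v D) & (B v C) & (B v D)"""
    return (a or c) and (a or d) and (b or c) and (b or d)

def equivalent():
    """Two formulas are equivalent iff they agree on all 2**4 = 16 rows of the truth table."""
    return all(lhs(*row) == rhs(*row) for row in product((False, True), repeat=4))

print(equivalent())  # True
```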

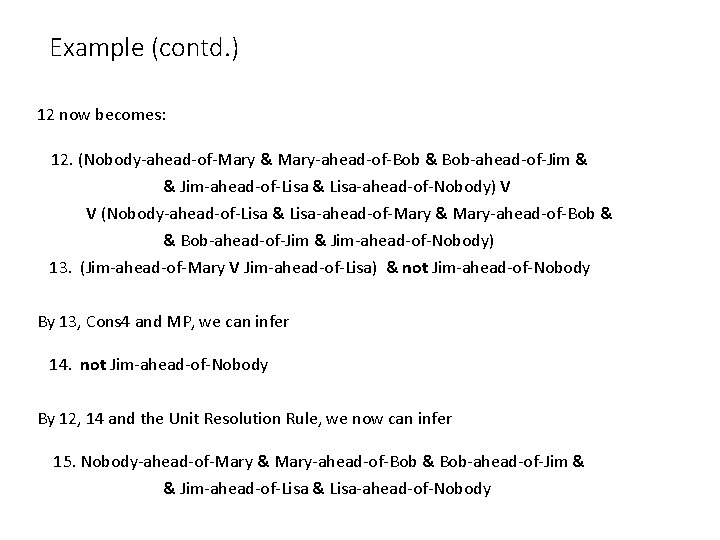

Example (contd.)

With the new propositions, 12 now becomes:
12. (Nobody-ahead-of-Mary & Mary-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Lisa & Lisa-ahead-of-Nobody) V
    (Nobody-ahead-of-Lisa & Lisa-ahead-of-Mary & Mary-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Nobody)

Sentence 13 is unchanged:
13. Jim-ahead-of-Mary V Jim-ahead-of-Lisa

By 13, Cons 4 and MP, we can infer
14. not Jim-ahead-of-Nobody

By 12, 14 and the Unit Resolution rule, we can now infer
15. Nobody-ahead-of-Mary & Mary-ahead-of-Bob & Bob-ahead-of-Jim & Jim-ahead-of-Lisa & Lisa-ahead-of-Nobody

That is, the ranking is Mary, Bob, Jim, Lisa.
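
The effect of the two new proposition types can be seen in a small Python sketch, in which the helper names are illustrative. Padding each ranking with Nobody at both ends turns “Jim is last” into the single atom Jim-ahead-of-Nobody. One known-false atom is then enough to discard the unwanted disjunct.

```python
def atoms_with_sentinels(order):
    """X-ahead-of-Y atoms of a ranking, padded with the new 'Nobody' propositions at both ends."""
    padded = ("Nobody",) + tuple(order) + ("Nobody",)
    return {f"{a}-ahead-of-{b}" for a, b in zip(padded, padded[1:])}

# The two disjuncts of sentence 12, written as rankings from first to last.
sentence12 = [("Mary", "Bob", "Jim", "Lisa"), ("Lisa", "Mary", "Bob", "Jim")]

# Sentence 14 (not Jim-ahead-of-Nobody) falsifies every disjunct in which Jim is last.
sentence15 = [o for o in sentence12 if "Jim-ahead-of-Nobody" not in atoms_with_sentinels(o)]
print(sentence15)  # [('Mary', 'Bob', 'Jim', 'Lisa')]
```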

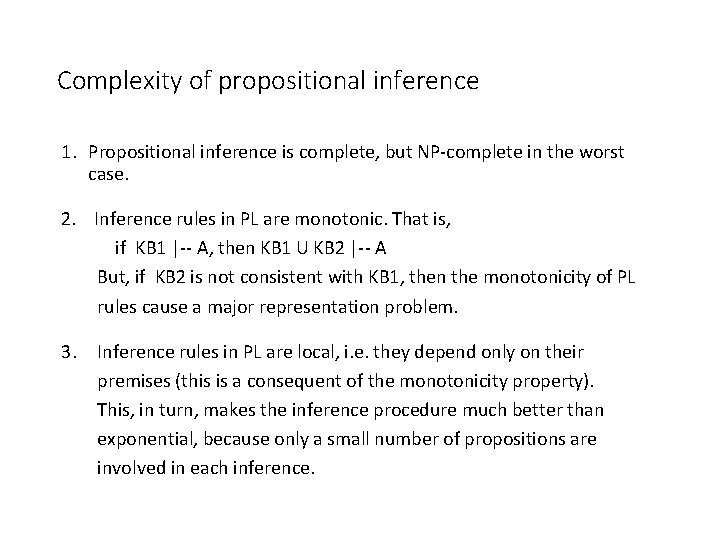

Complexity of propositional inference
1. Propositional inference is complete, but its worst-case cost is exponential: deciding entailment is co-NP-complete, since it is the complement of the NP-complete satisfiability problem.
2. Inference rules in PL are monotonic. That is, if KB1 |-- A, then KB1 U KB2 |-- A. However, if KB2 is not consistent with KB1, the monotonicity of PL rules causes a major representation problem, because an inconsistent KB entails every sentence.
3. Inference rules in PL are local, i.e. they depend only on their premises (this is a consequence of the monotonicity property). In practice, this can make an inference procedure much faster than the exponential truth table method, because each inference step involves only a small number of propositions.
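
Monotonicity, and the problem with an inconsistent KB2, can be illustrated with a truth-table entailment check in Python. This sketch uses semantic entailment (|=) as a stand-in for derivability (|--); the two coincide for a sound and complete procedure. The example KBs are our own.

```python
from itertools import product

def entails(kb, query):
    """KB |= query: query is true in every row (over A, B) where kb is true."""
    return all(query(a, b) for a, b in product((False, True), repeat=2) if kb(a, b))

def kb1(a, b):
    return a and ((not a) or b)        # KB1 = {A, A => B}

def kb1_kb2(a, b):
    return kb1(a, b) and not b         # KB1 U KB2, with KB2 = {not B} (inconsistent with KB1)

def query_b(a, b):
    return b

def query_not_b(a, b):
    return not b

print(entails(kb1, query_b))           # True
print(entails(kb1_kb2, query_b))       # True: monotonicity, adding KB2 keeps B
print(entails(kb1_kb2, query_not_b))   # True: the inconsistent KB also yields not B
```

Because KB1 U KB2 has no models at all, every query is entailed vacuously. This is the representation problem mentioned in point 2.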

Wang’s algorithm for proving the validity of a formula

Let the KB be represented as a “sequent” of the form:
Premise1, Premise2, ..., PremiseN ===>s Conclusion1, Conclusion2, ..., ConclusionM
A sequent is valid iff the conjunction of its premises entails the disjunction of its conclusions. Wang’s algorithm transforms such sequents by means of seven rules, two of which are termination rules. The other five rules either eliminate a connective (thus shortening the sequent) or eliminate an implication.

The following example will be used to illustrate Wang’s algorithm.
Fact 1: If Bob failed to enroll in CS 462, then Bob will not graduate this Spring.
Fact 2: If Bob will not graduate this Spring, then Bob will miss a great job.
Fact 3: Bob will not miss a great job.
Question: Did Bob fail to enroll in CS 462?

Representing the example in formal terms

First, we must decide on the representation. Assume that P means “Bob failed to enroll in CS 462”, Q means “Bob will not graduate this Spring”, and R means “Bob will miss a great job”. The problem is now described by means of the following PL formulas:
Fact 1: P --> Q
Fact 2: Q --> R
Fact 3: not R
Question: which of P and not P follows from the facts?
Here fact 1, fact 2 and fact 3 are called premises.

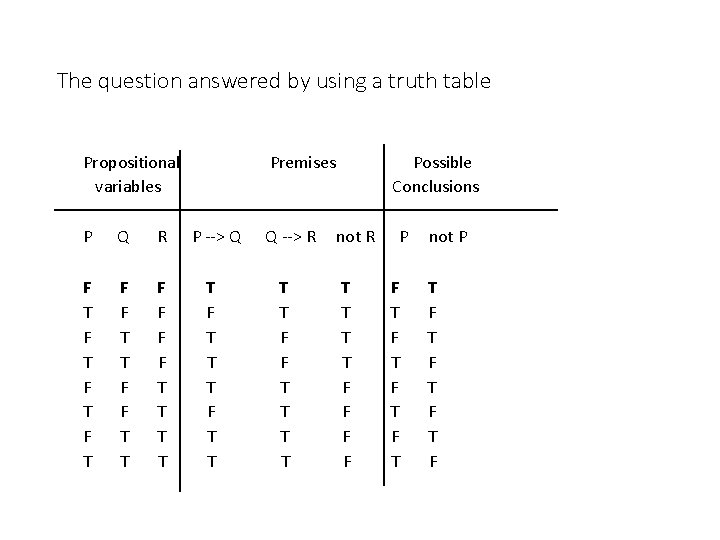

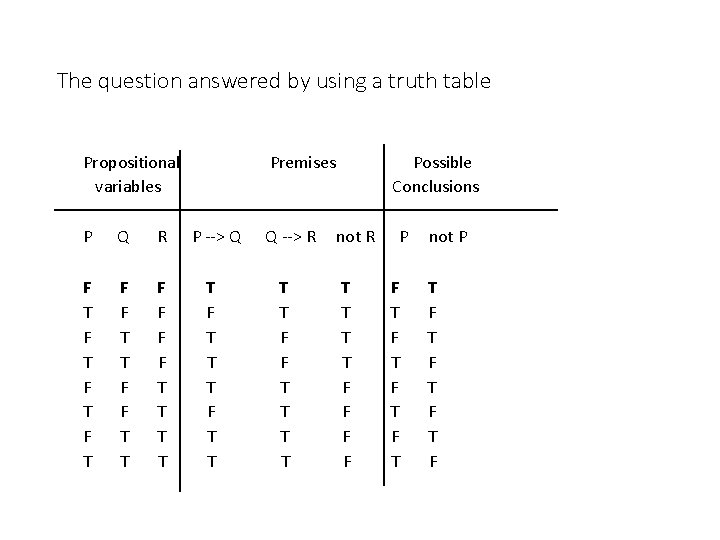

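The question answered by using a truth table

P, Q and R are the propositional variables. P --> Q, Q --> R and not R are the premises. P and not P are the possible conclusions.

| P | Q | R | P --> Q | Q --> R | not R | P | not P |
|---|---|---|---|---|---|---|---|
| F | F | F | T | T | T | F | T |
| F | F | T | T | T | F | F | T |
| F | T | F | T | F | T | F | T |
| F | T | T | T | T | F | F | T |
| T | F | F | F | T | T | T | F |
| T | F | T | F | T | F | T | F |
| T | T | F | T | F | T | T | F |
| T | T | T | T | T | F | T | F |

All three premises are true only in the first row. In that row not P is true, so not P follows from the premises.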
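
The table can be generated and checked mechanically. Here is a minimal Python sketch of entailment by truth-table enumeration for Bob’s example. The function names are our own.

```python
from itertools import product

def implies(p, q):
    return (not p) or q

def premises(P, Q, R):
    """Facts 1-3: P --> Q, Q --> R, not R."""
    return implies(P, Q) and implies(Q, R) and not R

def entails(query):
    """The premises entail query iff query holds in every row where all premises hold."""
    rows = product((False, True), repeat=3)
    return all(query(P, Q, R) for P, Q, R in rows if premises(P, Q, R))

print(entails(lambda P, Q, R: not P))  # True
print(entails(lambda P, Q, R: P))      # False
```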

Wang’s algorithm: transformation rules

Wang’s algorithm takes a KB represented as a set of sequents and applies rules R 1 through R 5 to transform the sequents into simpler ones. Rules R 6 and R 7 are termination rules, which check whether the proof has been found.

Bob’s example. The KB initially contains the following sequent:
P --> Q, Q --> R, not R ===>s not P
Here P --> Q, Q --> R, not R and not P are referred to as top-level formulas.

Transformation rules:
• R 1 (“not on the left / not on the right” rule). If one of the top-level formulas of the sequent has the form not X, then this rule says to drop the negation and move X to the other side of the sequent arrow. If not X is on the left of ===>s, the transformation is called “not on the left”; otherwise, it is called “not on the right”.

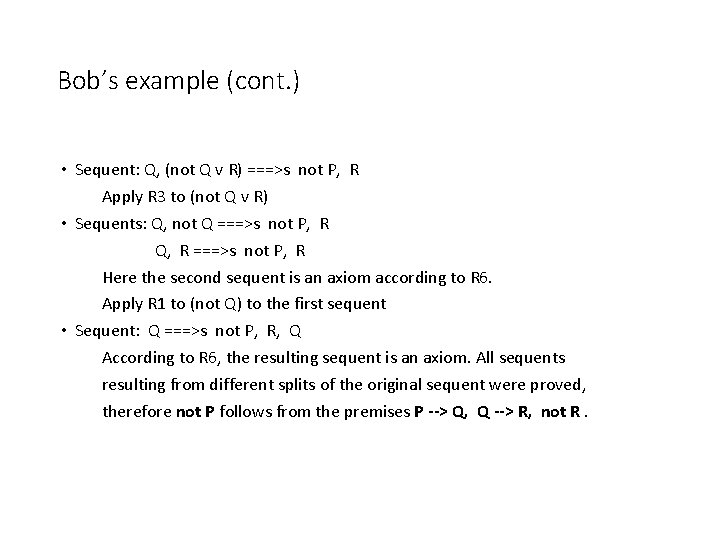

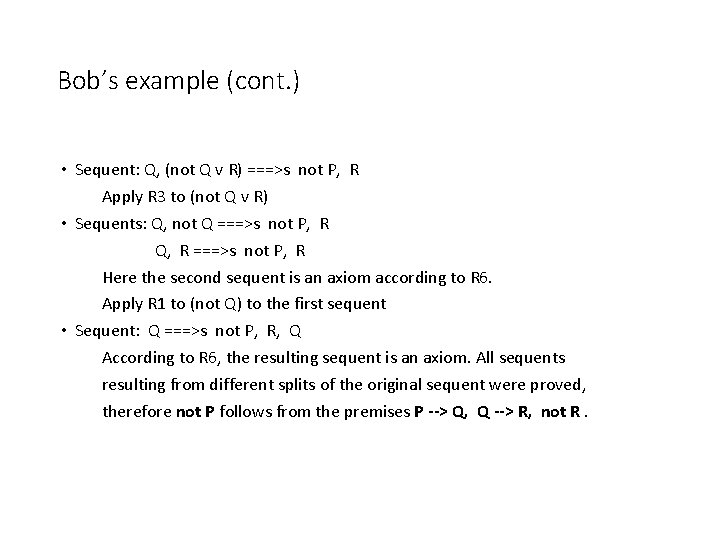

• R 2 (“& on the left / v on the right” rule). If a top-level formula on the left of the arrow has the form X & Y, or a top-level formula on the right of the arrow has the form X v Y, then the connective (& or v) can be replaced by a comma.
• R 3 (“v on the left” rule). If a top-level formula on the left has the form X v Y, then replace the sequent with two new sequents: X, ... ===>s ... and Y, ... ===>s ... (where ... stands for the remaining formulas of the original sequent).
• R 4 (“& on the right” rule). If a top-level formula on the right has the form X & Y, then replace the sequent with two new sequents: ... ===>s X, ... and ... ===>s Y, ...
• R 5 (“implication elimination” rule). Any formula of the form X --> Y is replaced by not X v Y.
• R 6 (“valid sequent” rule). If a top-level formula X occurs on both sides of the sequent, then the sequent is considered proved. Such a sequent is called an axiom. If the original sequent has been split into several sequents, then all of them must be proved in order for the original sequent to be proved.
• R 7 (“invalid sequent” rule). If all of the formulas in a given sequent are propositional symbols (i.e. no further transformations are possible) and the sequent is not an axiom, then the sequent is invalid. If an invalid sequent is found, the algorithm terminates, and the conclusions of the original sequent are shown not to follow logically from its premises.
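
The seven rules translate almost directly into a recursive procedure. Below is a minimal Python sketch of one such procedure. It assumes a tuple encoding of formulas that is our own choice: an atom is a string, and compound formulas are `("not", X)`, `("and", X, Y)`, `("or", X, Y)` and `("imp", X, Y)`. It applies the rules in a fixed order, left side first. Each rule replaces a sequent by sequents that are valid exactly when it is, so the order affects only the length of the proof, not the answer.

```python
def wang(left, right):
    """Return True if the sequent  left ===>s right  is valid (a sketch of Wang's rules)."""
    left, right = list(left), list(right)
    # R 6: a formula occurring on both sides makes the sequent an axiom.
    if set(left) & set(right):
        return True
    for i, f in enumerate(left):
        if isinstance(f, str):
            continue                     # atoms cannot be transformed
        rest, op = left[:i] + left[i + 1:], f[0]
        if op == "not":                  # R 1: not on the left
            return wang(rest, right + [f[1]])
        if op == "and":                  # R 2: & on the left becomes a comma
            return wang(rest + [f[1], f[2]], right)
        if op == "or":                   # R 3: v on the left splits the sequent
            return wang(rest + [f[1]], right) and wang(rest + [f[2]], right)
        if op == "imp":                  # R 5: X --> Y becomes not X v Y
            return wang(rest + [("or", ("not", f[1]), f[2])], right)
    for i, f in enumerate(right):
        if isinstance(f, str):
            continue
        rest, op = right[:i] + right[i + 1:], f[0]
        if op == "not":                  # R 1: not on the right
            return wang(left + [f[1]], rest)
        if op == "or":                   # R 2: v on the right becomes a comma
            return wang(left, rest + [f[1], f[2]])
        if op == "and":                  # R 4: & on the right splits the sequent
            return wang(left, rest + [f[1]]) and wang(left, rest + [f[2]])
        if op == "imp":                  # R 5
            return wang(left, rest + [("or", ("not", f[1]), f[2])])
    # R 7: only propositional symbols remain and the sequent is not an axiom.
    return False


# Bob's example: P --> Q, Q --> R, not R ===>s not P
premises = [("imp", "P", "Q"), ("imp", "Q", "R"), ("not", "R")]
print(wang(premises, [("not", "P")]))  # True
print(wang(premises, ["P"]))           # False
```

Splitting with R 3 and R 4 is implemented with `and`, which matches the requirement in R 6 that every sequent produced by a split must be proved.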

Bob’s example continued

• Sequent: P --> Q, Q --> R, not R ===>s not P
Apply R 5 twice, to P --> Q and to Q --> R.
• Sequent: (not P v Q), (not Q v R), not R ===>s not P
Apply R 1 to (not R).
• Sequent: (not P v Q), (not Q v R) ===>s not P, R
Apply R 3 to (not P v Q). This splits the current sequent into two new sequents.
• Sequents:
  not P, (not Q v R) ===>s not P, R
  Q, (not Q v R) ===>s not P, R
Note that the first sequent is an axiom according to R 6 (not P is on both sides of the sequent arrow). To prove that not P follows from the premises, however, we still need to prove the second sequent.

Bob’s example (cont.)

• Sequent: Q, (not Q v R) ===>s not P, R
Apply R 3 to (not Q v R).
• Sequents:
  Q, not Q ===>s not P, R
  Q, R ===>s not P, R
Here the second sequent is an axiom according to R 6 (R is on both sides). Apply R 1 to (not Q) in the first sequent.
• Sequent: Q ===>s not P, R, Q
According to R 6, the resulting sequent is an axiom. All sequents resulting from the splits of the original sequent have been proved, so not P follows from the premises P --> Q, Q --> R, not R. In other words, Bob did not fail to enroll in CS 462.

Characterization of Wang’s algorithm
1. Wang’s algorithm is complete, i.e. it will always prove a formula if the formula is valid. The length of the proof depends on the order in which the rules are applied.
2. In the worst case, Wang’s algorithm takes exponential time, because each application of R 3 or R 4 splits a sequent into two (the underlying validity problem is co-NP-complete). In the average case, however, its performance is better than that of the truth table method.
3. Wang’s algorithm is sound, i.e. it will prove only formulas entailed by the KB.

Wang’s algorithm was originally published in: Wang, H. “Toward mechanical mathematics”, IBM Journal of Research and Development, vol. 4, 1960.

The wumpus example continued

The KB describing the WW contains the following types of sentences:
• Sentences representing the agent’s percepts. These are propositions of the form:
  not S [1, 1]: there is no stench in square [1, 1]
  B [2, 1]: there is a breeze in square [2, 1]
  not W [1, 1]: there is no wumpus in square [1, 1], etc.
• Sentences representing the background knowledge (i.e. the rules of the game). These are formulas of the form:
  R 1: not S [1, 1] --> not W [1, 1] & not W [1, 2] & not W [2, 1]
  ...
  R 16: not S [4, 4] --> not W [4, 4] & not W [3, 4] & not W [4, 3]
  There are 16 rules of this type (one for each square).
  Rj: S [1, 2] --> W [1, 3] v W [1, 2] v W [2, 2] v W [1, 1]
  ...
  There are 16 rules of this type.
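
The 32 stench rules follow a fixed pattern, so they can be generated rather than written by hand. Below is a minimal Python sketch. It assumes a 4 x 4 grid with squares [x, y], 1 <= x, y <= 4, and orthogonal adjacency, and it includes the square itself, as the rules above do. The conjuncts may appear in a different order than on the slide, which does not change the formulas. The helper names are illustrative.

```python
N = 4  # the wumpus world is an N x N grid; squares are [x, y] with 1 <= x, y <= N

def neighbours(x, y):
    """Squares orthogonally adjacent to [x, y] that lie inside the grid."""
    candidates = [(x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)]
    return [(i, j) for i, j in candidates if 1 <= i <= N and 1 <= j <= N]

def sq(x, y):
    return f"[{x}, {y}]"

def stench_rules():
    """Build the 'not S' rules (type R 1..R 16) and the 'S' rules (type Rj), one of each per square."""
    no_stench, stench = [], []
    for x in range(1, N + 1):
        for y in range(1, N + 1):
            cells = [(x, y)] + neighbours(x, y)  # the square itself plus its neighbours
            no_stench.append(f"not S {sq(x, y)} --> "
                             + " & ".join(f"not W {sq(*c)}" for c in cells))
            stench.append(f"S {sq(x, y)} --> "
                          + " v ".join(f"W {sq(*c)}" for c in cells))
    return no_stench, stench

no_stench, stench = stench_rules()
print(len(no_stench), len(stench))  # 16 16
print(no_stench[0])                 # not S [1, 1] --> not W [1, 1] & not W [2, 1] & not W [1, 2]
```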


WW knowledge base (cont.)

  Rk: not B [1, 1] --> not P [2, 1] & not P [1, 2]
  ... (16 rules of this type)
  Rn: B [1, 1] --> P [2, 1] v P [1, 2]
  ... (16 rules of this type)
  Rp: not G [1, 1] --> not G [1, 1]
  ... (16 rules of this type)
  Rs: G [1, 1] --> G [1, 1]
  ... (16 rules of this type)
Also, 32 rules are needed to handle the percept “Bump”, and 32 more to handle the percept “Scream”. The total number of rules dealing with percepts is therefore 6 × 16 + 32 + 32 = 160.

WW knowledge base: limitations of PL

• Rules describing the agent’s actions and rules representing “common-sense knowledge”, such as “Don’t go forward if the wumpus is in front of you”. This rule alone is represented by 64 sentences (16 squares × 4 orientations).
• If we want to incorporate time into the representation, then each group of 64 sentences must be provided for each time segment. Assuming that the problem is expected to take 100 time segments, 6400 sentences are needed to represent only the rule “Don’t go forward if the wumpus is in front of you”. Note: there are many other rules of this type, which will require thousands of sentences to describe formally.
• The third group of sentences in the agent’s KB are the entailed sentences. Note: using truth tables to identify the entailed sentences is infeasible, not only because of the large number of propositional variables but also because of the huge number of formulas that would eventually have to be tested for validity.

The WW: solving one fragment of the agent’s problem by means of Wang’s algorithm

Consider the situation in Figure 6.15 (AIMA), and assume that we want to prove W [1, 3] with Wang’s algorithm.

Current sequent:
not S [1, 1], not B [1, 1], S [1, 2], not B [1, 2], not S [2, 1], B [2, 1], not S [1, 1] --> not W [1, 1] & not W [1, 2] & not W [2, 1], not S [2, 1] --> not W [1, 1] & not W [2, 2] & not W [3, 1], S [1, 2] --> W [1, 3] v W [1, 2] v W [2, 2] v W [1, 1] ===>s W [1, 3]

• Applying R 1 to all negated single propositions on the left results in

Current sequent:
S [1, 2], B [2, 1], not S [1, 1] --> not W [1, 1] & not W [1, 2] & not W [2, 1], not S [2, 1] --> not W [1, 1] & not W [2, 2] & not W [3, 1], S [1, 2] --> W [1, 3] v W [1, 2] v W [2, 2] v W [1, 1] ===>s W [1, 3], S [1, 1], B [1, 1], B [1, 2], S [2, 1]

• Applying R 5 to the first two implications on the left (followed by double-negation elimination, distribution of v over &, and R 2), and only R 5 to the third implication, results in

Current sequent:
S [1, 2], B [2, 1], S [1, 1] v not W [1, 1], S [1, 1] v not W [1, 2], S [1, 1] v not W [2, 1], S [2, 1] v not W [1, 1], S [2, 1] v not W [2, 2], S [2, 1] v not W [3, 1], not S [1, 2] v W [1, 3] v W [1, 2] v W [2, 2] v W [1, 1] ===>s W [1, 3], S [1, 1], B [1, 1], B [1, 2], S [2, 1]

• Applying R 3 repeatedly to the last disjunction splits the sequent into five sequents, one for each disjunct, all of which must be proved:

1. S [1, 2], B [2, 1], S [1, 1] v not W [1, 1], S [1, 1] v not W [1, 2], S [1, 1] v not W [2, 1], S [2, 1] v not W [1, 1], S [2, 1] v not W [2, 2], S [2, 1] v not W [3, 1], not S [1, 2] ===>s W [1, 3], S [1, 1], B [1, 1], B [1, 2], S [2, 1]
Moving not S [1, 2] to the right by using R 1 puts S [1, 2] on both sides, so this sequent is an axiom.
2. S [1, 2], B [2, 1], S [1, 1] v not W [1, 1], S [1, 1] v not W [1, 2], S [1, 1] v not W [2, 1], S [2, 1] v not W [1, 1], S [2, 1] v not W [2, 2], S [2, 1] v not W [3, 1], W [1, 3] ===>s W [1, 3], S [1, 1], B [1, 1], B [1, 2], S [2, 1]
W [1, 3] is on both sides, so this sequent is an axiom.
3. S [1, 2], B [2, 1], S [1, 1] v not W [1, 1], S [1, 1] v not W [1, 2], S [1, 1] v not W [2, 1], S [2, 1] v not W [1, 1], S [2, 1] v not W [2, 2], S [2, 1] v not W [3, 1], W [1, 2] ===>s W [1, 3], S [1, 1], B [1, 1], B [1, 2], S [2, 1]
Applying R 3 to S [1, 1] v not W [1, 2] creates two new sequents. The first is an axiom because S [1, 1] is on both sides of the arrow. The second is proved after applying R 1 (moving not W [1, 2] to the right), because W [1, 2] is then on both sides.
4. S [1, 2], B [2, 1], S [1, 1] v not W [1, 1], S [1, 1] v not W [1, 2], S [1, 1] v not W [2, 1], S [2, 1] v not W [1, 1], S [2, 1] v not W [2, 2], S [2, 1] v not W [3, 1], W [2, 2] ===>s W [1, 3], S [1, 1], B [1, 1], B [1, 2], S [2, 1]
As above, applying R 3 to S [2, 1] v not W [2, 2] yields two sequents that are both axioms.
5. S [1, 2], B [2, 1], S [1, 1] v not W [1, 1], S [1, 1] v not W [1, 2], S [1, 1] v not W [2, 1], S [2, 1] v not W [1, 1], S [2, 1] v not W [2, 2], S [2, 1] v not W [3, 1], W [1, 1] ===>s W [1, 3], S [1, 1], B [1, 1], B [1, 2], S [2, 1]
As above, applying R 3 to S [2, 1] v not W [1, 1] yields two sequents that are both axioms.

All sequents are proved; therefore W [1, 3] follows from the initial premises.
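
As a cross-check that does not rely on Wang’s rules, the same entailment can be verified by model checking over all 2^12 = 4096 truth assignments to the symbols involved. This is feasible here only because the fragment is small, as the previous slide points out. The flat symbol names such as `S11` for S [1, 1] and `W13` for W [1, 3] are hypothetical, chosen for this sketch. The KB contains only the percepts and the three rules used in the sequent.

```python
from itertools import product

# Hypothetical flat names: "S11" stands for S [1, 1], "W13" for W [1, 3], etc.
SYMBOLS = ["S11", "B11", "S12", "B12", "S21", "B21",
           "W11", "W12", "W21", "W22", "W31", "W13"]

def implies(p, q):
    return (not p) or q

def kb(m):
    """Left-hand side of the initial sequent: the percepts plus the three rules used."""
    percepts = (not m["S11"] and not m["B11"] and m["S12"]
                and not m["B12"] and not m["S21"] and m["B21"])
    r_s11 = implies(not m["S11"], not m["W11"] and not m["W12"] and not m["W21"])
    r_s21 = implies(not m["S21"], not m["W11"] and not m["W22"] and not m["W31"])
    r_s12 = implies(m["S12"], m["W13"] or m["W12"] or m["W22"] or m["W11"])
    return percepts and r_s11 and r_s21 and r_s12

def entails(query):
    """True iff query holds in every model of the KB (2**12 = 4096 rows are enumerated)."""
    rows = (dict(zip(SYMBOLS, values))
            for values in product((False, True), repeat=len(SYMBOLS)))
    return all(query(m) for m in rows if kb(m))

print(entails(lambda m: m["W13"]))  # True
print(entails(lambda m: m["W12"]))  # False
```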