Independent Study of Parallel Programming Languages


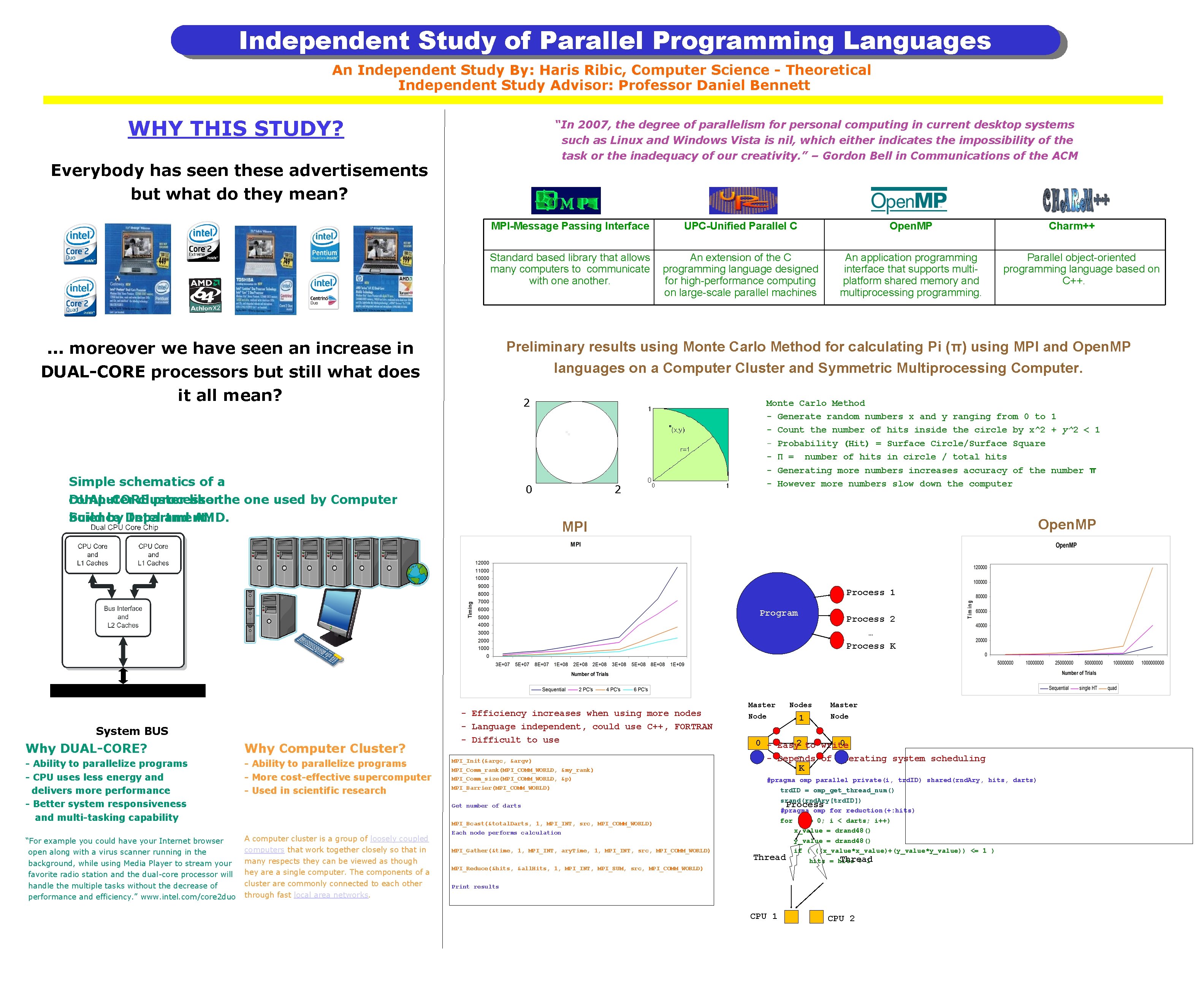

An Independent Study by Haris Ribic, Computer Science - Theoretical
Independent Study Advisor: Professor Daniel Bennett

WHY THIS STUDY?

“In 2007, the degree of parallelism for personal computing in current desktop systems such as Linux and Windows Vista is nil, which either indicates the impossibility of the task or the inadequacy of our creativity.” – Gordon Bell, Communications of the ACM

Everybody has seen these advertisements, but what do they mean? Moreover, we have seen an increase in DUAL-CORE processors, but what does it all mean? Why DUAL-CORE? Why a computer cluster?

- MPI (Message Passing Interface): a standards-based library that allows many computers to communicate with one another.
- UPC (Unified Parallel C): an extension of the C programming language designed for high-performance computing on large-scale parallel machines.
- OpenMP: an application programming interface that supports multi-platform shared-memory multiprocessing programming.
- Charm++: a parallel object-oriented programming language based on C++.

[Figure: preliminary results using the Monte Carlo method for calculating pi (π) with MPI and OpenMP on a computer cluster and a symmetric multiprocessing (SMP) computer.]

[Figure: simple schematics of a DUAL-CORE processor (Intel and AMD) and of a computer cluster like the one built by the Computer Science Department.]

Monte Carlo Method

- Generate random numbers x and y ranging from 0 to 1.
- Count the number of hits inside the circle, i.e. the points with x^2 + y^2 < 1.
- Probability(hit) = area of the quarter circle / area of the unit square = π/4.
- Therefore π ≈ 4 × (number of hits in circle) / (total number of darts thrown).

Generating more numbers increases the accuracy of the estimate of π; however, generating more numbers also takes more computing time.
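The Monte Carlo steps listed above can be sketched as a short serial C program. This is a minimal sketch, not the study's code: the helper names `count_hits` and `estimate_pi` are hypothetical, and SplitMix64, a small well-known generator, is swapped in for `drand48()` so the result is portable and repeatable for a given seed.

```c
#include <assert.h>
#include <stdint.h>

/* SplitMix64: small, well-known 64-bit generator. Swapped in for drand48()
   (an assumption of this sketch) so results repeat for a given seed. */
static uint64_t splitmix64(uint64_t *state)
{
    uint64_t z = (*state += 0x9E3779B97F4A7C15ULL);
    z = (z ^ (z >> 30)) * 0xBF58476D1CE4E5B9ULL;
    z = (z ^ (z >> 27)) * 0x94D049BB133111EBULL;
    return z ^ (z >> 31);
}

/* Uniform double in [0, 1), built from the top 53 bits of the output. */
static double next_unit(uint64_t *state)
{
    return (double) (splitmix64(state) >> 11) * 0x1.0p-53;
}

/* Hypothetical helper: throw `darts` random points (x, y) into the unit
   square and count the hits with x^2 + y^2 < 1 (inside the quarter circle). */
static long count_hits(long darts, uint64_t seed)
{
    assert(darts >= 0);
    uint64_t state = seed;
    long hits = 0;
    for (long i = 0; i < darts; i++) {
        double x = next_unit(&state);
        double y = next_unit(&state);
        if (x * x + y * y < 1.0)
            hits++;
    }
    return hits;
}

/* Hypothetical helper: P(hit) = (pi/4) / 1, so pi ~= 4 * hits / darts. */
static double estimate_pi(long darts, uint64_t seed)
{
    assert(darts > 0);
    return 4.0 * (double) count_hits(darts, seed) / (double) darts;
}
```

For example, `estimate_pi(1000000, 12345)` should land within a few thousandths of π. The statistical error shrinks roughly as 1/√(darts), so quadrupling the number of darts only halves the typical error, which is the accuracy versus computing-time trade-off noted above.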
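Reasons for choosing DUAL-CORE:

- Ability to parallelize programs
- The CPU uses less energy and delivers more performance
- Better system responsiveness and multitasking capability

“For example you could have your Internet browser open along with a virus scanner running in the background, while using Media Player to stream your favorite radio station and the dual-core processor will handle the multiple tasks without the decrease of performance and efficiency.” – www.intel.com/core2duo

Reasons for choosing a computer cluster:

- Ability to parallelize programs
- A more cost-effective supercomputer
- Used in scientific research

A computer cluster is a group of loosely coupled computers that work together closely, so that in many respects they can be viewed as though they are a single computer. The components of a cluster are commonly connected to each other through fast local area networks.

MPI

- Efficiency increases when using more nodes
- Language independent: can be used from C, C++, or FORTRAN
- Difficult to use

```c
/* Every process runs this program; src is the master node (node 0). */
MPI_Init(&argc, &argv);
MPI_Comm_rank(MPI_COMM_WORLD, &my_rank);   /* this process's rank: 0 .. p-1 */
MPI_Comm_size(MPI_COMM_WORLD, &p);         /* total number of processes */
MPI_Barrier(MPI_COMM_WORLD);               /* start all processes together */

/* Master gets the number of darts and broadcasts it to every node */
MPI_Bcast(&totalDarts, 1, MPI_INT, src, MPI_COMM_WORLD);

/* ... each node performs its part of the calculation, counting hits ... */

MPI_Gather(&time, 1, MPI_INT, aryTime, 1, MPI_INT, src, MPI_COMM_WORLD);  /* per-node times */
MPI_Reduce(&hits, &allHits, 1, MPI_INT, MPI_SUM, src, MPI_COMM_WORLD);    /* total hits on master */

/* Master prints the results, e.g. pi ~= 4.0 * allHits / totalDarts */
MPI_Finalize();
```

[Figure: MPI program structure. A master node (node 0) and processes 1 … K, one per cluster node.]

OpenMP

- Easy to write
- Performance depends on operating system scheduling

```c
/* Needs <omp.h> and <stdlib.h>; erand48() is POSIX. drand48() shares one
   global state between threads and srand() seeds rand(), not drand48(),
   so each thread keeps its own erand48() state seeded from rndAry. */
#pragma omp parallel private(i, trdID) shared(rndAry, hits, darts)
{
    unsigned short state[3];                /* per-thread generator state */
    trdID = omp_get_thread_num();
    state[0] = 0x330E;
    state[1] = (unsigned short) rndAry[trdID];
    state[2] = (unsigned short) (rndAry[trdID] >> 16);
    #pragma omp for reduction(+: hits)
    for (i = 0; i < darts; i++) {
        double x_value = erand48(state);    /* private: declared inside the loop */
        double y_value = erand48(state);
        if ((x_value * x_value) + (y_value * y_value) <= 1.0)
            hits = hits + 1;
    }
}
```

[Figure: OpenMP program structure. One process forks threads that run on CPU 1 and CPU 2, which share the system bus.]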
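The MPI program (each node computes, then `MPI_Reduce` with `MPI_SUM`) and the OpenMP loop (`reduction(+: hits)`) share one pattern: split the darts among K workers, give each worker its own random-number state, and add up the partial hit counts. The sketch below emulates that pattern in plain C under stated assumptions: the helpers `darts_for_rank`, `worker_hits` and `parallel_pi` are hypothetical, the even-split-with-remainder rule is one possible choice rather than the study's, SplitMix64 stands in for `drand48()`, and no MPI calls are made.

```c
#include <assert.h>
#include <stdint.h>

/* SplitMix64 generator (an assumption of this sketch, standing in for
   drand48()); each worker owns its own state, so nothing is shared. */
static uint64_t splitmix64(uint64_t *state)
{
    uint64_t z = (*state += 0x9E3779B97F4A7C15ULL);
    z = (z ^ (z >> 30)) * 0xBF58476D1CE4E5B9ULL;
    z = (z ^ (z >> 27)) * 0x94D049BB133111EBULL;
    return z ^ (z >> 31);
}

/* Uniform double in [0, 1). */
static double next_unit(uint64_t *state)
{
    return (double) (splitmix64(state) >> 11) * 0x1.0p-53;
}

/* Hypothetical: darts for worker `rank` of `p`. Even split; the first
   total % p workers take one extra dart so the shares add up to `total`. */
static long darts_for_rank(long total, int rank, int p)
{
    assert(p > 0 && rank >= 0 && rank < p && total >= 0);
    return total / p + (rank < total % p ? 1 : 0);
}

/* Hypothetical: the work one MPI process or one OpenMP thread would do. */
static long worker_hits(long darts, uint64_t seed)
{
    long hits = 0;
    for (long i = 0; i < darts; i++) {
        double x = next_unit(&seed);
        double y = next_unit(&seed);
        if (x * x + y * y < 1.0)
            hits++;
    }
    return hits;
}

/* Hypothetical: split `total` darts over `p` workers and add up their hits,
   the job MPI_Reduce(..., MPI_SUM, ...) or reduction(+: hits) does. */
static double parallel_pi(long total, int p, uint64_t base_seed)
{
    assert(total > 0);
    long all_hits = 0;
    /* With -fopenmp the workers run on separate threads; without it the
       pragma is ignored and the loop runs serially with the same result. */
    #pragma omp parallel for reduction(+: all_hits)
    for (int rank = 0; rank < p; rank++) {
        uint64_t mix = base_seed + (uint64_t) rank;
        uint64_t seed = splitmix64(&mix);   /* hashed per-worker seed */
        all_hits += worker_hits(darts_for_rank(total, rank, p), seed);
    }
    return 4.0 * (double) all_hits / (double) total;
}
```

For instance, `parallel_pi(1000000, 4, 42)` gives each of four workers 250,000 darts. Hashing `base_seed + rank` into each worker's seed keeps the workers' random streams from being shifted copies of one another, the same concern the per-thread `rndAry[trdID]` seeds address in the OpenMP fragment. Because the partial counts are integers, the sum is identical whether the workers run on one thread or many.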