- Slides: 21

Independent Component Analysis For Track Classification

- Seeding for Kalman Filter
- High Level Trigger
- Tracklets After Hough Transformation

A K Mohanty

Outline of the presentation

- What is ICA
- Results (TPC as a test case)
- Why has ICA worked?
  a. Unsupervised linear learning
  b. Similarity with neural nets (both supervised and unsupervised)

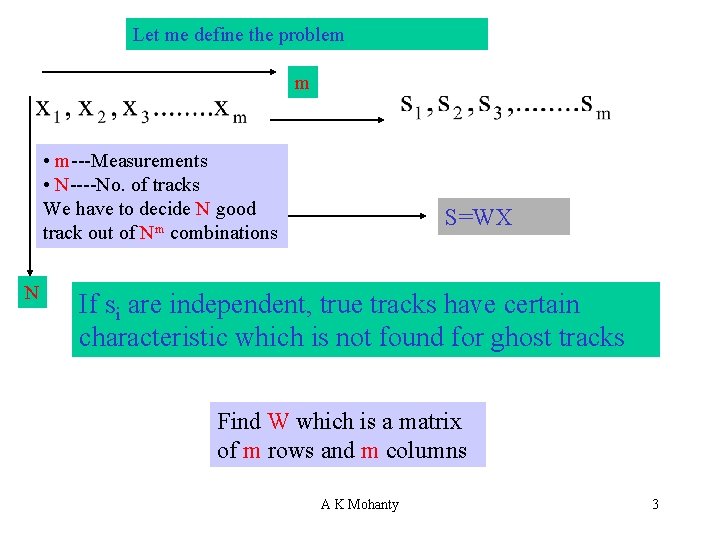

Let me define the problem

- $m$: number of measurements
- $N$: number of tracks

We have to select $N$ good tracks out of $N^m$ combinations.

$$S = WX$$

If the components $s_i$ are independent, true tracks have certain characteristics that are not found in ghost tracks. The task is to find $W$, a matrix of $m$ rows and $m$ columns.

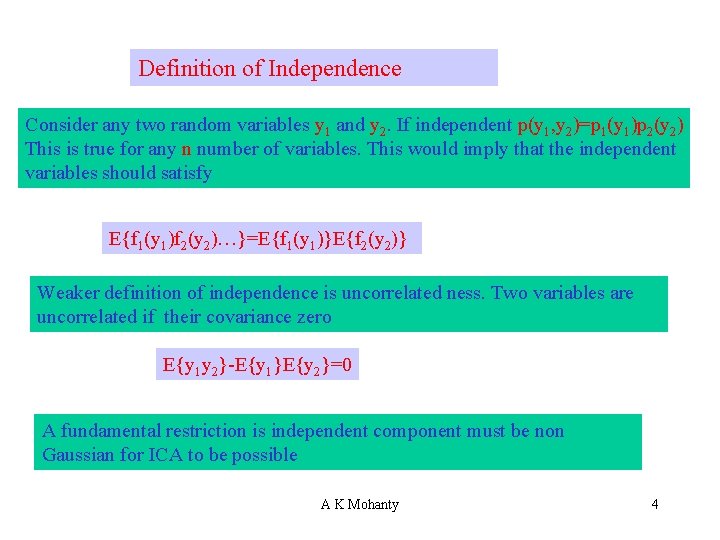

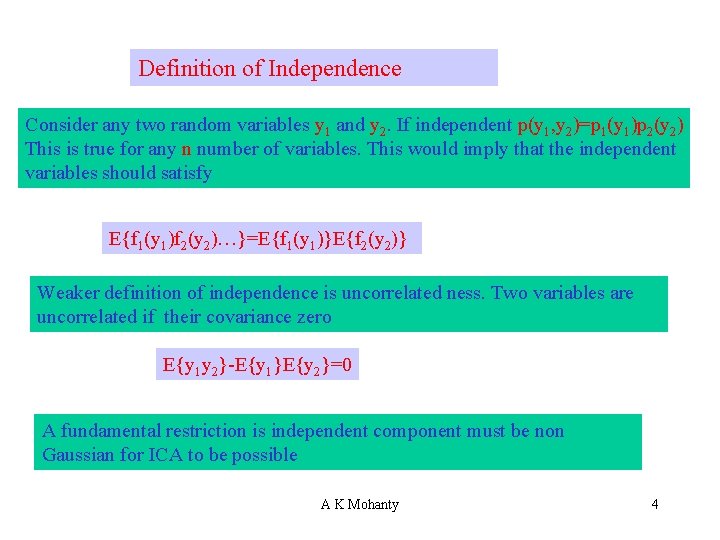

Definition of Independence

Consider any two random variables $y_1$ and $y_2$. If they are independent,

$$p(y_1, y_2) = p_1(y_1)\,p_2(y_2)$$

This holds for any number $n$ of variables. It implies that independent variables satisfy

$$E\{f_1(y_1) f_2(y_2) \cdots\} = E\{f_1(y_1)\}\,E\{f_2(y_2)\} \cdots$$

A weaker property than independence is uncorrelatedness. Two variables are uncorrelated if their covariance is zero:

$$E\{y_1 y_2\} - E\{y_1\}\,E\{y_2\} = 0$$

A fundamental restriction is that the independent components must be non-Gaussian for ICA to be possible.
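The gap between uncorrelatedness and independence can be illustrated numerically. The sketch below is a toy example, not taken from the slides: it assumes a uniform variable $y_1$ and sets $y_2 = y_1^2$, so the two are fully dependent while their covariance is still close to zero. The helper names `mean` and `covariance` are illustrative.

```python
import random


def mean(values):
    return sum(values) / len(values)


def covariance(a, b):
    """Sample estimate of E{ab} - E{a}E{b}."""
    products = [x * y for x, y in zip(a, b)]
    return mean(products) - mean(a) * mean(b)


random.seed(0)  # fixed seed so the sketch is reproducible
y1 = [random.uniform(-1.0, 1.0) for _ in range(100_000)]
y2 = [v * v for v in y1]  # y2 is determined by y1, so the two are dependent

# Plain covariance is close to zero: y1 and y2 are (nearly) uncorrelated.
cov_linear = covariance(y1, y2)

# Independence would need E{f1(y1) f2(y2)} = E{f1(y1)} E{f2(y2)} for all f1, f2.
# With f1(y) = y**2 and f2(y) = y the factorisation clearly fails.
cov_nonlinear = covariance([v * v for v in y1], y2)

print(f"covariance of y1 and y2:      {cov_linear:+.4f}")
print(f"covariance of y1**2 and y2:   {cov_nonlinear:+.4f}")
```

The second number stays well away from zero, which is the signature of dependence that a covariance test alone would miss.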

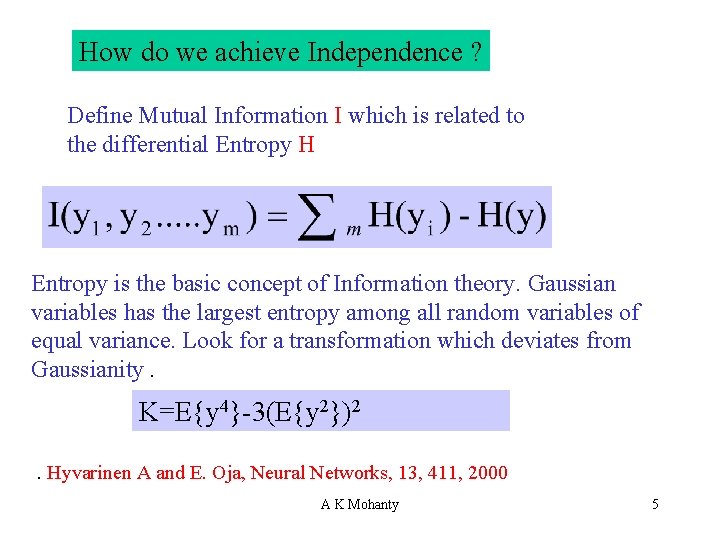

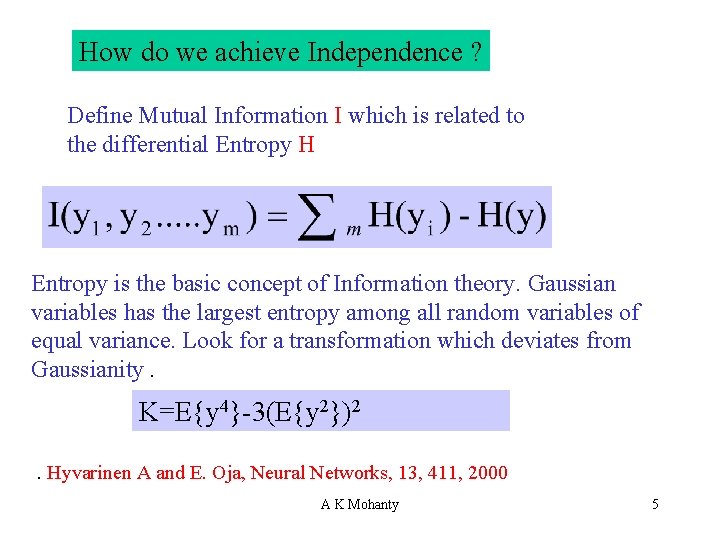

How do we achieve independence?

Define the mutual information $I$, which is related to the differential entropy $H$. Entropy is the basic concept of information theory. A Gaussian variable has the largest entropy among all random variables of equal variance, so we look for a transformation whose output deviates from Gaussianity. One measure of non-Gaussianity is the kurtosis:

$$K = E\{y^4\} - 3\left(E\{y^2\}\right)^2$$

A. Hyvärinen and E. Oja, Neural Networks, 13, 411, 2000
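As a rough numerical check of the kurtosis expression, the following sketch estimates $K$ from samples. The two test distributions (standard Gaussian and uniform on $[-1, 1]$) are assumptions chosen for illustration; the function name `kurtosis` is likewise illustrative.

```python
import random


def kurtosis(samples):
    """K = E{y^4} - 3 (E{y^2})^2, evaluated after centring the samples."""
    n = len(samples)
    mu = sum(samples) / n
    centred = [s - mu for s in samples]
    m2 = sum(c ** 2 for c in centred) / n
    m4 = sum(c ** 4 for c in centred) / n
    return m4 - 3.0 * m2 ** 2


random.seed(1)  # fixed seed so the sketch is reproducible
gaussian = [random.gauss(0.0, 1.0) for _ in range(50_000)]
uniform = [random.uniform(-1.0, 1.0) for _ in range(50_000)]

k_gauss = kurtosis(gaussian)    # close to zero for a Gaussian variable
k_uniform = kurtosis(uniform)   # negative for a uniform (sub-Gaussian) variable

print(f"Gaussian kurtosis: {k_gauss:+.3f}")
print(f"Uniform kurtosis:  {k_uniform:+.3f}")
```

A value of $K$ far from zero, of either sign, signals a departure from Gaussianity.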

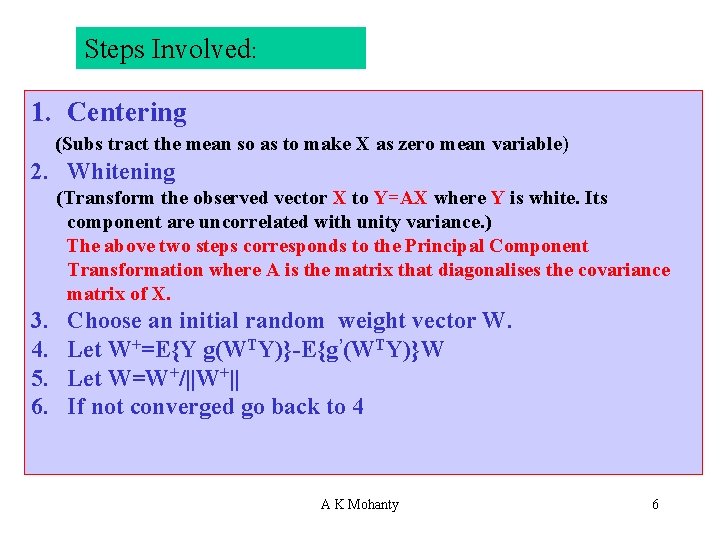

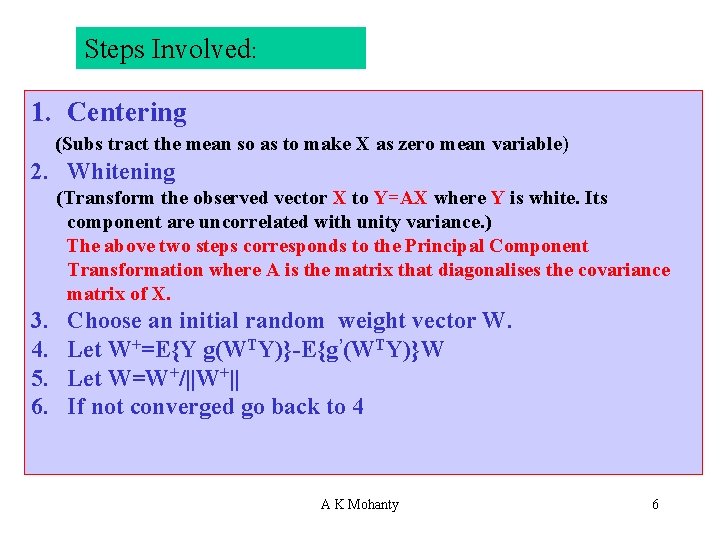

Steps involved:

1. Centering: subtract the mean so that $X$ becomes a zero-mean variable.
2. Whitening: transform the observed vector $X$ to $Y = AX$, where $Y$ is white, i.e. its components are uncorrelated and have unit variance.

   The above two steps correspond to the Principal Component Transformation, where $A$ is the matrix that diagonalises the covariance matrix of $X$.
3. Choose an initial random weight vector $W$.
4. Let $W^{+} = E\{Y\,g(W^{T} Y)\} - E\{g'(W^{T} Y)\}\,W$.
5. Let $W = W^{+} / \lVert W^{+} \rVert$.
6. If not converged, go back to step 4.
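The six steps can be sketched in plain Python for a two-dimensional toy case. This is a minimal illustration under stated assumptions, not the implementation used for the results: the non-linearity is taken to be $g = \tanh$ (the slide does not name it), the data are two hypothetical uniform sources with a made-up mixing, and whitening uses the closed-form eigen-decomposition of a 2x2 covariance matrix. The names `whiten`, `fixed_point_ica` and `correlation` are illustrative.

```python
import math
import random


def whiten(x1, x2):
    """Steps 1 and 2: centre two observed signals and whiten them."""
    n = len(x1)
    m1, m2 = sum(x1) / n, sum(x2) / n
    x1 = [v - m1 for v in x1]
    x2 = [v - m2 for v in x2]
    # Entries of the 2x2 covariance matrix [[a, b], [b, c]].
    a = sum(v * v for v in x1) / n
    b = sum(u * v for u, v in zip(x1, x2)) / n
    c = sum(v * v for v in x2) / n
    # Closed-form eigen-decomposition of a symmetric 2x2 matrix.
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    root = math.hypot(0.5 * (a - c), b)
    lam1, lam2 = 0.5 * (a + c) + root, 0.5 * (a + c) - root
    cs, sn = math.cos(theta), math.sin(theta)
    # Project on the eigenvectors and rescale to unit variance.
    y1 = [(cs * u + sn * v) / math.sqrt(lam1) for u, v in zip(x1, x2)]
    y2 = [(-sn * u + cs * v) / math.sqrt(lam2) for u, v in zip(x1, x2)]
    return y1, y2


def fixed_point_ica(y1, y2, max_iter=200, tol=1e-9):
    """Steps 3 to 6: one-unit fixed-point iteration, assuming g = tanh."""
    n = len(y1)
    angle = random.uniform(0.0, 2.0 * math.pi)
    w = (math.cos(angle), math.sin(angle))  # step 3: random unit vector
    for _ in range(max_iter):
        g = [math.tanh(w[0] * u + w[1] * v) for u, v in zip(y1, y2)]
        mean_dg = sum(1.0 - t * t for t in g) / n  # E{g'(W^T Y)}
        # Step 4: W+ = E{Y g(W^T Y)} - E{g'(W^T Y)} W
        p0 = sum(u * t for u, t in zip(y1, g)) / n - mean_dg * w[0]
        p1 = sum(v * t for v, t in zip(y2, g)) / n - mean_dg * w[1]
        norm = math.hypot(p0, p1)
        new_w = (p0 / norm, p1 / norm)  # step 5: normalise
        # Step 6: converged when W stops changing, up to an overall sign.
        converged = abs(abs(new_w[0] * w[0] + new_w[1] * w[1]) - 1.0) < tol
        w = new_w
        if converged:
            break
    return w


def correlation(p, q):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(p)
    mp, mq = sum(p) / n, sum(q) / n
    cov = sum((u - mp) * (v - mq) for u, v in zip(p, q)) / n
    var_p = sum((u - mp) ** 2 for u in p) / n
    var_q = sum((v - mq) ** 2 for v in q) / n
    return cov / math.sqrt(var_p * var_q)


random.seed(2)  # fixed seed so the sketch is reproducible
n_samples = 5000
# Hypothetical toy data: two independent uniform sources, linearly mixed.
s1 = [random.uniform(-1.0, 1.0) for _ in range(n_samples)]
s2 = [random.uniform(-1.0, 1.0) for _ in range(n_samples)]
x1 = [0.8 * u + 0.6 * v for u, v in zip(s1, s2)]
x2 = [0.3 * u - 0.9 * v for u, v in zip(s1, s2)]

y1, y2 = whiten(x1, x2)
w = fixed_point_ica(y1, y2)
recovered = [w[0] * u + w[1] * v for u, v in zip(y1, y2)]
best_match = max(abs(correlation(recovered, s1)), abs(correlation(recovered, s2)))
print(f"best |correlation| with a source: {best_match:.4f}")
```

The recovered component should match one of the two sources up to sign and scale, which is why the check uses the absolute correlation. The convergence test also ignores the sign, because the fixed-point update can flip the direction of $W$ from one iteration to the next.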

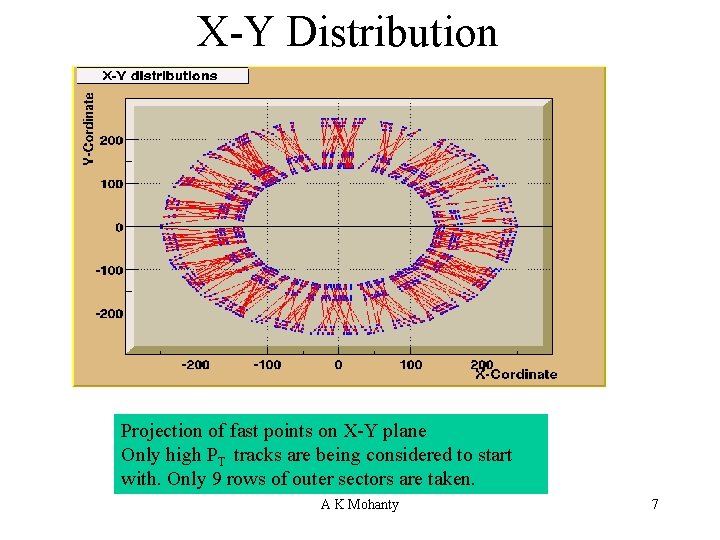

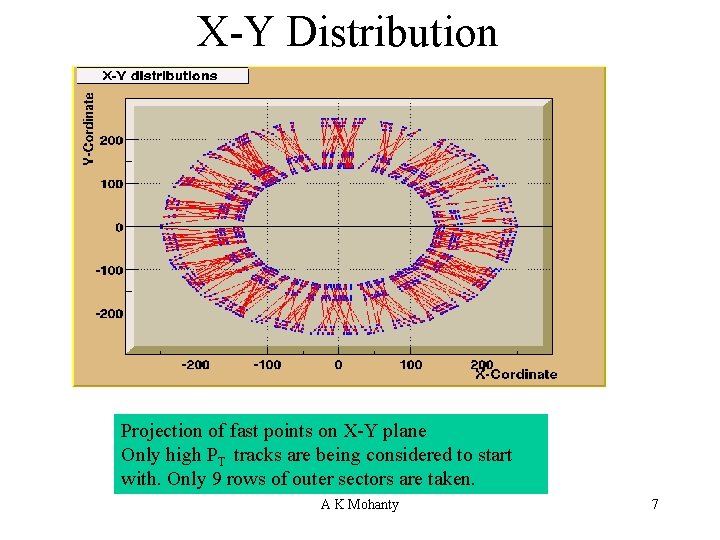

X-Y Distribution

Projection of fast points on the X-Y plane. Only high-$p_T$ tracks are considered to start with. Only 9 rows of the outer sectors are taken.

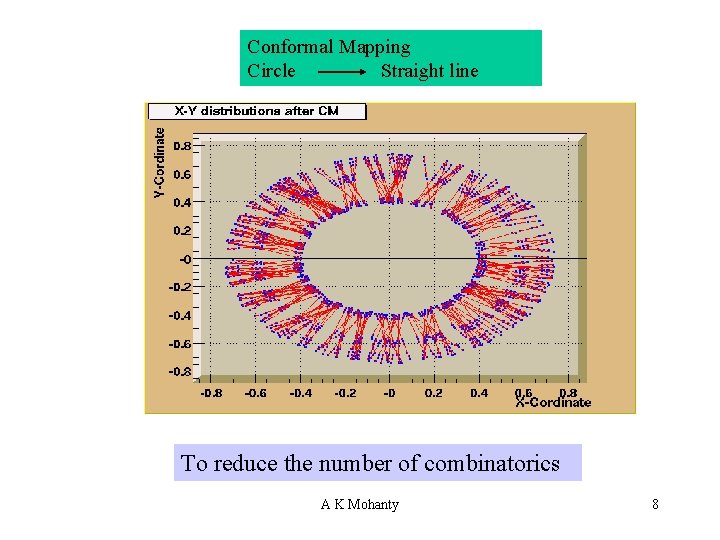

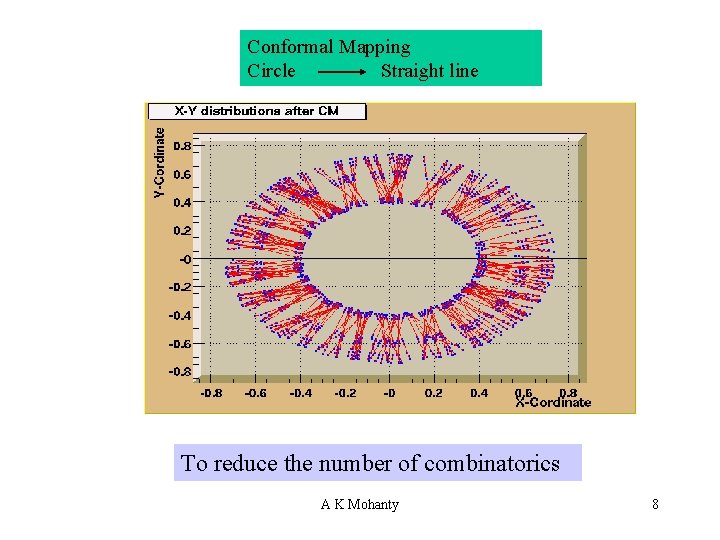

Conformal Mapping

Circle → Straight line

To reduce the number of combinations
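The slide does not give the mapping itself. A commonly used choice in tracking, assumed here, is $u = x/(x^2+y^2)$, $v = y/(x^2+y^2)$, which sends a circle through the origin with centre $(a, b)$ to the straight line $2au + 2bv = 1$. The sketch below checks this on a hypothetical circle; `conformal_map` and the chosen centre are illustrative.

```python
import math


def conformal_map(x, y):
    """Map (x, y) to (u, v) = (x, y) / (x^2 + y^2)."""
    r2 = x * x + y * y
    return x / r2, y / r2


# Hypothetical circle through the origin with centre (a, b).
a, b = 3.0, 4.0
radius = math.hypot(a, b)

# A few points on the circle, chosen away from the origin itself.
angles = (0.3, 1.0, 2.0, 2.8)
points = [(a + radius * math.cos(t), b + radius * math.sin(t)) for t in angles]

mapped = [conformal_map(x, y) for x, y in points]

# Every mapped point should satisfy the line equation 2*a*u + 2*b*v = 1.
residuals = [2.0 * a * u + 2.0 * b * v - 1.0 for u, v in mapped]
print(residuals)
```

Once the hits lie on straight lines, candidate points can be grouped with a linear test instead of a circle fit, which is how the mapping cuts down the combinations.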

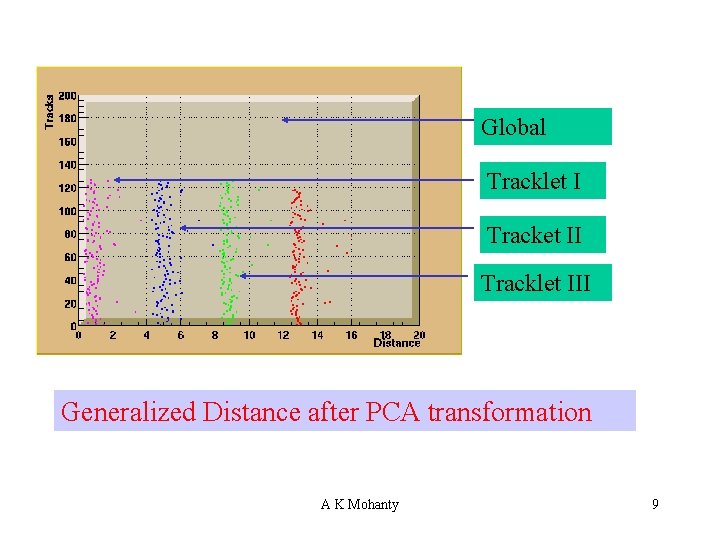

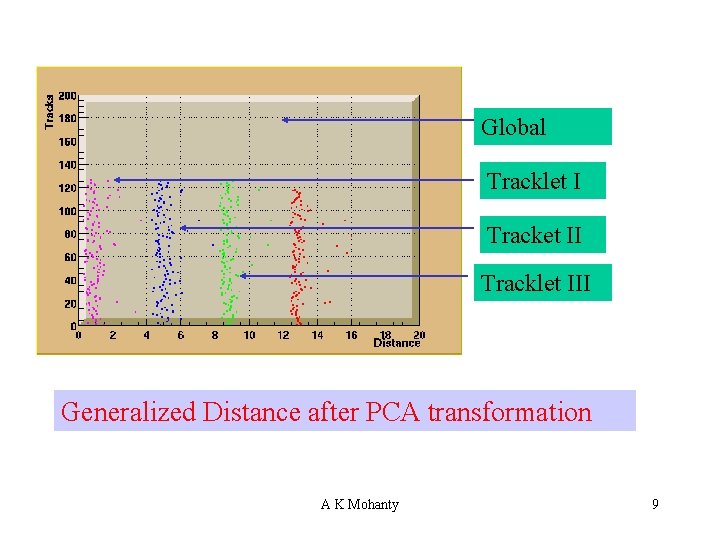

Generalized distance after PCA transformation (panels: Global, Tracklet I, Tracklet II, Tracklet III)

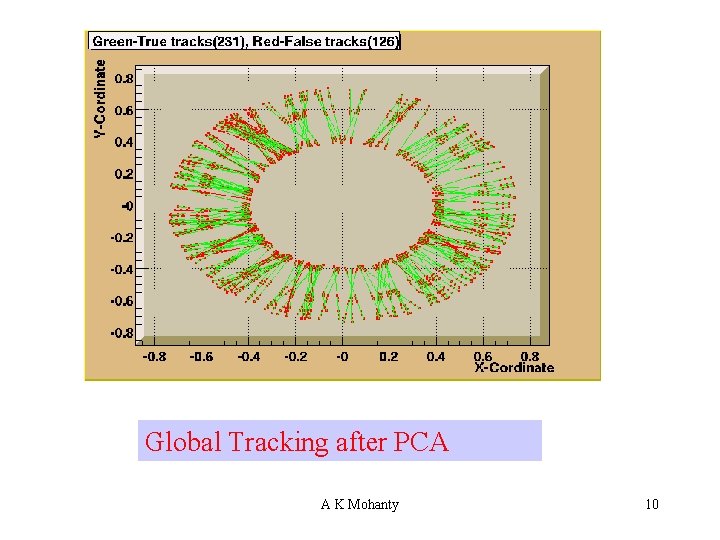

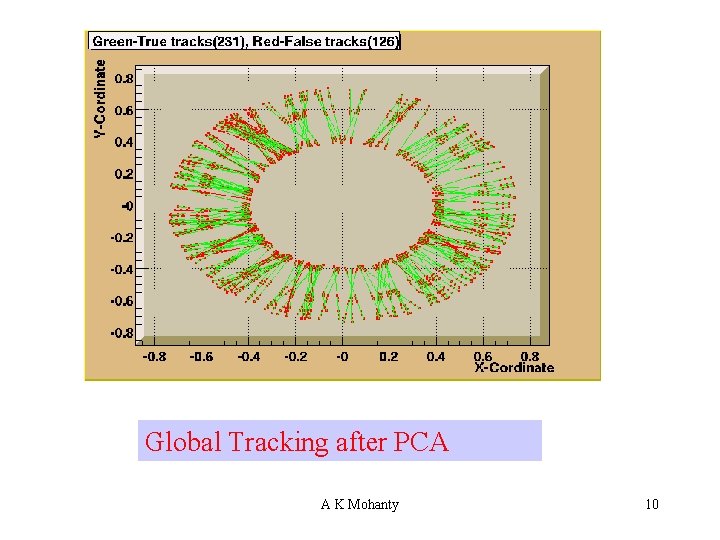

Global Tracking after PCA

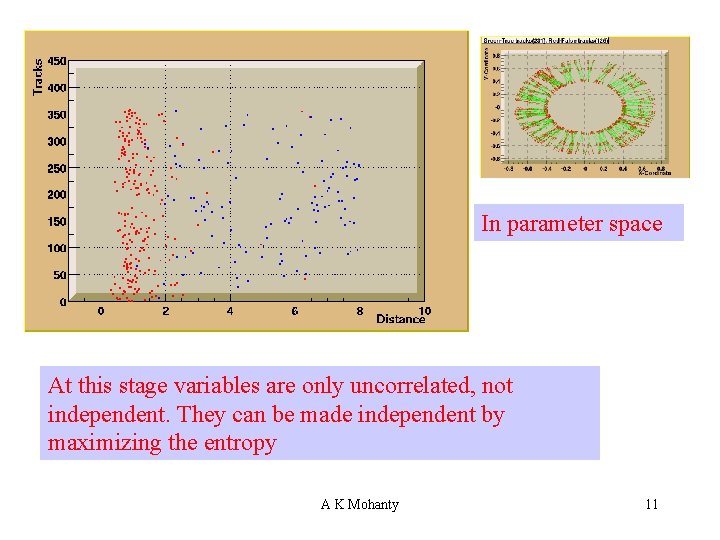

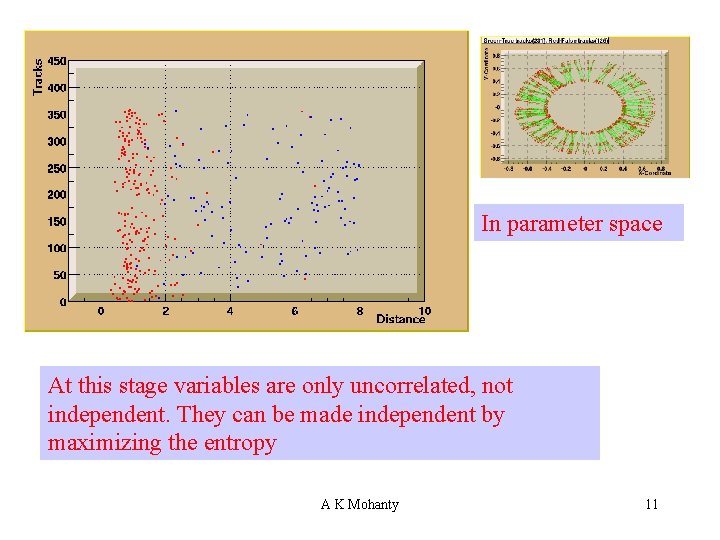

In parameter space

At this stage the variables are only uncorrelated, not independent. They can be made independent by maximizing the entropy.

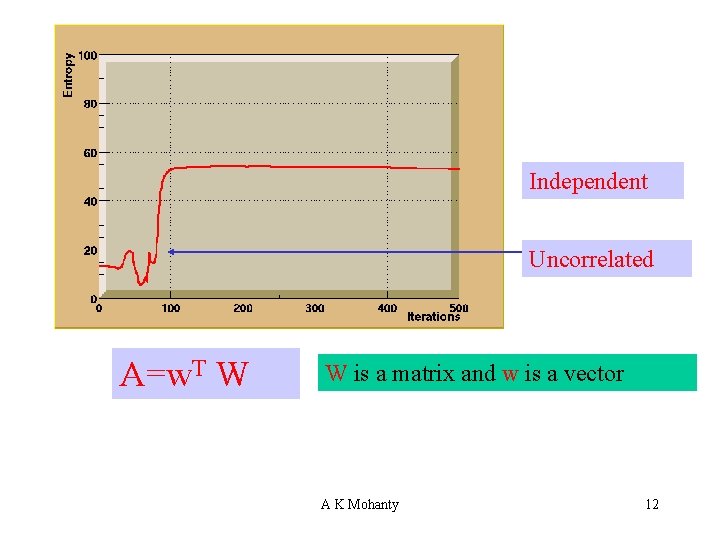

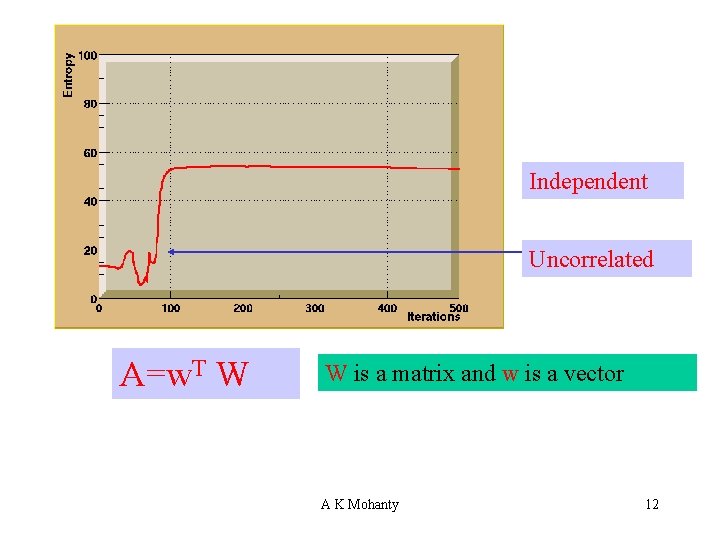

Panels: Independent, Uncorrelated

$A = w^{T} W$, where $W$ is a matrix and $w$ is a vector

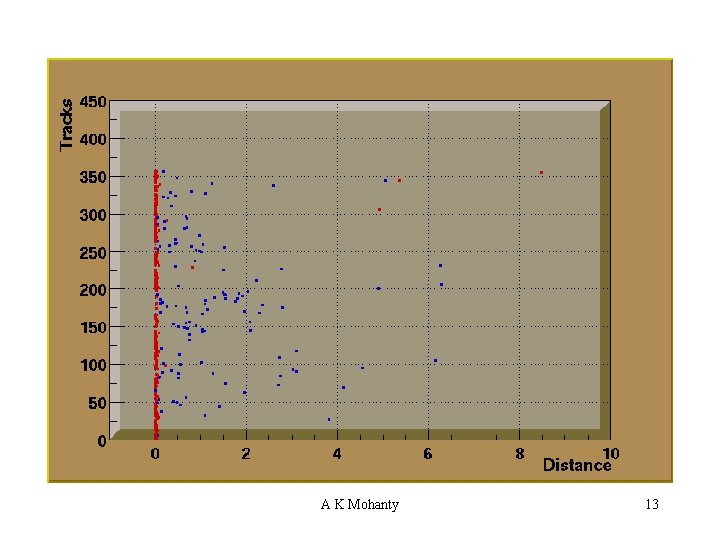

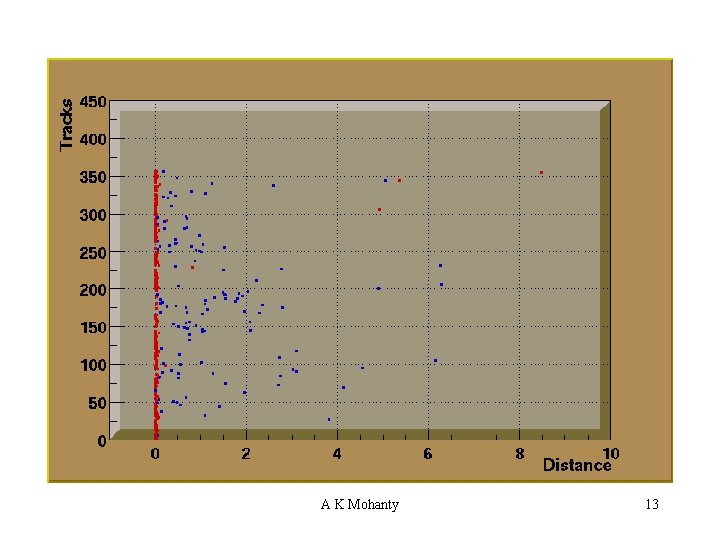

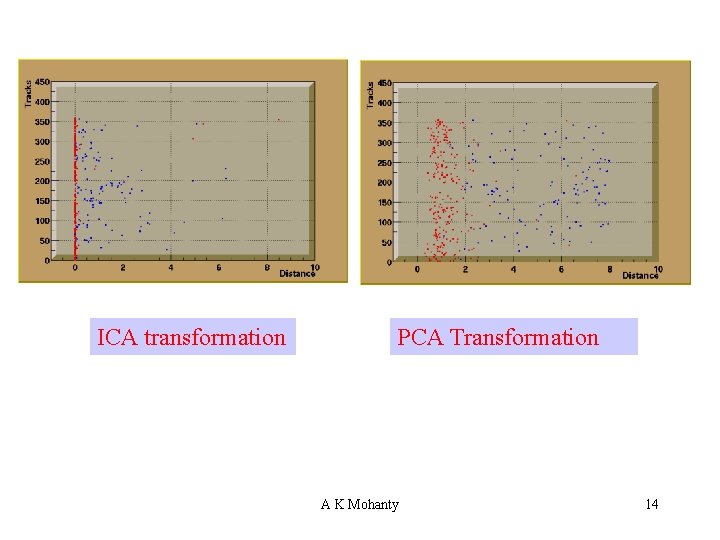

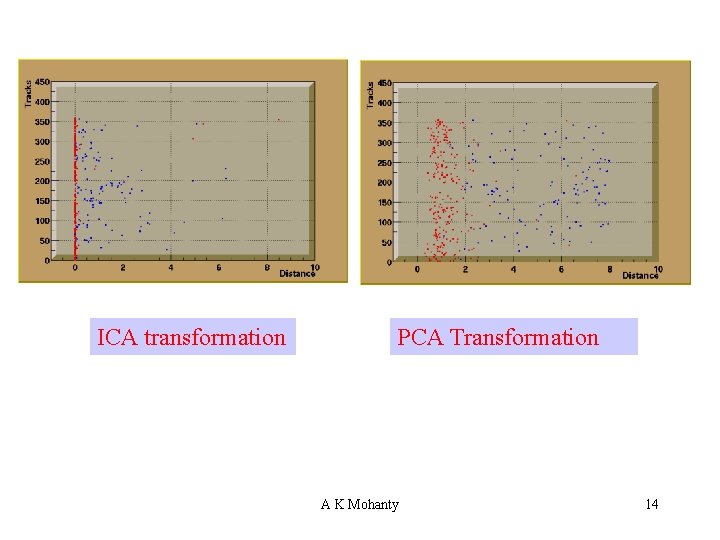

Panels: ICA transformation, PCA transformation

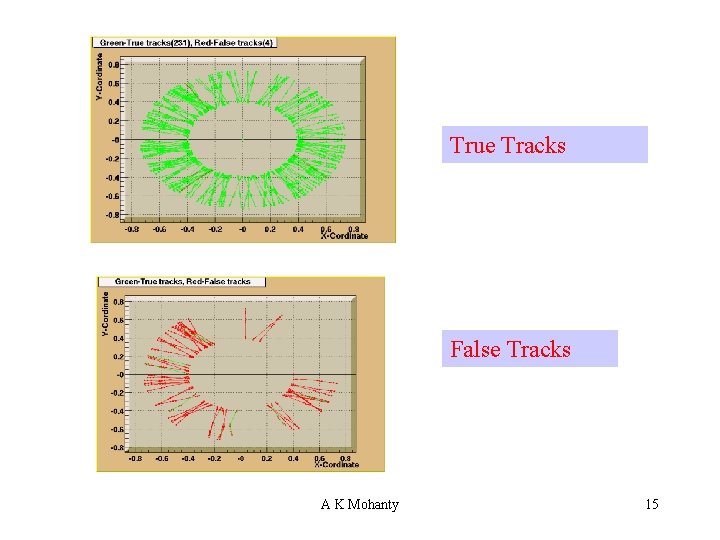

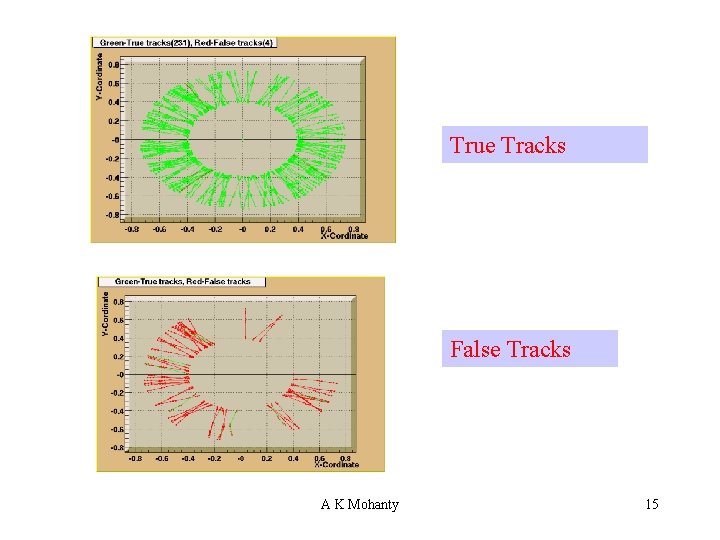

Panels: True tracks, False tracks

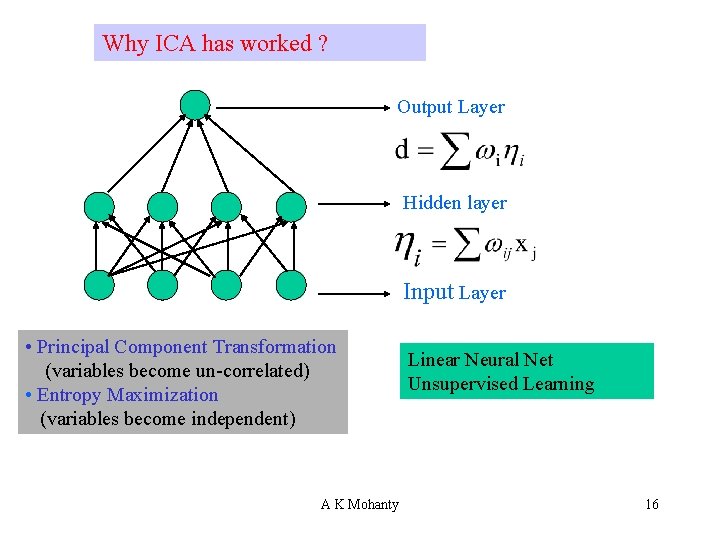

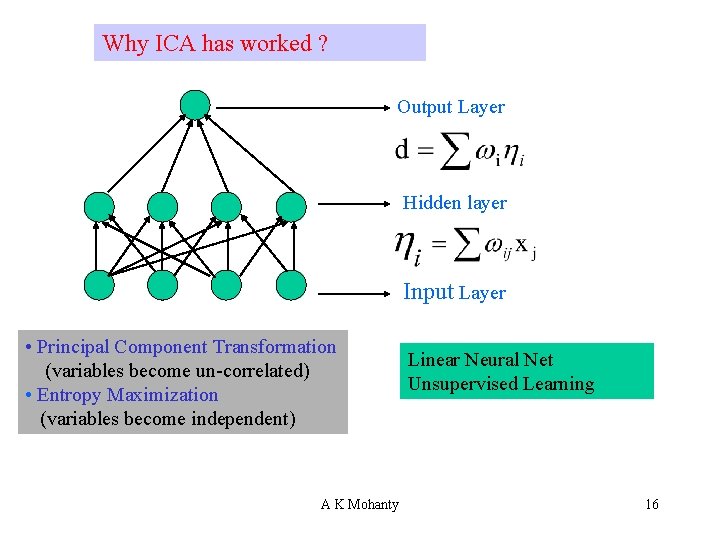

Why has ICA worked?

Linear neural net, unsupervised learning (layers: input, hidden, output)

- Principal Component Transformation (variables become uncorrelated)
- Entropy Maximization (variables become independent)

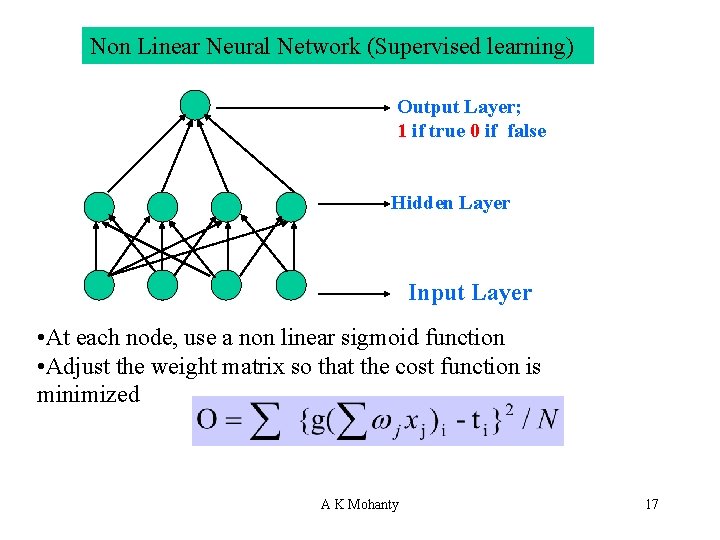

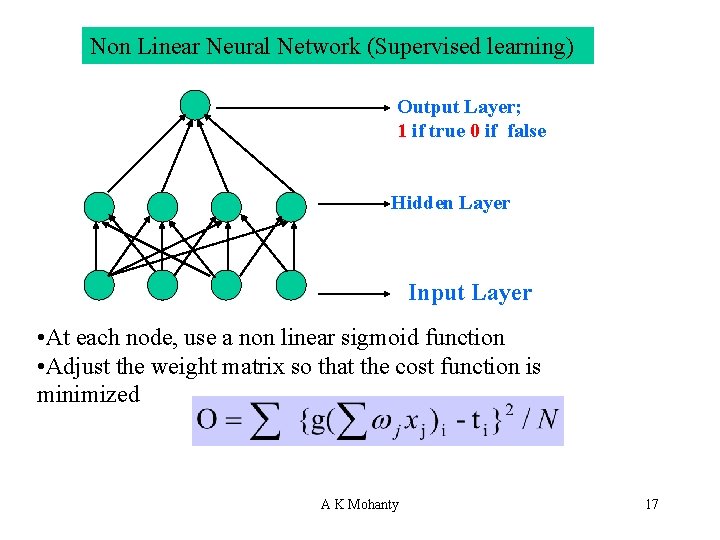

Non-Linear Neural Network (Supervised Learning)

Layers: input layer, hidden layer, output layer (1 if true, 0 if false)

- At each node, use a non-linear sigmoid function
- Adjust the weight matrix so that the cost function is minimized
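The two bullet points can be illustrated with a deliberately reduced sketch: a single sigmoid node trained by gradient descent on a mean-squared-error cost. This is not the multilayer network of the slide. The cost function, learning rate and toy data (two Gaussian clusters labelled 1 for "true" and 0 for "false") are all assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cost(weights, bias, inputs, targets):
    """Mean squared error between the sigmoid output and the 0/1 targets."""
    total = 0.0
    for x, t in zip(inputs, targets):
        out = sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)
        total += (out - t) ** 2
    return total / len(inputs)


def train_step(weights, bias, inputs, targets, rate=0.5):
    """One gradient-descent update of the weights and the bias."""
    grad_w = [0.0] * len(weights)
    grad_b = 0.0
    for x, t in zip(inputs, targets):
        out = sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)
        # Derivative of (out - t)^2 with respect to the pre-activation.
        delta = 2.0 * (out - t) * out * (1.0 - out)
        for i, v in enumerate(x):
            grad_w[i] += delta * v
        grad_b += delta
    n = len(inputs)
    new_weights = [w - rate * g / n for w, g in zip(weights, grad_w)]
    return new_weights, bias - rate * grad_b / n


random.seed(3)  # fixed seed so the sketch is reproducible
# Hypothetical toy inputs: "true" near (+1, +1), "false" near (-1, -1).
inputs, targets = [], []
for _ in range(200):
    label = random.randint(0, 1)
    centre = 1.0 if label else -1.0
    inputs.append((random.gauss(centre, 0.5), random.gauss(centre, 0.5)))
    targets.append(label)

weights, bias = [0.0, 0.0], 0.0
initial_cost = cost(weights, bias, inputs, targets)
for _ in range(200):
    weights, bias = train_step(weights, bias, inputs, targets)
final_cost = cost(weights, bias, inputs, targets)

print(f"cost before training: {initial_cost:.3f}")
print(f"cost after training:  {final_cost:.3f}")
```

A full network repeats the same update for every layer, propagating `delta` backwards through the hidden nodes.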

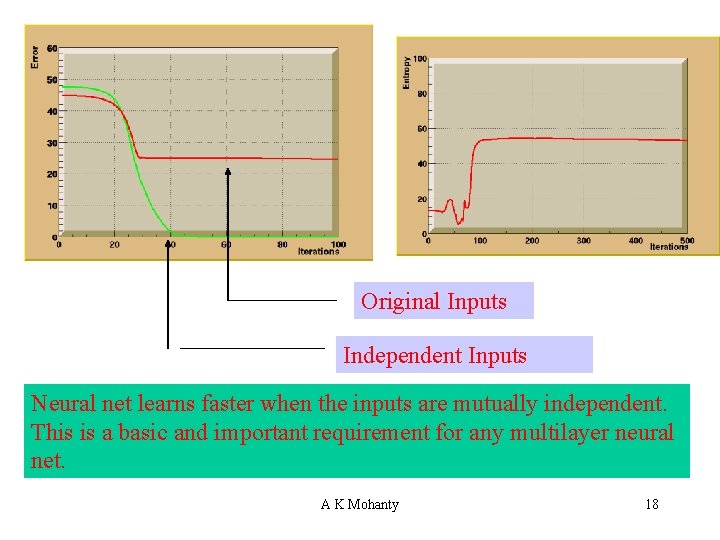

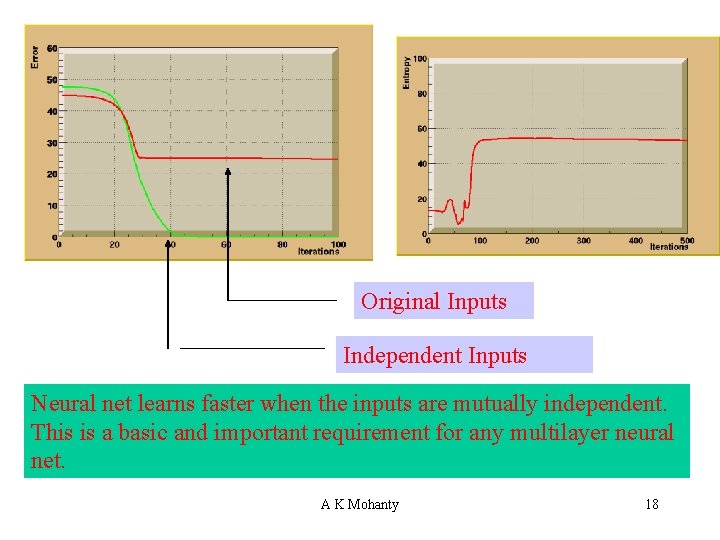

Panels: Original inputs, Independent inputs

A neural net learns faster when the inputs are mutually independent. This is a basic and important requirement for any multilayer neural net.

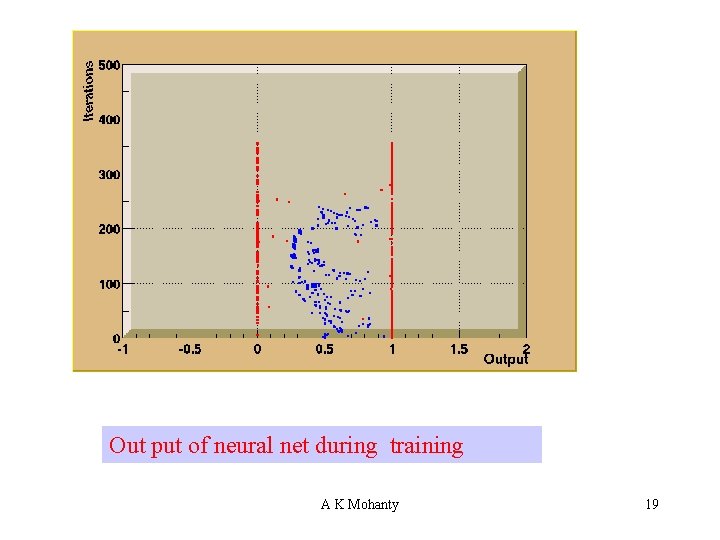

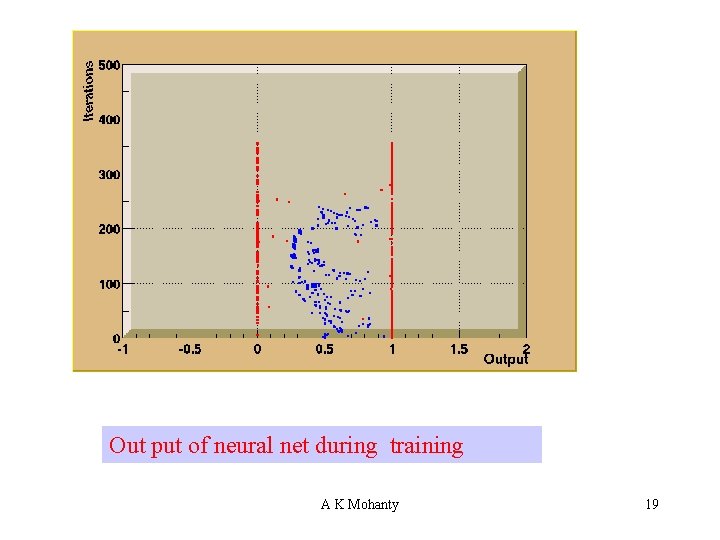

Output of the neural net during training

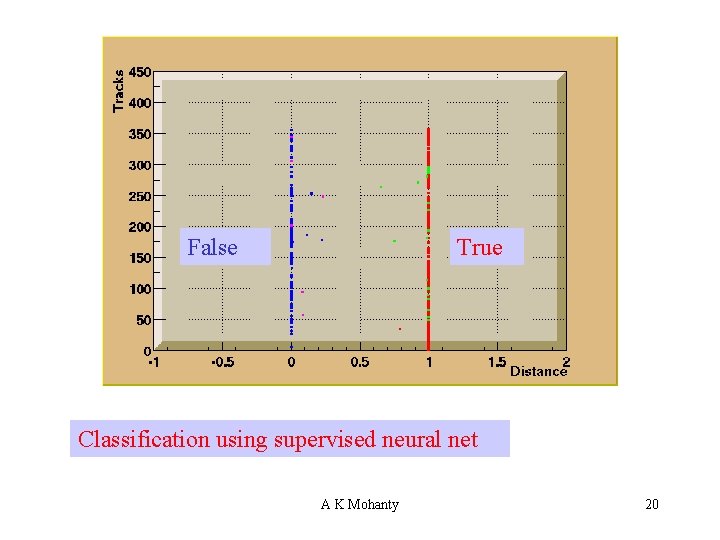

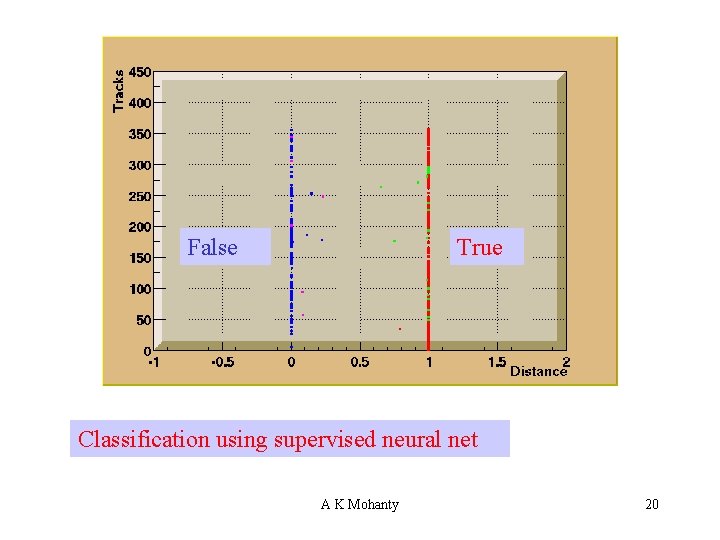

Classification using a supervised neural net (panels: False, True)

Conclusions:

a. ICA has better discriminatory features, which can extract good tracks by either eliminating or minimizing the false combinatorics, depending on the multiplicity of the events.
b. ICA, which learns in an unsupervised way, can also be used as a preprocessor for more advanced non-linear neural nets to improve their performance.