Incremental Learning with Multiple Classifier Systems using Correction Filters for Classification

- Slides: 17

Incremental Learning with Multiple Classifier Systems using Correction Filters for Classification

Authors: José del Campo Ávila, Gonzalo Ramos Jiménez, Rafael Morales Bueno

IDA 2007, Ljubljana (Slovenia)

Outline
- Introduction
- Incremental learning with Multiple Classifier Systems
  - MultiCIDIM-DS
- Correction Filters for Classification
  - MultiCIDIM-DS-CFC
- Experiments and results
- Conclusion

Introduction
- Multiple classifier systems (MCS)
  - Ensemble of basic models
  - Main requirements of the basic models
    - Accuracy
    - Diversity
  - Advantages
    - Increased expressive ability
    - Higher accuracy
- Very large datasets or data streams
  - Incremental learning
  - Concept drift
- Use of Correction Filters for Classification (CFC)
  - Independent of the MCS approach
  - Can be applied to a wide variety of MCS

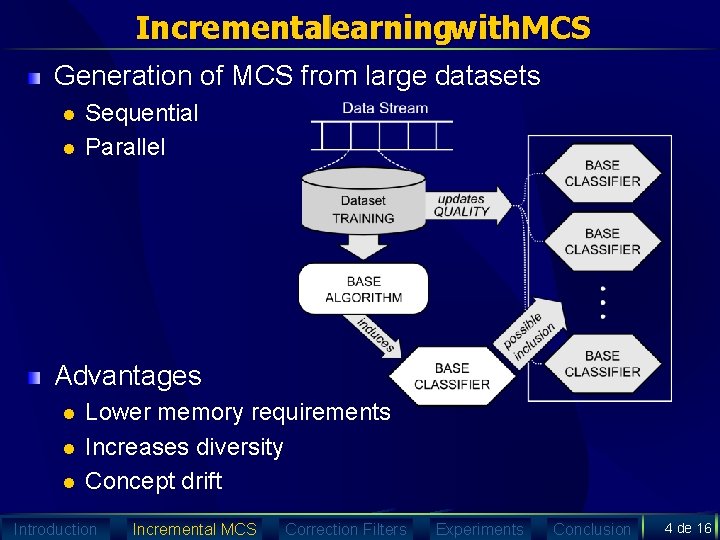

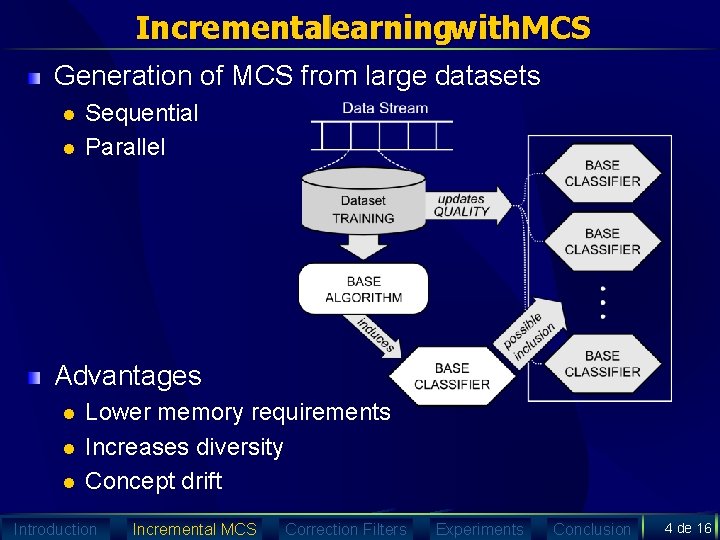

Incremental learning with MCS
- Generation of MCS from large datasets
  - Sequential
  - Parallel
- Advantages
  - Lower memory requirements
  - Increased diversity
  - Concept drift

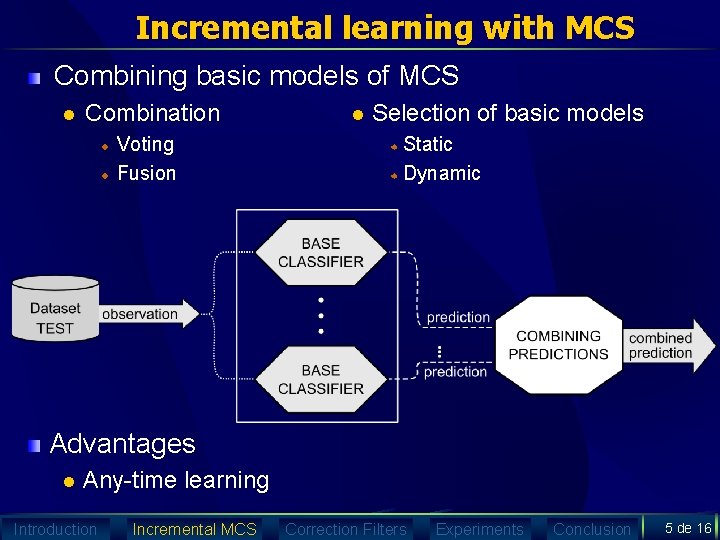

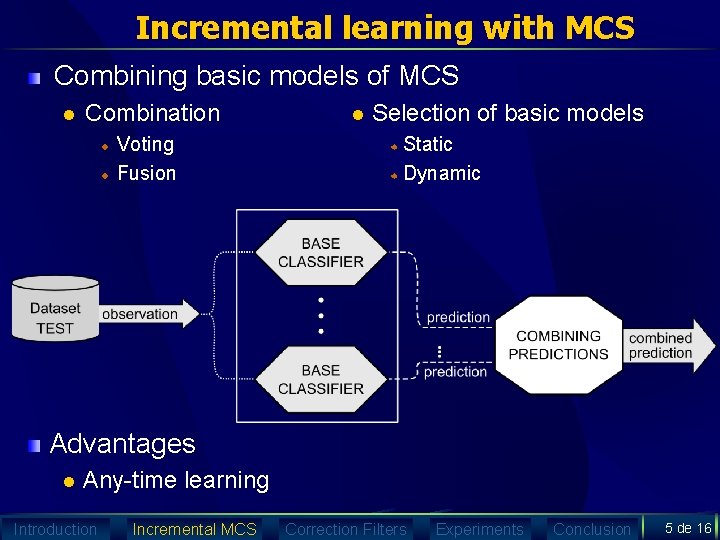
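Sequential generation from a large dataset can be sketched as follows. This is only an illustration under stated assumptions: the stream is cut into fixed-size blocks, one base classifier is trained per block, and only the current block is held in memory. The names `blocks`, `train_majority`, and `build_sequential_mcs` are hypothetical, and the majority-class learner is a trivial stand-in for a real base algorithm such as CIDIM.

```python
from collections import Counter
from typing import Callable, Iterable, Iterator, List, Sequence, Tuple

Example = Tuple[Sequence[float], str]        # (attribute vector, class label)
Classifier = Callable[[Sequence[float]], str]


def blocks(stream: Iterable[Example], block_size: int) -> Iterator[List[Example]]:
    """Group a stream of examples into consecutive fixed-size blocks."""
    block: List[Example] = []
    for example in stream:
        block.append(example)
        if len(block) == block_size:
            yield block
            block = []
    # A trailing partial block is dropped in this sketch.


def train_majority(block: List[Example]) -> Classifier:
    """Hypothetical stand-in learner: always predicts the block's majority class."""
    majority = Counter(label for _, label in block).most_common(1)[0][0]
    return lambda x: majority


def build_sequential_mcs(
    stream: Iterable[Example],
    block_size: int,
    train: Callable[[List[Example]], Classifier] = train_majority,
) -> List[Classifier]:
    """Train one base classifier per block, one block at a time."""
    ensemble: List[Classifier] = []
    for block in blocks(stream, block_size):
        ensemble.append(train(block))   # the block can be discarded afterwards
    return ensemble
```

Because each base classifier sees a different block, the models differ from one another, which is one way to read the "increased diversity" advantage listed on the slide.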

Incremental learning with MCS
- Combining basic models of MCS
  - Combination
    - Voting
    - Fusion
  - Selection of basic models
    - Static
    - Dynamic
- Advantages
  - Any-time learning

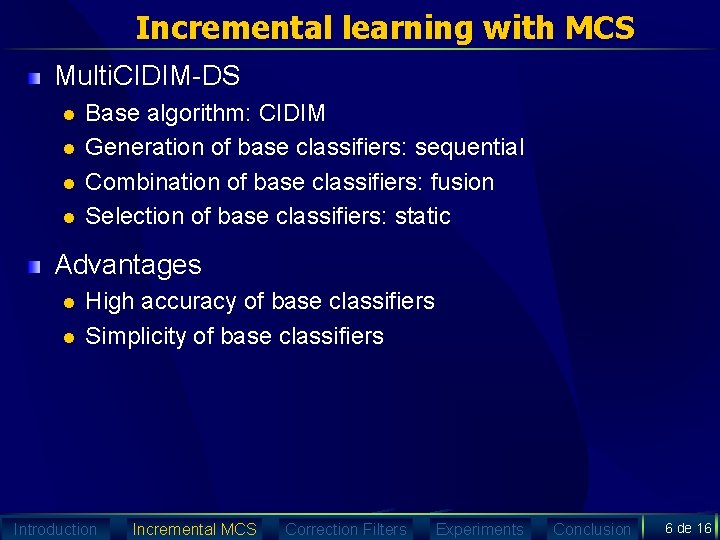

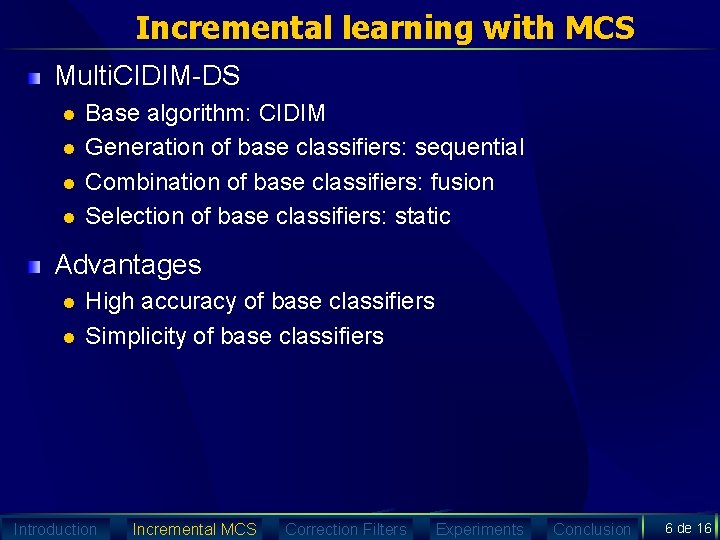
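The difference between the two combination strategies can be illustrated with a small sketch. The slide does not define fusion precisely, so the version here assumes it means a weighted sum of per-class supports followed by an argmax; the function names `majority_vote` and `fuse` are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def majority_vote(predictions: List[str]) -> str:
    """Voting: each base model casts one vote for its predicted class."""
    return Counter(predictions).most_common(1)[0][0]


def fuse(supports: List[Dict[str, float]], weights: List[float]) -> str:
    """Fusion (assumed form): weighted sum of per-class supports, then argmax."""
    total: Dict[str, float] = {}
    for support, weight in zip(supports, weights):
        for label, value in support.items():
            total[label] = total.get(label, 0.0) + weight * value
    return max(total, key=total.get)


# Three base models: two weakly prefer "a", one strongly prefers "b".
supports = [
    {"a": 0.60, "b": 0.40},
    {"a": 0.55, "b": 0.45},
    {"a": 0.05, "b": 0.95},
]
hard_labels = [max(s, key=s.get) for s in supports]   # ["a", "a", "b"]
vote_result = majority_vote(hard_labels)              # "a"
fusion_result = fuse(supports, [1.0, 1.0, 1.0])       # "b"
```

In this example voting and fusion disagree, because fusion takes into account how strongly each model supports its choice while voting only counts the labels.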

Incremental learning with MCS
- MultiCIDIM-DS
  - Base algorithm: CIDIM
  - Generation of base classifiers: sequential
  - Combination of base classifiers: fusion
  - Selection of base classifiers: static
- Advantages
  - High accuracy of base classifiers
  - Simplicity of base classifiers

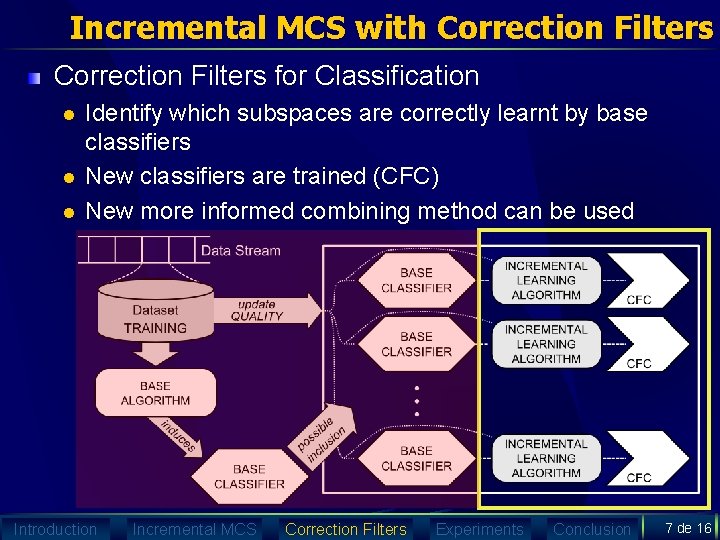

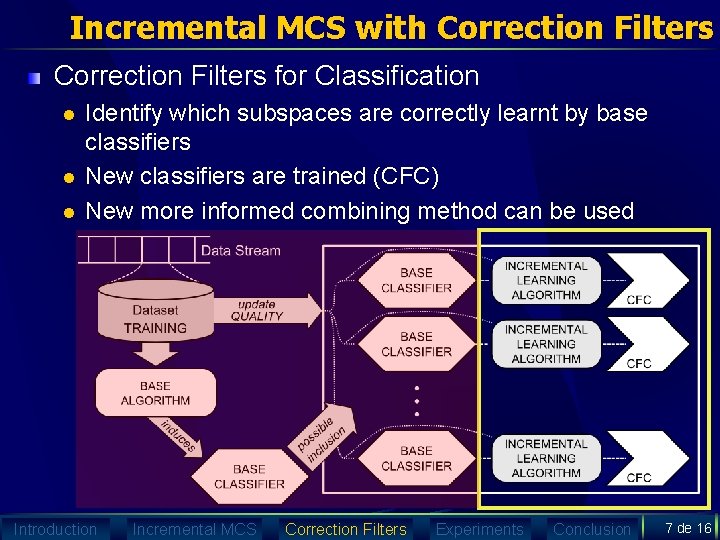

Incremental MCS with Correction Filters for Classification
- Identify which subspaces are correctly learnt by the base classifiers
- New classifiers are trained (CFC)
- A new, more informed combining method can be used

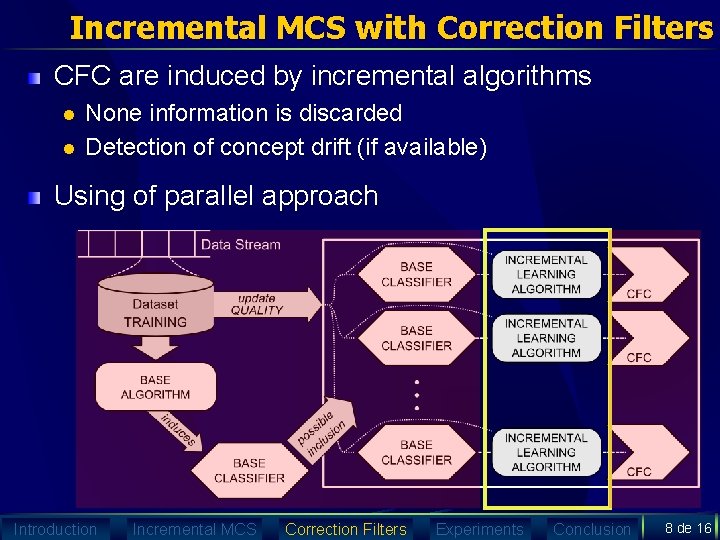

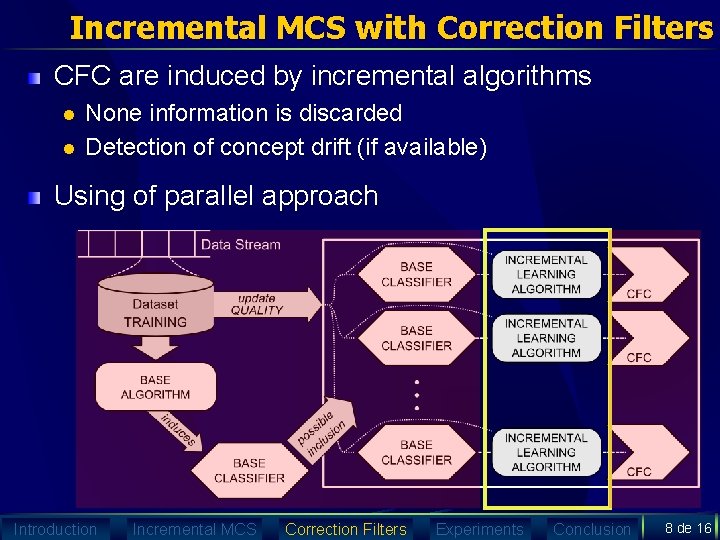

Incremental MCS with Correction Filters
- CFC are induced by incremental algorithms
  - No information is discarded
  - Detection of concept drift (if available)
- Use of a parallel approach

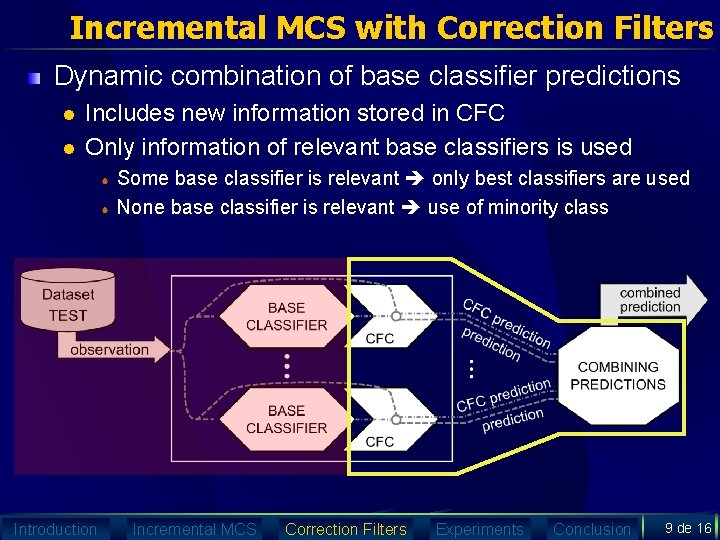

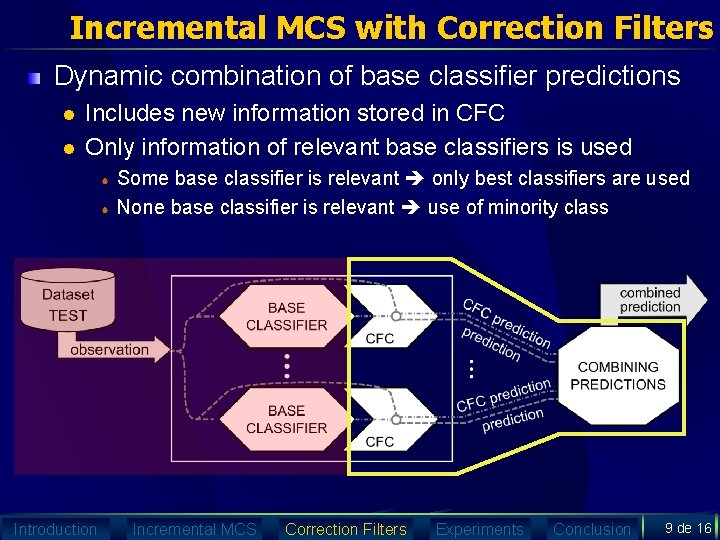
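One way to picture a correction filter is as a second, incremental learner attached to a base classifier that records in which regions of the attribute space that classifier has been right. The sketch below is only an illustration: `CorrectionFilter`, its `region` function, and the 0.5 threshold are hypothetical, and simple hit/miss counters stand in for a real incremental algorithm.

```python
from collections import defaultdict
from typing import Callable, Hashable, Sequence

Classifier = Callable[[Sequence[float]], str]


class CorrectionFilter:
    """Incrementally learns where one base classifier tends to be correct.

    Hypothetical stand-in: keeps hit/miss counts per region instead of
    inducing an incremental decision tree.
    """

    def __init__(self, base: Classifier,
                 region: Callable[[Sequence[float]], Hashable]) -> None:
        self.base = base
        self.region = region            # maps an attribute vector to a region id
        self.hits = defaultdict(int)    # correct base predictions per region
        self.total = defaultdict(int)   # examples seen per region

    def update(self, x: Sequence[float], label: str) -> None:
        """Process one labelled example; only the counters are kept."""
        key = self.region(x)
        self.total[key] += 1
        if self.base(x) == label:
            self.hits[key] += 1

    def is_relevant(self, x: Sequence[float], threshold: float = 0.5) -> bool:
        """True if the base classifier was mostly correct in x's region so far."""
        key = self.region(x)
        seen = self.total.get(key, 0)
        if seen == 0:
            return False                # no evidence yet for this region
        return self.hits.get(key, 0) / seen > threshold
```

Every example updates the counters exactly once and is then dropped, so the filter keeps learning from the whole stream without storing it.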

Incremental MCS with Correction Filters
- Dynamic combination of base classifier predictions
  - Includes the new information stored in the CFC
  - Only information from relevant base classifiers is used
    - If some base classifier is relevant: only the best classifiers are used
    - If no base classifier is relevant: the minority class is used

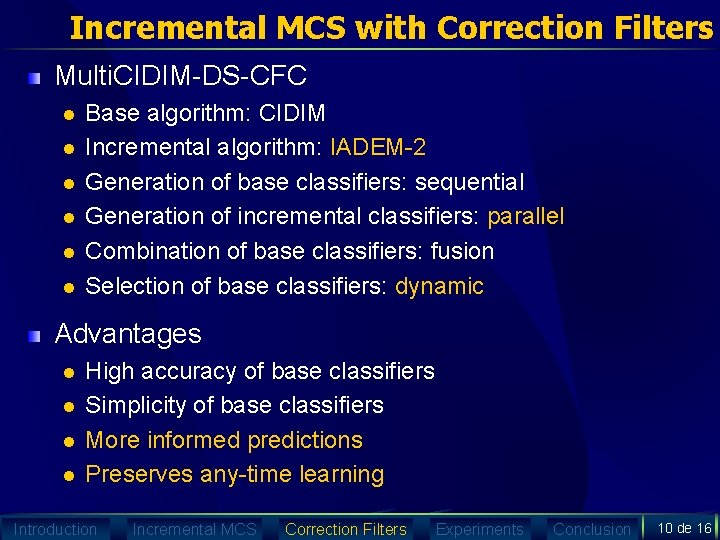
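The dynamic combination rule can be sketched as below. This is one possible reading, not the authors' exact procedure: "only the best classifiers are used" is approximated by a majority vote among the classifiers flagged as relevant, and "minority class" is assumed to mean the class that received the fewest votes from the base classifiers. The name `dynamic_predict` and the pairing of each classifier with a relevance function are hypothetical.

```python
from collections import Counter
from typing import Callable, List, Sequence, Tuple

Classifier = Callable[[Sequence[float]], str]
Relevance = Callable[[Sequence[float]], bool]


def dynamic_predict(
    x: Sequence[float],
    members: List[Tuple[Classifier, Relevance]],
    classes: List[str],
) -> str:
    """Combine base predictions using only the classifiers relevant for x."""
    votes = [(clf(x), relevant(x)) for clf, relevant in members]

    # Case 1: at least one base classifier is relevant for this example.
    trusted = Counter(label for label, ok in votes if ok)
    if trusted:
        return trusted.most_common(1)[0][0]

    # Case 2: no base classifier is relevant. Assumed reading of
    # "minority class": pick the class with the fewest base votes.
    all_votes = Counter(label for label, _ in votes)
    return min(classes, key=lambda c: all_votes[c])


members = [
    (lambda x: "a", lambda x: x[0] > 0),   # trusted only for positive inputs
    (lambda x: "b", lambda x: False),      # never trusted
    (lambda x: "a", lambda x: False),      # never trusted
]
with_relevant = dynamic_predict((1.0,), members, ["a", "b", "c"])
without_relevant = dynamic_predict((-1.0,), members, ["a", "b", "c"])
```

In the second call no classifier is trusted, so the rule deliberately moves away from the classes the untrusted classifiers voted for.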

Incremental MCS with Correction Filters
- MultiCIDIM-DS-CFC
  - Base algorithm: CIDIM
  - Incremental algorithm: IADEM-2
  - Generation of base classifiers: sequential
  - Generation of incremental classifiers: parallel
  - Combination of base classifiers: fusion
  - Selection of base classifiers: dynamic
- Advantages
  - High accuracy of base classifiers
  - Simplicity of base classifiers
  - More informed predictions
  - Preserves any-time learning

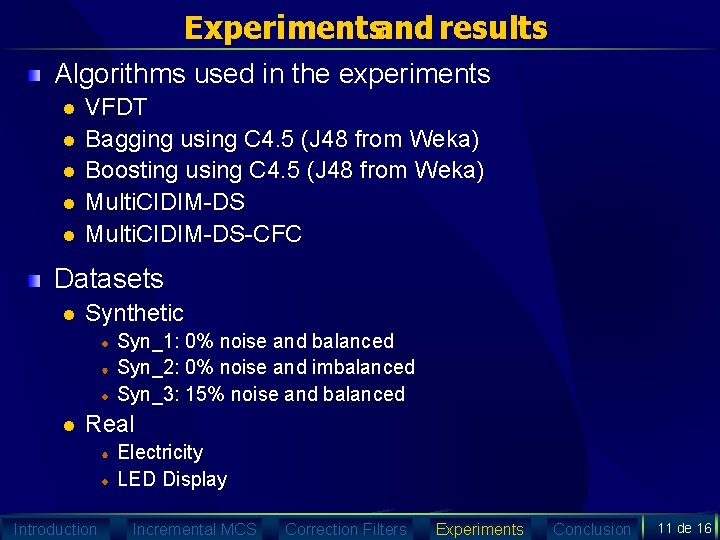

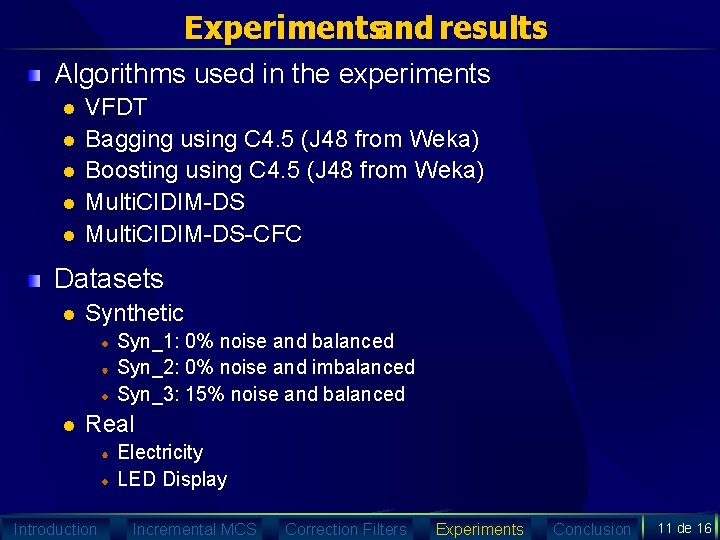

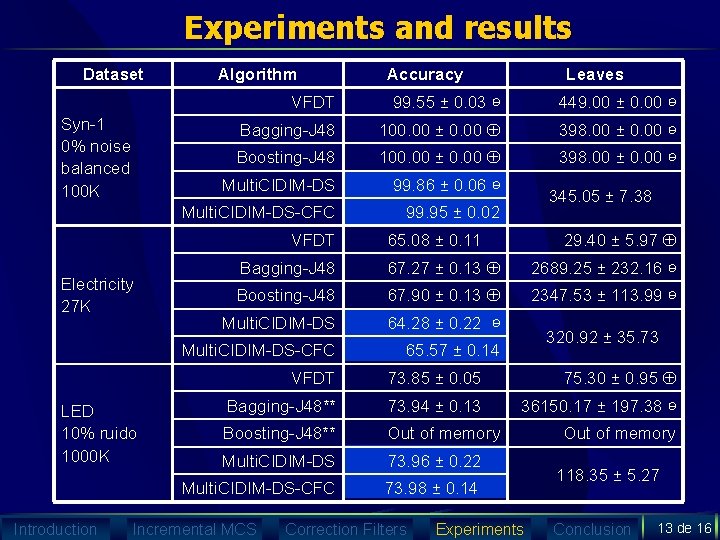

Experiments and results
- Algorithms used in the experiments
  - VFDT
  - Bagging using C4.5 (J48 from Weka)
  - Boosting using C4.5 (J48 from Weka)
  - MultiCIDIM-DS-CFC
- Datasets
  - Synthetic
    - Syn_1: 0% noise and balanced
    - Syn_2: 0% noise and imbalanced
    - Syn_3: 15% noise and balanced
  - Real
    - Electricity
    - LED Display

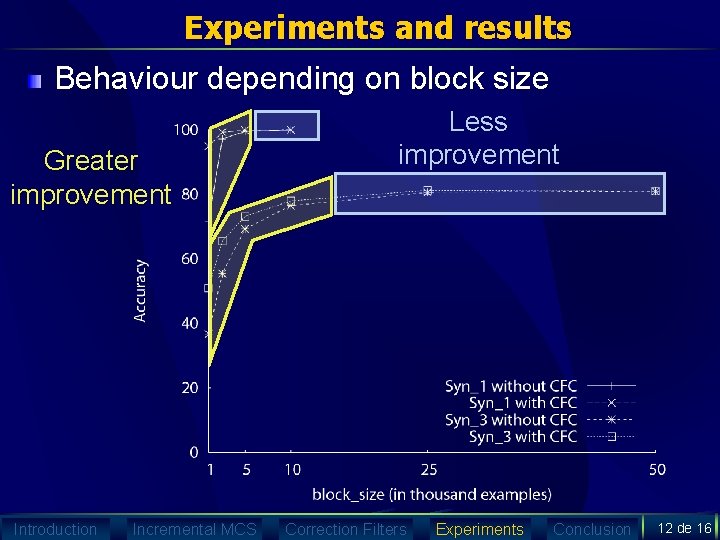

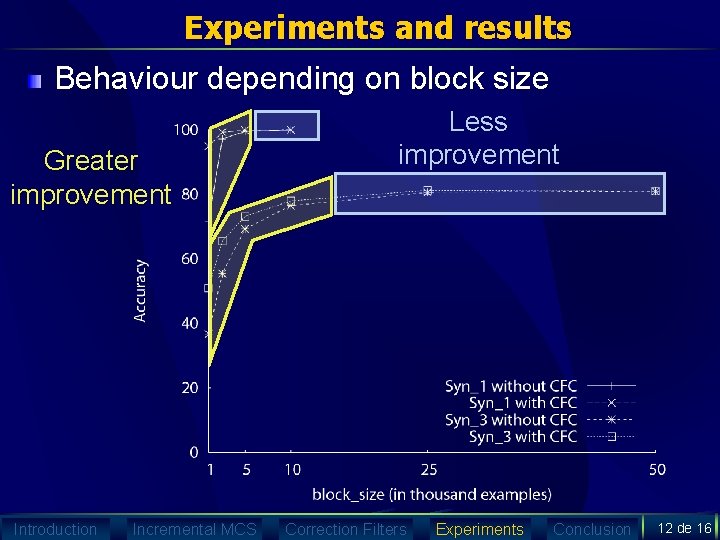

Experiments and results
- Behaviour depending on block size
  - Figure labels: "Greater improvement" and "Less improvement"

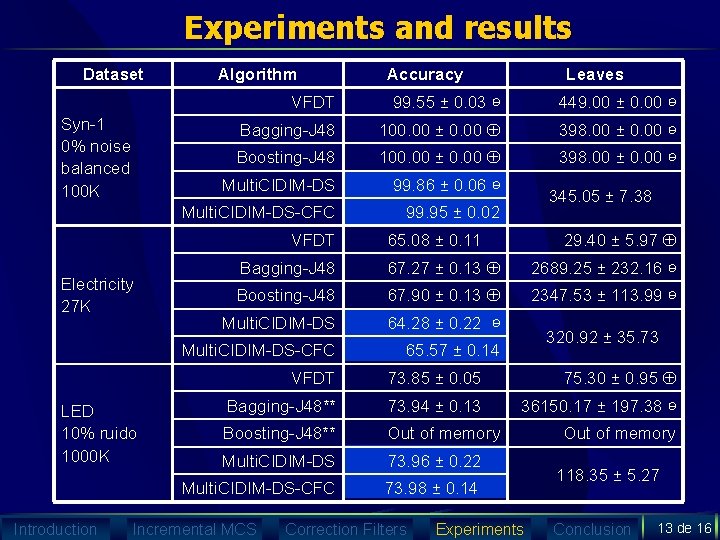

Experiments and results

| Dataset | Algorithm | Accuracy | Leaves |
|---|---|---|---|
| Syn-1 (0% noise, balanced, 100 K) | VFDT | 99.55 ± 0.03 ⊖ | 449.00 ± 0.00 ⊖ |
| | Bagging-J48 | 100.00 ± 0.00 | 398.00 ± 0.00 ⊖ |
| | Boosting-J48 | 100.00 ± 0.00 | 398.00 ± 0.00 ⊖ |
| | MultiCIDIM-DS | 99.86 ± 0.06 ⊖ | |
| | MultiCIDIM-DS-CFC | 99.95 ± 0.02 | 345.05 ± 7.38 |
| Electricity (27 K) | VFDT | 65.08 ± 0.11 | 29.40 ± 5.97 |
| | Bagging-J48 | 67.27 ± 0.13 | 2689.25 ± 232.16 ⊖ |
| | Boosting-J48 | 67.90 ± 0.13 | 2347.53 ± 113.99 ⊖ |
| | MultiCIDIM-DS | 64.28 ± 0.22 ⊖ | |
| | MultiCIDIM-DS-CFC | 65.57 ± 0.14 | 320.92 ± 35.73 |
| LED (10% noise, 1000 K) | VFDT | 73.85 ± 0.05 | 75.30 ± 0.95 |
| | Bagging-J48 \*\* | 73.94 ± 0.13 | 36150.17 ± 197.38 ⊖ |
| | Boosting-J48 \*\* | Out of memory | Out of memory |
| | MultiCIDIM-DS | 73.96 ± 0.22 | |
| | MultiCIDIM-DS-CFC | 73.98 ± 0.14 | 118.35 ± 5.27 |

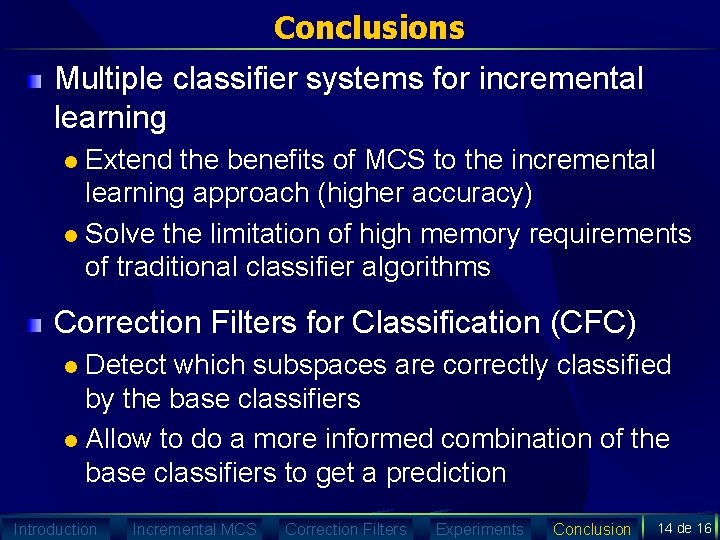

Conclusions
- Multiple classifier systems for incremental learning
  - Extend the benefits of MCS (higher accuracy) to the incremental learning approach
  - Overcome the high memory requirements of traditional classification algorithms
- Correction Filters for Classification (CFC)
  - Detect which subspaces are correctly classified by the base classifiers
  - Allow a more informed combination of the base classifiers when making a prediction

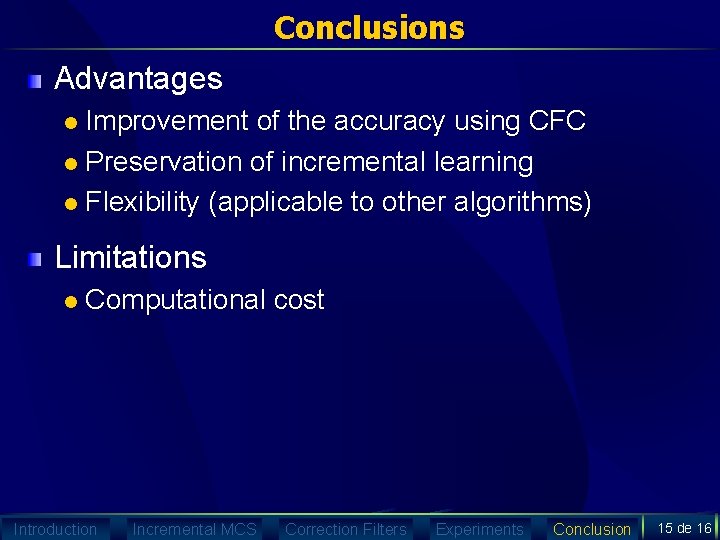

Conclusions
- Advantages
  - Improved accuracy using CFC
  - Preservation of incremental learning
  - Flexibility (applicable to other algorithms)
- Limitations
  - Computational cost

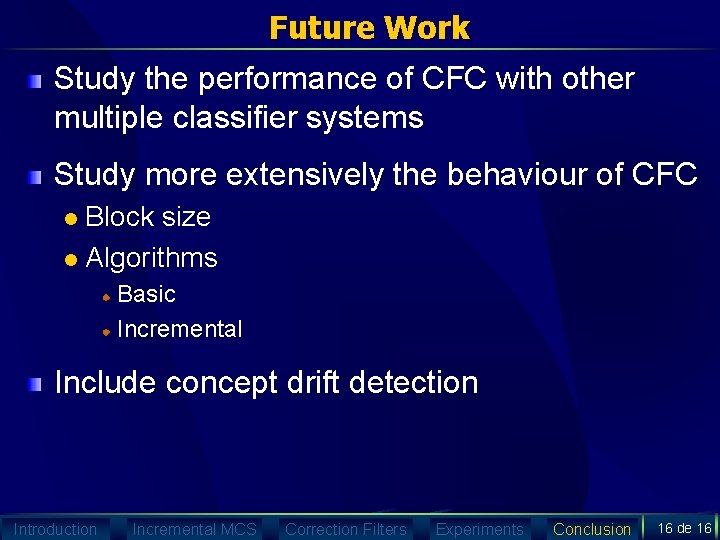

Future Work
- Study the performance of CFC with other multiple classifier systems
- Study the behaviour of CFC more extensively
  - Block size
  - Algorithms
    - Basic
    - Incremental
- Include concept drift detection
