Incremental Coevolution With Competitive and Cooperative Tasks in a Multirobot Environment

- Slides: 19

Incremental Coevolution With Competitive and Cooperative Tasks in a Multirobot Environment
Eiji Uchibe, Okinawa Institute of Science
Minoru Asada, Osaka University
Proceedings of the IEEE, July 2006
Presented by: Dan DeBlasio
For: CAP 6671, Spring 2008
8 April 2008

Coevolution
- Two (or more) separate populations
- The populations are evolved separately
- Creates an “arms race”

Competitive v. Cooperative
- Competitive: agents from each population compete against each other to gain fitness
  - Usually only one agent is being evaluated at a time
- Cooperative: agents from multiple populations work together to solve a problem
  - The team is evaluated as a whole, not each agent individually
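The two evaluation styles can be contrasted in a minimal sketch. The functions `play` and `play_team` are hypothetical stand-ins for a simulated game, and averaging over opponents is an assumption, not something the slides specify.

```python
from typing import Callable, List, Sequence, TypeVar

A = TypeVar("A")
B = TypeVar("B")


def competitive_fitness(candidate: A, opponents: Sequence[B],
                        play: Callable[[A, B], float]) -> float:
    """Competitive: evaluate one candidate at a time by its mean score
    against opponents drawn from the other population (assumed averaging)."""
    if not opponents:
        raise ValueError("need at least one opponent")
    return sum(play(candidate, opp) for opp in opponents) / len(opponents)


def cooperative_fitness(team: Sequence[A],
                        play_team: Callable[[Sequence[A]], float]) -> List[float]:
    """Cooperative: score the team as a whole and give every member
    the same value, rather than crediting agents individually."""
    score = play_team(team)
    return [score] * len(team)
```

For example, with numbers standing in for agents, `competitive_fitness(5, [1, 3], lambda a, b: a - b)` averages the two game scores, while `cooperative_fitness([1, 2, 3], sum)` hands the single team score to all three members.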

RoboCup
- Special because it needs both cooperative and competitive components
  - Groups of agents need to work together as a team (cooperative)
  - The team needs to defeat the opposing team (competitive)

Paper v. My Work
- Paper: presented for a small league team
  - 3 agents per team
- My work: done in the simulation league
  - Up to 11 players per team

Motivation
- Fitness evaluation is a major issue
- Even with two populations of 100 agents, accurately evaluating each player in population A would require it to play every agent in population B
- That would be 100 × 100 = 10,000 simulated games per generation

How do we reduce the number of games per generation without degrading the results of our fitness evaluation?

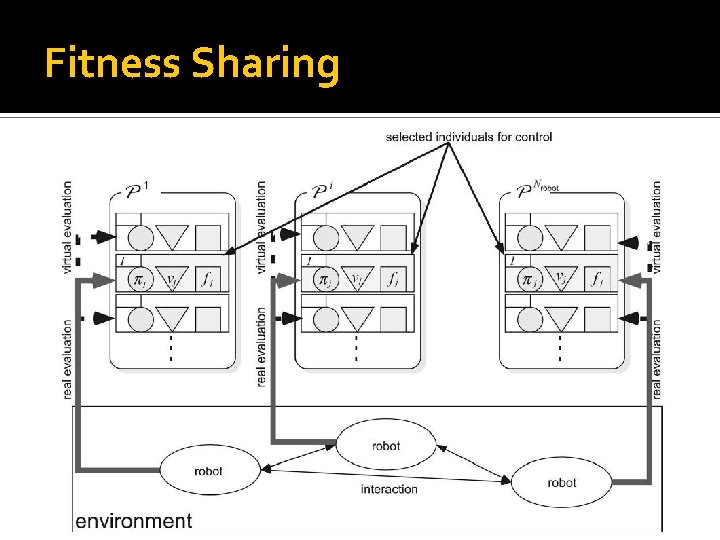

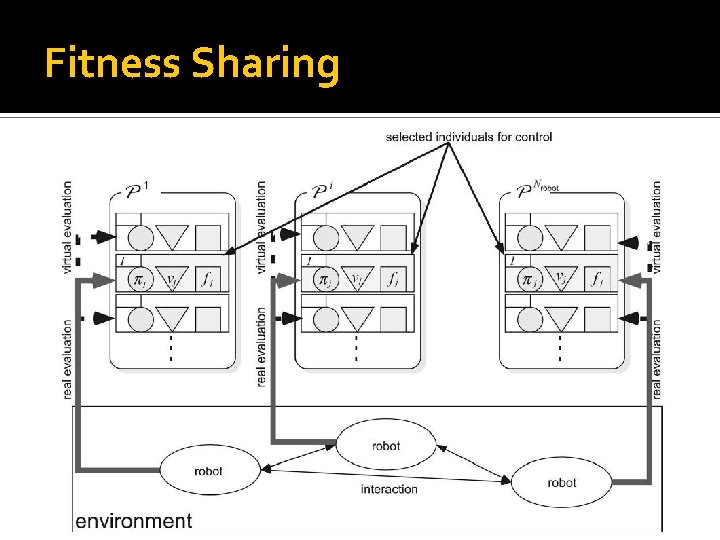

Fitness Sharing
- At each iteration, agents are selected from each population to actually control the players
- After the selected agents are evaluated, the system updates the fitness value of each agent in the population using its similarity to the agent that was selected


Fitness Sharing
- Each individual in the population has:
  - π: policy (brain)
  - v: (previous) performance value
  - f: fitness

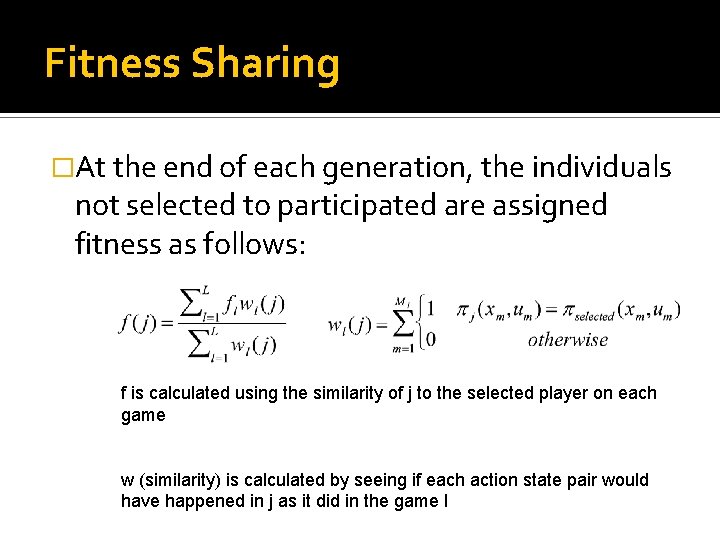

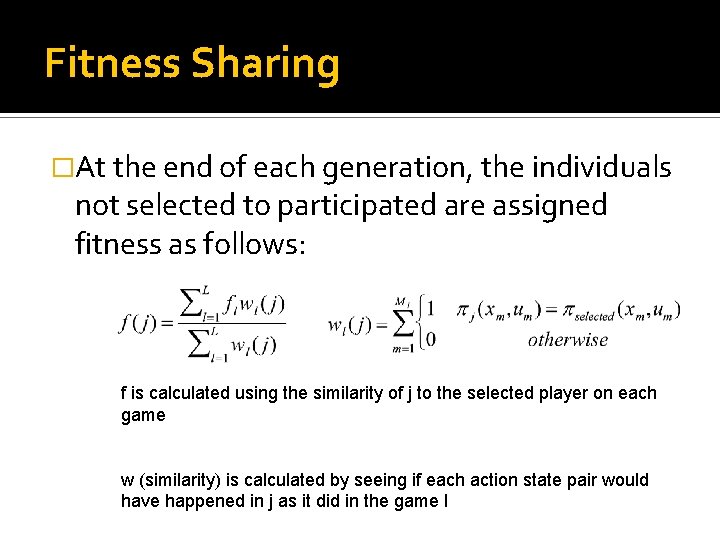

Fitness Sharing
- At the end of each generation, the individuals not selected to participate are assigned fitness as follows:
  - f is calculated using the similarity of individual j to the selected player in each game
  - w (similarity) is calculated by checking, for each state-action pair recorded in game l, whether individual j would have taken the same action in that state

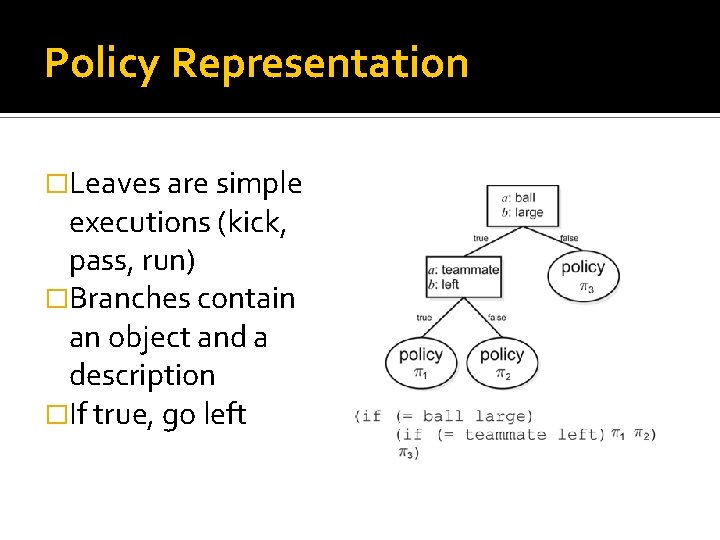

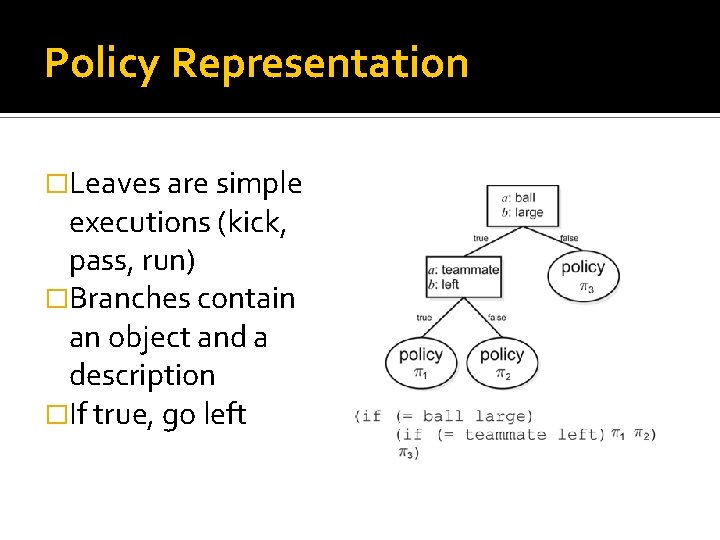
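The slide's own equations for f and w are given as images, so the sketch below only illustrates the idea under stated assumptions: w is taken to be the fraction of recorded state-action pairs in game l that individual j's policy would reproduce, and f is taken to be the similarity-weighted average of the selected players' performances. The names `Individual`, `GameRecord`, `similarity`, and `share_fitness` are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Hashable, List, Tuple

State = Hashable
Action = str


@dataclass
class Individual:
    policy: Callable[[State], Action]  # pi: maps a state to an action
    v: float = 0.0                     # previous performance value
    f: float = 0.0                     # fitness


@dataclass
class GameRecord:
    trajectory: List[Tuple[State, Action]]  # selected player's state-action pairs in game l
    performance: float                      # how well the selected player did in game l


def similarity(ind: Individual, game: GameRecord) -> float:
    """Assumed w: fraction of recorded pairs where ind would act the same way."""
    if not game.trajectory:
        return 0.0
    matches = sum(1 for s, a in game.trajectory if ind.policy(s) == a)
    return matches / len(game.trajectory)


def share_fitness(unselected: List[Individual], games: List[GameRecord]) -> None:
    """Assign f to individuals that did not play, as a similarity-weighted
    average of the performances observed in the played games (assumed form)."""
    for ind in unselected:
        weights = [similarity(ind, g) for g in games]
        total = sum(weights)
        if total > 0:
            ind.f = sum(w * g.performance for w, g in zip(weights, games)) / total
        else:
            ind.f = 0.0  # behaves unlike every selected player: no shared credit
```

The point of the design is that only the selected individuals cost a simulated game; everyone else is scored offline by replaying the recorded states through their own policy.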

Policy Representation
- Leaves are simple actions (kick, pass, run)
- Branches contain an object and a description
  - If the description holds for the object, go left; otherwise, go right
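A policy tree of this shape can be sketched as follows. The object and description names (`"ball"`, `"near"`, `"goal"`, `"visible"`) and the encoding of a state as a dictionary of `(object, description)` test results are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Tuple, Union

# Hypothetical state encoding: which (object, description) tests are currently true.
State = Dict[Tuple[str, str], bool]


@dataclass
class Leaf:
    action: str  # a simple action such as "kick", "pass", or "run"


@dataclass
class Branch:
    obj: str          # the object the test refers to, e.g. "ball"
    description: str  # the description tested for that object, e.g. "near"
    left: "Node"      # followed when the test is true
    right: "Node"     # followed when the test is false


Node = Union[Leaf, Branch]


def decide(node: Node, state: State) -> str:
    """Walk from the root to a leaf and return that leaf's action."""
    while isinstance(node, Branch):
        holds = state.get((node.obj, node.description), False)
        node = node.left if holds else node.right
    return node.action


tree = Branch("ball", "near",
              Branch("goal", "visible", Leaf("kick"), Leaf("pass")),
              Leaf("run"))
```

With this tree, a robot near the ball with the goal visible kicks, a robot near the ball without a view of the goal passes, and a robot far from the ball runs.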

Genetic Manipulation
- Basic genetic programming (GP) operators are used
  - Crossover
    - Select one point in each parent's tree and swap the subtrees
  - Mutation
    - Change a random action, object, or description in the tree
    - Add a new branch

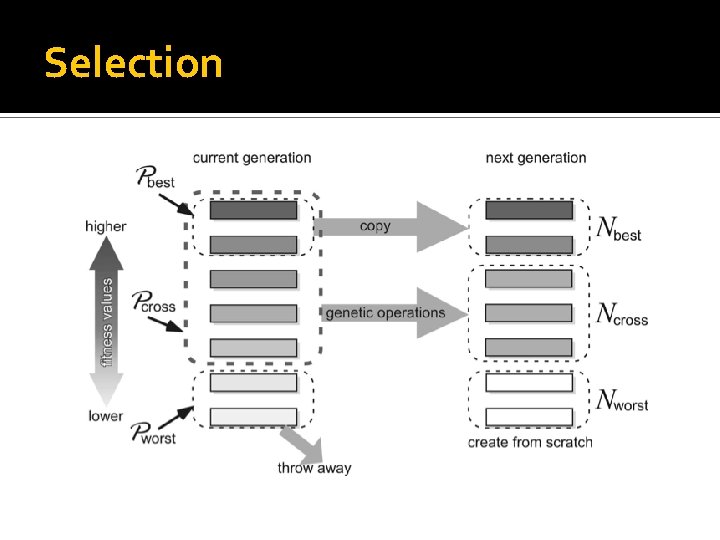

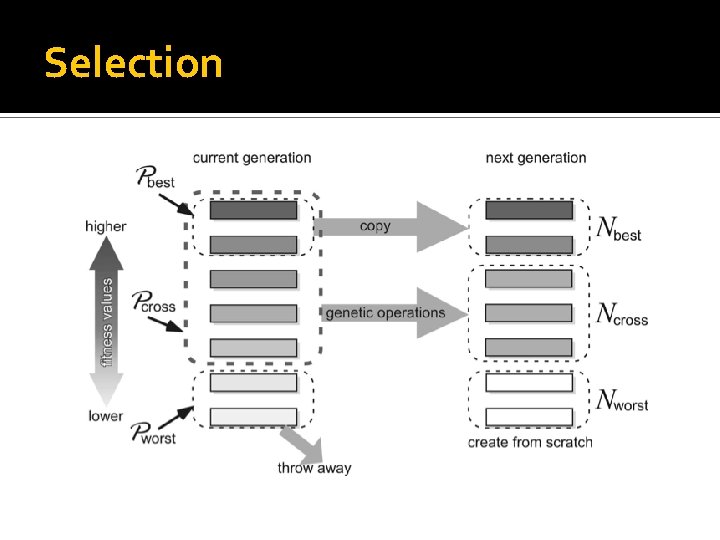
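The crossover and mutation operators above can be sketched on the same kind of tree. The object and description vocabularies, the 50/50 split between point mutation and branch growth, and the helper names `nodes` and `replace` are all assumptions made for illustration; the operators work on copies so the parents stay intact.

```python
import copy
import random
from dataclasses import dataclass
from typing import List, Tuple, Union

ACTIONS = ["kick", "pass", "run"]            # leaf actions named on the slide
OBJECTS = ["ball", "goal", "teammate"]       # hypothetical object set
DESCRIPTIONS = ["near", "visible", "front"]  # hypothetical description set


@dataclass
class Leaf:
    action: str


@dataclass
class Branch:
    obj: str
    description: str
    left: "Node"
    right: "Node"


Node = Union[Leaf, Branch]


def nodes(tree: Node) -> List[Node]:
    """All nodes of the tree in pre-order."""
    out: List[Node] = [tree]
    if isinstance(tree, Branch):
        out += nodes(tree.left) + nodes(tree.right)
    return out


def replace(tree: Node, target: Node, new: Node) -> Node:
    """Return tree with the subtree `target` (matched by identity) replaced by `new`."""
    if tree is target:
        return new
    if isinstance(tree, Branch):
        tree.left = replace(tree.left, target, new)
        tree.right = replace(tree.right, target, new)
    return tree


def crossover(a: Node, b: Node, rng: random.Random) -> Tuple[Node, Node]:
    """Pick one point in each parent's tree and swap the subtrees."""
    a, b = copy.deepcopy(a), copy.deepcopy(b)
    pa, pb = rng.choice(nodes(a)), rng.choice(nodes(b))
    return replace(a, pa, pb), replace(b, pb, pa)


def mutate(tree: Node, rng: random.Random) -> Node:
    """Change a random action/object/description, or add a new branch."""
    tree = copy.deepcopy(tree)
    target = rng.choice(nodes(tree))
    if rng.random() < 0.5:  # point mutation (assumed probability)
        if isinstance(target, Leaf):
            target.action = rng.choice(ACTIONS)
        elif rng.random() < 0.5:
            target.obj = rng.choice(OBJECTS)
        else:
            target.description = rng.choice(DESCRIPTIONS)
        return tree
    # Grow a new branch: the old subtree becomes its left child, a new leaf its right.
    new_branch = Branch(rng.choice(OBJECTS), rng.choice(DESCRIPTIONS),
                        target, Leaf(rng.choice(ACTIONS)))
    return replace(tree, target, new_branch)
```

Crossover conserves the total number of nodes across the two children, while mutation either keeps the tree size or grows it by one branch and one leaf.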

Selection

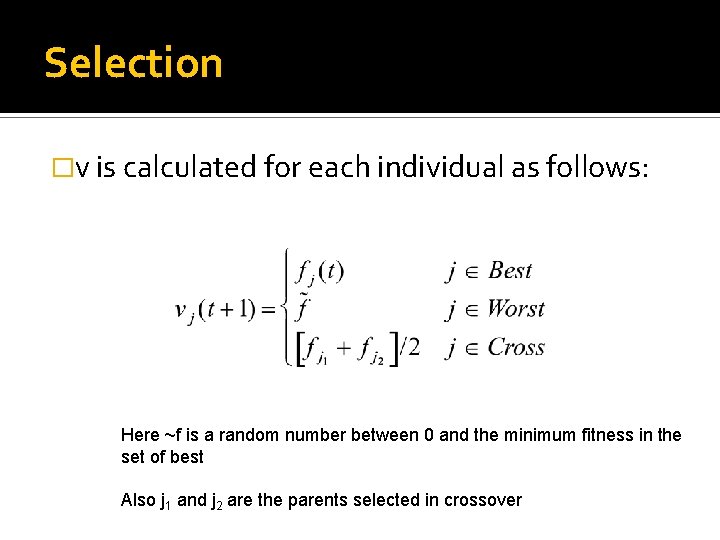

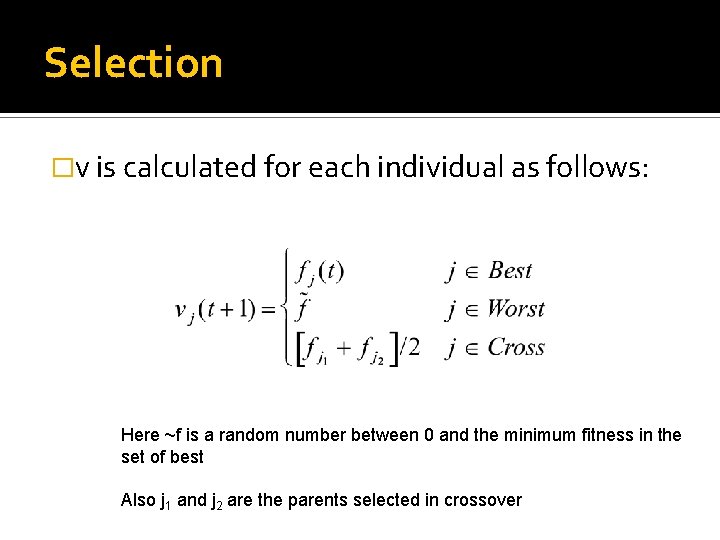

Selection
- v is calculated for each individual as follows:
  - Here f̃ is a random number between 0 and the minimum fitness in the set of best individuals
  - j₁ and j₂ are the parents selected in crossover

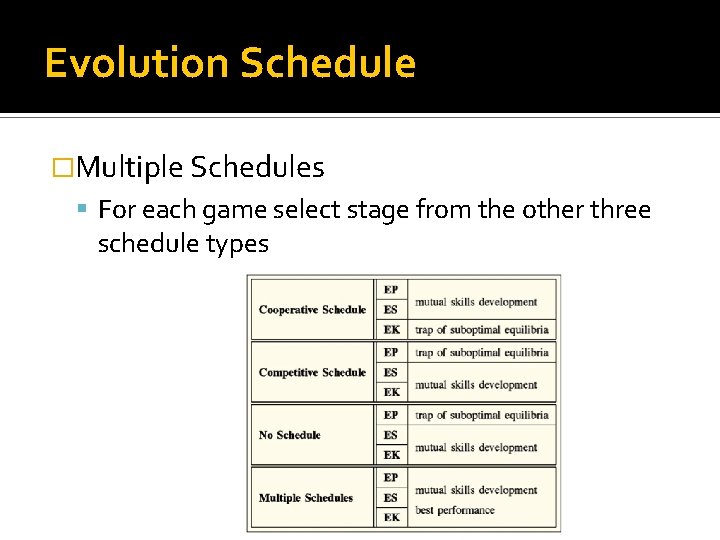

Evolution Schedule
- 3-robot environment:
  - Keeper
  - Shooter
  - Passer

Evolution Schedule
- Cooperative schedule
  - Mainly trains the shooter and passer to work together
  - The keeper does not get much playing time
- Competitive schedule
  - The keeper and shooter are evolved
  - The passer is left out much of the time
- No schedule
  - All three play all the time

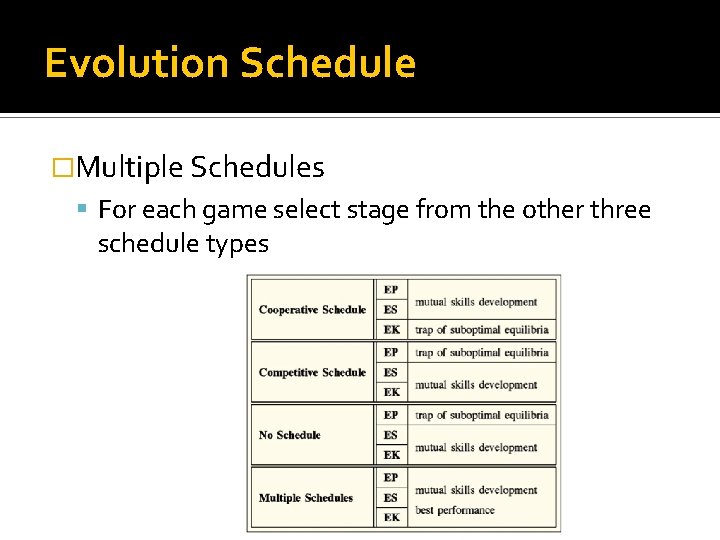

Evolution Schedule
- Multiple schedules
  - For each game, select a stage from one of the other three schedule types
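The multiple-schedule idea can be sketched as picking one of the three single schedules per game. Two simplifications are assumptions, not the paper's settings: each schedule is modeled as an all-or-nothing set of evolved roles (the slides say "mainly" and "much of the time"), and the stage is chosen uniformly at random. The names `SCHEDULES`, `pick_stage`, and `run_multiple_schedule` are hypothetical.

```python
import random
from typing import Dict, FrozenSet

ROLES = ("keeper", "shooter", "passer")

# Roles whose populations are evolved in each stage (simplified to fixed sets).
SCHEDULES: Dict[str, FrozenSet[str]] = {
    "cooperative": frozenset({"shooter", "passer"}),           # keeper rarely plays
    "competitive": frozenset({"keeper", "shooter"}),           # passer often left out
    "none": frozenset({"keeper", "shooter", "passer"}),        # all three every game
}


def pick_stage(rng: random.Random) -> str:
    """Multiple schedules: choose one of the other three schedules for this game
    (uniform choice is an assumption)."""
    return rng.choice(sorted(SCHEDULES))


def run_multiple_schedule(n_games: int, seed: int = 0) -> Dict[str, int]:
    """Count in how many games each role's population is evolved."""
    rng = random.Random(seed)
    counts = {role: 0 for role in ROLES}
    for _ in range(n_games):
        for role in SCHEDULES[pick_stage(rng)]:
            counts[role] += 1
    return counts
```

Under these assumptions the shooter is evolved in every game, since it appears in all three stages, while the keeper and passer each take part in roughly two thirds of the games.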

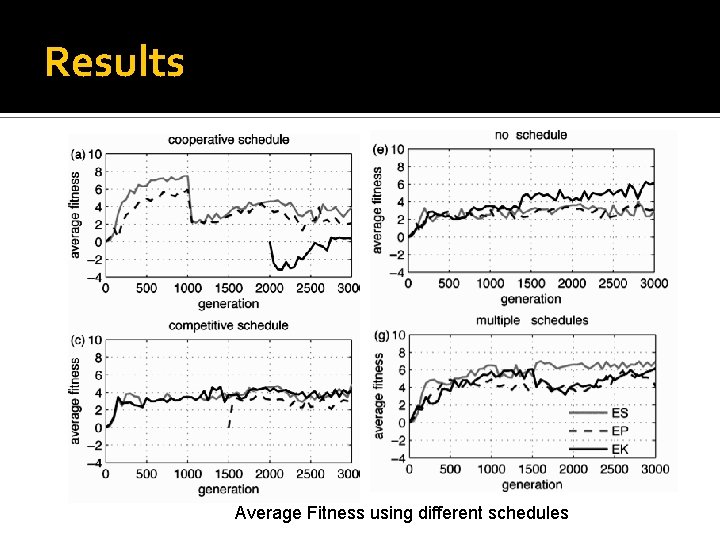

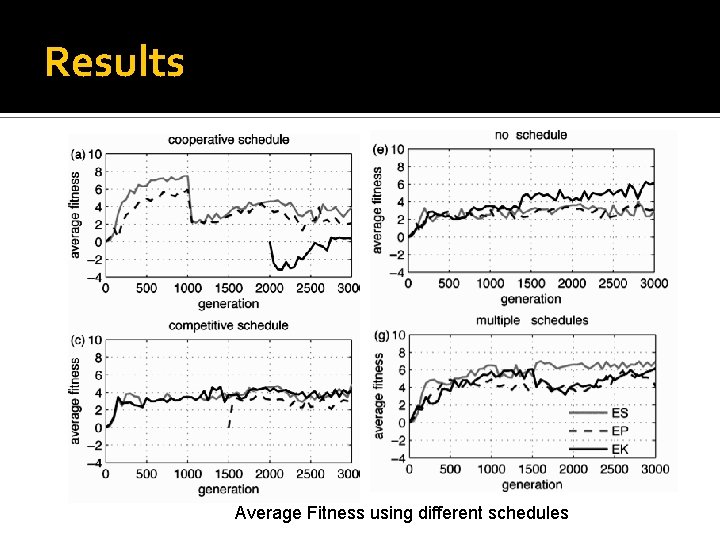

Results
- Average fitness using different schedules