


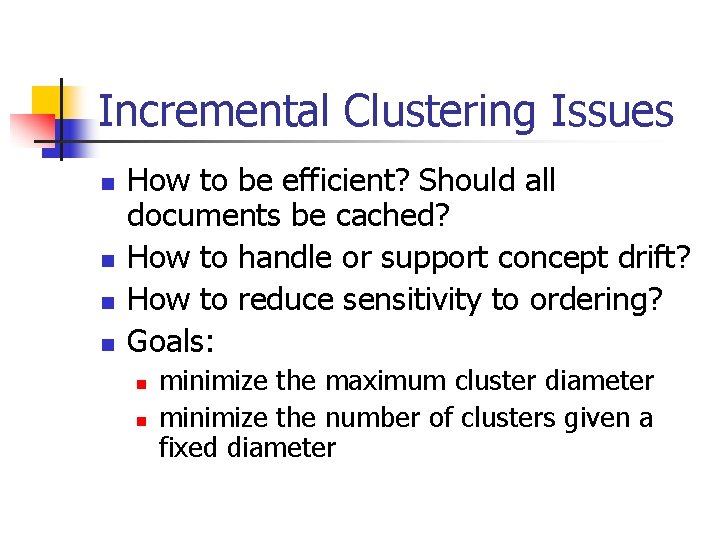

Incremental Clustering

- Previous clustering algorithms worked in "batch" mode: they processed all points at essentially the same time.
- Some IR applications cluster an incoming document stream (e.g., topic tracking).
- For these applications, we need incremental clustering algorithms.

Incremental Clustering Issues

- How to be efficient? Should all documents be cached?
- How to handle or support concept drift?
- How to reduce sensitivity to ordering?
- Goals:
  - minimize the maximum cluster diameter
  - minimize the number of clusters given a fixed diameter
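The first goal can be made concrete with a small sketch. It assumes points are coordinate tuples and that "diameter" means the largest pairwise Euclidean distance inside a cluster; the function names are illustrative and do not come from the slides.

```python
from itertools import combinations
from math import dist


def diameter(points):
    """Largest pairwise Euclidean distance in one cluster (0.0 for a singleton)."""
    return max((dist(p, q) for p, q in combinations(points, 2)), default=0.0)


def max_cluster_diameter(clusters):
    """Objective value for a clustering: the diameter of its widest cluster."""
    return max((diameter(c) for c in clusters), default=0.0)


clusters = [[(0, 0), (3, 4)], [(10, 10)]]
print(max_cluster_diameter(clusters))  # 5.0
```

An incremental algorithm is then judged by how close it keeps this value to the best achievable with k clusters as points arrive.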

![Incremental Clustering Model (Charikar et al. 1997)](https://slidetodoc.com/presentation_image_h/fcc5012280c85a6810b418067bc017f0/image-3.jpg)

Incremental Clustering Model [Charikar et al. 1997]

- Extension to HAC as follows:
  - Incremental clustering: "for an update sequence of n points in M, maintain a collection of k clusters such that as each one is presented, either it is assigned to one of the current k clusters or it starts off a new cluster while two existing clusters are merged into one."
  - Maintains a HAC for points added up until the current time.

M. Charikar, C. Chekuri, T. Feder, R. Motwani. "Incremental Clustering and Dynamic Information Retrieval", Proc. 29th Annual ACM Symposium on Theory of Computing, 1997.

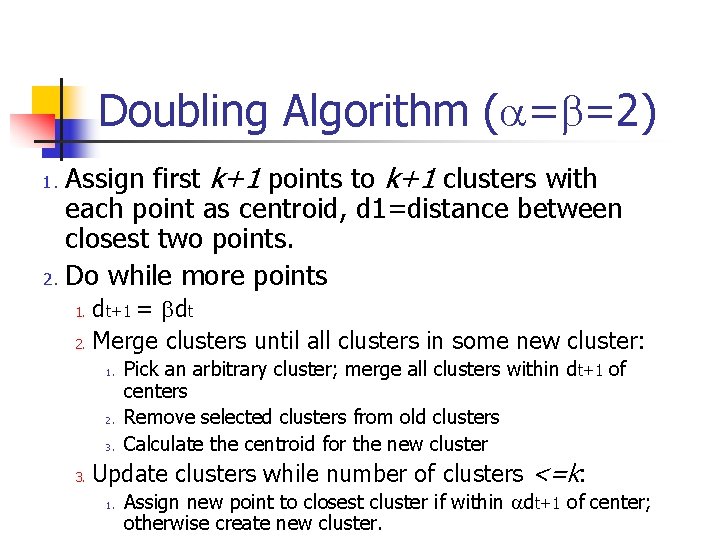

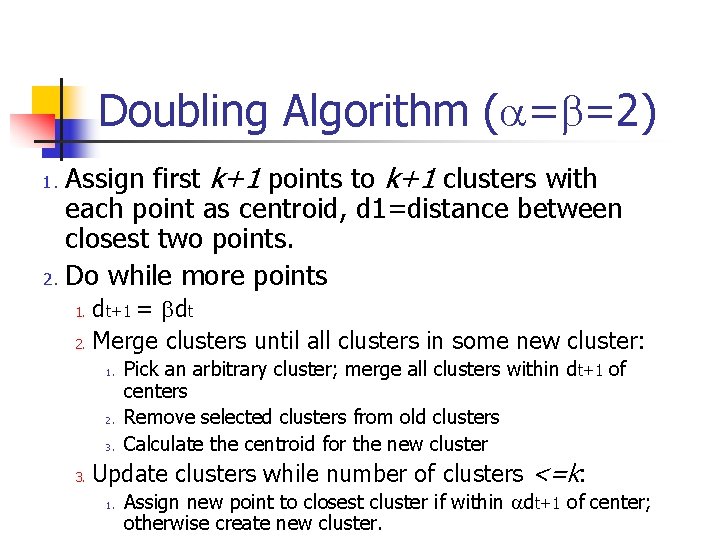

Doubling Algorithm (a = b = 2)

1. Assign the first k+1 points to k+1 clusters with each point as centroid; $d_1$ = distance between the closest two points.
2. Do while more points:
   1. $d_{t+1} = b \cdot d_t$
   2. Merge clusters until all clusters are in some new cluster:
      1. Pick an arbitrary cluster; merge all clusters within $d_{t+1}$ of its center
      2. Remove the selected clusters from the old clusters
      3. Calculate the centroid for the new cluster
   3. Update clusters while number of clusters <= k:
      1. Assign the new point to the closest cluster if within $a \cdot d_{t+1}$ of its center; otherwise create a new cluster.
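The steps of the doubling algorithm can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: points are coordinate tuples, distances are Euclidean, the "arbitrary" cluster is simply the first one remaining, and the names `merge_stage` and `doubling_clusters` are made up for this example. The sketch also refuses inputs whose first k+1 points contain duplicates, since $d_1 = 0$ would never grow under doubling.

```python
from math import dist


def centroid(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def merge_stage(clusters, d):
    """Pick a cluster, absorb every remaining cluster whose center is within d, repeat."""
    old, new = list(clusters), []
    while old:
        seed = old.pop(0)  # "arbitrary" pick: the first remaining cluster
        seed_center = centroid(seed)
        merged, keep = list(seed), []
        for cluster in old:
            if dist(centroid(cluster), seed_center) <= d:
                merged.extend(cluster)
            else:
                keep.append(cluster)
        old = keep
        new.append(merged)
    return new


def doubling_clusters(points, k, a=2.0, b=2.0):
    """Process points in arrival order and return at most k clusters."""
    points = [tuple(p) for p in points]
    if len(points) <= k:
        return [[p] for p in points]
    first = points[:k + 1]
    clusters = [[p] for p in first]
    d = min(dist(p, q) for i, p in enumerate(first) for q in first[i + 1:])
    if d == 0:
        raise ValueError("the first k+1 points must be distinct")
    i = k + 1  # index of the next unseen point
    while True:
        d *= b                              # d_{t+1} = b * d_t
        clusters = merge_stage(clusters, d)
        while i < len(points) and len(clusters) <= k:
            p = points[i]
            i += 1
            nearest = min(clusters, key=lambda c: dist(centroid(c), p))
            if dist(centroid(nearest), p) <= a * d:
                nearest.append(p)
            else:
                clusters.append([p])
        if i >= len(points) and len(clusters) <= k:
            return clusters
```

For example, `doubling_clusters([(0, 0), (1, 0), (10, 0), (11, 0), (0, 1), (10, 1)], k=2)` groups the three points near the origin into one cluster and the three points near x = 10 into the other.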

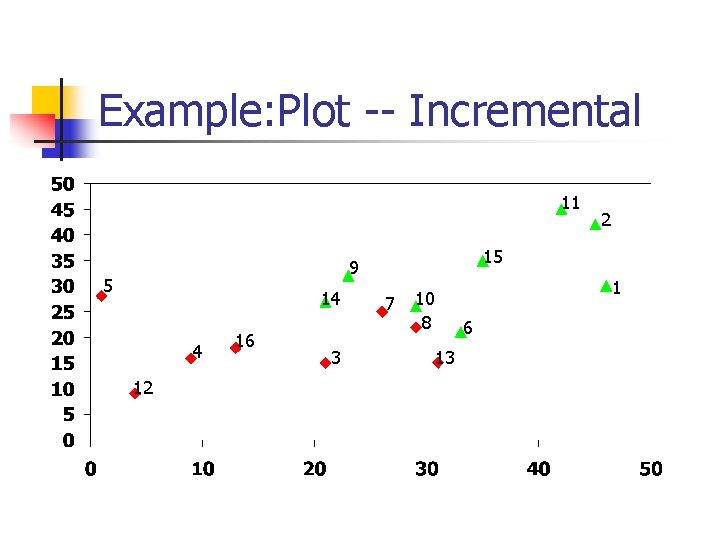

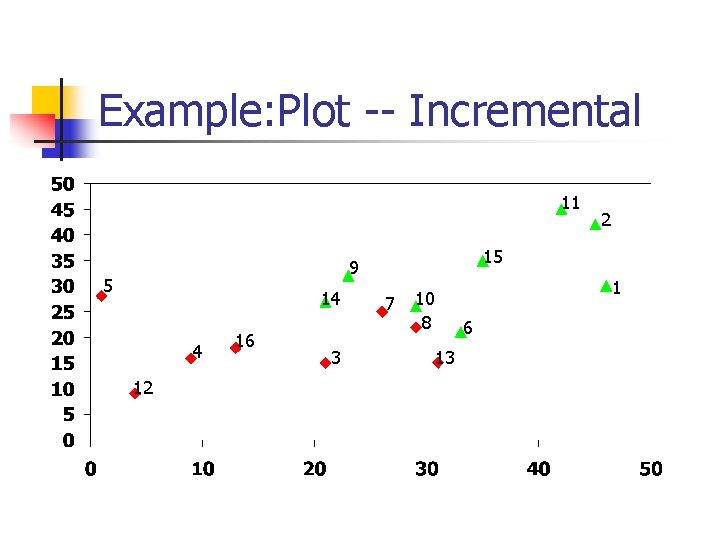

Example: Plot -- Incremental

[Figure: scatter plot of 16 numbered points (1 to 16).]

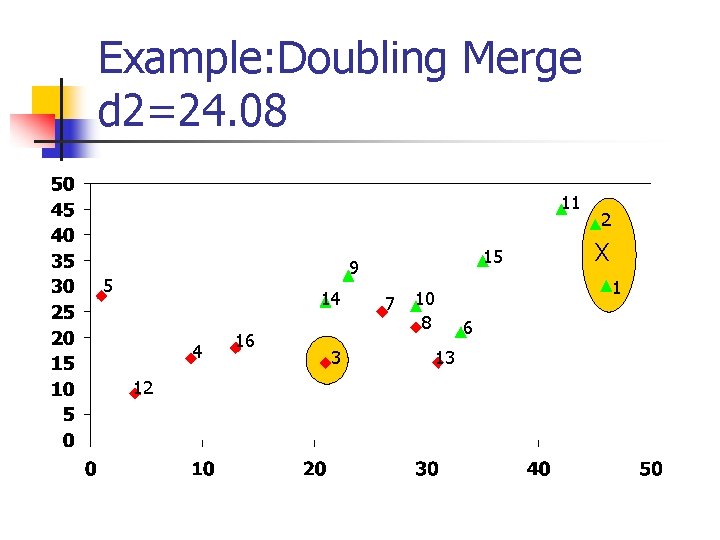

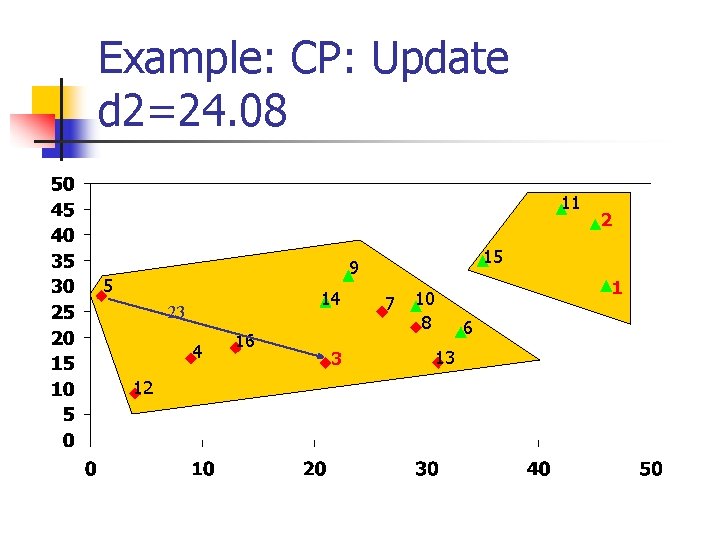

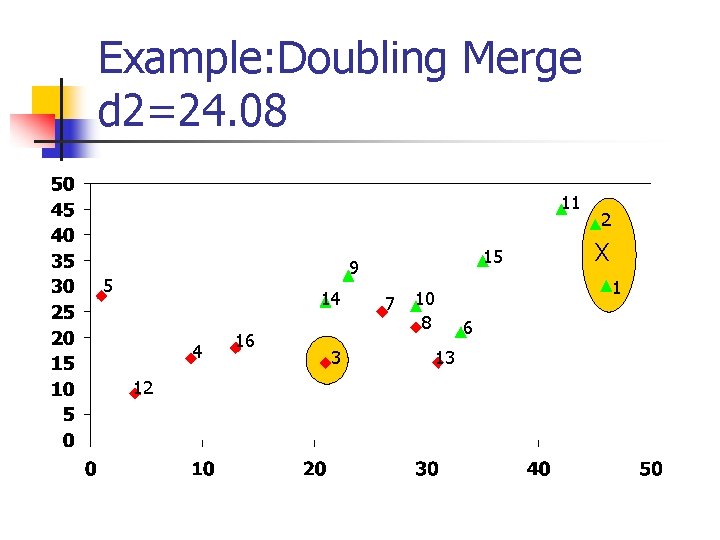

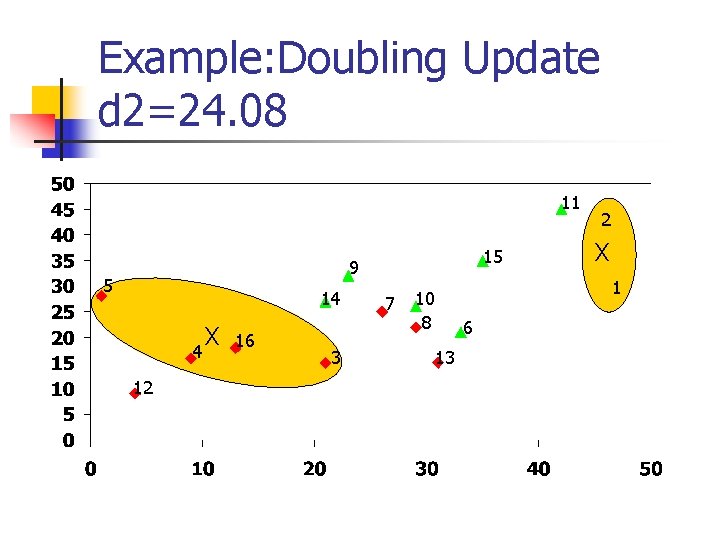

Example: Doubling Merge, $d_2 = 24.08$

[Figure: the 16 numbered points with one X marker.]

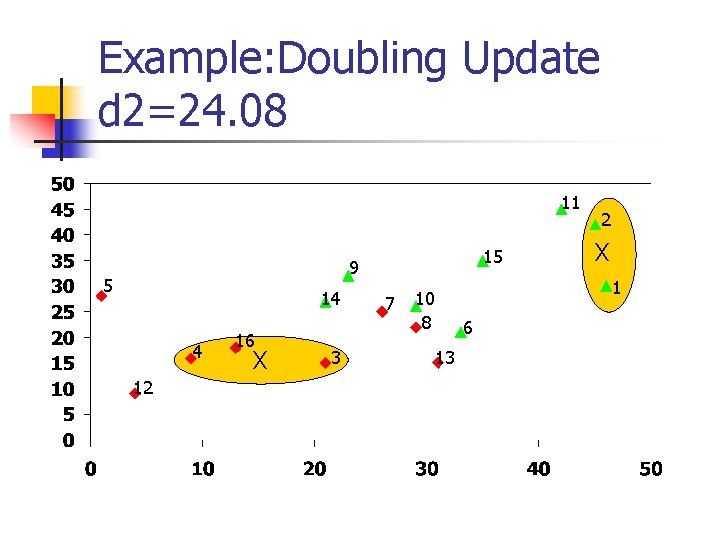

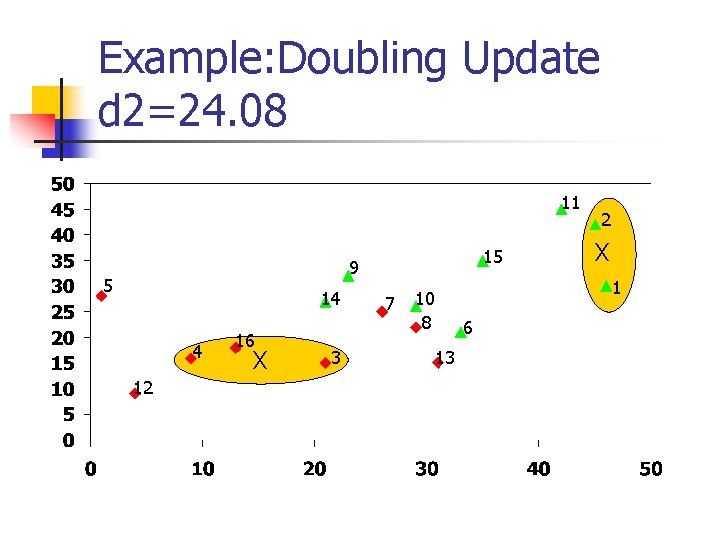

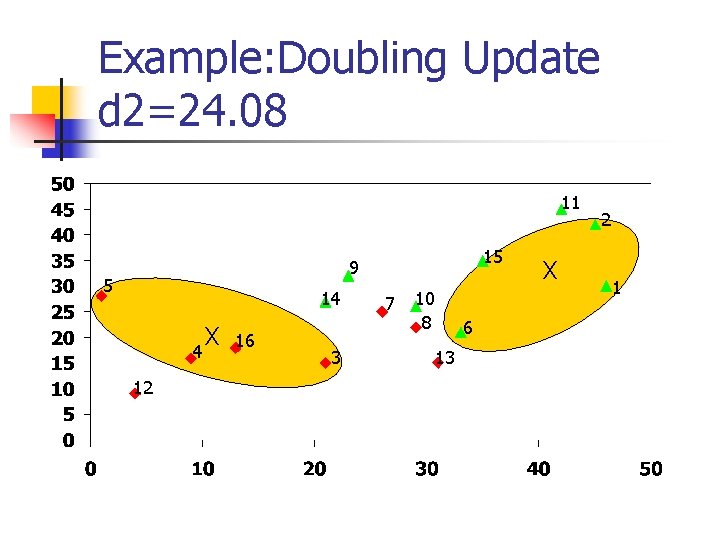

Example: Doubling Update, $d_2 = 24.08$

[Figure: the 16 numbered points with two X markers.]

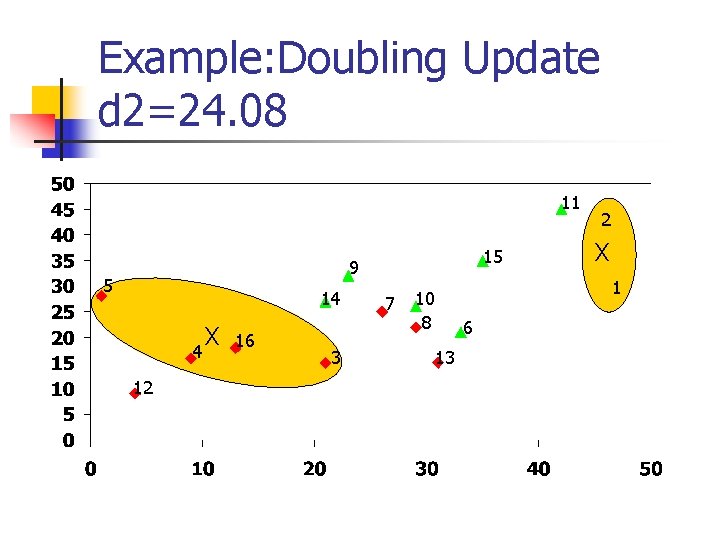

Example: Doubling Update, $d_2 = 24.08$

[Figure: the 16 numbered points with two X markers, one of them moved.]

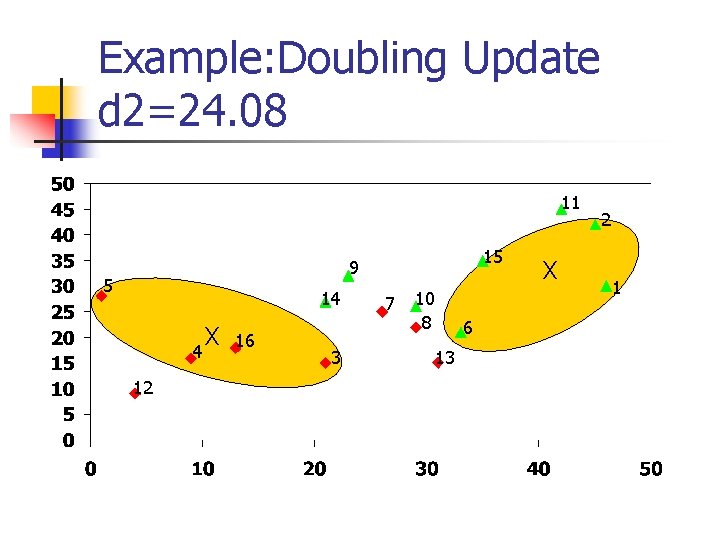

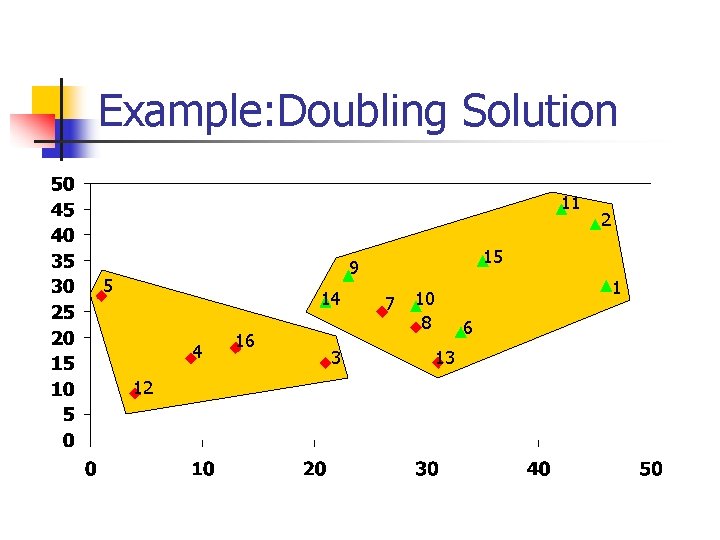

Example: Doubling Update, $d_2 = 24.08$

[Figure: the 16 numbered points with two X markers.]

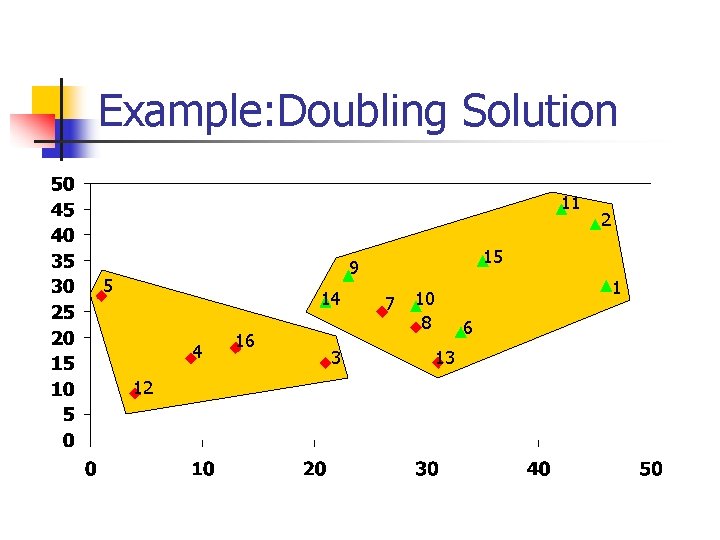

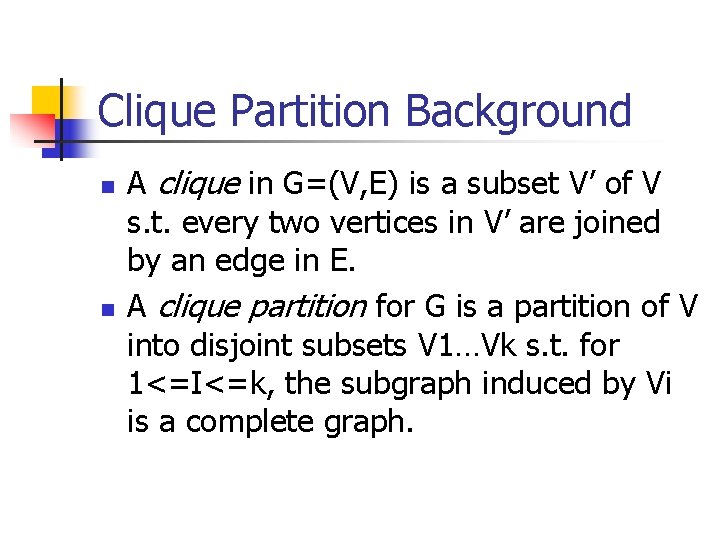

Example: Doubling Solution

[Figure: final clustering of the 16 numbered points.]

Clique Partition Background

- A clique in G = (V, E) is a subset V' of V s.t. every two vertices in V' are joined by an edge in E.
- A clique partition for G is a partition of V into disjoint subsets $V_1, \ldots, V_k$ s.t. for 1 <= i <= k, the subgraph induced by $V_i$ is a complete graph.
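Both definitions can be checked mechanically. The sketch below assumes an undirected graph given as a vertex collection plus a list of edge pairs; the function names are illustrative only.

```python
from itertools import combinations


def is_clique(vertices, edge_set):
    """True if every pair of the given vertices is joined by an edge."""
    return all(frozenset((u, v)) in edge_set for u, v in combinations(vertices, 2))


def is_clique_partition(parts, vertices, edges):
    """True if parts are disjoint, cover all vertices, and each part is a clique."""
    edge_set = {frozenset(e) for e in edges}
    flat = [v for part in parts for v in part]
    covers_once = len(flat) == len(set(flat)) and set(flat) == set(vertices)
    return covers_once and all(is_clique(part, edge_set) for part in parts)


V = {1, 2, 3, 4}
E = [(1, 2), (2, 3), (1, 3), (3, 4)]
print(is_clique_partition([{1, 2, 3}, {4}], V, E))   # True
print(is_clique_partition([{1, 4}, {2, 3}], V, E))   # False: 1 and 4 are not adjacent
```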

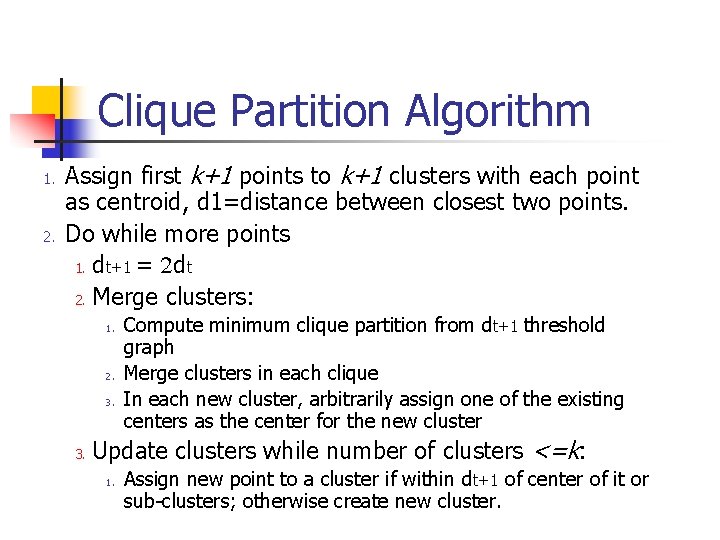

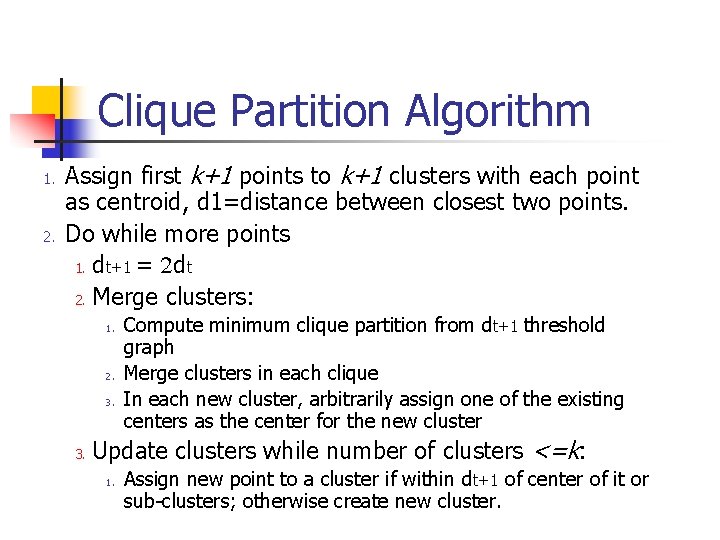

Clique Partition Algorithm

1. Assign the first k+1 points to k+1 clusters with each point as centroid; $d_1$ = distance between the closest two points.
2. Do while more points:
   1. $d_{t+1} = 2 d_t$
   2. Merge clusters:
      1. Compute a minimum clique partition from the $d_{t+1}$ threshold graph
      2. Merge the clusters in each clique
      3. In each new cluster, arbitrarily assign one of the existing centers as the center for the new cluster
   3. Update clusters while number of clusters <= k:
      1. Assign the new point to a cluster if it is within $d_{t+1}$ of that cluster's center or of the center of one of its sub-clusters; otherwise create a new cluster.
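The merge step can be illustrated with a short sketch. Computing a minimum clique partition is NP-hard in general, so the code below swaps in a simple greedy heuristic rather than the exact step named on the slide; it assumes Euclidean distance, and the function name is hypothetical. The threshold graph joins two cluster centers when they are at most d apart.

```python
from math import dist


def greedy_clique_partition(centers, d):
    """Greedily partition center indices into cliques of the d-threshold graph.

    A center joins the first clique in which it is within d of every member;
    otherwise it starts a new clique. The result is a valid clique partition,
    but not necessarily one with the minimum number of cliques.
    """
    cliques = []
    for idx, center in enumerate(centers):
        for clique in cliques:
            if all(dist(center, centers[j]) <= d for j in clique):
                clique.append(idx)
                break
        else:
            cliques.append([idx])
    return cliques


centers = [(0, 0), (1, 0), (2, 0), (10, 0)]
print(greedy_clique_partition(centers, 1.5))  # [[0, 1], [2], [3]]
```

Because every pair inside a clique is within d, merging a clique never chains distant centers together: center 2 above is within 1.5 of center 1 but not of center 0, so it stays separate.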

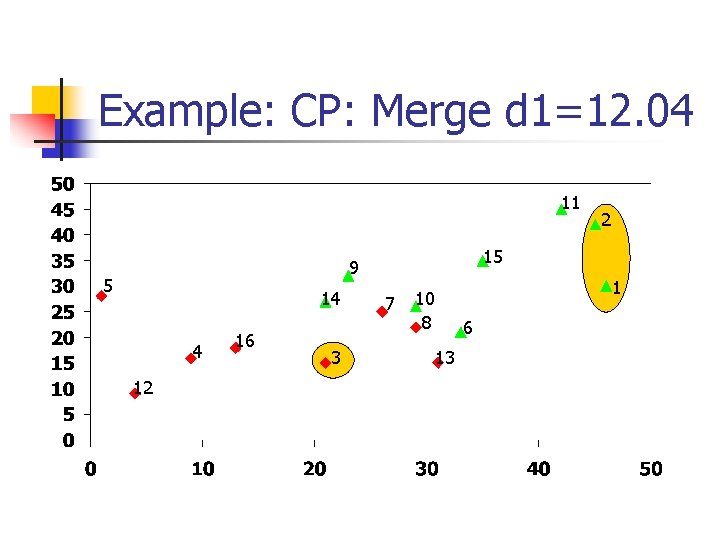

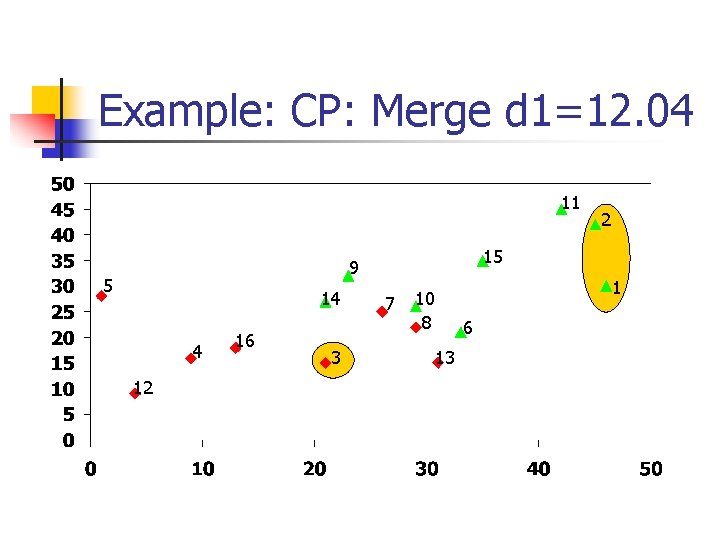

Example: CP: Merge, $d_1 = 12.04$

[Figure: scatter plot of the 16 numbered points.]

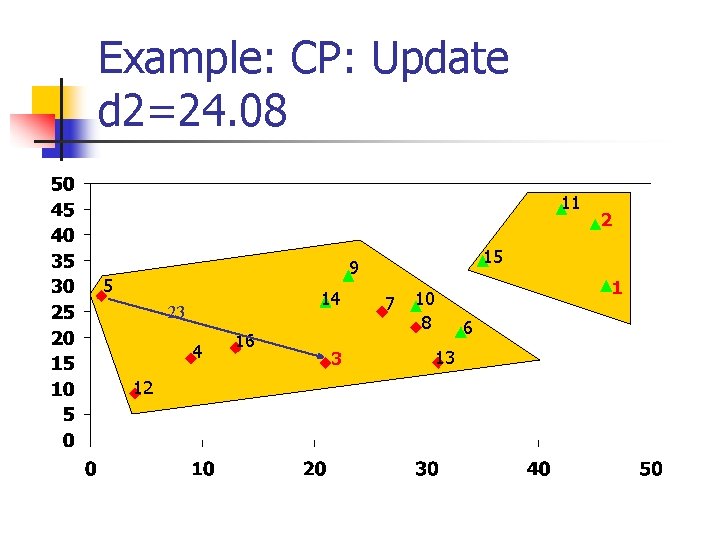

Example: CP: Update, $d_2 = 24.08$

[Figure: the 16 numbered points plus a point labeled 23.]

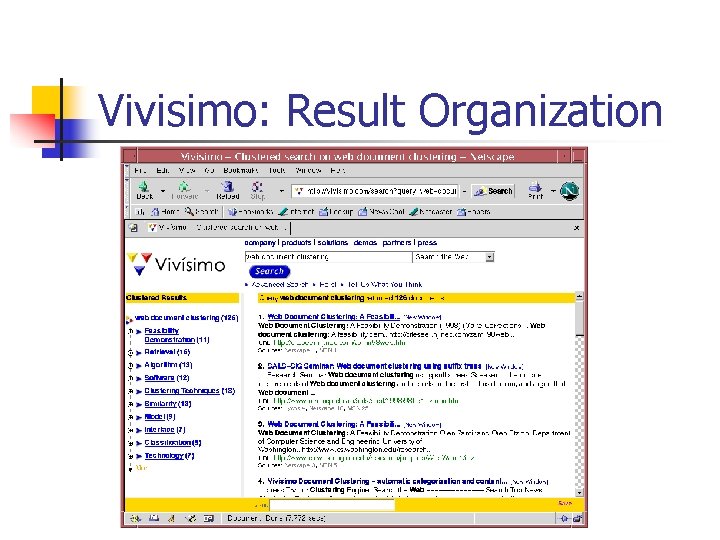

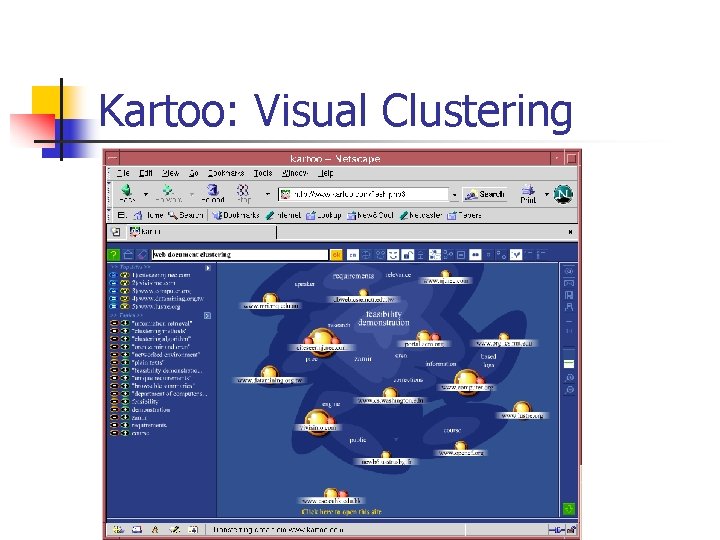

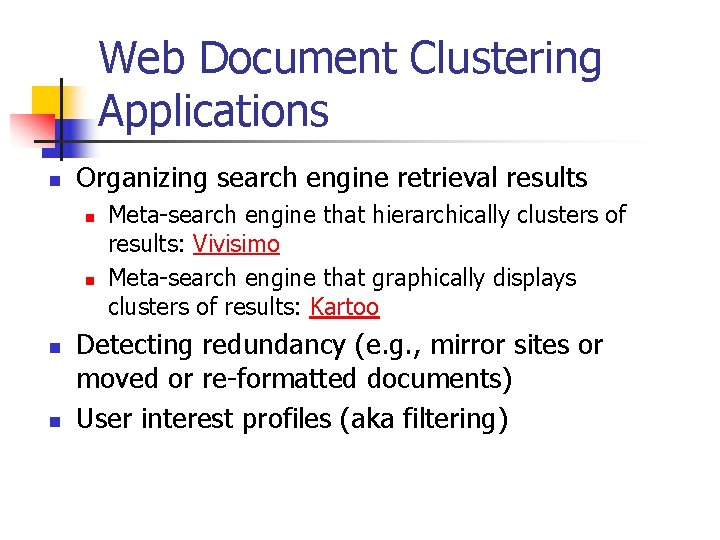

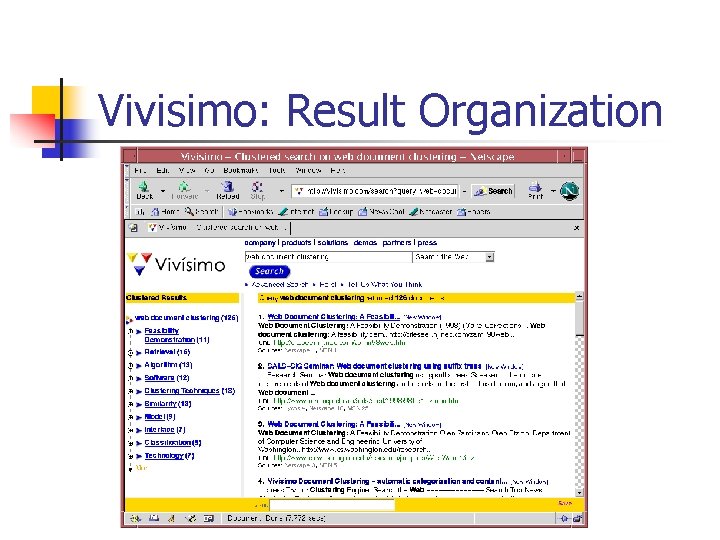

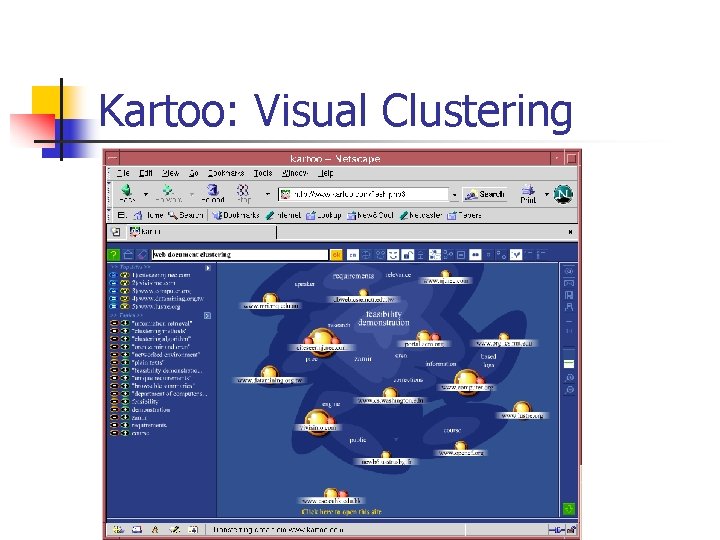

Web Document Clustering Applications

- Organizing search engine retrieval results
  - Meta-search engine that hierarchically clusters results: Vivisimo
  - Meta-search engine that graphically displays clusters of results: Kartoo
- Detecting redundancy (e.g., mirror sites or moved or re-formatted documents)
- User interest profiles (aka filtering)

Vivisimo: Result Organization

Kartoo: Visual Clustering

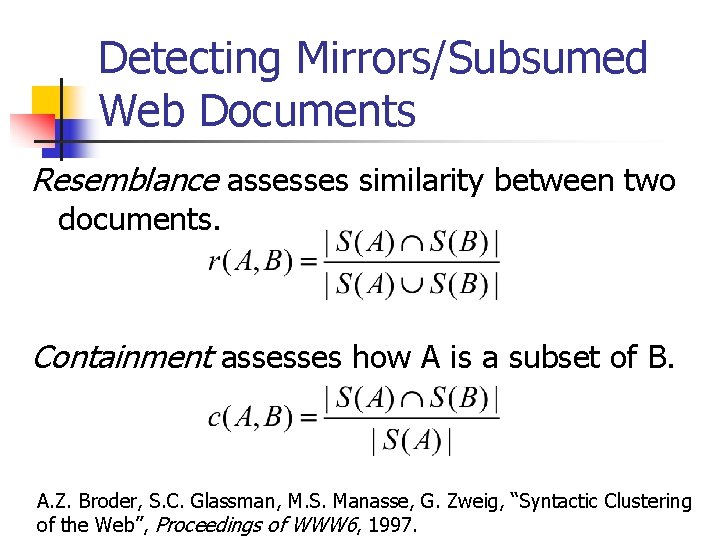

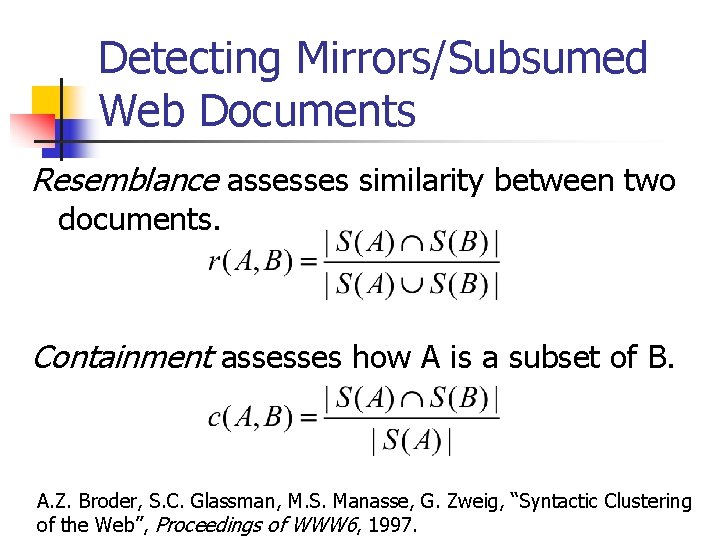

Detecting Mirrors/Subsumed Web Documents

- Resemblance assesses the similarity between two documents A and B.
- Containment assesses the extent to which A is a subset of B.

A. Z. Broder, S. C. Glassman, M. S. Manasse, G. Zweig, "Syntactic Clustering of the Web", Proceedings of WWW6, 1997.
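The slide's own formulas did not survive as text. For reference, the definitions usually given for these two measures in the cited work of Broder et al. are set ratios over the shingle sets of the two documents, written here with S(·) for a document's shingle set:

```latex
r(A, B) = \frac{|S(A) \cap S(B)|}{|S(A) \cup S(B)|},
\qquad
c(A, B) = \frac{|S(A) \cap S(B)|}{|S(A)|}
```

Both values lie in [0, 1]. Resemblance is symmetric, while containment is not: c(A, B) near 1 means that almost all of A's shingles also occur in B.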

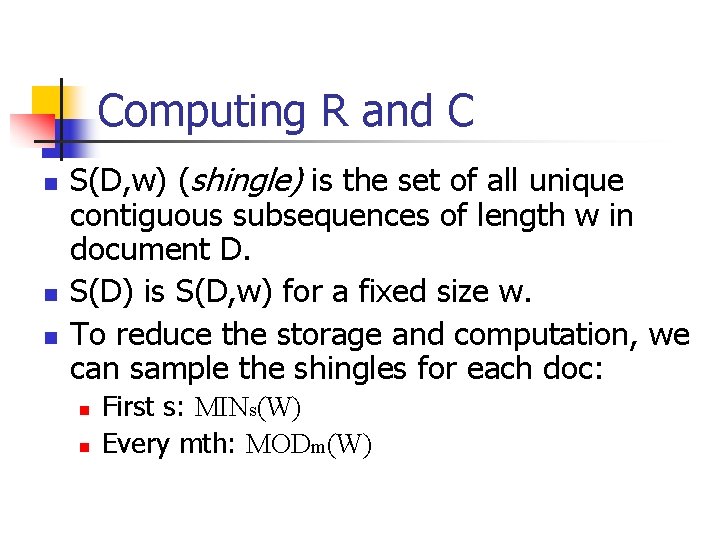

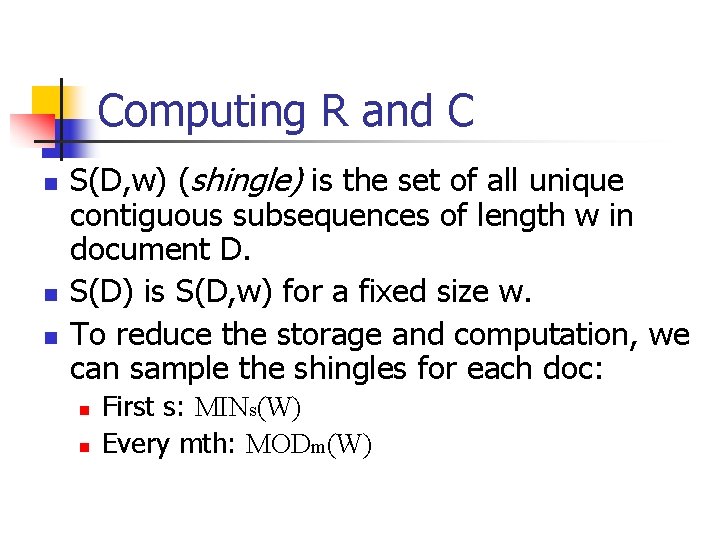

Computing R and C

- S(D, w), the w-shingling of D, is the set of all unique contiguous subsequences (shingles) of length w in document D.
- S(D) is S(D, w) for a fixed size w.
- To reduce the storage and computation, we can sample the shingles for each doc:
  - First s (the s smallest): $\mathrm{MIN}_s(W)$
  - Every m-th (those divisible by m): $\mathrm{MOD}_m(W)$
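A minimal Python sketch of shingling and the two sampling schemes follows. Several details are assumptions rather than anything stated on the slides: documents are tokenized by whitespace after lowercasing, resemblance and containment are computed as intersection-over-union and intersection-over-|S(A)|, and shingles are mapped to integers with a truncated SHA-1 hash as a stand-in for whatever fingerprinting the original system used.

```python
import hashlib


def shingles(text, w):
    """Set of unique contiguous w-token subsequences of a document."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + w]) for i in range(len(tokens) - w + 1)}


def resemblance(a, b):
    """|a & b| / |a | b| for two shingle sets."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def containment(a, b):
    """Fraction of a's shingles that also occur in b."""
    return len(a & b) / len(a) if a else 1.0


def fingerprint(shingle):
    """Map a shingle to a 64-bit integer (hash choice is an assumption)."""
    digest = hashlib.sha1(" ".join(shingle).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def min_s(values, s):
    """MIN_s: the s smallest values."""
    return set(sorted(values)[:s])


def mod_m(values, m):
    """MOD_m: the values divisible by m."""
    return {v for v in values if v % m == 0}


doc = shingles("a rose is a rose is a rose", 4)
print(len(doc))  # 3 unique 4-shingles
```

`min_s` yields a fixed-size sample regardless of document length, whereas `mod_m` yields a sample whose size grows with the document.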

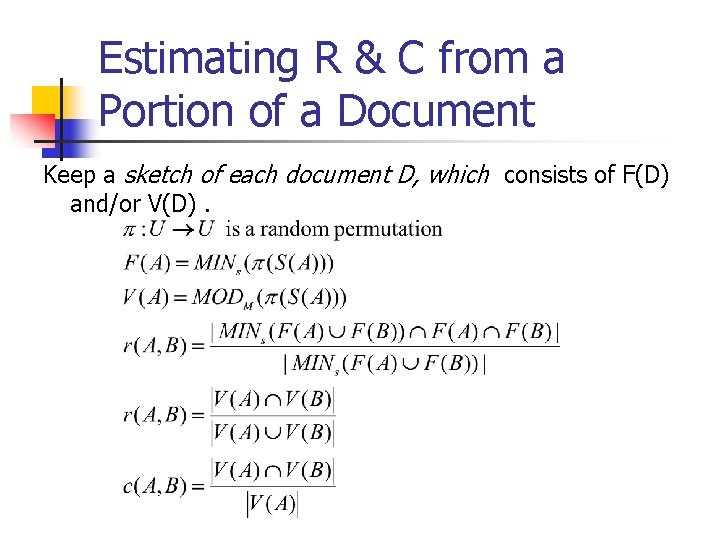

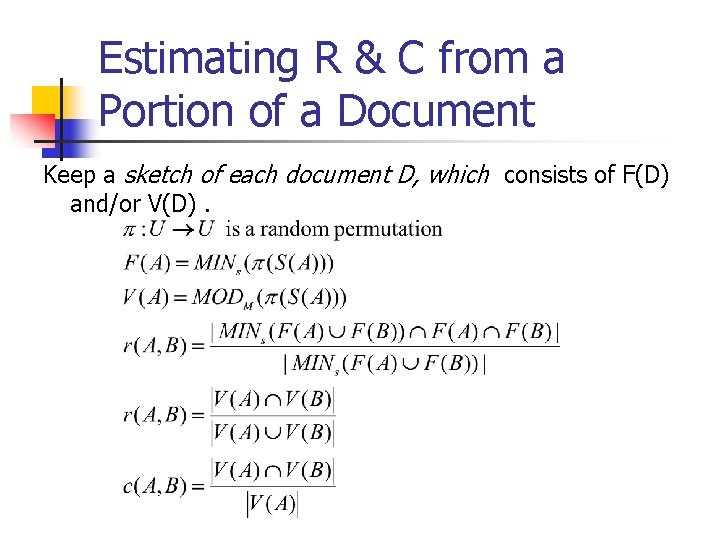

Estimating R & C from a Portion of a Document

- Keep a sketch of each document D, which consists of F(D) and/or V(D).
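The estimators themselves were shown as images. The sketch below assumes, following the usual presentation in Broder's work, that F(D) is the set of the s smallest shingle fingerprints of D and V(D) is the set of fingerprints divisible by m; the function names are illustrative.

```python
def estimate_resemblance_min(f_a, f_b, s):
    """Estimate resemblance from two MIN_s sketches F(A) and F(B).

    Take the s smallest values of the combined sketches and count how many
    of them appear in both sketches.
    """
    smallest_of_union = set(sorted(f_a | f_b)[:s])
    if not smallest_of_union:
        return 1.0
    return len(smallest_of_union & f_a & f_b) / len(smallest_of_union)


def estimate_resemblance_mod(v_a, v_b):
    """Estimate resemblance from two MOD_m sketches V(A) and V(B)."""
    union = v_a | v_b
    return len(v_a & v_b) / len(union) if union else 1.0


def estimate_containment_mod(v_a, v_b):
    """Estimate containment of A in B from MOD_m sketches."""
    return len(v_a & v_b) / len(v_a) if v_a else 1.0


print(estimate_resemblance_min({1, 2, 3, 4}, {2, 3, 4, 5}, s=4))  # 0.75
print(estimate_resemblance_mod({10, 20, 30}, {20, 30, 40}))       # 0.5
```

Only the MOD-style sketch supports a containment estimate here, because a fixed-size MIN sketch does not reflect the relative sizes of the two documents.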

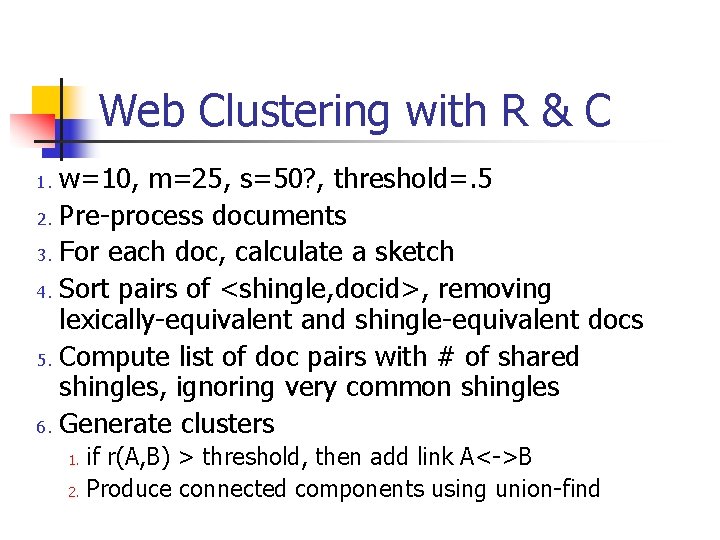

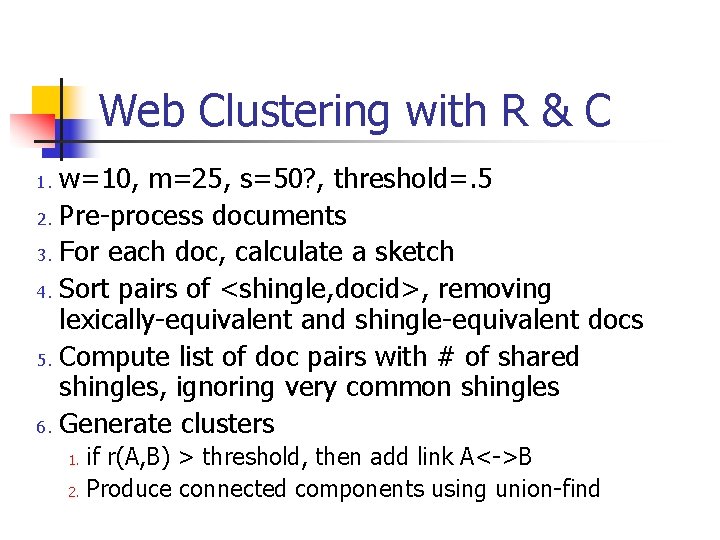

Web Clustering with R & C

1. w = 10, m = 25, s = 50?, threshold = 0.5
2. Pre-process documents
3. For each doc, calculate a sketch
4. Sort pairs of <shingle, docid>, removing lexically-equivalent and shingle-equivalent docs
5. Compute list of doc pairs with # of shared shingles, ignoring very common shingles
6. Generate clusters
   1. If r(A, B) > threshold, then add link A <-> B
   2. Produce connected components using union-find
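Steps 4 to 6 can be sketched in memory as follows. This is an illustration under assumptions, not the original pipeline: sketches are given as a dict from doc id to a set of shingle fingerprints, resemblance is estimated as shared / union of the two sketches, and the cutoff `max_docs_per_shingle` for "very common" shingles is a made-up parameter.

```python
from collections import defaultdict
from itertools import combinations


class UnionFind:
    def __init__(self, items):
        self.parent = {x: x for x in items}

    def find(self, x):
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            self.parent[root_b] = root_a


def cluster_by_resemblance(sketches, threshold=0.5, max_docs_per_shingle=1000):
    # Invert the sketches: shingle -> docs containing it (the <shingle, docid> pairs).
    docs_by_shingle = defaultdict(list)
    for doc_id, sketch in sketches.items():
        for shingle in sketch:
            docs_by_shingle[shingle].append(doc_id)

    # Count shared shingles per doc pair, skipping very common shingles.
    shared = defaultdict(int)
    for doc_ids in docs_by_shingle.values():
        if len(doc_ids) > max_docs_per_shingle:
            continue
        for a, b in combinations(sorted(doc_ids), 2):
            shared[(a, b)] += 1

    # Link pairs above the threshold and take connected components.
    uf = UnionFind(sketches)
    for (a, b), n_shared in shared.items():
        union_size = len(sketches[a]) + len(sketches[b]) - n_shared
        if n_shared / union_size > threshold:
            uf.union(a, b)

    groups = defaultdict(set)
    for doc_id in sketches:
        groups[uf.find(doc_id)].add(doc_id)
    return list(groups.values())


sketches = {"a": {1, 2, 3, 4}, "b": {1, 2, 3, 5}, "c": {7, 8, 9}, "d": {3, 20, 21, 22}}
print(sorted(sorted(c) for c in cluster_by_resemblance(sketches)))
# [['a', 'b'], ['c'], ['d']]
```

Working from the inverted shingle lists means only pairs that share at least one shingle are ever compared, which is what makes the approach feasible at web scale.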

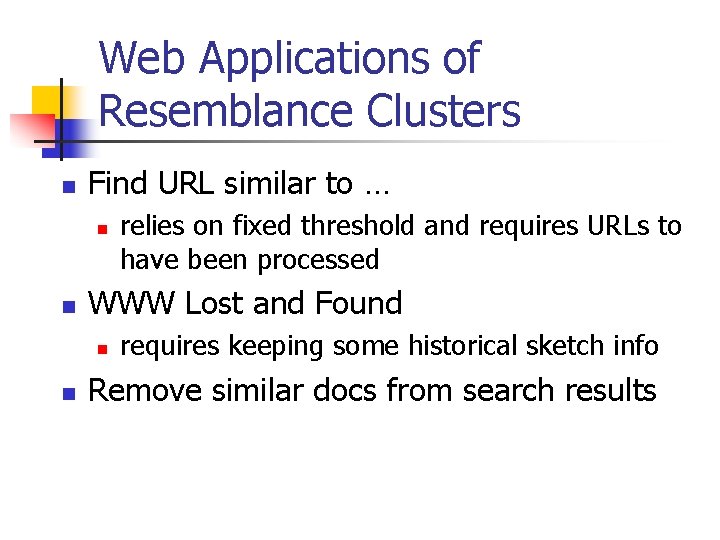

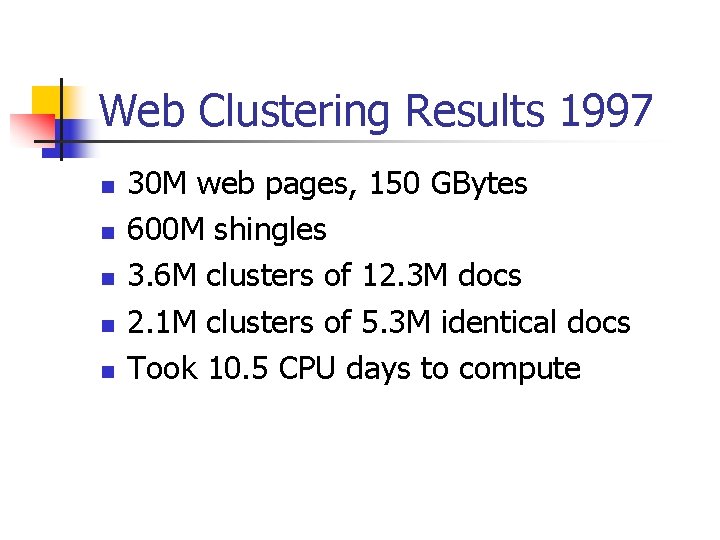

Web Clustering Results 1997

- 30M web pages, 150 GBytes
- 600M shingles
- 3.6M clusters of 12.3M docs
- 2.1M clusters of 5.3M identical docs
- Took 10.5 CPU days to compute

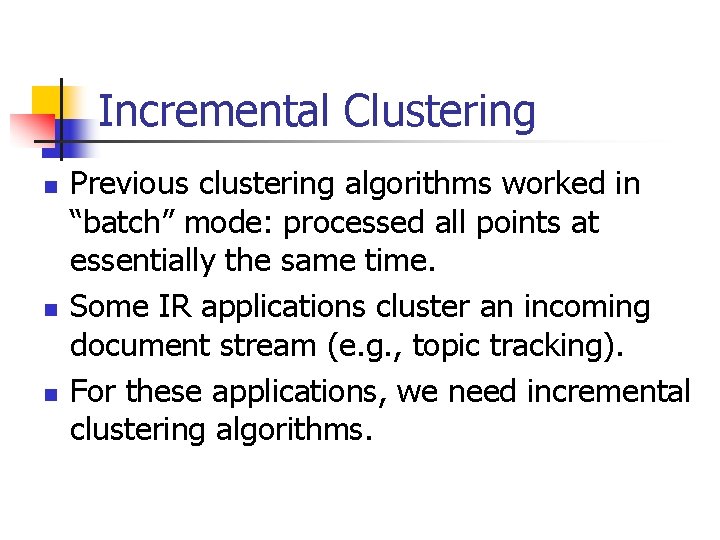

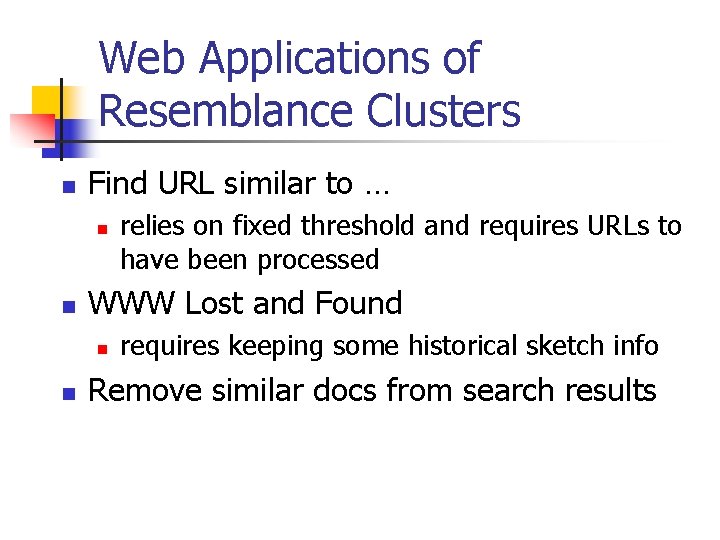

Web Applications of Resemblance Clusters

- Find URL similar to …
  - relies on fixed threshold and requires URLs to have been processed
- WWW Lost and Found
  - requires keeping some historical sketch info
- Remove similar docs from search results