In Search for Simplicity: A Self-Organizing, Multi-Source Multicast


In Search for Simplicity: A Self-Organizing, Multi-Source Multicast Overlay

Matei Ripeanu, The University of Chicago, 1/2/2022

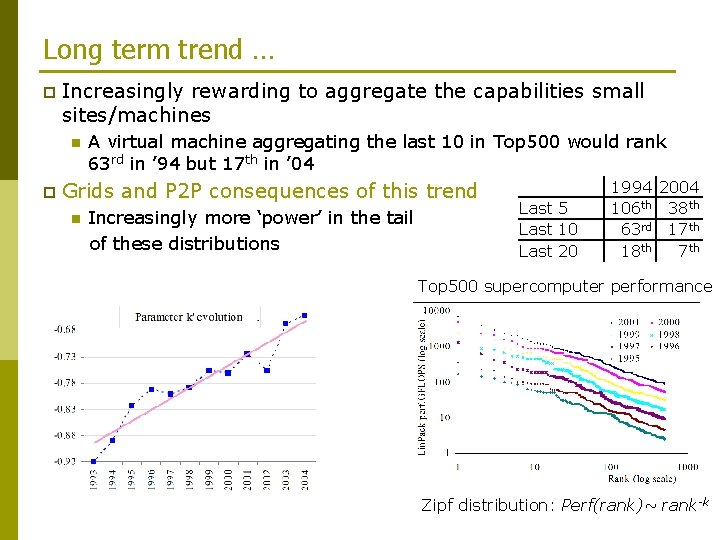

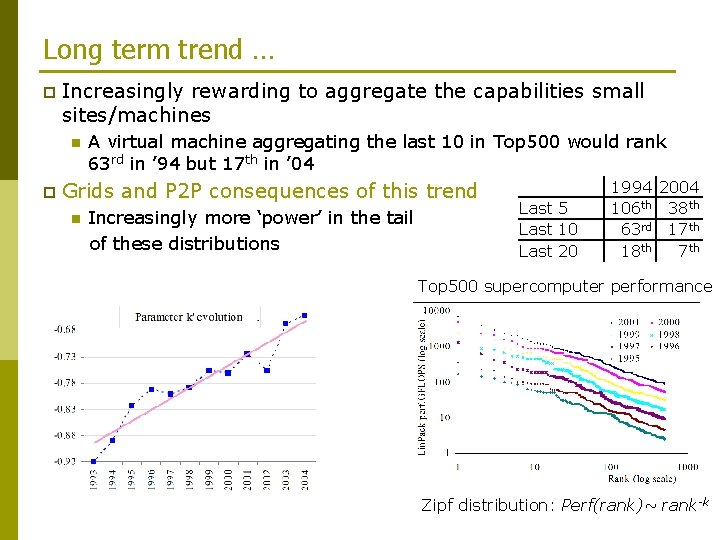

Long-term trend
- It is increasingly rewarding to aggregate the capabilities of small sites/machines: a virtual machine aggregating the last 10 machines in the Top 500 would rank 63rd in '94 but 17th in '04.
- Grids and P2P are consequences of this trend: there is increasingly more 'power' in the tail of these distributions.

Top 500 supercomputer performance: rank that a virtual machine aggregating the last N machines would achieve

| Aggregated machines | 1994 | 2004 |
|---|---|---|
| Last 5 | 106th | 38th |
| Last 10 | 63rd | 17th |
| Last 20 | 18th | 7th |

Zipf distribution: $\mathrm{Perf}(\mathit{rank}) \sim \mathit{rank}^{-k}$
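To make the "power in the tail" argument concrete, here is a minimal sketch that assumes performance follows the Zipf law above exactly, with a hypothetical exponent `k` and the scale constant dropped (it cancels out). It pools the last few machines of a 500-entry list and asks where the pooled machine would rank. It does not reproduce the real Top 500 numbers; the function names are illustrative.

```python
def zipf_perf(rank: int, k: float) -> float:
    """Relative performance at `rank` under Perf(rank) ~ rank**-k (scale constant dropped)."""
    return rank ** (-k)


def tail_aggregate_rank(n: int, last: int, k: float) -> int:
    """Rank a virtual machine pooling the `last` lowest-ranked of `n` machines would take."""
    pooled = sum(zipf_perf(r, k) for r in range(n - last + 1, n + 1))
    # Every machine that is still faster than the pooled tail stays ahead of it.
    ahead = sum(1 for r in range(1, n + 1) if zipf_perf(r, k) > pooled)
    return ahead + 1


if __name__ == "__main__":
    for last in (5, 10, 20):
        print(last, tail_aggregate_rank(500, last, k=1.0))
```

With `k = 1.0` this prints ranks 100, 50 and 25 for the last 5, 10 and 20 machines. A smaller `k` (a flatter distribution) moves the pooled tail further up the list, which is the direction of the trend the slide describes.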

Grids: infrastructure to support federated resource sharing.
- Early Grid work:
  - focused on defining service interfaces and individual behaviors;
  - implementations were often based on centralized components.
- Today's challenge: self-organizing, adaptive services. In a self-organizing system, behavior emerges as a result of:
  - independent decisions made by system components,
  - using incomplete information about the entire system.

Group communication (multicast) functionality
- Applications: conferencing; white-board type applications; resource monitoring and discovery; data distribution.
- Setting: multiple senders and receivers; medium scale.
- Challenge: build and maintain support structures (overlays)
  - that map well onto a heterogeneous set of end nodes and network paths,
  - when facing node and link volatility,
  - using decentralized, adaptive algorithms.

Roadmap
- Introduction
- UMM: An Unstructured Multi-source Multicast
- Application study: Replica Location Service
- Conclusions

Existing approaches
- Shared tree (NICE, ALMI, Overcast)
  - No need for an explicit routing protocol.
  - But: fragile to failures, does not exploit all available capacity, larger delays (for multi-source).
- Unstructured mesh
  - Random overlay + flooding (Gnutella)
    - Simple, resilient.
    - But: inefficient resource usage (duplicate traffic).
  - 'Measurement-based' overlays (Narada, Scattercast)
    - Unstructured overlay mesh, initially random.
    - A routing protocol extracts distribution trees for each source.
    - But: (1) overhead to build/maintain routing tables; (2) tree extraction and overlay optimization are coupled.
- Structured overlay (M-CAN, Bayeux, Pastry)
  - Scalable. Promise: structure reusability.
  - But: (1) complex protocols to maintain structure; (2) difficult to adapt to heterogeneous node/link capacities (Bharambe '05).
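The "duplicate traffic" cost of flooding can be quantified with a short counting argument. Assume an idealized flood (no TTL limit) over a connected overlay with $n$ nodes and $m$ links: the source $s$ sends to all its neighbors, and every other node forwards only the first copy it receives, to all neighbors except the one it came from. Only $n-1$ transmissions are useful (one per receiver), so the total $M$ and the duplicates $D$ are:

$$M = \deg(s) + \sum_{v \neq s} \bigl(\deg(v) - 1\bigr) = 2m - (n-1), \qquad D = M - (n-1) = 2\,(m - n + 1).$$

A tree ($m = n-1$) produces no duplicates. In a mesh where every node has degree 6 ($m = 3n$), $M = 5n + 1$ and $D = 4n + 2$: roughly five transmissions per useful delivery.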

UMM design guidelines
- Use unstructured overlays for their:
  - low construction/maintenance costs;
  - ability to map well to heterogeneous node and link characteristics;
  - adaptiveness.
- Prefer a simple design over a highly optimized, complex one: we attempt to preserve the simplicity of flooding while reducing its overheads.
  - Soft-state protocols and passive state collection are preferable to active state maintenance (deployability, reliability).
- Keep design layers independent: ability to optimize and evolve layers individually.
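The preference for soft state can be illustrated with a minimal table whose entries disappear unless they are refreshed. This is a sketch under stated assumptions: the class name, the single fixed `lifetime`, and the explicit `now` timestamps are hypothetical choices for illustration, not UMM's actual data structures.

```python
from collections.abc import Hashable
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SoftStateTable:
    """Hypothetical soft-state store: entries live only while they keep being refreshed."""

    lifetime: float  # seconds an entry survives without a refresh (assumed fixed)
    _entries: dict = field(default_factory=dict, init=False, repr=False)

    def refresh(self, key: Hashable, value: object, now: float) -> None:
        # Every (re)announcement overwrites the entry and pushes its expiry back.
        self._entries[key] = (value, now + self.lifetime)

    def lookup(self, key: Hashable, now: float) -> Optional[object]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires = entry
        if now >= expires:
            # No explicit teardown message is needed: stale state simply ages out.
            del self._entries[key]
            return None
        return value
```

A node that stops hearing refreshes (for example, after a neighbor crashes) loses the entry automatically, which is why soft state tends to be easier to deploy and more robust than explicitly maintained state.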

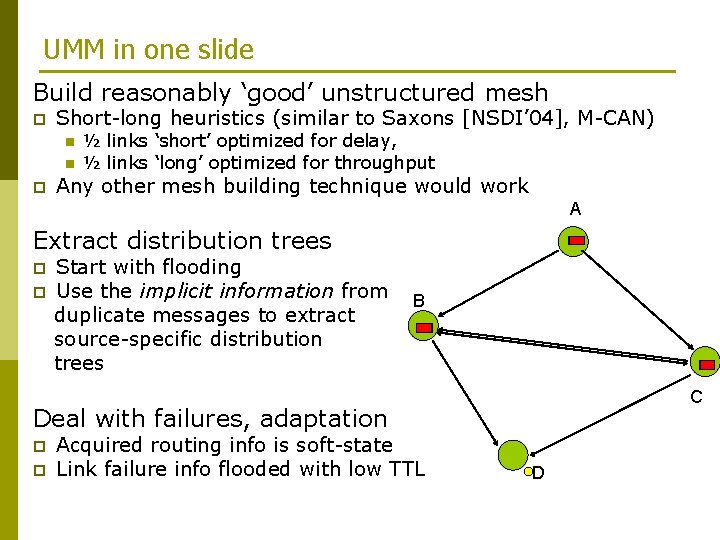

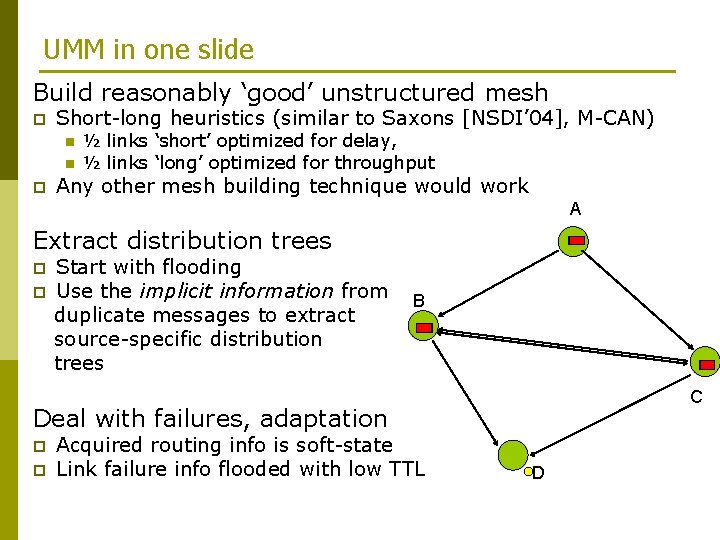

UMM in one slide
- Build a reasonably 'good' unstructured mesh.
  - Short-long heuristic (similar to Saxons [NSDI '04], M-CAN): ½ of the links are 'short' (optimized for delay), ½ are 'long' (optimized for throughput).
  - Any other mesh-building technique would work.
- Extract distribution trees.
  - Start with flooding.
  - Use the implicit information carried by duplicate messages to extract source-specific distribution trees.
- Deal with failures and adaptation.
  - Acquired routing information is soft state.
  - Link-failure information is flooded with a low TTL.
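The slide describes tree extraction only at a high level, so the following is a minimal sketch under one assumed reading of it: flood a message, let the first (lowest-latency) copy reaching each node define that node's upstream neighbor, and record every link that delivered a duplicate as "pruned" for that source. The prune rule, the event-driven latency model, and the helper names (`build_overlay`, `flood`) are assumptions for illustration, not UMM's published protocol.

```python
import heapq
from collections import defaultdict


def build_overlay(edges):
    """edges: (u, v, latency) triples -> (adjacency lists, symmetric latency map)."""
    adj, delay = defaultdict(list), {}
    for u, v, d in edges:
        adj[u].append(v)
        adj[v].append(u)
        delay[(u, v)] = delay[(v, u)] = d
    return adj, delay


def flood(adj, delay, source, pruned=frozenset()):
    """Deliver one message from `source`; return (parent, duplicate_links, transmissions).

    Each node forwards only the first copy it receives, to every neighbor except the
    one it came from and except directed links (u, v) pruned for this source.
    """
    parent = {source: None}
    duplicates = set()
    transmissions = 0
    events = []  # (arrival time, tie-breaker, sender, receiver)

    def forward(u, now):
        nonlocal transmissions
        for v in adj[u]:
            if v == parent[u] or (u, v) in pruned:
                continue
            transmissions += 1
            heapq.heappush(events, (now + delay[(u, v)], transmissions, u, v))

    forward(source, 0.0)
    while events:
        now, _, u, v = heapq.heappop(events)
        if v in parent:
            duplicates.add((u, v))  # v already had the message: u -> v was redundant
        else:
            parent[v] = u  # first copy to arrive defines v's upstream node
            forward(v, now)
    return parent, duplicates, transmissions


if __name__ == "__main__":
    adj, delay = build_overlay([(0, 1, 1), (0, 2, 2), (1, 2, 1), (1, 3, 3), (2, 3, 1), (3, 4, 1)])
    tree, dup, sent = flood(adj, delay, 0)                   # plain flooding
    tree2, dup2, sent2 = flood(adj, delay, 0, frozenset(dup))  # prunes applied
    print(sent, len(dup), sent2, len(dup2))
```

On this five-node mesh the first flood costs 8 transmissions, 4 of them duplicates; once the duplicate links are pruned, the same source reaches everyone over the extracted tree with 4 transmissions and no duplicates. In a soft-state design the prune entries would expire unless refreshed, so the mesh falls back to flooding when a tree link fails.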

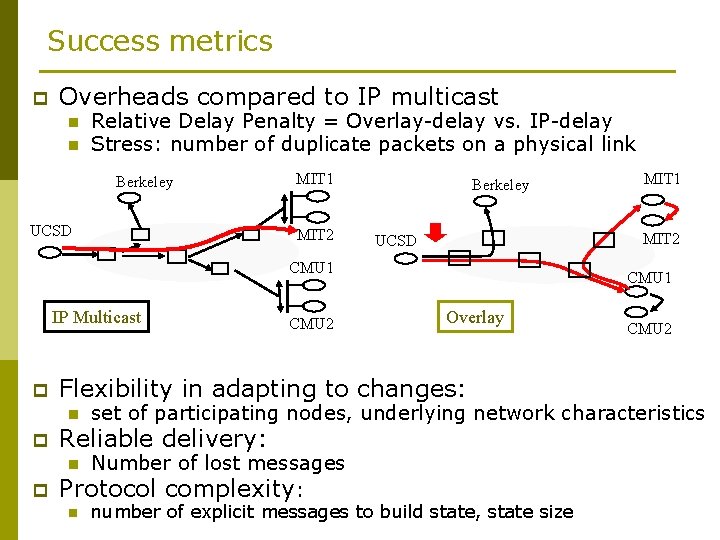

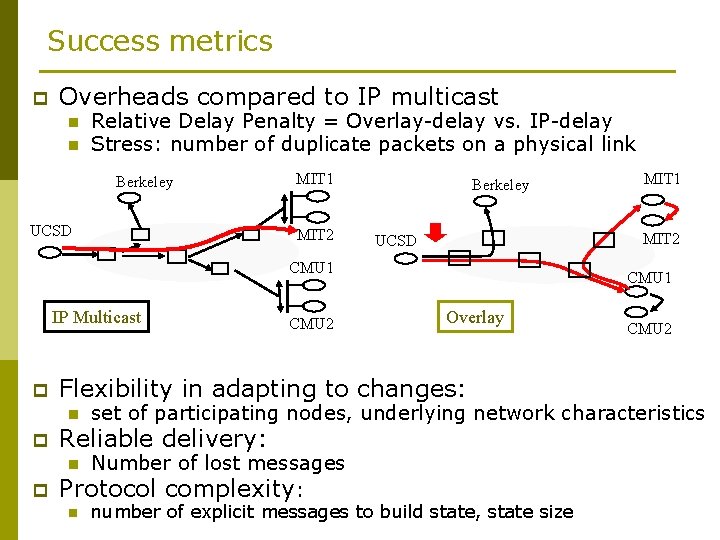

Success metrics
- Overheads compared to IP multicast:
  - Relative Delay Penalty (RDP): ratio of overlay delay to IP delay;
  - Stress: number of duplicate packets on a physical link.
- Flexibility in adapting to changes: in the set of participating nodes and in underlying network characteristics.
- Reliable delivery: number of lost messages.
- Protocol complexity: number of explicit messages to build state; state size.

(Figure: IP multicast vs. overlay multicast among hosts at Berkeley, UCSD, MIT 1, MIT 2, CMU 1, and CMU 2.)
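The two overhead metrics can be computed as follows. This is a sketch with hypothetical inputs: per-receiver delays for the overlay tree and for direct IP paths, plus a map from each overlay hop to the physical links its IP route crosses. Stress is counted as the number of copies of one multicast packet carried by each physical link (IP multicast would give 1 everywhere).

```python
from collections import Counter


def relative_delay_penalty(overlay_delay, ip_delay):
    """Per-receiver RDP: delay along the overlay tree divided by the direct IP delay."""
    return {r: overlay_delay[r] / ip_delay[r] for r in overlay_delay}


def link_stress(overlay_hops, physical_route):
    """Copies of one multicast packet carried by each (undirected) physical link.

    overlay_hops: overlay links (u, v) used by the distribution tree.
    physical_route: (u, v) -> list of physical links the IP route for u -> v crosses.
    """
    stress = Counter()
    for hop in overlay_hops:
        for link in physical_route[hop]:
            stress[frozenset(link)] += 1  # treat a physical link as undirected
    return stress


if __name__ == "__main__":
    # Hypothetical topology: source S and receivers A, B all hang off router R1.
    routes = {("S", "A"): [("S", "R1"), ("R1", "A")], ("A", "B"): [("A", "R1"), ("R1", "B")]}
    print(max(link_stress([("S", "A"), ("A", "B")], routes).values()))
    print(relative_delay_penalty({"A": 15.0, "B": 25.0}, {"A": 15.0, "B": 15.0}))
```

Here the overlay relays B's copy through A, so the R1-A link carries the packet twice (stress 2) and B's RDP is 25/15. The evaluation slides report the 90th-percentile RDP and the maximum link stress over such values.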

Evaluation methodology: the design and implementation were evaluated in two contexts.
- ModelNet, an emulated wide-area network testbed:
  - the application runs unmodified;
  - network characteristics are controlled;
  - IP multicast is used as a common baseline to compare with published results;
  - topologies: Inet-generated, 4040 routers, with end nodes randomly attached.
- PlanetLab deployment: evaluate performance when facing real network dynamics.

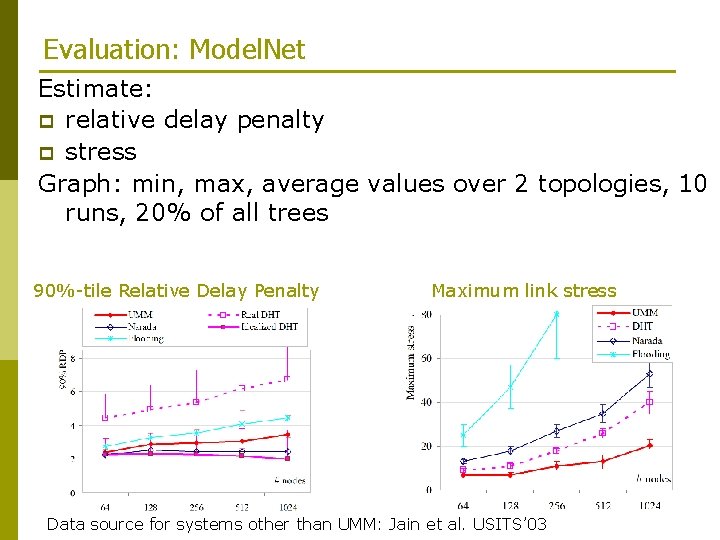

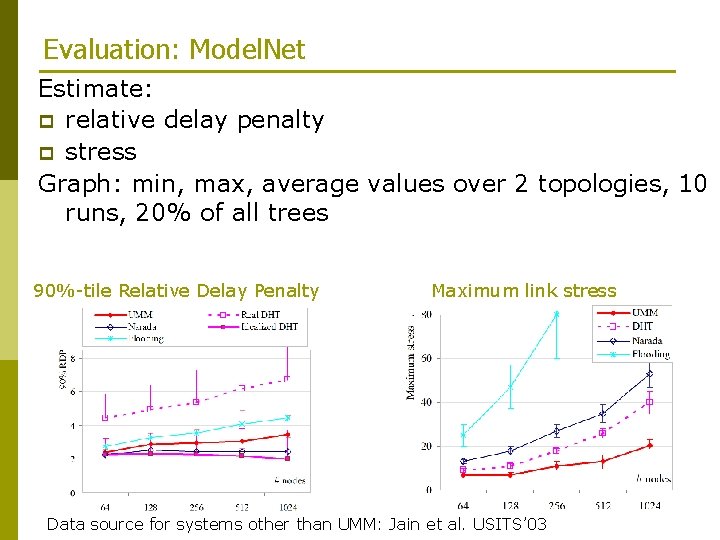

Evaluation: ModelNet
- Estimate: relative delay penalty and stress.
- Graphs: min, max, and average values over 2 topologies, 10 runs, 20% of all trees.
- Panels: 90th-percentile relative delay penalty; maximum link stress.
- Data source for systems other than UMM: Jain et al., USITS '03.

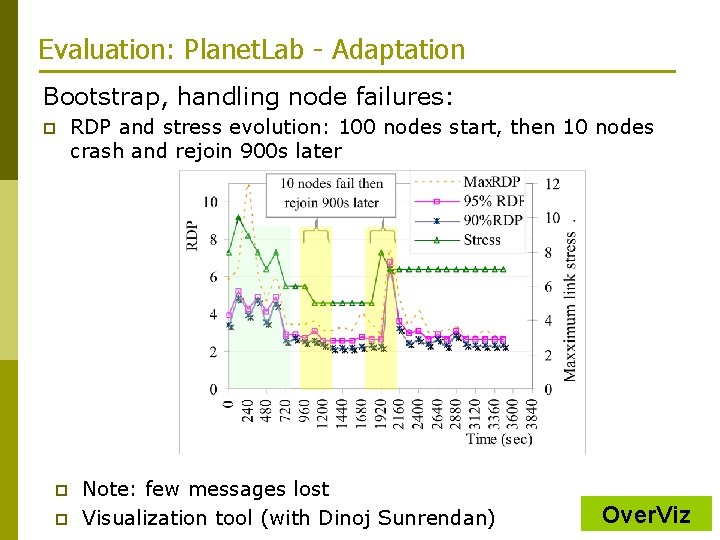

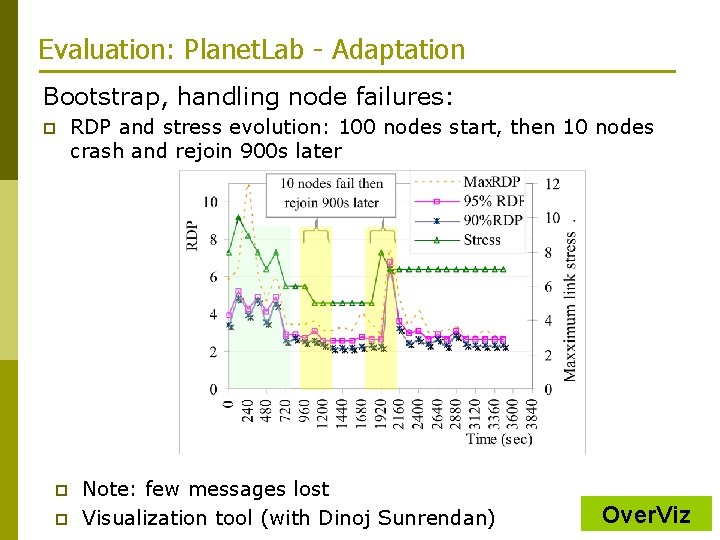

Evaluation: PlanetLab, adaptation
- Bootstrap and handling node failures:
  - RDP and stress evolution: 100 nodes start, then 10 nodes crash and rejoin 900 s later;
  - note: few messages lost.
- Visualization tool (with Dinoj Sunrendan): OverViz

Why is this solution appealing?
- Low-overhead, reliable, simple:
  - low overhead: passive data collection (simply inspecting the message flow) is used to build distribution trees;
  - reliable distributed structure to support routing: all routing state is soft state;
  - preserves the simplicity of flooding-based solutions.
- Self-organizing: nodes make independent decisions based only on local information.
- Scales with the number of sources, not with the number of passive participants.

Roadmap
- Introduction
- UMM: An Unstructured Multi-source Multicast
- Application study: Replica Location Service
- Conclusions

Application: Replica Location Service (RLS)
- Replication is often used to improve reliability, access latency, or availability.
- An efficient mechanism is needed to locate replicas:
  - map a logical ID to replica location(s);
  - common to cooperative proxy caches and distributed object systems.
- Data Grid operations:
  - a client presents an identifier (LFN = logical file name) and asks for one, many, or 'all' replica locations (PFN = physical file name);
  - a client presents a set of LFNs and asks for a site where (most) replicas of these files are already present.
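The two Data Grid queries can be expressed against a simple in-memory index. This is a hypothetical, centralized sketch meant only to pin down the semantics of each operation (the class name, method names, and the alphabetical tie-break are assumptions); the RLS design itself distributes this state across Replica Location Nodes.

```python
from collections import Counter, defaultdict


class ReplicaCatalog:
    """Hypothetical in-memory LFN -> (site, PFN) index illustrating the two queries."""

    def __init__(self):
        self._replicas = defaultdict(set)  # lfn -> {(site, pfn), ...}

    def add(self, lfn, site, pfn):
        self._replicas[lfn].add((site, pfn))

    def locate(self, lfn, limit=None):
        """One (limit=1), many (limit=k), or all (limit=None) replica locations of an LFN."""
        found = sorted(pfn for _, pfn in self._replicas.get(lfn, ()))
        return found if limit is None else found[:limit]

    def best_site(self, lfns):
        """Site already holding replicas of the most files in `lfns` (None if none do)."""
        counts = Counter()
        for lfn in set(lfns):
            for site in {site for site, _ in self._replicas.get(lfn, ())}:
                counts[site] += 1
        if not counts:
            return None
        # Ties go to the alphabetically first site, for determinism.
        return max(sorted(counts), key=lambda s: counts[s])
```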

RLS: application requirements

Requirements of data-intensive scientific applications:
- Target scale: up to 500 million replicas by 2008, 100s of sites.
- Decoupling: sites able to operate independently.
- Support for efficient location of collocated sets of replicas.
- From requirements and trace analysis:
  - lookup rates order(s) of magnitude higher than update rates;
  - flat popularity distribution for files (non-Zipf);
  - most add/update operations are done in batches.

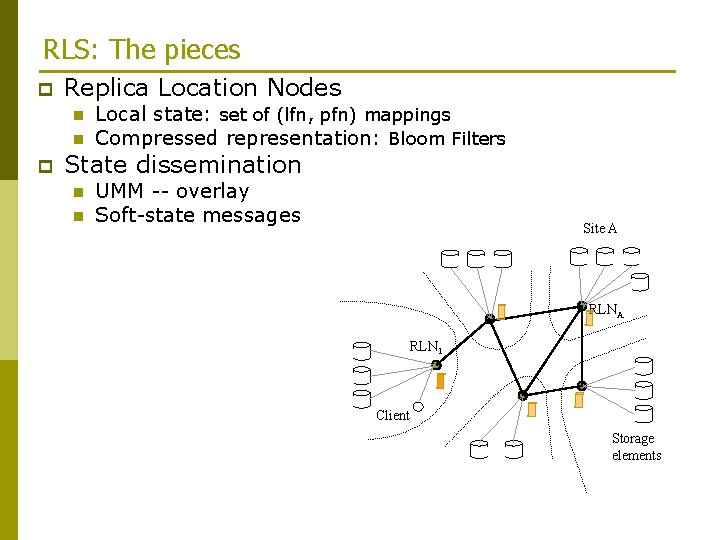

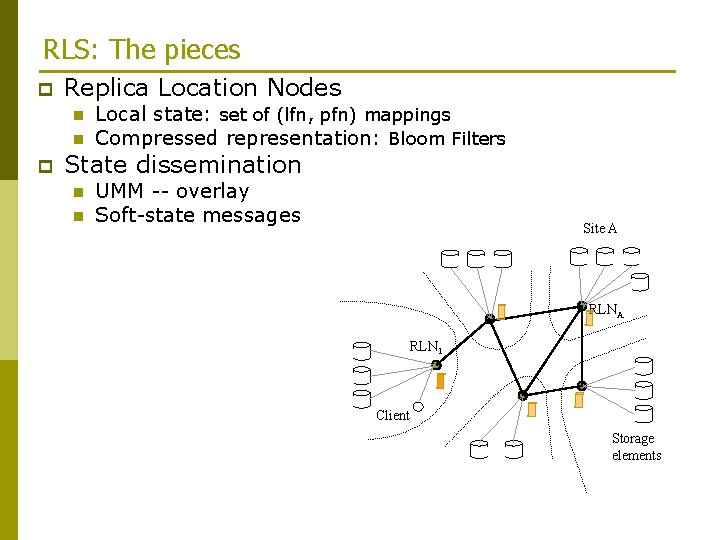

RLS: the pieces
- Replica Location Nodes (RLNs):
  - local state: a set of (lfn, pfn) mappings;
  - compressed representation: Bloom filters.
- State dissemination:
  - UMM overlay;
  - soft-state messages.

(Figure: Site A with RLN 1, a client, and storage elements.)
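A Replica Location Node can summarize the LFNs it knows about in a Bloom filter and ship that compact summary to other nodes over the overlay. The sketch below is a plain Bloom filter with assumed parameters; the class name, the SHA-256 double-hashing scheme, and the sizes are illustrative choices, not the RLS prototype's code. A plain Bloom filter cannot delete keys; supporting deletes, as the prototype reports, would need a variant such as a counting Bloom filter.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter: m bits, k hash positions per key (illustrative sketch)."""

    def __init__(self, m_bits: int, k_hashes: int):
        self.m = m_bits
        self.k = k_hashes
        self.bits = bytearray((m_bits + 7) // 8)

    def _positions(self, key: str):
        # Double hashing: derive k bit positions from two 64-bit slices of one digest.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # odd step, so positions spread out
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, key: str) -> None:
        for p in self._positions(key):
            self.bits[p // 8] |= 1 << (p % 8)

    def might_contain(self, key: str) -> bool:
        # False means "definitely absent"; True may be a false positive.
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(key))
```

A remote node that receives this summary can answer "does RLN 1 possibly know LFN x?" locally and forward the query only on a positive answer, trading a small false-positive rate for much less state and traffic than shipping the full (lfn, pfn) list.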

Discussion
- Tradeoffs:
  - accuracy (false-positive rate) vs. memory and bandwidth cost;
  - accuracy (false-negative rate, from summaries that are stale between soft-state updates) vs. bandwidth cost;
  - extra CPU and memory vs. bandwidth cost with compressed Bloom filters.
- Workload and deployment characteristics reduce the appeal of DHT-based solutions.
- Existing users prefer low-latency queries over low memory usage: the current RLS implementation offers a deployment choice.
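The first tradeoff (false-positive rate vs. memory) follows from the standard Bloom filter approximations: with $m$ bits, $n$ keys, and $k$ hash functions the false-positive rate is about $(1 - e^{-kn/m})^k$, and at the optimal $k = (m/n)\ln 2$ a target rate $p$ needs $-\ln p / (\ln 2)^2$ bits per key. The helper names below are hypothetical; the formulas are the textbook ones.

```python
import math


def bloom_bits_per_entry(fp_rate: float) -> float:
    """Bits per stored key for an optimally configured Bloom filter: -ln(p) / (ln 2)^2."""
    return -math.log(fp_rate) / (math.log(2) ** 2)


def bloom_optimal_hashes(fp_rate: float) -> int:
    """Optimal number of hash functions, k = (m/n) * ln 2, rounded to an integer."""
    return max(1, round(bloom_bits_per_entry(fp_rate) * math.log(2)))


def bloom_fp_rate(m_bits: int, n_keys: int, k_hashes: int) -> float:
    """Approximate false-positive rate of a filter: (1 - e^(-k n / m))^k."""
    return (1.0 - math.exp(-k_hashes * n_keys / m_bits)) ** k_hashes


if __name__ == "__main__":
    for p in (0.1, 0.01, 0.001):
        print(p, round(bloom_bits_per_entry(p), 2), bloom_optimal_hashes(p))
```

A 1% false-positive rate costs about 9.6 bits per entry with 7 hash functions, and every further tenfold reduction adds about 4.8 bits per entry, which directly scales both the memory held at each node and the bandwidth spent disseminating summaries.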

RLS: prototype implementation and evaluation
- Bloom filters: fast lookup (7-10 μs), add and delete operations (14 μs).
- (Isolated) Replica Location Node:
  - lookup rates up to 24,000 lookups/s;
  - add and delete: about half of the lookup performance.
- Overlay performance:
  - deployed and tested on PlanetLab;
  - meets user-defined accuracy (alternatively: caps on generated traffic);
  - congestion management: reduced accuracy for faraway nodes.

(Figure: Site A with RLN A, RLN 1, a client, and storage elements.)

Contributions: UMM, RLS
- (UMM) Unstructured multi-source multicast overlay:
  - as efficient as solutions based on structured overlays or on running full routing protocols at the overlay level;
  - simple protocol based on flooding and passive data collection;
  - self-organizing.
- (RLS) Replica Location Service:
  - application layered on top of UMM;
  - the proposed design meets the requirements of data-intensive scientific applications and is simple, decentralized, and adaptive.

- M. Ripeanu and I. Foster, "A Decentralized, Adaptive Replica Location Service," HPDC 2002.
- A. Chervenak, E. Deelman, I. Foster, A. Iamnitchi, C. Kesselman, W. Hoschek, P. Kunst, M. Ripeanu, B. Schwartzkopf, H. Stockinger, K. Stockinger, and B. Tierney, "Giggle: A Framework for Constructing Scalable Replica Location Services," SC 2002.
- M. Ripeanu, I. Foster, A. Iamnitchi, and A. Rogers, "UMM: An Unstructured Multisource Overlay," 2005 (submitted).

Open questions
- 'Geometries' to organize interactions between resources:
  - random graphs: power-law graphs are present in a number of instances (Internet, power grid, Gnutella); low cost to build and maintain;
  - structured geometries: structure can be exploited by applications; high maintenance cost when nodes are volatile and heterogeneous;
  - where is the tipping point? (Application requirements?)
- Large systems and self-organization: are there generic building blocks for self-organization?

Thank you
- More information, links to papers: http://www.cs.uchicago.edu/~matei
- Questions?