In Memory Databases InMemory Column Store in Oracle

In Memory Databases

In-Memory Column Store in Oracle Database 12 c This features allows you to store columns, tables, partitions and materialized views in memory in a columnar format, rather than the typical row format. The advantage of having data in memory is obvious, but the columnar storage lends itself extremely well to analytic queries found in business intelligence products. The In-Memory column store is a new section of the System Global Area (SGA), sized using the INMEMORY_SIZE initialization parameter. Alternatively, you can enable IM column store at the tablespace level, so all tables and materialized views in the tablespace are automatically enabled for the IM column store.

In-Memory Column Store in Oracle The documentation claims the IM column store is good for the following. Large scans that apply "=", "<", ">" and "IN" filters. Queries that return a small number of columns from a table with a large number of columns. Queries that join small tables to large tables. Queries that aggregate data. It also states it is not designed for the following. Queries with complex predicates. Queries that return a large number of columns. Queries that return large numbers of rows. Queries with multiple large table joins. The important thing to remember here is *you* will be responsible for deciding which objects will benefit the most from inclusion in the IM column store. If you choose wisely you will see big improvements in performance. If you choose badly, you will waste a lot of memory that could be used by the buffer cache.

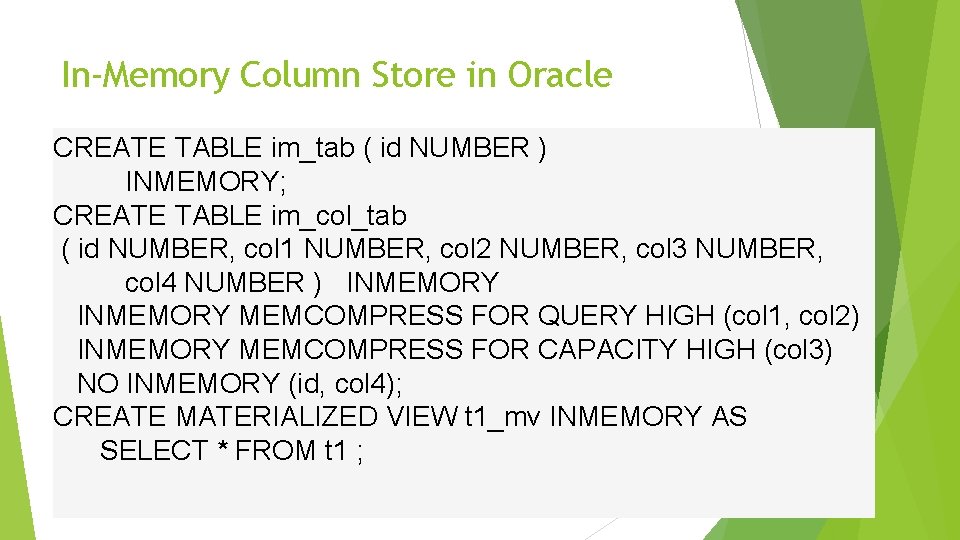

In-Memory Column Store in Oracle CREATE TABLE im_tab ( id NUMBER ) INMEMORY; CREATE TABLE im_col_tab ( id NUMBER, col 1 NUMBER, col 2 NUMBER, col 3 NUMBER, col 4 NUMBER ) INMEMORY MEMCOMPRESS FOR QUERY HIGH (col 1, col 2) INMEMORY MEMCOMPRESS FOR CAPACITY HIGH (col 3) NO INMEMORY (id, col 4); CREATE MATERIALIZED VIEW t 1_mv INMEMORY AS SELECT * FROM t 1 ;

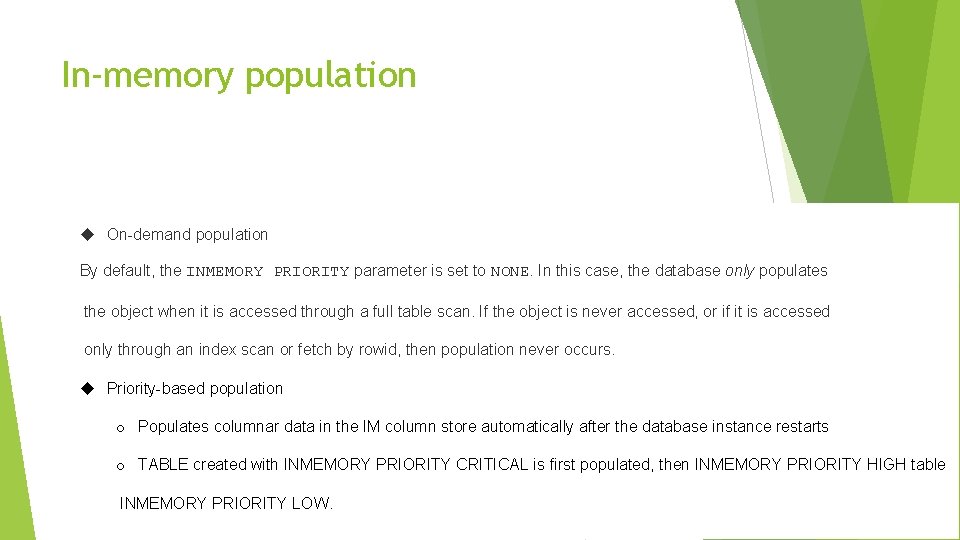

In-memory population On-demand population By default, the INMEMORY PRIORITY parameter is set to NONE. In this case, the database only populates the object when it is accessed through a full table scan. If the object is never accessed, or if it is accessed only through an index scan or fetch by rowid, then population never occurs. Priority-based population o Populates columnar data in the IM column store automatically after the database instance restarts o TABLE created with INMEMORY PRIORITY CRITICAL is first populated, then INMEMORY PRIORITY HIGH table INMEMORY PRIORITY LOW.

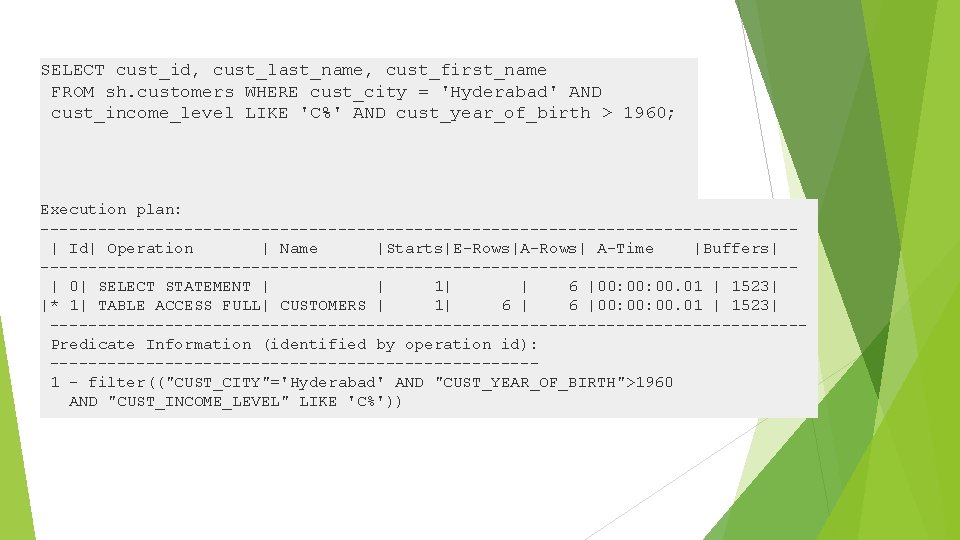

SELECT cust_id, cust_last_name, cust_first_name FROM sh. customers WHERE cust_city = 'Hyderabad' AND cust_income_level LIKE 'C%' AND cust_year_of_birth > 1960; Execution plan: ---------------------------------------| Id| Operation | Name |Starts|E-Rows|A-Rows| A-Time |Buffers| ---------------------------------------| 0| SELECT STATEMENT | | 1| | 6 |00: 00. 01 | 1523| |* 1| TABLE ACCESS FULL| CUSTOMERS | 1| 6 |00: 00. 01 | 1523| ---------------------------------------Predicate Information (identified by operation id): -------------------------1 - filter(("CUST_CITY"='Hyderabad' AND "CUST_YEAR_OF_BIRTH">1960 AND "CUST_INCOME_LEVEL" LIKE 'C%'))

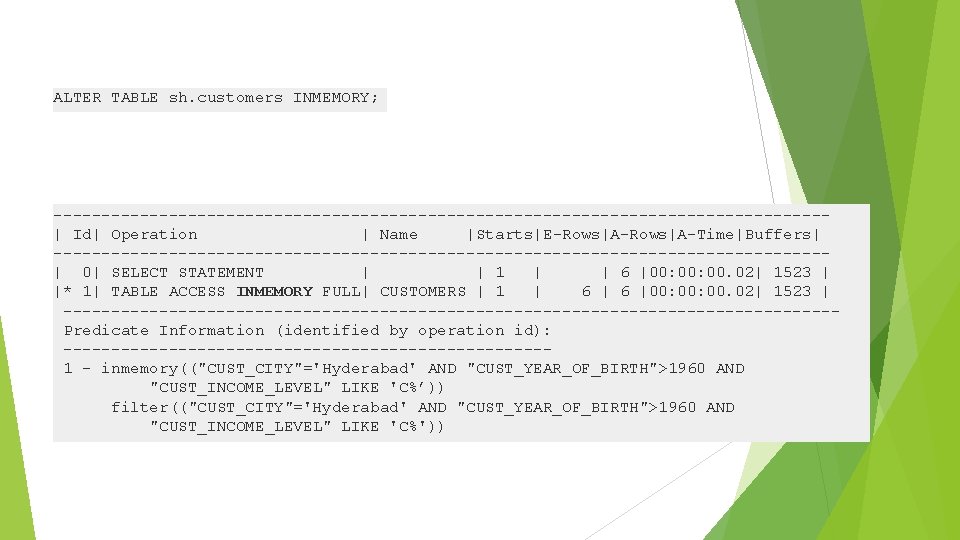

ALTER TABLE sh. customers INMEMORY; ----------------------------------------| Id| Operation | Name |Starts|E-Rows|A-Time|Buffers| ----------------------------------------| 0| SELECT STATEMENT | | 1 | | 6 |00: 00. 02| 1523 | |* 1| TABLE ACCESS INMEMORY FULL| CUSTOMERS | 1 | 6 |00: 00. 02| 1523 | ----------------------------------------Predicate Information (identified by operation id): -------------------------1 - inmemory(("CUST_CITY"='Hyderabad' AND "CUST_YEAR_OF_BIRTH">1960 AND "CUST_INCOME_LEVEL" LIKE 'C%’)) filter(("CUST_CITY"='Hyderabad' AND "CUST_YEAR_OF_BIRTH">1960 AND "CUST_INCOME_LEVEL" LIKE 'C%'))

IM Column Store Compression Methods compression is a space-saving mechanism MEMCOMPRESS FOR DML - This method results in the best DML performance. MEMCOMPRESS FOR QUERY LOW MEMCOMPRESS FOR QUERY HIGH - This method results in good query performance and saves space. MEMCOMPRESS FOR CAPACITY HIGH - This method results in the best space saving. MEMCOMPRESS FOR CAPACITY LOW - This method balances space saving and query performance.

SQL Serves Memory-Optimized Tables Memory-optimized tables are tables, created using CREATE TABLE (Transact. SQL). create table dbo. Ord (Ord. No integer not null primary key nonclustered, Ord. Date datetime not null, Cust. Code nvarchar(5) not null) with (memory_optimized=on) Memory-optimized tables are fully durable by default, and, like transactions on (traditional) disk-based tables, fully durable transactions on memoryoptimized tables are fully atomic, consistent, isolated, and durable (ACID). Memory-optimized tables and natively compiled stored procedures support a subset of Transact-SQL.

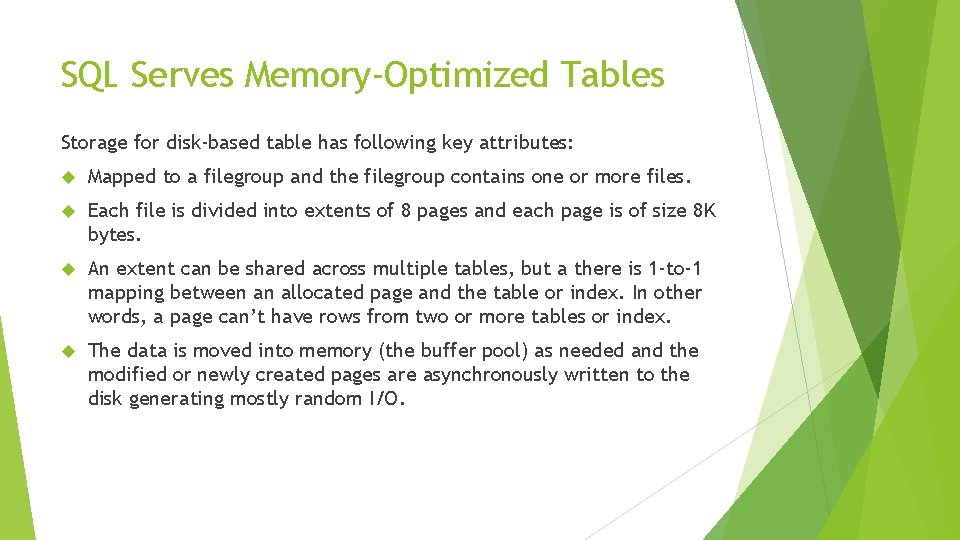

SQL Serves Memory-Optimized Tables Storage for disk-based table has following key attributes: Mapped to a filegroup and the filegroup contains one or more files. Each file is divided into extents of 8 pages and each page is of size 8 K bytes. An extent can be shared across multiple tables, but a there is 1 -to-1 mapping between an allocated page and the table or index. In other words, a page can’t have rows from two or more tables or index. The data is moved into memory (the buffer pool) as needed and the modified or newly created pages are asynchronously written to the disk generating mostly random I/O.

Storage for memory-optimized tables has following key attributes: All memory-optimized tables are mapped to a memory-optimized datafilegroup. This filegroup uses syntax and semantics similar to Filestream. The data is persisted as a row. All changes to memory-optimized tables are stored by appending to active files. Both reading and writing to files is sequential. An update is implemented as a delete followed by an insert. The deleted rows are not immediately removed from the storage. The deleted rows are removed by a background process, called MERGE, based on a policy as described in Durability for Memory-Optimized Tables. Unlike disk-based tables, storage for memory-optimized tables is not compressed. When migrating a compressed (ROW or PAGE) disk-based table to memory-optimized table, you will need to account for the change in size. A memory-optimized table can be durable or can be non-durable. You only need to configure storage for durable memory-optimized tables.

Considerations for indexes on memoryoptimized tables The baseline index for memory-optimized tables is the NONCLUSTERED index, which supports: point lookups (index seek on equality predicate), range scans (index seek in inequality predicate), full index scans, and ordered scans. searching on leading columns of the index key. In fact memory-optimized NONCLUSTERED indexes support all the operations supported by disk-based NONCLUSTERED indexes, with the only exception being backward scans. Therefore, using NONCLUSTERED indexes is a safe choice for your indexes. HASH indexes can be used to further optimize the workload. are particularly optimized for point lookups and row inserts. do not support range scans, ordered scans, or search on leading index key columns. it is necessary to specify the bucket_count at create time. It should usually be set at between one and two times the number of index key values, but overestimating is usually not a problem.

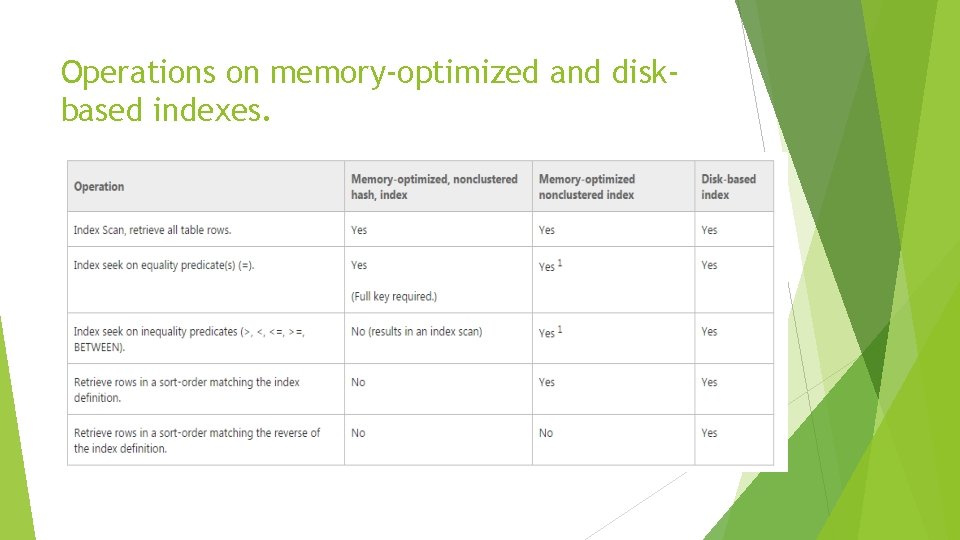

Operations on memory-optimized and diskbased indexes.

- Slides: 13