


Improving the Performance of Network Intrusion Detection Using Graphics Processors

Giorgos Vasiliadis. Master Thesis Presentation, Computer Science Department, University of Crete

Motivation

- Pattern matching is a crucial component of network intrusion detection systems
  - Thousands of patterns
  - Must sustain high rates (e.g. gigabit)
  - Multi-pattern search is not sufficient
- Parallel matching provides a scalable solution

Objectives

- Offload the pattern matching operations to the graphics card
  - Highly parallel computational device
  - Low cost
- Match thousands of network packets concurrently, instead of one at a time

Roadmap

- Introduction
- Design
- Evaluation
- Conclusions

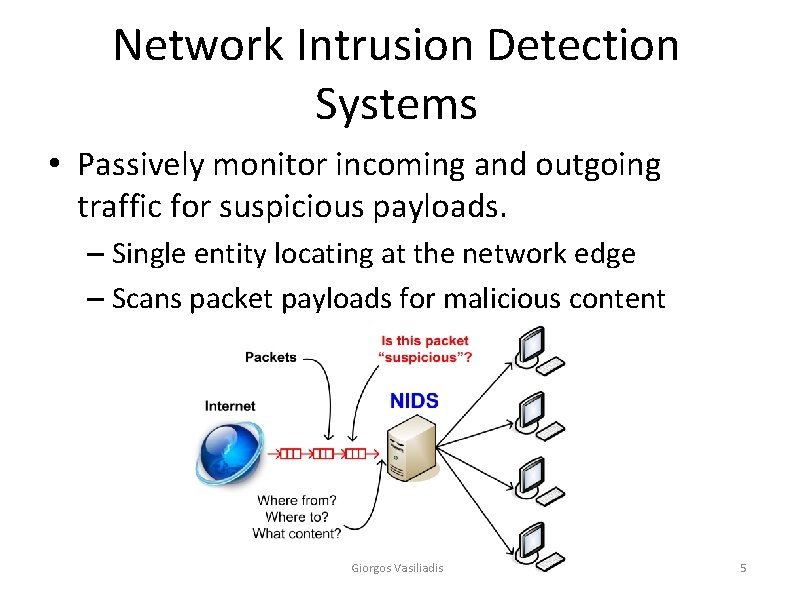

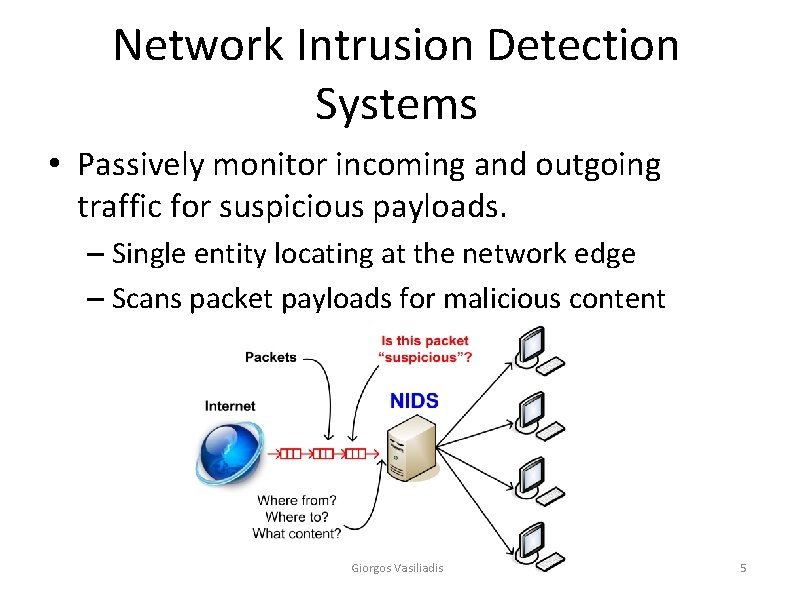

Network Intrusion Detection Systems

- Passively monitor incoming and outgoing traffic for suspicious payloads
  - Single entity located at the network edge
  - Scans packet payloads for malicious content

Pattern Matching Algorithms

- Essential for any signature-based NIDS
  - The algorithms were not necessarily motivated by intrusion detection
  - At its core, it is just string searching

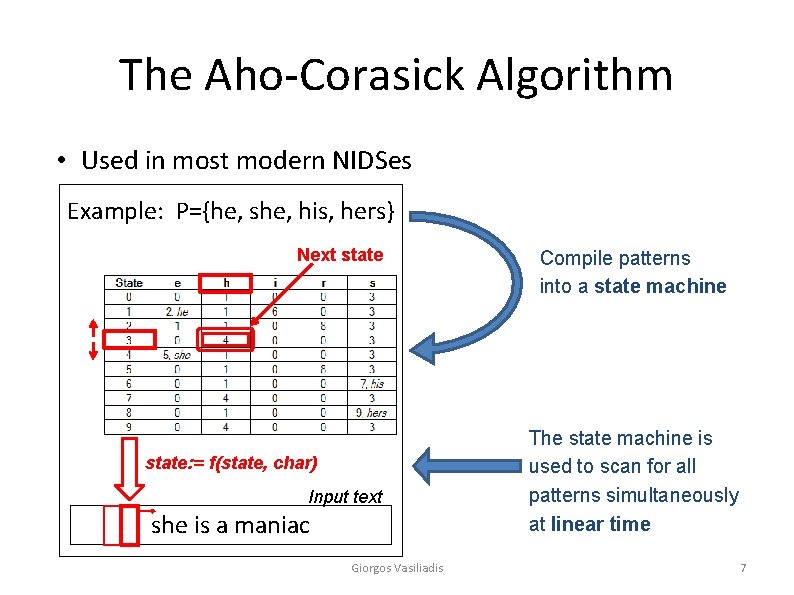

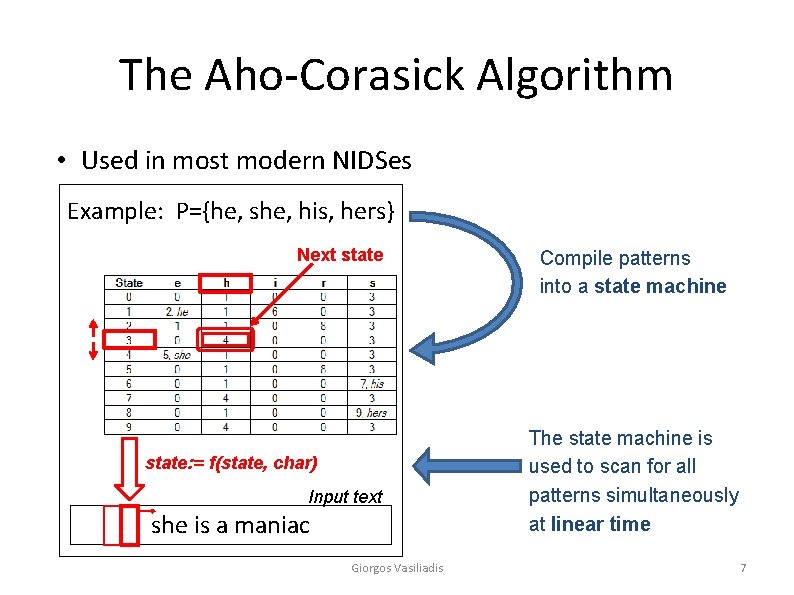

The Aho-Corasick Algorithm

- Used in most modern NIDSes
- The patterns are compiled into a state machine
- The state machine scans for all patterns simultaneously in linear time
- Next state = f(state, char)
- Example: P = {he, she, his, hers}, input text "she is a maniac"
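
The slide's example can be traced with a minimal sketch of the textbook Aho-Corasick construction. This is an illustration only, not the Snort or GPU implementation from the thesis; the function names `build_automaton` and `search` are hypothetical.

```python
from collections import deque

def build_automaton(patterns):
    """Build goto, failure, and output tables for the given patterns."""
    goto = [{}]   # goto[state][char] -> next state
    fail = [0]    # failure link of each state
    out = [[]]    # patterns recognized on entering each state

    # Phase 1: insert every pattern into a trie.
    for pat in patterns:
        state = 0
        for ch in pat:
            if ch not in goto[state]:
                goto.append({})
                fail.append(0)
                out.append([])
                goto[state][ch] = len(goto) - 1
            state = goto[state][ch]
        out[state].append(pat)

    # Phase 2: breadth-first pass that computes the failure links.
    queue = deque(goto[0].values())
    while queue:
        state = queue.popleft()
        for ch, nxt in goto[state].items():
            queue.append(nxt)
            f = fail[state]
            while f and ch not in goto[f]:
                f = fail[f]
            fail[nxt] = goto[f].get(ch, 0)
            # A state also reports every pattern its failure state reports.
            out[nxt] = out[nxt] + out[fail[nxt]]
    return goto, fail, out

def search(text, automaton):
    """Scan text once and return (start_offset, pattern) for every match."""
    goto, fail, out = automaton
    state = 0
    matches = []
    for i, ch in enumerate(text):
        while state and ch not in goto[state]:
            state = fail[state]
        state = goto[state].get(ch, 0)
        for pat in out[state]:
            matches.append((i - len(pat) + 1, pat))
    return matches

automaton = build_automaton(["he", "she", "his", "hers"])
print(search("she is a maniac", automaton))
```

Each input character is consumed exactly once, which is where the linear-time scan comes from; both "she" and "he" are reported when the third character is read.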

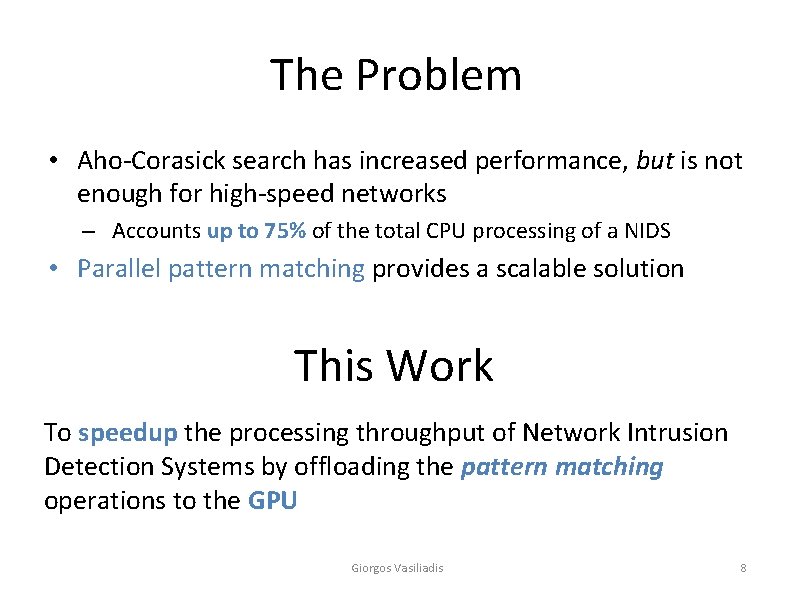

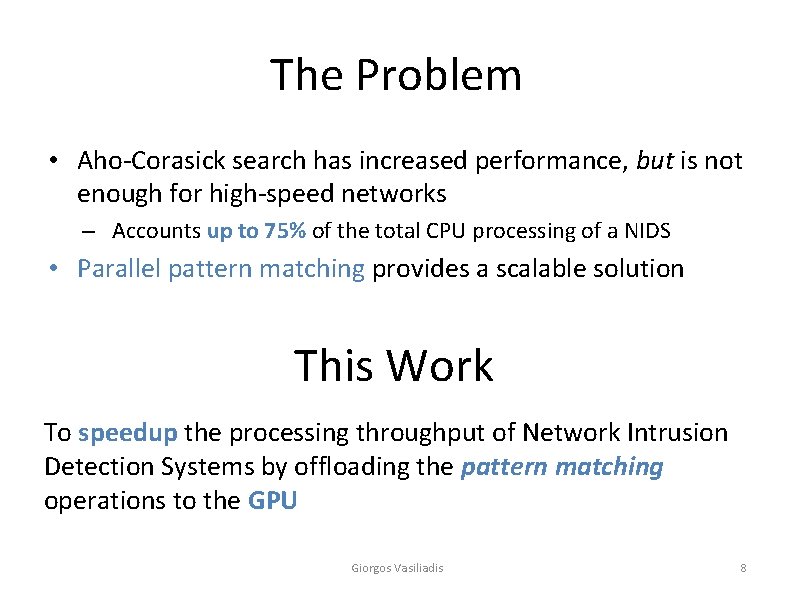

The Problem

- Aho-Corasick search improves performance, but not enough for high-speed networks
  - Accounts for up to 75% of the total CPU processing of a NIDS
- Parallel pattern matching provides a scalable solution

This Work

- Speed up the processing throughput of Network Intrusion Detection Systems by offloading the pattern matching operations to the GPU

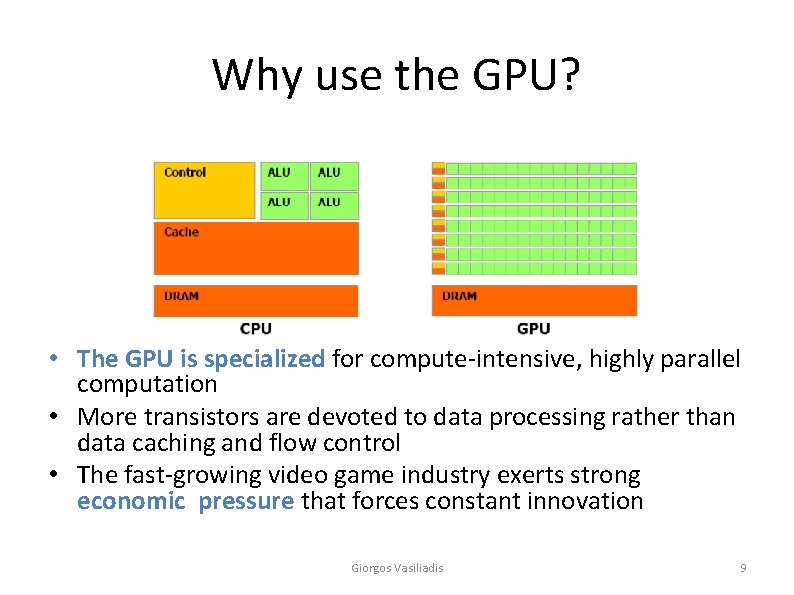

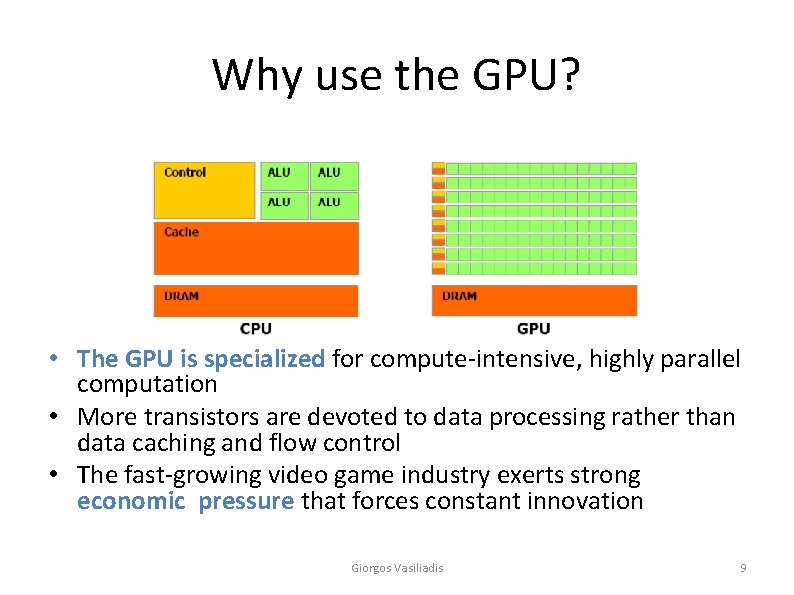

Why use the GPU?

- The GPU is specialized for compute-intensive, highly parallel computation
- More transistors are devoted to data processing rather than data caching and flow control
- The fast-growing video game industry exerts strong economic pressure that forces constant innovation

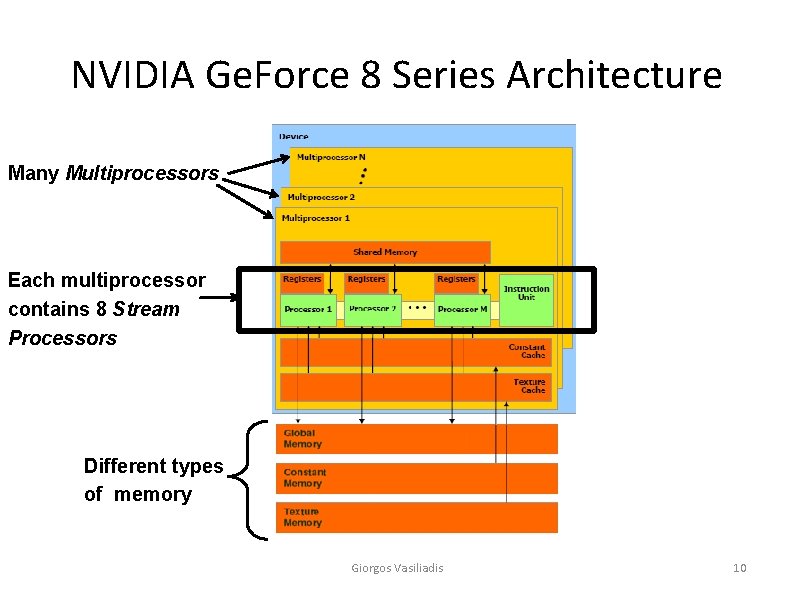

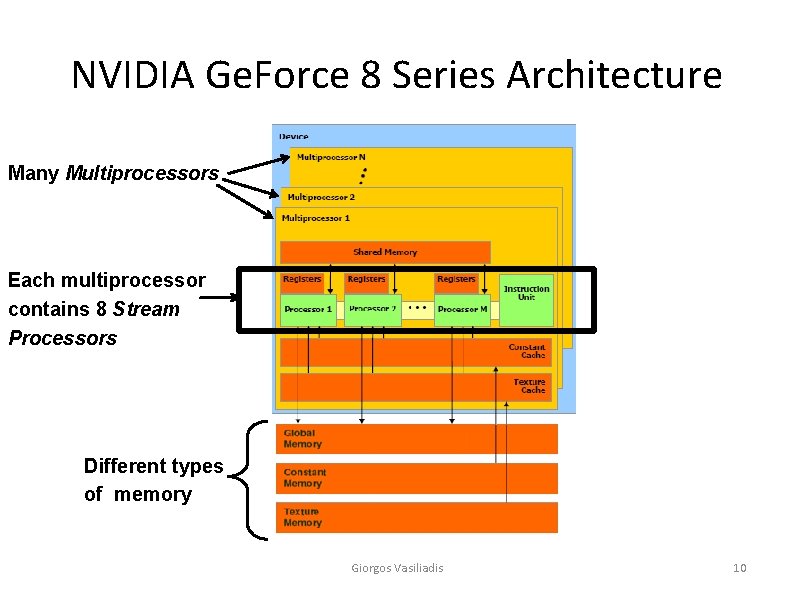

NVIDIA GeForce 8 Series Architecture

- Many Multiprocessors
- Each multiprocessor contains 8 Stream Processors
- Different types of memory

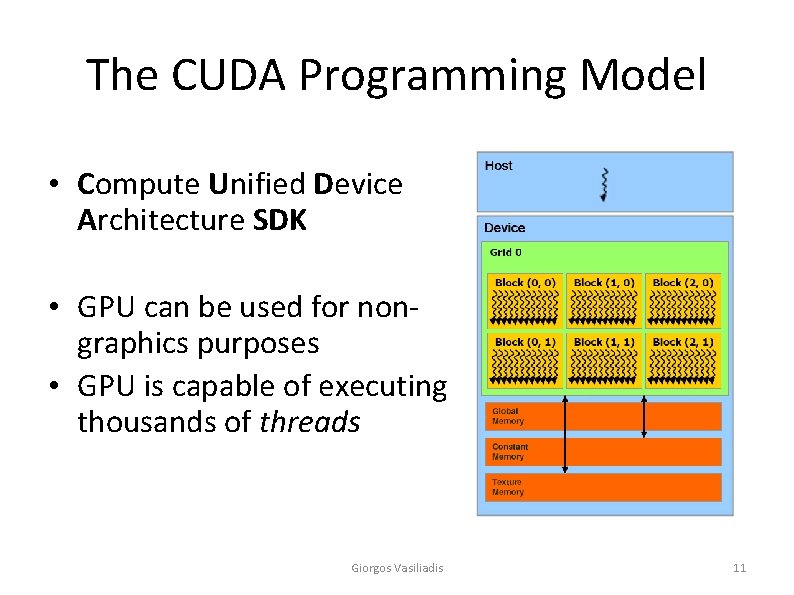

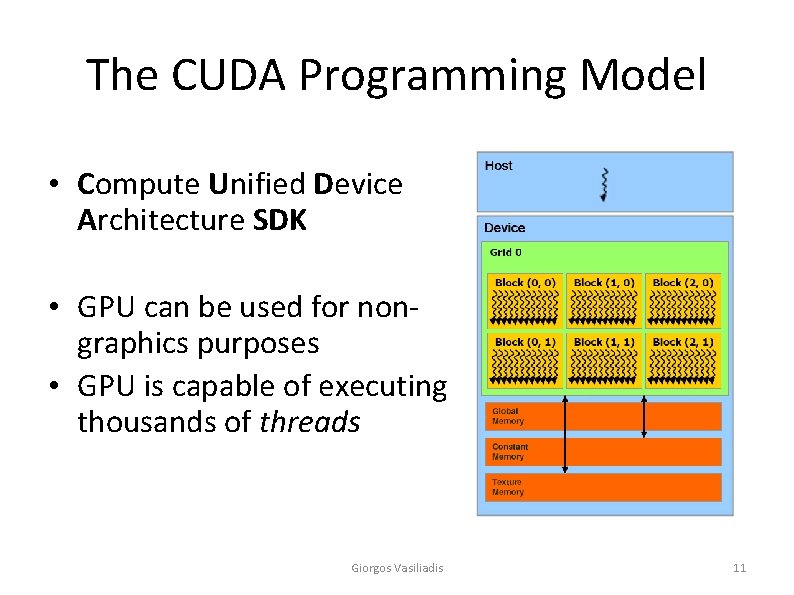

The CUDA Programming Model

- Compute Unified Device Architecture SDK
- The GPU can be used for non-graphics purposes
- The GPU is capable of executing thousands of threads


Implementation within Snort

- Snort is the most widely used Network Intrusion Detection System
  - Open-source
  - Contains a large number of threat signatures

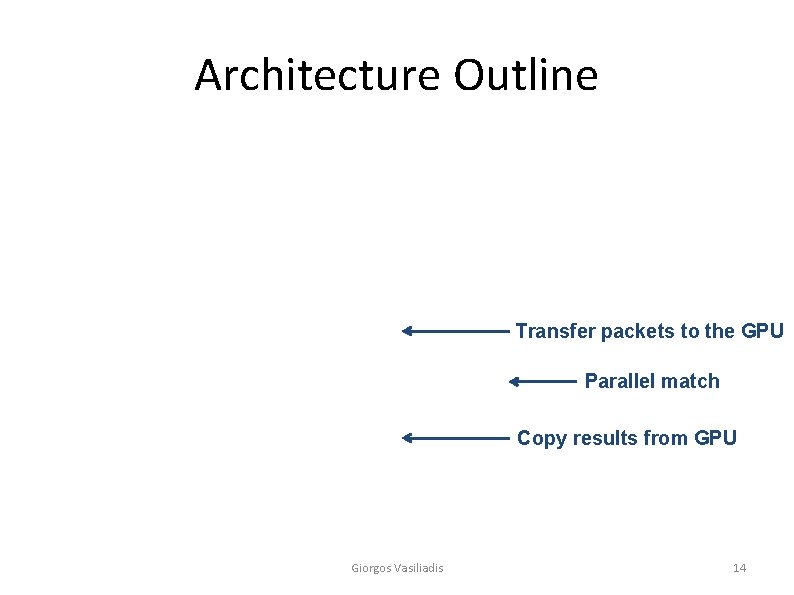

Architecture Outline

- Transfer packets to the GPU
- Parallel match
- Copy results from the GPU

Challenges

- Overhead of moving data to/from the GPU
  - Additional communication costs
- Parallelize the packet inspection process
  - Map packet data to processing elements

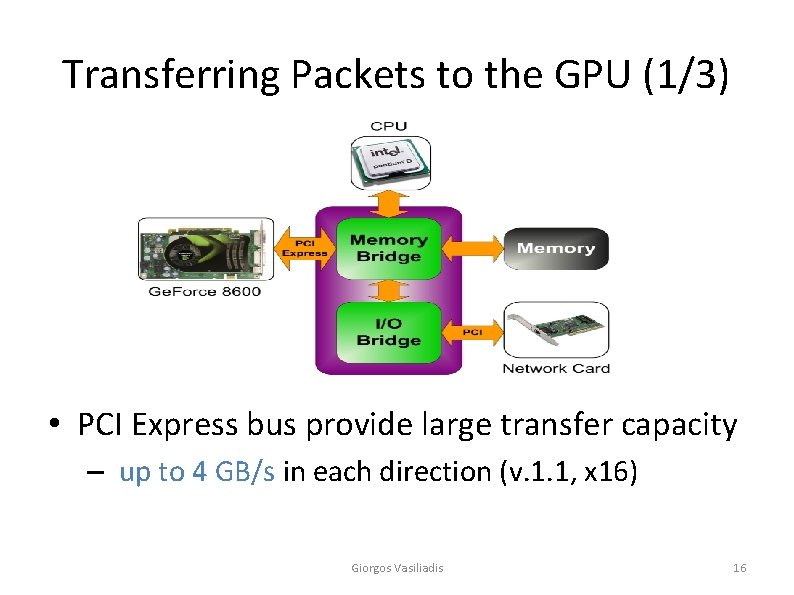

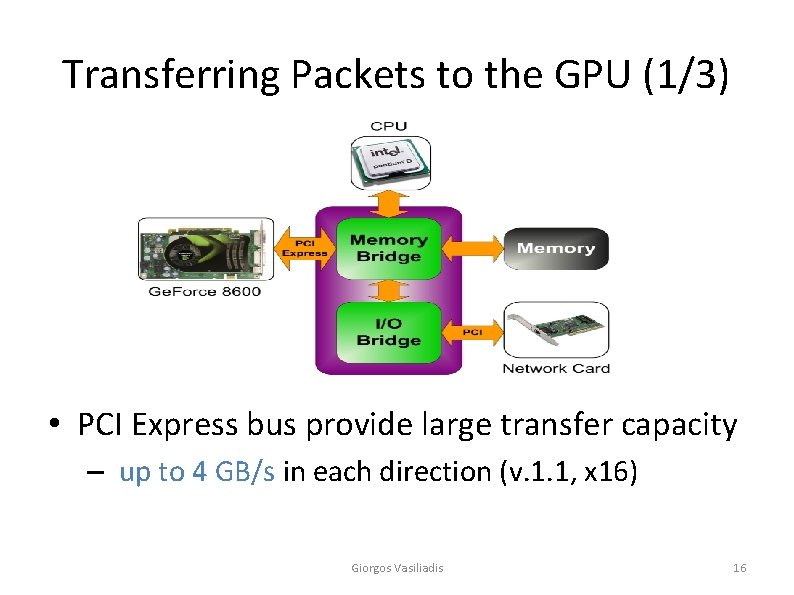

Transferring Packets to the GPU (1/3)

- The PCI Express bus provides large transfer capacity
  - Up to 4 GB/s in each direction (v1.1, x16)
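
One way to arrive at the 4 GB/s figure is the back-of-the-envelope calculation below. It assumes the standard PCI Express 1.x parameters, which the slide does not state: a signalling rate of 2.5 GT/s per lane and 8b/10b line coding (8 payload bits carried in every 10 transmitted bits). The function name is hypothetical.

```python
RAW_BITS_PER_LANE = 2_500_000_000  # assumed PCIe 1.x signalling rate per lane, bits/s
LANES = 16                         # x16 slot, as on the slide

def pcie_v1_bytes_per_second(lanes=LANES, raw_bits_per_lane=RAW_BITS_PER_LANE):
    """Usable bytes per second in one direction after 8b/10b coding."""
    payload_bits = lanes * raw_bits_per_lane * 8 // 10  # 8 data bits per 10 line bits
    return payload_bits // 8                            # bits -> bytes

print(pcie_v1_bytes_per_second() / 1e9, "GB/s per direction")
```

Under these assumptions each lane carries 250 MB/s, so sixteen lanes give the quoted 4 GB/s in each direction.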

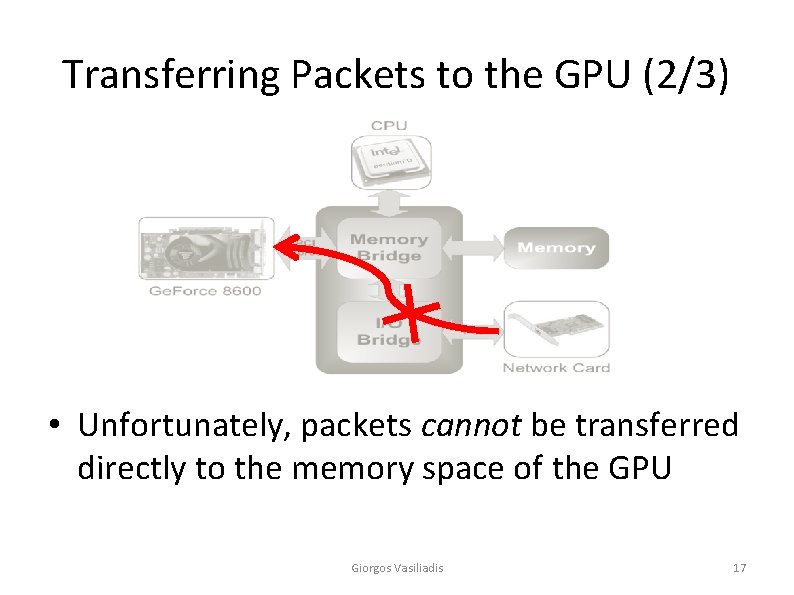

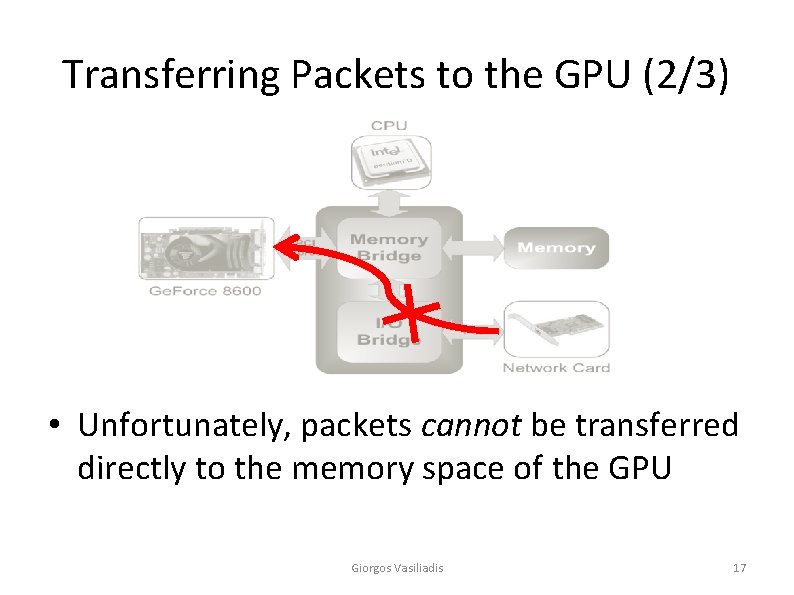

Transferring Packets to the GPU (2/3)

- Unfortunately, packets cannot be transferred directly to the memory space of the GPU

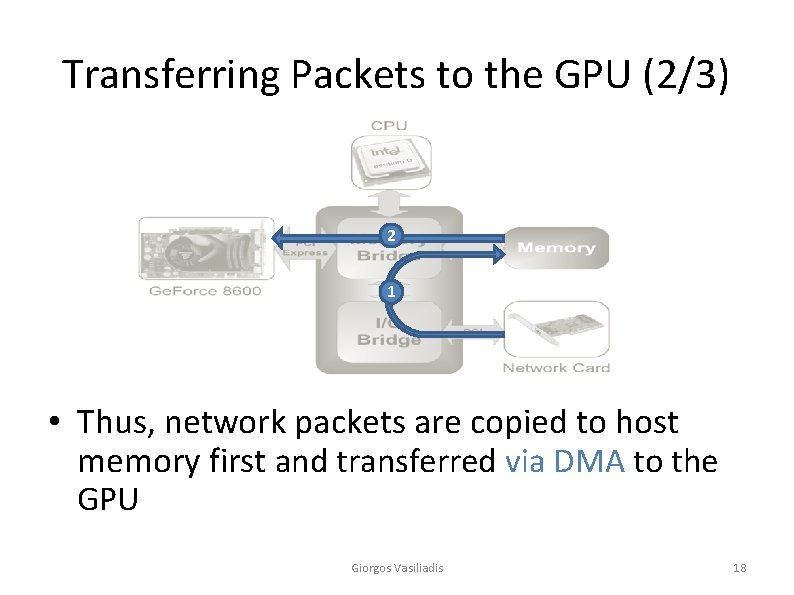

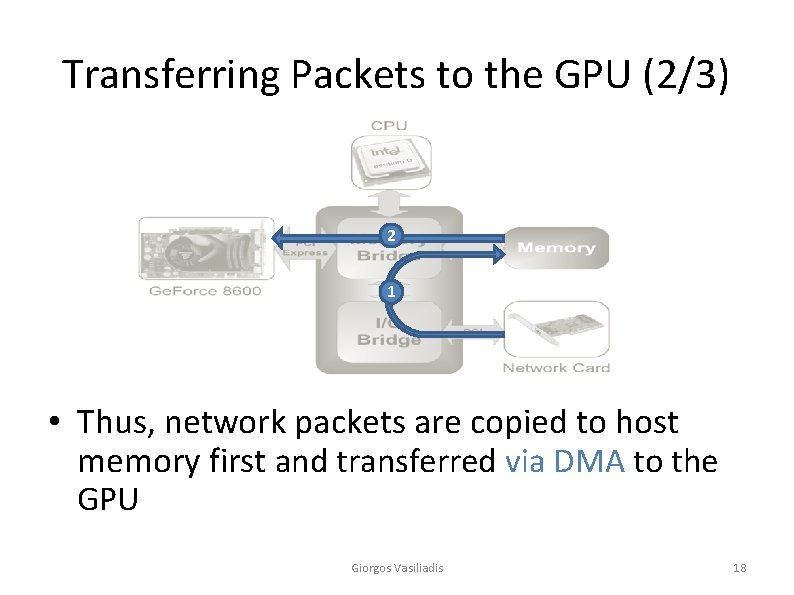

Transferring Packets to the GPU (2/3)

- Thus, network packets are copied to host memory first and then transferred via DMA to the GPU

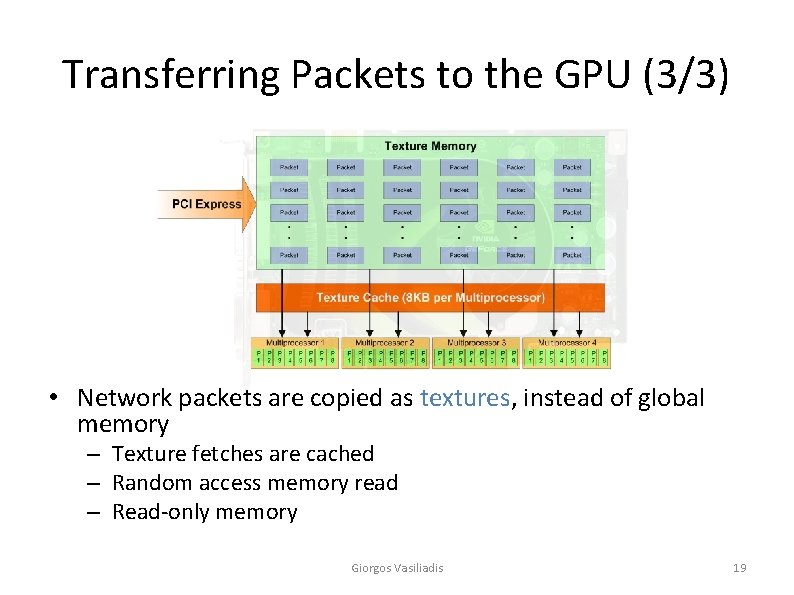

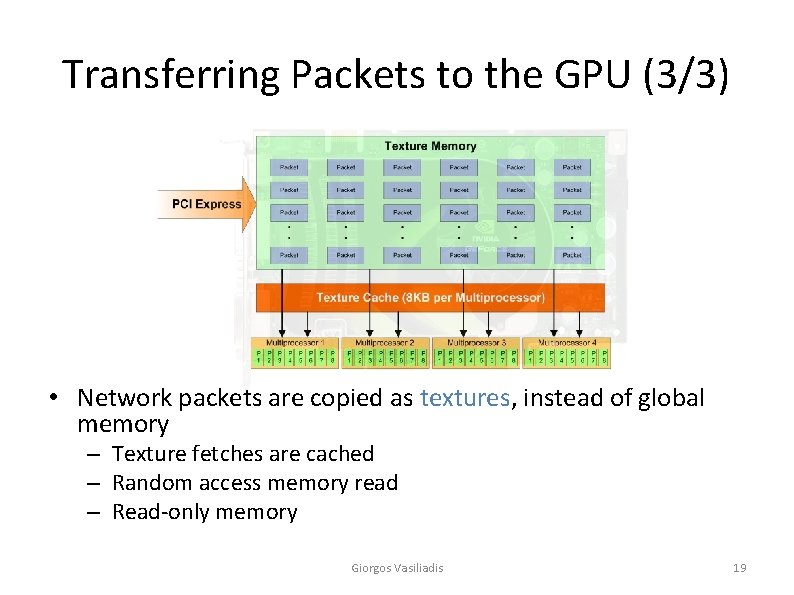

Transferring Packets to the GPU (3/3)

- Network packets are copied to the GPU as textures, rather than to global memory
  - Texture fetches are cached
  - Random-access memory reads
  - Read-only memory

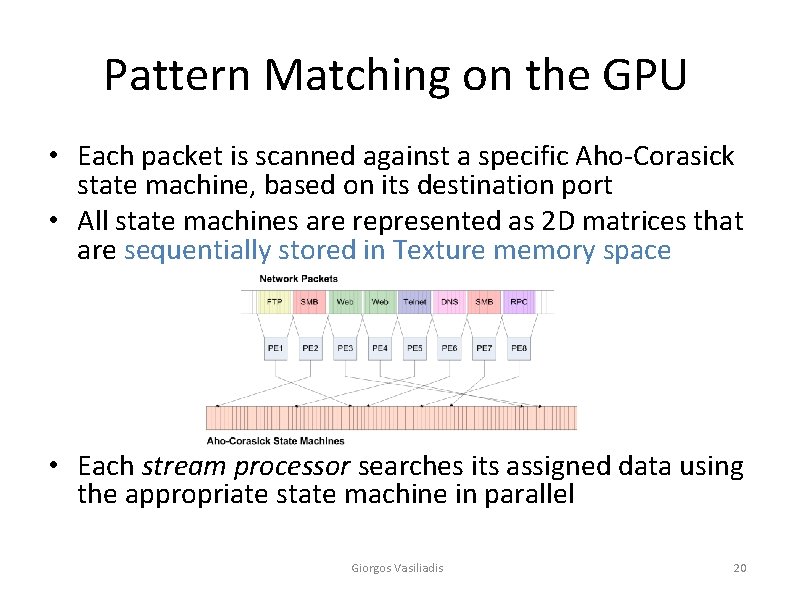

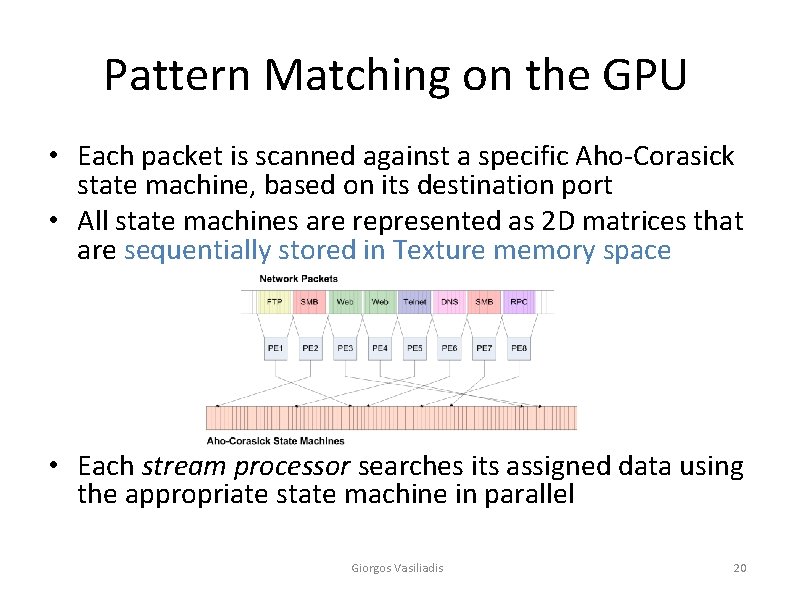

Pattern Matching on the GPU

- Each packet is scanned against a specific Aho-Corasick state machine, based on its destination port
- All state machines are represented as 2D matrices that are stored sequentially in the texture memory space
- Each stream processor searches its assigned data in parallel, using the appropriate state machine
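
The 2D-matrix representation and the per-port selection can be sketched on the CPU as follows. This is a simplified illustration, not the thesis code: the table is built by brute force rather than from an Aho-Corasick trie, and the port numbers, patterns, and names (`build_dense_table`, `scan`, `inspect`, `machines`) are all made up for the example.

```python
def build_dense_table(patterns):
    """Return (table, accepting): table[state][byte] -> next state."""
    # One state per distinct pattern prefix; the empty prefix is state 0.
    prefixes = sorted({p[:i] for p in patterns for i in range(len(p) + 1)},
                      key=lambda s: (len(s), s))
    index = {pre: i for i, pre in enumerate(prefixes)}
    table = [[0] * 256 for _ in prefixes]
    accepting = [[p for p in patterns if pre.endswith(p)] for pre in prefixes]
    for pre, row in zip(prefixes, table):
        for byte in range(256):
            cand = pre + bytes([byte])
            # Fall back to the longest suffix that is still a pattern prefix.
            while cand not in index:
                cand = cand[1:]
            row[byte] = index[cand]
    return table, accepting

def scan(payload, table, accepting):
    """One table lookup per payload byte; returns (pattern, offset) pairs."""
    state, hits = 0, []
    for pos, byte in enumerate(payload):
        state = table[state][byte]
        for pat in accepting[state]:
            hits.append((pat, pos - len(pat) + 1))
    return hits

# Hypothetical rule groups keyed by destination port.
machines = {
    80: build_dense_table([b"cmd.exe", b"/etc/passwd"]),
    21: build_dense_table([b"USER root"]),
}

def inspect(dst_port, payload):
    """Pick the state machine for the packet's destination port and scan."""
    if dst_port not in machines:
        return []
    return scan(payload, *machines[dst_port])

print(inspect(80, b"GET /etc/passwd HTTP/1.0"))
```

A dense table costs 256 entries per state, but the inner loop becomes a single indexed read per byte, which suits a cached, read-only memory such as the texture memory described above.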

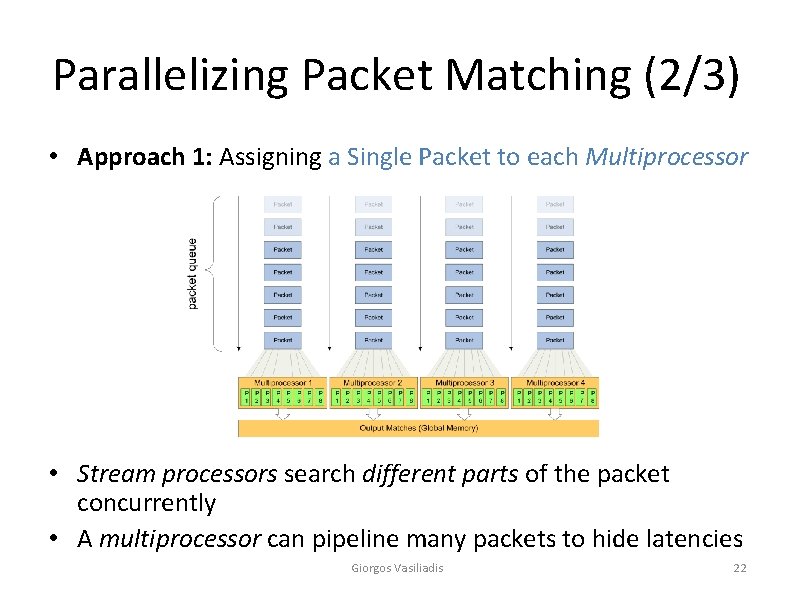

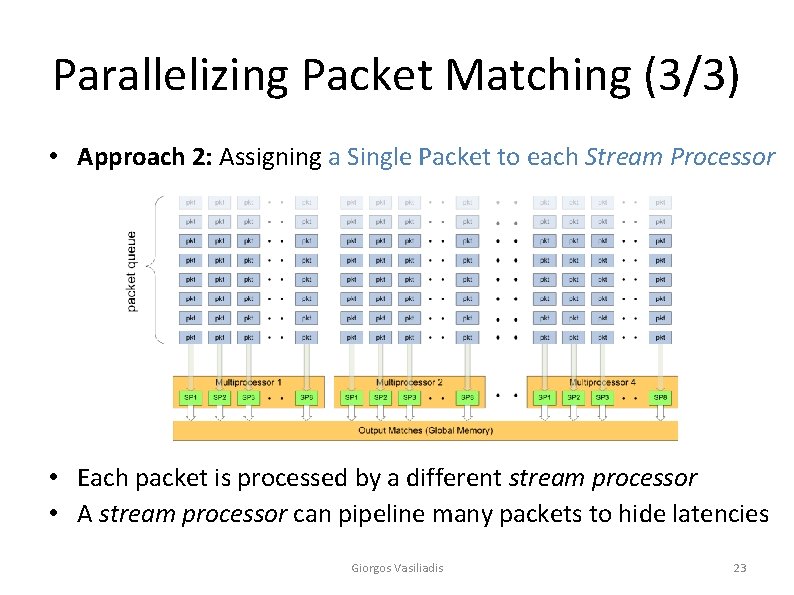

Parallelizing Packet Matching (1/3)

- Perform data-parallel pattern matching
- Distribute packets across Processing Elements
  - The GeForce 8600 contains 32 Stream Processors organized in 4 Multiprocessors
- We have explored two different approaches for parallelizing the searching phase

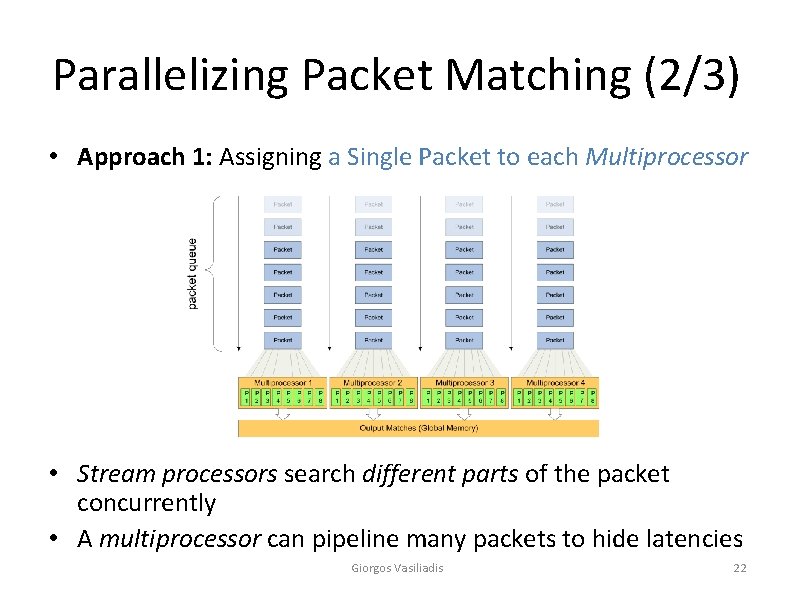

Parallelizing Packet Matching (2/3)

- Approach 1: Assigning a Single Packet to each Multiprocessor
- Stream processors search different parts of the packet concurrently
- A multiprocessor can pipeline many packets to hide latencies

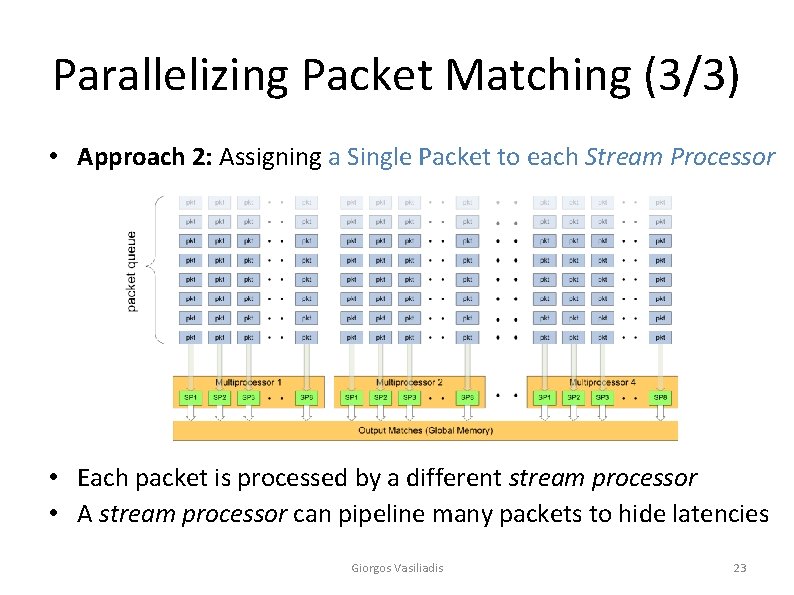

Parallelizing Packet Matching (3/3)

- Approach 2: Assigning a Single Packet to each Stream Processor
- Each packet is processed by a different stream processor
- A stream processor can pipeline many packets to hide latencies

Saving the results in the GPU

- Pattern matches for each packet are appended to a two-dimensional array in global device memory
- For each match, we store
  - the ID of the matched pattern
  - the index inside the packet where it was found
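
A possible layout for such a result array is sketched below. The slide only says that the array is two-dimensional and holds a pattern ID and an index per match; the per-packet capacity `MAX_MATCHES`, the leading match counter in each row, and all function names are assumptions made for illustration.

```python
MAX_MATCHES = 4  # assumed fixed capacity per packet (not given in the source)

def make_results(num_packets):
    """One row per packet: [count, id0, offset0, id1, offset1, ...]."""
    return [[0] * (1 + 2 * MAX_MATCHES) for _ in range(num_packets)]

def record_match(results, packet, pattern_id, offset):
    """Append one match to the packet's row; return False if the row is full."""
    row = results[packet]
    count = row[0]
    if count >= MAX_MATCHES:
        return False
    row[1 + 2 * count] = pattern_id
    row[2 + 2 * count] = offset
    row[0] = count + 1
    return True

def read_matches(results, packet):
    """Decode a row back into (pattern_id, offset) pairs."""
    row = results[packet]
    return [(row[1 + 2 * i], row[2 + 2 * i]) for i in range(row[0])]

results = make_results(num_packets=3)
record_match(results, packet=1, pattern_id=42, offset=17)
record_match(results, packet=1, pattern_id=7, offset=230)
print(read_matches(results, 1))
```

Because every packet owns its own row, threads working on different packets never write to the same location, and the whole array can be copied back to the host in one transfer.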

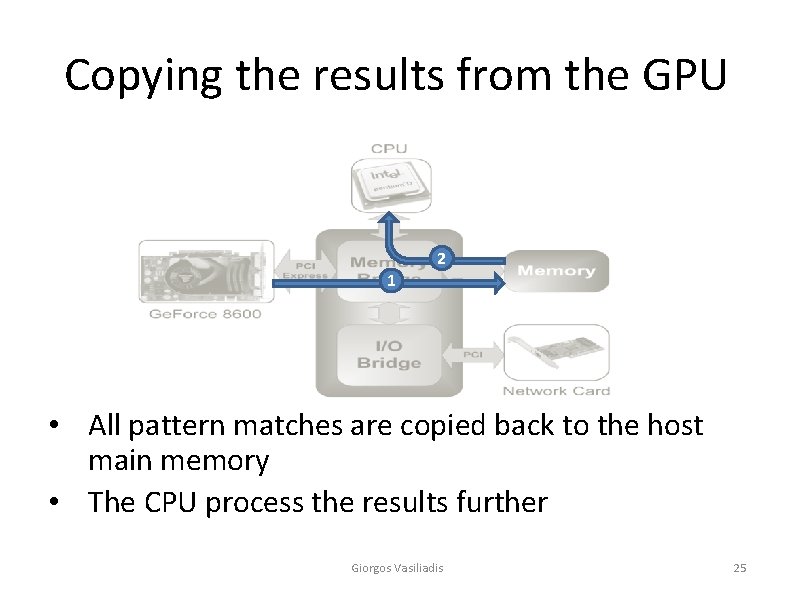

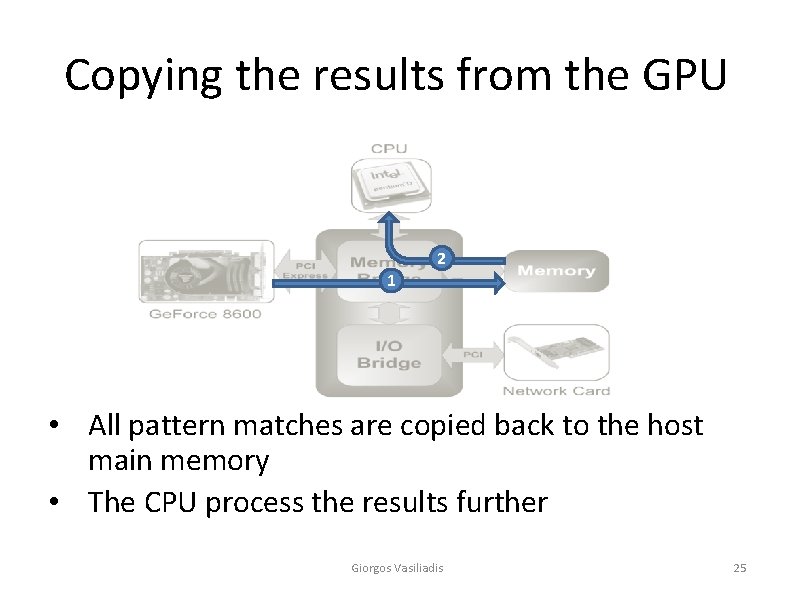

Copying the results from the GPU

- All pattern matches are copied back to the host main memory
- The CPU processes the results further

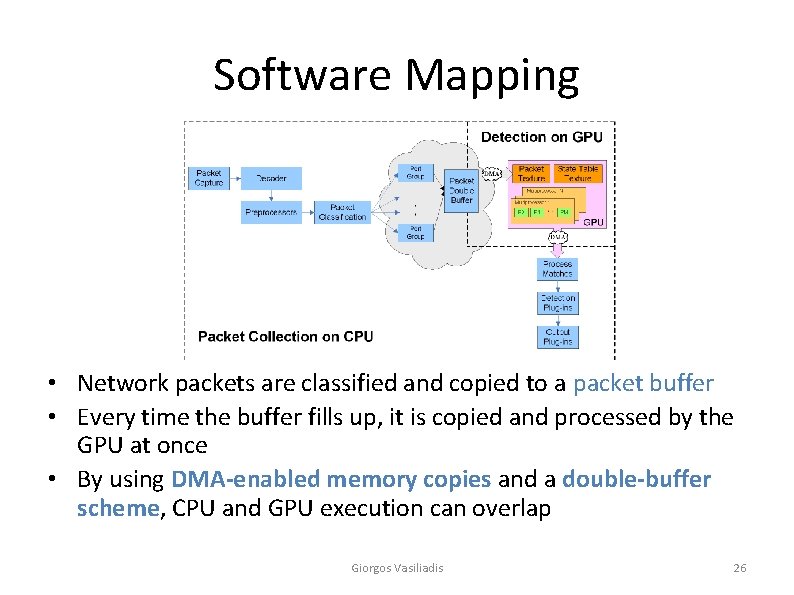

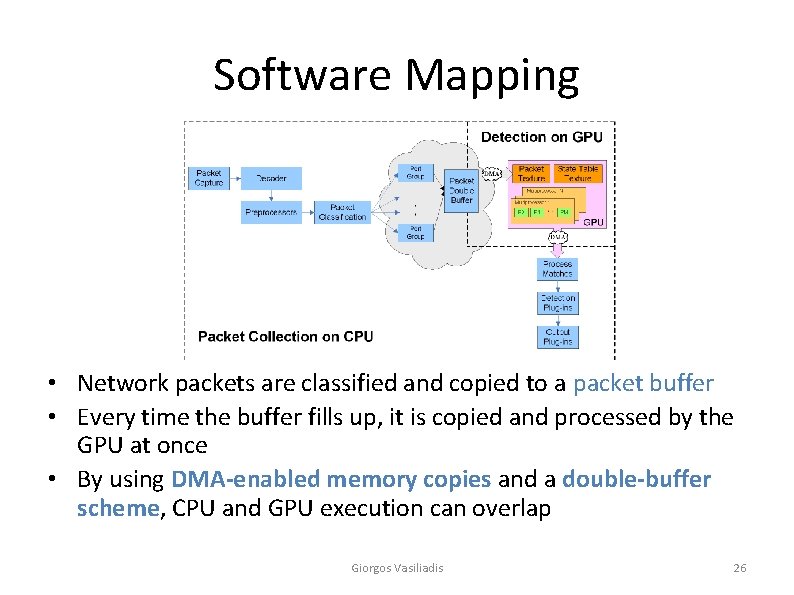

Software Mapping

- Network packets are classified and copied to a packet buffer
- Every time the buffer fills up, it is copied to and processed by the GPU at once
- By using DMA-enabled memory copies and a double-buffer scheme, CPU and GPU execution can overlap

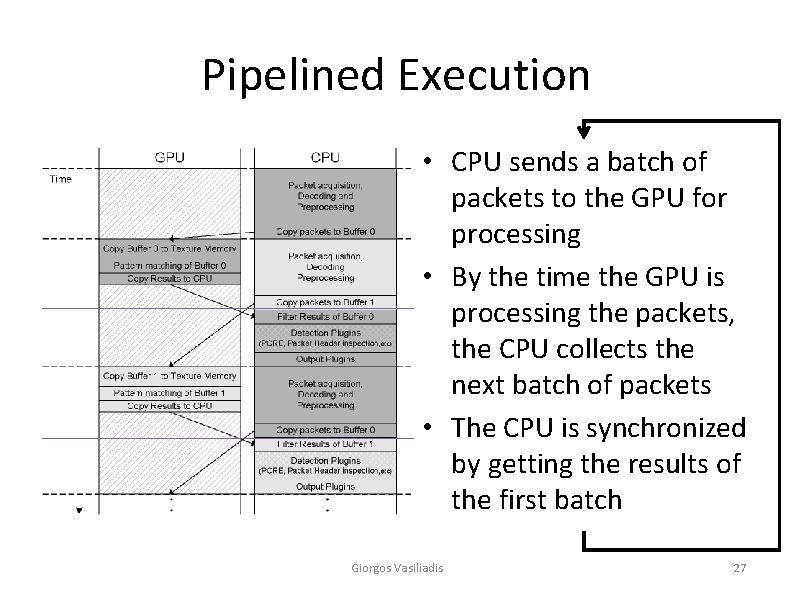

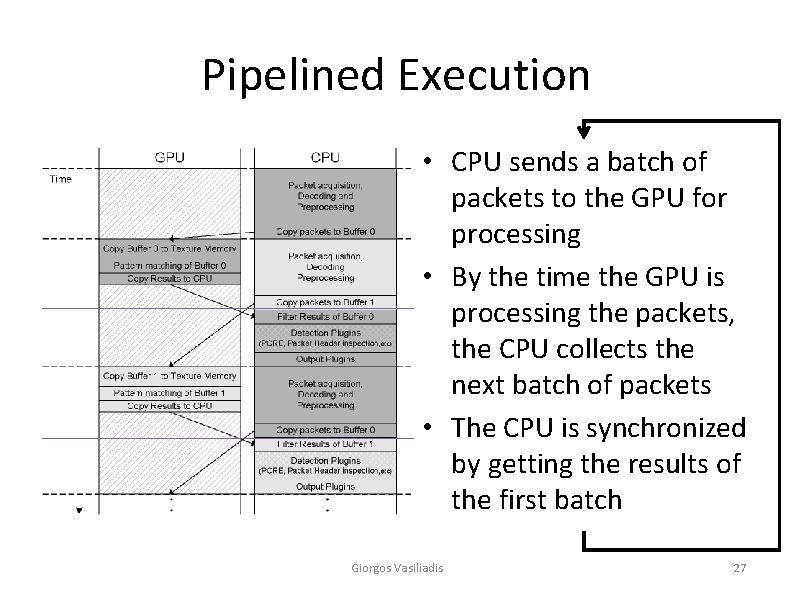

Pipelined Execution

- The CPU sends a batch of packets to the GPU for processing
- While the GPU is processing the packets, the CPU collects the next batch of packets
- The CPU synchronizes with the GPU when it retrieves the results of the first batch
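
The overlap between batch collection and matching can be mimicked on the CPU with a single worker thread standing in for the GPU. This is only a sketch of the control flow: `gpu_match` is a hypothetical placeholder for the real kernel launch and DMA copies, and the substring test inside it is not the matching algorithm from the thesis.

```python
from concurrent.futures import ThreadPoolExecutor

def gpu_match(batch):
    """Placeholder for the GPU kernel: indices of packets containing b'attack'."""
    return [i for i, pkt in enumerate(batch) if b"attack" in pkt]

def run_pipeline(packets, batch_size):
    """Process packets in batches, overlapping collection with matching."""
    results = []
    pending = None  # the batch currently being processed by the "GPU"
    with ThreadPoolExecutor(max_workers=1) as gpu:
        for start in range(0, len(packets), batch_size):
            # The CPU fills the next buffer while the previous batch is in flight.
            batch = packets[start:start + batch_size]
            if pending is not None:
                # Synchronization point: fetch the results of the previous batch.
                results.append(pending.result())
            pending = gpu.submit(gpu_match, batch)
        if pending is not None:
            results.append(pending.result())
    return results

packets = [b"hello", b"an attack", b"ping", b"attack again", b"bye"]
print(run_pipeline(packets, batch_size=2))
```

Two buffers are alive at any time, the one being filled and the one being processed, which corresponds to the double-buffer scheme described on the Software Mapping slide.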


Evaluation Overview

- Technical equipment
  - 3.4 GHz Intel Pentium 4
  - 2 GB of memory
  - NVIDIA GeForce 8600 GT
- Evaluation with Snort
  - 5467 content filtering rules
  - 7878 patterns associated with these rules

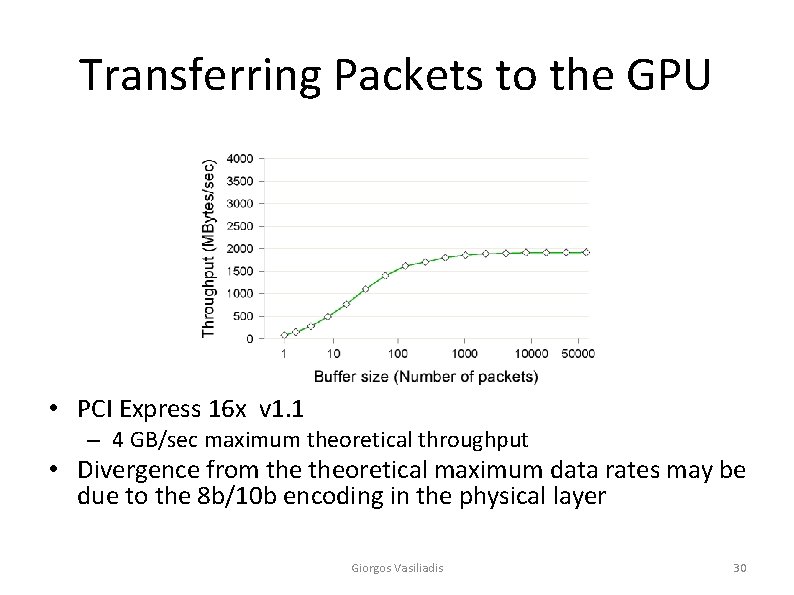

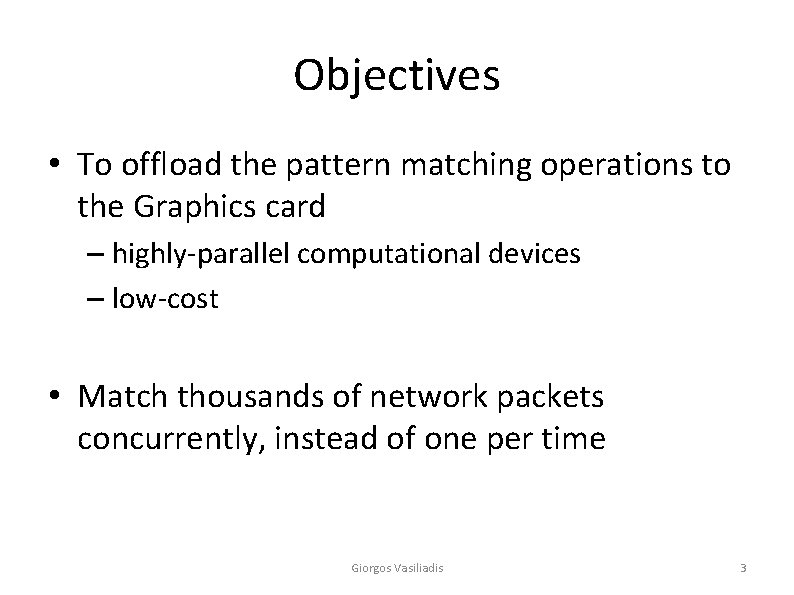

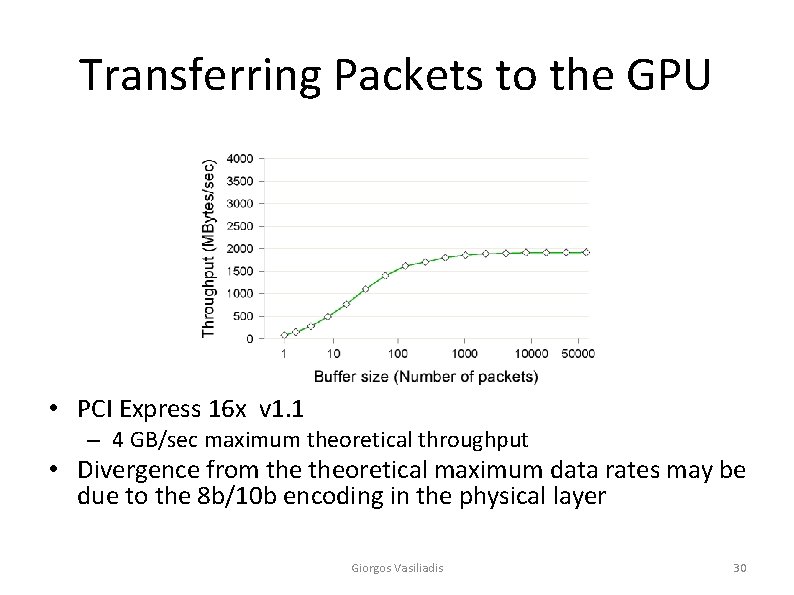

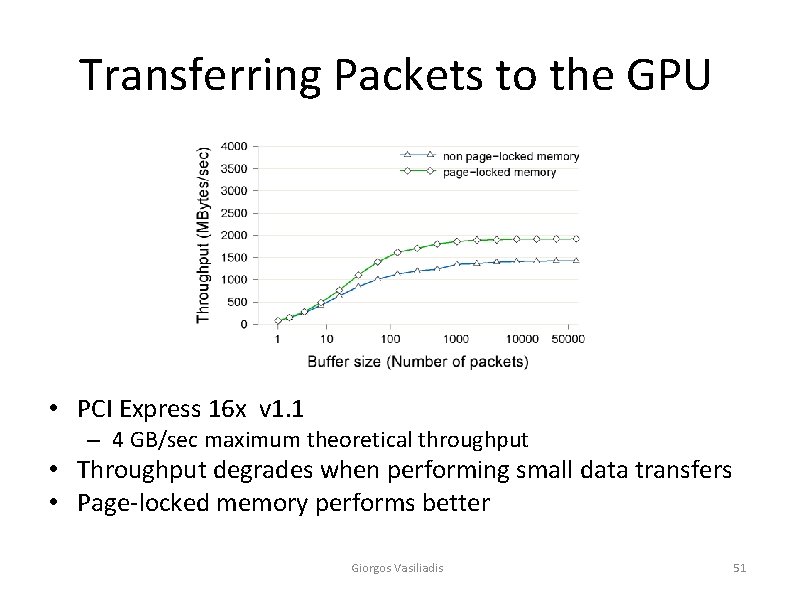

Transferring Packets to the GPU

- PCI Express x16 v1.1
  - 4 GB/sec maximum theoretical throughput
- Divergence from the theoretical maximum data rate may be due to the 8b/10b encoding in the physical layer

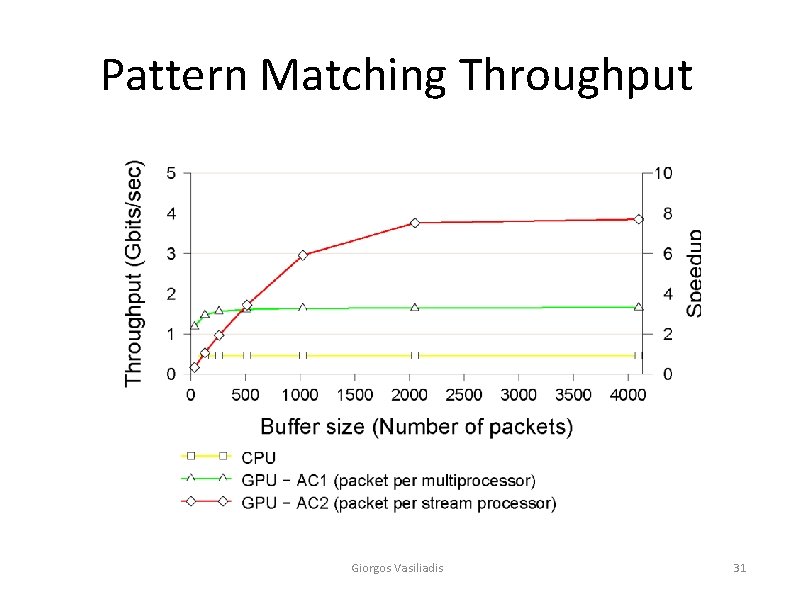

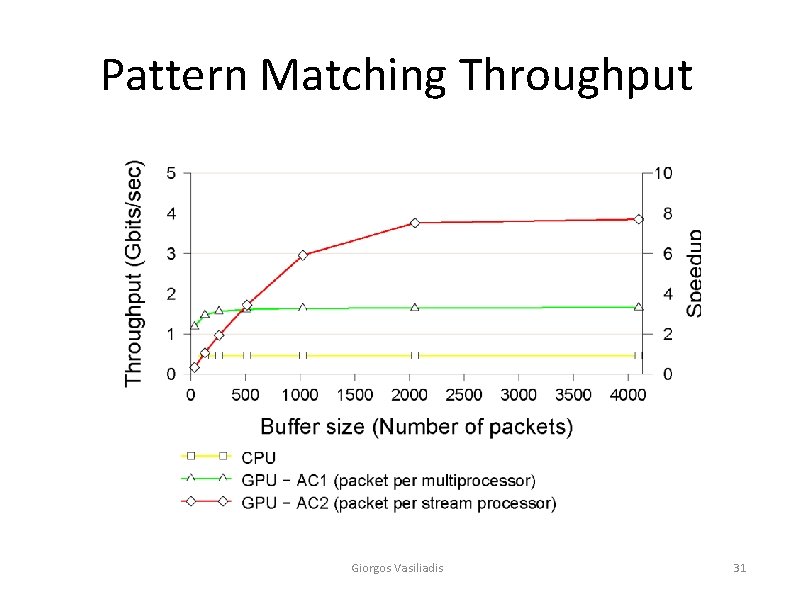

Pattern Matching Throughput

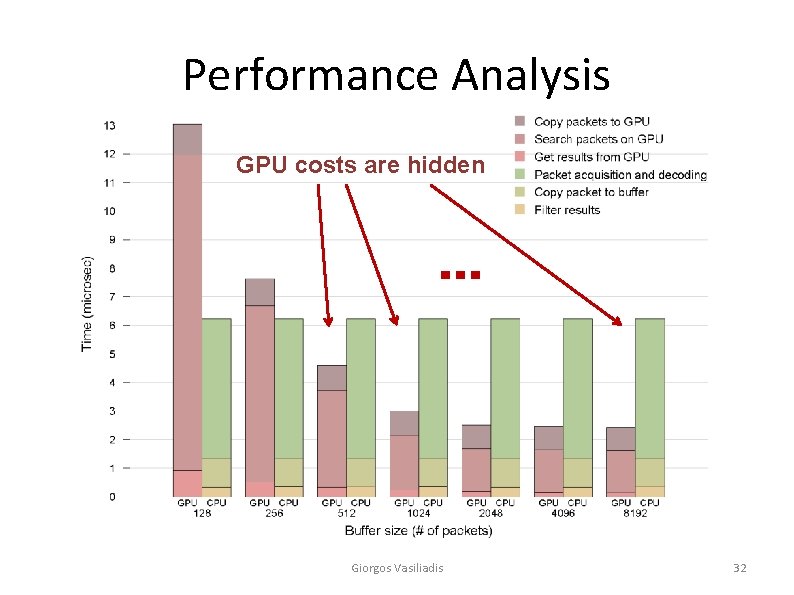

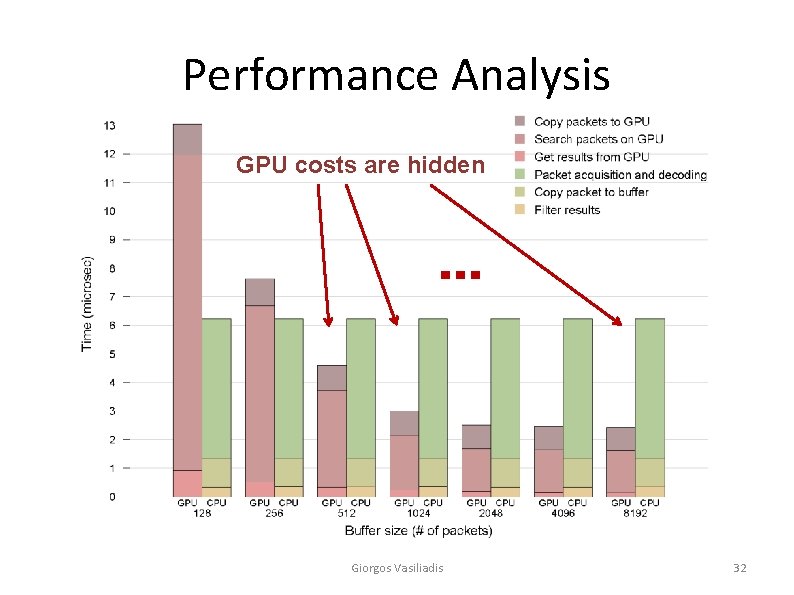

Performance Analysis

- GPU costs are hidden

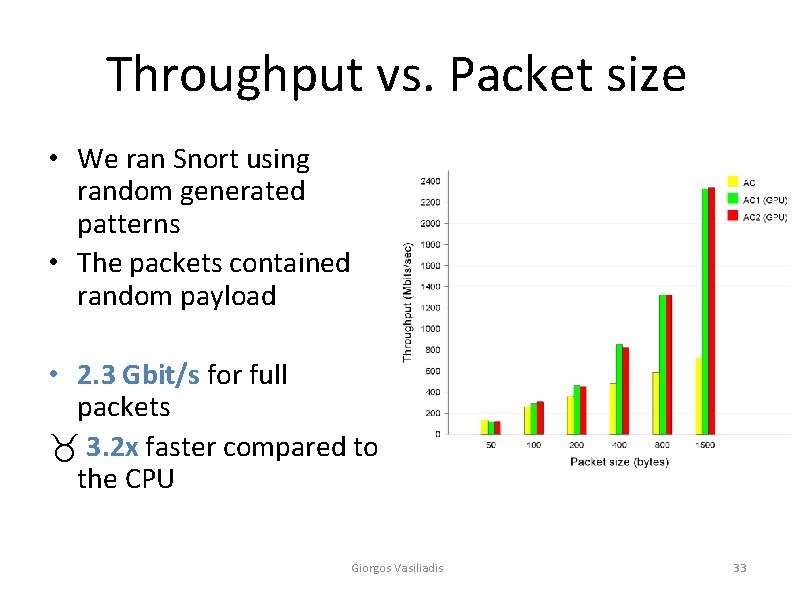

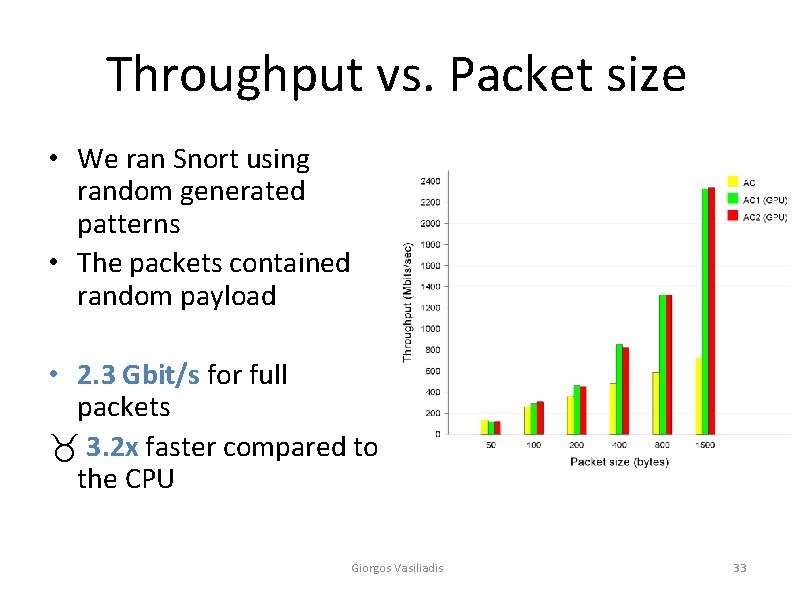

Throughput vs. Packet size

- We ran Snort using randomly generated patterns
- The packets contained random payload
- 2.3 Gbit/s for full packets
  - 3.2x faster than the CPU

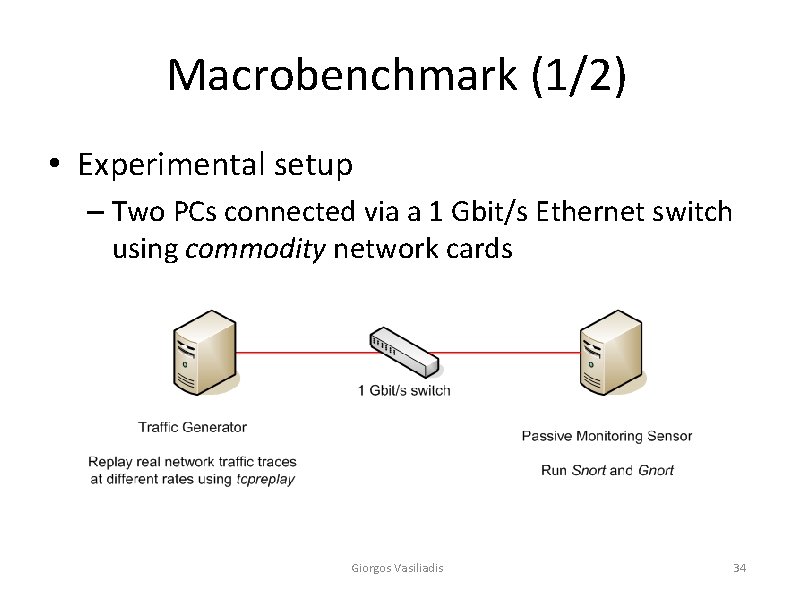

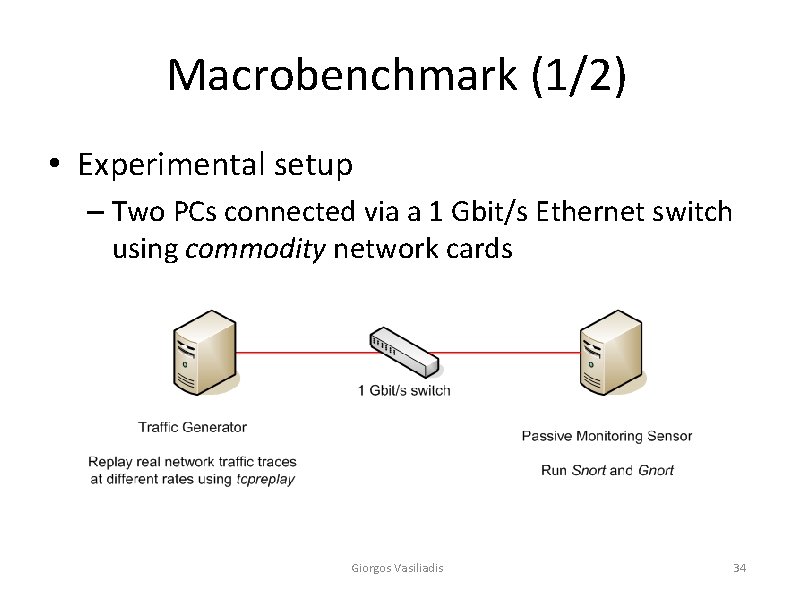

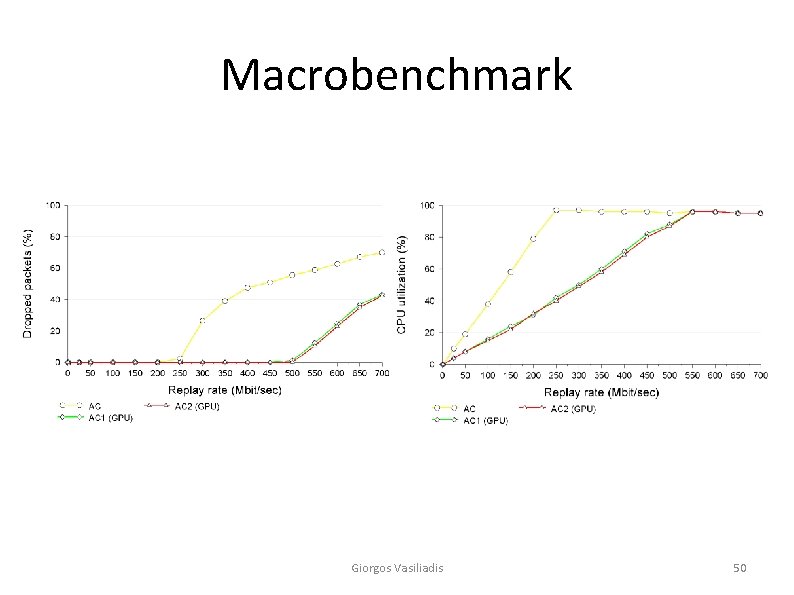

Macrobenchmark (1/2)

- Experimental setup
  - Two PCs connected via a 1 Gbit/s Ethernet switch using commodity network cards

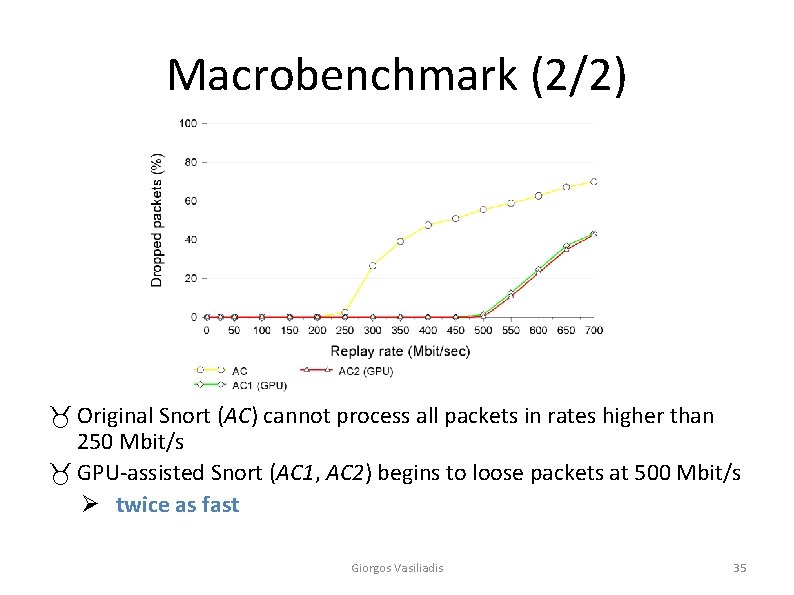

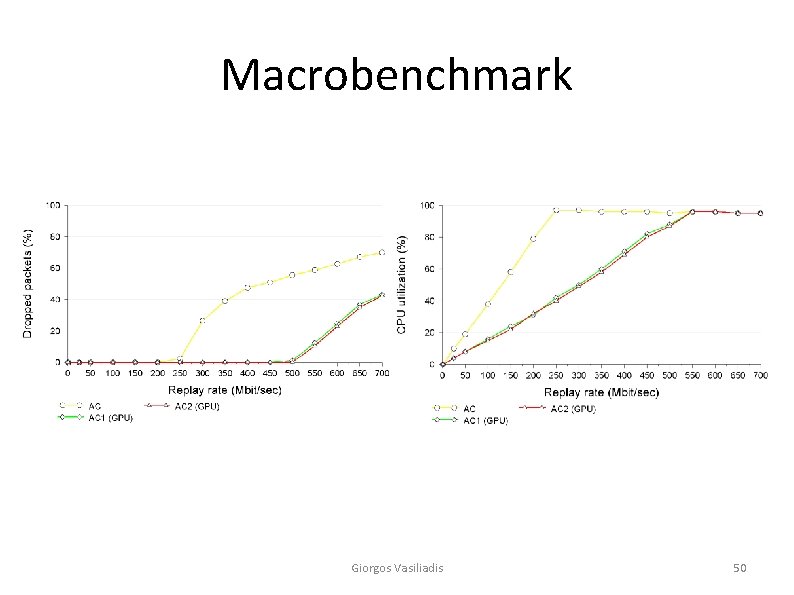

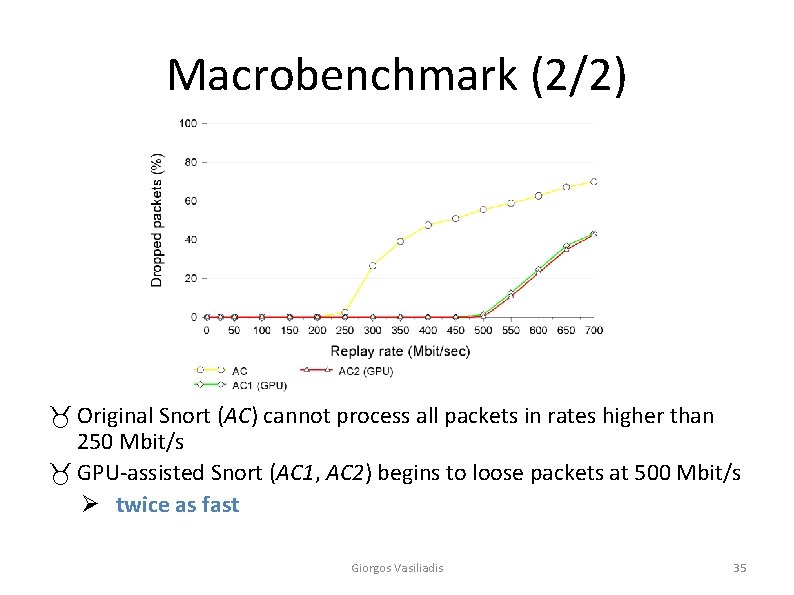

Macrobenchmark (2/2)

- Original Snort (AC) cannot process all packets at rates higher than 250 Mbit/s
- GPU-assisted Snort (AC1, AC2) begins to lose packets at 500 Mbit/s
  - Twice as fast


Conclusions

- Graphics cards can be used effectively to speed up Network Intrusion Detection Systems
  - Low-cost (a GeForce 8600 costs less than $100)
  - Worth the extra GPU programming effort
- Our results indicate that network intrusion detection at gigabit rates is feasible using graphics processors


Related Work

- Specialized hardware
  - Reprogrammable Hardware (FPGAs) [3, 4, 13, 14, 31]
    - Very efficient in terms of speed
    - Poor flexibility
  - Network Processors [5, 8, 12]
- Commodity hardware
  - Multi-core processors [25]
  - Graphics processors [17]

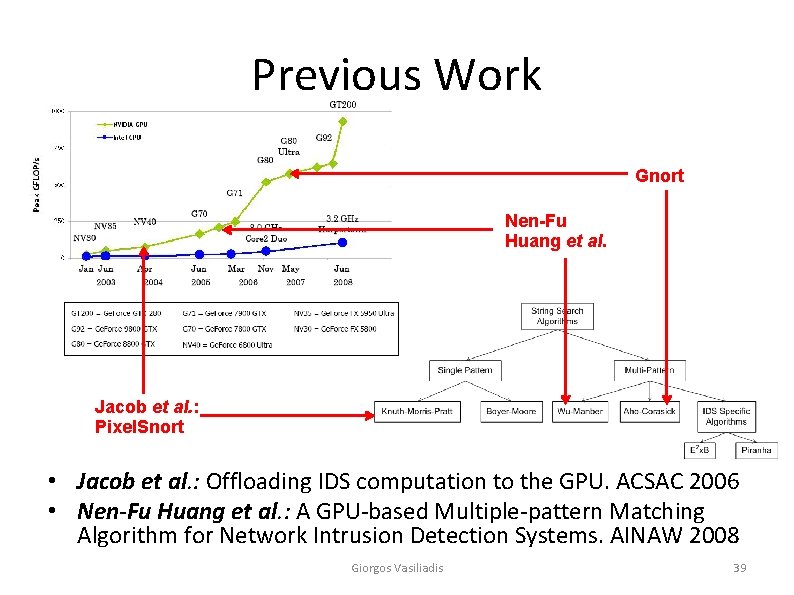

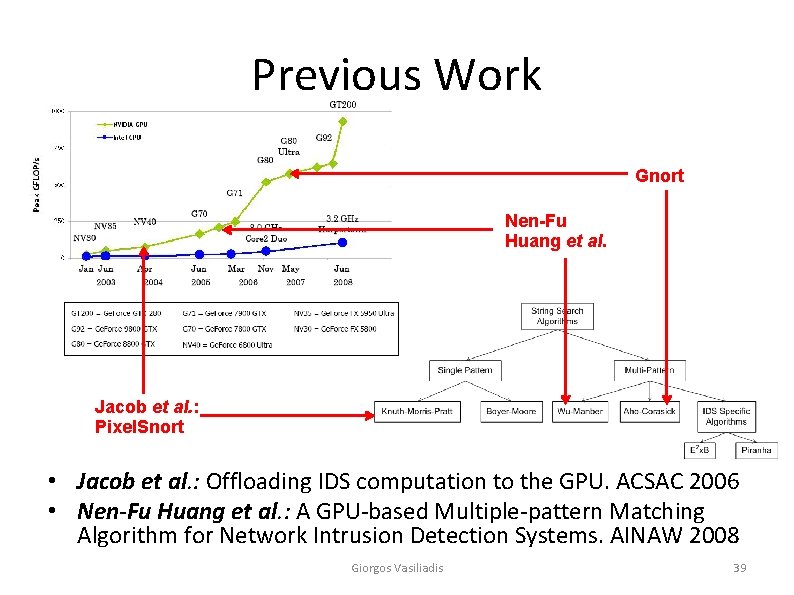

Previous Work

- Jacob et al. (PixelSnort): Offloading IDS computation to the GPU. ACSAC 2006
- Nen-Fu Huang et al.: A GPU-based Multiple-pattern Matching Algorithm for Network Intrusion Detection Systems. AINAW 2008
- Gnort (see Publications)

Publications

- G. Vasiliadis, S. Antonatos, M. Polychronakis, E. Markatos, S. Ioannidis. Gnort: High Performance Intrusion Detection Using Graphics Processors. RAID 2008
- G. Vasiliadis, S. Antonatos, M. Polychronakis, E. Markatos, S. Ioannidis. Regular Expression Matching on Graphics Hardware for Intrusion Detection. Under submission (Security and Privacy 2009)

Fin

Thank you

Future work

- Transfer the packets directly from the NIC to the memory space of the GPU
- Utilize multiple GPUs on multi-slot motherboards
- Content-based traffic applications
  - Virus scanners, anti-spam filters, firewalls, etc.

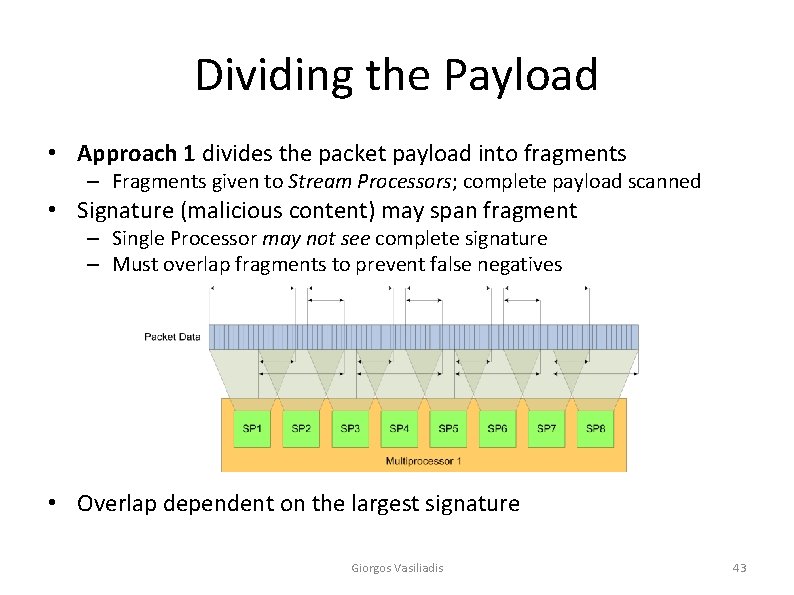

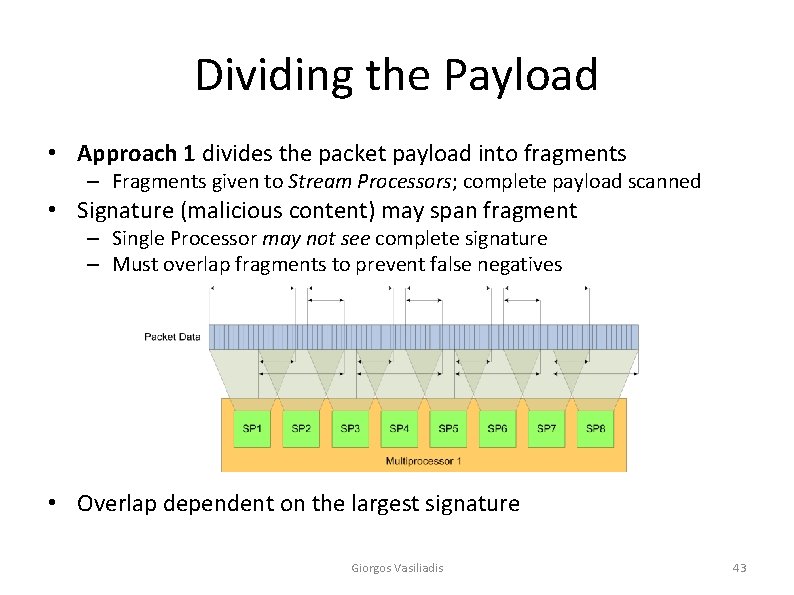

Dividing the Payload

- Approach 1 divides the packet payload into fragments
  - Fragments are given to Stream Processors; the complete payload is scanned
- A signature (malicious content) may span fragments
  - A single processor may not see the complete signature
  - Fragments must overlap to prevent false negatives
- The overlap depends on the length of the largest signature
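
The overlap rule can be made concrete with a small sketch. It assumes an overlap of `max_pattern_len - 1` bytes, the smallest value that guarantees every match lies wholly inside the fragment in which it starts; the slide only says the overlap depends on the largest signature. The helper names are hypothetical, and `bytes.find` stands in for the real matcher.

```python
def split_with_overlap(payload, num_chunks, max_pattern_len):
    """Return (start_offset, fragment) pairs; each fragment is extended
    by max_pattern_len - 1 bytes into the next one."""
    overlap = max_pattern_len - 1
    size = max(1, -(-len(payload) // num_chunks))  # ceiling division
    return [(start, payload[start:start + size + overlap])
            for start in range(0, len(payload), size)]

def find_all(payload, patterns, num_chunks):
    """Search every fragment independently and merge the results."""
    longest = max(len(p) for p in patterns)
    hits = set()  # a set, because a match inside an overlap is seen twice
    for start, fragment in split_with_overlap(payload, num_chunks, longest):
        for pat in patterns:
            pos = fragment.find(pat)
            while pos != -1:
                hits.add((start + pos, pat))
                pos = fragment.find(pat, pos + 1)
    return sorted(hits)

payload = b"xxxxattackxxxx"
# Without overlap, no 4-byte fragment contains the whole signature.
print(any(b"attack" in payload[s:s + 4] for s in range(0, len(payload), 4)))
print(find_all(payload, [b"attack"], num_chunks=4))
```

The price of the overlap is redundant work: each fragment boundary causes up to `max_pattern_len - 1` bytes to be scanned twice.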

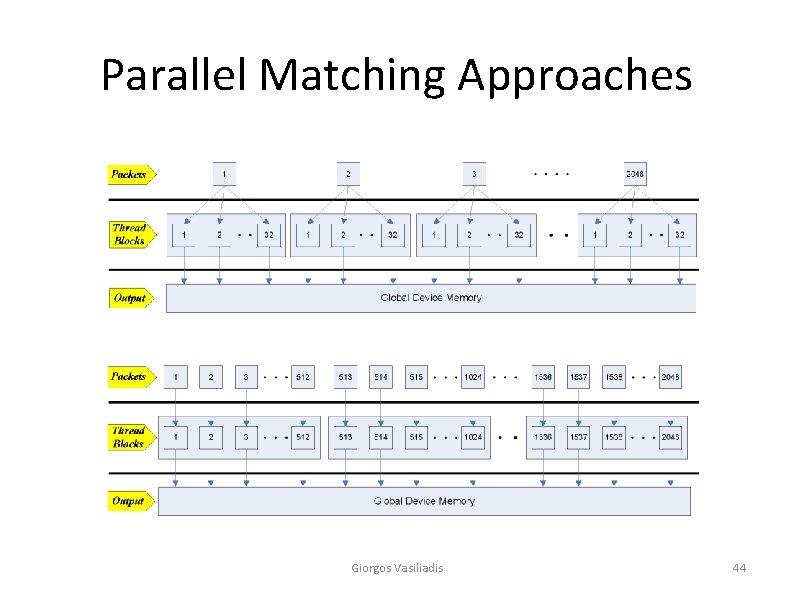

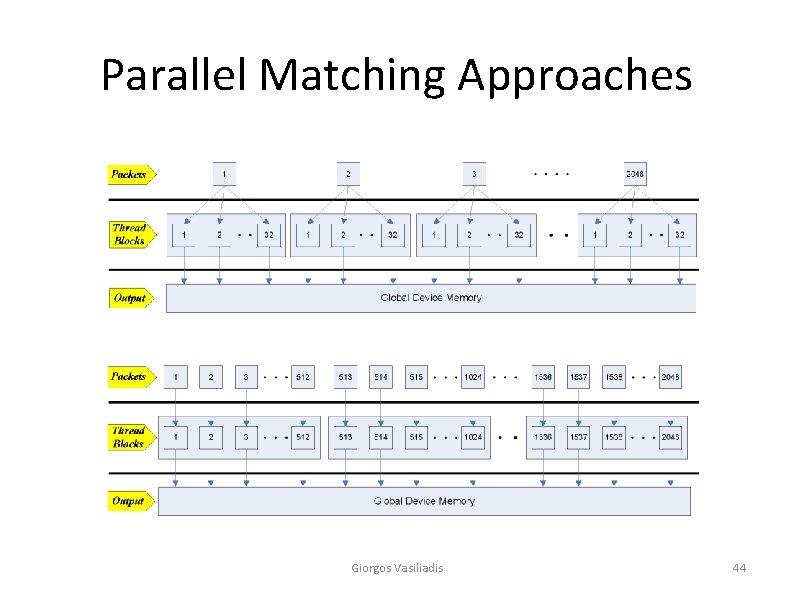

Parallel Matching Approaches

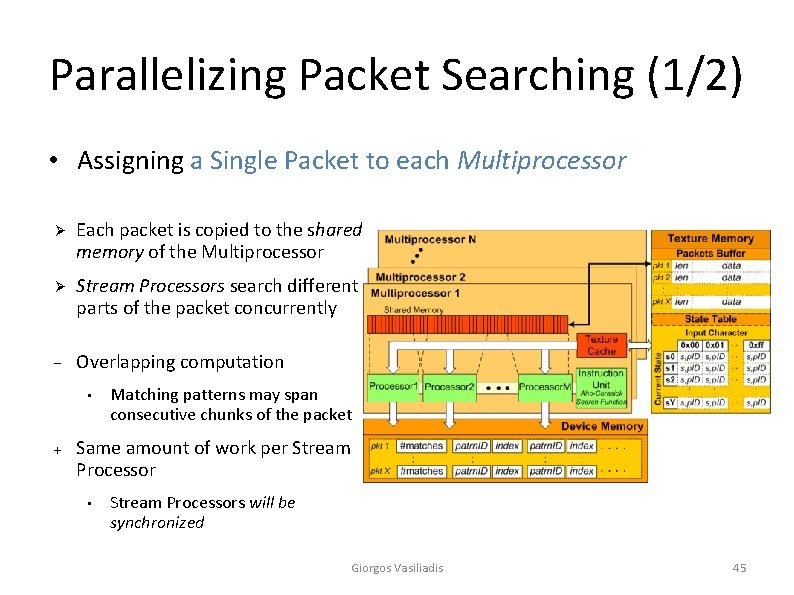

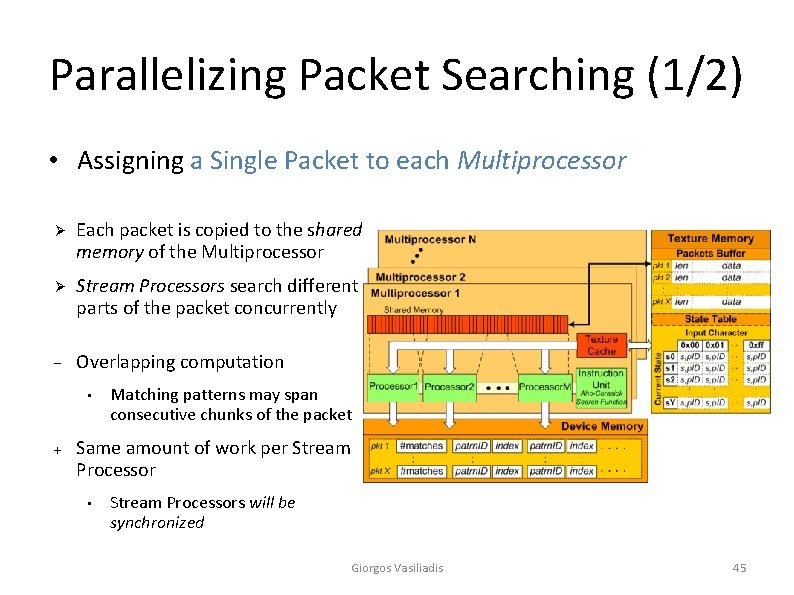

Parallelizing Packet Searching (1/2)

- Assigning a Single Packet to each Multiprocessor
  - Each packet is copied to the shared memory of the Multiprocessor
  - Stream Processors search different parts of the packet concurrently
- Overlapping computation
  - Matching patterns may span consecutive chunks of the packet
- Same amount of work per Stream Processor
  - Stream Processors will be synchronized

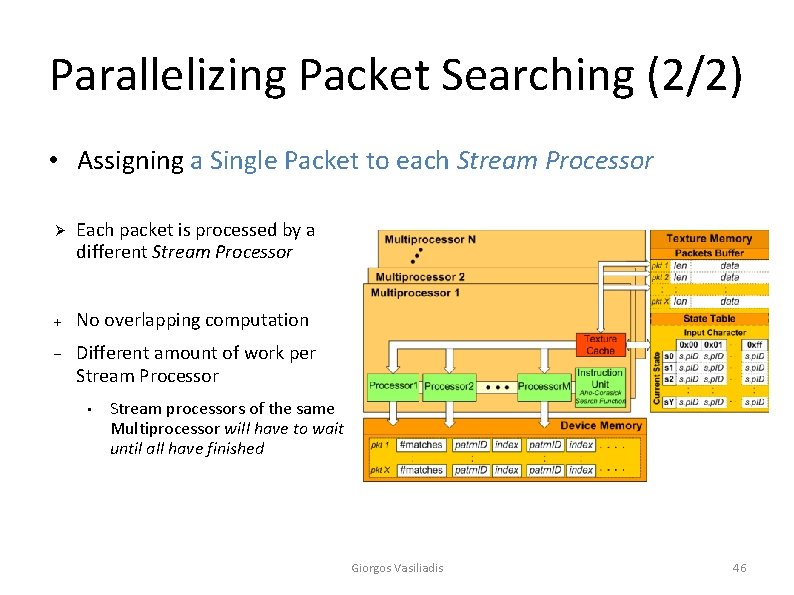

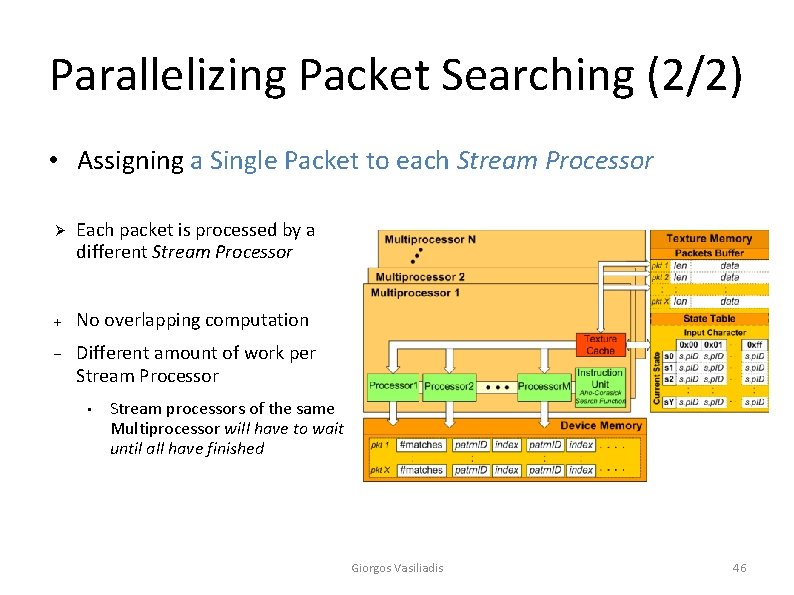

Parallelizing Packet Searching (2/2)

- Assigning a Single Packet to each Stream Processor
  - Each packet is processed by a different Stream Processor
- No overlapping computation
- Different amount of work per Stream Processor
  - Stream Processors of the same Multiprocessor will have to wait until all have finished
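
The cost of waiting for the slowest Stream Processor can be illustrated with a rough model. It assumes that scan time is proportional to packet length and that a group of 8 Stream Processors (one Multiprocessor, per the architecture slide) finishes only when its longest packet is done; it ignores memory latency and scheduling, so the numbers are indicative at best. The function name is hypothetical.

```python
def batch_cost(packet_lengths, group_size=8):
    """Return (steps, utilization) under the simplified model above."""
    steps = 0
    useful = 0
    for i in range(0, len(packet_lengths), group_size):
        group = packet_lengths[i:i + group_size]
        steps += max(group)   # the group waits for its longest packet
        useful += sum(group)  # bytes actually scanned
    capacity = steps * group_size
    return steps, (useful / capacity if capacity else 0.0)

# Seven short packets share a group with one full-size packet.
print(batch_cost([100] * 7 + [1500]))
# Equal-length packets keep every Stream Processor busy.
print(batch_cost([800] * 8))
```

In the first case most Stream Processors sit idle for the bulk of the group's run time, which is the imbalance the slide refers to.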

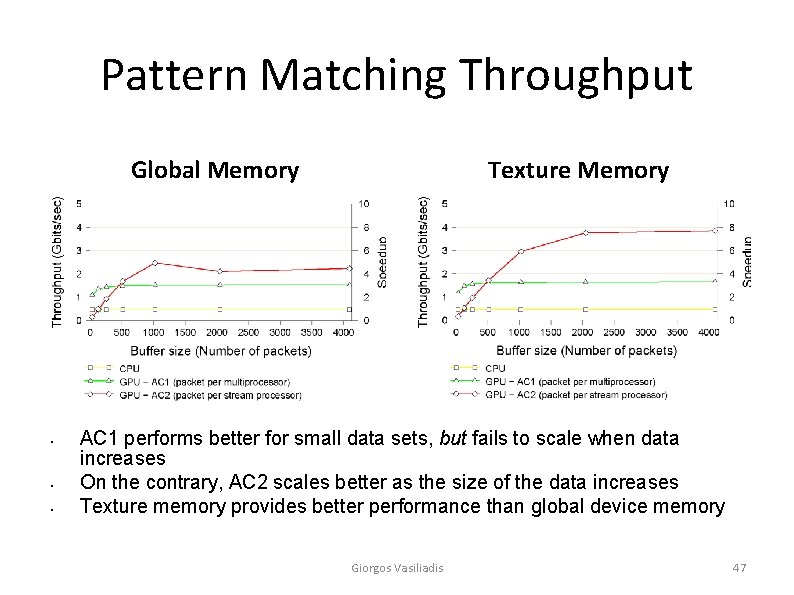

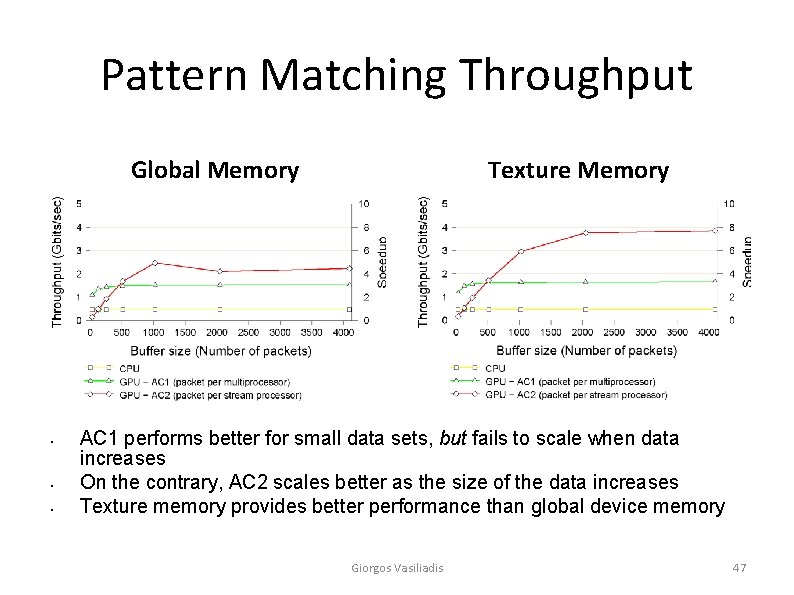

Pattern Matching Throughput

Figure panels: Global Memory, Texture Memory

- AC1 performs better for small data sets, but fails to scale when the data increases
- On the contrary, AC2 scales better as the size of the data increases
- Texture memory provides better performance than global device memory

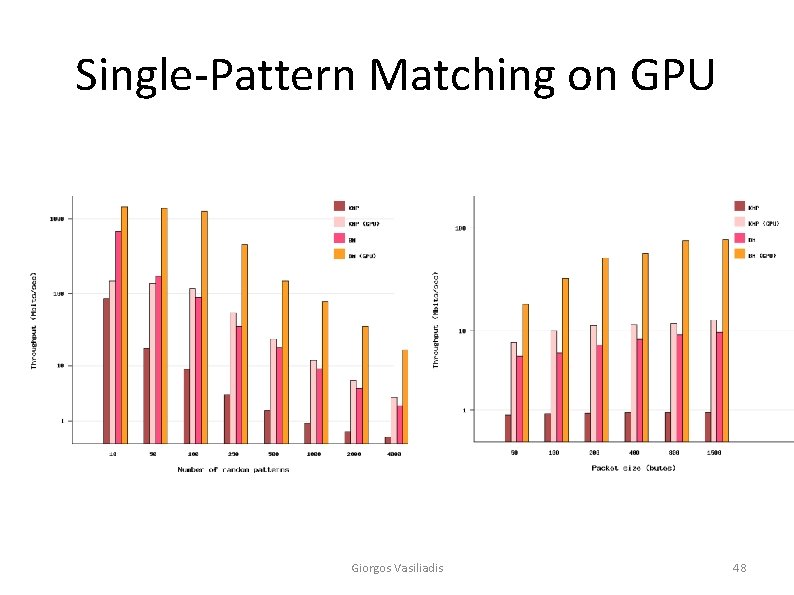

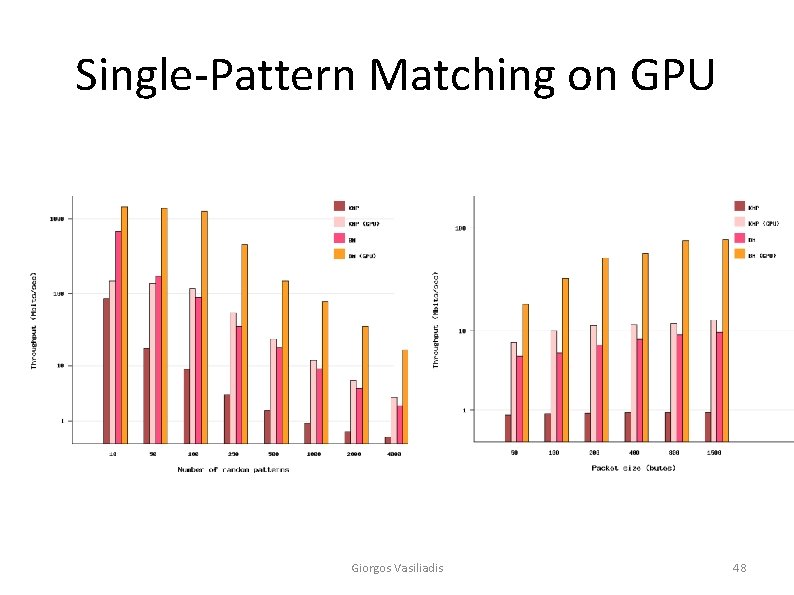

Single-Pattern Matching on GPU

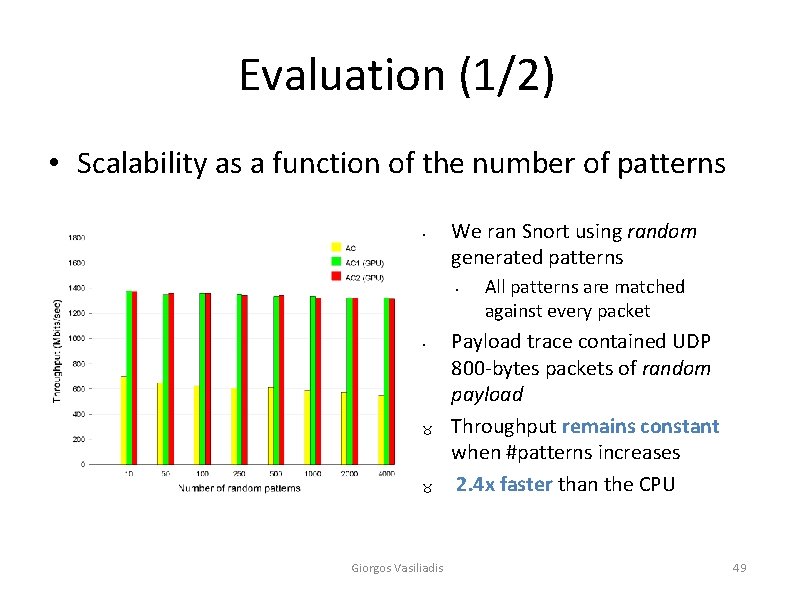

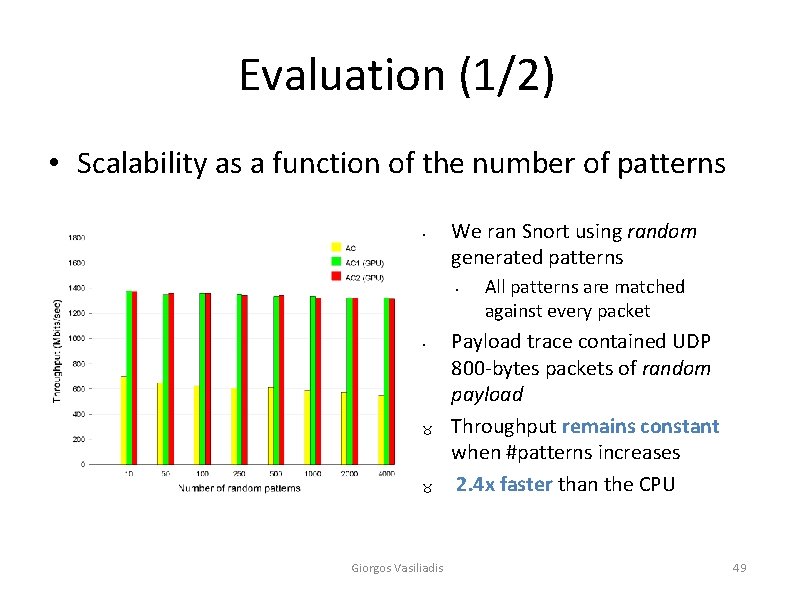

Evaluation (1/2)

- Scalability as a function of the number of patterns
- We ran Snort using randomly generated patterns
- All patterns are matched against every packet
- The payload trace contained 800-byte UDP packets of random payload
- Throughput remains constant as the number of patterns increases
- 2.4x faster than the CPU

Macrobenchmark

Transferring Packets to the GPU

- PCI Express x16 v1.1
  - 4 GB/sec maximum theoretical throughput
- Throughput degrades when performing small data transfers
- Page-locked memory performs better