Improving Prefetching Mechanisms for Tiled CMP Platforms

- Slides: 65

Improving Prefetching Mechanisms for Tiled CMP Platforms
28th November 2016
Candidate: Martí Torrents
Advisors: Raúl Martínez, Carlos Molina
Tutor: Antonio González

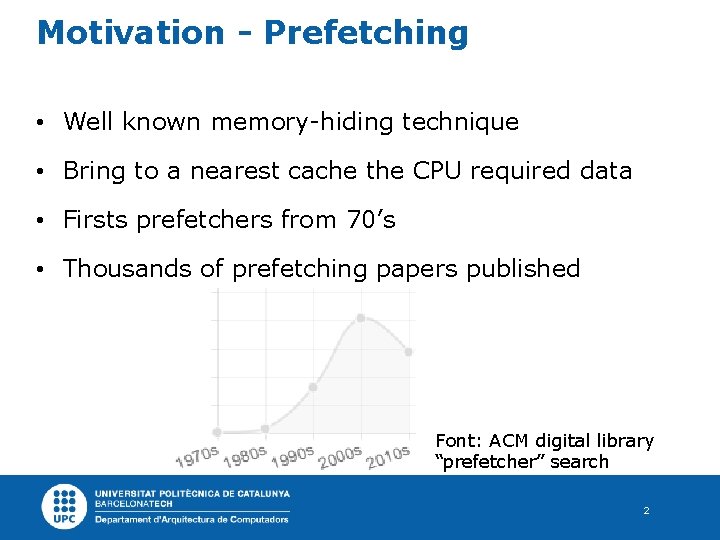

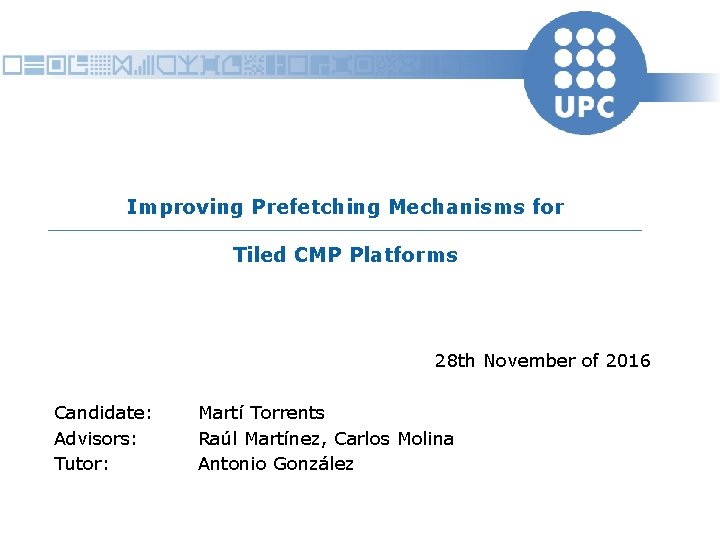

Motivation - Prefetching
• Well-known technique for hiding memory latency
• Brings the data the CPU will need into a nearer cache
• First prefetchers date from the 1970s
• Thousands of prefetching papers published
Source: ACM Digital Library search for “prefetcher”

Motivation - Prefetching

Motivation – So, why prefetching?
• Implemented in most commercial processors
• Changes in technology have challenged prefetching
• Has evolved across technology generations
• What about the next generations?

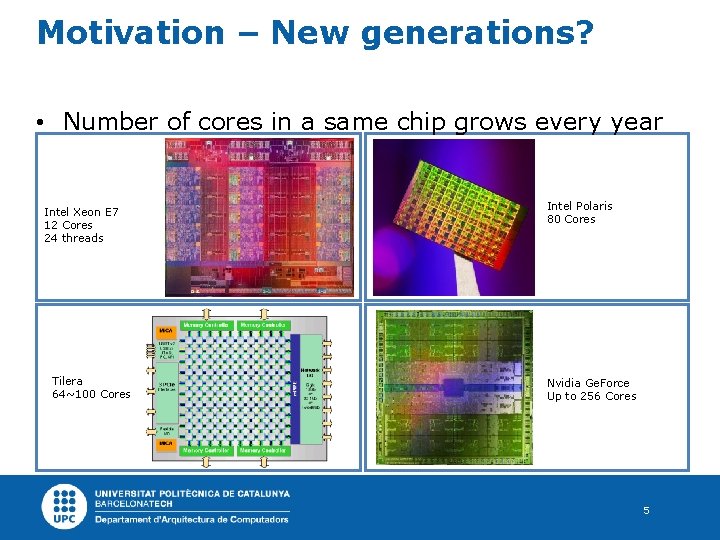

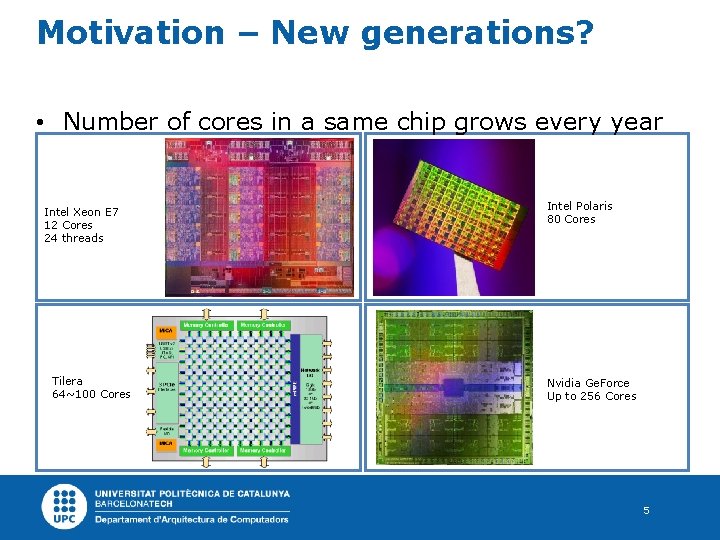

Motivation – New generations?
• The number of cores on a single chip grows every year
  – Intel Xeon E7: 12 cores, 24 threads
  – Tilera: 64~100 cores
  – Intel Polaris: 80 cores
  – Nvidia GeForce: up to 256 cores

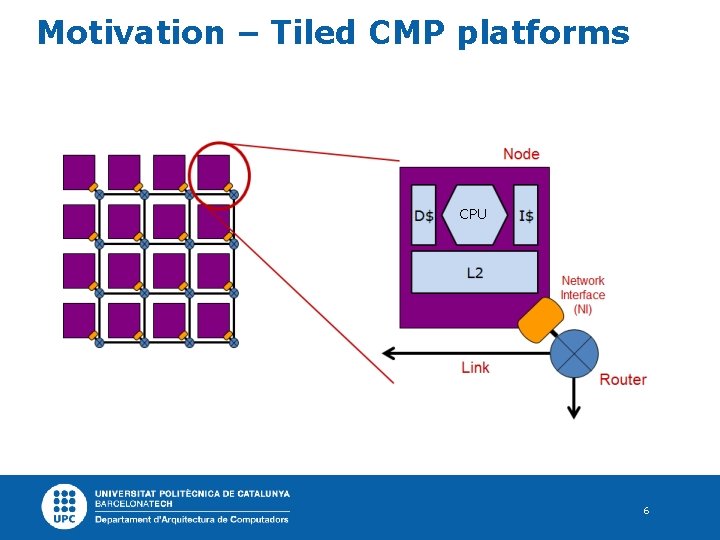

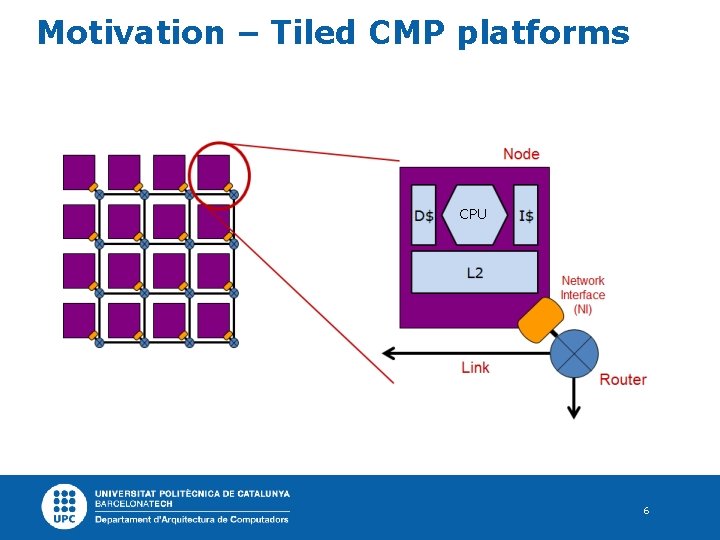

Motivation – Tiled CMP platforms

Motivation - Objectives
1. Prefetching in multi-core platforms
2. Confidence predictor for prefetching in CMPs
3. Dynamic management of prefetch requests
4. Prefetching challenges in DSMs

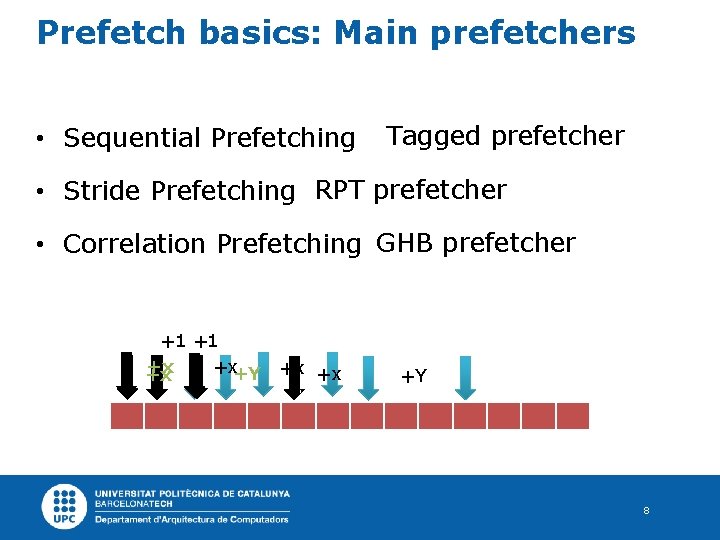

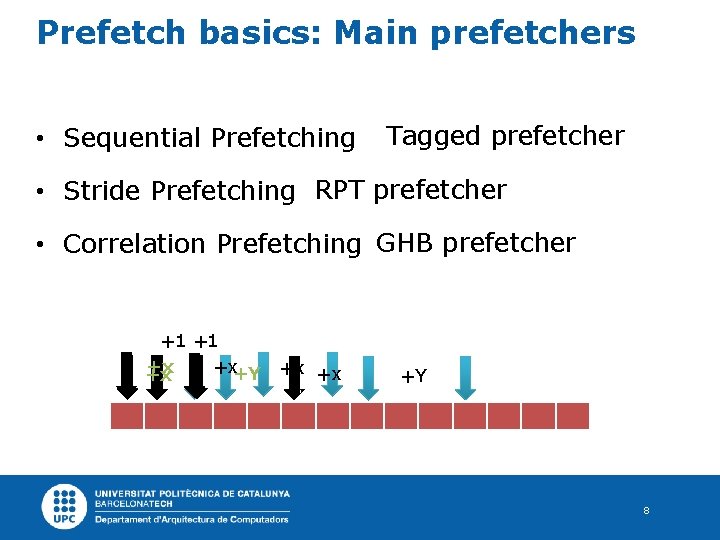

Prefetch basics: Main prefetchers
• Sequential prefetching: Tagged prefetcher
• Stride prefetching: RPT prefetcher
• Correlation prefetching: GHB prefetcher
Figure labels: +1, +1 (sequential); +x, +x (stride); +x, +Y, +x, +Y (correlation)
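To make the stride case concrete, here is a minimal sketch of an RPT-style stride detector. It is an illustration under stated assumptions, not the configuration evaluated in the slides: the class and field names (`RptEntry`, `StridePrefetcher`) are hypothetical, the table is indexed by the load PC, and `degree` stands in for aggressiveness.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RptEntry:
    last_addr: int           # last address seen for this load PC
    stride: int = 0          # last observed address delta
    confirmed: bool = False  # True once the same non-zero stride repeats


class StridePrefetcher:
    """Simplified reference-prediction-table (RPT) style stride detector."""

    def __init__(self, degree: int = 2) -> None:
        self.degree = degree                 # assumed knob: requests per trigger
        self.table: Dict[int, RptEntry] = {}

    def access(self, pc: int, addr: int) -> List[int]:
        """Record a demand access and return the addresses to prefetch."""
        entry = self.table.get(pc)
        if entry is None:
            self.table[pc] = RptEntry(last_addr=addr)
            return []
        stride = addr - entry.last_addr
        # Only trust the stride after it has been observed twice in a row.
        entry.confirmed = stride != 0 and stride == entry.stride
        entry.stride = stride
        entry.last_addr = addr
        if not entry.confirmed:
            return []
        return [addr + stride * i for i in range(1, self.degree + 1)]
```

With `degree=2`, three accesses from the same PC at addresses 100, 108, and 116 confirm a stride of 8 and trigger prefetches for 124 and 132. A sequential (Tagged) prefetcher corresponds to the special case where the stride is always one block.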

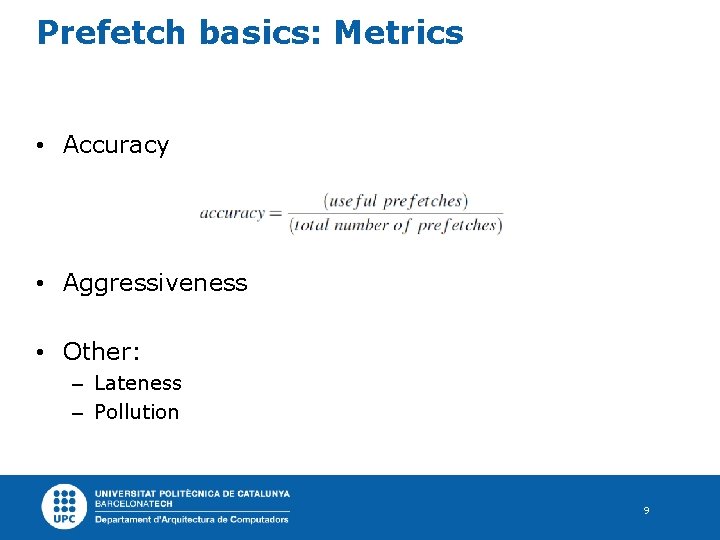

Prefetch basics: Metrics
• Accuracy
• Aggressiveness
• Other:
  – Lateness
  – Pollution

Outline
• Motivation
• Prefetching in multi-core platforms
• Confidence predictor for prefetching in CMPs
• Dynamic management of prefetch requests
• Prefetching challenges in DSMs
• Conclusions


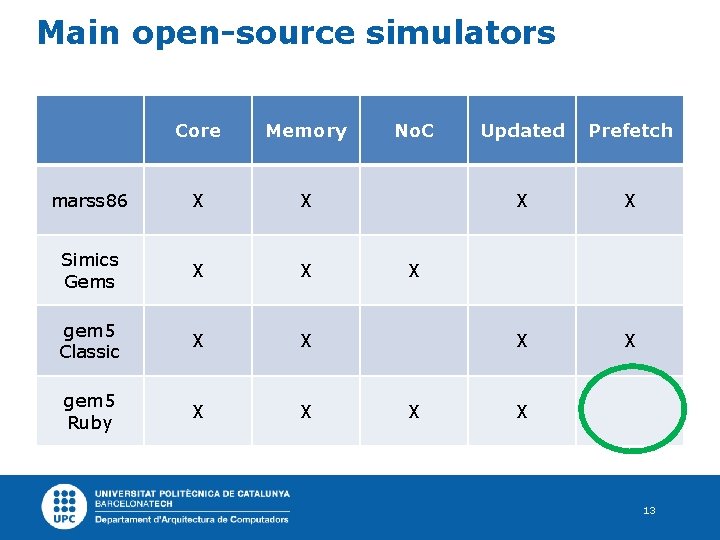

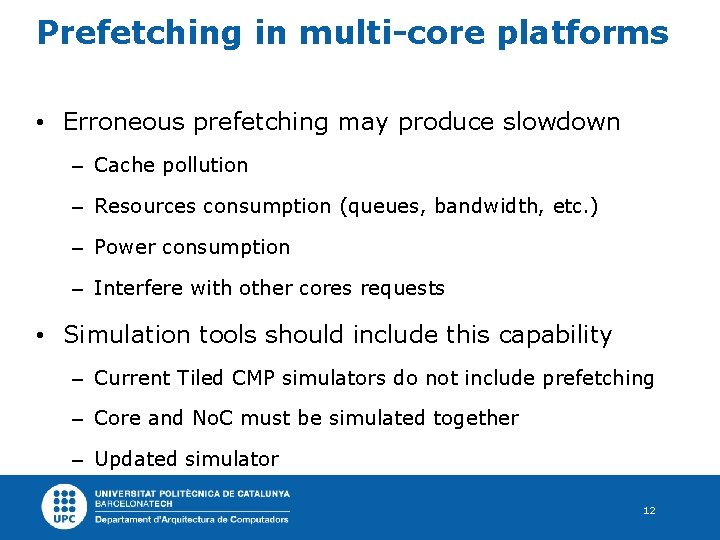

Prefetching in multi-core platforms
• Erroneous prefetching may produce slowdown
  – Cache pollution
  – Resource consumption (queues, bandwidth, etc.)
  – Power consumption
  – Interference with other cores' requests
• Simulation tools should include this capability
  – Current tiled CMP simulators do not include prefetching
  – Core and NoC must be simulated together
  – Updated simulator

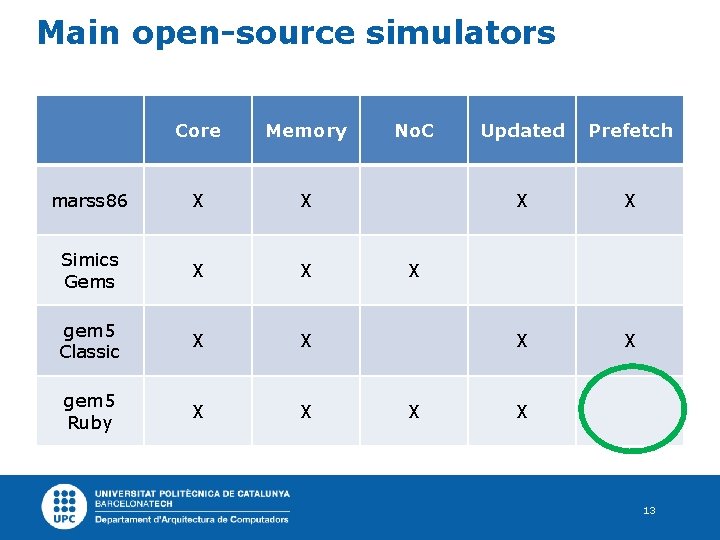

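Main open-source simulators
Table columns: Core, Memory, NoC, Updated, Prefetch
• marss86: Core X, Memory X
• Simics GEMS: Core X, Memory X
• gem5 Classic: Core X, Memory X
• gem5 Ruby: Core X, Memory X
• NoC, Updated, Prefetch columns: X X X X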

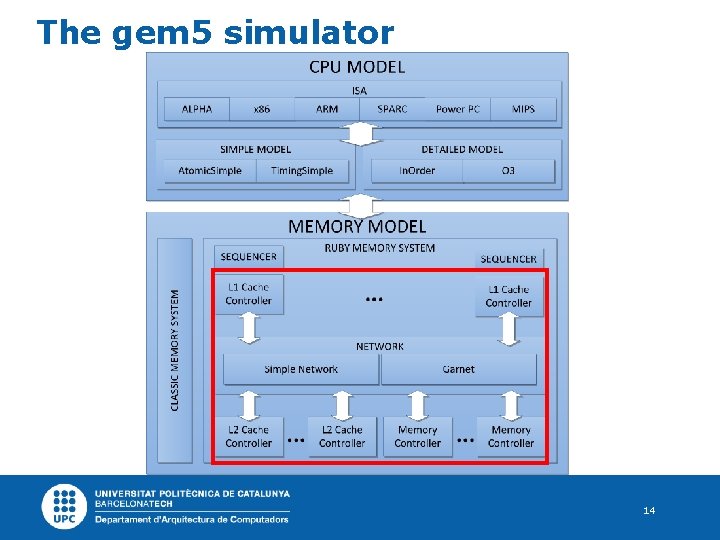

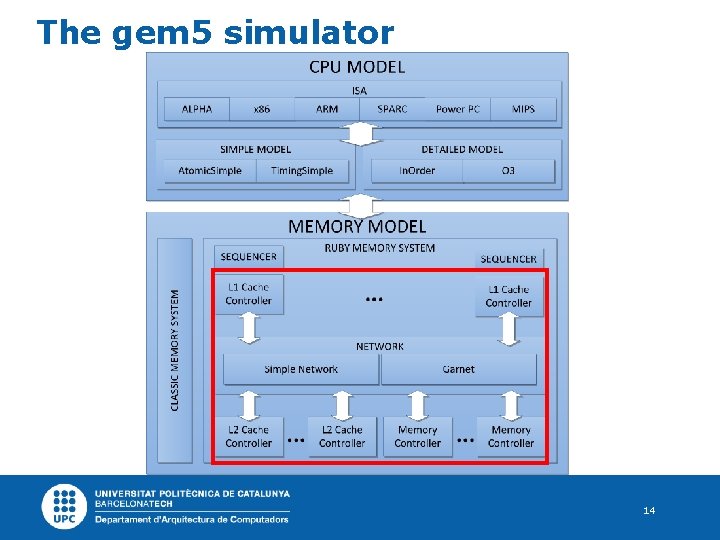

The gem5 simulator

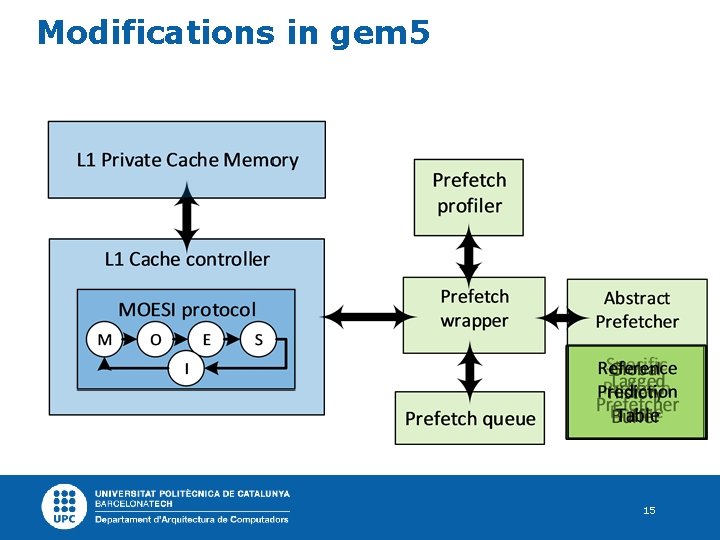

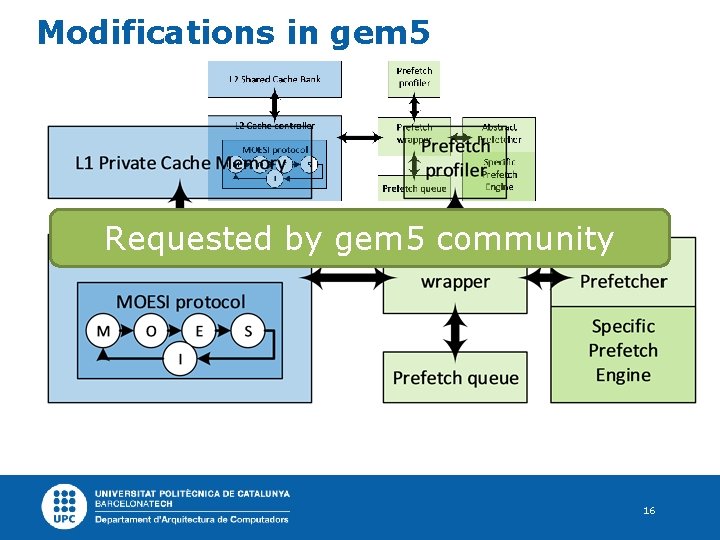

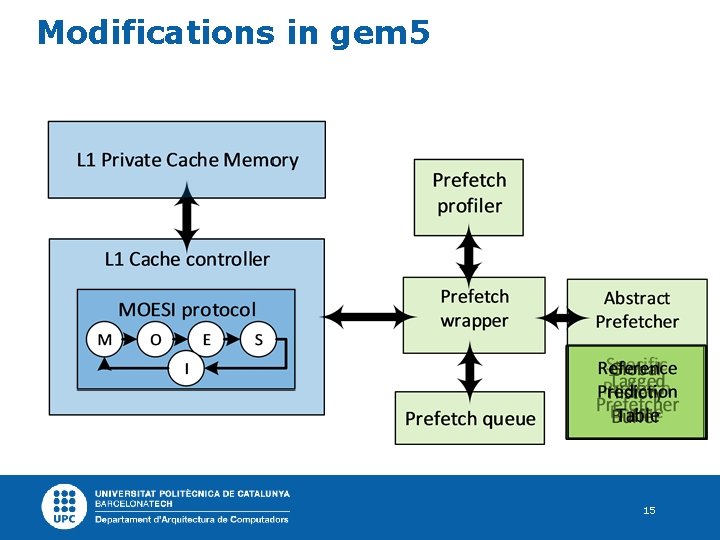

Modifications in gem5

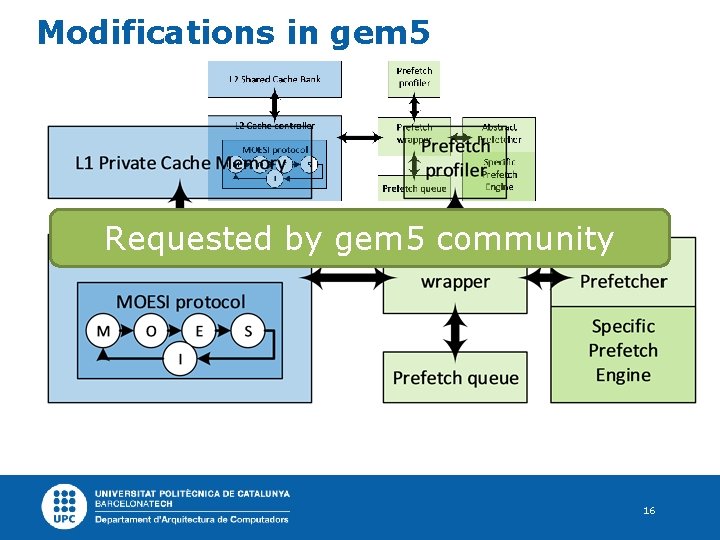

Modifications in gem5
• Requested by the gem5 community

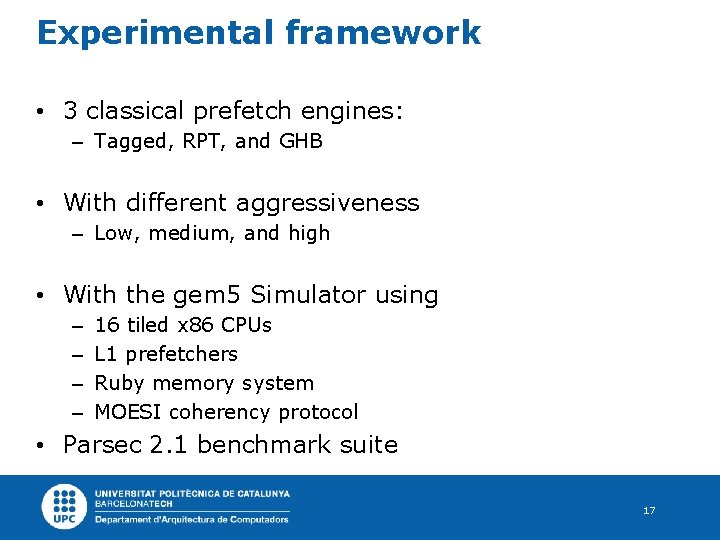

Experimental framework
• 3 classical prefetch engines:
  – Tagged, RPT, and GHB
• With different aggressiveness:
  – Low, medium, and high
• With the gem5 simulator using:
  – 16 tiled x86 CPUs
  – L1 prefetchers
  – Ruby memory system
  – MOESI coherency protocol
• PARSEC 2.1 benchmark suite

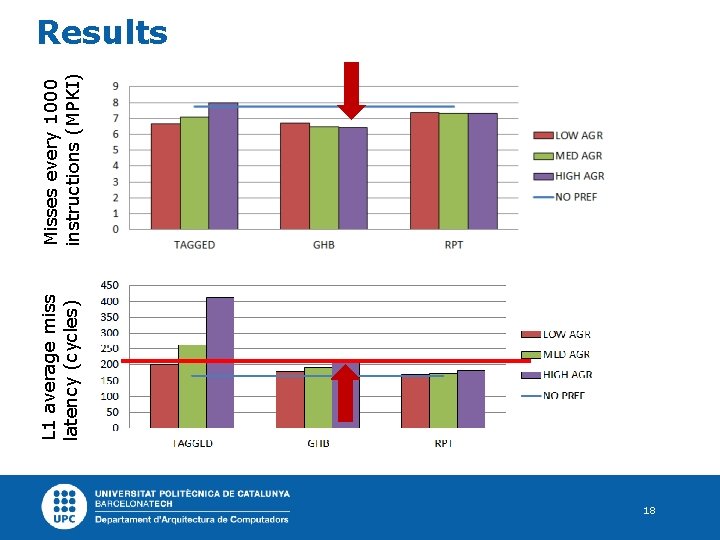

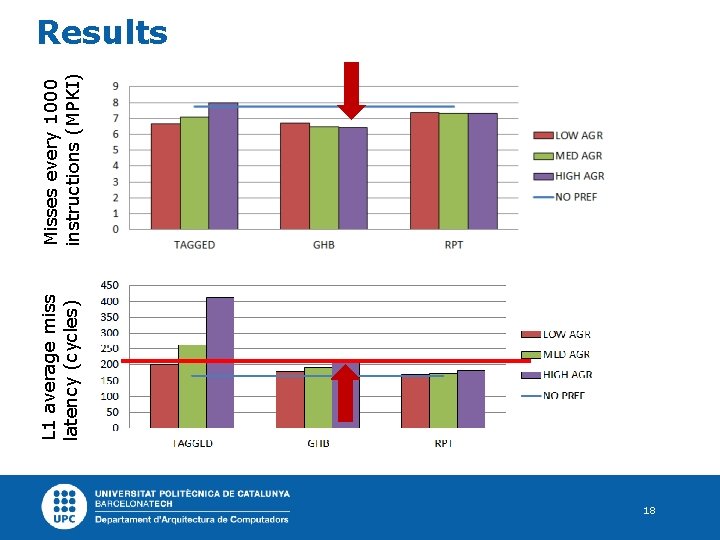

Results
Figure panels: L1 average miss latency (cycles); misses per 1000 instructions (MPKI)

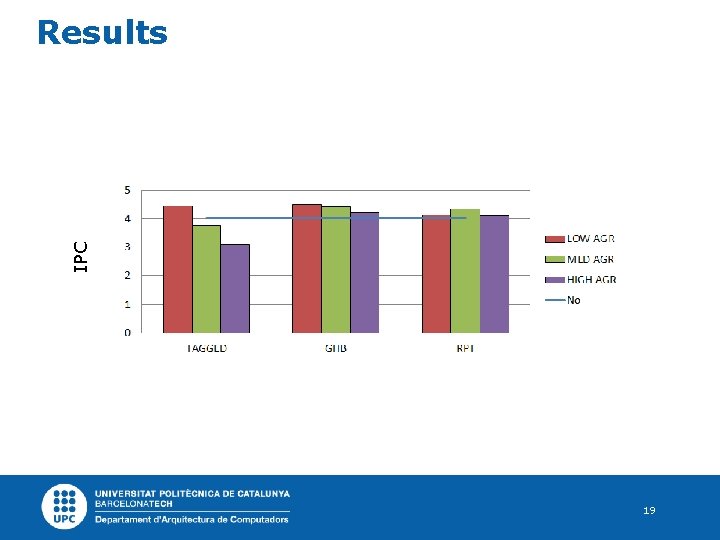

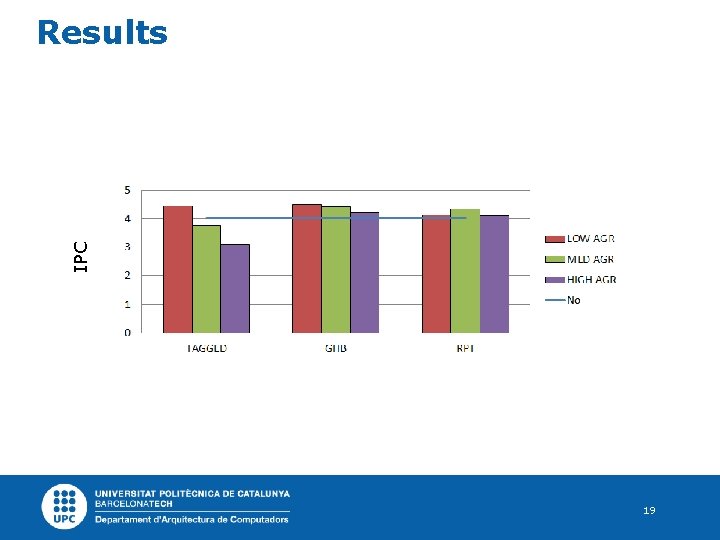

Results
Figure: IPC

Results
Figure: IPC
Increasing the level of detail of the simulations may increase the accuracy of their results


Confidence predictor
• Many techniques try to improve prefetching
• Dynamic management techniques
• Depend on the profiling strategies
• Used to profile different statistics
• Most of them focus on the accuracy

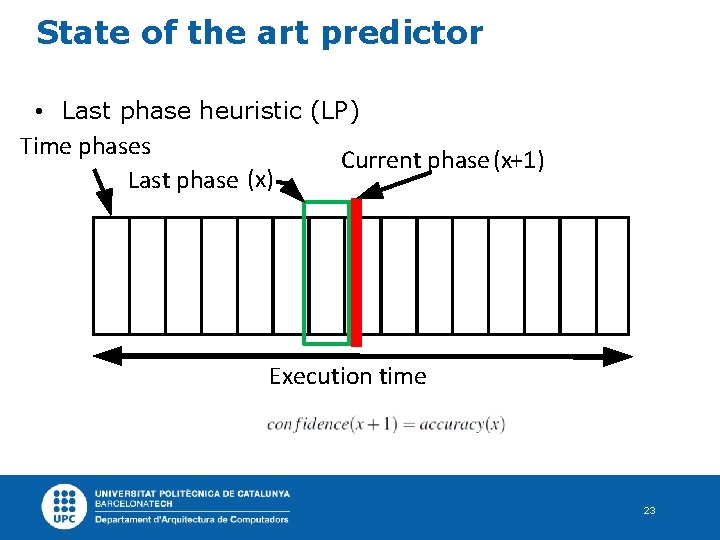

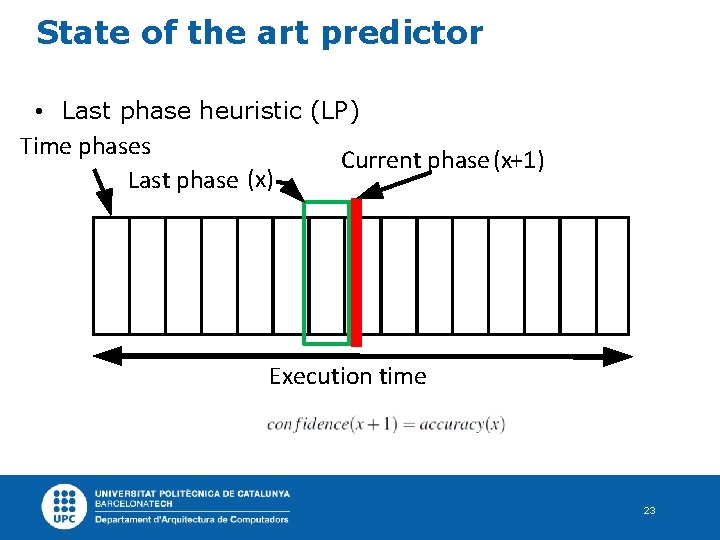

State-of-the-art predictor
• Last phase heuristic (LP)
Figure: execution time divided into time phases; last phase (x), current phase (x+1)
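The last phase heuristic can be sketched as follows. This is a minimal illustration that assumes the prediction for the current phase (x+1) is simply the accuracy measured during the last phase (x); the class name, the method names, and the way a phase boundary is signalled are hypothetical.

```python
class LastPhasePredictor:
    """Predicts the accuracy of phase x+1 as the accuracy measured in phase x."""

    def __init__(self, initial_prediction: float = 0.0) -> None:
        self.predicted = initial_prediction  # assumed value before any phase ends
        self.useful = 0
        self.not_useful = 0

    def record(self, was_useful: bool) -> None:
        """Count one prefetch outcome observed in the current phase."""
        if was_useful:
            self.useful += 1
        else:
            self.not_useful += 1

    def end_phase(self) -> float:
        """Close the current phase and return the prediction for the next one."""
        total = self.useful + self.not_useful
        if total > 0:
            self.predicted = self.useful / total
        # A phase without prefetches keeps the previous prediction (assumption).
        self.useful = 0
        self.not_useful = 0
        return self.predicted
```

The weakness this exposes is that a single unrepresentative phase fully determines the next prediction, which is the motivation for history-based alternatives.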

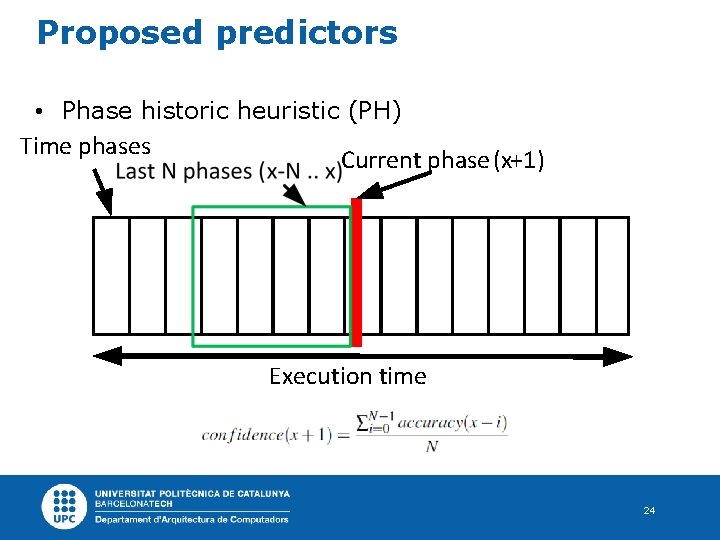

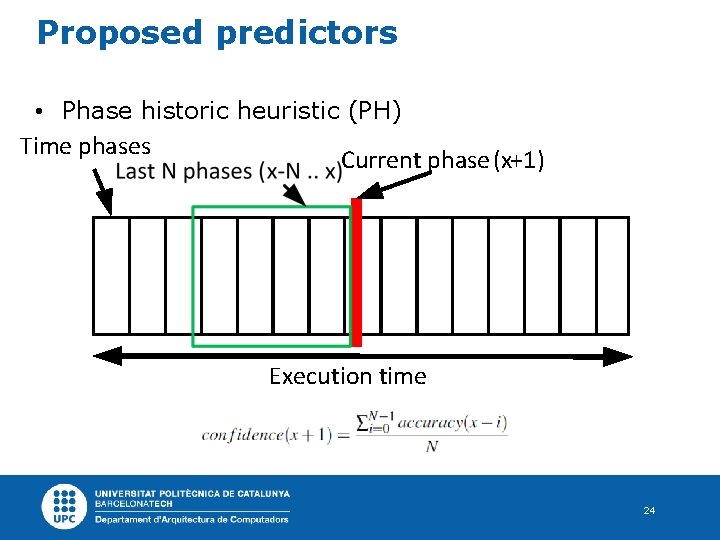

Proposed predictors
• Phase historic heuristic (PH)
Figure: execution time divided into time phases; current phase (x+1)

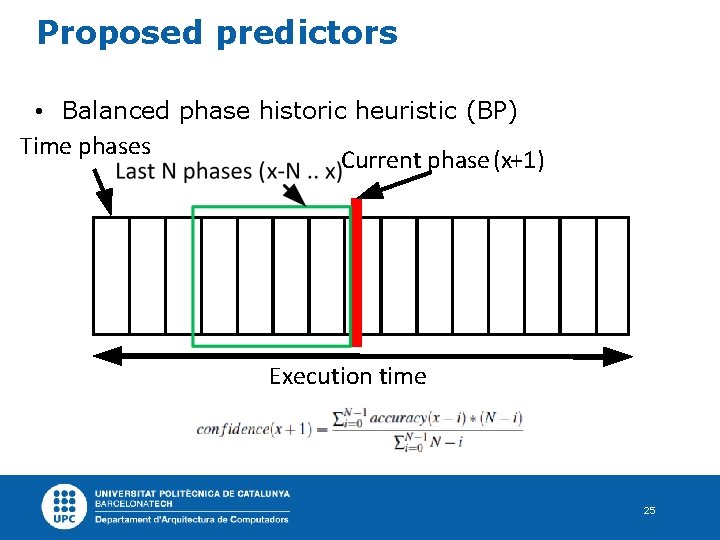

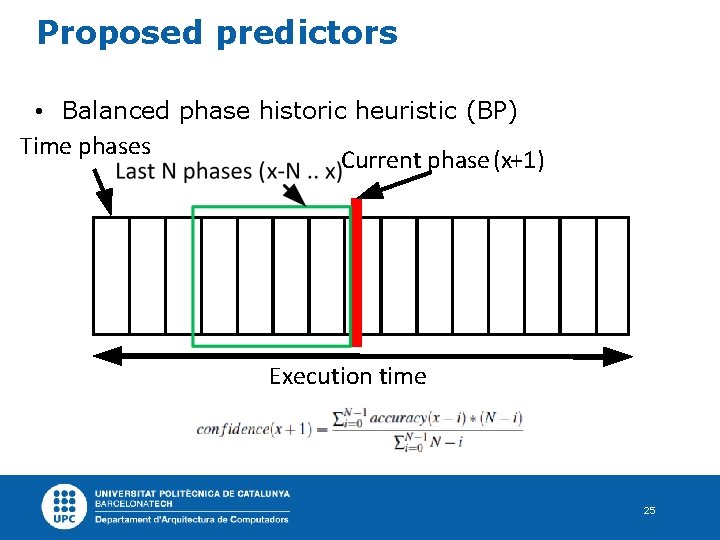

Proposed predictors
• Balanced phase historic heuristic (BP)
Figure: execution time divided into time phases; current phase (x+1)

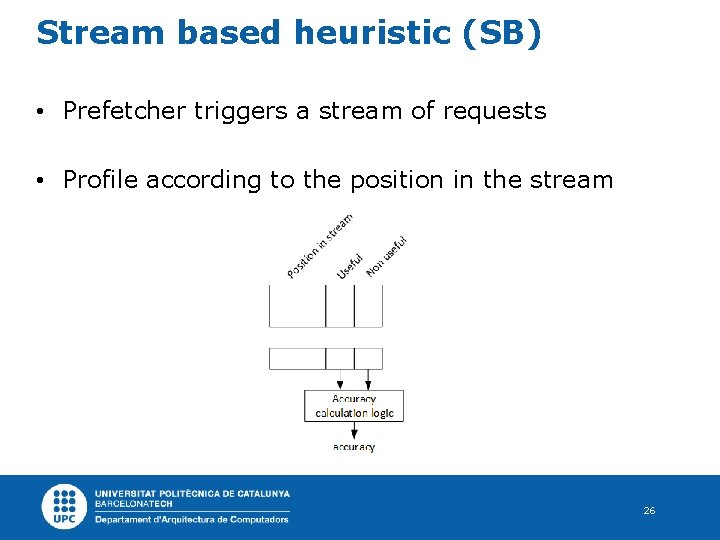

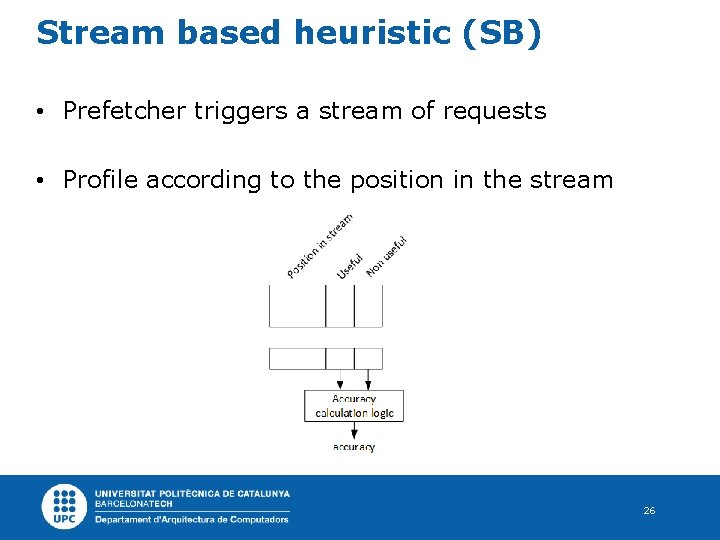

Stream based heuristic (SB)
• Prefetcher triggers a stream of requests
• Profile according to the position in the stream

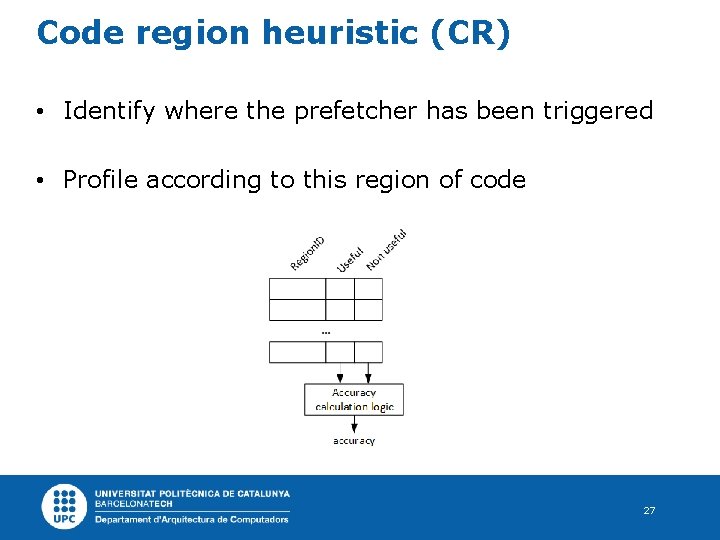

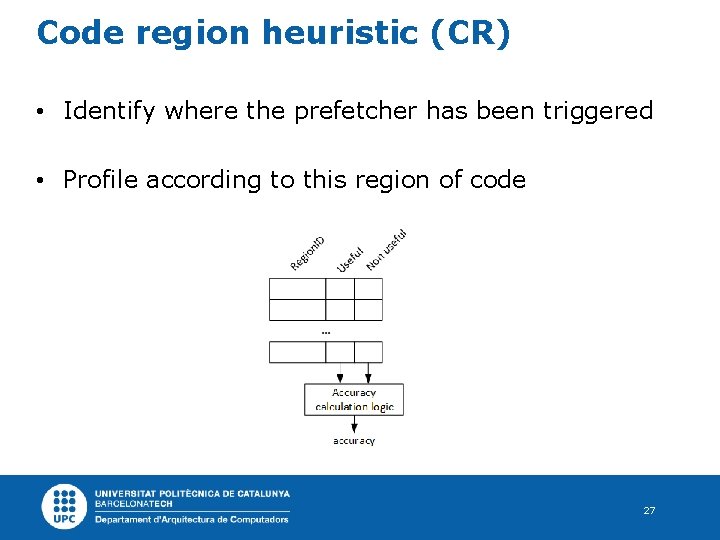

Code region heuristic (CR)
• Identify where the prefetcher has been triggered
• Profile according to this region of code

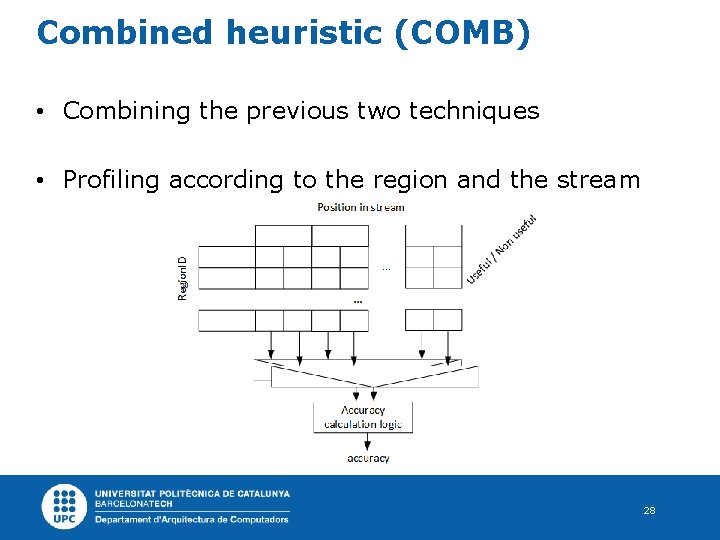

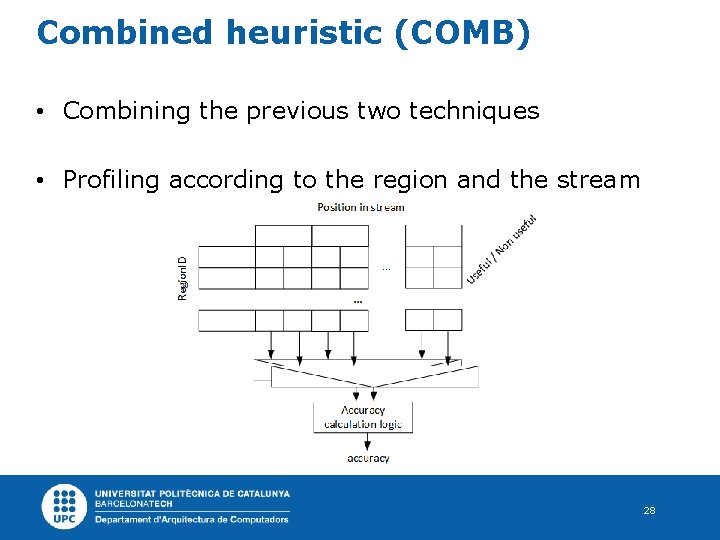

Combined heuristic (COMB)
• Combining the previous two techniques
• Profiling according to the region and the stream

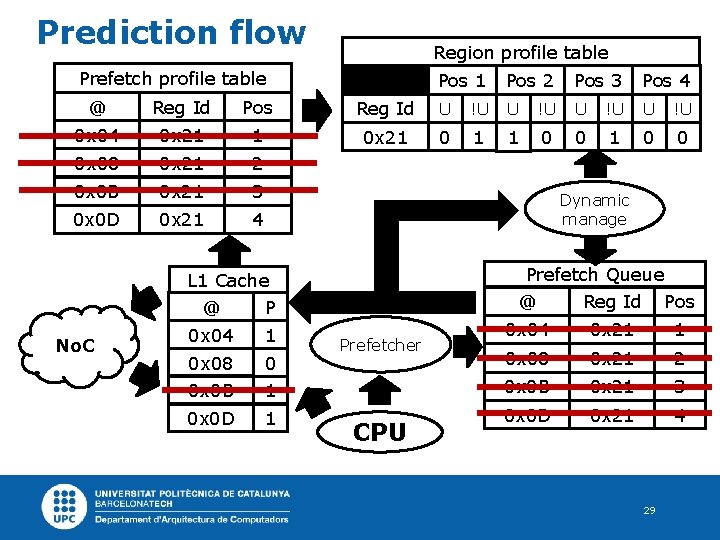

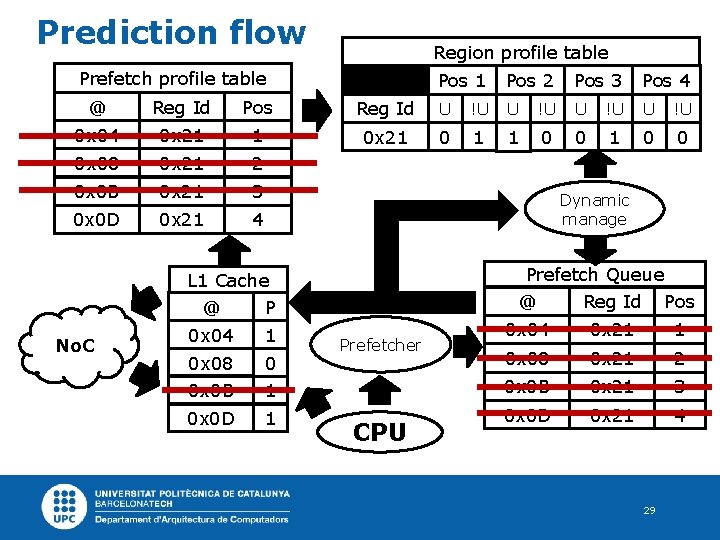

Prediction flow
Figure: CPU, prefetcher, dynamic management, prefetch queue, L1 cache, and NoC, together with the two profiling tables.
• Prefetch queue entries: @, Reg Id, Pos
• Prefetch profile table (@, Reg Id, Pos): 0x04, 0x21, 1; 0x08, 0x21, 2; 0x0B, 0x21, 3; 0x0D, 0x21, 4
• Region profile table: one row per Reg Id (0x21 in the example), with a useful (U) and a not-useful (!U) counter for each stream position (Pos 1 to Pos 4)
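The two tables in the prediction flow can be sketched as a pair of dictionaries. This is a simplified model under assumptions: table sizes and replacement are ignored, and the event names (`issue`, `demand_hit`, `evict_unused`) are hypothetical labels for the moments at which a prefetched block is issued, used by the CPU, or evicted without use.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Key = Tuple[int, int]  # (region id, position in the stream)


class CombinedProfiler:
    """Profiles prefetch usefulness per (code region, stream position)."""

    def __init__(self) -> None:
        # Prefetch profile table: outstanding prefetched address -> (Reg Id, Pos)
        self.prefetch_profile: Dict[int, Key] = {}
        # Region profile table: (Reg Id, Pos) -> [U, !U] counters
        self.region_profile: Dict[Key, List[int]] = defaultdict(lambda: [0, 0])

    def issue(self, addr: int, region_id: int, pos: int) -> None:
        self.prefetch_profile[addr] = (region_id, pos)

    def demand_hit(self, addr: int) -> None:
        """The CPU used a prefetched block: count it as useful."""
        key = self.prefetch_profile.pop(addr, None)
        if key is not None:
            self.region_profile[key][0] += 1

    def evict_unused(self, addr: int) -> None:
        """A prefetched block left the cache unused: count it as not useful."""
        key = self.prefetch_profile.pop(addr, None)
        if key is not None:
            self.region_profile[key][1] += 1

    def accuracy(self, region_id: int, pos: int) -> Optional[float]:
        useful, not_useful = self.region_profile.get((region_id, pos), (0, 0))
        total = useful + not_useful
        return useful / total if total else None
```

Using the addresses from the slide, issuing 0x04 to 0x0D from region 0x21 at positions 1 to 4 and then hitting 0x04 while evicting 0x08 unused gives an accuracy of 1.0 for position 1, 0.0 for position 2, and no data yet for positions 3 and 4.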

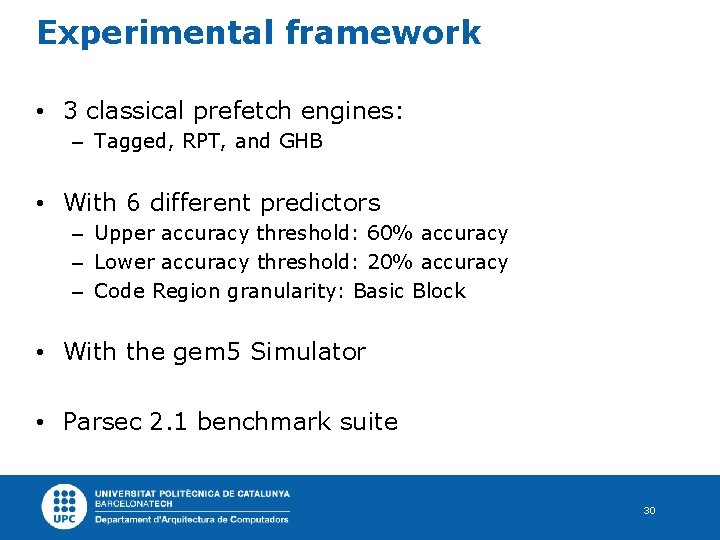

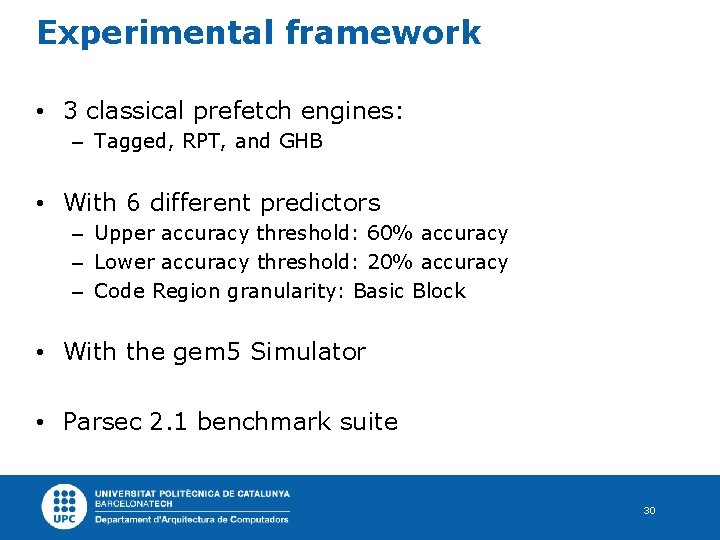

Experimental framework
• 3 classical prefetch engines:
  – Tagged, RPT, and GHB
• With 6 different predictors
  – Upper accuracy threshold: 60% accuracy
  – Lower accuracy threshold: 20% accuracy
  – Code region granularity: basic block
• With the gem5 simulator
• PARSEC 2.1 benchmark suite

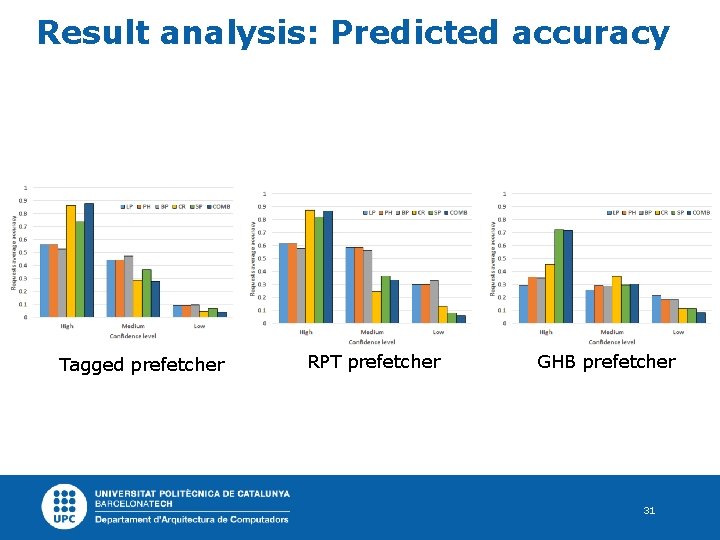

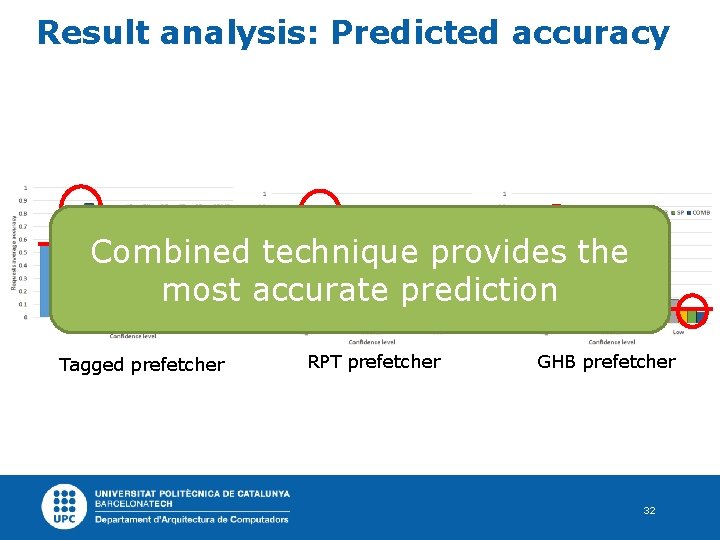

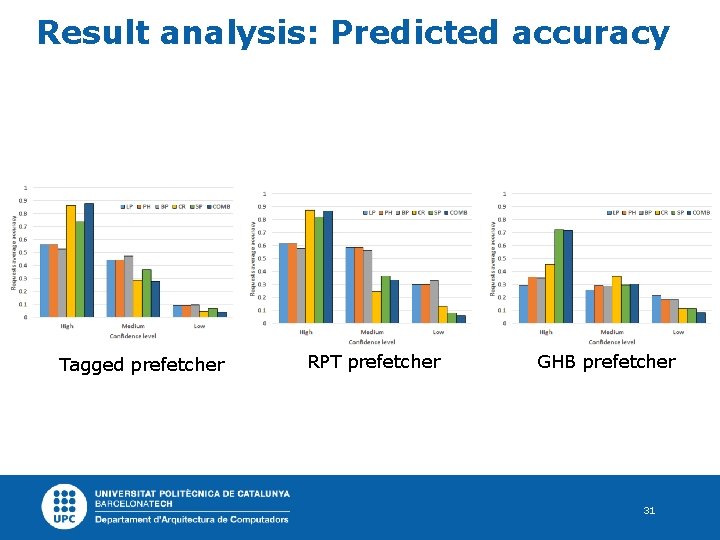

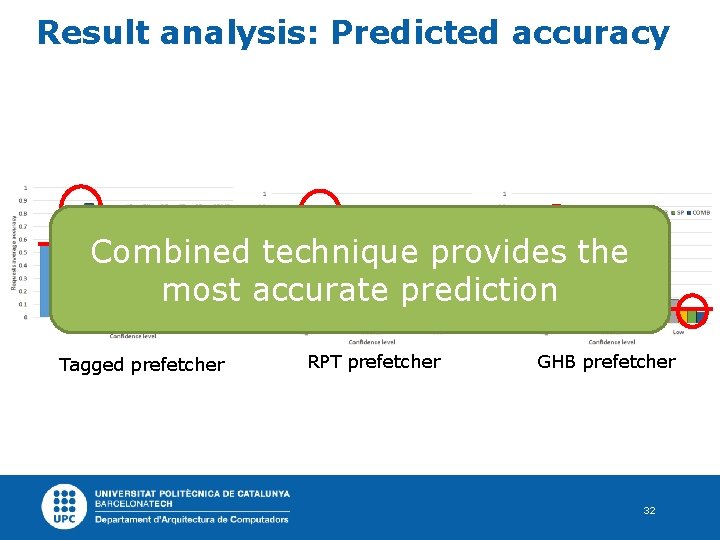

Result analysis: Predicted accuracy
Figure panels: Tagged prefetcher, RPT prefetcher, GHB prefetcher

Result analysis: Predicted accuracy
Figure panels: Tagged prefetcher, RPT prefetcher, GHB prefetcher
The combined technique provides the most accurate prediction


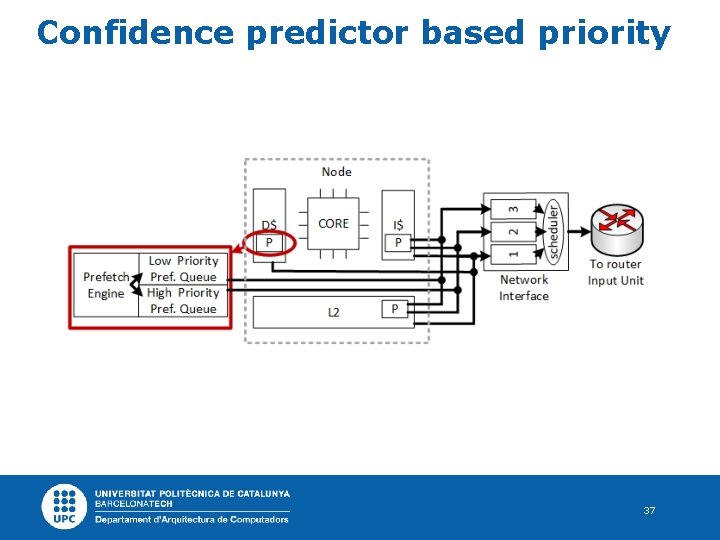

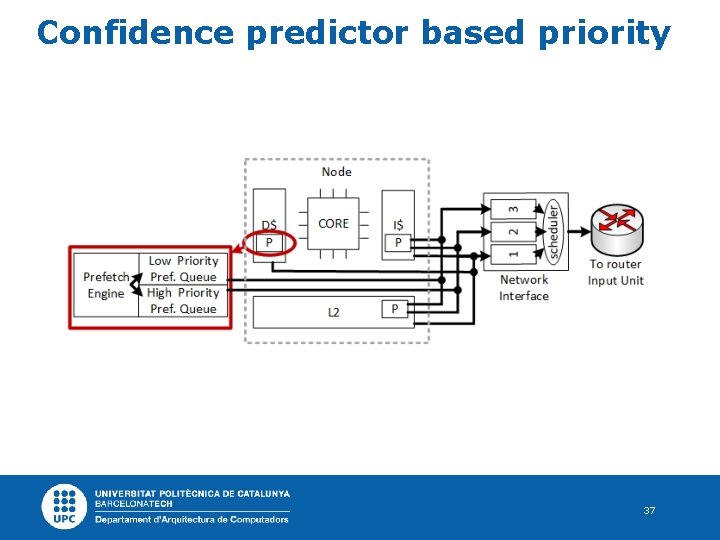

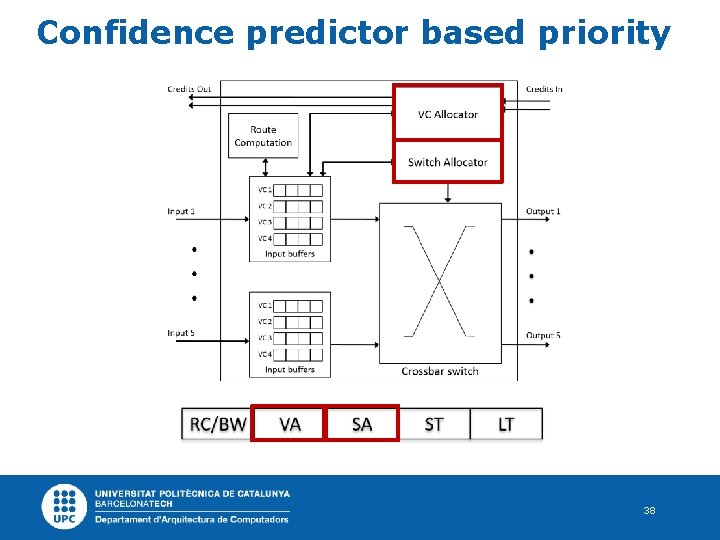

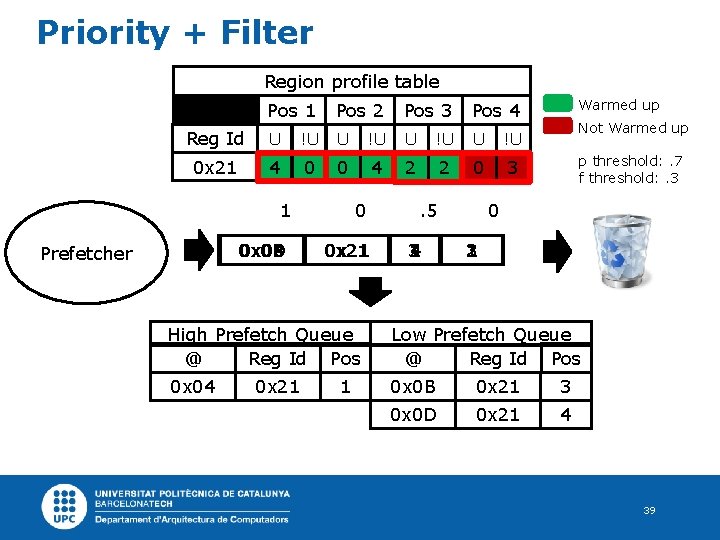

Dynamic management of requests
• Novel dynamic management technique
• Using our confidence predictor
• Based on filtering and prioritization
• Discard low confidence requests
• Prioritize high and medium confidence requests

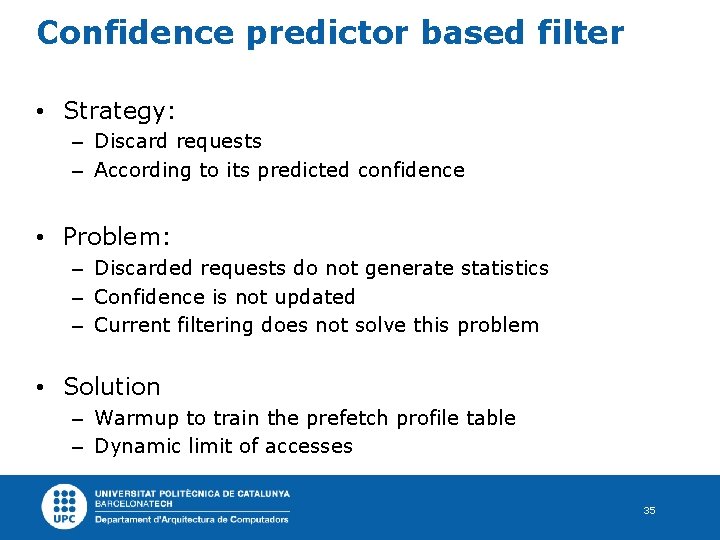

Confidence predictor based filter
• Strategy:
  – Discard requests
  – According to their predicted confidence
• Problem:
  – Discarded requests do not generate statistics
  – Confidence is not updated
  – Current filtering does not solve this problem
• Solution:
  – Warmup to train the prefetch profile table
  – Dynamic limit of accesses

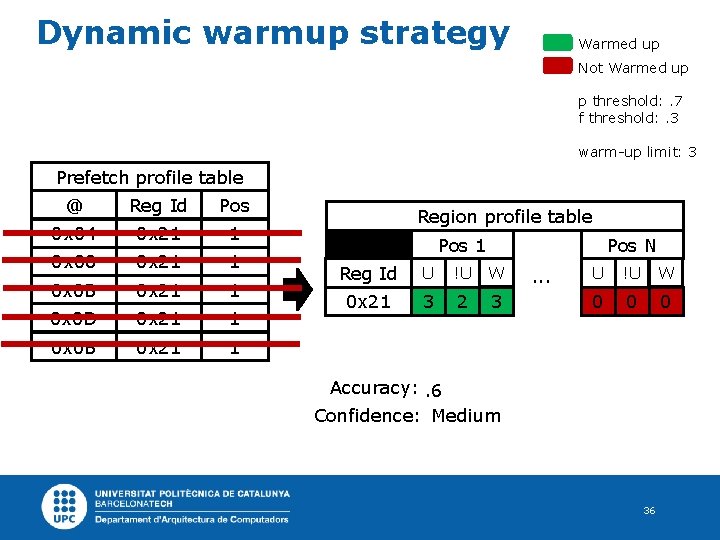

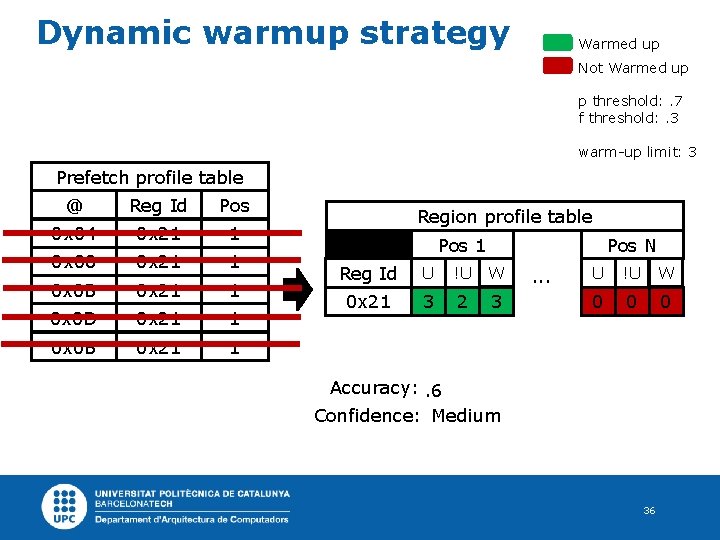

Dynamic warmup strategy
Figure: example with p threshold 0.7, f threshold 0.3, and warm-up limit 3.
• Prefetch profile table columns: @, Reg Id, Pos (entries for 0x04, 0x08, 0x0B, and 0x0D in region 0x21)
• Region profile table: one row per Reg Id (0x21), with U, !U, and W counters for each position (Pos 1 to Pos N)
• Each position is marked as warmed up or not warmed up, and the figure derives an accuracy and a confidence level (High, Medium) from the counters
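A minimal sketch of the warmup idea follows, using the example parameters from the slide (p threshold 0.7, f threshold 0.3, warm-up limit 3). Several details are assumptions and not taken from the source: the function names, the inclusive comparison against the p threshold, the strict comparison against the f threshold, and the decision to let every request through until the entry is warmed up so that statistics keep being generated.

```python
def confidence(useful: int, not_useful: int, warm: int,
               p_threshold: float = 0.7, f_threshold: float = 0.3,
               warmup_limit: int = 3) -> str:
    """Classify one (region, position) entry from its U, !U and W counters."""
    if warm < warmup_limit or useful + not_useful == 0:
        return "not_warmed_up"
    accuracy = useful / (useful + not_useful)
    if accuracy >= p_threshold:   # boundary handling is an assumption
        return "high"
    if accuracy < f_threshold:
        return "low"
    return "medium"


def should_issue(useful: int, not_useful: int, warm: int) -> bool:
    """Filter only warmed-up, low-confidence requests (assumed policy)."""
    return confidence(useful, not_useful, warm) != "low"
```

For example, an entry with U = 2, !U = 3 and W = 3 has an accuracy of 0.4 and is classified as medium, while the same counters with W = 1 are not yet trusted and the request is issued regardless.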

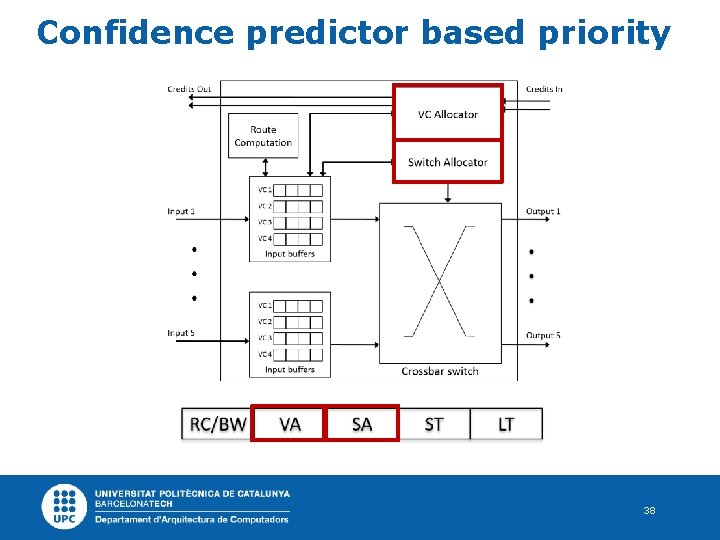

Confidence predictor based priority

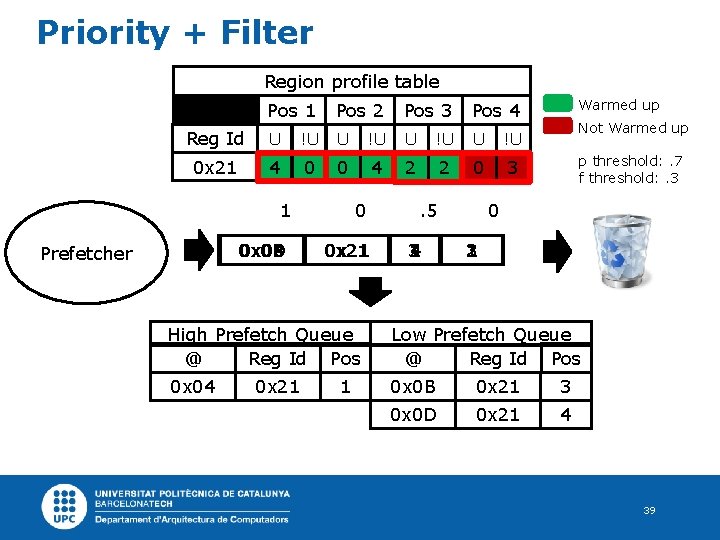

Priority + Filter
Figure: example with p threshold 0.7 and f threshold 0.3.
• The prefetcher generates requests for 0x04, 0x08, 0x0B, and 0x0D in region 0x21
• Region profile table: Reg Id 0x21 with U and !U counters for Pos 1 to Pos 4, each position warmed up or not warmed up
• Requests are placed in a high prefetch queue or a low prefetch queue (entries: @, Reg Id, Pos)
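Combining priority and filter can be sketched with two FIFO queues. This is an illustration under assumptions, not the evaluated hardware: the class name `PrefetchDispatcher` is hypothetical, requests at or above the p threshold go to the high queue, requests below the f threshold are discarded, and everything else (including entries that are not warmed up, represented here by `None`) goes to the low queue.

```python
from collections import deque
from typing import Deque, Optional


class PrefetchDispatcher:
    """Two-queue dispatcher: filter low confidence, prioritize high confidence."""

    def __init__(self, p_threshold: float = 0.7, f_threshold: float = 0.3) -> None:
        self.p_threshold = p_threshold
        self.f_threshold = f_threshold
        self.high: Deque[int] = deque()
        self.low: Deque[int] = deque()
        self.dropped = 0

    def enqueue(self, addr: int, accuracy: Optional[float]) -> None:
        """accuracy is the predicted accuracy, or None if not warmed up."""
        if accuracy is not None and accuracy < self.f_threshold:
            self.dropped += 1            # filtered: never reaches the NoC
        elif accuracy is not None and accuracy >= self.p_threshold:
            self.high.append(addr)
        else:
            self.low.append(addr)

    def next_request(self) -> Optional[int]:
        """Serve the high queue first; fall back to the low queue."""
        if self.high:
            return self.high.popleft()
        if self.low:
            return self.low.popleft()
        return None
```

Strict priority is the simplest policy to show. A real design would also have to decide how to avoid starving the low queue, which the slides do not specify.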

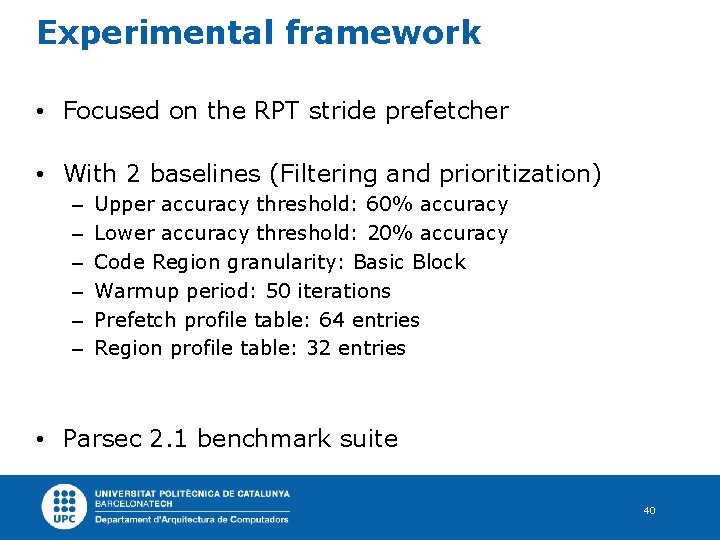

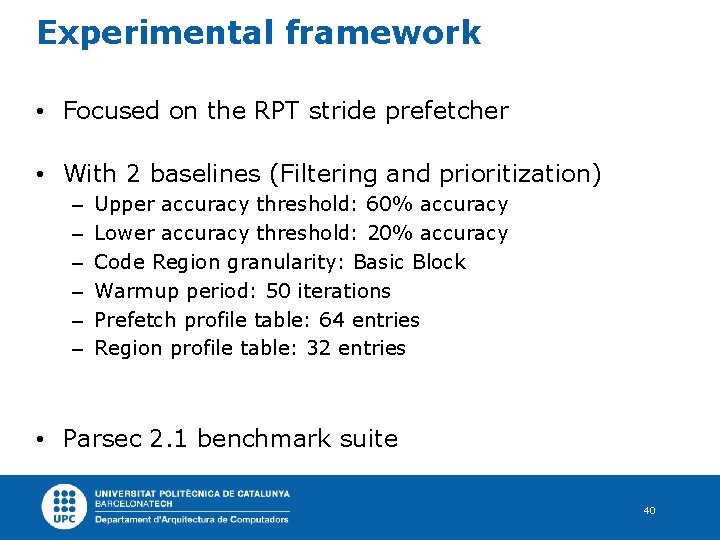

Experimental framework
• Focused on the RPT stride prefetcher
• With 2 baselines (filtering and prioritization)
  – Upper accuracy threshold: 60% accuracy
  – Lower accuracy threshold: 20% accuracy
  – Code region granularity: basic block
  – Warmup period: 50 iterations
  – Prefetch profile table: 64 entries
  – Region profile table: 32 entries
• PARSEC 2.1 benchmark suite

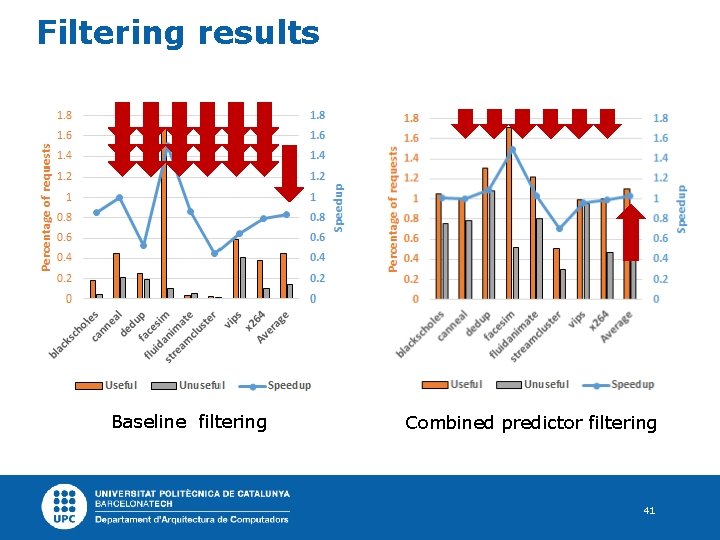

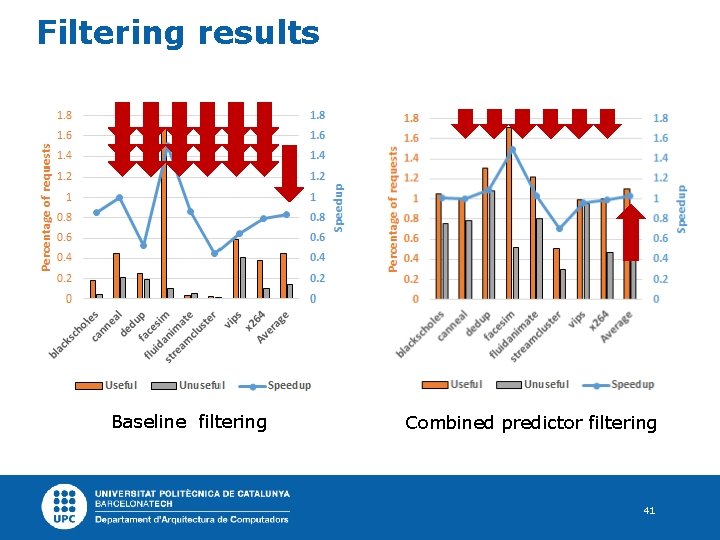

Filtering results
Figure panels: baseline filtering; combined predictor filtering

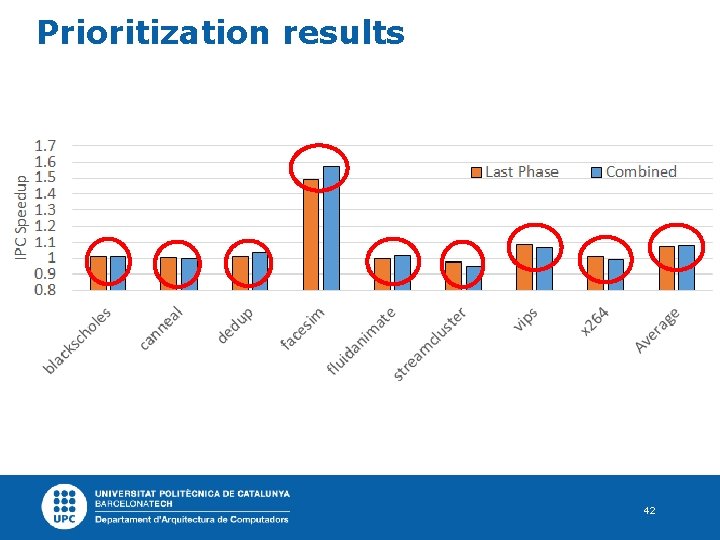

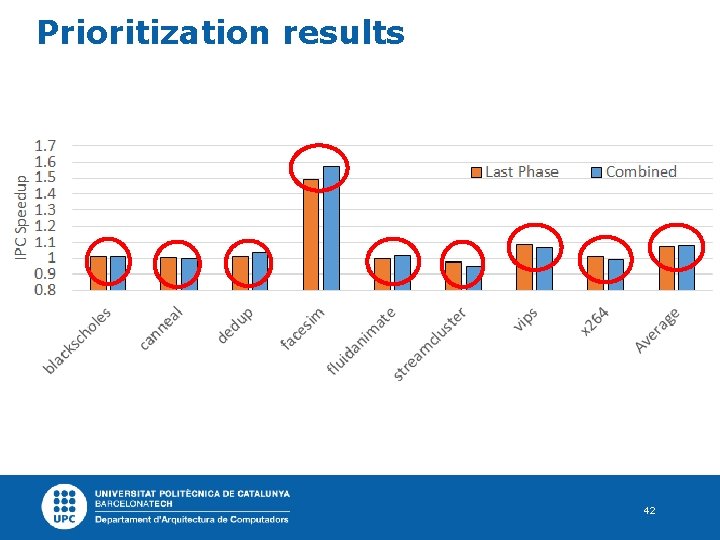

Prioritization results

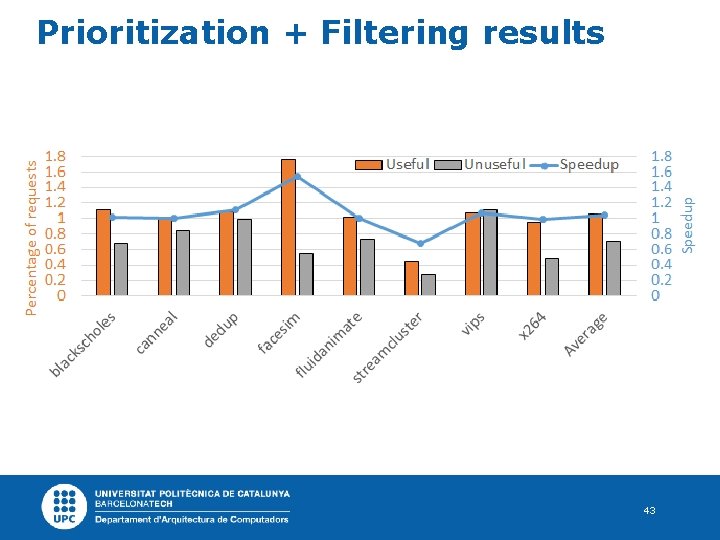

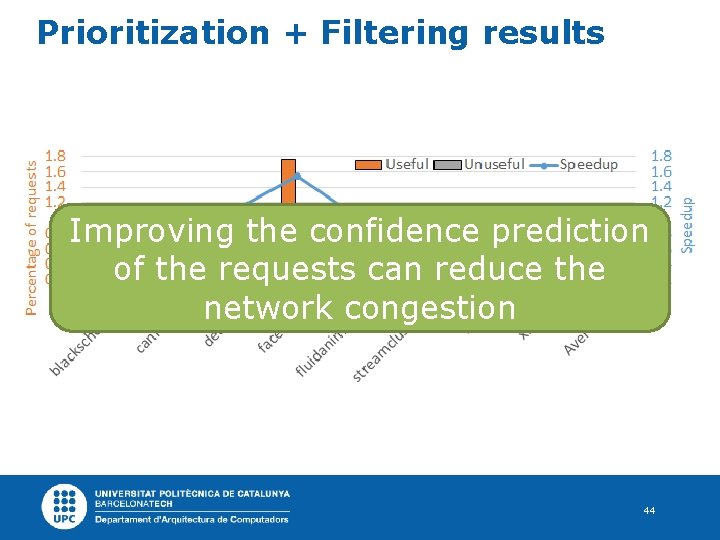

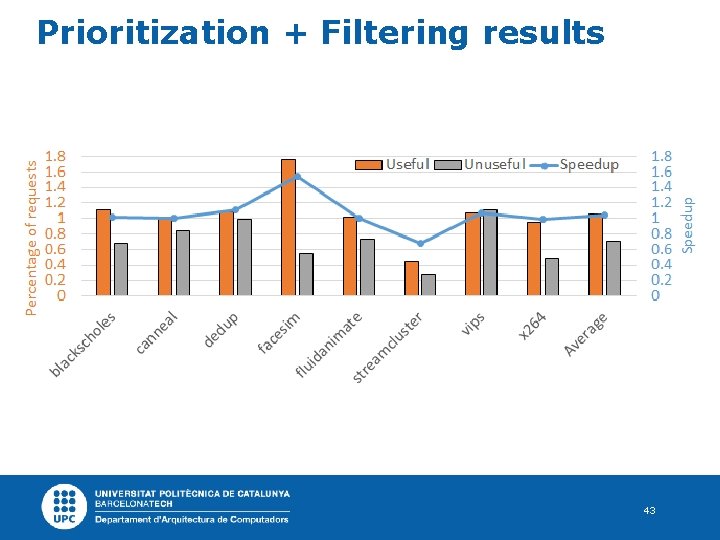

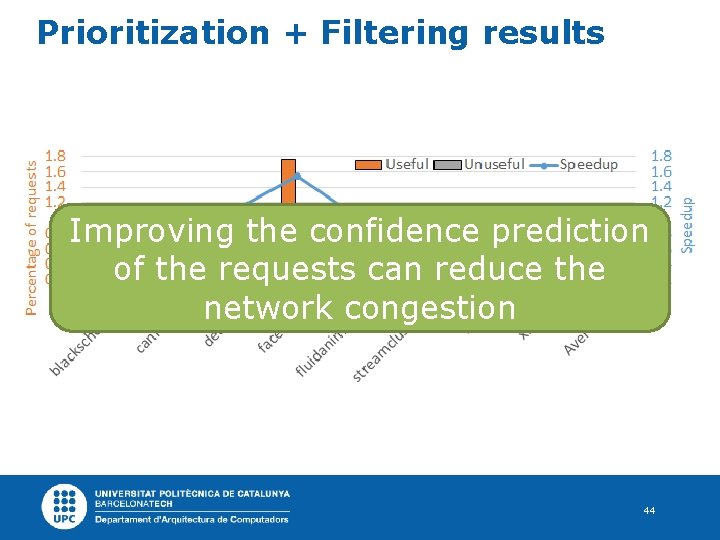

Prioritization + Filtering results

Prioritization + Filtering results
Improving the confidence prediction of the requests can reduce the network congestion


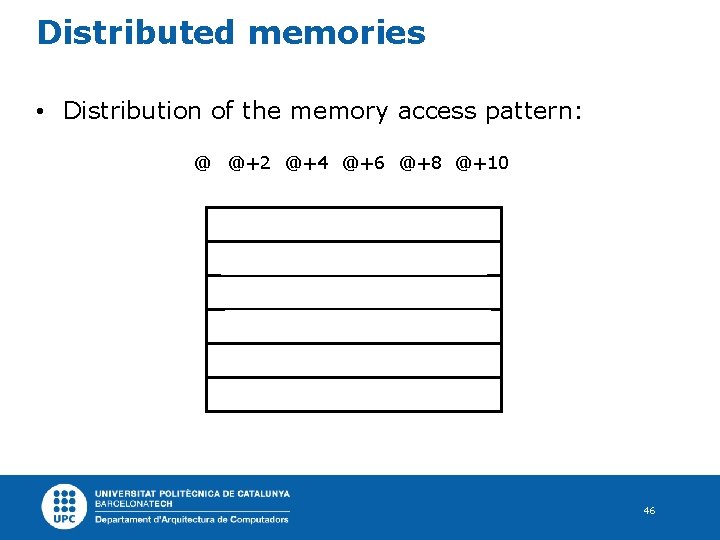

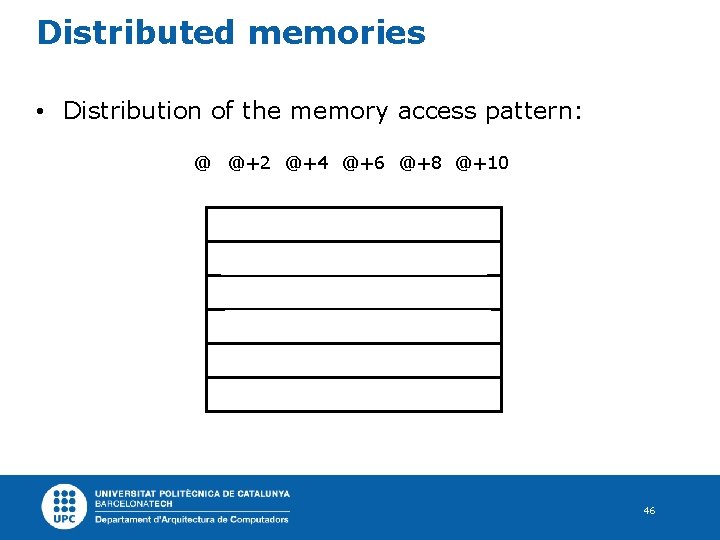

Distributed memories
• Distribution of the memory access pattern: @, @+2, @+4, @+6, @+8, @+10

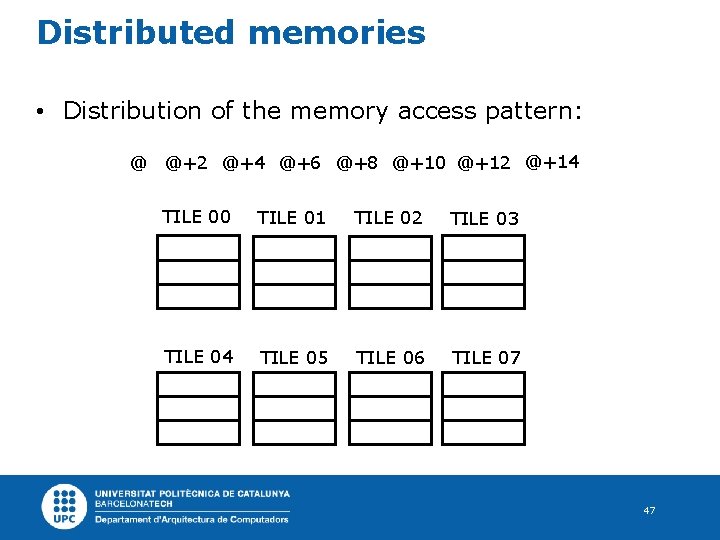

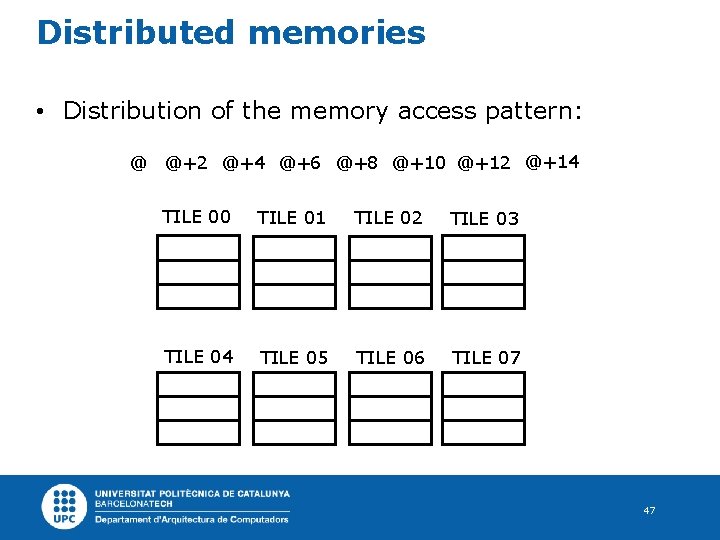

Distributed memories
• Distribution of the memory access pattern: @, @+2, @+4, @+6, @+8, @+10, @+12, @+14
Figure: the accesses are spread across the tiles
  – TILE 00: @
  – TILE 01: @+2
  – TILE 02: @+4
  – TILE 03: @+6
  – TILE 04: @+8
  – TILE 05: @+10
  – TILE 06: @+12
  – TILE 07: @+14
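The effect of this distribution on what each tile observes can be sketched as follows. The mapping function is an assumption: the home tile is taken as the address divided by an interleaving granularity, modulo the number of tiles, and the granularity of 2 is chosen only so that the stride-2 stream reproduces the layout in the figure.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def home_tile(addr: int, num_tiles: int = 8, granularity: int = 2) -> int:
    """Assumed address-interleaved mapping of an address to its home tile."""
    return (addr // granularity) % num_tiles


def per_tile_view(addresses: Iterable[int], num_tiles: int = 8,
                  granularity: int = 2) -> Dict[int, List[int]]:
    """Group a core's access stream by the tile that actually receives it."""
    view: Dict[int, List[int]] = defaultdict(list)
    for addr in addresses:
        view[home_tile(addr, num_tiles, granularity)].append(addr)
    return dict(view)


# A stride-2 stream starting at address 0 (standing in for "@").
stream = [2 * i for i in range(16)]
view = per_tile_view(stream)
```

In this sketch tile 0 only sees addresses 0 and 16, so a prefetcher attached to that tile observes a delta of 16 instead of the real stride of 2, and sees only one access in eight. This is the pattern detection problem that the following slides describe.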

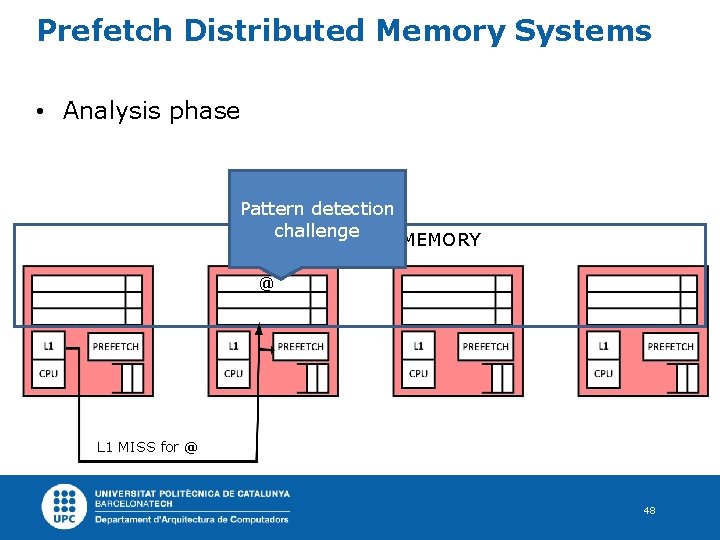

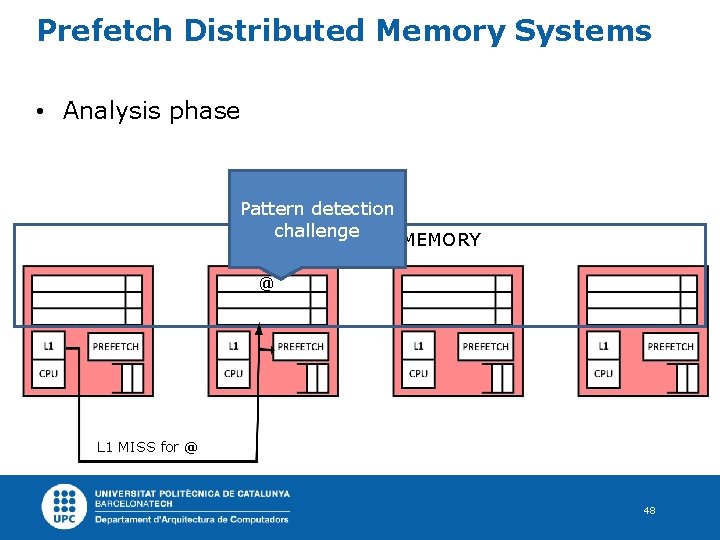

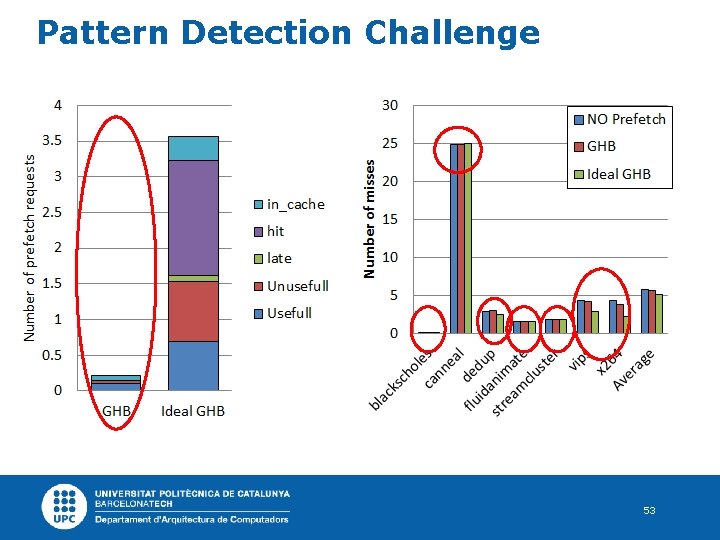

Prefetch Distributed Memory Systems
• Analysis phase: pattern detection challenge
Figure: distributed L2 memory; L1 miss for @

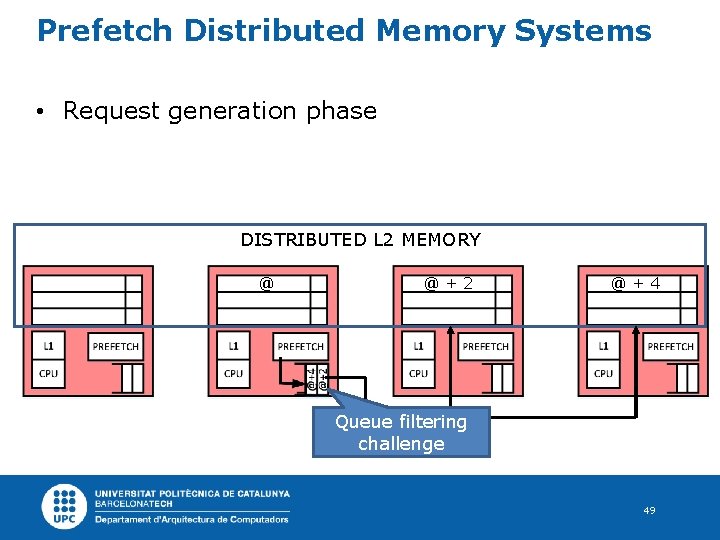

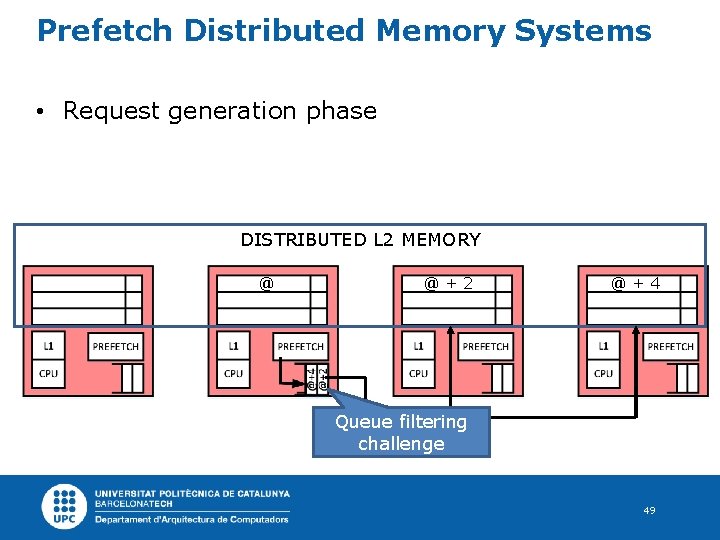

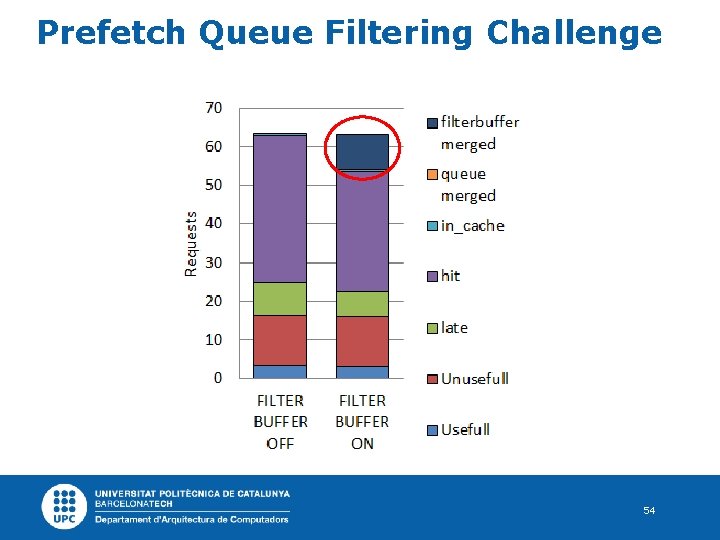

Prefetch Distributed Memory Systems
• Request generation phase: queue filtering challenge
Figure: distributed L2 memory; requests for @, @+2, @+4

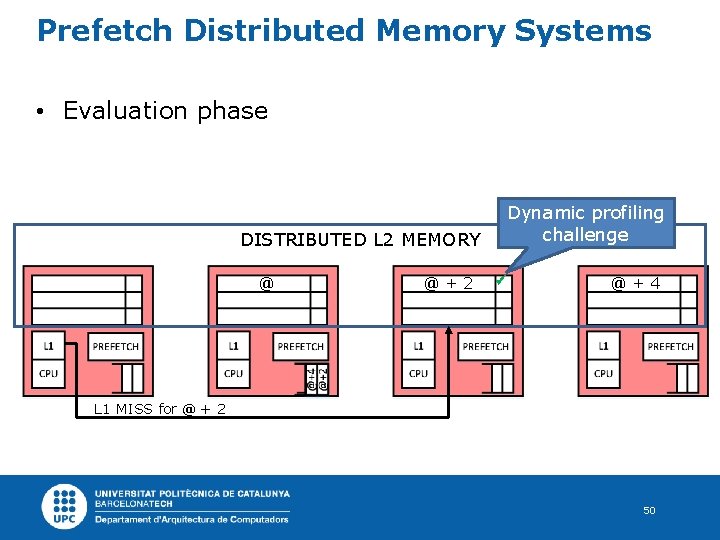

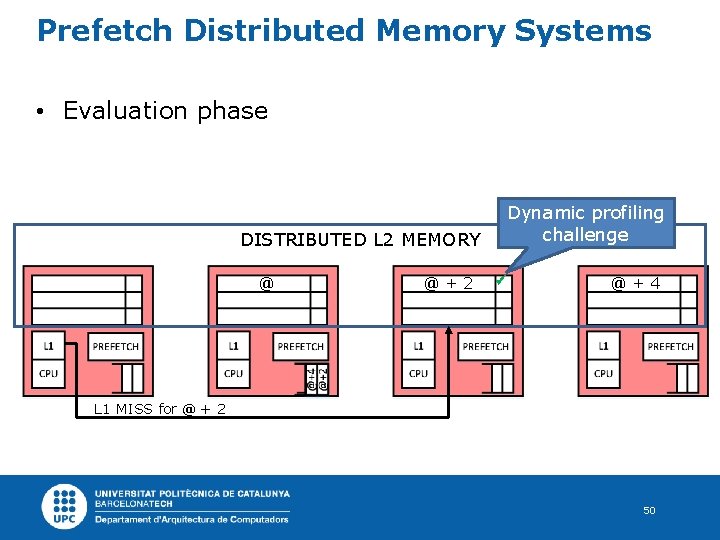

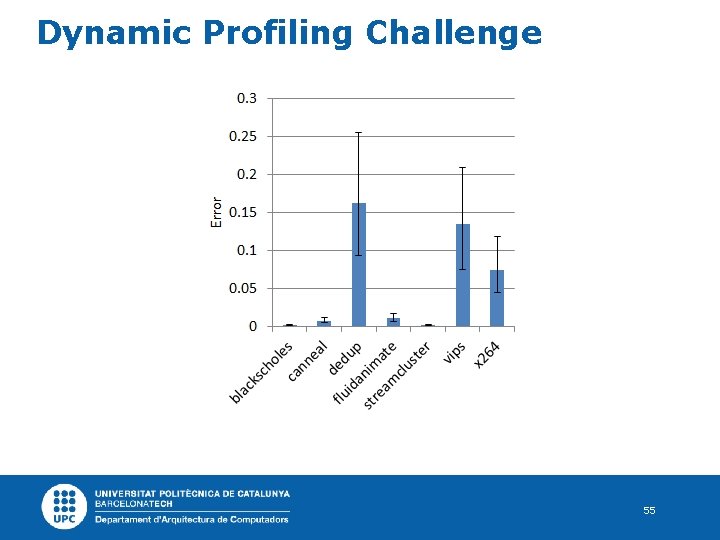

Prefetch Distributed Memory Systems
• Evaluation phase: dynamic profiling challenge
Figure: distributed L2 memory; @, @+2, @+4; L1 miss for @+2

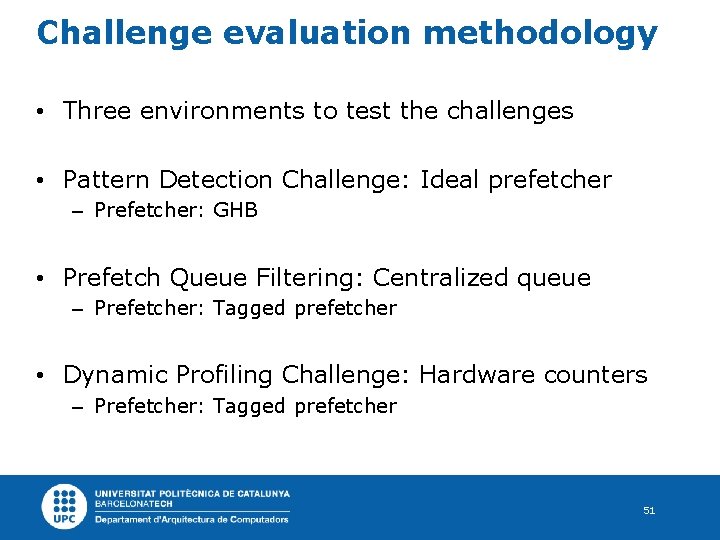

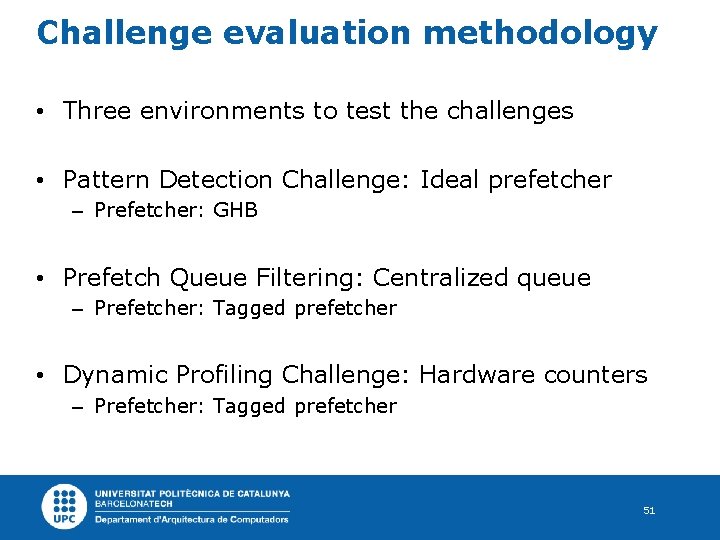

Challenge evaluation methodology
• Three environments to test the challenges
• Pattern detection challenge: ideal prefetcher
  – Prefetcher: GHB
• Prefetch queue filtering: centralized queue
  – Prefetcher: Tagged prefetcher
• Dynamic profiling challenge: hardware counters
  – Prefetcher: Tagged prefetcher

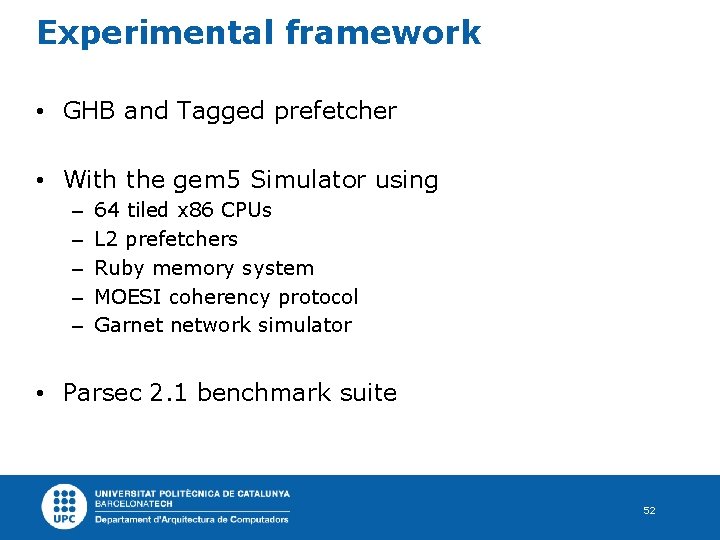

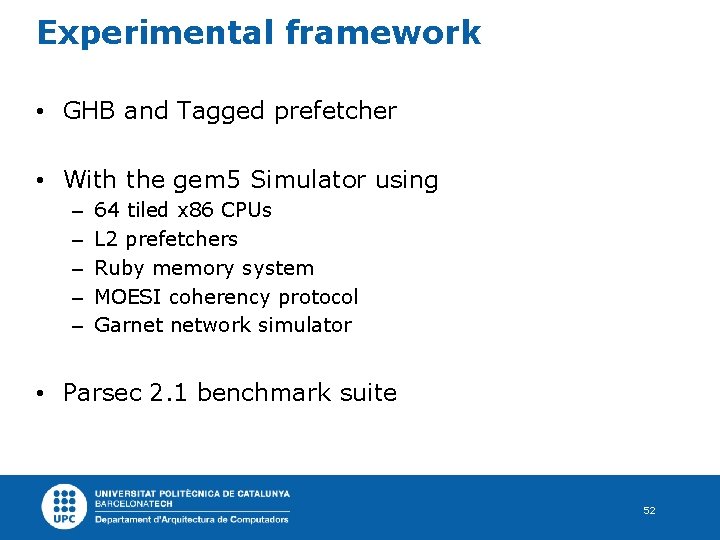

Experimental framework
• GHB and Tagged prefetcher
• With the gem5 simulator using:
  – 64 tiled x86 CPUs
  – L2 prefetchers
  – Ruby memory system
  – MOESI coherency protocol
  – Garnet network simulator
• PARSEC 2.1 benchmark suite

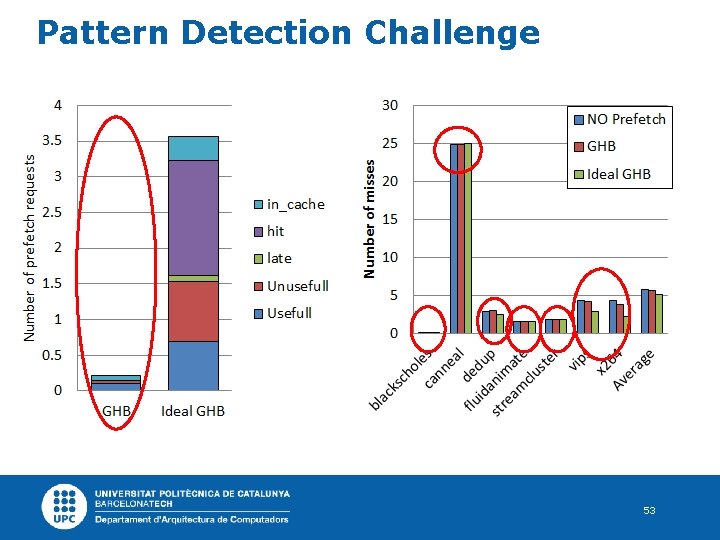

Pattern Detection Challenge

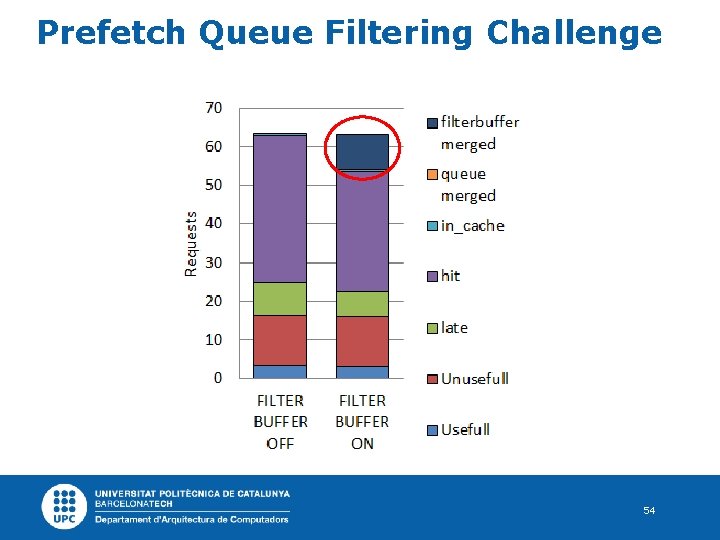

Prefetch Queue Filtering Challenge

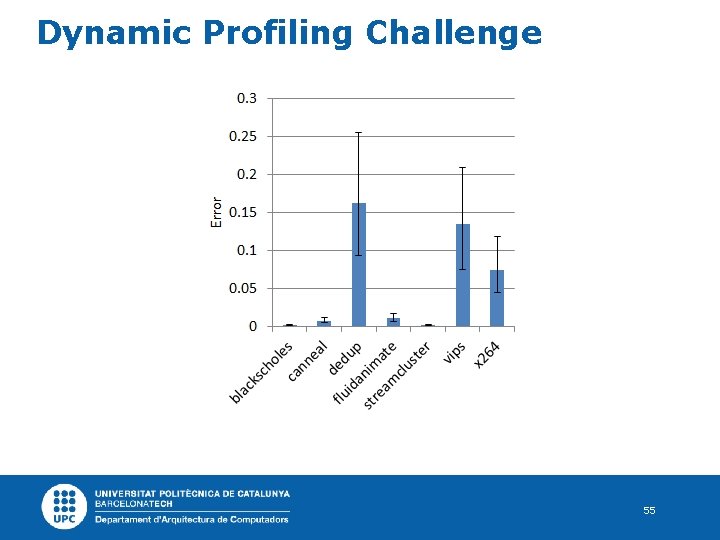

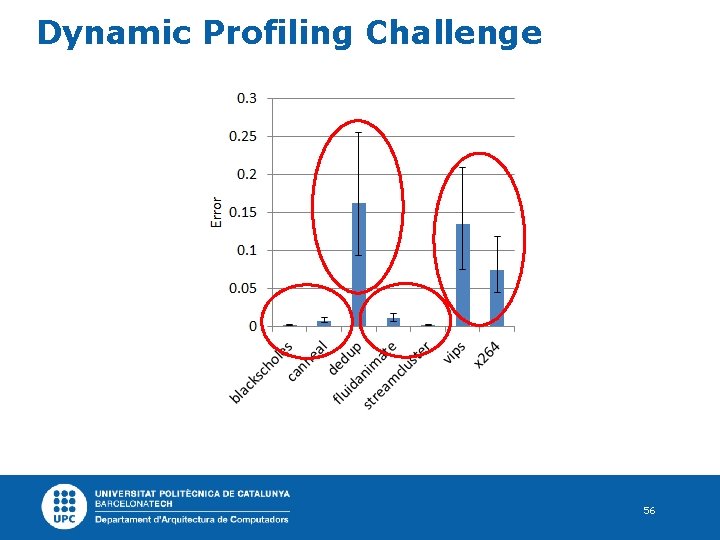

Dynamic Profiling Challenge

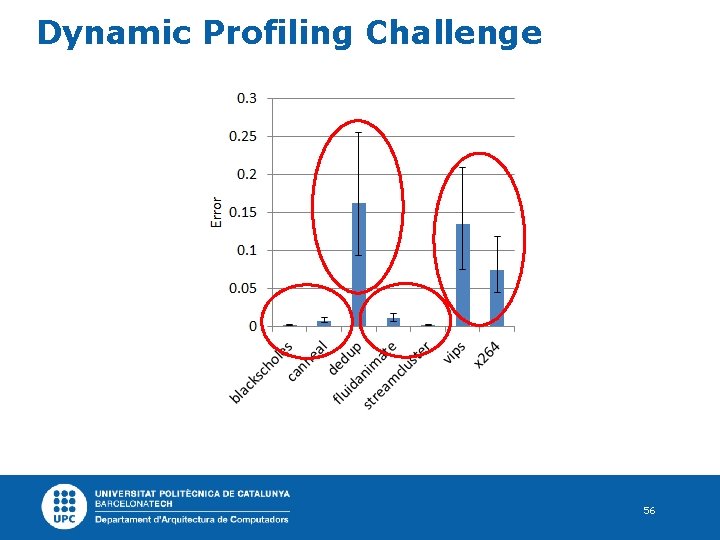

Dynamic Profiling Challenge

Facing the challenges
• There are two main options
  – Redesign the entire prefetch philosophy
  – Adapt the current techniques to work with DSMs
• Moreover, there are two main directions
  – Centralize the information in this kind of architecture
    Handicap: increased communication
  – Distribute the prefetcher
    Handicap: distributing the prefetcher smartly
There is still room for improvement


Conclusions
• We have seen that new technologies provide opportunities to improve prefetching.
• We have shown that incorporating the effect of the NoC may increase the accuracy of the simulation and lead to better decisions.
• We have demonstrated that the contention injected by the prefetcher in the NoC is not negligible and can increase the memory latency.

Conclusions
• We have proposed a novel confidence predictor that can accurately predict the usefulness of prefetch requests before they are issued.
• We have successfully used our confidence predictor to improve techniques that try to reduce the contention on the network-on-chip.
• We have identified unexpected challenges that appear when prefetching in DSMs and we have provided research directions to face them.

Contributions
• Prefetching evaluation in multi-core platforms
  – M. Torrents, R. Martínez, C. Molina, An Accurate and Detailed Prefetching Simulation Framework for gem5, The Second gem5 User Workshop (GEM5’15), held in conjunction with the 42nd International Symposium on Computer Architecture (ISCA’15), Portland (USA), June 2015.
  – M. Torrents, R. Martínez, C. Molina, Network Aware Performance Evaluation of Prefetching Techniques in CMPs, Journal of Simulation Modelling Practice and Theory, Volume 45, June 2014.
  – M. Torrents, R. Martínez, P. Lopez, J. M. Codina, A. Gonzalez, Comparative Study of Prefetching Mechanisms, In XXI Jornadas de Paralelismo, 2010.
7.4.2

Contributions
• Confidence predictor mechanisms for prefetching in CMPs
  – M. Torrents, R. Martínez, C. Molina, CRaSP: A novel confidence predictor to improve prefetching performance in CMPs, Submitted to The 44th International Symposium on Computer Architecture (ISCA’17), Toronto, ON, Canada, June 2017.
  – M. Torrents, R. Martínez, C. Molina, Improving the Prefetching Performance Through Code Region Profiling, In Proceedings of the 2nd International BSC Doctoral Symposium (BSC’15), Barcelona (Spain), May 2015.
  – R. Martínez, E. Gibert, P. Lopez, M. Torrents, et al., Profiling asynchronous events resulting from the execution of software at code region granularity. US Patent App. 13/993,054, 2011.
7.4.3

Contributions
• Improving the Prioritization and Filtering of Prefetch Requests
  – M. Torrents, R. Martínez, C. Molina, Improving the prioritization and filtering of prefetching request, Tech. Report UPC-DAC-RR-2016-5, July 2016.
7.4.4
• Prefetching Challenges in Distributed Shared Memories for CMPs
  – M. Torrents, R. Martínez, C. Molina, Facing Prefetching Challenges in Distributed Shared Memories, The Journal of Supercomputing (JSC’16), February 2016.
  – M. Torrents, R. Martínez, C. Molina, Prefetching Challenges in Distributed Memories for CMPs, In Proceedings of the International Conference on Computational Science (ICCS’15), Reykjavík (Iceland), June 2015.

Improving Prefetching Mechanisms for Tiled CMP Platforms
22nd July 2016
Candidate: Martí Torrents
Advisors: Raúl Martínez, Carlos Molina
Tutor: Antonio González

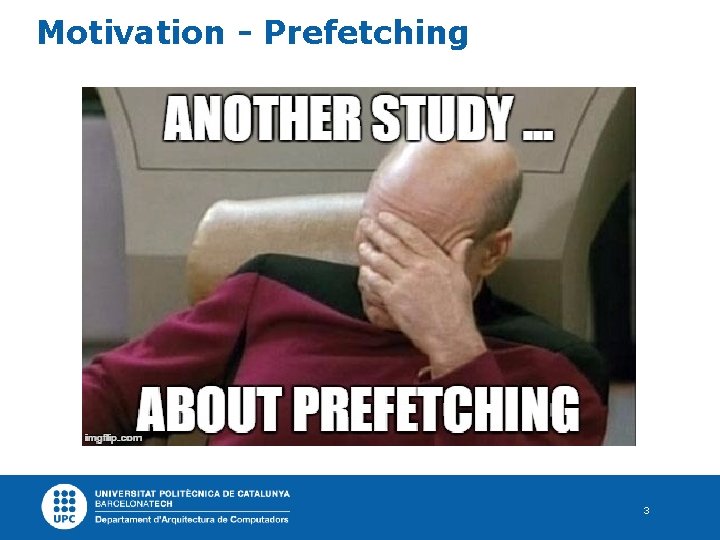

Reducing the division costs
• Calculate and evaluate the accuracy: is $ACC > TH$?
  – $ACC = \dfrac{USEFUL}{USEFUL + NON\_USEFUL}$
  – $USEFUL > USEFUL \cdot TH + NON\_USEFUL \cdot TH$
  – $USEFUL - USEFUL \cdot TH > NON\_USEFUL \cdot TH$
  – $USEFUL \cdot (1 - TH) > NON\_USEFUL \cdot TH$
• If we force TH to be a percentage that is a multiple of 10 (for example, $TH = 0.7$):
  – $USEFUL \cdot (1 - 0.7) > NON\_USEFUL \cdot 0.7$
  – $3 \cdot USEFUL > 7 \cdot NON\_USEFUL$
  – $2 \cdot USEFUL + USEFUL > 8 \cdot NON\_USEFUL - NON\_USEFUL$
  – $(USEFUL \ll 1) + USEFUL > (NON\_USEFUL \ll 3) - NON\_USEFUL$
• Cost: 2 shifts + 2 ADD operations + a comparison
• If the shift and ADD operations are done in parallel: 3 cycles
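The derivation can be checked with a short sketch that compares the shift-and-add form against the exact test for a threshold of 0.7. The function names are hypothetical, and the reference check uses integer arithmetic (10 · USEFUL > 7 · (USEFUL + NON USEFUL)) to avoid floating-point rounding.

```python
def above_70_shift(useful: int, not_useful: int) -> bool:
    """Division-free test of ACC > 0.7: (U << 1) + U > (N << 3) - N."""
    return (useful << 1) + useful > (not_useful << 3) - not_useful


def above_70_exact(useful: int, not_useful: int) -> bool:
    """Reference test of U / (U + N) > 0.7 using exact integer arithmetic."""
    total = useful + not_useful
    return total > 0 and 10 * useful > 7 * total


# Exhaustive agreement check over a small range of counter values.
mismatches = [(u, n) for u in range(64) for n in range(64)
              if above_70_shift(u, n) != above_70_exact(u, n)]
```

With 7 useful and 3 not-useful prefetches the accuracy is exactly 0.7, so both tests return False (21 > 21 does not hold), whereas 8 useful and 3 not useful passes (24 > 21).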