Improving GPU Performance via Improved SIMD Efficiency, Ahmad Lashgar

- Slides: 77

Improving GPU Performance via Improved SIMD Efficiency
Ahmad Lashgar
ECE Department, University of Tehran
Supervisors: Ahmad Khonsari, Amirali Baniasadi
November 12, 2012

Outline
• Introduction
• Background
  – Warping
  – Branch divergence
  – Memory divergence
• CROWN
  – Branch divergence management
  – Technique & results
• DWR
  – Memory access coalescing
  – Technique & results
• Conclusion and future work

Introduction
• Figure: CPU, GPU, and DRAM, with example systems such as the Supermicro® 6016GT-TF-FM209 server and the Tianhe-1A supercomputer
• Simple motivation: there are different kinds of threads that can be activated to improve performance, e.g. threads inactivated by branch or memory divergence
• In this study, we propose two techniques, CROWN and DWR, to improve GPU performance

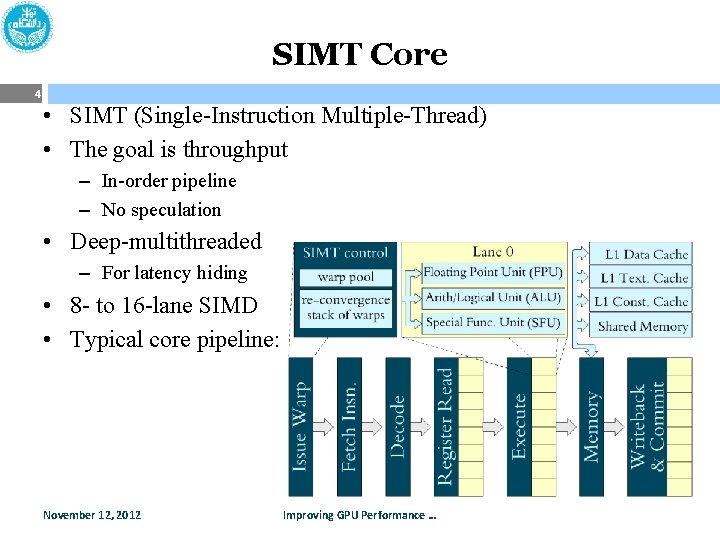

SIMT Core
• SIMT (Single-Instruction Multiple-Thread)
• The goal is throughput
  – In-order pipeline
  – No speculation
• Deeply multithreaded
  – For latency hiding
• 8- to 16-lane SIMD
• Typical core pipeline (figure)


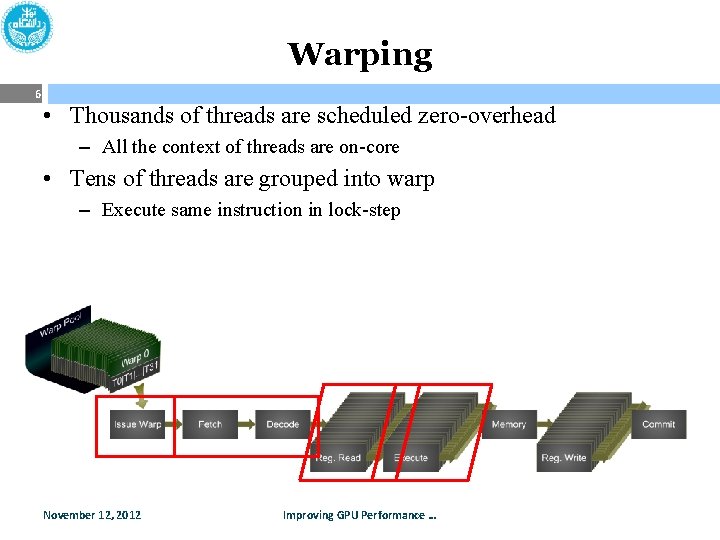

Warping
• Thousands of threads are scheduled with zero overhead
  – The contexts of all threads are kept on-core
• Tens of threads are grouped into a warp
  – The threads of a warp execute the same instruction in lock-step

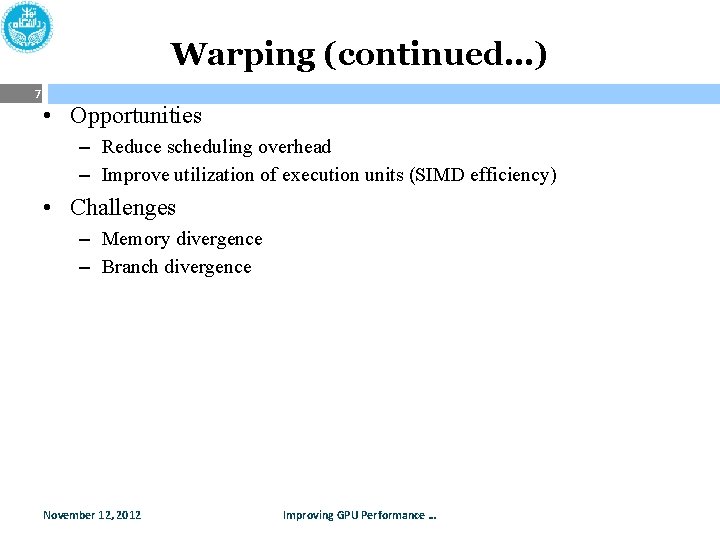

Warping (continued)
• Opportunities
  – Reduced scheduling overhead
  – Better utilization of the execution units (SIMD efficiency)
• Challenges
  – Memory divergence
  – Branch divergence

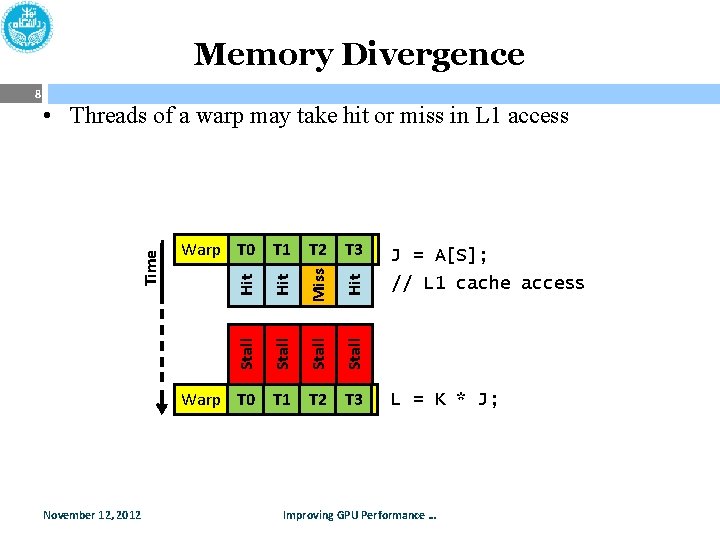

Memory Divergence
• Threads of a warp may hit or miss in their L1 access; a miss stalls the whole warp, including the threads that hit
```cuda
J = A[S]; // L1 cache access
L = K * J;
```
Figure: timeline of a warp (T0–T3) with per-thread hit/miss outcomes and the resulting warp stall.
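A minimal Python sketch of why one miss holds back the whole warp: in lock-step execution, the instruction that consumes the load (here `L = K * J`) waits for the slowest thread. The latency constants and function names are hypothetical, and real GPUs hide part of this stall by switching to other warps.

```python
# Hypothetical latencies in cycles; real values depend on the memory system.
HIT_LATENCY = 1
MISS_LATENCY = 400


def warp_load_latency(outcomes):
    """Cycles before a warp can issue an instruction that depends on its load.

    outcomes: per-thread L1 results ("hit" or "miss") for one warp.
    In lock-step execution the whole warp waits for its slowest thread.
    """
    return max(MISS_LATENCY if o == "miss" else HIT_LATENCY for o in outcomes)


def stalled_hit_threads(outcomes):
    """Threads that hit in L1 but still stall behind a miss in the same warp."""
    if "miss" not in outcomes:
        return 0
    return sum(1 for o in outcomes if o == "hit")


warp = ["hit", "miss", "hit", "hit"]   # hypothetical outcomes for T0..T3
print(warp_load_latency(warp))         # 400
print(stalled_hit_threads(warp))       # 3
```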

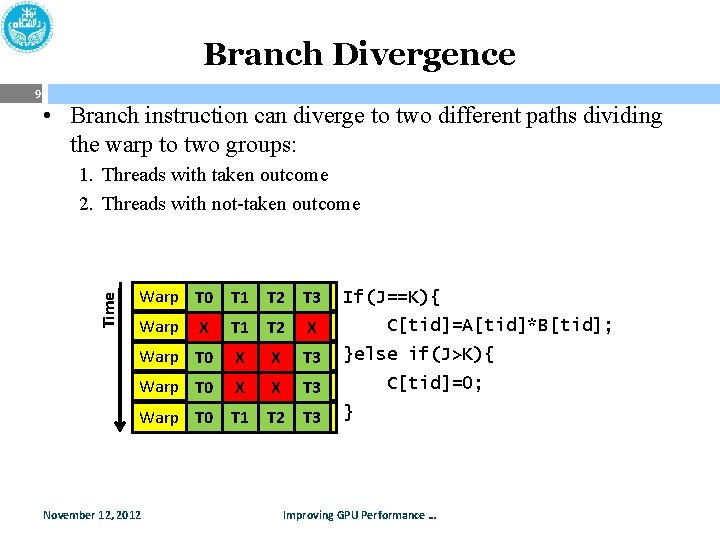

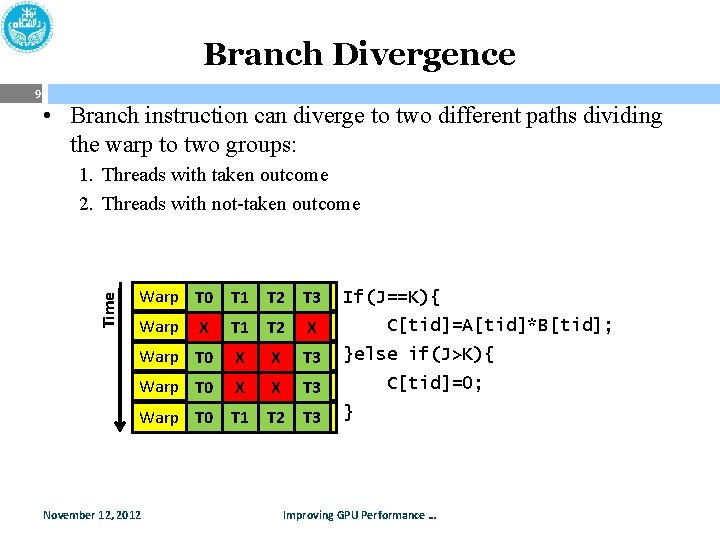

Branch Divergence
• A branch instruction can diverge into two paths, dividing the warp into two groups:
  1. Threads with a taken outcome
  2. Threads with a not-taken outcome
```cuda
if (J == K) {
    C[tid] = A[tid] * B[tid];
} else if (J > K) {
    C[tid] = 0;
}
```
Figure: over time, the warp (T0 T1 T2 T3) runs one path as (X T1 T2 X), then the other as (T0 X X T3), and reconverges as (T0 T1 T2 T3); X marks an idle lane.
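The split into taken and not-taken groups can be modeled with per-lane active masks. This Python sketch is illustrative only; the J and K values are hypothetical, chosen so that the masks reproduce the (X T1 T2 X) and (T0 X X T3) groups in the figure.

```python
def split_on_branch(active_mask, predicate):
    """Split a warp's active mask on a per-thread branch outcome.

    active_mask: list of bools, one per lane (True = thread active).
    predicate:   list of bools, the branch outcome of each lane.
    Returns (taken_mask, not_taken_mask); both are subsets of active_mask.
    """
    taken = [a and p for a, p in zip(active_mask, predicate)]
    not_taken = [a and not p for a, p in zip(active_mask, predicate)]
    return taken, not_taken


def is_divergent(taken, not_taken):
    # The warp diverges only if both paths have at least one active thread.
    return any(taken) and any(not_taken)


# Hypothetical per-thread values for T0..T3; branch condition is J == K.
J = [3, 5, 5, 1]
K = [4, 5, 5, 2]
taken, not_taken = split_on_branch([True] * 4, [j == k for j, k in zip(J, K)])
print(taken)                          # [False, True, True, False]
print(not_taken)                      # [True, False, False, True]
print(is_divergent(taken, not_taken)) # True
```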


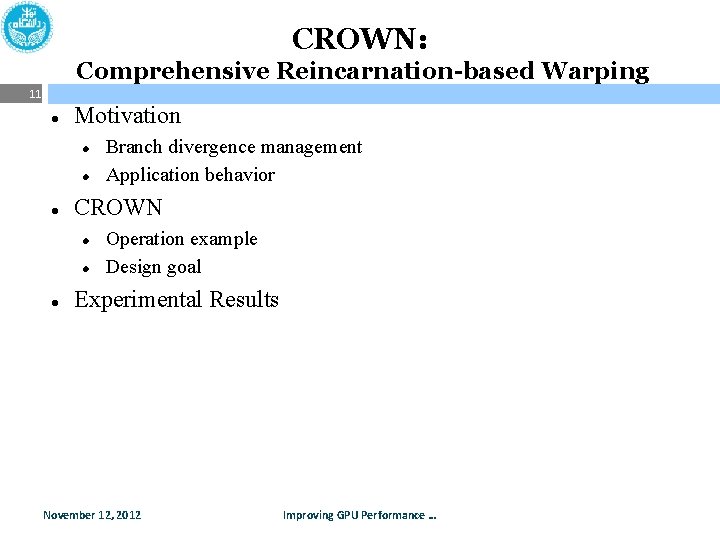

CROWN: Comprehensive Reincarnation-based Warping
• Motivation
  – Branch divergence management
  – Application behavior
• CROWN
  – Design goal
  – Operation example
• Experimental results

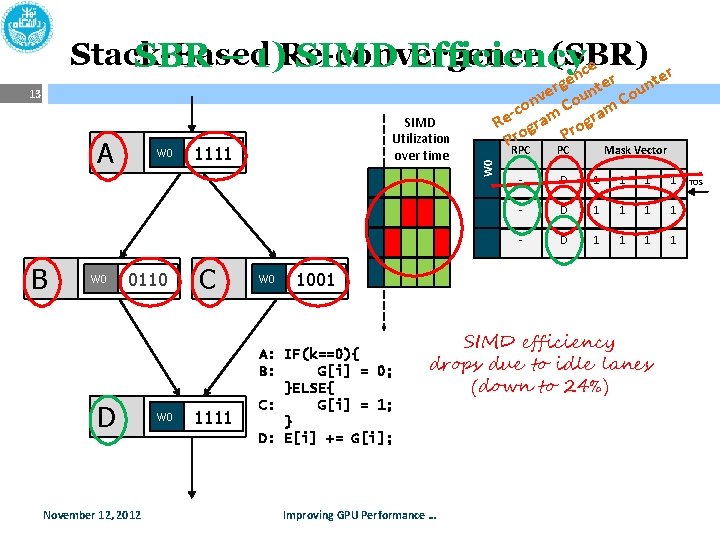

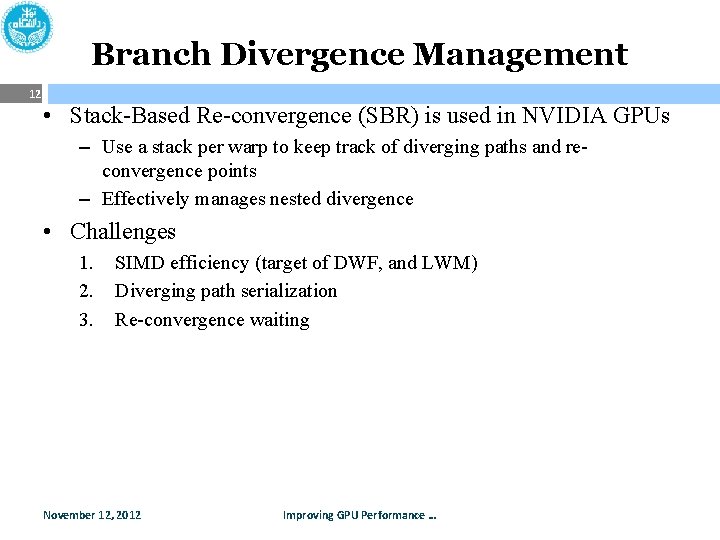

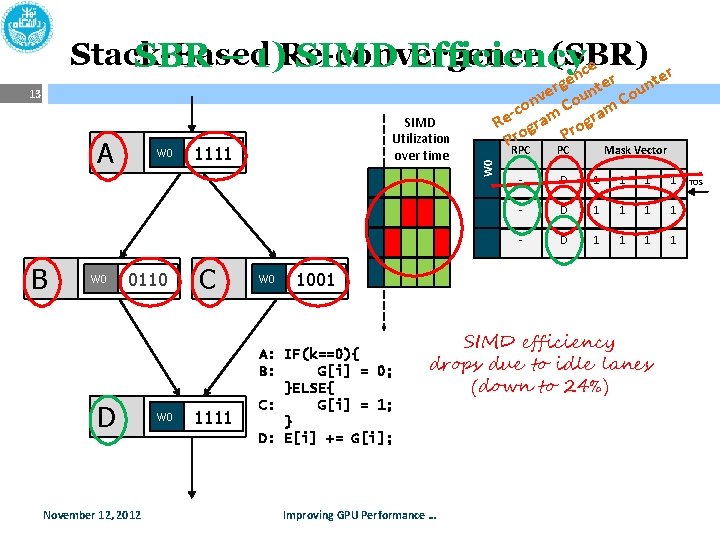

Branch Divergence Management
• Stack-Based Re-convergence (SBR) is used in NVIDIA GPUs
  – Uses a stack per warp to keep track of diverging paths and re-convergence points
  – Effectively manages nested divergence
• Challenges
  1. SIMD efficiency (targeted by DWF and LWM)
  2. Diverging path serialization
  3. Re-convergence waiting

SBR – 1) SIMD Efficiency
```
A: if (k == 0) {
B:     G[i] = 0;
   } else {
C:     G[i] = 1;
   }
D: E[i] += G[i];
```
Figure: SIMD utilization over time for warp W0 (active masks 1111 at A, 0110 at B, 1001 at C, 1111 at D), next to the warp's stack of re-convergence PC (RPC), PC, and mask vector; TOS marks the top of stack.
• SIMD efficiency drops due to idle lanes (down to 24%)
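The per-warp stack can be sketched for the A/B/C/D kernel as follows. This is a simplified Python model, not NVIDIA's implementation: each basic block counts as one issue slot, D is hard-coded as the re-convergence point of the branch at A, and `run_sbr` is a hypothetical name. The `k` values are chosen so the masks match the W0 example (0110 at B, 1001 at C).

```python
def run_sbr(k_values):
    """Issue trace of one warp running the kernel above under a
    stack-based re-convergence (SBR) model.

    Each basic block counts as one issue slot; D is the re-convergence
    point (immediate post-dominator) of the branch at A.
    Returns a list of (block, active_mask) pairs in issue order.
    """
    full = tuple(True for _ in k_values)
    # Stack entries are [pc, reconvergence_pc, mask]; stack[-1] is the TOS.
    stack = [["A", None, full]]
    trace = []
    while stack:
        pc, rpc, mask = stack[-1]
        if pc is None or pc == rpc:
            stack.pop()  # path finished or reached its re-convergence point
            continue
        trace.append((pc, mask))
        if pc == "A":
            taken = tuple(m and k == 0 for m, k in zip(mask, k_values))
            not_taken = tuple(m and k != 0 for m, k in zip(mask, k_values))
            if any(taken) and any(not_taken):
                stack[-1][0] = "D"                   # resume here after both paths
                stack.append(["C", "D", not_taken])  # else-path, runs second
                stack.append(["B", "D", taken])      # then-path, runs first
            else:
                stack[-1][0] = "B" if any(taken) else "C"  # uniform: no push
        elif pc in ("B", "C"):
            stack[-1][0] = "D"
        else:  # pc == "D", the last block of the kernel
            stack[-1][0] = None
    return trace


trace = run_sbr([1, 0, 0, 1])   # lanes 1 and 2 take the branch
for block, mask in trace:
    print(block, "".join("1" if m else "0" for m in mask))
# A 1111
# B 0110
# C 1001
# D 1111
active_lanes = sum(sum(mask) for _, mask in trace)
print(active_lanes / (len(trace) * len(trace[0][1])))  # 0.75
```

The 0.75 here is only this toy trace's lane utilization; the 24% figure on the slide is measured on real workloads.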

SBR – 2) Diverging Path Serialization
Figure: SIMD utilization over time for warp W0 with its stack (RPC, PC, mask vector): D/C/1001 and D/B/0110 above –/D/1111.
• Threads are inactivated due to divergence (up to 13% of threads)
• Targeted by DWF

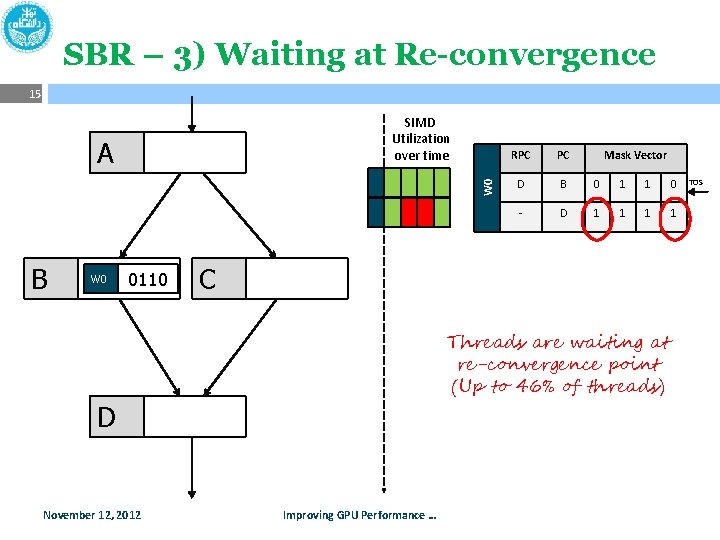

SBR – 3) Waiting at Re-convergence
Figure: SIMD utilization over time for warp W0 with its stack (RPC, PC, mask vector): D/B/0110 above –/D/1111, while the threads on path C wait at D.
• Threads are waiting at the re-convergence point (up to 46% of threads)

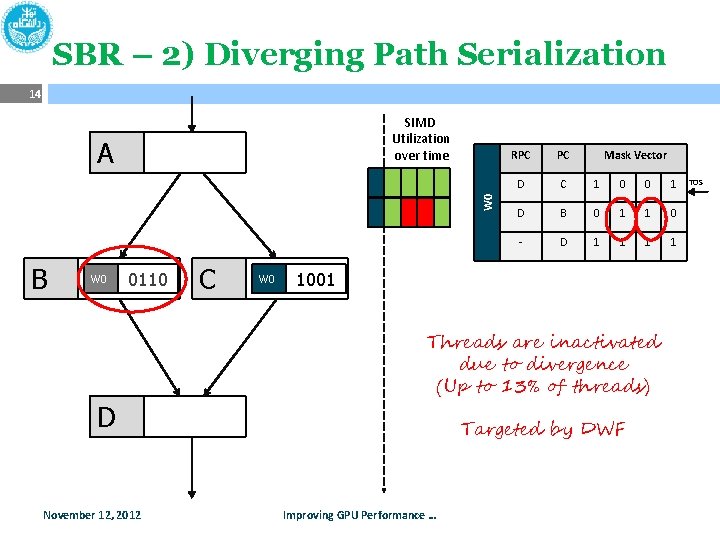

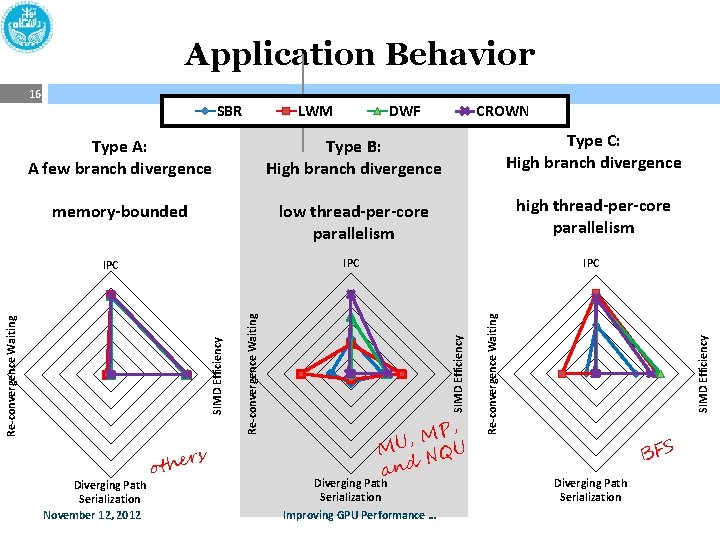

Application Behavior
• Type A: few branch divergences
• Type B: high branch divergence and high thread-per-core parallelism; SIMD efficiency is the main limiter, which DWF and LWM target
• Type C: high branch divergence and low thread-per-core parallelism (memory-bounded); diverging path serialization and re-convergence waiting also limit IPC
• CROWN targets all three factors
Figure: SIMD efficiency, diverging path serialization, re-convergence waiting, and IPC under SBR (y-axis 0 to 1.2), e.g. for BFS.

CROWN’s Design Goal
• Proposed to address the three SBR challenges:
  – SIMD efficiency: dynamic regrouping
  – Diverging path serialization: activate all threads
  – Re-convergence waiting: dynamic regrouping, scheduling at small-warp granularity (as wide as the SIMD width)
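Dynamic regrouping at SIMD-width granularity can be sketched as bucketing waiting threads by PC and filling one warp slot per lane. The Python below is a hypothetical illustration, not CROWN's hardware: it assumes each thread must stay in its home lane (`tid % simd_width`), as a multi-banked register file typically requires, and the thread IDs and PCs are made up.

```python
from collections import defaultdict


def regroup(thread_pcs, simd_width):
    """Form SIMD-width warps from threads waiting at the same PC.

    thread_pcs: dict mapping thread id -> next PC.
    Lane-aware (an assumption): thread t may only run in lane t % simd_width.
    Returns a list of (pc, lanes) where lanes[i] is a thread id or None.
    """
    # pc -> lane -> thread ids waiting for that lane
    buckets = defaultdict(lambda: defaultdict(list))
    for tid in sorted(thread_pcs):
        buckets[thread_pcs[tid]][tid % simd_width].append(tid)
    warps = []
    for pc in sorted(buckets):
        lanes = buckets[pc]
        # Each new warp takes at most one waiting thread from every lane.
        while any(lanes[lane] for lane in range(simd_width)):
            warp = [lanes[lane].pop(0) if lanes[lane] else None
                    for lane in range(simd_width)]
            warps.append((pc, warp))
    return warps


# Two 4-thread warps diverged: threads 1, 2, 4, 7 go to B; 0, 3, 5, 6 go to C.
after_branch = {0: "C", 1: "B", 2: "B", 3: "C", 4: "B", 5: "C", 6: "C", 7: "B"}
for pc, warp in regroup(after_branch, simd_width=4):
    print(pc, warp)
# B [4, 1, 2, 7]
# C [0, 5, 6, 3]
```

Here two full warps replace the four half-empty ones that per-warp serialization would issue. When threads share a home lane, the sketch cannot compact them, which is the usual limit of lane-aware regrouping.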

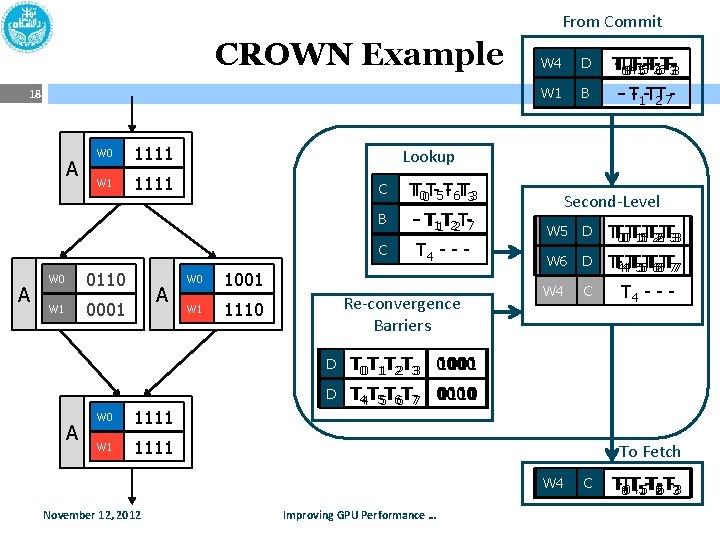

CROWN Example
Figure: step-by-step operation of CROWN on warps W0–W6 (threads T0–T7) across blocks A–D, showing the ready and waiting lookups, the second-level warps, the re-convergence barriers, and the commit and fetch stages.

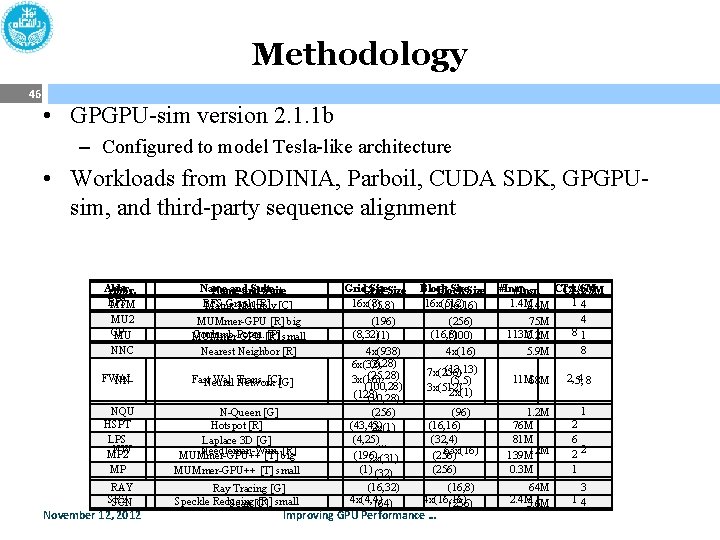

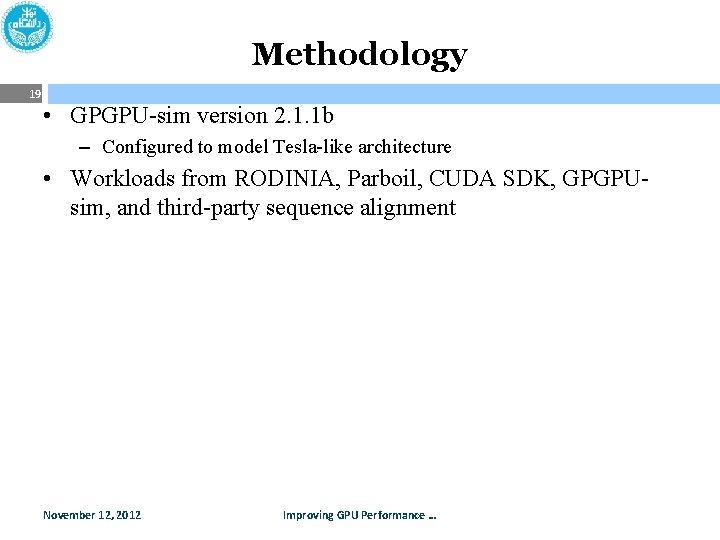

Methodology
• GPGPU-sim version 2.1.1b
  – Configured to model a Tesla-like architecture
• Workloads from RODINIA, Parboil, CUDA SDK, GPGPU-sim, and third-party sequence alignment

Experimental Results
• Three challenges:
  – SIMD efficiency
  – Diverging path serialization
  – Re-convergence waiting
• Throughput in terms of instructions per clock (IPC)

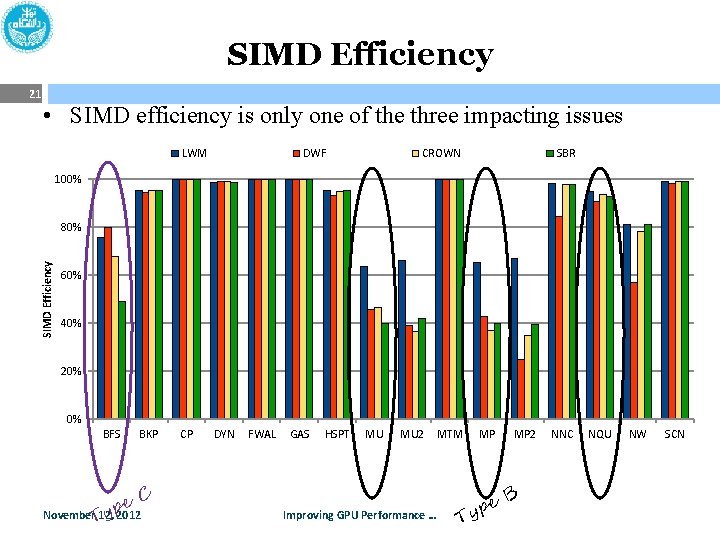

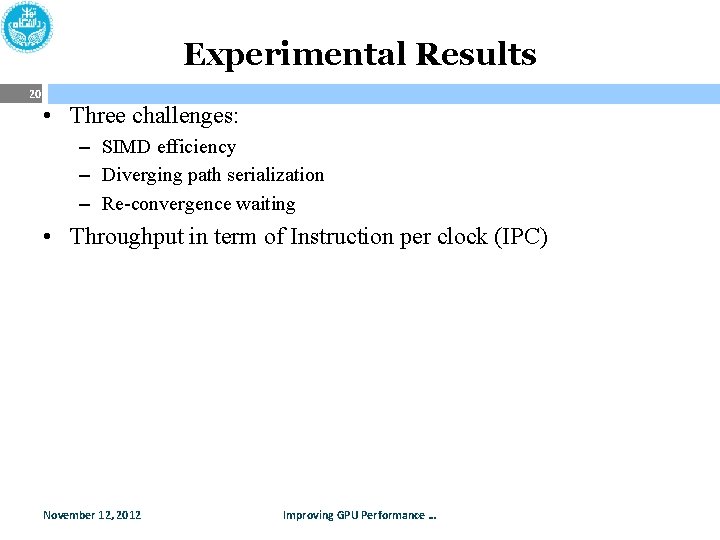

SIMD Efficiency
• SIMD efficiency is only one of the three issues that affect performance
Figure: SIMD efficiency (0–100%) of LWM, DWF, CROWN, and SBR per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN), with benchmarks grouped as Type C and Type B.

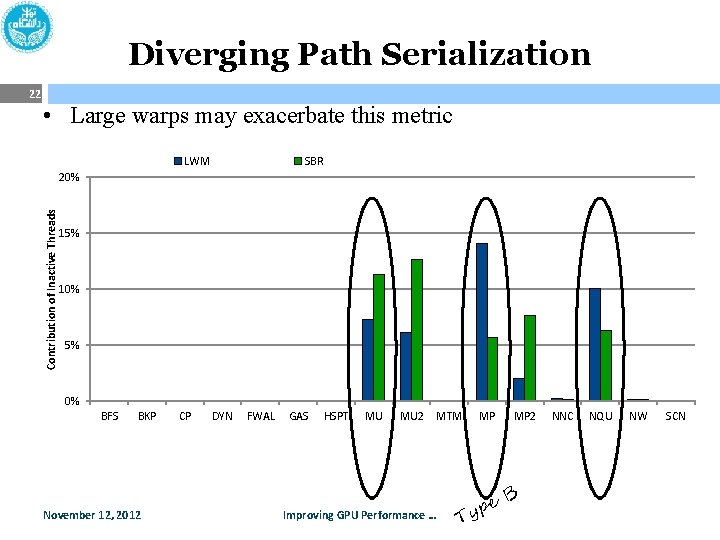

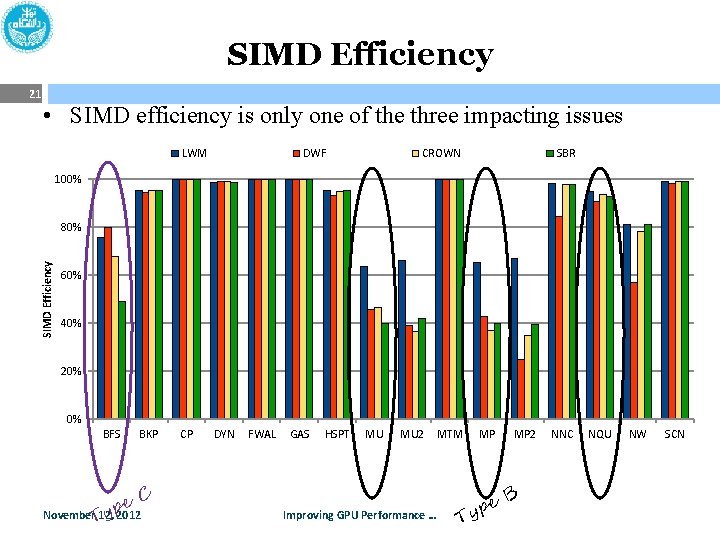

Diverging Path Serialization
• Large warps may exacerbate this metric
Figure: contribution of inactive threads (0–20%) for LWM and SBR per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN).

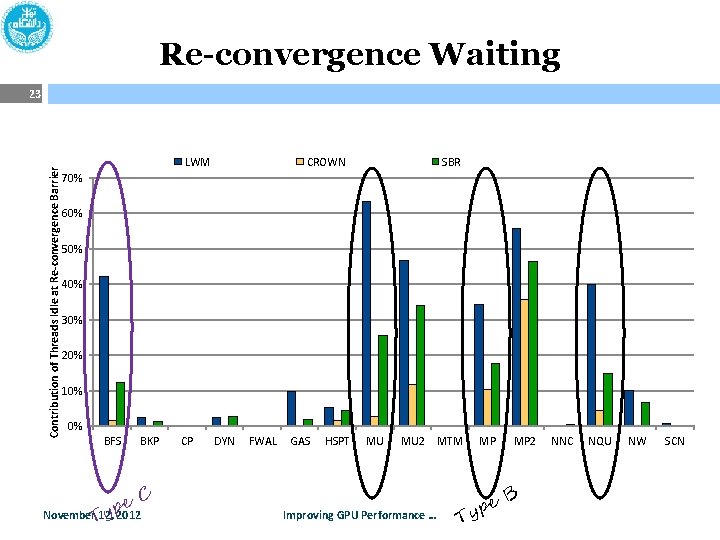

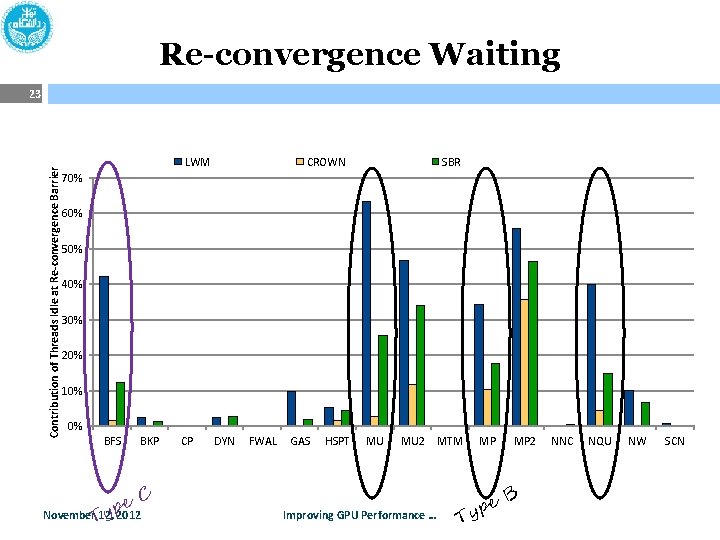

Re-convergence Waiting
Figure: contribution of threads idle at the re-convergence barrier (0–70%) for LWM, CROWN, and SBR per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN), with benchmarks grouped as Type C and Type B.

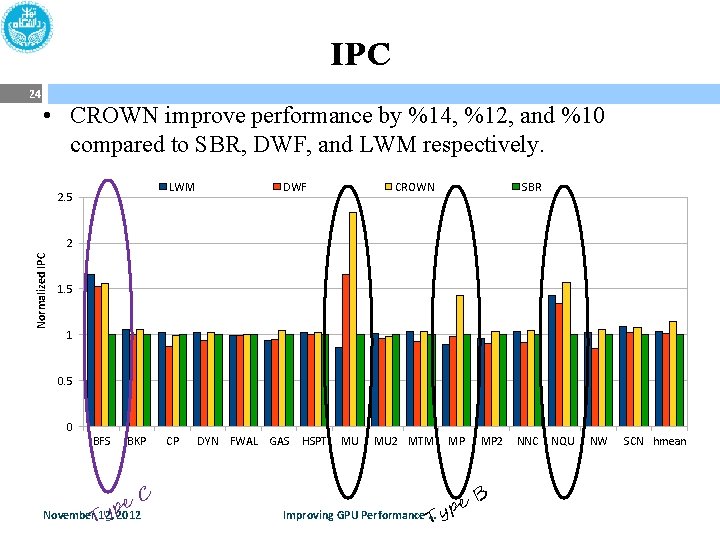

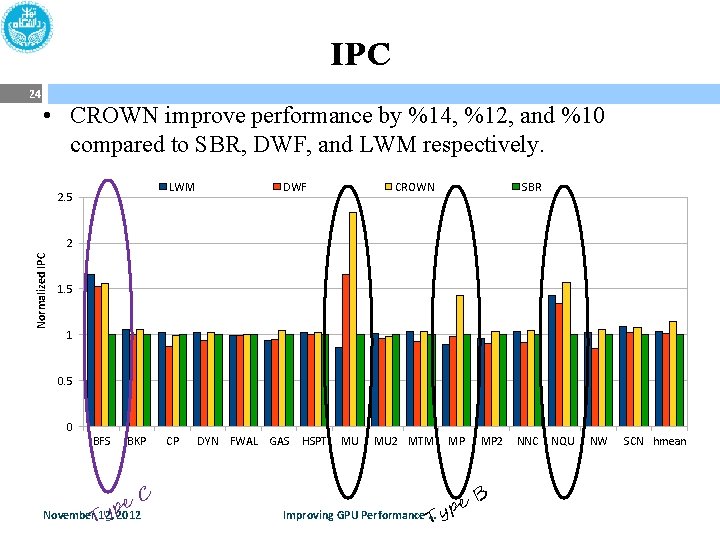

IPC
• CROWN improves performance by 14%, 12%, and 10% compared to SBR, DWF, and LWM, respectively
Figure: normalized IPC (0–2.5) of LWM, DWF, CROWN, and SBR per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN) and harmonic mean (hmean), with benchmarks grouped as Type C and Type B.


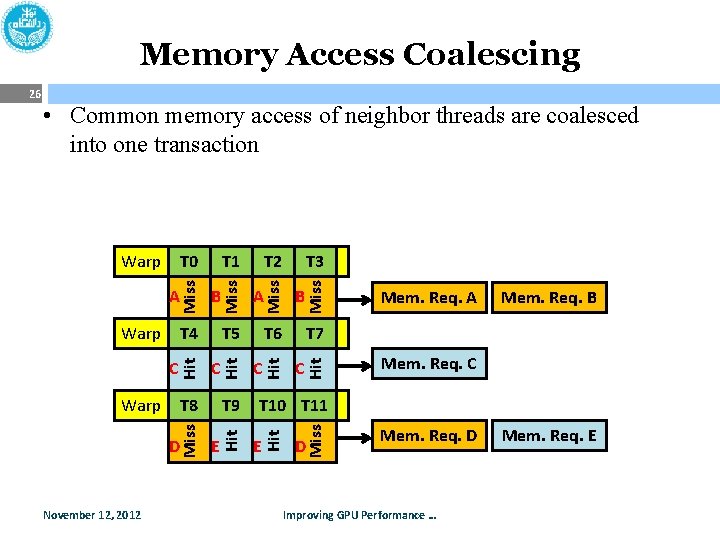

Memory Access Coalescing
• Memory accesses of neighboring threads to the same block are coalesced into one transaction
Figure: warps A and B (threads T0–T11) with per-thread L1 hits and misses; misses to the same block share one of the memory requests A–E.

Coalescing Width
• The range of threads in a warp that are considered together for memory access coalescing:
  – Over a sub-warp
  – Over a half-warp
  – Over the entire warp
• When the coalescing width is the entire warp, the optimal warp size depends on the workload
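To make coalescing width concrete, the Python sketch below counts the memory transactions for one warp-wide load. Accesses that fall in the same block within a group of `coalescing_width` consecutive threads share one transaction. The 64-byte block size and the function name are assumptions for illustration.

```python
def count_transactions(addresses, coalescing_width, block_size=64):
    """Memory transactions needed for one warp-level load.

    addresses: byte address per thread (None for inactive threads), in thread order.
    coalescing_width: number of consecutive threads whose accesses may merge
        (e.g. the SIMD width, a half-warp, or the entire warp).
    block_size: hypothetical transaction granularity in bytes.
    """
    total = 0
    for start in range(0, len(addresses), coalescing_width):
        group = addresses[start:start + coalescing_width]
        # Accesses to the same block within one group share a transaction.
        total += len({a // block_size for a in group if a is not None})
    return total


addrs = [4 * t for t in range(32)]   # 32 threads reading consecutive 4-byte words
for width in (8, 16, 32):
    print(width, count_transactions(addrs, width))
# 8 4
# 16 2
# 32 2
```

With an 8-thread width, two groups touch each 64-byte block, so every block is fetched twice. Widening the coalescing range removes that duplication, which is the gain large warps offer.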

Warp Size
• Warp size is the number of threads in a warp
• Why small warps? (not smaller than the SIMD width)
  – Less branch/memory divergence
  – Less synchronization overhead at every instruction
• Why large warps?
  – Greater opportunity for memory access coalescing
• We study the impact of warp size on performance

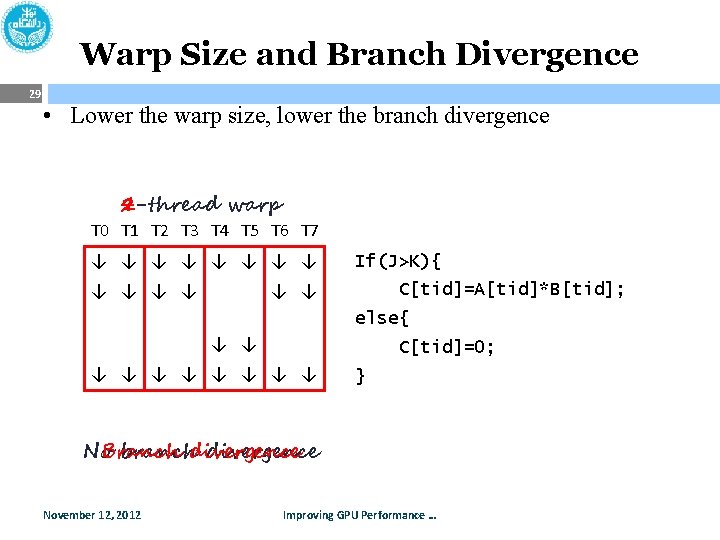

Warp Size and Branch Divergence
• The smaller the warp, the lower the branch divergence
```cuda
if (J > K) {
    C[tid] = A[tid] * B[tid];
} else {
    C[tid] = 0;
}
```
Figure: threads T0–T7 evaluate the branch; grouped as 2-thread warps, no warp diverges, while grouped as 4-thread warps, the warps suffer branch divergence.
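The claim can be checked by counting divergent warps when one fixed set of branch outcomes is grouped at different warp sizes. The outcomes below are hypothetical and the Python helper is illustrative only.

```python
def divergent_warps(outcomes, warp_size):
    """Count warps whose threads disagree on a branch outcome.

    outcomes: per-thread branch results (True = taken), in thread-id order.
    """
    count = 0
    for start in range(0, len(outcomes), warp_size):
        warp = outcomes[start:start + warp_size]
        if any(warp) and not all(warp):
            count += 1
    return count


# Hypothetical outcomes of `if (J > K)` for T0..T7.
outcomes = [True, True, False, False, True, True, False, False]
for size in (2, 4, 8):
    print(size, divergent_warps(outcomes, size))
# 2 0
# 4 2
# 8 1
```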

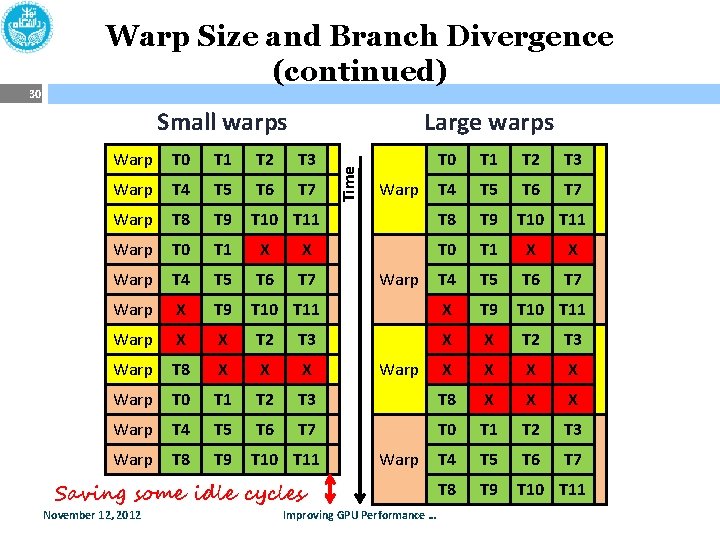

Warp Size and Branch Divergence (continued)
Figure: execution over time of threads T0–T11 grouped as small warps (T0–T3, T4–T7, T8–T11) versus large warps under branch divergence, with idle lanes marked X; annotated "Saving some idle cycles".

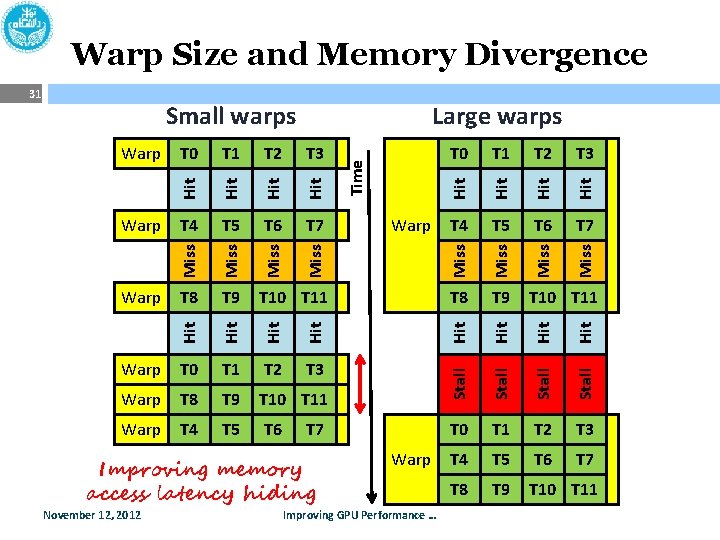

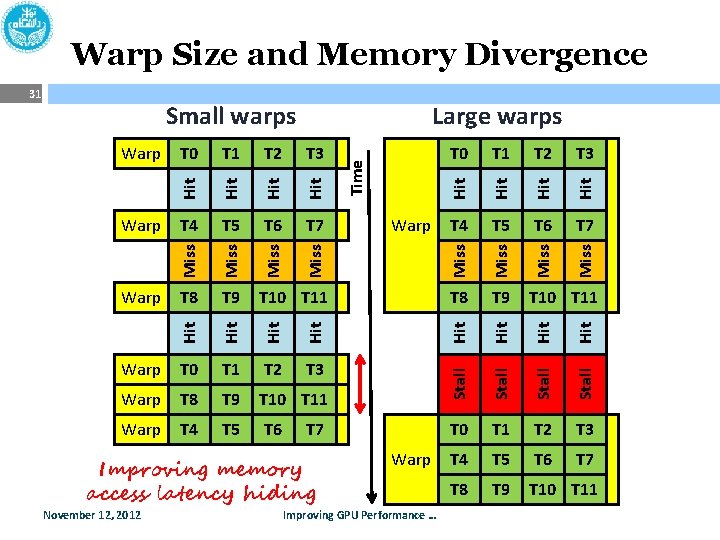

Warp Size and Memory Divergence
Figure: execution over time of threads T0–T11 with per-thread L1 hits and misses, grouped as small warps versus large warps; with small warps, a warp whose threads all hit need not stall behind other threads' misses, annotated "Improving memory access latency hiding".

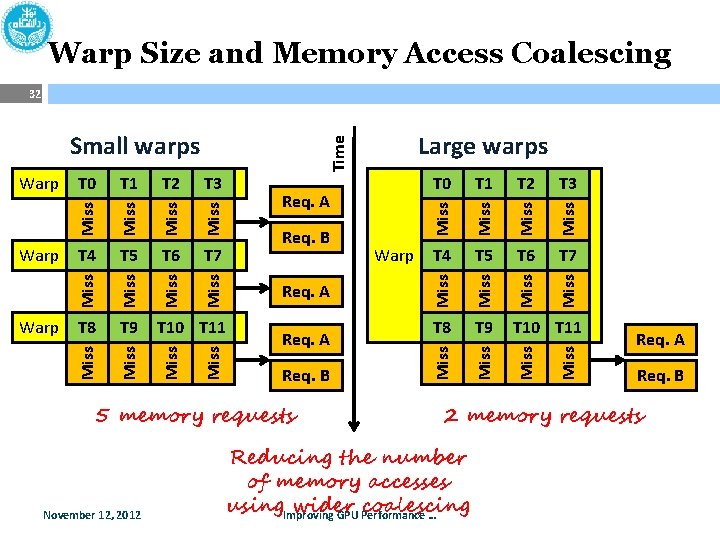

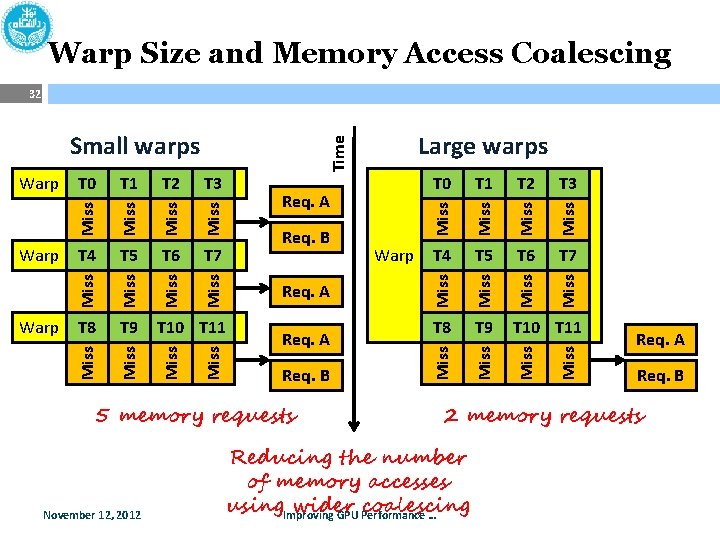

Warp Size and Memory Access Coalescing
Figure: threads T0–T11 all miss; with small warps the misses need 5 memory requests, while with large warps they need only 2 (requests A and B).
• Wider coalescing reduces the number of memory accesses

Motivation
• Study of warp size
• Coalescing over the entire warp
Figure: for 8, 16, 32, and 64 threads per warp, (a) coalescing rate, (b) contribution of idle cycles, and (c) normalized IPC for BKP, CP, HSPT, and MU.

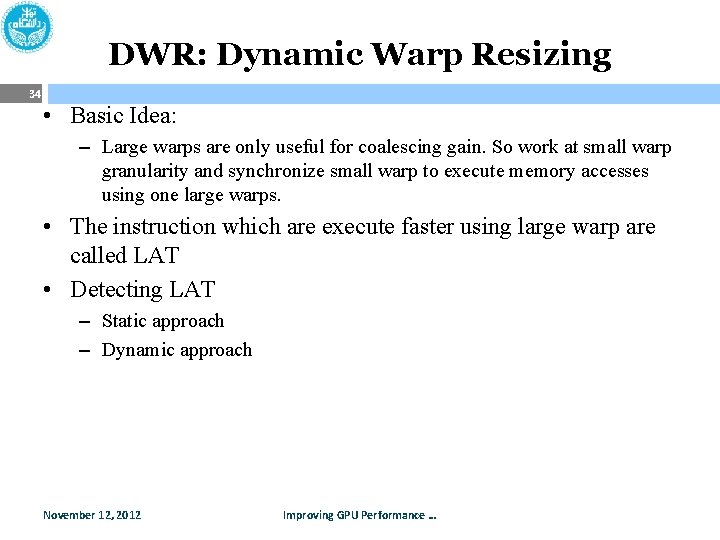

DWR: Dynamic Warp Resizing
• Basic idea:
  – Large warps are useful only for their coalescing gain, so work at small-warp granularity and synchronize the small warps to execute memory accesses as one large warp
• The instructions that execute faster using a large warp are called LATs
• Detecting LATs
  – Static approach
  – Dynamic approach

Static Approach for Detecting LATs
• Modify the ISA and add a new synchronization instruction (bar.synch_partner):
```
cvt.u64.s32 %rd1, %r3;
ld.param.u64 %rd2, [__parm1];
add.u64 %rd3, %rd2, %rd1;
bar.synch_partner 0;
ld.global.s8 %r5, [%rd3+0];
mov.u32 %r6, 0;
setp.eq.s32 %p2, %r5, %r6;
@%p2 bra $Lt_0_5122;
mov.s16 %rh2, 0;
st.global.s8 [%rd3+0], %rh2;
bar.synch_partner 0;
st.global.s8 [%rd3+0], %rh2;
```
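The listing places `bar.synch_partner` ahead of global memory accesses. Below is a hypothetical Python pass that reproduces that placement on PTX text. Treating every `ld.global`/`st.global` as a candidate LAT is an assumption, not a description of the authors' compiler support.

```python
# Opcode prefixes treated as candidate LATs (an assumption for this sketch).
GLOBAL_MEMORY_OPS = ("ld.global", "st.global")


def insert_partner_sync(ptx_lines, barrier_id=0):
    """Hypothetical static pass: emit a partner-synchronization
    instruction immediately before every global load or store."""
    out = []
    for line in ptx_lines:
        instr = line.strip()
        if instr.startswith(GLOBAL_MEMORY_OPS):
            out.append(f"bar.synch_partner {barrier_id};")
        out.append(instr)
    return out


kernel = [
    "add.u64 %rd3, %rd2, %rd1;",
    "ld.global.s8 %r5, [%rd3+0];",
    "mov.u32 %r6, 0;",
]
print("\n".join(insert_partner_sync(kernel)))
# add.u64 %rd3, %rd2, %rd1;
# bar.synch_partner 0;
# ld.global.s8 %r5, [%rd3+0];
# mov.u32 %r6, 0;
```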

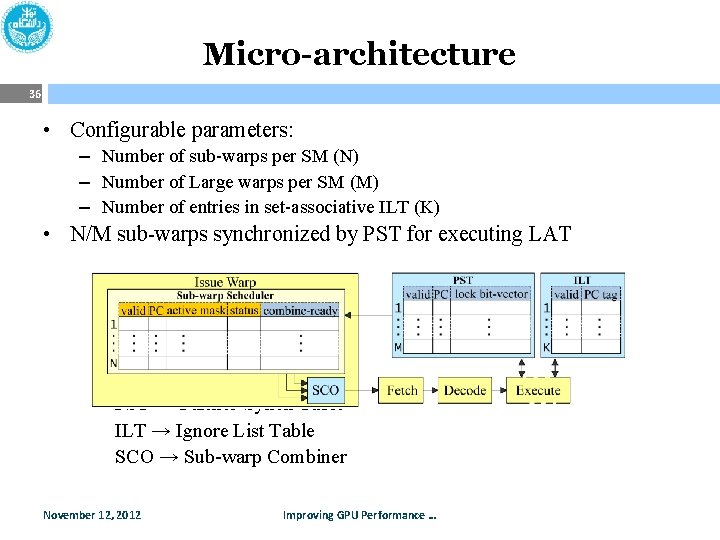

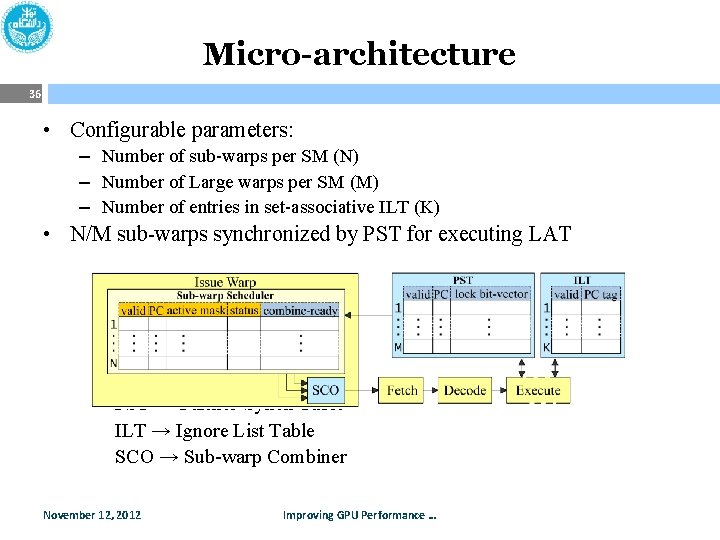

Micro-architecture
• Configurable parameters:
  – Number of sub-warps per SM (N)
  – Number of large warps per SM (M)
  – Number of entries in the set-associative ILT (K)
• N/M sub-warps are synchronized by the PST to execute a LAT
• PST: Partner-Synch Table; ILT: Ignore List Table; SCO: Sub-warp Combiner
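The partner synchronization performed by the PST can be modeled as a per-large-warp arrival set. A sub-warp that reaches a LAT waits until all N/M partners arrive, and the group then issues as one large warp. The `PartnerSyncTable` class below is a hypothetical Python sketch; it omits the ILT bypass and the sub-warp combiner.

```python
class PartnerSyncTable:
    """Hypothetical model of a partner-synch table (PST).

    n_subwarps sub-warps per SM are partitioned into m_large large warps,
    so each large warp has n_subwarps // m_large partner sub-warps.
    """

    def __init__(self, n_subwarps, m_large):
        assert n_subwarps % m_large == 0
        self.partners = n_subwarps // m_large
        self.arrived = {}  # (large_warp_id, pc) -> set of arrived sub-warp ids

    def large_warp_of(self, subwarp_id):
        return subwarp_id // self.partners

    def arrive(self, subwarp_id, pc):
        """Record that a sub-warp reached a LAT at `pc`.

        Returns the sorted partner list once all partners have arrived
        (the access can then issue as one large warp), else None (stall).
        """
        key = (self.large_warp_of(subwarp_id), pc)
        waiting = self.arrived.setdefault(key, set())
        waiting.add(subwarp_id)
        if len(waiting) == self.partners:
            del self.arrived[key]
            return sorted(waiting)
        return None


pst = PartnerSyncTable(n_subwarps=8, m_large=2)  # 4 partners per large warp
for sw in range(4):
    print(sw, pst.arrive(sw, pc=0x40))
# 0 None
# 1 None
# 2 None
# 3 [0, 1, 2, 3]
```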

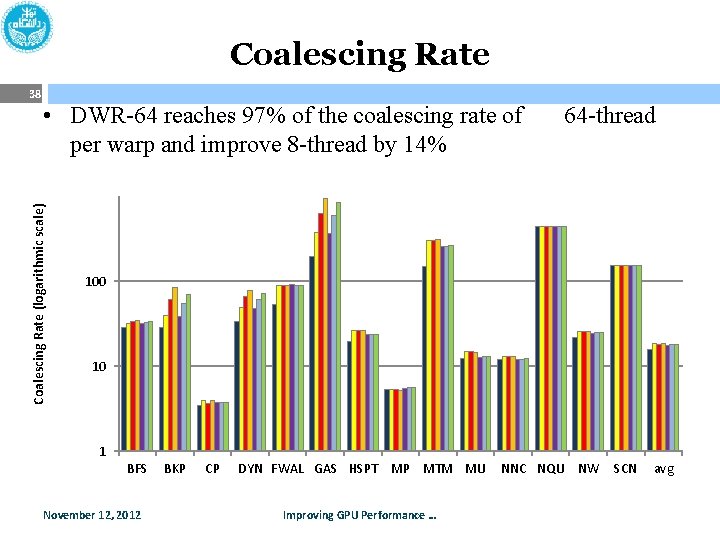

Experimental Results
• Methodology
  – DWR-X, where X is the largest warp size
  – We assume a 32-entry ILT
• Results
  – Coalescing rate
  – Idle cycles
  – Performance
  – Sensitivity analysis: SIMD width, ILT size

Coalescing Rate
• DWR-64 reaches 97% of the coalescing rate of 64 threads per warp and improves on 8 threads per warp by 14%
Figure: coalescing rate (logarithmic scale, 1–100) per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MP, MTM, MU, NNC, NQU, NW, SCN) and average.

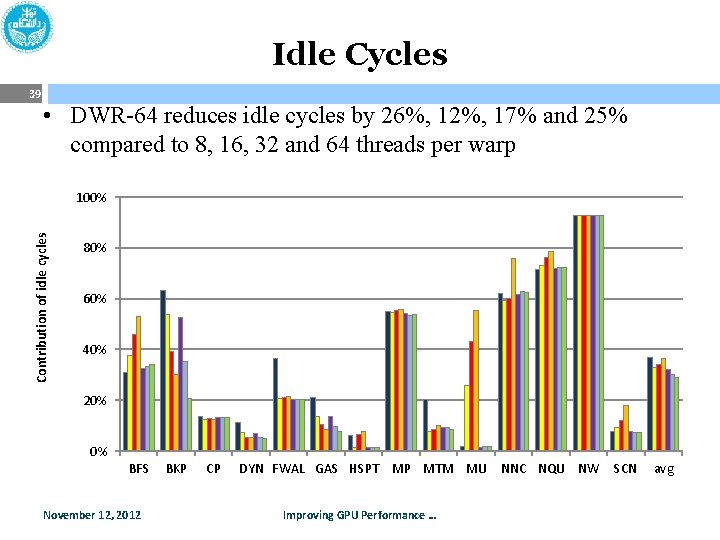

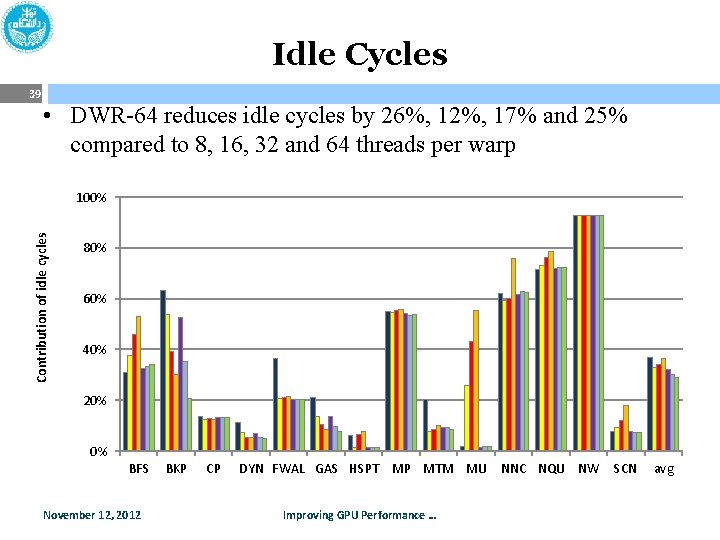

Idle Cycles
• DWR-64 reduces idle cycles by 26%, 12%, 17%, and 25% compared to 8, 16, 32, and 64 threads per warp, respectively
Figure: contribution of idle cycles (0–100%) per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MP, MTM, MU, NNC, NQU, NW, SCN) and average.

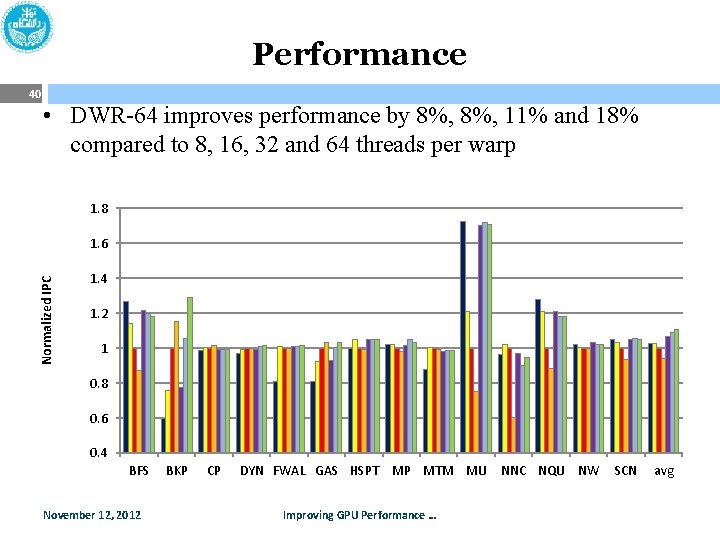

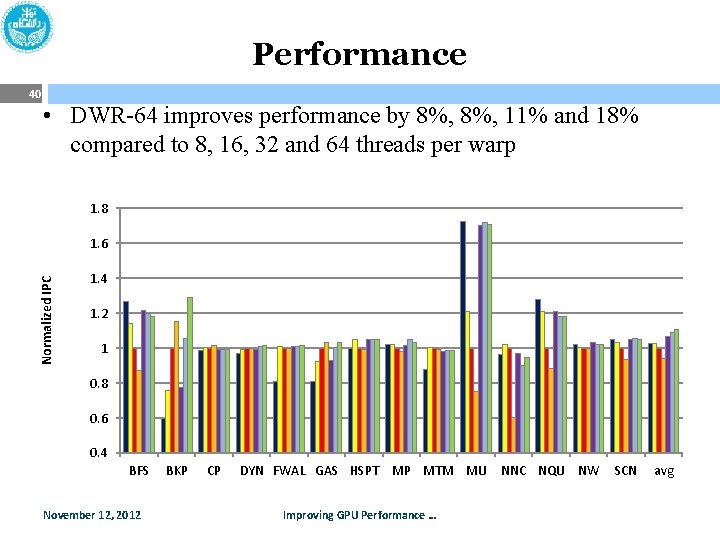

Performance
• DWR-64 improves performance by 8%, 11%, and 18% compared to 8, 16, 32, and 64 threads per warp
Figure: normalized IPC (0.4–1.8) per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MP, MTM, MU, NNC, NQU, NW, SCN) and average.

Conclusion & Future Work
• The proposed mechanisms are based on scheduling short warps
  – When the coalescing width is the SIMD width:
    • CROWN can improve performance by 14% compared to the conventional control-flow mechanism, at the cost of 4.2% area overhead
  – When the coalescing width is the entire warp:
    • DWR can improve the performance of the baseline micro-architecture by 8%, at the cost of less than 1% area overhead
• The energy efficiency of the proposed mechanisms should be evaluated
  – Full simulation
  – Exploiting locality

![References slide](https://slidetodoc.com/presentation_image_h/0d29c1a806033ece9b5a05f1378423e0/image-42.jpg)

References
[1] A. Bakhoda, G. L. Yuan, W. W. L. Fung, H. Wong, T. M. Aamodt. "Analyzing CUDA workloads using a detailed GPU simulator." In Proceedings of the IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), 2009, pp. 163-174.
[2] W. W. L. Fung, I. Sham, G. Yuan, T. M. Aamodt. "Dynamic Warp Formation and Scheduling for Efficient GPU Control Flow." In Proceedings of the 40th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), 2007, pp. 407-420.
[3] W. W. L. Fung, T. M. Aamodt. "Thread Block Compaction for Efficient SIMT Control Flow." In Proceedings of the 17th IEEE International Symposium on High-Performance Computer Architecture (HPCA-17), 2011, pp. 25-36.
[4] V. Narasiman, M. Shebanow, C. J. Lee, R. Miftakhutdinov, O. Mutlu, Y. N. Patt. "Improving GPU performance via large warps and two-level warp scheduling." In Proceedings of the 44th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), 2011, pp. 308-317.
[5] M. Rhu, M. Erez. "CAPRI: prediction of compaction-adequacy for handling control-divergence in GPGPU architectures." In Proceedings of the 39th International Symposium on Computer Architecture (ISCA), 2012, pp. 61-71.
[6] N. Brunie, S. Collange, G. Diamos. "Simultaneous branch and warp interweaving for sustained GPU performance." In Proceedings of the 39th International Symposium on Computer Architecture (ISCA), 2012, pp. 49-60.

![Published works slide](https://slidetodoc.com/presentation_image_h/0d29c1a806033ece9b5a05f1378423e0/image-43.jpg)

Published
[1] A. Lashgar, A. Baniasadi. "Performance in GPU Architectures: Potentials and Distances." 9th Annual Workshop on Duplicating, Deconstructing, and Debunking (WDDD), in conjunction with ISCA 2011.
[2] A. Lashgar, A. Baniasadi, A. Khonsari. "Dynamic Warp Resizing: Analysis and Benefits in High-Performance SIMT." In Proceedings of the 30th IEEE International Conference on Computer Design (ICCD), Poster Session, 2012.

Thank You! Any Questions?

Backup Slides

Methodology
• GPGPU-sim version 2.1.1b
  – Configured to model a Tesla-like architecture
• Workloads from RODINIA [R], Parboil [P], CUDA SDK [C], GPGPU-sim [G], and third-party sequence alignment [T]
Benchmarks (abbreviation: name [suite]):
• BFS: BFS Graph [R]
• MTM: Matrix Multiply [C]
• BKP: Back Propagation [R]
• MU2: MUMmer-GPU, big [R]
• CP: Coulomb Potential [P]
• MU: MUMmer-GPU, small [R]
• DYN: Dyn_Proc [R]
• NNC: Nearest Neighbor [R]
• FWAL: Fast Walsh Transform [C]
• NN: Neural Network [G]
• GAS: Gaussian Elimination [R]
• NQU: N-Queen [G]
• HSPT: Hotspot [R]
• LPS: Laplace 3D [G]
• NW: Needleman-Wunsch [R]
• MP2: MUMmer-GPU++, big [T]
• MP: MUMmer-GPU++, small [T]
• SR1: Speckle Reducing, big [R]
• RAY: Ray Tracing [G]
• SR2: Speckle Reducing, small [R]
• SCN: Scan [C]
The table also gives each benchmark's grid size, block size, instruction count (#Insn), and CTAs per SM.

CROWN Backup

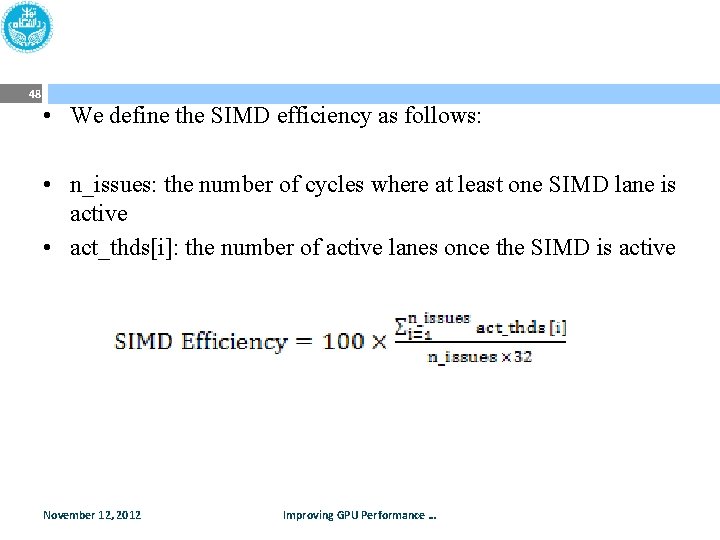

• We define SIMD efficiency as follows (formula in the slide image):
  – n_issues: the number of cycles in which at least one SIMD lane is active
  – act_thds[i]: the number of active lanes in the i-th such cycle
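The formula itself was in the slide image. A natural reading of the two definitions, assumed here, is efficiency = (act_thds[1] + ... + act_thds[n_issues]) / (n_issues × SIMD width). A minimal Python version of that assumed formula:

```python
def simd_efficiency(act_thds, simd_width):
    """Average fraction of SIMD lanes doing useful work per issue cycle.

    act_thds: active-lane count for each cycle in which the SIMD unit issued;
        cycles with no active lane are excluded, so len(act_thds) == n_issues.
    """
    n_issues = len(act_thds)
    if n_issues == 0:
        return 0.0
    return sum(act_thds) / (n_issues * simd_width)


# Four issue cycles on a 4-wide SIMD: full, half, half, full.
print(simd_efficiency([4, 2, 2, 4], simd_width=4))  # 0.75
```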

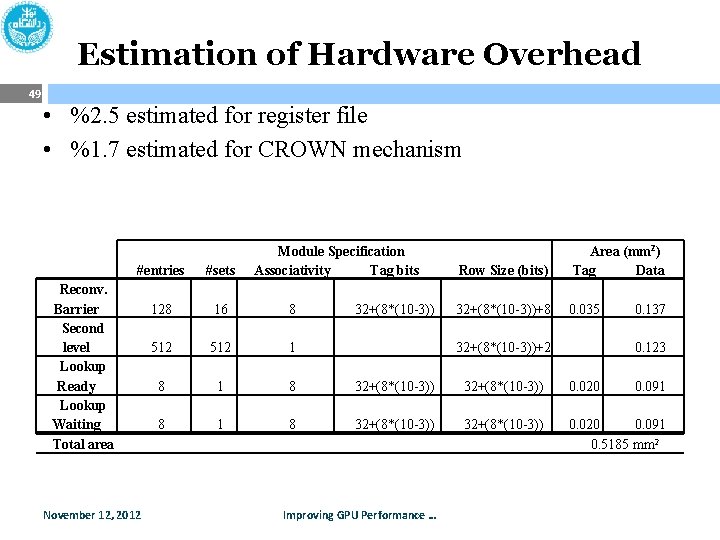

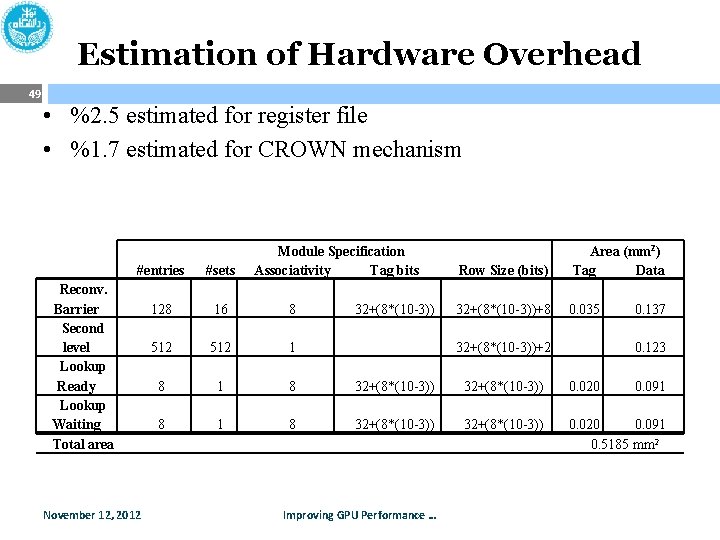

Estimation of Hardware Overhead
• 2.5% estimated for the register file
• 1.7% estimated for the CROWN mechanism
Table columns: Module | Specification (#entries, #sets, associativity) | Tag bits | Row size in bits (Tag, Data) | Area (mm²). Modules: Reconv. Barrier, Second level, Lookup Ready, Lookup Waiting. Total area: 0.5185 mm². Remaining cell values, in extracted order: 32+(8*(10-3))+8, 0.035, 128, 16, 8, 512, 1, 8, 32+(8*(10-3)), 0.020, 8, 1, 8, 32+(8*(10-3)), 0.020, 0.091, 32+(8*(10-3))+2, 0.137, 0.123, 0.091.

![Multi-banked register file slide](https://slidetodoc.com/presentation_image_h/0d29c1a806033ece9b5a05f1378423e0/image-50.jpg)

Multi-banked register file [Fung’MICRO 2007]

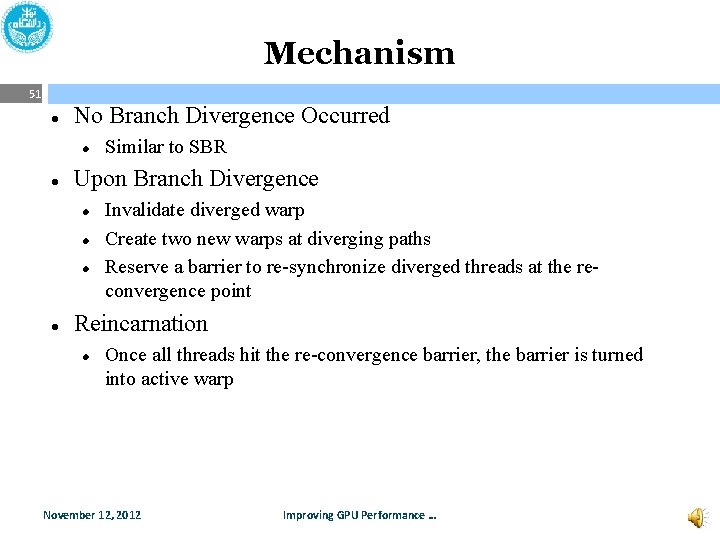

Mechanism
• No branch divergence occurred: similar to SBR
• Upon branch divergence:
  – Invalidate the diverged warp
  – Create two new warps at the diverging paths
  – Reserve a barrier to re-synchronize the diverged threads at the re-convergence point
• Reincarnation: once all threads hit the re-convergence barrier, the barrier is turned into an active warp
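The invalidate/create/reserve/reincarnate sequence above can be sketched in Python. `diverge` and `ReconvergenceBarrier` are hypothetical names, and the model tracks only thread IDs, not the lookup tables or the second-level warps.

```python
class ReconvergenceBarrier:
    """Hypothetical model of one re-convergence barrier entry.

    Reserved when a warp diverges: it remembers which threads must arrive
    at the re-convergence PC before the original warp is reincarnated.
    """

    def __init__(self, reconv_pc, expected_threads):
        self.reconv_pc = reconv_pc
        self.expected = frozenset(expected_threads)
        self.arrived = set()

    def arrive(self, threads):
        """Threads of a diverged warp reach the re-convergence point.

        Returns the reincarnated warp (pc, sorted thread ids) once every
        expected thread has arrived; otherwise None and the threads wait.
        """
        self.arrived |= set(threads) & self.expected
        if self.arrived == self.expected:
            return (self.reconv_pc, sorted(self.arrived))
        return None


def diverge(pc_taken, pc_not_taken, reconv_pc, taken, not_taken):
    """On divergence: drop the old warp, create one warp per path,
    and reserve a barrier for the union of their threads."""
    barrier = ReconvergenceBarrier(reconv_pc, set(taken) | set(not_taken))
    new_warps = [(pc_taken, sorted(taken)), (pc_not_taken, sorted(not_taken))]
    return new_warps, barrier


warps, barrier = diverge("B", "C", "D", taken=[1, 2], not_taken=[0, 3])
print(warps)                    # [('B', [1, 2]), ('C', [0, 3])]
print(barrier.arrive([1, 2]))   # None: threads 0 and 3 have not arrived
print(barrier.arrive([0, 3]))   # ('D', [0, 1, 2, 3]): reincarnated warp
```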

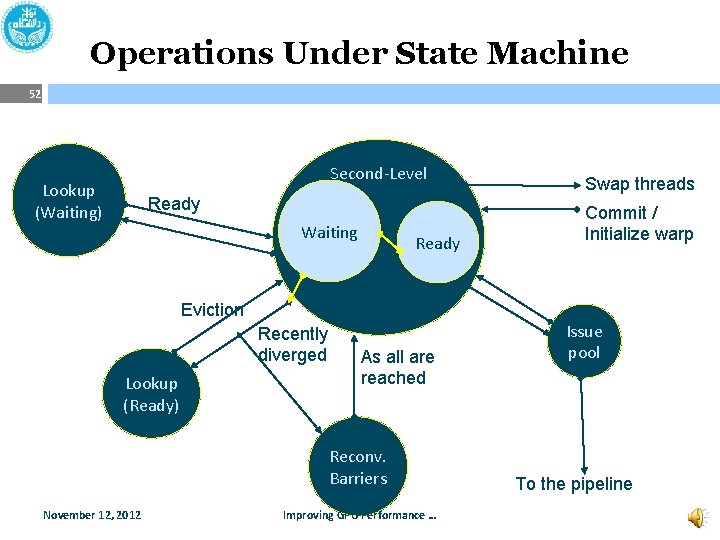

Operations Under the State Machine
Figure: state machine connecting the issue pool, the ready lookup, the waiting lookup, the second-level warps, and the re-convergence barriers; transitions are labeled recently diverged, eviction, swap threads, "as all are reached", commit / initialize warp, and to the pipeline.

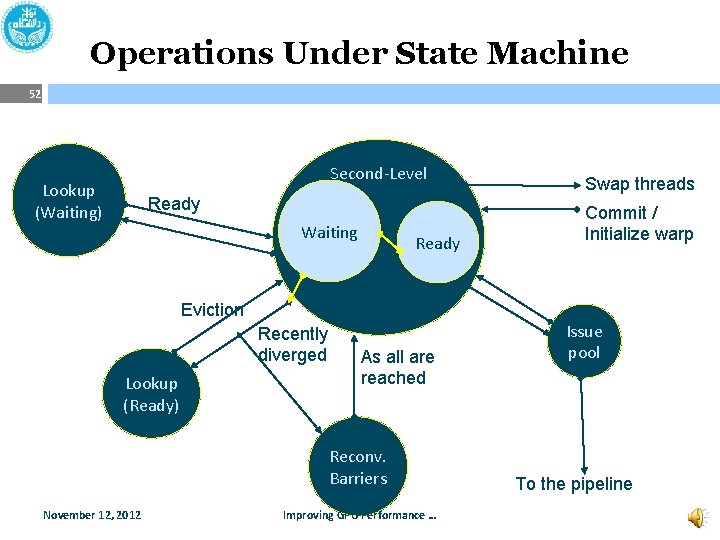

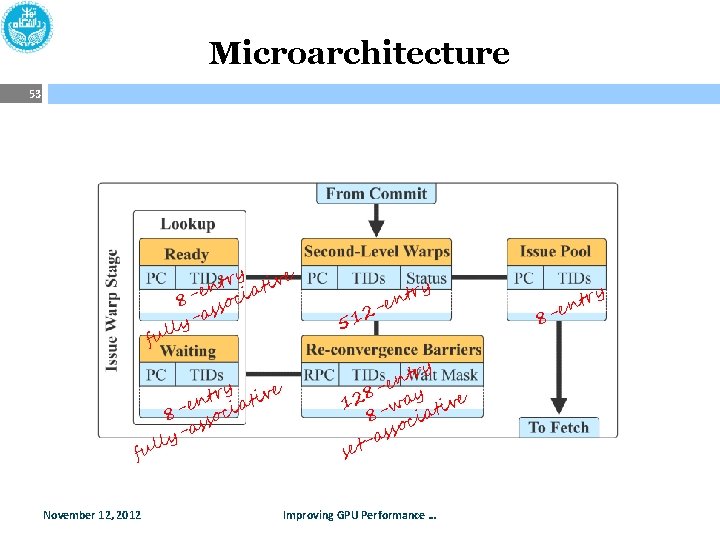

Microarchitecture
Figure: CROWN micro-architecture with structures labeled 8-entry fully-associative, 512-entry, 128-entry 8-way set-associative, and 8-entry.

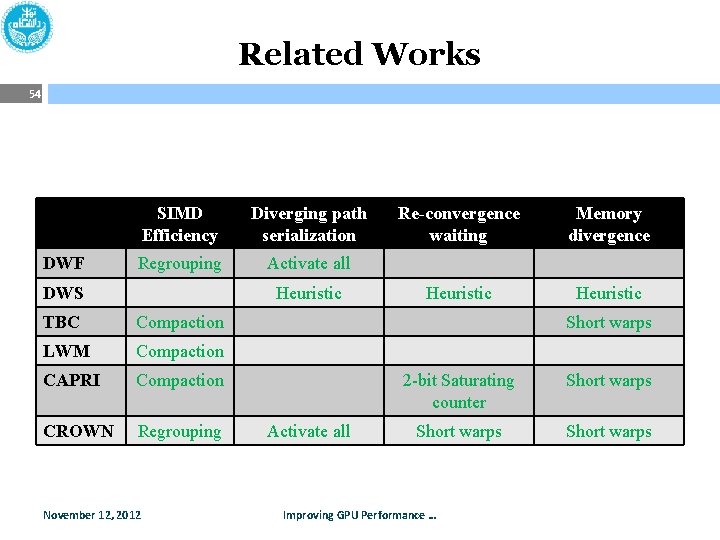

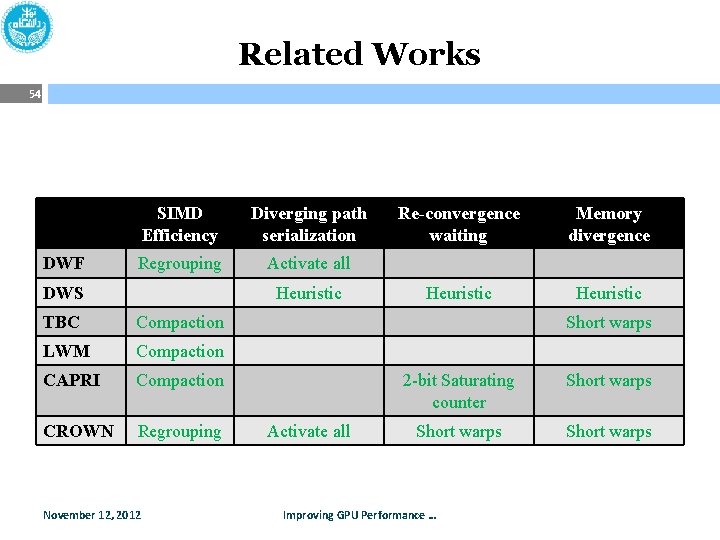

Related Works
Table: how DWF, DWS, TBC, LWM, CAPRI, and CROWN address SIMD efficiency, diverging path serialization, re-convergence waiting, and memory divergence. Entries include regrouping (DWF, CROWN), compaction (TBC, LWM, CAPRI), heuristics, activating all threads, short warps, and a 2-bit saturating counter.

PDOM
Figure: SIMD utilization over time for warps W0 and W1 under PDOM (stack-based re-convergence), each with its stack of RPC, PC, and mask vector (TOS marks the top of stack).
• Dynamic regrouping of diverged threads on the same path increases utilization

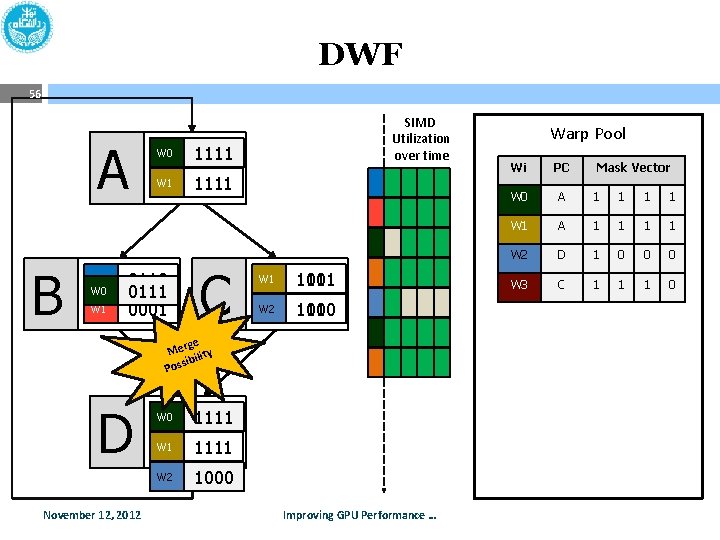

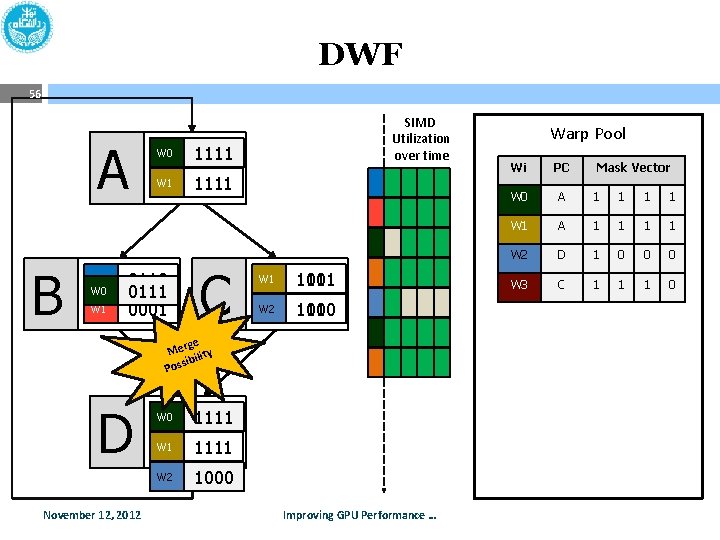

DWF
Figure: SIMD utilization over time under dynamic warp formation; diverged warps W0–W3 at the same PC are merged when their masks do not conflict ("merge possibility"), and the warp pool lists each warp's PC and mask vector.
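DWF's merge step can be sketched as lane-aware insertion into a PC-keyed warp pool: incoming threads join an existing warp at the same PC if none of their lanes is already occupied. This Python version is a simplified, hypothetical model of the idea in [2], not its hardware design.

```python
def dwf_insert(pool, pc, lanes):
    """Insert a group of threads into a DWF-style warp pool.

    pool:  dict pc -> list of warps; each warp is a list holding a thread id
           or None per lane (an assumed representation).
    lanes: list with a thread id or None per lane, all heading to `pc`.
    Merges into the first warp at the same PC whose occupied lanes do not
    overlap; otherwise starts a new warp. Returns the warp used.
    """
    for warp in pool.setdefault(pc, []):
        if all(a is None or b is None for a, b in zip(warp, lanes)):
            for i, tid in enumerate(lanes):
                if tid is not None:
                    warp[i] = tid
            return warp
    new_warp = list(lanes)
    pool[pc].append(new_warp)
    return new_warp


pool = {}
dwf_insert(pool, "C", [0, None, None, 3])     # from warp W0 (mask 1001)
dwf_insert(pool, "C", [None, 5, 6, None])     # from warp W1 (mask 0110): merges
dwf_insert(pool, "C", [8, None, None, None])  # lane 0 already taken: new warp
print(pool["C"])  # [[0, 5, 6, 3], [8, None, None, None]]
```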

![Large Warp Micro-architecture slide](https://slidetodoc.com/presentation_image_h/0d29c1a806033ece9b5a05f1378423e0/image-57.jpg)

Large Warp Micro-architecture [Narasiman’MICRO 2011]

Operation Example - SBR
Figure: status of concurrent threads over time under SBR for a control-flow graph with blocks 1–6 (states: ready, active, inactive masked, waiting at re-convergence, idle, terminated), together with the resulting SIMD issue sequence.

Operation Example - CROWN
Figure: status of concurrent threads over time under CROWN for the same control-flow graph with blocks 1–6 (states: ready, active, inactive masked, waiting at re-convergence, idle, terminated), together with the resulting SIMD issue sequence.

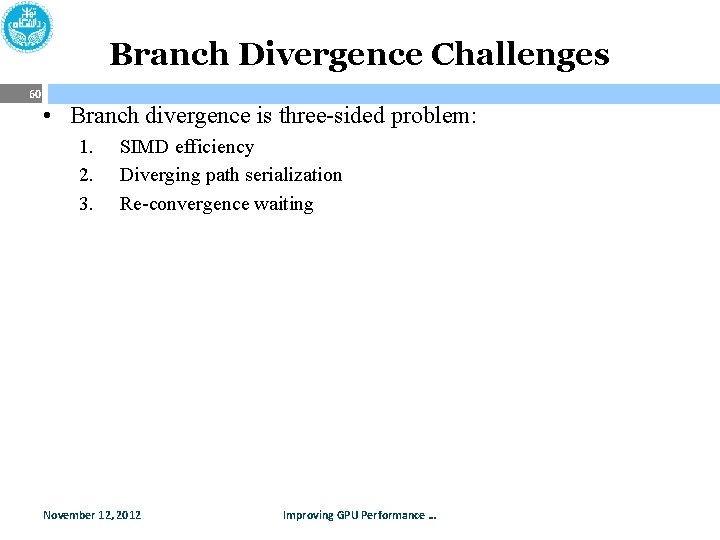

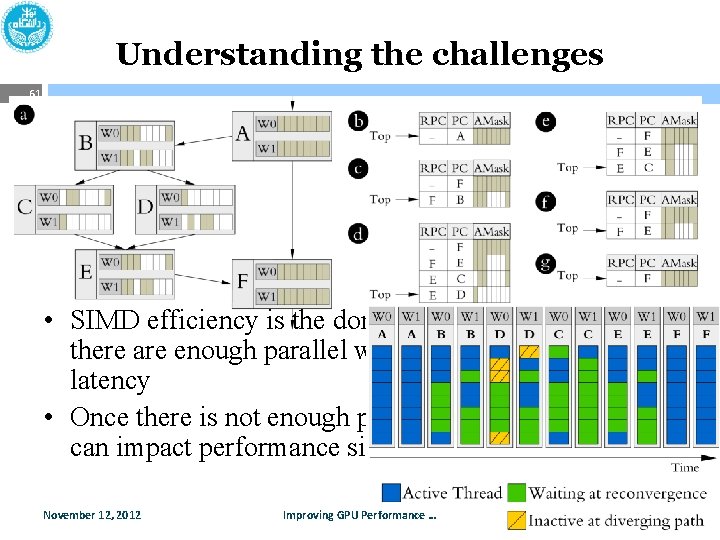

Branch Divergence Challenges
• Branch divergence is a three-sided problem:
  1. SIMD efficiency
  2. Diverging path serialization
  3. Re-convergence waiting

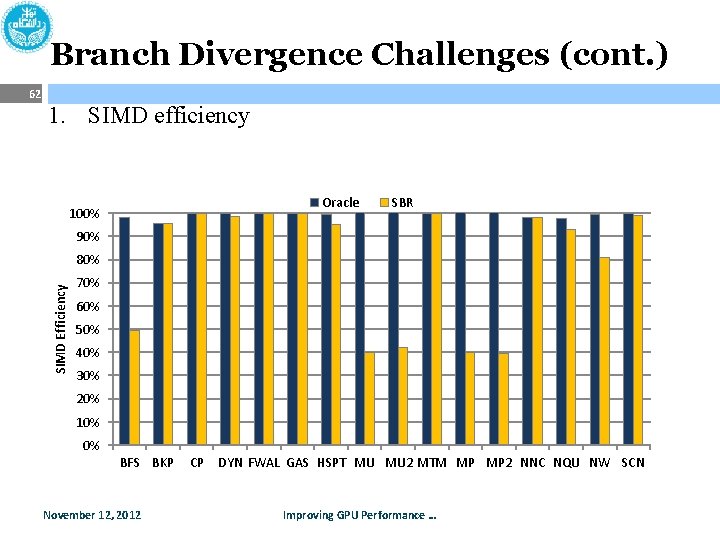

Understanding the Challenges
• SIMD efficiency is the dominant challenge as long as there are enough parallel warps to hide the memory latency
• Once there is not enough parallelism, the other two factors can impact performance significantly

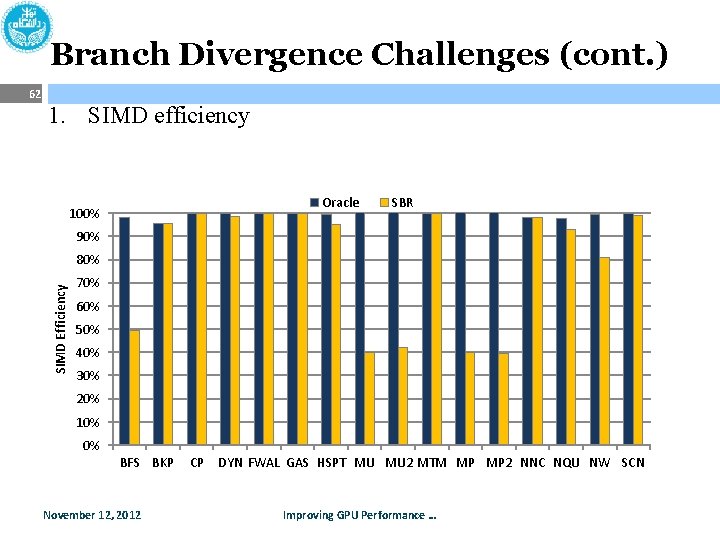

Branch Divergence Challenges (cont.)
1. SIMD efficiency
Figure: SIMD efficiency (0–100%) of an oracle and of SBR per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN).

Branch Divergence Challenges (cont.)
2. Diverging path serialization
3. Re-convergence waiting
Figure: two per-benchmark panels (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN), normalized number of concurrent threads and contribution of idle cycles, with categories ready, inactive, already issued in the pipeline, waiting at re-convergence, and waiting at barrier synchronization.

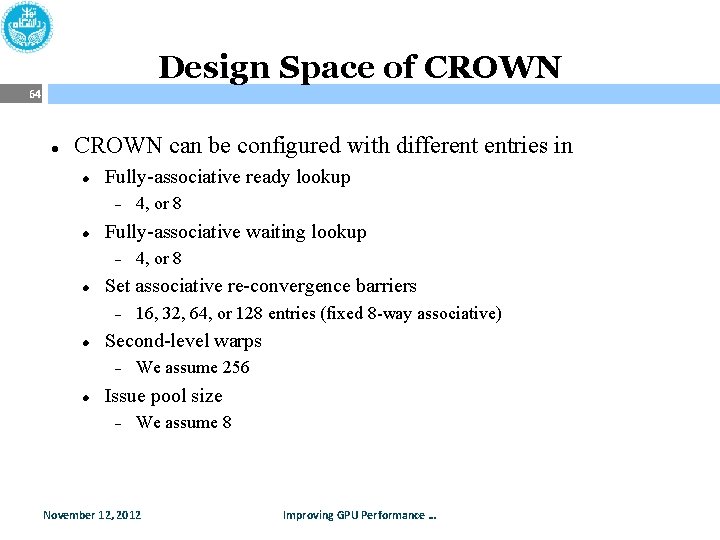

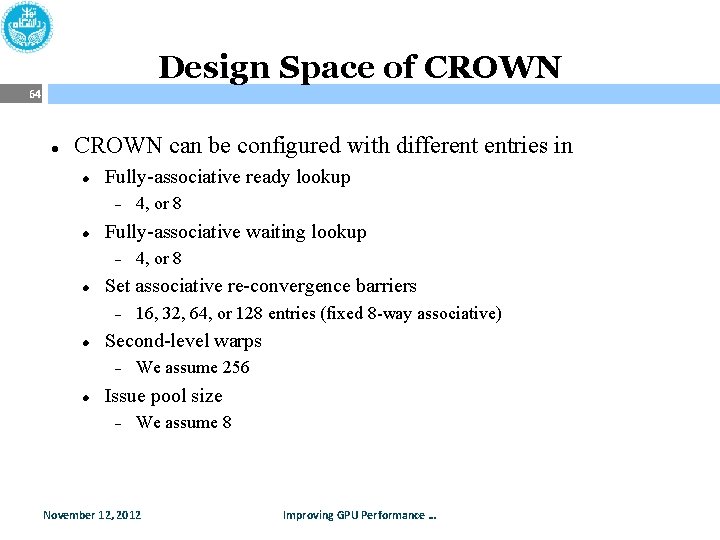

Design Space of CROWN
CROWN can be configured with different numbers of entries in:
• Fully-associative ready lookup: 4 or 8
• Fully-associative waiting lookup: 4 or 8
• Set-associative re-convergence barriers: 16, 32, 64, or 128 entries (fixed 8-way associative)
• Second-level warps and issue pool size: fixed (the slide lists assumed values of 256 and 8)

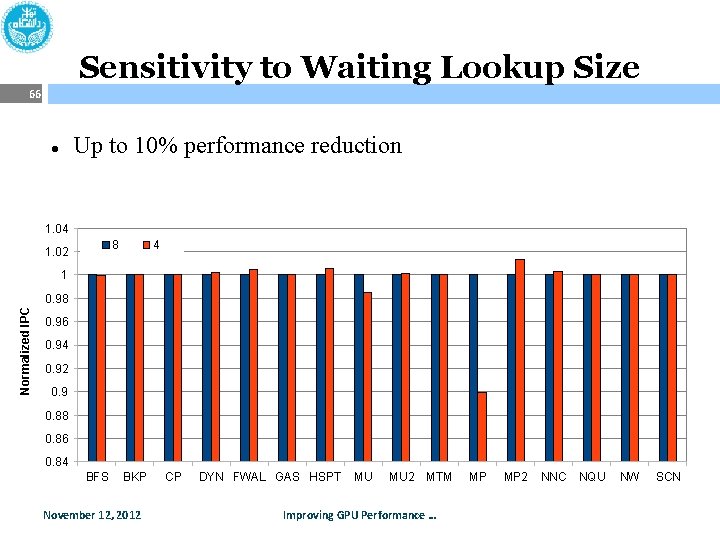

Sensitivity to Ready Lookup Size
• Up to 8% performance change
Figure: normalized IPC (0.86–1.02) per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN) for 8-entry and 4-entry ready lookups.

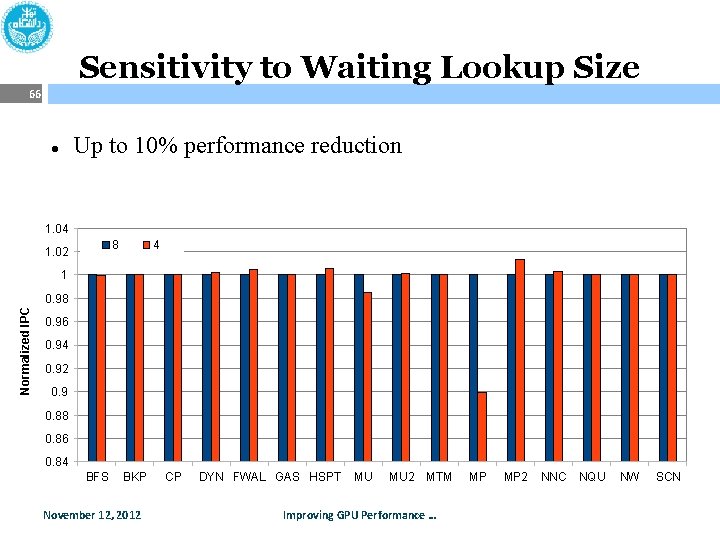

Sensitivity to Waiting Lookup Size
• Up to 10% performance reduction
Figure: normalized IPC (0.84–1.04) per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN) for 8-entry and 4-entry waiting lookups.

Sensitivity to Re-convergence Barrier Size
• Fewer re-convergence barriers bring CROWN closer to DWF
Figure: normalized IPC (0–1.2) per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN) for 128, 64, 32, and 16 barriers, and for DWF.

Compared to Previous Works
• 1024 threads per SM, 16-wide 8-stage SIMD
• 1024 threads per SM, 8-wide 16-stage SIMD
• 512 threads per SM, 8-wide 8-stage SIMD

1024-thread, 16-wide, 8-stage SIMD
• Larger synchronization overhead
Figure (a), SIMD width of 16 lanes: normalized IPC (0–3) of LWM, DWF, CROWN, and SBR per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN) and hmean.

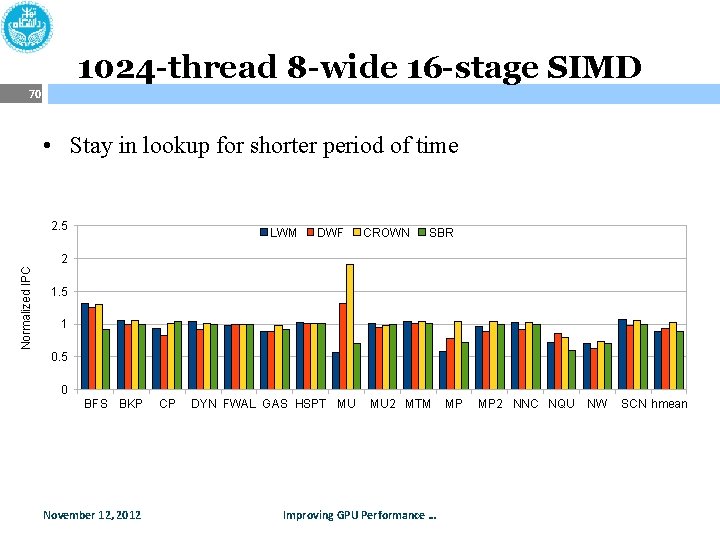

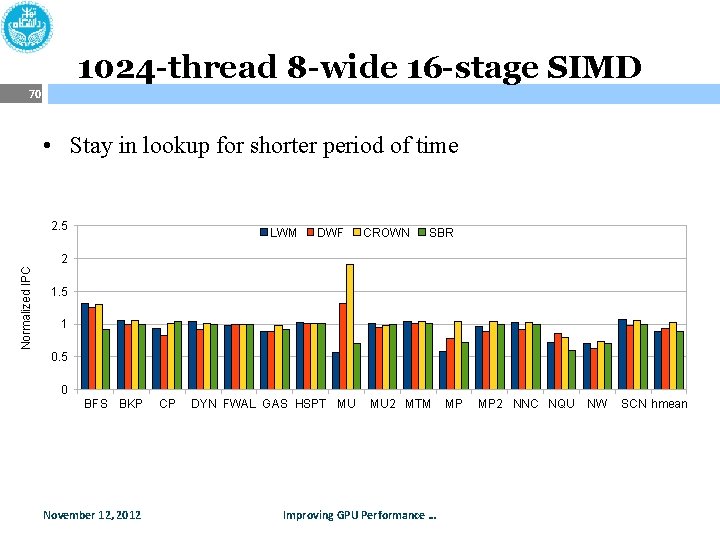

1024-thread, 8-wide, 16-stage SIMD
• Warps stay in the lookups for a shorter period of time
Figure: normalized IPC (0–2.5) of LWM, DWF, CROWN, and SBR per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MU, MU2, MTM, MP, MP2, NNC, NQU, NW, SCN) and hmean.

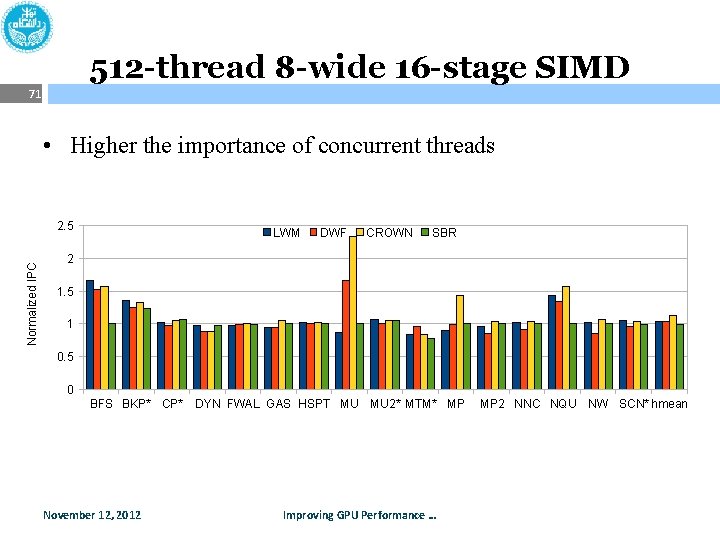

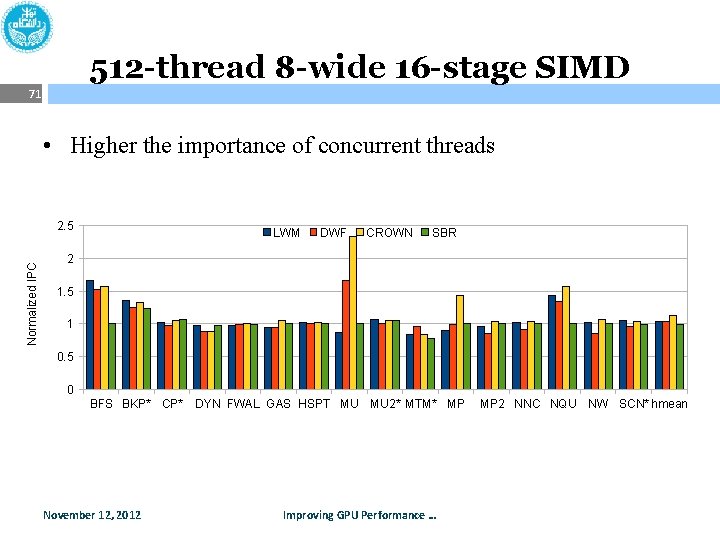

512-thread, 8-wide, 16-stage SIMD
• Concurrent threads become more important
Figure: normalized IPC (0–2.5) of LWM, DWF, CROWN, and SBR per benchmark (BFS, BKP*, CP*, DYN, FWAL, GAS, HSPT, MU, MU2*, MTM*, MP, MP2, NNC, NQU, NW, SCN*) and hmean; asterisks as marked on the slide.

DWR Backup

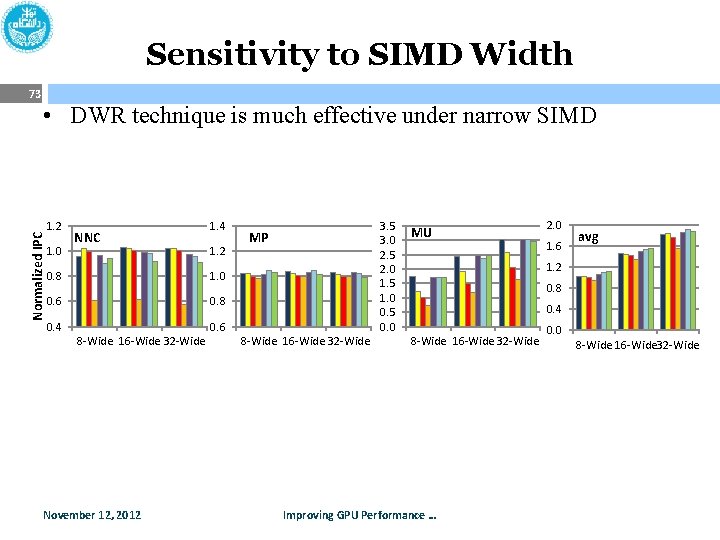

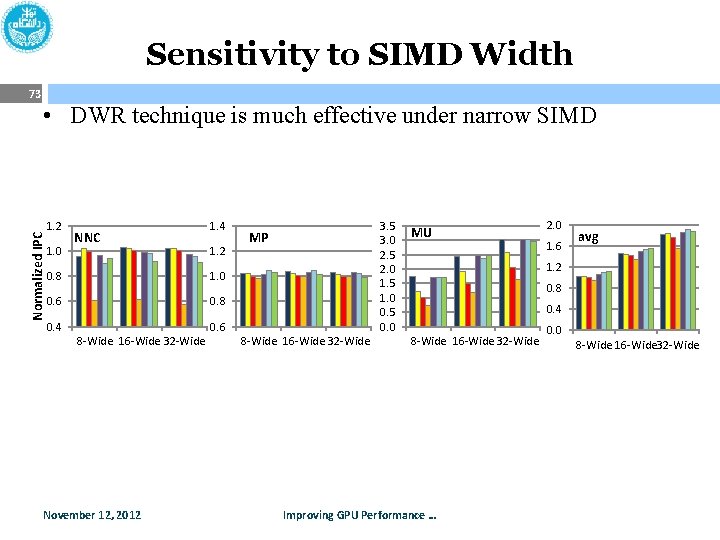

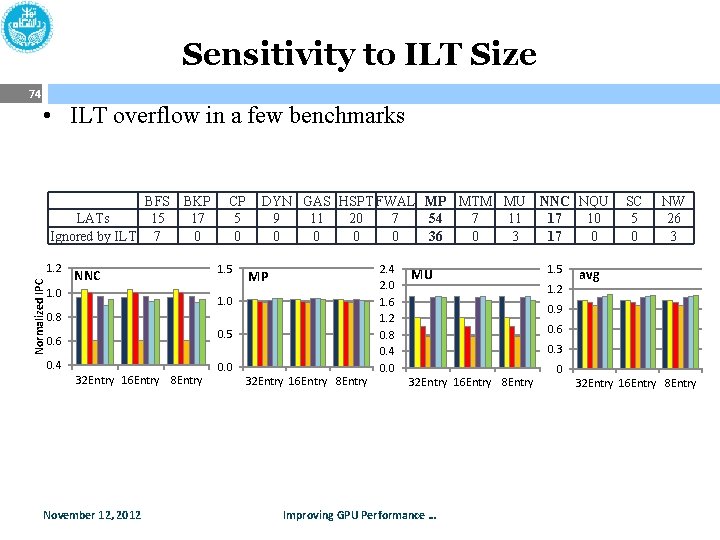

Sensitivity to SIMD Width
• The DWR technique is much more effective with narrow SIMD
Figure: normalized IPC of NNC, MP, MU, and the average under 8-, 16-, and 32-wide SIMD.

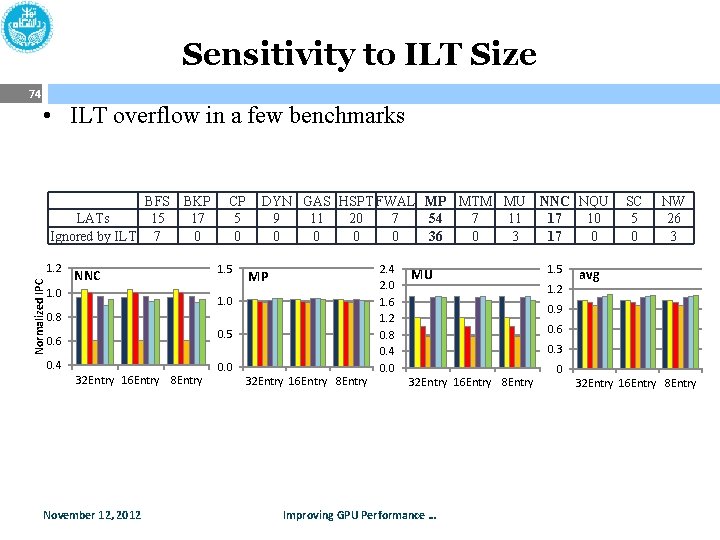

Sensitivity to ILT Size
• ILT overflow occurs in a few benchmarks
Table: number of LATs and of LATs ignored by the ILT per benchmark, e.g. BFS 15 (7 ignored), BKP 17 (0 ignored), CP 5 (0 ignored), NW 26 (3 ignored), SCN 5 (0 ignored).
Figure: normalized IPC of NNC, MP, MU, and the average with 32-, 16-, and 8-entry ILTs.

Ignore List
• Not all LATs are useful for coalescing:
(a)
```cuda
if (sub_warp_id == 0) {
    reg.A = gmem[idx.A];
}
reg.B = gmem[idx.B];
```
(b)
```cuda
if (sub_warp_id == 0) {
    reg.A = gmem[idx];
}
__syncthreads();
```
• Add the PCs of such LATs to the ignore list table (ILT) so that future synchronizations at those PCs are bypassed
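The ILT can be modeled as a small set-associative table of PCs that is consulted before partner synchronization. This Python sketch assumes a 32-entry default (as in the DWR methodology), 4 ways, PCs given as instruction indices, and LRU replacement; only the entry count comes from the slides, and the class name is hypothetical.

```python
from collections import OrderedDict


class IgnoreListTable:
    """Hypothetical set-associative ignore list table (ILT) of LAT PCs.

    A LAT whose PC hits in the ILT bypasses partner synchronization.
    Assumptions: PCs are instruction indices, the set index is pc % num_sets,
    and each set uses LRU replacement.
    """

    def __init__(self, entries=32, ways=4):
        assert entries % ways == 0
        self.ways = ways
        self.num_sets = entries // ways
        self.sets = [OrderedDict() for _ in range(self.num_sets)]

    def _set_for(self, pc):
        return self.sets[pc % self.num_sets]

    def should_bypass(self, pc):
        """True if synchronization at this LAT should be skipped."""
        s = self._set_for(pc)
        if pc in s:
            s.move_to_end(pc)  # mark as most recently used
            return True
        return False

    def add(self, pc):
        """Record a LAT found not to benefit from coalescing."""
        s = self._set_for(pc)
        if pc in s:
            s.move_to_end(pc)
            return
        if len(s) >= self.ways:
            s.popitem(last=False)  # evict the least recently used PC
        s[pc] = True


ilt = IgnoreListTable(entries=8, ways=2)  # 4 sets of 2 ways
for pc in (0, 4, 8):                      # all three map to set 0
    ilt.add(pc)
print(ilt.should_bypass(0), ilt.should_bypass(8))  # False True (0 was evicted)
```

Eviction is what the "ILT overflow" in the sensitivity study refers to: once a set is full, an ignored LAT can fall out of the table and be synchronized again.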

Improving the Energy-Efficiency of Short Warps
• Locality can be exploited to design an efficient pipeline front-end
Figure: fetch redundancy (0–100%) per benchmark (BFS, BKP, CP, DYN, FWAL, GAS, HSPT, MP2, MP, MTM, MU2, MU, NNC, NQU, NW, SCN), split into <= 16 fetches, > 16 and <= 32 fetches, and > 32 and <= 64 fetches.
