Improving File System Synchrony CS 614 Lecture – Fall 2007 – Tuesday October 16 By Jonathan Winter

Introduction 2 Tues. Oct 16, 2007 – CS 614 – Improving File System Synchrony

Motivation
§ File system I/O is a major performance bottleneck.
  • On-chip computation and cache accesses are very fast.
  • Even main memory accesses are comparatively quick.
  • Distributed computing further exacerbates the problem.
§ Durability and fault tolerance are also key concerns.
  • File systems depend on mechanical disks.
    o Disks are a common source of crashes, data loss, and system incoherence.
  • Distributed file systems further complicate reliability issues.
  • Typically, performance and durability must be traded off.
§ Ease of use is also important.
  • Synchronous I/O semantics make programming easier.

Outline
1. Overview of Local File System I/O Scenario
2. Overview of Distributed File System Scenario
3. A User-Centric View of Synchronous I/O
4. Similarities and Differences in Problem Domains
5. Details of the Speculator Infrastructure
6. Implementation of External Synchrony
7. Benchmark Descriptions
8. Performance Results
9. Conclusions

Local File System I/O
§ Traditional file systems come in two flavors.
  • Synchronous file systems provide durability guarantees by blocking.
    o OS crashes and power failures will not cause data loss.
    o File modifications are ordered, providing determinism.
    o Blocking and sequential execution for ordering reduce performance.
  • Asynchronous file systems don't block on modifications.
    o Commit can occur long after completion.
    o Users can view output that is later invalidated by a crash.
    o Synchronization can be enforced through explicit commands (fsync).
    o fsync does not protect against data loss on a typical desktop OS.
    o Performance is higher through buffering and group commit.
§ ext3 is a standard journaling Linux local file system.
  • Can be configured in async, sync, and durable modes.
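
The trade-off between blocking and group commit can be sketched in a few lines of Python. This is an illustration under stated assumptions, not ext3 code: `write_synchronously`, `write_with_group_commit`, and `demo` are hypothetical names, and `os.fsync` is treated as the durability barrier even though, as the durability experiment later shows, it may only reach the drive's cache. What matters is the number of forced flushes: one per write versus one per group.

```python
import os
import tempfile


def write_synchronously(path, records):
    """Append each record and fsync before moving on; returns the fsync count."""
    fsyncs = 0
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o644)
    try:
        for rec in records:
            os.write(fd, rec)
            os.fsync(fd)  # caller blocks until the OS reports the data as stable
            fsyncs += 1
    finally:
        os.close(fd)
    return fsyncs


def write_with_group_commit(path, records, group_size=8):
    """Append records in groups and issue one fsync per group (group commit)."""
    fsyncs = 0
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o644)
    try:
        for start in range(0, len(records), group_size):
            for rec in records[start:start + group_size]:
                os.write(fd, rec)  # lands in the page cache, no waiting
            os.fsync(fd)  # a single flush covers the whole group
            fsyncs += 1
    finally:
        os.close(fd)
    return fsyncs


def demo(n=20):
    """Run both styles on the same records: (sync fsyncs, group fsyncs, same bytes?)."""
    records = [b"rec%02d\n" % i for i in range(n)]
    with tempfile.TemporaryDirectory() as d:
        a, b = os.path.join(d, "sync.log"), os.path.join(d, "group.log")
        n_sync = write_synchronously(a, records)
        n_group = write_with_group_commit(b, records)
        with open(a, "rb") as fa, open(b, "rb") as fb:
            same = fa.read() == fb.read() == b"".join(records)
    return n_sync, n_group, same
```

With 20 records and the default group size of 8, the synchronous style issues 20 flushes while the grouped style issues 3, and the resulting file contents are identical.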

Distributed File Systems
§ Distributed file systems typically use synchronous I/O.
  • Provides a straightforward abstraction of a single namespace.
  • Enables cache coherence and durability.
  • Synchronous messages have long latencies over the network.
  • Weaker consistency (close-to-open) is used for speed.
§ Common systems include AFS and NFS.
§ Earlier research by the authors created the Blue File System.
  • Provides single-copy semantics.
  • The distributed nature of the network is transparent.

User-Centric View of Synchrony
§ Synchronous I/O assumes an application-centric view.
  • Durability of the file system is guaranteed for the application.
  • The system views the application as an external entity.
  • Application state must be kept consistent.
    o The application must not see uncommitted results.
    o The application must block on distributed file I/O.
§ The user-centric view considers application state as internal.
  • Observable output must be synchronous.
    o The kernel and applications are both internal state.
    o The internal implementation can run asynchronously.
    o Only external output to the screen or network must be synchronous.
  • Execution of internal components can be speculative.
    o Results of speculative execution cannot be observed from outside.

Similarities Between Scenarios
§ Both the local and distributed file system solutions require buffering output to the user and the external environment.
  • Asynchrony of the implementation is hidden from the user.
  • Durability must be preserved in the presence of faults.
  • Speculative execution must not be seen until commit.
§ Both speculation and external synchrony require dependence tracking for uncommitted process and kernel state.
  • Tracking allows speculative execution to roll back misspeculations.
  • Tracking determines which data should not yet be user-visible.
§ The asynchronous implementation allows computation, IPC, I/O messages, network communication, and disk writes to overlap.
  • This overlap is the major source of both systems' performance improvement.

Differences Between Scenarios
§ Speculative execution in distributed file systems requires checkpointing and recovery on misspeculation.
§ External synchrony does not speculate; it just allows internal state to run ahead of the output to the user.
§ Speculative execution must block in some situations.
  • Checkpointing challenges limit the kinds of supported IPC.
  • Shared memory was not implemented in the distributed setting.
§ External synchrony conservatively assumes that all readers of shared memory inherit dependencies.

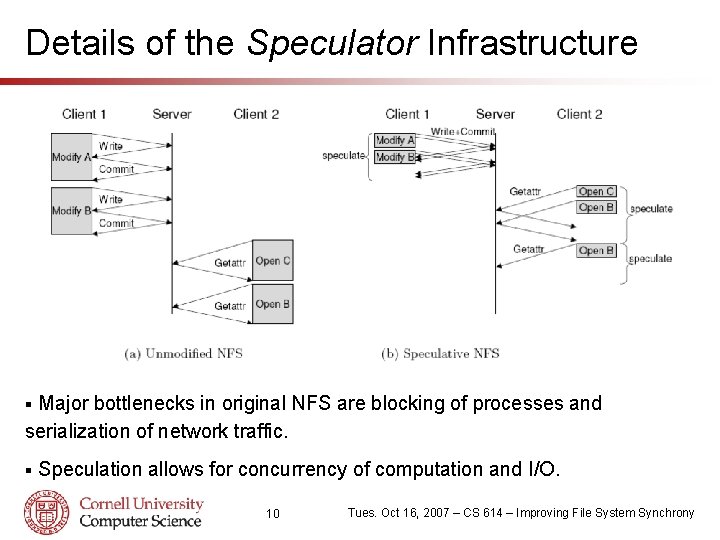

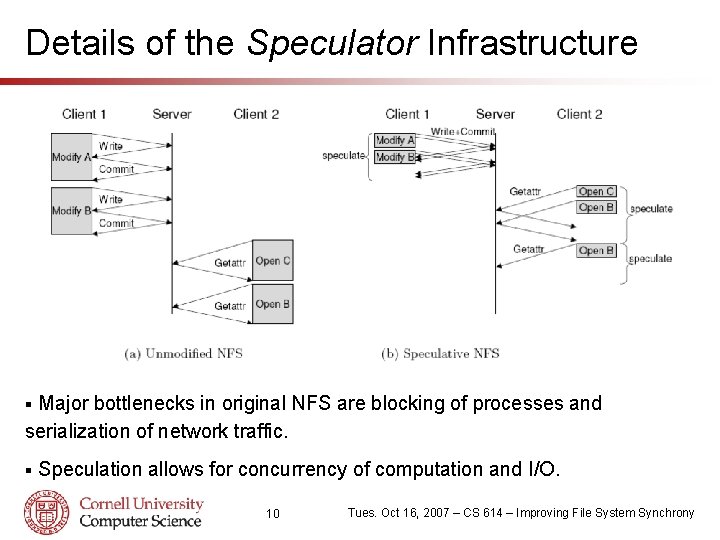

Details of the Speculator Infrastructure
§ Major bottlenecks in the original NFS are the blocking of processes and the serialization of network traffic.
§ Speculation allows for concurrency of computation and I/O.

Conditions for Success of Speculations
§ File systems were chosen as the first target for Speculator because:
  • Results of speculative operations are highly predictable.
    o Clients cache data, and concurrent updates are rare.
    o Speculating that cached data is valid succeeds most of the time.
  • Network I/O is much slower than checkpointing.
    o A checkpoint has low overhead, and a lot of speculative work can be completed in the time it takes to verify the cached data.
  • Computers have spare resources available for speculation.
    o Processors are idle for significant portions of the time.
    o Extra memory is available for checkpoints.
    o Spare resources can be used to speed up I/O throughput.

Speculation Interface
§ Speculator requires modifications to system calls to allow for speculative distributed I/O and to propagate dependencies.
§ The interface is designed to encapsulate implementation details.
  • Speculator provides:
    o create_speculation
    o commit_speculation
    o fail_speculation
  • Speculator doesn't worry about the details of speculative hypotheses.
  • The distributed file system is oblivious to checkpointing and recovery.
  • This partitioning of responsibilities allows easy modification of the internal implementation and expansion of support for IPC.
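
A minimal Python model of this division of labor, assuming only the three operation names from the slide; the constructor argument, `is_speculative`, and the dict-based "process" are hypothetical stand-ins (the real interface lives in the Linux kernel and checkpoints with a copy-on-write fork). The file system only decides when a hypothesis is created, confirmed, or refuted; how state is saved and restored stays inside `Speculator`.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, Dict

Restore = Callable[[], None]


@dataclass
class Speculation:
    spec_id: int
    process: str
    restore: Restore  # returned by the checkpoint; opaque to the file system


class Speculator:
    """Toy model of create/commit/fail_speculation (signatures are hypothetical)."""

    def __init__(self, checkpoint: Callable[[str], Restore]) -> None:
        self._checkpoint = checkpoint  # only Speculator knows how to checkpoint
        self._ids = itertools.count(1)
        self._active: Dict[int, Speculation] = {}

    def create_speculation(self, process: str) -> int:
        spec = Speculation(next(self._ids), process, self._checkpoint(process))
        self._active[spec.spec_id] = spec
        return spec.spec_id

    def commit_speculation(self, spec_id: int) -> None:
        del self._active[spec_id]  # hypothesis held: discard the checkpoint

    def fail_speculation(self, spec_id: int) -> None:
        self._active.pop(spec_id).restore()  # hypothesis wrong: roll back

    def is_speculative(self, process: str) -> bool:
        return any(s.process == process for s in self._active.values())


# Usage: process state is a dict; copying it stands in for the copy-on-write fork.
processes = {"P": {"x": 1}}


def copy_checkpoint(name: str) -> Restore:
    saved = dict(processes[name])

    def restore() -> None:
        processes[name].clear()
        processes[name].update(saved)

    return restore


speculator = Speculator(copy_checkpoint)
sid = speculator.create_speculation("P")  # the file system's only involvement
processes["P"]["x"] = 2                   # speculative work on a cached value
speculator.fail_speculation(sid)          # server reports the cache was stale
```

After the failed speculation, `processes["P"]` is back to `{"x": 1}`; a `commit_speculation` call would instead simply drop the saved copy.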

Speculation Implementation
§ Checkpointing is performed by executing a copy-on-write fork.
  • Must save the state of open file descriptors and copy signals.
  • The forked child is only run if the speculation fails; otherwise it is discarded.
  • If the speculation fails, the child is given the identity of the original process.
§ Two data structures were added to the kernel to track speculative state.
  • A speculation structure, created by create_speculation, tracks the set of kernel objects that depend on the new speculation.
  • The undo log is an ordered list of speculative operations with the information needed to undo them.
  • Multiple speculations can be started for the same process, with multiple speculation structures and checkpoints.
  • If a previous speculation was read-only, checkpoints are shared.
  • New checkpoints are required every 500 ms to cap recovery time.
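
The undo log can be modeled as an ordered list whose entries record which speculations they depend on. This sketch is a simplification, assuming that committing a speculation just removes it from every entry and that failing one undoes every dependent entry newest-first; `UndoEntry`, `record`, and the list used as a kernel object are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Set


@dataclass
class UndoEntry:
    description: str
    undo: Callable[[], None]  # type-specific rollback for this operation
    depends_on: Set[int]      # speculations this operation depends on


class UndoLog:
    """Ordered list of speculative operations (a simplified model)."""

    def __init__(self) -> None:
        self.entries: List[UndoEntry] = []

    def record(self, description: str, undo: Callable[[], None],
               depends_on: Iterable[int]) -> None:
        self.entries.append(UndoEntry(description, undo, set(depends_on)))

    def commit(self, spec_id: int) -> None:
        # Forget the committed speculation; entries with no dependencies left
        # are no longer speculative and are dropped from the log.
        for entry in self.entries:
            entry.depends_on.discard(spec_id)
        self.entries = [e for e in self.entries if e.depends_on]

    def fail(self, spec_id: int) -> None:
        # Undo every dependent operation in reverse log order (newest first).
        kept = []
        for entry in reversed(self.entries):
            if spec_id in entry.depends_on:
                entry.undo()
            else:
                kept.append(entry)
        self.entries = kept[::-1]


# Usage: two appends to a hypothetical kernel object (a list here).
obj: List[str] = []
log = UndoLog()
obj.append("a")
log.record("append a", obj.pop, {1})
obj.append("b")
log.record("append b", obj.pop, {1, 2})
log.fail(2)  # only the append that depended on speculation 2 is undone
```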

Ensuring Correct Speculative Execution
§ Two invariants must hold for correct execution.
  • Speculative state should not be visible to the user or external devices.
    o Output to the screen, network, and other interfaces must be buffered.
  • Processes cannot view speculative state unless they are registered as dependent upon that state.
    o Non-speculative processes must block or become speculative when viewing speculative state.
§ Blocking can always be used to ensure correctness.
§ System calls that do not modify state, or that modify only private state, can be performed speculatively without modification.
§ Speculation flags are set in file system superblocks and for read and write system calls to indicate dependency relationships.
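
The two invariants can be expressed as two checks: one on reads of kernel objects and one on output. In this hypothetical sketch, `observe`, `emit`, `on_commit`, and `MustBlock` are invented names; raising an exception stands in for the kernel putting a process to sleep, and releasing buffered output strictly in FIFO order is a conservative assumption.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


class MustBlock(Exception):
    """Raised where the kernel would instead put the process to sleep."""


@dataclass
class Proc:
    name: str
    deps: Set[int] = field(default_factory=set)  # speculations it depends on
    may_speculate: bool = True  # False models a call that cannot run speculatively


@dataclass
class KernelObject:
    value: str
    deps: Set[int] = field(default_factory=set)


def observe(proc: Proc, obj: KernelObject) -> str:
    """Invariant 2: reading speculative state makes the reader dependent, or blocks it."""
    missing = obj.deps - proc.deps
    if missing:
        if not proc.may_speculate:
            raise MustBlock(f"{proc.name} must wait for {sorted(missing)}")
        proc.deps |= missing
    return obj.value


screen: List[str] = []                 # what the user has actually seen
held: List[Tuple[Set[int], str]] = []  # buffered output and its dependencies


def emit(proc: Proc, text: str) -> None:
    """Invariant 1: output from a speculative process is buffered, not shown."""
    if proc.deps:
        held.append((set(proc.deps), text))
    else:
        screen.append(text)


def on_commit(spec_id: int, procs: List[Proc]) -> None:
    """Drop a committed speculation and release output that no longer depends on it."""
    for p in procs:
        p.deps.discard(spec_id)
    for deps, _ in held:
        deps.discard(spec_id)
    # Release in order, stopping at the first entry that is still speculative.
    while held and not held[0][0]:
        screen.append(held.pop(0)[1])
```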

Multi-Process Speculation
§ To extend the amount of possible speculative work, a speculative process can perform inter-process communication.
  • Dependencies must propagate from a process P to an object X when P modifies X and P depends on a speculation that X does not.
  • Typically, propagation between objects is bidirectional.
§ commit_speculation deletes the associated speculation structure and removes related undo log entries.
§ fail_speculation atomically performs rollback.
§ The undo log, undo entries, and speculations are generic.
  • Undo log entries point to type-specific state and functions that implement type-specific rollback for different forms of IPC.
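
The propagation rule reduces to set arithmetic on dependency sets. A sketch with hypothetical names (`Node`, `propagate`, `exchange`), assuming that reading from a pipe counts as state flowing from the pipe to the reader and that a bidirectional channel such as a Unix socket gives both ends the union of their dependencies:

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Node:
    """A process or kernel object (pipe, file, socket end) and its speculations."""
    name: str
    deps: Set[int] = field(default_factory=set)


def propagate(src: Node, dst: Node) -> bool:
    """State flows from src to dst (src modifies dst, or dst reads src).

    Propagation is needed only when src depends on a speculation that dst
    lacks; returns True if dst gained dependencies.
    """
    gained = src.deps - dst.deps
    dst.deps |= gained
    return bool(gained)


def exchange(a: Node, b: Node) -> None:
    """Bidirectional channel (e.g. a Unix socket): both ends get the union."""
    union = a.deps | b.deps
    a.deps, b.deps = set(union), set(union)


# Usage: speculative P writes a pipe, Q reads it, then Q writes a file.
p, pipe, q, f = Node("P", {1}), Node("pipe"), Node("Q"), Node("file")
first = propagate(p, pipe)  # pipe now depends on speculation 1
again = propagate(p, pipe)  # nothing new to propagate
propagate(pipe, q)
propagate(q, f)
```

The chain shows why tracking must be transitive: the file never interacted with P directly, yet it ends up depending on P's speculation.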

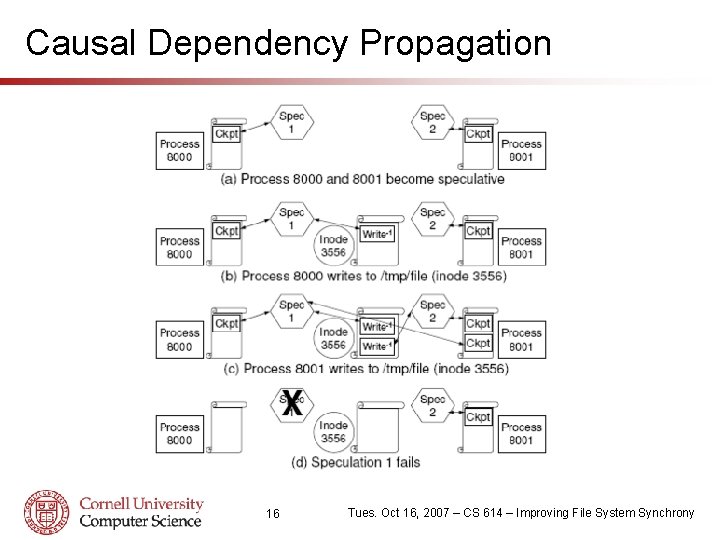

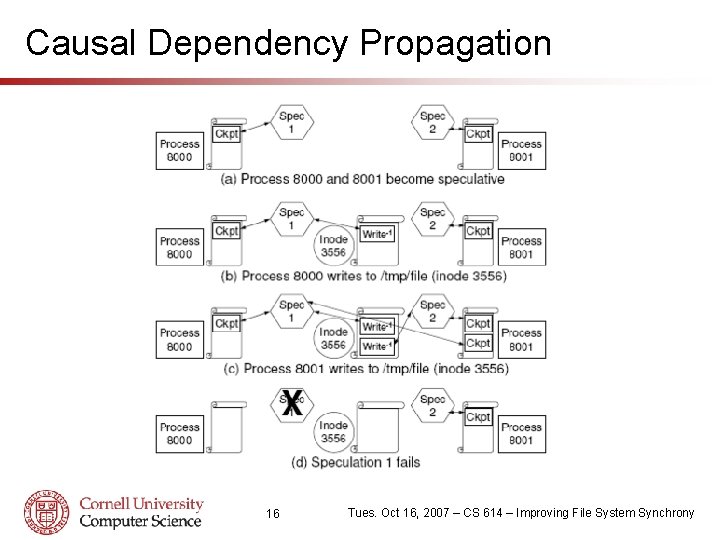

Causal Dependency Propagation

Forms of Supported IPC
§ Distributed file system objects.
  • Cached copies are used speculatively, then deleted and re-fetched if stale.
§ Local memory file system: RAMFS was modified.
§ ext3 was modified to allow speculation for the local disk file system.
  • Speculative data is never written to disk.
  • Calling fdatasync blocks the process.
  • Processes can observe speculative metadata in ext3 superblocks, bitmaps, and group descriptors. Metadata can be written to disk.
  • The ext3 journal was modified to separate speculative and non-speculative data in compound transactions.
§ Pipes and FIFOs are handled like local file systems.

Forms of Supported IPC (continued)
§ Unix sockets propagate dependencies bidirectionally.
§ Signals are challenging because an exiting process cannot be restarted.
  • Signaling processes are checkpointed and managed with a queue.
§ During fork, the child inherits all dependencies of the parent.
§ Exiting processes are not deallocated until all dependencies are resolved.
§ Other forms of IPC are not supported:
  • System V IPC, futexes, and shared memory.
  • Processes block to ensure proper behavior.
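
The fork and exit rules can be modeled with a small process table. This is a hypothetical sketch, not kernel code: `ProcessTable`, `resolve`, and `pending_exit` are invented, and `resolve` models only a commit (a failed speculation would trigger rollback instead).

```python
from typing import Dict, Iterable, Set


class ProcessTable:
    """Toy model of fork/exit handling under speculation (not kernel code)."""

    def __init__(self) -> None:
        self.deps: Dict[int, Set[int]] = {}  # pid -> speculation ids
        self.pending_exit: Set[int] = set()  # exited but not yet deallocated
        self._next_pid = 1

    def spawn(self, deps: Iterable[int] = ()) -> int:
        pid = self._next_pid
        self._next_pid += 1
        self.deps[pid] = set(deps)  # always a fresh copy
        return pid

    def fork(self, parent: int) -> int:
        return self.spawn(self.deps[parent])  # child inherits every dependency

    def exit(self, pid: int) -> None:
        if self.deps[pid]:
            self.pending_exit.add(pid)  # keep it until its speculations resolve
        else:
            del self.deps[pid]

    def resolve(self, spec_id: int) -> None:
        """Called when a speculation commits (a failure would roll back instead)."""
        for pid in list(self.deps):
            self.deps[pid].discard(spec_id)
            if pid in self.pending_exit and not self.deps[pid]:
                self.pending_exit.discard(pid)
                del self.deps[pid]


# Usage: a speculative parent forks a child that exits before the commit.
table = ProcessTable()
parent = table.spawn({3})
child = table.fork(parent)
table.exit(child)
```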

Using Speculation in the File System
§ For read operations, a cached version of the file is required.
  • Speculation assumes the file has not been modified.
  • RPCs are changed from synchronous to asynchronous.
§ The server, which has full knowledge, manages mutating operations.
  • The server permits other processes to see speculatively changed files only if the cached version matches the server version.
  • The server must process messages in the same order that clients see.
  • The server never stores speculative data.
§ Clients group commit multiple operations with one disk write.
§ NFS was modified to support Speculator (keeping close-to-open consistency).
§ The Blue File System was modified to show that speculation can enable strong consistency and safety as well as good performance.
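
The read path can be sketched with Python's `asyncio`, assuming a version number per file that the server can report; `SERVER`, `CACHE`, `get_version_rpc`, and `speculative_read` are hypothetical. Because `work` is assumed to be side-effect free, redoing it on fresh data stands in for Speculator's checkpoint rollback.

```python
import asyncio

# Hypothetical server and client state: path -> (version, contents).
SERVER = {"/a": (2, b"new contents")}
CACHE = {"/a": (1, b"old contents")}


async def get_version_rpc(path: str) -> int:
    """Stands in for an asynchronous RPC asking the server for a file's version."""
    await asyncio.sleep(0.01)  # simulated network round trip
    return SERVER[path][0]


async def speculative_read(path: str, work):
    """Run `work` on the cached copy while the server validates that copy."""
    version, data = CACHE[path]
    check = asyncio.create_task(get_version_rpc(path))
    await asyncio.sleep(0)  # let the RPC task start before computing
    result = work(data)     # speculative computation overlaps the round trip
    if await check == version:
        return result       # speculation succeeded: commit
    # Speculation failed: discard the result, refetch, and redo the work.
    CACHE[path] = SERVER[path]
    return work(CACHE[path][1])
```

Here `asyncio.run(speculative_read("/a", len))` detects the stale cache, refreshes it, and returns the length of the server's contents; when the versions match, the speculative result is returned without a second computation.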

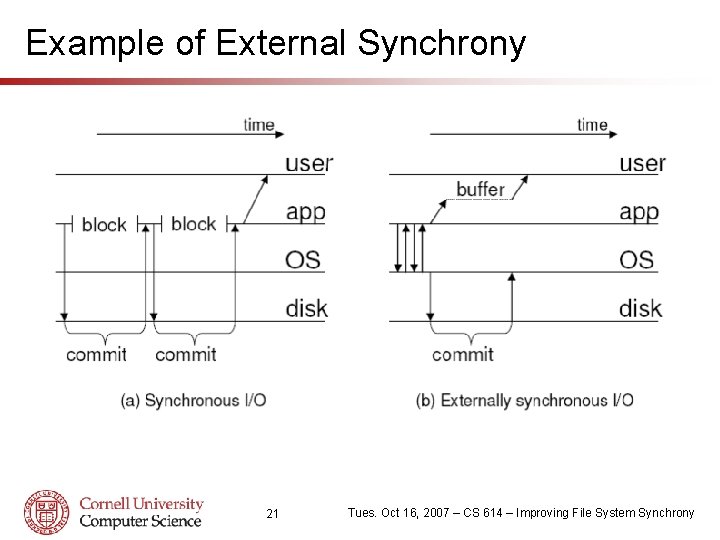

External Synchrony
§ Goal: provide the reliability and ease of use of synchronous I/O with the performance of asynchronous I/O.
§ The implementation, called xsyncfs, is built on top of ext3.
§ File system transactions complete in a non-blocking manner, but output is not allowed to be externalized.
§ All output is buffered in the OS and released when all the disk transactions it depends on have committed.
§ Processes with commit dependencies propagate output restrictions when interacting with other processes through IPC.
§ xsyncfs uses output-triggered commits to balance throughput and latency.
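
Output-triggered commits can be illustrated with a toy journal in which writes never wait, output from a process with uncommitted writes is held back, and holding output is what starts a commit. `XsyncSketch` and its methods are hypothetical; the model assumes one active and at most one committing transaction, represents the disk write as an explicit `disk_done` callback, and uses a 5-second timer as the fallback commit trigger.

```python
class XsyncSketch:
    """Toy model of output-triggered commits (not the xsyncfs source)."""

    COMMIT_INTERVAL = 5.0  # seconds; fallback commit trigger

    def __init__(self):
        self.active_id = 1      # compound transaction currently accepting writes
        self.active = []        # modifications grouped into that transaction
        self.committing = None  # id of the transaction being written, if any
        self.durable_id = 0     # every transaction with id <= this is on disk
        self.depends = {}       # process -> newest transaction it depends on
        self.buffered = []      # (transaction id, text) held back from the user
        self.visible = []       # output the user has actually seen
        self.commits = 0
        self.last_commit = 0.0

    def write(self, proc, data):
        # Returns without waiting for the disk; the process gains a dependency.
        self.active.append(data)
        self.depends[proc] = self.active_id

    def output(self, proc, text):
        txn = self.depends.get(proc, 0)
        if txn <= self.durable_id:
            self.visible.append(text)  # nothing uncommitted: show immediately
            return
        self.buffered.append((txn, text))
        self.start_commit()            # external output triggers the commit

    def start_commit(self):
        if self.committing is None and self.active:
            self.committing = self.active_id
            self.active_id += 1
            self.active = []

    def disk_done(self, now):
        # Called when the journal write for `committing` reaches the disk.
        if self.committing is None:
            return
        self.durable_id = self.committing
        self.committing = None
        self.commits += 1
        self.last_commit = now
        while self.buffered and self.buffered[0][0] <= self.durable_id:
            self.visible.append(self.buffered.pop(0)[1])
        if self.buffered:
            self.start_commit()  # later output is still waiting

    def tick(self, now):
        # Timer path: commit even without output once the interval has passed.
        if now - self.last_commit >= self.COMMIT_INTERVAL:
            self.start_commit()
```

In this model, 100 writes followed by a single line of output produce just one commit, and the output becomes visible only after that commit completes; output from a process with no uncommitted writes appears immediately.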

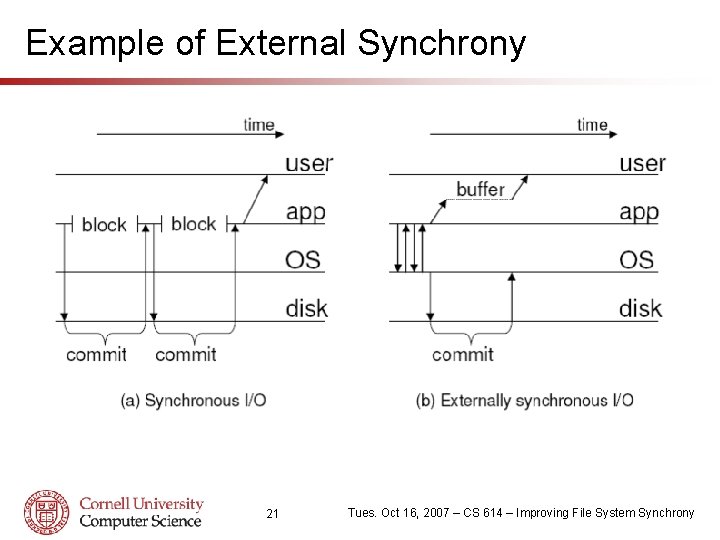

Example of External Synchrony

External Synchrony Design Overview
§ Synchrony is defined by externally observable behavior.
  • I/O is externally synchronous if its output cannot be distinguished from output that could have been produced by synchronous I/O.
    o The values of external outputs must be the same.
    o Outputs must occur in the same causal order, as defined by Lamport's happens-before relation.
    o Disk commits are considered external output.
  • The file system does all the same processing as for synchronous I/O.
    o It need not commit the modification to disk before returning.
§ Two optimizations are made to improve performance.
  • Group commit is used (commits are atomic).
  • External output is buffered and processes continue execution.
§ Output is guaranteed to be committed every 5 seconds.

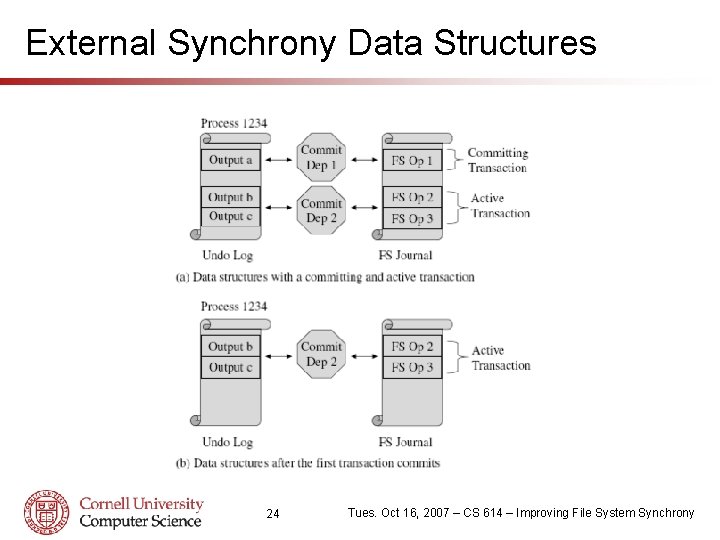

External Synchrony Implementation
§ xsyncfs leverages the Speculator infrastructure for output buffering and dependency tracking of uncommitted state.
  • Checkpointing and rollback features are unneeded and are disabled.
§ Speculator tracks commit dependencies between processes and uncommitted file system transactions.
  • Processes interacting with a dependent process are marked as dependent on the same set of uncommitted transactions.
  • Many-to-many relationships between objects are tracked in undo logs.
§ ext3 operates in journaled mode.
  • Multiple modifications are grouped into compound transactions.
  • A single transaction is active at any time and is committed atomically.
  • Likewise, only one transaction can be committing at a time.

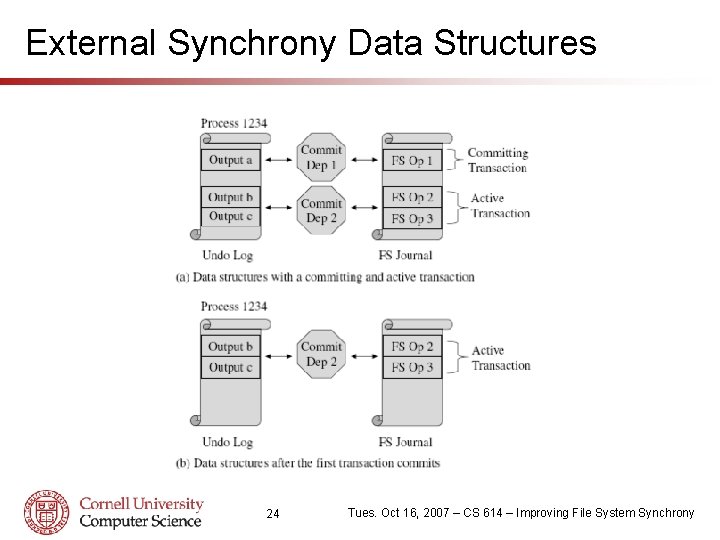

External Synchrony Data Structures

Some Additional Issues
§ External synchrony must be augmented to support explicit synchronization operations such as sync and fdatasync.
  • A commit dependency is created between the calling process and the active transaction, creating a visible event that causes a commit.
§ xsyncfs does not require application modification.
  • Programmers can write the same code as for synchronous I/O.
  • Explicit synchronization is not needed.
  • Programmers don't need to add group commit to their code.
§ Hand-tuned code can provide benefits when programmers have specialized information.
§ However, xsyncfs has global information about external output, which it can use to optimize commit throughput.

Evaluation Methodology
§ All experiments were run on Pentium 4 processors.
§ Red Hat Enterprise Linux release 3 (kernel 2.4.21) was used.
§ Speculative execution was evaluated for two scenarios.
  • The first has no delay and the second assumes a 30 ms round-trip time.
  • Packets are routed through the NISTnet network emulator.

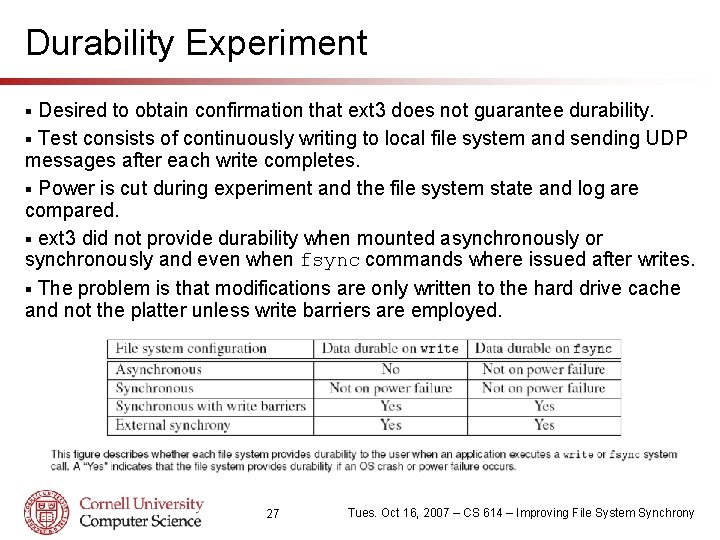

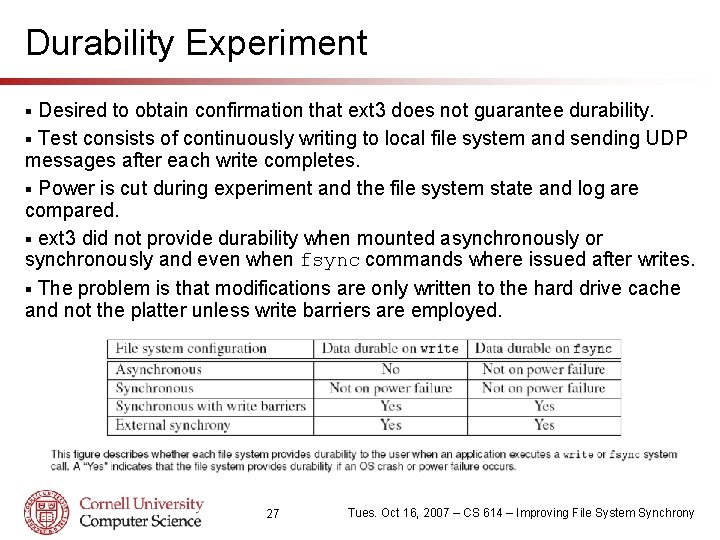

Durability Experiment
§ The goal was to confirm that ext3 does not guarantee durability.
§ The test continuously writes to the local file system and sends a UDP message after each write completes.
§ Power is cut during the experiment, and the file system state is compared with the log of received messages.
§ ext3 did not provide durability when mounted asynchronously or synchronously, even when fsync commands were issued after writes.
§ The problem is that modifications are only written to the hard drive's cache, not the platter, unless write barriers are employed.
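
The checking logic of such an experiment is easy to sketch. Everything here is hypothetical: the record format, `writer`, `lost_writes`, and the truncation that simulates a power cut. In the real experiment, `notify` would send a UDP datagram to a second machine (for example with `socket.sendto`), and the comparison would be made after the machine reboots.

```python
import os
import tempfile

RECORD = "rec {:08d}\n"  # fixed width, so a torn final record is easy to detect


def writer(path, count, notify, sync=True):
    """Append numbered records; report each one only after the write returns."""
    with open(path, "a", encoding="ascii", newline="\n") as f:
        for seq in range(count):
            f.write(RECORD.format(seq))
            f.flush()                 # hand the data to the OS
            if sync:
                os.fsync(f.fileno())  # may still stop at the drive's cache
            notify(seq)               # in the experiment: a UDP datagram to another host


def lost_writes(path, acked):
    """Sequence numbers reported complete but missing after recovery."""
    present = set()
    with open(path, encoding="ascii", errors="replace") as f:
        for line in f:
            parts = line.split()
            if (line.endswith("\n") and len(parts) == 2
                    and parts[0] == "rec" and parts[1].isdigit()):
                present.add(int(parts[1]))
    return sorted(set(acked) - present)


def simulate_crash_demo():
    """Write 10 records, then truncate as if only the first 3 reached the platter."""
    acked = []
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "data.log")
        writer(path, 10, acked.append)
        before = lost_writes(path, acked)
        os.truncate(path, 3 * len(RECORD.format(0)))
        after = lost_writes(path, acked)
    return before, after
```

Any non-empty result from `lost_writes` is a durability violation: the outside world was told a write had completed, but the write did not survive the crash.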

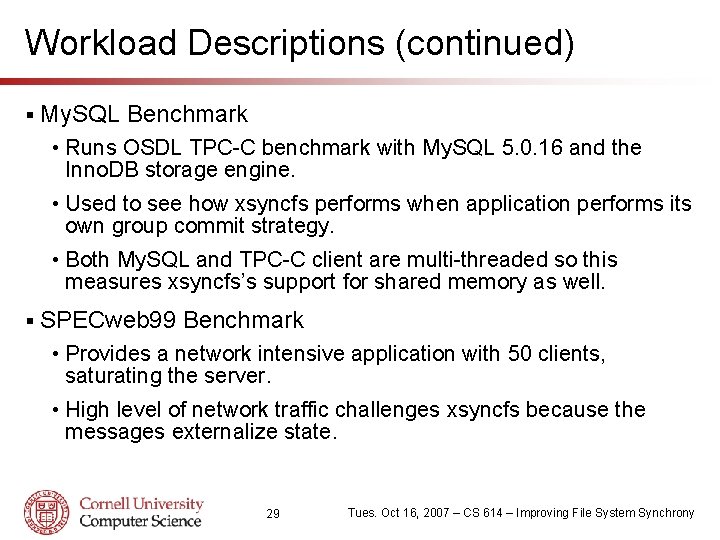

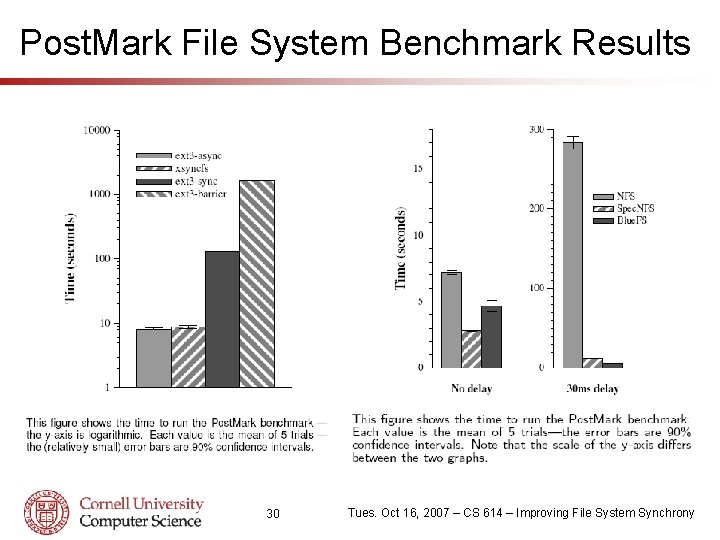

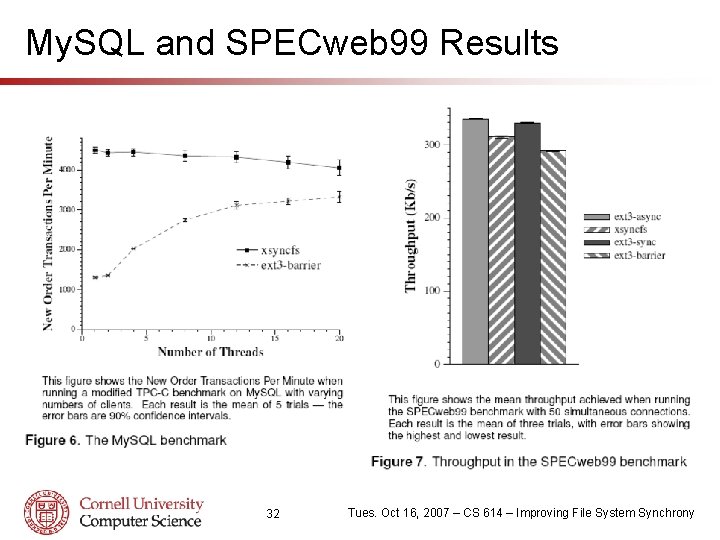

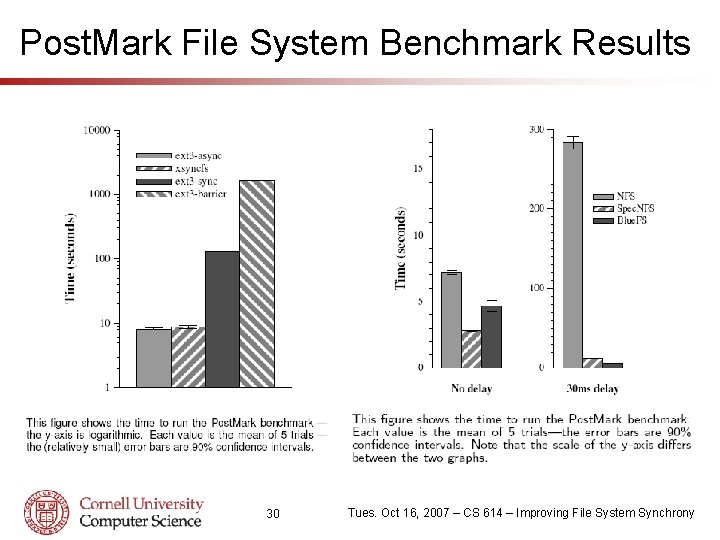

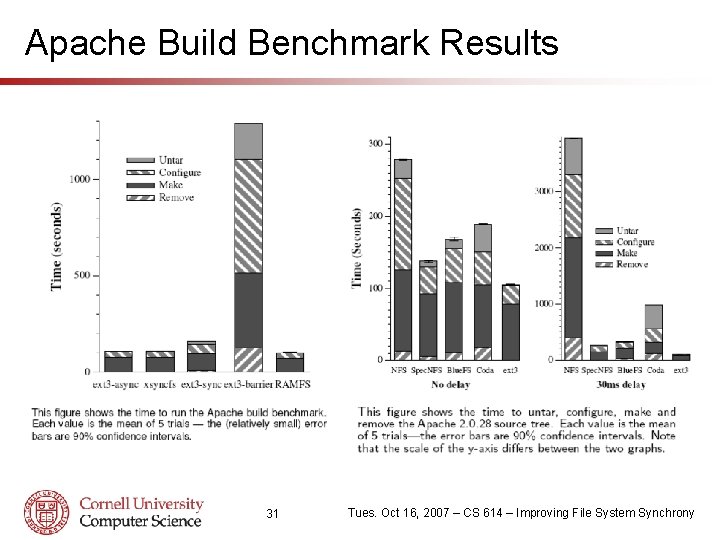

Workload Descriptions
§ PostMark Benchmark
  • Performs hundreds or thousands of transactions consisting of file reads, writes, creates, and deletes, and then removes all the files.
  • Replicates the small-file workloads of electronic mail, netnews, and web-based commerce.
  • A good test of file system throughput, since there is little output or computation.
§ Apache Build Benchmark
  • Untars the Apache 2.0.48 source tree, runs configure in an object directory, runs make, and then removes all the files.
  • The file system must balance throughput and latency, since a lot of screen output is interleaved with disk I/O and computation.
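
For readers unfamiliar with PostMark, the shape of its workload can be sketched as follows. This is a toy approximation, not the benchmark itself: the file sizes, the 50/50 choices, and the names `postmark_like` and `demo` are arbitrary assumptions.

```python
import itertools
import os
import random
import tempfile


def postmark_like(directory, initial_files=50, transactions=200, seed=0):
    """Toy small-file workload in the spirit of PostMark (not the real benchmark).

    Each transaction pairs a create-or-delete with a read-or-append; all
    remaining files are removed at the end.
    """
    rng = random.Random(seed)
    names = itertools.count()
    files = []
    stats = {"create": 0, "delete": 0, "read": 0, "append": 0}

    def create():
        path = os.path.join(directory, f"f{next(names)}")
        with open(path, "wb") as f:
            f.write(b"x" * rng.randint(512, 4096))
        files.append(path)

    for _ in range(initial_files):  # build the initial file pool
        create()
    for _ in range(transactions):
        if not files or rng.random() < 0.5:
            create()
            stats["create"] += 1
        else:
            os.remove(files.pop(rng.randrange(len(files))))
            stats["delete"] += 1
        if files:
            target = rng.choice(files)
            if rng.random() < 0.5:
                with open(target, "rb") as f:
                    f.read()
                stats["read"] += 1
            else:
                with open(target, "ab") as f:
                    f.write(b"y" * rng.randint(1, 1024))
                stats["append"] += 1
    for path in files:  # final phase: remove every remaining file
        os.remove(path)
    files.clear()
    return stats


def demo(transactions=200):
    """Run the workload in a scratch directory; returns (stats, leftover files)."""
    with tempfile.TemporaryDirectory() as d:
        stats = postmark_like(d, transactions=transactions)
        leftover = os.listdir(d)
    return stats, leftover
```

Almost all of the time in such a workload goes to metadata and small data writes, with no screen output, which is why it stresses commit throughput rather than latency.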

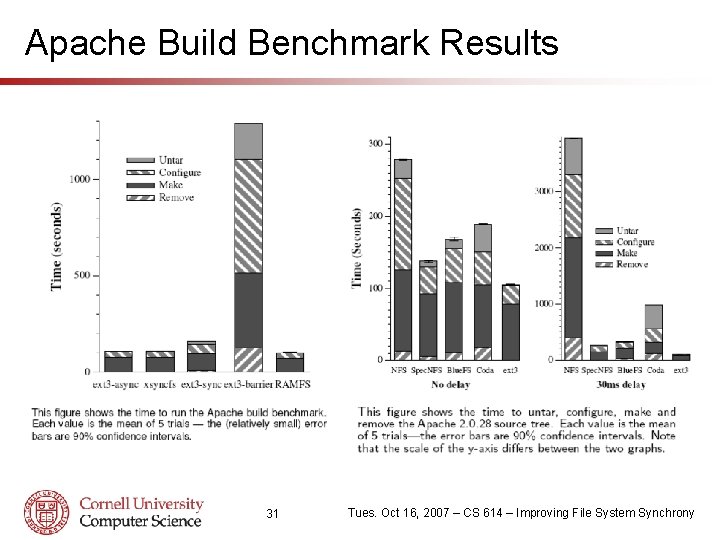

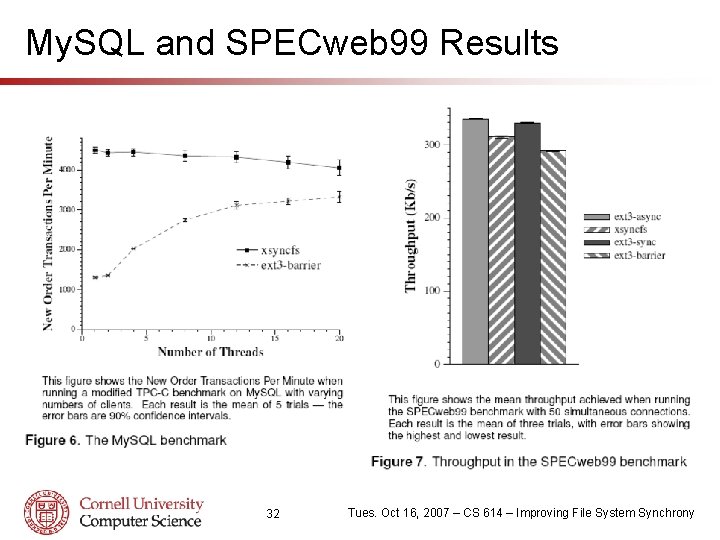

Workload Descriptions (continued)
§ MySQL Benchmark
  • Runs the OSDL TPC-C benchmark with MySQL 5.0.16 and the InnoDB storage engine.
  • Used to see how xsyncfs performs when the application uses its own group commit strategy.
  • Both MySQL and the TPC-C client are multi-threaded, so this also measures xsyncfs's support for shared memory.
§ SPECweb99 Benchmark
  • Provides a network-intensive application with 50 clients, saturating the server.
  • The high level of network traffic challenges xsyncfs because the messages externalize state.

PostMark File System Benchmark Results

Apache Build Benchmark Results

MySQL and SPECweb99 Results

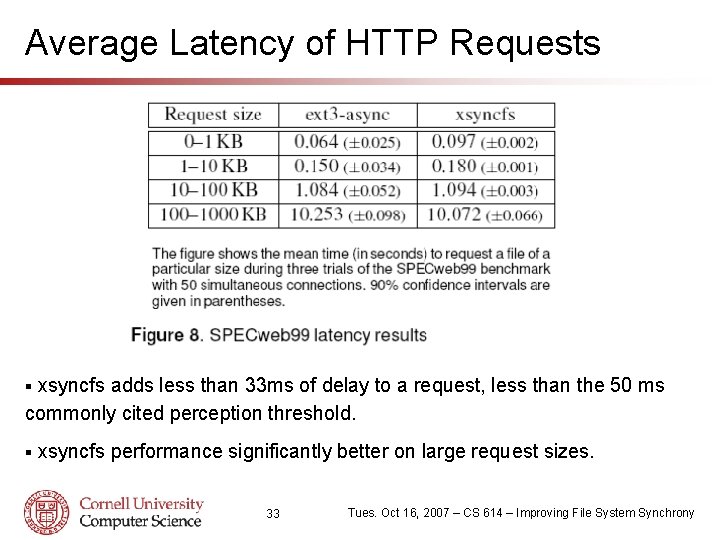

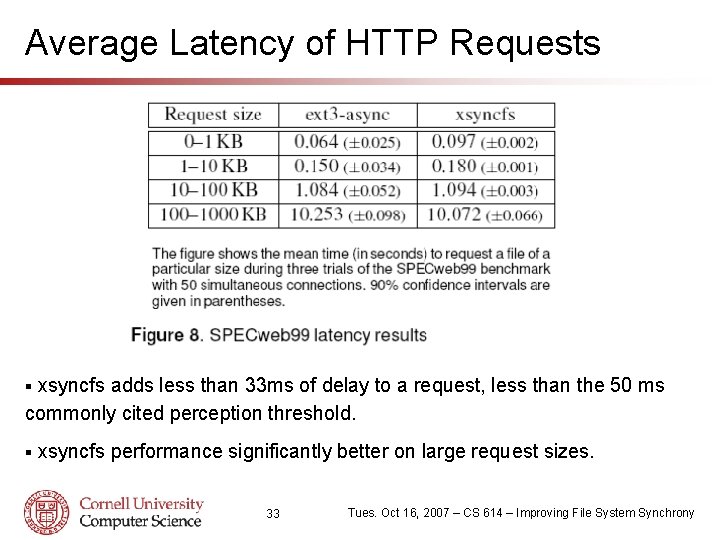

Average Latency of HTTP Requests
§ xsyncfs adds less than 33 ms of delay to a request, below the commonly cited 50 ms perception threshold.
§ xsyncfs performance is significantly better for large request sizes.

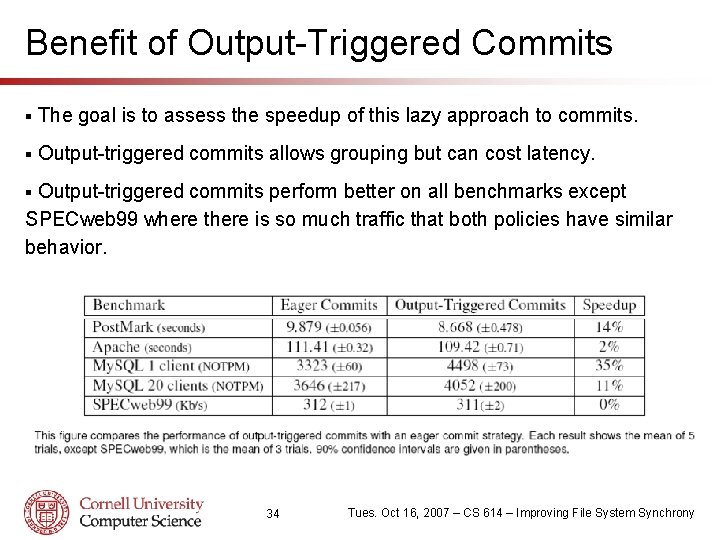

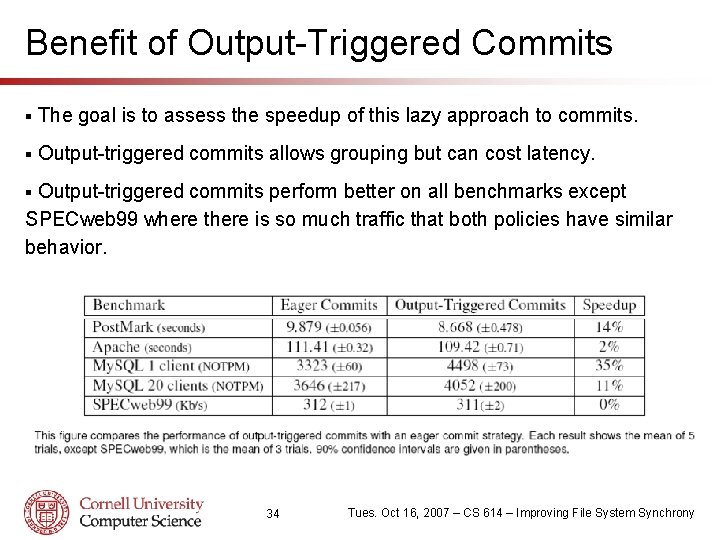

Benefit of Output-Triggered Commits
§ The goal is to assess the speedup of this lazy approach to commits.
§ Output-triggered commits allow grouping but can cost latency.
§ Output-triggered commits perform better on all benchmarks except SPECweb99, where there is so much traffic that both policies behave similarly.

Conclusions
§ Speed need not be sacrificed for durability and ease of use.
  • Both papers succeed in developing a system that achieves performance near that of an asynchronous implementation with the fault tolerance and simplicity of the synchronous abstraction.
§ The key insight is the user-centric view abstraction.
§ The Speculator infrastructure provides powerful functionality through dependency tracking and checkpointing/rollback.
  • The papers focus on using the system to speed up local and distributed file systems, but many other applications are possible.
§ Amazing order-of-magnitude speedups are achieved.
§ Simple ideas that surprisingly took until 2005 to be developed.
§ Why didn't I think of this for my research?