Implementing Hypre AMG in NIMROD via PETSc

- Slides: 12

S. Vadlamani (Tech-X), S. Kruger (Tech-X), T. Manteuffel (CU APPM), S. McCormick (CU APPM)

Funding: DE-FG02-07ER84730

Goals

- SBIR funding for
  - “improving existing multigrid linear solver libraries applied to the extended MHD system to work efficiently on petascale computers”
- HYPRE chosen because
  - multigrid has been shown to “scale”
  - CU development
- Callable from the PETSc interface
  - development of the library (i.e., the AMG method) will benefit all CEMM efforts
- Phase I:
  - explore HYPRE’s solvers applied to the positive definite matrices in NIMROD
  - start a validation process for petascale scaling
- Phase II:
  - push development for the non-symmetric operators of the extended MHD system on high-order FE grids

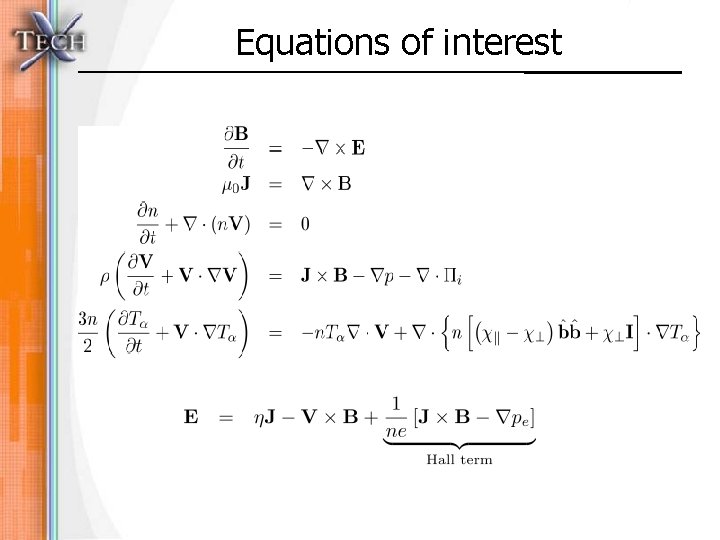

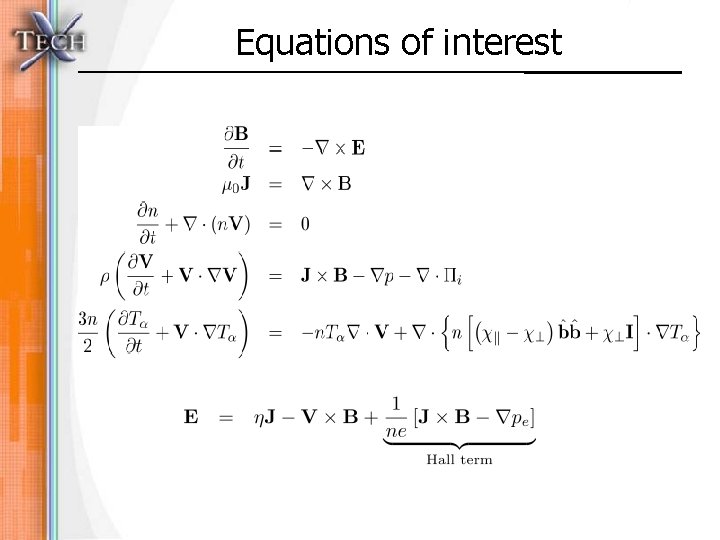

Equations of interest

Major Difficulties for MHD system

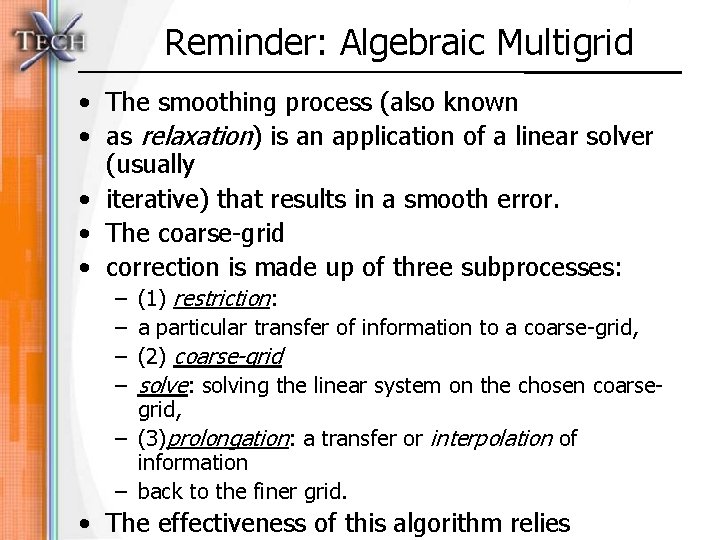

Reminder: Algebraic Multigrid

- The smoothing process (also known as relaxation) is the application of a linear solver (usually iterative) that leaves a smooth error.
- The coarse-grid correction is made up of three subprocesses:
  1. restriction: a particular transfer of information to a coarse grid,
  2. coarse-grid solve: solving the linear system on the chosen coarse grid,
  3. prolongation: a transfer, or interpolation, of information back to the finer grid.
- The effectiveness of this algorithm relies …

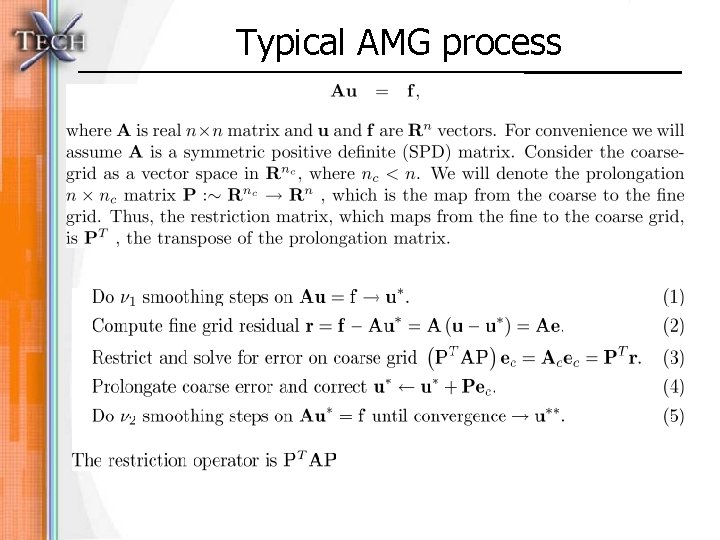

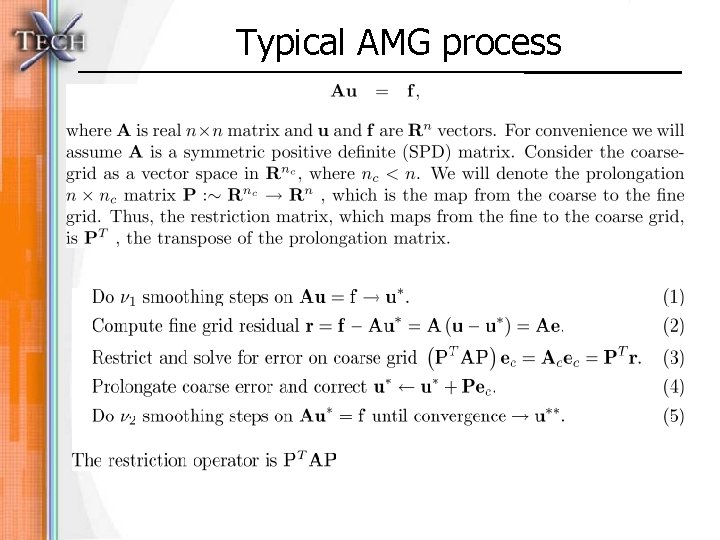

Typical AMG process

Using PETSc

- Level of PETSc compliance
  - for developer support
  - PETSc programs usually initialize and finalize their own MPI communicators, so NIMROD's communication patterns need to match
- Calling from Fortran (77 mentality):
  - `#include "include/finclude/includefile.h"`; name source files `*.F` so they go through the preprocessor
  - be careful to “include” each header only once in each encapsulated subroutine
  - arrays must be accessed via an integer index name internal to PETSc
  - PETSc indexing is zero-based, even from Fortran *IMPORTANT*

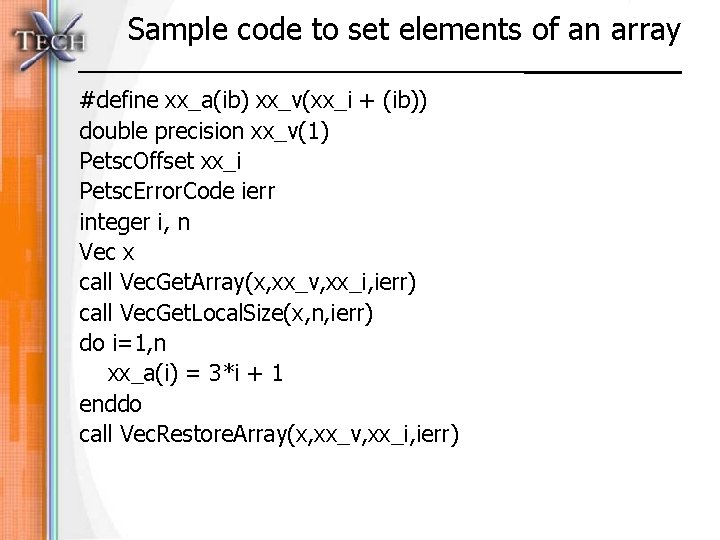

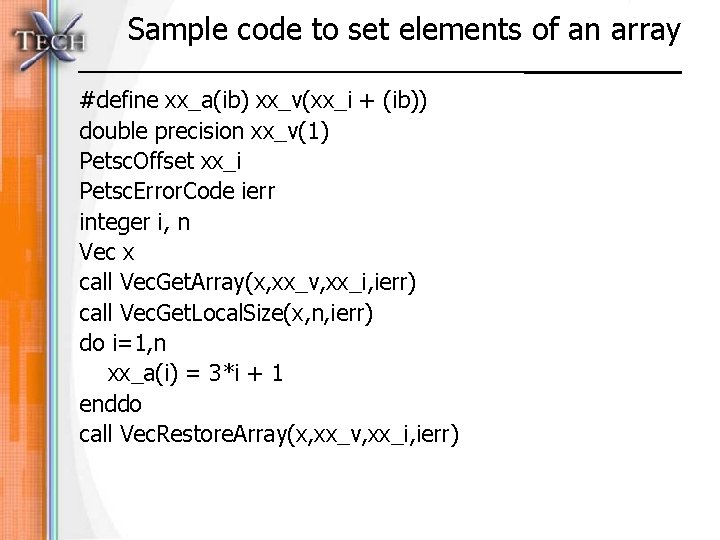

Sample code to set elements of an array

```fortran
#define xx_a(ib) xx_v(xx_i + (ib))
      double precision xx_v(1)
      PetscOffset xx_i
      PetscErrorCode ierr
      integer i, n
      Vec x
! Get the offset xx_i into the local part of x
      call VecGetArray(x, xx_v, xx_i, ierr)
      call VecGetLocalSize(x, n, ierr)
! xx_a(i) expands to xx_v(xx_i + (i)), so i runs from 1 to n
      do i = 1, n
        xx_a(i) = 3*i + 1
      enddo
! Hand the array back to PETSc when finished
      call VecRestoreArray(x, xx_v, xx_i, ierr)
```

Hypre calls in PETSc

- No change to matrix and vector creation
- Will set the preconditioner type to HYPRE types within a linear system solver
  - KSP package
  - needed for the smoothing process
- `PCHYPRESetType()`
- `PetscOptionsSetValue()`

Work Plan for Phase I SBIR

- Implement HYPRE solvers in NIMROD via PETSc
  - understand the full NIMROD data structure
  - maintain backward compatibility with the current solvers
  - run comparative simulations with benchmark cases
- Establish metrics for the solvers' efficiency
- Initial analysis of MG capability for extended MHD

This Week

- Revisit the work done with the SuperLU interface
  - implementing the distributed interface will give better insight into the NIMROD data structure and its communication patterns
- Obtain the troublesome matrices in triplet format
  - send them to Sherry Li for further analysis and SuperLU development
  - possibility of visualization (matplotlib, etc.)

Summary

- Beginning to implement PETSc in NIMROD
- Will explore HYPRE solvers with derived metrics to establish their effectiveness
- Explore the mathematical properties of the extended MHD system to understand whether AMG can still scale while solving these particular non-symmetric matrices
- [way in the future]: may need to use BoxMG (LANL) for the anisotropic temperature advance