Implementation and Verification of a Cache Coherence protocol

- Slides: 23

Implementation and Verification of a Cache Coherence protocol using Spin

Steven Farago

Goal
- To use Spin to design a “plausible” cache coherence protocol
  - Introduce nothing in the Spin model that would not be realistic in hardware (e.g., instant global knowledge between unrelated state machines)
- To verify the correctness of the protocol

Background
- Definition: A cache is a small, high-speed memory used by a single processor. All of that processor's memory accesses go through the cache.
- Problem:
  - In a multiprocessor system, each processor could have its own cache.
  - Each cache could contain (potentially different) data for the same addresses.
  - Given this, how do we ensure that processors see a consistent picture of memory?
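A minimal Python sketch of the problem, assuming private write-back caches modeled as plain dictionaries and no coherence protocol at all (the names `memory`, `cache0`, and `cache1` are hypothetical): a store by processor 0 lands only in its own cache, so processor 1 keeps reading the old value.

```python
# Shared main memory and two private caches, each filled from memory earlier.
memory = {"A": 0}
cache0 = dict(memory)  # processor 0's private copy
cache1 = dict(memory)  # processor 1's private copy

# Processor 0 stores a new value. With no coherence protocol, only its own
# cache is updated (a write-back cache does not touch memory immediately).
cache0["A"] = 1

print("P0 sees", cache0["A"])  # P0 sees 1
print("P1 sees", cache1["A"])  # P1 sees 0: stale data for the same address
```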

Coherence protocol
- A coherence protocol specifies how caches communicate with processors and with each other so that processors have a predictable view of memory.
- Caches that always provide this “predictable view of memory” are said to be coherent.

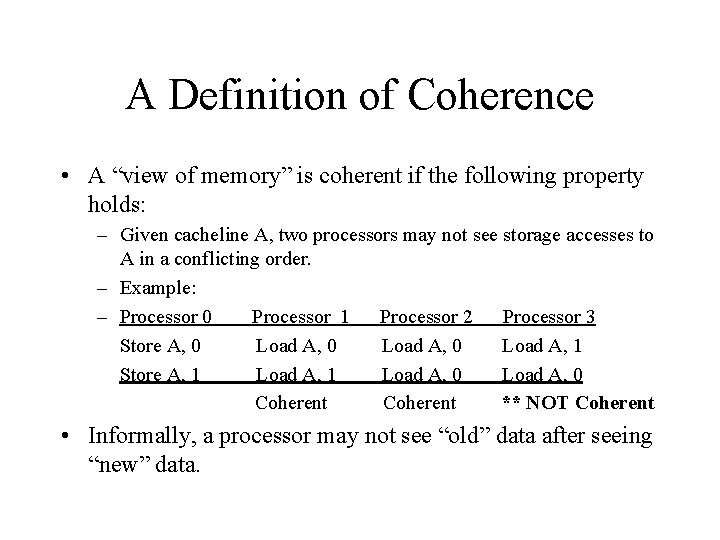

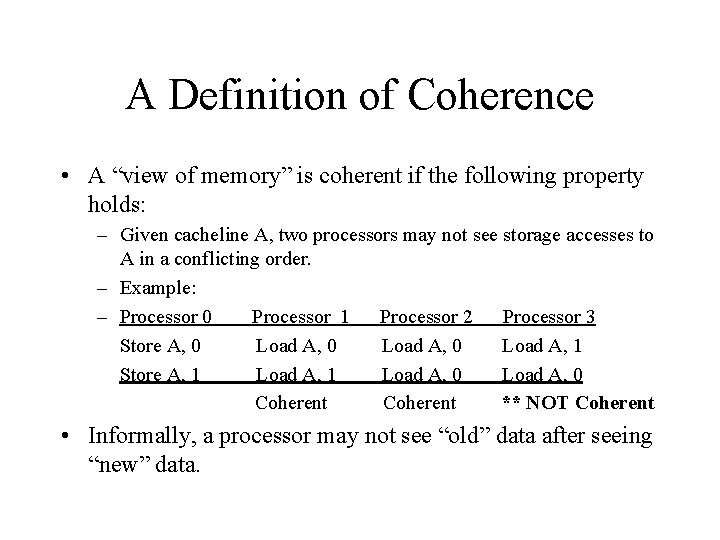

A Definition of Coherence
- A “view of memory” is coherent if the following property holds:
  - Given cacheline A, two processors may not see storage accesses to A in a conflicting order.
  - Example (one ordering is labeled Coherent, the other NOT Coherent):

    | Processor 0 | Processor 1 | Processor 2 | Processor 3 |
    |-------------|-------------|-------------|-------------|
    | Store A, 0  | Load A, 1   | Store A, 1  | Load A, 0   |

- Informally, a processor may not see “old” data after seeing “new” data.
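The definition can be turned into a mechanical check. The sketch below is an illustration, not the method used in the Spin model: it assumes every store writes a distinct value, records the sequence of values each processor loads from cacheline A, and asks whether a single write order is consistent with all of them, i.e. whether the “seen before” graph is acyclic (checked here with Kahn's topological sort). The function name `is_coherent` is hypothetical.

```python
from collections import defaultdict

def is_coherent(observations):
    """Return True if one order of the values written to a single cacheline
    is consistent with every processor's observed sequence.

    observations: dict mapping processor id -> list of values that processor
    loaded, in program order. Assumes each store writes a distinct value.
    """
    edges = defaultdict(set)  # value -> values seen after it by some processor
    nodes = set()
    for seen in observations.values():
        nodes.update(seen)
        for earlier, later in zip(seen, seen[1:]):
            if earlier != later:
                edges[earlier].add(later)

    # Kahn's algorithm: all nodes can be ordered iff the graph has no cycle.
    indegree = {n: 0 for n in nodes}
    for src in list(edges):
        for dst in edges[src]:
            indegree[dst] += 1
    ready = [n for n in nodes if indegree[n] == 0]
    ordered = 0
    while ready:
        n = ready.pop()
        ordered += 1
        for dst in edges.get(n, ()):
            indegree[dst] -= 1
            if indegree[dst] == 0:
                ready.append(dst)
    return ordered == len(nodes)
```

For example, `is_coherent({2: [0, 1], 3: [0, 1]})` is `True`, while `is_coherent({2: [0, 1], 3: [1, 0]})` is `False`: processors 2 and 3 disagree on whether 0 or 1 was written last.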

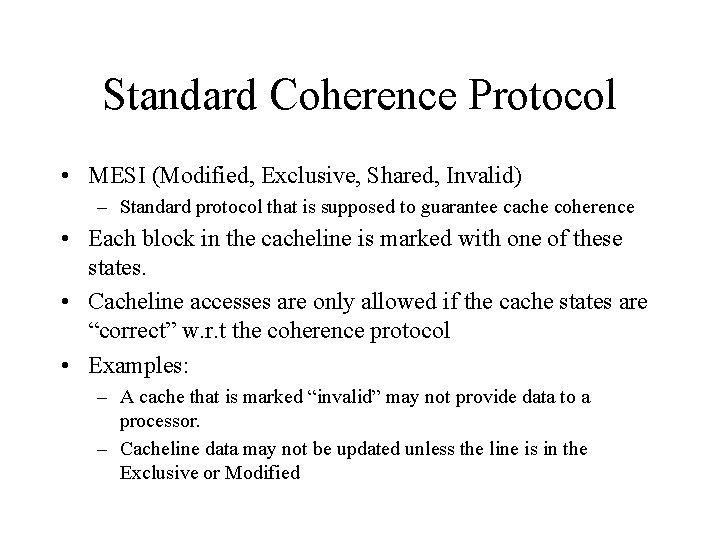

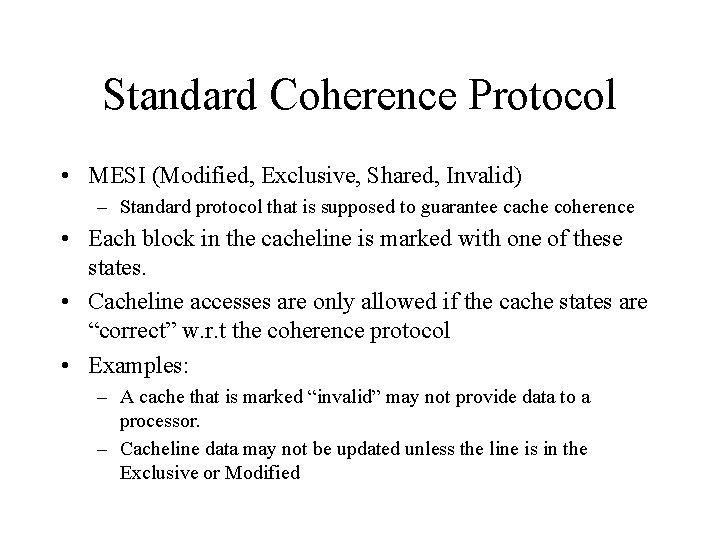

Standard Coherence Protocol
- MESI (Modified, Exclusive, Shared, Invalid)
  - Standard protocol that is supposed to guarantee cache coherence
- Each cacheline in a cache is marked with one of these states.
- Cacheline accesses are only allowed if the cache states are “correct” w.r.t. the coherence protocol.
- Examples:
  - A cacheline that is marked “Invalid” may not provide data to a processor.
  - Cacheline data may not be updated unless the line is in the Exclusive or Modified state.
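The two access rules above can be written as small predicates over a line's MESI state. This is a minimal sketch, assuming the standard MESI meanings of the four states; the names `may_supply_data`, `may_update_data`, and `local_store` are hypothetical.

```python
from enum import Enum

class MESI(Enum):
    MODIFIED = "M"   # only valid copy, dirty with respect to memory
    EXCLUSIVE = "E"  # only cached copy, clean
    SHARED = "S"     # clean copy that other caches may also hold
    INVALID = "I"    # no usable data

def may_supply_data(state: MESI) -> bool:
    """A line marked Invalid may not provide data to the processor."""
    return state is not MESI.INVALID

def may_update_data(state: MESI) -> bool:
    """Data may only be updated in the Exclusive or Modified state."""
    return state in (MESI.EXCLUSIVE, MESI.MODIFIED)

def local_store(state: MESI) -> MESI:
    """Return the line's state after a processor store that needs no bus request.

    Shared or Invalid lines must first get permission from the memory
    controller, so this function refuses them.
    """
    if not may_update_data(state):
        raise PermissionError(f"store not allowed in state {state.name}")
    return MESI.MODIFIED
```

A store to an Exclusive line silently upgrades it to Modified; a store to a Shared line raises, standing in for the request the cache would have to send instead.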

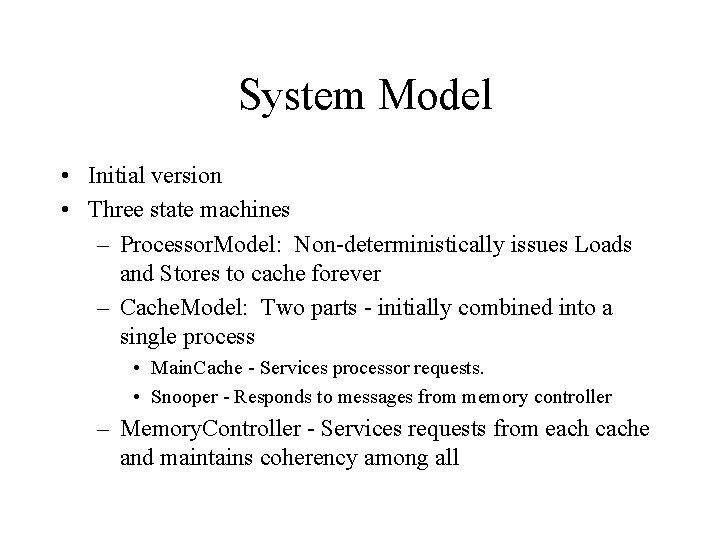

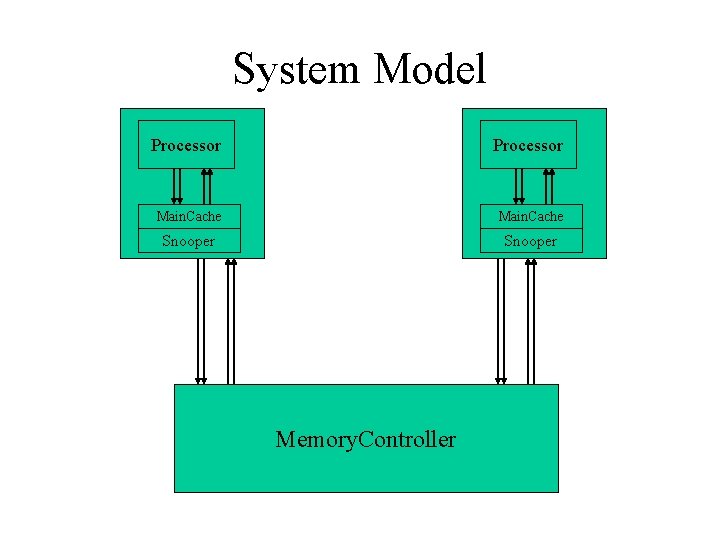

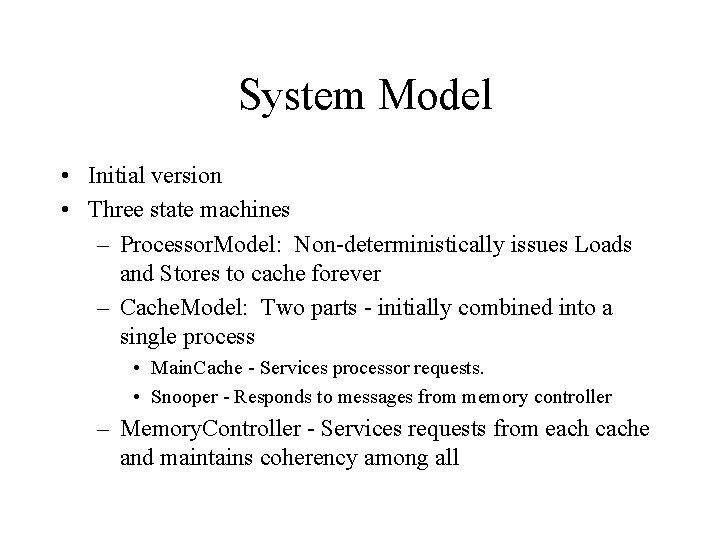

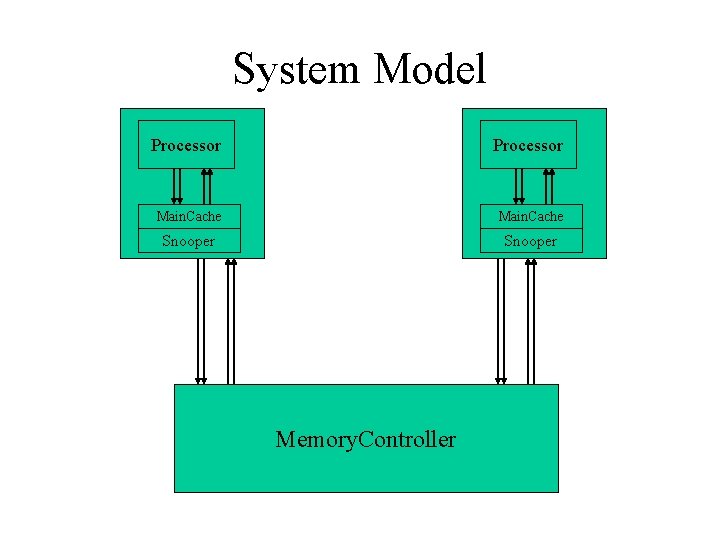

System Model
- Initial version
- Three state machines
  - ProcessorModel: Nondeterministically issues Loads and Stores to its cache forever
  - CacheModel: Two parts, initially combined into a single process
    - MainCache: Services processor requests
    - Snooper: Responds to messages from the memory controller
  - MemoryController: Services requests from each cache and maintains coherence among all caches

System Model (diagram showing Processor, MainCache, Snooper, and MemoryController)

ProcessorModel
- Simple
- Continually issues Load/Store requests to its associated cache.
  - Communication is done via the bus model.
  - Read requests are blocking.
- Coherence verification is done when a Load receives data (via a Spin assert statement).

CacheModel
- Two parts: MainCache and Snooper
  - MainCache services ProcessorModel Load and Store requests and initiates contact with the MemoryController when an “invalid” cache state is encountered.
  - Snooper services independent requests from the MemoryController. These requests are necessary for the MemoryController to coordinate coherence responses.

MemoryControllerModel
- Responsible for servicing cache requests
- 3 types of requests:
  - Data request: the cache requires up-to-date data to supply to its processor
  - Permission-to-store: a cache may not transition to the Modified state without the memory controller’s (MC’s) permission
  - A combination of these two
- All types of requests may require the MC to communicate with all system caches (via their Snooper processes) to ensure coherence
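One way the three request types could be handled is sketched below, assuming the usual MESI snoop responses: a data request downgrades an Exclusive or Modified holder to Shared, and any request that grants store permission invalidates every other copy. The function `service` and the action strings are hypothetical, data movement is omitted, and only per-cache states for one line are tracked; this is not the presented model.

```python
from enum import Enum, auto

class Request(Enum):
    DATA = auto()              # cache needs up-to-date data for its processor
    STORE_PERMISSION = auto()  # cache wants to move to Modified
    DATA_AND_STORE = auto()    # both at once (e.g. a store that misses)

def service(requester, request, states):
    """Update `states` (cache id -> "M"/"E"/"S"/"I" for one line) and return
    the snoop messages the MC would send to the other caches' Snoopers."""
    snoops = []
    for cache, state in states.items():
        if cache == requester:
            continue
        if request is Request.DATA:
            if state in ("M", "E"):
                # A Modified holder would also write its data back here.
                snoops.append((cache, "downgrade"))
                states[cache] = "S"
        elif state != "I":
            # Requests that grant store permission invalidate other copies.
            snoops.append((cache, "invalidate"))
            states[cache] = "I"
    if request is Request.DATA:
        shared = any(s != "I" for c, s in states.items() if c != requester)
        states[requester] = "S" if shared else "E"
    else:
        states[requester] = "M"
    return snoops
```

With `states = {0: "I", 1: "M", 2: "S"}`, `service(0, Request.DATA, states)` snoops only cache 1 and leaves all three caches Shared; every branch that touches another cache is exactly where the MC must talk to that cache's Snooper.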

Implementation of Busses
- All processes represent independent state machines, so a communication mechanism is needed.
- Spin depth-1 queues are used to simulate communication.
- Because reading a Spin queue is destructive and blocking, a global bool is needed to indicate bus activity (required for polling).
  - A global shared between processes is acceptable here because it only makes up for differences between Spin queues and real busses.
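A rough Python analogue of one bus, assuming each depth-1 Spin queue is paired with its own activity bool; the class and method names are hypothetical. The sketch runs step by step rather than with real concurrency: where a Spin send or receive would block, these methods return a failure value so the caller can retry on a later step.

```python
class Bus:
    """A depth-1 channel plus the global 'bus activity' bool."""

    def __init__(self):
        self._slot = []      # holds at most one message (depth-1 queue)
        self.busy = False    # the global bool other processes may poll

    def try_send(self, msg) -> bool:
        if self._slot:       # queue full: a Spin send would block here
            return False
        self._slot.append(msg)
        self.busy = True
        return True

    def poll(self) -> bool:
        """Non-destructive check of bus activity."""
        return self.busy

    def try_receive(self):
        """Destructive read; returns None when empty (Spin would block)."""
        if not self._slot:
            return None
        self.busy = False
        return self._slot.pop()
```

A process that only wants to know whether a message is pending calls `poll()`, which leaves the message in place; only `try_receive()` consumes it.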

Problems - Part 1
- MainCache and Snooper were initially implemented as a single process.
- The process nondeterministically determines which of the two to execute at each iteration.
- Communication between Processor/Cache and Cache/Memory is done with blocking queues.
- Blocked receive in MainCache --> Snooper cannot execute
- Leads to deadlock in certain situations

Solution 1
- Split MainCache and Snooper into separate processes.
- Both can access the “global” cacheData and cacheState variables independently.

Problems - Part 2
- As separate processes, Snooper and MainCache could change the cache state unpredictably.
- Race conditions: the Snooper changes cache state/data while the MainCache is mid-transaction --> the MainCache returns invalidated data to the processor.

Solution 2
- Add a locking mechanism to the cache.
  - MainCache or Snooper may only access the cache if it first locks it.
- Locking mechanism: for simplicity, cheated by using Spin’s atomic keyword to implement test-and-set on a shared variable.
- Assumption: real hardware would have some similar mechanism available to lock caches.
- Question: Is the revised model still equivalent to the original?
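The test-and-set lock can be sketched in Python as follows. Python has no atomic test-and-set on a plain variable, so a `threading.Lock` stands in for Spin’s `atomic` block (the same “cheat” in a different setting). The class name `CacheLock` and the two worker threads standing in for MainCache and Snooper are hypothetical.

```python
import threading
import time

class CacheLock:
    """Spin lock on a shared flag, acquired via test-and-set."""

    def __init__(self):
        self._atomic = threading.Lock()  # plays the role of Spin's atomic { }
        self._locked = False             # the shared lock variable

    def _test_and_set(self) -> bool:
        # Read the old value and set the flag in one indivisible step.
        with self._atomic:
            was_locked = self._locked
            self._locked = True
            return was_locked

    def acquire(self):
        while self._test_and_set():  # spin until the old value was "unlocked"
            time.sleep(0)            # yield so the holder can run

    def release(self):
        with self._atomic:
            self._locked = False

# Two threads standing in for MainCache and Snooper, both touching the cache.
cache_lock = CacheLock()
cache_state = {"count": 0}

def worker(iterations):
    for _ in range(iterations):
        cache_lock.acquire()
        cache_state["count"] += 1  # read-modify-write only while locked
        cache_lock.release()

threads = [threading.Thread(target=worker, args=(1000,)) for _ in range(2)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(cache_state["count"])  # 2000
```

Because every access to `cache_state` happens between `acquire()` and `release()`, neither thread can observe the other mid-update, which is the property the lock adds to the MainCache/Snooper split.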

Problem 3
- The memory controller allows multiple outstanding requests from caches.
- The Snooper of a cache whose MainCache has a request outstanding cannot respond to MC queries for other outstanding requests (because the cacheline is locked).
- Result: deadlock.

Solution 3
- Disallow multiple outstanding Cache/MC transactions.
- Introduce a global bool variable shared across all caches: outstandingBusOp.
- A cache may only issue requests to the memory controller if no requests from other caches are outstanding.
- Caveat: global knowledge across all caches is unrealistic.
- Question: Is this equivalent to “retries” from the MC?
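The outstandingBusOp rule amounts to a single global token shared by all caches. A minimal step-based sketch, assuming the check and the set happen in one indivisible step (as an `atomic` block would give in Spin); the class name `BusOpGate` and its `owner` field are hypothetical additions for illustration.

```python
class BusOpGate:
    """Global outstandingBusOp flag shared by every cache."""

    def __init__(self):
        self.outstanding_bus_op = False
        self.owner = None  # which cache holds the single outstanding request

    def try_issue(self, cache_id) -> bool:
        """Return True if `cache_id` may send a request to the MC now."""
        if self.outstanding_bus_op:
            return False   # another cache's transaction is still in flight
        self.outstanding_bus_op = True
        self.owner = cache_id
        return True

    def complete(self, cache_id):
        """Called when the MC has finished servicing the owner's request."""
        if self.owner != cache_id:
            raise ValueError("only the issuing cache can complete its request")
        self.outstanding_bus_op = False
        self.owner = None
```

With `gate = BusOpGate()`, `gate.try_issue(0)` succeeds and `gate.try_issue(1)` keeps failing until `gate.complete(0)` is called, so at most one Cache/MC transaction is ever in flight.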

Problem 4
- The previous problems caused Spin simulations to fail within 1000 steps.
- With the last solution, random simulations show no failures in the first 3000 steps.
- Verification fails after ~20000 steps.
- The cause of the problem is as yet unresolved.

Verification
- How can coherence be verified in general?
- Verify something stronger: a processor will never see a conflicting ordering of data if it always sees the newest data available in the system.
- For all loads, assert that the data is “new”.

Modeling of Data
- Concern: modeling data as a random integer could cause Spin to run out of memory.
- Model data as a bit with values OLD and NEW.
- All processor Stores store NEW data.
- When transitioning to the Modified state, a cache changes all other copies of the data, in memory and in other caches, to OLD.
  - This global access to data is strictly part of the verification effort, not of the algorithm, and is therefore allowed.
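The OLD/NEW bookkeeping and the per-load assertion can be sketched as follows, assuming a single cacheline A whose copies all start out NEW; the names `enter_modified` and `check_load` are hypothetical, and cache states are left out so that only the data bit is shown.

```python
OLD, NEW = 0, 1  # the single data bit used instead of arbitrary integers

def enter_modified(writer, cache_data, memory):
    """Verification-only bookkeeping when `writer` moves to Modified and its
    processor stores: every other copy of line A becomes OLD."""
    for cache in cache_data:
        if cache != writer:
            cache_data[cache] = OLD
    memory["A"] = OLD
    cache_data[writer] = NEW  # all processor Stores store NEW data

def check_load(value):
    """The per-load assertion: a load must only ever return NEW data."""
    if value != NEW:
        raise AssertionError("processor loaded OLD data: coherence violated")
    return value
```

After `enter_modified(0, cache_data, memory)`, a load served by cache 0 passes `check_load`, while a protocol bug that let cache 1 still supply its (now OLD) copy would trip the assertion, which is the stronger “always newest data” property from the previous slide.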

Debugging
- Debugging parallel processes proved difficult.
- Made much easier by Spin’s message sequence diagrams
  - Graphically show the sends and receives of all messages.
  - Require the use of Spin queues rather than globals for interprocess communication.

Future work
- Make the existing protocol completely bug-free
- Activate additional “features” disabled for debugging purposes (e.g., bus transaction types)
- Verify protocol-specific rules
  - No two caches may be simultaneously Modified
  - Cache Modified or Exclusive --> no other cache is Shared
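Both protocol-specific rules are simple predicates over the per-cache states of one line. A sketch of a checker, assuming the states are given as MESI letters; the function name `invariants_hold` is hypothetical, and in the Spin model the same conditions would presumably become assertions or temporal properties.

```python
def invariants_hold(states):
    """Check the two protocol-specific rules for one cacheline.

    states: list of MESI letters ("M", "E", "S", "I"), one per cache.
    """
    # Rule 1: no two caches may be Modified at the same time.
    if states.count("M") > 1:
        return False
    # Rule 2: if a cache is Modified or Exclusive, no other cache is Shared.
    for i, state in enumerate(states):
        if state in ("M", "E"):
            others = states[:i] + states[i + 1:]
            if "S" in others:
                return False
    return True
```

For example, `["S", "S", "I"]` satisfies both rules, while `["M", "M", "I"]` breaks the first and `["E", "S", "I"]` breaks the second.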