
- Slides: 50

Impact of parallelism on HEP software

April 29th 2013, Ecole Polytechnique/LLR, Rene Brun

Software Upgrades

- All LHC experiments and groups like CERN/SFT are looking at all possible performance improvements or rethinking their software stack for the post-LS2 years. This effort is driven by the new hardware and also by the analysis of the hot spots.
- Work is going on in ROOT to support thread safety, parallel buffer merges and parallel Tree I/O.
- In the GEANT world, several projects (e.g. G4MT) investigate multi-core, GPU or similar solutions. In this talk I will review the progress with one of these projects.

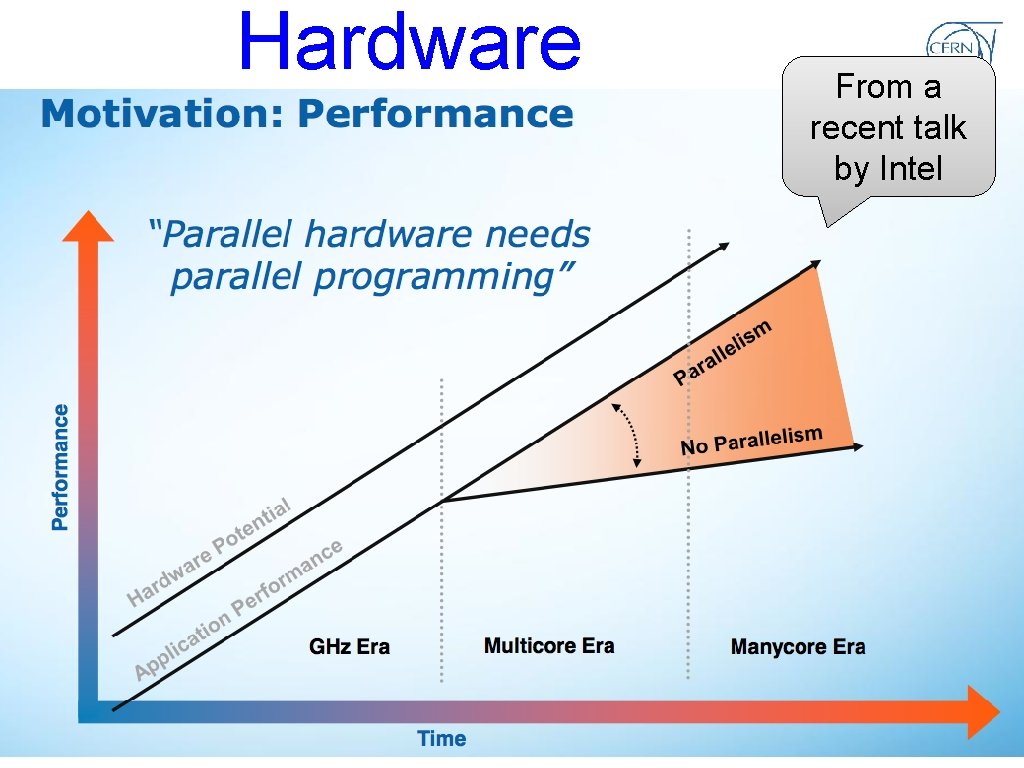

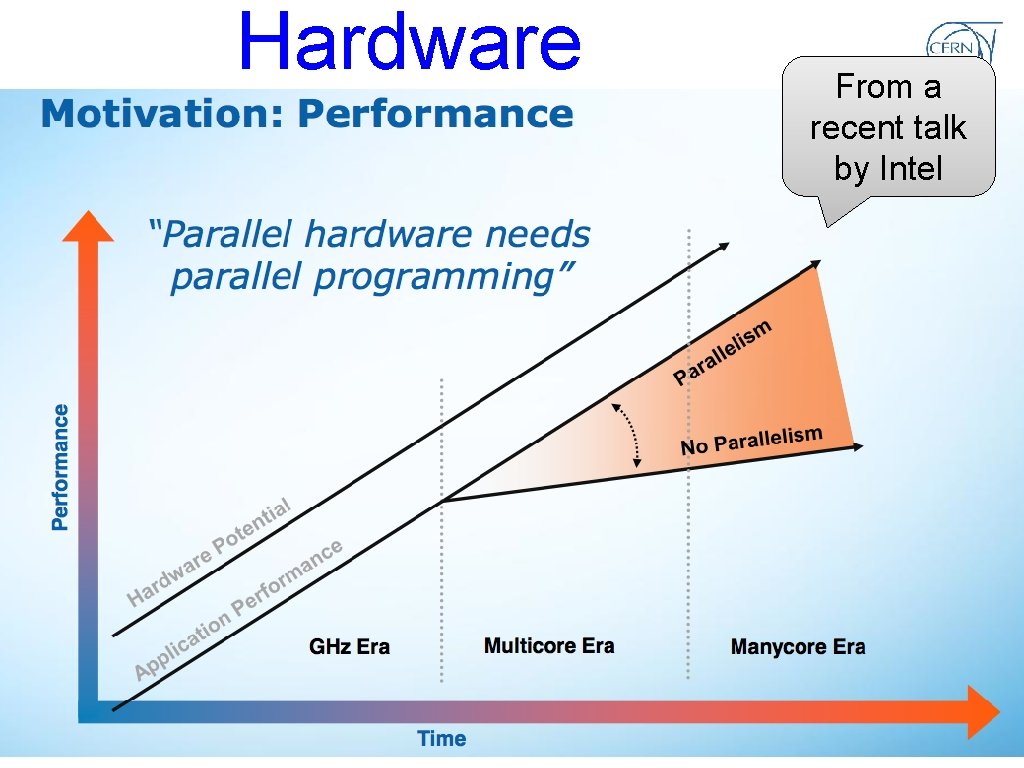

Hardware

From a recent talk by Intel

R. Brun: Parallelism and HEP software

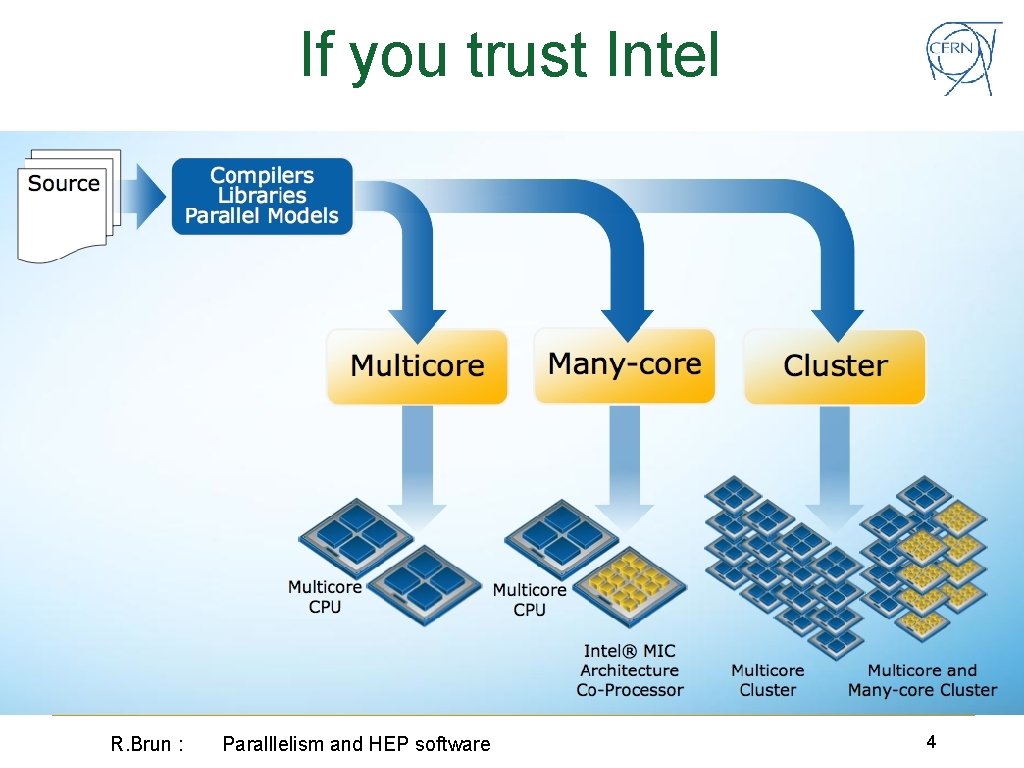

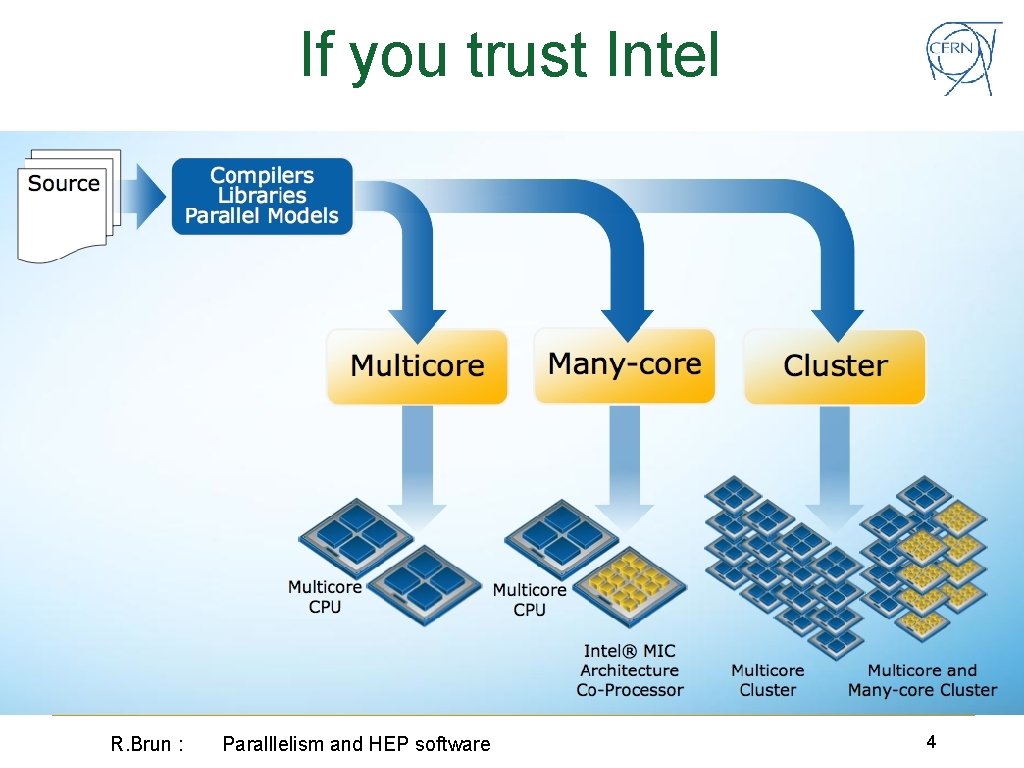

If you trust Intel

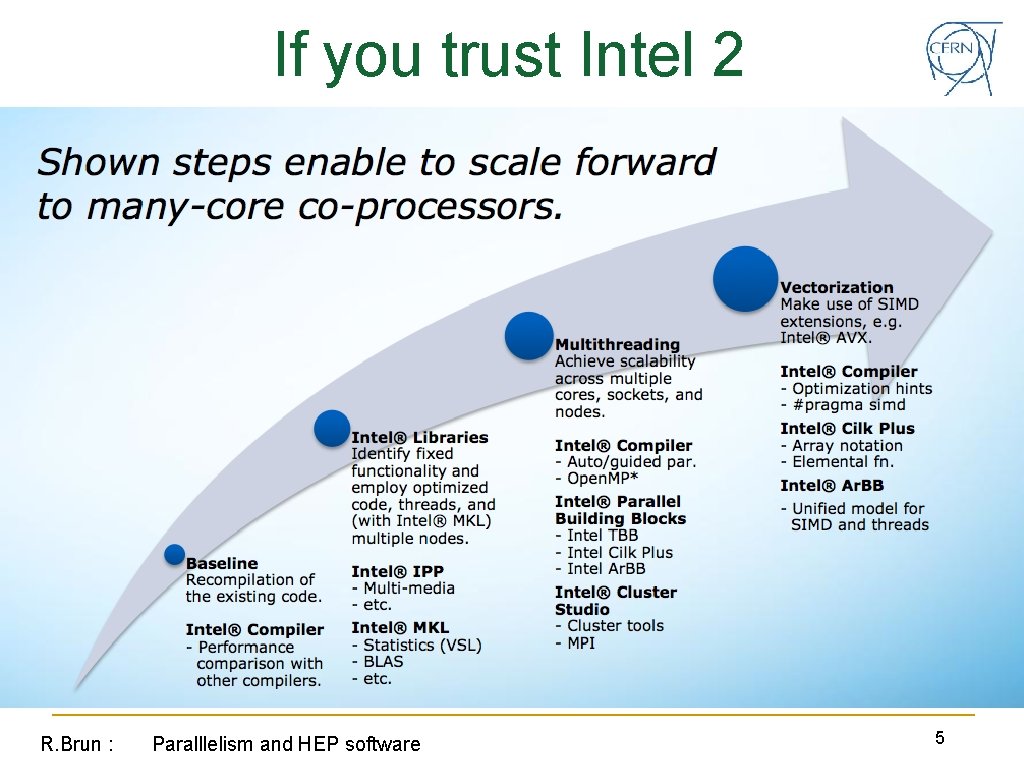

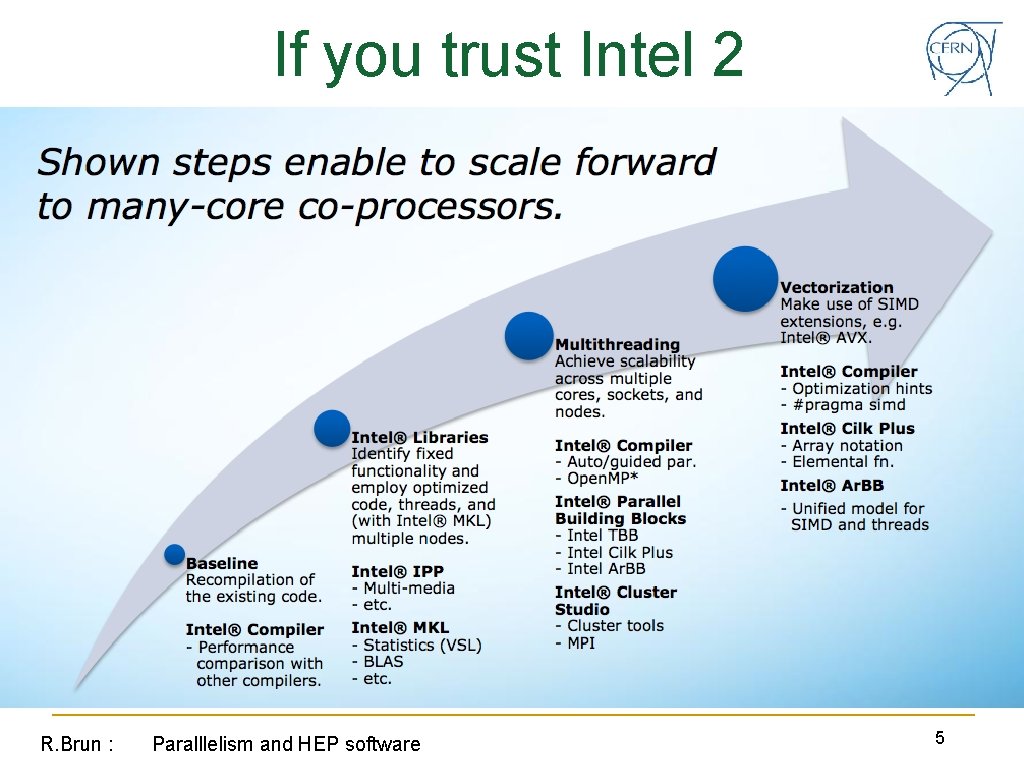

If you trust Intel (2)

Vendors race: parallelism

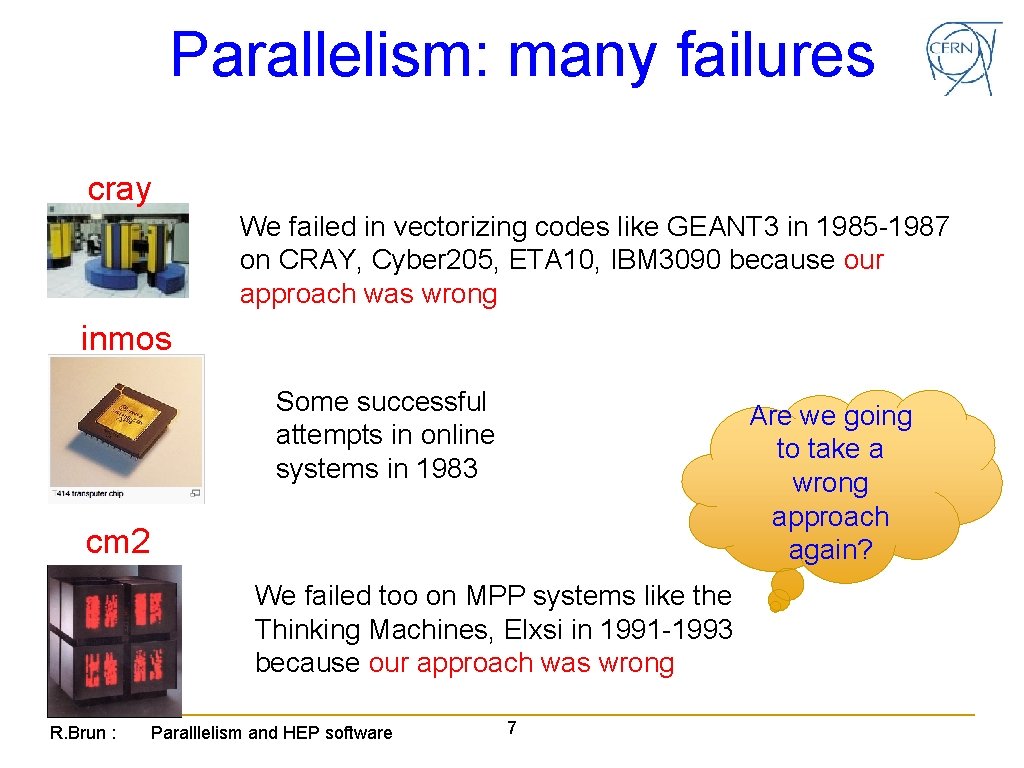

Parallelism: many failures

- Cray: we failed in vectorizing codes like GEANT3 in 1985-1987 on CRAY, Cyber 205, ETA 10 and IBM 3090 because our approach was wrong.
- Inmos: some successful attempts in online systems in 1983.
- CM-2: we failed too on MPP systems like the Thinking Machines and Elxsi in 1991-1993 because our approach was wrong.
- Are we going to take a wrong approach again?

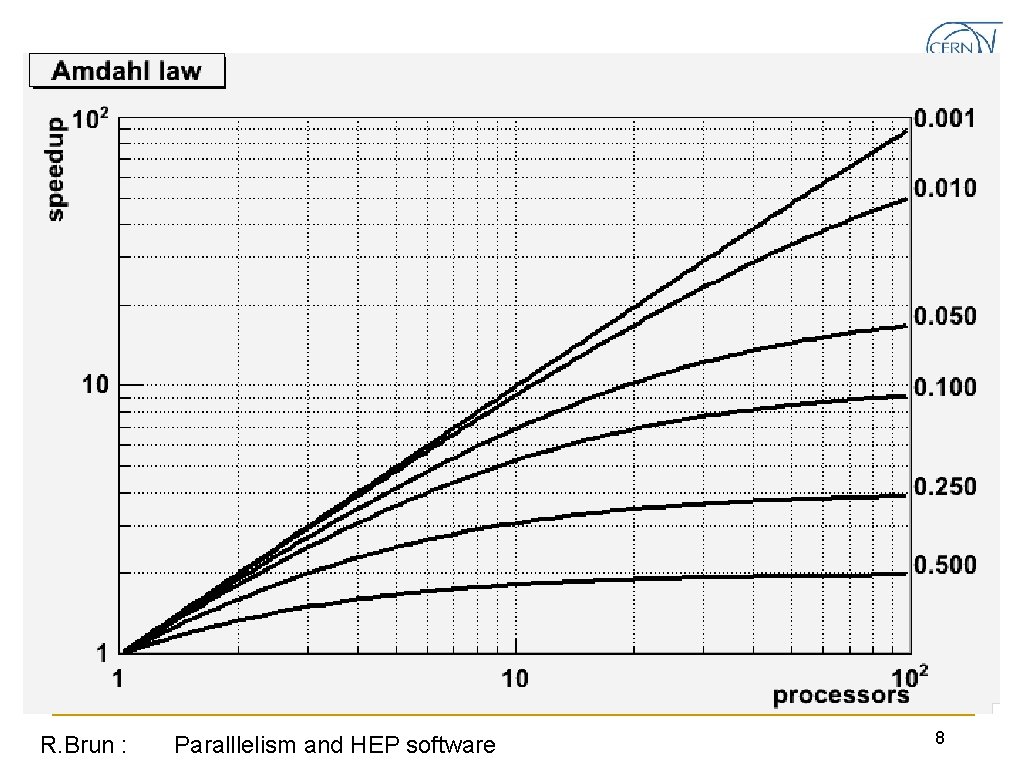


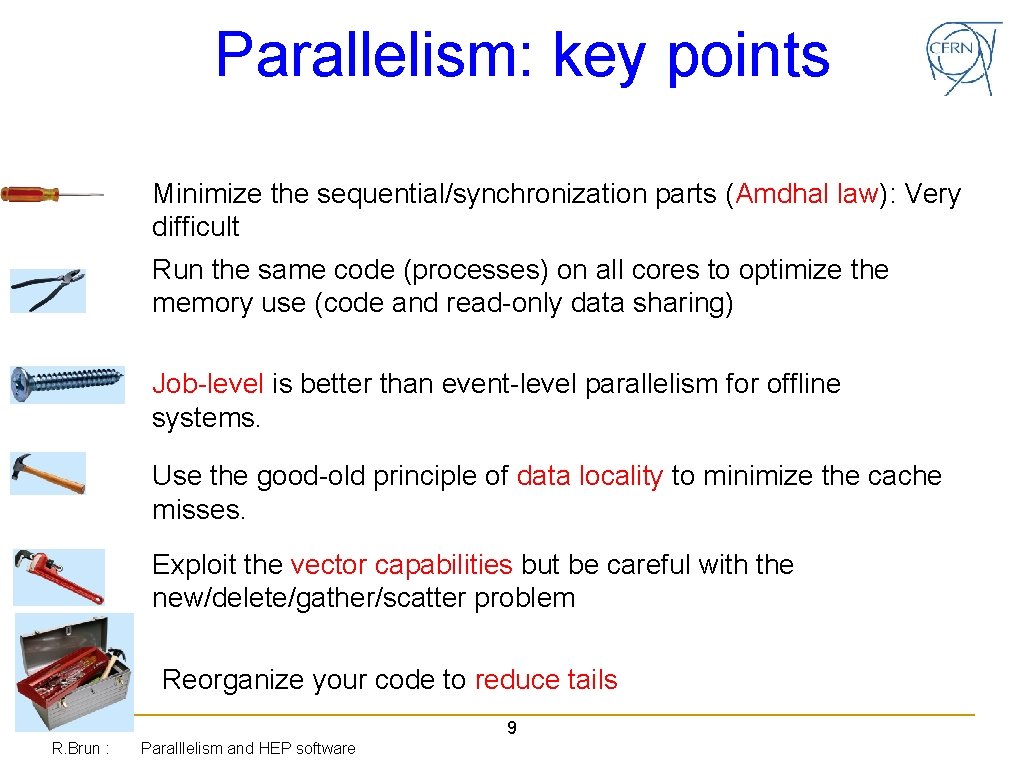

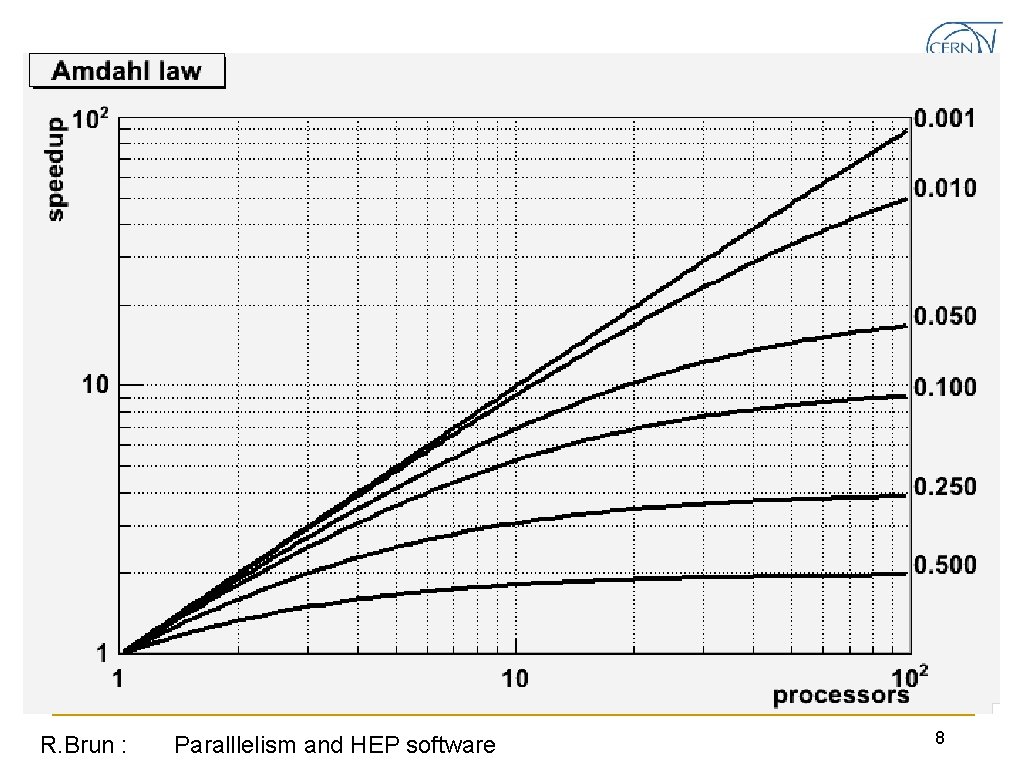

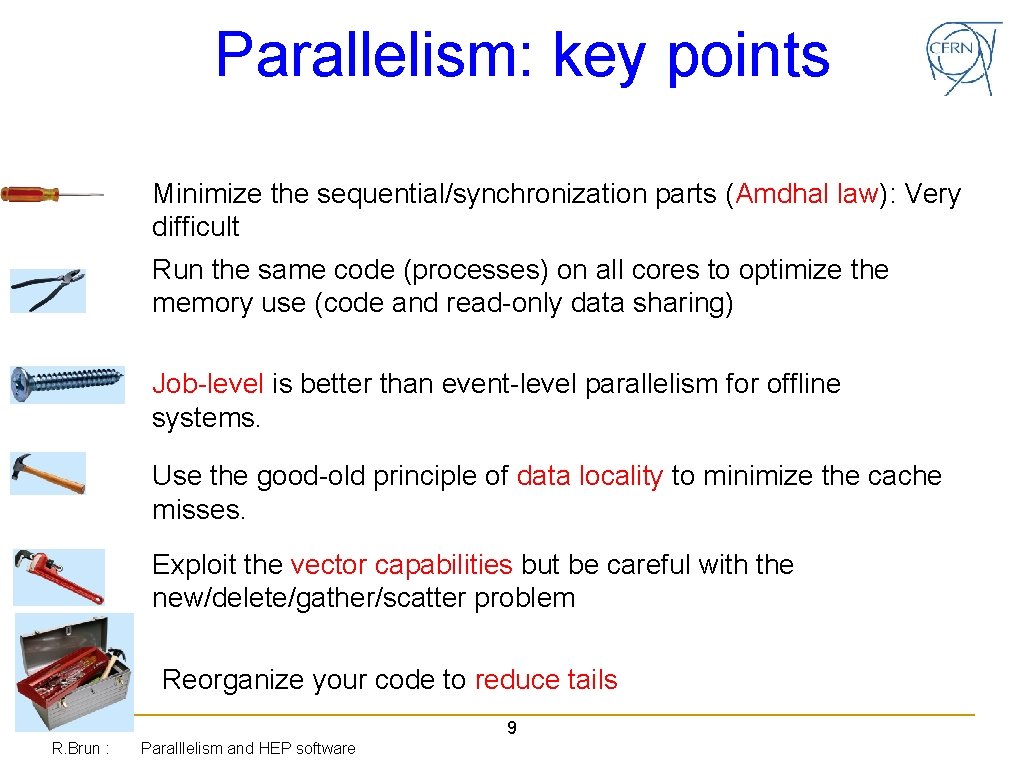

Parallelism: key points

- Minimize the sequential/synchronization parts (Amdahl's law): very difficult.
- Run the same code (processes) on all cores to optimize the memory use (code and read-only data sharing).
- Job-level is better than event-level parallelism for offline systems.
- Use the good old principle of data locality to minimize the cache misses.
- Exploit the vector capabilities, but be careful with the new/delete/gather/scatter problem.
- Reorganize your code to reduce tails.
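The first key point can be made quantitative. Below is a minimal sketch of Amdahl's law, assuming a fraction p of the run time can be parallelised over n cores. The function name `amdahlSpeedup` is a hypothetical helper introduced for illustration and does not come from the talk or from any HEP library.

```cpp
#include <cassert>
#include <cmath>

// Amdahl's law: speedup S(n) = 1 / ((1 - p) + p / n),
// where p is the fraction of the run time that can be parallelised
// and n is the number of cores. Illustrative helper, not from the talk.
double amdahlSpeedup(double parallelFraction, int nCores)
{
    assert(parallelFraction >= 0.0 && parallelFraction <= 1.0);
    assert(nCores >= 1);
    const double serialFraction = 1.0 - parallelFraction;
    return 1.0 / (serialFraction + parallelFraction / nCores);
}
```

With p = 0.95 the speedup stays below 1/(1 - p) = 20 however many cores are used, which is why the sequential and synchronization parts weigh so heavily.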

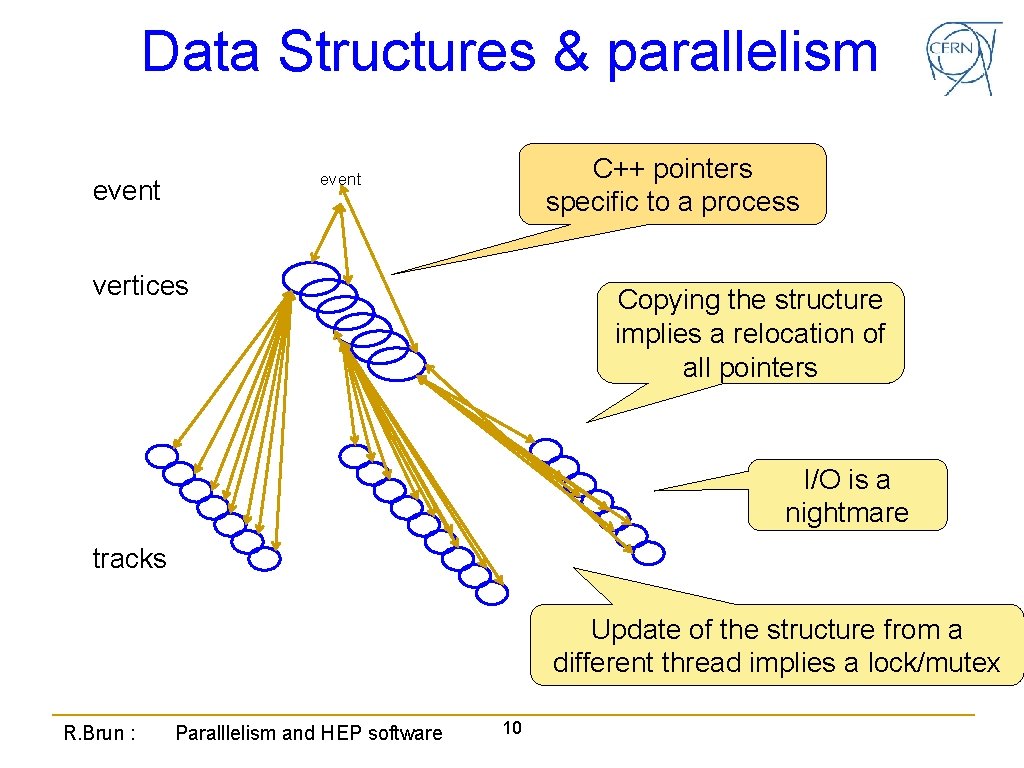

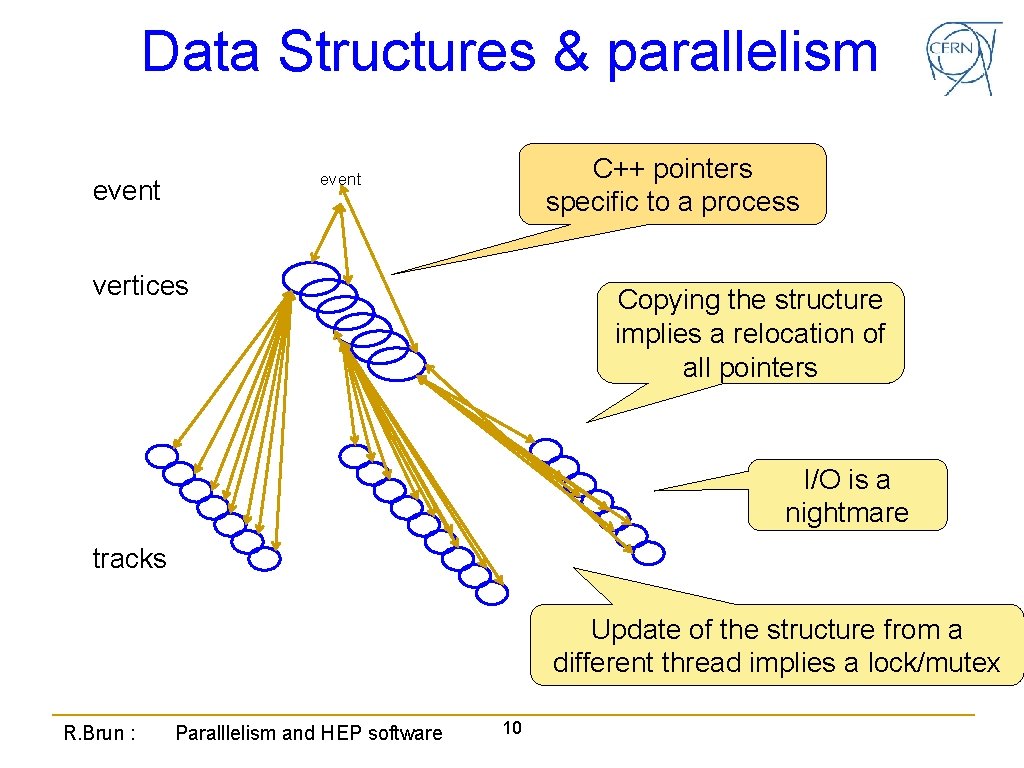

Data Structures & parallelism

(Diagram labels: event, vertices, tracks)

- C++ pointers are specific to a process.
- Copying the structure implies a relocation of all pointers.
- I/O is a nightmare.
- Updating the structure from a different thread implies a lock/mutex.

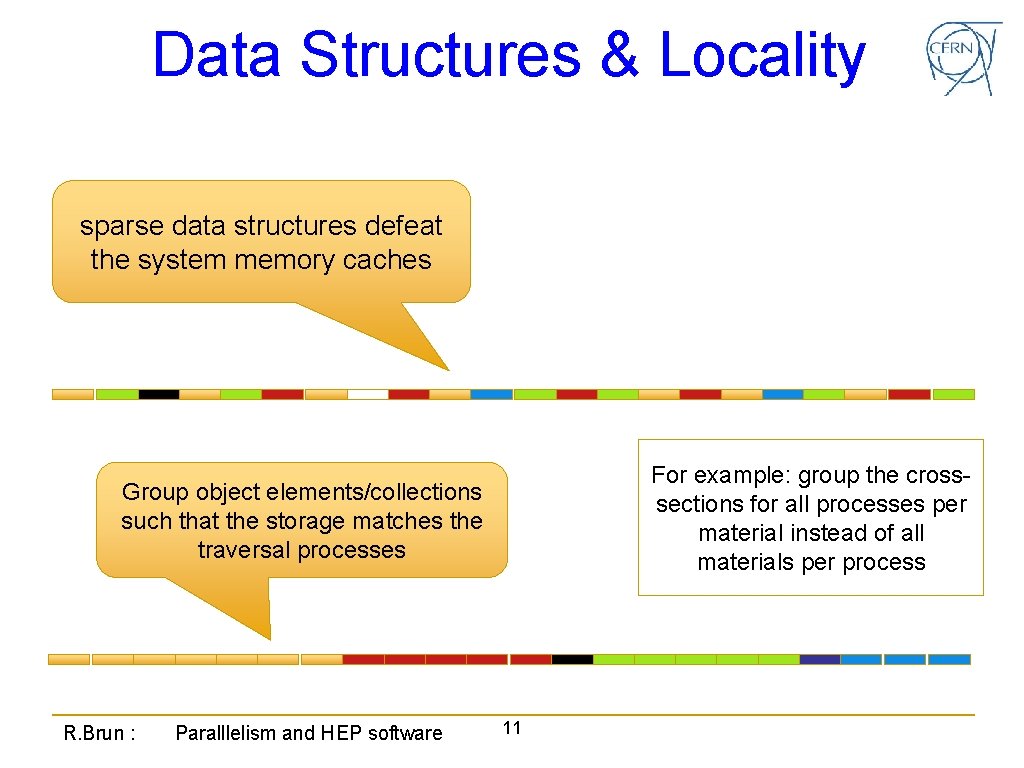

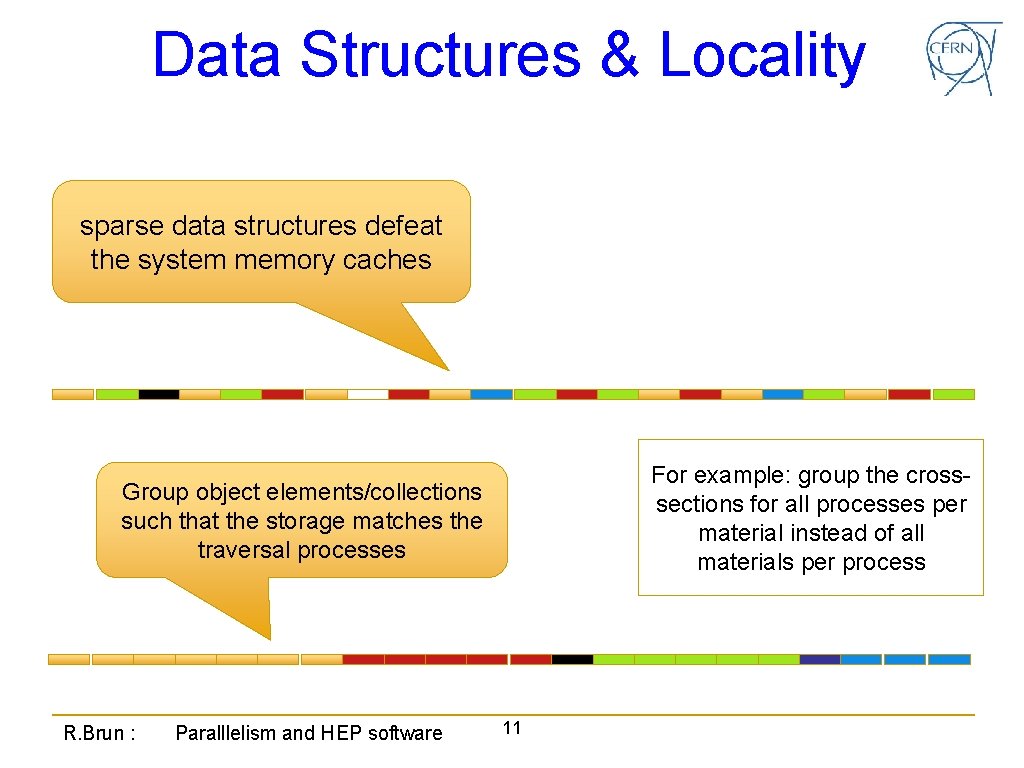

Data Structures & Locality

- Sparse data structures defeat the system memory caches.
- For example: group the cross-sections for all processes per material instead of all materials per process.
- Group object elements/collections such that the storage matches the traversal processes.
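The cross-section example can be sketched as a storage layout. The class below is a hypothetical illustration (the name `CrossSectionTable` and its interface are assumptions, not Geant4 or ROOT code): values are stored material-major, so that everything a particle needs while stepping in one material is contiguous in memory.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical cross-section table, stored material-major:
// all processes of one material sit next to each other in memory.
class CrossSectionTable {
public:
    CrossSectionTable(std::size_t nMaterials, std::size_t nProcesses)
        : fNProcesses(nProcesses), fData(nMaterials * nProcesses, 0.0) {}

    double& at(std::size_t material, std::size_t process)
    {
        return fData[material * fNProcesses + process];
    }

    // Total cross-section in one material: a single contiguous scan,
    // which is the access pattern of a particle stepping in that material.
    double total(std::size_t material) const
    {
        const double* row = fData.data() + material * fNProcesses;
        double sum = 0.0;
        for (std::size_t p = 0; p < fNProcesses; ++p) sum += row[p];
        return sum;
    }

private:
    std::size_t fNProcesses;
    std::vector<double> fData;
};
```

With the opposite layout (all materials per process), the same sum would touch one element per row, and typically one cache line per element.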

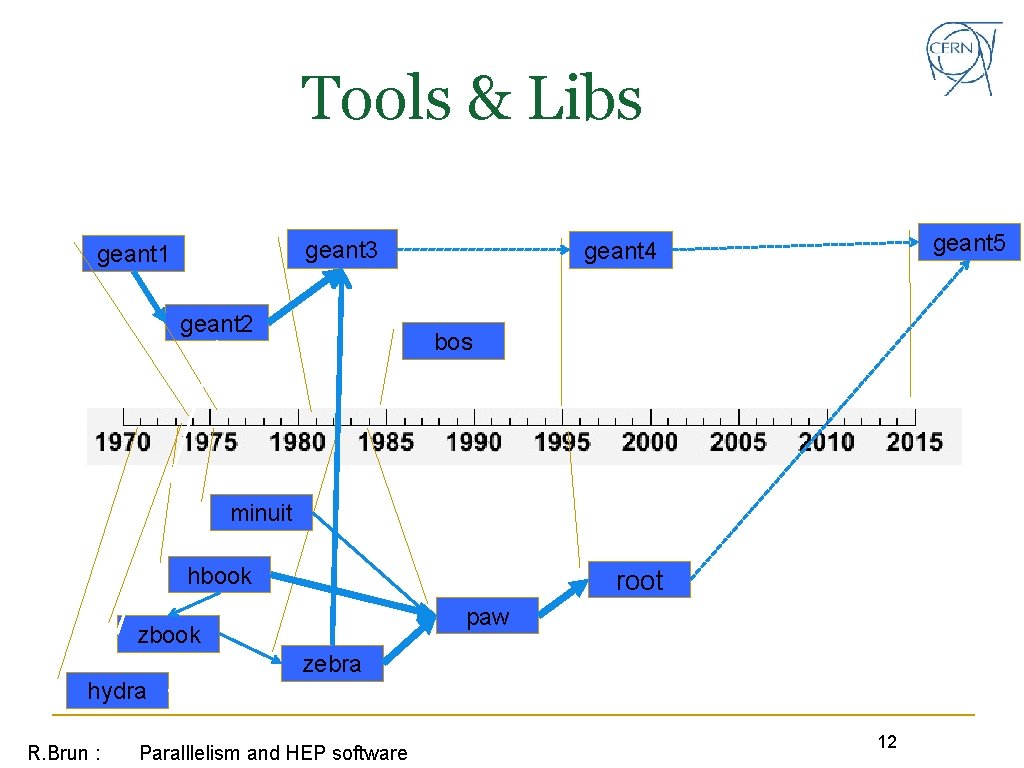

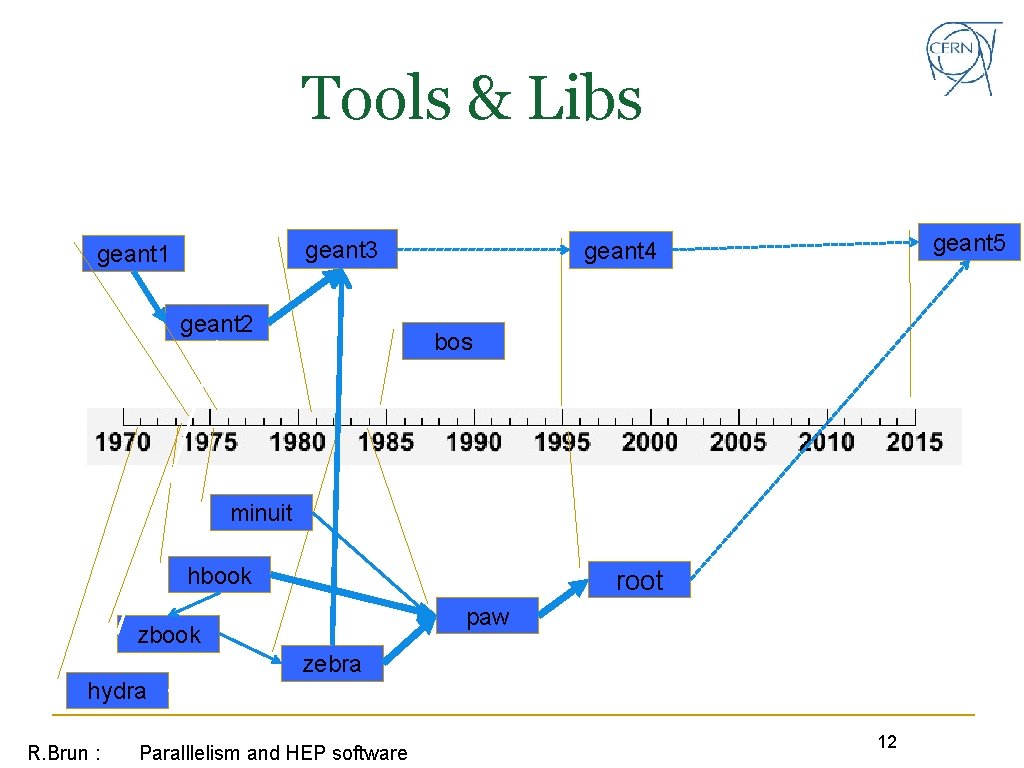

Tools & Libs

geant1, geant2, geant3, geant4, geant5, bos, minuit, hbook, root, paw, zbook, zebra, hydra

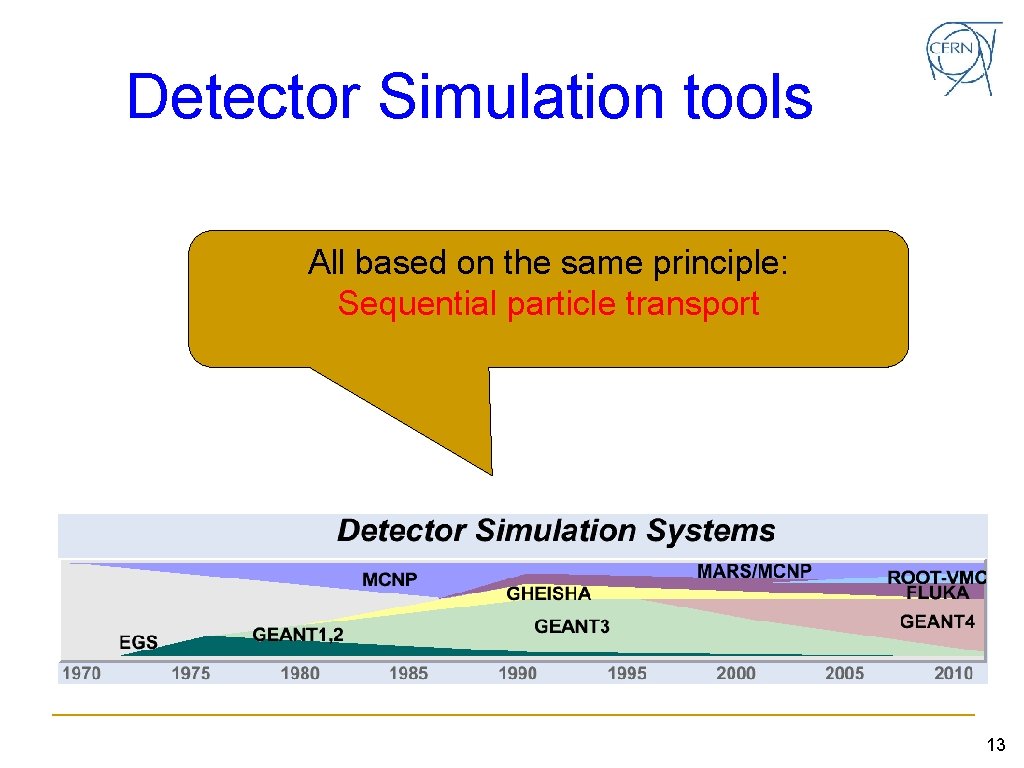

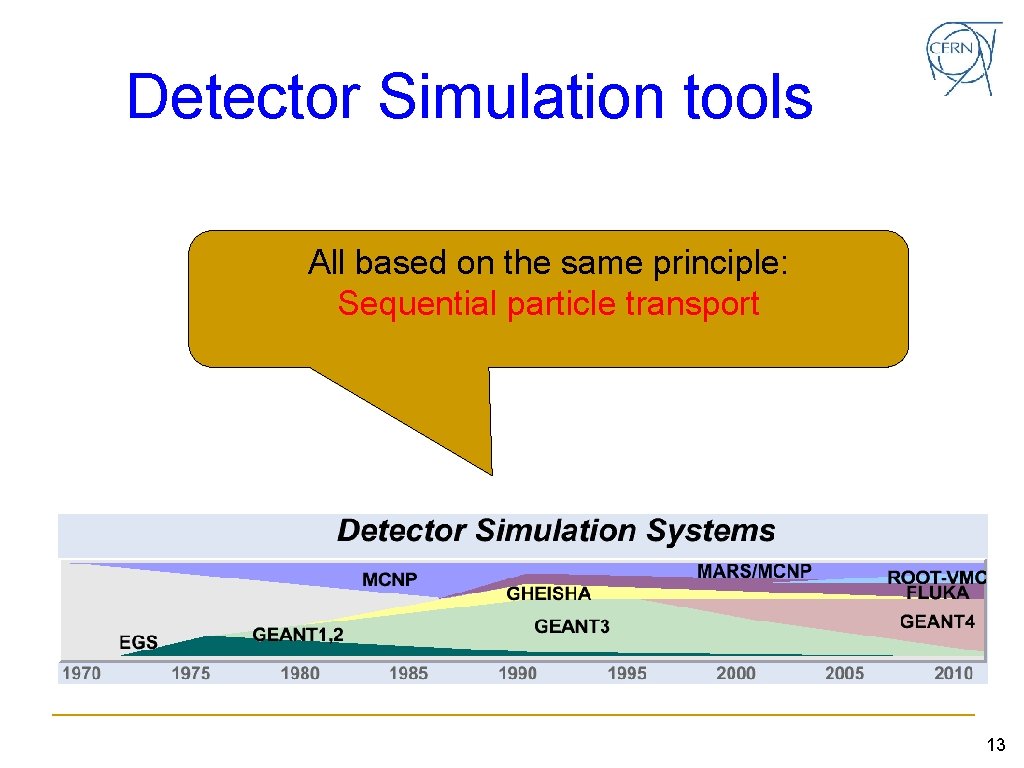

Detector Simulation tools

All based on the same principle: sequential particle transport

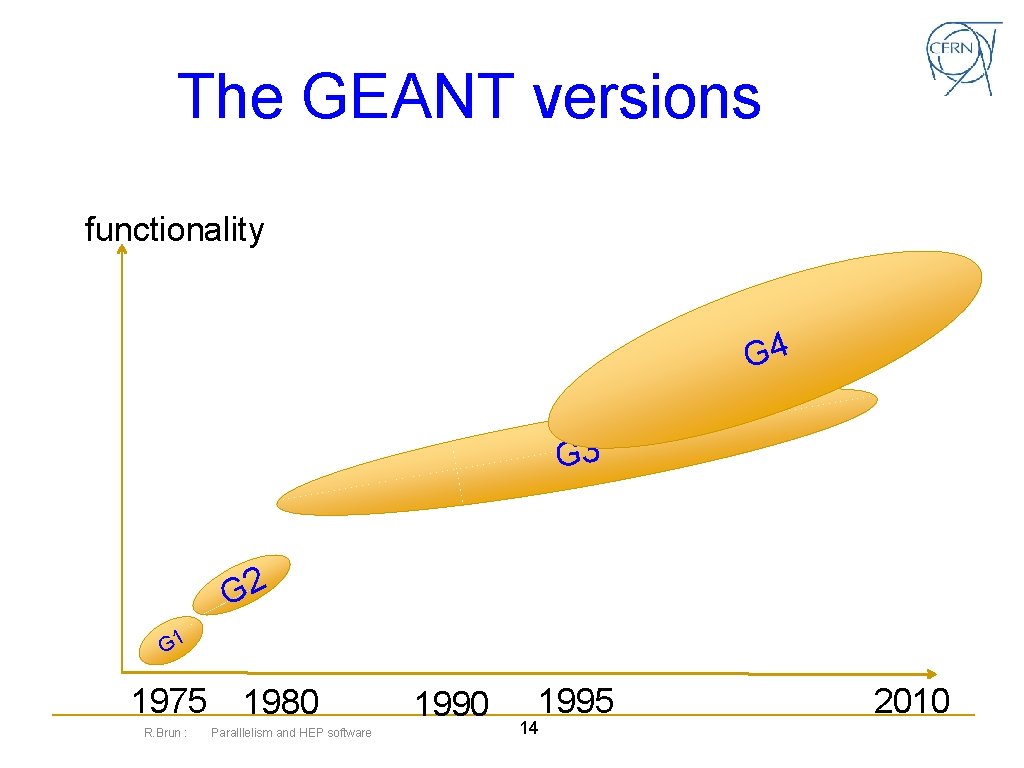

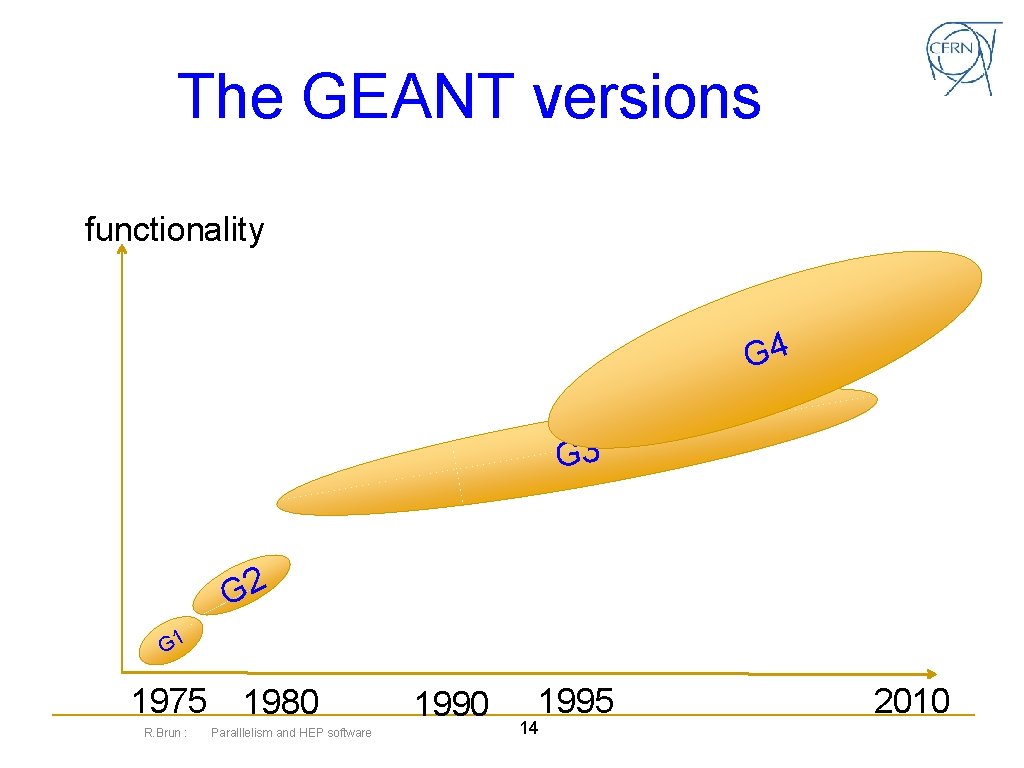

The GEANT versions

(Figure: functionality of the GEANT versions G1, G2, G3 and G4 versus time, with axis ticks at 1975, 1980, 1990, 1995 and 2010.)

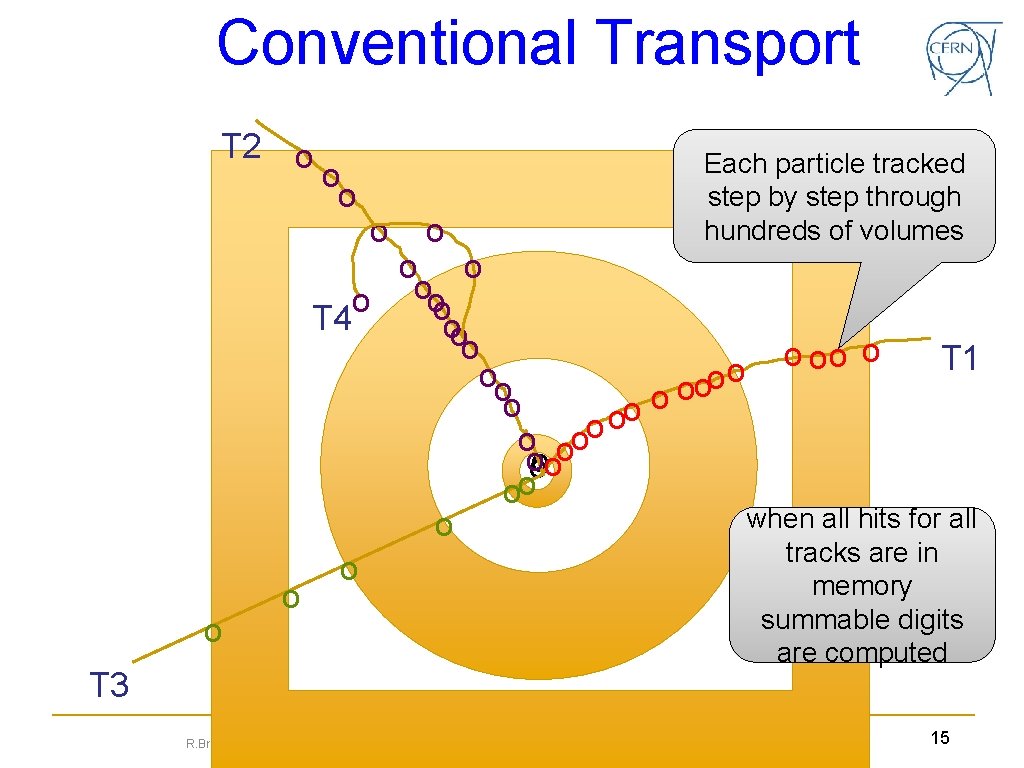

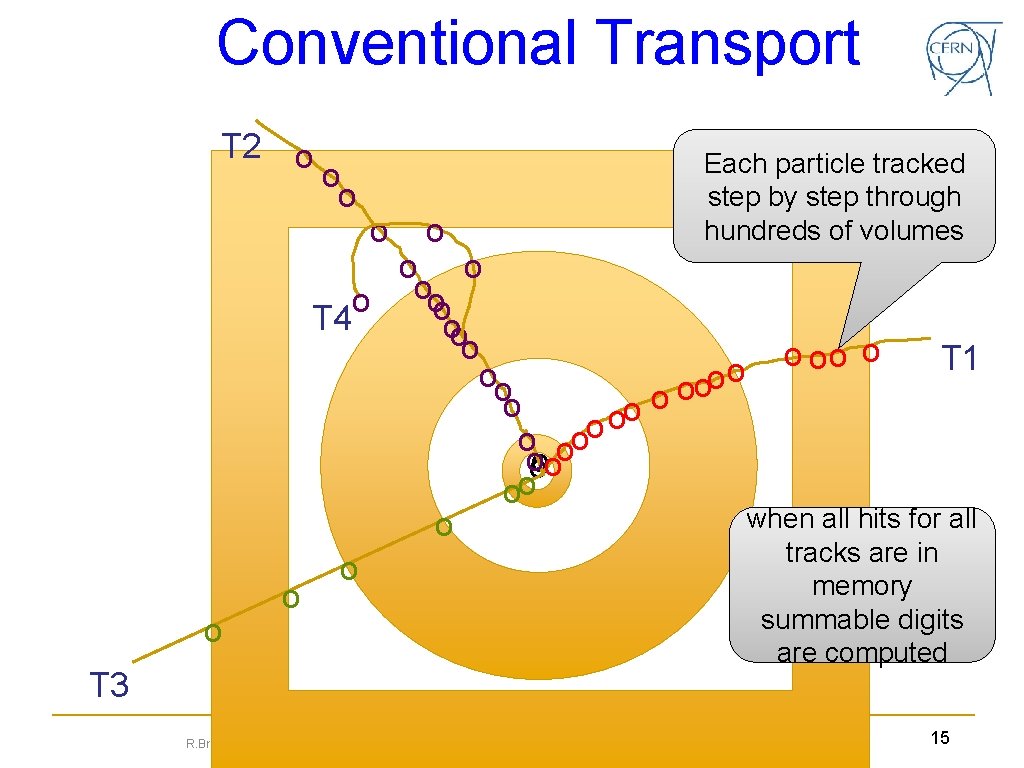

Conventional Transport

- Each particle is tracked step by step through hundreds of volumes.
- When all hits for all tracks are in memory, summable digits are computed.

(Figure labels: T1, T2, T3, T4)

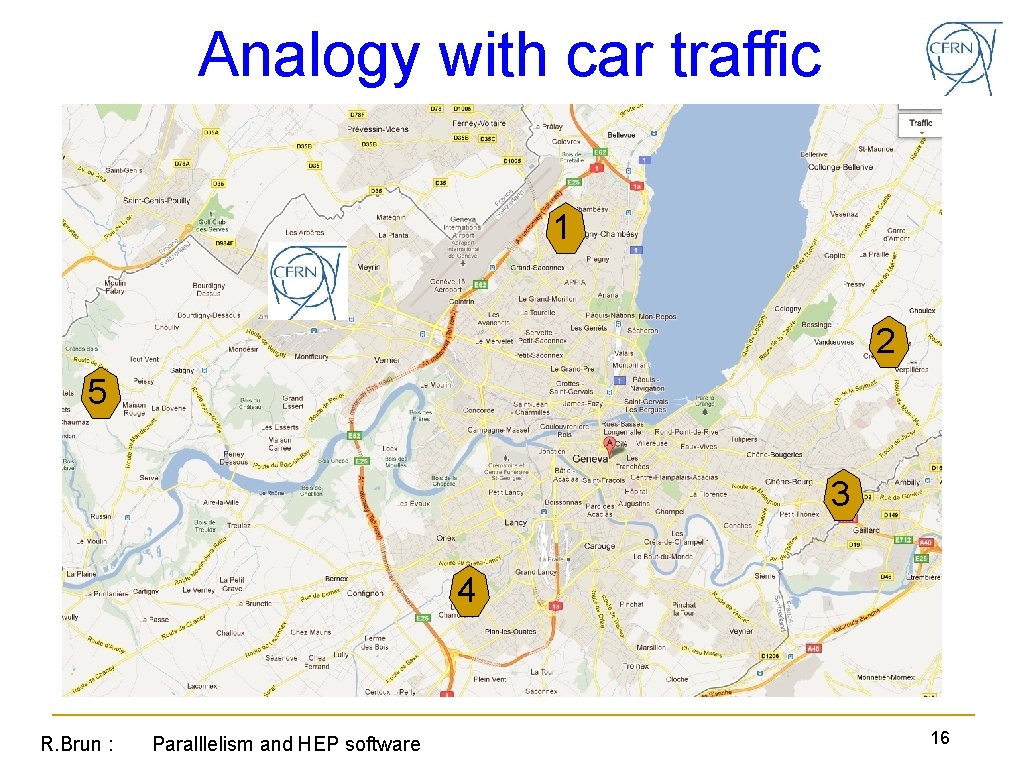

Analogy with car traffic

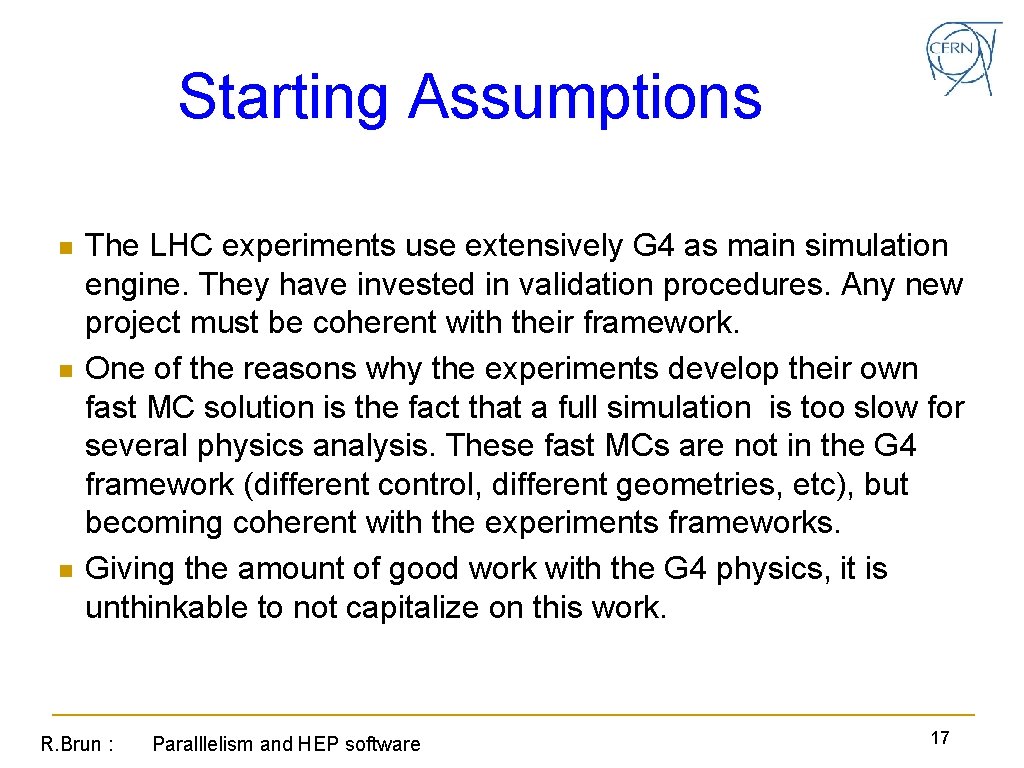

Starting Assumptions

- The LHC experiments use G4 extensively as their main simulation engine. They have invested in validation procedures. Any new project must be coherent with their framework.
- One of the reasons why the experiments develop their own fast MC solutions is that a full simulation is too slow for several physics analyses. These fast MCs are not in the G4 framework (different control, different geometries, etc.), but they are becoming coherent with the experiments' frameworks.
- Given the amount of good work on the G4 physics, it is unthinkable not to capitalize on this work.

Goals

- Design a new detector simulation tool derived from the Geant4 physics, but with a radically new transport engine:
  - Supporting full and fast simulation (not exclusive)
  - Designed to exploit parallel hardware (this talk)

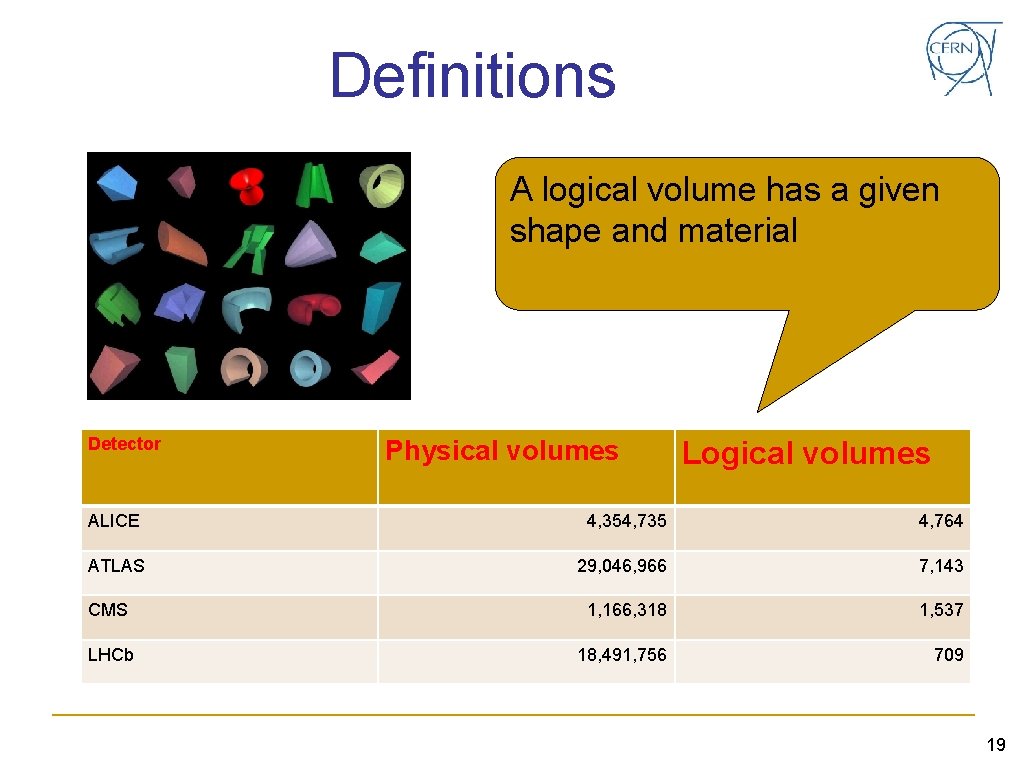

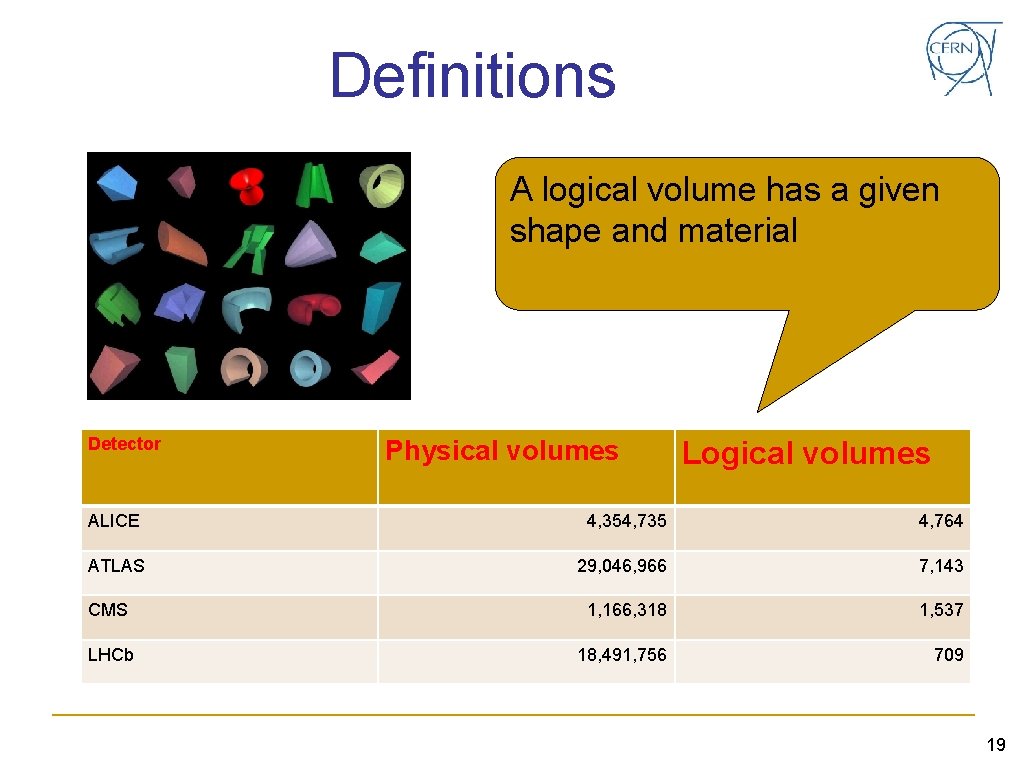

Definitions

A logical volume has a given shape and material.

| Detector | Physical volumes | Logical volumes |
|----------|------------------|-----------------|
| ALICE    | 4,354,735        | 4,764           |
| ATLAS    | 29,046,966       | 7,143           |
| CMS      | 1,166,318        | 1,537           |
| LHCb     | 18,491,756       | 709             |

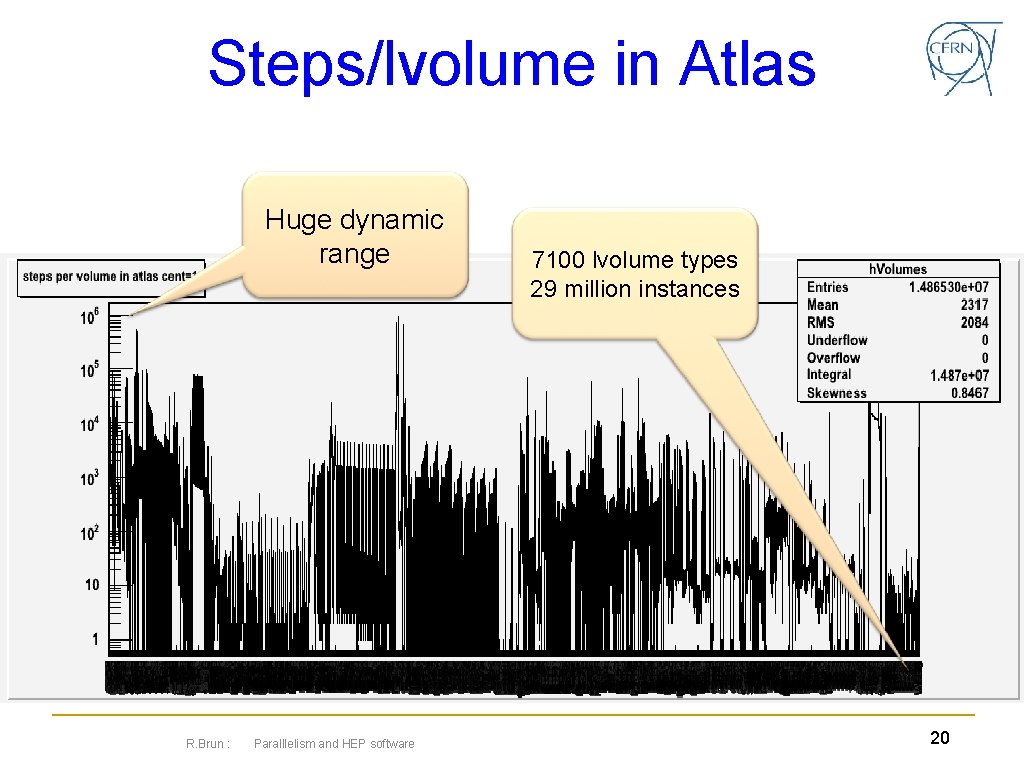

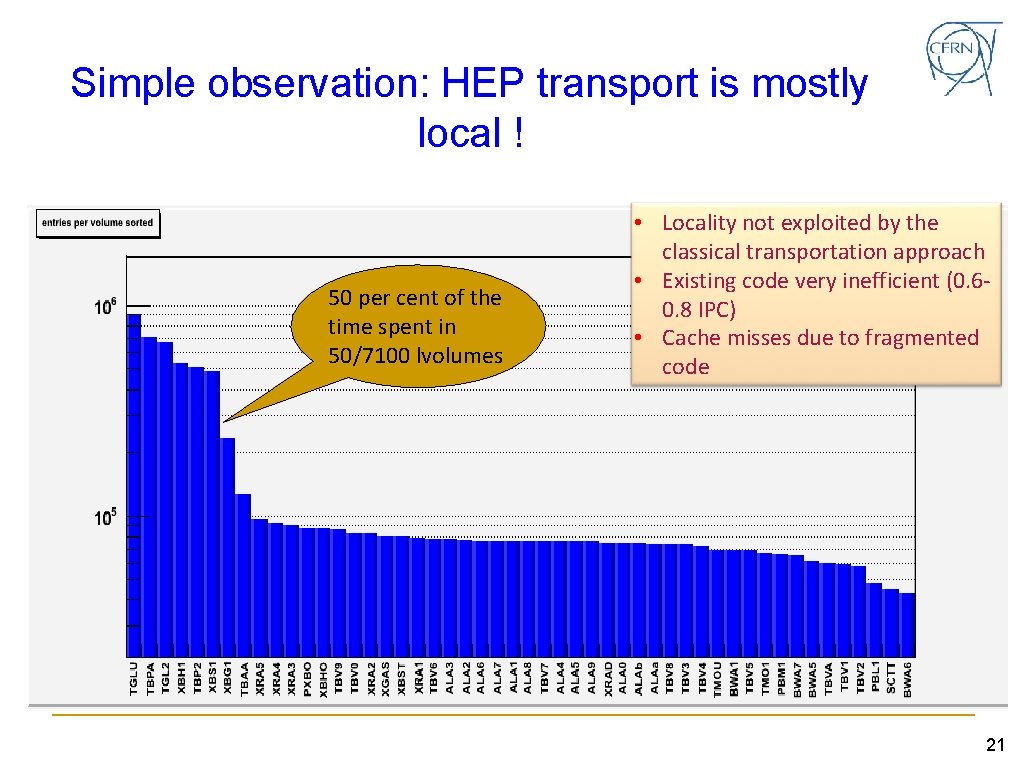

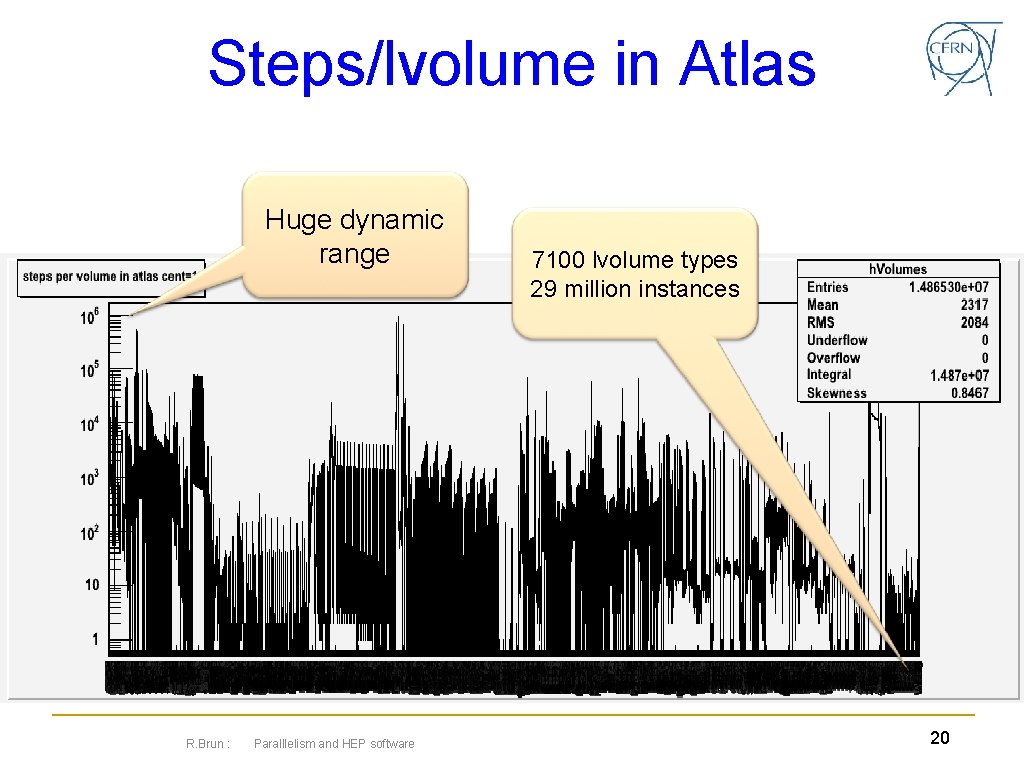

Steps/lvolume in ATLAS

- Huge dynamic range
- 7100 lvolume types
- 29 million instances

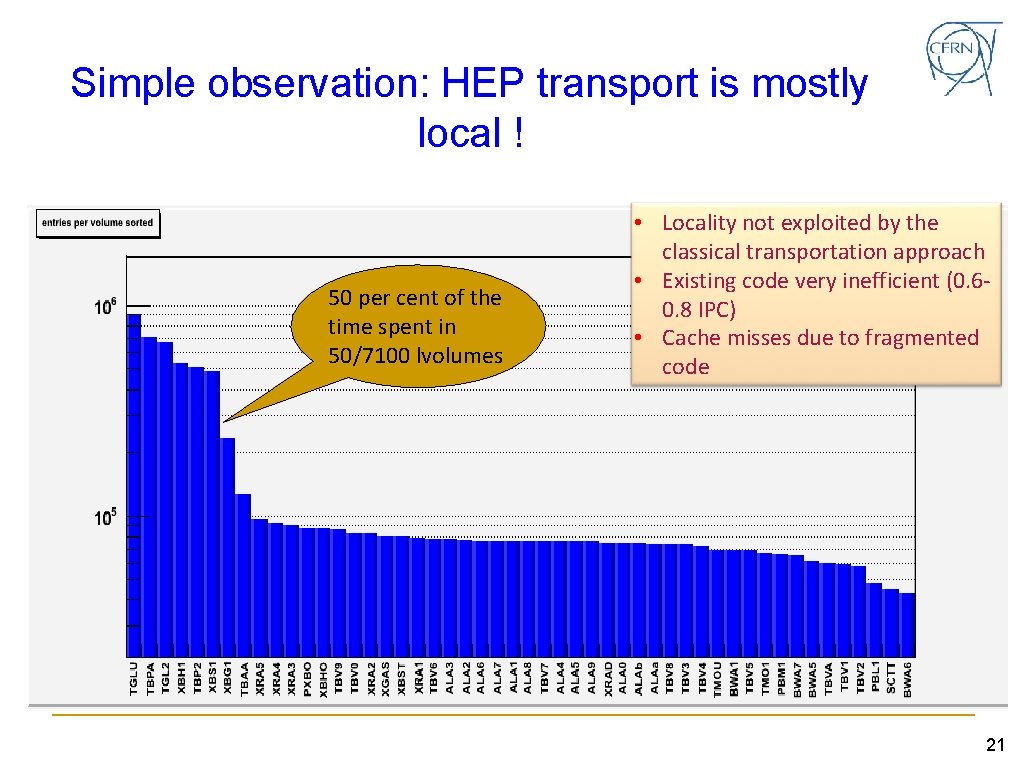

Simple observation: HEP transport is mostly local!

- 50 per cent of the time is spent in 50/7100 lvolumes
- Locality is not exploited by the classical transportation approach
- Existing code is very inefficient (0.6-0.8 IPC)
- Cache misses due to fragmented code

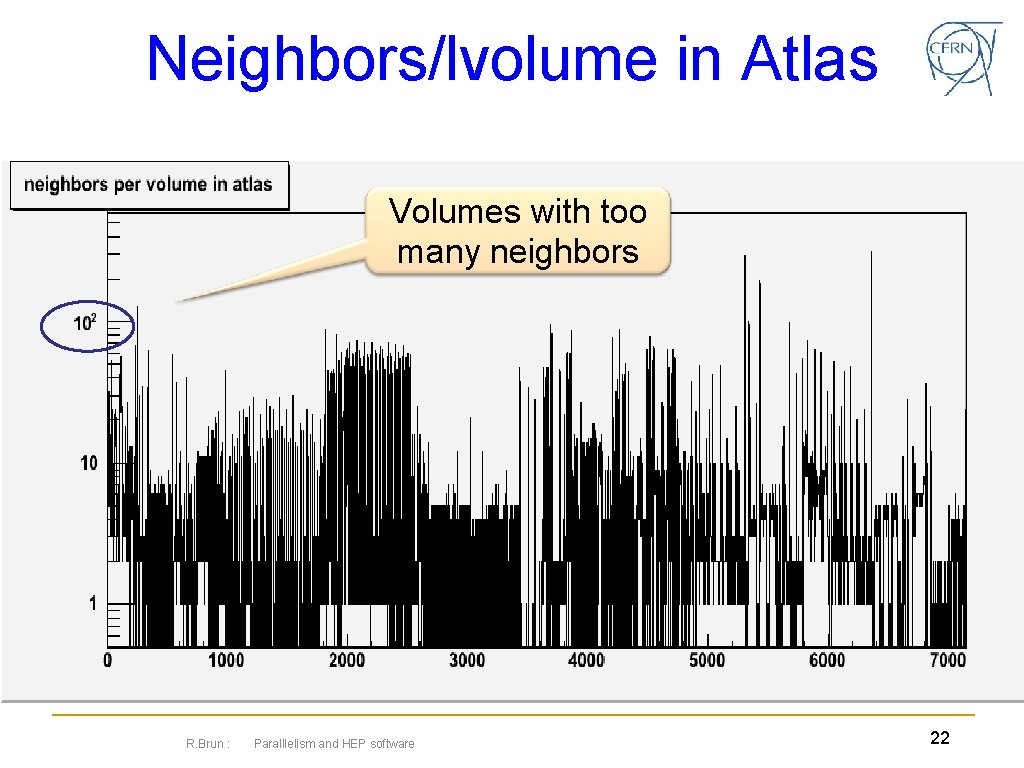

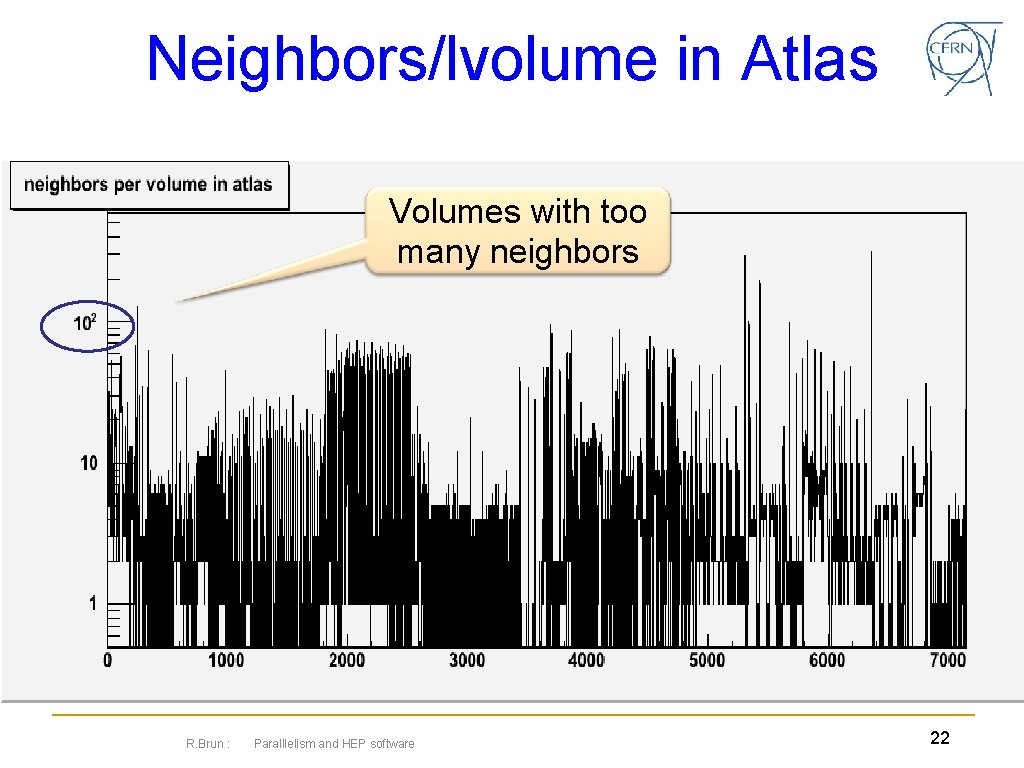

Neighbors/lvolume in ATLAS

Volumes with too many neighbors

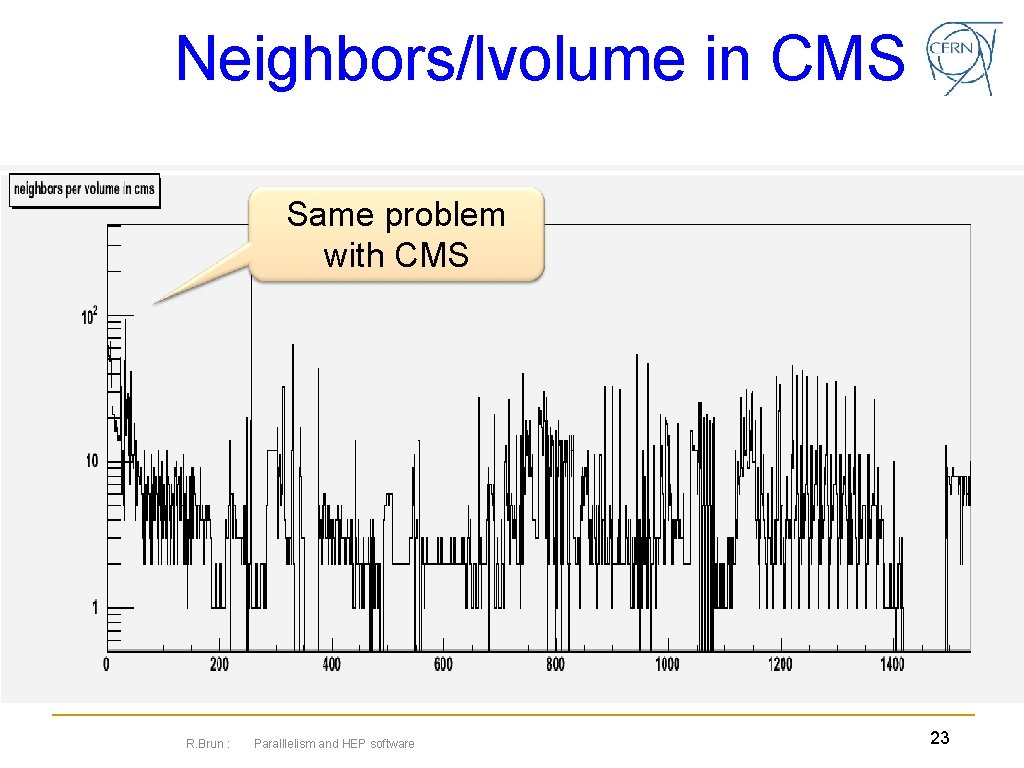

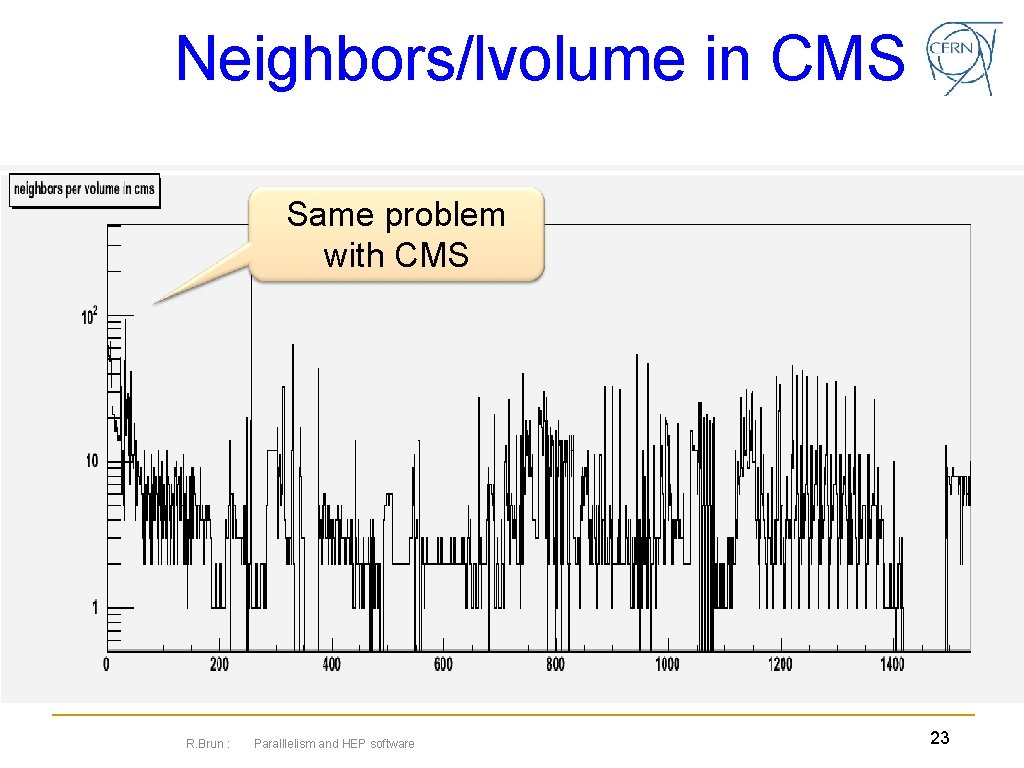

Neighbors/lvolume in CMS

Same problem with CMS

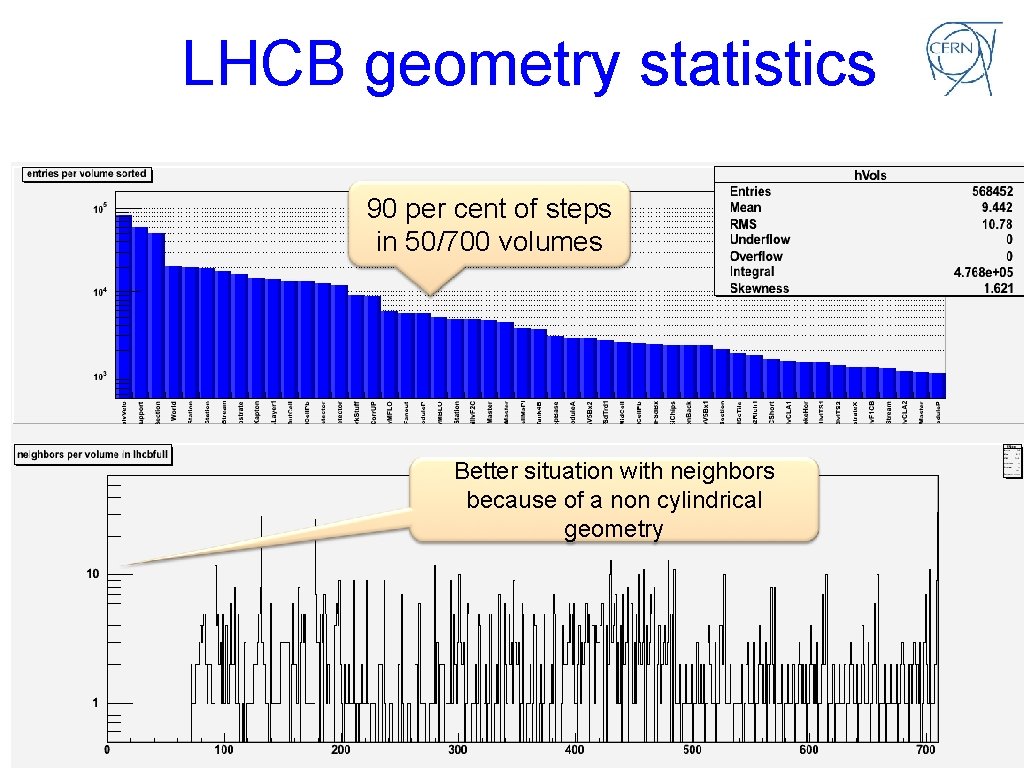

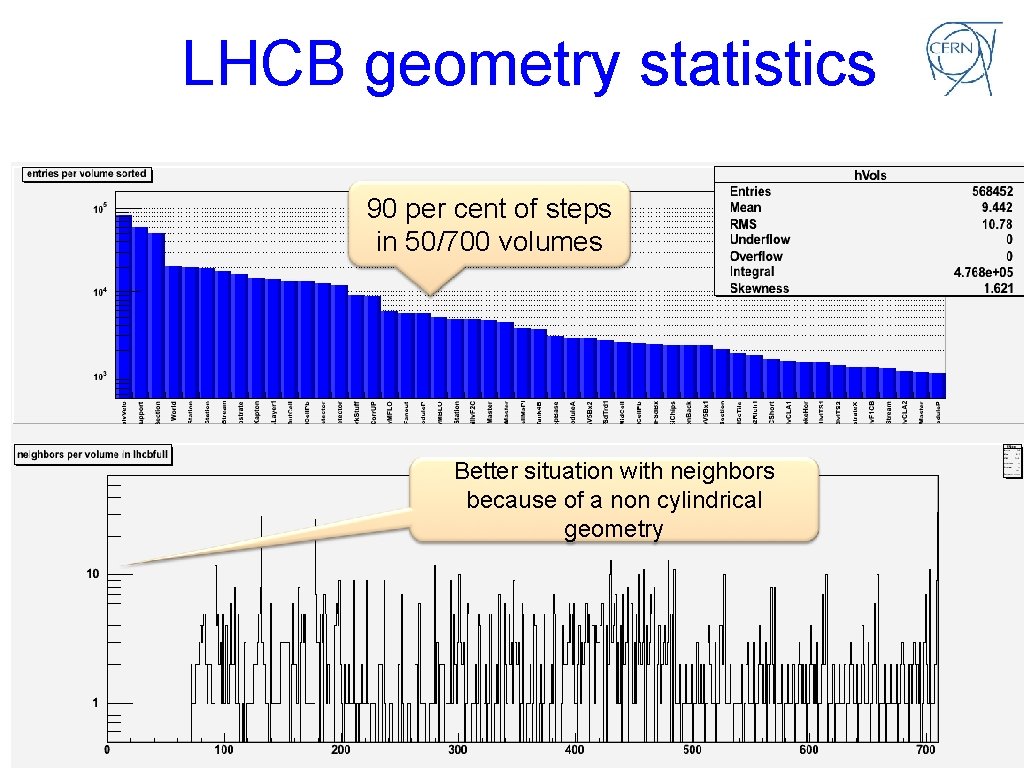

LHCb geometry statistics

- 90 per cent of steps in 50/700 volumes
- Better situation with neighbors because of a non-cylindrical geometry

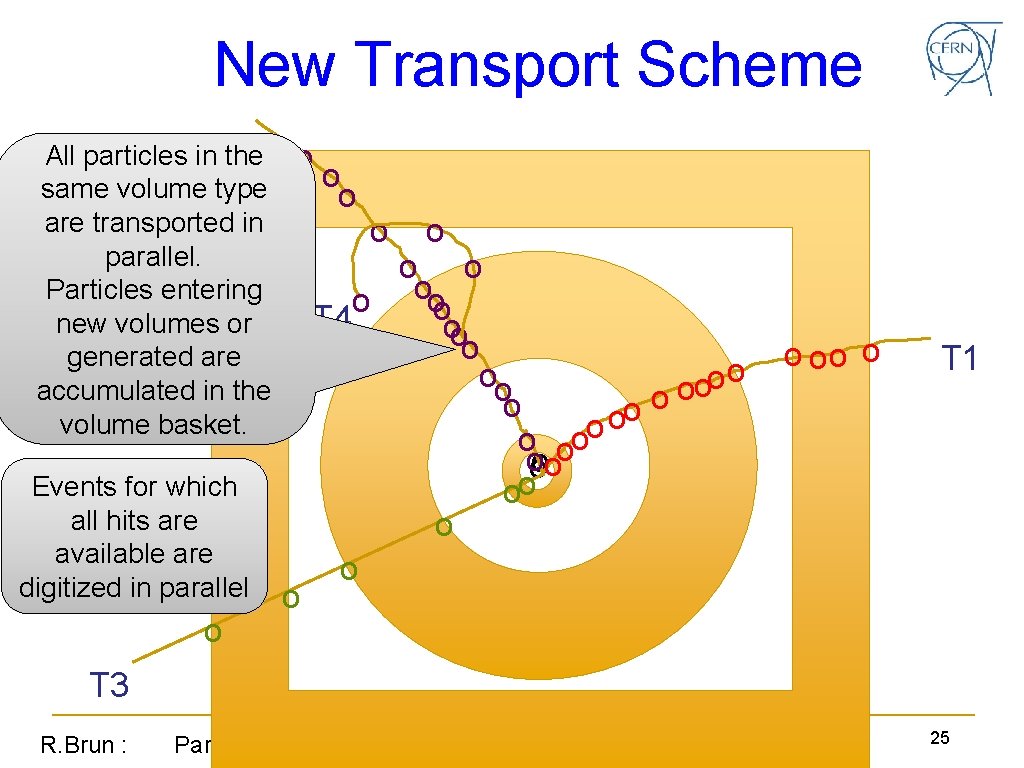

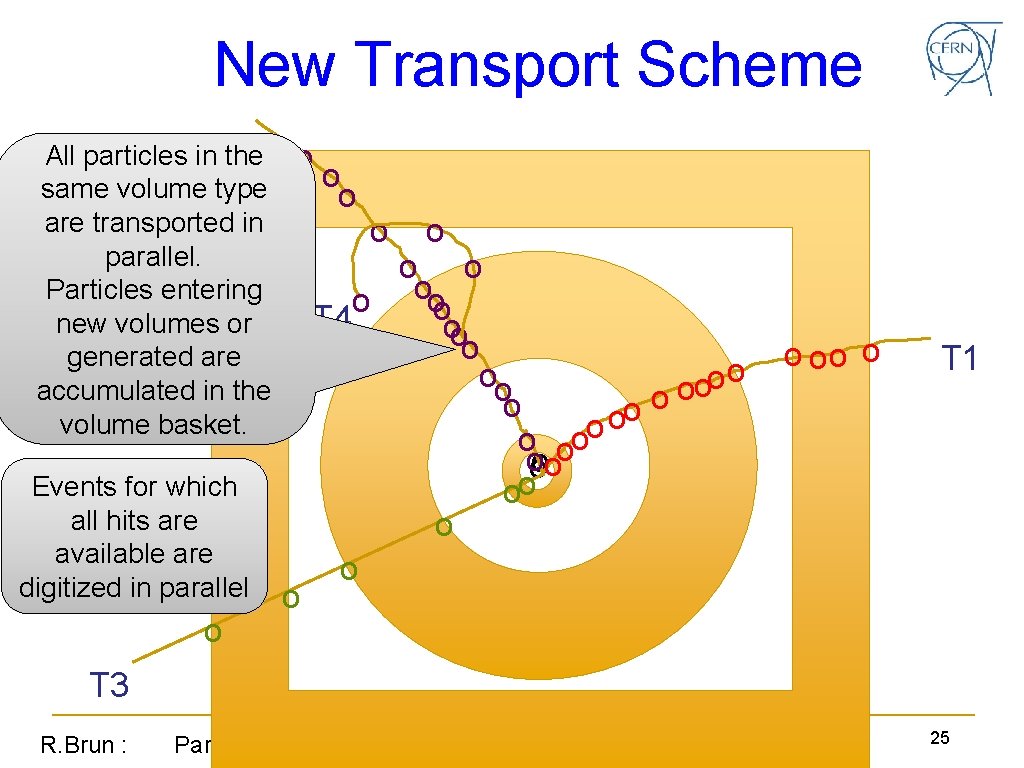

New Transport Scheme

- All particles in the same volume type are transported in parallel.
- Particles entering new volumes or generated are accumulated in the volume basket.
- Events for which all hits are available are digitized in parallel.

(Figure labels: T1, T2, T3, T4)
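The basket idea can be illustrated with a minimal sketch, assuming each particle carries the index of the logical volume type it is currently in. The `Particle` fields and the `fillBaskets` function are hypothetical names chosen for this example and are not the prototype's actual data structures.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal particle record: which event it belongs to
// and which logical volume type it is currently in.
struct Particle {
    int event;
    int volume;
    double energy;
};

// One basket per logical volume type.
using Basket = std::vector<Particle>;

// Sort particles into per-volume baskets. Particles from different
// events end up in the same basket when they are in the same volume type.
std::vector<Basket> fillBaskets(const std::vector<Particle>& particles,
                                std::size_t nVolumes)
{
    std::vector<Basket> baskets(nVolumes);
    for (const Particle& p : particles) {
        assert(p.volume >= 0 && static_cast<std::size_t>(p.volume) < nVolumes);
        baskets[p.volume].push_back(p);
    }
    return baskets;
}
```

Each basket can then be handed to a transport thread as one unit of work, so the geometry and material data of a single volume type stay hot in the cache while the basket is processed.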

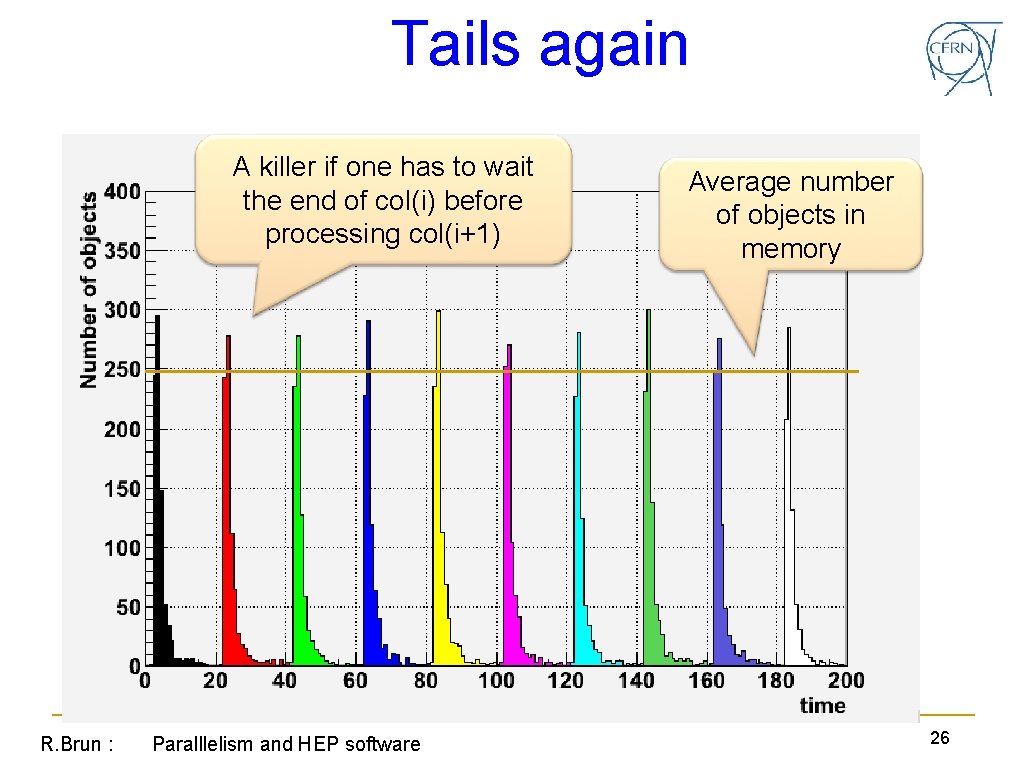

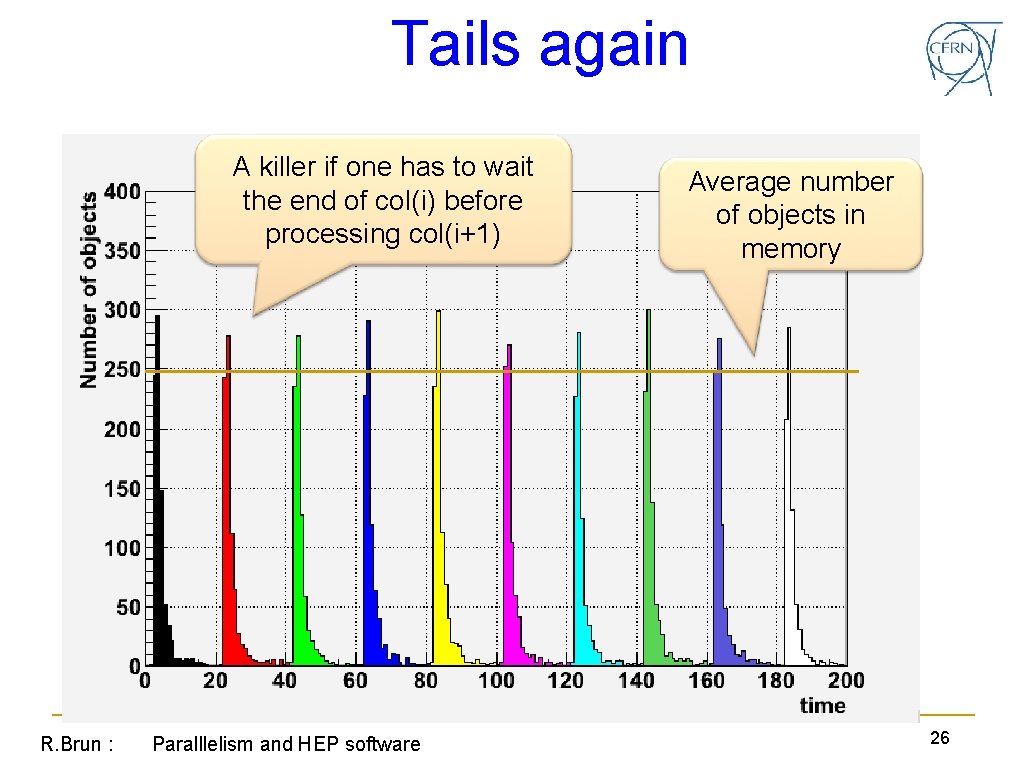

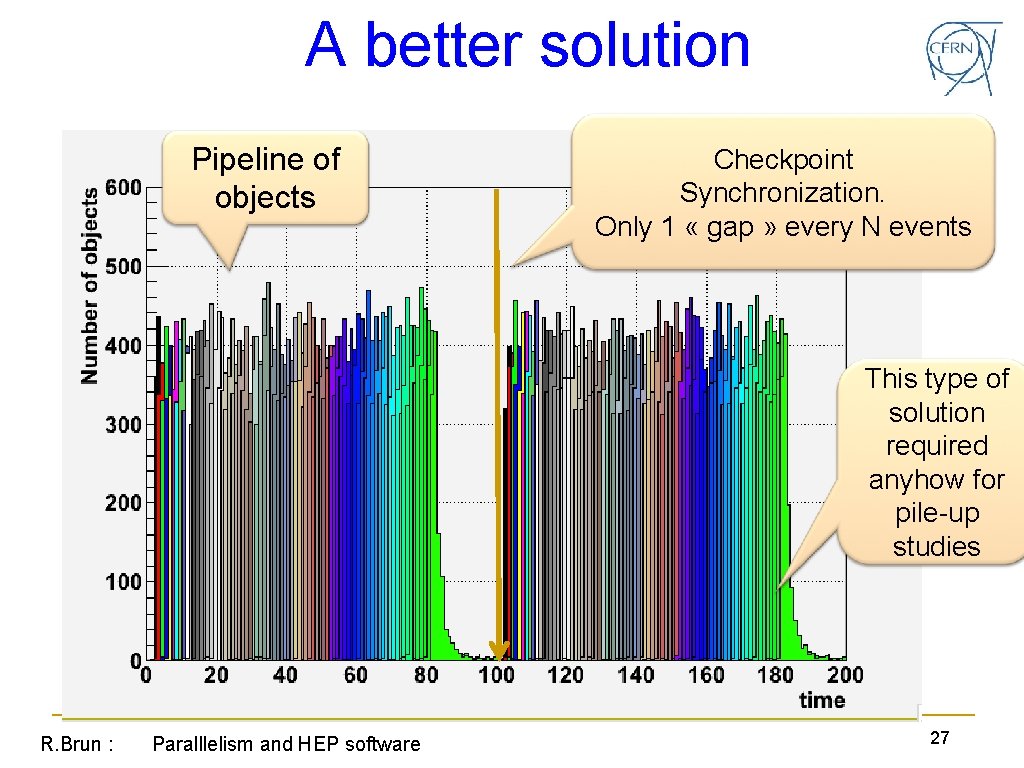

Tails again

- A killer if one has to wait for the end of col(i) before processing col(i+1)

(Figure axis: average number of objects in memory)

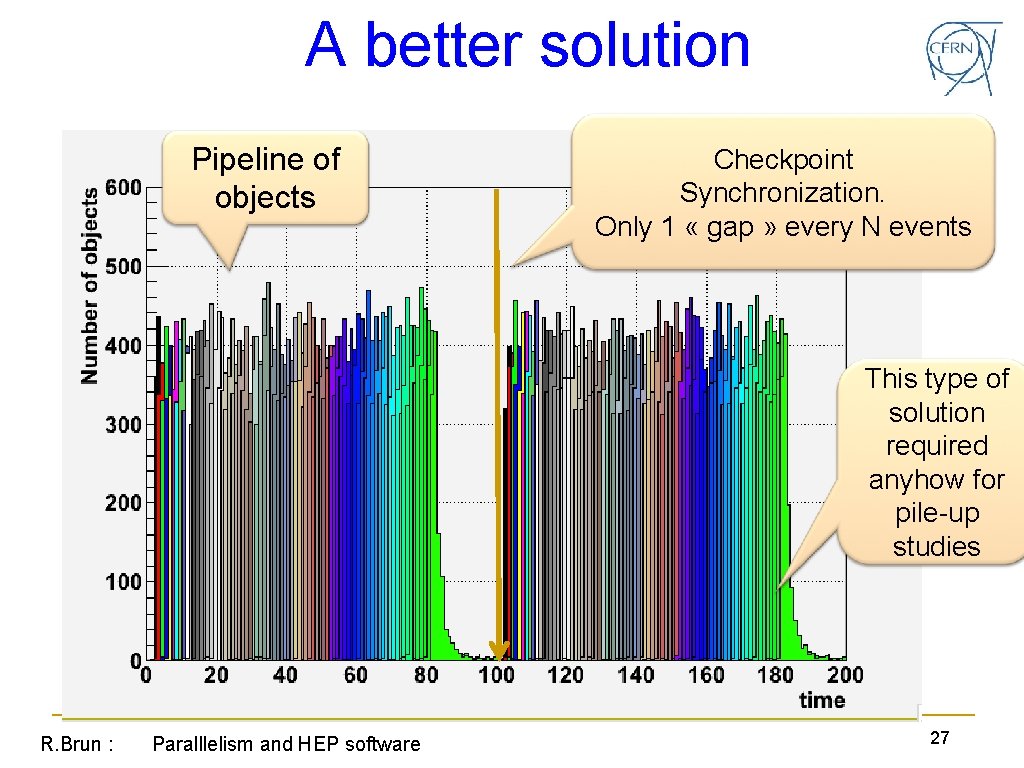

A better solution

- Pipeline of objects
- Checkpoint synchronization: only 1 «gap» every N events
- This type of solution is required anyhow for pile-up studies

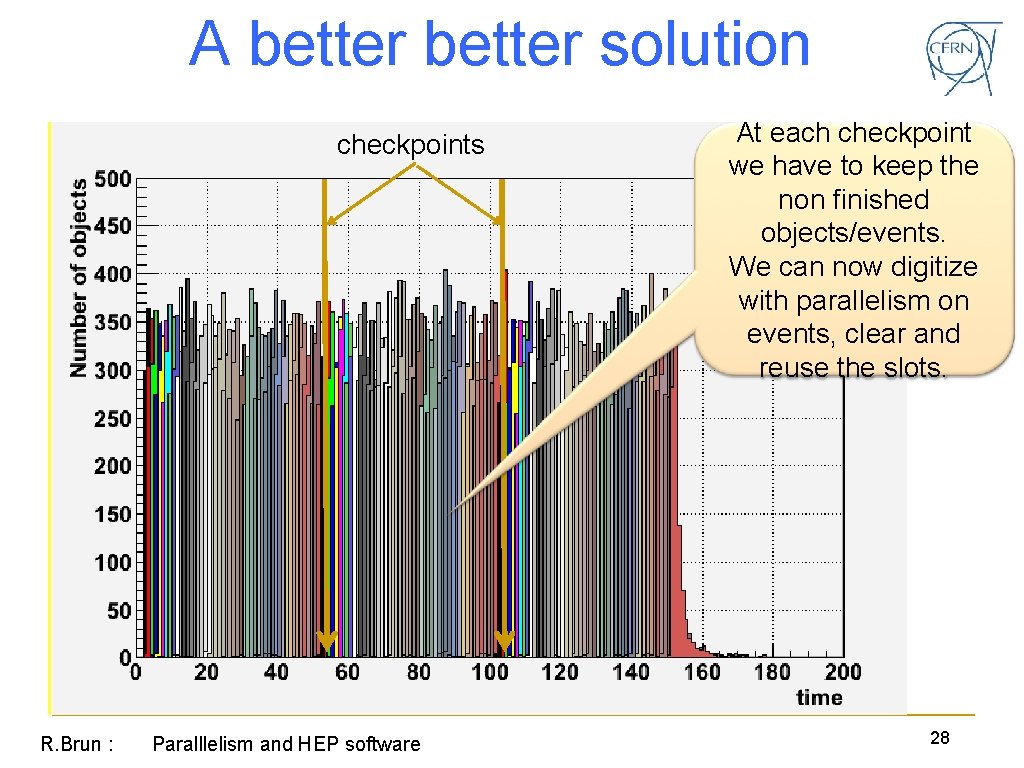

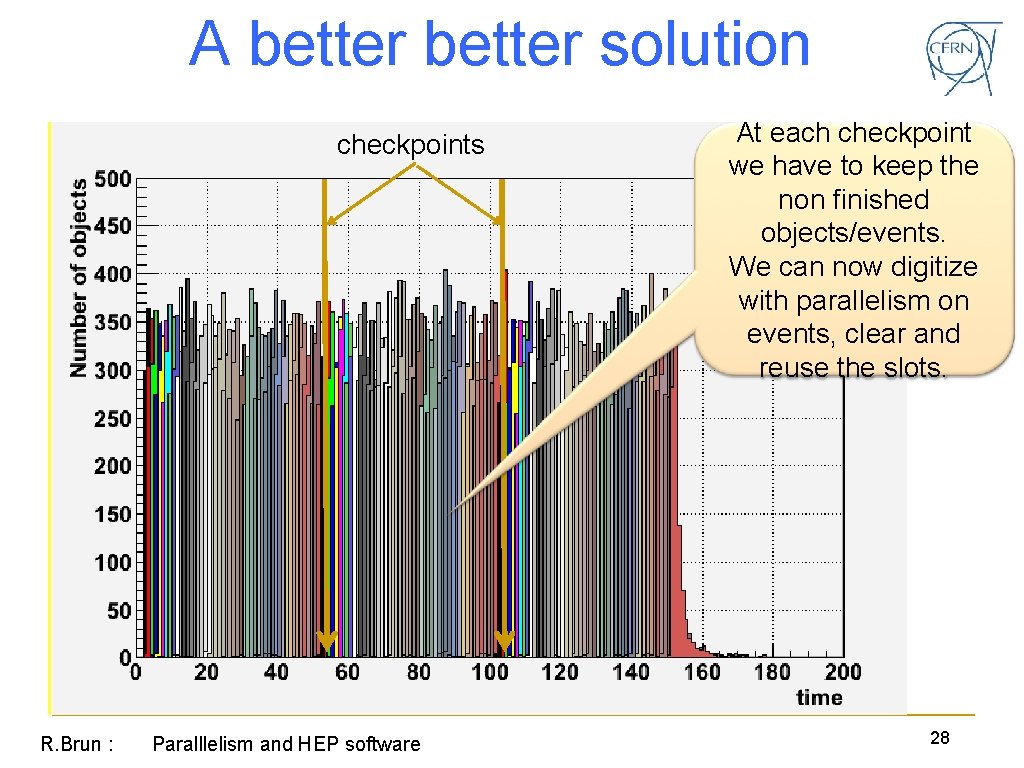

A better solution: checkpoints

- At each checkpoint we have to keep the unfinished objects/events.
- We can now digitize with parallelism on events, then clear and reuse the slots.

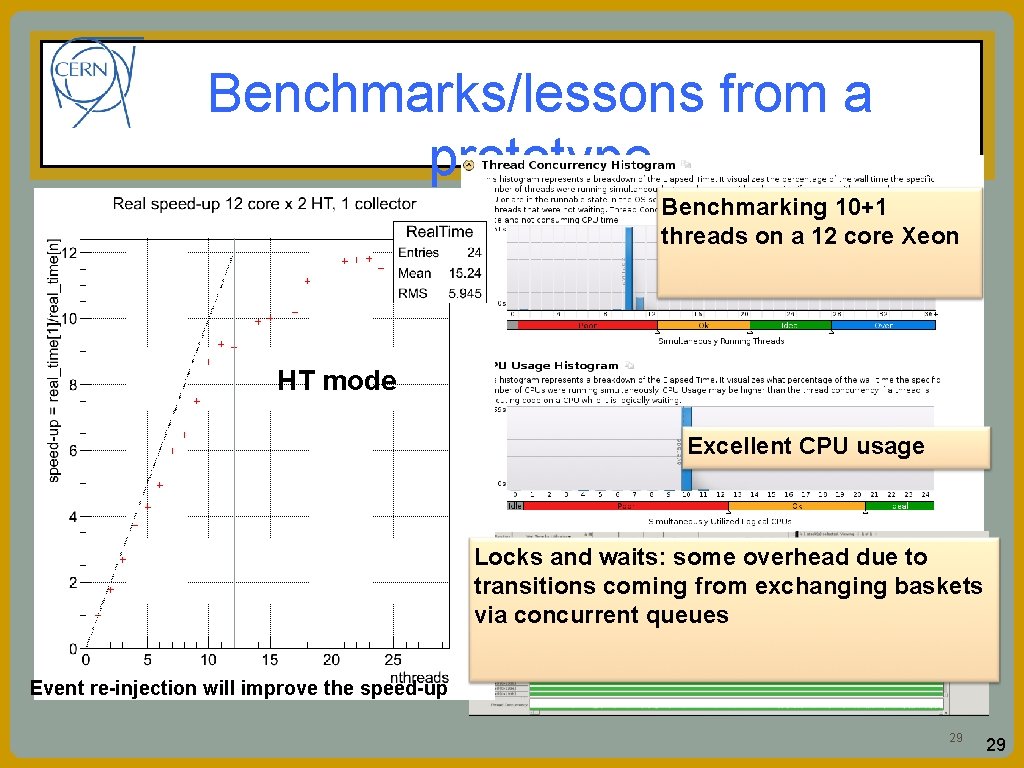

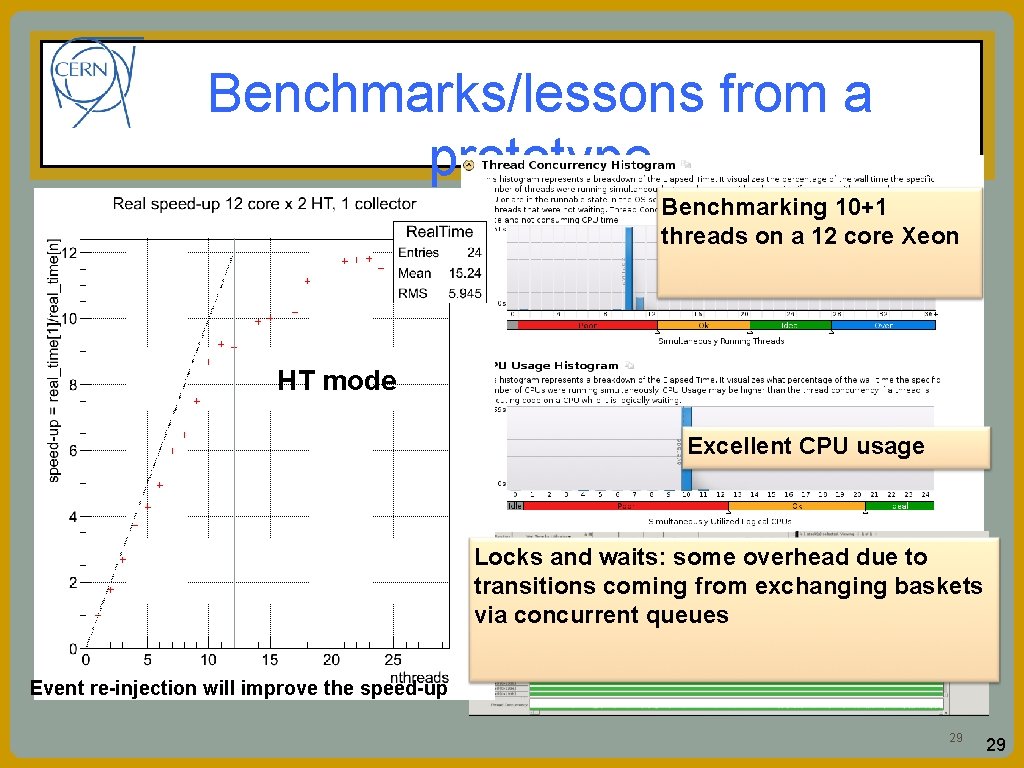

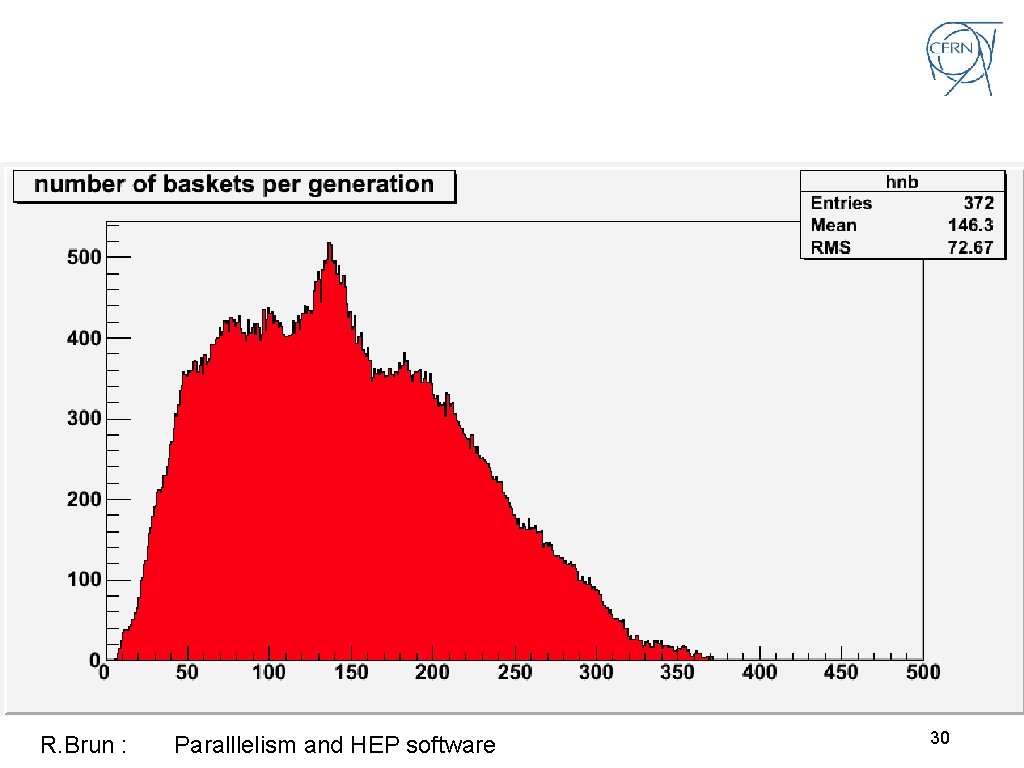

Benchmarks/lessons from a prototype

- Benchmarking 10+1 threads on a 12-core Xeon in HT mode
- Excellent CPU usage
- Locks and waits: some overhead due to transitions coming from exchanging baskets via concurrent queues
- Event re-injection will improve the speed-up

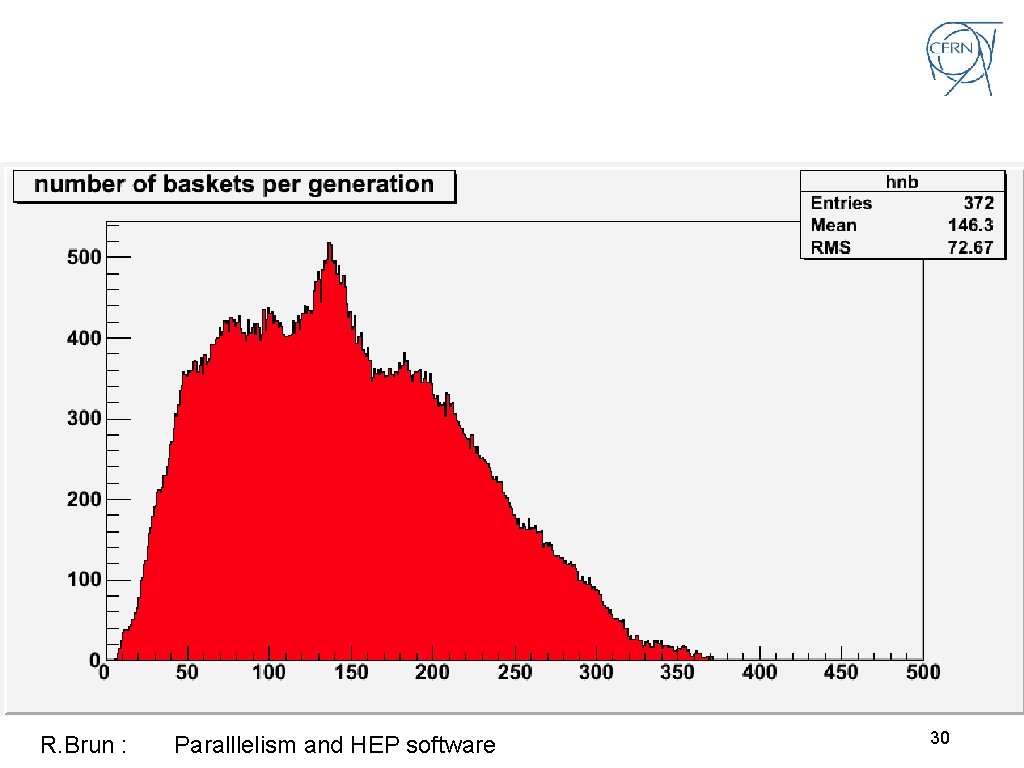


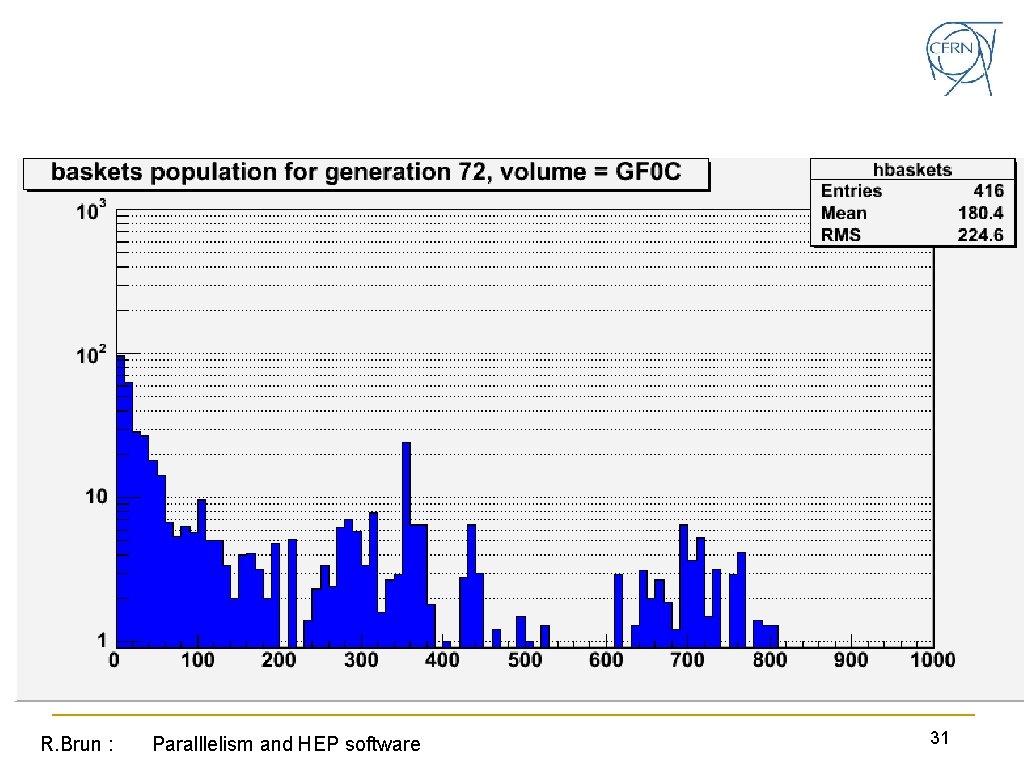

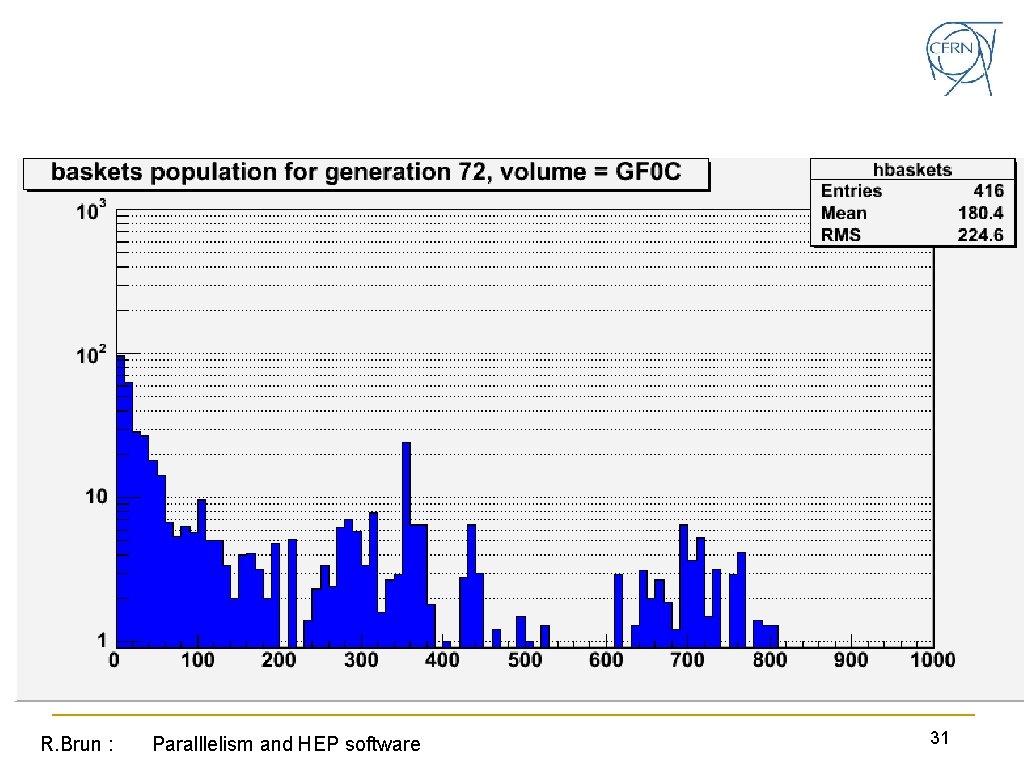


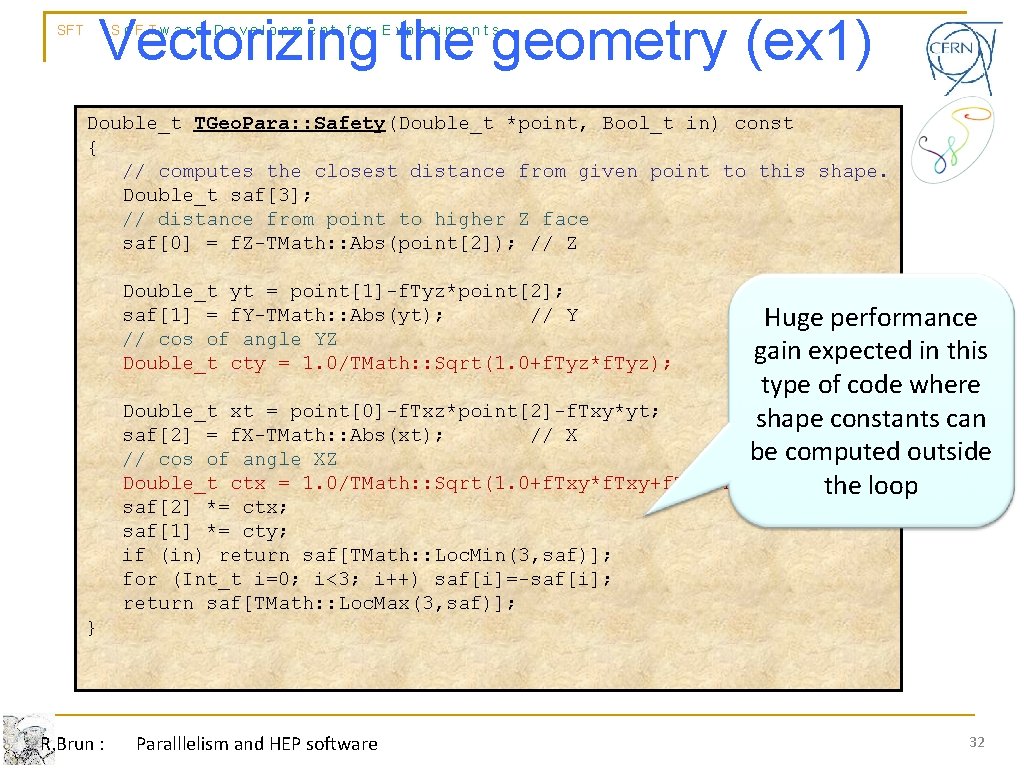

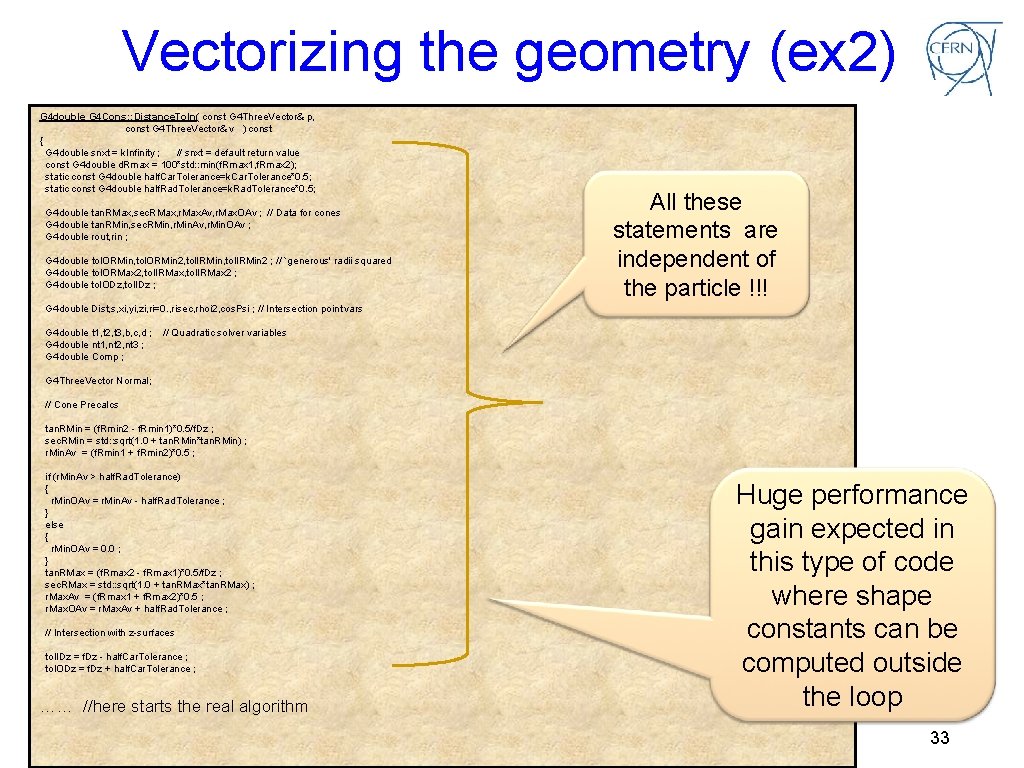

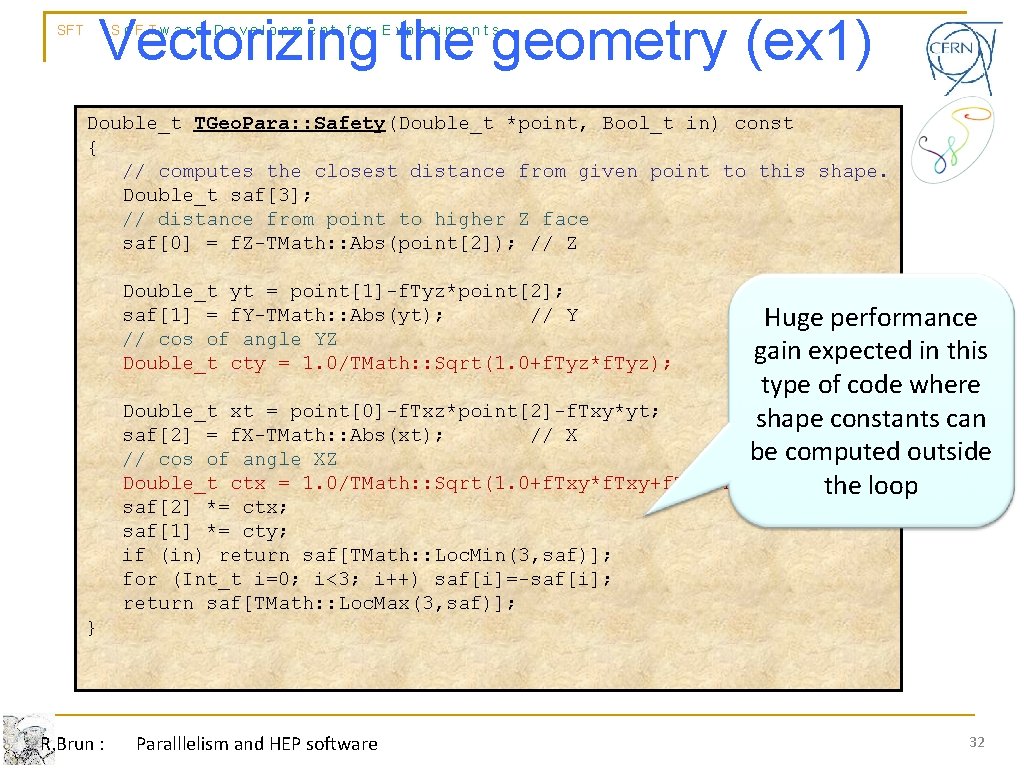

Vectorizing the geometry (ex 1)

SFT: SoFTware Development for Experiments

```cpp
Double_t TGeoPara::Safety(Double_t *point, Bool_t in) const
{
   // computes the closest distance from given point to this shape.
   Double_t saf[3];
   // distance from point to higher Z face
   saf[0] = fZ-TMath::Abs(point[2]); // Z

   Double_t yt = point[1]-fTyz*point[2];
   saf[1] = fY-TMath::Abs(yt);       // Y
   // cos of angle YZ
   Double_t cty = 1.0/TMath::Sqrt(1.0+fTyz*fTyz);

   Double_t xt = point[0]-fTxz*point[2]-fTxy*yt;
   saf[2] = fX-TMath::Abs(xt);       // X
   // cos of angle XZ
   Double_t ctx = 1.0/TMath::Sqrt(1.0+fTxy*fTxy+fTxz*fTxz);
   saf[2] *= ctx;
   saf[1] *= cty;
   if (in) return saf[TMath::LocMin(3,saf)];
   for (Int_t i=0; i<3; i++) saf[i]=-saf[i];
   return saf[TMath::LocMax(3,saf)];
}
```

Huge performance gain expected in this type of code, where shape constants can be computed outside the loop.
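The remark about shape constants can be illustrated with a minimal sketch. It assumes the points are already known to be inside the shape (the `in` branch of the function above) and that coordinates are stored as separate arrays. `ParaShape` and `safetyInside` are hypothetical names for this example; they are not part of ROOT's TGeo API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical plain struct holding the parallelepiped constants.
// The two cosines depend only on the shape, so they are computed once.
struct ParaShape {
    double x, y, z;        // half-lengths
    double txy, txz, tyz;  // tangents of the tilt angles
    double ctx, cty;       // precomputed cosines

    ParaShape(double hx, double hy, double hz,
              double tanXY, double tanXZ, double tanYZ)
        : x(hx), y(hy), z(hz), txy(tanXY), txz(tanXZ), tyz(tanYZ),
          ctx(1.0 / std::sqrt(1.0 + tanXY * tanXY + tanXZ * tanXZ)),
          cty(1.0 / std::sqrt(1.0 + tanYZ * tanYZ)) {}
};

// Safety for many points known to be inside the shape.
// Coordinates are stored as separate arrays so that the loop body
// is branch-free and a compiler may auto-vectorize it.
void safetyInside(const ParaShape& s,
                  const std::vector<double>& px,
                  const std::vector<double>& py,
                  const std::vector<double>& pz,
                  std::vector<double>& safety)
{
    const std::size_t n = px.size();
    assert(py.size() == n && pz.size() == n);
    safety.resize(n);
    for (std::size_t i = 0; i < n; ++i) {
        const double sz = s.z - std::fabs(pz[i]);
        const double yt = py[i] - s.tyz * pz[i];
        const double sy = (s.y - std::fabs(yt)) * s.cty;
        const double xt = px[i] - s.txz * pz[i] - s.txy * yt;
        const double sx = (s.x - std::fabs(xt)) * s.ctx;
        safety[i] = std::min(sz, std::min(sy, sx));
    }
}
```

The two square roots are evaluated once per shape instead of once per call, and the per-point work reduces to a handful of multiplications, subtractions and minimum operations.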

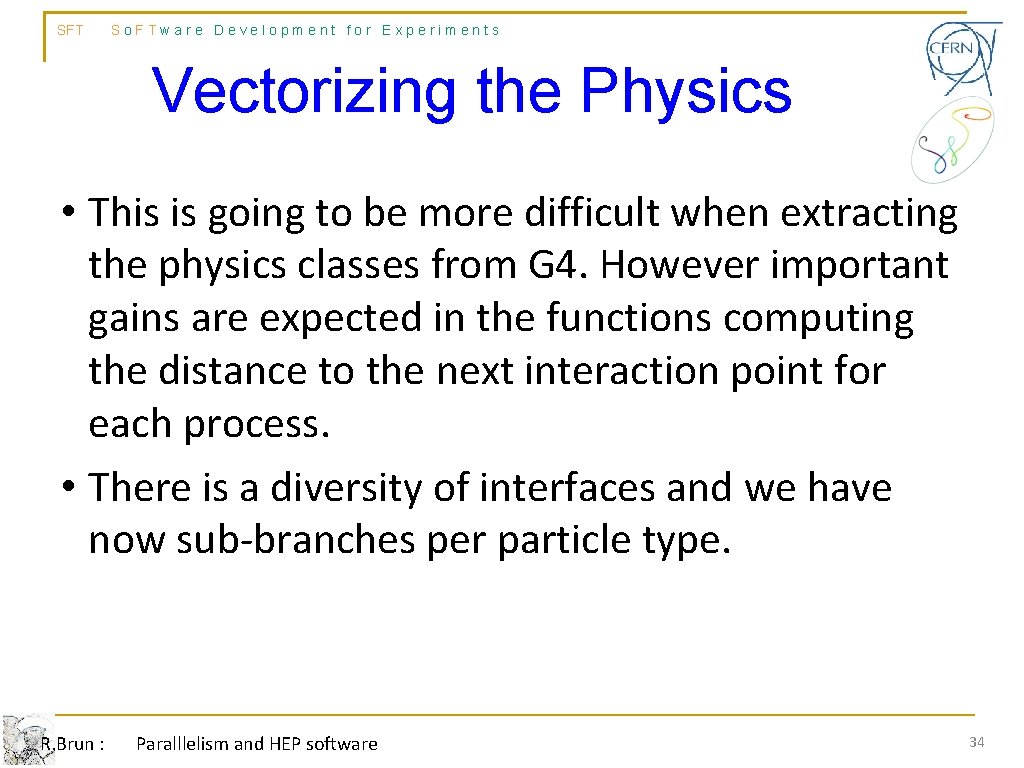

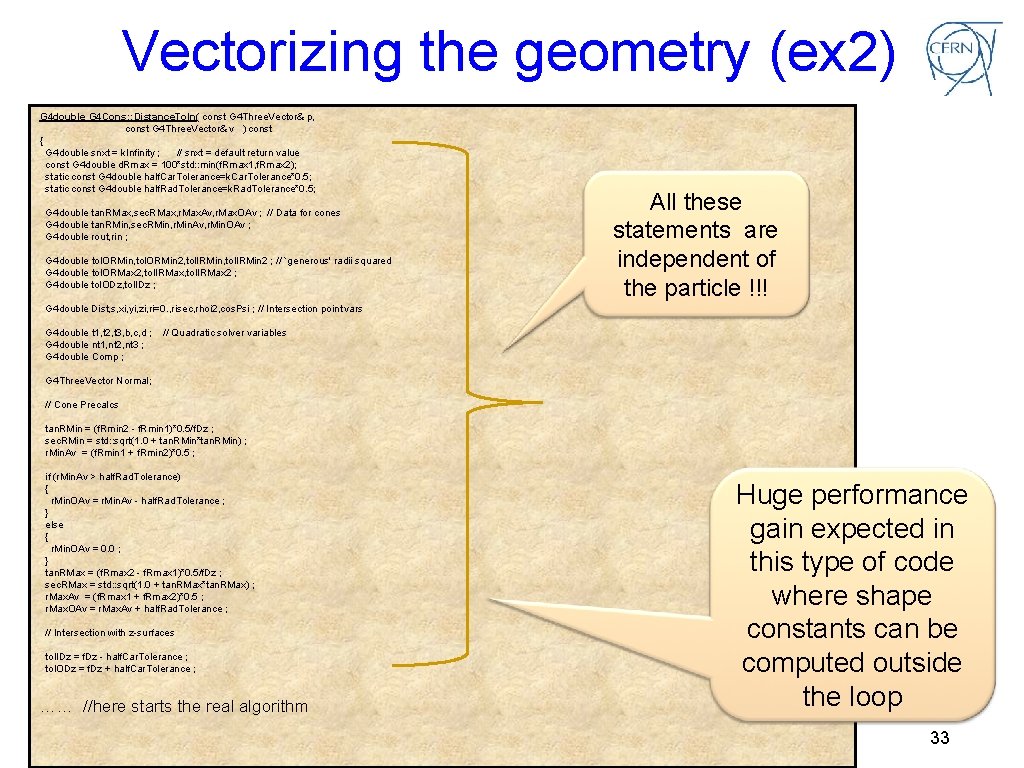

Vectorizing the geometry (ex 2)

```cpp
G4double G4Cons::DistanceToIn( const G4ThreeVector& p,
                               const G4ThreeVector& v ) const
{
  G4double snxt = kInfinity ;      // snxt = default return value
  const G4double dRmax = 100*std::min(fRmax1,fRmax2);
  static const G4double halfCarTolerance=kCarTolerance*0.5;
  static const G4double halfRadTolerance=kRadTolerance*0.5;

  G4double tanRMax,secRMax,rMaxAv,rMaxOAv ;  // Data for cones
  G4double tanRMin,secRMin,rMinAv,rMinOAv ;
  G4double rout,rin ;

  G4double tolORMin,tolORMin2,tolIRMin2 ; // `generous' radii squared
  G4double tolORMax2,tolIRMax2 ;
  G4double tolODz,tolIDz ;

  G4double Dist,s,xi,yi,zi,ri=0.,risec,rhoi2,cosPsi ; // Intersection point vars

  G4double t1,t2,t3,b,c,d ;
  G4double nt1,nt2,nt3 ;
  G4double Comp ;                  // Quadratic solver variables

  G4ThreeVector Normal;

  // Cone Precalcs

  tanRMin = (fRmin2 - fRmin1)*0.5/fDz ;
  secRMin = std::sqrt(1.0 + tanRMin*tanRMin) ;
  rMinAv  = (fRmin1 + fRmin2)*0.5 ;

  if (rMinAv > halfRadTolerance)
  {
    rMinOAv = rMinAv - halfRadTolerance ;
  }
  else
  {
    rMinOAv = 0.0 ;
  }
  tanRMax = (fRmax2 - fRmax1)*0.5/fDz ;
  secRMax = std::sqrt(1.0 + tanRMax*tanRMax) ;
  rMaxAv  = (fRmax1 + fRmax2)*0.5 ;
  rMaxOAv = rMaxAv + halfRadTolerance ;

  // Intersection with z-surfaces

  tolIDz = fDz - halfCarTolerance ;
  tolODz = fDz + halfCarTolerance ;

  // …… here starts the real algorithm
```

All these statements are independent of the particle!!!

Huge performance gain expected in this type of code, where shape constants can be computed outside the loop.

Vectorizing the Physics

- This is going to be more difficult when extracting the physics classes from G4. However, important gains are expected in the functions computing the distance to the next interaction point for each process.
- There is a diversity of interfaces, and we now have sub-branches per particle type.

Where are we now?

- Present status
  - Several investigations of possible alternatives for “extremely parallel, no lock” transport
  - Not much code written, several blackboards full
  - Some investigation on a simplified but fully vectorized model to prove the vectorization gain
- New design in preparation

Major points under discussion

- How to minimize locks and maximize local handling of particles
- How to handle hit and digit structures
- How to preserve the history of the particles
  - This point seems more difficult at the moment and it requires more design
- What is the possible speedup obtained by microparallelisation
- What are the bottlenecks and opportunities with parallel I/O

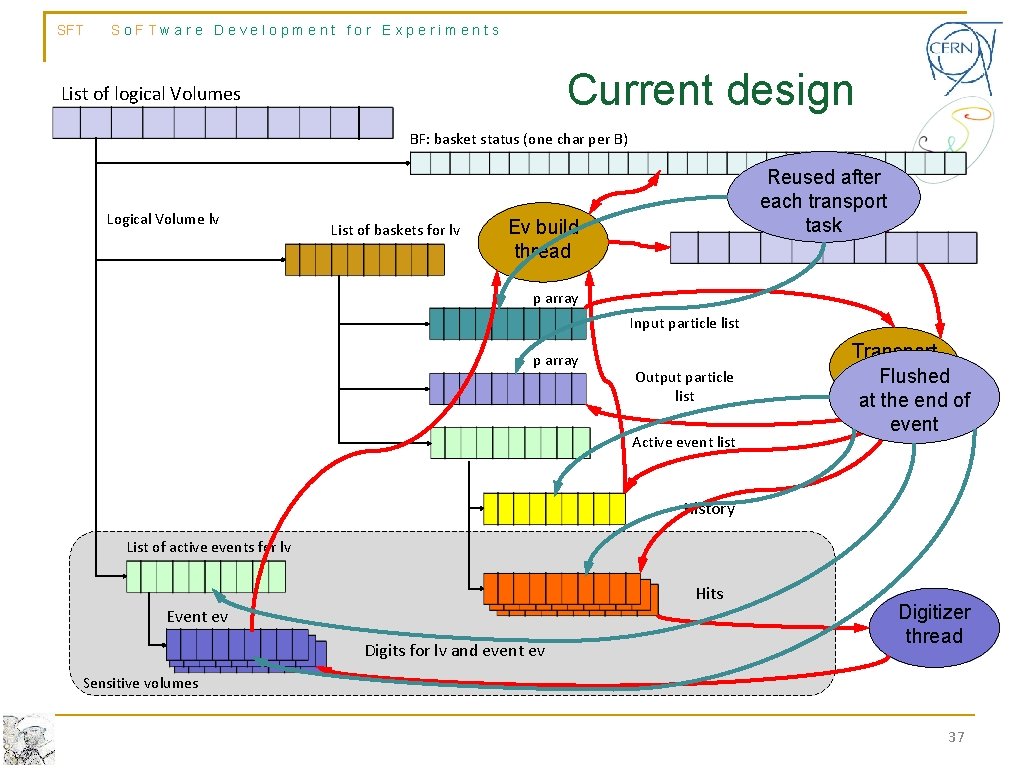

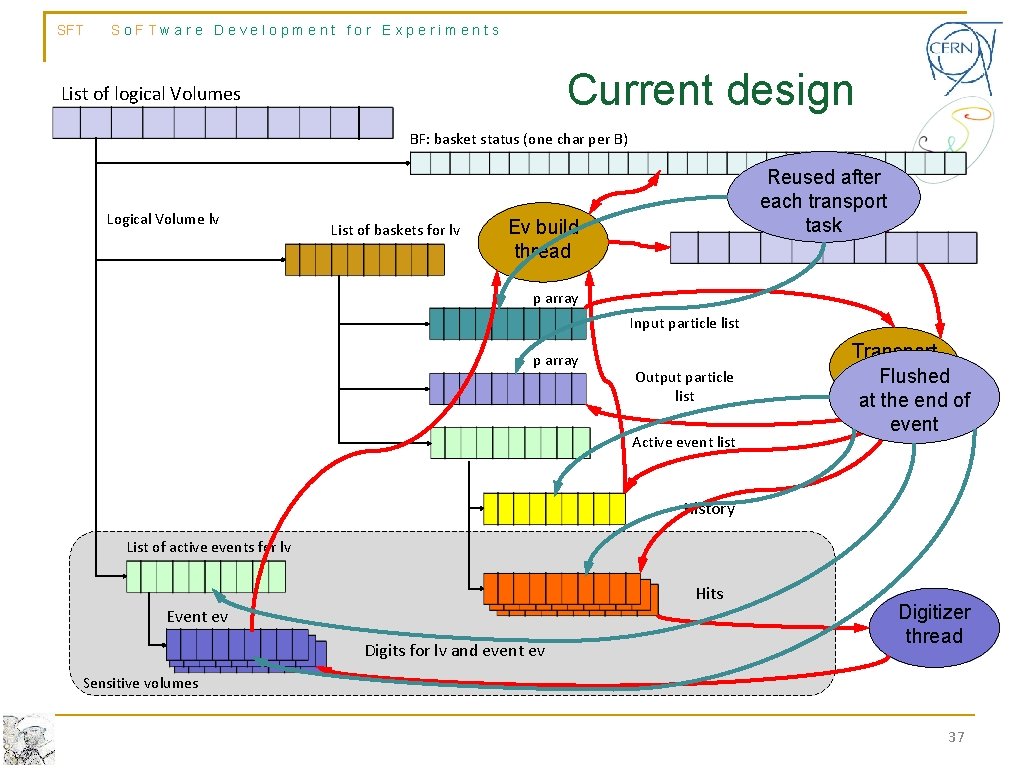

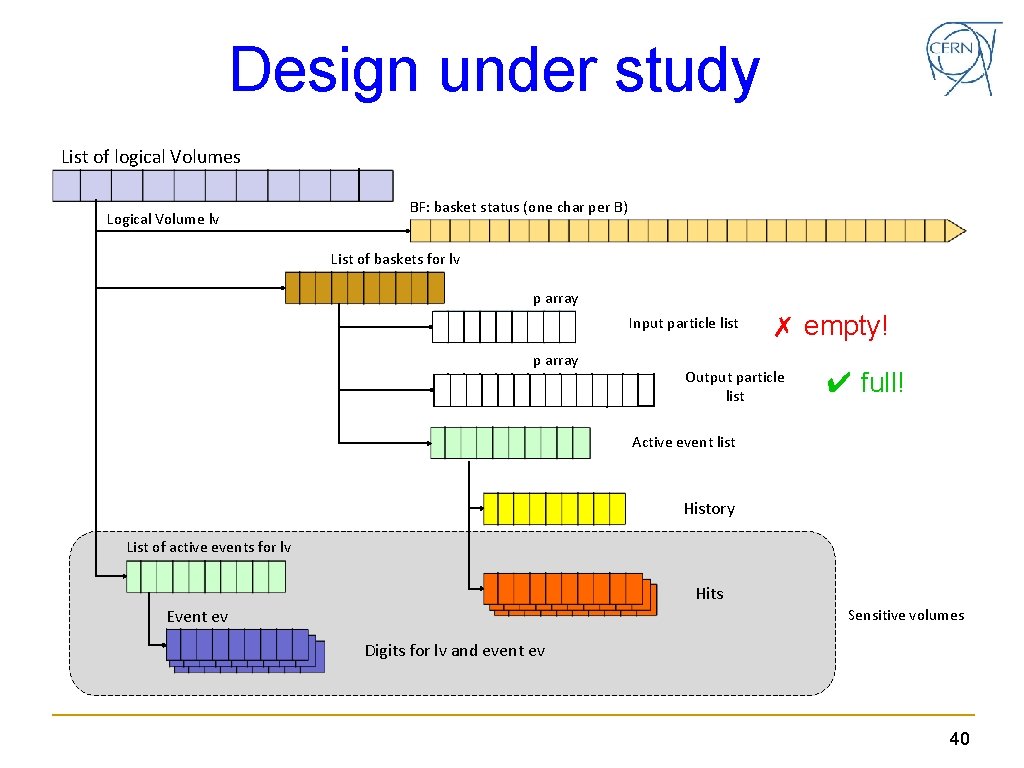

Current design

Diagram labels:

- List of logical volumes
- Logical volume lv: BF, basket status (one char per basket B); list of baskets for lv
- Input particle list and output particle list (p arrays): reused after each transport
- Active event list (list of active events for lv), history, hits: flushed at the end of event
- Event ev: digits for lv and event ev
- Sensitive volumes
- Threads: events task, event build thread, transport thread, digitizer thread

Features

- Pros
  - Excellent potential locality
  - Easy to introduce hits and digits
- Cons
  - One more copy (but it is done in parallel)
  - More difficult to preserve particle history (it is non-local!) and introduce particle pruning

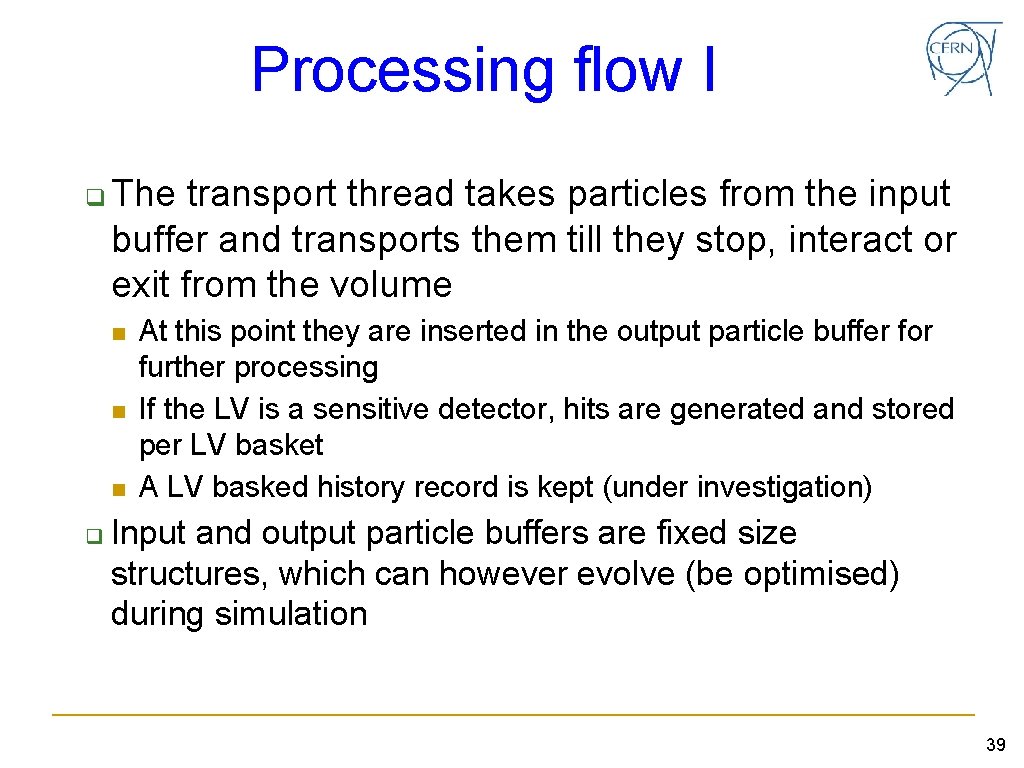

Processing flow I

- The transport thread takes particles from the input buffer and transports them till they stop, interact or exit from the volume
  - At this point they are inserted in the output particle buffer for further processing
  - If the LV is a sensitive detector, hits are generated and stored per LV basket
  - An LV basket history record is kept (under investigation)
- Input and output particle buffers are fixed-size structures, which can however evolve (be optimised) during simulation

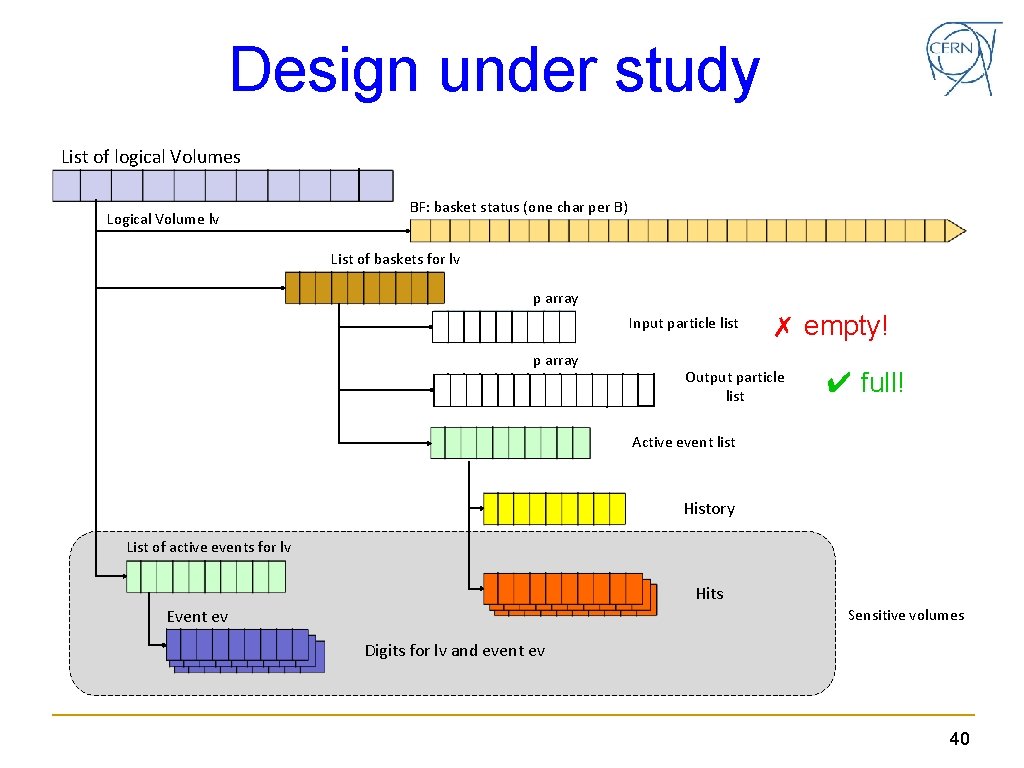

Design under study

Diagram labels:

- List of logical volumes
- Logical volume lv: BF, basket status (one char per basket B); list of baskets for lv
- Input particle list (p array): ✗ empty!
- Output particle list (p array): ✔ full!
- Active event list (list of active events for lv)
- History
- Hits
- Sensitive volumes
- Event ev: digits for lv and event ev

Note

- Containers are “slow growing” contiguous containers
- Every time a container has to grow, it is reallocated contiguously to the new size
  - A blocking operation
- We expect container sizes to converge
  - If not, there is a design problem

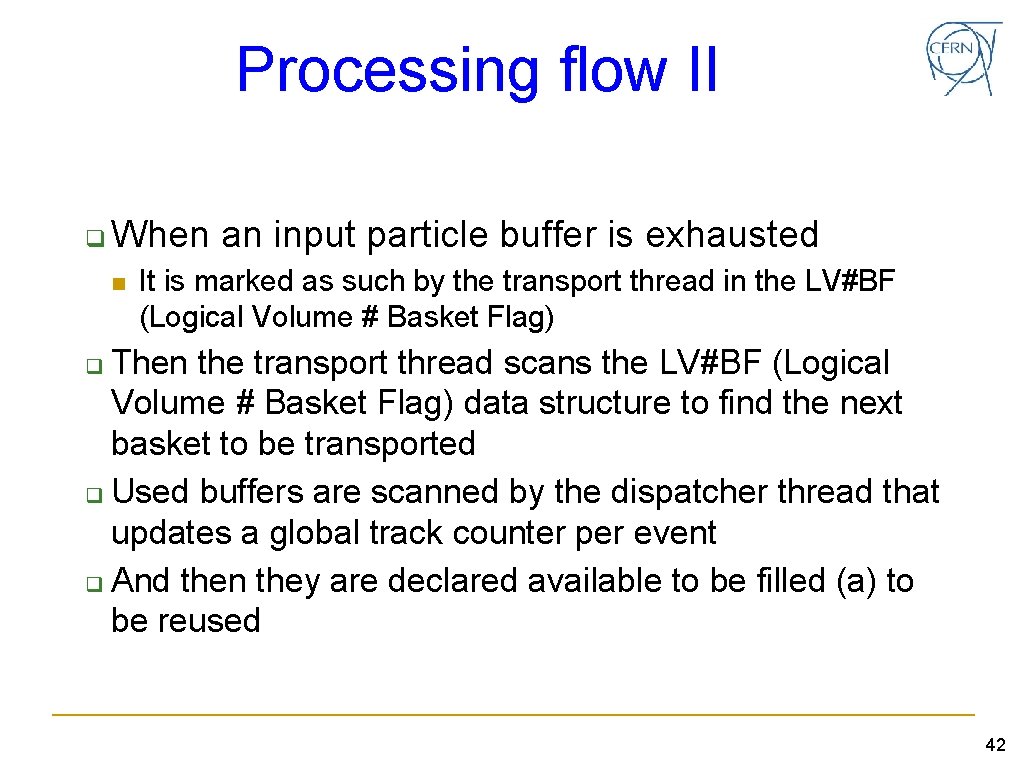

Processing flow II

- When an input particle buffer is exhausted
  - It is marked as such by the transport thread in the LV#BF (Logical Volume # Basket Flag)
- Then the transport thread scans the LV#BF data structure to find the next basket to be transported
- Used buffers are scanned by the dispatcher thread, which updates a global track counter per event
- They are then declared available to be filled (a), to be reused

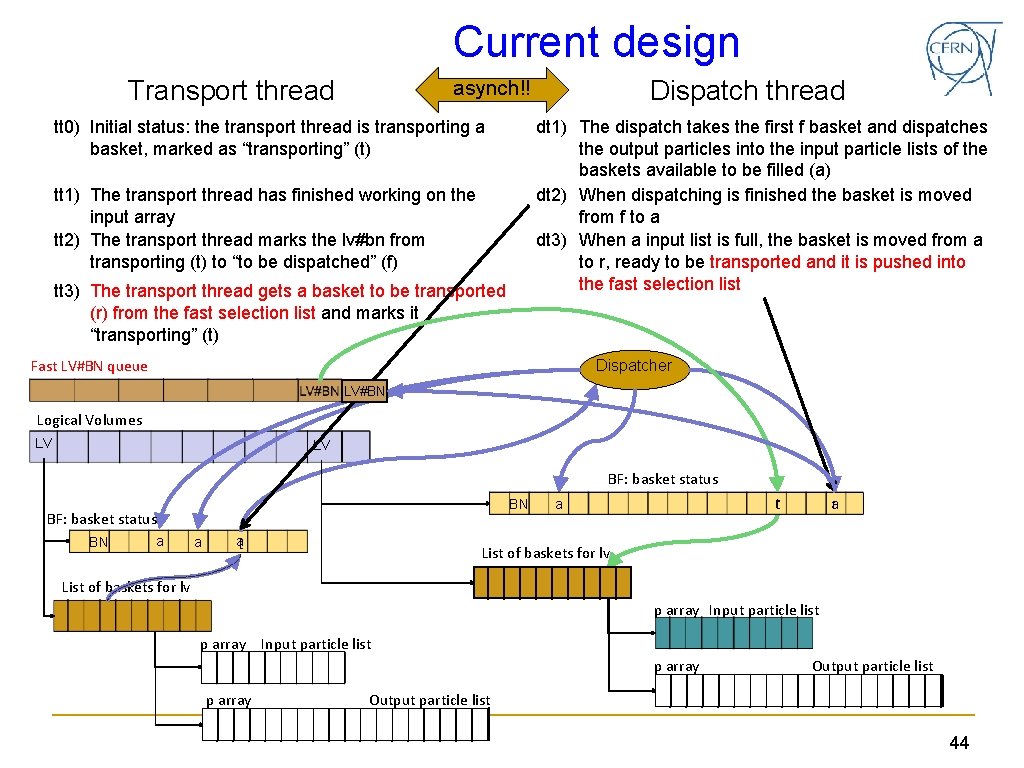

Important!!

- a (available): basket being filled by the dispatcher
- f (full): basket ready to be dispatched
- r (ready): basket ready to be transported
- t (transporting): basket being transported

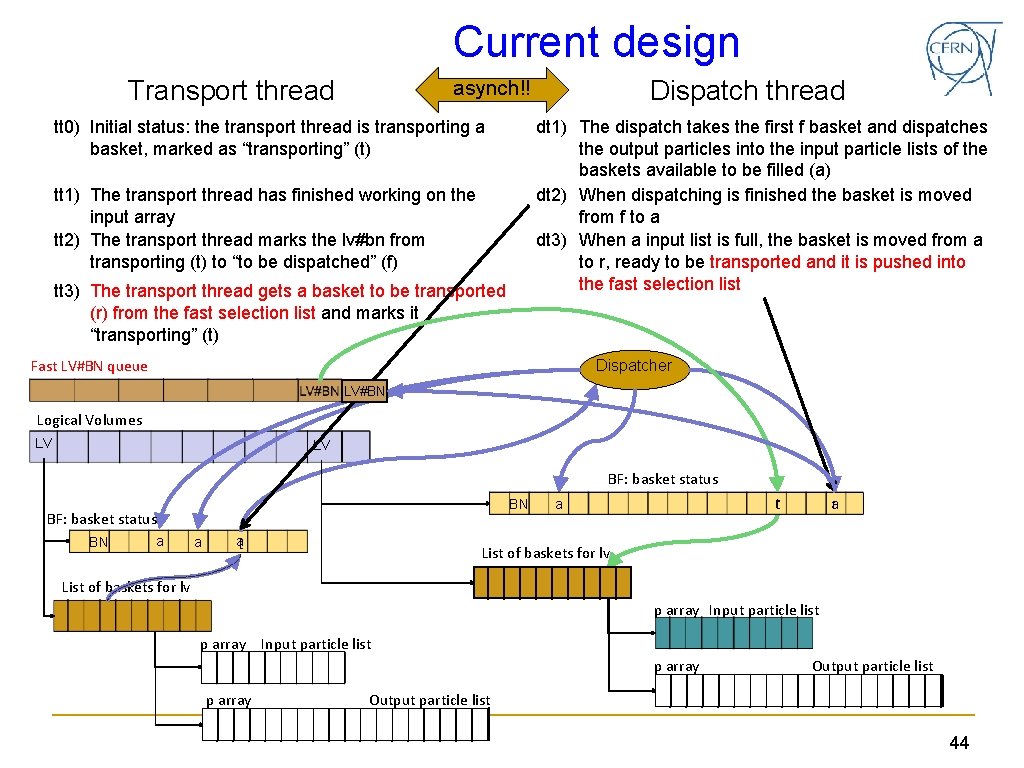

Current design

The transport thread (tt) and the dispatch thread (dt) run asynchronously!!

- tt0) Initial status: the transport thread is transporting a basket, marked as “transporting” (t)
- dt1) The dispatcher takes the first f basket and dispatches the output particles into the input particle lists of the baskets available to be filled (a)
- dt2) When dispatching is finished, the basket is moved from f to a
- dt3) When an input list is full, the basket is moved from a to r, ready to be transported, and it is pushed into the fast selection list
- tt1) The transport thread has finished working on the input array
- tt2) The transport thread marks the LV#BN from transporting (t) to “to be dispatched” (f)
- tt3) The transport thread gets a basket to be transported (r) from the fast selection list and marks it “transporting” (t)

(Diagram labels: fast LV#BN queue, dispatcher, logical volumes LV, BF basket status flags, BN, list of baskets for lv, input and output particle lists as p arrays)
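The status cycle described in these steps can be sketched as a small state machine. The enum values follow the one-char codes of the slides; the helper names `isAllowed` and `advance`, and the use of `std::atomic<char>` with compare-and-exchange, are assumptions made for this illustration, since the slides only state that the flag is one char per basket.

```cpp
#include <atomic>
#include <cassert>

// Basket status codes from the slides: one char per basket.
enum BasketStatus : char {
    kAvailable    = 'a',  // being filled by the dispatcher
    kFull         = 'f',  // output ready to be dispatched
    kReady        = 'r',  // input ready to be transported
    kTransporting = 't'   // being transported
};

// Hypothetical helper: the only transitions described in the steps are
// t -> f (tt2), f -> a (dt2), a -> r (dt3) and r -> t (tt3).
bool isAllowed(char from, char to)
{
    return (from == kTransporting && to == kFull) ||
           (from == kFull && to == kAvailable) ||
           (from == kAvailable && to == kReady) ||
           (from == kReady && to == kTransporting);
}

// Try to move one basket flag from `from` to `to`.
// compare_exchange makes the update atomic, so a stale request
// (the flag is no longer in state `from`) simply fails.
// Using an atomic here is an assumption of this sketch.
bool advance(std::atomic<char>& flag, char from, char to)
{
    if (!isAllowed(from, to)) return false;
    return flag.compare_exchange_strong(from, to);
}
```

Each transition is owned by exactly one kind of thread (transport thread for t to f and r to t, dispatcher for f to a and a to r), which is what keeps most of the flag updates free of explicit locks in the design described above.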

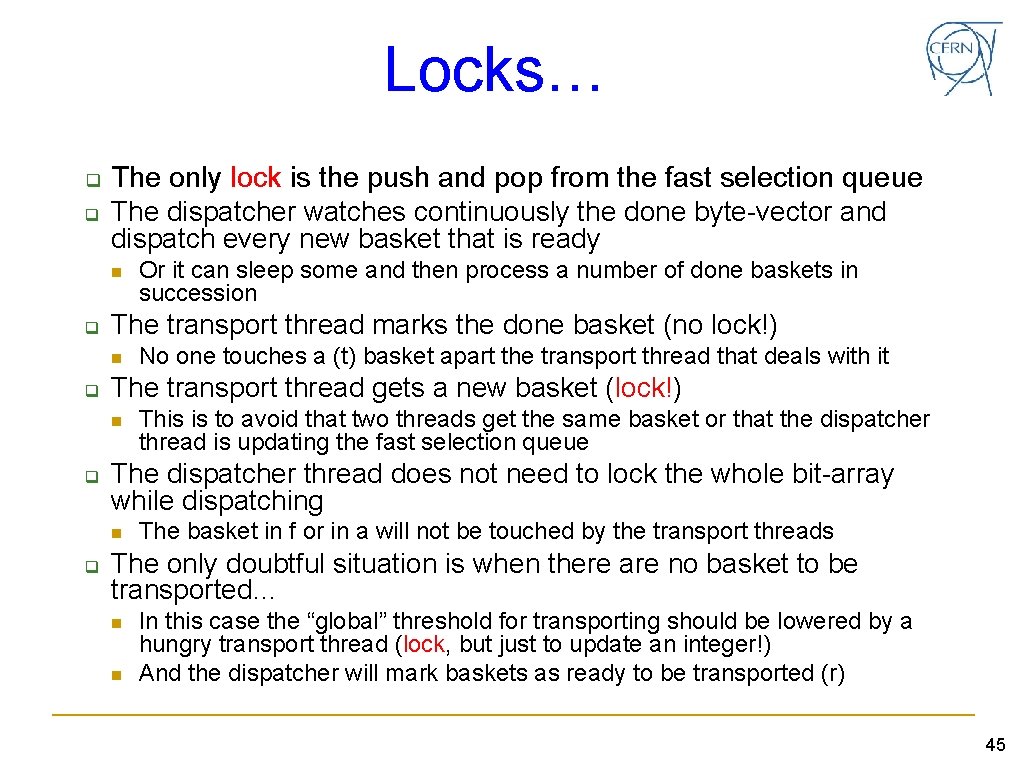

Locks…

- The only lock is the push and pop from the fast selection queue
- The dispatcher continuously watches the done byte-vector and dispatches every new basket that is ready
  - Or it can sleep for a while and then process a number of done baskets in succession
- The transport thread marks the done basket (no lock!)
  - No one touches a (t) basket apart from the transport thread that deals with it
- The transport thread gets a new basket (lock!)
  - This is to avoid that two threads get the same basket, or that the dispatcher thread is updating the fast selection queue
- The dispatcher thread does not need to lock the whole bit-array while dispatching
  - The baskets in f or in a will not be touched by the transport threads
- The only doubtful situation is when there is no basket to be transported…
  - In this case the “global” threshold for transporting should be lowered by a hungry transport thread (lock, but just to update an integer!)
  - And the dispatcher will mark baskets as ready to be transported (r)
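The single locked structure can be sketched as follows. This is a minimal illustration under the assumption that baskets are identified by an integer index; the class name `FastSelectionQueue` and its methods are hypothetical, and a real implementation might prefer a lock-free concurrent queue.

```cpp
#include <cassert>
#include <deque>
#include <mutex>

// Hypothetical "fast selection queue": holds the indices of baskets
// that are ready (r) to be transported. Push and pop are the only
// operations that take the lock.
class FastSelectionQueue {
public:
    // Called by the dispatcher when a basket goes from a to r.
    void push(int basketIndex)
    {
        std::lock_guard<std::mutex> guard(fMutex);
        fReady.push_back(basketIndex);
    }

    // Called by a transport thread looking for work.
    // Returns false when no basket is ready.
    bool tryPop(int& basketIndex)
    {
        std::lock_guard<std::mutex> guard(fMutex);
        if (fReady.empty()) return false;
        basketIndex = fReady.front();
        fReady.pop_front();
        return true;
    }

private:
    std::mutex fMutex;
    std::deque<int> fReady;
};
```

Because a popped index is removed under the lock, two transport threads can never receive the same basket. A `false` return corresponds to the "hungry transport thread" case, in which the global threshold would be lowered.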

Memory

- We hope to have a self-adjusting system that will stabilise with time
- In case of an “accident” (an event much larger than any other), we need a way to “quench inflation”
- We have identified two methods
  - Event flushing: do NOT transport particles from a given set of events and move them directly to the output buffer
  - Energy flushing: transport low energy particles and move the high energy ones to the output buffer
- “Untransported” particles are just reinjected into the system, but they do not shower

Processing flow III

- Note an important point: the LV basket structure has input and output particle buffers, and hits and history buffers
- Input and output particle buffers are
  - Multi-event
  - Volatile: they get emptied and filled during transport of a single event
- Hits and history buffers are
  - Per event
  - Permanent during the transport of a single event
- A basket of an LV can be handled by different threads successively, each one with new input and output buffers
- …but all these threads will add to the hits and history data structure till the event is flushed

Processing flow IV

- When an event is finished, the digitizer thread kicks in, scans all the hits in all the baskets of all the LVs and digitises them, inserting them in the LV event->digit structure
- When this is over, the event is built into the event structure (to be designed!) by the event builder thread
- After that, the history for this event is assembled by the same thread
  - If…
- Then the event is output
  - By an output thread or in parallel?

Questions?

- How many dispatcher, digitizer and event-builder threads?
  - Difficult to say, we need some more quantitative design work
  - Measurements with G4 simulations could help
- Transport thread numbers will have to adapt to the size of the simulation and of the detector
  - In ATLAS for instance 50% of the time is spent in 0.75% of the volumes
  - Threads could be distributed proportionally to the time spent in the different LVs
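The last suggestion can be sketched as a simple allocation rule. The function `shareThreads` below is a hypothetical illustration, not part of the prototype: it assumes the time spent per logical volume has been measured beforehand (non-negative values) and uses largest-remainder rounding so that the shares always add up to the number of threads.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: share nThreads between logical volumes in
// proportion to the (non-negative) time spent in each of them.
std::vector<int> shareThreads(const std::vector<double>& timePerVolume,
                              int nThreads)
{
    const std::size_t n = timePerVolume.size();
    std::vector<int> share(n, 0);
    double total = 0.0;
    for (double t : timePerVolume) total += t;
    if (n == 0 || total <= 0.0 || nThreads <= 0) return share;

    std::vector<double> remainder(n);
    int assigned = 0;
    for (std::size_t i = 0; i < n; ++i) {
        const double exact = nThreads * timePerVolume[i] / total;
        share[i] = static_cast<int>(exact);  // round down
        remainder[i] = exact - share[i];
        assigned += share[i];
    }
    // Hand the leftover threads to the largest fractional parts.
    while (assigned < nThreads) {
        std::size_t best = 0;
        for (std::size_t i = 1; i < n; ++i)
            if (remainder[i] > remainder[best]) best = i;
        ++share[best];
        remainder[best] = -1.0;  // do not pick the same volume twice
        ++assigned;
    }
    return share;
}
```

In practice many logical volumes would receive a share of zero, so the rule would more plausibly be applied to groups of volumes or used as a scheduling weight rather than as a fixed thread assignment.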

Short term tasks

- Continue the design work, essential before any more substantial implementation
  - This is the most important task at the moment
  - We have to evaluate the potential bottlenecks before starting the implementation
- Implement the new design and evaluate it against the first
- Demonstrate speedup of some chosen geometry routines
  - Both on x86 CPUs and GPUs
- Demonstrate speedup of some chosen physics methods
  - Particularly in the EM domain