Image-Based Synthetic Aperture Rendering (MIT 9904-14)

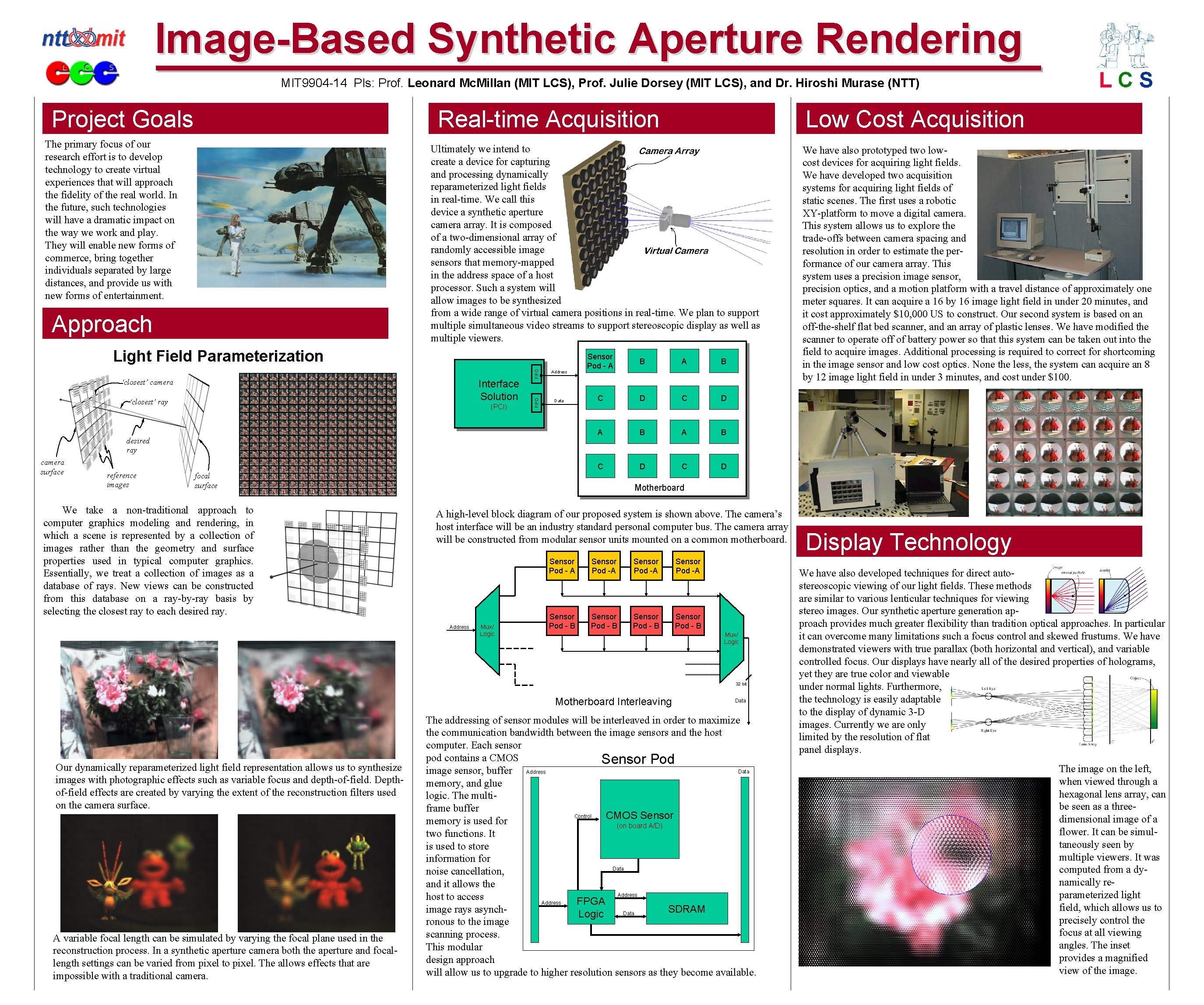

PIs: Prof. Leonard McMillan (MIT LCS), Prof. Julie Dorsey (MIT LCS), and Dr. Hiroshi Murase (NTT)

Project Goals

The primary focus of our research effort is to develop technology to create virtual experiences that approach the fidelity of the real world. In the future, such technologies will have a dramatic impact on the way we work and play. They will enable new forms of commerce, bring together individuals separated by large distances, and provide us with new forms of entertainment.

Real-time Acquisition

Ultimately we intend to create a device for capturing and processing dynamically reparameterized light fields in real time. We call this device a synthetic aperture camera array. It is composed of a two-dimensional array of randomly accessible image sensors that are memory-mapped into the address space of a host processor. Such a system will allow images to be synthesized from a wide range of virtual camera positions in real time. We plan to support multiple simultaneous video streams, both for stereoscopic display and for multiple viewers.

Approach

We take a non-traditional approach to computer graphics modeling and rendering, in which a scene is represented by a collection of images rather than by the geometry and surface properties used in typical computer graphics. Essentially, we treat a collection of images as a database of rays. New views can be constructed from this database on a ray-by-ray basis by selecting the closest ray to each desired ray.

[Figure: Light Field Parameterization. Labels: desired ray, 'closest' ray, 'closest' camera, camera surface, focal surface, reference images.]

[Figure: system block diagram. Labels: (PCI) Interface, FIFO, Address, Data, Motherboard, Sensor Pods A, B, C, D.]

A high-level block diagram of our proposed system is shown above. The camera's host interface will be an industry-standard personal computer bus.
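The ray-by-ray lookup described above can be sketched as follows. This is a minimal illustration, not the project's implementation. It assumes a planar camera surface at z = 0 with cameras on a regular grid, a planar focal surface at a fixed depth, and identical pinhole cameras looking along +z. The function names and all parameters (`spacing`, `focal_depth`, `focal_px`, and so on) are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def clamp(value: int, low: int, high: int) -> int:
    """Restrict an integer index to the inclusive range [low, high]."""
    return min(max(value, low), high)


def intersect_plane_z(origin: Vec3, direction: Vec3, z: float) -> Tuple[float, float]:
    """Return (x, y) where the ray origin + t * direction crosses the plane at depth z."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        raise ValueError("ray is parallel to the plane")
    t = (z - oz) / dz
    return ox + t * dx, oy + t * dy


def closest_ray(origin: Vec3, direction: Vec3,
                grid_size: Tuple[int, int], spacing: float,
                focal_depth: float, focal_px: float,
                image_size: Tuple[int, int]) -> Tuple[int, int, int, int]:
    """Pick the stored ray nearest to a desired ray.

    Returns (camera_row, camera_col, pixel_row, pixel_col).
    """
    rows, cols = grid_size
    height, width = image_size

    # Where the desired ray crosses the camera surface and the focal surface.
    s, t = intersect_plane_z(origin, direction, 0.0)
    f, g = intersect_plane_z(origin, direction, focal_depth)

    # 'Closest' camera: nearest grid position on the camera surface.
    col = clamp(round(s / spacing), 0, cols - 1)
    row = clamp(round(t / spacing), 0, rows - 1)
    cam_x, cam_y = col * spacing, row * spacing

    # 'Closest' ray: the pixel of that camera that sees the focal-surface point.
    u = focal_px * (f - cam_x) / focal_depth + (width - 1) / 2
    v = focal_px * (g - cam_y) / focal_depth + (height - 1) / 2
    return row, col, clamp(round(v), 0, height - 1), clamp(round(u), 0, width - 1)
```

Because each desired ray is resolved independently, a host with random access to the sensors would only need to fetch the pixels it actually uses for a given view.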
The camera array will be constructed from modular sensor units mounted on a common motherboard. The addressing of sensor modules will be interleaved in order to maximize the communication bandwidth between the image sensors and the host computer.

[Figure: Motherboard Interleaving. Labels: Address, Data, Mux/Logic, Sensor Pod A, Sensor Pod B, 32 bit.]

Light Field Parameterization

Our dynamically reparameterized light field representation allows us to synthesize images with photographic effects such as variable focus and depth of field. Depth-of-field effects are created by varying the extent of the reconstruction filters used on the camera surface. A variable focal length can be simulated by varying the focal plane used in the reconstruction process. In a synthetic aperture camera, both the aperture and focal-length settings can be varied from pixel to pixel. This allows effects that are impossible with a traditional camera.

Low Cost Acquisition

We have also prototyped two low-cost systems for acquiring light fields of static scenes. The first uses a robotic XY-platform to move a digital camera. This system allows us to explore the trade-offs between camera spacing and resolution in order to estimate the performance of our camera array. It uses a precision image sensor, precision optics, and a motion platform with a travel area of approximately one square meter. It can acquire a 16 by 16 image light field in under 20 minutes, and it cost approximately $10,000 US to construct.

Our second system is based on an off-the-shelf flatbed scanner and an array of plastic lenses. We have modified the scanner to run on battery power so that the system can be taken out into the field to acquire images. Additional processing is required to correct for shortcomings in the image sensor and the low-cost optics. Nonetheless, the system can acquire an 8 by 12 image light field in under 3 minutes, and it cost under $100.
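The aperture and focus controls described under Light Field Parameterization can be sketched as a weighted blend over cameras. This is a hedged illustration only: it assumes a planar camera grid at z = 0, a planar focal surface, a cone-shaped reconstruction filter, and a hypothetical `read_pixel(row, col, pixel_row, pixel_col)` callback that returns one sample. None of these names come from the project itself.

```python
import math
from typing import Callable, Dict, Tuple


def aperture_weights(s: float, t: float, grid_size: Tuple[int, int],
                     spacing: float, aperture_radius: float
                     ) -> Dict[Tuple[int, int], float]:
    """Normalized cone-filter weights for cameras near the point (s, t)
    where the desired ray crosses the camera surface."""
    rows, cols = grid_size
    weights = {}
    for row in range(rows):
        for col in range(cols):
            dist = math.hypot(col * spacing - s, row * spacing - t)
            w = max(0.0, 1.0 - dist / aperture_radius)
            if w > 0.0:
                weights[(row, col)] = w
    if not weights:
        # Aperture smaller than the camera spacing: use the nearest camera.
        col = min(max(round(s / spacing), 0), cols - 1)
        row = min(max(round(t / spacing), 0), rows - 1)
        return {(row, col): 1.0}
    total = sum(weights.values())
    return {cam: w / total for cam, w in weights.items()}


def render_ray(s: float, t: float, f: float, g: float, focal_depth: float,
               grid_size: Tuple[int, int], spacing: float,
               aperture_radius: float, focal_px: float,
               image_size: Tuple[int, int],
               read_pixel: Callable[[int, int, int, int], float]) -> float:
    """Blend the samples that all contributing cameras record for the
    focal-surface point (f, g, focal_depth)."""
    height, width = image_size
    value = 0.0
    weights = aperture_weights(s, t, grid_size, spacing, aperture_radius)
    for (row, col), w in weights.items():
        # Project the focal-surface point into this camera (pinhole model).
        u = focal_px * (f - col * spacing) / focal_depth + (width - 1) / 2
        v = focal_px * (g - row * spacing) / focal_depth + (height - 1) / 2
        px = min(max(round(u), 0), width - 1)
        py = min(max(round(v), 0), height - 1)
        value += w * read_pixel(row, col, py, px)
    return value
```

In this sketch a larger `aperture_radius` blends more cameras and so gives a shallower depth of field, while changing `focal_depth` moves the plane that appears sharp. Both are ordinary per-call arguments, so they can differ from one output pixel to the next.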
Sensor Pod

Each sensor pod contains a CMOS image sensor, buffer memory, and glue logic. The multiframe buffer memory is used for two functions. It stores information for noise cancellation, and it allows the host to access image rays asynchronously to the image scanning process. This modular design approach will allow us to upgrade to higher resolution sensors as they become available.

[Figure: Sensor Pod. Labels: CMOS Sensor (on-board A/D), FPGA Logic, SDRAM, Address, Data, Control.]

Display Technology

We have also developed techniques for direct autostereoscopic viewing of our light fields. These methods are similar to various lenticular techniques for viewing stereo images. Our synthetic aperture generation approach provides much greater flexibility than traditional optical approaches. In particular, it can overcome many limitations, such as focus control and skewed frustums. We have demonstrated viewers with true parallax (both horizontal and vertical) and variable, controlled focus. Our displays have nearly all of the desired properties of holograms, yet they are true color and viewable under normal lights. Furthermore, the technology is easily adaptable to the display of dynamic 3-D images. Currently we are limited only by the resolution of flat panel displays.

The image on the left, when viewed through a hexagonal lens array, can be seen as a three-dimensional image of a flower. It can be seen simultaneously by multiple viewers. It was computed from a dynamically reparameterized light field, which allows us to precisely control the focus at all viewing angles. The inset provides a magnified view of the image.
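To illustrate how an image for a lens-array display might be computed from a light field, the sketch below maps each display pixel to the ray it emits. It is an assumption-laden simplification: it uses a square lenslet grid rather than the hexagonal array mentioned above, treats each lenslet as a pinhole at its center, and the names `display_pixel_ray`, `pixels_per_lens`, `pixel_pitch`, and `lens_gap` are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def display_pixel_ray(px: int, py: int, pixels_per_lens: int,
                      pixel_pitch: float, lens_gap: float) -> Tuple[Vec3, Vec3]:
    """Return (origin, direction) of the ray that display pixel (px, py) emits.

    The display pixels lie in the plane z = 0 and the lenslet centers in the
    plane z = lens_gap. The ray starts at the center of the lenslet covering
    the pixel and points from the pixel through that center.
    """
    # Center of the pixel on the display plane.
    x = (px + 0.5) * pixel_pitch
    y = (py + 0.5) * pixel_pitch

    # Center of the lenslet that covers this pixel.
    lens_size = pixels_per_lens * pixel_pitch
    cx = (px // pixels_per_lens + 0.5) * lens_size
    cy = (py // pixels_per_lens + 0.5) * lens_size

    origin = (cx, cy, lens_gap)
    direction = (cx - x, cy - y, lens_gap)
    return origin, direction
```

Each returned ray could then be looked up in the light field to obtain the color for that display pixel. Pixels on opposite sides of a lenslet emit in opposite directions, which is what gives different viewers different views.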
