Image Style Transfer Using Convolutional Neural Networks

- Slides: 16

Image Style Transfer Using Convolutional Neural Networks

Leon A. Gatys, Alexander S. Ecker, Matthias Bethge
University of Tübingen, Germany

Overview presented by: Kyle Robinson

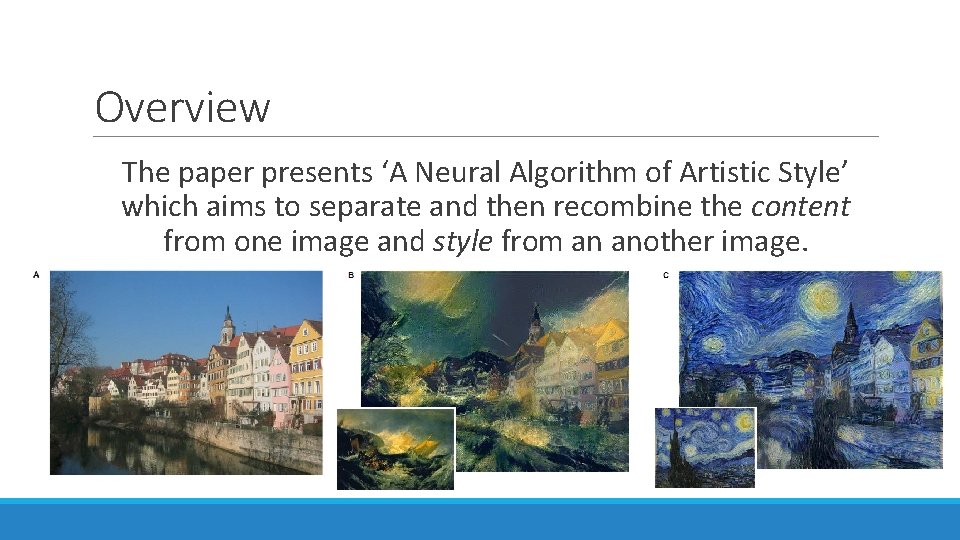

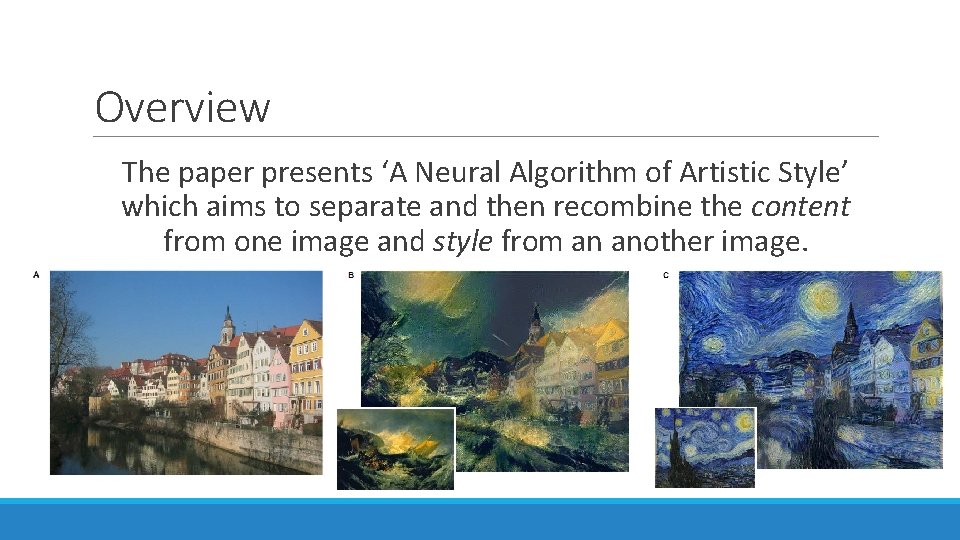

Overview

The paper presents 'A Neural Algorithm of Artistic Style', which aims to separate and then recombine the content of one image and the style of another.

Overview

The paper combines two existing CNN visualization techniques.

1. Brief overview of the supporting work
2. How the algorithm works
3. Parameters and tweaks
4. Discussion

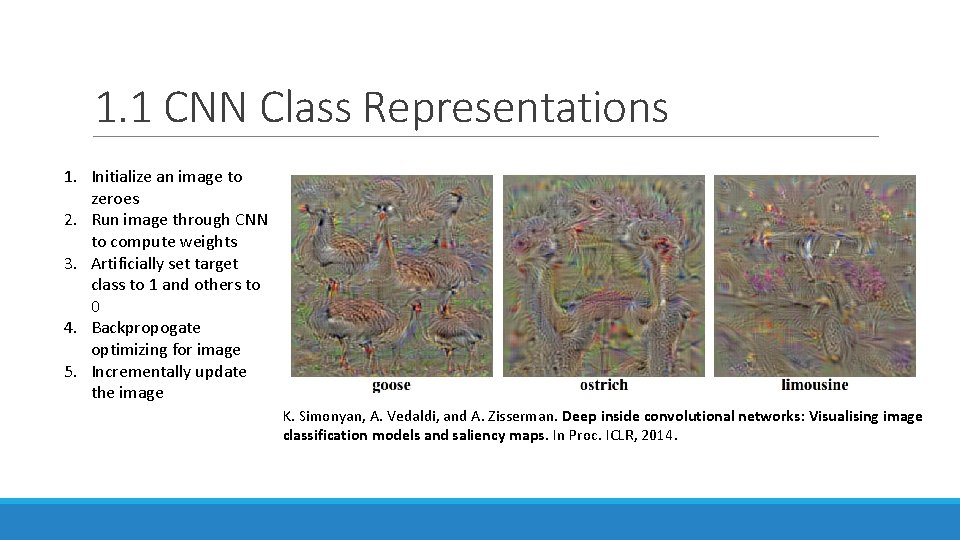

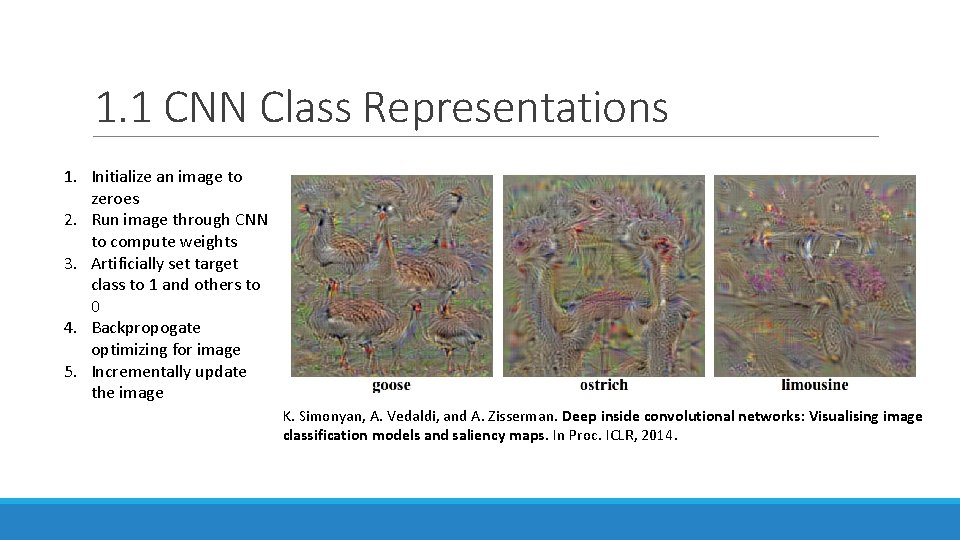

1.1 CNN Class Representations

1. Initialize an image to zeros
2. Run the image through the CNN to compute the class scores
3. Artificially set the target class to 1 and all others to 0
4. Backpropagate to obtain the gradient with respect to the image
5. Incrementally update the image

K. Simonyan, A. Vedaldi, and A. Zisserman. Deep inside convolutional networks: Visualising image classification models and saliency maps. In Proc. ICLR, 2014.
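The five steps above can be sketched in a few lines of plain Python. This is a toy illustration, not the setup from the cited paper: a small linear classifier (the hypothetical `weights` matrix) stands in for the CNN, and the L2 penalty `l2`, step size `lr`, and step count are assumed values chosen only to keep the example stable.

```python
def class_scores(weights, image):
    """Toy stand-in for a CNN forward pass: one linear score per class."""
    return [sum(w * p for w, p in zip(row, image)) for row in weights]


def visualize_class(weights, target, steps=100, lr=0.1, l2=0.01):
    """Gradient ascent on the image to raise the score of one class."""
    n_classes, n_pixels = len(weights), len(weights[0])
    image = [0.0] * n_pixels                        # step 1: zero image
    history = []
    for _ in range(steps):
        scores = class_scores(weights, image)       # step 2: forward pass
        history.append(scores[target])
        # Step 3: the signal sent backwards is 1 for the target class, 0 elsewhere.
        one_hot = [1.0 if c == target else 0.0 for c in range(n_classes)]
        # Step 4: backpropagate through the linear layer (W^T * one_hot),
        # minus the gradient of the assumed penalty l2 * ||image||^2.
        grad = [
            sum(one_hot[c] * weights[c][i] for c in range(n_classes))
            - 2.0 * l2 * image[i]
            for i in range(n_pixels)
        ]
        # Step 5: small update of the pixels; the network weights never change.
        image = [p + lr * g for p, g in zip(image, grad)]
    return image, history


toy_weights = [[1.0, 0.0, -1.0], [0.0, 1.0, 0.0]]  # 2 classes, 3 "pixels"
toy_image, toy_history = visualize_class(toy_weights, target=0)
```

The point of the sketch is that the optimization variable is the image itself; with a real CNN, an autodiff framework would supply the gradient in step 4.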

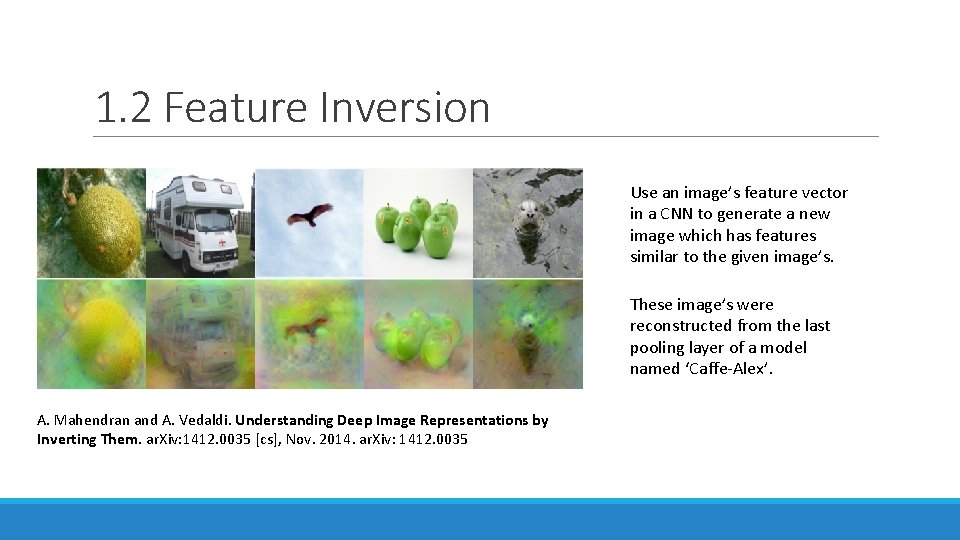

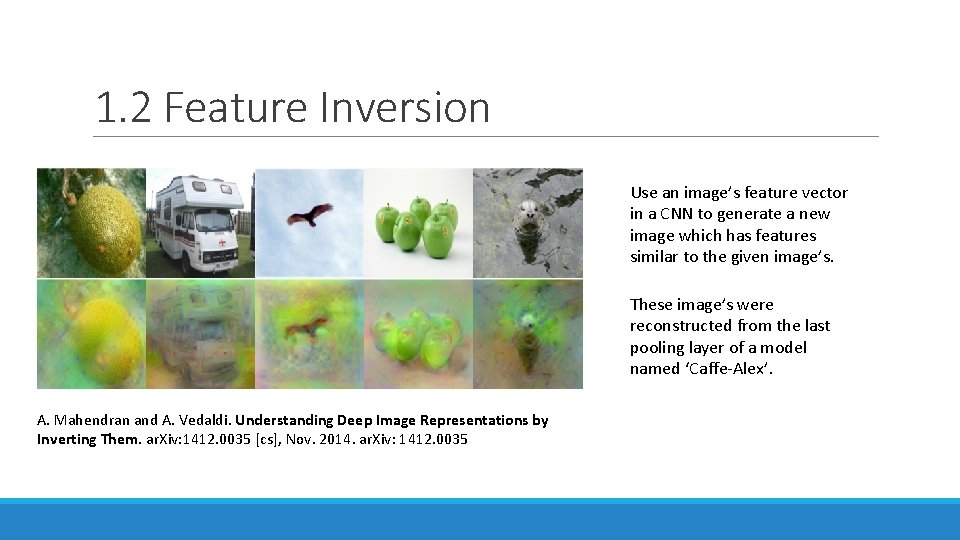

1.2 Feature Inversion

Use an image's feature vector in a CNN to generate a new image whose features are similar to those of the given image. These images were reconstructed from the last pooling layer of a model named 'Caffe-Alex'.

A. Mahendran and A. Vedaldi. Understanding Deep Image Representations by Inverting Them. arXiv:1412.0035 [cs], Nov. 2014.
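A minimal sketch of the idea, under strong simplifying assumptions: a hypothetical linear map `weights` plays the role of the CNN layer, the loss is the plain squared distance between feature vectors, and no image regularizer is used. It is meant only to show that the image is found by gradient descent on a feature-matching loss.

```python
def features(weights, image):
    """Toy stand-in for a CNN layer: a linear feature extractor."""
    return [sum(w * p for w, p in zip(row, image)) for row in weights]


def invert_features(weights, target, steps=500, lr=0.1):
    """Find an image whose features match `target` by gradient descent."""
    n_feats, n_pixels = len(weights), len(weights[0])
    image = [0.0] * n_pixels
    for _ in range(steps):
        residual = [f - t for f, t in zip(features(weights, image), target)]
        # Gradient of ||W x - target||^2 with respect to x is 2 * W^T * residual.
        grad = [
            2.0 * sum(residual[r] * weights[r][i] for r in range(n_feats))
            for i in range(n_pixels)
        ]
        image = [p - lr * g for p, g in zip(image, grad)]
    return image


toy_weights = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]   # 3 "pixels" -> 2 features
original = [0.2, 0.7, 0.4]
reconstruction = invert_features(toy_weights, features(toy_weights, original))
```

In this toy case the reconstruction reproduces the features of `original` without reproducing its pixels, which mirrors why reconstructions from deep layers keep the content but lose exact detail.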

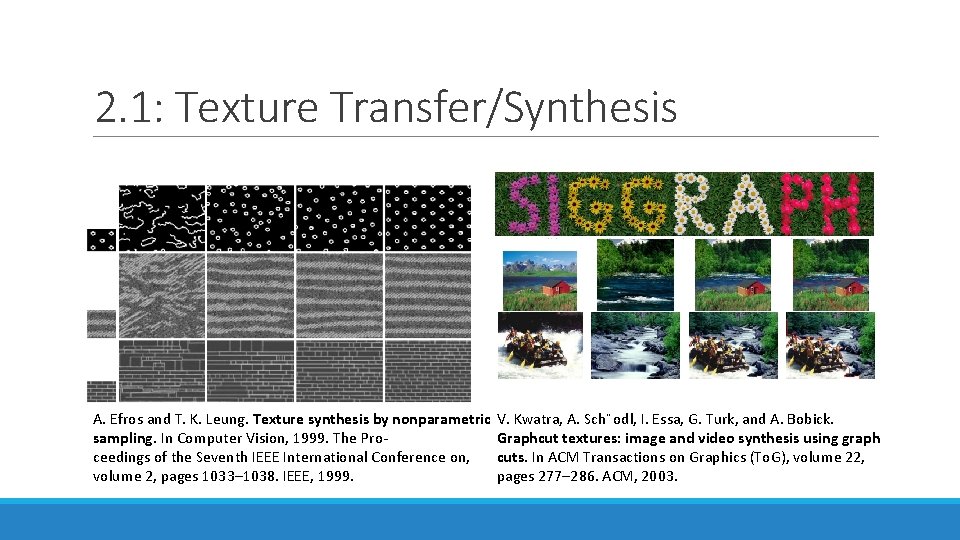

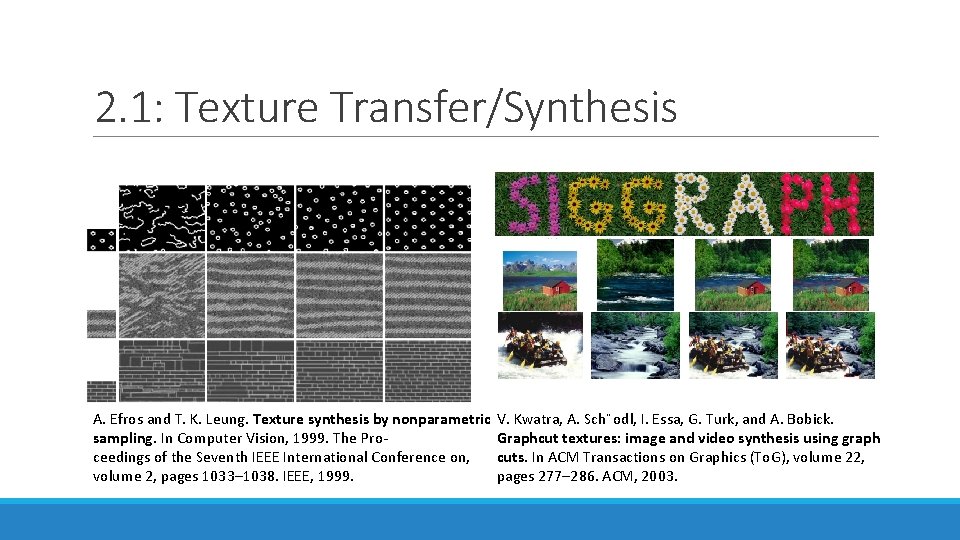

2.1: Texture Transfer/Synthesis

- A. Efros and T. K. Leung. Texture synthesis by nonparametric sampling. In Computer Vision, 1999. The Proceedings of the Seventh IEEE International Conference on, volume 2, pages 1033–1038. IEEE, 1999.
- V. Kwatra, A. Schödl, I. Essa, G. Turk, and A. Bobick. Graphcut textures: image and video synthesis using graph cuts. In ACM Transactions on Graphics (ToG), volume 22, pages 277–286. ACM, 2003.

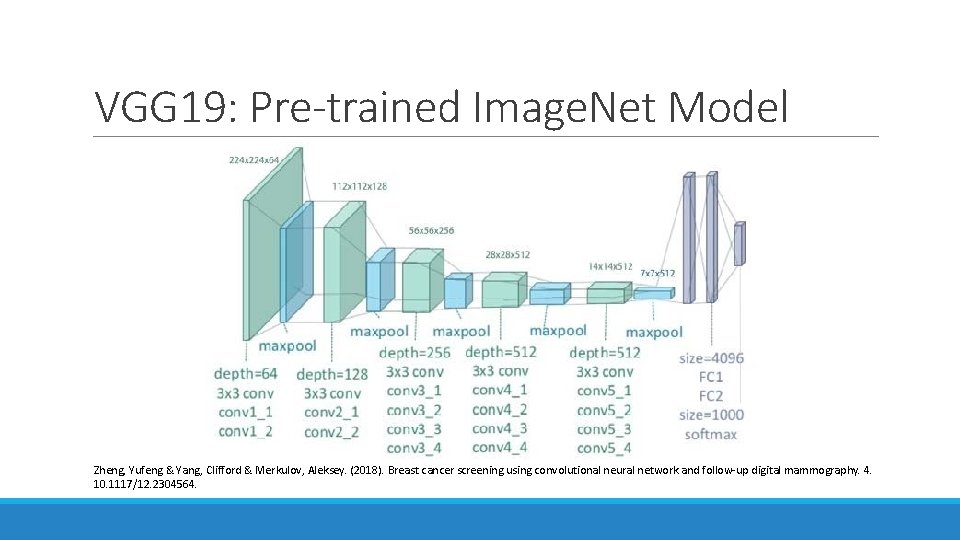

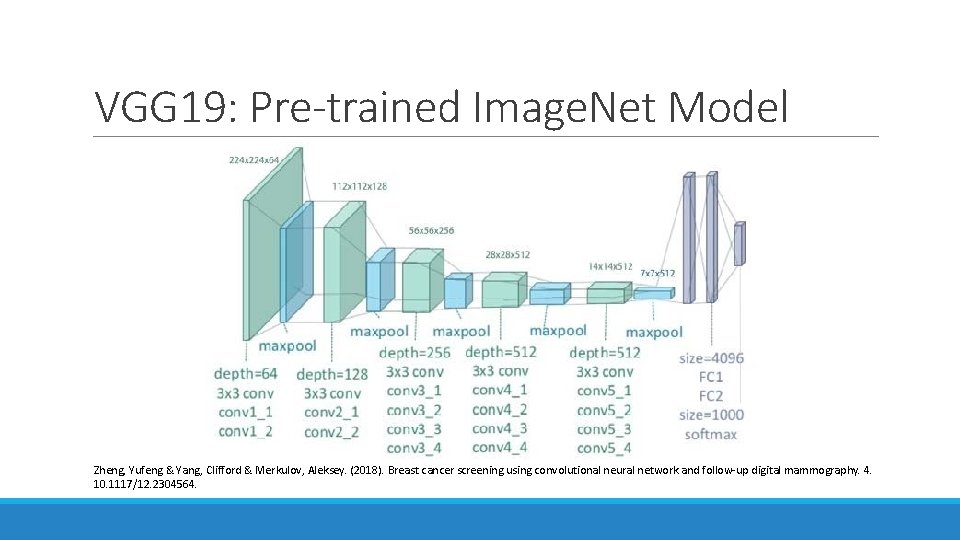

VGG-19: Pre-trained ImageNet Model

Zheng, Yufeng & Yang, Clifford & Merkulov, Aleksey. (2018). Breast cancer screening using convolutional neural network and follow-up digital mammography. 4. 10.1117/12.2304564.

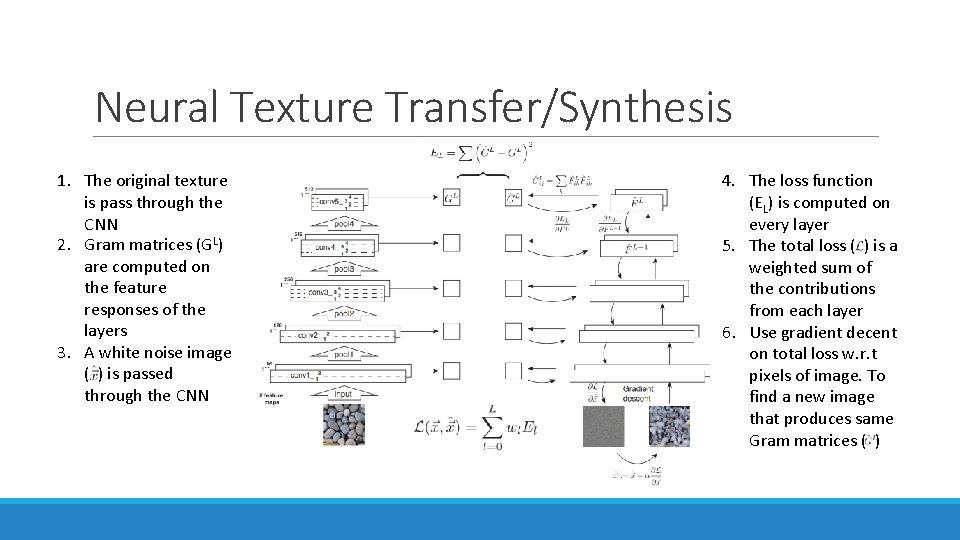

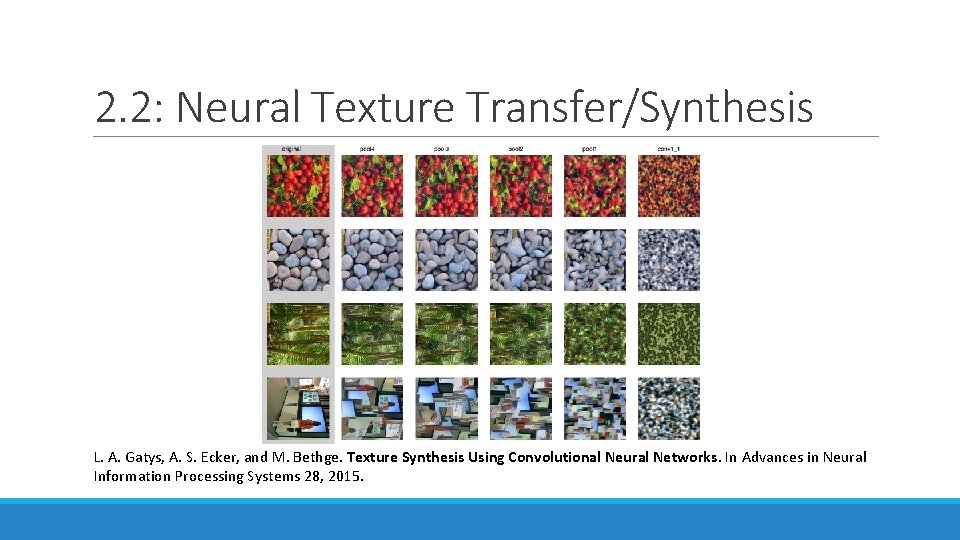

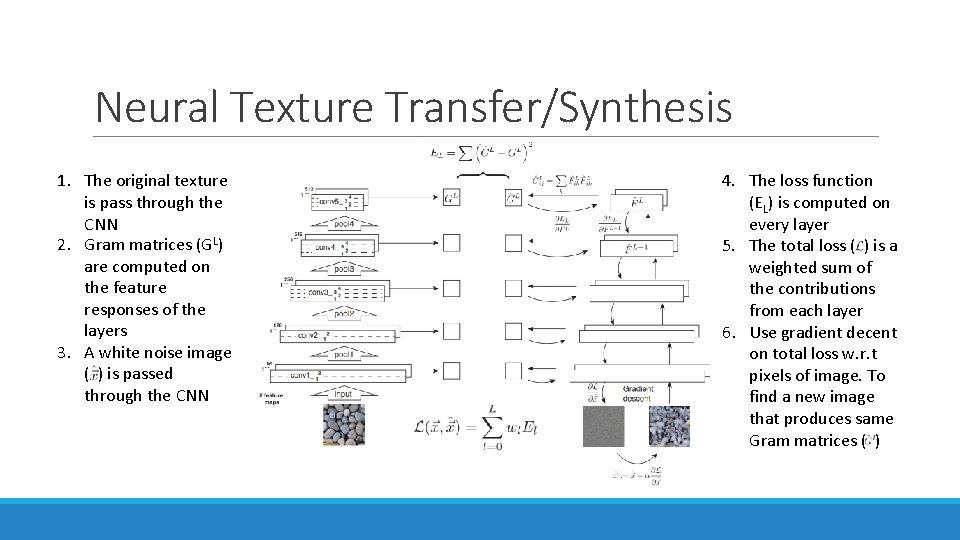

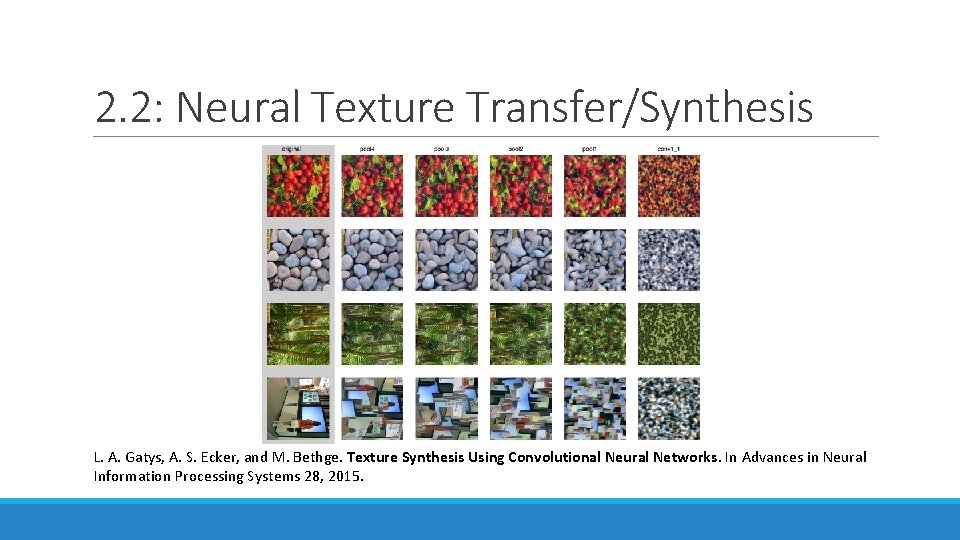

Neural Texture Transfer/Synthesis

1. The original texture is passed through the CNN
2. Gram matrices (G^l) are computed on the feature responses of the layers
3. A white noise image is passed through the CNN
4. The loss function (E_l) is computed on every layer
5. The total loss is a weighted sum of the contributions from each layer
6. Use gradient descent on the total loss w.r.t. the pixels of the image to find a new image that produces the same Gram matrices
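Steps 2, 4 and 5 can be written out directly. The sketch below assumes each layer's feature response is given as a list of N filter rows with M spatial positions each, and uses the per-layer normalization 1 / (4 N² M²) from the Gatys et al. texture paper; the function names and the toy feature values are illustrative only.

```python
def gram_matrix(features):
    """G[i][j] = inner product of filter i and filter j over all positions."""
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features]
            for fi in features]


def layer_style_loss(gen_features, style_features):
    """E_l: squared distance between Gram matrices, scaled by 1/(4 N^2 M^2)."""
    n, m = len(gen_features), len(gen_features[0])
    g = gram_matrix(gen_features)
    a = gram_matrix(style_features)
    squared = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return squared / (4.0 * n ** 2 * m ** 2)


def total_style_loss(gen_layers, style_layers, layer_weights):
    """Weighted sum of the per-layer contributions."""
    return sum(w * layer_style_loss(g, s)
               for w, g, s in zip(layer_weights, gen_layers, style_layers))


texture = [[1, 2], [3, 4]]     # 2 filters, 2 spatial positions
shuffled = [[2, 1], [4, 3]]    # same responses, positions swapped
print(gram_matrix(texture))
print(layer_style_loss(shuffled, texture))
```

Swapping the spatial positions leaves the Gram matrix unchanged, so the loss between `shuffled` and `texture` is zero. This is the property that lets the Gram matrix capture texture while ignoring spatial layout.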

2.2: Neural Texture Transfer/Synthesis

L. A. Gatys, A. S. Ecker, and M. Bethge. Texture Synthesis Using Convolutional Neural Networks. In Advances in Neural Information Processing Systems 28, 2015.

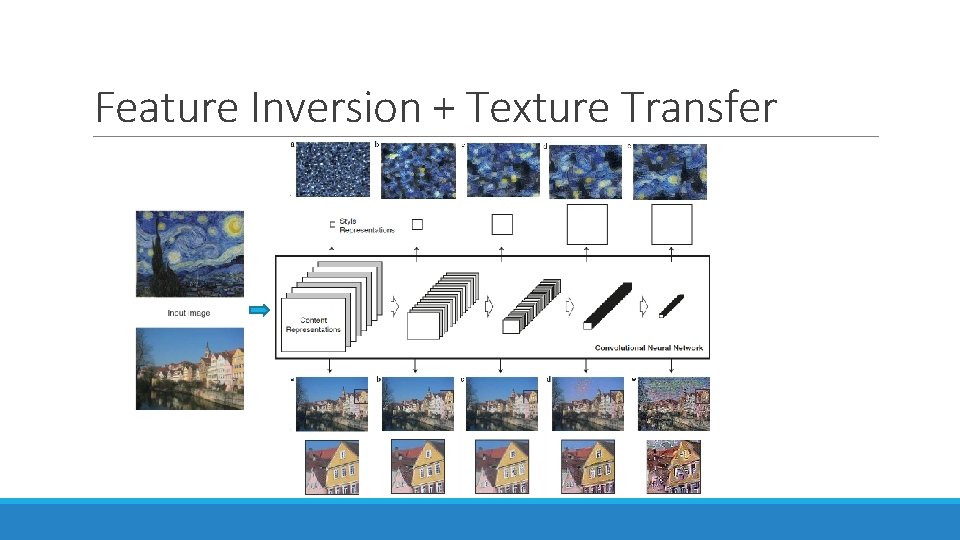

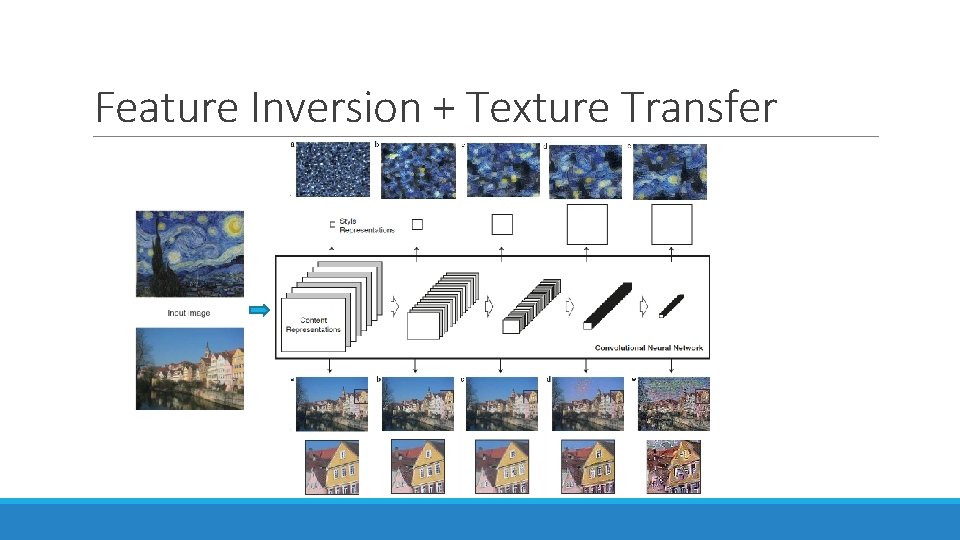

Feature Inversion + Texture Transfer

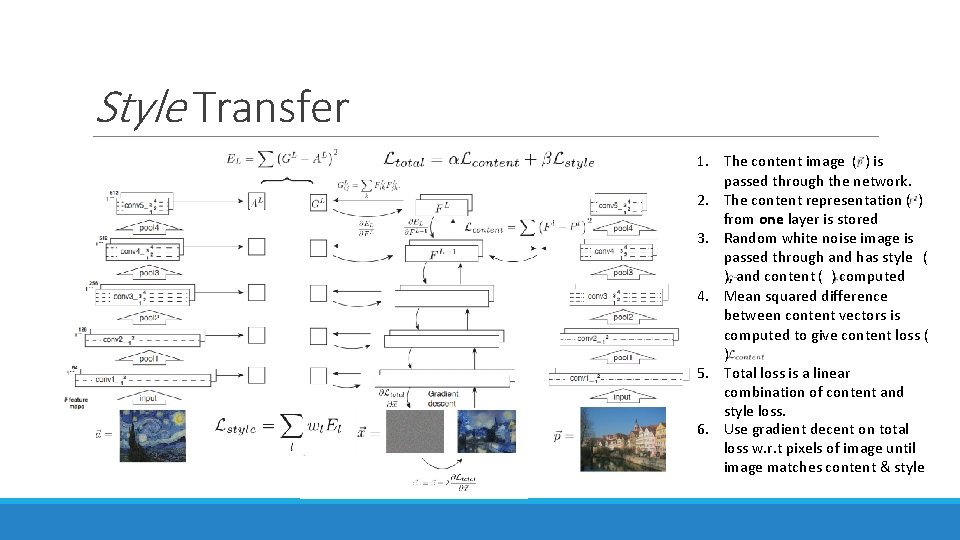

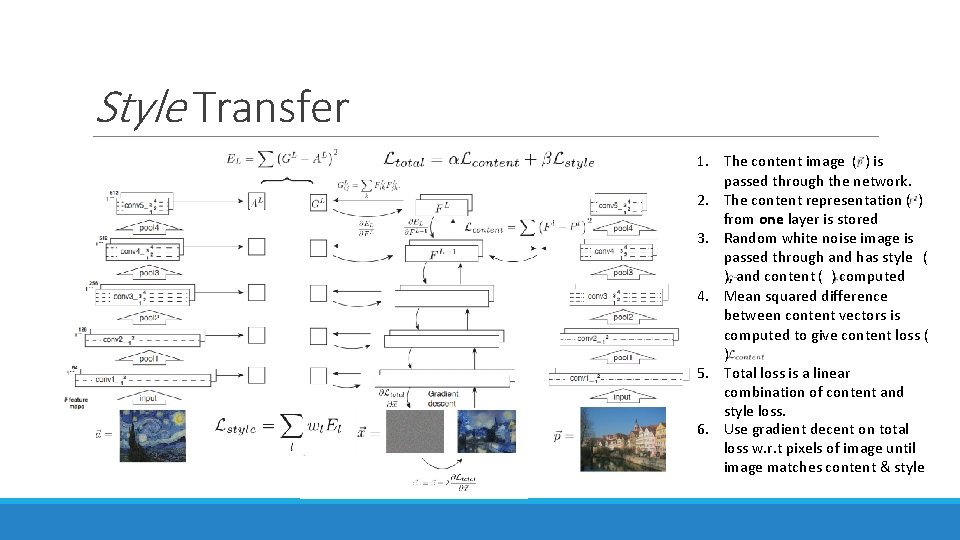

Style Transfer

1. The content image is passed through the network
2. The content representation from one layer is stored
3. A random white noise image is passed through the network, and its style and content representations are computed
4. The mean squared difference between the content representations is computed to give the content loss
5. The total loss is a linear combination of the content and style losses
6. Use gradient descent on the total loss w.r.t. the pixels of the image until the image matches both content and style
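The loop in steps 3 to 6 can be sketched as follows. This is a heavily simplified illustration: the "network" is assumed to be the identity, so the image and its feature map (N filter rows by M positions) are the same object, a single layer is used for both losses, and the weights `alpha` and `beta`, the step size, and the toy values are arbitrary choices rather than settings from the paper.

```python
def gram(feats):
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in feats] for fi in feats]


def total_loss(feats, content, style_gram, alpha, beta):
    n, m = len(feats), len(feats[0])
    # Content loss: mean squared difference between feature maps.
    content_loss = sum((feats[i][k] - content[i][k]) ** 2
                       for i in range(n) for k in range(m)) / (n * m)
    # Style loss: squared Gram-matrix distance, scaled by 1/(4 N^2 M^2).
    g = gram(feats)
    style_loss = sum((g[i][j] - style_gram[i][j]) ** 2
                     for i in range(n) for j in range(n)) / (4.0 * n ** 2 * m ** 2)
    return alpha * content_loss + beta * style_loss


def total_grad(feats, content, style_gram, alpha, beta):
    """Analytic gradient of total_loss with respect to every feature value."""
    n, m = len(feats), len(feats[0])
    g = gram(feats)
    diff = [[g[i][j] - style_gram[i][j] for j in range(n)] for i in range(n)]
    grad = []
    for i in range(n):
        row = []
        for k in range(m):
            d_content = 2.0 * (feats[i][k] - content[i][k]) / (n * m)
            d_style = sum(diff[i][j] * feats[j][k] for j in range(n)) / (n ** 2 * m ** 2)
            row.append(alpha * d_content + beta * d_style)
        grad.append(row)
    return grad


def transfer(init, content, style_gram, alpha=1.0, beta=1.0, steps=300, lr=0.1):
    """Plain gradient descent on the image, starting from `init`."""
    feats = [row[:] for row in init]
    for _ in range(steps):
        grad = total_grad(feats, content, style_gram, alpha, beta)
        feats = [[p - lr * d for p, d in zip(f_row, g_row)]
                 for f_row, g_row in zip(feats, grad)]
    return feats


content = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
style_gram = gram([[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]])
noise = [[0.5, -0.5, 0.2], [0.1, 0.3, -0.4]]       # stands in for white noise
result = transfer(noise, content, style_gram)
```

Raising `beta` relative to `alpha` pulls the result toward the style Gram matrix at the expense of the content, and vice versa. With a real CNN the gradient would come from backpropagation through the network rather than the closed form used here.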

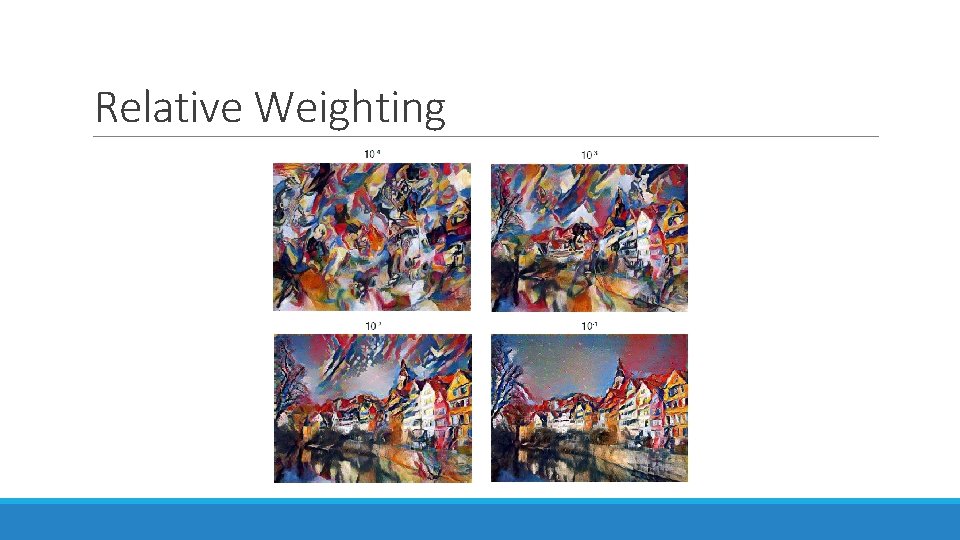

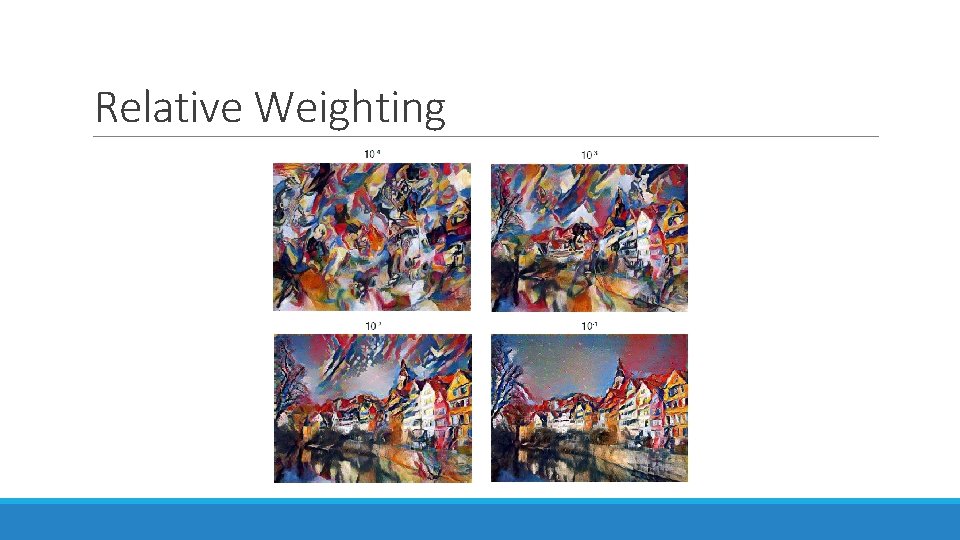

Relative Weighting

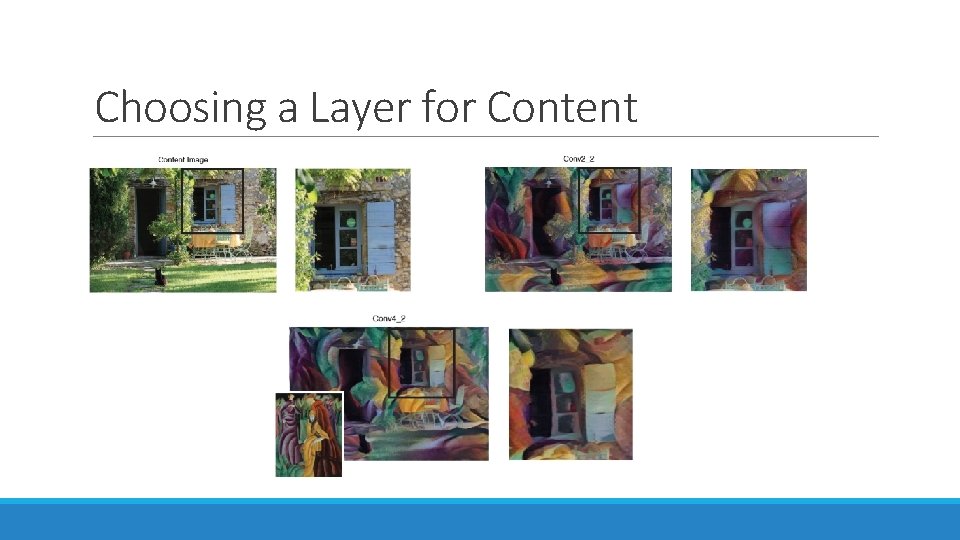

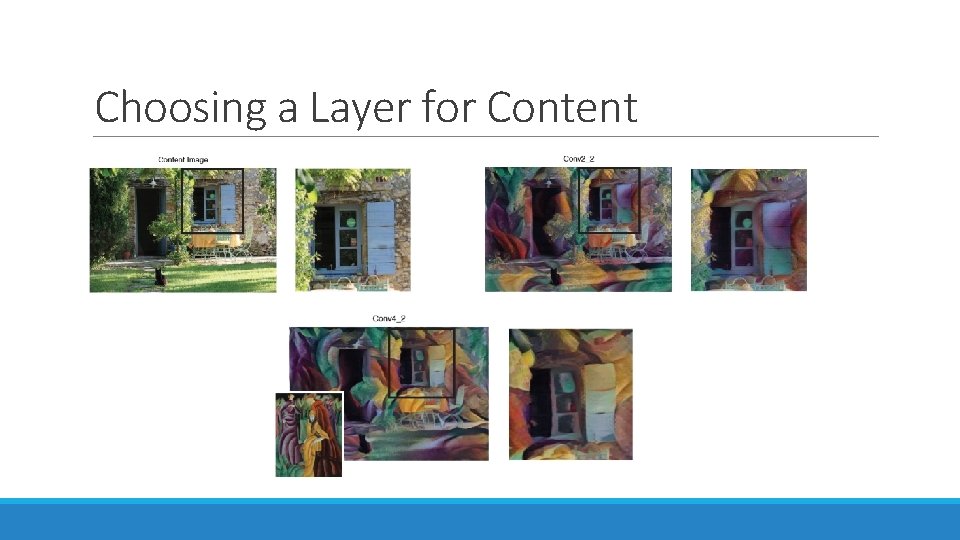

Choosing a Layer for Content

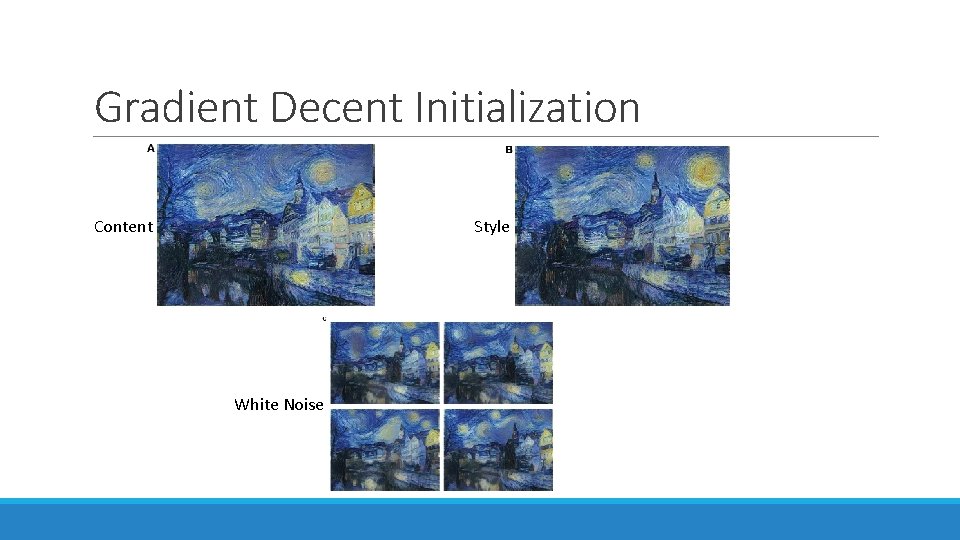

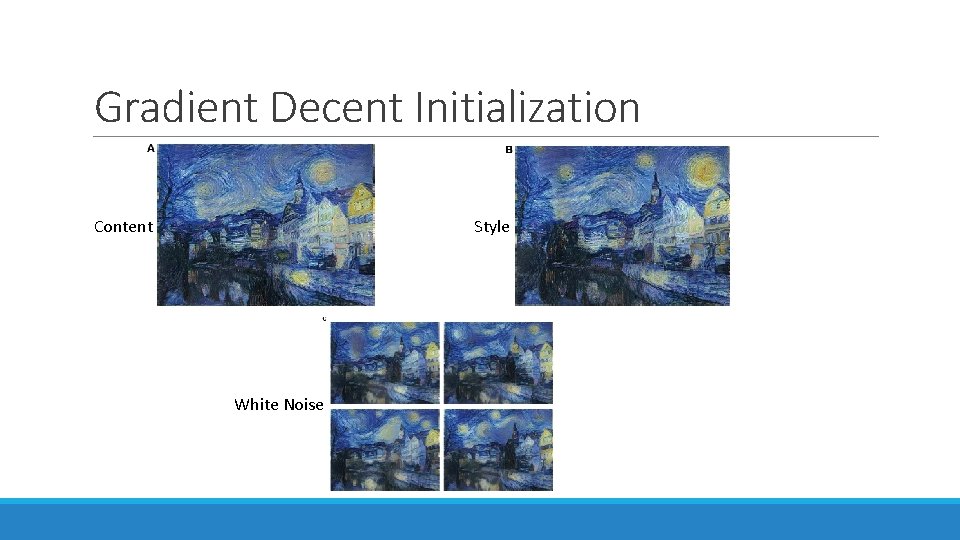

Gradient Descent Initialization: Content, Style, White Noise

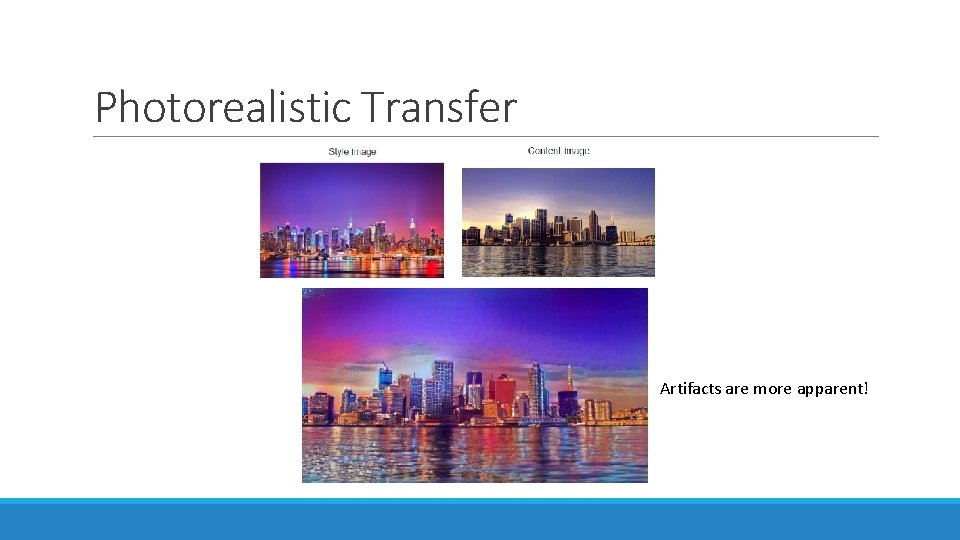

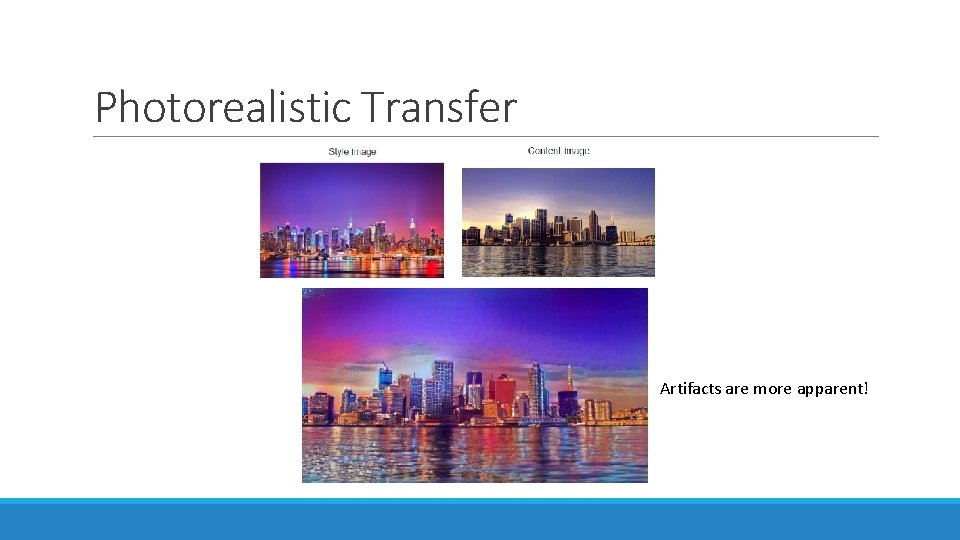

Photorealistic Transfer

Artifacts are more apparent!

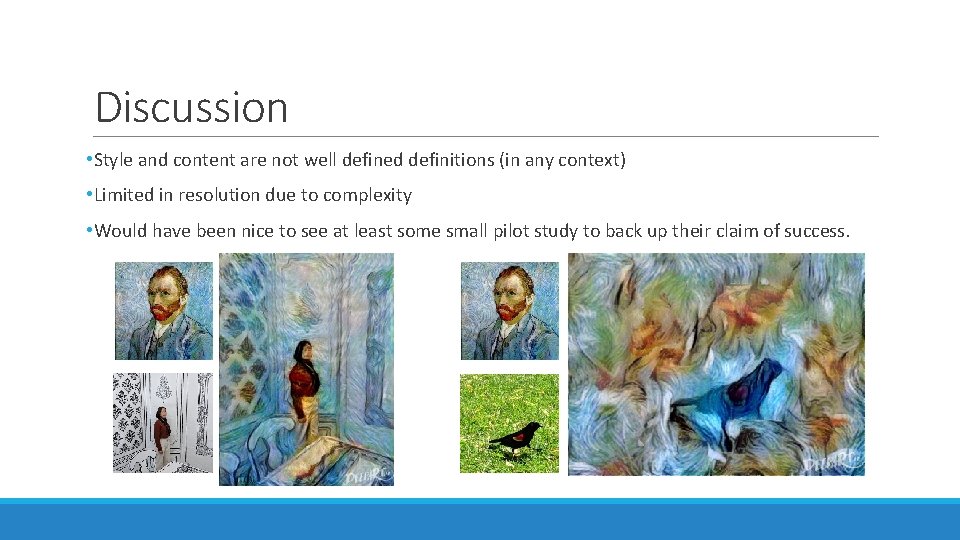

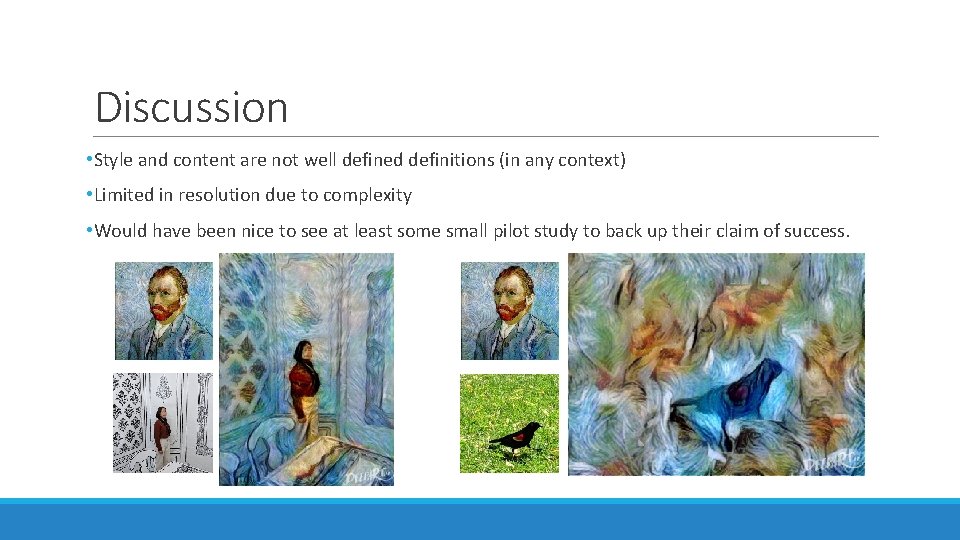

Discussion

- Style and content are not well-defined concepts (in any context)
- Resolution is limited by computational complexity
- It would have been nice to see at least a small pilot study to back up the authors' claim of success