ImageNet Classification with Deep Convolutional Neural Networks

- Slides: 30

ImageNet Classification with Deep Convolutional Neural Networks
http://people.cs.ksu.edu/~okerinde/

Introduction

Objective:
- To train a large, deep convolutional neural network (on subsets of ImageNet) to classify 1.2 million high-resolution images into 1000 different categories.
- To learn about thousands of objects from millions of images.

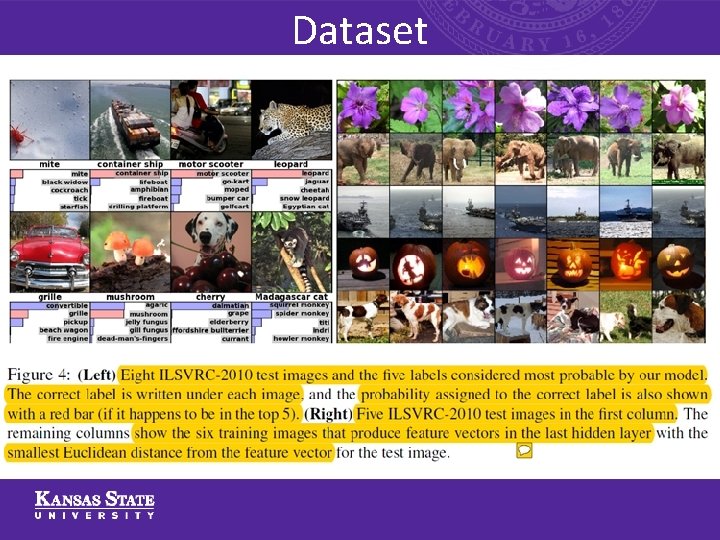

Introduction

Dataset:
- Over 15 million labeled high-resolution images belonging to roughly 22,000 categories
- Images labeled by human labelers using Amazon's Mechanical Turk crowd-sourcing tool
- ILSVRC subset used for this work: roughly 1000 images in each of 1000 categories
- 1.2 million training images
- 50,000 validation images
- 150,000 testing images

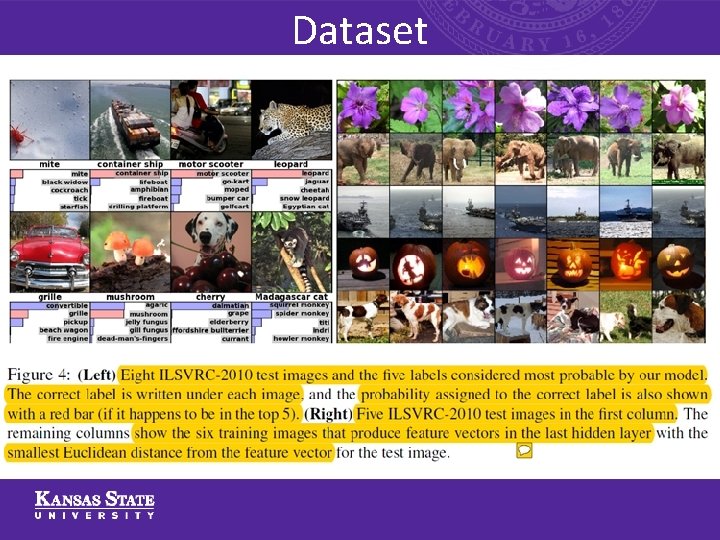

Dataset

Terminology
- Deep Convolutional Neural Networks: the capacity of convolutional neural networks (CNNs) can be controlled by varying their depth and breadth, and they also make strong and mostly correct assumptions about the nature of images (namely, stationarity of statistics and locality of pixel dependencies).
- Object recognition
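To make "locality" and "stationarity" concrete, here is a minimal sketch of a single-channel 2-D convolution (implemented, as in most deep learning libraries, as cross-correlation without flipping the kernel). Each output value depends only on a small patch of the input (locality), and the same kernel weights are reused at every position (stationarity, i.e. weight sharing). Python and the function name `conv2d_valid` are assumptions for illustration; the network's real layers are multi-channel and run on GPUs.

```python
def conv2d_valid(image, kernel, stride=1):
    """Slide one kernel over a 2-D image (list of rows), no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Locality: this output only sees a kh x kw patch of the input.
            # Stationarity: the same kernel weights are used at every (r, c).
            s = 0.0
            for i in range(kh):
                for j in range(kw):
                    s += image[r * stride + i][c * stride + j] * kernel[i][j]
            row.append(s)
        out.append(row)
    return out


if __name__ == "__main__":
    # A vertical edge between columns 1 and 2, detected by a [-1, 1] kernel.
    step = [[0, 0, 1, 1]] * 3
    print(conv2d_valid(step, [[-1, 1]]))
```

Because the kernel is shared, the layer has only `kh * kw` weights no matter how large the image is, which is what lets depth and breadth (rather than input size) control capacity.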

Methodology
- The Architecture
- ReLU Nonlinearity
- Local Response Normalization
- Dropout
- Data Augmentation
- Principal Component Analysis (of RGB pixel values, used for color augmentation)
- Stochastic Gradient Descent with Momentum

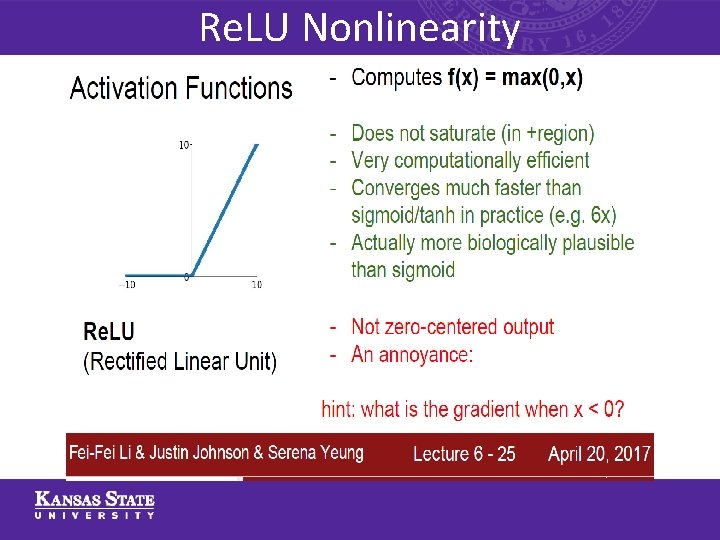

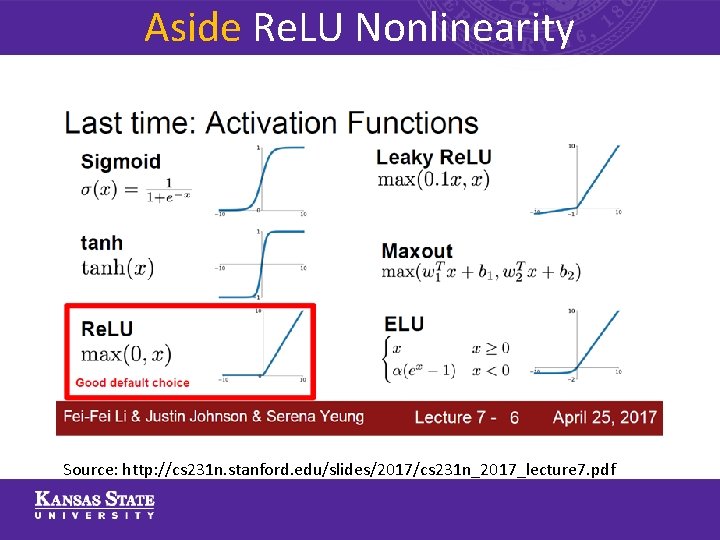

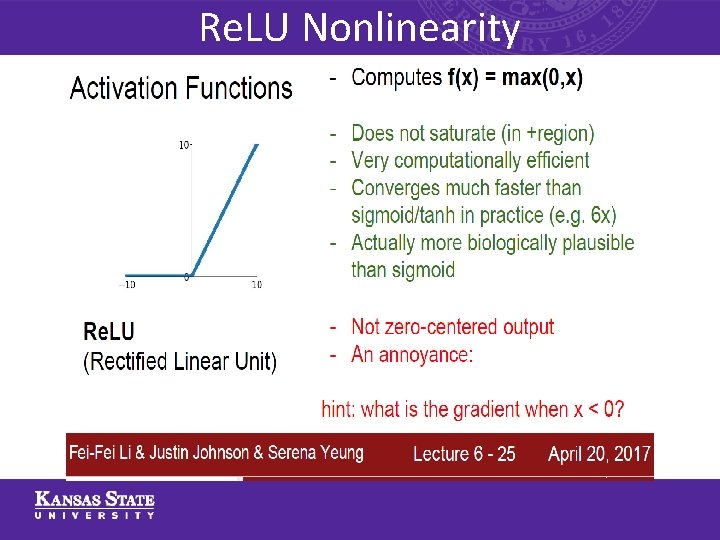

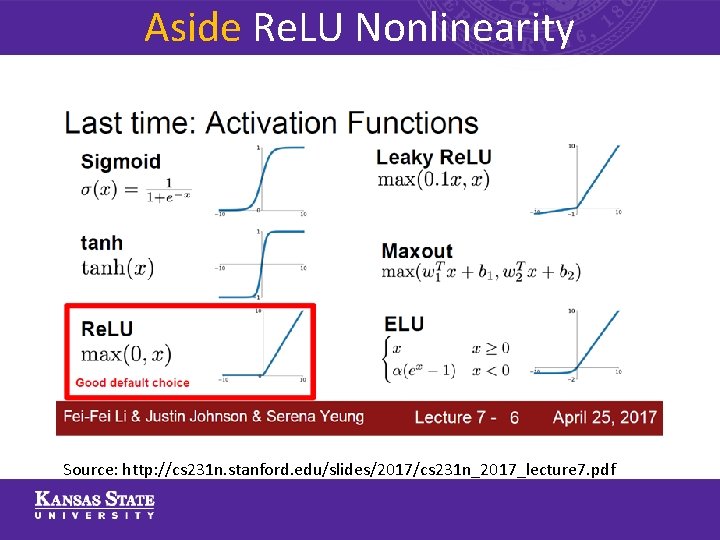

ReLU Nonlinearity

Aside: ReLU Nonlinearity
Source: http://cs231n.stanford.edu/slides/2017/cs231n_2017_lecture7.pdf
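A minimal Python sketch of why ReLU, f(x) = max(0, x), is called non-saturating: its gradient stays at 1 for every positive input, whereas the gradient of tanh shrinks toward 0 as |x| grows. The paper reports that networks with ReLUs train several times faster than their tanh equivalents. The function names below are illustrative, not from the paper.

```python
import math


def relu(x: float) -> float:
    """ReLU nonlinearity: f(x) = max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU (taken as 0 at x == 0 by convention)."""
    return 1.0 if x > 0 else 0.0


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2, which vanishes as |x| grows (saturation)."""
    return 1.0 - math.tanh(x) ** 2


if __name__ == "__main__":
    for x in (-2.0, 0.5, 5.0):
        print(f"x={x:5.1f}  relu={relu(x):4.1f}  relu'={relu_grad(x):3.1f}  tanh'={tanh_grad(x):.4f}")
```

At x = 5 the tanh gradient is already below 0.001, while the ReLU gradient is still 1, so gradient updates for active ReLU units do not shrink.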

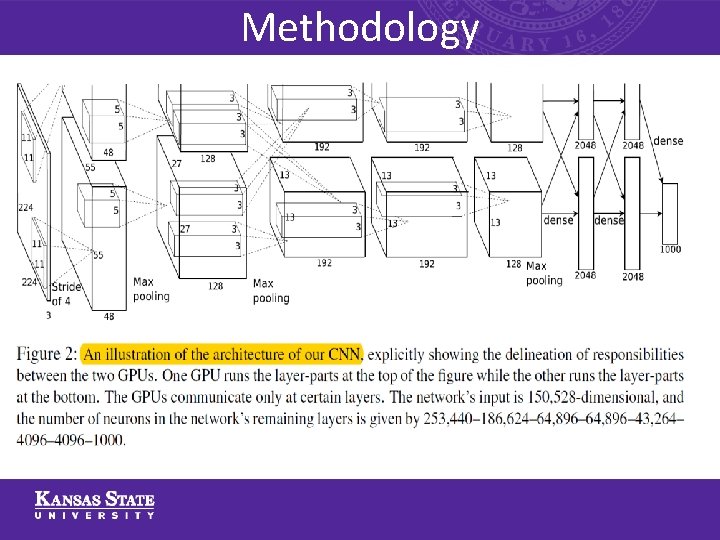

Methodology

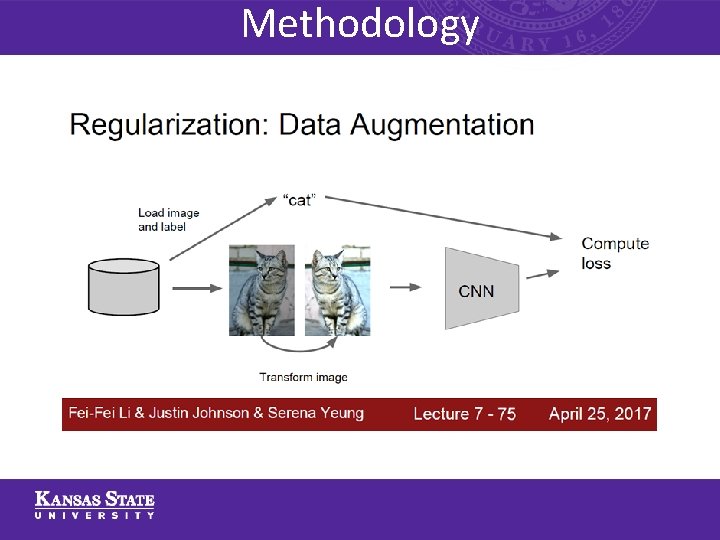

Methodology

Reducing Overfitting:
- Data Augmentation
- Dropout

Methodology

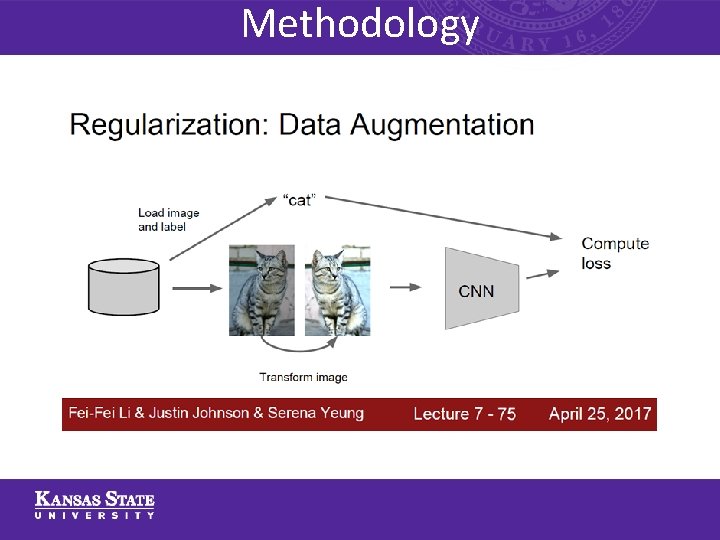

Methodology

Data Augmentation
Section 4.1 …discuss
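Section 4.1 of the paper describes two forms of data augmentation: extracting random 224×224 patches (and their horizontal reflections) from the 256×256 training images, and altering RGB intensities by adding multiples of the principal components of the RGB pixel values, with magnitudes proportional to the eigenvalues times a random variable drawn from a Gaussian with mean 0 and standard deviation 0.1. The sketch below assumes images stored as nested Python lists and an eigen-decomposition of the 3×3 RGB covariance that has already been computed over the training set (that step is not shown). All function names and the example eigenvalues are hypothetical.

```python
import random


def random_crop_and_flip(image, crop, rng):
    """Take a random crop x crop patch from an H x W image (a list of rows)
    and mirror it horizontally with probability 0.5."""
    h, w = len(image), len(image[0])
    top = rng.randint(0, h - crop)    # randint is inclusive at both ends
    left = rng.randint(0, w - crop)
    patch = [row[left:left + crop] for row in image[top:top + crop]]
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]  # horizontal reflection
    return patch


def pca_color_shift(eigvals, eigvecs, rng, sigma=0.1):
    """RGB offset sum_i alpha_i * lambda_i * p_i, where each alpha_i is drawn
    from a Gaussian with mean 0 and standard deviation sigma.

    eigvecs[i] is eigenvector p_i (length 3) of the RGB covariance and
    eigvals[i] its eigenvalue lambda_i; both are assumed to be precomputed.
    """
    alphas = [rng.gauss(0.0, sigma) for _ in eigvals]
    return [
        sum(alphas[i] * eigvals[i] * eigvecs[i][c] for i in range(len(eigvals)))
        for c in range(3)
    ]


def add_color_shift(patch, shift):
    """Add the same RGB offset to every (r, g, b) pixel of the patch."""
    return [[tuple(v + d for v, d in zip(px, shift)) for px in row] for row in patch]


if __name__ == "__main__":
    rng = random.Random(0)
    image = [[(r, c, 0) for c in range(256)] for r in range(256)]  # dummy 256x256 RGB image
    patch = random_crop_and_flip(image, 224, rng)
    # Hypothetical eigen-decomposition, for illustration only.
    eigvals = [0.2, 0.02, 0.005]
    eigvecs = [[0.58, 0.58, 0.58], [0.71, 0.0, -0.71], [0.41, -0.82, 0.41]]
    patch = add_color_shift(patch, pca_color_shift(eigvals, eigvecs, rng))
    print(len(patch), len(patch[0]))
```

A fresh crop, flip and set of alphas would be drawn each time an image is used for training, so the network rarely sees exactly the same input twice.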

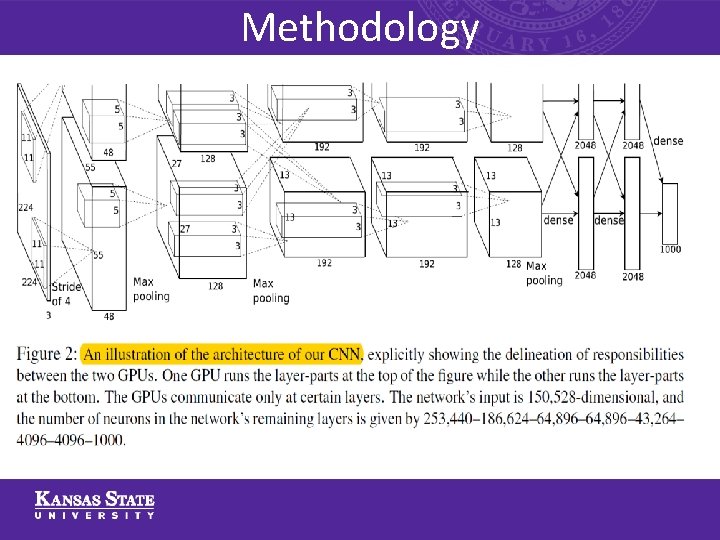

Methodology

The Neural Network has:
- 60 million parameters
- 650,000 neurons
- Five convolutional layers
- Three fully-connected layers
- A final 1000-way softmax
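As a rough check on the "60 million parameters" figure, here is a sketch that counts weights and biases layer by layer. It assumes the layer shapes given in Section 3.5 of the paper (96 kernels of 11×11×3; 256 of 5×5×48; 384 of 3×3×256; 384 of 3×3×192; 256 of 3×3×192; fully-connected layers of 4096, 4096 and 1000 units), one bias per kernel or unit, and a 6×6×256 input to the first fully-connected layer. The 48- and 192-channel kernel depths reflect the paper's split of the network across two GPUs. The helper names are hypothetical.

```python
def conv_params(kernel, depth, n_kernels):
    """Weights (kernel x kernel x depth per kernel) plus one bias per kernel."""
    return kernel * kernel * depth * n_kernels + n_kernels


def fc_params(n_in, n_out):
    """Dense weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out


LAYERS = {
    "conv1": conv_params(11, 3, 96),
    "conv2": conv_params(5, 48, 256),     # depth 48: each kernel sees one GPU's half of conv1
    "conv3": conv_params(3, 256, 384),    # conv3 connects to all conv2 maps
    "conv4": conv_params(3, 192, 384),
    "conv5": conv_params(3, 192, 256),
    "fc6": fc_params(6 * 6 * 256, 4096),  # assumed 6x6x256 input after the last pooling
    "fc7": fc_params(4096, 4096),
    "fc8": fc_params(4096, 1000),         # feeds the 1000-way softmax
}

if __name__ == "__main__":
    total = sum(LAYERS.values())
    fc_total = sum(v for name, v in LAYERS.items() if name.startswith("fc"))
    print(f"total parameters: {total:,}")
    print(f"fully-connected share: {fc_total / total:.1%}")
```

Under these assumptions the count is 60,965,224, consistent with the paper's "60 million", and about 96% of it sits in the three fully-connected layers.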

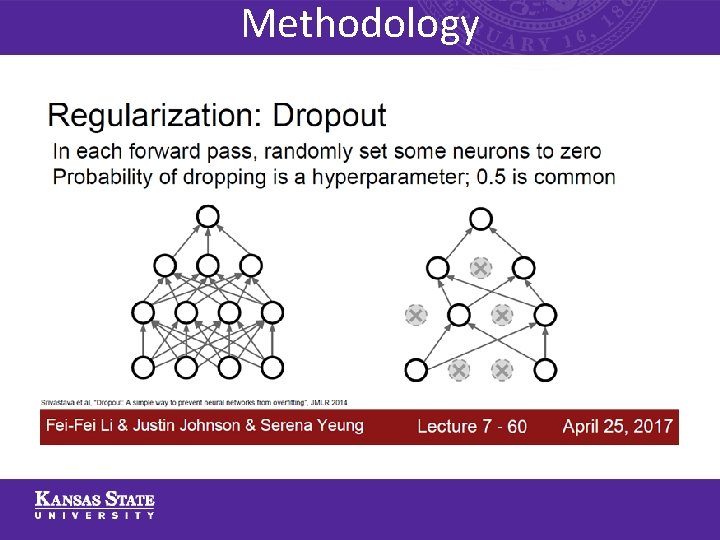

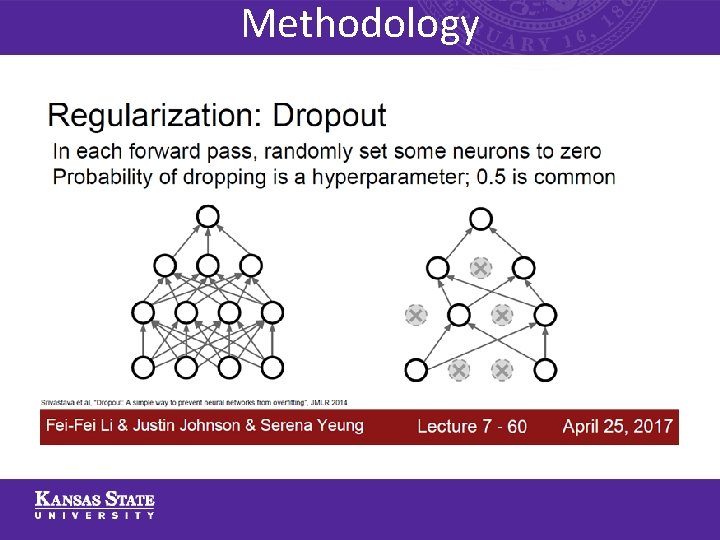

Methodology

Regularization method used to reduce overfitting:
- Dropout

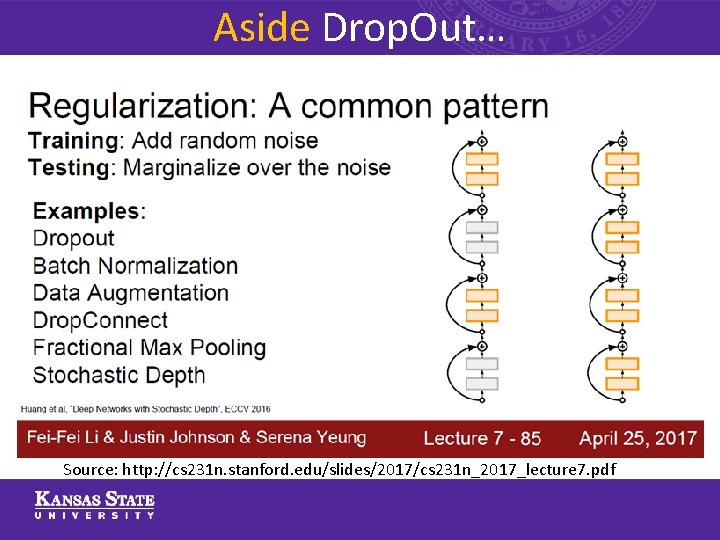

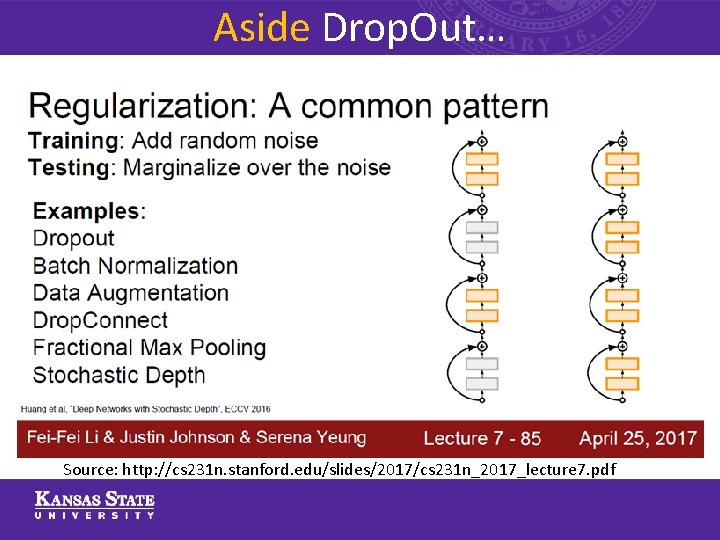

Aside: Dropout…
Source: http://cs231n.stanford.edu/slides/2017/cs231n_2017_lecture7.pdf
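The dropout described in the paper sets the output of each hidden neuron to zero with probability 0.5 during training and, at test time, uses all neurons but multiplies their outputs by 0.5. Below is a minimal sketch of that scheme on a plain list of activations; the function names are hypothetical. Many course materials, cs231n included, also present the "inverted dropout" variant, which rescales by 1/(1 - p) during training so that test time needs no change.

```python
import random


def dropout_train(activations, p_drop=0.5, rng=None):
    """Training: zero each activation independently with probability p_drop."""
    if rng is None:
        rng = random.Random()
    return [0.0 if rng.random() < p_drop else a for a in activations]


def dropout_test(activations, p_drop=0.5):
    """Test: keep every neuron but scale outputs by the keep probability (1 - p_drop)."""
    return [a * (1.0 - p_drop) for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)
    h = [1.0, 2.0, 3.0, 4.0]
    print("train:", dropout_train(h, rng=rng))
    print("test: ", dropout_test(h))
```

The test-time scaling makes each neuron's output equal to its expected output during training, which approximates averaging the predictions of the many "thinned" networks sampled during training.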

Methodology

Local Response Normalization
Section 3.3 …discuss
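Section 3.3 normalizes the activity of kernel i at a position (x, y) by the sum of squared activities of n "adjacent" kernel maps at the same position, using k = 2, n = 5, α = 10^-4 and β = 0.75. The sketch below applies that formula to the N channel values at a single spatial position; handling one position at a time, and the function name, are simplifying assumptions for illustration.

```python
def local_response_norm(a, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Normalize a list of N channel activations at one spatial position (x, y).

    b[i] = a[i] / (k + alpha * sum_{j=max(0, i-n//2)}^{min(N-1, i+n//2)} a[j]**2) ** beta
    """
    N = len(a)
    out = []
    for i in range(N):
        lo = max(0, i - n // 2)
        hi = min(N - 1, i + n // 2)
        # Sum of squares over the neighboring channels (window clipped at the edges).
        s = sum(a[j] ** 2 for j in range(lo, hi + 1))
        out.append(a[i] / (k + alpha * s) ** beta)
    return out


if __name__ == "__main__":
    print(local_response_norm([10.0] * 7, alpha=1.0))
```

A channel whose neighbors are strongly active is suppressed more, which creates competition between kernel maps (the paper's "lateral inhibition").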

Methodology

Making training faster:
- Non-saturating neurons
- An efficient GPU implementation of the convolution operation

Training time: the network takes between five and six days to train on two GTX 580 3GB GPUs.

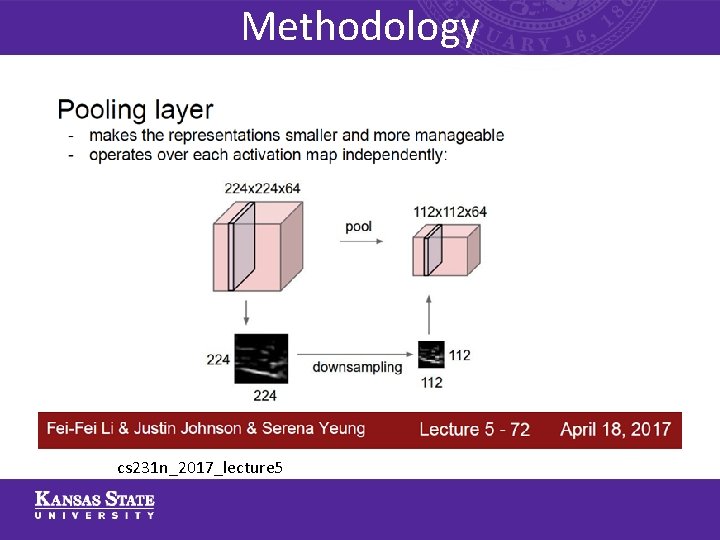

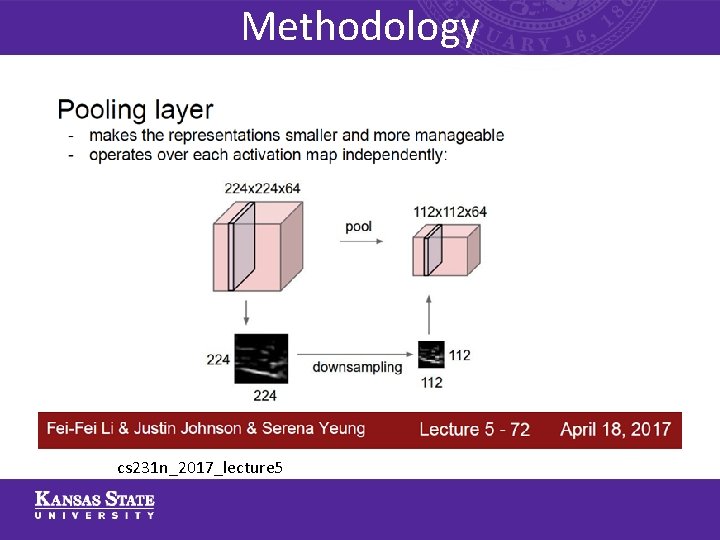

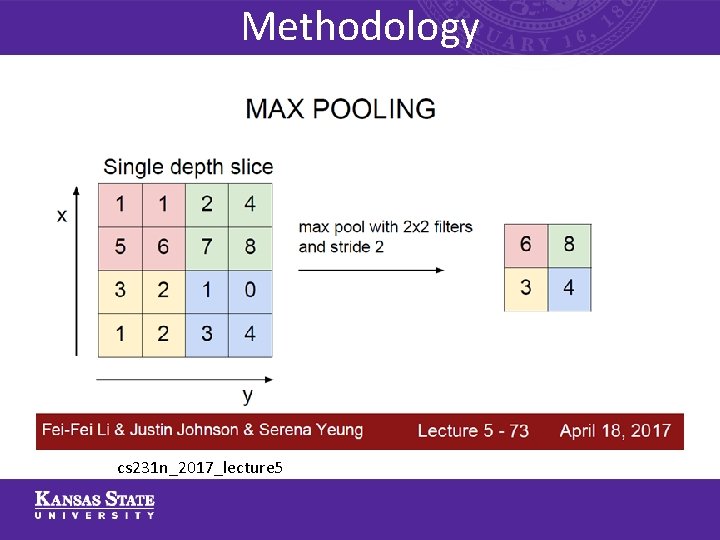

Methodology

Source: cs231n_2017_lecture5

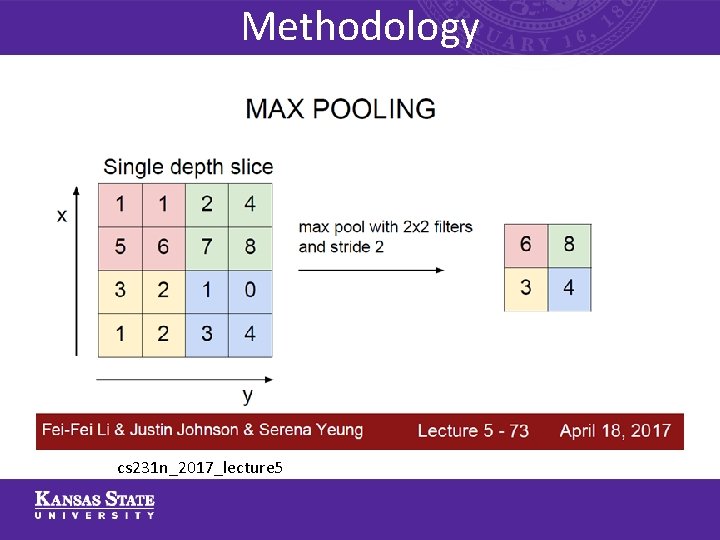

Methodology

Source: cs231n_2017_lecture5

Methodology

Overlapping Pooling
Section 3.4 …discuss
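Pooling "overlaps" when the stride s is smaller than the window size z; the paper uses s = 2 and z = 3, so neighboring windows share one row or column. The sketch below shows max pooling on a 2-D list with those defaults, plus the output-size formula. The function names and the 13-to-6 size example in the test are illustrative assumptions.

```python
def pooled_size(width, z=3, s=2):
    """Output width of max pooling with window z and stride s (no padding)."""
    return (width - z) // s + 1


def max_pool2d(grid, z=3, s=2):
    """Max pooling over a 2-D list; with s < z neighboring windows overlap."""
    out_h = pooled_size(len(grid), z, s)
    out_w = pooled_size(len(grid[0]), z, s)
    return [
        [
            max(grid[r * s + i][c * s + j] for i in range(z) for j in range(z))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


if __name__ == "__main__":
    grid = [[5 * r + c for c in range(5)] for r in range(5)]
    print(max_pool2d(grid))
```

Setting s = z would give the traditional non-overlapping pooling; the paper reports that the overlapping version slightly lowers error rates and makes the model slightly harder to overfit.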

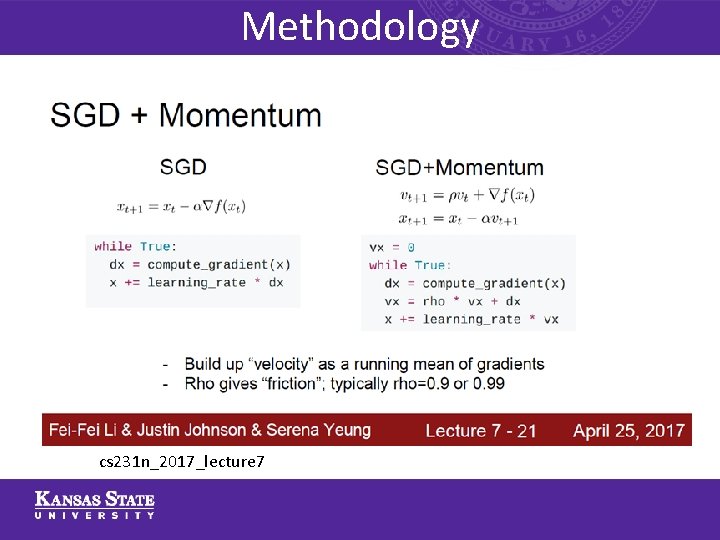

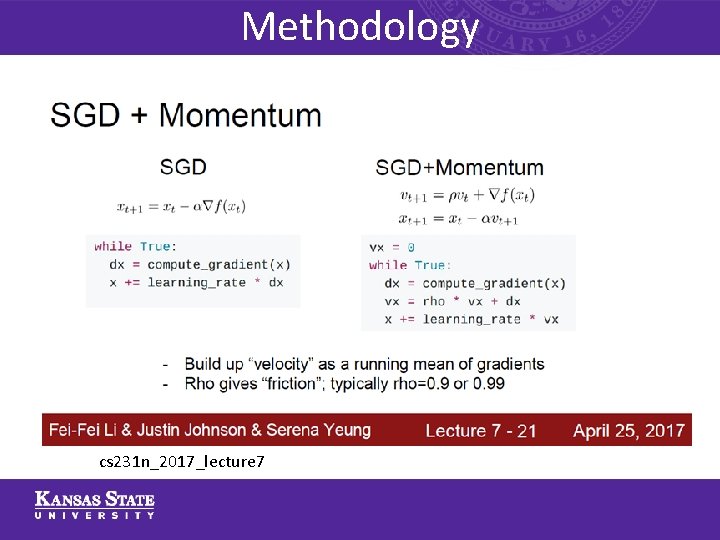

Methodology

Details of Learning:
- Stochastic gradient descent
- Batch size of 128 examples
- Momentum of 0.9
- Weight decay of 0.0005
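Section 5 of the paper gives the update rule v_{i+1} = 0.9 v_i - 0.0005 ε w_i - ε ⟨∂L/∂w⟩_{D_i} and w_{i+1} = w_i + v_{i+1}, where ε is the learning rate and ⟨∂L/∂w⟩_{D_i} is the gradient averaged over the i-th mini-batch. A minimal sketch of one step on flat parameter lists follows; the default learning rate of 0.01 is the paper's initial value, while the function name and the toy quadratic objective are assumptions for illustration.

```python
def sgd_momentum_step(w, v, grad, lr=0.01, momentum=0.9, weight_decay=0.0005):
    """One momentum-SGD update with weight decay, applied element-wise.

    grad is assumed to be the gradient averaged over a mini-batch
    (128 examples in the paper); computing it is not shown here.
    """
    new_v = [
        momentum * vi - weight_decay * lr * wi - lr * gi
        for wi, vi, gi in zip(w, v, grad)
    ]
    new_w = [wi + vi for wi, vi in zip(w, new_v)]
    return new_w, new_v


if __name__ == "__main__":
    # Toy problem (not from the paper): minimize L(w) = w^2, whose gradient is 2w.
    w, v = [1.0], [0.0]
    for _ in range(500):
        w, v = sgd_momentum_step(w, v, [2.0 * w[0]])
    print(w[0])
```

Note that weight decay enters through the velocity term, scaled by the learning rate, rather than as a separate penalty added to the loss.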

Methodology

Source: cs231n_2017_lecture7

Methodology

Details of Learning
Section 5 …discuss

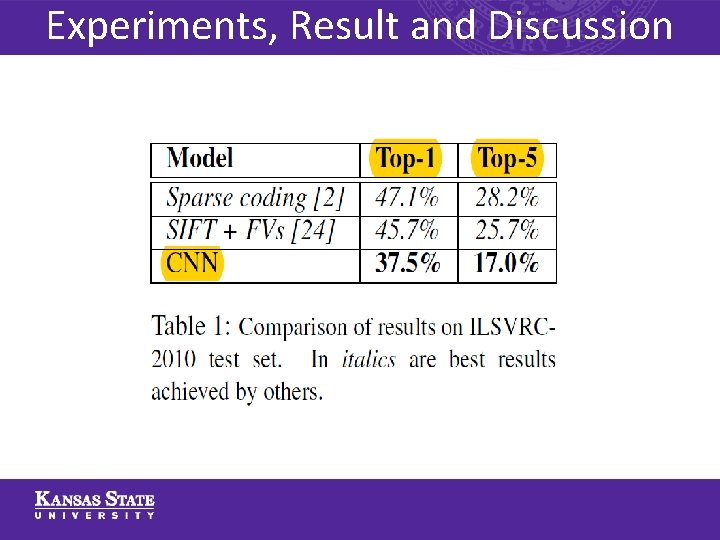

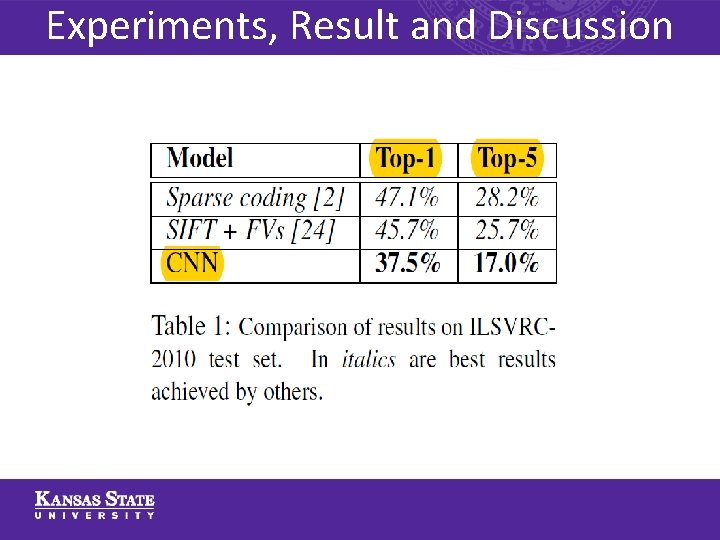

Experiments, Results and Discussion

Error rates on the ILSVRC-2010 test set:
- Top-1: 37.5%
- Top-5: 17.0%

The top-5 error rate is the fraction of test images for which the correct label is not among the five labels the model considers most probable.
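The top-k error definition translates directly into code. Below is a minimal sketch, assuming each example has a list of per-class scores (for example, softmax probabilities) and an integer true label; the function name and the toy scores are hypothetical, not real model output.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of examples whose true label is not among the k highest-scoring classes."""
    misses = 0
    for s, y in zip(scores, labels):
        top_k = sorted(range(len(s)), key=lambda i: s[i], reverse=True)[:k]
        if y not in top_k:
            misses += 1
    return misses / len(labels)


if __name__ == "__main__":
    # Toy scores for three images over three classes (not real model output).
    scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.3, 0.1, 0.6]]
    labels = [1, 1, 0]
    print(top_k_error(scores, labels, k=1), top_k_error(scores, labels, k=2))
```

Top-1 error is the special case k = 1; the top-5 rate is always less than or equal to the top-1 rate because a larger k can only add hits.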

Experiments, Results and Discussion

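Conclusion and Future Work
- The results show that a large, deep convolutional neural network is capable of achieving record-breaking results on a highly challenging dataset using purely supervised learning.
- The network's performance degrades if a single convolutional layer is removed (the paper reports a loss of about 2% in top-1 performance when any of the middle layers is removed).
- Depth is therefore important for achieving these results.
- The network has yet to match the infero-temporal pathway of the human visual system.
- Future work: applying very large and deep convolutional nets to video sequences.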

Resource
- https://www.youtube.com/watch?v=40riCqvRoMs

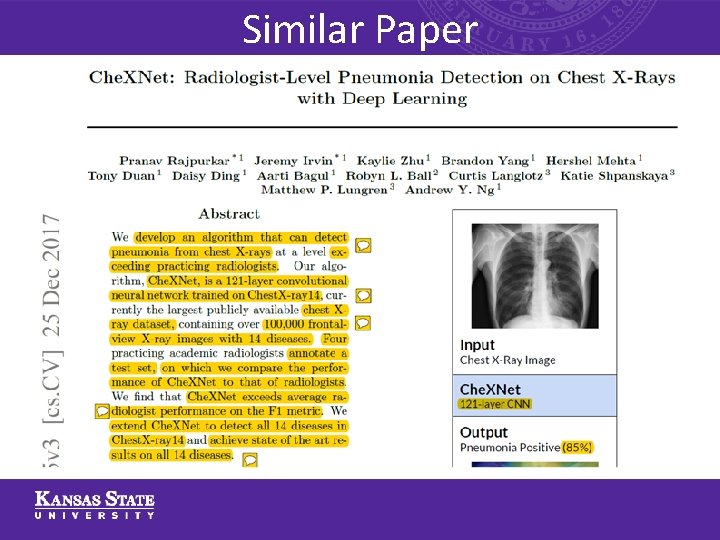

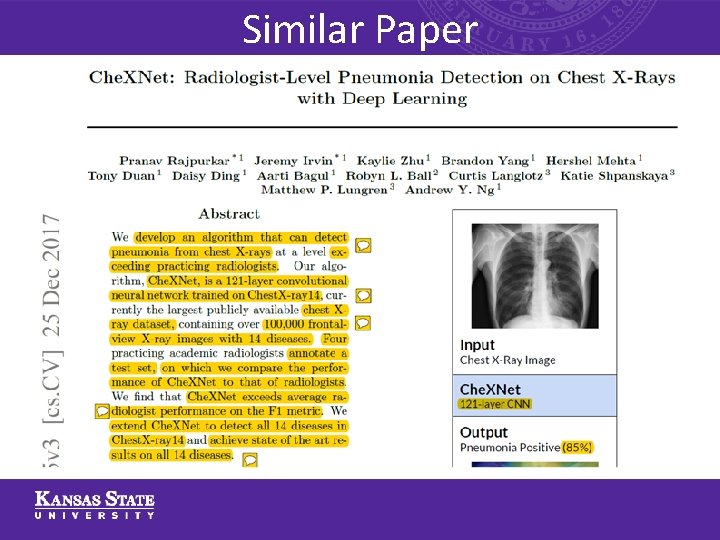

Similar Paper

THANK YOU FOR LISTENING
http://people.cs.ksu.edu/~okerinde/