Image Classification and Convolutional Networks Presented by T. H. Yang, S. H. Cho, L. W. Kao

Outline: Review, Back Propagation, Neural Networks, Demo – Two-layer NN, Training Neural Networks

Neural Networks - How does our brain work?

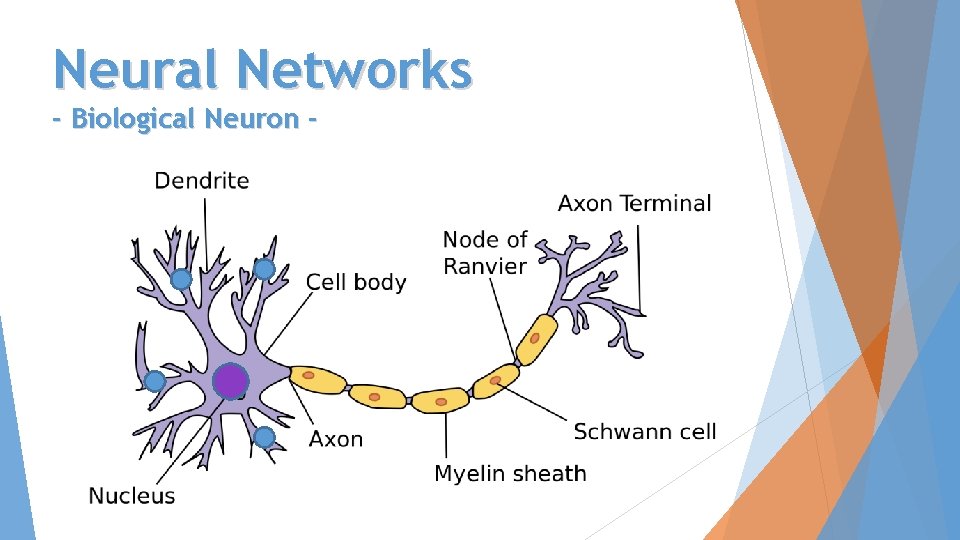

Neural Networks - Biological Neuron -

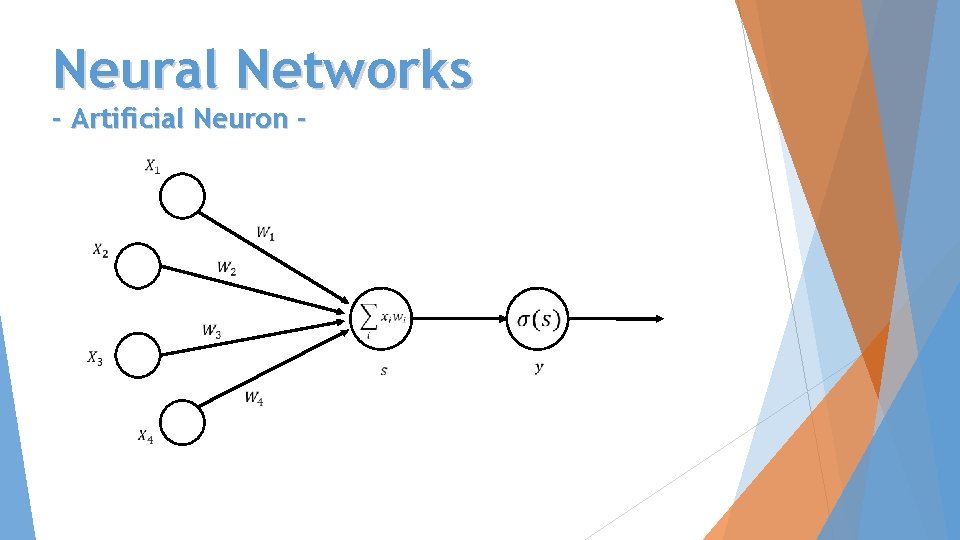

Neural Networks - Artificial Neuron -

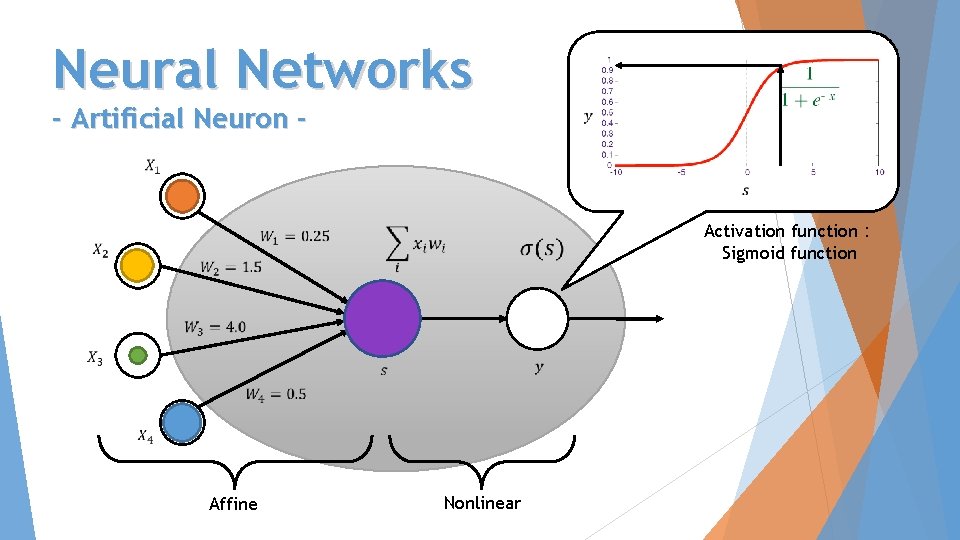

Neural Networks - Artificial Neuron - Activation function: sigmoid function. The neuron applies an affine step followed by a nonlinear step.

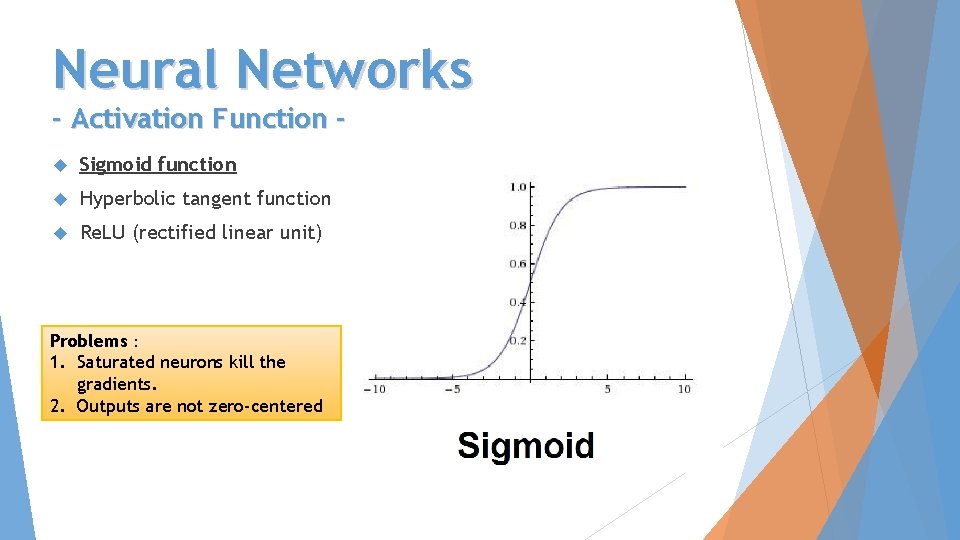

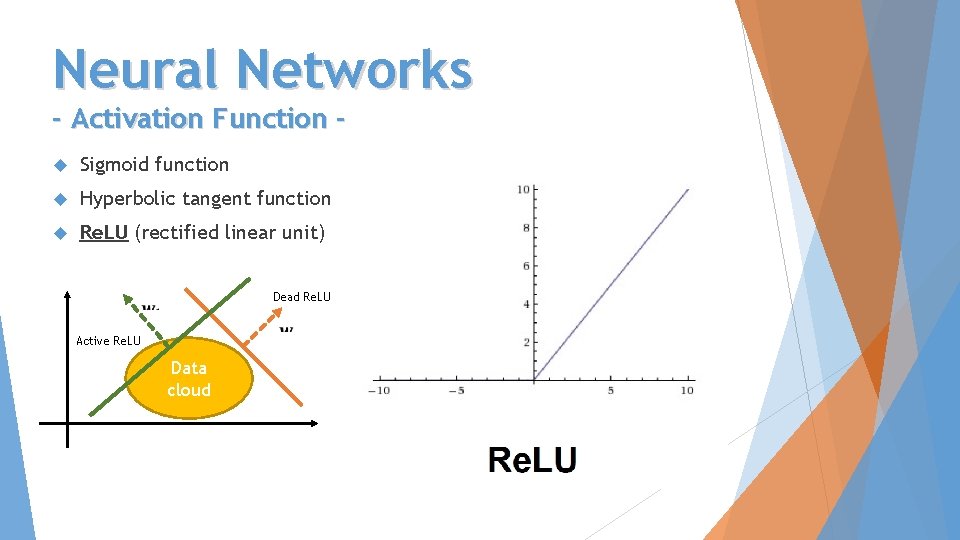
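
The affine-then-nonlinear structure of a single artificial neuron can be sketched in a few lines. This is a minimal illustration, not the presenters' code; the inputs `x`, weights `w`, and bias `b` are hypothetical values chosen so the result is easy to check by hand.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, b):
    """One artificial neuron: affine step followed by a nonlinear step."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b  # affine: w . x + b
    return sigmoid(z)                              # nonlinear: activation

# Hypothetical inputs and parameters, chosen only for illustration.
x = [1.0, 2.0]
w = [0.5, -0.25]
b = 0.0
a = neuron(x, w, b)  # z = 0.5 - 0.5 + 0.0 = 0, so a = sigmoid(0) = 0.5
```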

Neural Networks - Activation Function: sigmoid function, hyperbolic tangent function, ReLU (rectified linear unit). Problems: 1. Saturated neurons kill the gradients. 2. Outputs are not zero-centered.

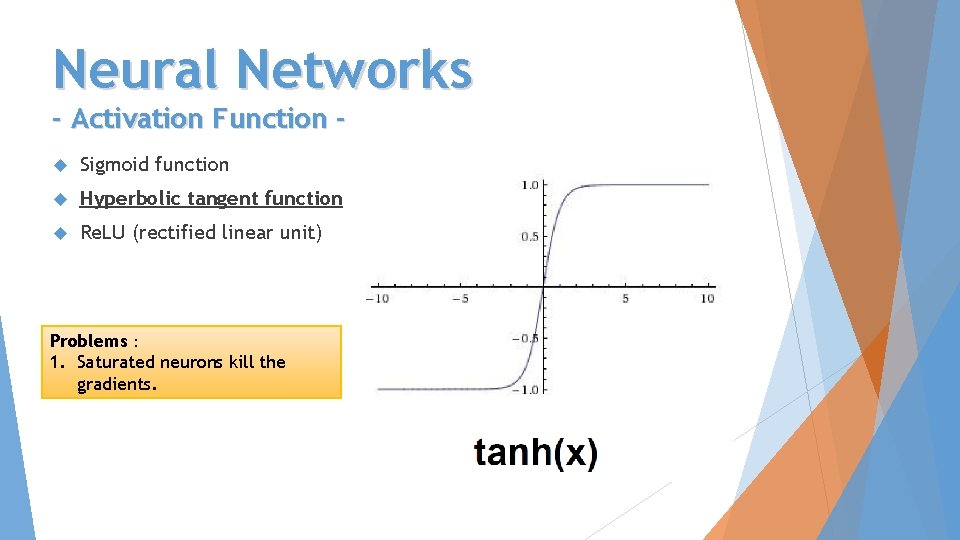

Neural Networks - Activation Function: sigmoid function, hyperbolic tangent function, ReLU (rectified linear unit). Problems: 1. Saturated neurons kill the gradients.

Neural Networks - Activation Function: sigmoid function, hyperbolic tangent function, ReLU (rectified linear unit). Figure labels: dead ReLU, active ReLU, data cloud.

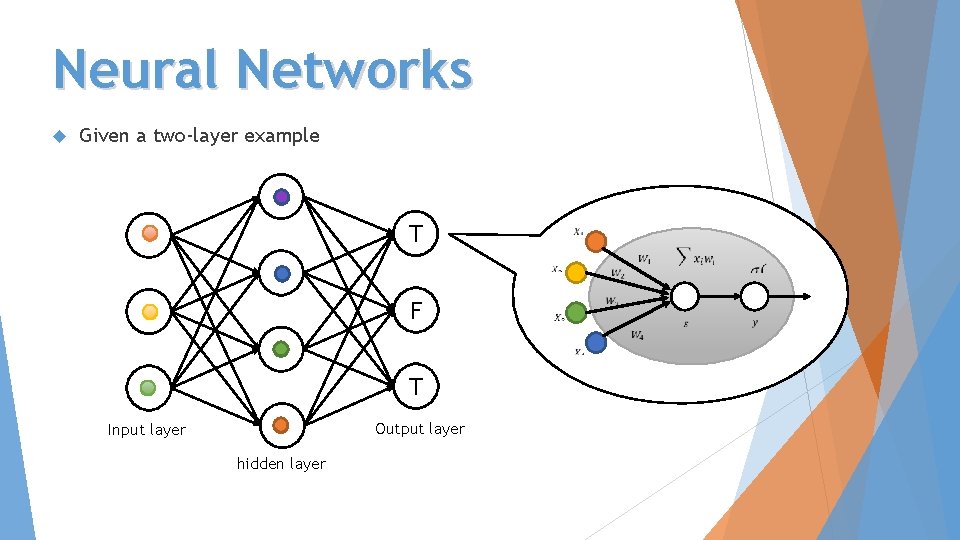
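
The problems listed on the activation-function slides can be checked numerically. The sketch below evaluates the derivative of each activation at a few points to show saturation, and then builds a ReLU unit that is "dead" on a small data cloud. The data points and weights are hypothetical, picked only to make the effect visible.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def d_sigmoid(z):
    s = sigmoid(z)
    return s * (1.0 - s)              # peaks at 0.25 when z = 0

def d_tanh(z):
    return 1.0 - math.tanh(z) ** 2    # peaks at 1.0 when z = 0

def relu(z):
    return max(0.0, z)

def d_relu(z):
    return 1.0 if z > 0 else 0.0      # no saturation on the positive side

# Saturation: for large |z| the sigmoid and tanh gradients vanish.
for z in (-10.0, 0.0, 10.0):
    print(z, d_sigmoid(z), d_tanh(z), d_relu(z))

# Dead ReLU: if w . x + b <= 0 for every point in the data cloud, the unit
# outputs 0 everywhere and receives zero gradient, so it never updates.
data_cloud = [[1.0, 2.0], [2.0, 1.0], [1.5, 1.5]]  # hypothetical points
w_dead, b_dead = [-1.0, -1.0], 0.0
is_dead = all(
    d_relu(sum(wi * xi for wi, xi in zip(w_dead, x)) + b_dead) == 0.0
    for x in data_cloud
)
```

Sigmoid outputs are always positive, which is the "not zero-centered" problem; tanh outputs are centered on zero but still saturate.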

Neural Networks - Given a two-layer example: input layer, hidden layer, output layer.

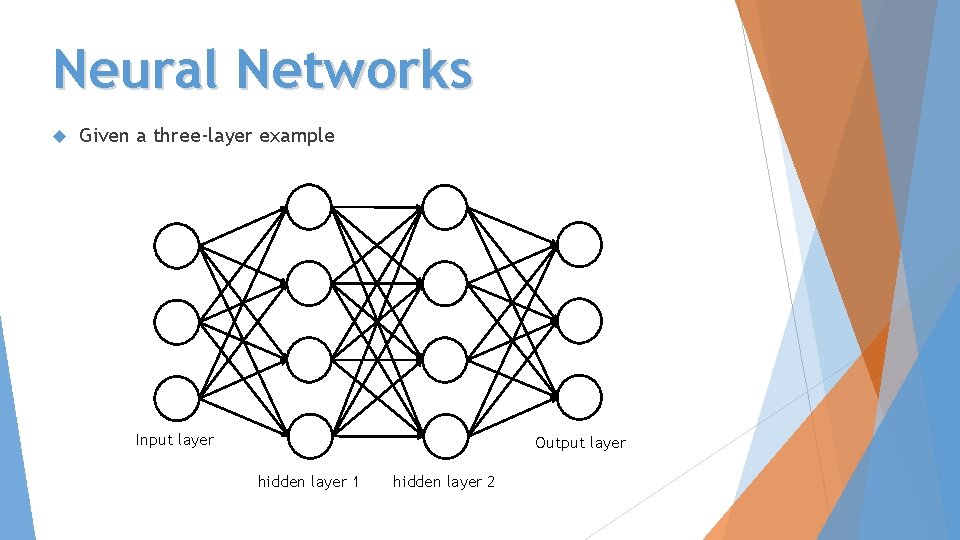

Neural Networks - Given a three-layer example: input layer, hidden layer 1, hidden layer 2, output layer.

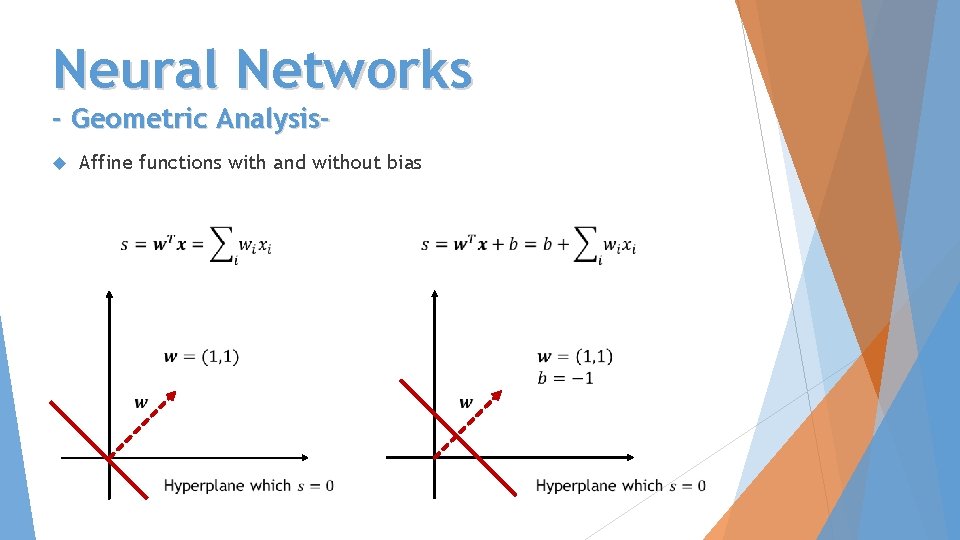
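
A forward pass through the three-layer example can be sketched as a chain of fully connected layers. The layer sizes (2, 3, 2, 1), the weights, and the choice of sigmoid for the hidden layers are assumptions made for illustration; the slides do not specify them.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def identity(z):
    return z

def dense(x, W, b, act):
    """One fully connected layer: act(W x + b), with W stored row by row."""
    return [act(sum(wij * xj for wij, xj in zip(row, x)) + bi)
            for row, bi in zip(W, b)]

def forward(x, layers):
    """Feed x through each (W, b, activation) triple in turn."""
    for W, b, act in layers:
        x = dense(x, W, b, act)
    return x

# Hypothetical parameters: input (2) -> hidden 1 (3) -> hidden 2 (2) -> output (1).
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [0.0, 1.0]], [0.0, 0.0, 0.0], sigmoid),
    ([[1.0, 1.0, 1.0], [-1.0, 0.0, 1.0]], [0.0, 0.0], sigmoid),
    ([[1.0, -1.0]], [0.0], identity),
]
scores = forward([0.0, 0.0], layers)
```

With a zero input every unit in hidden layer 1 outputs 0.5, so the result can be verified by hand: the output is sigmoid(1.5) - 0.5.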

Neural Networks - Geometric Analysis: Affine functions with and without bias

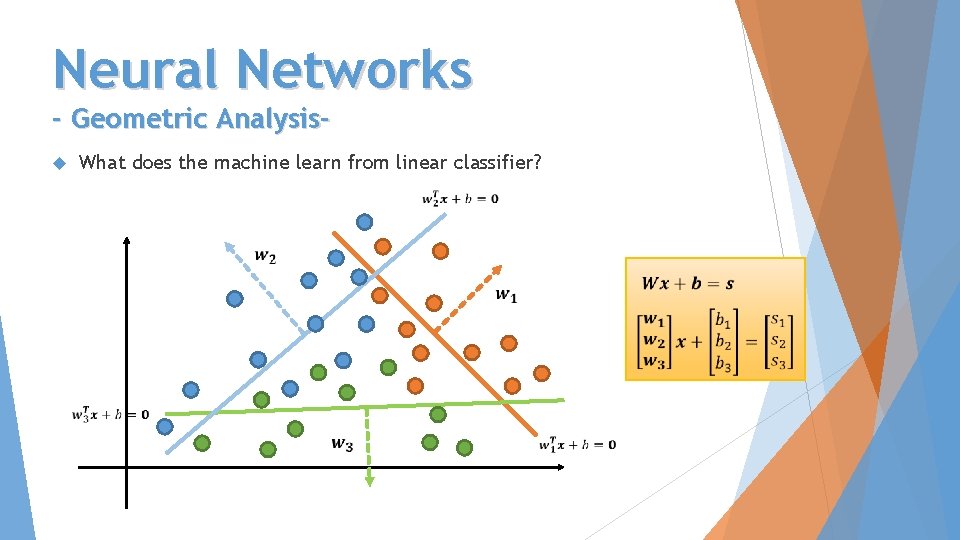

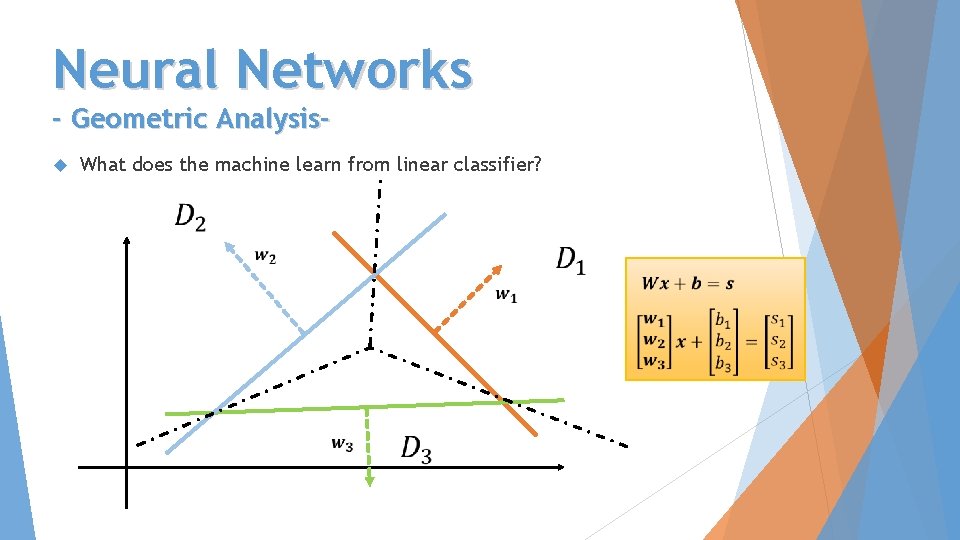
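
One way to see what the bias adds: without it, the function is zero at the origin for every choice of weights, so the boundary w . x = 0 always passes through the origin. A small sketch with hypothetical weights:

```python
def affine(x, w, b=0.0):
    """Affine function w . x + b; with b = 0 it reduces to a linear map."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

origin = [0.0, 0.0]
w = [2.0, -1.0]                          # hypothetical weights
no_bias = affine(origin, w)              # 0.0: boundary is pinned to the origin
with_bias = affine(origin, w, b=3.0)     # 3.0: boundary is shifted away
```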

Neural Networks - Geometric Analysis: What does the machine learn from a linear classifier?

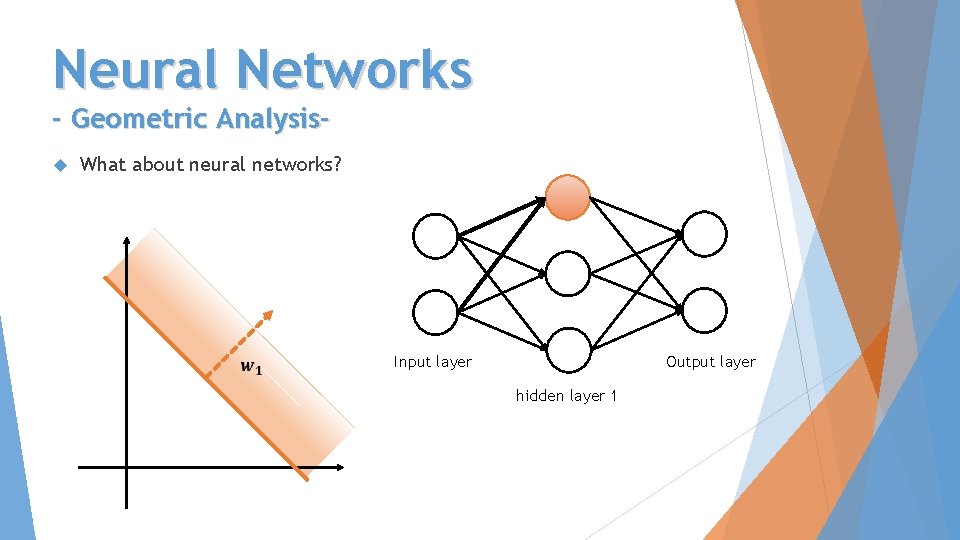

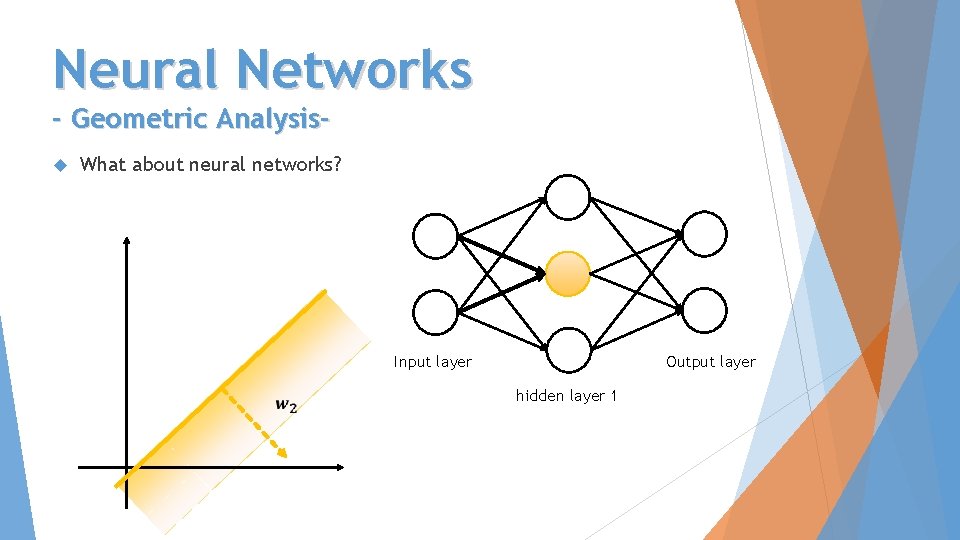

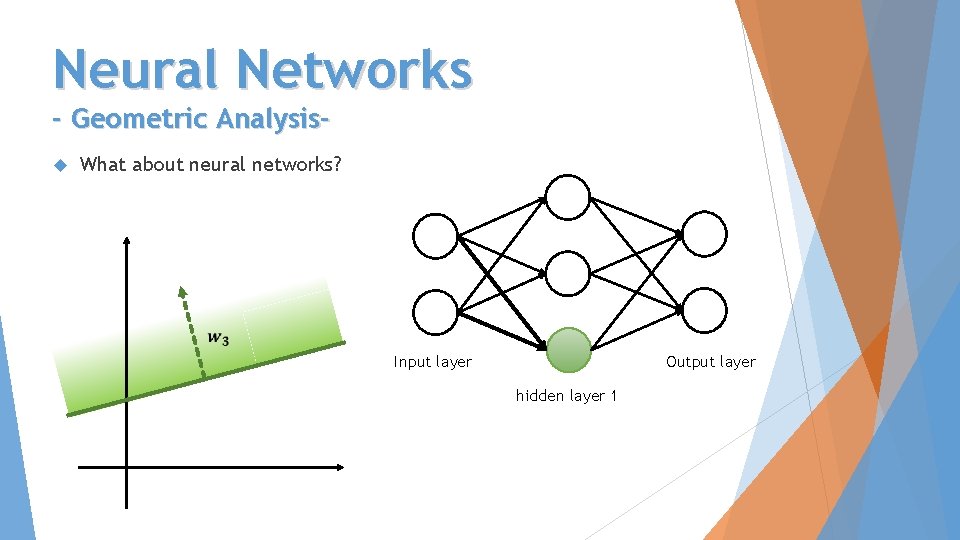

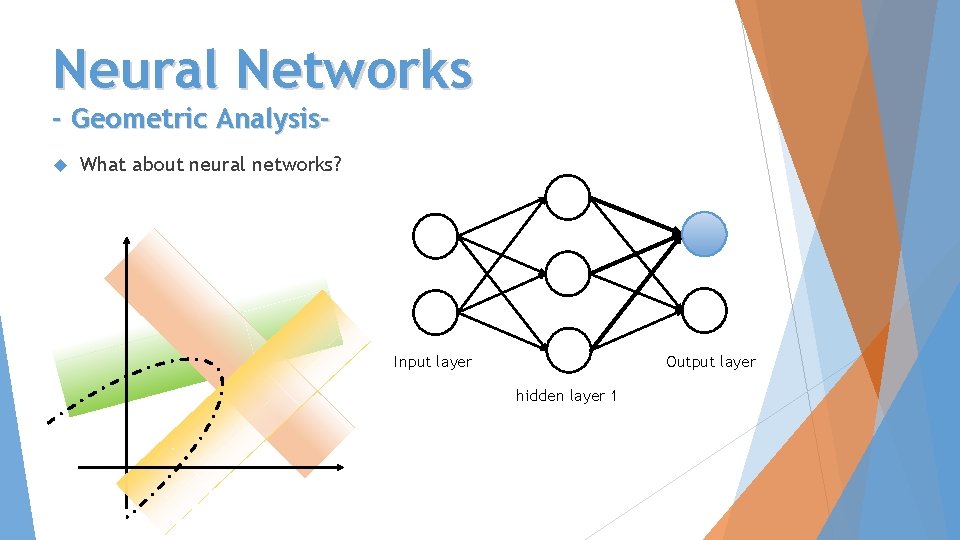

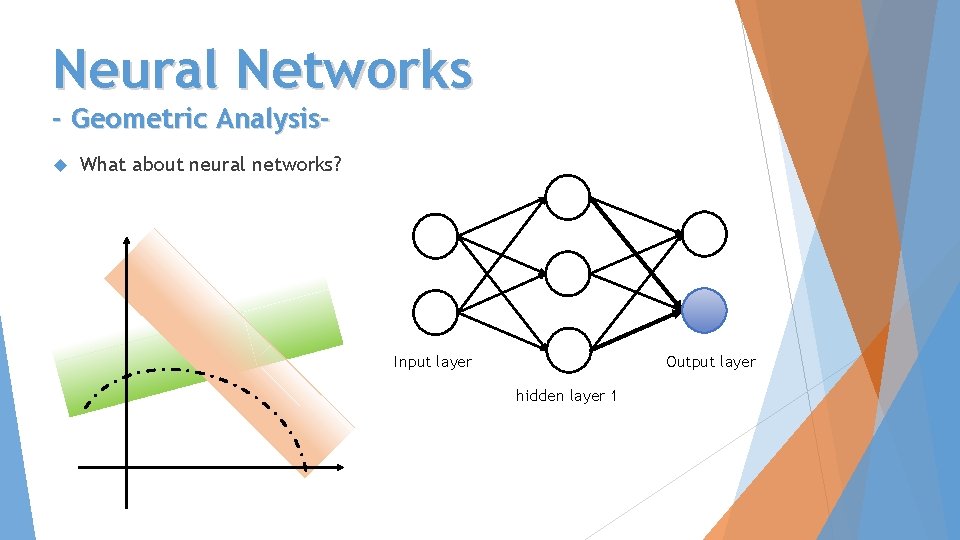

Neural Networks - Geometric Analysis: What about neural networks? (Figure labels: input layer, hidden layer 1, output layer.)

Neural Networks - Geometric Analysis: What about neural networks? (Figure labels: z, input layer, hidden layer 1, output layer.)

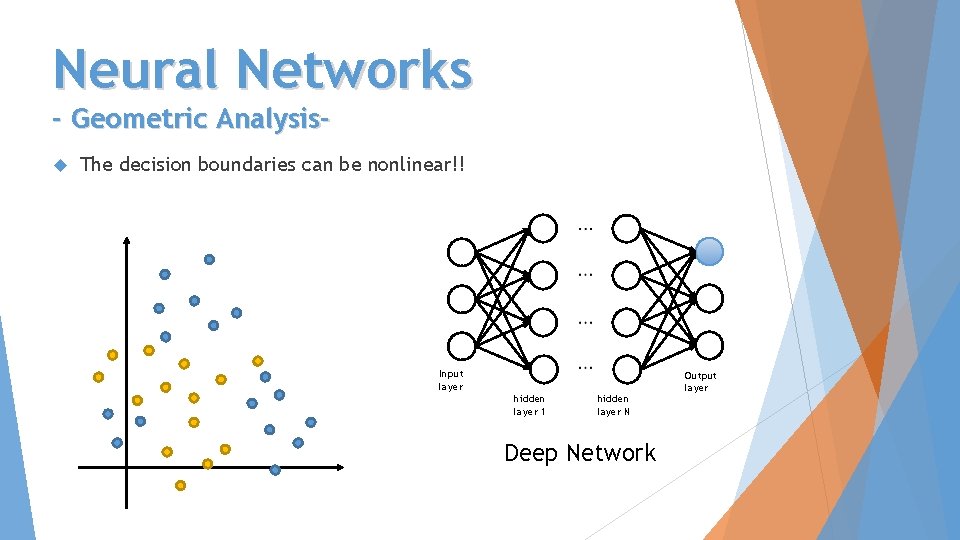

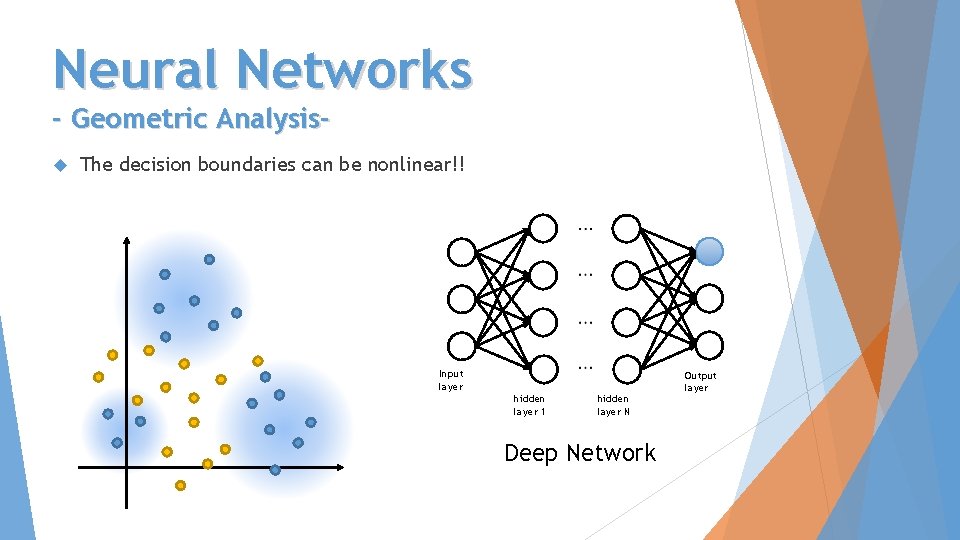

Neural Networks - Geometric Analysis: The decision boundaries can be nonlinear! (Figure labels: input layer, hidden layer 1, ..., hidden layer N, output layer; deep network.)

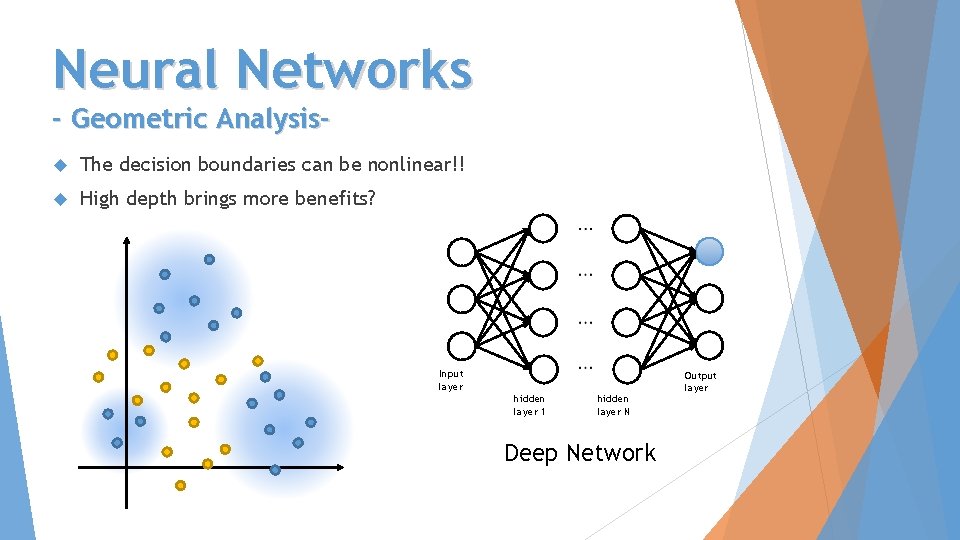
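
A classic illustration of a nonlinear decision boundary is XOR, which no single linear classifier can separate. The sketch below hand-sets the weights of a tiny network with one hidden layer of two ReLU units; these weights are an illustrative construction, not taken from the slides.

```python
def relu(z):
    return max(0.0, z)

def xor_net(x1, x2):
    """One hidden layer with two ReLU units, then a linear output."""
    h1 = relu(x1 + x2)          # active whenever x1 + x2 > 0
    h2 = relu(x1 + x2 - 1.0)    # active only once x1 + x2 > 1
    return h1 - 2.0 * h2        # rises with h1, then h2 pulls it back down

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), xor_net(x1, x2))
```

Thresholding the output at 0.5 marks the band 0.5 < x1 + x2 < 1.5 as positive: a region bounded by two lines, which a single affine function cannot carve out.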

Neural Networks - Geometric Analysis: The decision boundaries can be nonlinear! Does greater depth bring more benefits? (Figure labels: input layer, hidden layer 1, ..., hidden layer N, output layer; deep network.)

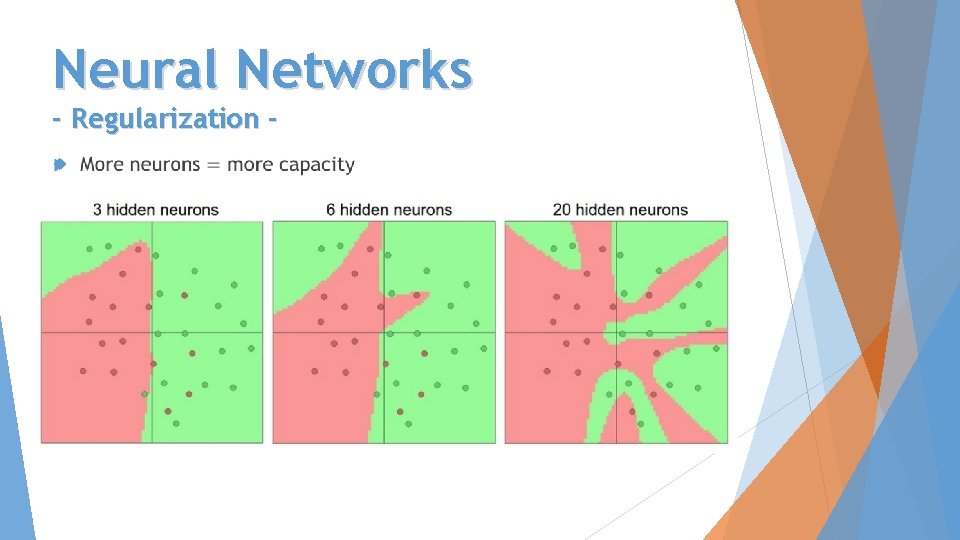

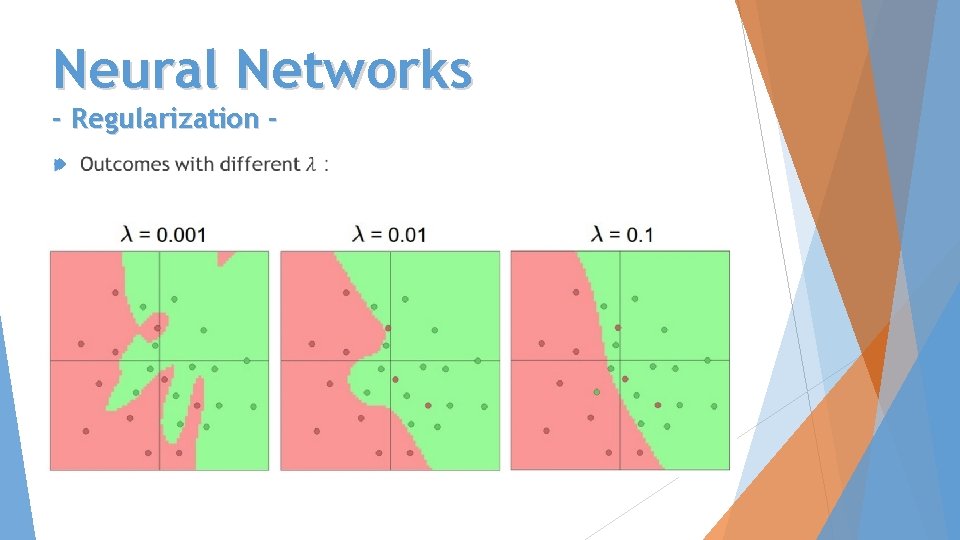

Neural Networks - Regularization

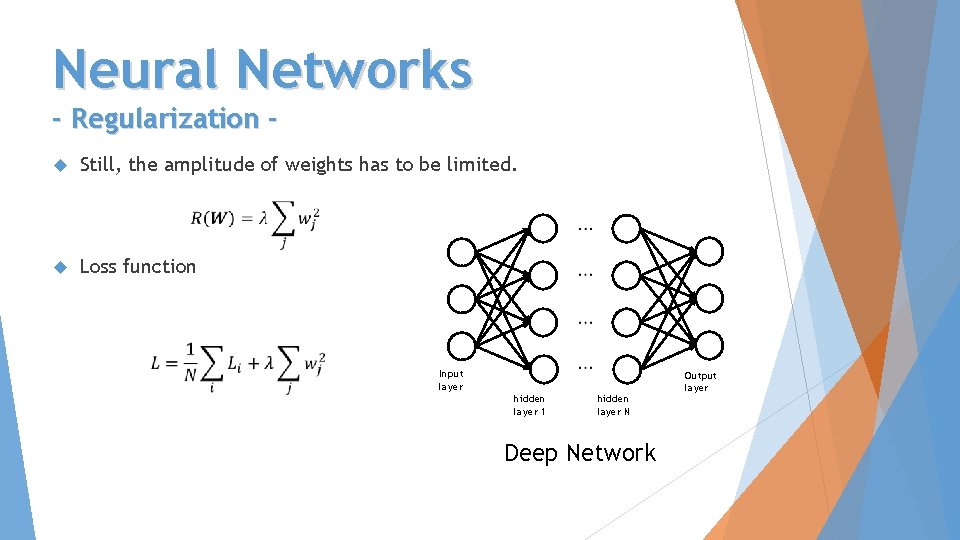

Neural Networks - Regularization: Still, the magnitude of the weights has to be limited, which is done through the loss function. (Figure labels: input layer, hidden layer 1, ..., hidden layer N, output layer; deep network.)

Neural Networks - Regularization

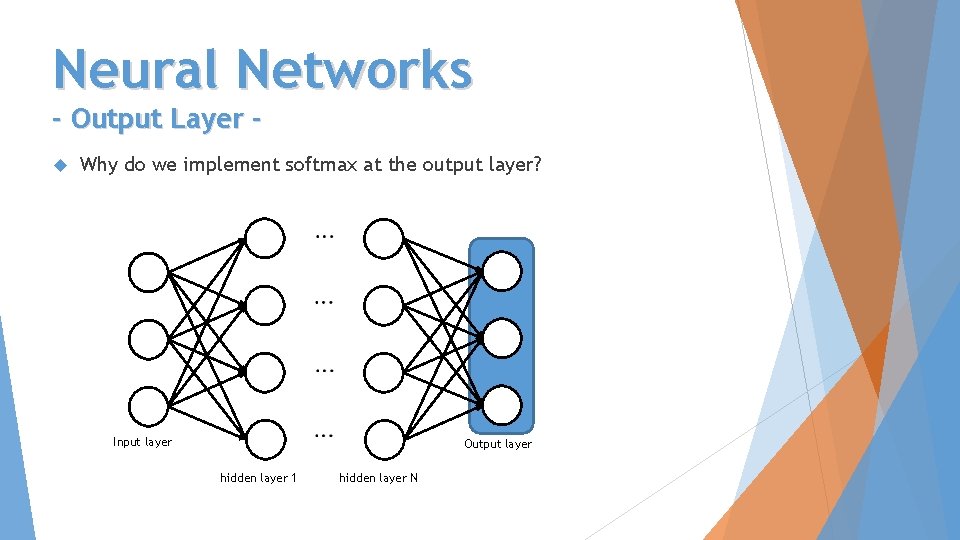

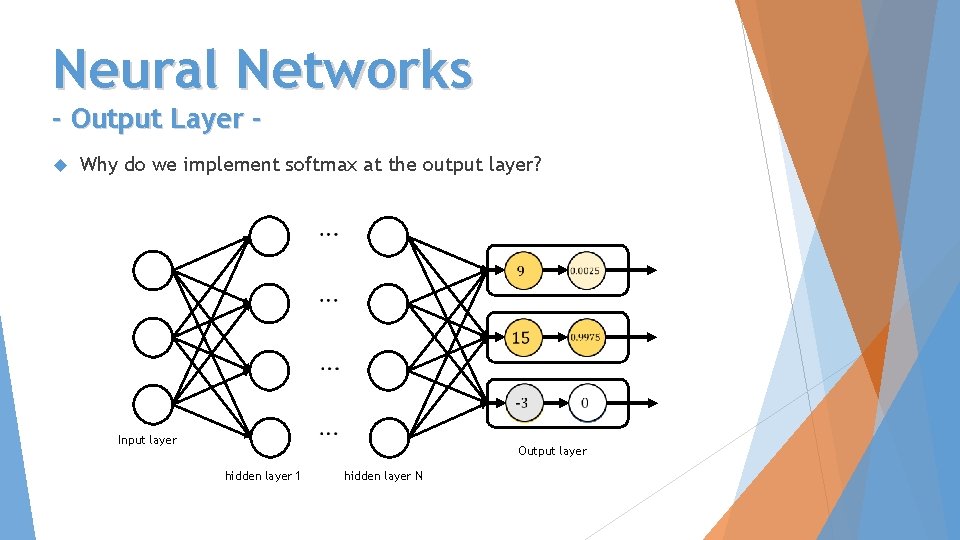
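
A common way to limit the weights through the loss function is to add a penalty on their squared magnitude. The sketch below assumes L2 regularization with a strength `lam`; the slides do not say which penalty is used, and the weights and data loss here are made-up numbers.

```python
def l2_penalty(weights, lam):
    """lam times the sum of squared weights over all layers (biases excluded)."""
    return lam * sum(w * w for layer in weights for row in layer for w in row)

def regularized_loss(data_loss, weights, lam):
    """Total loss = data loss + weight penalty."""
    return data_loss + l2_penalty(weights, lam)

# Hypothetical two-layer weights and data loss, for illustration only.
weights = [
    [[1.0, -2.0], [0.5, 0.0]],
    [[3.0, 0.0]],
]
total = regularized_loss(0.8, weights, lam=0.1)  # 0.8 + 0.1 * 14.25
```

Under this penalty each weight `w` receives an extra gradient of `2 * lam * w`, which pulls large weights toward zero at every update.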

Neural Networks - Output Layer: Why do we implement softmax at the output layer? (Figure labels: input layer, hidden layer 1, ..., hidden layer N, output layer.)

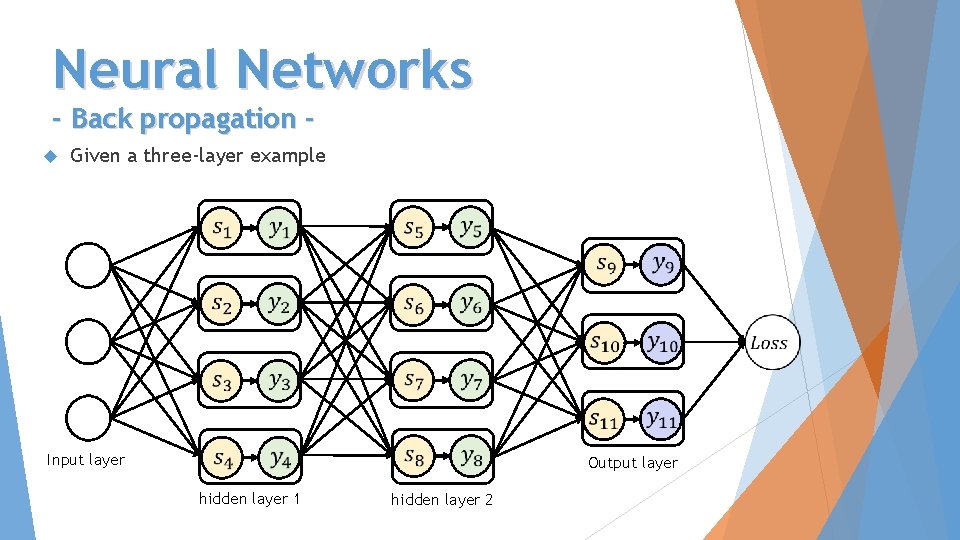
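
Softmax turns the raw output scores into positive values that sum to one, so they can be read as class probabilities and fed to a cross-entropy loss. A minimal sketch follows; subtracting the maximum score is a standard numerical-stability trick, and the example scores are hypothetical.

```python
import math

def softmax(scores):
    """Map real-valued scores to probabilities that sum to 1."""
    m = max(scores)                             # shift to avoid overflow in exp
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target):
    """Negative log-probability assigned to the correct class index."""
    return -math.log(probs[target])

probs = softmax([2.0, 1.0, 0.1])   # hypothetical scores for three classes
loss = cross_entropy(probs, 0)     # small when class 0 gets high probability
```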

Neural Networks - Back propagation: Given a three-layer example (input layer, hidden layer 1, hidden layer 2, output layer)

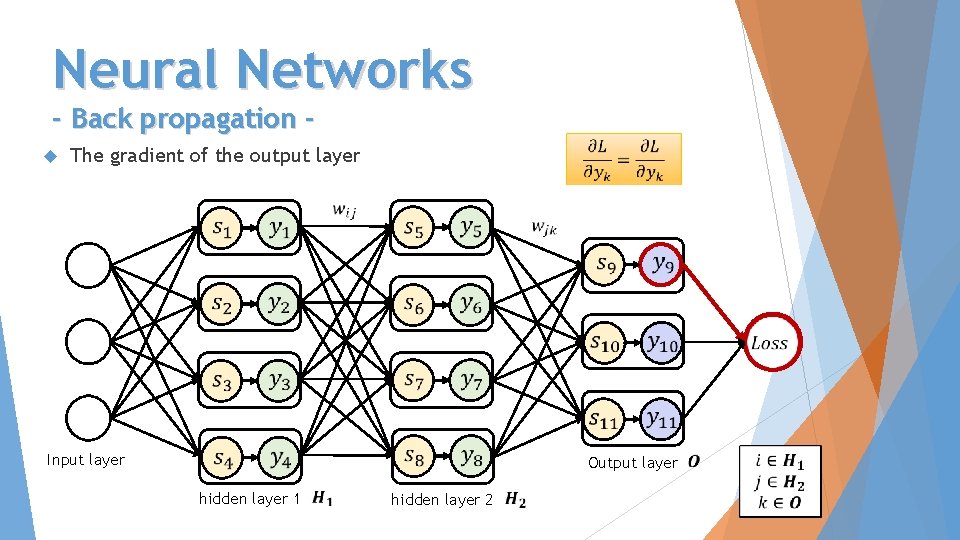

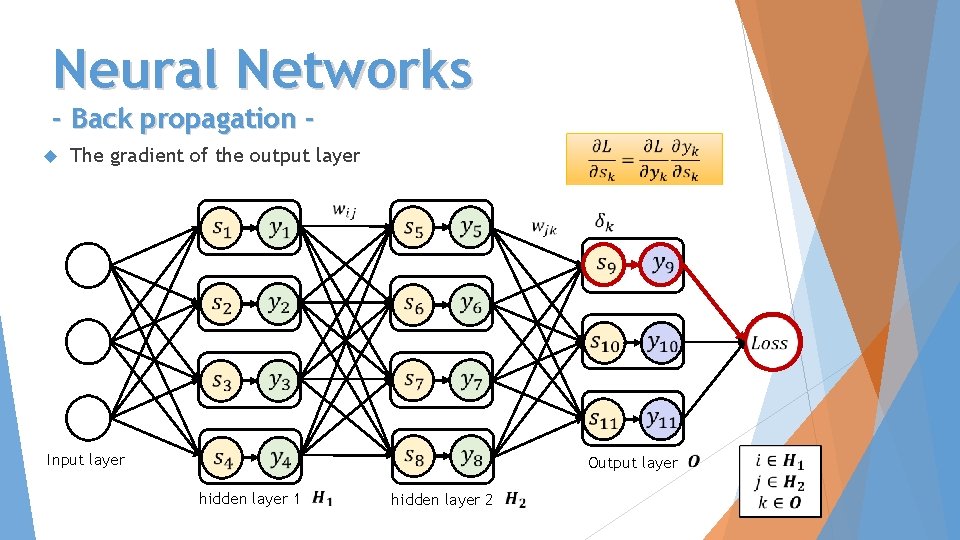

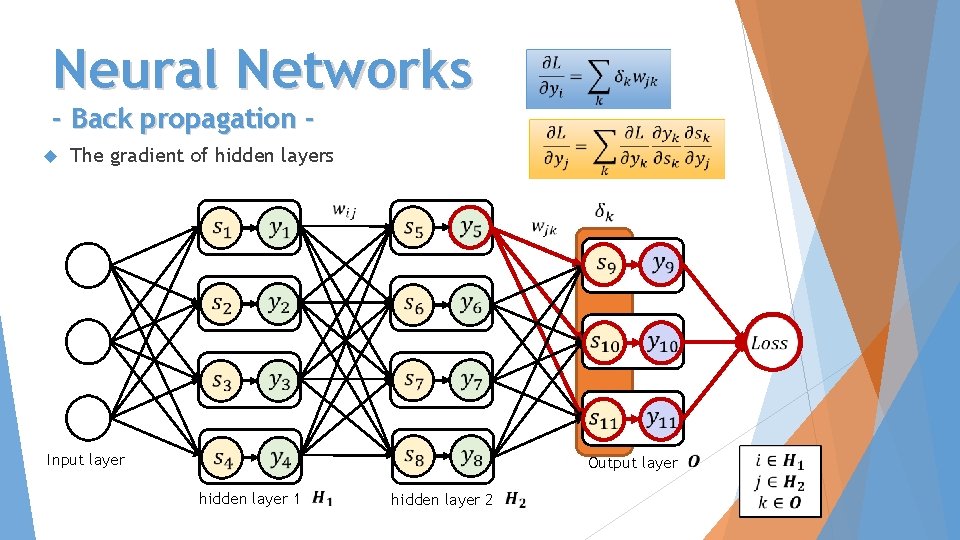

Neural Networks - Back propagation: The gradient of the output layer (input layer, hidden layer 1, hidden layer 2, output layer)

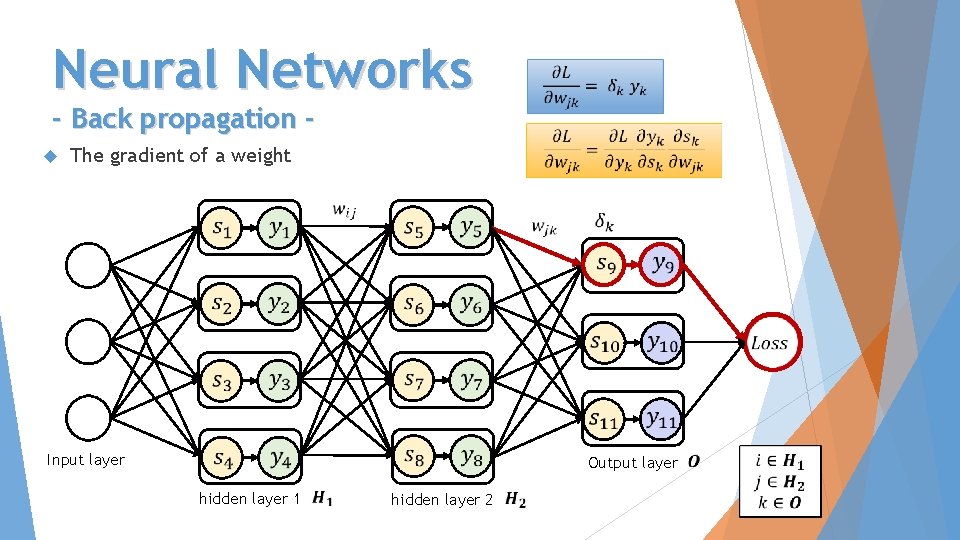

Neural Networks - Back propagation: The gradient of a weight (input layer, hidden layer 1, hidden layer 2, output layer)

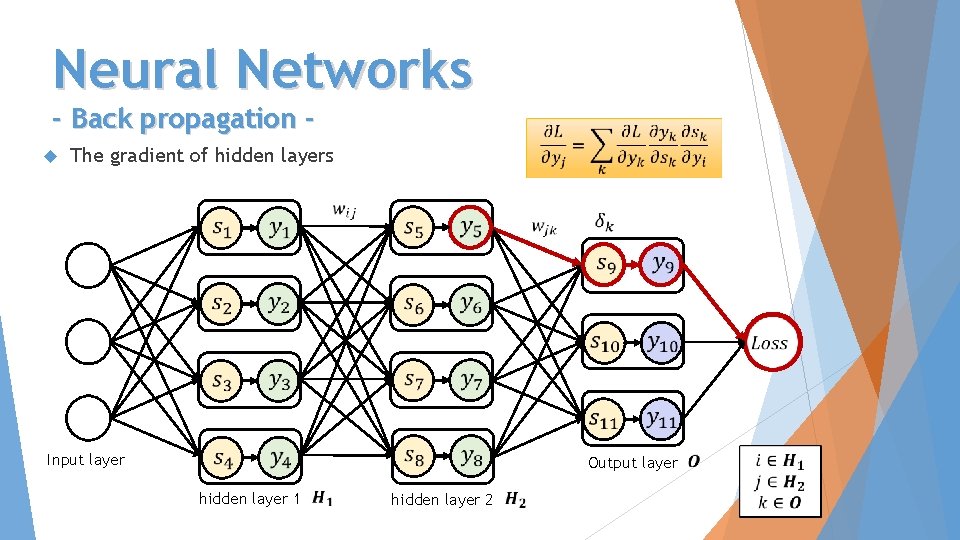

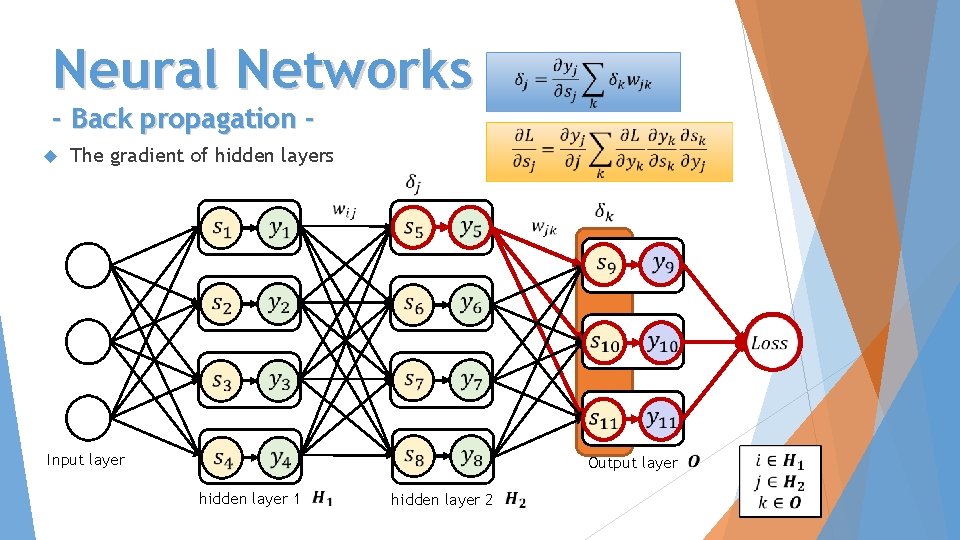

Neural Networks - Back propagation: The gradient of hidden layers (input layer, hidden layer 1, hidden layer 2, output layer)

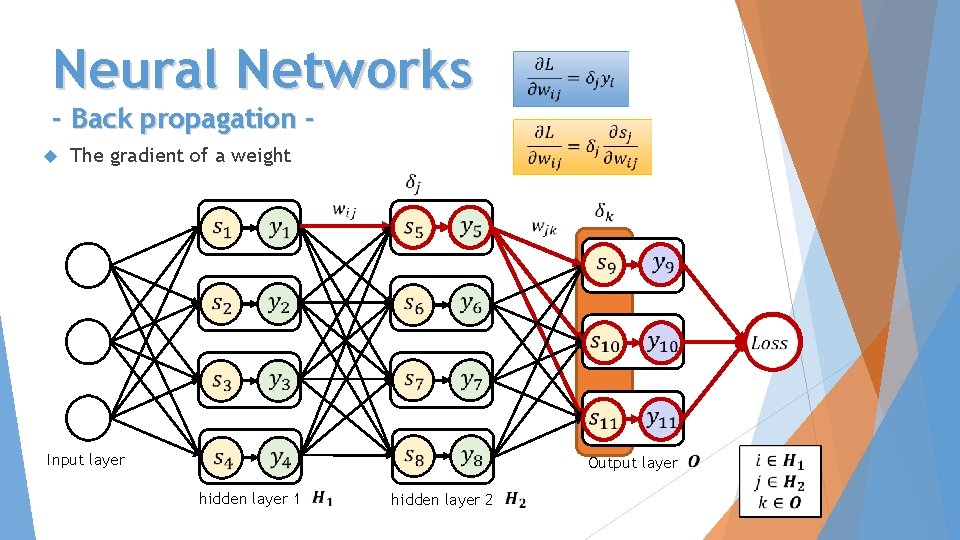

Neural Networks - Back propagation: The gradient of a weight (input layer, hidden layer 1, hidden layer 2, output layer)

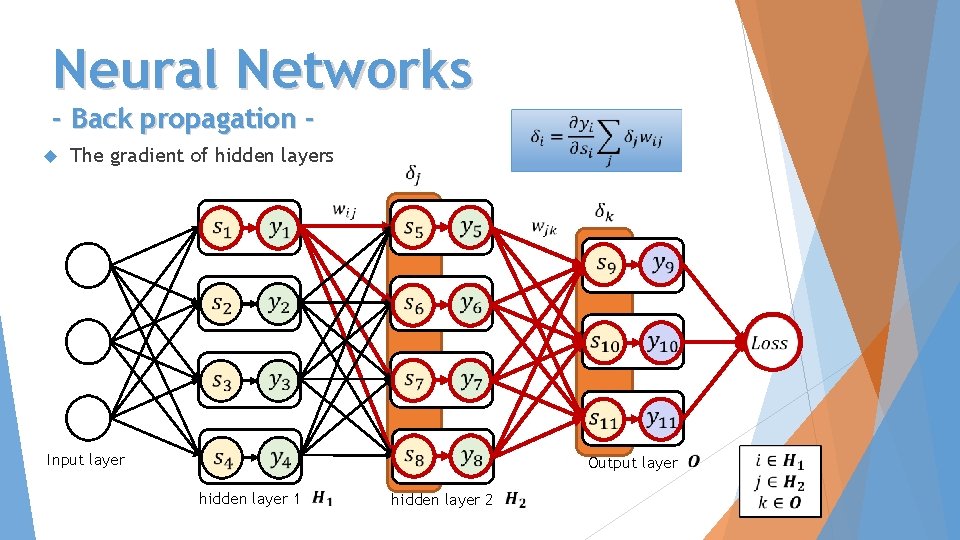

Neural Networks - Back propagation: The gradient of hidden layers (input layer, hidden layer 1, hidden layer 2, output layer)

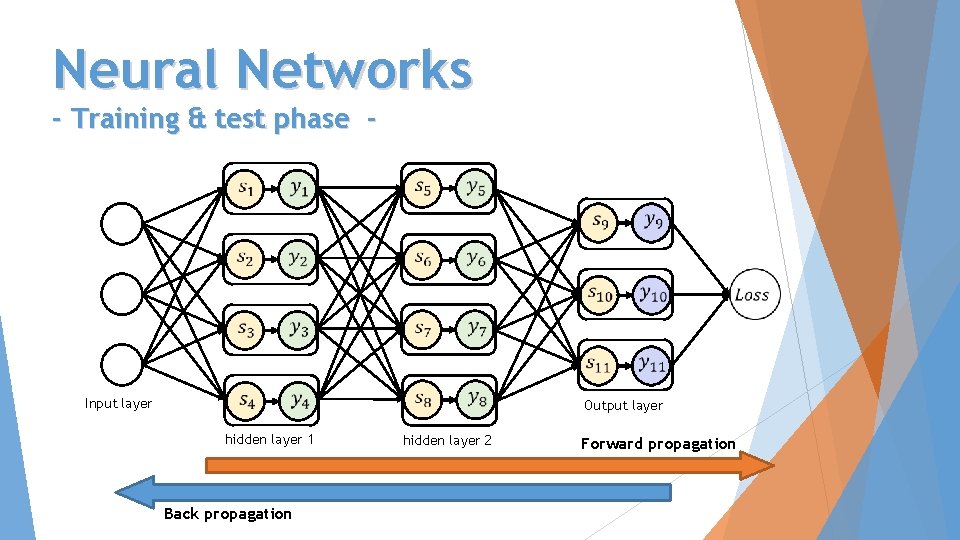
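
The three gradient steps named on these slides (output layer, a weight, hidden layers) can be sketched end to end and verified against finite differences. The sketch assumes sigmoid hidden units and a softmax output with cross-entropy loss, which the slides suggest but do not state for this example; all parameter values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v, b):
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(W, b)]

def softmax(s):
    m = max(s)
    e = [math.exp(si - m) for si in s]
    t = sum(e)
    return [ei / t for ei in e]

def forward(params, x):
    (W1, b1), (W2, b2), (W3, b3) = params
    h1 = [sigmoid(z) for z in matvec(W1, x, b1)]
    h2 = [sigmoid(z) for z in matvec(W2, h1, b2)]
    p = softmax(matvec(W3, h2, b3))
    return h1, h2, p

def loss(params, x, y):
    return -math.log(forward(params, x)[2][y])

def outer(delta, a):
    """Gradient of a weight: dL/dW[i][j] = delta[i] * a[j]."""
    return [[di * aj for aj in a] for di in delta]

def back_through(W, delta, h):
    """Gradient of a hidden layer: push delta back through W, then through
    the sigmoid, whose derivative is h * (1 - h)."""
    return [sum(W[i][j] * delta[i] for i in range(len(delta))) * h[j] * (1.0 - h[j])
            for j in range(len(h))]

def backward(params, x, y):
    (W1, b1), (W2, b2), (W3, b3) = params
    h1, h2, p = forward(params, x)
    # Gradient of the output layer for softmax + cross-entropy: p - one_hot(y).
    d3 = [pi - (1.0 if i == y else 0.0) for i, pi in enumerate(p)]
    d2 = back_through(W3, d3, h2)
    d1 = back_through(W2, d2, h1)
    # One (dW, db) pair per layer, in the same order as params.
    return [(outer(d1, x), d1), (outer(d2, h1), d2), (outer(d3, h2), d3)]

# Hypothetical parameters: input (2) -> hidden 1 (3) -> hidden 2 (2) -> output (2).
params = [
    ([[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]], [0.0, 0.1, -0.1]),
    ([[0.3, -0.1, 0.2], [-0.4, 0.5, 0.1]], [0.05, -0.05]),
    ([[0.2, -0.3], [-0.1, 0.4]], [0.0, 0.0]),
]
x, y = [1.0, -2.0], 1
grads = backward(params, x, y)

def numeric_grad(layer, i, j, eps=1e-5):
    """Central finite difference on one weight, restoring it afterwards."""
    W = params[layer][0]
    old = W[i][j]
    W[i][j] = old + eps
    up = loss(params, x, y)
    W[i][j] = old - eps
    down = loss(params, x, y)
    W[i][j] = old
    return (up - down) / (2.0 * eps)

# Largest disagreement between backprop and finite differences over all weights.
max_err = max(abs(grads[l][0][i][j] - numeric_grad(l, i, j))
              for l in range(3)
              for i in range(len(params[l][0]))
              for j in range(len(params[l][0][i])))
```

A small `max_err` indicates that the analytic gradients agree with the numerical ones, which is a common sanity check before training.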

Neural Networks - Training & test phase - Figure labels: input layer, hidden layer 1, hidden layer 2, output layer; forward propagation, back propagation.

Neural Networks - Demo – Two-layer NN: Let’s play with this demo over at ConvNetJS: http://cs.stanford.edu/people/karpathy/convnetjs/demo/classify2d.html
