
Image Classification and Convolutional Networks Presented by T. H. Yang, S. H. Cho, L. W. Kao

Outline
- Introduction to image classification
- K-Nearest-Neighbor classifier
  - Demo #1: K-Nearest-Neighbor
- Linear classification
- Optimization
  - Demo #2: SVM and Softmax

Linear Classification: what is a linear classifier? (Figure: classes separated by linear decision boundaries in the $X_1$-$X_2$ plane.)

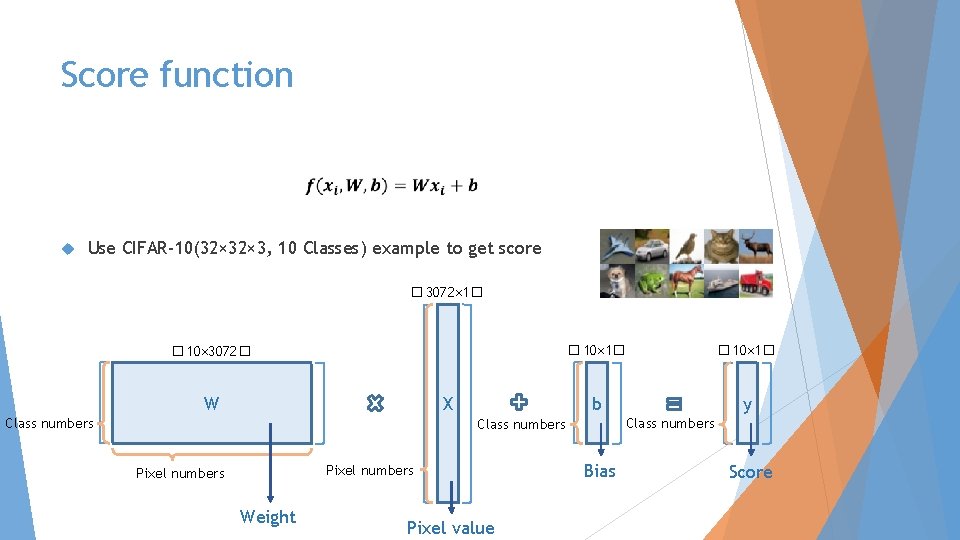

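Score function: using CIFAR-10 (32×32×3 images, 10 classes) as the example, each image is flattened into a pixel-value vector $x$ of size 3072×1 (3072 = 32×32×3). The weight matrix $W$ is 10×3072 (number of classes × number of pixel values), the bias $b$ is 10×1, and the score vector $s$ holds one score per class (10×1):

$$s = Wx + b$$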

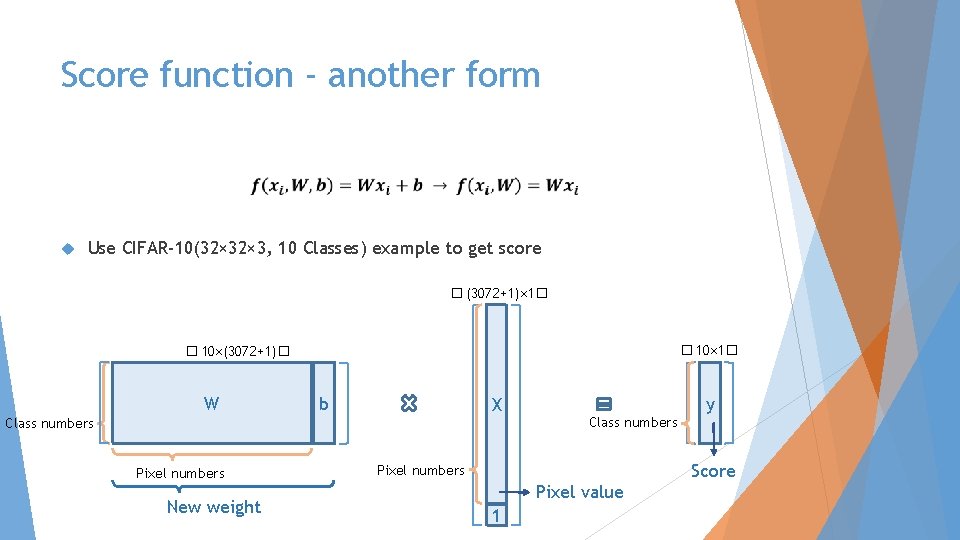
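A minimal sketch of the score function $s = Wx + b$ with the CIFAR-10 shapes from this slide (10×3072 weights, a 3072-value pixel vector, 10 biases). The weights and the image are random placeholders (hypothetical, not trained values), and taking the predicted class as the index of the highest score is an assumption about how the scores are used. Plain lists keep the sketch free of third-party libraries; a real implementation would use a matrix library for the product.

```python
import random

NUM_CLASSES = 10          # CIFAR-10 has 10 classes
NUM_PIXELS = 32 * 32 * 3  # a flattened 32x32 RGB image has 3072 values

def scores(W, x, b):
    """Score function s = W x + b for a (C x D) matrix W, a length-D x and a length-C b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]

random.seed(0)
# Hypothetical placeholder parameters and image: real weights come from training.
W = [[random.gauss(0.0, 0.001) for _ in range(NUM_PIXELS)] for _ in range(NUM_CLASSES)]
b = [0.0] * NUM_CLASSES
x = [random.randint(0, 255) for _ in range(NUM_PIXELS)]

s = scores(W, x, b)  # one score per class (10 values)
predicted_class = max(range(NUM_CLASSES), key=lambda k: s[k])
print(len(s), predicted_class)
```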

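Score function, another form: the bias can be folded into the weights by appending a constant 1 to the pixel-value vector. The new input $[x; 1]$ is (3072+1)×1, the new weight matrix $[W \; b]$ is 10×(3072+1), and the 10×1 score vector is unchanged:

$$s = \begin{bmatrix} W & b \end{bmatrix} \begin{bmatrix} x \\ 1 \end{bmatrix} = Wx + b$$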

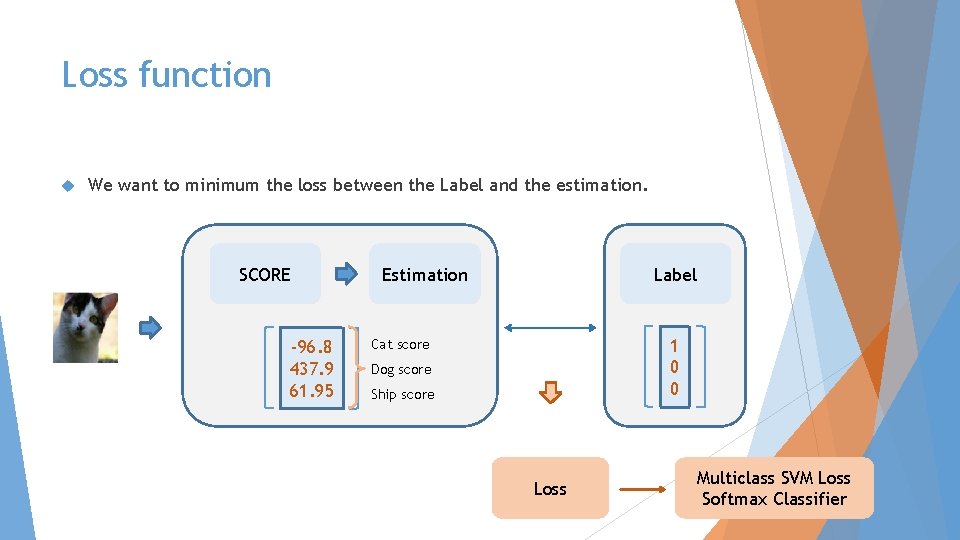
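A small check of this bias trick, assuming toy sizes (3 classes and 4 pixel values in place of 10×3072); all numbers are hypothetical. Appending the bias as an extra column of $W$ and a trailing 1 to $x$ should give the same scores as computing $Wx + b$ directly.

```python
def scores(W, x, b):
    """s = W x + b with the bias kept separate."""
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]

def scores_bias_trick(W_ext, x):
    """s = [W b] [x; 1]: the bias is the last column of W_ext and x gets a trailing 1."""
    x_ext = list(x) + [1.0]
    return [sum(w * xi for w, xi in zip(row, x_ext)) for row in W_ext]

# Hypothetical toy sizes: 3 classes and 4 pixel values instead of 10 x 3072
W = [[0.2, -0.5, 0.1, 2.0],
     [1.5, 1.3, 2.1, 0.0],
     [0.0, 0.25, 0.2, -0.3]]
b = [1.1, 3.2, -1.2]
x = [56.0, 231.0, 24.0, 2.0]

W_ext = [row + [bk] for row, bk in zip(W, b)]  # each row grows from 4 to 4 + 1 entries
print(scores(W, x, b))
print(scores_bias_trick(W_ext, x))
```

The trick only changes bookkeeping: there is one matrix to optimize instead of a matrix plus a separate bias vector.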

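Loss function: we want to minimize the loss between the label and the estimate. For example, the estimated scores are -96.8 for cat, 437.9 for dog and 61.95 for ship, while the label (1, 0, 0) marks cat as the true class, so this estimate should receive a large loss. Two common loss functions are the multiclass SVM loss and the softmax classifier.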

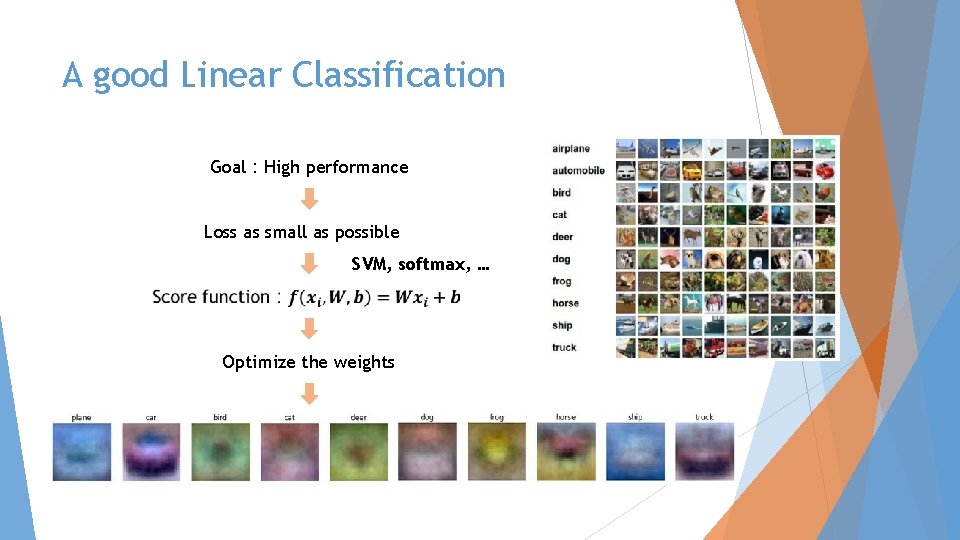
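A sketch that evaluates both losses named on this slide for the cat/dog/ship example. The helper names `svm_loss` and `softmax_loss` are hypothetical, the SVM margin is assumed to be 1, and the softmax loss assumes the natural log and subtracts the largest score before exponentiating (a common numerical-stability trick, not something stated on the slide). Both losses come out large because the true class, cat, gets the lowest score.

```python
import math

def svm_loss(s, y, delta=1.0):
    # Sum over the wrong classes of max(0, s_j - s_y + delta)
    return sum(max(0.0, s[j] - s[y] + delta) for j in range(len(s)) if j != y)

def softmax_loss(s, y):
    # -ln(e^{s_y} / sum_j e^{s_j}) = logsumexp(s) - s_y, with the max subtracted for stability
    m = max(s)
    return m + math.log(sum(math.exp(v - m) for v in s)) - s[y]

scores = [-96.8, 437.9, 61.95]  # cat, dog, ship (the estimate)
label = [1, 0, 0]               # one-hot label: cat is the true class
y = label.index(1)

print(svm_loss(scores, y))      # about 695.45
print(softmax_loss(scores, y))  # about 534.7
```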

A good linear classifier. Goal: high performance, meaning a loss (SVM, softmax, …) that is as small as possible, reached by optimizing the weights.

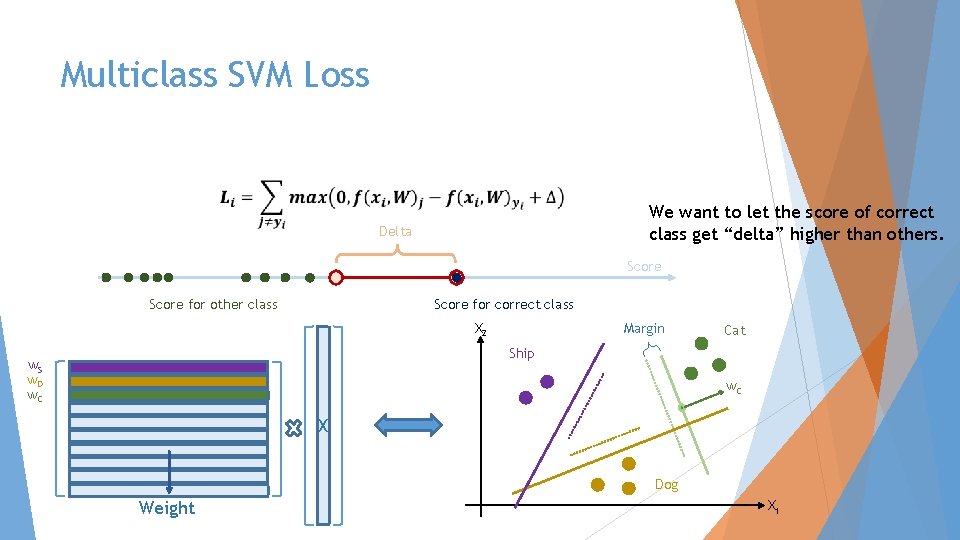
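The slides treat optimization as its own topic. As one common choice (an assumption here, not necessarily the method used in the demos), plain full-batch gradient descent on the softmax loss can be sketched as follows. For the softmax loss, the derivative of $L_i$ with respect to score $s_k$ is $p_k - \mathbb{1}[k = y_i]$, and each weight row $k$ receives that value times $x$. The three training points, the learning rate 0.5 and the 100 steps are hypothetical, and the bias is folded into $W$ by appending a 1 to each point.

```python
import math

def softmax(s):
    """Class probabilities from scores; the max is subtracted first for numerical stability."""
    m = max(s)
    exps = [math.exp(v - m) for v in s]
    total = sum(exps)
    return [e / total for e in exps]

def loss_and_grad(W, X, Y):
    """Mean softmax loss over (X, Y) and its gradient dL/dW (bias folded into W)."""
    C, D = len(W), len(W[0])
    grad = [[0.0] * D for _ in range(C)]
    loss = 0.0
    for x, y in zip(X, Y):
        s = [sum(w * xi for w, xi in zip(row, x)) for row in W]
        p = softmax(s)
        loss -= math.log(p[y])
        for k in range(C):
            # dL_i/ds_k = p_k - 1[k == y], and ds_k/dW[k][d] = x[d]
            coeff = p[k] - (1.0 if k == y else 0.0)
            for d in range(D):
                grad[k][d] += coeff * x[d]
    n = len(X)
    return loss / n, [[g / n for g in row] for row in grad]

# Hypothetical toy data: two features plus a constant 1 (bias trick), three classes
X = [[2.0, 0.0, 1.0], [0.0, 2.0, 1.0], [-2.0, -2.0, 1.0]]
Y = [0, 1, 2]
W = [[0.0] * 3 for _ in range(3)]  # start from all-zero weights
learning_rate = 0.5                # hypothetical step size

initial_loss, _ = loss_and_grad(W, X, Y)
for _ in range(100):
    _, grad = loss_and_grad(W, X, Y)
    # Gradient descent step: move every weight against its gradient
    W = [[w - learning_rate * g for w, g in zip(row, g_row)] for row, g_row in zip(W, grad)]
final_loss, _ = loss_and_grad(W, X, Y)
print(initial_loss, final_loss)
```

With all-zero weights every class has probability 1/3, so the starting loss is $\ln 3 \approx 1.10$; the loop then drives it down.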

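Multiclass SVM loss: we want the score of the correct class to be higher than the score of every other class by at least a margin $\Delta$ (delta). Any other class whose score comes within $\Delta$ of the correct score adds to the loss:

$$L_i = \sum_{j \neq y_i} \max(0,\ s_j - s_{y_i} + \Delta)$$

(Figure: weight vectors $w_C$, $w_D$, $w_S$ for cat, dog and ship in the $X_1$-$X_2$ plane, with the margin $\Delta$ between the score for the correct class and the scores for the other classes.)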

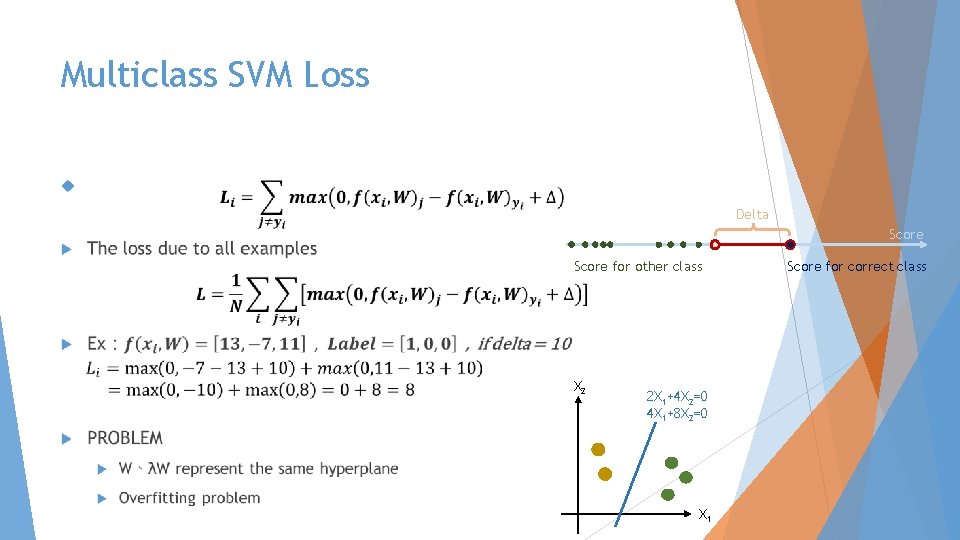
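A per-class sketch of the multiclass SVM loss $L_i = \sum_{j \neq y_i} \max(0, s_j - s_{y_i} + \Delta)$, assuming $\Delta = 1$. It reproduces the worked example that appears later in these slides (scores 1, 6, 4 with the correct class at index 2) and shows that the loss drops to zero once the correct score leads every other score by at least $\Delta$. The function names are hypothetical.

```python
def svm_terms(s, y, delta=1.0):
    """Per-class hinge terms max(0, s_j - s_y + delta) for j != y; the correct class has no term."""
    return {j: max(0.0, s[j] - s[y] + delta) for j in range(len(s)) if j != y}

def svm_loss(s, y, delta=1.0):
    return sum(svm_terms(s, y, delta).values())

# Scores (1, 6, 4), correct class y = 2 (score 4), delta = 1
print(svm_terms([1.0, 6.0, 4.0], 2))  # {0: 0.0, 1: 3.0}
print(svm_loss([1.0, 6.0, 4.0], 2))   # 3.0

# Once the correct score beats every other score by at least delta, the loss is zero
print(svm_loss([1.0, 3.0, 4.0], 2))   # 0.0
```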

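Multiclass SVM loss, continued: the weights that achieve zero loss are not unique. The boundaries $2X_1 + 4X_2 = 0$ and $4X_1 + 8X_2 = 0$ are the same line, since the second is the first multiplied by 2. Scaling the weights (and bias) scales every score difference by the same factor, so if $W$ already meets the margin $\Delta$ on every example, $2W$ does too, and both give zero loss. (Figure: score for the correct class vs. score for the other class, separated by the margin $\Delta$.)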

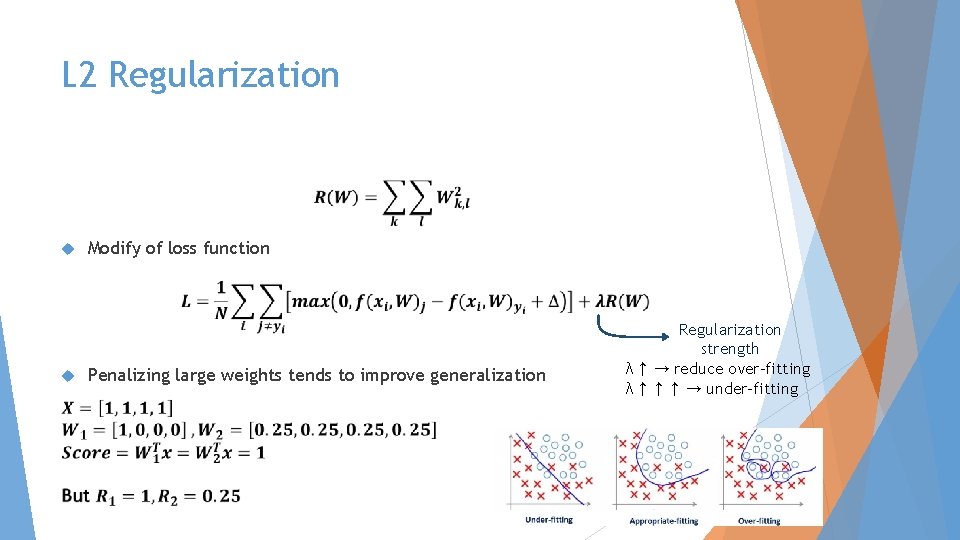
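The two lines on this slide differ only by a factor of 2, so they describe the same decision boundary and scaling the weights cannot change which side a point falls on. A sketch of that, plus the consequence for the SVM loss: doubling a set of scores (as doubling $W$ and $b$ would) keeps an already-satisfied loss at zero. The test points, the scores and the margin $\Delta = 1$ are hypothetical.

```python
def boundary_side(w, x):
    # Sign of w . x: which side of the line w . x = 0 the point lies on (0 = on the line)
    d = sum(wi * xi for wi, xi in zip(w, x))
    return (d > 0) - (d < 0)

w1 = [2.0, 4.0]  # 2*X1 + 4*X2 = 0
w2 = [4.0, 8.0]  # 4*X1 + 8*X2 = 0, i.e. w1 scaled by 2

points = [[1.0, 1.0], [-3.0, 1.0], [2.0, -1.0], [0.5, -2.0]]
same_side = all(boundary_side(w1, p) == boundary_side(w2, p) for p in points)

def svm_loss(s, y, delta=1.0):
    return sum(max(0.0, s[j] - s[y] + delta) for j in range(len(s)) if j != y)

s = [1.0, 3.0, 5.0]              # hypothetical scores, correct class y = 2
s_doubled = [2.0 * v for v in s] # doubling W and b doubles every score
print(same_side, svm_loss(s, 2), svm_loss(s_doubled, 2))  # True 0.0 0.0
```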

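L2 regularization: modify the loss function by adding a penalty on large weights, which tends to improve generalization:

$$L = \frac{1}{N}\sum_i L_i + \lambda \sum_k \sum_l W_{k,l}^2$$

The regularization strength $\lambda$ sets the trade-off: increasing $\lambda$ reduces over-fitting, but a very large $\lambda$ causes under-fitting.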

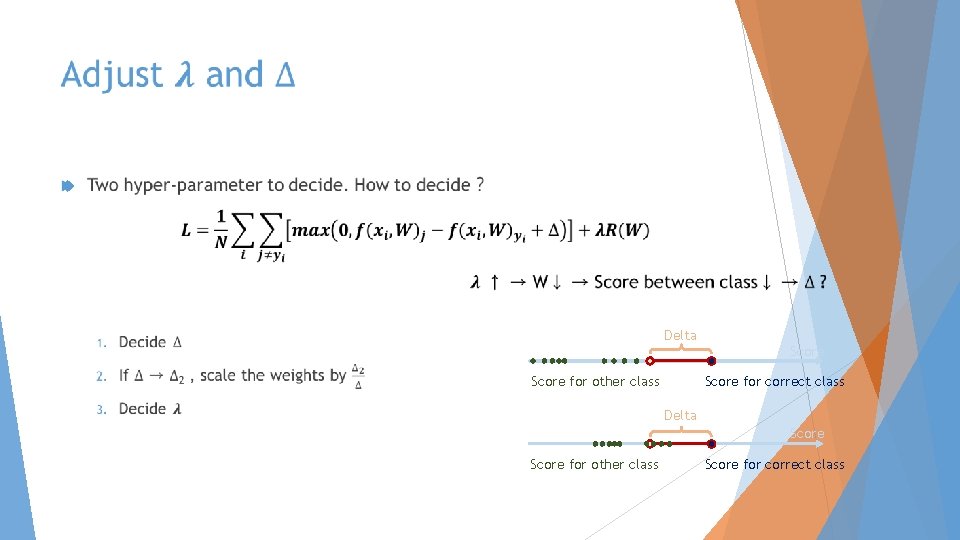
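A sketch of the regularized loss $L = \frac{1}{N}\sum_i L_i + \lambda \sum_{k,l} W_{k,l}^2$, assuming the SVM data loss with $\Delta = 1$ and the bias folded into $W$. The two-class toy data, the weights and $\lambda = 0.1$ are hypothetical. $W$ and $2W$ both give zero data loss, so only the L2 penalty tells them apart, and it favors the smaller weights.

```python
def svm_loss(s, y, delta=1.0):
    return sum(max(0.0, s[j] - s[y] + delta) for j in range(len(s)) if j != y)

def scores(W, x):
    # Bias folded into W (bias trick), so each x already ends with a constant 1
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def total_loss(W, X, Y, lam, delta=1.0):
    """L = (1/N) * sum_i L_i + lam * sum_{k,l} W[k][l]**2"""
    data_loss = sum(svm_loss(scores(W, x), y, delta) for x, y in zip(X, Y)) / len(X)
    reg_loss = lam * sum(w * w for row in W for w in row)
    return data_loss + reg_loss

# Hypothetical two-class toy data: two features plus the constant 1 for the bias
X = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
Y = [0, 1]
W = [[2.0, 0.0, 0.0],
     [0.0, 2.0, 0.0]]
W2 = [[2.0 * w for w in row] for row in W]  # same decision boundary, larger weights

lam = 0.1
print(total_loss(W, X, Y, 0.0), total_loss(W2, X, Y, 0.0))  # 0.0 0.0
print(total_loss(W, X, Y, lam), total_loss(W2, X, Y, lam))
```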

(Figure: the margin $\Delta$ between the score for the correct class and the scores for the other classes.)

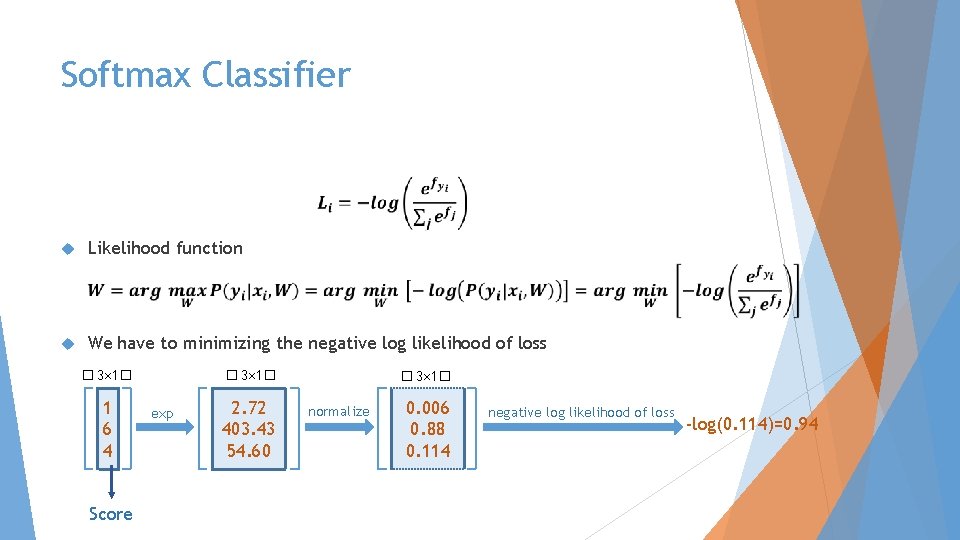

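Softmax classifier (likelihood function): the scores are exponentiated and normalized into class probabilities, and we minimize the negative log-likelihood of the correct class:

$$L_i = -\ln\left(\frac{e^{s_{y_i}}}{\sum_j e^{s_j}}\right)$$

Example with the 3×1 score vector (1, 6, 4), where the correct class is the third one (score 4):
- exp: 2.72, 403.43, 54.60
- normalize (divide by their sum, 460.75): 0.0059, 0.8756, 0.1185
- negative log-likelihood loss: $-\ln(0.1185) \approx 2.13$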

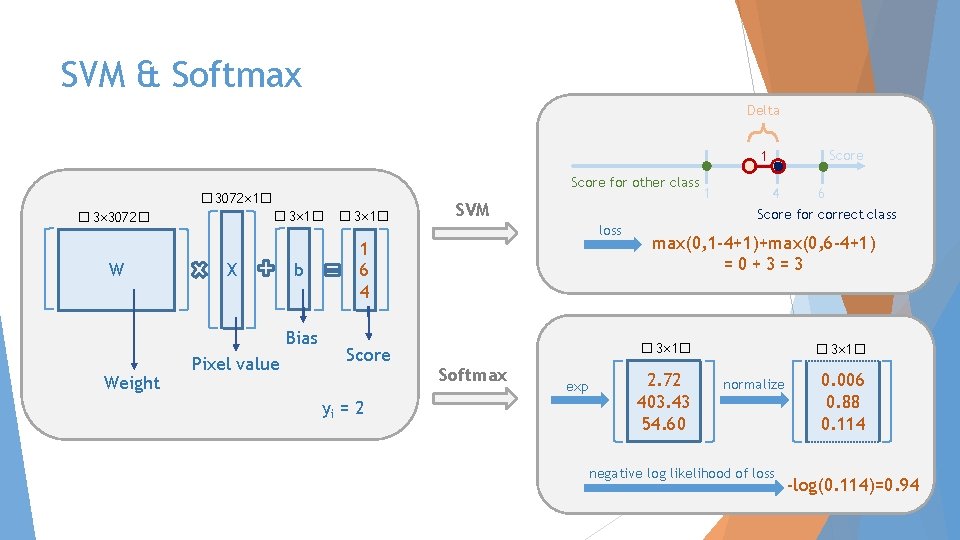
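A sketch of the softmax computation on the scores (1, 6, 4), assuming the natural log and that the correct class is the third one (index 2). Subtracting the maximum score before exponentiating is a standard stability trick added here; it does not change the probabilities. Rounded, the probabilities are 0.0059, 0.8756 and 0.1185, and the loss is about 2.13.

```python
import math

def softmax(s):
    # Shift by the max score before exp; this leaves the probabilities unchanged
    m = max(s)
    exps = [math.exp(v - m) for v in s]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_loss(s, y):
    # Negative log-likelihood (natural log) of the correct class y
    return -math.log(softmax(s)[y])

s = [1.0, 6.0, 4.0]  # scores from the slide
y = 2                # correct class: index 2, the one with score 4
probs = softmax(s)
print([round(p, 4) for p in probs])  # [0.0059, 0.8756, 0.1185]
print(round(softmax_loss(s, y), 2))  # 2.13
```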

SVM & Softmax on the same example: weights $W$ (3×3072), pixel values $x$ (3072×1) and bias $b$ produce the 3×1 score vector (1, 6, 4). The correct class is $y_i = 2$ (counting from 0), whose score is 4.
- SVM loss with $\Delta = 1$: $\max(0, 1 - 4 + 1) + \max(0, 6 - 4 + 1) = 0 + 3 = 3$
- Softmax loss: exp gives 2.72, 403.43, 54.60; normalizing gives 0.0059, 0.8756, 0.1185; the negative log-likelihood is $-\ln(0.1185) \approx 2.13$

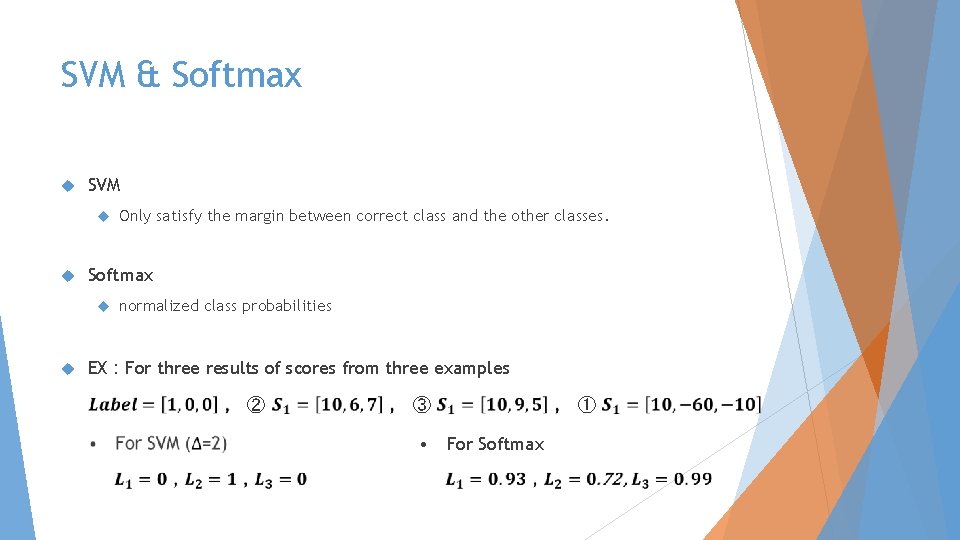

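SVM & Softmax compared:
- SVM: only requires the margin between the correct class and the other classes to be satisfied; once it is, the loss is zero and the exact scores no longer matter.
- Softmax: produces normalized class probabilities, so its loss stays above zero and keeps decreasing as the correct class gets more probability.
Example: three score vectors from three examples (①, ②, ③), compared under the softmax loss.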
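A sketch of this difference using three hypothetical score vectors (the slide's own three examples are not recoverable from this text), with class 0 correct in each. All three already meet the SVM margin ($\Delta = 1$), so the SVM loss is 0 for every one, while the softmax loss (natural log) still separates them: the example whose wrong-class scores sit right next to the correct score gets a clearly larger loss.

```python
import math

def svm_loss(s, y, delta=1.0):
    # Multiclass SVM (hinge) loss with margin delta
    return sum(max(0.0, s[j] - s[y] + delta) for j in range(len(s)) if j != y)

def softmax_loss(s, y):
    # -ln(softmax(s)[y]) computed as logsumexp(s) - s[y], shifting by the max for stability
    m = max(s)
    return m + math.log(sum(math.exp(v - m) for v in s)) - s[y]

# Hypothetical scores for three examples; class 0 is the correct class in each
examples = [[10.0, -2.0, 3.0], [10.0, 9.0, 9.0], [10.0, -100.0, -100.0]]
svm_losses = [svm_loss(s, 0) for s in examples]
softmax_losses = [softmax_loss(s, 0) for s in examples]
print(svm_losses)                             # [0.0, 0.0, 0.0]
print([round(v, 4) for v in softmax_losses])  # [0.0009, 0.5514, 0.0]
```

The third softmax loss is positive in exact arithmetic but rounds to 0.0 in double precision, because $e^{-110}$ is negligible next to 1.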

- Slides: 14