If I Only had a Brain Search II

“If I Only had a Brain”: Search II
Lecture 3-2, January 21st, 1999
CS 250: Intro to AI/Lisp

Monotonicity
• A heuristic is monotonic if f(n) = g(n) + h(n) never decreases along any path from the root
  – Why might this be an important feature?
• Non-monotonic? Use pathmax:
  – Given a node n and its child n', set f(n') = max(f(n), g(n') + h(n'))
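The pathmax rule is easy to apply along a single path. The sketch below assumes a hypothetical representation in which a path is a list of `(g . h)` conses from the root to a leaf. `pathmax-f-values` is our own name, not part of the course code.

```lisp
;;; Pathmax sketch. A path is (hypothetically) a list of (g . h) conses,
;;; ordered from the root to the leaf.

(defun pathmax-f-values (path)
  "Return the f-values along PATH with pathmax applied:
f(child) = max(f(parent), g(child) + h(child)), so they never decrease."
  (let ((parent-f nil)
        (result '()))
    (dolist (node path (nreverse result))
      (let* ((raw-f (+ (car node) (cdr node)))          ; plain g + h
             (f (if parent-f (max parent-f raw-f) raw-f)))
        (push f result)
        (setf parent-f f)))))
```

For the path `((0 . 5) (2 . 2) (3 . 4))`, the raw f-values are 5, 4, 7. The heuristic is not monotonic here, because f drops from 5 to 4. Pathmax returns `(5 5 7)`.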

A* in Action
• Contoured state space
• A* starts at the initial node
• Expands the leaf node with the lowest f(n)
• Fans out to increasing contours
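The contour picture can also be seen in code. Below is a minimal A* sketch under several assumptions: it is a tree search with no closed list, the frontier is a plain list kept sorted by f, and edges are directed `(from to cost)` triples. The names `a-star`, `*romania-edges*`, `*romania-h*`, and `romania-h` are hypothetical. The map data is adapted from the Romania example in Russell and Norvig's textbook rather than taken from these slides.

```lisp
;;; A* tree-search sketch. Frontier entries are (f g reversed-path).

(defun a-star (start goal edges h)
  "Return (values path cost expansions), or NIL if GOAL is unreachable.
EXPANSIONS lists (node . f) in the order nodes were expanded."
  (let ((frontier (list (list (funcall h start) 0 (list start))))
        (expansions '()))
    (loop while frontier do
      (let* ((entry (pop frontier))          ; lowest-f leaf
             (f (first entry))
             (g (second entry))
             (path (third entry))
             (node (first path)))
        (when (eql node goal)
          (return-from a-star (values (reverse path) g (nreverse expansions))))
        (push (cons node f) expansions)
        ;; Add every child of NODE to the frontier with f = g + h.
        (dolist (edge edges)
          (when (eql (first edge) node)
            (let ((child-g (+ g (third edge))))
              (push (list (+ child-g (funcall h (second edge)))
                          child-g
                          (cons (second edge) path))
                    frontier))))
        ;; Keep the frontier ordered so the lowest-f leaf is expanded next.
        (setf frontier (stable-sort frontier #'< :key #'first))))
    nil))

;; Illustrative data (adapted): road distances and straight-line
;; distances to Bucharest, used as h.
(defparameter *romania-edges*
  '((arad sibiu 140) (arad timisoara 118) (arad zerind 75)
    (sibiu fagaras 99) (sibiu rimnicu 80)
    (rimnicu pitesti 97) (pitesti bucharest 101) (fagaras bucharest 211)))

(defparameter *romania-h*
  '((arad . 366) (sibiu . 253) (timisoara . 329) (zerind . 374)
    (fagaras . 176) (rimnicu . 193) (pitesti . 100) (bucharest . 0)))

(defun romania-h (city)
  (cdr (assoc city *romania-h*)))
```

Calling `(a-star 'arad 'bucharest *romania-edges* #'romania-h)` returns the path `(arad sibiu rimnicu pitesti bucharest)` with cost 418. The expanded nodes have f-values 366, 393, 413, 415, 417, which never decrease. That is the "fanning out" across contours of increasing f.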

A* in Perspective
• If h(n) = 0 everywhere, A* is uniform-cost search
• If h(n) is an exact estimate of the remaining cost, A* expands only nodes on optimal paths and runs in linear time!
• Different kinds of error in h(n) lead to different performance
• A* is optimally efficient: for a given heuristic, no other optimal best-first search is guaranteed to expand fewer nodes
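The first two claims follow directly from f(n) = g(n) + h(n). In the worked equations below, h^*(n) denotes the exact remaining cost and f^* the cost of an optimal solution. With h ≡ 0, A* orders its queue by g alone, which is exactly uniform-cost search. With h = h^*, every node off the optimal paths has f above f^*, so (given favorable tie-breaking) A* never strays from an optimal path.

```latex
% Case 1: h(n) = 0 for every node n
f(n) = g(n) + h(n) = g(n) + 0 = g(n)

% Case 2: h(n) = h^*(n), the exact remaining cost
f(n) = g(n) + h^*(n) = f^*  \quad \text{for every } n \text{ on an optimal path}
f(n) = g(n) + h^*(n) > f^*  \quad \text{for every } n \text{ on no optimal path}
```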

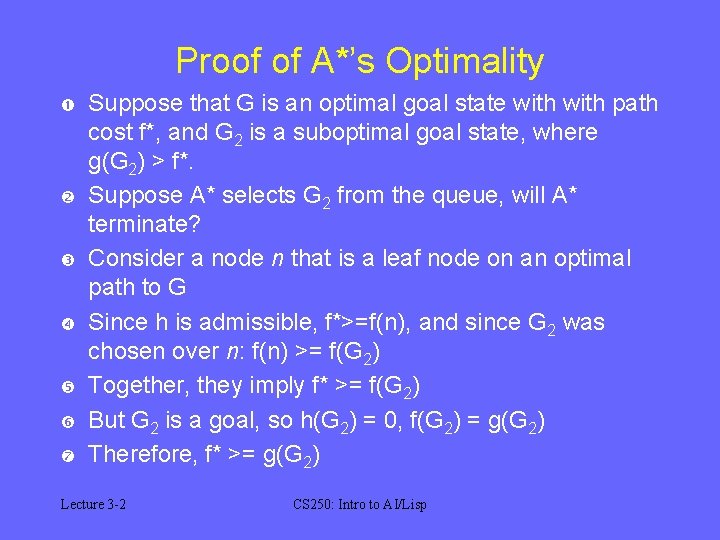

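Proof of A*’s Optimality
Suppose that $G$ is an optimal goal state with path cost $f^*$, and $G_2$ is a suboptimal goal state, so $g(G_2) > f^*$. Suppose A* selects $G_2$ from the queue. A* would then terminate with a suboptimal solution, so we show this cannot happen.
Consider a node $n$ that is a leaf node on an optimal path to $G$ (until $G$ is selected, some such node is always on the queue).
• Since $h$ is admissible, $f^* \ge f(n)$; and since $G_2$ was chosen over $n$, $f(n) \ge f(G_2)$
• Together, these imply $f^* \ge f(G_2)$
• But $G_2$ is a goal, so $h(G_2) = 0$ and $f(G_2) = g(G_2)$
• Therefore $f^* \ge g(G_2)$, contradicting $g(G_2) > f^*$. So A* never selects a suboptimal goal, and the first goal it selects is optimal.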

A*’s Complexity
• Depends on the error of h(n)
  – Always 0: uniform-cost search (breadth-first search when every step costs the same)
  – Exactly right: time O(n)
  – Constant absolute error: time O(n), but more than when exactly right
  – Constant relative error: time O(n^k), space O(n^k)
• See Figure 4.8

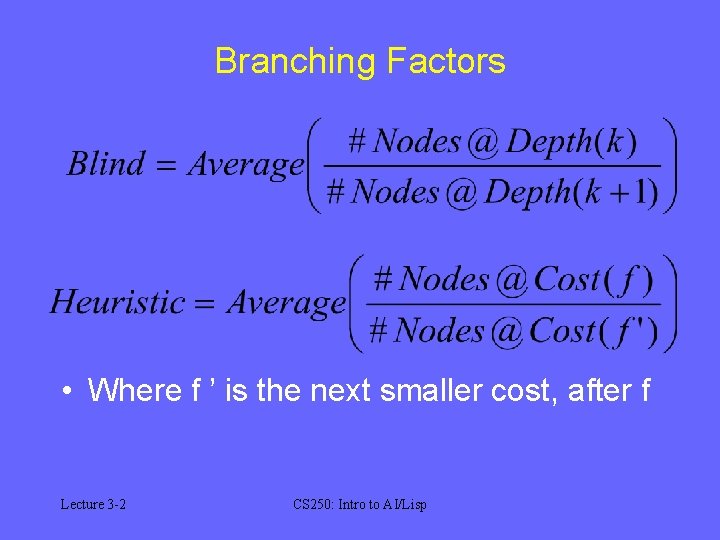

Branching Factors
• Where f′ is the next smaller cost after f

Inventing Heuristics
• Dominant heuristics: bigger is better, if you don’t overestimate
• How do you create heuristics?
  – Relaxed problem
  – Statistical approach
• Constraint satisfaction
  – Most-constrained variable
  – Most-constraining variable
  – Least-constraining value
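Dominance and relaxed problems are easiest to see with a concrete puzzle. The sketch below uses the 8-puzzle, which is our own choice of example and not part of this slide. A state is assumed to be a 9-element vector read row by row, with 0 marking the blank. Both function names are hypothetical.

```lisp
;;; Two 8-puzzle heuristics, each derived from a relaxed problem.

(defun misplaced-tiles (state goal)
  "h1: number of tiles (ignoring the blank) not on their goal square.
Relaxation: a tile may jump to any square in one move."
  (loop for i from 0 below 9
        count (and (/= (aref state i) 0)
                   (/= (aref state i) (aref goal i)))))

(defun manhattan-distance (state goal)
  "h2: sum over tiles of row distance plus column distance to the goal.
Relaxation: a tile may slide onto any adjacent square, even an occupied one."
  (loop for i from 0 below 9
        for tile = (aref state i)
        unless (zerop tile)
          sum (let ((j (position tile goal)))   ; goal square of TILE
                (+ (abs (- (floor i 3) (floor j 3)))
                   (abs (- (mod i 3) (mod j 3)))))))
```

For the start state `#(7 2 4 5 0 6 8 3 1)` and goal `#(0 1 2 3 4 5 6 7 8)`, h1 is 8 and h2 is 18. Every misplaced tile is at least one square from home, so h2 ≥ h1 on every state. Neither overestimates, so h2 dominates h1 and is the better choice.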

Improving on A*
• DFID gives the best of both worlds: the low memory use of depth-first search with the completeness of breadth-first search
• Can we repeat the trick with A*?
  – Successive iterations:
    • Increasing search depth (as with DFID)
    • Increasing total path cost

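Iterative Deepening A*
• Keeps the good stuff in A*: complete and optimal with an admissible heuristic
• Limited memory: each iteration is a depth-first search bounded by an f-cost limit, so it stores only the current path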
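A minimal IDA* sketch follows. Each iteration is a depth-first search that prunes any node whose f = g + h exceeds the current bound. The next bound is the smallest f that was pruned. Assumptions: edges are directed `(from to cost)` triples, the graph is acyclic (there is no cycle check), and the names `ida-contour`, `ida-star`, `*tiny-edges*`, and `tiny-h` are hypothetical.

```lisp
;;; IDA* sketch: repeated depth-first searches with a rising f-cost limit.

(defun ida-contour (node goal edges h g bound path)
  "Depth-first search of nodes with f = g + h within BOUND.
Returns (values solution cost nil) on success, otherwise
(values nil nil next-bound): the smallest f that exceeded BOUND, or NIL."
  (let ((f (+ g (funcall h node))))
    (cond ((> f bound) (values nil nil f))            ; outside this contour
          ((eql node goal) (values (reverse path) g nil))
          (t (let ((next-bound nil))
               (dolist (edge edges (values nil nil next-bound))
                 (when (eql (first edge) node)
                   (multiple-value-bind (solution cost over)
                       (ida-contour (second edge) goal edges h
                                    (+ g (third edge)) bound
                                    (cons (second edge) path))
                     (when solution
                       (return-from ida-contour (values solution cost nil)))
                     ;; Remember the smallest f that overflowed the bound.
                     (when (and over (or (null next-bound) (< over next-bound)))
                       (setf next-bound over))))))))))

(defun ida-star (start goal edges h)
  "Return (values path cost), or NIL if GOAL is unreachable."
  (let ((bound (funcall h start)))
    (loop
      (multiple-value-bind (solution cost next-bound)
          (ida-contour start goal edges h 0 bound (list start))
        (cond (solution (return (values solution cost)))
              ((null next-bound) (return nil))
              (t (setf bound next-bound)))))))   ; raise the limit and retry

;; Hypothetical example graph with an admissible heuristic.
(defparameter *tiny-edges*
  '((s a 1) (s b 4) (a b 2) (a c 5) (b c 1) (c d 3)))
(defparameter *tiny-h* '(s 6 a 5 b 3 c 2 d 0))
(defun tiny-h (node) (getf *tiny-h* node))
```

On this graph, the first iteration uses bound h(s) = 6. It fails and reports 7 as the smallest pruned f. The second iteration returns `(s a b c d)` with cost 7. Memory stays proportional to the path length, because only the recursion stack is stored.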

Iterative Improvements
• Loop through, trying to “zero in” on the solution
• Hill climbing
  – Keep moving to a higher neighboring state
  – Problems? Local maxima, plateaus, ridges
• Solution? Add a touch of randomness
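The local-maximum problem shows up even on a one-dimensional landscape. The sketch below runs steepest-ascent hill climbing over the indices of a vector of heights, where the neighbors of index i are i − 1 and i + 1. The representation and the name `hill-climb` are hypothetical.

```lisp
;;; Steepest-ascent hill climbing on a 1-D landscape.

(defun hill-climb (heights start)
  "Climb from index START until no neighbor is strictly higher.
Returns the index where the climb stops (possibly only a local maximum)."
  (let ((current start)
        (n (length heights)))
    (loop
      (let ((best current))
        ;; Pick the highest of the two neighbors, if either beats CURRENT.
        (dolist (i (list (1- current) (1+ current)))
          (when (and (<= 0 i (1- n))
                     (> (aref heights i) (aref heights best)))
            (setf best i)))
        ;; No uphill move left: stop here.
        (when (= best current)
          (return current))
        (setf current best)))))
```

With heights `#(1 3 5 4 2 6 9 7)`, starting at index 0 stops at index 2 (height 5), a local maximum. Starting at index 4 reaches the global maximum at index 6 (height 9). The randomness added by simulated annealing is one way to escape the first case.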

Annealing
an·neal vb [ME anelen to set on fire, fr. OE onaelan, fr. on + aelan to set on fire, burn, fr. al fire; akin to OE aeled fire, ON eldr] vt (1664)
1 a: to heat and then cool (as steel or glass) usu. for softening and making less brittle; also: to cool slowly usu. in a furnace
  b: to heat and then cool (nucleic acid) in order to separate strands and induce combination at lower temperature esp. with complementary strands of a different species
2: strengthen, toughen
~ vi: to be capable of combining with complementary nucleic acid by a process of heating and cooling

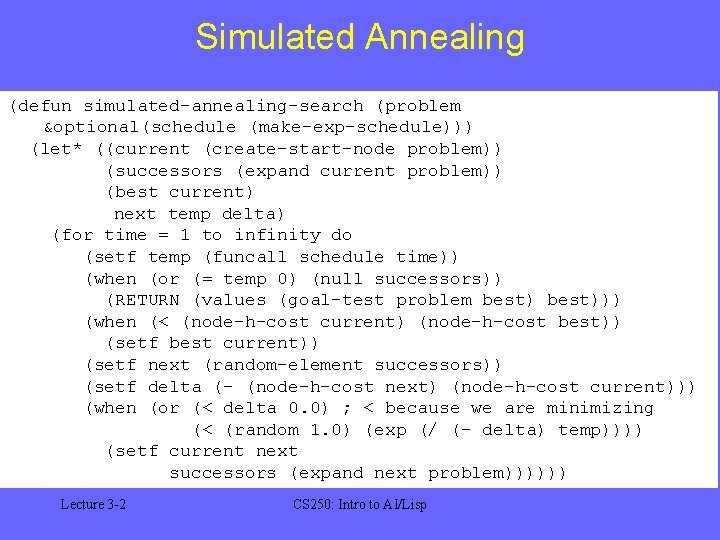

Simulated Annealing

    ;; Assumes create-start-node, expand, node-h-cost, random-element,
    ;; goal-test and make-exp-schedule are defined elsewhere (they are not
    ;; standard Common Lisp).
    (defun simulated-annealing-search (problem &optional (schedule (make-exp-schedule)))
      (let* ((current (create-start-node problem))
             (successors (expand current problem))
             (best current)
             next temp delta)
        (loop for time from 1 do
          (setf temp (funcall schedule time))
          ;; Stop when the schedule reaches zero or there is nowhere to move;
          ;; return whether BEST is a goal, plus BEST itself.
          (when (or (= temp 0) (null successors))
            (return (values (goal-test problem best) best)))
          ;; Remember the lowest-cost node seen so far.
          (when (< (node-h-cost current) (node-h-cost best))
            (setf best current))
          (setf next (random-element successors))
          (setf delta (- (node-h-cost next) (node-h-cost current)))
          (when (or (< delta 0.0)          ; < because we are minimizing
                    (< (random 1.0) (exp (/ (- delta) temp))))
            (setf current next
                  successors (expand next problem))))))
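The search above relies on helper functions from the course's problem-solving code. As a stand-alone illustration, here is a self-contained sketch of the same acceptance rule on a toy problem: minimize (x − 3)² over the integers −10 to 10, where the successors of x are x − 1 and x + 1. The problem, the exponential schedule constants, and all `toy-` names are hypothetical. Double floats are used so that e^(−delta/T) does not underflow at low temperatures.

```lisp
;;; Simulated annealing on a toy integer problem (all names hypothetical).

(defun toy-cost (x)
  (expt (- x 3) 2))

(defun toy-successors (x)
  "Neighbors of X that stay inside [-10, 10]."
  (remove-if-not (lambda (y) (<= -10 y 10)) (list (1- x) (1+ x))))

(defun toy-schedule (time)
  "Exponential cooling, T = 10 e^(-0.005 time); drops to 0 after 1000 steps."
  (if (< time 1000)
      (* 10d0 (exp (* -0.005d0 time)))
      0))

(defun toy-simulated-annealing (start)
  "Return the lowest-cost state seen while annealing from START."
  (let ((current start)
        (best start))
    (loop for time from 1
          for temp = (toy-schedule time)
          until (= temp 0)
          do (let* ((successors (toy-successors current))
                    (next (nth (random (length successors)) successors))
                    (delta (- (toy-cost next) (toy-cost current))))
               ;; Always accept improvements; accept a worse move with
               ;; probability e^(-delta/T).
               (when (or (< delta 0)
                         (< (random 1d0) (exp (/ (- delta) temp))))
                 (setf current next))
               (when (< (toy-cost current) (toy-cost best))
                 (setf best current))))
    best))
```

Starting from −10, the downhill pull is strong, so the run essentially always reaches the minimum at x = 3 well before the schedule hits zero. Like the slide's version, it reports the best state seen, not just the final one.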

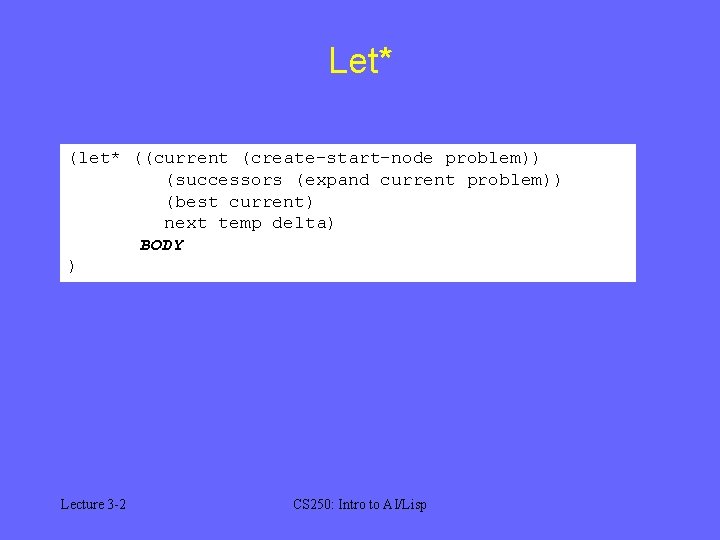

Let*

    (let* ((current (create-start-node problem))
           (successors (expand current problem))
           (best current)
           next temp delta)
      BODY)
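`let*` is needed here because the bindings are made in sequence: `successors` and `best` both refer to `current`, which must already be bound. Plain `let` evaluates all initial values before binding any of them. The small comparison below illustrates this; `binding-demo` is a hypothetical name.

```lisp
(defun binding-demo ()
  "Contrast LET (parallel binding) with LET* (sequential binding)."
  (let ((x 1))
    (list
     ;; LET: the init form (+ x 1) still sees the outer X = 1, so Y = 2.
     (let ((x 10) (y (+ x 1)))
       (declare (ignorable x))
       y)
     ;; LET*: X = 10 is already bound when Y's init form runs, so Y = 11.
     (let* ((x 10) (y (+ x 1)))
       y)
     ;; A bare symbol in the binding list, like NEXT, TEMP and DELTA on
     ;; the slide, is simply bound to NIL.
     (let* (next temp)
       (list next temp)))))
```

`(binding-demo)` returns `(2 11 (NIL NIL))`.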

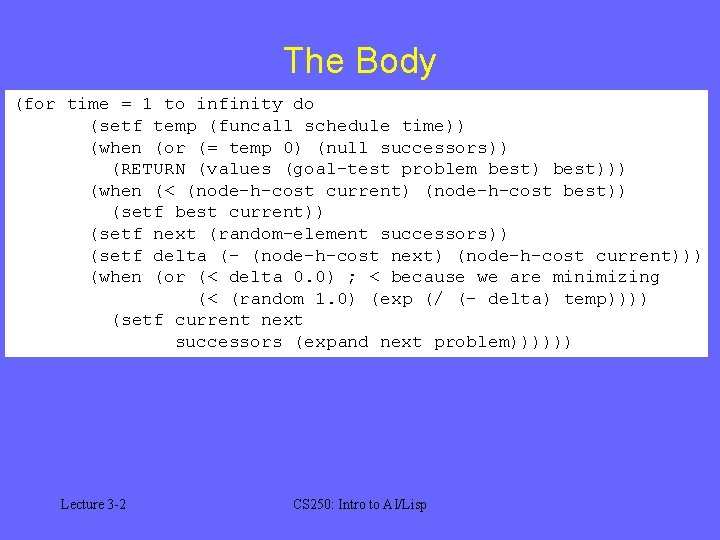

The Body

    (loop for time from 1 do
      (setf temp (funcall schedule time))
      (when (or (= temp 0) (null successors))
        (return (values (goal-test problem best) best)))
      (when (< (node-h-cost current) (node-h-cost best))
        (setf best current))
      (setf next (random-element successors))
      (setf delta (- (node-h-cost next) (node-h-cost current)))
      (when (or (< delta 0.0)          ; < because we are minimizing
                (< (random 1.0) (exp (/ (- delta) temp))))
        (setf current next
              successors (expand next problem))))
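The last `when` in the body is the heart of annealing. Because `(random 1.0)` is uniform on [0, 1), a move with delta ≥ 0 is accepted with probability e^(−delta/temp), and an improving move is always accepted. The hypothetical helper below computes that probability directly, assuming temp > 0.

```lisp
(defun acceptance-probability (delta temp)
  "Chance that the loop body moves from CURRENT to NEXT, where
DELTA = cost(next) - cost(current) and TEMP > 0 is the temperature."
  (if (< delta 0)
      1d0                           ; improvements are always accepted
      (exp (/ (- delta) temp))))    ; worse moves: e^(-delta/temp)
```

A move that is worse by 2 is accepted with probability about 0.82 at temp 10, but only about 0.14 at temp 1. As the schedule cools, the search behaves more and more like hill climbing.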
