- Slides: 34

IEEE Floating-point Standards

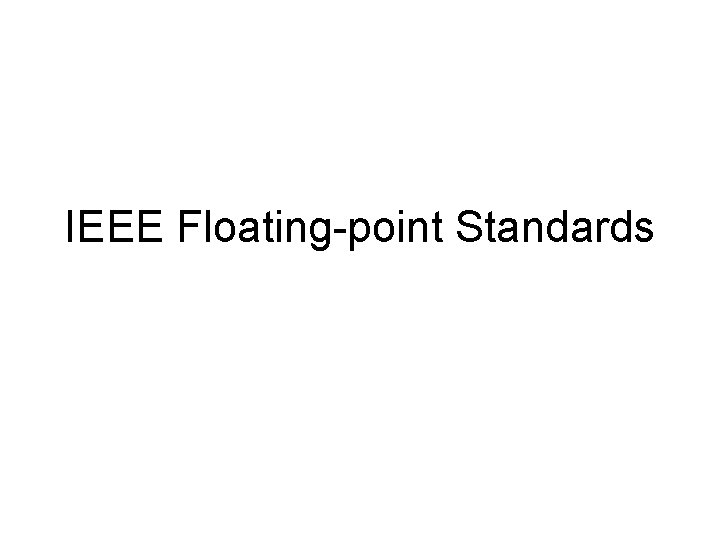

IEEE Standards

- IEEE 754 (what we will study)
  - Specifies single and double precision
    - These correspond to `float` and `double`
    - A binary system (base = 2)
    - Specifies the bit layout
  - Also allows for extended precision
- IEEE 854
  - “Radix-independent” standard
    - But only allows bases 2 and 10 :-)
  - Allows for either decimal or binary
    - Calculators use decimal
  - Does not constrain layout
  - Used for decimal systems (financial, etc.)

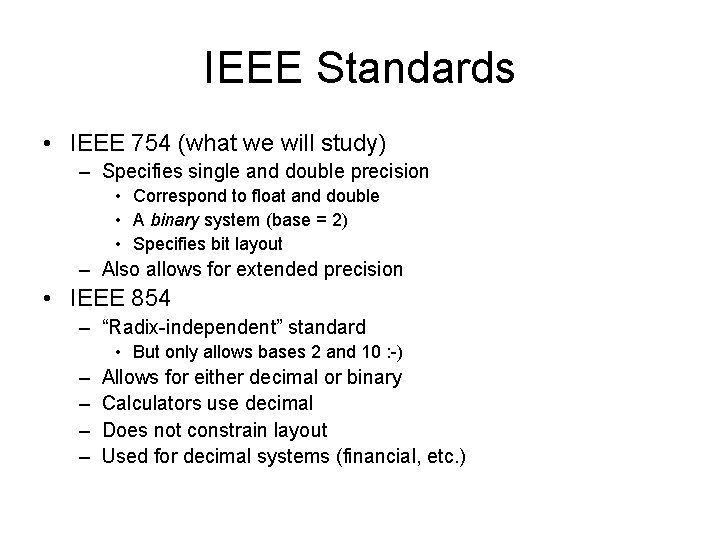

IEEE 754 Single Precision

- 32 bits
- Base = 2
- Precision = 24
  - Only 23 bits are stored for normalized numbers
  - Will explain subnormal numbers shortly
    - Will also explain 0!
- $\varepsilon = 2^{-23} \approx 1.1921 \times 10^{-7}$
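
The relationship between precision and ε can be checked against the standard library. This is a minimal sketch; the function name `float_epsilon_from_precision` is made up for illustration, and it assumes `float` is the IEEE 754 single-precision type.

```cpp
#include <cmath>
#include <limits>

// Machine epsilon for float: the gap between 1.0f and the next larger float.
// With p = 24 bits of precision this is 2^-(p-1) = 2^-23.
// (Hypothetical helper; assumes float is IEEE 754 single precision.)
inline float float_epsilon_from_precision() {
    const int p = std::numeric_limits<float>::digits;  // 24 on IEEE 754 systems
    return std::ldexp(1.0f, -(p - 1));                 // 1.0 * 2^-23
}
```

On an IEEE 754 system the returned value should equal `std::numeric_limits<float>::epsilon()`, and also `std::nextafter(1.0f, 2.0f) - 1.0f`.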

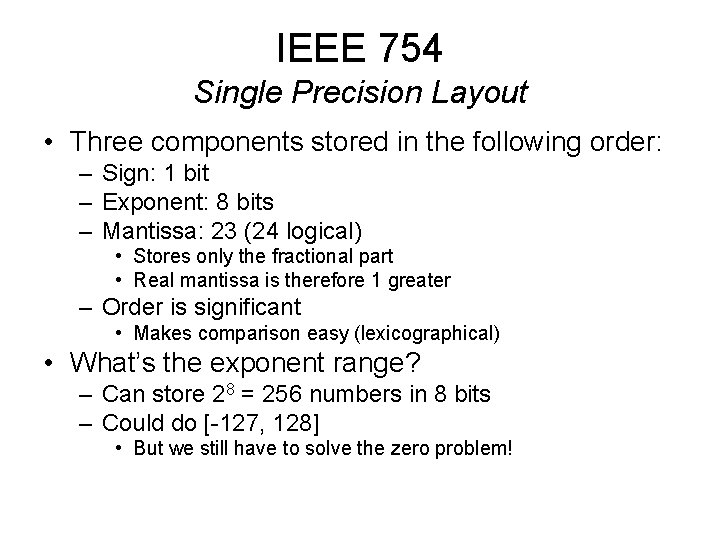

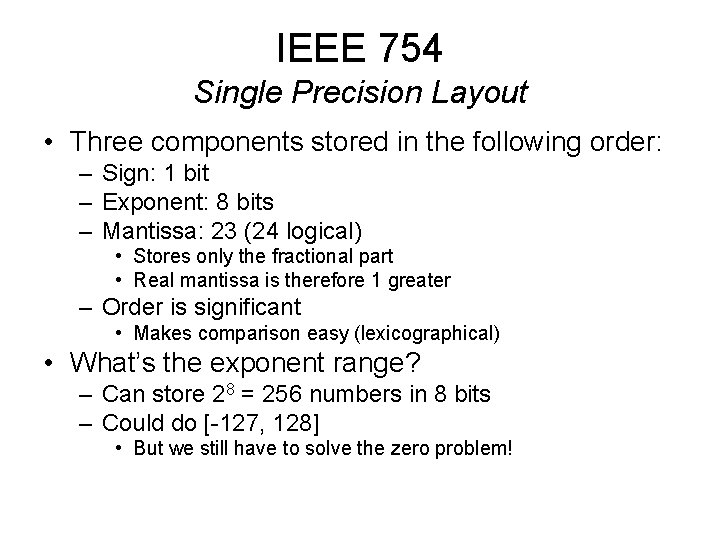

IEEE 754 Single Precision Layout

- Three components stored in the following order:
  - Sign: 1 bit
  - Exponent: 8 bits
  - Mantissa: 23 bits (24 logical)
    - Stores only the fractional part
    - The real mantissa is therefore 1 greater
  - Order is significant
    - Makes comparison easy (lexicographical)
- What’s the exponent range?
  - Can store $2^8 = 256$ numbers in 8 bits
  - Could do [-127, 128]
    - But we still have to solve the zero problem!

IEEE 754 Single Precision Layout - Exponent

- Stores the exponent in a biased format
  - That is, it is offset by the bias before storing
  - The bias for single precision is 127
    - The stored (biased) range [0, 255] represents an actual (unbiased) range of [-127, 128]
  - So when storing, add 127 to the actual exponent to get the biased exponent
  - When retrieving, subtract 127 from the biased exponent to get the actual exponent

IEEE Float examples (sign | exponent | fraction)

- 1: 0 01111111 00000000000000000000000
- 2: 0 10000000 00000000000000000000000
- 6.5: 0 10000001 10100000000000000000000
- -6.5: 1 10000001 10100000000000000000000
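
The three stored fields can be pulled out of a `float` with shifts and masks. The sketch below is one way to do it, not the course's IEEEFloat.cpp; the names `FloatFields`, `float_bits`, `decode`, and `unbiased_exponent` are hypothetical, and it assumes a 32-bit IEEE 754 `float`.

```cpp
#include <cstdint>
#include <cstring>

// Hypothetical helper type: the three stored fields of a 32-bit float.
struct FloatFields {
    std::uint32_t sign;      // 1 bit
    std::uint32_t exponent;  // 8 bits, in biased (stored) form
    std::uint32_t fraction;  // 23 bits, without the implied leading 1
};

// Copy the float's bytes into an integer of the same size.
inline std::uint32_t float_bits(float f) {
    static_assert(sizeof(float) == sizeof(std::uint32_t), "expects a 32-bit float");
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);
    return bits;
}

// Split the bit pattern into sign | exponent | fraction.
inline FloatFields decode(float f) {
    const std::uint32_t bits = float_bits(f);
    return FloatFields{bits >> 31, (bits >> 23) & 0xFFu, bits & 0x7FFFFFu};
}

// Actual exponent of a normalized number: subtract the bias of 127.
inline int unbiased_exponent(const FloatFields& ff) {
    return static_cast<int>(ff.exponent) - 127;
}
```

For 6.5 = 1.101 × 2², `decode(6.5f)` should give a stored exponent of 129 (binary 10000001) and an unbiased exponent of 2, matching the example above.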

IEEE 754 Single Precision – Zero and Friends

- How shall we represent 0?
- Since numbers are normalized, a 0 mantissa is impossible
- Instead, we’ll usurp the exponent -127
  - This exponent is unavailable for normalized numbers
  - We’ll use it for multiple purposes
- So 0 is represented as $(1).00\ldots0 \times 2^{-127}$
  - But stored as all 0’s!

Consequences of Zero

- We can have a negative zero too!
  - The sign bit can be 1, everything else is 0
  - They are considered the same number, naturally
- Now that we have put exponent -127 out of commission for normalized numbers, how about using it for some more numbers?
  - Let’s milk this thing!
  - We’ll use non-zero fractions with it
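
Since the two zeros compare equal, telling them apart takes a look at the sign bit. A minimal sketch using `std::signbit` from `<cmath>`; the function name `is_negative_zero` is made up for illustration.

```cpp
#include <cmath>

// True only for -0.0f: the value compares equal to zero but its sign bit is set.
inline bool is_negative_zero(float x) {
    return x == 0.0f && std::signbit(x);
}
```

Both `0.0f == -0.0f` and `is_negative_zero(-0.0f)` should be true, while `is_negative_zero(0.0f)` should be false.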

Subnormal Numbers (aka Denormalized Numbers)

- Numbers with an exponent of -127
  - They assume that the unit digit is 0, not 1
  - In other words, they represent the numbers $0.b_1 b_2 \ldots b_{23} \times 2^{-126}$
- Why -126?
  - Otherwise we’d be skipping numbers
  - In binary, $0.1 \times 2^{-126} = 1.0 \times 2^{-127}$

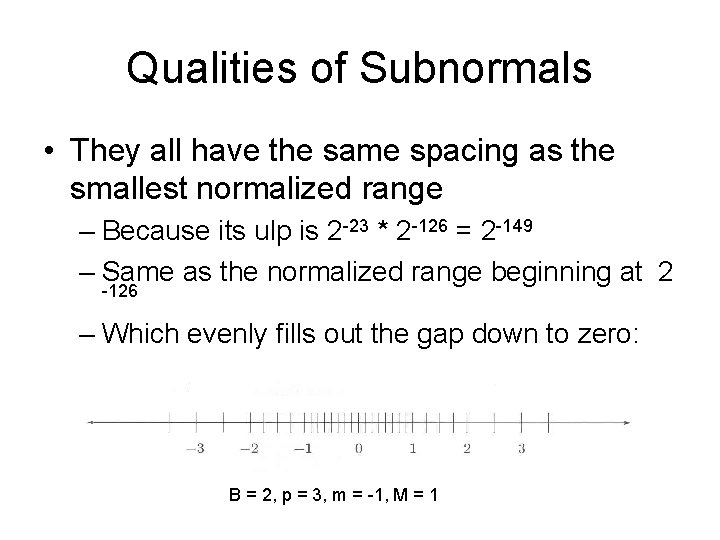

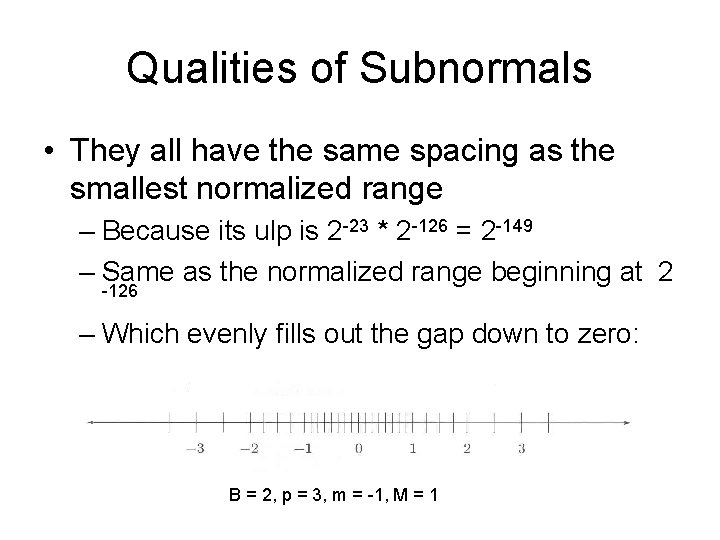

Qualities of Subnormals

- They all have the same spacing as the smallest normalized range
  - Because their ulp is $2^{-23} \times 2^{-126} = 2^{-149}$
  - Same as the normalized range beginning at $2^{-126}$
  - Which evenly fills out the gap down to zero (illustrated for B = 2, p = 3, m = -1, M = 1)

Subnormal Numbers More Info

- The smallest normalized float is $2^{-126}$
  - Approx $1.175 \times 10^{-38}$
  - Use `numeric_limits<float>::min()`
- The smallest subnormal number is $0.00\ldots1 \times 2^{-126} = 2^{-149} \approx 1.4013 \times 10^{-45}$
  - Use `numeric_limits<float>::denorm_min()`
- Not all compilers support subnormal numbers
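
These two limits can be compared against the powers of two above. The sketch assumes an IEEE 754 `float` with subnormals enabled (no flush-to-zero mode); the helper names are hypothetical.

```cpp
#include <cmath>
#include <limits>

// The two limits built directly from their powers of two.
inline float smallest_normal_by_formula()    { return std::ldexp(1.0f, -126); }
inline float smallest_subnormal_by_formula() { return std::ldexp(1.0f, -149); }

// Ask the library whether a value is subnormal (stored exponent 0, fraction != 0).
inline bool is_subnormal(float x) {
    return std::fpclassify(x) == FP_SUBNORMAL;
}

// Do the formulas agree with what numeric_limits reports?
inline bool formulas_match_library() {
    return smallest_normal_by_formula() == std::numeric_limits<float>::min() &&
           smallest_subnormal_by_formula() == std::numeric_limits<float>::denorm_min();
}
```

On a platform that supports subnormals, `formulas_match_library()` should return true, and `is_subnormal` should accept `denorm_min()` but reject `min()`.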

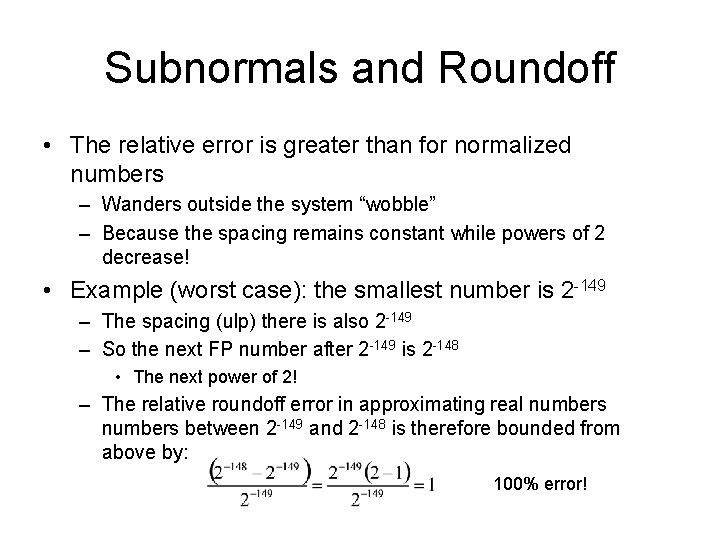

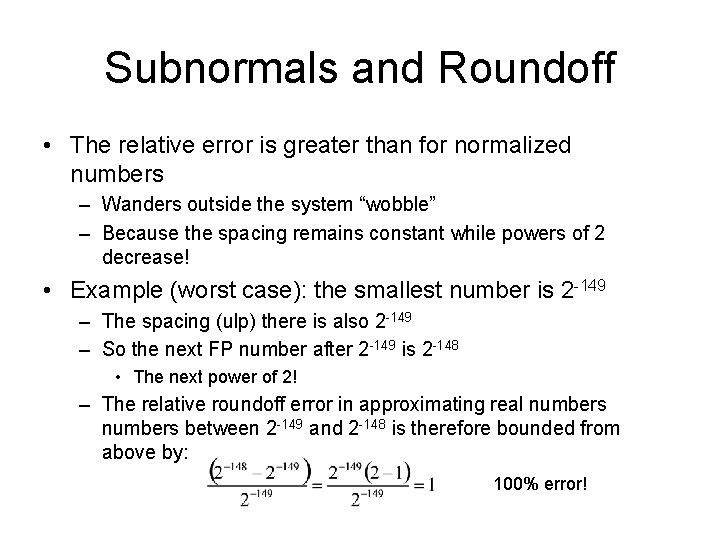

Subnormals and Roundoff

- The relative error is greater than for normalized numbers
  - Wanders outside the system “wobble”
  - Because the spacing remains constant while powers of 2 decrease!
- Example (worst case): the smallest number is $2^{-149}$
  - The spacing (ulp) there is also $2^{-149}$
  - So the next FP number after $2^{-149}$ is $2^{-148}$
    - The next power of 2!
  - The relative roundoff error in approximating real numbers between $2^{-149}$ and $2^{-148}$ is therefore bounded from above by 100%!
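
The constant spacing, and how large it becomes relative to the numbers themselves, can be measured with `std::nextafter`. This is an illustrative sketch with hypothetical function names; it assumes subnormals are enabled.

```cpp
#include <cmath>
#include <limits>

// Gap between x and the next float toward +infinity (one ulp at x).
inline float spacing_above(float x) {
    return std::nextafter(x, std::numeric_limits<float>::infinity()) - x;
}

// The same gap expressed relative to x itself.
inline float relative_spacing(float x) {
    return spacing_above(x) / x;
}
```

Near 1 the relative spacing is ε = 2^-23, but at the smallest subnormal the spacing equals the number itself, so `relative_spacing(denorm_min)` should come out as 1.0, that is, 100%.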

More Special Numbers

- Infinity
  - Both the positive and negative kind
  - Once they are obtained in a calculation, the right thing mathematically happens
    - e.g., 1/∞ = 0, 1/-∞ = -0, 1/0 = ∞, -1/0 = -∞
  - Layout: exponent is all 1’s (the biased number 255, 128 unbiased), fraction is all 0’s
    - So the exponent 128 is also usurped
  - So 127 is really the largest usable exponent
  - Largest number = $1.111\ldots1 \times 2^{127} \approx 3.40282 \times 10^{38}$
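
The infinity rules and the largest finite value can be tried directly. A small sketch, assuming IEEE 754 arithmetic with the default (non-trapping) floating-point environment; `divide` and `largest_finite_by_formula` are hypothetical names.

```cpp
#include <cmath>
#include <limits>

// Plain float division; IEEE 754 defines the result even when b is zero or infinite.
inline float divide(float a, float b) { return a / b; }

// Largest finite float from the formula 1.111...1 x 2^127,
// where 1.111...1 (24 ones) equals 2 - 2^-23.
inline float largest_finite_by_formula() {
    return std::ldexp(2.0f - std::numeric_limits<float>::epsilon(), 127);
}
```

With `inf = std::numeric_limits<float>::infinity()`, `divide(1.0f, inf)` should be +0, `divide(1.0f, -inf)` should be -0 (check with `std::signbit`), and `divide(1.0f, 0.0f)` should be `inf`.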

Another Special “Number”

- NaN
  - “not a number”
  - Occurs when an invalid computation is attempted (like sqrt of a negative value)
    - Any subsequent calculations involving a NaN result in a NaN
    - So you don’t have to mess with exception handling
  - Layout: exponent all 1’s (like ∞), but fraction is non-zero (just a leading 1 by default)
  - The only quantity that doesn’t compare equal to itself!
    - That’s okay. It’s not a number :-)

Quiet NaNs vs. Signaling NaNs

- Has to do with whether FP exceptions are enabled
  - Allowing users to install handlers
  - Extra info is encoded in the fraction
- We won’t worry about this
  - C++ does not explicitly support FP exceptions
- A Quiet NaN does not throw an exception
  - Our NaNs are Quiet
- A Signaling NaN does
  - Common trick: fill uninitialized variables with signaling NaNs, so their use causes an exception

Quiet NaN (NaNQ) Examples

- sqrt(negative number)
- 0*∞
- 0/0
- ∞/∞
- x%0
- ∞%x
- infinity - infinity (same signs)
- Any computation involving a Quiet NaN

Note: division by zero does not result in a NaN unless the numerator is 0 or a NaN (if not, you get an infinity as expected).

Signaling NaNs (NaNS)

- Only active when exceptions are enabled
- No portable way to do this in C++
- Mostly happens only when using an uninitialized variable
  - Or if a NaNS is used in a computation

NaNs in Computations

- They taint every computation
  - A NaN begets a NaN
- For boolean tests, you always get false!
  - Except for x != y
    - Including x != x
  - These are true
    - For logical consistency
- Do not compare x == x to detect a NaN, though!
  - Optimizers can hard-code a value of true!
  - Comparing against `numeric_limits<>::quiet_NaN()` does not help either: x != NaN is true for every x, and x == NaN is always false
  - So we’ll write our own (inspects bit representation)
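
A bit-inspecting NaN test follows directly from the layout: exponent all 1’s and a non-zero fraction. The sketch below is one possible version, not the course’s own; `is_nan_bits` and `make_quiet_nan` are hypothetical names, and a 32-bit IEEE 754 `float` is assumed.

```cpp
#include <cstdint>
#include <cstring>
#include <limits>

// NaN test by bit pattern: stored exponent is 255 and the fraction is non-zero.
// No floating-point comparison is involved, so the optimizer cannot fold it away
// under the assumption that x == x.
inline bool is_nan_bits(float x) {
    static_assert(sizeof(float) == sizeof(std::uint32_t), "expects a 32-bit float");
    std::uint32_t bits;
    std::memcpy(&bits, &x, sizeof bits);
    const std::uint32_t exponent = (bits >> 23) & 0xFFu;
    const std::uint32_t fraction = bits & 0x7FFFFFu;
    return exponent == 0xFFu && fraction != 0u;
}

// Convenience wrapper for producing a quiet NaN to test with.
inline float make_quiet_nan() {
    return std::numeric_limits<float>::quiet_NaN();
}
```

`is_nan_bits(make_quiet_nan())` should be true, while infinity (same exponent, zero fraction) and ordinary numbers should be rejected. In production code, `std::isnan` from `<cmath>` serves the same purpose.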

Bit Patterns for Special Numbers Summary

- Zero: 0x00000000 (negative zero: 0x80000000)
- Positive infinity: 0x7f800000
- Negative infinity: 0xff800000
- Signaling NaN: in [0x7f800001, 0x7fbfffff] or in [0xff800001, 0xffbfffff]
- Quiet NaN: in [0x7fc00000, 0x7fffffff] or in [0xffc00000, 0xffffffff]
- Similar for double (64-bit)
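
The ranges above translate into a small classifier on the raw bits. This is a sketch under one assumption worth stating: the top fraction bit set means “quiet”, which matches the ranges listed here and common hardware such as x86 and ARM, but is not universal. The names `Kind`, `classify_bits`, and `float_from_bits` are hypothetical.

```cpp
#include <cmath>
#include <cstdint>
#include <cstring>

enum class Kind { Zero, Infinity, SignalingNaN, QuietNaN, Other };

// Classify a 32-bit pattern using the ranges from the summary.
// Assumption: the most significant fraction bit (0x00400000) marks a quiet NaN.
inline Kind classify_bits(std::uint32_t bits) {
    const std::uint32_t magnitude = bits & 0x7FFFFFFFu;  // drop the sign bit
    if (magnitude == 0u) return Kind::Zero;
    if (magnitude == 0x7F800000u) return Kind::Infinity;
    if (magnitude > 0x7F800000u)
        return (magnitude & 0x00400000u) ? Kind::QuietNaN : Kind::SignalingNaN;
    return Kind::Other;  // ordinary normalized or subnormal number
}

// Reinterpret a bit pattern as a float, for cross-checking with <cmath>.
inline float float_from_bits(std::uint32_t bits) {
    static_assert(sizeof(float) == sizeof(std::uint32_t), "expects a 32-bit float");
    float f;
    std::memcpy(&f, &bits, sizeof f);
    return f;
}
```

For example, `classify_bits(0xff800000u)` should report `Kind::Infinity`, and `std::isnan(float_from_bits(0x7fc00000u))` should be true.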

Revisiting ulps(x, y)

- See IEEE_ulps.h
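
The contents of IEEE_ulps.h are not shown here, so the following is only a guess at the general idea, not the course’s implementation. Because the field order makes floats sort like integers, the distance in ulps between two finite floats can be found by subtracting their bit patterns. The names `ordered_index` and `ulps_apart` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>

// Map a float onto a signed integer line so that adjacent floats differ by 1.
// Negative floats map to negative indices; +0 and -0 both map to 0.
inline std::int64_t ordered_index(float x) {
    std::uint32_t bits;
    std::memcpy(&bits, &x, sizeof bits);
    const std::int64_t magnitude = bits & 0x7FFFFFFFu;
    return (bits >> 31) ? -magnitude : magnitude;
}

// Number of representable steps between x and y (hypothetical helper).
// Precondition: neither argument is a NaN.
inline std::int64_t ulps_apart(float x, float y) {
    assert(!std::isnan(x) && !std::isnan(y));
    const std::int64_t d = ordered_index(x) - ordered_index(y);
    return d < 0 ? -d : d;
}
```

Under this definition, 1.0f and the next float above it are 1 step apart, and 1.0f and 2.0f are 2^23 = 8388608 steps apart.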

Endian-ness

- Big Endian vs. Little Endian
- Big Endian stores the most significant bytes of a number first
  - The order we envision normally
- Little Endian reverses the bytes!
  - Intel does this: it puts the most significant bytes at higher addresses
- Very important issue for portability and examining bit representations
  - See code example (IEEEFloat.cpp, coming up soon)

Revealing Endian-ness

- Bitwise operations do the right thing
  - They know where the correct bits are, so little endian-ness goes undetected with bitwise ops
- Unions, however, reflect physical layout
  - Can also do this via pointers
- Bit fields go a step further
  - They return even the bits in a byte in reversed order on Little Endian machines
    - Although they’re not really stored that way
    - This is actually a convenience (you can think of the entire set of bits as being reversed)
- Example: endian.cpp
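
The difference between bitwise operations and physical layout shows up in a few lines. This is a sketch, not the course’s endian.cpp; it uses `std::memcpy` to look at the bytes in memory (a stand-in for the union or pointer approach), and all function names are hypothetical.

```cpp
#include <cstdint>
#include <cstring>

// Bitwise view: the most significant byte, independent of byte order in memory.
inline unsigned top_byte(std::uint32_t v) {
    return (v >> 24) & 0xFFu;
}

// Physical view: the byte stored at the lowest address.
inline unsigned first_byte_in_memory(std::uint32_t v) {
    unsigned char bytes[sizeof v];
    std::memcpy(bytes, &v, sizeof v);
    return bytes[0];
}

// Little endian machines store the least significant byte first.
inline bool is_little_endian() {
    return first_byte_in_memory(0x01020304u) == 0x04u;
}
```

`top_byte(0x01020304u)` is 0x01 everywhere, whereas `first_byte_in_memory(0x01020304u)` is 0x04 on a little endian machine and 0x01 on a big endian one.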

Mondo Cool Example

- IEEEFloat.cpp
- Summarizes everything so far for float
  - Except signaling NaNs, of course
  - Reveals endian-ness
- You will do similar operations for double as part of Program 2

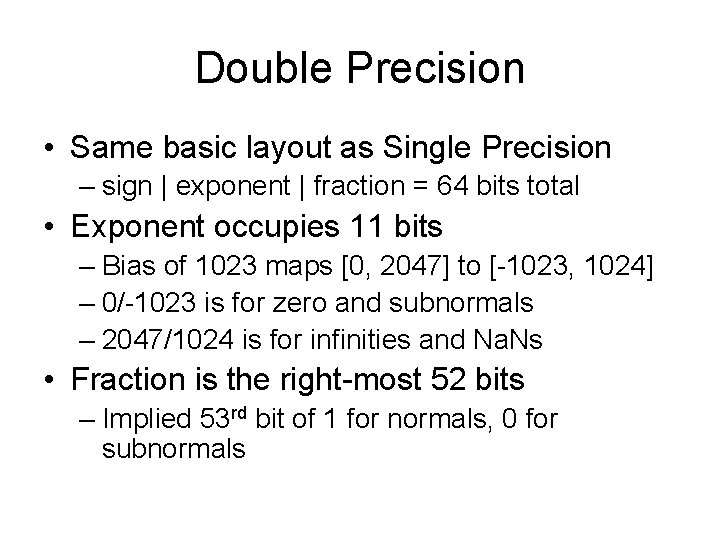

Double Precision

- Same basic layout as Single Precision
  - sign | exponent | fraction = 64 bits total
- Exponent occupies 11 bits
  - Bias of 1023 maps [0, 2047] to [-1023, 1024]
  - 0/-1023 is for zero and subnormals
  - 2047/1024 is for infinities and NaNs
- Fraction is the right-most 52 bits
  - Implied 53rd bit of 1 for normals, 0 for subnormals
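
The same shift-and-mask idea works for `double`, with the field widths changed to 1, 11, and 52 bits. A minimal sketch assuming a 64-bit IEEE 754 `double`; `DoubleFields` and `decode_double` are hypothetical names.

```cpp
#include <cstdint>
#include <cstring>

// Hypothetical helper type: the three stored fields of a 64-bit double.
struct DoubleFields {
    std::uint64_t sign;      // 1 bit
    std::uint64_t exponent;  // 11 bits, biased by 1023
    std::uint64_t fraction;  // 52 bits, without the implied leading bit
};

// Split a double into sign | exponent | fraction.
inline DoubleFields decode_double(double d) {
    static_assert(sizeof(double) == sizeof(std::uint64_t), "expects a 64-bit double");
    std::uint64_t bits;
    std::memcpy(&bits, &d, sizeof bits);
    const std::uint64_t fraction_mask = (std::uint64_t{1} << 52) - 1;  // low 52 bits
    return DoubleFields{bits >> 63, (bits >> 52) & 0x7FFu, bits & fraction_mask};
}
```

`decode_double(1.0).exponent` should be 1023 (unbiased 0), and `decode_double(6.5).exponent` should be 1025 (unbiased 2).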

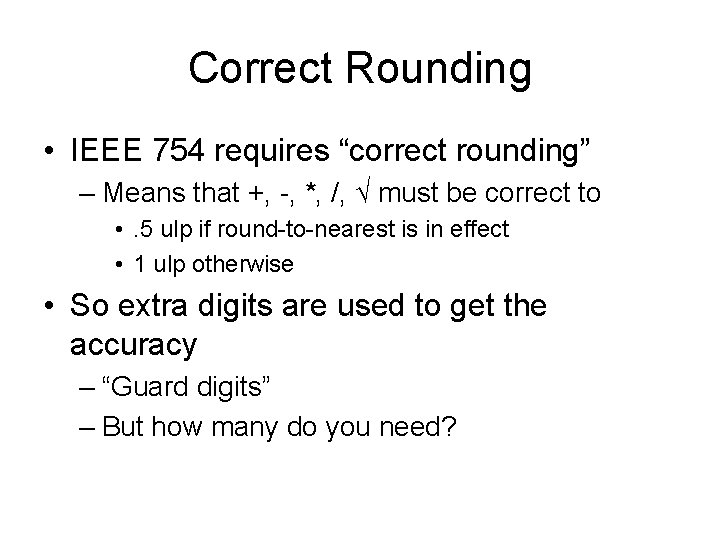

Correct Rounding

- IEEE 754 requires “correct rounding”
  - Means that +, -, *, /, √ must be correct to
    - 0.5 ulp if round-to-nearest is in effect
    - 1 ulp otherwise
- So extra digits are used to get the accuracy
  - “Guard digits”
  - But how many do you need?

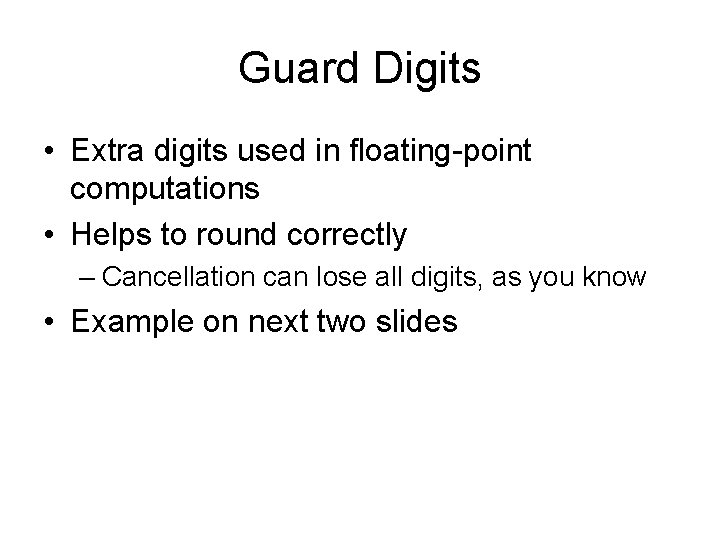

Guard Digits

- Extra digits used in floating-point computations
- Helps to round correctly
  - Cancellation can lose all digits, as you know
- Example on next two slides

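Guard Digit Example

B = 2, p = 3: subtract $1.00 \times 2^{1} - 1.11 \times 2^{0}$ (2 - 1.75 = 0.25).

Must align (always to the larger power, so you don’t go beyond the 1’s place):

$$
\begin{array}{rl}
  & 1.00 \times 2^{1} \\
- & 0.11 \times 2^{1} \quad \text{(shifted right 1 place)} \\
\hline
  & 0.01 \times 2^{1} = 1.00 \times 2^{-1} = 1/2 \quad \Rightarrow \text{100\% error}
\end{array}
$$

With a guard digit:

$$
\begin{array}{rl}
  & 1.000 \times 2^{1} \\
- & 0.111 \times 2^{1} \quad \text{(preserves original 1 in 3rd place)} \\
\hline
  & 0.001 \times 2^{1} = 1.000 \times 2^{-2} = 1/4 \quad \Rightarrow \text{correct}
\end{array}
$$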

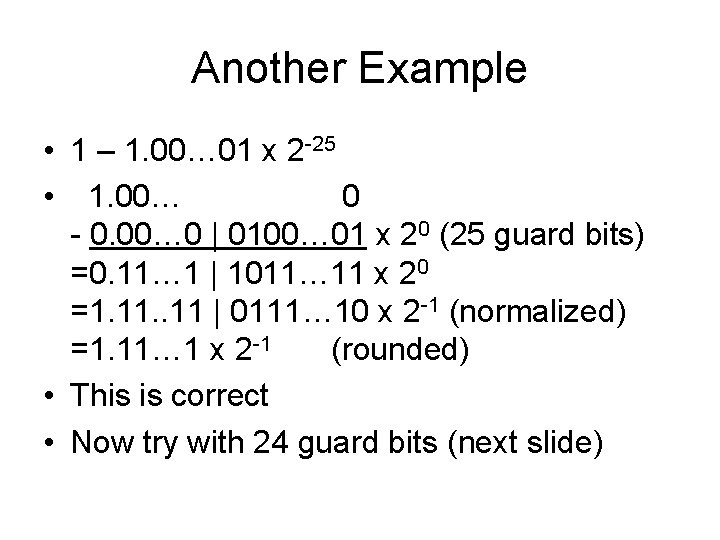

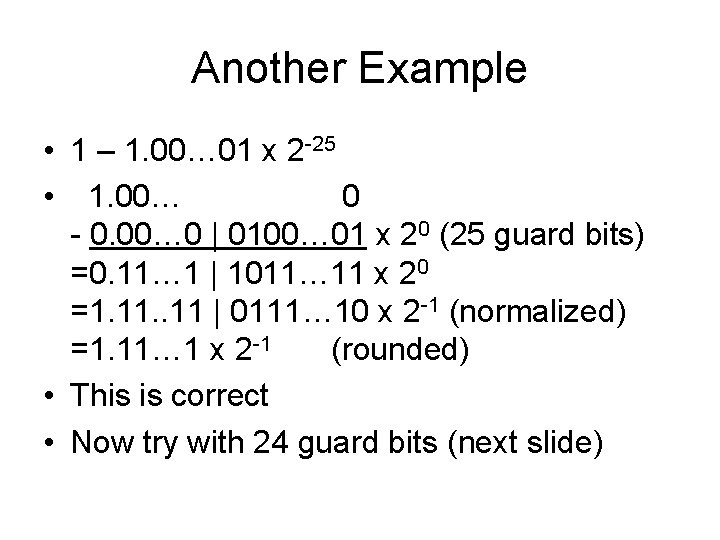

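Another Example

- $1 - 1.00\ldots01 \times 2^{-25}$

$$
\begin{array}{rll}
  & 1.00\ldots0 & \\
- & 0.00\ldots0 \mid 0100\ldots01 \times 2^{0} & \text{(25 guard bits)} \\
\hline
= & 0.11\ldots1 \mid 1011\ldots11 \times 2^{0} & \\
= & 1.11\ldots1 \mid 0111\ldots10 \times 2^{-1} & \text{(normalized)} \\
= & 1.11\ldots1 \times 2^{-1} & \text{(rounded)}
\end{array}
$$

- This is correct
- Now try with 24 guard bits (next slide)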

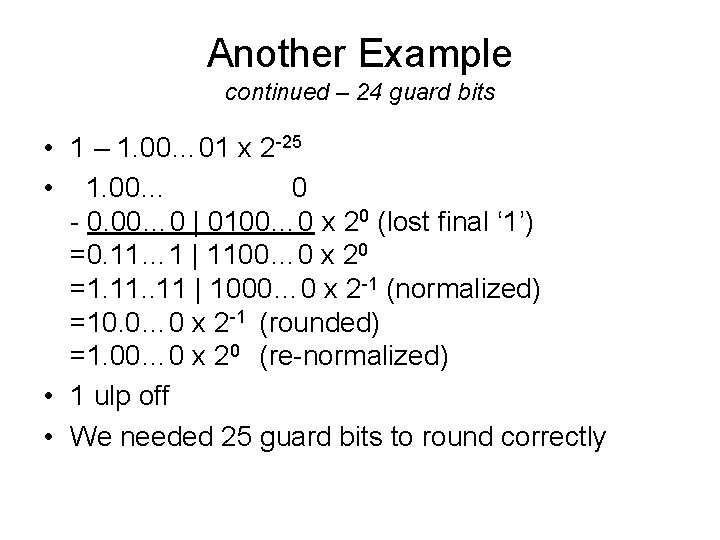

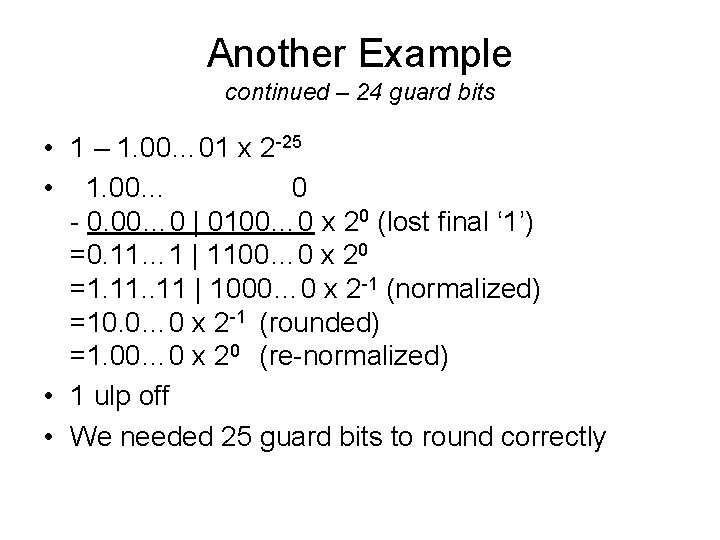

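Another Example continued – 24 guard bits

- $1 - 1.00\ldots01 \times 2^{-25}$

$$
\begin{array}{rll}
  & 1.00\ldots0 & \\
- & 0.00\ldots0 \mid 0100\ldots0 \times 2^{0} & \text{(lost final ‘1’)} \\
\hline
= & 0.11\ldots1 \mid 1100\ldots0 \times 2^{0} & \\
= & 1.11\ldots1 \mid 1000\ldots0 \times 2^{-1} & \text{(normalized)} \\
= & 10.0\ldots0 \times 2^{-1} & \text{(rounded)} \\
= & 1.00\ldots0 \times 2^{0} & \text{(re-normalized)}
\end{array}
$$

- 1 ulp off
- We needed 25 guard bits to round correctly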

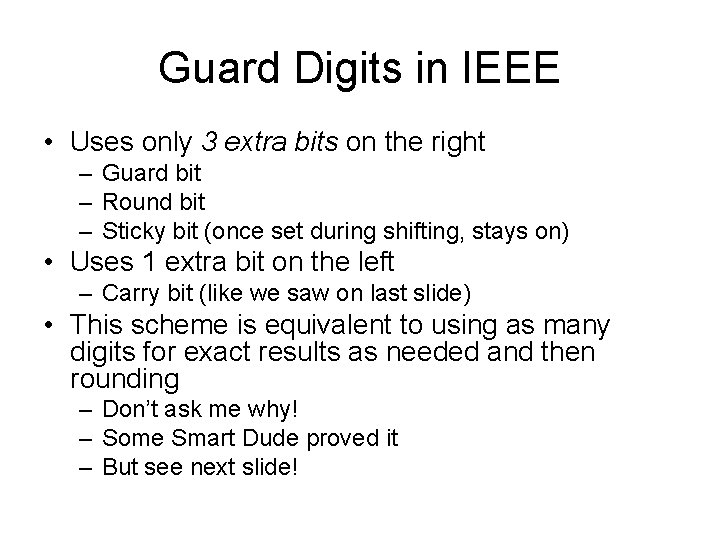

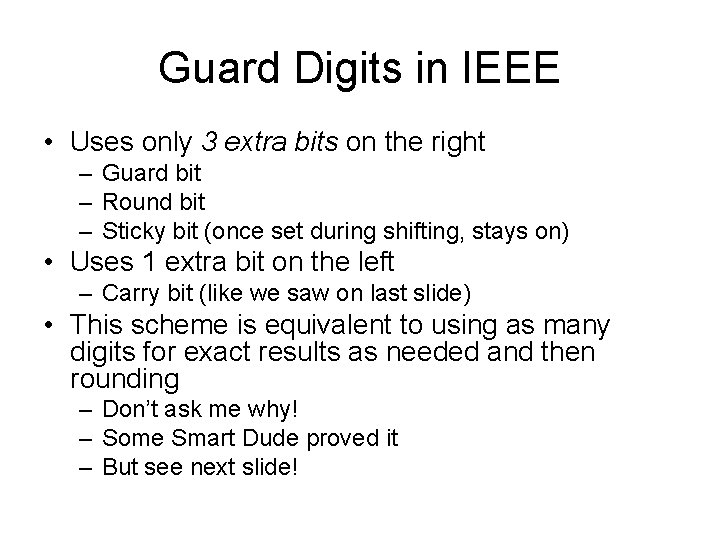

Guard Digits in IEEE

- Uses only 3 extra bits on the right
  - Guard bit
  - Round bit
  - Sticky bit (once set during shifting, stays on)
- Uses 1 extra bit on the left
  - Carry bit (like we saw on last slide)
- This scheme is equivalent to using as many digits for exact results as needed and then rounding
  - Don’t ask me why!
  - Some Smart Dude proved it
  - But see next slide!

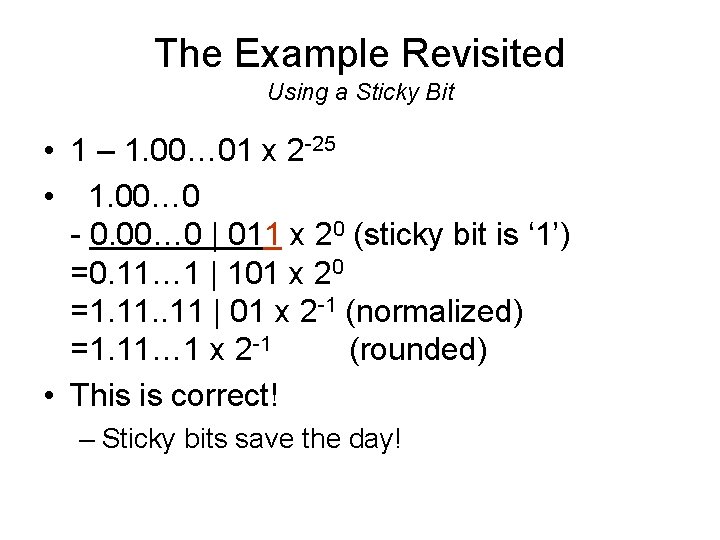

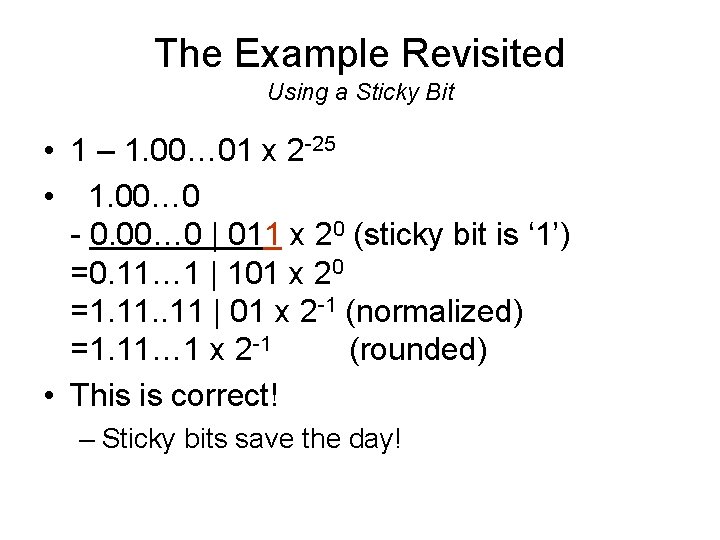

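The Example Revisited Using a Sticky Bit

- $1 - 1.00\ldots01 \times 2^{-25}$

$$
\begin{array}{rll}
  & 1.00\ldots0 & \\
- & 0.00\ldots0 \mid 011 \times 2^{0} & \text{(sticky bit is ‘1’)} \\
\hline
= & 0.11\ldots1 \mid 101 \times 2^{0} & \\
= & 1.11\ldots1 \mid 01 \times 2^{-1} & \text{(normalized)} \\
= & 1.11\ldots1 \times 2^{-1} & \text{(rounded)}
\end{array}
$$

- This is correct!
  - Sticky bits save the day!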
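
The same subtraction can be run on real hardware to see that the correctly rounded answer comes out. This sketch assumes IEEE 754 `float` arithmetic in the default round-to-nearest mode; `example_difference` is a hypothetical name, and `volatile` is used only to force the result to be stored at `float` precision.

```cpp
#include <cmath>
#include <limits>

// Computes 1 - 1.00...01 x 2^-25 in float arithmetic.
// Both operands are exactly representable as floats.
inline float example_difference() {
    const float x = 1.0f;
    const float y = std::ldexp(1.0f + std::numeric_limits<float>::epsilon(), -25);
    volatile float diff = x - y;  // store at float precision before returning
    return diff;
}
```

The expected result is 1.11…1 × 2^-1, which is the largest float below 1, i.e. `std::nextafter(1.0f, 0.0f)`. Getting 1.0f instead would be the 1-ulp error from the 24-guard-bit version.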

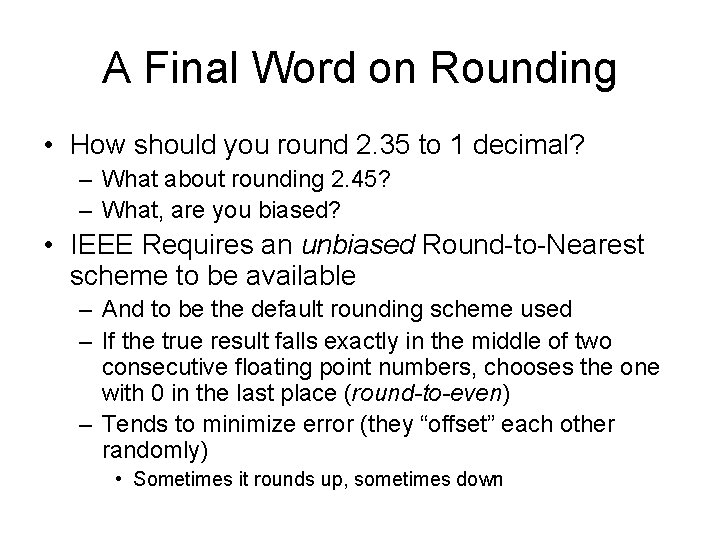

A Final Word on Rounding

- How should you round 2.35 to 1 decimal?
  - What about rounding 2.45?
  - What, are you biased?
- IEEE requires an unbiased round-to-nearest scheme to be available
  - And to be the default rounding scheme used
  - If the true result falls exactly in the middle of two consecutive floating-point numbers, it chooses the one with 0 in the last place (round-to-even)
  - Tends to minimize error (the errors “offset” each other randomly)
    - Sometimes it rounds up, sometimes down
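
Round-to-even can be observed in binary by constructing exact ties. Just above 1.0f the spacing is 2^-23, so adding an odd multiple of 2^-24 lands exactly halfway between two floats. The sketch assumes the default rounding mode; `one_plus_half_ulps` is a hypothetical name.

```cpp
#include <cmath>

// Returns 1 + n * 2^-24 rounded to float, where 2^-24 is half the spacing
// between floats just above 1. Odd n produces an exact tie.
inline float one_plus_half_ulps(int n) {
    const float half_ulp = std::ldexp(1.0f, -24);
    volatile float sum = 1.0f + static_cast<float>(n) * half_ulp;  // force float storage
    return sum;
}
```

With n = 1 the tie is between 1 and 1 + 2^-23, and the result should be 1 (rounded down to the even fraction). With n = 3 the tie is between 1 + 2^-23 and 1 + 2^-22, and the result should be 1 + 2^-22 (rounded up to the even fraction). So ties go down in one case and up in the other, as the last bullet says.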

Guard Digits in Calculators

- Use 3 extra decimal digits
- They use 13 internally, but limit the display to 10
- So 10 correct digits remain
  - Unless cancellation occurs, of course
    - But the non-zero digits that remain are good

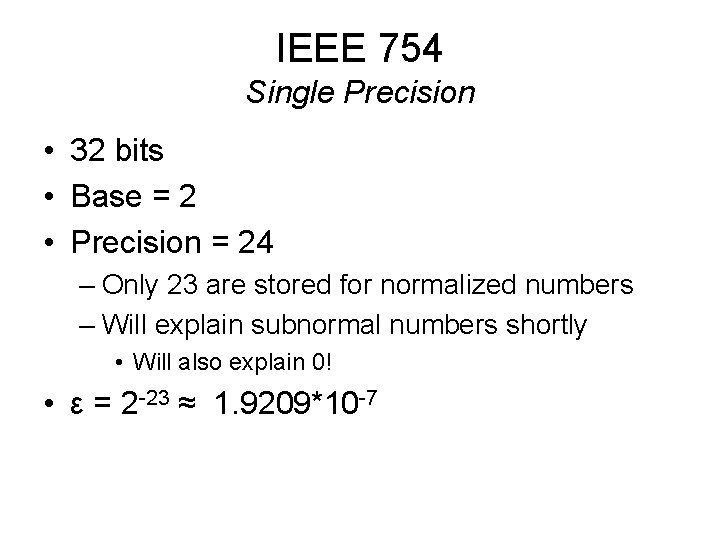

IEEE Extended Precision

- Optional recommendations for precision greater than float/double
  - `long double`
  - Implemented in hardware (Intel 80-bit)
  - Not crucial, since the guard/round/sticky scheme is pretty good (although it helps minimize cancellation)
- Note:
  - Intel often uses extended precision for intermediate operations
  - So `1 - (1 + x)` may not equal the result of `z = 1 + x;` (which truncates to z’s precision) followed by `1 - z`